Abstract

Introduction:

Liver transplantation (LT) is an effective treatment for hepatocellular carcinoma (HCC), the most common type of primary liver cancer. Patients with small HCC (<5 cm) are given priority over others for transplantation due to clinical allocation policies based on tumor size. Attempting to shift from the prevalent paradigm that successful transplantation and longer disease-free survival can only be achieved in patients with small HCC to expanding the transplantation option to patients with HCC of the highest tumor burden (>5 cm), we developed a convergent artificial intelligence (AI) model that combines transient clinical data with quantitative histologic and radiomic features for more objective risk assessment of liver transplantation for HCC patients.

Methods:

Patients who received an LT for HCC between 2008 and 2019 were eligible for inclusion in the analysis. All patients with post-LT recurrence were included, and those without recurrence were randomly selected for inclusion in the deep learning model. Pre- and post-transplant magnetic resonance imaging (MRI) scans and reports were compressed using CapsNet networks and natural language processing, respectively, as input for a multiple feature radial basis function network. We applied a histological image analysis algorithm to detect pathologic areas of interest from explant tissue of patients who recurred. The multilayer perceptron was designed as a feed-forward, supervised neural network topology that produces the final assessment of recurrence risk. We used area under the curve (AUC) and F-1 score to assess the predictive performance of different network combinations.

Results:

A total of 109 patients were included (87 in the training group, 22 in the testing group), of whom 20 were positive for cancer recurrence. Seven models (AUC; F-1 score) were generated, including clinical features only (0.55; 0.52), magnetic resonance imaging (MRI) only (0.64; 0.61), pathological images only (0.64; 0.61), MRI plus pathology (0.68; 0.65), MRI plus clinical (0.78; 0.75), pathology plus clinical (0.77; 0.73), and a combination of clinical, MRI, and pathology features (0.87; 0.84). The final combined model showed 80% recall and 89% precision. The total accuracy of the implemented model was 82%.

Conclusion:

We validated that the deep learning model combining clinical features and multi-scale histopathologic and radiomic image features can be used to discover risk factors for recurrence beyond tumor size and biomarker analysis. Such a predictive, convergent AI model has the potential to alter the LT allocation system for HCC patients and expand the transplantation treatment option to patients with HCC of the highest tumor burden.

Keywords: Liver Transplantation, Hepatocellular Carcinoma, Recurrence Risk, Deep Learning

1. INTRODUCTION

According to the American Cancer Society [1], about 42,810 new cases (30,170 in men and 12,640 in women) of hepatocellular cancer (HCC) will be diagnosed in the United States in 2020, and about 30,160 people (20,020 men and 10,140 women) will die of these cancers. HCC is the most common type of primary liver cancer in adults and the third leading cause of cancer death worldwide.

Liver transplantation (LT) is the most successful treatment for patients with cirrhosis and HCC. Because LT concomitantly resects the tumor and the underlying liver disease, the main risk factor for the appearance of new tumors is eliminated by the hepatectomy. An unjustified concern for poor outcomes post-liver transplantation for HCC has resulted in a nationally dictated organ allocation policy that limits liver transplantation to a small group of patients with small tumor burdens based on ‘Milan Criteria’ (MC, defined as a single nodule smaller than 5 cm or two or three nodules of up to 3 cm), first described in 1996 [3].

Allocation policies based on tumor size deprive many patients with tumors >5 cm of a life-saving liver transplantation, despite multiple studies demonstrating that liver transplantation for small or selected large tumors exhibits equivalent survival outcomes [45-47]. Additionally, small tumors can be successfully managed with evolving new therapies and may not require liver transplantation. Thus, identification of pre-liver transplantation markers that accurately predict post-liver transplantation survival outcomes would allow transplantation of HCC based on favorable biology rather than size alone. Application of evolving therapies for small tumors would increase availability of organs for liver transplantation of well-selected, large tumors with favorable biology, while maintaining excellent post-liver transplantation outcomes.

Despite using morphologic criteria such as the Milan Criteria [2] to select patients with HCC for LT, recurrence of HCC is noted to occur in 15% to 20% of cases [3-6]. Cancer recurrence following LT is associated with an unfavorable prognosis. Therefore, it is necessary to develop a technology to study the risk factors for tumor recurrence to refine patient selection and to identify modifiable factors that may reduce the incidence of tumor recurrence.

Recently, imageomic analysis [7-10] has emerged as a promising strategy for predicting cancer risk and cancer recurrence. An imageomic approach includes automated or semi-automated signal processing and analysis of large amounts of quantitative imaging features that can be derived from medical images [11-13]. Image features include tumor spatial complexity, sub-regional identification in terms of tumor viability or aggressiveness, and response to chemotherapy and/or radiation [14-15]. The technology can help to reveal unique information about tumor behavior by integrating multiple types of data with clinical, pathological, and/or genomic information to decode different types of tissue biology [16-17]. Many imageomic studies have been performed on various types of cancers, such as lung cancer [18], breast cancer [19], prostate cancer [20], and liver cancer [21], but very few have reported on risk prediction of HCC recurrence for liver transplantation.

On the other hand, several machine learning models have been reported for processing data of patients with HCC, including tumor segmentation, feature extraction, and liver cancer classification. For example, a classification model was proposed [22] to identify liver cirrhosis by applying the gray-level co-occurrence matrix (GLCM) with singular value decomposition to extract image features and then deploying a support vector machine to classify malignant tumors. For detecting complex hepatocellular carcinoma, an aptamer-based electrochemical biosensor and related statistical model were proposed [23], while an integrative multi-omics strategy was proposed [24] to identify and stratify HCC through prognostic biomarkers. A multivariate linear model was developed [26] to predict recurrence-free and overall survival outcomes after surgical resection of a solitary HCC. Other studies indicate the potential of machine learning models using multiparametric ultrasound [27] and histogram features of CT/MR images [28,29] for staging liver fibrosis and diagnosing nonalcoholic steatohepatitis. Nevertheless, a common drawback across all these studies is that the accuracy of the model is not robust owing to poor image quality, edge information loss, and computational complexity.

Recently, deep learning methods for synthetic medical image generation were proposed [30] to improve the efficiency of Convolutional Neural Networks (CNNs) for HCC image classification. Cascaded Fully Convolutional Neural Networks (CFCNs) and dense 3D Conditional Random Fields (CRFs) were presented [31] to segment liver and lesions automatically in HCC patients. A fully convolutional network (FCN) was offered to identify liver tumors [32]. There are also many applications that use CNNs in the interpretation of liver cancers, including HCC, liver metastasis, and other liver masses.

This study presents a new imageomics and multi-network based deep learning model that brings together expertise in LT, full-slide image digitization, and deep machine learning, and integrates multimodality data of quantitative image features with relevant clinical data to identify pre-clinical and biological markers for predicting good post-transplant outcomes, regardless of tumor size. This convergent AI system includes a multimodality database containing both radiologic and pathologic images with clinical risk factors and integrates natural language processing, image analysis, and deep learning algorithms to assess recurrence risk for LT recipients.

2. METHOD

Our deep learning model is deployed to identify post-LT recipients at high recurrence risk with high specificity and sensitivity.

2.1. DATABASE CONSTRUCTION

To develop the risk assessment model for post liver transplant patients, a multimodality database was constructed to aggregate large volumes of de-identified transplant patient information linked by pseudo-Medical Record Number, including:

(1). Volumetric MR images:

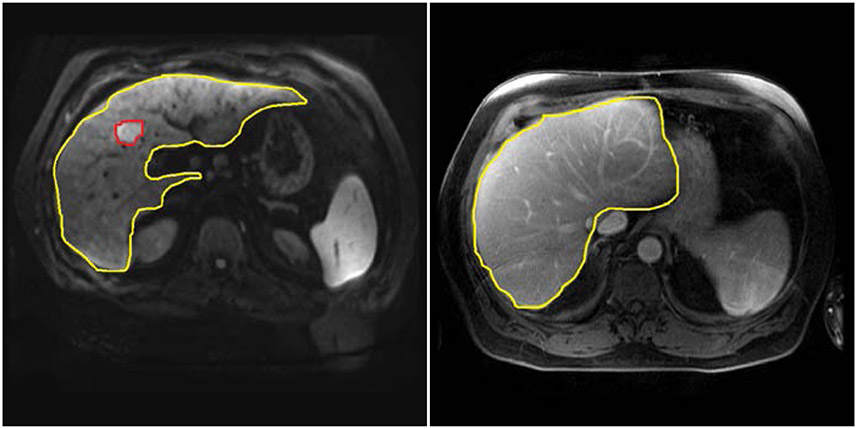

We retrieved magnetic resonance (MR) images from the picture archiving and communication system (PACS) and extracted the liver area before archiving them into our multimodality database. We obtained the MR images of each patient three months before transplant and three months after transplant. Then we trained the U-net model [33] to detect the regions of liver and tumors. Figure 2-1 shows an example of MR images with the liver and tumor detected at different time points.

Figure 2-1:

Example images at different time points retrieved from the enterprise picture archiving and communication system (PACS) at Houston Methodist Hospital with the tumor detection by deploying U-net model [33].

(a) Automatic liver and tumor detection on MRI (three months before liver transplant)

(b) Automatic liver detection without tumor detected on MRI (three months after liver transplant)

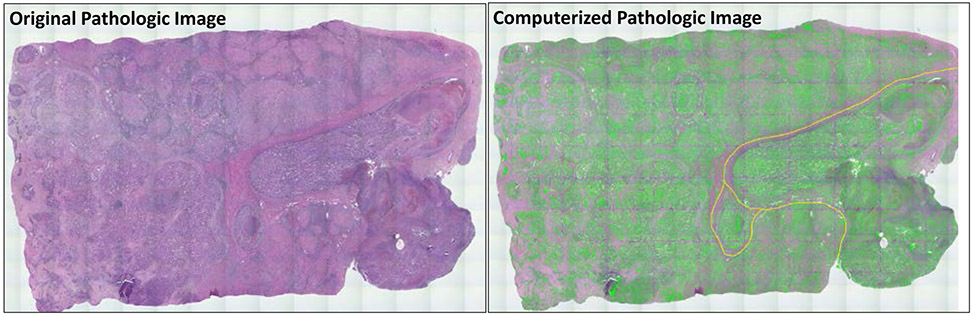

(2). Pathological images:

Explant tissue was obtained for patients with known HCC recurrence. A pathologist selected image signatures for manual inspection and monitored the accuracy of pathological image quantitation. Clinical features of normal or tumor tissue were quantitatively represented by image signatures. We applied our histological image analysis algorithm [34] to detect the areas of interest and to extract and combine features. The combination that generated the best representation of the tissue consisted of statistical texture descriptors computed from the image histogram together with statistical texture descriptors from the co-occurrence matrix. Figure 2-2 shows the pathological image data annotated with areas of interest.

Figure 2-2:

A digitized pathological image (left) with AI annotation (right) on areas of interest. Green annotation represents tumor foci and yellow annotation represents vasculature detected by our histological image segmentation and analysis algorithm [34].

(3). Clinical reports and patient demographics:

Structured clinical data (e.g., age, race, height, weight, body mass index, personal and family history of cancer) were sourced from METEOR [35], a clinical data warehouse that contains clinical records and notes of more than 3 million patients and 8 hospitals of Houston Methodist dating back to 2006.

(4). Clinical and pathological signatures:

Patients with recurrence were identified among this pool by using our natural language processing (NLP) tool [36] to extract pathologic information (staging and microvascular status) from biopsy and surgical pathology reports. Radiologic findings were extracted using statistical database queries and NLP to include clinical symptoms.

2.2. MODEL DEVELOPMENT

We developed an intelligent-augmented deep learning based model for Risk Assessment of Post LIver Transplantation (i-RAPIT) to help determine whether or not clinicians need to increase surveillance (such as more imaging) or provide chemotherapy for the high risk patients after transplantation to prevent cancer before evidence of recurrence. The design of the i-RAPIT model includes several considerations. First, we used supervised information in training the model for extracting visual features. The trained model has reconstruction errors that focus on describing frequent image features, thus, potentially missing rare features relevant for recurrence prediction. Second, we designed a multi-network deep learning architecture for training the model to give a significant boost to the classification accuracy and the ability to generalize with fewer training samples (also known as data-efficiency). Third, despite the impressive image classification accuracy that matches human performance in certain applications, deep networks can fail unexpectedly for the cases obvious to a human. To circumvent this problem, we developed a robust i-RAPIT prediction model using multiple deep networks.

Figure 2-3 illustrates a schematic diagram of the i-RAPIT model. It consists of one deep CapsNet network for extracting low-dimensional visual features from MR images, one deep CapsNet network for extracting visual features from timeline-based pathological images, an NLP-based Radial Basis Function (RBF) network for clinical feature extraction, and a multilayer perceptron feed-forward network that combines visual features and clinical features to make the final recurrence risk calculation. The main advantage of using the deep CapsNet network rather than the conventional convolutional neural network (CNN) is that the CapsNet network can preserve the object’s spatial information [48] such that important explainable features of the tumor or cancer cells (e.g., shape, location, and scale) can be identified. A CNN cannot identify such spatial information because it relies on pooling layers, whose activations normally do not change when the spatial information of the object (tumor or cells) is slightly altered. Thus, it is difficult to precisely locate an object in the MR image or pathological image. The CNN only considers the existence of the object in the image around a specific location and does not account for the direction of the object or its spatial relations. Although improved CNN models can reconstruct spatial information via advanced techniques [49], these techniques are not entirely accurate because the reconstruction procedure in these models is often erroneous. The deep CapsNet network contains spatial information about the tumor because each capsule is a vector that carries such features of the object [48]. These features can include explainable clinical parameters about the tumor, such as tumor size, cell shape, tumor position, orientation, deformation, and texture.

Figure 2-3:

The deep learning architecture of the hepatocellular cancer (HCC) recurrence risk assessment system. We developed the i-RAPIT model with two CapsNet networks, one Radial Basis Function (RBF) neural network, and one multilayer perceptron. The first CapsNet network is used for compressing the features from the original magnetic resonance (MR) images. The second CapsNet network is used for compressing the features from the original pathological images. A Natural Language Processing (NLP) tool is used to extract features that are combined with imaging and demographic features as the input of the multiple feature RBF network, whose compressed features are used as input to the final decision-making multilayer perceptron. The output of the multilayer perceptron is the final assessment of HCC recurrence risk.

Model Input.

The i-RAPIT model input includes: (i) MR images and pathological images and (ii) image, pathological, and demographic features extracted from clinical reports by using the NLP method. Original patient MR image data were used as an input. To preserve the image information, we applied contrast adjustment and brightness correction and normalized the image size to 512 × 512 × 120 to ensure all input images have a consistent size. After the liver transplant, the resected tissue with tumor was delivered to the pathology department for histopathological imaging. Then, our imaging technician scanned the pathological image slides and transferred the digitized pathology images to the pathologist. We used the pathological images with tumor tissue after liver transplant in training our deep learning model. The pathologists marked the regions of interest (ROI) on the pathological images to teach the network to learn the features of suspicious tumor areas. Features extracted from the clinical reports of each transplant patient were mined from demographic and clinical signatures in our structured dataset and organized as a feature matrix, with different rows representing different feature types. The feature vector of each row was generated with the word2vec model of Mikolov et al. [38] and the GloVe model of Pennington et al. [39]. Zero-padding was applied to ensure the dimensionalities of feature vectors were identical across different feature types.
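The zero-padding step for the clinical feature matrix can be illustrated with a minimal sketch. The function name `pad_feature_rows`, the three feature types, and all numeric values are hypothetical and chosen only for illustration; the actual embedding dimensions are not stated in the text.

```python
from typing import List


def pad_feature_rows(rows: List[List[float]]) -> List[List[float]]:
    """Right-pad every feature vector with zeros to the length of the longest row."""
    if not rows:
        return []
    width = max(len(row) for row in rows)
    return [row + [0.0] * (width - len(row)) for row in rows]


# Hypothetical embeddings of different lengths, one row per feature type.
demographic = [0.12, -0.40, 0.33]
pathologic = [0.05, 0.91]
radiologic = [0.70, -0.15, 0.22, 0.08]

# Each row of the resulting matrix has the same dimensionality.
feature_matrix = pad_feature_rows([demographic, pathologic, radiologic])
```

After padding, every row has the same length, so the matrix can be passed to a network that expects a fixed input dimensionality.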

Model Output.

Our model uses Bayesian deep learning techniques [40] so that the i-RAPIT output for recurrence risk assessment is a probability distribution. This has an advantage over outputting only one conclusion. We can efficiently compute the mean of N probabilities and compare it to a pre-determined threshold to decide the final assessment: $y_{recurrence} = 1$ if $\frac{1}{N}\sum_{i=1}^{N} y_i > \tau$, where $y_{recurrence}$ is the final label, $y_i$ is one of the N outputs of the model, and $\tau$ is an optimal threshold determined during training by running K-fold cross-validation. More importantly, we can quantify the certainty of the model’s prediction by computing $certainty = 1 - \sigma^2$, where $\sigma^2 = \frac{1}{N-1}\sum_{i=1}^{N}(y_i - \bar{y})^2$ is the (unbiased) sample variance of the outputs and $\bar{y}$ is their mean. This value is high when most of the N recommendations are similar to the final assessment.
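The decision rule and certainty measure described above can be sketched in a few lines. This is a minimal illustration, assuming the mean must strictly exceed the threshold; the function name `assess_recurrence`, the sample outputs, and the threshold value 0.5 are hypothetical.

```python
from statistics import mean, variance
from typing import List, Tuple


def assess_recurrence(outputs: List[float], tau: float) -> Tuple[int, float]:
    """Return (label, certainty) from N stochastic model outputs.

    label     = 1 if the mean output exceeds tau, else 0
    certainty = 1 - unbiased sample variance of the outputs
    """
    if len(outputs) < 2:
        raise ValueError("at least two outputs are needed for a sample variance")
    label = 1 if mean(outputs) > tau else 0
    # statistics.variance divides by N - 1, i.e. the unbiased sample variance.
    certainty = 1.0 - variance(outputs)
    return label, certainty


# Hypothetical probabilities from N = 5 stochastic forward passes.
samples = [0.81, 0.77, 0.85, 0.79, 0.83]
label, certainty = assess_recurrence(samples, tau=0.5)
```

Because the hypothetical outputs cluster tightly around their mean, the sample variance is small and the certainty is close to 1.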

Model Setting.

Since the i-RAPIT model contains several subnetworks, we have defined the settings for each network and a procedure for optimizing the final model.

Medical Image Convergence Network.

The ability to generalize with fewer training examples is highly desirable given that the number of post-transplant patients with recurrence is significantly smaller than the number of images in popular datasets commonly used for training state-of-the-art deep networks. Therefore, we used a deep convergence model built on a popular network, CapsNet, for extracting low-dimensional visual features from MR images and pathological images. Prior work [41,42] shows that the convergence AI model outperforms state-of-the-art deep networks in image classification tasks while requiring an order of magnitude less training data.

A schematic diagram of the CapsNet network is shown in Figure 2-4. The CapsNet network contains two main parts: an encoder and a decoder. The encoder contains multiple convolutional layers (with rectified linear unit [ReLU] activation) and one fully connected layer. A hallmark of CapsNet networks is that they operate on groups of neurons (also called capsules, represented by vectors) instead of individual neurons (represented by scalar values). The lengths of the capsules (or magnitudes of the vectors) encode the identities of different visual features while the orientations (or directions of the vectors) encode the spatial relationships of these features. The explicit modeling of spatial information is the key property that allows CapsNet networks to effectively interpolate their predictions over novel spatial transformations and reduce the number of required training examples. Information propagates from one layer to another in the encoder through a dynamic voting mechanism (DVM). Specifically, a higher-level capsule is activated only when voting vectors computed from lower-level capsules have consistent orientations. Previous work replaced the computationally expensive DVM with a consistent voting mechanism, which allowed the training of deeper CapsNet architectures [43]. We used a CNN to model our decoder as described by Mobiny et al. [43]. The decoder tries to reconstruct the input from the final capsules, which forces the network to preserve as much information from the input as possible across the whole network.

Figure 2-4:

The architecture of the CapsNet network. In the encoder, the first two layers perform convolutional operations to construct the primary capsule structure. The decoder tries to reconstruct the input based on two fully connected layers from the final capsules, which forces the network to preserve as much information from the input as possible across the whole network.
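To make the idea of a capsule as a vector concrete, the following sketch shows the squashing nonlinearity commonly used in capsule networks, which rescales a vector so that its length lies between 0 and 1 while its direction is unchanged. This is an assumption for illustration only: the text does not specify which nonlinearity the CapsNet variant in [43] uses, and the function names and input values are hypothetical.

```python
import math
from typing import List


def squash(s: List[float], eps: float = 1e-9) -> List[float]:
    """Rescale s to length ||s||^2 / (1 + ||s||^2) while keeping its direction."""
    sq_norm = sum(x * x for x in s)
    norm = math.sqrt(sq_norm)
    # eps avoids division by zero for an all-zero capsule.
    scale = sq_norm / (1.0 + sq_norm) / (norm + eps)
    return [scale * x for x in s]


def vector_length(v: List[float]) -> float:
    """Euclidean length, read as the presence score of the encoded feature."""
    return math.sqrt(sum(x * x for x in v))


# Hypothetical raw capsule with length 5; after squashing its length is 25/26.
capsule = squash([3.0, 4.0])
presence = vector_length(capsule)
```

In this reading, the length of the squashed vector indicates how strongly a feature is present, and the unchanged direction carries properties such as position or orientation.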

Clinical Feature Radial Basis Function Network.

We used the stochastic kernel approximation approach [44] for multiple clinical-feature learning in the training algorithm, Stochastic Gradient Variational Bayes (SGVB). The RBF network includes one input layer, one feature layer, and one hidden layer. An input-output relation for a neuron is defined as , where $x_{clinical}$ is the input set, is the output features, $M_2$ is the neuron number, and $W_g$ and $W_q$ denote the learnable parameters.

Decision-Making Multilayer Perceptron.

The multilayer perceptron was designed as a feed-forward, supervised neural network topology. It consists of one input layer, one output layer, and several hidden layers. Each neuron sums its weighted inputs to obtain a net input and produces an output by passing this net input through a ReLU activation function. An input-output relation for a neuron with inputs is defined as , where $x_{MLP}$ is the input set, is the output of our model, $M_3$ is the number of neurons, and $W_h$ denotes the set of learnable parameters.
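The per-neuron computation, a weighted sum passed through a rectified linear unit (ReLU, the activation named for the CapsNet encoder), can be sketched as a small forward pass over concatenated features. All weights, biases, and feature values below are hypothetical, and the sigmoid on the output neuron is an assumption made here so that the result reads as a risk between 0 and 1; the text does not state the output activation.

```python
import math
from typing import Callable, List


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs: List[float], weights: List[List[float]],
          biases: List[float], activation: Callable[[float], float]) -> List[float]:
    """One fully connected layer: activation(w . x + b) for each neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical compressed features from the three upstream networks.
mr_features = [0.2, 0.5]
pathology_features = [0.1]
clinical_features = [0.4]
x_mlp = mr_features + pathology_features + clinical_features

# Hypothetical parameters: one hidden layer with two neurons, one output neuron.
hidden = dense(x_mlp, [[1.0, -1.0, 0.5, 0.0], [0.5, 0.5, 0.5, 0.5]], [0.0, -0.1], relu)
risk = dense(hidden, [[1.0, 2.0]], [-1.0], sigmoid)[0]
```

The first hidden neuron has a negative net input and is therefore silenced by ReLU, while the second passes its net input through unchanged.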

Bayesian Optimization for Tuning Parameters.

Previous work [41-42] demonstrates that Bayesian optimization is effective for modeling certainty in medical image classification tasks. We applied this method to tuning the i-RAPIT network. We also investigated the use of DropConnect as an alternative to Dropout because preliminary work [40] demonstrated that DropConnect generates significantly better uncertainty estimates for image classification and segmentation tasks. The main challenge in implementing deep learning is tuning the various parameters, assessing convergence, and selecting the optimal predictive model. Among the strategies that have been explored for optimizing the parameters of machine learning models, we adopted a Bayesian strategy as the preferred approach for parametric optimization. Most importantly, Bayesian variational optimization has demonstrated better results than other state-of-the-art approaches. Hence, we used this kind of Bayesian optimization to tune the various parameters in order to achieve an optimized deep learning model.

3. IMPLEMENTATION AND VALIDATION

3.1. DATA SOURCES

We constructed a database to aggregate large volumes of de-identified liver transplant patient information, including 1) MR and pathological images; 2) clinical reports and patient demographic variables; and 3) clinical and pathological signatures. The data were from Houston Methodist’s METEOR clinical data warehouse [35], the Houston Methodist J.C. Walter Jr Transplant Center database, and Houston Methodist’s picture archiving and communication system (PACS). We managed datasets linked by pseudo-Medical Record Numbers. Table 1 lists clinical signatures stored in the database.

Table 1.

Selected clinical features stored in the database for the convergence artificial intelligence model.

| Descriptors | Selected Features |
|---|---|
| Demographic Variables | Age at Transplant, Race, Height, Weight, BMI, Insurance |
| Clinical and Pathological Signatures | Donor Characteristics Including Age, Vasopressor Use, Diabetes Status, Weight; Cold Ischemia Time, Warm Ischemia, Blood Product Utilization; Time of Wait Listing, Locoregional Therapy, Ventilator Status, Dialysis, MELD Score, Length of Stay, Post-Operative Type |

Notes: BMI, body mass index; MELD, Model for End-Stage Liver Disease.

3.2. STUDY DESIGN

With the i-RAPIT database, we conducted a retrospective, non-interventional, observational study to validate our deep learning predictive model. There were no age, race, or family cancer history restrictions on the inclusion criteria. The study procedure was limited to review of transplant patients’ clinical data and use of the developed model for recurrence risk assessment. Subsequently, these were compared to real clinical follow-up records. For each study participant, we used patient information, such as demographics, imaging features, clinical, and report variables, in the model to predict categorization into either of the subgroups (cancer recurrence group and non-cancer recurrence group) listed above and then retrieved pathology findings from biopsy results as follow-up data. We compared predicted categorizations and risks with actual follow-up records provided by our clinical collaborators. Accuracy was assessed by means of statistical analysis. HCC recurrence as a histological diagnosis after transplant was considered as a positive result. Patients with recurrence were identified within this pool by MR imaging and extracting pathologic information (date of recurrence, stage, differentiation, number, tumor volume, distribution, location, size and presence or absence of vascular invasion) from biopsy and surgical pathology reports.

The number of liver transplant patients in this cohort was 109 (20 positive patients and 89 negative patients). The patients were randomly divided into two groups: 87 patients in the training group (80% of 109 patients) and 22 patients in the testing group (20% of 109 patients). The training group had 11 positive patients and 76 negative patients. The testing group had 9 positive patients and 13 negative patients. The case sample generated for training or testing includes, for each patient, (1) their four MR image stacks, (2) their divided pathological images, and (3) their clinical signatures.

As mentioned before, each patient has three MR image scans three months before liver transplant and one MR image scan three months after liver transplant, and each MR image scan consists of about 120 image slices. For the first CapsNet network, the number of MR image scans for training and testing in the dataset is 109 × 4 = 436. For digitized pathological images, we divided each image into small pieces due to their large size. The dimension of each piece was also 512 × 512 to fit the input of the second CapsNet network, resulting in 20 pieces per image. For the second CapsNet network, the total number of divided pathological images for training and testing is 109 × 20 = 2,180. We also used the clinical signatures of the 109 patients to train and test the RBF network. All image data were divided into a training group and a testing group according to the assignment of the patient cases. The deep learning model mainly contributed to the precise image feature extraction. The total number of training and testing samples for the final multilayer perceptron classifier was 109 (80% in the training group and 20% in the testing group).
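The division of a digitized pathological image into 512 × 512 pieces, and the resulting sample counts, can be sketched as follows. The slide region size of 2048 × 2560 pixels is a hypothetical value chosen only because it yields 20 non-overlapping pieces; the actual image dimensions and the handling of partial edge tiles are not stated in the text, and this sketch simply drops partial tiles.

```python
from typing import Iterator, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right)


def tile_boxes(height: int, width: int, tile: int = 512) -> Iterator[Box]:
    """Yield non-overlapping tile x tile boxes, dropping partial edge tiles."""
    for top in range(0, height - tile + 1, tile):
        for left in range(0, width - tile + 1, tile):
            yield (top, left, top + tile, left + tile)


# Hypothetical region size: 4 rows x 5 columns of 512 x 512 pieces.
boxes = list(tile_boxes(height=2048, width=2560))

patients = 109
mr_scans = patients * 4                    # four MR scans per patient
pathology_pieces = patients * len(boxes)   # 20 pieces per pathological image
```

With these assumed dimensions, the counts reproduce the 436 MR scans and 2,180 pathological image pieces given in the text.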

3.3. EVALUATION

Diagnostic MR images, pathological images with tumor tissue after liver transplant, and clinical signatures were used as the input of the deep learning model. If a patient had a suspicious area found in the follow-up screening one year after the liver transplant, the transplant surgeon requested a biopsy procedure to evaluate for HCC recurrence. The result from the biopsy report was used as the histological diagnosis of HCC recurrence after transplant and was considered the ground truth for evaluating our model. The absence of a suspicious area in the regular screening in one year after the liver transplant was likewise considered the ground truth for no HCC recurrence, i.e., a negative result. Validation of our model prediction was carried out in two ways. First, because the i-RAPIT model makes a binary classification of whether HCC recurs or not, we employed the area under the ROC curve (AUC). The AUC, which builds on the concept of the confusion matrix, is the probability that the model scores a randomly chosen patient who actually had HCC recurrence higher than a randomly chosen patient who did not, $P(score(x^+) > score(x^-))$. An AUC between 0.8 and 1.0 (especially closer to 1.0) indicates a strong prediction model. Second, we evaluated the precision and recall of the model and determined the F-1 score. Recall, also called the true positive rate (TPR), denotes the proportion of all real HCC recurrences that are correctly predicted:

$$Recall = \frac{TP}{P} = \frac{TP}{TP + FN}$$

Precision denotes the proportion of predicted HCC recurrence cases that are actual HCC recurrences:

$$Precision = \frac{TP}{TP + FP}$$

where FN is the number of false negative samples, P is the number of all positive samples, TP is the number of true positive samples, and FP is the number of false positive samples. The F-1 score, an appropriate measure of a test's accuracy, is the harmonic mean of precision and recall: $F_1 = 2 \times \frac{Precision \times Recall}{Precision + Recall}$. The study had 80% power at the 0.05 significance level to detect a median risk index ≥0.5, assuming a true acceptability proportion of 0.815. The model, together with the estimated parameters, was applied to the sample sizes, and the resulting predictions were compared with the data points.
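The evaluation measures can be sketched in code. The F-1 computation below uses the precision (0.89) and recall (0.80) reported in this paper; the confusion-matrix counts and the score lists are hypothetical toy values, and the pairwise AUC estimator that counts ties as one half is one common way to evaluate $P(score(x^+) > score(x^-))$, not necessarily the procedure used in the study.

```python
from typing import List, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN)."""
    return tp / (tp + fp), tp / (tp + fn)


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def auc_pairwise(pos_scores: List[float], neg_scores: List[float]) -> float:
    """Fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# F-1 from the reported precision and recall of the combined model.
reported_f1 = f1_score(precision=0.89, recall=0.80)

# Hypothetical toy values to exercise the other two helpers.
toy_precision, toy_recall = precision_recall(tp=4, fp=1, fn=1)
toy_auc = auc_pairwise(pos_scores=[0.9, 0.6], neg_scores=[0.7, 0.2, 0.1])
```

Rounded to two decimals, `reported_f1` equals 0.84, which agrees with the F-1 score listed for the i-RAPIT model.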

3.4. EXPERIMENTAL RESULTS

We applied the model settings for CapsNet network and RBF neural network from the prior works [43-44] and used a 10-fold cross-validation method on the training datasets to avoid the over-fitting issue. After the model was trained, we used our testing group data to evaluate the model. Table 2 lists the results of testing group data using our model, which was implemented in our risk assessment tool of HCC recurrence after liver transplantation. We expected high recall and precision and a strong accuracy in the validation.
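A 10-fold split of the 87 training patients might look like the following sketch. The text does not say whether the folds were shuffled or stratified by recurrence status, so the shuffling, the fixed seed, and the function name `k_fold_indices` are assumptions for illustration.

```python
import random
from typing import List, Tuple


def k_fold_indices(n: int, k: int, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, validation_indices) pairs covering range(n)."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        validation = folds[i]
        train = [j for fold_id, fold in enumerate(folds) if fold_id != i for j in fold]
        splits.append((train, validation))
    return splits


# 87 training patients, 10 folds: each patient is held out exactly once.
splits = k_fold_indices(n=87, k=10)
```

Each fold holds out 8 or 9 patients for validation while the model is fit on the remaining ones, so every training patient contributes to validation exactly once.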

Table 2.

Number of liver transplant cases and results of testing group data (Total accuracy: 82%).

| Number of patients | HCC recurrence (positive), based on biopsy reports | Non-recurrence (negative), based on biopsy reports |
|---|---|---|
| Total: 109 | 20 | 89 |
| Training group: 87 | 11 | 76 |
| Testing group: 22 | Actual HCC recurrence patients: 9 | Actual non-recurrence patients: 13 |
| | Accurately predicted HCC recurrence patients: 8 | Accurately predicted non-recurrence patients: 11 |

Note: HCC, hepatocellular carcinoma

The results for the testing group in Table 2 show that the number of actual HCC recurrence cases was 9, the number of accurately predicted HCC recurrence cases was 8, the number of actual non-recurrence cases was 13, and the number of accurately predicted non-recurrence cases was 11. The recall of our model was 80% and the precision was 89%. The total accuracy of our implemented model on these data was 82%.

We calculated the AUC and F-1 score to validate our model objectively. Since i-RAPIT is a multiple deep network based model, we also compared it with other network based models, including: RBF network only, the first CapsNet network only, the second CapsNet network only, the first CapsNet network plus the second CapsNet network, the first CapsNet network plus RBF network, and the second CapsNet network plus RBF network. Table 3 shows the AUC values for the different network based methods. The AUC values in Table 3 illustrate that the i-RAPIT model achieves a higher AUC than all six of the other methods. Table 3 also shows the F-1 scores from our model validation, where the results again indicate that the i-RAPIT model performs better than these other methods.

Table 3.

Area Under the Curve values using different network-combinations based methods for comparison

| Model | AUC | F-1 Score |
|---|---|---|
| RBF network only (Clinical signatures only) | 0.55 | 0.52 |
| First CapsNet network (MR images only) | 0.64 | 0.61 |
| Second CapsNet network (Pathological images only) | 0.64 | 0.61 |
| First CapsNet network + Second CapsNet network (MR images + Pathological images) | 0.68 | 0.65 |
| First CapsNet network + RBF network (MR images + Clinical signatures) | 0.78 | 0.75 |
| Second CapsNet network + RBF network (Pathological images + Clinical signatures) | 0.77 | 0.73 |
| Proposed i-RAPIT model | 0.87 | 0.84 |

Notes: AUC, area under the curve; convergent artificial intelligence; i-RAPIT, intelligent-augmented model for Risk Assessment of Post LIver Transplantation; MR, magnetic resonance; RBF, Radial Basis Function
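
For readers less familiar with the AUC, the sketch below computes it in Python as the probability that a randomly chosen recurrence case receives a higher risk score than a randomly chosen non-recurrence case, with ties counted as one half. The labels and scores are hypothetical toy values, not study data, and `roc_auc` is an illustrative helper rather than the evaluation code used for Table 3.

```python
def roc_auc(labels, scores):
    """AUC via pairwise comparison of positive and negative scores."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0      # positive ranked above negative
            elif p == n:
                wins += 0.5      # tie counts as half a win
    return wins / (len(pos) * len(neg))


# Hypothetical example: 3 recurrence cases (1) and 4 non-recurrence cases (0).
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]
print(f"AUC = {roc_auc(labels, scores):.3f}")
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, which is the scale on which the values in Table 3 should be read.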

4. DISCUSSION

Liver transplantation is currently the most successful treatment for HCC despite the risk of recurrence in patients with large HCC. To our knowledge, the i-RAPIT model is the first decision support tool that uses convergent AI techniques to stratify HCC-recurrence risk in liver transplant patients. It is intended to improve patient-physician engagement in reaching an informed decision about whether the risk of HCC recurrence after liver transplant is high. We presented the results of applying the i-RAPIT model to stratify recurrence risk for post-liver transplantation patients. Our risk prediction model demonstrates high sensitivity and specificity.

The advantages of the proposed multi-network model include the following. First, the i-RAPIT model can seamlessly learn from both annotated data and a large number of unannotated clinical image data. Combining labeled data with large amounts of unannotated data can help the deep learning model generalize better than using labeled data alone. Second, the model uses multiple types of input information, including images and clinical report features, whereas traditional deep networks, such as CNNs [37], can only use a single type of data. Third, it makes use of a high-performance and data-efficient deep network, which our prior work has shown to outperform state-of-the-art deep networks in terms of classification accuracy while using an order of magnitude less data [37]. Fourth, in addition to the final recurrence risk calculation, it employs an effective Bayesian deep learning method to estimate the certainty of its prediction.
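
One common way to obtain such a certainty estimate is Monte Carlo dropout, in which dropout is kept active at prediction time and the spread of repeated stochastic forward passes is read as uncertainty (the approach of reference [40]). The Python sketch below illustrates that idea on a toy logistic unit. It assumes, without confirmation from the text, that this is the kind of Bayesian approximation meant; all names, weights, and feature values are hypothetical.

```python
import math
import random
import statistics


def stochastic_forward(features, weights, bias, drop_prob, rng):
    """One forward pass of a toy logistic unit with dropout left on at inference."""
    keep_prob = 1.0 - drop_prob
    total = bias
    for x, w in zip(features, weights):
        if rng.random() < keep_prob:
            total += (x / keep_prob) * w   # inverted-dropout rescaling
    return 1.0 / (1.0 + math.exp(-total))


def mc_dropout_predict(features, weights, bias, drop_prob=0.2, passes=200, seed=0):
    """Return the mean prediction and its standard deviation over many passes."""
    rng = random.Random(seed)
    samples = [
        stochastic_forward(features, weights, bias, drop_prob, rng)
        for _ in range(passes)
    ]
    return statistics.mean(samples), statistics.stdev(samples)


# Hypothetical fused feature vector and weights, for illustration only.
features = [0.8, -0.3, 1.2]
weights = [1.5, 0.7, -0.4]
bias = -0.2

mean_risk, spread = mc_dropout_predict(features, weights, bias)
print(f"estimated risk = {mean_risk:.2f}, uncertainty (std) = {spread:.2f}")
```

A small standard deviation suggests the prediction is stable under the sampled sub-networks, whereas a large one flags a case whose risk estimate should be treated with more caution.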

In the i-RAPIT model, the CapsNet networks extract features from MR images and pathological images, and these features contain spatial information that is fundamentally different from CNN features. Previous work [48] performed an activation maximization analysis and showed that CapsNet features describe the facets of tumor objects or cancer cell conditions better than CNN features. Explainable features used in a machine learning model are also believed to improve prediction performance in the clinic [50]. The CapsNet networks used in the i-RAPIT model, with routing and reconstruction, retain object spatial information across all capsule vectors. These capsule vectors can capture clinical parameters of tumor objects or cancer cells, which is a major benefit over CNN features. By combining these features with the clinical signatures, the i-RAPIT model explores multiple types of data to identify clinical markers that predict recurrence after liver transplant for HCC.
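
To make the notion of a capsule vector concrete, the Python sketch below implements the squashing nonlinearity from the original capsule network formulation, which rescales a capsule's raw output so that its length lies between 0 and 1 while its direction is preserved. Whether i-RAPIT uses exactly this form is an assumption, and the input vector is a hypothetical example.

```python
import math


def squash(vector, eps=1e-9):
    """Shrink a capsule's raw output to length < 1 without changing its direction."""
    norm_sq = sum(c * c for c in vector)
    norm = math.sqrt(norm_sq)
    # Length becomes norm_sq / (1 + norm_sq); eps guards against division by zero.
    scale = norm_sq / (1.0 + norm_sq) / (norm + eps)
    return [scale * c for c in vector]


raw_capsule = [3.0, 4.0]          # hypothetical raw capsule output, length 5
activated = squash(raw_capsule)
length = math.sqrt(sum(c * c for c in activated))
print(f"squashed vector = {activated}, length = {length:.3f}")
```

In this formulation the length of the squashed vector is read as the probability that the entity represented by the capsule is present, while its orientation encodes the entity's properties, such as pose or texture.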

The i-RAPIT model was built, refined, and validated with patient data from a single large urban hospital system in one of the most ethnically diverse cities (Houston, TX), and the multi-site testing was performed with data from hospitals still restricted to South Texas. This may affect the generalizability of the study. A multi-center study with a wider geographic spread is being planned to assess the scalability of the risk prediction model. The risk assessment is intended to support liver transplantation decision making for recipients with HCC and is not a definitive diagnostic tool. For example, patients assessed as ‘intermediate risk’ (model output close to 0.5) by the i-RAPIT model would still need to be engaged by their healthcare provider to arrive at an informed decision on whether to proceed with a liver transplantation.
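
The mapping from a model output to a risk group can be sketched in Python as follows. The text only states that an output close to 0.5 is treated as intermediate risk; the `margin` of 0.1 around 0.5 and the function name `risk_band` are hypothetical choices made for illustration.

```python
def risk_band(probability, margin=0.1):
    """Map a recurrence probability to a coarse risk group.

    `margin` is a hypothetical half-width of the intermediate band around 0.5.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if abs(probability - 0.5) <= margin:
        return "intermediate"
    return "high" if probability > 0.5 else "low"


for p in (0.12, 0.52, 0.91):
    print(f"model output {p:.2f} -> {risk_band(p)} risk")
```

Cases falling in the intermediate band are the ones for which the model output alone is least informative and discussion between patient and provider matters most.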

5. CONCLUSION

We adopted the convergent AI strategy to develop an imageomics-based, multi-network deep learning model, i-RAPIT, using multi-scale image and clinical datasets of liver transplantation patients to predict recurrence-free survival in liver transplantation recipients with HCC. We validated the performance of the model using HCC patient data from a major liver transplant center in the United States. The i-RAPIT model is intended for online use by physicians to identify patients in high recurrence risk groups, for whom liver transplantation may need to be reconsidered. The model may also allow transplantation of HCC patients based on accurate physiological and clinical data, rather than the current selection criterion based solely on tumor size. The model has the potential to enhance the outcomes of liver transplantation for HCC and expand the number of patients who could receive, but are currently denied, a life-saving organ and an opportunity for freedom from HCC.

ACKNOWLEDGMENT

This research is supported by Tsing Tsung and Wei Fong Chao Foundation, John S. Dunn Research Foundation, Johnsson Estate, NIH R01CA251710-01, and NIH U01 CA253553-01 to S.T.C.W. We would like to thank Dr. Rebecca Danforth for proofreading the manuscript.

REFERENCES

- [1].American Cancer Society. Facts & Figures 2020. American Cancer Society. Atlanta, Ga. 2020.

- [2].Daoud A, Teeter L, Ghobrial RM, Graviss EA, Mogawer S, Sholkamy A, El-Shazli M, Gaber AO: Transplantation for hepatocellular carcinoma: is there a tumor size limit? Transplantation proceedings 2018, 50(10):3577–3581.

- [3].Mazzaferro V, Regalia E, Doci R, Andreola S, Pulvirenti A, Bozzetti F, Montalto F, Ammatuna M, Morabito A, Gennari L: Liver transplantation for the treatment of small hepatocellular carcinomas in patients with cirrhosis. The New England journal of medicine 1996, 334(11):693–699.

- [4].Wiesner R, Edwards E, Freeman R, Harper A, Kim R, Kamath PS, Kremers WK, Lake JR, Howard T, Merion RM et al. : Model for end-stage liver disease (MELD) and allocation of donor livers. Gastroenterology 2003, 124(1):91–96.

- [5].Mazzaferro V, Llovet JM, Miceli R, Bhoori S, Schiavo M, Mariani L, Camerini T, Roayaie S, Schwartz ME, Grazi GL et al. : Predicting survival after liver transplantation in patients with hepatocellular carcinoma beyond the Milan criteria: a retrospective, exploratory analysis. The Lancet Oncology 2009, 10(1):35–43.

- [6].Kim WR, Lake JR, Smith JM, Schladt DP, Skeans MA, Noreen SM, Robinson AM, Miller E, Snyder JJ, Israni AK et al. : OPTN/SRTR 2017 Annual Data Report: Liver. American Journal of Transplantation 2019, 19(S2):184.

- [7].Gillies Robert J., Kinahan Paul E., and Hricak Hedvig. "Radiomics: images are more than pictures, they are data." Radiology 278, no. 2 (2016): 563–577.

- [8].Wu Jia, Tha Khin Khin, Xing Lei, and Li Ruijiang. "Radiomics and radiogenomics for precision radiotherapy." Journal of radiation research 59, no. suppl_1 (2018): i25–i31.

- [9].Vallières Martin, Zwanenburg Alex, Badic Bodgan, Rest Catherine Cheze Le, Visvikis Dimitris, and Hatt Mathieu. "Responsible radiomics research for faster clinical translation." (2018): 189–193.

- [10].Lambin Philippe, Leijenaar Ralph TH, Deist Timo M., Peerlings Jurgen, De Jong Evelyn EC, Van Timmeren Janita, Sanduleanu Sebastian et al. "Radiomics: the bridge between medical imaging and personalized medicine." Nature reviews Clinical oncology 14, no. 12 (2017): 749–762.

- [11].Rizzo Stefania, Botta Francesca, Raimondi Sara, Origgi Daniela, Fanciullo Cristiana, Morganti Alessio Giuseppe, and Bellomi Massimo. "Radiomics: the facts and the challenges of image analysis." European radiology experimental 2, no. 1 (2018): 1–8.

- [12].Lao Jiangwei, Chen Yinsheng, Li Zhi-Cheng, Li Qihua, Zhang Ji, Liu Jing, and Zhai Guangtao. "A deep learning-based radiomics model for prediction of survival in glioblastoma multiforme." Scientific reports 7, no. 1 (2017): 1–8.

- [13].Rogers William, Seetha Sithin Thulasi, Refaee Turkey AG, Lieverse Relinde IY, Granzier Renée WY, Ibrahim Abdalla, Keek Simon A. et al. "Radiomics: from qualitative to quantitative imaging." The British Journal of Radiology 93, no. 1108 (2020): 20190948.

- [14].Zhang Bin, Tian Jie, Dong Di, Gu Dongsheng, Dong Yuhao, Zhang Lu, Lian Zhouyang et al. "Radiomics features of multiparametric MRI as novel prognostic factors in advanced nasopharyngeal carcinoma." Clinical Cancer Research 23, no. 15 (2017): 4259–4269.

- [15].Zhou Mu, Scott Jacob, Chaudhury Baishali, Hall Lawrence, Goldgof Dmitry, Yeom Kristen W., Iv Michael et al. "Radiomics in brain tumor: image assessment, quantitative feature descriptors, and machine-learning approaches." American Journal of Neuroradiology 39, no. 2 (2018): 208–216.

- [16].Huang Shih-ying, Franc Benjamin L., Harnish Roy J., Liu Gengbo, Mitra Debasis, Copeland Timothy P., Arasu Vignesh A. et al. "Exploration of PET and MRI radiomic features for decoding breast cancer phenotypes and prognosis." NPJ breast cancer 4, no. 1 (2018): 1–13.

- [17].Van Griethuysen Joost JM, Fedorov Andriy, Parmar Chintan, Hosny Ahmed, Aucoin Nicole, Narayan Vivek, Beets-Tan Regina GH, Fillion-Robin Jean-Christophe, Pieper Steve, and Aerts Hugo JWL. "Computational radiomics system to decode the radiographic phenotype." Cancer research 77, no. 21 (2017): e104–e107.

- [18].Thawani Rajat, McLane Michael, Beig Niha, Ghose Soumya, Prasanna Prateek, Velcheti Vamsidhar, and Madabhushi Anant. "Radiomics and radiogenomics in lung cancer: a review for the clinician." Lung Cancer 115 (2018): 34–41.

- [19].Valdora Francesca, Houssami Nehmat, Rossi Federica, Calabrese Massimo, and Tagliafico Alberto Stefano. "Rapid review: radiomics and breast cancer." Breast cancer research and treatment 169, no. 2 (2018): 217–229.

- [20].Chaddad Ahmad, Kucharczyk Michael J., and Niazi Tamim. "Multimodal radiomic features for the predicting gleason score of prostate cancer." Cancers 10, no. 8 (2018): 249.

- [21].Jeong Woo Kyoung, Jamshidi Neema, Felker Ely Richard, Raman Steven Satish, and Lu David Shinkuo. "Radiomics and radiogenomics of primary liver cancers." Clinical and molecular hepatology 25, no. 1 (2019): 21.

- [22].Wang Yu, Cao Luying, Dey Nilanjan, Ashour Amira S., and Shi Fuqian. "Mice Liver Cirrhosis Microscopic Image Analysis Using Gray Level Co-Occurrence Matrix and Support Vector Machines." In ITITS, pp. 509–515. 2017.

- [23].Ladju Rusdina Bte, Pascut Devis, Massi Muhammad Nasrum, Tiribelli Claudio, and Sukowati Caecilia HC. "Aptamer: A potential oligonucleotide nanomedicine in the diagnosis and treatment of hepatocellular carcinoma." Oncotarget 9, no. 2 (2018): 2951.

- [24].Miao Ruoyu, Luo Haitao, Zhou Huandi, Li Guangbing, Bu Dechao, Yang Xiaobo, Zhao Xue et al. "Identification of prognostic biomarkers in hepatitis B virus-related hepatocellular carcinoma and stratification by integrative multi-omics analysis." Journal of hepatology 61, no. 4 (2014): 840–849.

- [25].Chen Yang, Luo Yan, Huang Wei, Hu Die, Zheng Rong-qin, Cong Shu-zhen, Meng Fan-kun et al. "Machine-learning-based classification of real-time tissue elastography for hepatic fibrosis in patients with chronic hepatitis B." Computers in biology and medicine 89 (2017): 18–23.

- [26].Chan Anthony WH, Zhong Jianhong, Berhane Sarah, Toyoda Hidenori, Cucchetti Alessandro, Shi KeQing, Tada Toshifumi et al. "Development of pre and post-operative models to predict early recurrence of hepatocellular carcinoma after surgical resection." Journal of hepatology 69, no. 6 (2018): 1284–1293.

- [27].Li Wei, Huang Yang, Zhuang Bo-Wen, Liu Guang-Jian, Hu Hang-Tong, Li Xin, Liang Jin-Yu et al. "Multiparametric ultrasomics of significant liver fibrosis: A machine learning-based analysis." European radiology 29, no. 3 (2019): 1496–1506.

- [28].He Lili, Li Hailong, Dudley Jonathan A., Maloney Thomas C., Brady Samuel L., Somasundaram Elanchezhian, Trout Andrew T., and Dillman Jonathan R.. "Machine learning prediction of liver stiffness using clinical and T2-weighted MRI radiomic data." American Journal of Roentgenology 213, no. 3 (2019): 592–601.

- [29].Choi Kyu Jin, Jang Jong Keon, Lee Seung Soo, Sung Yu Sub, Shim Woo Hyun, Kim Ho Sung, Yun Jessica et al. "Development and validation of a deep learning system for staging liver fibrosis by using contrast agent–enhanced CT images in the liver." Radiology 289, no. 3 (2018): 688–697.

- [30].Frid-Adar Maayan, Diamant Idit, Klang Eyal, Amitai Michal, Goldberger Jacob, and Greenspan Hayit. "GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification." Neurocomputing 321 (2018): 321–331.

- [31].Sun Changjian, Guo Shuxu, Zhang Huimao, Li Jing, Chen Meimei, Ma Shuzhi, Jin Lanyi, Liu Xiaoming, Li Xueyan, and Qian Xiaohua. "Automatic segmentation of liver tumors from multiphase contrast-enhanced CT images based on FCNs." Artificial intelligence in medicine 83 (2017): 58–66.

- [32].Chlebus Grzegorz, Schenk Andrea, Moltz Jan Hendrik, van Ginneken Bram, Hahn Horst Karl, and Meine Hans. "Automatic liver tumor segmentation in CT with fully convolutional neural networks and object-based postprocessing." Scientific reports 8, no. 1 (2018): 1–7.

- [33].Ronneberger Olaf, Fischer Philipp, and Brox Thomas. "U-net: Convolutional networks for biomedical image segmentation." In International Conference on Medical image computing and computer-assisted intervention, pp. 234–241. Springer, Cham, 2015.

- [34].Yan Pingkun, Zhou Xiaobo, Shah Mubarak, and Wong Stephen TC. "Automatic segmentation of high-throughput RNAi fluorescent cellular images." IEEE Transactions on Information Technology in Biomedicine 12, no. 1 (2008): 109–117.

- [35].Puppala Mamta, He Tiancheng, Chen Shenyi, Ogunti Richard, Yu Xiaohui, Li Fuhai, Jackson Robert, and Wong Stephen TC. "METEOR: an enterprise health informatics environment to support evidence-based medicine." IEEE Transactions on Biomedical Engineering 62, no. 12 (2015): 2776–2786.

- [36].Patel Tejal A., Puppala Mamta, Ogunti Richard O., Ensor Joe E., He Tiancheng, Shewale Jitesh B., Ankerst Donna P. et al. "Correlating mammographic and pathologic findings in clinical decision support using natural language processing and data mining methods." Cancer 123, no. 1 (2017): 114–121.

- [37].Azer Samy A. "Application of Convolutional Neural Networks in Gastrointestinal and Liver Cancer Images: A Systematic Review." In Deep Learners and Deep Learner Descriptors for Medical Applications, pp. 183–211. Springer, Cham, 2020.

- [38].Mikolov T, Yih W-t, Zweig G: Linguistic Regularities in Continuous Space Word Representations. In: Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: 2013; Atlanta, Georgia: Association for Computational Linguistics; 2013: 746–751.

- [39].Pennington J, Socher R, Manning C: Glove: Global Vectors for Word Representation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP): 2014; Doha, Qatar Association for Computational Linguistics; 2014: 1532–1543.

- [40].Gal Y, Ghahramani Z: Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In: international conference on machine learning: 2016; 2016: 1050–1059

- [41].He T, Puppala M, Ezeana CF, Huang Y-s, Chou P-h, Yu X, Chen S, Wang L, Yin Z, Danforth RL: A Deep Learning–Based Decision Support Tool for Precision Risk Assessment of Breast Cancer. JCO clinical cancer informatics 2019, 3:1–12.

- [42].He T, Puppala M, Ogunti R, Mancuso JJ, Yu X, Chen S, Chang JC, Patel TA, Wong ST: Deep learning analytics for diagnostic support of breast cancer disease management. In: 2017 IEEE EMBS international conference on biomedical & health informatics (BHI): 2017: IEEE; 2017: 365–368.

- [43].Mobiny Aryan, and Van Nguyen Hien. "Fast capsnet for lung cancer screening." In International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 741–749. Springer, Cham, 2018.

- [44].Wilson Andrew G., Hu Zhiting, Salakhutdinov Russ R., and Xing Eric P.. "Stochastic variational deep kernel learning." In Advances in Neural Information Processing Systems, pp. 2586–2594. 2016.

- [45].Victor David W. III, Monsour Howard P. Jr, Boktour Maha, Lunsford Keri, Balogh Julius, Graviss Edward A., Nguyen Duc T. et al. "Outcomes of Liver Transplantation for Hepatocellular Carcinoma Beyond the University of California San Francisco Criteria: A Single-center Experience." Transplantation 104, no. 1 (2020): 113–121.

- [46].Yao Francis Y., Ferrell Linda, Bass Nathan M., Watson Jessica J., Bacchetti Peter, Venook Alan, Ascher Nancy L., and Roberts John P.. "Liver transplantation for hepatocellular carcinoma: expansion of the tumor size limits does not adversely impact survival." Hepatology 33, no. 6 (2001): 1394–1403.

- [47].Mazzaferro Vincenzo, Llovet Josep M., Miceli Rosalba, Bhoori Sherrie, Schiavo Marcello, Mariani Luigi, Camerini Tiziana et al. "Predicting survival after liver transplantation in patients with hepatocellular carcinoma beyond the Milan criteria: a retrospective, exploratory analysis." The lancet oncology 10, no. 1 (2009): 35–43.

- [48].Punjabi Arjun, Schmid Jonas, and Katsaggelos Aggelos K.. "Examining the Benefits of Capsule Neural Networks." arXiv preprint arXiv:2001.10964 (2020).

- [49].Xie Weiying, Shi Yanzi, Li Yunsong, Jia Xiuping, and Lei Jie. "High-quality spectral-spatial reconstruction using saliency detection and deep feature enhancement." Pattern Recognition 88 (2019): 139–152.

- [50].Singh Amitojdeep, Sengupta Sourya, and Lakshminarayanan Vasudevan. "Explainable deep learning models in medical image analysis." Journal of Imaging 6, no. 6 (2020): 52.