Abstract

The coronavirus disease-2019 (COVID-19) pandemic has changed the conduct of clinical trials. For studies with physical function and physical activity outcomes that require in-person participation, thoughtful approaches to transitioning to the remote research environment are critical. Here, we share our experiences in transitioning from in-person to remote assessments of physical function and activity during the pandemic and highlight key considerations for success. We describe the development of the remote assessment protocol, the integration of a two-way video platform, and the implementation of remote assessments. In particular, we discuss procedural challenges and considerations in transitioning to and conducting remote assessments in terms of efforts to maintain participant safety, maximize study efficiency, and sustain trial integrity. Plans for triangulation and analysis are also discussed. Although the role of telehealth platforms and research activities in remote settings is still growing, our experiences suggest that adopting remote assessment strategies is useful and convenient for assessing study outcomes during, and possibly even beyond, the current pandemic.

Trial register and number: ClinicalTrials.gov [NCT03728257].

Keywords: COVID-19, Clinical trials, Remote assessments, Physical function, Physical activity

1. Introduction

To date, coronavirus disease-2019 (COVID-19) has resulted in over 31 million cases and 560,000 deaths in the U.S. alone [1] and has led to socio-economic losses across all sectors [2]. It is no exaggeration to say that COVID-19 has had detrimental effects on global health and that its ripple effects have disrupted every aspect of life as we know it. These changes have affected the conduct of highly choreographed clinical trials, which require timely assessments to maintain scientific validity in evaluating the safety and efficacy of new interventions [3]. COVID-19 restrictions and infection mitigation strategies have greatly limited participation in face-to-face clinical trial research activities. However, when possible, it is critical to support continuing clinical trials using creative and thoughtful approaches so that the impact of interventions on improving health and preventing disability can continue to be evaluated. Rather than halting clinical trials, investigators are considering ways to conduct them remotely while maintaining safety, following good clinical practice standards, and preserving the integrity of the trial. The first priority when considering any change in a study protocol is to protect the rights, safety and well-being of trial participants [4]. This is particularly important during a pandemic for trials like ours that include lung transplant recipients (LTR), whose immunosuppression contributes to increased vulnerability to emerging infectious agents [5].

Ironically, our ongoing randomized controlled trial, Lung Transplant GO (LTGO): Improving Self-Management of Exercise After Lung Transplantation, was designed to evaluate a behavioral exercise intervention that provides individualized exercise training for LTR in their homes via a telehealth platform. While the intervention is delivered remotely via telehealth, many of our primary outcome measures were designed for face-to-face assessment. Based on the guidance of our university, state-wide health officials, and Human Research Protection Office, our study was able to continue remotely with appropriately modified protocols. While our original protocol already included strategies to recruit participants, collect self-report data, and deliver our telehealth intervention remotely, there were other factors to consider before converting to remote assessments for our primary study outcomes: physical function and physical activity.

The transition of physical function and activity outcomes to remote assessment was guided by recent recommendations for conducting remote assessments in clinical trials during COVID-19 [6] and for preserving trial integrity during the pandemic [7,8]. These considerations included the availability of remote counterparts to face-to-face measures with established reliability and validity; the feasibility and efficacy of performing the assessment remotely; and the need to consider alternative approaches for remote assessment, such as the collection of self-reported data that could be used as surrogate measures. We also weighed the potential disadvantages of performing assessments of physical function and activity remotely, such as safety concerns (e.g., falling) and the availability of equipment, against the potential advantages of maintaining health and safety during the pandemic. Therefore, the purposes of this paper are to share our experience adapting outcome measures to the remote environment to allow the LTGO trial to proceed during the COVID-19 pandemic, and to inform other researchers who have similar outcomes so that remote measurements are valid and safe and trial integrity is sustained.

2. LTGO trial

LTGO, a multi-component telerehabilitation exercise intervention, is currently being evaluated in an ongoing randomized, controlled trial (National Clinical Trial: 03728257). LTR are randomized to an enhanced usual care group or the LTGO intervention, a behavioral exercise intervention that provides individualized exercise training and behavioral coaching for LTR in their home via a telehealth platform. The LTGO intervention is delivered in two phases. Phase 1: Intensive home-based exercise training and behavioral coaching sessions via a videoconferencing platform; and Phase 2: Transition to exercise self-management with a behavioral contract followed by 3-monthly telephone sessions to provide behavioral coaching and exercise reinforcement. The primary study aims are to evaluate the efficacy of LTGO in improving physical function and physical activity from baseline to 3 and 6 months. Eligibility criteria are shown in Table 1.

Table 1.

Inclusion and exclusion criteria for study population.

| Inclusion criteria | Exclusion criteria |
|---|---|

2.1. Outcome measures: pre and during COVID-19

The measures of physical function and physical activity were examined to determine which outcomes could be assessed remotely and, for those not suitable for remote assessment, which surrogate measures were available (Table 2).

Table 2.

Summary of measures.

| Domain | Outcome variable | Pre-COVID-19 measure | During COVID-19 remote measure |
|---|---|---|---|
| Physical function | Walking ability | 6 Minute Walk Test assessed in person: distance walked in feet in 6 min (longer distance indicates better walking ability) | 30-Second Sit-to-stand Test (30s-STS) assessed remotely via Zoom |
| | Balance | Berg Balance Scale assessed in person: 14 items related to balance using a 5-point ordinal scale from 0 (lowest level) to 4 (highest level); total scores range from 0 to 56 (higher scores indicate better balance) | Berg Balance Scale assessed remotely via Zoom |
| | Lower body strength | 30-Second Sit-to-stand Test assessed in person: the number of sit-stand repetitions performed in 30 s (more repetitions indicate better lower body strength) | 30s-STS assessed remotely via Zoom |
| | Maximal exercise capacity | Cardiopulmonary Exercise Test performed in person in specialized laboratory: watts and blood pressure at isoworkload | Unable to perform during COVID-19 remotely due to potential aerosolized spread |
| | Quality of life | St. George Respiratory Questionnaire (SGRQ) administered in person or remotely: 50 items to assess overall health, daily life, and perceived wellbeing (higher scores indicate more limitations and poorer quality of life) | SGRQ administered remotely via phone |
| Physical activity | Steps per day | Fitbit: total steps/day | Fitbit: total steps/day |
| | Time spent in light, moderate, & vigorous activities | Actigraph GT3X: mins/day per level of activity | Actigraph GT3X: mins/day per level of activity |
| | | | International Physical Activity Questionnaire Short Form administered remotely via phone |

2.1.1. Physical function

2.1.1.1. Walking ability

The 6 Minute Walk Test (6MWT) is a standardized, well-validated measure of functional capacity [9]. Testing is conducted according to American Thoracic Society Guidelines, and participants are asked to walk as far as possible on a ~37.56-m track in 6 min while an assessor observes and keeps time. Conducting the 6MWT remotely would require long, unobstructed hallways beyond the space available in most residences. A PubMed search for “remote 6MWT” revealed several promising options for using a smartphone to conduct the 6MWT [10,11]. However, the apps and algorithms were not available for download by the public or by the research team, preventing their use for remote 6MWTs in our study. The 4-m gait speed (4MGS) test is another option, as it is strongly correlated with the 6MWT (r = 0.85) [12]. However, the test requires a 1-m acceleration zone, a 4-m testing zone, and a 1-m deceleration zone (6 m = 19.7 ft) [13]. Incorporating mobility assessments that require a set walking distance raised concerns about feasibility and measurement accuracy given the uncontrolled remote setting (e.g., lack of space in the participant's home, difficulty in judging distance accurately via video conferencing). The 30-s sit-to-stand test (30-s STS) measures lower body strength and has been recommended as an alternative when conditions do not permit the 6MWT [14]. Multiple studies in different samples found that the 30-s STS was moderately correlated with the 6MWT, with correlations of r = 0.528 to 0.660 (p < .001) in healthy young adults [15], adults with chronic obstructive pulmonary disease [16], and adults with pulmonary hypertension [17]. Since the 30-s STS test was already included in our protocol for assessment of lower body strength, it was selected as a reliable remote surrogate measure for walking ability.

2.1.1.2. Balance

The Berg Balance Scale (BBS) [18,19] tests the participant's ability to handle tasks that require balance (e.g., sitting to standing, placing alternate foot on stool). We modified the protocol to perform the test remotely via two-way video; methods are further described in the remote Physical Function Assessment (PFA) protocol below.
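The BBS scoring rule (14 items, each rated 0 to 4, for a total of 0 to 56) can be illustrated with a short Python sketch. This is not the study's scoring software; the function name `berg_total` is hypothetical, and returning `None` when any item is unscored (for example, an item skipped because equipment was missing) is an assumption made for illustration.

```python
from typing import Optional, Sequence

BBS_ITEMS = 14            # number of Berg Balance Scale items
BBS_MIN, BBS_MAX = 0, 4   # each item is rated on a 5-point ordinal scale


def berg_total(item_scores: Sequence[Optional[int]]) -> Optional[int]:
    """Return the BBS total (0-56), or None if any item is unscored.

    Treating an incomplete assessment as missing, rather than summing
    the available items, is an assumption made for this sketch.
    """
    if len(item_scores) != BBS_ITEMS:
        raise ValueError(f"expected {BBS_ITEMS} item scores, got {len(item_scores)}")
    if any(score is None for score in item_scores):
        return None
    for score in item_scores:
        if not BBS_MIN <= score <= BBS_MAX:
            raise ValueError(f"item score {score} is outside {BBS_MIN}-{BBS_MAX}")
    return sum(item_scores)
```

Keeping incomplete totals as missing, instead of reporting a partial sum, avoids mistaking a skipped item for poor balance.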

2.1.1.3. Lower body strength

The 30-s STS measures lower body strength [20]. A trained assessor instructs the participant to: 1) sit in the middle of a chair (17-in. height, with a straight back without armrests); 2) place hands on the opposite shoulder crossed at the wrists; 3) keep feet flat on the floor and back straight. The participant is asked to rise to a full standing position, sit down on the chair and repeat this move for up to 30 s. The protocol was modified to perform the 30-s STS remotely via two-way video; methods are further described in the remote PFA protocol below.

2.1.1.4. Maximal exercise capacity

A Cardiopulmonary Exercise Test (CPET) is performed in a laboratory setting to determine physical function (maximum exercise watts for age, height, weight, and gender) based on isoworkload measurements of cardiopulmonary metabolic responses at the point of the participant's volitional exhaustion [21]. Due to COVID-19 restrictions, the clinical laboratory suspended non-essential pulmonary testing, forcing us to exclude the direct measure of maximal exercise capacity until laboratory testing can be resumed.

2.1.1.5. Quality of life

The St. George Respiratory Questionnaire (SGRQ) [22] is a 2-part, 50-item, self-report questionnaire administered in person or by phone to assess overall health, daily life, and perceived wellbeing. Administration of the SGRQ already includes remote data collection options, so no modification was required.

2.1.2. Physical activity

2.1.2.1. Steps per day (SPD)

All participants are provided a Fitbit Charge 3 (Fitbit Inc., San Francisco, CA). The number of days that the participant wears the Fitbit is used to document adherence to self-monitoring of walking goals. SPD are already monitored remotely, so no modification was required.

2.1.2.2. Time spent in light, moderate, and vigorous activities

All participants are provided an Actigraph GT3X accelerometer (ActiGraph, LLC., Pensacola, FL) to measure physical activity for a 7-day period at baseline, 3 and 6 months. Participants are instructed to: 1) wear the Actigraph on their waist during waking hours (except during bathing or showering and during imaging studies such as bronchoscopy), 2) keep a paper activity diary, and 3) return the device and diary by mail in a pre-paid envelope. The Actigraph provides tri-axial vector data in activity units, metabolic equivalent tasks (METs), or kilocalories. Validity has been established in people with chronic lung disease [23]. Physical activity is reported as average minutes spent per day in light (<3 METs) and moderate/vigorous (≥3 METs) activity at each time point. Because of the lack of a centralized work location, limited face-to-face participant contact, and increased turnaround time for receiving devices from participants during COVID-19, Actigraph data were not always available. Therefore, the International Physical Activity Questionnaire Short Form (IPAQ-S) [24], administered over the telephone, was added as a surrogate measure of time spent in certain activities during the pandemic. The IPAQ-S is a 7-item, self-reported physical activity assessment completed either by telephone or by mail. The data can be expressed as a categorical score (low, moderate, or high) or a continuous score of metabolic equivalent-minutes per week. Psychometric evaluations report good reliability, with a test-retest Spearman's coefficient of 0.64 for the telephone version and 0.79 for the self-administered version, which we adapted in this study. Evidence of its validity is provided by Spearman's coefficients between accelerometer-derived activity and self-reported activity of r = 0.42 to 0.52 for total physical activity and 0.77 to 0.86 for greater than 150 min of physical activity/week [24].
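To make the two physical activity summaries concrete, the following Python sketch shows one way they could be computed. It is illustrative only and is not the study's processing pipeline. The per-minute MET input format and both function names are hypothetical, and the MET weights for the IPAQ-S (3.3 for walking, 4.0 for moderate, 8.0 for vigorous activity) come from the published IPAQ short-form scoring protocol rather than from this paper.

```python
from statistics import mean
from typing import Dict, Iterable, Tuple

# Threshold stated in the text: light < 3 METs, moderate/vigorous >= 3 METs.
MVPA_THRESHOLD_METS = 3.0

# MET weights from the IPAQ short-form scoring protocol (an assumption
# here; the paper does not list them).
IPAQ_METS = {"walking": 3.3, "moderate": 4.0, "vigorous": 8.0}


def average_daily_minutes(days: Iterable[Iterable[float]]) -> Tuple[float, float]:
    """Average minutes/day in light and moderate/vigorous activity.

    `days` holds one sequence per wear day; each value is the MET level
    for one minute of wear time (a hypothetical pre-processed export).
    """
    light_minutes, mvpa_minutes = [], []
    for minute_mets in days:
        minute_mets = list(minute_mets)
        mvpa = sum(1 for m in minute_mets if m >= MVPA_THRESHOLD_METS)
        mvpa_minutes.append(mvpa)
        light_minutes.append(len(minute_mets) - mvpa)
    return mean(light_minutes), mean(mvpa_minutes)


def ipaq_met_minutes_per_week(days_per_week: Dict[str, int],
                              minutes_per_day: Dict[str, float]) -> float:
    """Continuous IPAQ-S score: sum of MET weight x minutes/day x days/week."""
    return sum(
        IPAQ_METS[activity] * minutes_per_day[activity] * days_per_week[activity]
        for activity in IPAQ_METS
    )
```

For example, a participant who reports walking 30 min on 5 days and moderate activity for 20 min on 2 days, with no vigorous activity, would score 3.3 × 30 × 5 + 4.0 × 20 × 2 = 655 MET-minutes per week under these weights.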

2.2. Development of the remote PFA protocol

2.2.1. Video platform

We selected Zoom as the two-way, remote assessment platform to expand the reach, scalability, and performance of physical assessments (30-s STS and BBS) during COVID-19 and/or in situations where participants are limited in leaving their homes or where access to care is restricted, such as in rural areas [25]. Zoom is compliant with the Health Insurance Portability and Accountability Act of 1996 through mandatory account settings applied to customers' accounts. Zoom protects and encrypts all audio, video, and screen sharing data, and does not have access to identifiable personal health information. Other notable Zoom security features are shown in Table 3. Quality control monitoring for reliability and reproducibility was performed before conducting assessments remotely via videoconferencing in participants' homes. Practice PFA sessions were conducted by assessors, with other research staff who had no knowledge of the assessment acting as participants. Training focused on providing consistent participant instructions and determining scores based on standard criteria for consistency and reproducibility. For quality assurance, each assessor was evaluated by a gold-standard reviewer prior to taking the role of assessor.

Table 3.

Considerations for video conference settings using Zoom.

| Points to consider in setting video conferences | |
|---|---|
| Meeting invite/link/settings | |
| Chats | |
| Recording | |
| Security | |
| Confidentiality and privacy | |

2.2.2. Equipment and space needs

To perform remote assessments, participants need a laptop or tablet with a functioning video camera and speakers, a chair with arms, an armless chair, a ruler, tape, a cup, and a step-stool. Adequate space is also required to conduct the assessments, ideally near a wall for safety and with roughly 10 ft between the participant and the laptop/tablet so that the assessors are able to view the participant clearly from head to toe. To ensure that a participant has the necessary equipment and space, a pre-assessment protocol was developed (described below). However, as the remote assessments occurred, scripts and procedures were revised to address unanticipated problems. For example, some participants did not have a ruler in the home, or the ruler markings were difficult for the assessor to see on the video camera during the reach test. To address this problem, we created a paper ruler with bold markings that would be visible to the remote assessor from a distance and included it in the mailed assessment packet. Similarly, instead of displaying the Borg Rating of Perceived Exertion (RPE) scale via screen-sharing, we included this scale in the mailed assessment packet.

2.2.3. Participant instructions

For in-person PFA, the assessor sets up all of the equipment. For remote assessments, however, participants are given additional instructions before and during the assessment so that they can set up the equipment themselves. Once set-up is complete, the assessor demonstrates each test and observes the participant for proper procedure and adequacy of the screen view. The assessor frequently needs to instruct the participant to adjust the distance and angle of the camera so that their entire body (head to toe) is in view, allowing the assessor to score the assessment and ensure safety. When the view of the participant is not clear to the assessor despite equipment adjustments, the participant is asked to describe their surroundings, such as the distance from the wall.

2.2.4. Safety

In addition to the changes required to make remote testing feasible, the remote PFA protocol adopted additional precautions to ensure participant safety. The original in-person safety protocol outlined circumstances (e.g., signs and symptoms) that warranted stopping the PFA, activating a rapid response team, and notifying the principal investigator (PI) and/or key personnel. To make the existing safety protocol applicable for remote PFA, changes included adding instructions to dial 911 in case of an emergency and to call the national hotline and crisis line in the event of suicidal ideation. Additional safety precautions for the remote setting included assessing whether participants planned to exercise alone, and if so, their comfort level in doing so. This allows the assessor to know whom to inform in the event of an emergency. Furthermore, prior to initiating the 30-s STS, the participant's ability to safely stand up from a chair without help is assessed. If the participant is unable to stand independently, the test is stopped for safety purposes and the safety protocol is initiated by notifying the PI for further risk assessment and instructions. For the BBS, additional verbal instructions and demonstration of most test items are included. For tests that require freestanding, the participant is instructed to stand near, but not touching, a wall for safety in case of loss of balance. If space or room layout prevents standing near a wall, freestanding is permitted if the participant verbalizes comfort and safety. In addition, instructions are included for suspending the test or initiating the safety protocol, such as when the participant scores too low on a task or safety is a concern. To prevent possible fatigue during testing, we offer frequent breaks and monitor the participant's condition throughout.

2.2.5. Addition of an observer

When assessors began training on the remote PFA protocol in preparation for remote assessment, we realized the assessor was juggling multiple responsibilities, including ensuring correct equipment set-up in participants' homes, providing instructions, assessing the participant's understanding of instructions, conducting the test, assessing the participant's safety throughout testing, evaluating the performance, and scoring the test. This extensive list of activities often overwhelmed the assessor and introduced testing errors; thus, the observer role was created. The observer's primary responsibility is to assess the testing environment and ensure the assessor has an appropriate view of the participant to score the test. During each PFA session, the observer notes potential issues with: 1) camera positioning; 2) assessment equipment; 3) lighting; 4) video technology; and/or 5) participant safety. The observer serves to assess the quality of the testing and to optimize testing conditions by providing the participant with additional instructions if warranted.

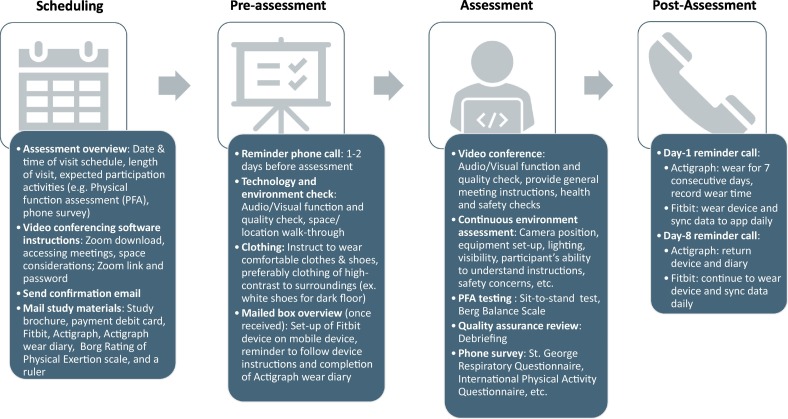

3. Implementation of remote physical assessments

The steps to prepare and implement remote PFAs are shown in Fig. 1. First, after a participant enrolls in the study, a research staff member contacts the participant for scheduling and pre-assessment checks. Logistics of remote setup and preparation instructions are discussed by phone and email. Once scheduled, the participant is introduced to the video software (Zoom), provided with the assessment meeting link and passcode, and informed that a box containing all study materials for the scheduled visit will be mailed. One or two days prior to the assessment, a reminder phone call and pre-assessment video check are performed with the participant. Any issues with video conferencing software are addressed, including internet connectivity and audio and video quality. The participant is guided on how to use the items in the mailed box, including Fitbit application download and set-up, and a testing location is chosen in the home based on the space needs.

On the day of testing, the assessor and the observer sign in to Zoom. The participant joins the video conference by clicking the Zoom link and entering the unique passcode and is prompted to provide consent to record the meeting. The assessment session is recorded and starts with a brief introduction and health questions to determine if any new health or safety concerns have arisen. Once the participant is cleared to continue, the assessor begins testing with the 30-s STS and BBS. For each test item, a verbal instruction is provided, followed by a demonstration. The participant performs the test, and the assessor times it with a stopwatch and scores the performance accordingly. The Borg RPE rating is obtained before and after the 30-s STS subtests. Throughout the assessment, the assessor and participant adjust the camera and equipment to optimize visibility. When audio or video disconnection occurs during a test item, the participant is asked to repeat the item. If the problem persists, the item is skipped.

Both the assessor and observer score the participant's performance, and the observer makes additional notes for each test item about concerns that might affect the quality of the tests. After the Zoom session, the assessor and observer debrief about any test items or areas in which scoring may have been affected by limited visualization, lack of equipment, or any other reason. If there is a disagreement about any limitations that may affect scoring, the assessor and the observer may ask another research team member to review the recorded session to reach consensus. The day after the remote PFA session, the participant receives a phone call with reminders to wear the study devices, record wear time for the Actigraph, and sync their Fitbit data daily, and with help for any issues with device setup and use. A similar call is made seven days after the remote PFA with additional instructions to return the Actigraph device and diary.
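The debriefing step, in which the assessor's and observer's scores are compared and unresolved items go to a third reviewer, could be supported by a simple check like the Python sketch below. It is a hypothetical illustration, not the study's procedure: the function name, the item labels, and the rule that any score mismatch or missing score triggers review are all assumptions.

```python
from typing import Dict, List, Optional


def items_needing_review(assessor: Dict[str, Optional[int]],
                         observer: Dict[str, Optional[int]]) -> List[str]:
    """Return test items whose scores differ or are missing for a rater.

    A score of None marks an item that was skipped or could not be
    scored (for example, after a persistent video disconnection).
    """
    flagged = []
    for item in sorted(set(assessor) | set(observer)):
        a_score = assessor.get(item)
        o_score = observer.get(item)
        if a_score is None or o_score is None or a_score != o_score:
            flagged.append(item)
    return flagged


# Hypothetical item labels and scores, for illustration only.
assessor_scores = {"sit_to_stand": 4, "reach_forward": 3, "foot_on_stool": None}
observer_scores = {"sit_to_stand": 4, "reach_forward": 2, "foot_on_stool": None}
review_list = items_needing_review(assessor_scores, observer_scores)
```

Here `review_list` would contain the reach item (the raters disagree) and the stool item (neither rater could score it), which are the items a third team member might revisit in the recording.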

Fig. 1.

Steps of the remote physical function assessment implementation process.

4. Challenges and considerations for future

Transitioning from in-person to remote PFA testing required us to recognize challenges, barriers, and considerations (Table 4). Given the nature of remote testing, one of our biggest challenges was overcoming technical issues. The most frequently experienced problems were starting and maintaining connectivity during video conferencing and loss of audiovisual features in the course of assessment. Although pre-assessments were helpful in preparing for the assessment, technical issues still occurred on the day of assessment and had to be resolved in real time (e.g., calling the participant's phone when audio failed, reconnecting to the video conference link, using a mobile hotspot, or contacting the internet provider to resolve weak or unstable connectivity). If an issue was not resolved promptly, the assessment was rescheduled for a later date.

Table 4.

Key considerations in planning remote physical assessments.

| Considerations | |
|---|---|
| Researcher's openness | |
| Surrogate measures | |
| Quality assurance | |
| Participant engagement | |
| Participant safety | |
| Staffing | |
| Budget | |
| Time | |
| Technical issues | |
| Communication | |

Other challenges in preparing and conducting remote PFA were related to participant engagement, participant privacy and safety, and minimizing assessor subjectivity and bias. The PFA protocols and procedures were adapted to overcome such challenges by incorporating various strategies as described in Sections 2 and 2.2. Specifically for participant engagement, a pre-assessment protocol was developed to address any preparatory difficulties before the assessment, and follow-up calls were made after each visit to address outstanding questions and assist with device set-up and use. For effective and efficient communication with participants, it is essential to have accurate and updated participant contact information. To ensure successful delivery and return of study materials, participants should be told the value of the items being mailed, and shipments should be tracked.

In addition, communication among all the members of the research team is critical. All members must feel comfortable discussing their concerns and possible solutions to issues that arise. Overall, it is important to establish, test and iterate PFA protocols.

As in any study, data loss may still occur, such as inability to score the complete BBS when participants do not have the necessary equipment (e.g., a chair without armrests) or when monitoring devices fail to collect data (e.g., technical problems or failure to wear the devices). Additionally, the lack of a remote surrogate measure for the CPET precludes assessment of the impact of the intervention on change in maximal exercise capacity between baseline and three months.

Nevertheless, conducting PFA remotely offers numerous benefits, such as convenience and reduction in patient burden, since the need to travel to the testing center is eliminated. Key observations related to study adherence were also noted during the implementation of remote PFA (Table 5). Transplant patients often reside hours from the program site, so converting from in-person to remote PFA also allows more individuals to participate since distance from the study center is no longer an exclusion criterion. Lastly, remote PFA is more consistent with the telehealth philosophy and increases the generalizability of the study's findings.

Table 5.

Anecdotal observations related to study adherence.

| Observations |
|---|
| Retention rates remained strong during the transition from in-person to virtual |
| Consent process is easier because patients are not required to report in-person |
| Data collection is maintained with minimal issues and easier to administer questionnaires remotely |

5. Plans for triangulation and analysis

The primary outcomes for the study did not change with the move to remote assessments. Therefore, the original data analysis plan for testing hypotheses of between-group differences in physical function and physical activity between baseline, 3 and 6 months is maintained. However, the shift to remote assessments raises issues for how to analyze outcomes when data are collected using different in-person, remote and surrogate measures. Below is a description of the two study outcomes that were affected by the change to remote assessment and how we plan to transform the disparate data sources for use in the analysis.

Prior to COVID-19, the 6MWT was the only outcome measure for walking ability. Since the move to remote assessments, the 30-s STS conducted during a two-way video session has been used as a ‘surrogate’ measure for the 6MWT. Scores for the 6MWT and 30-s STS are treated as continuous variables and will be described in their original metric using measures of central tendency (mode, median, and mean) and measures of distribution and spread (range, quartiles, absolute deviation, variance, and standard deviation). However, to combine raw values from the continuous-level 6MWT and 30-s STS tests, we will convert them to standard scores, more commonly referred to as Z-scores [29], which enable comparison of scores that come from different distributions or measures. The result will be a range of standardized scores to compare differences in walking ability between the LTGO and enhanced usual care groups regardless of the original metric.
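As an illustration of the standardization step, the Python sketch below converts each measure to Z-scores, z = (x − mean) / SD, against its own distribution before pooling. It is a minimal sketch under stated assumptions, not the trial's analysis code: the function name is hypothetical, the example values are made up, and the choice of the sample standard deviation and of standardizing within each measure's observed sample (rather than against an external reference distribution) are assumptions the text does not specify.

```python
from statistics import mean, stdev
from typing import List, Sequence


def z_scores(values: Sequence[float]) -> List[float]:
    """Standardize values against their own mean and sample SD."""
    if len(values) < 2:
        raise ValueError("need at least two values to standardize")
    mu = mean(values)
    sd = stdev(values)  # sample SD (n - 1 denominator); an assumption
    if sd == 0:
        raise ValueError("cannot standardize values with zero variance")
    return [(v - mu) / sd for v in values]


# Made-up example values, for illustration only.
six_mwt_feet = [1200.0, 1500.0, 1800.0]  # in-person 6MWT distances (ft)
sts_reps = [8.0, 12.0, 16.0]             # remote 30-s STS repetitions

# Each measure is standardized separately, then the unit-free scores
# are pooled into a single walking-ability variable.
pooled_walking_z = z_scores(six_mwt_feet) + z_scores(sts_reps)
```

Because each measure is centered and scaled separately, a Z-score of 0 means "average for that test", so scores from the two tests can be placed on one scale even though feet and repetitions are not directly comparable.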

Similarly, prior to COVID-19, data recorded for 7 days using an accelerometer (Actigraph) were the only physical activity outcome measure available to quantify the time spent in light and moderate/vigorous activities as continuous scores. To overcome some of the challenges of relying on Actigraphs, the IPAQ-S [24] was added as a surrogate measure. The IPAQ-S data are reported as a continuous score of metabolic equivalent-minutes per week, including median values and interquartile ranges for walking and moderate-intensity activities. As with the measures of walking ability, descriptive statistics will be reported to summarize data from each measure of time spent in levels of physical activity in a meaningful way. Data from the Actigraph and the IPAQ-S will be converted to Z-scores to examine differences between the two groups in time spent in certain activity levels per day or week. Since some participants may have both Actigraph and IPAQ-S data collected, we will also be able to compare reliability and validity between the objective and self-report measures. Furthermore, as suggested by other clinical trialists [7,30], when all pandemic restrictions are lifted and trials have the option to resume in-person activities, consideration will be given to how best to measure certain variables (using self-reported measures of physical activity like the IPAQ-S, objective measures such as accelerometers, or in some cases a combination) so that outcomes are measured reliably and participant burden is possibly reduced. To this end, we will conduct post-hoc analyses to determine whether any differences in hypothesized effects were explained by combining measurement methods (in person or remote).
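One way to compare the objective and self-report measures in participants who have both is a rank correlation, the same type of statistic used in the published IPAQ-S validation. The Python sketch below computes Spearman's coefficient with average ranks for ties. It is an illustration only: the text does not state which statistic will be used for this comparison, and the function names and example data are hypothetical.

```python
from statistics import mean
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / (var_x * var_y) ** 0.5


# Made-up paired data: Actigraph MVPA min/day vs. IPAQ-S MET-min/week.
actigraph_mvpa = [10.0, 20.0, 30.0, 40.0]
ipaq_met_min = [300.0, 900.0, 600.0, 1200.0]
rho = spearman_rho(actigraph_mvpa, ipaq_met_min)
```

A rank-based coefficient is a natural fit here because the two measures use different units and self-reported activity is often skewed.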

6. Conclusion

In response to the ongoing COVID-19 pandemic, the research team swiftly transitioned from in-person to remote physical function assessments, in compliance with national and local guidelines. During this process, we identified a wide range of challenges that required creative and flexible solutions to ensure participant safety, good practice standards, and clinical trial integrity. Although remote assessments may not fully represent the experience of in-person assessments, they are an integral component of research, particularly during public health emergencies. Remote assessment strategies have the potential to make research more efficient and convenient for both participants and researchers. Therefore, the aforementioned considerations associated with the planning and implementation of remote assessment should be carefully evaluated when conducting research activities.

Funding

This work was supported by the National Institute of Nursing Research [R01NR01719601A1].

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- 1.Centers for Disease Control and Prevention [CDC] US Covid-19 Cases and Deaths. 2021. https://covid.cdc.gov/covid-data-tracker/#cases_casesinlast7days (Accessed April 19 2021)

- 2.Nicola M., Alsafi Z., Sohrabi C., et al. The socio-economic implications of the coronavirus pandemic (COVID-19): a review. Int. J. Surg. 2020;78:185–193. doi: 10.1016/j.ijsu.2020.04.018.

- 3.Desai S., Manjaly P., Lee K.J., et al. The impact of COVID-19 on dermatology clinical trials. J. Investig. Dermatol. 2020 doi: 10.1016/j.jid.2020.06.032.

- 4.European Medicines Agency [EMA] Guidance on the Management of Clinical Trials during the COVID-19 (Coronavirus) Pandemic. 2020. https://ec.europa.eu/health/sites/health/files/files/eudralex/vol-10/guidanceclinicaltrials_covid19_en.pdf (Accessed October 14 2020)

- 5.Dong J., Olano J.P., McBride J.W., et al. Emerging pathogens: challenges and successes of molecular diagnostics. J. Mol. Diagn. 2008;10:185–197. doi: 10.2353/jmoldx.2008.070063.

- 6.Izmailova E.S., Ellis R., Benko C. Remote monitoring in clinical trials during the COVID-19 pandemic. Clin. Transl. Sci. 2020 doi: 10.1111/cts.12834.

- 7.McDermott M.M., Newman A.B. Preserving clinical trial integrity during the coronavirus pandemic. J. Am. Med. Assoc. 2020;323:2135. doi: 10.1001/jama.2020.4689.

- 8.Alnaamani K., Alsinani S., Barkun A.N. Medical research during the COVID-19 pandemic. World J. Clin. Cases. 2020;8:3156–3163. doi: 10.12998/wjcc.v8.i15.3156.

- 9.American Thoracic Society [ATS] ATS statement: guidelines for the six-minute walk test. Am. J. Respir. Crit. Care Med. 2002;166:111–117. doi: 10.1164/ajrccm.166.1.at1102.

- 10.Brooks G.C., Vittinghoff E., Iyer S., et al. Accuracy and usability of a self-administered 6-minute walk test smartphone application. Circ. Heart Fail. 2015;8:905–913. doi: 10.1161/circheartfailure.115.002062.

- 11.Salvi D., Poffley E., Orchard E., et al. The mobile-based 6-minute walk test: usability study and algorithm development and validation. JMIR mHealth and uHealth. 2020;8 doi: 10.2196/13756.

- 12.Houchen-Wolloff L., Daynes E., Watt A., et al. Which functional outcome measures can we use as a surrogate for exercise capacity during remote cardiopulmonary rehabilitation assessments? A rapid narrative review. ERJ Open Res. 2020;6:00526–02020. doi: 10.1183/23120541.00526-2020.

- 13.Guidelines and Protocols and Advisory Committee. 2017. BC Guidelines.ca: Frailty in Older Adults – Early Identification and Management.

- 14.Alves M.A.D.S., Bueno F.R., Haraguchi L.I.H., et al. Correlação entre a média do número de passos diário e o teste de caminhada de seis minutos em adultos e idosos assintomáticos. Fisioterapia e Pesquisa. 2013;20:123–129. doi: 10.1590/s1809-29502013000200005.

- 15.Gurses H.N., Zeren M., Denizoglu Kulli H., et al. The relationship of sit-to-stand tests with 6-minute walk test in healthy young adults. Medicine. 2018;97 doi: 10.1097/md.0000000000009489.

- 16.Zhang Q., Li Y.-X., Li X.-L., et al. A comparative study of the five-repetition sit-to-stand test and the 30-second sit-to-stand test to assess exercise tolerance in COPD patients. Int. J. Chron. Obstruct. Pulm. Dis. 2018;13:2833–2839. doi: 10.2147/copd.s173509.

- 17.Ozcan Kahraman B., Ozsoy I., Akdeniz B., et al. Test-retest reliability and validity of the timed up and go test and 30-second sit to stand test in patients with pulmonary hypertension. Int. J. Cardiol. 2020;304:159–163. doi: 10.1016/j.ijcard.2020.01.028.

- 18.Berg K., Wood-Dauphinee S., Williams J.I. The balance scale: reliability assessment with elderly residents and patients with an acute stroke. Scand. J. Rehabil. Med. 1995;27:27–36.

- 19.Berg K. ProQuest Dissertations Publishing; 1992. Measuring Balance in the Elderly: Development and Validation of an Instrument.

- 20.Jones C.J., Rikli R.E., Beam W.C. A 30-s chair-stand test as a measure of lower body strength in community-residing older adults. Res. Q. Exerc. Sport. 1999;70:113–119. doi: 10.1080/02701367.1999.10608028.

- 21.Porszasz J., Casaburi R., Somfay A., et al. A treadmill ramp protocol using simultaneous changes in speed and grade. Med. Sci. Sports Exerc. 2003;35:1596–1603. doi: 10.1249/01.MSS.0000084593.56786.DA.

- 22.Jones B., Taylor F., Wright O.M., et al. Quality of life after heart transplantation in patients assigned to double or triple drug therapy. J. Heart Transplant. 1990;9:392–396.

- 23.Van Remoortel H., Raste Y., Louvaris Z., et al. Validity of six activity monitors in chronic obstructive pulmonary disease: a comparison with indirect calorimetry. PLoS One. 2012;7 doi: 10.1371/journal.pone.0039198.

- 24.Craig C.L., Marshall A.L., Sjöström M., et al. International physical activity questionnaire: 12-country reliability and validity. Med. Sci. Sports Exerc. 2003;35:1381–1395. doi: 10.1249/01.mss.0000078924.61453.fb.

- 25.Marsh A.P., Wrights A.P., Haakonssen E.H., et al. The virtual short physical performance battery. J. Gerontol. Ser. A Biol. Med. Sci. 2015;70:1233–1241. doi: 10.1093/gerona/glv029.

- 26.Holland A.E., Malaguti C., Hoffman M., et al. Home-based or remote exercise testing in chronic respiratory disease, during the COVID-19 pandemic and beyond: a rapid review. Chron. Respir. Dis. 2020;17 doi: 10.1177/1479973120952418. 147997312095241.

- 27.Abshire M., Dinglas V.D., Cajita M.I.A., et al. Participant retention practices in longitudinal clinical research studies with high retention rates. BMC Med. Res. Methodol. 2017;17 doi: 10.1186/s12874-017-0310-z.

- 28.Choi J., Hergenroeder A.L., Burke L., et al. Delivering an in-home exercise program via telerehabilitation: a pilot study of lung transplant go (LTGO). Int. J. Telerehabil. 2016;8:15–26. doi: 10.5195/IJT.2016.6201.

- 29.Clark-Carter D. Wiley StatsRef: Statistics Reference Online. John Wiley & Sons, Ltd.; 2014. z Scores. Encyclopedia of statistics in behavioral science.

- 30.Pahor M., Guralnik J.M., Ambrosius W.T., et al. Effect of structured physical activity on prevention of major mobility disability in older adults. J. Am. Med. Assoc. 2014;311:2387. doi: 10.1001/jama.2014.5616.