Highlights

- Mild-to-severe hearing loss causes behavioral delays in language and working memory.
- How hearing loss changes the neural dynamics serving working memory is unknown.
- Working memory encoding and maintenance-related neural responses were compared.
- Children with hearing loss showed increased compensatory right-hemisphere activity.
- Fronto-parietal phase-specific activity was related to verbal intelligence scores.

Keywords: Oscillations, Hearing aids, Audiology, Cognition, Neurophysiology

Abstract

Children with hearing loss (CHL) exhibit delays in language function relative to children with normal hearing (CNH). However, evidence on whether these delays extend into other cognitive domains such as working memory is mixed, with some studies showing decrements in CHL and others showing CHL performing at the level of CNH. Despite the growing literature investigating the impact of hearing loss on cognitive and language development, studies of the neural dynamics that underlie these cognitive processes are notably absent. This study sought to identify the oscillatory neural responses serving verbal working memory processing in CHL compared to CNH. To this end, participants with and without hearing loss performed a verbal working memory task during magnetoencephalography. Neural oscillatory responses associated with working memory encoding and maintenance were imaged separately, and these responses were statistically evaluated between CHL and CNH. While CHL performed as well on the task as CNH, CHL exhibited significantly elevated alpha–beta activity in the right frontal and precentral cortices during encoding relative to CNH. In contrast, CHL showed elevated alpha maintenance-related activity in the right precentral and parieto-occipital cortices. Crucially, right superior frontal encoding activity and right parieto-occipital maintenance activity correlated with language ability across groups. These data suggest that CHL may utilize compensatory right-hemispheric activity to achieve verbal working memory function at the level of CNH. Neural behavior in these regions may impact language function during crucial developmental ages.

1. Introduction

Approximately 1.3% of children are born with some degree of hearing loss in the United States, based on the most recent prevalence rates from the Centers for Disease Control and Prevention (CDC 2017). Over the last 25 years, there has been a formal implementation of newborn hearing screenings in the United States, and recent reports suggest that over 97% of infants are now screened at birth (CDC 2017). Prior to the advent of newborn hearing screening, children with mild to severe degrees of hearing loss were often not diagnosed until the toddler years, which led to significant delays in language acquisition (Moeller, 2000, Pimperton et al., 2016, Robinshaw, 1995, Yoshinaga-Itano et al., 1998). It was hypothesized that early universal hearing screenings and intervention would hold the key to eliminating these delays.

Despite the improvements in early identification and intervention, some children with hearing loss (CHL) still lag behind children with normal hearing (CNH) in language (McCreery et al., 2015, Moeller et al., 2007, Tomblin et al., 2015, Tomblin et al., 2014, Walker et al., 2020), cognitive (Caudle et al., 2014, Dye and Hauser, 2014, Nittrouer et al., 2017, Nittrouer et al., 2013), academic (Khairi Md Daud et al., 2010, Kouwenberg et al., 2012, Theunissen et al., 2015), and psychosocial (Dirks et al., 2017, Netten et al., 2015a, Netten et al., 2015b, Theunissen et al., 2012, Theunissen et al., 2014a, Theunissen et al., 2011, Theunissen et al., 2015, Walker et al., 2017) outcomes. It is important to note that these deficits are not universal; in fact, there is a considerable amount of variability in academic and language outcomes in CHL. Some CHL perform at or above CNH, while others fall significantly behind (Tomblin et al., 2020). It has been proposed that cognitive factors such as attention and working memory may significantly contribute to this variability (Pisoni, 2000), but the matter remains far from resolved. This is especially true regarding children with mild-to-severe hearing loss (i.e., better ear pure-tone average [BEPTA] between 20 and 79 dB).

Broadly speaking, working memory describes the process by which information is stored temporarily in the brain until it can either be used in the execution of a task, discarded after a short time, or moved to long-term memory. Immense effort has been put forth in the last century to create accurate and inclusive working memory models, each with its own strengths and weaknesses (for a review, see Cowan, 2014). Two of the most prominent working memory models are those of Cowan and Baddeley. Cowan’s model describes a system by which a central executive controls which long-term memories are “activated” for use, a process that is mediated by an attention filter (Cowan, 1988, Cowan, 2001). This model does not strive to dissociate which type of information (e.g., spatiotemporal, phonological, sensory) is being processed, but more broadly describes how any type of information is kept in a temporary memory store for use, discarded, or moved into long-term storage, with particular emphasis on the role of attention. In contrast, Baddeley’s model of working memory (Baddeley, 1992, Baddeley, 2000, Baddeley, 2003b, Baddeley, 2012) describes a system by which different stimuli (e.g., visual, spatial, and linguistic) are input into and rehearsed within one of two “slave systems”: the visuospatial sketchpad and the phonological loop. These slave systems, as well as an “episodic buffer” (Baddeley, 2000, Baddeley, 2012), are controlled by a central executive. The episodic buffer is thought to allow for the temporary integration and filtering of information between the two slave systems. The central executive is hypothesized to direct attention to incoming stimuli and to determine which stimuli to move from the two slave systems into long-term memory, which to manipulate for use, and which to discard.
As their names imply, the visuospatial sketchpad stores short-term visuospatial information, while the phonological loop stores and rehearses phonological information. Importantly, the phonological loop requires both information about the phonemes, as well as the temporal order of those phonemes (Baddeley, 2003a, Baddeley et al., 1998). Using both of these models, it makes sense that people with lower working memory capacity might be at a greater disadvantage when attempting to understand speech in suboptimal hearing environments, which require the listener to keep phonemic content in memory in order to piece together meaningful speech streams.

Building on this idea, the relationship between language measures and working memory capacity has been well-documented in normal- and hard-of-hearing adults (Akeroyd, 2008, Besser et al., 2013, Gordon-Salant and Cole, 2016, Hwang et al., 2017) and children (Dawson et al., 2002, Harris et al., 2013, Kirby et al., 2019, Lowenstein et al., 2019, Magimairaj and Nagaraj, 2018, Magimairaj et al., 2018, Magimairaj et al., 2020, McCreery et al., 2017, McCreery et al., 2019, Nittrouer et al., 2017). In the context of children with mild-to-severe hearing loss, Stiles and colleagues (Stiles et al., 2012) found a significant relationship between digit span and vocabulary size in both CNH and CHL. More recently, McCreery et al. (2019) found that, in addition to language and auditory factors, phonological working memory ability significantly predicted speech recognition in CHL, which echoed the pattern of results found in an earlier study in children with normal hearing from the same authors (McCreery et al., 2017). Importantly, neither of these studies showed that there were significant differences in working memory ability between CNH and CHL. However, it is possible that speech recognition may tax the working memory systems of CHL more strongly than those of CNH because of differences in the quality of auditory input received. Taken together, this work suggests that working memory capacity may be strongly linked to a child’s ability to understand and retain linguistic stimuli.

While there is convincing evidence that hearing loss results in behavioral decrements spanning multiple cognitive domains in children, there has been surprisingly little research on the neurophysiological bases of these deficits. In fact, to our knowledge this body of literature has largely been limited to studies of brain structure and of basic sensory or speech processing in children with severe-to-profound hearing loss (for a review, see Glick and Sharma, 2017). This body of work shows widespread alterations in central auditory and language processing, including anatomical changes in structures throughout the auditory neural network (Miao et al. 2013), as well as alterations in response amplitude and functional connectivity between the auditory cortices (Polonenko et al., 2017, Polonenko et al., 2019, Smieja et al., 2020) and within higher-order auditory processing areas (Petersen et al., 2013). Importantly, following cochlear implantation, there is evidence of at least partial normalization of auditory brain function (Polonenko et al., 2017, Polonenko et al., 2019), suggesting that these cortical alterations are malleable. Moreover, in animal models, auditory deprivation during critical developmental periods leads to frequency-specific adaptation of auditory cortical neural activity (de Villers-Sidani et al., 2008). Nonetheless, how this body of literature extends to those with mild-to-severe hearing loss who maintain some residual hearing, as well as any effects on the neural correlates of higher-order cognitive processing outside the auditory domain, are unknown. The only paper to our knowledge that investigated the role of mild-to-severe hearing loss on cortical neural patterns during cognition is a recent fNIRS paper by Bell and colleagues that looked at visual and auditory inhibition responses during a Go/No-Go task in CHL, children with ADHD, and CNH (Bell et al., 2020).
Thus, to date, there have been no studies of the whole-brain neural dynamics serving higher-order cognitive processing in CHL. Studies of this nature could hold major promise in unlocking the root of heterogeneity in behavioral outcomes in this population. In particular, neuroimaging with magnetoencephalography (MEG) or EEG would allow not only for the characterization of differences in the neural dynamics serving cognitive function, but also for the decomposition of these neural aberrations into different phases of a cognitive process in real time. Further, MEG technology holds promise in showing differences in neural dynamics that can be detected with relatively good spatial precision and with more sensitivity than behavioral testing alone.

The working memory process is often described in three phases: 1) encoding: processing and storing the incoming stimuli; 2) maintenance: rehearsing the items in the memory store; and 3) retrieval: recovering the items in the memory store for use or manipulation. Recent studies have sought to clarify the dynamic neural patterns that underlie each phase of verbal working memory processing (Embury et al., 2019, Heinrichs-Graham and Wilson, 2015, Proskovec et al., 2016, Proskovec et al., 2019, Roux and Uhlhaas, 2014). During encoding, there is a strong desynchronization in the alpha–beta frequency (10–18 Hz) that begins in the bilateral occipital cortices, and then extends into the left lateral parietal, temporal, and inferior frontal cortices in turn. This posterior-to-anterior desynchronization emerges during encoding and is sustained through maintenance and retrieval. Notably, this neural response pattern has been shown to increase in amplitude and become more widespread as a function of working memory load, suggesting that these oscillatory dynamics are crucial for proper stimulus encoding (Proskovec et al., 2019). In addition, there is a narrow, robust synchronization in the lower alpha (8–12 Hz) frequency that emerges in the bilateral superior parieto-occipital cortices during maintenance and dissipates at the onset of retrieval, which has been shown to be associated with the active inhibition of distractors (Bastiaansen et al., 2002, Bonnefond and Jensen, 2013, Embury et al., 2019, Proskovec et al., 2019, Roux and Uhlhaas, 2014). In sum, there is a wealth of spatiotemporal oscillatory behavior that is elicited during the performance of a verbal working memory task. Exploiting these dynamics in the context of hearing loss has the potential to further clarify the locus of behavioral deficits in this population.

The goal of the current study was two-fold: first, to identify differences in the neural bases of verbal working memory encoding and maintenance between children with mild-to-severe hearing loss and their normal-hearing peers, and second, to determine whether language ability was related to differences in neural oscillatory behavior. To this end, we recorded MEG during a letter-based version of the Sternberg working memory task. We hypothesized that CHL would exhibit aberrations in one or more phases of working memory processing, especially in inferior frontal, lateral parietal, and occipital regions, which are known to be pivotal to proper working memory performance. We also hypothesized that the magnitude of neural activity in these regions would significantly correlate with language measures in CHL, indicative of the tight link between language and cognitive measures exemplified in previous behavioral work.

2. Methods

2.1. Participants

Forty-four children ages 7–15 years old, including 23 children with mild-to-severe hearing loss (CHL; 9 females) and 21 CNH, were recruited from the local community to participate in this study. Exclusionary criteria included any medical illness affecting CNS function, current or previous major neurological or psychiatric disorder, history of head trauma, current substance abuse, and/or the presence of irremovable ferromagnetic material in or on the body (e.g., dental braces, metal or battery-operated implants). After a complete description of the study was given to participants, written informed consent was obtained from the parent/guardian of the participant and informed assent was obtained from the participant following the guidelines of the University of Nebraska Medical Center’s Institutional Review Board, which approved the study protocol. A total of 14 youth (9 CHL and 5 CNH) were excluded from analysis due to movement or unexpected magnetic artifacts or inability to perform the task; 14 CHL and 16 CNH were included in the final analysis. Of note, a separate analysis of the impact of hearing aid use on working memory neural dynamics in these 14 CHL is reported elsewhere (Heinrichs-Graham et al., under review).

2.2. Neuropsychological and audiometric testing

All participants completed all four subtests of the Wechsler Abbreviated Scale of Intelligence (WASI-II) to characterize their level of verbal and nonverbal cognitive function. Briefly, the WASI-II consists of the following subtests: Vocabulary, Similarities, Block Design, and Matrix Reasoning, which can be used to calculate an individual’s verbal, nonverbal, and overall IQ. Scores on the Vocabulary and Similarities subtests are combined to create the Verbal Composite Index (VCI), which is a metric of verbal intelligence, while the Block Design and Matrix Reasoning scores are combined to create a Perceptual Reasoning Index (PRI), which is a measure of nonverbal intelligence. In addition, we calculated the degree of hearing loss (i.e., better-ear pure-tone average [BEPTA]) from all CHL from the participant’s most recent clinical audiogram, which was completed within the past year and provided with parent consent. Briefly, audiograms consisted of air-conduction audiometric thresholds that had been measured at octave frequencies from 250 to 8000 Hz. The thresholds at 500, 1000, 2000, and 4000 Hz were averaged to calculate the pure-tone average (PTA) for each ear, and the PTA for the better-ear was used to represent degree of hearing loss in the statistical analyses. The final sample of CHL participants had an average BEPTA of 47.30 dB (SD = 12.98 dB, range 28.75–78.75 dB).
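The better-ear pure-tone average described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function names and the example audiogram are hypothetical, and it assumes thresholds are stored per ear as a mapping from frequency (Hz) to threshold (dB). Because lower thresholds indicate better hearing, the better ear is the one with the smaller PTA.

```python
from statistics import mean

# Frequencies (Hz) averaged into the pure-tone average, per the text.
PTA_FREQS_HZ = (500, 1000, 2000, 4000)


def pure_tone_average(thresholds_db):
    """Mean air-conduction threshold (dB) over the four PTA frequencies."""
    return mean(thresholds_db[f] for f in PTA_FREQS_HZ)


def better_ear_pta(left, right):
    """Lower PTA means better hearing, so the better ear is the minimum."""
    return min(pure_tone_average(left), pure_tone_average(right))


# Hypothetical audiogram at octave frequencies (illustrative, not study data).
left_ear = {250: 30, 500: 35, 1000: 45, 2000: 50, 4000: 55, 8000: 60}
right_ear = {250: 35, 500: 40, 1000: 50, 2000: 55, 4000: 65, 8000: 70}

bepta = better_ear_pta(left_ear, right_ear)  # left ear PTA is the lower one
```

In this made-up case the left ear has the lower average, so its PTA would represent the degree of hearing loss.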

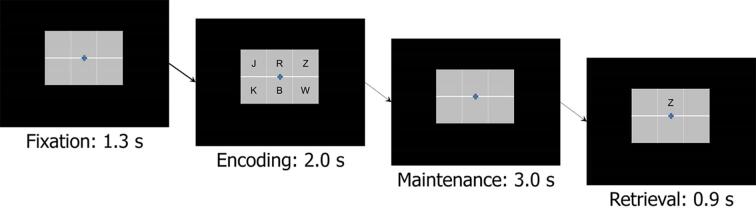

2.3. Experimental paradigm

During MEG recording, participants were instructed to fixate on a crosshair presented centrally. A 19 cm wide × 13 cm tall, 3 × 2 grid containing six letters was then presented for 2.0 s (encoding phase). The letters then disappeared, leaving an empty grid for 3.0 s (maintenance phase). Finally, a single “probe” letter appeared (retrieval phase) for 0.9 s. Participants were instructed to indicate with a button press whether the probe letter was one of the six letters previously presented in the stimulus encoding set. Each trial lasted 7.2 s, including a 1.3 s pre-stimulus fixation; Fig. 1 shows an example trial. Each participant completed 128 trials, which were pseudorandomized based on whether the probe letter was one of the previous six letters. The task lasted approximately 15 min.
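As a quick consistency check on the timing described above, the phase durations can be summed into the trial length and total task time, and converted into phase onsets relative to encoding onset (the 0.0 s reference used in the time–frequency analysis). The variable names below are illustrative only.

```python
# Phase durations in seconds, in presentation order, as stated in the text.
PHASES_S = {"fixation": 1.3, "encoding": 2.0, "maintenance": 3.0, "retrieval": 0.9}
N_TRIALS = 128

trial_s = sum(PHASES_S.values())          # duration of one trial
task_min = N_TRIALS * trial_s / 60.0      # total task time in minutes

# Phase onsets relative to encoding onset (t = 0 s).
onsets = {}
t = -PHASES_S["fixation"]
for name, duration in PHASES_S.items():
    onsets[name] = round(t, 3)
    t += duration
```

The four phases sum to the stated 7.2 s trial, and 128 trials come to a little over 15 min, in line with the approximate task duration.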

Fig. 1.

Task paradigm. Participants were initially presented with a fixation for 1.3 s. A 2 × 3 grid of 6 consonants was then presented for 2.0 s (i.e., encoding phase). The letters then disappeared from the grid for 3.0 s (i.e., maintenance phase). Finally, a single probe letter was presented in the top middle square of the grid (i.e., retrieval phase). Participants were instructed to indicate via button press whether the probe letter was one of the six letters presented in the previous encoding set. A total of 128 trials were presented.

2.4. MEG data acquisition

Neuromagnetic data were sampled continuously at 1 kHz using a Neuromag system with 306 sensors (Elekta/MEGIN, Helsinki, Finland) with an acquisition bandwidth of 0.1–330 Hz. All recordings were conducted in a one-layer magnetically shielded room with active shielding engaged. Prior to MEG measurement, four coils were attached to the subject’s head and localized, together with the three fiducial points and scalp surface, with a 3-D digitizer (Fastrak 3SF0002, Polhemus Navigator Sciences, Colchester, VT, USA). Once the subject was positioned for MEG recording, an electric current with a unique frequency label (e.g., 322 Hz) was fed to each of the coils. This induced a measurable magnetic field and allowed each coil to be localized in reference to the sensors throughout the recording session, and thus head position was continuously monitored relative to the sensor array. Offline, MEG data from each subject were individually corrected for head motion and subjected to noise reduction using the signal space separation method with a temporal extension (tSSS; Taulu and Simola, 2006, Taulu et al., 2005). To correct for head motion, the position of the head throughout the recording was aligned to the individual’s head position when the recording was initiated.

2.5. MEG coregistration & structural MRI processing

Because head position indicator coil locations were also known in head coordinates, all MEG measurements could be transformed into a common coordinate system. With this coordinate system, each participant’s MEG data were coregistered with structural T1-weighted MRI data prior to source space analyses using BESA MRI (Version 2.0). Structural MRI data were aligned parallel to the anterior and posterior commissures and transformed into the Talairach coordinate system (Talairach and Tournoux, 1988). Following source analysis (i.e., beamforming), each subject’s functional images were transformed into standardized space using the transform applied to the structural MRI volume.

2.6. MEG time-frequency transformation and statistics

Cardiac and eye-blink artifacts were removed from the data using signal-space projection (SSP), which was accounted for during source reconstruction (Uusitalo and Ilmoniemi, 1997). The continuous magnetic time series was divided into epochs of 7.2 s duration, with baseline being defined as −0.4 to 0.0 s before initial stimulus onset. Epochs containing artifacts were rejected based on a fixed threshold method, supplemented with visual inspection. Artifact-free epochs were transformed into the time–frequency domain using complex demodulation (resolution: 2.0 Hz, 25 ms; Papp and Ktonas, 1977), and the resulting spectral power estimations per sensor were averaged over trials to generate time–frequency plots of mean spectral density. These sensor-level data were normalized by dividing the power value of each time–frequency bin by the respective bin’s baseline power, which was calculated as the mean power during the −0.4 to 0.0 s time period. This normalization allowed task-related power fluctuations to be visualized in sensor space.
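The baseline normalization described above can be illustrated with a small sketch. It is not the BESA implementation: the function name is hypothetical, the spectrogram is a plain list with one row per frequency bin, times are in integer milliseconds at the stated 25 ms resolution, and treating the baseline as the half-open interval from −400 ms up to (but excluding) 0 ms is an assumption. Expressing the ratio as a percentage change from baseline is one common way to display such data.

```python
def baseline_ratio(power, times_ms, base_start_ms=-400, base_end_ms=0):
    """Divide each time-frequency bin by its frequency row's mean baseline power.

    power: list of rows (one per frequency bin), each a list over time samples.
    Assumption: the baseline window is base_start_ms <= t < base_end_ms.
    """
    base_idx = [i for i, t in enumerate(times_ms)
                if base_start_ms <= t < base_end_ms]
    normalized = []
    for row in power:
        base = sum(row[i] for i in base_idx) / len(base_idx)
        normalized.append([p / base for p in row])
    return normalized


# Toy spectrogram with a single frequency bin (illustrative, not study data):
# power is 2.0 until 200 ms after stimulus onset, then drops to 1.0.
times_ms = list(range(-400, 600, 25))
toy_power = [[2.0 if t < 200 else 1.0 for t in times_ms]]

ratio = baseline_ratio(toy_power, times_ms)
# Percentage change from baseline: negative values correspond to an ERD.
percent_change = [[100.0 * (r - 1.0) for r in row] for row in ratio]
```

In the toy data, the late samples sit at half the baseline power, which corresponds to a 50% decrease from baseline.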

The time–frequency windows subjected to beamforming (i.e., imaging) in this study were derived through a two-stage statistical analysis of the sensor-level spectrograms across the entire array of gradiometers (magnetometer data were not analyzed) during the five-second “encoding” and “maintenance” time windows. Each data point in the spectrogram was initially evaluated using a mass univariate approach based on the general linear model. To reduce the risk of false positive results while maintaining reasonable sensitivity, a two-stage procedure was followed to control for Type 1 error. In the first stage, one-sample t-tests were conducted on each data point and the output spectrogram of t-values was thresholded at p < 0.05 to define time–frequency bins containing potentially significant oscillatory deviations across all participants. In stage two, time–frequency bins that survived the threshold were clustered with temporally and/or spectrally neighboring bins that were also above the (p < 0.05) threshold on sensors within 4 cm of each other (i.e., spatial clustering), and a cluster value was derived by summing all of the t-values of all data points in the cluster. Nonparametric permutation testing was then used to derive a distribution of cluster-values and the significance level of the observed clusters (from stage one) was tested directly using this distribution (Ernst, 2004, Maris and Oostenveld, 2007). For each comparison, at least 10,000 permutations were computed to build a distribution of cluster values. Based on these analyses, the time–frequency windows that contained significant oscillatory events across all participants during the encoding and maintenance phases were subjected to the beamforming analysis.
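The logic of the two-stage procedure can be sketched on a deliberately simplified case. The example below is an illustration under stated assumptions, not the analysis pipeline used in the study: it clusters along a single dimension (adjacent time bins) rather than across time, frequency, and neighboring sensors; it builds the null distribution by randomly flipping the sign of each participant's data, which is one common choice for one-sample designs; it takes the critical t-value as an input (2.045 for a two-tailed p < 0.05 with 29 degrees of freedom); and it uses 1,000 permutations on simulated data to keep the run short. All function names are hypothetical.

```python
import math
import random


def one_sample_t(values):
    """One-sample t statistic against zero."""
    n = len(values)
    m = sum(values) / n
    var = sum((v - m) ** 2 for v in values) / (n - 1)
    return m / math.sqrt(var / n) if var > 0 else 0.0


def cluster_sums(tvals, t_crit):
    """Stage 2: sum t-values over runs of adjacent supra-threshold, same-sign bins."""
    sums, current, sign = [], 0.0, 0
    for t in tvals:
        s = (t > t_crit) - (t < -t_crit)      # +1, -1, or 0 (below threshold)
        if s != 0 and s == sign:
            current += t                       # extend the running cluster
        else:
            if sign != 0:
                sums.append(current)           # close the previous cluster
            current, sign = (t, s) if s != 0 else (0.0, 0)
    if sign != 0:
        sums.append(current)
    return sums


def cluster_permutation_test(data, t_crit, n_perm=1000, seed=0):
    """data[participant][bin] holds baseline-corrected power.

    Returns a list of (cluster_sum, p_value) pairs for the observed clusters.
    """
    rng = random.Random(seed)
    n_bins = len(data[0])

    def t_map(d):
        # Stage 1: mass univariate one-sample t-test at every bin.
        return [one_sample_t([row[b] for row in d]) for b in range(n_bins)]

    observed = cluster_sums(t_map(data), t_crit)
    null_max = []
    for _ in range(n_perm):
        flipped = []
        for row in data:
            sgn = rng.choice((-1.0, 1.0))      # assumption: sign-flip permutation
            flipped.append([sgn * v for v in row])
        sums = cluster_sums(t_map(flipped), t_crit)
        null_max.append(max((abs(s) for s in sums), default=0.0))
    return [(c, sum(m >= abs(c) for m in null_max) / n_perm) for c in observed]


# Simulated data: 30 participants, 20 time bins, a power decrease in bins 5-9.
sim = random.Random(42)
toy = [[sim.gauss(-1.5 if 5 <= b < 10 else 0.0, 1.0) for b in range(20)]
       for _ in range(30)]
results = cluster_permutation_test(toy, t_crit=2.045, n_perm=1000)
```

Comparing each observed cluster sum against the distribution of the maximum cluster sum per permutation is what controls the family-wise error rate across all bins, which is why a lenient bin-level threshold in stage one remains acceptable.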

2.7. MEG source imaging & statistics

Cortical networks were imaged through an extension of the linearly constrained minimum variance vector beamformer (Hillebrand et al., 2005, Liljeström et al., 2005, Van Veen et al., 1997), which employs spatial filters in the frequency domain to calculate source power for the entire brain volume. The single images are derived from the cross spectral densities of all combinations of MEG gradiometers averaged over the time–frequency range of interest, and the solution of the forward problem for each location on a grid specified by input voxel space. Following convention, we computed noise-normalized, differential source power per voxel in each participant using active (i.e., task) and passive (i.e., baseline) periods of equal duration and bandwidth (Van Veen et al., 1997). Such images are typically referred to as pseudo-t maps, with units (i.e., pseudo-t) that reflect noise-normalized power differences per voxel. MEG pre-processing and imaging used the Brain Electrical Source Analysis (BESA version 6.0) software.

Normalized differential source power was computed for the selected time–frequency bands, using a common baseline, over the entire brain volume per participant at 4.0 × 4.0 × 4.0 mm resolution. Each participant's functional images were then transformed into standardized space using the transform that was previously applied to the structural images and spatially resampled to 1.0 × 1.0 × 1.0 mm resolution. Due to limitations of baseline length, the beamformer images were computed in non-overlapping 400 ms time windows and then averaged across each time window of interest, which resulted in one encoding and one maintenance image per person (see Results for exact time–frequency windows imaged). Then, whole-brain independent-samples t-tests were performed to dissociate the impact of hearing loss on the neural dynamics serving each phase of working memory. All output statistical maps were then adjusted for multiple comparisons using a spatial extent threshold (i.e., cluster restriction; k = 300 contiguous voxels) based on the theory of Gaussian random fields (Poline et al., 1995, Worsley et al., 1999, Worsley et al., 1996). Specifically, statistical maps were initially thresholded at p < .005, and then a cluster-based correction method was applied such that at least 300 contiguous voxels must be significant at p < .005 in order for a cluster to be considered significant.
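The spatial extent threshold amounts to a connected-component search over supra-threshold voxels. The sketch below is illustrative only: the function name is hypothetical, the statistical map is represented as a dictionary from voxel index to p-value, and treating voxels as contiguous only when they share a face (6-connectivity) is an assumption, since the text does not state the connectivity rule.

```python
from collections import deque

# Assumption: two voxels are contiguous if they share a face.
FACE_NEIGHBORS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0),
                  (0, -1, 0), (0, 0, 1), (0, 0, -1))


def extent_threshold(pvals, p_thresh=0.005, k=300):
    """Keep clusters of at least k contiguous voxels with p < p_thresh.

    pvals: dict mapping (x, y, z) voxel indices to p-values.
    Returns a list of surviving clusters, each a set of voxel indices.
    """
    candidates = {v for v, p in pvals.items() if p < p_thresh}
    seen, clusters = set(), []
    for start in candidates:
        if start in seen:
            continue
        cluster, queue = {start}, deque([start])
        seen.add(start)
        while queue:                            # breadth-first flood fill
            x, y, z = queue.popleft()
            for dx, dy, dz in FACE_NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                if nb in candidates and nb not in seen:
                    seen.add(nb)
                    cluster.add(nb)
                    queue.append(nb)
        if len(cluster) >= k:
            clusters.append(cluster)
    return clusters


# Toy 12 x 12 x 12 map (not study data): a 7 x 7 x 7 block (343 voxels) and a
# separate 2 x 2 x 2 block (8 voxels) pass the voxel-wise threshold.
toy_map = {}
for x in range(12):
    for y in range(12):
        for z in range(12):
            big = x < 7 and y < 7 and z < 7
            small = 9 <= x < 11 and 9 <= y < 11 and 9 <= z < 11
            toy_map[(x, y, z)] = 0.001 if (big or small) else 0.5

surviving = extent_threshold(toy_map, p_thresh=0.005, k=300)
```

Only the large block survives in this toy map; the small block is significant voxel-wise but falls short of the 300-voxel extent.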

3. Results

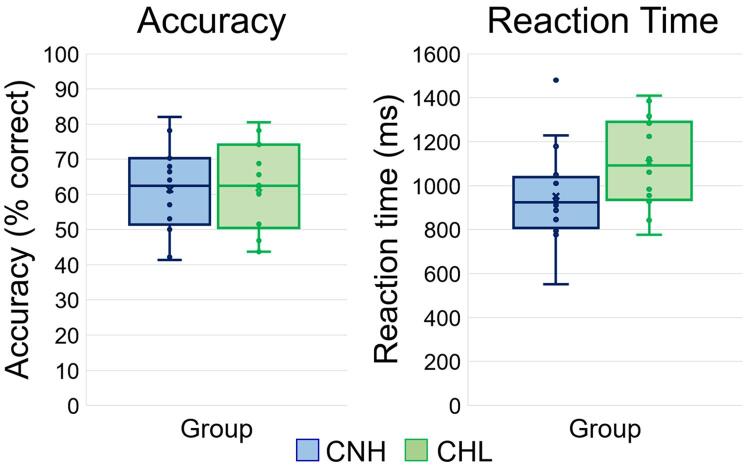

Behavioral and neuropsychological test results from the final sample of 30 participants (14 CHL and 16 CNH) are found in Table 1, and performance on the task for each group is further shown in Fig. 2. There were no significant differences in accuracy between CNH and CHL, t(28) = 0.078, p = .938. However, there was a marginally significant difference in reaction time between groups, t(28) = 1.803, p = .082, Cohen’s d = 0.664, such that CHL were slower to identify whether the probe was one of the previous encoding set than CNH. All trials were subject to preprocessing. Following artifact rejection, an average of 95.79 (SD: 10.82) trials were used for the CHL and an average of 99.64 (SD: 10.64) trials were used for the CNH; this difference was not significant, t(28) = 0.986, p = .333.

Table 1.

Independent t-tests of Behavioral Measures between Groups.

| Metric | CNH Mean | CNH SD | CHL Mean | CHL SD | t | p |
|---|---|---|---|---|---|---|
| Age (months) | 141.313 | 23.044 | 143.500 | 23.067 | −0.259 | 0.797 |
| Accuracy (% correct) | 61.425 | 11.919 | 61.775 | 12.584 | −0.078 | 0.938 |
| Reaction time (ms) | 980.584 | 223.625 | 1118.776 | 191.626 | −1.804 | 0.082 |
| WASI-II Verbal Comprehension Index (VCI) | 98.375 | 15.949 | 102.000 | 11.701 | −0.701 | 0.489 |
| WASI-II Perceptual Reasoning Index (PRI)* | 105.563 | 18.004 | 108.357 | 10.375 | −0.529 | 0.602 |

Notes: CNH = children with normal hearing; CHL = children with hearing loss;

*WASI-II PRI: Levene’s Test for Equality of Variances was significant; t and p values are corrected accordingly
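The t statistics in Table 1 can be approximately recovered from the reported group means, standard deviations, and sample sizes (16 CNH, 14 CHL). The sketch below assumes a standard pooled-variance independent-samples t-test and a pooled-SD Cohen's d; the function name is hypothetical, and the PRI row is not covered because it was corrected for unequal variances.

```python
import math


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance t statistic, degrees of freedom, and Cohen's d."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    t = (m1 - m2) / se
    d = (m1 - m2) / math.sqrt(pooled_var)
    return t, df, d


# Group 1 = CNH (n = 16), group 2 = CHL (n = 14); values taken from Table 1.
t_rt, df_rt, d_rt = pooled_t_from_summary(980.584, 223.625, 16,
                                          1118.776, 191.626, 14)
t_age, df_age, d_age = pooled_t_from_summary(141.313, 23.044, 16,
                                             143.500, 23.067, 14)
```

With these inputs the reaction-time comparison gives t(28) of about −1.80 and a pooled-SD effect size of roughly 0.66 in magnitude, close to the reported values; small discrepancies are expected from rounding in the tabulated summaries.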

Fig. 2.

Behavioral Results. Box plots of accuracy (in percent correct; left) and reaction time (in ms; right) are shown. The center line within each box denotes the median, and the bottom and top of each box designate the first and third quartile, respectively. Each lower and upper stem reflects the minimum and maximum values. The CNH are denoted in blue, while the CHL are shown in green. There was a marginal difference in reaction time between groups, such that CHL were slower to respond than CNH, t(28) = 1.803, p = .082. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

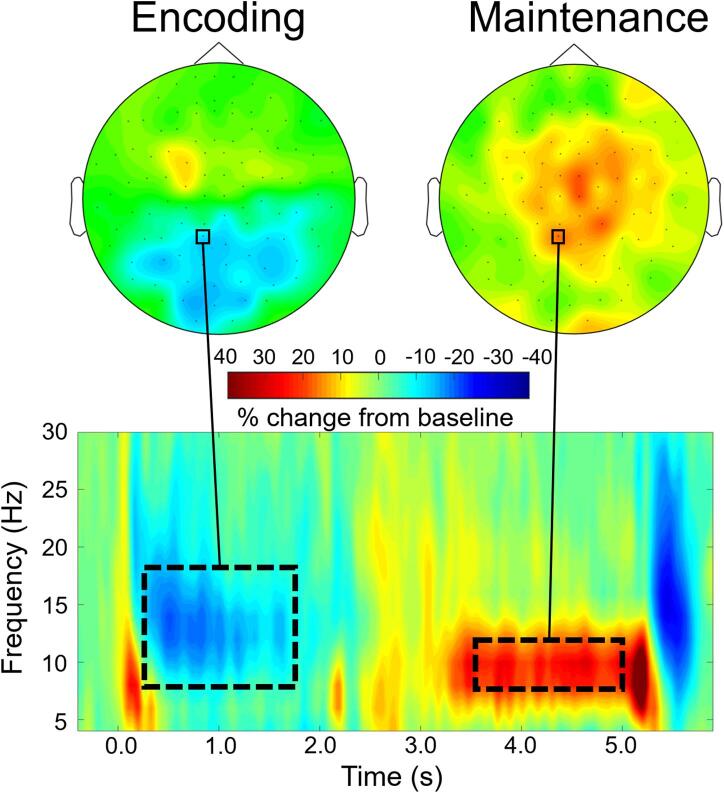

3.1. Identification of oscillatory events

Time-frequency bins of interest were identified across the CHL and CNH together. The two-stage statistical analysis of the time–frequency spectrograms across the sensor array resulted in two significant bins. During the encoding phase, there was a significant alpha–beta (8–18 Hz) event-related desynchronization (ERD) response that began around 200 ms after initial presentation of the encoding set and was sustained throughout encoding (p < .0001, corrected). This cluster was found largely in posterior and central sensors bilaterally. There was also a significant event-related synchronization (ERS) response in a narrower alpha band (i.e., 8–12 Hz) that extended from about 1400 ms after maintenance onset until the onset of retrieval in medial posterior, central, and frontal sensors (p < .0001, corrected). Fig. 3 shows the sensor-level topography of each time–frequency bin across groups, as well as a representative sensor. These bins were imaged individually for each participant to determine the distribution of these neural responses in the brain.

Fig. 3.

Significant time–frequency windows serving working memory processing. Time-frequency spectrogram from a representative sensor averaged across CNH and CHL is shown on the bottom, with frequency (in Hz) shown on the y-axis and time (in s; 0.0 s = encoding stimulus onset) shown on the x-axis. Color bars denote the percentage change from baseline, with warmer colors reflecting increases in power from baseline (i.e., ERS) and cooler colors reflecting decreases in neural power from baseline (i.e., ERD). Dotted boxes denote time–frequency components that were selected for source imaging. The topographic distribution of activity across all sensors within each time–frequency window is shown on top (left: encoding; right: maintenance). Note that the same sensor is highlighted with a black box in each topographic map and shown in the bottom panel.

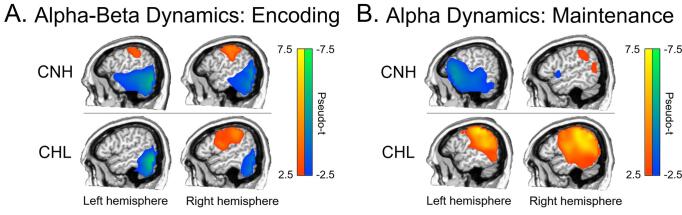

Group-averaged whole-brain maps of each response showed distinct patterns of neural oscillatory activity serving working memory encoding and maintenance in each group. The CNH showed a strong ERD response that peaked in the bilateral lateral occipital cortices during encoding and spread superior and anterior into left parietal regions and left inferior frontal gyrus during maintenance. Interestingly, while these more posterior responses were present in the CHL during encoding, at least qualitatively the left hemisphere dynamics were largely absent in this group during maintenance. In addition, the maintenance-related alpha ERS response was found in the parieto-occipital cortices in both CNH and CHL, in line with previous research. Nonetheless, the CHL showed a maintenance-related ERS response that was more bilateral and widespread on average. Group-wise averages of encoding and maintenance-related neural responses are shown in Fig. 4A and 4B, respectively.

Fig. 4.

Grand averaged maps of encoding and maintenance-related neural activity. A) Grand averages (pseudo-t) of alpha–beta encoding-related activity for CNH (top) and CHL (bottom). Left hemispheric activity is shown on the left, while right hemispheric activity is shown on the right. Pseudo-t values are denoted with the color bar to the right, whereby warmer colors denote an increase (ERS) from baseline, while cooler colors denote a decrease (ERD) from baseline. B) Grand averages (pseudo-t) of alpha maintenance-related activity for CNH (top) and CHL (bottom). Left hemispheric activity is shown on the left, while right hemispheric activity is shown on the right. Pseudo-t values are denoted with the color bar to the right.

3.2. Impact of hearing loss on working memory neural dynamics

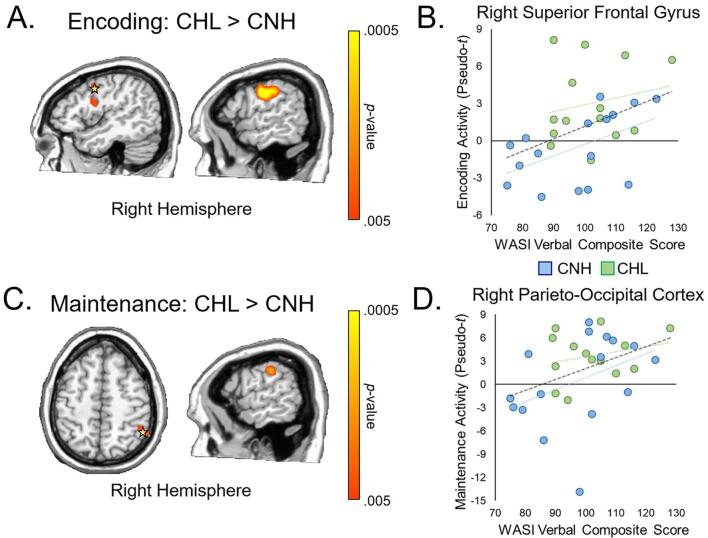

As described above, whole-brain independent samples t-tests (CHL vs. CNH) were performed on the encoding and maintenance images separately. Resultant images were thresholded at p < .005 and corrected for multiple comparisons (k = 300 voxels). We found significant differences in encoding-related alpha–beta activity between CNH and CHL in the right inferior and superior frontal gyri, where CHL showed an ERS while CNH showed little-to-no response, as well as the right precentral gyrus, where CHL displayed an ERS response while CNH had a slight ERD response (p < .005, corrected; Fig. 5A). While both CNH and CHL showed a relative ERS in the right parieto-occipital cortex during maintenance, we found significant elevations (i.e., stronger ERS) in this parieto-occipital maintenance-related activity in CHL relative to CNH (p < .005, corrected; Fig. 5C). In addition, we found a significant elevation in maintenance-related activity in the right precentral gyrus in CHL relative to CNH, where CHL showed an ERS while CNH showed little-to-no response (p < .005, corrected; Fig. 5C). To determine the relationship between differences in the neural dynamics serving working memory and behavioral outcomes, we extracted peak voxel values from the regions that showed significant differences between groups and correlated these values with neuropsychological performance. We found a significant correlation between encoding-related oscillations in the right superior frontal cortex and WASI-II Verbal Composite scores, which quantifies verbal intelligence, r(30) = 0.387, p = .034, such that greater activity in this region during encoding was related to higher scores of verbal intelligence (Fig. 5B). Similarly, we found a significant correlation between parieto-occipital maintenance-related activity and WASI-II Verbal Composite score, r(30) = 0.423, p = .020, such that greater neural activity in this region was related to better verbal intelligence scores (Fig. 5D). 
Regarding behavior on the task, there was a significant correlation between reaction time and encoding-related activity in the right precentral gyrus, r(30) = 0.377, p = .040, such that greater activity in this region was related to slower reaction times. However, given the significant difference in neural activity and the trending difference in reaction time between groups, we also ran this correlation after centering each variable, and the correlation was no longer significant, r(30) = 0.245, p = .192. No other correlations were significant.
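
The correlation statistics reported above can be illustrated with a short standard-library Python sketch. This is not the authors' analysis pipeline. It assumes 30 paired observations and a two-tailed test, neither of which is stated in this section, and it assumes that "centering each variable" means subtracting each group's own mean. All function names are hypothetical.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def center_within_group(values, groups):
    """Subtract each group's mean from its members (assumed meaning of 'centering')."""
    group_means = {
        g: mean(v for v, gg in zip(values, groups) if gg == g) for g in set(groups)
    }
    return [v - group_means[g] for v, g in zip(values, groups)]


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p-value: one minus the central area, integrated by Simpson's rule."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    area = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return max(0.0, 1.0 - 2.0 * area * h / 3)


def r_to_p(r, n):
    """Convert a Pearson r from n pairs into (t, p) with df = n - 2."""
    df = n - 2
    t = r * math.sqrt(df / (1 - r * r))
    return t, two_tailed_p(t, df)


# Assumed n = 30 pairs; r is the maintenance-related correlation reported above.
t_stat, p_val = r_to_p(0.423, 30)
print(f"t = {t_stat:.2f}, p = {p_val:.3f}")
```

Under these assumptions, r = 0.423 corresponds to a p-value of approximately .020. To mimic the follow-up analysis, one would pass both variables through `center_within_group` before calling `pearson_r`, which removes any correlation driven purely by group mean differences.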

Fig. 5.

Impact of hearing loss on working memory neural dynamics. A) Whole-brain statistical analyses showed that CHL had significantly greater alpha–beta activity in the right superior and inferior frontal and precentral gyri relative to CNH, p < .005 (corrected). B) Response power (pseudo-t, y-axis) in the right superior frontal cortex (starred in A) was significantly correlated with WASI-II Verbal Composite scores (x-axis) across groups, p = .034. Data are color-coded by group, with CNH in blue and CHL in green. C) Whole-brain statistical analyses showed significant elevations in alpha activity during the maintenance phase in CHL relative to CNH in the right parieto-occipital cortex and right precentral gyrus, p < .005 (corrected). D) Maintenance-related alpha activity (pseudo-t, y-axis) in the parieto-occipital cortex (starred in C) was significantly positively correlated with WASI-II Verbal Composite scores (x-axis) across groups, p = .020. Data are color-coded by group, with CNH in blue and CHL in green. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)
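
The group comparison behind panels A and C (a voxel-wise independent-samples t-test, thresholded and then corrected with k = 300 voxels) can be sketched as follows. This is a minimal illustration, not the authors' implementation. It assumes that k denotes a minimum number of contiguous supra-threshold voxels, that contiguity means sharing a face (6-connectivity), and that clusters are formed separately for positive and negative effects. The function names and the toy map are hypothetical.

```python
import math
from collections import deque
from statistics import mean, variance


def independent_t(a, b):
    """Student's independent-samples t statistic with pooled variance."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))


# Offsets to the six face-sharing neighbors of a voxel (an assumed connectivity rule).
FACE_NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cluster_extent_threshold(t_map, t_crit, k):
    """Return voxels with |t| > t_crit that sit in same-sign clusters of >= k voxels.

    t_map maps (x, y, z) voxel coordinates to t-values.
    """
    supra = {v for v, t in t_map.items() if abs(t) > t_crit}
    seen, kept = set(), set()
    for seed in supra:
        if seed in seen:
            continue
        sign = t_map[seed] > 0
        cluster, queue = {seed}, deque([seed])
        seen.add(seed)
        # Breadth-first flood fill over supra-threshold neighbors of the same sign.
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in FACE_NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                if nb in supra and nb not in seen and (t_map[nb] > 0) == sign:
                    seen.add(nb)
                    cluster.add(nb)
                    queue.append(nb)
        if len(cluster) >= k:
            kept |= cluster
    return kept


# Toy map: a 4-voxel column of strong positive t-values plus one isolated voxel.
toy_map = {(0, 0, z): 4.0 for z in range(4)}
toy_map[(5, 5, 5)] = 4.5  # isolated, so removed when k = 3
toy_map[(1, 0, 0)] = 1.0  # sub-threshold, so ignored
survivors = cluster_extent_threshold(toy_map, t_crit=3.0, k=3)
print(sorted(survivors))
```

In a real analysis, `t_crit` would be the t-value corresponding to p < .005 for the relevant degrees of freedom, and `k` would be 300. The toy values here are chosen only to keep the example small.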

4. Discussion

Despite the growing literature suggesting that working memory capacity is significantly linked to language function in those with and without hearing loss (McCreery et al., 2017, McCreery et al., 2019, Stiles et al., 2012), there has been no investigation of the neural dynamics underlying these relationships to date. To this end, this study used MEG during a well-established verbal working memory task and a battery of neuropsychological tests to determine the impact of hearing loss on neural oscillatory dynamics that underlie working memory encoding and maintenance processing. We decomposed neural data into the time–frequency domain and imaged significant oscillatory responses from the encoding and maintenance phases separately, and then performed whole-brain independent-samples t-tests between CNH and CHL. Crucially, we found phase-specific differences in neural activity between CHL and CNH. During encoding, CHL had significantly more alpha–beta activity in the right superior and inferior frontal gyri, as well as the right precentral gyrus. In contrast, during maintenance, we found significant elevations in parieto-occipital alpha activity in CHL relative to CNH, as well as persistent differences in the right precentral gyrus. Perhaps most interestingly, encoding-related activity in the superior frontal cortex and maintenance-related activity in the parieto-occipital cortex were both positively correlated with verbal intelligence scores as measured by the WASI-II. These data are the first of their kind to identify aberrations in neural dynamics during higher-order cognitive performance in CHL relative to CNH, and suggest a tight link between task-related activity in these regions and language function.

During encoding of the letter stimuli, CHL showed significant elevations in activity in the right superior and inferior prefrontal cortices relative to CNH. This increased recruitment of task-relevant homologous regions echoes the pattern of results found in healthy aging (Proskovec et al., 2019, Reuter-Lorenz et al., 2000) as well as in disorders such as autism spectrum disorder (Koshino et al., 2005, Yeung et al., 2019) and post-traumatic stress disorder (McDermott et al., 2016). We hypothesize that this increased recruitment serves as a compensatory mechanism so that CHL can perform at the same level behaviorally as CNH. Indeed, in the healthy aging literature, the Compensation-Related Utilization of Neural Circuits Hypothesis (CRUNCH) states that older adults utilize more widespread neural resources at the same level of task difficulty and performance as younger adults. As task difficulty increases, those additional resources are exhausted and performance declines. If CRUNCH can be applied in the context of hearing loss, then it may explain some of the variability in behavioral findings. That is, CHL recruit additional neural resources and perform at the level of CNH, but at some point of task difficulty or exhaustion of resources, behavioral performance declines. The “point of exhaustion” with regard to neural activity is impossible to quantify, but nonetheless this pattern of activity may explain why some previous studies show differences in working memory performance in those with hearing loss relative to CNH (Pisoni and Cleary, 2003) while others do not (McCreery et al., 2019, Stiles et al., 2012).

In addition to encoding-related alpha–beta increases in frontal activity, CHL also showed an increase in maintenance-related alpha activity in the parieto-occipital cortices. One of the most widely studied neural oscillatory responses induced by working memory tasks is the robust parieto-occipital alpha ERS response that emerges solely during the maintenance phase of the task. This response has been shown to serve as a crucial mechanism for orienting attention and/or inhibiting distractors in order to “protect” the representation of a stimulus that has been encoded. For example, Bonnefond and Jensen (2012) presented participants with a verbal working memory task during MEG. During the maintenance phase, participants were given either a related distractor (i.e., a strong distractor) or an unrelated distractor (i.e., a weak distractor). They found that the strong distractor elicited a significantly stronger alpha ERS response during the maintenance phase relative to the weak distractor. Further, the larger the difference in alpha ERS during maintenance, the smaller the difference in reaction time between the two conditions; in other words, participants performed better in the strong distractor condition if they exhibited a stronger ERS during maintenance, relative to the weak distractor condition. Of note, the parieto-occipital alpha ERS response emerges during the maintenance phase even in the absence of distractors (such as in the current study), which suggests that it serves as a buffer against even basic inattention. For example, Proskovec et al. (2019) used a task similar to that of the current study, with two loads of encoding stimuli (i.e., 4 letters or 6 letters). They found that the maintenance-related alpha ERS response increased in amplitude with greater task difficulty (Proskovec et al., 2019), even in the absence of a distractor. In this study, we found that CHL exhibited an elevated parieto-occipital alpha ERS response compared to CNH peers.
Given that this response typically increases in amplitude with the difficulty of a task, it is possible that at the same level of difficulty, CHL required a higher level of suppression or inhibition in order to properly maintain the stimulus. Interestingly, increases in maintenance-related alpha ERS responses during working memory processing have also been found in ADHD and other related attention disorders (Lenartowicz et al., 2019, Missonnier et al., 2013); thus, it is possible that this elevation in CHL may be related to altered attention function. Behaviorally speaking, CHL have been shown to be diagnosed with ADHD at higher rates than CNH (Soleimani et al., 2020, Theunissen et al., 2014a), though the literature is relatively variable (see Theunissen et al., 2014b). More broadly, CHL exhibit higher false alarm rates in tests of inhibition (e.g., in a “go/no-go” task; Bell et al., 2020) relative to CNH, so this elevated attention allocation/distractor inhibition response in CHL may be indicative of widespread (albeit potentially subclinical) attention-related alterations in brain function due to hearing loss.

In addition to the phase-specific (i.e., encoding vs. maintenance) differences in neural activity, CHL also exhibited significantly more activity in the right motor cortex relative to CNH during both encoding and maintenance. Interestingly, this response was an increase in activity (i.e., event-related synchronization, or ERS), while activation of the motor cortex typically manifests as a decrease in activity (i.e., event-related desynchronization, or ERD). Thus, motor cortical ERS responses are typically referred to as motor inhibition responses (Neuper et al., 2006). In the current study, there seems to be an increase in motor cortical inhibition during the performance of this task in CHL relative to CNH. It is possible that this response pattern reflects subvocal rehearsal of the encoding stimuli, whereby the motor inhibition response serves to suppress actually vocalizing the letter stimuli. Following this logic, a greater motor suppression response may reflect a less efficient subvocal rehearsal mechanism in CHL relative to CNH. Indeed, a study by Burkholder and Pisoni (2003) investigated the relationship between articulation rates and working memory span in children with severe-to-profound hearing loss who wore cochlear implants. They showed that children with slower articulation rates also had shorter memory spans, suggesting that the efficiency of subvocal rehearsal may predict working memory performance in this population (Burkholder and Pisoni, 2003). This work has since been extended in the context of CHL (Stiles et al., 2012). Taken together, it is possible that the motor response pattern found in the current study is a neurophysiological correlate of aberrations in subvocal rehearsal found in the previous behavioral literature.

A key finding of the present study was that the amplitude of activity in the right superior frontal cortex during encoding, as well as in the parieto-occipital cortex during maintenance, significantly correlated with language function across groups. This working memory-language relationship has been corroborated in behavioral studies of CNH and CHL, which found a tight link between working memory capacity and language ability (McCreery et al., 2017, McCreery et al., 2019, Stiles et al., 2012), and additionally that these factors were significantly related to a child’s ability to understand speech in noise (McCreery et al., 2017, McCreery et al., 2019). Nonetheless, the neural correlates of this relationship have not been studied to date. It is possible that these patterns reflect other aspects of stimulus processing during the task, such as bottom-up visual or letter processing. However, given that the neural data in question were extracted from regions that were significantly different between groups in time–frequency windows that were significantly active during each stage of working memory processing, we argue that these patterns are likely to be related to working memory processing itself and not simply to the visual presentation of the stimuli. Future studies should probe this dissociation. Nevertheless, the current study provides strong preliminary evidence of a direct physiological link between the phase-specific neural dynamics serving working memory function and language ability. Specifically, greater recruitment of these task-relevant fronto-parietal regions positively relates to a child’s language function. It is possible that a child’s ability to better harness additional fronto-parietal dynamics as needed during verbal working memory performance leads to greater success in language acquisition. Future work should more closely investigate the link between neural responsiveness during working memory processing and language function in this population.

In conclusion, this study sought to identify the impact of hearing loss on the neural dynamics serving verbal working memory encoding and maintenance in CHL compared to CNH and how these dynamics are related to language ability. We found elevations in alpha–beta activity during encoding in the right superior and inferior frontal cortices, as well as significantly increased alpha activity in the parieto-occipital cortices during maintenance in CHL relative to CNH peers. These results suggest phase-specific recruitment of additional neural resources in CHL during the performance of the working memory task, possibly as a compensatory mechanism. In addition, we found sustained increases in right precentral gyrus activity during both encoding and maintenance in CHL, which might be related to increased subvocal rehearsal and subsequent motor suppression. Importantly, superior frontal encoding-related activity and parieto-occipital maintenance-related activity significantly correlated with language function across groups. This provides strong neurophysiological evidence of a link between working memory function and language, in line with previous behavioral literature. In sum, these results are the first to suggest altered spatiotemporal neurophysiological dynamics serving verbal working memory processing in CHL, and suggest that neuroimaging with MEG may hold promise in clarifying the impact of hearing loss on language and cognitive development.

Declaration of Competing Interest and Sources of Funding

The authors report no conflicts of interest. This work was supported by the National Institutes of Health (R01-DC013591 to RWM). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Acknowledgements

We want to thank Dr. Meredith Spratford for her assistance and support throughout the project, and Dr. Lori Leibold for critical input during manuscript preparation. We also want to thank our participants and their families for participating.

References

- Akeroyd M.A. Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. Int. J. Audiol. 2008;47(Suppl 2):S53–S71. doi: 10.1080/14992020802301142.

- Baddeley A. Working memory. Science. 1992;255(5044):556–559. doi: 10.1126/science.1736359.

- Baddeley A. The episodic buffer: a new component of working memory? Trends Cogn. Sci. 2000;4(11):417–423. doi: 10.1016/s1364-6613(00)01538-2.

- Baddeley A. Working memory and language: an overview. J. Commun. Disord. 2003;36(3):189–208. doi: 10.1016/s0021-9924(03)00019-4.

- Baddeley A. Working memory: looking back and looking forward. Nat. Rev. Neurosci. 2003;4(10):829–839. doi: 10.1038/nrn1201.

- Baddeley A. Working memory: theories, models, and controversies. Annu. Rev. Psychol. 2012;63(1):1–29. doi: 10.1146/annurev-psych-120710-100422.

- Baddeley A., Gathercole S., Papagno C. The phonological loop as a language learning device. Psychol. Rev. 1998;105(1):158–173. doi: 10.1037/0033-295x.105.1.158.

- Bastiaansen M.C.M., Posthuma D., Groot P.F.C., de Geus E.J.C. Event-related alpha and theta responses in a visuo-spatial working memory task. Clin. Neurophysiol. 2002;113(12):1882–1893. doi: 10.1016/s1388-2457(02)00303-6.

- Bell L., Scharke W., Reindl V., Fels J., Neuschaefer-Rube C., Konrad K. Auditory and visual response inhibition in children with bilateral hearing aids and children with ADHD. Brain Sci. 2020;10(5):307. doi: 10.3390/brainsci10050307.

- Besser J., Koelewijn T., Zekveld A.A., Kramer S.E., Festen J.M. How linguistic closure and verbal working memory relate to speech recognition in noise–a review. Trends Amplif. 2013;17(2):75–93. doi: 10.1177/1084713813495459.

- Bonnefond M., Jensen O. Alpha oscillations serve to protect working memory maintenance against anticipated distracters. Curr. Biol. 2012;22(20):1969–1974. doi: 10.1016/j.cub.2012.08.029.

- Bonnefond M., Jensen O. The role of gamma and alpha oscillations for blocking out distraction. Commun. Integr. Biol. 2013;6(1):e22702. doi: 10.4161/cib.22702.

- Burkholder R.A., Pisoni D.B. Speech timing and working memory in profoundly deaf children after cochlear implantation. J. Exp. Child Psychol. 2003;85(1):63–88. doi: 10.1016/s0022-0965(03)00033-x.

- Caudle S.E., Katzenstein J.M., Oghalai J.S., Lin J., Caudle D.D. Nonverbal cognitive development in children with cochlear implants: relationship between the Mullen Scales of Early Learning and later performance on the Leiter International Performance Scales-Revised. Assessment. 2014;21(1):119–128. doi: 10.1177/1073191112437594.

- Cowan N. Evolving conceptions of memory storage, selective attention, and their mutual constraints within the human information-processing system. Psychol. Bull. 1988;104(2):163–191. doi: 10.1037/0033-2909.104.2.163.

- Cowan N. The magical number 4 in short-term memory: a reconsideration of mental storage capacity. Behav. Brain Sci. 2001;24(1):87–114. doi: 10.1017/s0140525x01003922.

- Cowan N. Working memory underpins cognitive development, learning, and education. Educ. Psychol. Rev. 2014;26(2):197–223. doi: 10.1007/s10648-013-9246-y.

- Dawson P.W., Busby P.A., McKay C.M., Clark G.M. Short-term auditory memory in children using cochlear implants and its relevance to receptive language. J. Speech Lang Hear Res. 2002;45(4):789–801. doi: 10.1044/1092-4388(2002/064).

- de Villers-Sidani E., Simpson K.L., Lu Y.-F., Lin R.C.S., Merzenich M.M. Manipulating critical period closure across different sectors of the primary auditory cortex. Nat. Neurosci. 2008;11(8):957–965. doi: 10.1038/nn.2144.

- Dirks E., Ketelaar L., van der Zee R., Netten A.P., Frijns J.H.M., Rieffe C. Concern for others: a study on empathy in toddlers with moderate hearing loss. J. Deaf Stud. Deaf. Educ. 2017;22(2):178–186. doi: 10.1093/deafed/enw076.

- Dye M.W.G., Hauser P.C. Sustained attention, selective attention and cognitive control in deaf and hearing children. Hear. Res. 2014;309:94–102. doi: 10.1016/j.heares.2013.12.001.

- Embury C.M., Wiesman A.I., Proskovec A.L., Mills M.S., Heinrichs-Graham E., Wang Y.-P., Calhoun V.D., Stephen J.M., Wilson T.W. Neural dynamics of verbal working memory processing in children and adolescents. Neuroimage. 2019;185:191–197. doi: 10.1016/j.neuroimage.2018.10.038.

- Ernst M.D. Permutation methods: a basis for exact inference. Stat. Sci. 2004;19:676–685.

- Glick H., Sharma A. Cross-modal plasticity in developmental and age-related hearing loss: clinical implications. Hear. Res. 2017;343:191–201. doi: 10.1016/j.heares.2016.08.012.

- Gordon-Salant S., Cole S.S. Effects of age and working memory capacity on speech recognition performance in noise among listeners with normal hearing. Ear Hear. 2016;37(5):593–602. doi: 10.1097/AUD.0000000000000316.

- Harris M.S., Kronenberger W.G., Gao S., Hoen H.M., Miyamoto R.T., Pisoni D.B. Verbal short-term memory development and spoken language outcomes in deaf children with cochlear implants. Ear Hear. 2013;34(2):179–192. doi: 10.1097/AUD.0b013e318269ce50.

- Heinrichs-Graham E, Walker EA, Eastman JA, Frenzel MR, McCreery RW (under review). Amount of hearing aid use impacts neural oscillatory dynamics underlying verbal working memory processing for children with hearing loss. Ear Hear.

- Heinrichs-Graham E., Wilson T.W. Spatiotemporal oscillatory dynamics during the encoding and maintenance phases of a visual working memory task. Cortex. 2015;69:121–130. doi: 10.1016/j.cortex.2015.04.022.

- Hillebrand A., Singh K.D., Holliday I.E., Furlong P.L., Barnes G.R. A new approach to neuroimaging with magnetoencephalography. Hum. Brain Mapp. 2005;25(2):199–211. doi: 10.1002/hbm.20102.

- Hwang J.S., Kim K.H., Lee J.H. Factors affecting sentence-in-noise recognition for normal hearing listeners and listeners with hearing loss. J. Audiol. Otol. 2017;21(2):81–87. doi: 10.7874/jao.2017.21.2.81.

- Khairi Md Daud M., Noor R.M., Rahman N.A., Sidek D.S., Mohamad A. The effect of mild hearing loss on academic performance in primary school children. Int. J. Pediatr. Otorhinolaryngol. 2010;74(1):67–70. doi: 10.1016/j.ijporl.2009.10.013.

- Kirby B.J., Spratford M., Klein K.E., McCreery R.W. Cognitive abilities contribute to spectro-temporal discrimination in children who are hard of hearing. Ear Hear. 2019;40(3):645–650. doi: 10.1097/AUD.0000000000000645.

- Koshino H., Carpenter P.A., Minshew N.J., Cherkassky V.L., Keller T.A., Just M.A. Functional connectivity in an fMRI working memory task in high-functioning autism. Neuroimage. 2005;24(3):810–821. doi: 10.1016/j.neuroimage.2004.09.028.

- Kouwenberg M., Rieffe C., Theunissen S.C.P.M., de Rooij M., Scott J.G. Peer victimization experienced by children and adolescents who are deaf or hard of hearing. PLoS One. 2012;7(12):e52174. doi: 10.1371/journal.pone.0052174.

- Lenartowicz A., Truong H., Salgari G.C., Bilder R.M., McGough J., McCracken J.T., Loo S.K. Alpha modulation during working memory encoding predicts neurocognitive impairment in ADHD. J. Child Psychol. Psychiatry. 2019;60(8):917–926. doi: 10.1111/jcpp.13042.

- Liljeström M., Kujala J., Jensen O., Salmelin R. Neuromagnetic localization of rhythmic activity in the human brain: a comparison of three methods. Neuroimage. 2005;25(3):734–745. doi: 10.1016/j.neuroimage.2004.11.034.

- Lowenstein J.H., Cribb C., Shell P., Yuan Y.i., Nittrouer S. Children's suffix effects for verbal working memory reflect phonological coding and perceptual grouping. J. Exp. Child Psychol. 2019;183:276–294. doi: 10.1016/j.jecp.2019.03.003.

- Magimairaj B.M., Nagaraj N.K., Benafield N.J. Children's speech perception in noise: evidence for dissociation from language and working memory. J. Speech Lang Hear. Res. 2018;61(5):1294–1305. doi: 10.1044/2018_JSLHR-H-17-0312.

- Magimairaj B.M., Nagaraj N.K. Working memory and auditory processing in school-age children. Lang Speech Hear. Serv. Sch. 2018;49(3):409–423. doi: 10.1044/2018_LSHSS-17-0099.

- Magimairaj B.M., Nagaraj N.K., Sergeev A.V., Benafield N.J. Comparison of auditory, language, memory, and attention abilities in children with and without listening difficulties. Am. J. Audiol. 2020:1–18. doi: 10.1044/2020_AJA-20-00018.

- Maris E., Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods. 2007;164(1):177–190. doi: 10.1016/j.jneumeth.2007.03.024.

- McCreery R.W., Walker E.A., Spratford M., Oleson J., Bentler R., Holte L., Roush P. Speech recognition and parent ratings from auditory development questionnaires in children who are hard of hearing. Ear Hear. 2015;36(Suppl 1):60S–75S. doi: 10.1097/AUD.0000000000000213.

- McCreery R.W., Spratford M., Kirby B., Brennan M. Individual differences in language and working memory affect children's speech recognition in noise. Int. J. Audiol. 2017;56(5):306–315. doi: 10.1080/14992027.2016.1266703.

- McCreery R.W., Walker E.A., Spratford M., Lewis D., Brennan M. Auditory, cognitive, and linguistic factors predict speech recognition in adverse listening conditions for children with hearing loss. Front. Neurosci. 2019;13:1093. doi: 10.3389/fnins.2019.01093.

- McDermott T.J., Badura-Brack A.S., Becker K.M., Ryan T.J., Khanna M.M., Heinrichs-Graham E., Wilson T.W. Male veterans with PTSD exhibit aberrant neural dynamics during working memory processing: an MEG study. J. Psychiatry Neurosci. 2016;41(4):251–260. doi: 10.1503/jpn.150058.

- Miao W., Li J., Tang M., Xian J., Li W., Liu Z., Liu S., Sabel B.A., Wang Z., He H. Altered white matter integrity in adolescents with prelingual deafness: a high-resolution tract-based spatial statistics imaging study. AJNR Am. J. Neuroradiol. 2013;34(6):1264–1270. doi: 10.3174/ajnr.A3370.

- Missonnier P., Hasler R., Perroud N., Herrmann F.R., Millet P., Richiardi J., Malafosse A., Giannakopoulos P., Baud P. EEG anomalies in adult ADHD subjects performing a working memory task. Neuroscience. 2013;241:135–146. doi: 10.1016/j.neuroscience.2013.03.011.

- Moeller M.P. Early intervention and language development in children who are deaf and hard of hearing. Pediatrics. 2000;106(3):E43. doi: 10.1542/peds.106.3.e43.

- Moeller M.P., Tomblin J.B., Yoshinaga-Itano C., Connor C.M., Jerger S. Current state of knowledge: language and literacy of children with hearing impairment. Ear Hear. 2007;28(6):740–753. doi: 10.1097/AUD.0b013e318157f07f.

- Netten A.P., Rieffe C., Theunissen S.C.P.M., Soede W., Dirks E., Briaire J.J., Frijns J.H.M., Lamm C. Low empathy in deaf and hard of hearing (pre)adolescents compared to normal hearing controls. PLoS One. 2015;10(4):e0124102. doi: 10.1371/journal.pone.0124102.

- Netten A.P., Rieffe C., Theunissen S.C., Soede W., Dirks E., Korver A.M., Konings S., Oudesluys-Murphy A.M., Dekker F.W., Frijns J.H. Early identification: language skills and social functioning in deaf and hard of hearing preschool children. Int. J. Pediatr. Otorhinolaryngol. 2015;79(12):2221–2226. doi: 10.1016/j.ijporl.2015.10.008.

- Neuper C., Wortz M., Pfurtscheller G. ERD/ERS patterns reflecting sensorimotor activation and deactivation. Prog. Brain Res. 2006;159:211–222. doi: 10.1016/S0079-6123(06)59014-4.

- Nittrouer S., Caldwell-Tarr A., Lowenstein J.H. Working memory in children with cochlear implants: problems are in storage, not processing. Int. J. Pediatr. Otorhinolaryngol. 2013;77(11):1886–1898. doi: 10.1016/j.ijporl.2013.09.001.

- Nittrouer S., Caldwell-Tarr A., Low K.E., Lowenstein J.H. Verbal working memory in children with cochlear implants. J. Speech Lang. Hear. Res. 2017;60(11):3342–3364. doi: 10.1044/2017_JSLHR-H-16-0474.

- Papp N., Ktonas P. Critical evaluation of complex demodulation techniques for the quantification of bioelectrical activity. Biomed. Sci. Instrum. 1977;13:135–145.

- Petersen B., Gjedde A., Wallentin M., Vuust P. Cortical plasticity after cochlear implantation. Neural Plast. 2013;2013:1–11. doi: 10.1155/2013/318521.

- Pimperton H., Blythe H., Kreppner J., Mahon M., Peacock J.L., Stevenson J., Terlektsi E., Worsfold S., Yuen H.M., Kennedy C.R. The impact of universal newborn hearing screening on long-term literacy outcomes: a prospective cohort study. Arch. Dis. Child. 2016;101(1):9–15. doi: 10.1136/archdischild-2014-307516.

- Pisoni D.B. Cognitive factors and cochlear implants: some thoughts on perception, learning, and memory in speech perception. Ear Hear. 2000;21(1):70–78. doi: 10.1097/00003446-200002000-00010.

- Pisoni D.B., Cleary M. Measures of working memory span and verbal rehearsal speed in deaf children after cochlear implantation. Ear Hear. 2003;24(Supplement):106S–120S. doi: 10.1097/01.AUD.0000051692.05140.8E.

- Poline J.-B., Worsley K.J., Holmes A.P., Frackowiak R.S.J., Friston K.J. Estimating smoothness in statistical parametric maps: variability of p values. J. Comput. Assist. Tomogr. 1995;19(5):788–796. doi: 10.1097/00004728-199509000-00017.

- Polonenko M.J., Gordon K.A., Cushing S.L., Papsin B.C. Cortical organization restored by cochlear implantation in young children with single sided deafness. Sci. Rep. 2017;7(1):16900. doi: 10.1038/s41598-017-17129-z.

- Polonenko M.J., Papsin B.C., Gordon K.A. Cortical plasticity with bimodal hearing in children with asymmetric hearing loss. Hear. Res. 2019;372:88–98. doi: 10.1016/j.heares.2018.02.003.

- Proskovec A.L., Heinrichs-Graham E., Wilson T.W. Aging modulates the oscillatory dynamics underlying successful working memory encoding and maintenance. Hum. Brain Mapp. 2016;37(6):2348–2361. doi: 10.1002/hbm.23178. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Proskovec A.L., Heinrichs-Graham E., Wilson T.W. Load modulates the alpha and beta oscillatory dynamics serving verbal working memory. Neuroimage. 2019;184:256–265. doi: 10.1016/j.neuroimage.2018.09.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Reuter-Lorenz P.A., Jonides J., Smith E.E., Hartley A., Miller A., Marshuetz C., Koeppe R.A. Age differences in the frontal lateralization of verbal and spatial working memory revealed by PET. J. Cogn. Neurosci. 2000;12(1):174–187. doi: 10.1162/089892900561814. [DOI] [PubMed] [Google Scholar]

- Robinshaw H.M. Early intervention for hearing impairment: differences in the timing of communicative and linguistic development. Br. J. Audiol. 1995;29(6):315–334. doi: 10.3109/03005369509076750. [DOI] [PubMed] [Google Scholar]

- Roux F., Uhlhaas P.J. Working memory and neural oscillations: alpha-gamma versus theta-gamma codes for distinct WM information? Trends Cogn. Sci. 2014;18(1):16–25. doi: 10.1016/j.tics.2013.10.010. [DOI] [PubMed] [Google Scholar]

- Smieja D.A., Dunkley B.T., Papsin B.C., Easwar V., Yamazaki H., Deighton M., Gordon K.A. Interhemispheric auditory connectivity requires normal access to sound in both ears during development. NeuroImage. 2020;208:116455. doi: 10.1016/j.neuroimage.2019.116455. [DOI] [PubMed] [Google Scholar]

- Soleimani R., Jalali M.M., Faghih H.A. Comparing the prevalence of attention deficit hyperactivity disorder in hearing-impaired children with normal-hearing peers. Arch. Pediatr. 2020;27(8):432–435. doi: 10.1016/j.arcped.2020.08.014. [DOI] [PubMed] [Google Scholar]

- Stiles D.J., McGregor K.K., Bentler R.A. Vocabulary and working memory in children fit with hearing aids. J. Speech Lang Hear. Res. 2012;55(1):154–167. doi: 10.1044/1092-4388(2011/11-0021). [DOI] [PubMed] [Google Scholar]

- Talairach J., Tournoux P. Thieme; New York, NY: 1988. Co-Planar Stereotaxic Atlas of the Human Brain.

- Taulu S., Simola J., Kajola M. Applications of the signal space separation method (SSS). IEEE Trans. Signal Process. 2005;53(9):3359–3372.

- Taulu S., Simola J. Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Phys. Med. Biol. 2006;51(7):1759–1768. doi: 10.1088/0031-9155/51/7/008.

- Theunissen S.C.P.M., Rieffe C., Kouwenberg M., Soede W., Briaire J.J., Frijns J.H.M. Depression in hearing-impaired children. Int. J. Pediatr. Otorhinolaryngol. 2011;75(10):1313–1317. doi: 10.1016/j.ijporl.2011.07.023.

- Theunissen S.C.P.M., Rieffe C., Kouwenberg M., De Raeve L., Soede W., Briaire J.J., Frijns J.H.M. Anxiety in children with hearing aids or cochlear implants compared to normally hearing controls. Laryngoscope. 2012;122(3):654–659. doi: 10.1002/lary.22502.

- Theunissen S.C.P.M., Rieffe C., Kouwenberg M., De Raeve L.J.I., Soede W., Briaire J.J., Frijns J.H.M. Behavioral problems in school-aged hearing-impaired children: the influence of sociodemographic, linguistic, and medical factors. Eur. Child Adolesc. Psychiatry. 2014;23(4):187–196. doi: 10.1007/s00787-013-0444-4.

- Theunissen S.C., Rieffe C., Netten A.P., Briaire J.J., Soede W., Schoones J.W., Frijns J.H. Psychopathology and its risk and protective factors in hearing-impaired children and adolescents: a systematic review. JAMA Pediatr. 2014;168(2):170–177. doi: 10.1001/jamapediatrics.2013.3974.

- Theunissen S.C., Rieffe C., Soede W., Briaire J.J., Ketelaar L., Kouwenberg M., Frijns J.H. Symptoms of psychopathology in hearing-impaired children. Ear Hear. 2015;36(4):e190–e198. doi: 10.1097/AUD.0000000000000147.

- Tomblin J.B., Oleson J.J., Ambrose S.E., Walker E., Moeller M.P. The influence of hearing aids on the speech and language development of children with hearing loss. JAMA Otolaryngol. Head Neck Surg. 2014;140(5):403–409. doi: 10.1001/jamaoto.2014.267.

- Tomblin J.B., Harrison M., Ambrose S.E., Walker E.A., Oleson J.J., Moeller M.P. Language outcomes in young children with mild to severe hearing loss. Ear Hear. 2015;36(Suppl 1):76S–91S. doi: 10.1097/AUD.0000000000000219.

- Tomblin J.B., Oleson J., Ambrose S.E., Walker E.A., McCreery R.W., Moeller M.P. Aided hearing moderates the academic outcomes of children with mild to severe hearing loss. Ear Hear. 2020;41(4):775–789. doi: 10.1097/AUD.0000000000000823.

- Uusitalo M.A., Ilmoniemi R.J. Signal-space projection method for separating MEG or EEG into components. Med. Biol. Eng. Comput. 1997;35(2):135–140. doi: 10.1007/BF02534144.

- Van Veen B.D., van Drongelen W., Yuchtman M., Suzuki A. Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans. Biomed. Eng. 1997;44(9):867–880. doi: 10.1109/10.623056.

- Walker E.A., Ambrose S.E., Oleson J., Moeller M.P. False belief development in children who are hard of hearing compared with peers with normal hearing. J. Speech Lang. Hear. Res. 2017;60(12):3487–3506. doi: 10.1044/2017_JSLHR-L-17-0121.

- Walker E.A., Sapp C., Dallapiazza M., Spratford M., McCreery R.W., Oleson J.J. Language and reading outcomes in fourth-grade children with mild hearing loss compared to age-matched hearing peers. Lang. Speech Hear. Serv. Sch. 2020;51(1):17–28. doi: 10.1044/2019_LSHSS-OCHL-19-0015.

- Worsley K.J., Marrett S., Neelin P., Vandal A.C., Friston K.J., Evans A.C. A unified statistical approach for determining significant signals in images of cerebral activation. Hum. Brain Mapp. 1996;4(1):58–73. doi: 10.1002/(SICI)1097-0193(1996)4:1<58::AID-HBM4>3.0.CO;2-O.

- Worsley K.J., Andermann M., Koulis T., MacDonald D., Evans A.C. Detecting changes in nonisotropic images. Hum. Brain Mapp. 1999;8(2-3):98–101. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<98::AID-HBM5>3.0.CO;2-F.

- Yeung M.K., Lee T.L., Chan A.S. Right-lateralized frontal activation underlies successful updating of verbal working memory in adolescents with high-functioning autism spectrum disorder. Biol. Psychol. 2019;148:107743. doi: 10.1016/j.biopsycho.2019.107743.

- Yoshinaga-Itano C., Sedey A.L., Coulter D.K., Mehl A.L. Language of early- and later-identified children with hearing loss. Pediatrics. 1998;102(5):1161–1171. doi: 10.1542/peds.102.5.1161.