Abstract

Introduction:

Many institutions are attempting to implement patient-reported outcome (PRO) measures. Because PROs often change clinical workflows significantly for patients and providers, implementation choices can have a major impact. While various implementation guides exist, no stepwise list of decision points exists that covers the full implementation process and draws explicitly on a sociotechnical conceptual framework.

Methods:

To facilitate real-world implementation of PROs in electronic health records (EHRs) for use in clinical practice, members of the EHR Access to Seamless Integration of Patient-Reported Outcomes Measurement Information System (PROMIS) Consortium developed structured PRO implementation planning tools. Each institution pilot-tested the tools. Joint meetings led to the identification of critical sociotechnical success factors.

Results:

Three tools were developed and tested: (1) a PRO Planning Guide summarizes the empirical knowledge and guidance about PRO implementation in routine clinical care; (2) a Decision Log allows decision tracking; and (3) an Implementation Plan Template simplifies creation of a sharable implementation plan. Seven lessons learned during implementation underscore the iterative nature of planning and the importance of the clinician champion, as well as the need to understand aims, manage implementation barriers, minimize disruption, provide ample discussion time, and continuously engage key stakeholders.

Conclusions:

Highly structured planning tools, informed by a sociotechnical perspective, enabled the construction of clear, clinic-specific plans. By developing and testing three reusable tools (freely available for immediate use), our project addressed the need for consolidated guidance and created new materials for PRO implementation planning. We identified seven important lessons that, while common to technology implementation, are especially critical in PRO implementation.

Keywords: Patient-reported outcome measures (PROs, PROMs); electronic health records (EHRs); implementation science; Patient-Reported Outcomes Measurement Information System (PROMIS); university health systems and hospitals; sociotechnical factors

Introduction

The use of patient-reported outcome (PRO) measures in the clinical setting has the potential to help providers track patient symptoms and function over time, elevating the voice of patients in their own care [1, 2]. Collection of PROs through the electronic health record (EHR) creates new implementation challenges. Because no two clinics are exactly alike – with differing workflows, time constraints, cultures, and requirements – electronic PRO implementations must be customized to meet each clinic’s individual needs [3]. During PRO implementation, it is essential to select measures that are consistent with a given clinic’s goals, to determine which events in which populations will trigger a request that a patient complete a particular measure, and to decide which clinicians will receive scores and act upon them [4]. Clinics must also design the best approach to implementation by including key personnel in the planning process, working skillfully and knowledgeably within the institutional landscape, arranging technical implementation, and determining an optimal implementation schedule [5]. Finally, teams must plan for post-implementation monitoring and evaluation to ensure that PROs are operating smoothly [6]. Choosing wisely among the many available PRO measures and proceeding systematically with implementation are therefore critical to the success of electronic PROs in the clinical setting.

The EHR Access to Seamless Integration of PROMIS (EASI-PRO; www.easipro.org) Consortium consists of nine universities with the joint goal of integrating Patient-Reported Outcomes Measurement Information System (PROMIS) measures into EHRs for use in clinical care. Three EASI-PRO institutions shared the objective of implementing the PROMIS app within each of their Epic EHRs. In addition, a secondary goal of the group was to use the implementation process itself to gain empirical knowledge about the experience of real-world PRO implementation. This study presents the results of the first step of this EASI-PRO implementation project, which focused on implementation planning.

Implementation Process

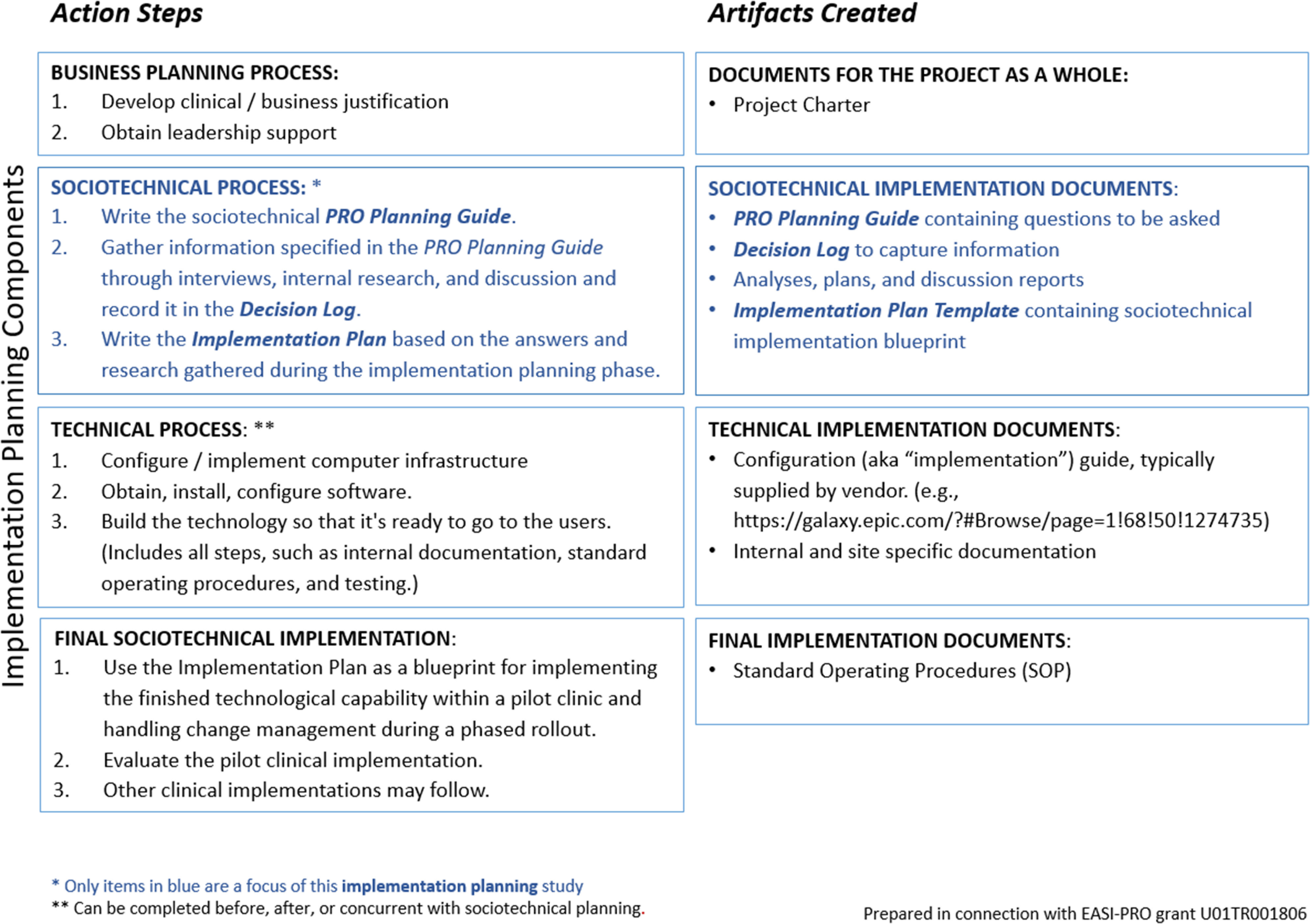

Health systems implementation consists of a complex series of steps, ideally integrating both context (e.g., inner and outer settings) and process [6–8]. The EASI-PRO implementation process (see Fig. 1) encompasses the spectrum of implementation, which includes planning, technical implementation, testing, monitoring, and evaluation. The present study, however, focused solely on the implementation planning phase, highlighted in Fig. 1.

Fig. 1.

Conceptual map of the EASI-PRO implementation process. Implementation consists of both planning work (shown in blue) and technical work (coding, configuration, and technical build). The present study focused solely on planning work.

Sociotechnical Framework

EHR-integrated PRO measures are used directly by distinct actors with varying roles within the organization and with varying levels of patience and knowledge (e.g., patients who complete measures, clinicians who access and respond to scores, and operations personnel involved in all aspects of workflow). PRO implementation success, therefore, depends not just on the capability of PRO software but on factors such as sensitivity to time constraints, attention to smoothing changes in clinic workflow, and devotion of resources to training users and helping them understand the benefits of PROs in the clinic. Facets of implementation that are concerned principally with people and context are known as sociotechnical factors [9]. While excellent technology is a necessary condition of successful PRO implementation, it is not sufficient for success. True success, defined by high usage and clinical value derived from PROs, depends to a great extent on sociotechnical factors [10].

To address the sociotechnical aspects of implementation, this study incorporated the Human, Organization, and Technology Fit (HOT-Fit) framework into the planning process [11, 12]. The HOT-Fit framework examines the relationships between the human, organizational, and technological components of a health information system. The human component encompasses system use and user satisfaction; the organizational component concentrates on structure and environment; and the technological dimension focuses on system, information, and service quality. HOT-Fit theory includes the concept of “net benefits,” meaning the benefits that technological change can bring despite any drawbacks that may exist within the three interrelated dimensions.

PRO Implementation Planning Tools

Several rich sources of PRO implementation guidance exist, including materials from the International Society for Quality of Life Research (ISOQOL) [13], the Patient-Centered Outcomes Research Institute (PCORI) [14], and the HealthMeasures website [15], as well as guidance based on existing implementations [5, 16–18]. In attempting to apply these resources to actual PRO implementation, however, we identified several gaps. First, while each of these sources addressed important facets of the PRO implementation process, none spanned the entire series of implementation planning steps. Second, many of the recommendations required additional work to convert the guidance into concrete decision points. Third, although many sources of PRO implementation guidance implicitly recognized sociotechnical factors, none explicitly used a sociotechnical conceptual framework to examine the full spectrum of factors involved in electronic PRO implementation planning.

To address these gaps, we developed three standardized implementation planning tools and applied these tools systematically at each of four distinct clinics to create pilot implementation plans. Here, we describe these planning tools, which we have made freely available for public use, and we discuss seven lessons learned during real-world implementation planning.

Materials and Methods

The EASI-PRO Consortium was formed to advance the integration of PRO measures into EHR systems. With facilitation from Northwestern University, three institutions in the EASI-PRO Consortium – the University of Chicago, the University of Florida, and the University of Illinois at Chicago – established a joint project with the objective of planning for implementation of the PROMIS app within each university’s Epic EHR system. In this section, we present methodological information about our planning process, including the composition of implementation teams, pilot clinics chosen, motivations for implementing PROs, and other practical topics. In addition, for the convenience of readers who would like more information, we have included in the supplementary material an appendix that provides extensive detail on these topics. Future teams who undertake the challenge of PRO planning may find this information useful as a model and helpful in setting expectations concerning the complexity of a PRO planning project.

Creation of Decision Tools

To begin planning for implementation of EHR-integrated PROs in clinical practice, consortium members conducted a search to identify (a) existing guidance, (b) published descriptions of PRO implementation, and (c) implementation workshop content. Common topics were identified (e.g., PRO measure selection, workflow, governance, teams, and timing). Content within these resources for each topic was consolidated using the HOT-Fit theoretical model and reformulated where necessary into questions that could be posed in interviews with clinicians, informaticians, and senior leadership. EASI-PRO consortium members provided iterative feedback.

Three tools were created. First, a PRO Planning Guide was drafted to summarize existing empirical knowledge and guidance about PRO implementation in routine clinical care. The PRO Planning Guide includes structured lists of specific questions that should be answered to implement PROs (e.g., What is the target patient group? What specific measures will be administered to this group of patients? What should trigger an assessment? Who will provide technical support?), as well as resources to aid in decision making. Second, a Decision Log based on the PRO Planning Guide was created to serve as a record of planning decisions. Third, an Implementation Plan Template was produced to function as a model for a clinic implementation plan.

Selecting Clinics and Forming Teams

As a first step, EASI-PRO site leaders conducted outreach among clinics to identify a pilot site where PROs would be optimally useful. At the University of Illinois at Chicago and the University of Florida, electronic PROs were new to the institution as a whole. At the University of Chicago, PRO measures using a different technology had previously been used in a limited manner. Because introducing PROs into the clinical workflow entails significant change, site leaders prioritized clinics whose leadership possessed the motivation, capabilities, and skills to use electronic PROs successfully and to change their workflows as necessary to accommodate new processes. Site leaders did not seek out particular medical specialties. In total, four clinics were identified to serve as pilot sites: one each at the University of Chicago and the University of Illinois at Chicago, and two at the University of Florida. A detailed discussion of how pilot clinics were selected is included in Appendix Table A.

Institutional leaders at all three universities viewed PROs as a key initiative that they wished to implement broadly, and all planning efforts therefore enjoyed robust institutional support. Institutions were motivated to implement PROs by a desire to improve patient satisfaction, to monitor outcomes, and to examine the social determinants of health. Institutions were also aware of potential regulatory changes and wished to remain in the vanguard of quality clinical care. Clinicians regarded PROs as useful tools for screening, monitoring treatment, improving population health, and assessing the value of specific interventions to patients. Appendix Table B provides additional information about the specific motivations of clinics and institutions for each PRO implementation effort.

Each implementation team included an executive sponsor who provided senior leadership and institutional support, a clinician champion who was directly involved in planning and implementing change, informaticians (who were in some cases also clinicians or institutional leaders) who supported change management and facilitated decision making, and technical personnel. Despite their broad similarity, however, no two clinical implementation teams or processes were identical, and each institution proceeded at its own pace with its own array of barriers and facilitators. For example, one site is now collecting PROs. Another site experienced a significant legal barrier that has been resolved. Yet another site awaits installation of an entirely new hospital EHR system. These events highlight the variability of implementation time and course even when using a common planning process. For additional information about the planning process at each institution, including descriptions of the process used, project timing, and resources required, see Appendix Table C. Appendix Table D provides detailed titles and roles of all team members. Teams worked with permission from their respective institutional review boards or quality departments.

Using the Decision Tools

To gather planning information, 13 interviews were conducted with 10 key leaders, using questions tailored to respondents’ clinical or institutional roles [5, 16, 18, 19]; Table 1 summarizes these interviews. Chicago-area interviews were conducted by an informatics research assistant (n = 7) and by the Northwestern University facilitator (n = 2). University of Florida interviews were conducted by the EASI-PRO site leader (n = 4).

Table 1.

Formal interviews conducted at each institution and clinic. To gather information for their respective decision logs, teams conducted formal interviews with key leaders designated by EASI-PRO site leaders and also convened internal planning meetings and discussions.

| Institution and clinic | Interviewee role | Number of interviewees | Number of interviews | Total site interviews |
|---|---|---|---|---|
| University of Chicago Department of Orthopaedic Surgery and Rehabilitation Services | Key institutional leader | 1 | 1 | 4 |
| | Informatician | 1 | 2 | |
| | Clinician champion in Orthopaedics | 1 | 1 | |
| University of Florida Division of Endocrinology, Diabetes & Metabolism and University of Florida Division of Hematology & Oncology | Key institutional leader | 1 | 1 | 4 |
| | Clinician champion in Endocrinology | 1 | 2 | |
| | Clinician champion in Hematology & Oncology | 1 | 1 | |
| University of Illinois at Chicago (UIC) Family Medicine, geriatric population | Key institutional leader | 2 | 2 | 5 |
| | Clinician champion and informatician in Family Medicine | 1 | 2 | |
| | Clinician in Family Medicine | 1 | 1 | |

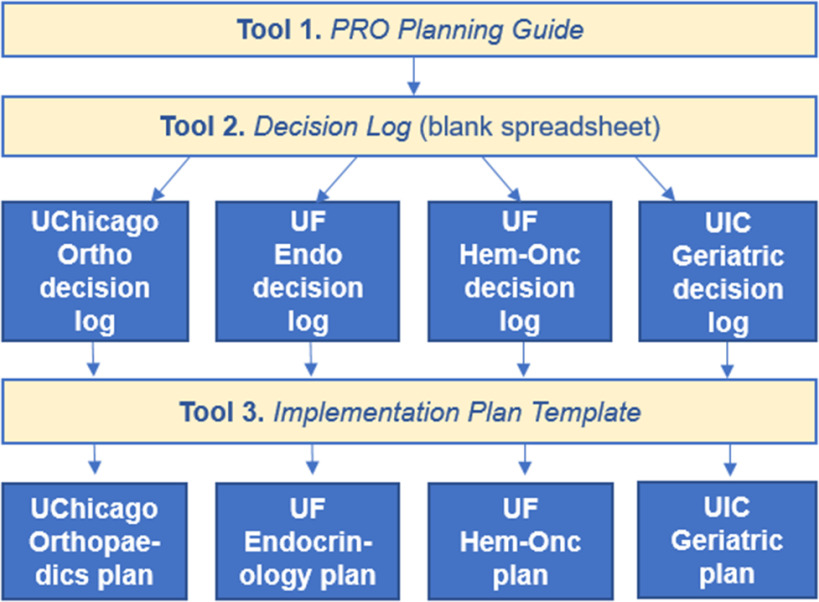

A separate copy of the blank Decision Log was created for each clinic (see Fig. 2). All individual clinic decision logs were made available to the cross-institutional group as network-shared spreadsheets. All interviews were recorded and transcribed, then parsed into planning decisions, which were recorded in each clinic’s individual decision log. In addition, information gleaned from internal planning meetings and discussions at each institution was recorded in the decision log. When finalized, material from each clinic’s decision log was transferred by the research assistant and facilitator to populate each clinic’s implementation plan, using the Implementation Plan Template as a model. Sites then worked to complete their plans, which serve as a narrative record of decisions made during the planning process. Draft plans have been completed for all four clinics but are still being augmented and revised. Appendix Table C contains additional process details for all sites.

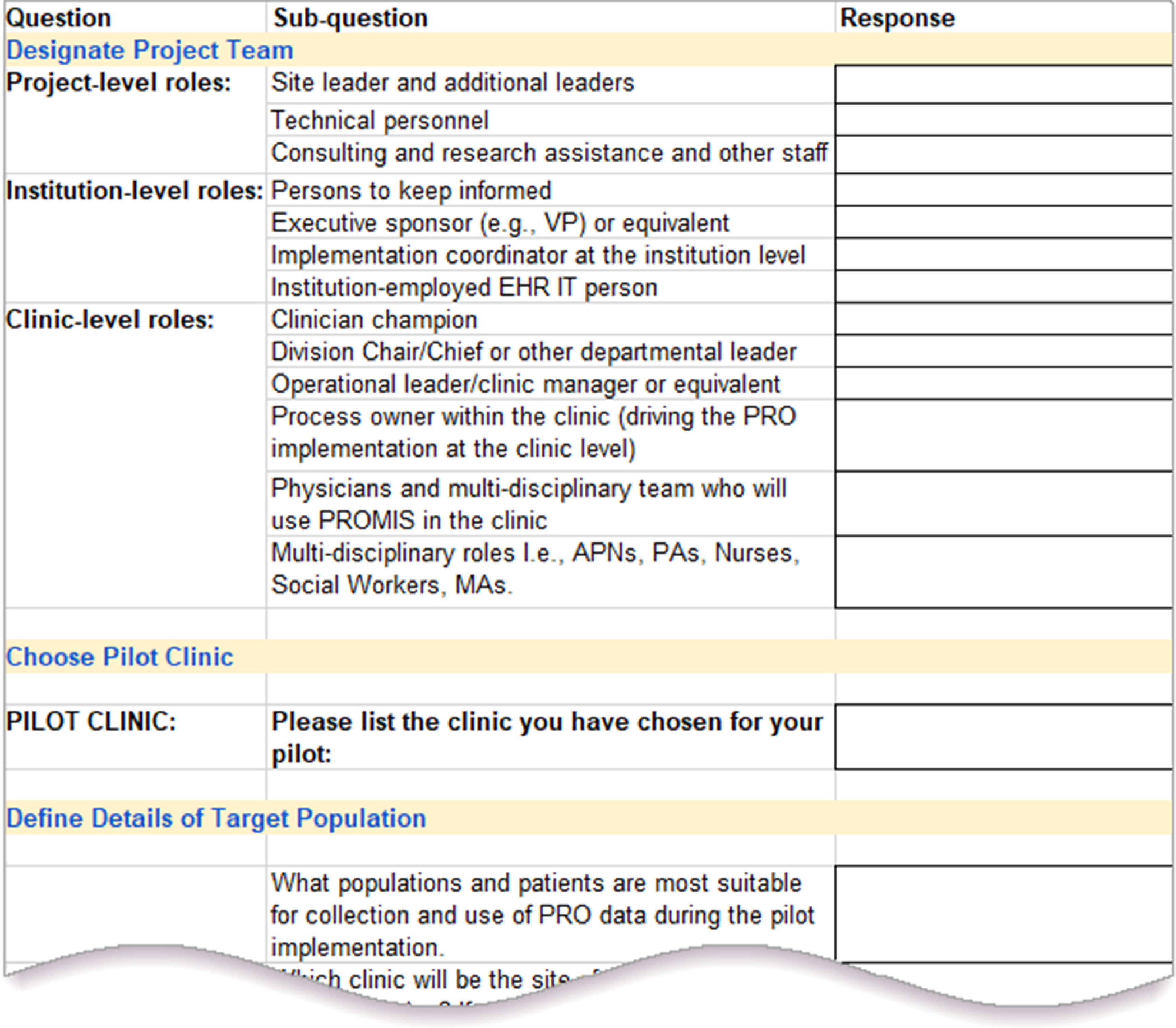

Fig. 2.

Use of decision tools by participating institutions. The blank Decision Log spreadsheet was derived from the PRO Planning Guide. Each institution recorded its PRO planning decisions in its own decision log (one per clinic). Implementation plans were written based on the information recorded in each clinic’s decision log using the Implementation Plan Template as a model. All tools are available in the supplementary material and at https://digitalhub.northwestern.edu/collections/e71c88e3-3a0b-4b56-90ce-8ddc75a78e81.

As an example, teams recorded in their decision logs the initial locations where PROs would be implemented and the target populations they planned to serve. From these data, teams constructed population inclusion and exclusion criteria that were then included in each clinic’s decision log and eventually in their implementation plans. (See Appendix Table E for information on target population and an illustration of its transformation into inclusion and exclusion criteria.)
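The step from a described target population to explicit criteria can be pictured as a small set of named rules. The sketch below is purely illustrative: the patient attributes, the clinic name, the age cutoff, and the set of supported languages are hypothetical assumptions loosely inspired by a geriatric Family Medicine pilot, not the criteria recorded in any clinic’s decision log.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    # Hypothetical attributes; real criteria come from each clinic's decision log.
    age: int
    clinic: str
    preferred_language: str


# Hypothetical inclusion rules for an illustrative geriatric Family Medicine pilot.
INCLUSION = [
    ("seen in the pilot clinic", lambda p: p.clinic == "Family Medicine"),
    ("age 65 or older", lambda p: p.age >= 65),
]

# Hypothetical exclusion rule; the language set is an assumption for illustration.
EXCLUSION = [
    ("measure not offered in preferred language",
     lambda p: p.preferred_language not in {"English", "Spanish"}),
]


def eligibility(p: Patient) -> tuple[bool, list[str]]:
    """Eligible only if every inclusion rule holds and no exclusion rule applies."""
    reasons = [f"fails inclusion: {name}" for name, rule in INCLUSION if not rule(p)]
    reasons += [f"meets exclusion: {name}" for name, rule in EXCLUSION if rule(p)]
    return (not reasons, reasons)
```

Returning the reason for each ineligible patient, rather than a bare yes or no, keeps the criteria auditable when teams revisit them during iterative planning.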

Although the PRO Planning Guide supplied initial background and context, contained all interview questions, and was meant to serve as an interview guide, consortium participants found it easier to pose questions directly from the Decision Log during informational interviews. During the project, areas of confusion, lack of specificity, or gaps in content in all tools were identified by site teams and used to revise each planning tool.

The EASI-PRO Consortium met by teleconference weekly for approximately five months to discuss progress, resolve unanswered questions, and identify common challenges. Members used these meetings to highlight sociotechnical topics, to discuss human and organizational aspects of planning (e.g., training, workflow), to raise important questions about change management [6, 11], and to discuss practical aspects of PRO implementation. At the conclusion of the project, the Northwestern University facilitator proposed a candidate list of common issues encountered and observations about the implementation planning process that encapsulated the weekly discussions of the cross-institutional group. These were discussed by group members and are presented below in the form of lessons learned. These observations represent a set of consensus recommendations to minimize the difficulties of PRO planning.

Results

Decision Tools

Three implementation decision tools (see Table 2) emerged from the EASI-PRO planning process. These tools consist of a PRO Planning Guide, a Decision Log, and an Implementation Plan Template.

Table 2.

Tools created during the implementation planning process. The pilot implementation planning process resulted in three tools to aid decision making. These tools as well as an explanatory guide for the toolkit are available at https://digitalhub.northwestern.edu/collections/e71c88e3-3a0b-4b56-90ce-8ddc75a78e81 and in the supplemental material.

| Tool | Description | Link |
|---|---|---|
| Tool 1: PRO Planning Guide | A guide integrating foundational sources from the literature (see Table 3) and transforming advice where necessary into a series of interview questions. | http://doi.org/10.18131/g3-0shy-pn30 |
| Tool 2: Decision Log | A spreadsheet derived from the PRO Planning Guide. The Decision Log (see Fig. 3 for a sample) consists of more than 90 discrete fields in which to record customization and implementation choices. | http://doi.org/10.18131/g3-vy44-c949 |
| Tool 3: Implementation Plan Template | The Implementation Plan Template is a model that may be used to construct a readable and sharable implementation plan using the information recorded in a clinic’s decision log. | https://doi.org/10.18131/g3-fev6-hc15 |

The PRO Planning Guide

To construct the PRO Planning Guide, the planning team identified four foundational sources providing empirical knowledge and guidance for PRO implementation in clinical practice:

1. The HealthMeasures.net website, which is focused specifically on PROMIS measures but is widely applicable to all PRO measures. The detailed advice on the HealthMeasures website formed the base of the PRO Planning Guide [15]. Two HealthMeasures resources are referenced in particular: “What you need to know before requesting PROs in your EHR” [4] and “Guide to selection of measures from HealthMeasures” [20]. HealthMeasures also contains several video-based tutorials referenced in the PRO Planning Guide.

2. The User’s Guide to Implementing Patient-Reported Outcomes Assessment in Clinical Practice by the International Society for Quality of Life Research (ISOQOL), which contains nine questions to consider in clinical implementation [13], as well as its companion guide that provides additional information and examples from actual implementations [16, 17].

3. The Users’ Guide to Integrating Patient Reported Outcomes in Electronic Health Records by the Patient-Centered Outcomes Research Institute (PCORI), which examines 11 key questions for integrating PROs in the EHR [14].

4. The article “Patient reported outcomes – experiences with implementation in a University Health Care setting” by Biber et al., which focuses on a large installation of PROs and provides a wealth of practical information [5].

Additional sources included the publications “Implementing patient-reported outcomes assessment in clinical practice: a review of the options and considerations” [21] and “Framework to guide the collection and use of patient-reported outcome measures in the learning healthcare system” [18], and the Consolidated Framework for Implementation Research (CFIR) [8], as well as guidance from a technical workshop [22] and implementation experts. In addition, the work Managing Technological Change: Organizational Aspects of Health Informatics highlighted crucial topics such as institutional support, governance, and conflict resolution [6].

Fifteen topics were identified across the PRO Planning Guide’s foundational sources. Table 3 illustrates the sections of the PRO Planning Guide that were most influenced by each source. The advice on the HealthMeasures website [15] formed the base of the PRO Planning Guide, with additional advice grouped thematically.

Table 3.

Foundational sources. Information was consolidated into a single guide featuring interview questions. This table illustrates the sections of the PRO Planning Guide that were most influenced by our top four foundational sources.

| PRO Planning Guide topic | HealthMeasures website [15] | ISOQOL Guidance [13] | PCORI Guide [14] | Biber et al. [5] |
|---|---|---|---|---|
| Part I, Section 1: Institutional Support | X | X | X | |
| Part I, Section 2: How Will the PRO-EHR System be Governed? | X | X | X | |
| Part II, Section 1: Selecting Populations and Patients | X | X | X | X |
| Part II, Section 2: Selecting PRO Measure(s) | X | X | X | |
| Part III, Section 1: Clinical Purpose and Barriers | X | X | X | |
| Part III, Section 2: Workflow | X | X | X | X |
| Part III, Section 3: PRO Delivery and Location | X | X | X | |
| Part III, Section 4: Ordering, Triggers, and Assessment Intervals | X | X | X | |
| Part III, Section 5: Handling Results | X | X | X | X |
| Part IV, Section 1: Work Team for Implementation | X | | | |
| Part IV, Section 2: Technical and Financial Considerations | X | X | | |
| Part IV, Section 3: Timing | X | | | |
| Part V, Section 1: Sociotechnical Evaluation | X | | | |
| Part V, Section 2: Metrics and Analytics of PRO Usage | X | X | | |
| Part V, Section 3: Patient Feedback about PROs | | | | |

The Decision Log and Implementation Plan Template

A Decision Log was constructed based on the PRO Planning Guide (see Fig. 3 for a sample of the Decision Log). This log can be used to track and revise decisions as necessary. When information is finalized in a clinic’s decision log, it can be transferred to a copy of the Implementation Plan Template. The clinic implementation plan can then be shared with a wide audience to obtain feedback and enhance communication.
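One way to picture how a decision log supports tracking, revising, and finally transferring decisions is as a keyed record that keeps every answer and marks entries as final. The sketch below is a hypothetical illustration and does not reproduce the structure of the published spreadsheet: the class names, the entry keys, the `reopen` step, and the `render_plan` helper are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Decision:
    section: str                  # e.g., a PRO Planning Guide part/section label
    question: str
    history: list[str] = field(default_factory=list)  # every answer, oldest first
    final: bool = False

    @property
    def answer(self) -> str | None:
        return self.history[-1] if self.history else None


class DecisionLog:
    """Hypothetical in-memory decision log for a single clinic."""

    def __init__(self) -> None:
        self.entries: dict[str, Decision] = {}

    def add_question(self, key: str, section: str, question: str) -> None:
        self.entries[key] = Decision(section, question)

    def record(self, key: str, answer: str) -> None:
        entry = self.entries[key]
        if entry.final:
            raise ValueError(f"{key} is finalized; reopen it before revising")
        entry.history.append(answer)  # earlier answers are kept, not overwritten

    def finalize(self, key: str) -> None:
        if self.entries[key].answer is None:
            raise ValueError(f"{key} has no answer yet")
        self.entries[key].final = True

    def reopen(self, key: str) -> None:
        # Planning is iterative: a finalized decision may be revisited.
        self.entries[key].final = False


def render_plan(log: DecisionLog) -> str:
    """Group finalized decisions by section as plain-text plan paragraphs."""
    sections: dict[str, list[str]] = {}
    for d in log.entries.values():
        if d.final:
            sections.setdefault(d.section, []).append(f"{d.question} {d.answer}")
    return "\n\n".join(f"{name}\n" + "\n".join(lines) for name, lines in sections.items())
```

Keeping the full answer history, instead of only the latest value, reflects the way planning decisions were revisited; only finalized entries flow into the draft plan text.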

Fig. 3.

Sample screen shot from the Decision Log. The Decision Log spreadsheet consists of more than 90 discrete fields taken from the PRO Planning Guide. It provides a mechanism for recording each clinic’s and institution’s decisions regarding PRO customizations and implementation choices. This Decision Log is available at http://doi.org/10.18131/g3-vy44-c949 and in the supplementary material.

Seven Sociotechnical Lessons from Implementation Planning

A goal of the project was to utilize the planning process itself as a laboratory for learning about the sociotechnical aspects of real-world PRO implementation using the HOT-Fit model [11, 12]. Seven themes (see Table 4) emerged during consortium meetings, all of which served as lessons learned about the salient role of human and organizational factors in PRO implementation.

1. Recognize that planning is both a stepwise and an iterative process.

Table 4.

Lessons learned during the PRO implementation planning process. Seven themes emerged that highlighted the fundamental importance of human and organizational factors in implementation planning.


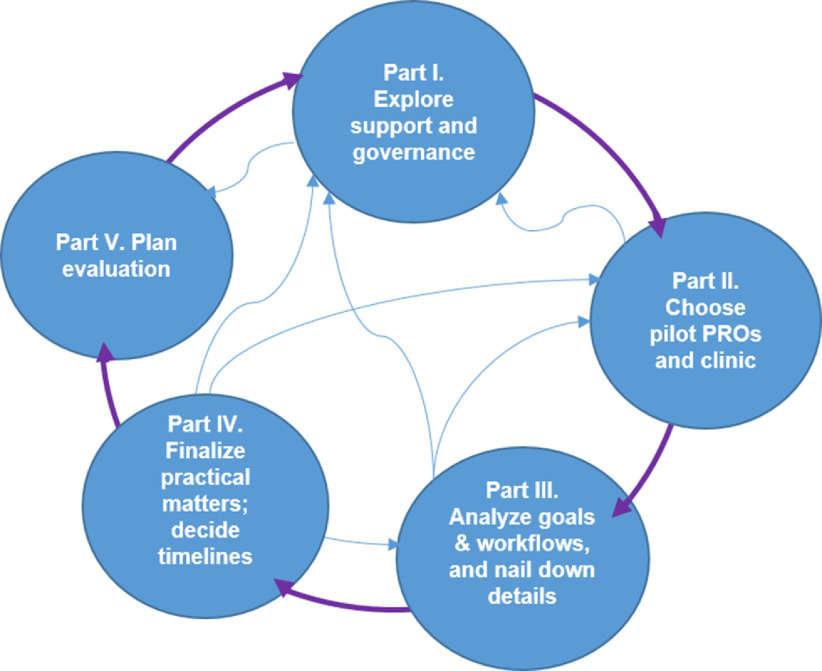

Although we strove to follow a systematic approach, we found it necessary to jump forward and circle back among the various planning stages to capture all necessary information (see Fig. 4). Most of our work on this project so far has been in Parts I–III: “Explore support and governance,” “Choose pilot PROs and clinic,” and “Analyze goals & workflow and nail down details.” In particular, numerous conversations about in-clinic PRO workflow were required because of the complexity and ramifications of workflow issues involving potential changes in staff duties, clinic procedures, training, and equipment. At the conclusion of the planning process, the implementation plan provided an opportunity to solidify decisions and allowed all stakeholders to review the plan and ensure agreement before proceeding with technical implementation.

2. Choose an effective and enthusiastic clinician champion to maximize success.

Fig. 4.

Conceptual diagram of the planning process. This diagram illustrates that the process of implementation planning is simultaneously both sequential and nonlinear. While pursuing the sequential steps of implementation (depicted by the exterior arrows), teams also revisited steps and revised as they encountered new information (a process depicted conceptually by the interior arrows). Part numbers on the diagram correspond to parts within the PRO Planning Guide.

At all institutions, implementation teams began by locating a clinician with the leadership ability and enthusiasm to serve as a champion for implementation of PROs. At one institution, the clinician champion headed the implementation team. At other institutions, the implementation team sought out and worked with clinician partners. To complete the clinic’s decision log, the clinician champion needed to be able to identify the specific benefits of PRO integration in that clinic and make many of the decisions required for a pilot implementation. For example, at the University of Chicago, the clinician champion set a schedule based not only on clinical needs but also on an understanding of the potential use of PROs in future research, thus making a critical decision enabling PROs to serve multiple purposes.

3. Choose PRO measures that are consistent with intended use.

Franklin and colleagues identify four primary PRO uses that create value for key stakeholders: “(1) individual patient care decisions, (2) quality improvement, (3) value-based payment, and (4) population health and research” and also suggest that teams identify the value that PROs will provide prior to implementing PRO-collection strategies [18]. Realizing that PROs have different value propositions within different clinics, our implementation teams sought to be as concrete as possible at this early stage about the aims of PROs in the clinic and the institution. To define their primary goals, interviewees discussed, among many other subjects, the challenges of change as well as specific benefits, such as compliance with emerging regulatory requirements, enhancement of patient information, and the potential for research. For example, one clinician stated that PROs could allow him to learn directly from his patients about topics of importance that neither he nor the patient might otherwise raise, such as social isolation or lack of family support.

Although our implementation teams faced only the task of choosing PRO measures for pilot clinics, discussions arose concerning institutional aims and the future PRO landscape from an institutional perspective. Though beyond the scope of this project, all teams foresaw the need for eventual governance of PRO selection and timing at the institutional and hospital system level.

4. Expect to identify, embrace, and overcome barriers throughout the process.

Like any implementation that alters workflows, PRO implementations encounter many barriers, both real and perceived. Barriers to PRO implementation identified in the literature include a perception among clinicians that full integration is difficult in clinical practice due to workflow disruption [23]. Users also cite lack of actionability and technical challenges [19, 23, 24]. Barriers to use identified by our participants included language limitations, concerns about the release of data back to patients, and potential disparities among patients in data availability and usage of electronic PROs. In addition, participants raised issues related to expansion beyond the pilot clinic (e.g., policies regarding ownership of results, regulation of inter-clinic sharing, and rationalizing and governing PRO selection across the institution) and recognized that each institution must eventually find a balance between customization and standardization of options.

5. Prepare to tailor workflow to minimize disruption.

In all four pilot clinics, the desired workflow was that patients would complete PROs prior to arriving at the clinic. Nevertheless, due to their expectation that many patients would arrive with uncompleted PROs, clinics explored in-clinic PRO completion processes. Even across our small sample, it was clear that different workflows were optimal for different clinics. Implementation teams considered multiple options and weighed the advantages and disadvantages of each. A rapid-cycle experimental approach (whereby a clinic might try tablets in the waiting room for one to two weeks, then try having patients use the computers in the exam rooms, then try a kiosk) was considered useful for making a final determination of optimal workflow.

6. Allow sufficient time in the planning process to make and discuss decisions.

Throughout implementation planning, participants visited and revisited many questions associated with PRO processes. Arriving at a decision required discussions that continued even after the completion of draft clinic implementation plans. Examples of questions that required careful thought included the following:

Whether PRO results should be connected to clinical visits or collected on a schedule unconnected to appointment times;

Whether PRO measures should be sent to new patients, returning patients, or both;

How far in advance of a clinic visit PRO completion requests should be sent;

How many days the request should remain available;

When reminders should be sent;

Whether or not there was a PRO score threshold that required clinician follow-up outside of the context of a clinic visit; and

How clinicians should follow up on scores exceeding a threshold of concern.
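To make the timing questions above concrete, they can be recorded as a handful of explicit parameters. The Python sketch below is purely illustrative and assumes nothing about any EHR vendor's configuration: the class `ProRequestPolicy`, its field names, and the example values (a request sent 7 days before the visit, open for 10 days, reminders on days 3 and 6, and a T-score threshold of 70) are hypothetical, not choices made by the pilot clinics. It derives send, reminder, and close dates for one visit and flags scores that cross a threshold, whose direction depends on the measure.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class ProRequestPolicy:
    """Hypothetical encoding of the planning decisions listed above."""
    send_days_before_visit: int  # how far in advance the request goes out
    open_days: int               # how many days the request stays available
    reminder_days_after_send: List[int] = field(default_factory=list)
    follow_up_threshold: Optional[float] = None  # None = no out-of-visit follow-up
    higher_is_worse: bool = True  # direction depends on the measure's domain


def request_dates(policy: ProRequestPolicy, visit: date) -> dict:
    """Return the send date, reminder dates, and close date for one visit."""
    sent = visit - timedelta(days=policy.send_days_before_visit)
    closes = sent + timedelta(days=policy.open_days)
    reminders = []
    for d in policy.reminder_days_after_send:
        when = sent + timedelta(days=d)
        if when <= closes:  # drop reminders that would arrive after closing
            reminders.append(when)
    return {"send": sent, "reminders": reminders, "close": closes}


def needs_follow_up(policy: ProRequestPolicy, score: float) -> bool:
    """True if a score crosses the (hypothetical) threshold of concern."""
    if policy.follow_up_threshold is None:
        return False
    if policy.higher_is_worse:
        return score >= policy.follow_up_threshold
    return score <= policy.follow_up_threshold


# Example values only; not drawn from any pilot clinic.
policy = ProRequestPolicy(send_days_before_visit=7, open_days=10,
                          reminder_days_after_send=[3, 6],
                          follow_up_threshold=70.0)
print(request_dates(policy, date(2020, 3, 16)))
print(needs_follow_up(policy, 72.5))
```

Encoding the choices this way makes interactions between them visible; for example, a reminder day that falls after the request closes has no effect.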

As an example of schedule customization, Orthopaedics at the University of Chicago scheduled PRO assessments at predetermined timepoints (e.g., “Subsequent to surgery at 2 weeks, 6 weeks, 12 weeks, 6 months, 12 months, and yearly to 5 years”), whereas the Family Medicine Clinic at the University of Illinois at Chicago synchronized assessments with appointments to ensure prompt clinician attention.
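A fixed postoperative schedule like the Orthopaedics example can be expressed as offsets from the surgery date. The following Python sketch is a hypothetical illustration, not the clinic's actual EHR build: the names `postop_schedule` and `add_months`, and the rule of clamping month-based dates to the last day of shorter months, are assumptions. It reads "yearly to 5 years" as annual assessments at 2, 3, 4, and 5 years following the 12-month assessment.

```python
import calendar
from datetime import date, timedelta
from typing import List


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


# Hypothetical encoding of the quoted timepoints:
# 2, 6, and 12 weeks; 6 and 12 months; then yearly through 5 years.
WEEK_OFFSETS = [2, 6, 12]
MONTH_OFFSETS = [6, 12] + [12 * y for y in range(2, 6)]


def postop_schedule(surgery: date) -> List[date]:
    """Return assessment due dates for one surgery date, in order."""
    due = [surgery + timedelta(weeks=w) for w in WEEK_OFFSETS]
    due += [add_months(surgery, m) for m in MONTH_OFFSETS]
    return due


for due in postop_schedule(date(2019, 8, 31)):
    print(due.isoformat())
```

With a surgery date of 31 August 2019, the clamping rule places the 6-month assessment on 29 February 2020. A visit-synchronized schedule such as the Family Medicine approach would instead take its dates directly from booked appointments.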

7. Continuously engage institutional leaders.

To build awareness and a positive partnership for the future, all planning teams met with senior management during the project to discuss the place of PROs within their institution's goals and priorities, the value proposition for the institution itself, and issues of governance. Interviews with senior leaders provided insight into institutional priorities, such as promoting patient satisfaction with the institution as a whole. Senior leaders also focused on the potential of PROs to meet external requirements for bundled payments and value-based care. For example, one senior leader noted that accountable care organizations are increasingly calling for mechanisms to collect data directly from patients.

PRO governance was a topic of particular concern for both clinicians and senior leaders. Because each clinic and each specialty may have different needs, future patients could be asked to complete multiple PROs or to complete the same PROs for different clinics at different times. Participants therefore discussed the future need for continuous inter-clinic coordination and leadership.

Discussion

This study demonstrates that highly structured planning tools informed by sociotechnical conceptual frameworks are an effective way to help clinics create a PRO implementation plan. We developed and tested three tools to operationalize PRO implementation planning in EHRs for use in clinical practice. The PRO Planning Guide presents an orderly process and a series of questions for planning. The Decision Log allows implementation teams to gather information efficiently during interviews and to maintain a record of decisions made. The Implementation Plan Template helps planners summarize the iterative decision-making process into a customized, sharable implementation plan. These tools integrate, systematize, and extend PRO implementation advice from multiple sources while providing an explicit, built-in sociotechnical perspective that helps implementers take human, organizational, and technological considerations into account while planning PROs. They are available for immediate use, and we welcome additional testing and refinement.

Sociotechnical Lessons Learned

In real-world clinic implementations, decisions regarding customization and approach have strong sociotechnical consequences. Decision making begins immediately and continues even after implementation is formally completed. The choices made during implementation may have unintended consequences as new processes are activated [24]. Many decisions that seem straightforward turn out to be quite challenging, because choosing among several good paths requires multiple in-depth discussions and sometimes even experimentation with different options. Flexibility in moving between different parts of the planning process (e.g., between choosing PROs and determining workflow) allows planners to refine their choices as they gain information. Moving forward, revisiting previous tasks, and looking ahead should all be viewed as normal parts of implementation planning, because decisions made later can require revisiting decisions made earlier.

In applying our planning tools, we identified numerous challenges shared with prior studies, such as selecting the most appropriate measure(s), engaging patients and physicians to obtain buy-in, and effectively using PRO results [25–27]. Also consistent with the literature, we observed the importance of approaching PROs from an institutional perspective, identifying committed clinical leaders and teams, selecting appropriate instruments, taking action on concerning scores, and evaluating PRO use [28, 29]. Published research also provides overviews of clinic PRO implementation issues relevant to our study, such as the need to balance the frequency of data collection against patient load [21, 28].

Combining published advice with our experience, we identified seven important lessons, all of which hinge on the ability of planners to navigate HOT-Fit sociotechnical factors. Each lesson reveals that qualities such as leadership and flexibility, combined with deliberate and measured decision making, are as important to successful implementation of PROs as technological considerations.

As an example, we observed that an effective clinical leader can marshal resources, set priorities, and delineate clear objectives, easing decisions about goals and choice of PRO measures and clearing away barriers that could otherwise derail implementation. Our experience echoed recommendations in the informatics literature emphasizing the importance of the clinician champion [4, 16, 19]. Our results also suggested that the pilot clinic for an institution should be chosen based on the presence of an effective clinician champion rather than on an a priori determination about which clinic might be most appropriate for PROs.

This project highlighted the importance of examining multidimensional topics in depth. For example, formulating a clear and specific understanding of overall purposes is time consuming but important, because it serves as a foundation for all phases of implementation and particularly for the crucial step of selecting the most appropriate PRO measures from those available [20, 28, 29]. Likewise, it was important to identify, discuss, and address challenging topics, such as barriers to PRO implementation. The planning phase of an implementation project is an ideal time to involve relevant stakeholders in productive discussions of potential problems and benefits. Planners should recognize that optimal practices may differ among clinics and may require continued experimentation as PROs become more widespread within the institution. Maintaining open channels of communication and extending invitations to participate in new efforts can help to avoid misunderstanding and failure.

In addition to addressing thorny subjects, planners must adroitly manage changes in clinic workflow to achieve success. In-clinic PRO completion workflow can involve significant change in a clinic’s routine, which can be fraught with sociotechnical consequences involving assignment of new responsibilities, effects on staff morale, and potential confusion. In addition, clinics implementing a new in-clinic process may confront financial hurdles such as purchasing equipment to be used by patients (e.g., tablets or kiosks), technical hurdles such as ensuring security and setting up equipment, and communication issues with both patients and staff. By paying particular attention to workflow, planners can avoid unnecessary obstacles to adoption and usage. Implementation teams that fit PRO completion into clinic workflow with little disruption and engage care providers in discussing results set the stage for a higher rate of PRO completion.

Finally, success requires identifying key institutional leaders and ensuring that the institution is in broad agreement as to the value of PRO collection [5, 16, 18, 19]. PROs in a clinic affect the institution and the hospital system at large through requirements for support, time, and governance, so key institutional leaders should be involved early and kept informed throughout the process. Implementation teams will be far more effective when working with institutional support than when working at cross purposes. Keeping senior leaders informed while seeking guidance and alignment helps make the institution a supportive partner in PRO implementation.

Like all projects, ours had limitations. The scope was limited because all four initiatives were pilots and all used the same vendor EHR. All three institutions are currently working with individual clinics rather than with institution-wide or hospital system-wide implementations. In addition, all pilot clinics are currently implementing only PROMIS measures. As an institution progresses to a greater number of PRO installations across multiple clinics, and across multiple institutions within a larger hospital system, it will need to weigh the efficiency of standard measure repositories shared across clinics against the customizability of allowing clinics to request unique measures matched to their operations. Rather than tackling the entire potential PRO implementation lifecycle, this paper focuses on more basic challenges common across PRO implementations, such as stakeholder buy-in, determination of populations and approach, training, evaluation, and workflow changes. Although this PRO pilot initiative began in specific clinics, the process was designed to be generalizable and adaptable to the needs of other clinics for future PRO implementations.

Lastly, although the sites have produced draft plans, some questions remain unanswered, particularly in areas such as workflow, score threshold actions, and technical rollout. None of the clinics has been live with PROs for an extended period, so we cannot evaluate gaps in our planning process that may have delayed effects. Even so, it is already apparent that providing a set of specific questions answered by specific individuals in the organization and mapped to specific planning decisions greatly aided and accelerated the planning process.

Conclusion

Clinics undertaking PRO planning needed a means to consolidate and streamline available guidance into applicable and accessible planning tools. Our project addressed this need by producing and testing three tools informed by a sociotechnical perspective. These tools are available at https://digitalhub.northwestern.edu/collections/e71c88e3-3a0b-4b56-90ce-8ddc75a78e81 and in the supplemental material. We detail our experience in this report and provide seven lessons learned from implementation planning. These lessons highlight the importance of human and organizational factors in effective implementation. Used together, these tools and the lessons learned lay the groundwork for future testing of tools in additional real-world settings.

Acknowledgments

The authors wish to thank Joshua Biber, Scott Maresh Nelson, and Luke Rasmussen for critical comments on the manuscript draft. In addition, the authors wish to thank Zachary L. Wang, who helped patients understand use of the patient portal and encouraged enrollment in Orthopaedic Surgery and Rehabilitation Services at the University of Chicago.

This research was supported, in part, by the EHR Access to Seamless Integration of PROMIS (EASI-PRO) grant: U01TR001806, National Center for Advancing Translational Sciences (NCATS)/National Institutes of Health (NIH). (Individual authors funded: BA, JB, ADB, SVB, YG, BAH, KH, KMK, DL, KM, FM, JM, TAN, NER, NDS, JBS, ALV, DW, SHW.)

This research was also supported by local Clinical and Translational Science Awards through the National Institutes of Health’s National Center for Advancing Translational Sciences:

| Institution | Grant Number | Individual Authors Funded |
|---|---|---|
| Northwestern University | UL1TR001422 | TAN, NDS, JBS |
| The University of Chicago | UL1TR002389 | KH, DL, JM |
| The University of Illinois at Chicago | UL1TR002003 | ADB, SVB, BAH, KMK, ALV |
| The University of Florida | UL1TR001427 | JB, YG, KM, FM, DW, SHW |

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

At the University of Florida, this research was also supported, in part, by internal funding from the Cancer Informatics and eHealth Core as part of the University of Florida Health Cancer Center (JB, YG, KM, FM, DW, SHW).

Supplementary material

For supplementary material accompanying this paper visit https://doi.org/10.1017/cts.2020.37.


Disclosures

The authors wish to make the following disclosures in case they are considered relevant: (a) Author David Liebovitz lists the following conflicts of interest: (1) Past Advisor – 2018 Wolters Kluwer advisory board for educational content design; (2) Current owner/co-patent originator (patent held at Northwestern) for Bedside Intelligence startup company for ICU care (no revenue or paying customers); and (3) Current Advisor – Optum4BP population management tool for blood pressure – startup with research funding and no paying customers. (b) Author Karl M. Kochendorfer co-founded MedSocket and is the inventor of two patents with that company. The authors have no other conflicts of interest.

References

- 1. Greenhalgh J, et al. Functionality and feedback: a realist synthesis of the collation, interpretation and utilisation of patient-reported outcome measures data to improve patient care. Southampton (UK): NIHR Journals Library, 2017.

- 2. Patient-Reported Outcomes. National Quality Forum [Internet] [cited Oct 16, 2019]. (https://www.qualityforum.org/Projects/n-r/Patient-Reported_Outcomes/Patient-Reported_Outcomes.aspx)

- 3. Hsiao C, et al. Advancing the use of patient-reported outcomes in practice: understanding challenges, opportunities, and the potential of health information technology. Quality of Life Research 2019; 28(6): 1575.

- 4. What you need to know before requesting PROs in your EHR. HealthMeasures [Internet], 2017. [cited Oct 12, 2019]. (http://www.healthmeasures.net/images/applications/What_you_need_to_know_before_requesting_PROs_in_your_EHR_Aug22_2017.pdf)

- 5. Biber J, et al. Patient reported outcomes - experiences with implementation in a University Health Care setting. Journal of Patient-Reported Outcomes 2018; 2: 34.

- 6. Lorenzi NM, Riley RT. Managing Technological Change: Organizational Aspects of Health Informatics. 2nd ed. New York, NY: Springer Science & Business Media, 2004.

- 7. Damschroder LJ, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science 2009; 4: 50.

- 8. CFIR Technical Assistance Website. [Internet] 2018 [cited Oct 12, 2019]. (https://cfirguide.org)

- 9. Fox WM. Sociotechnical system principles and guidelines: past and present. The Journal of Applied Behavioral Science 1995; 31(1): 91–105.

- 10. Wesley DB, et al. A socio-technical systems approach to the use of health IT for patient reported outcomes: patient and healthcare provider perspectives. Journal of Biomedical Informatics 2019; 4: 100048.

- 11. Yusof MM, et al. An evaluation framework for health information systems: human, organization and technology-fit factors (HOT-fit). International Journal of Medical Informatics 2008; 77(6): 386–398.

- 12. Erlirianto LM, et al. The implementation of the human, organization, and technology – fit (HOT-fit) framework to evaluate the electronic medical record (EMR) system in a hospital. Procedia Computer Science 2015; 72: 580–587.

- 13. Aaronson N, et al. User's Guide to Implementing Patient-Reported Outcomes Assessment in Clinical Practice (ISOQOL). Semantic Scholar [Internet], 2016. [cited Oct 7, 2019]. (https://www.isoqol.org/wp-content/uploads/2019/09/2015UsersGuide-Version2.pdf)

- 14. Snyder C, Wu A. Users' Guide to Implementing Patient Reported Outcomes in Health Systems (PCORI). Baltimore, MD: Johns Hopkins; 2017. (https://www.pcori.org/sites/default/files/PCORI-JHU-Users-Guide-To-Integrating-Patient-Reported-Outcomes-in-Electronic-Health-Records.pdf)

- 15. HealthMeasures. [Internet] 2019 [cited Oct 12, 2019]. (http://www.healthmeasures.net)

- 16. Chan EKH, et al. Implementing Patient-Reported Outcome Measures in Clinical Practice: A companion Guide to the ISOQOL User's Guide. ISOQOL [Internet], 2018. [cited Oct 4, 2019]. (https://www.isoqol.org/wp-content/uploads/2019/09/ISOQOL-Companion-Guide-FINAL.pdf)

- 17. Chan EKH, et al. Implementing patient-reported outcome measures in clinical practice: a companion guide to the ISOQOL user's guide. Quality of Life Research 2019; 28(6): 21.

- 18. Franklin PD, et al. Framework to guide the collection and use of patient-reported outcome measures in the learning healthcare system. eGems 2017; 5(1): 17.

- 19. Antunes B, Harding R, Higginson IJ. Implementing patient-reported outcome measures in palliative care clinical practice: a systematic review of facilitators and barriers. Palliative Medicine 2014; 28: 158–175.

- 20. Guide to selection of measures from HealthMeasures. HealthMeasures [Internet], 2016. [cited Oct 12, 2019]. (http://www.healthmeasures.net/images/applications/Guide_to_Selection_of_a_HealthMeasures_06_09_16.pdf)

- 21. Snyder C, et al. Implementing patient-reported outcomes assessment in clinical practice: a review of the options and considerations. Quality of Life Research 2012; 21(8): 1305–1314.

- 22. Austin E, et al. W01: How Health Systems Should Be Thinking About Clinical Integration of Electronic Patient Reported Outcomes (sponsored by Consumer and Pervasive Health Informatics Working Group), American Medical Informatics Association (AMIA) Instructional Workshop materials, 2018.

- 23. Zhang R, et al. Provider perspectives on the integration of patient-reported outcomes in an electronic health record. JAMIA Open 2019; 2(1): 73–80.

- 24. Anatchkova M, et al. Exploring the implementation of patient-reported outcome measures in cancer care: need for more real-world evidence results in the peer reviewed literature. Journal of Patient-Reported Outcomes 2018; 2: 64.

- 25. Warsame R, Halyard MY. Patient-reported outcome measurement in clinical practice: overcoming challenges to continue progress. Journal of Clinical Pathways 2017; 3(1): 43–46.

- 26. Velikova G, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: a randomized controlled trial. Journal of Clinical Oncology 2004; 22(4): 714–724.

- 27. Holmes MM, et al. The impact of patient-reported outcome measures in clinical practice for pain: a systematic review. Quality of Life Research 2017; 26: 245.

- 28. Basch E, et al. Recommendations for incorporating patient-reported outcomes into clinical comparative effectiveness research in adult oncology. Journal of Clinical Oncology 2012; 30: 4249–4255.

- 29. Gerhardt WE, et al. Systemwide implementation of patient-reported outcomes in Routine Clinical Care at a Children's Hospital. The Joint Commission Journal on Quality and Patient Safety 2018; 44(8): 441–453.
