Abstract

Wildland fires can emit substantial amounts of air pollution that may pose a risk to those in proximity (e.g., first responders, nearby residents) as well as to downwind populations. The rapid deployment of air pollution measurement capabilities in response to incidents has so far been limited by cost, implementation complexity, and measurement accuracy. Emerging technologies, including miniaturized direct-reading sensors, compact microprocessors, and wireless data communications, provide new opportunities to detect air pollution in real time. The U.S. Environmental Protection Agency (EPA) partnered with other U.S. federal agencies (CDC, NASA, NPS, NOAA, USFS) to sponsor the Wildland Fire Sensor Challenge. EPA and its partners share the goal of advancing wildland fire air measurement technology that is easier to deploy, suitable for high-concentration events, durable enough to withstand difficult field conditions, and able to report high-time-resolution data continuously and wirelessly. The Wildland Fire Sensor Challenge encouraged innovation worldwide to develop sensor prototypes capable of measuring fine particulate matter (PM2.5), carbon monoxide (CO), carbon dioxide (CO2), and ozone (O3) during wildfire episodes. The importance of using federal reference method (FRM) versus federal equivalent method (FEM) instruments to evaluate sensor performance in biomass smoke is discussed. Ten solvers from three countries submitted sensor systems for evaluation as part of the challenge. The sensor evaluation results, including sensor accuracy, precision, linearity, and operability, are presented and discussed, and three challenge winners are announced. Raw PM2.5 accuracies of the winners’ solver-submitted sensors ranged from ~22 to 32%, while smoke-specific EPA regression calibrations improved accuracies to ~75–83%, demonstrating the potential of these systems to provide reasonable accuracy under conditions typical of wildland fire events.

Keywords: Wildland fire smoke, Sensor performance, Particulate matter, Carbon monoxide, Carbon dioxide, Ozone


1. Introduction

Wildland fires can produce significant air pollution emissions that pose health risks to those working and living in close proximity, such as first responders and nearby residents, as well as to downwind populations (Bell et al., 2004; Vedal and Dutton, 2006; Rappold et al., 2011; Johnston et al., 2012; Reisen et al., 2015; Reid et al., 2016; Cascio, 2018; Weitekamp et al., 2020). Land management practices affecting forest fuel loading (undergrowth and tree density), drought, higher global temperatures, longer fire seasons, and increases in acres burned and fire intensity have resulted in greater smoke emissions over longer periods (Kitzberger et al., 2007; Littell et al., 2009; Johnston et al., 2012; United States Department of Agriculture, 2014; United States Department of Agriculture, 2016; Westerling, 2016; Landis et al., 2018). The emission and downwind transport of smoke from wildland fires need to be quantified and managed by incident response teams, burn teams, and public health professionals for first responder force protection and public health messaging.

The primary smoke constituents that degrade air quality are particulate matter less than 2.5 μm in mass median aerodynamic diameter (PM2.5), carbon monoxide (CO), nitrogen oxides (NOx), and volatile organic compounds (VOCs) (Urbanski et al., 2009). Even in developed countries with relatively advanced regulatory air monitoring networks, remote wildland firefighter camps and smoke-affected population centers often lack adequate observational air quality data. Because the dispersion of wildland fire smoke is governed by wind, atmospheric stability, and terrain, regulatory monitoring sites (if any) within the region may not adequately characterize the spatial and temporal variability of smoke impacts. During some large wildfire incidents, the U.S. Interagency Wildland Fire Air Quality Response Program (IWFAQRP) augments long-term regulatory monitoring networks with temporary air quality monitors dispatched with Air Resource Advisors (IWFAQRP, 2021). The cost, required technical expertise, and need for electrical power infrastructure generally limit the number of temporary monitors deployed. In many cases, no additional monitors are deployed to provide actionable information on smoke-related air quality in affected population areas.

Meanwhile, there has been rapid development of miniaturized, user-friendly air quality sensor systems (Williams et al., 2014; Baron and Saffell, 2017; Karagulian et al., 2019; Malings et al., 2019). Significant advancements in internal gas and particulate matter sensor components, compact microprocessors, power supply and management, wireless data telemetry, advanced statistical data fusion and analysis, real-time sensor calibration, and graphical data interfaces point to the potential of accurate, small-form-factor integrated sensor systems. This technology is being developed for a variety of applications, including exposure assessment (Morawska et al., 2018), industrial applications (Thoma et al., 2016), local source impact estimation (Feinberg et al., 2019), and increasing the spatial density of outdoor monitoring networks (Mead et al., 2013; Bart et al., 2014). To date, routine performance testing of sensors has mostly been limited to typical ambient conditions (Williams et al., 2014; Jiao et al., 2016; Feinberg et al., 2018; Zamora et al., 2019; Collier-Oxandale et al., 2020), with more limited assessment of certain technologies at higher concentrations (Johnson et al., 2020; Zheng et al., 2018). These studies have, in some cases, shown high correlation between collocated sensors and reference monitors; however, many sensor test results also exhibit measurement artifacts (Mead et al., 2013; Lin et al., 2015; Spinelle et al., 2015; Hossain et al., 2016), inconsistency among identical sensors (Williams et al., 2014; Castell et al., 2017; Sayahi et al., 2019), drift over time (Artursson et al., 2000; Feinberg et al., 2018; Sayahi et al., 2019), sensitivity to environmental conditions (e.g., temperature and relative humidity; Cross et al., 2017; Wei et al., 2018), and limited upper measurement ranges (Schweizer et al., 2016; Zou et al., 2020).

New approaches to assessing smoke impacts from wildfire are of significant interest to U.S. federal agencies that coordinate wildfire response and to public health officials who communicate health messages to affected populations. Sensor technology may now be able to complement, or improve upon, the limited cache of temporary monitors used by technical experts during wildfire episodes and, in that role, provide more granular air quality information that helps the public reduce its exposure. Some researchers have integrated satellite and low-cost sensor data with regulatory monitoring network results to better understand and model human exposure to, and health effects of, wildland fire smoke (Liu et al., 2009; Gupta et al., 2018), but the performance of most commercially available sensors under smoke conditions is unknown.

An important consideration in using air quality sensors for wildfire smoke is selecting the key pollutants of public health interest and understanding their target measurement ranges. PM2.5 monitors near wildfires have reported hourly concentrations exceeding 3–5 mg m−3 (Landis et al., 2018), resulting in daily averages well above the level of the 24-h average PM2.5 regulatory standards. Peak hourly CO levels near wildfires have been recorded between 2 and 3 ppm (Vedal and Dutton, 2006). Ozone (O3) concentrations are typically low in the near field of wildland fires because nitric oxide (NO) emitted during combustion titrates ambient O3 (NO + O3 → NO2 + O2) faster than O3 can be produced. However, many studies suggest O3 enhancement farther downwind (Jaffe and Wigder, 2012; Brey and Fischer, 2016; Lindaas et al., 2017; Jaffe et al., 2018; Liu et al., 2018).

Several U.S. federal government agencies, including the Environmental Protection Agency (EPA), National Aeronautics and Space Administration (NASA), National Oceanic and Atmospheric Administration (NOAA), National Park Service (NPS), Forest Service (USFS), and Centers for Disease Control and Prevention (CDC), sponsored a Challenge in 2017 (U.S. EPA, 2017) to spur development of prototype small-form-factor measurement systems that could be deployed rapidly, operated with minimal expertise, and provide continuous ambient monitoring of key air pollutant concentrations during fire events. The challenge specified a system that would measure pollutants with known adverse health effects in humans (PM2.5, CO, and O3) and report carbon dioxide (CO2) levels to quantify fire combustion efficiency. In addition, each pollutant measurement needed to be accurate over the large dynamic range of concentrations expected during wildfire smoke impacts, and the system needed to transmit data to a central receiving unit, be durable enough to operate unattended in harsh conditions, and fall within a production-scale cost limit ($40,000 for a 6-node sensor system with a central data receiver).

The purpose of this publication is to present and discuss the performance of the solver-submitted sensor systems in the Wildland Fire Sensor Challenge under different temperature, relative humidity, and exposure conditions, and to announce the award recipients. Phase I testing evaluated the solver-submitted sensors under controlled temperature and relative humidity conditions in EPA’s research facility in Chapel Hill, North Carolina (Ghio et al., 2012), where known concentrations of pure standards were blended with zero air and introduced into an exposure chamber. Phase II testing evaluated the sensors under simulated wildland fire exposure conditions at the USFS Rocky Mountain Research Station Fire Sciences Laboratory (FSL) combustion research facility in Missoula, Montana, where smoke at varying concentrations was produced in the chamber by burning biomass fuel typical of the western U.S. under different combustion conditions (e.g., smoldering, flaming). The accuracy, collocated precision, and linearity of each solver-submitted sensor system were determined by comparing the sensors’ measurements with EPA-designated federal reference method (FRM) or federal equivalent method (FEM) measurements (Hall et al., 2012) over the extended periods of Phase I and Phase II testing.

2. Methods

2.1. Challenge details

Eleven prototype sensor systems responsive to the Sensor Challenge were received by EPA from ten different private solvers before the January 18, 2018 submission deadline (Appendix Table A.1). The EPA testing team first evaluated each solver-submitted sensor system for condition and operability. Solvers were contacted and given the opportunity to address any observed operation and/or data telemetry deficiencies before Phase I (March 28 – April 2, 2018) and Phase II (April 16–24, 2018) testing. Seven of the eleven sensor systems were cleared for Phase I testing; two sensor systems were returned to solver E for repair of physical damage that occurred during shipping, solver H’s sensors were returned because they were inoperable before Phase I testing, and one system was disqualified when solver J declined to address identified data telemetry/logging issue(s). Sensor systems were returned to solvers I and F after Phase I and Phase II testing, respectively, to give them an opportunity to recover internally logged monitoring data after telemetry and EPA recovery attempts failed. All solver-submitted sensor systems were tested as received; no calibrations or modifications of any kind were made. Depending on its designed mounting configuration, each solver sensor system was mounted on either a custom-made steel mesh support stand or a tripod at a uniform height of ~1.2 m (Appendix Figure B.1).

2.2. Phase I testing

Phase I testing was carried out at the EPA Office of Research and Development research facility in Chapel Hill, North Carolina (Ghio et al., 2012) over a predetermined range of target analyte concentrations (Appendix Table A.2) repeated at two temperature and two relative humidity conditions (Appendix Table A.3). Challenge CO2 and CO concentrations were produced by diluting certified reference gas standard cylinders, O3 concentrations were generated using a custom chamber integrated corona discharge system, and ammonium sulfate aerosols were generated using a TSI Incorporated (Shoreview, MN, USA) Model 9306 six-jet seed aerosol generator.

Sensor systems were tested in a 4.8 × 5.8 × 3.2 m (width × depth × height) stainless-steel chamber with a single-pass laminar flow (ceiling to floor) air system providing approximately 40 air changes per hour (113 m3 min−1). Outside air was purified by passing it through a bed of Purafil (potassium permanganate on an alumina substrate) and a bed of Puracol® activated charcoal (both Purafil Corporation, Doraville, GA, USA), dehumidified, passed through a bed of Hopcalite, and sent to the Clean Air Plenum. Chamber air was drawn from the Clean Air Plenum, heated or cooled, and humidified with deionized water to reach the test protocol conditions (Appendix Table A.3). Target pollutants were injected into the air stream before it passed through a mixing baffle and entered the top of the chamber. The chamber pollutant monitoring system consisted of an 8 cm diameter glass sample manifold beginning with an inverted glass funnel in the middle of the chamber at a height of ~3 m. This sample manifold ran along the back of the instrument racks containing the reference gas analyzers, each of which was connected to the manifold by a 0.64 cm diameter perfluoroalkoxy alkane (PFA) Teflon® sample inlet line. A fan pulled ~1.5 m3 min−1 from the chamber past the reference gas analyzers. The FRM filter-based PM2.5 and continuous FEM PM2.5 instruments sampled from an isokinetic probe located at the chamber inlet. The performance characteristics of the solver-submitted sensor systems were evaluated by comparing their reported concentrations with those reported by the EPA reference instruments.

2.3. Phase II testing

Phase II testing was carried out at the FSL. The main combustion chamber is a square room with internal dimensions of 12.4 × 12.4 × 19.6 m and a total volume of 3000 m3, and has been described previously (Christian et al., 2004). The chamber was ventilated with outdoor ambient air before each burn. All sensor testing was conducted using “static chamber” burns to simulate sensor exposure under in-situ sampling conditions, in which the combustion chamber was sealed after being flushed with outdoor air. Fuel beds were prepared and placed in the center of the chamber. Two large circulation fans mounted on the chamber walls and destratification fans on the chamber ceiling promoted mixing and maintained homogeneous smoke conditions during the tests. Continuous-sampling gas reference instruments were placed in the observation room adjacent to the combustion chamber and connected to PFA sampling manifolds. These manifolds drew chamber air through Savillex (Eden Prairie, MN, USA) 47 mm inlet PFA filter packs (401-21-47-10-21-2), loaded with 5 μm pressure-drop-equivalent Millipore (Burlington, MA, USA) Omnipore® polytetrafluoroethylene (PTFE) Teflon membrane filters and mounted on a tripod in the sensor testing zone. PM2.5 reference instruments were distributed around the solver-submitted sensor systems on the chamber floor (Appendix Figure B.1).

The fuels utilized for Phase II burns were ponderosa pine (Pinus ponderosa) needles (PPN) and fine dead wood (PPW), alone or mixed. Combustion efficiency of burns was managed by fuel moisture content as summarized in Appendix Table A.4. The Phase II burn plan was to target six different concentration ranges for the three primary air pollutants (PM2.5, CO, CO2) as summarized in Appendix Table A.5 under different burn conditions for a total of thirty-three 1-h burns.

2.4. EPA reference measurements

EPA gaseous reference instruments used in Phase I and Phase II testing included (i) a LI-COR (Lincoln, NE, USA) Model LI-850 non-dispersive infrared (NDIR) absorbance CO2 instrument, (ii) a Thermo Scientific (Franklin, MA, USA) Model 48C gas filter correlation (GFC) FRM CO analyzer, (iii) a Teledyne API (San Diego, CA, USA) Model T265 NO chemiluminescence FRM O3 analyzer and a 2B Technologies (Boulder, CO, USA) Model 211 UV photometric FEM O3 analyzer, (iv) a Teledyne API Model T200 chemiluminescence FEM NO instrument, and (v) a Teledyne API Model T500U cavity attenuated phase shift (CAPS) FEM “true” NO2 instrument. All continuous gas analyzers were zeroed and span calibrated at the beginning and end of each chamber test day using Teledyne API Model T700U dynamic dilution calibration systems with certified O3 photometers. EPA protocol certified gas standard cylinders diluted in ultra-scientific grade zero air were used for the CO, CO2, and NO2 instruments. Multi-point span calibrations were conducted at the beginning and end of each testing phase to verify linearity.
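A zero check and a single span point define a linear instrument response, and comparing start- and end-of-day span responses gives a simple drift estimate. The analyzers in this study were adjusted directly with the T700U calibrators, so the post-processing form below is only an illustration; the function names and example readings are hypothetical.

```python
def two_point_correction(raw: float, zero_reading: float,
                         span_reading: float, span_true: float) -> float:
    """Map a raw reading onto the line through (zero_reading, 0) and (span_reading, span_true)."""
    if span_reading == zero_reading:
        raise ValueError("span and zero readings must differ")
    gain = span_true / (span_reading - zero_reading)  # true units per raw unit
    return (raw - zero_reading) * gain


def span_drift_pct(start_reading: float, end_reading: float) -> float:
    """Percent change in the span response between two calibration checks."""
    return (end_reading - start_reading) / start_reading * 100.0


# Hypothetical CO check: zero air reads 0.2 ppm and a 10 ppm span reads 9.8 ppm
print(round(two_point_correction(5.0, 0.2, 9.8, 10.0), 3))  # 5.0
# Hypothetical end-of-day span check reading 9.6 ppm instead of 9.8 ppm
print(round(span_drift_pct(9.8, 9.6), 2))  # -2.04
```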

A USFS Picarro Inc. (Santa Clara, CA, USA) Model G2401-m cavity ring-down spectroscopy (CRDS) CO/CO2 gas analyzer was used to measure high-time-resolution (2 s) concentrations in the Phase II chamber to calculate the burn-integrated modified combustion efficiency (MCE) as previously described by Urbanski (2013). A three-point calibration using gas mixtures of CO and CO2 in scientific grade zero air was run daily to maintain the accuracy of the CRDS measurements. Continuous optical black carbon (BC) measurements were made during Phase II testing with collocated Magee Scientific (Berkeley, CA, USA) Model AE-33 seven-wavelength Aethalometers equipped with Model 5610 sample stream dryers and BGI (Waltham, MA, USA) Model SCC-1.829 sharp-cut PM2.5 cyclones. All reported BC concentrations represent the self-adsorption compensated BC6 (880 nm) channel. The performance of the AE-33 light-emitting diode (LED) sources was verified before and after Phase II testing using the Magee Scientific Model 7662 neutral density optical filter validation kit.
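MCE is conventionally defined as ΔCO2/(ΔCO2 + ΔCO), where Δ denotes the excess mixing ratio above background. A minimal sketch of a burn-integrated value from equally spaced CO and CO2 samples follows; the background values and example data are hypothetical, and the procedure of Urbanski (2013) may treat backgrounds and integration differently.

```python
from typing import Sequence


def burn_integrated_mce(co_ppm: Sequence[float], co2_ppm: Sequence[float],
                        co_background_ppm: float, co2_background_ppm: float) -> float:
    """Burn-integrated MCE = sum(dCO2) / (sum(dCO2) + sum(dCO)).

    Assumes the CO and CO2 records are aligned samples on a fixed time step
    (e.g., a 2 s analyzer record), so each sum is proportional to a time integral.
    """
    if len(co_ppm) != len(co2_ppm):
        raise ValueError("CO and CO2 records must be aligned")
    # Excess mixing ratios above the pre-ignition background, summed over the burn
    excess_co = sum(c - co_background_ppm for c in co_ppm)
    excess_co2 = sum(c - co2_background_ppm for c in co2_ppm)
    return excess_co2 / (excess_co2 + excess_co)


# Hypothetical three-sample burn with a 420 ppm CO2 and 0.2 ppm CO background
mce = burn_integrated_mce([5.2, 10.2, 2.7], [520.0, 620.0, 470.0], 0.2, 420.0)
print(round(mce, 3))  # 0.952
```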

High-time-resolution (1 min) EPA PM2.5 mass reference measurements were made using Teledyne API Model T640 continuous FEM instruments, normalized to Tisch Environmental (Cleves, OH, USA) Model TE-WILBUR filter-based (1 h) FRM measurements on a test-specific (Phase I) or burn-specific (Phase II) basis. The PM2.5 concentration indicated by the T640 FEM was suspected to be sensitive to the chamber aerosol size distribution (Phase I and Phase II testing) and BC concentration (Phase II testing), necessitating normalization to the hourly FRM concentration. The Phase I FEM correction factor averaged 1.30 ± 0.19 (mean ± standard deviation) and ranged from 1.08 to 1.80 for T640 instrument serial number 296. The Phase II FEM correction factors averaged 0.99 ± 0.38 and 1.01 ± 0.36, and ranged from 0.58 to 2.11 and from 0.50 to 1.86, for T640 instruments 294 and 296, respectively. The T640 PM2.5 instruments were zeroed before each chamber test day. Leak checks and multi-point flow calibrations were conducted weekly on the PM2.5 FRM samplers.
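The normalization described above can be sketched as a multiplicative factor, assuming the factor is the ratio of the hourly FRM concentration to the mean of the 1-min FEM values over the same hour (the exact averaging used in the study is not stated). Function names and example values are hypothetical.

```python
from statistics import fmean
from typing import List, Sequence


def fem_correction_factor(frm_hourly_ugm3: float, fem_minute_ugm3: Sequence[float]) -> float:
    """Ratio of the hourly FRM filter concentration to the FEM mean over the same hour."""
    fem_hourly = fmean(fem_minute_ugm3)
    if fem_hourly <= 0:
        raise ValueError("FEM hourly mean must be positive")
    return frm_hourly_ugm3 / fem_hourly


def normalize_fem(fem_minute_ugm3: Sequence[float], factor: float) -> List[float]:
    """Scale each 1-min FEM value so that the hourly mean matches the FRM."""
    return [v * factor for v in fem_minute_ugm3]


fem = [90.0, 100.0, 110.0]             # hypothetical 1-min FEM values (ug/m3)
k = fem_correction_factor(130.0, fem)  # hypothetical hourly FRM value (ug/m3)
print(round(k, 2), [round(v, 1) for v in normalize_fem(fem, k)])  # 1.3 [117.0, 130.0, 143.0]
```

Because one factor is applied per test or burn, the normalization preserves the 1-min temporal structure of the FEM record while anchoring its level to the filter-based reference.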

2.5. Statistical analysis

Data processing and all statistical analyses were performed using SAS v.9.4 (SAS Institute, Cary, NC, USA). The accuracy of the solver-submitted sensor pods was calculated using Equation (1), and, for solvers that submitted two sensor pods as requested in the challenge, precision was calculated as the coefficient of variation (relative standard deviation) using Equation (2).

$$\text{Accuracy}\ (\%) = 100 - \frac{\lvert X - R \rvert}{R} \times 100 \tag{1}$$

where X is the reported sensor concentration and R is the reference concentration.
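A minimal sketch of the accuracy calculation, assuming Equation (1) takes the form Accuracy = 100 − |X − R|/R × 100. It also shows why accuracy becomes extremely negative when the reference concentration is near zero, as happens for O3 in smoke. Function names and values are hypothetical.

```python
from statistics import mean, stdev
from typing import Iterable, Tuple


def accuracy_pct(sensor: float, reference: float) -> float:
    """Accuracy (%) = 100 - |X - R| / R * 100 (assumed form of Equation 1)."""
    if reference <= 0:
        raise ValueError("reference concentration must be positive")
    return 100.0 - abs(sensor - reference) / reference * 100.0


def summarize_accuracy(pairs: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Mean and sample standard deviation of per-observation accuracy for (X, R) pairs."""
    values = [accuracy_pct(x, r) for x, r in pairs]
    return mean(values), stdev(values)


print(accuracy_pct(8.0, 10.0), accuracy_pct(12.0, 10.0))  # 80.0 80.0
# A modest positive artifact against a near-zero reference gives a huge negative value
print(round(accuracy_pct(20.0, 0.5)))  # -3800
```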

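$$\text{CV}\ (\%) = \frac{\sqrt{\dfrac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}}}{\bar{x}} \times 100 \tag{2}$$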

where $\bar{x}$ is the mean of the collocated sensor concentrations, $\sum_{i=1}^{n}(x_i - \bar{x})^2$ is the sum of squared differences between the individual collocated sensor concentrations ($x_i$) and the mean, and $n$ is the number of collocated sensor observations. Solvers A–D each provided two sensor pods and therefore have reported precision values. All sensor systems reported data every 5 min, but the reporting times of some collocated units were temporally offset. In those cases, the offset data were first synchronized using the SAS LAG function before precision was calculated. Solver E provided only one repaired sensor pod during Phase II, precluding the calculation of collocated precision values.
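The study synchronized offset records with the SAS LAG function. As a swapped-in alternative, the sketch below pairs two pods’ 5-min records by rounding timestamps to a common reporting grid, then computes a per-timestamp CV with the sample (n − 1) standard deviation for n = 2 collocated readings. It assumes the offset is less than half a reporting interval; function names and data are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Sequence, Tuple

Series = Sequence[Tuple[float, float]]  # (minutes since start, concentration)


def align_to_grid(pod1: Series, pod2: Series, step_min: float = 5.0) -> List[Tuple[float, float]]:
    """Pair readings from two collocated pods that fall in the same reporting slot."""
    def by_slot(series: Series) -> Dict[int, float]:
        # Round each timestamp to the nearest multiple of step_min
        return {round(t / step_min): v for t, v in series}

    slots1, slots2 = by_slot(pod1), by_slot(pod2)
    return [(slots1[k], slots2[k]) for k in sorted(slots1.keys() & slots2.keys())]


def cv_pct(values: Sequence[float]) -> float:
    """Coefficient of variation (%) using the sample (n - 1) standard deviation.

    A negative mean (e.g., sensors reporting negative concentrations) yields a negative CV.
    """
    m = mean(values)
    if m == 0:
        raise ValueError("CV is undefined for a zero mean")
    return stdev(values) / m * 100.0


pod1 = [(0, 10.0), (5, 20.0), (10, 30.0)]  # hypothetical pod #1 record
pod2 = [(1, 12.0), (6, 20.0), (11, 33.0)]  # hypothetical pod #2 record, reporting 1 min later
cvs = [cv_pct(pair) for pair in align_to_grid(pod1, pod2)]
print([round(c, 2) for c in cvs], round(mean(cvs), 2))  # [12.86, 0.0, 6.73] 6.53
```

The negative-mean behavior of the CV is one way negative collocated precision values can arise for sensors that report large negative concentrations.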

The parametric statistics used in this analysis include simple linear regression and multivariate analysis of variance (MANOVA). The assumptions of the parametric procedures were examined using residual plots, skewness and kurtosis coefficients, the Shapiro-Wilk test, and the Brown-Forsythe test. To improve normality and stabilize variance before MANOVA, a constant was added to the Phase I and Phase II Δsensor values (EPA reference instrument concentration – reported sensor concentration) when necessary to avoid negative values, and the values were then log transformed. A significance level of α = 0.05 was used for all statistical procedures unless otherwise stated. The SAS REG and GLM procedures were used for least squares general linear model regressions and MANOVA, respectively.
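The shift-and-log transform described above can be sketched as follows, together with a moment-based skewness coefficient to compare the distributions before and after transformation. The rule for choosing the constant (making the smallest shifted value equal to 1) is an assumption, and the Δsensor values are hypothetical.

```python
import math
from statistics import fmean
from typing import List, Optional, Sequence, Tuple


def shifted_log(values: Sequence[float], shift: Optional[float] = None) -> Tuple[List[float], float]:
    """Return log(v + shift) for each value, plus the shift that was used.

    If no shift is given, pick the constant that makes the smallest shifted value
    equal to 1 (an assumed rule; any constant giving positive values would work).
    """
    if shift is None:
        lowest = min(values)
        shift = 0.0 if lowest > 0 else 1.0 - lowest
    if min(values) + shift <= 0:
        raise ValueError("shift does not make all values positive")
    return [math.log(v + shift) for v in values], shift


def skewness(values: Sequence[float]) -> float:
    """Moment-based sample skewness g1 = m3 / m2**1.5."""
    m = fmean(values)
    m2 = fmean([(v - m) ** 2 for v in values])
    m3 = fmean([(v - m) ** 3 for v in values])
    return m3 / m2 ** 1.5


delta = [-3.0, -1.0, 0.0, 1.0, 2.0, 15.0]  # hypothetical right-skewed delta-sensor values
logged, c = shifted_log(delta)
print(c, abs(skewness(logged)) < abs(skewness(delta)))  # 4.0 True
```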

2.6. Challenge award committee

An independent multi-agency award panel reviewed the EPA testing team’s report for each solver, calculated a score based on sensor accuracy and ancillary capabilities, and awarded prizes to three of the solvers. Two-thirds of the score was based on weighted sensor accuracy, with the highest weight on PM2.5, followed by CO, O3, and CO2. The ancillary capabilities score was based on form factor (size, weight), design durability, battery life, data transmission range, data visualization, software calibration functionality, and data completeness.
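The score composition described above can be sketched as a weighted sum. The panel’s actual weights and ancillary rubric are not given here, so the numeric weights (which only respect the stated ranking PM2.5 > CO > O3 > CO2), the clipping of accuracies to 0–100, and the zero credit for a missing sensor are all hypothetical.

```python
from typing import Dict

# Hypothetical weights; the text states only the ranking PM2.5 > CO > O3 > CO2
ACCURACY_WEIGHTS: Dict[str, float] = {"PM2.5": 0.4, "CO": 0.3, "O3": 0.2, "CO2": 0.1}


def challenge_score(accuracy_pct: Dict[str, float], ancillary_pct: float) -> float:
    """Two-thirds weighted accuracy plus one-third ancillary score, on a 0-100 scale.

    Assumptions: each accuracy is clipped to [0, 100], and a pollutant with no
    sensor contributes 0.
    """
    weighted = sum(
        w * min(max(accuracy_pct.get(pollutant, 0.0), 0.0), 100.0)
        for pollutant, w in ACCURACY_WEIGHTS.items()
    )
    return (2.0 / 3.0) * weighted + (1.0 / 3.0) * ancillary_pct


score = challenge_score({"PM2.5": 50.0, "CO": 80.0, "O3": -100.0, "CO2": 90.0}, 60.0)
print(round(score, 1))  # 55.3
```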

3. Results and discussion

3.1. Phase I clean air testing results

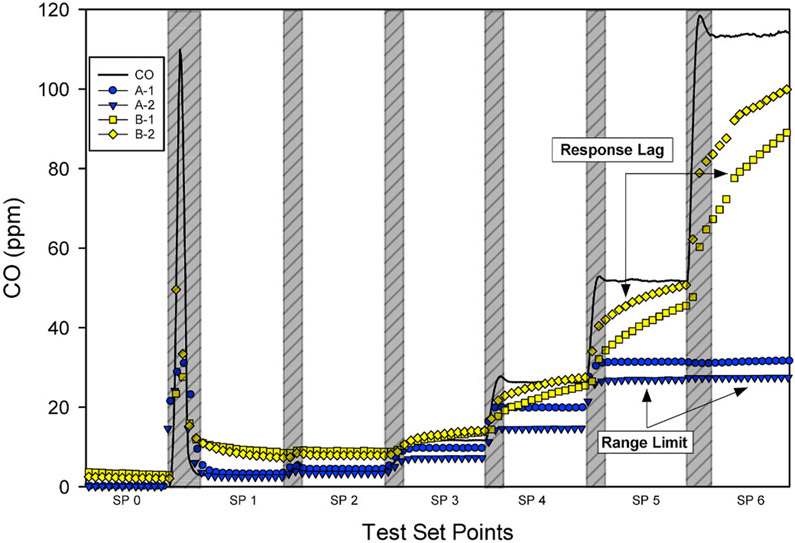

Phase I involved a full day of testing at each temperature and relative humidity set point (Appendix Table A.3). Each test day began by establishing the chamber environmental (temperature/relative humidity) conditions, followed by a 1-h chamber zero and then 1 h of testing at each concentration set point 1–6 (Appendix Table A.2). Stable transitions between concentration set points were typically achieved within 15–20 min. The Phase I results (Appendix Table A.6) demonstrate that the automated chamber environmental controls accurately maintained the target testing conditions, while the target analyte concentrations were typically achieved within a reasonable tolerance of the test plan, ensuring testing over a wide dynamic range. An example testing day (March 28, 2018) is presented in Fig. 1, showing the progression of target gas concentrations from the chamber zero (SP0) through set point 6 (SP6). Official testing periods are shown, and transition periods are highlighted. PM2.5 target points were more variable and deviated more from the test plan, as indicated by the reference values (Appendix Figure B.2). This resulted from degraded aerosol generator jet performance over time, as visible ammonium sulfate deposits built up at the jet orifices over the course of testing.
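One way to separate official testing periods from transitions is sketched below, assuming each set point is held for 1 h and its first 20 min (the upper end of the 15–20 min stabilization time noted above) are excluded. The settling window and function names are hypothetical; the study’s actual period boundaries are those shown in Fig. 1.

```python
from typing import List, Sequence, Tuple


def in_official_period(minute: float, setpoint_starts: Sequence[float],
                       settle_min: float = 20.0, hold_min: float = 60.0) -> bool:
    """True if `minute` falls after the settling window of some 1-h set point."""
    return any(start + settle_min <= minute < start + hold_min for start in setpoint_starts)


def official_records(records: Sequence[Tuple[float, float]],
                     setpoint_starts: Sequence[float],
                     settle_min: float = 20.0) -> List[Tuple[float, float]]:
    """Drop (minute, value) records that fall in transition periods."""
    return [(t, v) for t, v in records if in_official_period(t, setpoint_starts, settle_min)]


# Hypothetical 5-min records spanning two consecutive 1-h set points
records = [(t, float(t)) for t in range(0, 120, 5)]
kept = official_records(records, setpoint_starts=[0, 60])
print(len(kept), kept[0][0], kept[-1][0])  # 16 20 115
```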

Fig. 1.

Example phase I testing day (March 28, 2018) demonstrating stepping through test points with transition times highlighted.

The Phase I accuracy and collocated precision results are summarized in Table 1. Mean accuracies ranged from 14 to 70% (PM2.5), 58–91% (CO), 35–88% (CO2), and 19–66% (O3), and collocated precision was generally within ±15% for sensors reporting reasonable results (e.g., responding to changing concentrations with no large negative values). Time series and scatter plots of each EPA reference instrument measurement versus solver sensor pod concentrations are presented in Appendix Figures B.2–B.31. Solver C’s sensor pods performed best in Phase I testing for PM2.5, CO, and O3, but did not incorporate a CO2 sensor. Lower maximum thresholds (Solver A) and sensor response lag (Solver B) degraded CO and CO2 accuracies (Fig. 2 and Appendix Figures B.4–B.7 and B.12–B.15). Solver B’s sensor pod #1 reported significantly lower PM2.5, CO2, and O3 concentrations, which also degraded that solver’s precision results. Solver D’s sensors experienced data transmission and logging failures that limited data completeness to ~18%.

Table 1.

Phase I testing accuracy (%) and collocated precision (%) results for PM2.5, CO, CO2, and O3.

| Solver | Sensor | n | PM2.5 accuracy | CO accuracy | CO2 accuracy | O3 accuracy | PM2.5 precision | CO precision | CO2 precision | O3 precision |
|---|---|---|---|---|---|---|---|---|---|---|
| A | #1 | 283 | 22.5 ± 14.8 | 69.7 ± 21.6 | 87.6 ± 10.3 | 44.1 ± 5.4 | 4.9 ± 5.8 | 14.5 ± 6.0 | 3.0 ± 2.8 | 28.2 ± 12.2 |
| A | #2 | | 22.4 ± 14.4 | 57.5 ± 17.4 | 87.5 ± 10.2 | 66.1 ± 7.8 | | | | |
| B | #1 | 288 | 13.5 ± 5.2 | 60.9 ± 41.7 | 34.8 ± 9.7 | 18.7 ± 17.5 | 67.7 ± 9.1 | 7.7 ± 4.9 | 53.1 ± 15.6 | 66.2 ± 18.2 |
| B | #2 | | 37.2 ± 10.1 | 69.7 ± 36.9 | 74.7 ± 4.0 | 39.4 ± 24.0 | | | | |
| C | #1 | 287 | 69.8 ± 31.6 | 72.1 ± 6.6 | – | 66.3 ± 6.1 | 4.8 ± 2.3 | 15.4 ± 0.9 | – | 13.0 ± 7.0 |
| C | #2 | | 65.6 ± 25.1 | 90.6 ± 4.9 | – | 58.7 ± 9.4 | | | | |
| D | #1 | 53 | 52.6 ± 9.3 | −429 ± 552 | 14.1 ± 2.4 | −8.4 ± 3.7 | 12.0 ± 7.3 | −72.1 ± 210.8 | 6.6 ± 5.9 | −1.9 ± 1.6 |
| D | #2 | | 45.9 ± 7.8 | 116 ± 332 | 15.0 ± 3.0 | −8.3 ± 3.4 | | | | |

Accuracy and collocated precision values are mean ± standard deviation; n and collocated precision are reported once per sensor pair.

Fig. 2.

Phase I testing (March 28, 2018) demonstrating the solver A and solver B CO results with transition times highlighted.

The maximum concentration thresholds reported by Solver A (35 ppm for CO and 2000 ppm for CO2) were exceeded at set point 5 (CO) and set point 6 (CO and CO2). These limits effectively lowered Solver A’s overall accuracy scores and artificially improved its collocated precision, because invariant maximum concentration values were reported during the affected set points (Fig. 2). Accuracy and precision were therefore recalculated using only the CO (n = 187) and CO2 (n = 235) values below the sensors’ maximum reporting limits. Solver A’s CO sensor accuracy improved from 70 to 82% (sensor #1) and from 58 to 67% (sensor #2), and precision degraded from 15 to 17%. Solver A’s CO2 sensor accuracy improved from 88 to 92% (sensor #1) and from 88 to 91% (sensor #2), and precision degraded from 3 to 4%.
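The recalculation described above amounts to filtering observation pairs before computing accuracy. This sketch assumes Equation (1) is 100 − |X − R|/R × 100 and filters on the reference concentration; whether the study filtered on the reference or the sensor value is not stated, and the example data are hypothetical.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def accuracy_pct(sensor: float, reference: float) -> float:
    """Assumed form of Equation (1): 100 - |X - R| / R * 100."""
    return 100.0 - abs(sensor - reference) / reference * 100.0


def below_limit(pairs: Sequence[Tuple[float, float]], limit: float) -> List[Tuple[float, float]]:
    """Keep (sensor, reference) pairs whose reference is under the sensor's reporting limit."""
    return [(x, r) for x, r in pairs if r < limit]


# Hypothetical CO pairs (ppm); the sensor saturates at a 35 ppm reporting limit
pairs = [(10.0, 10.5), (30.0, 31.0), (35.0, 50.0), (35.0, 60.0)]
all_acc = mean(accuracy_pct(x, r) for x, r in pairs)
kept = below_limit(pairs, limit=35.0)
kept_acc = mean(accuracy_pct(x, r) for x, r in kept)
print(len(kept), round(all_acc, 1), round(kept_acc, 1))  # 2 80.1 96.0
```

The same saturation explains the artificially good precision: two pods pegged at an identical maximum value have zero spread, so their CV is zero during those set points.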

A multivariate statistical analysis was conducted to investigate the impact of chamber temperature (CT) and relative humidity (RH) on all of the solvers’ sensor pod measurements. A Δsensor value (EPA reference instrument concentration – reported sensor concentration) was first calculated for each solver’s sensor measurement, and the significance of the environmental factors on Δsensor was then tested using MANOVA with type III sums of squares. The MANOVA results are summarized in Table 2: CT and RH significantly affected the Δsensor values of each solver, but the effects were not uniform across solvers or pollutants. Differences in Δsensor values could result from the characteristics of individual sensor types, each solver’s sensor integration and design, and the potential use of compensation algorithms. For example, PM2.5 results from Solvers A and B were significantly affected by CT but not RH, whereas PM2.5 measurements from Solver C (sensors #1 and #2) and Solver D (sensor #1) were significantly affected by RH but not CT.

Table 2.

Phase I MANOVA Testing Type III Sum of Squares Results Evaluating the Impact of Chamber Temperature and Relative Humidity on Sensor Measurements (Bold Values are Significant).

| Solver | Sensor | Temp. PM2.5 | Temp. CO | Temp. CO2 | Temp. O3 | RH PM2.5 | RH CO | RH CO2 | RH O3 |
|---|---|---|---|---|---|---|---|---|---|
| A | #1 | **0.0006** | 0.8461 | **<0.0001** | **0.0033** | 0.4220 | 0.0955 | 0.9700 | 0.3004 |
| A | #2 | **0.0005** | 0.1677 | **0.0076** | 0.1029 | 0.7017 | 0.6679 | **<0.0001** | **<0.0001** |
| B | #1 | **<0.0001** | **0.0039** | 0.2464 | 0.0835 | 0.3712 | **0.0136** | 0.7343 | **<0.0001** |
| B | #2 | **0.0004** | **0.0003** | 0.5218 | **0.0002** | 0.8273 | **0.0042** | 0.3774 | **<0.0001** |
| C | #1 | 0.9685 | 0.7305 | – | 0.1331 | **0.0039** | 0.4336 | – | **<0.0001** |
| C | #2 | 0.8129 | **0.0248** | – | **<0.0001** | **0.0343** | **0.0016** | – | **0.0047** |
| D | #1 | 0.9233 | **0.0275** | 0.1444 | 0.1101 | **<0.0001** | **0.0194** | **0.0130** | **0.0002** |
| D | #2 | 0.5229 | 0.9245 | 0.0539 | 0.4009 | 0.0845 | 0.2439 | 0.2274 | **0.0438** |

Temp. = chamber temperature effect; RH = relative humidity effect; values are Pr > F.

3.2. Phase II smoke testing results

Phase II involved eight days of active testing for a total of thirty-three static chamber burns. An example burn day (April 18, 2018) is presented in Fig. 3, showing PM2.5 concentrations through the progression of burns 10–13 and highlighting fuel bed ignitions (red), the start and stop times of the official 1-h challenge burn periods (drop lines), and chamber ventilation (blue). Chamber conditions, fuels combusted, and burn-integrated MCEs are summarized statistically in Appendix Table A.7. The achieved target pollutant concentrations, as well as BC, NO, and NO2 concentrations, for each integrated burn are summarized in Appendix Table A.8. The results demonstrate smoke testing over a wide dynamic range of target analytes, but with a more limited range of integrated burn conditions. Burn-integrated MCEs ranged from 0.91 to 0.97, representing predominantly flaming combustion conditions (Akagi et al., 2011, 2013); chamber temperature ranged from 23 to 27 °C, and chamber relative humidity from 16 to 28%. Burn-integrated EPA reference concentrations for the challenge target pollutants ranged from 29 to 1815 μg m−3 (PM2.5), 0.5–15.2 ppm (CO), and 465–951 ppm (CO2). Smoke homogeneity within the chamber was verified by evaluating the precision of the filter-based PM2.5 FRM samplers and continuous PM2.5 FEM instruments within the sensor testing zone (Appendix Figure B.1). The burn-integrated absolute percent difference between the PM2.5 FRMs was 5.2% (mean) and 2.4% (median), and between the continuous PM2.5 FEMs was 3.5% (mean) and 1.9% (median).
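A minimal sketch of the homogeneity check, assuming the absolute percent difference is normalized by the mean of each sampler pair (the normalization is not stated in the text). The burn-integrated sampler values are hypothetical.

```python
from statistics import mean, median
from typing import Sequence, Tuple


def abs_pct_diff(a: float, b: float) -> float:
    """Absolute percent difference between two collocated measurements, relative to their mean."""
    return abs(a - b) / ((a + b) / 2.0) * 100.0


def apd_summary(burn_pairs: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Mean and median absolute percent difference across burn-integrated sampler pairs."""
    apds = [abs_pct_diff(a, b) for a, b in burn_pairs]
    return mean(apds), median(apds)


# Hypothetical burn-integrated PM2.5 from two collocated samplers (ug/m3)
frm_pairs = [(100.0, 105.0), (400.0, 396.0), (1500.0, 1470.0)]
m, md = apd_summary(frm_pairs)
print(round(m, 2), round(md, 2))  # 2.63 2.02
```

Reporting the median alongside the mean, as in the text, limits the influence of a single burn with a large sampler disagreement.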

Fig. 3.

Example phase II testing day (April 18, 2018) demonstrating PM2.5 concentrations during sequential test burns with fuel ignition (red) and chamber ventilation start times indicated (blue).

The Phase II accuracy and collocated precision results are summarized in Table 3. Mean accuracies ranged from 26 to 52% (PM2.5), 54–73% (CO), and 14–93% (CO2), and collocated precision was generally within ±20% for sensors reporting reasonable results (e.g., responding to changing concentrations with no large negative values). O3 present in the chamber before fuel bed ignition (from the ventilated outdoor ambient air) was rapidly titrated by NO to NO2, resulting in burn-integrated concentrations of only 0.1–1.1 ppb (Appendix Table A.8). The combination of virtually no O3 in the chamber and a positive sensor artifact from NO2 (even for sensor pods that measured NO2 and corrected their reported O3 values) resulted in very large negative accuracies, ranging from −1964% to −42,598%, and poor collocated precision. The improved Phase II PM2.5 accuracies of Solvers A and B relative to Phase I suggest that the solvers implemented smoke-specific calibrations, while the lower Phase II accuracies of Solvers B and D for gas-phase target species indicate that smoke meaningfully degraded their gas sensor performance.

Table 3.

Phase II smoke testing accuracy (%) and collocated precision (%) results for PM2.5, CO, CO2, and O3.

| Solver | Sensor | n (accuracy) | PM2.5 accuracy | CO accuracy | CO2 accuracy | O3 accuracy | n (precision) | PM2.5 precision | CO precision | CO2 precision | O3 precision |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A | #1 | 353ᵃ | 33.0 ± 12.7 | 73.4 ± 78.8 | 89.9 ± 5.0 | – | 339 | 4.8 ± 10.0 | 18.3 ± 10.6 | 6.5 ± 5.1 | 46.6 ± 196.8 |
| A | #2 | 398 | 31.9 ± 11.8 | 65.0 ± 12.0 | 92.6 ± 5.6 | – | | | | | |
| B | #1 | 398 | 25.7 ± 15.5 | −294 ± 1545 | 14.0 ± 6.2 | – | 398 | 49.7 ± 11.4 | 10.8 ± 6.7 | 90.8 ± 17.1 | 47.3 ± 12.5 |
| B | #2 | 398 | 48.4 ± 24.4 | −205 ± 1237 | 61.9 ± 2.2 | – | | | | | |
| C | #1 | 0 | – | – | – | – | 0 | – | – | – | – |
| C | #2 | 0 | – | – | – | – | | | | | |
| D | #1 | 110 | 32.5 ± 196.4 | −374 ± 668 | 17.5 ± 2.5 | – | 97 | 9.0 ± 9.2 | 3.2 ± 43.4 | 10.5 ± 7.5 | −102 ± 79.8 |
| D | #2 | 125 | 43.8 ± 141.8 | −643 ± 1415 | 18.8 ± 3.4 | – | | | | | |
| E | #1 | 129 | 51.7 ± 10.9 | 53.6 ± 40.7 | 87.6 ± 2.5 | – | 0 | – | – | – | – |

Accuracy and collocated precision are given as mean ± standard deviation (%); collocated precision is reported once for each Solver's pair of sensor pods.

ᵃ The EPA testing team inadvertently turned off the sensor pod for one day of Phase II testing, reducing the number of observations.

Time series and scatter plots of each EPA reference instrument measurement versus the Solver sensor pod concentrations are presented in Appendix Figures B.32–B.55. Solver C's sensor pods required 2G cellular connectivity to transmit data to their cloud server, and the absence of 2G cellular coverage in Missoula resulted in no data acquisition during Phase II testing. Solver D's data receiving unit again experienced data transmission and logging failures, which reduced data completeness to ~31%. Solver E's repaired passive sensor pod was received partway through Phase II testing, resulting in lower data completeness (~32%).

The overall Phase II PM2.5 accuracy distributions for Solvers A and B, whose high data completeness (>99%) covered the full range of test burn combustion conditions, were relatively similar, ranging from 26 to 48% (Table 3). However, when the aggregate results are plotted against the EPA reference values (Fig. 4a–d), differences in PM2.5 sensor performance become apparent. Solver A's PM2.5 sensor (Plantower Model PMS5003) provided a non-linear response that was well fit by a quadratic model (r² = 0.94; Fig. 4a and b), consistent with other reported results for Plantower sensors under ambient conditions (Zheng et al., 2018), and it did not appear to be very sensitive to changes in burn conditions. Solver B's PM2.5 sensor (Nova Model SDS011) provided a moderately well-fit linear response (r² = 0.8; Fig. 4c and d) that appeared to be more sensitive to changes in burn conditions. When evaluating Solver B's PM2.5 sensor performance on an individual burn basis (e.g., Appendix Figure B.56), we observed very good linear responses relative to the EPA reference concentrations but varying sensor sensitivities (slopes ranged from 1.42 to 23.77 for the nine burns presented) due to changing burn conditions. Interestingly, the largest slopes (>10) occurred when the fuels burned were >90% fine dead wood by mass.
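The comparison between a linear and a quadratic response can be reproduced with ordinary least-squares polynomial fits. The sketch below uses NumPy's `polyfit` on synthetic data generated from a hypothetical sensor that increasingly under-reports at high concentration (it is not the challenge dataset) and reports the coefficient of determination for each model. With real data, the reference concentration would be regressed on the raw sensor output in the same direction as the Table 5 calibrations.

```python
import numpy as np


def r_squared(y, y_hat):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return 1.0 - ss_res / ss_tot


def compare_fits(sensor, reference):
    """Fit reference = f(sensor) with degree-1 and degree-2 polynomials; return r^2 for each."""
    results = {}
    for degree in (1, 2):
        coeffs = np.polyfit(sensor, reference, degree)  # coefficients, highest power first
        results[degree] = r_squared(reference, np.polyval(coeffs, sensor))
    return results


# Synthetic, hypothetical data: reference rises faster than the raw sensor signal.
rng = np.random.default_rng(0)
sensor = np.linspace(10, 600, 200)
reference = 1.3 * sensor + 0.002 * sensor**2 + rng.normal(0, 20, sensor.size)
fits = compare_fits(sensor, reference)
print(f"linear r2 = {fits[1]:.3f}, quadratic r2 = {fits[2]:.3f}")
```

Because the linear model is nested in the quadratic one, the quadratic r² can never be lower; the useful question is whether the gain is large enough, and consistent enough across burns, to justify the extra term.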

Fig. 4.

Phase II Chamber Testing PM2.5 Concentration (μg m−3) Scatter Plots for Solver A (a–b) and Solver B (c–d) Raw Challenge Data.

The impact of CT, RH, BC, and NO2 on the Solvers' sensor pod measurements was investigated with a multivariate statistical analysis in the same manner as previously described for Phase I testing. The results of the MANOVA are summarized in Table 4. CT, RH, BC, and NO2 effects were found to significantly affect the Δsensor values from each of the Solvers, but these effects were not uniformly observed, even between collocated sensors from the same Solver. However, a few general trends were observed between the modeled chamber conditions and the reported sensor concentrations. Combustion conditions, as represented by BC and NO2 concentrations, significantly affected PM2.5 and O3 sensor performance for most Solvers. Fuel type, arrangement, and moisture content affect the combustion process and hence the size distribution, optical properties, and emission intensity of the aerosol produced. For example, flaming combustion of PPN produces higher number concentrations of smaller, more light-absorbing aerosols than smoldering combustion does (Carrico et al., 2016). Additionally, smoke aging within the FSL chamber reduces the aerosol number concentration and increases aerosol size (Carrico et al., 2016). Burn-to-burn variability in the amount and mix of fuel and in combustion conditions likely exerted a variable impact on this aerosol aging process during the 1-h sampling periods of our testing. The accuracy of laser- and LED-based photometric sensors is affected by changes in aerosol size distribution, aerosol density assumptions, and aerosol optical properties (Kelly et al., 2017), and this sensitivity was reflected across the range of burn conditions achieved during this evaluation. The cross-sensitivity of many electrochemical O3 sensors to NO2, as well as their sensitivity to environmental conditions such as CT and RH, was also reflected in the MANOVA results.
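For a linear model containing only continuous covariates and no interaction terms, the Type III sum of squares for a term equals the increase in residual sum of squares when that term alone is dropped from the full model. The sketch below computes those sums of squares and the corresponding F statistics for a Δsensor series regressed on covariates such as BC, NO2, CT, and RH, using NumPy least squares. It is a simplified univariate illustration on synthetic data, not the MANOVA procedure or software used in this study; a p-value comparable to the Pr > F entries in Table 4 could then be obtained from the F distribution (for example, `scipy.stats.f.sf(F, 1, df_resid)`).

```python
import numpy as np


def rss(X, y):
    """Residual sum of squares of an ordinary least-squares fit of y on X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)


def type3_f_tests(covariates, y):
    """Drop-one (Type III) sums of squares and F statistics for each covariate.

    covariates: dict mapping a name to a 1-D array; an intercept is added automatically.
    """
    names = list(covariates)
    n = len(y)
    full = np.column_stack([np.ones(n)] + [covariates[k] for k in names])
    rss_full = rss(full, y)
    df_resid = n - full.shape[1]
    mse = rss_full / df_resid
    table = {}
    for i, name in enumerate(names):
        reduced = np.delete(full, i + 1, axis=1)  # column 0 is the intercept
        ss = rss(reduced, y) - rss_full
        table[name] = {"SS": ss, "F": ss / mse}  # one numerator df per covariate
    return table, df_resid


# Synthetic, hypothetical data: delta_sensor depends on BC and RH but not on NO2 or CT.
rng = np.random.default_rng(1)
n = 300
cov = {
    "BC": rng.uniform(0, 50, n),
    "NO2": rng.uniform(0, 100, n),
    "CT": rng.uniform(23, 27, n),
    "RH": rng.uniform(16, 28, n),
}
delta_sensor = 2.0 * cov["BC"] - 1.5 * cov["RH"] + rng.normal(0, 5, n)
table, df_resid = type3_f_tests(cov, delta_sensor)
for name, row in table.items():
    print(f"{name}: SS = {row['SS']:.1f}, F(1, {df_resid}) = {row['F']:.1f}")
```

In this synthetic example BC and RH produce large F statistics while NO2 and CT do not, mirroring how a significant Pr > F entry flags a covariate that explains variance in Δsensor beyond the other terms.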

Table 4.

Phase II MANOVA Testing Type III Sum of Squares Results Evaluating the Impact of Black Carbon (BC), Nitrogen Dioxide (NO2), Chamber Temperature, and Relative Humidity on Sensor Measurements (Bold Values are Significant).

| Solver | Sensor | BC (PM2.5) | NO2 (O3) | Temperature (PM2.5) | Temperature (CO) | Temperature (CO2) | Temperature (O3) | Relative humidity (PM2.5) | Relative humidity (CO) | Relative humidity (CO2) | Relative humidity (O3) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A | #1 | <0.0001 | <0.0001 | <0.0001 | <0.0001 | 0.4538 | 0.0001 | 0.1709 | <0.0001 | 0.0073 | 0.1802 |
| A | #2 | <0.0001 | <0.0001 | 0.0034 | 0.0008 | <0.0001 | 0.0003 | 0.0974 | 0.0002 | <0.0001 | 0.6401 |
| B | #1 | <0.0001 | <0.0001 | 0.0538 | 0.0562 | 0.0839 | 0.2836 | 0.7278 | 0.0016 | 0.0006 | 0.2068 |
| B | #2 | <0.0001 | <0.0001 | 0.2975 | 0.2769 | 0.0234 | 0.0880 | 0.1662 | 0.0032 | <0.0001 | 0.1381 |
| C | #2 | – | – | – | – | – | – | – | – | – | – |
| D | #1 | 0.1990 | 0.2007 | 0.1343 | <0.0001 | 0.8908 | 0.0001 | 0.9914 | <0.0001 | <0.0001 | 0.0004 |
| D | #2 | 0.0548 | <0.0001 | 0.1495 | 0.0001 | 0.6114 | <0.0001 | 0.9058 | <0.0001 | 0.0002 | 0.0001 |
| E | #1 | <0.0001 | <0.0001 | 0.0008 | <0.0001 | <0.0001 | <0.0001 | 0.1948 | <0.0001 | <0.0001 | 0.0060 |

All entries are Pr > F values for the effect named in the column header on the sensor measurement in parentheses.

3.3. Challenge awards

The EPA/USFS testing team produced a report for each Solver-submitted sensor system summarizing (i) the Phase I and Phase II testing accuracy results presented and discussed herein, (ii) functionality testing of data telemetry, and (iii) a qualitative review of sensor pod form factor (e.g., size, weight), ease of deployment, battery life, durability, and safety features. These reports were provided to each Solver and to an independent interagency judging panel made up of representatives from CDC, EPA, NASA, NPS, NOAA, and USFS. The judging panel reviewed the testing reports and scored each submitted sensor system, with sensor performance counting for 65% of the total score and weighted most heavily toward PM2.5, followed by CO, O3, and CO2. The remaining 35% of the total score was based on a qualitative review of usability, durability, data telemetry, and cost. The winners of the Wildland Fire Sensor Challenge were announced, and awards were presented, at the Air Sensors International Conference in Oakland, California on September 12, 2018 (U.S. EPA, 2018). The First Place Award and $35,000 USD were presented to SenSevere/Sensit Technologies (Pittsburgh, PA, USA; Solver A), the Second Place Award and $25,000 USD were presented to Thingy LLC (Bellevue, WA, USA; Solver B), and an Honorable Mention Award was presented to Kunak Technologies (Pamplona, Spain; Solver C). The Wildland Fire Sensor Challenge testing team provided important feedback to all the Solvers on the quantitative performance of their sensor systems under a wide range of target pollutant concentrations and environmental conditions, as well as on data telemetry, data user interface, and form factor. The challenge winners have continued to develop their wildland fire sensor technologies, and their products are currently commercially available as the Sensit RAMP (Zimmerman et al., 2018; Malings et al., 2019; Sensit, 2020), the Thingy AQ (Thingy, 2020), and the Kunak Air A10 (Kunak, 2020; Reche et al., 2020).

3.4. Post challenge calibration of sensors

As detailed in the rules of the Wildland Fire Sensor Challenge (U.S. EPA, 2017), all Solver-submitted sensors were tested, and awards were distributed, based on the performance of the systems “as received” (i.e., no calibrations were conducted by EPA). After the Challenge was completed, Phase I and Phase II regression calibration equations were developed from the testing data and applied to all the Solver-submitted sensors to evaluate the potential for improving their performance. This was a unique opportunity to re-evaluate the systems and their underlying technologies after calibration against the EPA reference instruments, under both ideal clean chamber and controlled smoke chamber conditions over very large concentration ranges. All the Phase I and Phase II calibration equations are presented in Table 5, and time series and scatter plots of the EPA reference versus raw and regression-calibrated sensor measurement data are presented in Appendix Figures B.2–B.31 (Phase I testing) and Appendix Figures B.32–B.55 (Phase II testing).
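The linear entries in Table 5 have the form Ref = (m × Sensor) + b, obtained by regressing the reference concentration on the raw sensor output. A minimal ordinary least-squares version of that step is sketched below in plain Python. The paired values are hypothetical, and the study's actual fitting software and any data screening are not described in this section.

```python
from statistics import mean


def fit_linear_calibration(sensor, reference):
    """Ordinary least squares for Ref = m * Sensor + b; returns (m, b, r_squared)."""
    xbar, ybar = mean(sensor), mean(reference)
    sxx = sum((x - xbar) ** 2 for x in sensor)
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(sensor, reference))
    m = sxy / sxx
    b = ybar - m * xbar
    ss_res = sum((y - (m * x + b)) ** 2 for x, y in zip(sensor, reference))
    ss_tot = sum((y - ybar) ** 2 for y in reference)
    return m, b, 1.0 - ss_res / ss_tot


def apply_calibration(raw, m, b):
    """Convert raw sensor readings to reference-equivalent values."""
    return [m * x + b for x in raw]


# Hypothetical paired observations (raw sensor vs. EPA reference), not challenge data.
raw = [10.0, 50.0, 120.0, 300.0, 450.0]
ref = [35.0, 120.0, 260.0, 640.0, 950.0]
m, b, r2 = fit_linear_calibration(raw, ref)
print(f"Ref = ({m:.4f} x Sensor) + {b:.2f}; r2 = {r2:.3f}")
print(apply_calibration([200.0], m, b))
```

Regressing the reference on the sensor (rather than the reverse) yields an equation that can be applied directly to new raw readings, which is the direction used throughout Table 5.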

Table 5.

Summary of sensor calibration equations and reference PM2.5 concentration versus calibrated instrument linear regression equations.

| Instrument | Parameter | Phase I Clean Chamber Testing Calibration Equation | Phase II Smoke Chamber Testing Calibration Equation |
|---|---|---|---|
| Solver A #1 | PM2.5 | Ref = (3.3855 × Sensor #1^1.1549) − 19.46; r² = 0.957 | Ref = (1.3451 × Sensor #1) + (0.0187 × Sensor #1²) + 8.52; r² = 0.936 |
| Solver A #1 | CO | Ref = (1.3749 × Sensor #1) − 1.01; r² = 0.985 | Ref = (1.2897 × Sensor #1) − 0.19; r² = 0.991 |
| Solver A #1 | CO2 | Ref = (1.2228 × Sensor #1) − 99.19; r² = 0.986 | Ref = (0.2868 × Sensor #1) + (0.0010 × Sensor #1²) + 165.39; r² = 0.946 |
| Solver A #1 | O3 | Ref = (2.1683 × Sensor #1) + 6.05; r² = 0.924 | – |
| Solver A #2 | PM2.5 | Ref = (5.8076 × Sensor #2^1.0337) − 32.896; r² = 0.937 | Ref = (2.4324 × Sensor #2) + (0.0132 × Sensor #2²) − 13.78; r² = 0.940 |
| Solver A #2 | CO | Ref = (1.7711 × Sensor #2) − 1.01; r² = 0.972 | Ref = (1.4075 × Sensor #2) + 0.17; r² = 0.973 |
| Solver A #2 | CO2 | Ref = (1.2611 × Sensor #2) − 131.80; r² = 0.987 | Ref = (−3.6716 × Sensor #2) + (0.0040 × Sensor #2²) + 1333.91; r² = 0.875 |
| Solver A #2 | O3 | Ref = (1.3419 × Sensor #2) + 13.81; r² = 0.905 | – |
| Solver B #1 | PM2.5 | Ref = (4.9463 × Sensor #1) + 87.89; r² = 0.904 | Ref = (2.3247 × Sensor #1) + 135.55; r² = 0.797 |
| Solver B #1 | CO | Ref = (1.5467 × Sensor #1) − 3.75; r² = 0.963 | Ref = (1.2993 × Sensor #1) − 4.93; r² = 0.649 |
| Solver B #1 | CO2 | Ref = (1.8094 × Sensor #1) + 359.69; r² = 0.964 | Ref = (1.9515 × Sensor #1) + 409.12; r² = 0.861 |
| Solver B #1 | O3 | Ref = (0.2162 × Sensor #1) + 121.75; r² = 0.008 | – |
| Solver B #2 | PM2.5 | Ref = (2.1353 × Sensor #2) + 43.40; r² = 0.945 | Ref = (1.6158 × Sensor #2) + 66.05; r² = 0.811 |
| Solver B #2 | CO | Ref = (1.3269 × Sensor #2) − 2.85; r² = 0.980 | Ref = (1.2401 × Sensor #2) − 3.88; r² = 0.858 |
| Solver B #2 | CO2 | Ref = (1.2538 × Sensor #2) + 43.85; r² = 0.987 | Ref = (1.5560 × Sensor #2) + 21.01; r² = 0.960 |
| Solver B #2 | O3 | Ref = (0.2981 × Sensor #2) + 111.08; r² = 0.054 | – |
| Solver C #1 | PM2.5 | Ref = (1.2559 × Sensor #1) + 12.57; r² = 0.951 | – |
| Solver C #1 | CO | Ref = (1.6970 × Sensor #1) − 2.17; r² = 0.979 | – |
| Solver C #1 | O3 | Ref = (1.2837 × Sensor #1) + 16.50; r² = 0.984 | – |
| Solver C #2 | PM2.5 | Ref = (1.3090 × Sensor #2) + 18.79; r² = 0.944 | – |
| Solver C #2 | CO | Ref = (1.3634 × Sensor #2) − 2.18; r² = 0.978 | – |
| Solver C #2 | O3 | Ref = (1.5432 × Sensor #2) + 12.86; r² = 0.816 | – |
| Solver D #1 | PM2.5 | Ref = (1.7335 × Sensor #1) + 28.63; r² = 0.956 | Ref = (0.8367 × Sensor #1) + 128.83; r² = 0.447 |
| Solver D #1 | CO | Ref = (1.3342 × Sensor #1) + 77.40; r² = 0.731 | Ref = (0.9252 × Sensor #1) − 3.78; r² = 0.569 |
| Solver D #1 | CO2 | Ref = (9.8540 × Sensor #1) − 364.08; r² = 0.976 | Ref = (7.5688 × Sensor #1) − 173.40; r² = 0.565 |
| Solver D #1 | O3 | Ref = (542.6847 × Sensor #1) + 5233.21; r² = 0.331 | – |
| Solver D #2 | PM2.5 | Ref = (2.0810 × Sensor #2) + 12.06; r² = 0.976 | Ref = (1.0336 × Sensor #2) + 99.56; r² = 0.533 |
| Solver D #2 | CO | Ref = (0.9059 × Sensor #2) + 18.37; r² = 0.965 | Ref = (0.2568 × Sensor #2) − 0.52; r² = 0.710 |
| Solver D #2 | CO2 | Ref = (9.1478 × Sensor #2) − 323.77; r² = 0.965 | Ref = (3.7927 × Sensor #2) + 179.29; r² = 0.290 |
| Solver D #2 | O3 | Ref = (−140.9741 × Sensor #2) − 1169.42; r² = 0.640 | – |
| Solver E #1 | PM2.5 | – | Ref = (1.5545 × Sensor #1) + 74.41; r² = 0.794 |
| Solver E #1 | CO | – | Ref = (0.3546 × Sensor #1) + 2.21; r² = 0.280 |
| Solver E #1 | CO2 | – | Ref = (1.1427 × Sensor #1) − 0.26; r² = 0.985 |
| Solver E #1 | O3 | – | – |

Ref denotes the EPA reference concentration and Sensor #1 or Sensor #2 the raw sensor pod output; Sensor #1² denotes the squared sensor output, and Sensor #1^1.1549 a power-law term.
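As a worked example of applying the Table 5 equations, the sketch below converts a raw Solver A #1 PM2.5 reading with the Phase I power-law equation and with the Phase II quadratic equation. It assumes the exponents garbled in the extracted table denote Sensor #1^1.1549 and Sensor #1², and the raw reading of 100 is hypothetical.

```python
def solver_a1_pm25_phase1(raw):
    """Table 5, Solver A #1 PM2.5, Phase I clean chamber: power-law calibration."""
    return 3.3855 * raw ** 1.1549 - 19.46


def solver_a1_pm25_phase2(raw):
    """Table 5, Solver A #1 PM2.5, Phase II smoke chamber: quadratic calibration."""
    return 1.3451 * raw + 0.0187 * raw ** 2 + 8.52


raw_reading = 100.0  # hypothetical raw sensor PM2.5 output
print(f"Phase I:  {solver_a1_pm25_phase1(raw_reading):.1f}")
print(f"Phase II: {solver_a1_pm25_phase2(raw_reading):.1f}")
```

For this reading the two equations give roughly 671 and 330, about a factor of two apart, which illustrates how strongly the clean-chamber and smoke-chamber calibrations for the same sensor can differ.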

The updated regression-calibrated sensor accuracy results are summarized in Table 6 and demonstrate that the performance of some sensor systems was significantly improved relative to their raw challenge accuracies (Table 1, Table 3). As expected, sensors with well-fit calibration regression equations showed the largest performance improvements. For example, calibration improved the Solver A PM2.5 mean accuracies from ~22% to ~75% in Phase I and from ~32% to ~83% in Phase II. Sensors whose responses to Phase II burn conditions were poorly modeled by best-fit regression equations showed no significant improvement. For wildland fire applications, the best-performing sensors were not overly affected by burn conditions (BC concentrations and implied changes in aerosol size distribution) and produced well-fit calibration models over very large concentration ranges. The accuracy improvements achieved by regression calibration for most of the Solvers' sensors demonstrate the potential of these systems to provide reasonable accuracies over conditions that are typical during wildland fire events. However, as the differences among the calibration equations in Table 5 indicate, the response of a given sensor type can vary considerably, and more evaluations are needed before generic smoke correction equations can be broadly applied.

Table 6.

Post challenge regression calibrated chamber testing accuracy (%) results (mean ± standard deviation) for PM2.5, CO, CO2, and O3.

| Solver | Sensor | Phase I PM2.5 | Phase I CO | Phase I CO2 | Phase I O3 | Phase II PM2.5 | Phase II CO | Phase II CO2 | Phase II O3 |
|---|---|---|---|---|---|---|---|---|---|
| A | #1 | 79.4 ± 46.6 | 92.9 ± 9.8 | 95.0 ± 3.2 | 91.2 ± 8.5 | 83.1 ± 18.7 | 82.1 ± 83.5 | 96.3 ± 3.1 | – |
| A | #2 | 73.8 ± 61.1 | 91.7 ± 5.3 | 95.3 ± 3.7 | 91.0 ± 6.3 | 83.6 ± 18.4 | 88.0 ± 46.1 | 94.3 ± 4.2 | – |
| B | #1 | 1.1 ± 314.3 | 59.8 ± 59.9 | 92.7 ± 5.3 | 63.0 ± 35.4 | 18.8 ± 122.0 | −51.7 ± 643.5 | 95.4 ± 4.8 | – |
| B | #2 | 50.8 ± 150.3 | 69.3 ± 44.0 | 95.7 ± 3.1 | 64.1 ± 34.0 | 48.0 ± 63.7 | 3.7 ± 458.8 | 97.5 ± 2.3 | – |
| C | #1 | 79.0 ± 44.4 | 87.1 ± 9.8 | – | 96.2 ± 3.3 | – | – | – | – |
| C | #2 | 74.3 ± 47.3 | 86.8 ± 9.9 | – | 87.9 ± 10.3 | – | – | – | – |
| D | #1 | 89.9 ± 14.0 | −32.8 ± 150.9 | 92.0 ± 8.0 | 72.4 ± 28.9 | 12.2 ± 227.2 | 4.0 ± 181.1 | 88.1 ± 9.4 | – |
| D | #2 | 91.0 ± 12.0 | 52.0 ± 190.9 | 86.1 ± 11.3 | 83.5 ± 20.3 | 28.1 ± 190.1 | 38.2 ± 111.4 | 85.6 ± 9.6 | – |
| E | #1 | – | – | – | – | 84.0 ± 20.2 | 66.2 ± 42.0 | 97.7 ± 1.6 | – |

4. Conclusions

The Wildland Fire Sensor Challenge succeeded in bringing the sensor manufacturer community's attention to the unique air pollution measurement needs of federal, state, local, and tribal agencies managing wildland fire response and public health messaging during large events, and several Solvers succeeded in designing and building fit-for-purpose sensor systems. The small form factor, ruggedness, and easy deployment of the award-winning Solver systems reflected careful design and implementation. However, data telemetry and data presentation/visualization solutions were identified as a general shortcoming. The first-place Solver's sensor system provided reasonable mean accuracies (>80%) for PM2.5, CO, and CO2 over conditions that are typical during wildland fire events when smoke-specific EPA/USFS calibrations were applied. The O3 results were reasonable for most Solvers in Phase I testing but poor for all Solver systems in Phase II testing because of very low chamber concentrations (NO titration) and positive NO2 measurement artifacts. This study also highlighted the need to use FRMs for evaluating and calibrating sensor systems in biomass smoke. Regulatory FEMs providing continuous PM2.5 measurements are not well characterized under smoke conditions, and our results show that 1-h FRM correction factors ranged from 0.50 to 2.11 and were a function of burn condition (BC concentrations and implied changes in aerosol size distribution). Similarly, ultraviolet (UV) photometric FEM O3 instruments suffer from large positive measurement artifacts in the FSL smoke chamber and in near-field prescribed burning smoke plumes (Long et al., 2021).

Supplementary Material

HIGHLIGHTS.

Wildland Fire Sensor Challenge aimed to advance technology for smoke applications.

Fine particulate matter, carbon monoxide, carbon dioxide, and ozone were targeted.

Submitted systems were tested in research chambers over large dynamic ranges.

Sensor accuracy, precision, linearity, and operability are presented and discussed.

Sensor performance was dramatically improved by smoke-specific post-challenge calibration.

Acknowledgements

The EPA, through its Office of Research and Development (ORD), funded and conducted this research. The views expressed in this paper are those of the authors and do not necessarily reflect the views or policies of EPA. It has been subjected to Agency review and approved for publication. Mention of trade names or commercial products does not constitute an endorsement or recommendation for use. We thank the members of the EPA Challenge Technical Development team Gayle Hagler, Kirk Baker, Stacey Katz, and Gail Robarge; the interagency challenge team and judging panel; the EPA Chapel Hill, NC chamber support team Greg Byrd (TRC Companies, Inc.), David Diaz-Sanchez (EPA), and Al Little (EPA); and ORD science staff Richard Walker and Andrew Whitehill.

Footnotes

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Appendix A. Supplementary data

Supplementary data to this article can be found online at https://doi.org/10.1016/j.atmosenv.2020.118165.

Data availability

Datasets related to this article can be found at https://catalog.data.gov/dataset/epa-sciencehub.

References

- Akagi SK, Yokelson RJ, Wiedinmyer C, Alvarado MJ, Reid JS, Karl T, Crounse JD, Wennberg PO, 2011. Emission factors for open and domestic biomass burning for use in atmospheric models. Atmos. Chem. Phys 11, 4039–4072.

- Akagi SK, Yokelson RJ, Burling IR, Meinardi S, Simpson I, Blake DR, McMeeking GR, Sullivan A, Lee T, Kreidenweis S, Urbanski S, Reardon J, Griffith DWT, Johnson TJ, Weise DR, 2013. Measurements of reactive trace gases and variable O3 formation rates in some South Carolina biomass burning plumes. Atmos. Chem. Phys 13, 1141–1165.

- Artursson T, Eklöv T, Lundström I, Mårtensson P, Sjöström M, Holmberg M, 2000. Drift correction for gas sensors using multivariate methods. J. Chemometr 14, 711–723.

- Baron R, Saffell J, 2017. Amperometric gas sensors as a low cost emerging technology platform for air quality monitoring applications: a review. ACS Sens. 2, 1553–1566.

- Bart M, Williams DE, Ainslie B, McKendry I, Salmond J, Grange SK, Alavi-Shoshtari M, Steyn D, Henshaw GS, 2014. High density ozone monitoring using gas sensitive semi-conductor sensors in the lower fraser valley, British Columbia. Environ. Sci. Technol 48, 3970–3977.

- Bell ML, McDermott A, Zeger SL, Samet JM, Dominici F, 2004. Ozone and short-term mortality in 95 US urban communities, 1987-2000. JAMA 292, 2372–2378.

- Brey SJ, Fischer EV, 2016. Smoke in the city: how often and where does smoke impact summertime ozone in the United States? Environ. Sci. Technol 50, 1288–1294.

- Carrico CM, Prenni AJ, Kreidenweis SM, Levin EJT, McCluskey CS, DeMott PJ, McMeeking GR, Nakao S, Stockwell C, Yokelson RJ, 2016. Rapidly evolving ultrafine and fine mode biomass smoke physical properties: comparing laboratory and field results. J. Geophys. Res 121 (10), 5750–5768.

- Cascio WE, 2018. Wildland fire smoke and human health. Sci. Total Environ 624, 586–595.

- Castell N, Dauge FR, Schneider P, Vogt M, Lerner U, Fishbain B, Broday D, Bartonova A, 2017. Can commercial low-cost sensor platforms contribute to air quality monitoring and exposure estimates? Environ. Int 99, 293–302.

- Christian TJ, Kleiss B, Yokelson RJ, Holzinger R, Crutzen PJ, Hao WM, Shirai T, Blake DR, 2004. Comprehensive laboratory measurements of biomass-burning emissions: 2. First intercomparison of open-path FTIR, PTR-MS, and GC-MS/FID/ECD. J. Geophys. Res 109, D02311.

- Collier-Oxandale A, Feenstra B, Papapostolou V, Zhang H, Kuang M, Boghossian BD, Polidori A, 2020. Field and laboratory performance evaluations of 28 gas-phase air quality sensors by the AQ-SPEC program. Atmos. Environ 220, 117092.

- Cross ES, Williams LR, Lewis DK, Magoon GR, Onasch TB, Kaminsky ML, Worsnop DR, Jayne JT, 2017. Use of electrochemical sensors for measurement of air pollution: correcting interference response and validating measurements. Atmos. Meas. Tech 10, 3575–3588.

- Feinberg SN, Williams R, Hagler GSW, Rickard J, Brown R, Garver D, Harshfield G, Stauffer P, Mattson E, Judge R, Garvey S, 2018. Long-term evaluation of air sensor technology under ambient conditions in Denver, Colorado. Atmos. Meas. Tech 11, 4605–4615.

- Feinberg SN, Williams R, Hagler G, Low J, Smith L, Brown R, Garver D, Davis M, Morton M, Schaefer J, Campbell J, 2019. Examining spatiotemporal variability of urban particulate matter and application of high-time resolution data from a network of low-cost air pollution sensors. Atmos. Environ 213, 579–584.

- Ghio AJ, Soukup JM, Case M, Dailey LA, Richards J, Berntsen J, Devlin RB, Stone S, Rappold A, 2012. Exposure to wood smoke particles produces inflammation in healthy volunteers. Occup. Environ. Med 69, 170–175.

- Gupta P, Doraiswamy P, Levy R, Pikelnaya O, Maibach J, Feenstra B, Polidori A, Kiros F, Mills KC, 2018. Impact of California fires on local and regional air quality: the role of a low-cost sensor network and satellite observations. GeoHealth 2, 172–181.

- Hall ES, Beaver MR, Long RW, Vanderpool RW, 2012. EPA's reference and equivalent, May. EM, pp. 8–12.

- Hossain M, Saffell J, Baron R, 2016. Differentiating NO2 and O3 at low cost air quality amperometric gas sensors. ACS Sens. 1, 1291–1294.

- Jaffe DA, Cooper OR, Fiore AM, Henderson BH, Tonnesen GS, Russell AG, Henze DK, Langford AO, Lin M, Moore T, 2018. Scientific assessment of background ozone over the US: implications for air quality management. Elem. Sci. Anth 6, 56.

- Jaffe DA, Wigder NL, 2012. Ozone production from wildfires: a critical review. Atmos. Environ 51, 1–10.

- Jiao W, Hagler G, Williams R, Sharpe R, Brown R, Garver D, Judge R, Caudill M, Rickard J, Davis M, Weinstock L, Zimmer-Dauphinee S, Buckley K, 2016. Community Air Sensor Network (CAIRSENSE) project: evaluation of low-cost sensor performance in a suburban environment in the southeastern United States. Atmos. Meas. Tech 9, 5281–5292.

- Johnson KK, Bergin MH, Russell AG, Hagler GSW, 2020. Field test of several low-cost particulate matter sensors in high and low concentration urban environments. Aerosol Air Qual. Res 18, 565–578.

- Johnston FH, Henderson SB, Chen Y, Randerson JT, Marlier M, Defries RS, Kinney P, Bowman DM, Brauer M, 2012. Estimated global mortality attributable to smoke from landscape fires. Environ. Health Perspect 120, 695–701.

- Karagulian F, Barbiere M, Kotsev A, Spinelle L, Gerboles M, Lagler F, Redon N, Crunaire S, Borowiak A, 2019. Review of the performance of low-cost sensors for air quality monitoring. Atmosphere 10, 506.

- Kelly KE, Whitaker J, Petty A, Widmer C, Dybwad A, Sleeth D, Martin R, Butterfield A, 2017. Ambient and laboratory evaluation of a low-cost particulate matter sensor. Environ. Pollut 221, 491–500.

- Kitzberger T, Brown PM, Heyerdahl EK, Swetnam TW, Veblen TT, 2007. Contingent Pacific-Atlantic Ocean influence on multi-century wildfire synchrony over western North America. P. Natl. Acad. Sci. USA 104, 543–548.

- Kunak Technologies, S.L., 2020. https://www.kunak.es/en/products/ambient-monitoring/air-quality-monitor/. (Accessed 27 August 2020).

- Landis MS, Edgerton ES, White EM, Wentworth GR, Sullivan AP, Dillner AM, 2018. The impact of the 2016 fort McMurray horse river wildfire on ambient air pollution levels in the athabasca oil sands region, alberta, Canada. Sci. Total Environ 618, 1665–1676.

- Lin C, Gillespie J, Schuder MD, Duberstein W, Beverland IJ, Heal MR, 2015. Evaluation and calibration of Aeroqual series 500 portable gas sensors for accurate measurement of ambient ozone and nitrogen dioxide. Atmos. Environ 100, 111–116.

- Lindaas J, Farmer DK, Pollack IB, Abeleira A, Flocke F, Roscioli R, Herndon S, Fischer EV, 2017. Changes in ozone and precursors during two aged wildfire smoke events in the Colorado Front Range in summer 2015. Atmos. Chem. Phys 17, 10691–10707.

- Littell JS, McKenzie D, Peterson DL, Westerling AL, 2009. Climate and wildfire area burned in western U. S. ecoprovinces, 1916-2003. Ecol. Appl 19, 1003–1021.

- Liu Y, Kahn RA, Chaloulakou A, Koutrakis P, 2009. Analysis of the impact of the forest fires in August 2007 on air quality of Athens using multi-sensor aerosol remote sensing data, meteorology and surface observations. Atmos. Environ 43, 3310–3318.

- Liu ZF, Liu Y, Murphy JP, Maghirang R, 2018. Contributions of Kansas rangeland burning to ambient O3: analysis of data from 2001 to 2016. Sci. Total Environ 618, 1024–1031.

- Long R, Whitehill A, Habel A, Urbanski S, Halliday H, Colon M, Kaushik S, Landis MS, 2021. Comparison of ozone measurement methods in biomass burning smoke: an evaluation under field and laboratory conditions. Atmos. Meas. Tech 14, 1783–1800. doi:10.5194/amt-14-1783-2021.

- Malings C, Tanzer R, Hauryliuk A, Kumar SPN, Zimmerman N, Kara LB, Presto AA, Subramanian R, 2019. Development of a general calibration model and long-term performance evaluation of low-cost sensors for air pollutant gas monitoring. Atmos. Meas. Tech 12, 903–920.

- Mead MI, Popoola OAM, Stewart GB, Landshoff P, Calleja M, Hayes M, Baldovi JJ, McLeod MW, Hodgson TF, Dicks J, Lewis A, Cohen J, Baron R, Saffell JR, Jones RL, 2013. The use of electrochemical sensors for monitoring urban air quality in low-cost, high-density networks. Atmos. Environ 70, 186–203.

- Morawska L, Thai PK, Liu XT, Asumadu-Sakyi A, Ayoko G, Bartonova A, Bedini A, Chai FH, Christensen B, Dunbabin M, Gao J, Hagler GSW, Jayaratne R, Kumar P, Lau AKH, Louie PKK, Mazaheri M, Ning Z, Motta N, Mullins B, Rahman MM, Ristovski Z, Shafiei M, Tjondronegoro D, Westerdahl D, Williams R, 2018. Applications of low-cost sensing technologies for air quality monitoring and exposure assessment: how far have they gone? Environ. Int 116, 286–299.

- Rappold AG, Stone SL, Cascio WE, Neas LM, Kilaru VJ, Sue Carraway M, Szykman JJ, Ising A, Cleve WE, Meredith JT, 2011. Peat bog wildfire smoke exposure in rural North Carolina is associated with cardiopulmonary emergency department visits assessed through syndromic surveillance. Environ. Health Perspect 119, 1415–1420.

- Reche C, Viana M, Van Drooge BL, Fernández FJ, Escribano M, Castaño-Vinyals G, Nieuwenhuijsen M, Adami PE, Bermon S, 2020. Athletes' exposure to air pollution during World Athletics Relays: a pilot study. Sci. Total Environ 717, 137161.

- Reid CE, Brauer M, Johnston FH, Jerrett M, Balmes JR, Elliott CT, 2016. Critical review of health impacts of wildfire smoke exposure. Environ. Health Perspect 124, 1334–1343.

- Reisen F, Duran SM, Flannigan M, Elliott C, Rideout K, 2015. Wildfire smoke and public health risk. Int. J. Wildland Fire 24, 1029–1044.

- Sayahi T, Butterfield A, Kelly KE, 2019. Long-term field evaluation of the Plantower PMS low-cost particulate matter sensors. Environ. Pollut 245, 932–940.

- Schweizer D, Cisneros R, Shaw G, 2016. A comparative analysis of temporary and permanent beta attenuation monitors: the importance of understanding data and equipment limitations when creating PM2.5 air quality health advisories. Atmospheric Pollut. Res 7, 865–875.

- Sensit Technologies, 2020. https://www.gasleaksensors.com/products/sensit-ramp-air-quality-monitor.html. (Accessed 27 August 2020).

- Spinelle L, Gerboles M, Aleixandre M, 2015. Performance evaluation of amperometric sensors for the monitoring of O3 and NO2 in ambient air at ppb level. Procedia Eng. 120, 480–483.

- Thingy, L.L.C., 2020. https://thingy.us/wlf/. (Accessed 27 August 2020).

- Thoma ED, Brantley HL, Oliver KD, Whitaker DA, Mukerjee S, Mitchell B, Wu T, Squier B, Escobar E, Cousett TA, Gross-Davis CA, Schmidt H, Sosna D, Weiss H, 2016. South Philadelphia passive sampler and sensor study. J. Air Waste Manag. Assoc 66, 959–970.

- United States Department of Agriculture, 2014. United States forest Resource facts and historical trends. FS-1035: Washington, DC. https://www.fia.fs.fed.us/library/brochures/docs/2012/ForestFacts_1952-2012_English.pdf.

- United States Department of Agriculture, 2016. Forest Health Monitoring: National Status, Trends, and Analysis 2015. Forest Service Research & Development, Southern Research Station, SRS-213, Asheville, NC.

- United States Environmental Protection Agency, 2017. Wildland fire sensor challenge. https://innocentive.com/ar/challenge/9933927. (Accessed 27 August 2020).

- United States Environmental Protection Agency, 2018. Winners of the Wildland Fire Sensor Challenge Develop Air Monitoring System Prototypes. https://www.epa.gov/air-research/winners-wildland-fire-sensors-challenge-develop-air-monitoring-system-prototypes#winners. (Accessed 27 August 2020).

- United States Interagency Wildland Fire Air Quality Response Program, 2021. https://sites.google.com/firenet.gov/wfaqrp-external/home. (Accessed 27 August 2020).

- Urbanski SP, Hao WM, Baker S, 2009. Chemical composition of wildland fire smoke. In: Bytnerowicz A, Arbaugh M, Riebau A, Andersen C (Eds.), Developments in Environmental Science, vol. 8. Elsevier B.V., pp. 79–107 (ISSN: 1474-8177).

- Urbanski SP, 2013. Combustion efficiency and emission factors for wildfire-season fires in mixed conifer forests of the northern Rocky Mountains, US. Atmos. Chem. Phys 13, 7241–7262.

- Vedal S, Dutton SJ, 2006. Wildfire air pollution and daily mortality in a large urban area. Environ. Res 102, 29–35.

- Wei P, Ning Z, Ye S, Sun L, Yang FH, Wong KC, Westerdahl D, Louie PKK, 2018. Impact analysis of temperature and humidity conditions on electrochemical sensor response in ambient air quality monitoring. Sensors 18, 59.

- Weitekamp CA, Stevens T, Stewart MJ, Bhave P, Gilmour MI, 2020. Health effects from freshly emitted versus oxidatively or photochemically aged air pollutants. Sci. Total Environ 704, 135772.

- Westerling AL, 2016. Increasing western U.S. forest wildfire activity: sensitivity to changes in the timing of spring. Philos. Trans. R. Soc. B 371.

- Williams RK, Kaufman A, Hanley T, Rice J, Garvey S, 2014. Evaluation of Field Deployed Low Cost PM Sensors. U.S. EPA, Washington, DC. EPA/600/R-14/464.

- Zamora ML, Xiong FLZ, Gentner D, Kerkez B, Kohrman-Glaser J, Koehler K, 2019. Field and laboratory evaluations of the low-cost Plantower particulate matter sensor. Environ. Sci. Technol 53, 838–849.

- Zheng T, Bergin MH, Johnson KK, Tripathi SN, Shirodkar S, Landis MS, Sutaria R, Carlson DE, 2018. Field evaluation of low-cost particulate matter sensors in high- and low-concentration environments. Atmos. Meas. Tech 11, 4823–4846.

- Zimmerman N, Presto AA, Kumar SPN, Gu J, Hauryliuk A, Robinson ES, Robinson AL, Subramanian R, 2018. A machine learning calibration model using random forests to improve sensor performance for lower-cost air quality monitoring. Atmos. Meas. Tech 11, 291–313.

- Zou Y, Young M, Chen JW, Liu JQ, May A, Clark JD, 2020. Examining the functional range of commercially available low-cost airborne particle sensors and consequences for monitoring of indoor air quality in residences. Indoor Air 30, 213–234.

Data Availability Statement

Datasets related to this article can be found at https://catalog.data.gov/dataset/epa-sciencehub.