Abstract

Purpose

The goal of this study was to establish the perceptual underpinnings of the terms that are commonly used by patients when describing the quality of their tinnitus.

Method

Using a free-classification method, 15 subjects with normal hearing placed 60 different tinnitus-like sounds into similarity clusters on a grid. Multidimensional scaling, hierarchical clustering, and acoustic analyses were used to determine the acoustic underpinnings of the perceptual dimensions and perceptual similarity.

Results

Multidimensional scaling revealed three different perceptual dimensions (pitch, modulation depth + spectral elements, and envelope rate). Hierarchical clustering revealed five explicit similarity clusters: tonal, steady noise, pulsatile, low-frequency fluctuating noise, and high-frequency fluctuating.

Conclusions

Results are consistent with tinnitus perceptions falling into a small set of categories that can be characterized by their acoustics. As a result, there is the potential to develop different tools to assess tinnitus using a variety of different sounds.

Tinnitus is one of the few auditory disorders for which there is no adopted standard to measure its perception, despite consensus in the research community that standardized procedures should be used worldwide for tinnitus assessment (cf., Landgrebe et al., 2012; Langguth et al., 2007; Tyler, 1985). Efforts to measure tinnitus pitch and loudness are ongoing (e.g., Henry & Manning, 2019; McMillan et al., 2014; Noreña et al., 2002; Roberts et al., 2008; Tyler & Stouffer, 1989), but less effort has been directed toward determining the quality of the tinnitus, or what the tinnitus “sounds like.” Current assessment involves a case history in which a patient describes tinnitus using words; however, these words are nonspecific and do not evoke robust acoustic descriptions. We suggest that measuring tinnitus quality would greatly improve assessment. Here, we present initial steps toward developing a procedure to measure tinnitus quality by establishing the perceptual dimensions that underlie the commonly used descriptors of tinnitus. The primary goal is to determine whether acoustic descriptions can be used to characterize the different perceptions of tinnitus.

Commonly, the case history provides patients with a closed set of terms to be used to describe their tinnitus. For example, Meikle et al. (2004) analyzed data from 1,625 patients who were given 16 different terms with which to describe their tinnitus. Their analysis showed that ringing, hissing, clear tone, high-tension wire, and buzzing were most commonly selected. Similarly, Stouffer and Tyler (1990) found that patients commonly used the terms ringing, buzzing, crickets, hissing, and whistling. While these terms imply perceptual similarities to tones and noises, they also suggest attributes other than just pitch or loudness.

There are numerous reasons to suggest that using words to describe tinnitus is inadequate. Goodhill (1952) suggested that a patient's familiarity with musical terms impacted the ability to describe their tinnitus. Furthermore, words are open to interpretation and rely on high-level semantic features that vary across people (Gustavino, 2007). While it is common for humans to reference perceptions to objects or events, an individual's experience can influence the terms used to describe tinnitus (Searchfield, 2014; Tyler et al., 2008). As a result, patients may use different words to describe the same tinnitus perception, or the same word to describe different perceptions. In other cases, patients may not have sufficient access to the language needed to describe their tinnitus perception (e.g., patients with aphasia or nonnative speakers). Tyler et al. (2008) emphasized the importance of an accurate description of tinnitus quality, as different tinnitus subgroups may require different treatments. Generally, both clinical and research methods include a quick measurement of tinnitus quality in which a participant selects among a pure tone and one or two narrow bands of noise to match their tinnitus. While this procedure allows an easily interpretable measurement, it neglects the complex perceptions often reported by patients.

One way to improve the characterization of tinnitus is to use sounds rather than words, as was done by Goodhill (1952), who allowed patients to select, from 27 different sounds, the sound that best matched their tinnitus. Using Goodhill's work as a framework, we suggest that an alternate method for characterizing tinnitus using sounds should tap into the relevant perceptual dimensions of tinnitus, but not be restricted to tones and noises. As a first step toward this goal, this research note describes our effort to determine the underlying perceptual dimensions of the possible characteristics of tinnitus and to determine whether sounds based on tinnitus descriptors fall into groups based on their similarity. Results from these two approaches can ultimately guide the development of a set of sounds that could be used in a tinnitus assessment. For example, patients could listen to various sets of sounds (with similar perceptual characteristics) and select the set that best characterizes their tinnitus. The acoustics of that set could then inform audiologists of potential etiology or treatment of tinnitus.

This study extends the work of Lentz and He (2013), who conducted a limited assessment of the perceptual dimensions (using listeners with normal hearing) of sounds based on tinnitus descriptors using multidimensional scaling (MDS). Participants listened to sounds derived from terms commonly used to describe tinnitus and grouped the sound tokens into clusters based on their similarity using a free classification approach developed by Imai and Garner (1965). A similarity matrix was generated by treating sounds in the same cluster as similar to each other, whereas sounds in separate clusters were considered dissimilar. In contrast to Lentz and He, here we applied MDS coupled with acoustic analyses to determine the different perceptual dimensions of these sounds. We also used hierarchical clustering analysis with acoustic analyses to establish which acoustic parameters led sounds to be perceived as similar to each other. A similar approach has been used to determine the perceptual dimensions underlying various aspects of speech perception by both native and nonnative speakers of English (see Atagi & Bent, 2016; Bradlow et al., 2010; Clopper & Pisoni, 2007), and for characterizing dysarthric speech (Lansford et al., 2014). Combined, these approaches will allow us to achieve the primary goals of this study, which are to determine the perceptual dimensions that underlie the terminology used to describe tinnitus and to determine the acoustic characteristics of sounds with similar perceptions.

Method

Stimuli

Sixty unique sounds were chosen as being representative of the major terms used to describe tinnitus (such as buzzing, ringing, whistling, tonal, crickets, and roaring; see Meikle et al., 2004, for additional terms). These sounds were chosen to reflect the descriptive terms without making major assumptions about the acoustics. For example, buzzing sounds were bees, transformers, and a chain saw, whereas whistles were sport whistles and a tea kettle. Thirty-seven environmental sound tokens in .wav format were obtained from The General Series 6000 Sound Library (Hollywood Edge and Sound FX), and .mp3 files of four different cricket species were selected from the Singing Insects of North America database (Walker & Cooper, 2019). The duration of these stimuli varied from 1.24 to 9.11 s, and the average duration was 4.23 s. Because tones and noise bands are commonly used in clinical assessment, we also used 19 synthesized sounds (5 s in duration). These sounds were pure tones of different frequencies, complex tones with various frequencies and amplitude spectra, and noise bands with different bandwidths and center frequencies. All stimuli were normalized to the same root-mean-square (RMS) pressure and were presented to subjects using 150-ms raised cosine on/off ramps.
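As an illustration of the last step, RMS normalization followed by raised-cosine gating could be sketched as below. This is a minimal sketch rather than the authors' processing code: the function names, the target RMS of 0.05 (in arbitrary digital units), and the 1-s, 1-kHz test tone are assumptions made for the example.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def normalize_rms(samples, target_rms=0.05):
    """Scale a signal so that its RMS equals target_rms (hypothetical value)."""
    current = rms(samples)
    if current == 0.0:
        return list(samples)  # silence cannot be rescaled
    scale = target_rms / current
    return [x * scale for x in samples]

def apply_raised_cosine_ramps(samples, sample_rate, ramp_ms=150.0):
    """Fade a signal in and out with raised-cosine on/off ramps."""
    n_ramp = min(int(round(sample_rate * ramp_ms / 1000.0)), len(samples) // 2)
    out = list(samples)
    for i in range(n_ramp):
        # Gain rises smoothly from 0 toward 1 across the ramp.
        gain = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        out[i] *= gain        # onset ramp
        out[-1 - i] *= gain   # mirrored offset ramp
    return out

# Assumed example stimulus: a 1-s, 1-kHz pure tone sampled at 44.1 kHz.
sample_rate = 44100
tone = [math.sin(2 * math.pi * 1000 * n / sample_rate) for n in range(sample_rate)]
normalized = normalize_rms(tone, target_rms=0.05)
stimulus = apply_raised_cosine_ramps(normalized, sample_rate, ramp_ms=150.0)
```

In this sketch the ramps are applied after normalization, so the gated stimulus has a slightly lower RMS than the target; whether the original normalization was computed before or after gating is not stated in the text.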

During the experiment, sounds were presented to subjects at an overall level of about 60 dB SPL using a 24-bit Card Deluxe sound card at a sampling rate of 44.1 kHz. The signal was routed through a Tucker-Davis Technologies headphone buffer (HB5) and a programmable attenuator (PA4) to the right earphone of a Sennheiser HD250 II Linear headset.

Subjects and Procedure

Fifteen subjects, 12 female, aged from 22 to 26 years, participated in this study. Three subjects were African American, and one was Asian and a nonnative speaker of English. All others were Caucasian. All subjects had audiometric hearing thresholds of 15 dB HL or better at standard audiometric frequencies (American National Standards Institute, 2010). Subjects sat in front of a computer monitor with a set of 60 numbered icons, each representing one unique sound token, on the left of the screen. On the right of the screen, a 20 × 20 empty grid was visible. Subjects listened to the sounds by clicking each box and placed similar sounds into clusters by dropping each box onto the grid. Subjects could create as many clusters as desired and place as many or as few sounds in each cluster as they wished. Subjects were allowed to listen to the audio clips repeatedly and were under no time constraints. Two subjects who initially generated only two large clusters completed the experiment again after being given an example grid, which illustrated unnumbered boxes in groups of various sizes. This was done because we assumed, a priori, that these sounds had multiple perceptual dimensions, rather than just two.

Analysis

A 60 × 60 similarity matrix was derived for each subject by assigning a score of 1 to sound pairs in the same cluster and a score of 0 to sound pairs in different clusters. Then, the matrices from the 15 subjects were summed to generate a global similarity matrix. MDS was applied to this matrix using an individual differences scaling method and a standard Euclidean model within SPSS. Hierarchical clustering was also conducted to quantify which sounds have similar perceptual characteristics. This procedure used the average between-groups linkage with a squared Euclidean distance metric. As described in subsequent sections, acoustic analyses were used to interpret the MDS dimensions and the clusters.
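The construction of the per-subject and global similarity matrices can be sketched as follows. This is an illustrative sketch under stated assumptions, not the analysis code used in the study: the function names and the five-sound, three-subject toy data are hypothetical, and the diagonal is set to 1 here (a sound trivially shares a cluster with itself), a detail the text does not specify.

```python
def similarity_matrix(clusters, n_sounds):
    """Build one subject's 0/1 similarity matrix.

    `clusters` is a list of clusters, each a list of sound indices.
    Pairs in the same cluster score 1; all other pairs score 0.
    """
    matrix = [[0] * n_sounds for _ in range(n_sounds)]
    for cluster in clusters:
        for i in cluster:
            for j in cluster:
                matrix[i][j] = 1
    return matrix

def sum_matrices(matrices):
    """Element-wise sum of equally sized square matrices."""
    n = len(matrices[0])
    total = [[0] * n for _ in range(n)]
    for m in matrices:
        for i in range(n):
            for j in range(n):
                total[i][j] += m[i][j]
    return total

# Hypothetical toy data: 5 sounds sorted by 3 subjects.
subject_clusters = [
    [[0, 1], [2, 3, 4]],
    [[0, 1, 2], [3, 4]],
    [[0], [1], [2, 3, 4]],
]
per_subject = [similarity_matrix(c, 5) for c in subject_clusters]
global_similarity = sum_matrices(per_subject)

for row in global_similarity:
    print(row)
```

Each off-diagonal entry of the global matrix counts how many subjects placed that pair of sounds in the same cluster, so with 15 subjects the values would range from 0 to 15.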

To determine which acoustic parameters best predicted the perceptual dimensions revealed by MDS and the hierarchical clustering, a variety of acoustic analyses were conducted on all 60 stimuli. The 48 resulting measures were based on those used by Gygi et al. (2007) and included spectral measures (RMS pressure, loudness, pitch measures, and spectral moments), temporal measures (duration, crest factor, numbers and size of peaks and bursts, roughness, autocorrelation, and modulation depth in different frequency bands and at different rates), and spectrotemporal measures that included spectral movement and cross-band envelope correlation. Loudness and pitch were both estimated using models (loudness: Genesis Acoustics, 2010; pitch: Das et al., 2017). To determine the nature of the perceptual dimensions, we conducted a stepwise regression on each of the three dimensions using the acoustic variables as predictors.
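To make two of the simpler measures concrete, the sketch below computes a crest factor and a spectral centroid using common textbook definitions. These definitions are assumptions for illustration; the exact formulations used in the study follow Gygi et al. (2007) and are not given in the text. The function names, the 8-kHz sampling rate, and the short test signals are likewise hypothetical.

```python
import cmath
import math

def crest_factor(samples):
    """Peak absolute amplitude divided by RMS amplitude."""
    peak = max(abs(x) for x in samples)
    root_mean_square = math.sqrt(sum(x * x for x in samples) / len(samples))
    return peak / root_mean_square

def spectral_centroid(samples, sample_rate):
    """Magnitude-weighted mean frequency (first spectral moment), in Hz."""
    n = len(samples)
    weighted_sum = 0.0
    magnitude_sum = 0.0
    for k in range(n // 2 + 1):
        # Naive DFT bin; adequate for a short frame, an FFT would be
        # used for full-length stimuli.
        bin_value = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(samples)
        )
        magnitude = abs(bin_value)
        weighted_sum += (k * sample_rate / n) * magnitude
        magnitude_sum += magnitude
    return weighted_sum / magnitude_sum

# Assumed test signals: a 1-kHz tone and a sparse click train (25 ms each).
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(200)]
clicks = [1.0 if t % 50 == 0 else 0.0 for t in range(200)]

print(crest_factor(tone), crest_factor(clicks))
print(spectral_centroid(tone, sample_rate))
```

Under these definitions, the click train has a much larger crest factor than the steady tone, which is the kind of contrast that separates pulsatile sounds from steady ones.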

Results

MDS

The three-dimensional MDS solution was selected due to a break in the function relating stress to dimension number occurring between two and three dimensions (stress = .07; RSQ = .98). Across all subjects, Dimension 1 (D1) was weighted slightly higher (.39) than D2 (.30) or D3 (.29), and weirdness (the amount of deviation from the mean) for each subject ranged between .02 and 1.0. Four subjects had weirdness above .3; three heavily weighted D1 and one weighted only D2. Admittedly, the sample size is not large enough to draw firm conclusions about these differences, but it is notable that two of those subjects were African American women and the other two were men. This result provides some support for our contention that an individual's background might influence how they categorize and classify sounds.

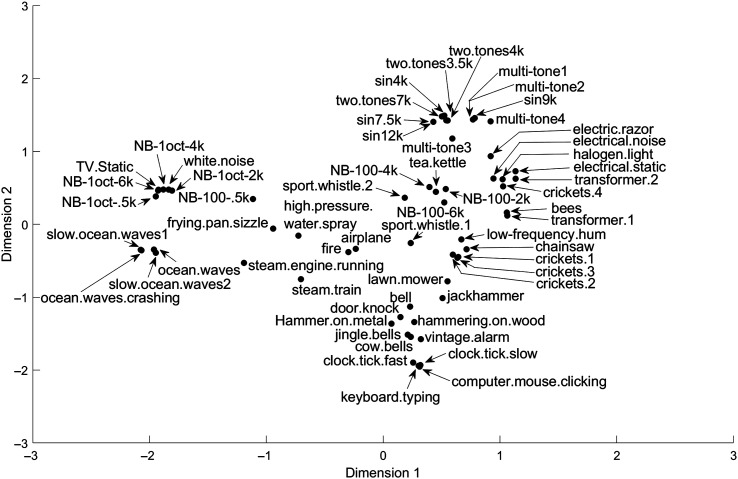

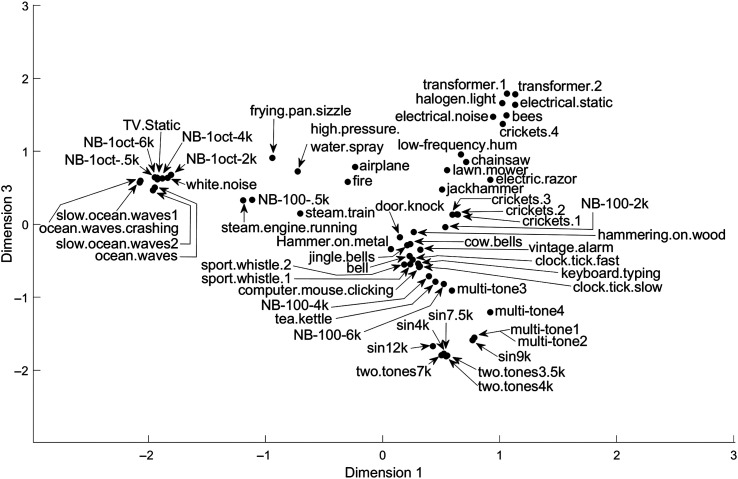

Figure 1 illustrates the MDS solution for D2 versus D1, whereas Figure 2 illustrates D3 versus D1. In Figure 1, we observe a cluster of ocean sounds and noises at the low end of D1 and tones, insects, and electrical sounds at the high end of D1. This visual inspection suggests that D1 may be a pitch strength dimension. For D2, clicking/pulsating sounds appear at the negative side of the dimension, whereas sounds with flatter envelopes are at the positive side (pure tones and electrical noises). Consequently, D2 may be related to the amount of perceived fluctuation within a sound. Figure 2 shows that the negative end of D3 contains tones and narrowband noises, whereas the positive end contains electrical noises and broadband noises. Perhaps this dimension has something to do with the rate of periodicity in sounds (e.g., electrical noises have slow fluctuations, whereas tones have fast fluctuations).

Figure 1.

The multidimensional scaling solution is shown, with Dimension 1 plotted against Dimension 2. Individual sounds are shown by descriptive names. Narrow band noises are specified by their bandwidth (either 100 Hz or 1 octave) and center frequency in hertz. Pure tones are specified as sin and the frequency in hertz.

Figure 2.

The multidimensional scaling solution is shown, with Dimension 1 plotted against Dimension 3. The same descriptive names as those plotted in Figure 1 are used.

Acoustic Analysis and Regression

The stepwise regression generally confirms our initial visual observations of the various dimensions. However, the regression provides a richer and more complex picture of the nature of these dimensions. For D1, the correlation coefficient (r) was .61 with three acoustic variables: the maximum envelope correlation across frequency, duration, and mean envelope correlation across frequency. Generally speaking, the dependence on envelope correlation across frequency suggests that D1 is mostly a pitch strength dimension, separating sounds with high pitch strength (tonal) from those with low pitch strength (noisy). We note, however, that duration also predicts this dimension, which may be due to the synthesized sounds (many of which were tonal or narrowband noise) all having the same duration. This somewhat complicates the interpretation of this dimension being related to sound quality, although adding duration to the regression only increased the r value from .52 to .57.

The eight predictor variables for D2 are modulation depth at 2 kHz, mean pitch salience, modulation depth at 500 and 62.5 Hz, crest factor, the standard deviation of the spectral centroid, the standard deviation of centroid velocity, and the number of autocorrelation peaks (r = .92). Collectively, these parameters suggest a largely temporal perceptual dimension that is related to the degree of modulation present in the stimulus but is also influenced by spectral–temporal elements. The regression also identified three acoustic variables as predictors of D3 (r = .89): mean size of envelope peaks (a measure of periodicity in the stimulus), roughness, and modulation depth at 125 Hz. This dimension also appears to be a temporal dimension but differs from D2 in that it is more related to the rate of modulation in sounds than to the modulation depth.

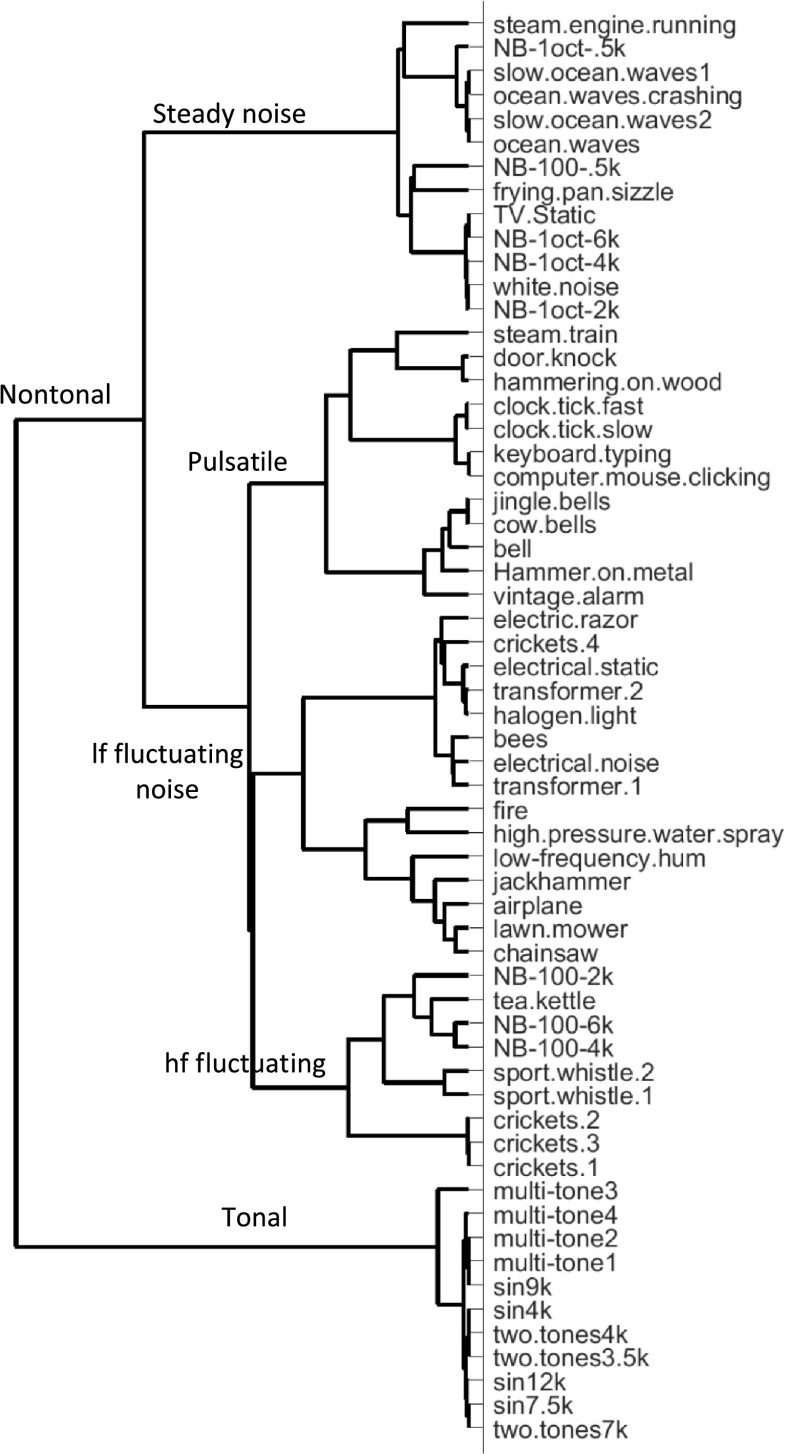

Hierarchical Clustering

To provide further insight, we conducted a hierarchical clustering analysis. This analysis uses the similarity data and mathematically determines which sounds are most similar to each other. Figure 3 plots the tree resulting from the full hierarchical clustering analysis. This hierarchical clustering resulted in two major branches (a tonal and nontonal branch) and five distinct clusters. In order to further our understanding of the nature of these clusters, we also explored the acoustic measures that were common to each of the clusters. In this acoustic analysis, we calculated the means of all acoustic measures for the five clusters and calculated a z score representing the mean for each cluster on the acoustic dimensions. We then used the z score to determine which acoustic measures characterized each cluster. This analysis allowed us to label the clusters on the basis of their acoustics.
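The two steps described here, clustering followed by a z-score summary of each cluster, can be sketched as below. Several assumptions are made for illustration and are not confirmed by the text: each sound is represented by its row of the global similarity matrix, squared Euclidean distances are computed between those rows, the z score is the cluster mean expressed relative to the mean and population standard deviation across all sounds, and the toy similarity matrix and crest-factor values are hypothetical.

```python
import statistics

def squared_euclidean(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def average_linkage(rows, n_clusters):
    """Agglomerative clustering with between-groups average linkage."""
    dist = [[squared_euclidean(u, v) for v in rows] for u in rows]
    clusters = [[i] for i in range(len(rows))]
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Mean distance over all cross-cluster pairs of sounds.
                pairs = [dist[i][j] for i in clusters[a] for j in clusters[b]]
                d = sum(pairs) / len(pairs)
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]  # merge the closest pair
        del clusters[b]
    return [sorted(c) for c in clusters]

def cluster_z_scores(values, clusters):
    """z score of each cluster's mean on one acoustic measure."""
    grand_mean = statistics.mean(values)
    grand_sd = statistics.pstdev(values)
    return [
        (statistics.mean([values[i] for i in c]) - grand_mean) / grand_sd
        for c in clusters
    ]

# Hypothetical global similarity matrix for 5 sounds (3 subjects).
similarity = [
    [3, 2, 1, 0, 0],
    [2, 3, 1, 0, 0],
    [1, 1, 3, 2, 2],
    [0, 0, 2, 3, 3],
    [0, 0, 2, 3, 3],
]
clusters = average_linkage(similarity, n_clusters=2)

# Hypothetical crest factors for the same 5 sounds.
crest = [1.4, 1.5, 3.1, 7.0, 7.4]
z = cluster_z_scores(crest, clusters)
print(clusters, z)
```

A cluster with a large positive or negative z score on a measure stands out from the full stimulus set on that measure, which is the basis on which a label such as "pulsatile" could be assigned.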

Figure 3.

Results of hierarchical clustering, shown in a branching tree format. Two major branches (tonal and nontonal) and five clusters (steady noise, pulsatile, low-frequency [lf] fluctuating noise, high-frequency [hf] fluctuating, and tonal) are labeled.

The hierarchical clustering of Figure 3 illustrates two major branches. One of those branches terminates in a single cluster that exclusively contains all of the tonal sounds, both pure tones and the multitone sounds. This tonal sound cluster is characterized by high pitch and autocorrelation measures (i.e., strong pitch and tonality) and low modulation depth measures (i.e., steady, unmodulated sounds).

The other major branch of the hierarchical clustering tree includes all nontonal sounds, which are the environmental sounds and the noises. One of its branches results in a single cluster, which contains water sounds and broadband noises. This cluster tends to have low and weak pitch measures (low-frequency RMS, pitch measures, and cross-correlation). These sounds also have some, but not extreme, temporal variation (burst duration, roughness, and number of envelope peaks). Consequently, we consider this cluster to be the steady noise cluster.

The other side of the nontonal branch breaks into three clusters: One cluster contains some of the insect sounds and the high-frequency narrow bands of noises; these sounds tend to have a high number of bursts, some modulation in the high-frequency channels, high pitches, and high-envelope cross-correlation. We propose that this cluster is a high-frequency (hf) fluctuating sound cluster. Another contains sounds from man-made sources (transformer and electrical noises, a lawn mower and a chain saw) and a limited set of insect sounds (bees and one of the cricket sounds). These sounds do not stand out in terms of their statistics with respect to the full set of sounds. While they are essentially characterized by being near the mean of all the sounds, they have moderate levels of roughness, low-frequency energy and low and weak pitches. Consequently, we label this cluster as low-frequency (lf) fluctuating noise. The last cluster contains broadband sounds that have high crest factors and high degrees of modulation. Thus, this is the pulsatile sound cluster. Given the characteristics of these clusters, the categories generally reflect the quality of the sound rather than some other dimension (such as loudness, pitch, or duration).

Discussion

The purpose of this study was to determine the acoustic correlates that underlie the perceptual dimensions of sounds based on tinnitus descriptions. By examining the similarity of sounds used to describe tinnitus, we see that three perceptual dimensions underlie these sounds and that five different types of sounds (clusters) can be used to capture the perception of these sounds. Notably, the MDS solution has converged on the quality of these sounds, rather than the pitch or the loudness. Perhaps more relevant to the goal of developing a test to measure tinnitus are the hierarchical clustering results, which suggested that these sounds could be grouped into five categories: tones, steady noises, low-frequency fluctuating noise, high-frequency fluctuating, and pulsatile sounds.

We first compare our results with those of Gygi et al. (2007), who measured the perceptual dimensions underlying environmental sounds. Although their experiment used a larger set of sounds than ours and they applied traditional MDS to pairwise similarity judgments in a small set of subjects, they also established three perceptual dimensions that are rather similar to the dimensions uncovered in our data set. As described in Gygi et al. (2007), their D1 was related to pitch strength, D2 was related to envelope structure, and D3 was related to spectral–temporal complexity. In general, these dimensions are similar to those in this study, although the dimensions here have different importance (D1: pitch strength, D2: degree of fluctuations and spectral elements, D3: rate of fluctuations). Notably, our MDS analysis provided some insight into the relative weights of the different dimensions, and D2 and D3 were extremely similar in their weights.

The similarity between these two studies (one using tinnitus-like sounds and the other using a large set of environmental sounds) may seem surprising. Yet there are two likely reasons for it. First, the environmental sounds used in Gygi et al.'s (2007) study have a lot in common with the sounds used here, many of which were environmental sounds. Second, people must use their experience with sounds in the environment to describe their tinnitus perception. In some ways, it is reassuring that the current results are so similar to those of Gygi et al., given the differences in methodology.

We also see some similarities in the hierarchical clustering between the two studies, but there are some obvious differences. While Gygi et al. (2007) found a major division between harmonic and inharmonic sounds, the major division here was between tones (all of which, notably, were synthesized) and all other sounds. In both studies, sounds with strong periodicity or modulation generally occurred in separate clusters from those with more noisy characteristics. Clicking or periodic sounds fell into separate subclusters from the noisy broadband sounds.

Comparing these findings with the work of Pan et al. (2009) also yields some interesting observations (see also Tyler et al., 2008). In Pan et al.'s analysis, patients' descriptions of tinnitus were grouped into five distinct categories: cricket-like, noise-like (e.g., buzzing, roaring, and whooshing), tone-like (hissing, musical note, ringing, and clicking), other (including pulsing), and unknown. Two of these categories are very similar between the studies: the tonal and steady noise categories, which are also used to measure tinnitus quality both in clinical assessment and in the “Tinnitus Tester” developed by Roberts et al. (2008).

The other three categories identified here and by Pan et al. (2009) are also somewhat parsimonious. First, Pan et al. identified a “cricket-like” cluster, potentially similar to our high-frequency fluctuating cluster, which contained three of the four cricket sounds. Second, Pan et al. also identified an “other” cluster, which included pulsing tinnitus. This cluster may be similar to our pulsatile (clicking) cluster. On the other hand, the hierarchical clustering identified a fifth cluster (the low-frequency fluctuating noise cluster), which does not align with any of those estimated by Pan et al. Rather, Pan et al.'s fifth category was “unknown.” Despite some of these differences, our results do fit reasonably well with the analysis of Pan et al.

In conjunction with Pan et al. (2009), the results of this study imply that case histories that use more than five or six different terms are likely providing too many options to their patients. Some of the terms may be ambiguous to the patient and have a very different meaning to patients and audiologists. Other terms may be redundant with one another, such as “buzzing” and “humming.” Given that there is no standard selection of terms used across clinics for tinnitus descriptions, we advise that, until better tools are available, clinics take great care when selecting the terms available to patients for describing their tinnitus. In light of the results of this study, we could speculate that terms based on the clustering analysis might be more appropriate for the closed set of words. Because it is commonly assumed that patients lack a deep understanding of the word frequency, replacing it with the word pitch would lead to the terms of (a) tone-like; (b) noise-like and steady (like static noise); (c) cricket-like; (d) low-pitched, noise-like, and fluctuating (like transformers or bees); and (e) pulsating.

As the field works toward better tools to assess and diagnose tinnitus, some groups have taken steps toward improving the case history questionnaire. For example, the tinnitus case history questionnaire developed by the Tinnitus Research Initiative (tinnitusresearch.net) contains directed questions about tinnitus and uses a small set of descriptors. In this case history, patients are first asked whether the tinnitus seems to pulsate. An open-ended question then asks patients to describe their tinnitus in their own words, with a follow-up closed-set question with the options of tone, noise, crickets, or other. However, this case history, as with the case histories commonly used in many clinics, still relies on words to describe tinnitus. While using a very small set of descriptors may help to disambiguate the terms, this case history may not allow patients with language difficulties to appropriately describe their tinnitus. Additional work is needed to establish whether a sound-based measurement approach would provide better descriptions of tinnitus than verbal descriptors.

Finally, one must remain cautious about interpreting these data as being truly reflective of the perception of tinnitus. First, we should consider that the dimensions and clusters established here may not capture all of the unique perceptual aspects of these sounds. Second, by using sounds representative of the words to describe tinnitus, we may have missed a variety of descriptors or we may have used sounds that are not similar to tinnitus at all. Indeed, tinnitus patients often find that words provided to them are not sufficient descriptors (cf., Meikle et al., 2004). While one possible explanation for their result is that the words are inadequate to describe tinnitus, tinnitus may be associated with its own unique perception with no acoustic analogue.

Ultimately, these results could be used in a clinical assessment of tinnitus, particularly as we work to home in on a set of sounds (or words) with an unambiguous acoustic description. Perhaps patients could listen to groups of sounds from these acoustic categories and indicate which group of sounds best represents their tinnitus. Then, once the quality or type of the tinnitus is known, further tests such as assessment of tinnitus pitch and tinnitus loudness could be completed. As with current tinnitus assessment, the categories established by this type of technique could also provide the audiologist with information for open-ended questions that are helpful for counseling and for selection of tinnitus treatment (Tyler & Baker, 1983).

Summary and Conclusions

This study adopted a free classification technique to determine the perceptual dimensions and clusters of sounds based on the descriptors of tinnitus. The results demonstrated that the perceptual dimensions underlying these tinnitus-like sounds are similar to those underlying environmental sounds. The clustering analysis indicated that five clusters captured the perception of sounds based on the words used to describe tinnitus: tonal, steady noise, pulsatile, low-frequency fluctuating noise, and high-frequency fluctuating. Through this work, we have been able to establish a set of acoustic variables on which a set of sounds might be developed in a tool to measure the perception of tinnitus. Further work testing patients with hearing loss and tinnitus is needed to establish whether these dimensions vary in people with hearing loss and whether these dimensions and clusters truly capture the perception of tinnitus.

Acknowledgments

This work was supported by National Institutes of Health Grant R21 DC013171 awarded to Jennifer Lentz. The authors would like to thank Gregory Ellcessor and Grace Hunter-Tran for their assistance with selecting the stimuli and testing experimental subjects.

References

- American National Standards Institute. (2010). Specification for audiometers (S3.6-2010). Author.

- Atagi, E., & Bent, T. (2016). Auditory free classification of native and nonnative speech by nonnative listeners. Applied Psycholinguistics, 37(2), 241–263. https://doi.org/10.1017/S014271641400054X

- Bradlow, A., Clopper, C., Smiljanic, R., & Walter, M. A. (2010). A perceptual phonetic similarity space for languages: Evidence from five native language listener groups. Speech Communication, 52(11–12), 930–942. https://doi.org/10.1016/j.specom.2010.06.003

- Clopper, C. G., & Pisoni, D. B. (2007). Free classification of regional dialects of American English. Journal of Phonetics, 35(3), 421–438. https://doi.org/10.1016/j.wocn.2006.06.001

- Das, O., Smith, J. O., & Chafe, C. (2017). Real-time pitch tracking in audio signals with the extended complex Kalman filter. In Torin A., Hamilton B., Bilbao S., & Newton M. (Eds.), Proceedings of the 20th International Conference on Digital Audio Effects (pp. 118–124). University of Edinburgh.

- Genesis Acoustics. (2010). Loudness toolbox [Computer software].

- Goodhill, V. (1952). A tinnitus identification test. Transactions of the American Otological Society, 40, 122–132.

- Gustavino, C. (2007). Categorization of environmental sounds. Canadian Journal of Experimental Psychology, 61(1), 54–63. https://doi.org/10.1037/cjep2007006

- Gygi, B., Kidd, G., & Watson, C. S. (2007). Similarity and categorization of environmental sounds. Perception and Psychophysics, 69, 839–855. https://doi.org/10.3758/BF03193921

- Henry, J. A., & Manning, C. (2019). Clinical protocol to promote standardization of basic tinnitus services by audiologists. American Journal of Audiology, 28(1S), 152–161. https://doi.org/10.1044/2018_AJA-TTR17-18-0038

- Imai, S., & Garner, W. R. (1965). Discriminability and preference for attributes in free and constrained classification. Journal of Experimental Psychology, 69(9), 596–608. https://doi.org/10.1037/h0021980

- Landgrebe, M., Azevedo, A., Baguley, D., Bauer, C., Cacace, A., Coelho, C., Dornhoffer, J., Figueiredo, R., Flor, H., Hajak, G., van de Heyning, P., Hiller, W., Khedr, E., Kleinjung, T., Koller, M., Lainez, J. M., Londero, A., Martin, W. H., Mennemeier, M., … Langguth, B. (2012). Methodological aspects of clinical trials in tinnitus: A proposal for an international standard. Journal of Psychosomatic Research, 73(2), 112–121. https://doi.org/10.1016/j.jpsychores.2012.05.002

- Langguth, B., Goodey, R., Azevedo, A., Bjorne, A., Cacace, A., Crocetti, A., Del Bo, L., De Ridder, D., Diges, I., Elbert, T., Flor, H., Herraiz, C., Ganz Sanchez, T., Eichhammer, P., Figueiredo, R., Hajak, G., Kleinjung, T., Landgrebe, M., Londero, A., … Vergara, R. (2007). Consensus for tinnitus patient assessment and treatment outcome measurement: Tinnitus Research Initiative meeting, Regensburg, July 2006. Progress in Brain Research, 166, 525–536. https://doi.org/10.1016/S0079-6123(07)66050-6

- Lansford, K. L., Liss, J. M., & Norton, R. E. (2014). Free-classification of perceptually similar speakers with dysarthria. Journal of Speech, Language, and Hearing Research, 57(6), 2051–2064. https://doi.org/10.1044/2014_JSLHR-S-13-0177

- Lentz, J. J., & He, Y. (2013). Acoustic correlates of tinnitus-like sounds. Proceedings of Meetings on Acoustics, 19(1), 050082. https://doi.org/10.1121/1.4799576

- McMillan, G. P., Thielman, E. J., Wypych, K., & Henry, J. A. (2014). A Bayesian perspective on tinnitus pitch matching. Ear and Hearing, 35(6), 687–694. https://doi.org/10.1097/AUD.0000000000000081

- Meikle, M. B., Creedon, T. A., & Griest, S. E. (2004). Tinnitus Archive. http://www.tinnitusarchive.org/

- Noreña, A., Micheyl, C., Chéry-Croze, S., & Collet, L. (2002). Psychoacoustic characterization of the tinnitus spectrum: Implications for the underlying mechanisms of tinnitus. Audiology and Neurotology, 7, 358–369. https://doi.org/10.1159/000066156

- Pan, T., Tyler, R. S., Ji, H., Coelho, C., Gehringer, A. K., & Gogel, S. A. (2009). The relationship between tinnitus pitch and the audiogram. International Journal of Audiology, 48(5), 277–294. https://doi.org/10.1080/14992020802581974

- Roberts, L. E., Moffat, G., Baumann, M., Ward, L. M., & Bosnyak, D. J. (2008). Residual inhibition functions overlap tinnitus spectra and the region of auditory threshold shift. Journal of the Association for Research in Otolaryngology, 9, 417–435. https://doi.org/10.1007/s10162-008-0136-9

- Searchfield, G. D. (2014). Tinnitus what and where: An ecological framework. Frontiers in Neurology, 5, 271. https://doi.org/10.3389/fneur.2014.00271

- Stouffer, J. L., & Tyler, R. S. (1990). Characterization of tinnitus by tinnitus patients. Journal of Speech and Hearing Disorders, 55(3), 439–453. https://doi.org/10.1044/jshd.5503.439

- Tyler, R. S., & Baker, L. J. (1983). Difficulties experienced by tinnitus sufferers. Journal of Speech and Hearing Disorders, 48(2), 150–154. https://doi.org/10.1044/jshd.4802.150

- Tyler, R., Coelho, C., Tao, P., Ji, H., Noble, W., Gehringer, A., & Gogel, S. (2008). Identifying tinnitus subgroups with cluster analysis. American Journal of Audiology, 17(2), S176–S184. https://doi.org/10.1044/1059-0889(2008/07-0044)

- Tyler, R. S. (1985). Psychoacoustical measurement of tinnitus for treatment evaluations. In Myers E. (Ed.), New dimensions in otorhinolaryngology head and neck surgery (pp. 455–458). Elsevier.

- Tyler, R. S., & Stouffer, J. L. (1989). A review of tinnitus loudness. Hearing Journal, 42(11), 52–57.

- Walker, T. J., & Cooper, T. M. (2019). Singing insects of North America. https://orthsoc.org/sina/