Abstract

Objective

The electronic health record (EHR) data deluge makes data retrieval more difficult, escalating cognitive load and exacerbating clinician burnout. New auto-summarization techniques are needed. The study goal was to determine if problem-oriented view (POV) auto-summaries improve data retrieval workflows. We hypothesized that POV users would perform tasks faster, make fewer errors, be more satisfied with EHR use, and experience less cognitive load as compared with users of the standard view (SV).

Methods

Simple data retrieval tasks were performed in an EHR simulation environment. A randomized block design was used. In the control group (SV), subjects retrieved lab results and medications by navigating to corresponding sections of the electronic record. In the intervention group (POV), subjects clicked on the name of the problem and immediately saw lab results and medications relevant to that problem.

Results

With POV, mean completion time was faster (173 seconds for POV vs 205 seconds for SV; P < .0001), the error rate was lower (3.4% for POV vs 7.7% for SV; P = .0010), user satisfaction was greater (System Usability Scale score 58.5 for POV vs 41.3 for SV; P < .0001), and cognitive task load was less (NASA Task Load Index score 0.72 for POV vs 0.99 for SV; P < .0001).

Discussion

The study demonstrates that using a problem-based auto-summary has a positive impact on 4 aspects of EHR data retrieval, including cognitive load.

Conclusion

EHRs have brought on a data deluge, with increased cognitive load and physician burnout. To mitigate these increases, further development and implementation of auto-summarization functionality and the requisite knowledge base are needed.

Keywords: electronic health records, user-computer interface, data display, medical records, problem-oriented, clinical decision support systems

INTRODUCTION

BACKGROUND AND SIGNIFICANCE

The ubiquity of the electronic health record (EHR) has caused a data deluge, leading to cognitive overload and clinician burnout.1–6 It is estimated that physician burnout costs the healthcare system $4.6 billion per year,7 with physicians experiencing burnout having double the rate of turnover of other physicians.8 Data summarization holds promise for alleviating cognitive overload and reducing clinician burnout. Recognizing the need for improved data summarization, investigators have described a conceptual model for summarization9 and documented the clinical summarization capabilities of 12 EHRs.10 Currently available summarization capability is wanting.10–14

In order to better organize clinical data, the concept of the problem-oriented medical record (POMR) was first described by Lawrence Weed in 1968.15 One of the most striking aspects of Weed’s article is that, even in 1968, he envisioned that the computer would be foundational to implementing a POMR.16 Despite near-universal use of electronic health records today, however, barriers to problem-based data presentation and organization still exist.17

Incentive programs for EHR adoption established through the American Recovery and Reinvestment Act of 2009 (ARRA)18 required the use of an active list of current and past diagnoses as part of the Meaningful Use program.19,20 Many EHR systems, following on ideas envisioned by Weed, fulfill this requirement by offering a problem list feature. However, collection of clinical data in a logical problem-oriented format has been minimally realized.21 Determinants necessary for successful development of a POMR include numerous functionalities, but one of the key areas is to “link problems and interventions… to prevent fragmentation of the patient’s data.”22 Tools to improve completeness of problem lists and minimize the complexity of maintenance have been explored, but clinical utility is still limited.23–26

Substandard EHR usability has consistently been cited as one of the top contributors to clinician burnout,1–5,27,28 with over 70% of EHR users noting health information technology–related stress.1 Common challenges include efficient navigation of the user interface and data procurement in the setting of information overload.29,30 As the amount of data necessary for patient care expands, synthesizing that data becomes more challenging. It is estimated that physicians' internal knowledge bases contain around 2 million data items, organized in memory in patterns.31 As the amount of data in each patient record increases, the task of matching these patterns to real-life patient scenarios becomes increasingly complex. In 2013, investigators estimated that during a busy 10-hour emergency medicine shift, a physician typically performed 4000 clicks to navigate the EHR.32 A 2018 study found that an average of more than 200 000 individual data points were available during a single hospital stay.33

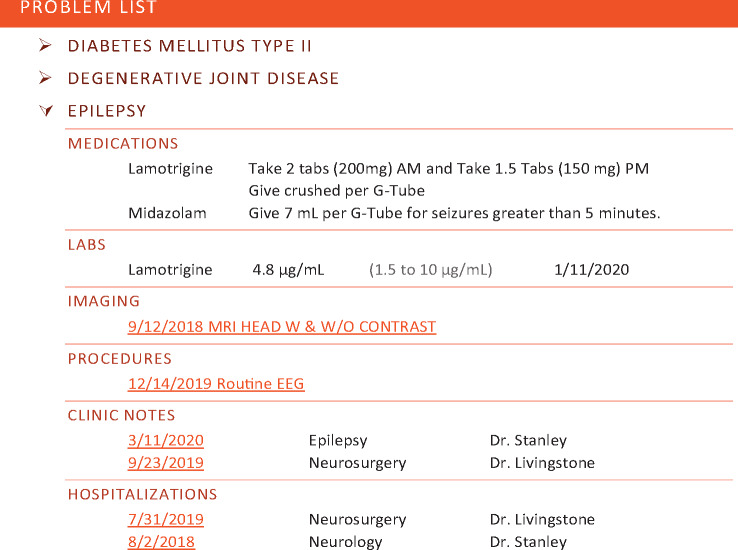

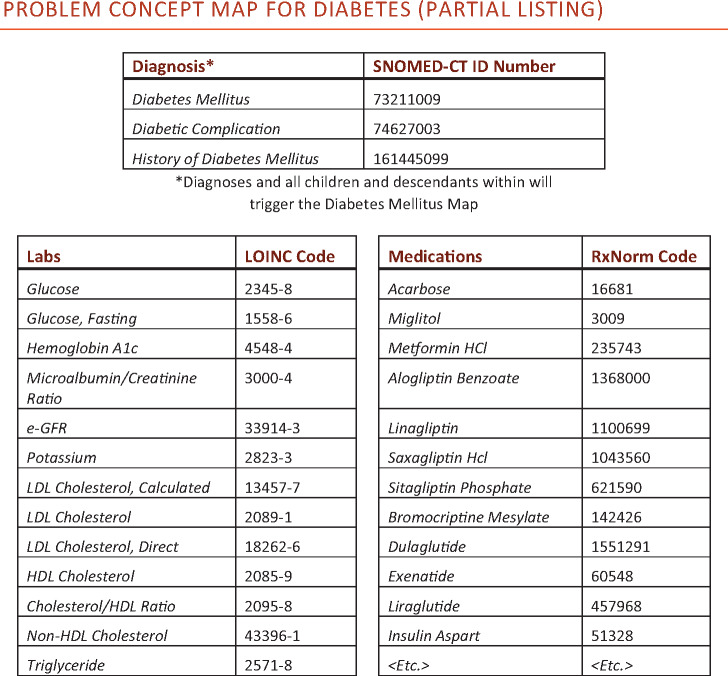

In order to help overcome this data burden, the problem-oriented view (POV), as described by Buchanan,34 includes a problem list with on-demand display of aggregated data and notes relevant to a particular problem. Determining which data is relevant to a particular problem is accomplished by referencing a corresponding problem concept map (PCM). Our team publishes PCMs,35 and the maps can be obtained by vendors and embedded in EHRs. Each PCM contains a cluster of associated SNOMED-CT (Systematized Nomenclature of Medicine Clinical Terms) problem codes,36 defining the problem of interest. For each cluster of SNOMED codes, the PCM points to relevant medications and lab results using linked RxNorm37 and LOINC (Logical Observation Identifiers Names and Codes)38 codes, respectively. When active in a patient chart, the POV leverages the data codes within the PCM knowledge base to retrieve relevant patient data and create a detailed display for each problem of interest. An example of how such a display could appear is included in Figure 1.

Figure 1.

Mockup of a problem-oriented view aggregated data display for epilepsy. The mockup shows lab and medication data and includes links that give access to pertinent imaging, procedure, and note data.
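The PCM-based lookup described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the production EHR implementation or the published PCM file format: the names `ProblemConceptMap`, `PatientChart`, and `problem_oriented_view` are hypothetical, codes such as `SCT-HF-1`, `RX-A`, and `LN-A` are placeholders rather than real SNOMED CT, RxNorm, or LOINC codes, and the sketch assumes a PCM applies whenever any of its SNOMED CT codes is on the patient's problem list.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProblemConceptMap:
    """Hypothetical in-memory form of one PCM."""
    name: str
    snomed_codes: frozenset[str]  # problem codes that define the problem cluster
    rxnorm_codes: frozenset[str]  # medications relevant to the problem
    loinc_codes: frozenset[str]   # lab tests relevant to the problem


@dataclass
class PatientChart:
    """Hypothetical coded chart extract for one patient."""
    problem_codes: set[str]             # SNOMED CT codes on the problem list
    medications: list[tuple[str, str]]  # (RxNorm code, display name)
    labs: list[tuple[str, str, str]]    # (LOINC code, test name, result)


def problem_oriented_view(chart: PatientChart, pcms: list[ProblemConceptMap]) -> dict:
    """Group the chart's medications and labs under each matching problem."""
    view = {}
    for pcm in pcms:
        # A PCM applies if any of its SNOMED CT codes is on the problem list.
        if chart.problem_codes & pcm.snomed_codes:
            view[pcm.name] = {
                "medications": [m for m in chart.medications if m[0] in pcm.rxnorm_codes],
                "labs": [lab for lab in chart.labs if lab[0] in pcm.loinc_codes],
            }
    return view


# Usage with placeholder codes (not real terminology codes).
heart_failure = ProblemConceptMap(
    "Heart failure", frozenset({"SCT-HF-1", "SCT-HF-2"}), frozenset({"RX-A"}), frozenset({"LN-A"})
)
epilepsy = ProblemConceptMap(
    "Epilepsy", frozenset({"SCT-EP-1"}), frozenset({"RX-B"}), frozenset({"LN-B"})
)
chart = PatientChart(
    problem_codes={"SCT-HF-2"},
    medications=[("RX-A", "drug A"), ("RX-Z", "drug Z")],
    labs=[("LN-A", "lab A", "1.0"), ("LN-Z", "lab Z", "2.0")],
)
example_view = problem_oriented_view(chart, [heart_failure, epilepsy])
```

Because the filters are set-membership checks against the map's code lists, an unrelated item (such as `RX-Z` above) never reaches the problem display, so the user does not have to sift through the full medication or lab list.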

Our PCMs are created based on national expert consensus. First, for each problem, a terminology team at the University of Wisconsin creates a draft ballot containing data items potentially relevant to the problem at hand. Then, for each problem, 6 volunteer subject matter experts (SMEs) use an online tool for 2 weeks of asynchronous discussion of the relevant data elements. This takes about 1 hour per SME. The 6 SMEs for a problem are each drawn from different institutions. The SMEs work with clinical names for the data, rather than terminology codes. A wrap-up SME phone call is held to finalize consensus on the PCM contents. Then, the terminology team converts the clinical names into the appropriate terminology items for association to the problem and creation of the map.

Progress on map creation can be seen at the Problem List MetaData website.35 A portion of a sample map is shown in Figure 2. Currently, PCMs contain problem-specific listings of medications and labs. Future iterations will also include relevant imaging studies, procedures, clinic notes, and hospitalizations, enabling the more comprehensive display depicted in Figure 1. As with the LOINC and RxNorm terminologies, PCMs are vendor neutral, will be available for downloading with no licensing fee, and will undergo periodic review.

Figure 2.

Example of the contents of a problem concept map.

The POV contrasts with traditional methods of displaying data, which are often based on episodes of care (as in the conventional progress note), or by data type (eg, in a medication list or results flowsheet). In the traditional model, the burden of data aggregation and synthesis is on the user. For example, one must sift through an alphabetical list of medications to determine the presence or absence of a relevant medication. Lab data may be lumped by common tests (eg, a basic metabolic panel which contains a collection of similar electrolytes), but determining the presence or absence of a test result still requires numerous navigation steps. Conversely, the POV provides an on-demand focused view of relevant information for a given problem. This format minimizes steps for data retrieval and potentially reduces cognitive burden from combing through data within the EHR.

Few studies have examined the workflow impact of interfaces that provide automatic clinical summarization.39–42 The POV warrants such an evaluation.

OBJECTIVE

In this study, we examined the impact of a POV on provider workflow. A display linking problems to relevant lab results and relevant current medications was used. The goal of the study was to assess the impact of the POV on (1) time required for data retrieval, (2) accuracy of data retrieval, (3) user satisfaction, and (4) user workload.

MATERIALS AND METHODS

Baseline data display

In the simulation environment, the traditional, or standard, view (SV) served as the baseline (control). Users navigated to the EHR medication section to retrieve medication data and to the EHR lab section to retrieve lab results.

Intervention data display

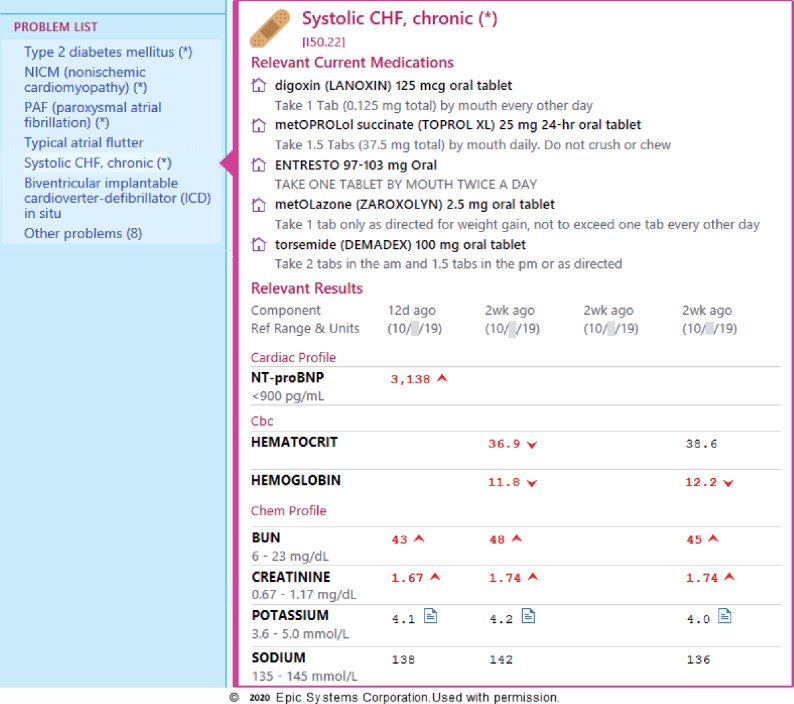

In the simulation environment, the POV served as the intervention. While viewing the problem list, users could click once on a problem to display the relevant lab results and medications on one screen, as in Figure 3.

Figure 3.

The Epic problem-oriented view for chronic systolic congestive heart failure (CHF) selectively displays relevant medications and lab results.

Study design

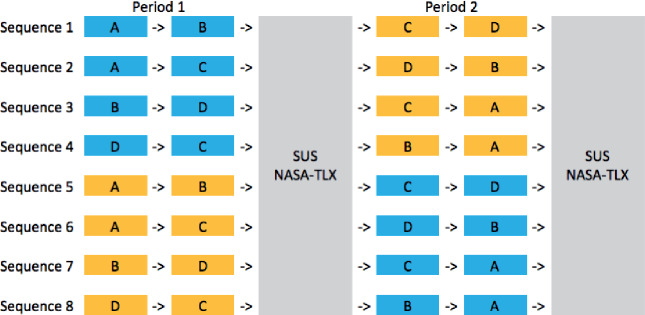

A randomized block allocation design was used. Participants completed the following series of tasks: (1) retrieve lab and medication information from the EHR using either the SV (control) or the POV (intervention) to answer questions about 2 cases; (2) complete the System Usability Scale (SUS)43 and the NASA Task Load Index (NASA-TLX)44 to provide opinions about the view; (3) retrieve information from the EHR using the view not used in the first task to answer questions about another 2 cases; and (4) complete the SUS and NASA-TLX for the second view. The SUS is designed to capture the user’s impression of system usability, and the NASA-TLX is designed to assess the workload required to complete a task. Both the order of the patient cases and the sequence of the 2 views were randomized, so that each participant was randomly assigned to 1 of 8 sequences (see Figure 4). The block allocation design accounts for and mitigates learning effects (ie, when participants learn to complete scenarios more quickly over time).

Figure 4.

Participants were randomized to 1 of 8 sequences, as shown. Blue cases represent the standard view (control) and yellow cases represent the problem-oriented view (intervention).
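The article specifies a randomized block design with 8 sequences but not the allocation mechanics. One common way to implement such an allocation is permuted-block randomization, sketched below under the assumption of blocks of 8 that each contain every sequence exactly once. Sequences are identified only by hypothetical indices 0 to 7, because their exact composition is given in Figure 4; the function name is also hypothetical.

```python
from __future__ import annotations

import random

NUM_SEQUENCES = 8  # each participant completes 1 of 8 sequences


def permuted_block_allocation(n_participants: int, seed: int | None = None) -> list[int]:
    """Assign participants to sequence indices 0..7 in shuffled blocks of 8,
    so every complete block uses each sequence exactly once."""
    rng = random.Random(seed)
    allocation: list[int] = []
    while len(allocation) < n_participants:
        block = list(range(NUM_SEQUENCES))
        rng.shuffle(block)  # random order within the block
        allocation.extend(block)
    return allocation[:n_participants]


allocation = permuted_block_allocation(51, seed=2021)
```

With 51 participants, the first 48 fall into 6 complete blocks (6 participants per sequence), and the last 3 come from a partially used block.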

All user interaction and patient data retrieval for each case were completed in a simulated EHR provided by Epic Systems (Verona, WI). The SV consisted of Epic’s standard functionality for accessing lab and medication data (ie, either the Chart Review or Results Review activities for labs and the Medications activity for medications).

To the extent possible, case questions were designed to test participants’ ability to extract data rather than their clinical knowledge (eg, “John has hypothyroidism. When were his thyroid labs last checked?”). There were 4 questions for each patient. The worksheets for each case are included in Supplementary Appendix A. Participants completed the study one at a time, each monitored by a study proctor.

Outcomes

We assessed the following outcomes: (1) time required for data retrieval, (2) accuracy of data retrieval, (3) user satisfaction, and (4) user workload. Participants were timed both by the study proctor and by an internal system clock. Accuracy of responses was determined by a single author (M.G.S.). The SUS was chosen to evaluate user satisfaction because this scale is commonly used to study EHR usability.45 SUS scores range from 0 to 100, with higher scores indicating greater usability. A copy of the SUS form is shown in Supplementary Appendix B. NASA-TLX surveys were used to assess user workload. This instrument has been used and validated in previous health care technology studies39,42,46 and has been recommended by the Agency for Healthcare Research and Quality to assess digital health care workflows.47 NASA-TLX scores are generated by taking the weighted average of 6 subscales that measure different aspects of workload, and lower scores represent reduced workload.44 A copy of the NASA-TLX form is shown in Supplementary Appendix C.
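The scoring rules for both instruments can be sketched as follows. The SUS rule (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5) follows Brooke's published scale. The NASA-TLX function assumes the standard weighting procedure, in which ratings are on a 0 to 100 scale and each subscale's weight is the number of times it was chosen across 15 pairwise comparisons. The article does not state how the NASA-TLX values in Table 1 were scaled, so this sketch is not meant to reproduce them; function names are hypothetical.

```python
from __future__ import annotations

SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def sus_score(responses: list[int]) -> float:
    """Score 10 SUS responses (1-5 Likert, questionnaire order) on a 0-100 scale."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects 10 responses between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item_number % 2 == 1 else (5 - response)
    return total * 2.5


def nasa_tlx_weighted(ratings: dict[str, float], weights: dict[str, int]) -> float:
    """Weighted NASA-TLX workload: ratings 0-100, weights from 15 pairwise comparisons."""
    if set(ratings) != set(SUBSCALES) or set(weights) != set(SUBSCALES):
        raise ValueError("ratings and weights must cover all 6 subscales")
    if sum(weights.values()) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


example_sus = sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2])
example_tlx = nasa_tlx_weighted(
    {"mental": 80, "physical": 20, "temporal": 20, "performance": 20, "effort": 20, "frustration": 20},
    {"mental": 5, "physical": 0, "temporal": 4, "performance": 2, "effort": 3, "frustration": 1},
)
```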

Study participants

Internal medicine residents were recruited from 3 academic medical centers (University of Wisconsin–Madison, University of Texas Southwestern, and Mass General Brigham). Each institution uses Epic as its EHR, so residents were familiar with standard Epic functionality. Residents could be at any level (PGY-1, PGY-2, or PGY-3). Participants were given a short explanation of the POV by study proctors and provided with written instructions about how to use it. Participants were compensated for their time with $50 Amazon gift cards. No information about participants was collected except for their names, to ensure that gift cards could be delivered. This study was approved by the institutional review board at each participating institution.

Statistical analysis

For each of the metrics, the mean and SD were calculated for the control cases and for the intervention cases. In analyzing these primary endpoints, we first calculated the average value for all measurements of a metric with the control and compared that to the average value for all measurements of the same metric with the intervention. We used a linear regression model with generalized estimating equations to determine the statistical significance of the metric differences between the POV group and the control group while controlling for the period effect. Specifically, we used the model $Y = \beta_0 + \beta_1 \cdot \text{Treatment} + \beta_2 \cdot \text{Period} + \varepsilon$, where $\beta_0$ is the intercept, and $\beta_1$ and $\beta_2$ are the coefficients corresponding to the treatment effect and period, respectively. All statistical analyses were carried out in SAS 9.4 software (SAS Institute, Cary, NC), and an alpha significance level of 0.05 was utilized.
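The analysis itself was run in SAS 9.4, and the article does not report the working correlation structure. As an illustration only, the sketch below fits each metric on an intercept, a treatment (view) indicator, and a period indicator as a linear GEE with an identity link and an independence working correlation, assuming each participant is a cluster. Under those assumptions the point estimates equal ordinary least squares and the standard errors come from the cluster-robust (sandwich) estimator. It uses NumPy, omits small-sample corrections, and the function name and data are hypothetical.

```python
import numpy as np


def gee_independence_linear(y, treatment, period, cluster_ids):
    """Linear GEE (identity link) with an independence working correlation.

    Returns (beta, robust_se) for the columns [intercept, treatment, period].
    """
    y = np.asarray(y, dtype=float)
    X = np.column_stack([
        np.ones_like(y),
        np.asarray(treatment, dtype=float),
        np.asarray(period, dtype=float),
    ])
    bread = np.linalg.inv(X.T @ X)
    beta = bread @ X.T @ y  # identical to the OLS estimate under independence
    resid = y - X @ beta
    clusters = np.asarray(cluster_ids)
    # One summed estimating-equation (score) vector per participant.
    scores = np.array([X[clusters == c].T @ resid[clusters == c] for c in np.unique(clusters)])
    # Sandwich covariance bread @ (scores.T @ scores) @ bread, written as A.T @ A
    # (bread is symmetric) so the diagonal cannot go negative from rounding.
    A = scores @ bread
    robust_cov = A.T @ A
    return beta, np.sqrt(np.diag(robust_cov))


# Hypothetical crossover data: 4 participants x 2 periods, with a true POV effect of
# -30 s, a period effect of +5 s, and a +/-1 s shift that is constant within each participant.
participants = [0, 0, 1, 1, 2, 2, 3, 3]
period = [0, 1, 0, 1, 0, 1, 0, 1]
treatment = [1, 0, 0, 1, 1, 0, 0, 1]  # 1 = POV, 0 = SV
shift = [1, 1, -1, -1, -1, -1, 1, 1]
seconds = [200 - 30 * t + 5 * p + s for t, p, s in zip(treatment, period, shift)]
example_beta, example_se = gee_independence_linear(seconds, treatment, period, participants)
```

In this balanced toy crossover the participant-level shifts cancel within each participant, so the treatment and period estimates are recovered exactly and only the intercept picks up robust uncertainty.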

RESULTS

A total of 51 participants were recruited, 17 from each center. All 51 participants completed the study, and there were no discrepancies that caused any participant data to be withheld from analysis. Table 1 details the mean case completion times, response error rates, SUS scores, and NASA-TLX scores.

Table 1.

Comparison of average completion times, error rates, SUS scores, and NASA-TLX scores by view

| Measure | POV | Standard View | Difference | P Value |
|---|---|---|---|---|
| Mean completion time, s | 172.7 | 205.4 | POV 32.7 s faster | <.0001 |
| Mean response error rate, % | 3.4 | 7.7 | POV 4.3 percentage points lower | .0010 |
| Mean SUS score | 58.5 | 41.3 | POV 17.2 points higher | <.0001 |
| Mean NASA-TLX score | 0.72 | 0.99 | POV 0.27 points lower | <.0001 |

NASA-TLX: NASA Task Load Index; POV: problem-oriented view; SUS: System Usability Scale.

When using the POV, subjects gathered data more quickly and made fewer errors. Use of the POV to retrieve data resulted in a relative error rate reduction of 56% (the control error rate was 7.7%, and the absolute reduction was 4.3 percentage points). The higher SUS scores indicate greater user satisfaction with the POV, and the lower NASA-TLX scores indicate that subjects experienced less workload with the POV.
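As a worked check of the error-rate arithmetic, using the rates from Table 1 (the absolute reduction is expressed in percentage points, the relative reduction as a fraction of the control rate):

$$
\text{absolute reduction} = 7.7\% - 3.4\% = 4.3 \text{ percentage points}, \qquad
\text{relative reduction} = \frac{7.7 - 3.4}{7.7} = \frac{4.3}{7.7} \approx 0.558 \approx 56\%
$$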

DISCUSSION

The POV provides an improved method for data review and acquisition when making clinical decisions. Specifically, by aggregating relevant data for a given problem, we have shown that a user can more accurately retrieve data in less time with a tool that is easier and more satisfying to use.

The cases we developed were clinically valid, and users carried out the simulation in the EHR software that they use on a regular basis. However, real clinical workflows can be more complex. In order to allow for direct comparison of test groups in this study, the tasks required of study participants were relatively narrow and specific (eg, identification of specific lab values or presence or absence of specific medications). In contrast, real-world clinical practice can require retrieving large quantities of data involving multiple problems. If not done efficiently, this retrieval can be labor-intensive and lead to increasing workflow burden.

Difficulty navigating the user interface and information overload have been shown to be sources of frustration with EHR usability and contributors to clinician stress and burnout.29,30,48 This study demonstrated the benefits of using the POV when performing simple data retrieval tasks for clinical problem management. The POV may be even more advantageous when performing more complex data retrieval. With complex data retrieval, the SV requires clinicians to divide their attention between different areas of the screen and then navigate across multiple screens to collate and process the needed information. This split-attention effect causes increased cognitive load.49 When PCMs expand to include additional data types (eg, imaging and procedures), a single POV page will display all the most relevant data for problem management. This will mitigate the split-attention effect and thus lessen the degree of cognitive load even more than with simple data queries. The POV’s improvement in data retrieval, a crucial component of EHR usability, has the potential to decrease clinician burnout, given the dose-response relationship previously shown between EHR usability and burnout.45

One limitation of this study was the use of a simulation EHR environment. POV is in production use at several sites in the United States, and future studies should include time-motion analysis of providers using POV in these live clinical settings. Audit logs will allow analysis of time spent in different activities such as chart review, the problem list, medication lists and results review. Because of gains in efficiency and accuracy of data retrieval, we expect that use of the POV will improve outcomes of interest, including time spent looking at the screen vs at a patient during an encounter and time spent charting after hours. Another study limitation was that only internal medicine residents were used as study subjects.
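Audit-log formats differ by vendor and are not described in the article, so the following is only a sketch under an assumed format: a chronologically ordered list of `(timestamp_in_seconds, activity_name)` events, each marking the moment a user switches into an activity, plus the session end time. The function name and the event data in the example are hypothetical.

```python
from __future__ import annotations


def time_per_activity(events: list[tuple[float, str]], session_end: float) -> dict[str, float]:
    """Sum the seconds spent in each activity from activity-switch events.

    Each event (t, activity) means the user entered `activity` at time t and stayed
    there until the next event (or until session_end for the last event).
    """
    totals: dict[str, float] = {}
    boundaries = [t for t, _ in events[1:]] + [session_end]
    for (start, activity), end in zip(events, boundaries):
        if end < start:
            raise ValueError("events must be chronological and end before session_end")
        totals[activity] = totals.get(activity, 0.0) + (end - start)
    return totals


example_totals = time_per_activity(
    [(0, "Chart Review"), (40, "Problem List"), (55, "Results Review"), (100, "Chart Review")],
    session_end=130,
)
```

Treating each event as the start of an interval keeps the computation to one pass; real audit logs would also need rules for idle time and overlapping windows, which this sketch ignores.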

Any clinical decision support system (CDS) used in a complex, real-world environment may have unintended consequences, and POV is no exception. By studying the CDS embedded in computerized provider order entry systems, Ash et al50 developed a classification system for unintended consequences resulting from CDS. This scheme can be applied more generally, including to unintended consequences of the POV. Thus, we can utilize the scheme to consider possible unintended consequences of the POV with respect to POV content (ie, the PCMs) and POV presentation.

The PCMs could contain outdated content or erroneous content. To address the first issue and keep content up to date, the PCM staff regularly check for new content such as recently developed medications or laboratory tests for a given problem. These items then undergo review to determine whether they should be added to existing PCMs. Erroneous content can be caused by errors of omission or commission. An error of omission in the content knowledge base for drug-drug interaction software could result in a prescribing error. Similarly, in POV, a PCM omission of the hemoglobin LOINC code from the coronary artery disease map could cause a clinician to miss a critical value when reviewing a patient with coronary artery disease. Significant steps are taken during the PCM creation process to prevent this type of omission. Six SMEs are involved in creating each map to ensure that all critical terms (eg, lab results and medications) are included in the map for a problem. A backup method for addressing a term omission is the feedback link on the PCM website. An error of commission in a PCM (including a term that is not actually relevant to the problem) has less serious consequences: the POV would display a lab result or medication that is not relevant to the problem.

Unintended consequences related to POV presentation are likely to be minor. With its generation of auto-summaries, POV is considered passive CDS (as opposed to active support, which generates alerts that require user interaction). By its nature, POV does not require such user interaction, so presentation-associated risks should be minimal.

The POV will provide value in numerous clinical settings. While the simulated cases in this study are germane to ambulatory chronic disease management in primary or specialty care, there is utility in the POV across all phases of care. When evaluating an acute exacerbation of a problem (eg, a seizure in a patient with known epilepsy), an urgent care or emergency department provider benefits from having a summary of recent relevant laboratory trends. Similarly, inpatient providers will benefit from tools to quickly understand a patient’s problem-specific data (eg, when adjusting between outpatient and inpatient medication regimens for a cardiac arrhythmia). There are other applications for PCMs beyond a POV within a single EHR. EHR interoperability may also benefit, as illustrated by a recent study on a dashboard leveraging a FHIR (Fast Healthcare Interoperability Resources)-based approach for integrating health information exchange data.51 Guided by PCMs, a rapid expansion of similar utilities could accelerate data integration for display in a POV.
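The article cites a FHIR-based dashboard but does not describe a specific query pattern. As one hedged illustration of how a PCM's code lists could drive retrieval over FHIR, the sketch below builds standard FHIR search URLs that request a patient's `Observation` (lab) or `MedicationRequest` resources matching any of a PCM's LOINC or RxNorm codes; in FHIR token search, comma-separated values are combined with OR. The server base URL, patient ID, and codes are hypothetical placeholders, the function name is hypothetical, and no request is sent.

```python
from __future__ import annotations

from urllib.parse import urlencode

LOINC_SYSTEM = "http://loinc.org"
RXNORM_SYSTEM = "http://www.nlm.nih.gov/research/umls/rxnorm"


def fhir_search_url(base_url: str, resource_type: str, patient_id: str,
                    system: str, codes: list[str]) -> str:
    """Build a FHIR search URL for one patient's resources that match any of `codes`.

    Token values joined by commas are ORed under FHIR search rules; urlencode
    percent-escapes the ':', '/', '|' and ',' characters.
    """
    code_param = ",".join(f"{system}|{code}" for code in codes)
    query = urlencode({"patient": patient_id, "code": code_param})
    return f"{base_url.rstrip('/')}/{resource_type}?{query}"


# Hypothetical server, patient ID, and placeholder codes standing in for one PCM's lists.
lab_url = fhir_search_url("https://fhir.example.org", "Observation", "example-123",
                          LOINC_SYSTEM, ["CODE-A", "CODE-B"])
med_url = fhir_search_url("https://fhir.example.org", "MedicationRequest", "example-123",
                          RXNORM_SYSTEM, ["RX-A"])
```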

Our results indicate that clinicians prefer the POV to the SV in a simulation environment. Broad use of a POV will require a knowledge base that contains a sufficient number of problem-clinical data relationships.34 The knowledge base of maps must cover the most commonly encountered conditions for a range of clinical specialties. We estimate this number of maps to be 150-200. Progress on map creation can be seen at the Problem List MetaData website.35

A successful POV requires special EHR functionality from vendors and a knowledge base that specifies relationships between clinical problems and EHR data elements. Several EHR vendors have worked on such functionality in their research and development divisions. However, EHR vendors may not be well positioned to develop and maintain the clinical knowledge bases needed to drive such displays. The publicly available knowledge base we are developing as part of this project will help this vision become reality. Several PCMs are currently used in production by Epic customers (see Figure 3), and we continue to work with other EHR vendors to implement a POV using PCMs in their software. As early-adopting customers start to use POV more widely, market forces should push more vendors to take up this improvement in EHR design. The PCMs already in existence will facilitate the offering of a POV by these other vendors.

Ultimately, we envision not only the creation and maintenance of several hundred PCMs but also ongoing partnership with EHR developers to continuously improve POV user interfaces. As the knowledge base of PCMs grows to cover the most commonly encountered problems, future study should evaluate the real-world clinical impacts of this innovation, including attempts to identify improvements in efficiency, usability, and provider satisfaction.

CONCLUSION

This study demonstrates the value of a POV, which displays relevant clinical data to assist in decision making. Expansion of such a system has the potential to streamline clinical workflows and allow for more efficient and accurate data retrieval while decreasing cognitive load and improving user satisfaction. The findings of our study support the importance of ongoing development of POV functionality and creation of the requisite map knowledge base.

FUNDING

This study was supported by funding from the University of Wisconsin Institute for Clinical Translational Research Novel Methods Pilot Award, National Institutes of Health grant no. UL1TR002373 (to JB and MS).

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

DATA AVAILABILITY

The data underlying this article will be shared on reasonable request to the corresponding author.

ACKNOWLEDGMENTS

We appreciate the support and encouragement of Peggy Hatfield and Mark Drezner, MD, of the University of Wisconsin Institute for Clinical Translational Research and of Maureen Smith, MD, of the University of Wisconsin Health Innovation Program. We thank David Rubins, MD, of Brigham and Women’s Hospital for assistance with subject recruitment.

We additionally express our deep appreciation to all the volunteer subject matter expert physicians who have participated in the problem concept map consensus process. These physicians are essential to the process of producing problem concept maps.

CONFLICT OF INTEREST STATEMENT

None.

REFERENCES

- 1. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Inform Assoc 2019; 26 (2): 106–14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Toll E. A piece of my mind. The cost of technology. Jama 2012; 307 (23): 2497–8. [DOI] [PubMed] [Google Scholar]

- 3. Friedberg MW, Van Busum KR, Chen PG, et al. Factors affecting physician professional satisfaction. 2013. https://www.rand.org/pubs/research_briefs/RB9740.html Accessed October 2, 2019. [PMC free article] [PubMed]

- 4. Shanafelt TD, Dyrbye LN, Sinsky C, et al. Relationship between clerical burden and characteristics of the electronic environment with physician burnout and professional satisfaction. Mayo Clin Proc 2016; 91 (7): 836–48. [DOI] [PubMed] [Google Scholar]

- 5. Downing NL, Bates DW, Longhurst CA.. Physician burnout in the electronic health record era: are we ignoring the real cause? Ann Intern Med 2018; 169 (1): 50. [DOI] [PubMed] [Google Scholar]

- 6.Office of the National Coordinator for Health Information Technology. Health IT Quick Stats. https://dashboard.healthit.gov/quickstats/quickstats.php Accessed October 2, 2019.

- 7. Han S, Shanafelt TD, Sinsky CA, et al. Estimating the attributable cost of physician burnout in the United States. Ann Intern Med 2019; 170 (11): 784–90. [DOI] [PubMed] [Google Scholar]

- 8. Hamidi MS, Bohman B, Sandborg C, et al. Estimating institutional physician turnover attributable to self-reported burnout and associated financial burden: a case study. BMC Health Serv Res 2018; 18 (1): 851. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Feblowitz JC, Wright A, Singh H, et al. Summarization of clinical information: a conceptual model. J Biomed Inform 2011; 44 (4): 688–99. [DOI] [PubMed] [Google Scholar]

- 10. Laxmisan A, McCoy AB, Wright A, et al. Clinical summarization capabilities of commercially-available and internally-developed electronic health records. Appl Clin Inform 2012; 3 (1): 80–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. McDonald CJ, Callaghan FM, Weissman A, et al. Use of internist’s free time by ambulatory care electronic medical record systems. JAMA Intern Med 2014; 174 (11): 1860–3. [DOI] [PubMed] [Google Scholar]

- 12. Pivovarov R, Elhadad N.. Automated methods for the summarization of electronic health records. J Am Med Inform Assoc 2015; 22 (5): 938–47. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Christensen T, Grimsmo A.. Instant availability of patient records, but diminished availability of patient information: a multi-method study of GP’s use of electronic patient records. BMC Med Inform Decis Mak 2008; 8 (1): 12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. O’Donnell A, Kaner E, Shaw C, et al. Primary care physicians’ attitudes to the adoption of electronic medical records: a systematic review and evidence synthesis using the clinical adoption framework. BMC Med Inform Decis Mak 2018; 18 (1): 101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Weed LL. Medical records that guide and teach. N Engl J Med 1968; 278 (12): 652–7. [DOI] [PubMed] [Google Scholar]

- 16. Schultz JR, Cantrill SV, Morgan KG. An initial operational problem oriented medical record system: for storage, manipulation and retrieval of medical data. In: AFIPS ’71 (Spring): Proceedings of the May 18-20, 1971, Spring Joint Computer Conference; 1971: 239–64.

- 17. Salmon P, Rappaport A, Bainbridge M, et al. Taking the problem oriented medical record forward. Proc AMIA Annu Fall Symp 1996; 463–7. [PMC free article] [PubMed] [Google Scholar]

- 18. American Recovery and Reinvestment Act of 2009 : Law, Explanation and Analysis : P.L. 111-5, as Signed by the President on February 17, 2009. Chicago, IL: CCH; 2009. https://search.library.wisc.edu/catalog/9910071527602121

- 19. Blumenthal D, Tavenner M.. The “Meaningful Use” regulation for electronic health records. N Engl J Med 2010; 363 (6): 501–4. [DOI] [PubMed] [Google Scholar]

- 20.Centers for Medicare & Medicaid Services (CMS), HHS. Medicare and Medicaid programs; electronic health record incentive program–stage 2. Final rule. Fed Regist 2012; 77: 53967–4162. [PubMed] [Google Scholar]

- 21. Bainbridge M, Salmon P, Rappaport A, et al. The Problem Oriented Medical Record - just a little more structure to help the world go round? In: Proceedings of the 1996 Annual Conference of the Primary Health Care Specialist Group; 1996. . [Google Scholar]

- 22. Simons SMJ, Cillessen FHJM, Hazelzet JA.. Determinants of a successful problem list to support the implementation of the problem-oriented medical record according to recent literature. BMC Med Inform Decis Mak 2016; 16: 102. doi : 10.1186/s12911-016-0341-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Bashyam V, Hsu W, Watt E, et al. Problem-centric organization and visualization of patient imaging and clinical data. Radiographics 2009; 29 (2): 331–43. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Wright A, Chen ES, Maloney FL.. An automated technique for identifying associations between medications, laboratory results and problems. J Biomed Inform 2010; 43 (6): 891–901. [DOI] [PubMed] [Google Scholar]

- 25. Wright A, Pang J, Feblowitz JC, et al. Improving completeness of electronic problem lists through clinical decision support: a randomized, controlled trial. J Am Med Inform Assoc 2012; 19 (4): 555–61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Wright A, Pang J, Feblowitz JC, et al. A method and knowledge base for automated inference of patient problems from structured data in an electronic medical record. J Am Med Inform Assoc 2011; 18 (6): 859–67. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Kane L. Medscape National Physician Burnout & Suicide Report 2020: the generational divide.https://www.medscape.com/slideshow/2020-lifestyle-burnout-6012460 Accessed September 5, 2020.

- 28. Jha AK, Iliff AR, Chaoui AA, et al. A Crisis in Health Care: A Call to Action on Physician Burnout. Waltham, MA: Massachusetts Medical Society, Massachusetts Health and Hospital Association, Harvard TH Chan School of Public Health, and Harvard Global Health Institute; 2019.

- 29. Kroth PJ, Morioka-Douglas N, Veres S, et al. The electronic elephant in the room: physicians and the electronic health record. JAMIA Open 2018; 1 (1): 49–56.

- 30. Kroth PJ, Morioka-Douglas N, Veres S, et al. Association of electronic health record design and use factors with clinician stress and burnout. JAMA Netw Open 2019; 2 (8): e199609.

- 31. Smith R. What clinical information do doctors need? BMJ 1996; 313 (7064): 1062–8.

- 32. Hill RG, Sears LM, Melanson SW. 4000 clicks: a productivity analysis of electronic medical records in a community hospital ED. Am J Emerg Med 2013; 31 (11): 1591–4.

- 33. Rajkomar A, Oren E, Chen K, et al. Scalable and accurate deep learning with electronic health records. NPJ Digit Med 2018; 1: 18.

- 34. Buchanan J. Accelerating the benefits of the problem oriented medical record. Yearb Med Inform 2017; 26 (01): 180–90.

- 35. Problem List MD: Problem List Metadata Generated by Expert Clinician Consensus. https://problemlist.org Accessed May 18, 2020.

- 36. SNOMED - Who we are. SNOMED. http://www.snomed.org/snomed-international/who-we-are Accessed October 1, 2019.

- 37. RxNorm. https://www.nlm.nih.gov/research/umls/rxnorm/index.html Accessed October 1, 2019.

- 38. About LOINC. https://loinc.org/about/ Accessed October 1, 2019.

- 39. Ahmed A, Chandra S, Herasevich V, et al. The effect of two different electronic health record user interfaces on intensive care provider task load, errors of cognition, and performance. Crit Care Med 2011; 39: 1626–34.

- 40. Pickering BW, Dong Y, Ahmed A, et al. The implementation of clinician designed, human-centered electronic medical record viewer in the intensive care unit: a pilot step-wedge cluster randomized trial. Int J Med Inform 2015; 84 (5): 299–307.

- 41. Harry EM, Shin GH, Neville BA, et al. Using cognitive load theory to improve posthospitalization follow-up visits. Appl Clin Inform 2019; 10 (4): 610–4.

- 42. Pollack AH, Pratt W. Association of health record visualizations with physicians’ cognitive load when prioritizing hospitalized patients. JAMA Netw Open 2020; 3 (1): e1919301.

- 43. Brooke J. SUS: a quick and dirty usability scale. Usability Eval Ind 1996; 189: 4–7.

- 44. Hart SG, Staveland LE. Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. In: Advances in Psychology. Vol. 52. North-Holland: Elsevier; 1988: 139–83.

- 45. Melnick ER, Dyrbye LN, Sinsky CA, et al. The association between perceived electronic health record usability and professional burnout among US physicians. Mayo Clin Proc 2020; 95 (3): 476–87.

- 46. Mazur LM, Mosaly PR, Moore C, et al. Association of the usability of electronic health records with cognitive workload and performance levels among physicians. JAMA Netw Open 2019; 2 (4): e191709.

- 47. Agency for Healthcare Research and Quality: Digital Healthcare Research. NASA Task Load Index. https://digital.ahrq.gov/health-it-tools-and-resources/evaluation-resources/workflow-assessment-health-it-toolkit/all-workflow-tools/nasa-task-load-index Accessed June 18, 2020.

- 48. Khairat S, Coleman C, Newlin T, et al. A mixed-methods evaluation framework for electronic health records usability studies. J Biomed Inform 2019; 94: 103175.

- 49. Harry E, Pierce R, Kneeland P, et al. Cognitive load and its implications for health care. NEJM Catalyst 2018; 4.

- 50. Ash JS, Sittig DF, Campbell EM, et al. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc 2007; 2007: 26–30.

- 51. Schleyer TKL, Rahurkar S, Baublet AM, et al. Preliminary evaluation of the Chest Pain Dashboard, a FHIR-based approach for integrating health information exchange information directly into the clinical workflow. AMIA Jt Summits Transl Sci Proc 2019; 2019: 656–64.

Supplementary Materials

Data Availability Statement

The data underlying this article will be shared on reasonable request to the corresponding author.