Abstract.

Purpose: Coronavirus disease 2019 (COVID-19) is a new infection that has spread worldwide, and no automatic model yet exists to reliably detect its presence from images. We aim to investigate the potential of deep transfer learning to predict COVID-19 infection using chest computed tomography (CT) and x-ray images.

Approach: Regions of interest (ROI) corresponding to ground-glass opacities (GGO), consolidations, and pleural effusions were labeled in 100 axial lung CT images from 60 COVID-19-infected subjects. These segmented regions were then employed as an additional input to six deep convolutional neural network (CNN) architectures (AlexNet, DenseNet, GoogleNet, NASNet-Mobile, ResNet18, and DarkNet), pretrained on natural images, to differentiate between COVID-19 and normal CT images. We also explored the models' ability to classify x-ray images as COVID-19, non-COVID-19 pneumonia, or normal. Performance on test images was measured with global accuracy and the area under the receiver operating characteristic curve (AUC).

Results: When raw CT images are used as input to the tested models, the highest accuracy of 82% and AUC of 88.16% are achieved. Incorporating the three ROIs as additional model inputs further boosts performance to an accuracy of 82.30% and an AUC of 90.10% (DarkNet). For x-ray images, we obtained an outstanding AUC of 97% for classifying COVID-19 versus normal versus other pneumonia. Combining chest CT and x-ray images, the DarkNet architecture achieves the highest accuracy of 99.09% and AUC of 99.89% in classifying COVID-19 versus non-COVID-19. Our results confirm the ability of deep CNNs with transfer learning to predict COVID-19 in both chest CT and x-ray images.

Conclusions: The proposed method could help radiologists increase the accuracy of their diagnosis and increase efficiency in COVID-19 management.

Keywords: convolutional neural network, Coronavirus disease 2019, transfer learning, radiomics

1. Introduction

In December 2019, a new coronavirus disease, named COVID-19 by the World Health Organization,1 was discovered in Wuhan, Hubei, China. This viral infection, for which there is no effective treatment to date, spread quickly within and outside China, causing severe acute respiratory syndrome (SARS) in the infected population.2 In March 2020, the crisis reached the pandemic stage as the worldwide outbreak accelerated.3 Many techniques have been used to estimate and identify the presence of COVID-19, including measuring body temperature, reverse-transcription polymerase chain reaction (RT-PCR), chest computed tomography (CT), and chest x-ray.4–7 Unfortunately, body temperature is not an accurate biomarker, and molecular analysis techniques (e.g., routine blood tests and infection biomarkers) are not only costly but also require long processing times. Moreover, they can potentially have serious side effects such as secondary infection. The RT-PCR test, which is widely used to confirm COVID-19 infection, can also produce false negatives. For instance, the studies in Refs. 8 and 9 found that 3% to 30% of COVID-19 patients who initially had a negative RT-PCR test showed a positive chest CT a few days later; the infection was then confirmed by a second RT-PCR. Given the low sensitivity of the RT-PCR test,10 automated and reliable methods to screen COVID-19 patients are required. Medical imaging techniques, such as chest CT and chest x-ray, offer a noninvasive alternative to identify COVID-19.11–14 However, clinicians are not always able to identify the small changes in scans caused by COVID-19. Therefore, there is a pressing need for intelligent tools to predict COVID-19 infection from medical images.

Imaging features derived from CT can describe characteristics of infected tissues15 and have been used to detect the presence of COVID-19. Several recent works have investigated the usefulness of CT imaging features for distinguishing COVID-19 from other viral infections.16–20 Unfortunately, COVID-19 has been shown to produce CT features similar to those of other types of pneumonia.17 Moreover, the study in Ref. 21 reported that COVID-19 can mimic diverse disease processes, including other infections, which can lead to misdiagnosis between COVID-19 and other viral pneumonia. It has been argued that automatically classifying COVID-19 versus other types of pneumonia could avoid unnecessary effort and decrease the spread of COVID-19 infection. Wong et al.22 also studied the appearance of COVID-19 in chest x-ray and its correlation with key findings in CT scans and RT-PCR tests. To date, only a few studies have considered imaging features obtained from deep learning models for predicting, detecting, and screening COVID-19.

Machine learning techniques have recently led to a paradigm shift in analyzing complex medical data. In particular, deep learning algorithms such as the convolutional neural network (CNN) have shown an outstanding ability to automatically process large amounts of medical images and to identify complex associations in high-dimensional data for disease diagnosis and treatment planning.23 Radiomics analysis, which extracts high-throughput features from medical images and uses them for multiple clinical prediction tasks, has had a high impact on medical image analysis and computer-aided diagnosis.24 For instance, radiomics models based on CT and x-ray have been proposed for predicting pneumonia associated with SARS-CoV-2 (COVID-19) infection25–27 and for assisting clinical decision making.28 Recently, deep learning algorithms have been successfully applied to CT and x-ray images for the automated detection of COVID-19 (Refs. 14, 27, and 29–31) and for classifying bacterial versus viral pneumonia in pediatric chest radiographs.32 Moreover, many studies have shown the usefulness of CT features related to COVID-19 [e.g., ground-glass opacities (GGO), mixed GGO and consolidation, and subpleural lesions].33,34 Despite these achievements, more investigation is needed to separately analyze the impact of imaging features derived from specific regions of interest (ROI) in CT, namely GGO, consolidation, and pleural effusion (PE), in predicting COVID-19.

While recent work35 has shown the advantage of deep CNNs over traditional radiomic pipelines for predicting clinical outcomes, directly applying such a strategy is prone to overfitting when few labeled examples are available, leading to poor generalization on new data. To overcome the problem of limited training data, the work in Ref. 36 proposed using entropy-related features extracted at different layers of a CNN to train a separate classifier for the final prediction. This approach is based on the principle of transfer learning, where convolutional features learned for a related image analysis task are reused to improve the learning of a new task. This technique is well suited for detecting anomalies such as lesions in medical images, since those anomalies are typically characterized by local changes in texture rather than by high-level structures in the image. Therefore, low-level features in the network, which capture general characteristics of texture, can be transferred across different image analysis tasks. However, an important limitation of that work is that it summarized CNN features in a very limited number of texture descriptors and considered only a single network architecture. This study presents a deep transfer learning (DTL) approach to predict COVID-19 infections from abnormal chest CT and x-ray images. Specifically, we propose to exploit features learned by six different deep CNN architectures and to boost DTL models by using the ROIs in addition to the training images for predicting COVID-19. We hypothesize that pre-training these networks on a large dataset of images with confirmed COVID-19 ROIs can help learn informative features that capture local texture anomalies related to COVID-19 infections.
Moreover, we demonstrate that analyzing the distribution of these features within ROIs corresponding to distinct findings can yield a high accuracy for discriminating between COVID-19 and other types of pneumonia. The main contributions of our work are the following.

1. We propose a DTL approach that learns image features capturing tissue heterogeneity, which can effectively predict COVID-19 infection with limited training data.

2. To the best of our knowledge, this is the first work to analyze deep features by integrating separate CT lung ROI images (i.e., GGO, consolidation, and PE) in DTL models.

3. We present a comprehensive analysis of DTL for COVID-19 prediction, involving several datasets of different modalities and six deep CNN architectures. Our results demonstrate the potential of the proposed approach for differentiating between COVID-19 and other viral pneumonia.

The rest of this paper is structured as follows. Section 2 describes the data used in this study, as well as the proposed pipeline based on DTL. We then present the experimental results in Section 3 and discuss our main findings in Sec. 4. Finally, Sec. 5 concludes with a summary of our work’s main contributions and results.

2. Materials and Methods

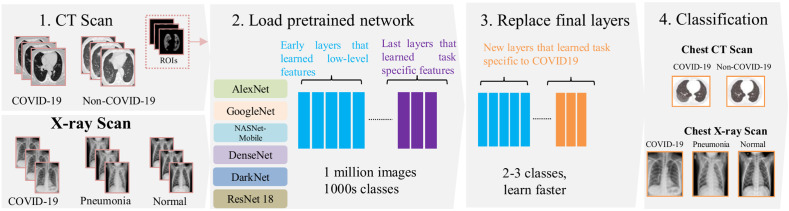

Figure 1 shows the pipeline for detecting the presence of COVID-19 in CT and x-ray images. First, images are acquired by a CT or x-ray scanner. One hundred axial CT images are then segmented semi-automatically using the MedSeg tool37 to label ROIs corresponding to three types of findings (GGO, consolidation, and PE); these ROIs are then added to the main CT images used in DTL training. X-ray images are used without segmentation. For transfer learning, six well-known CNN models are considered: AlexNet, DenseNet, GoogleNet, NASNet-Mobile, ResNet18, and DarkNet. These networks were pretrained on a large dataset for image classification and are adapted to the target tasks by replacing their final layers and fine-tuning the modified networks. Models are evaluated on three prediction tasks: (1) classifying COVID-19 versus non-COVID-19 CT scans, (2) classifying COVID-19 versus normal x-ray images, and (3) classifying COVID-19 versus other viral pneumonia in x-ray images.

Fig. 1.

Proposed pipeline for predicting COVID-19 from CT and x-ray images with deep transfer learning models. (1) Image acquisition of axial CT scans (or x-ray images) with semi-automatic labeling of lung lesion ROIs (GGO, consolidation, and PE); (3) and (4) six pretrained CNN models were considered, and their last layers were replaced to predict COVID-19.

2.1. Patients and Data Acquisition

Our study uses a total of 846 axial CT slice images (COVID-19 = 349, non-COVID-19 = 397, and COVID-19 ROIs = 100). The COVID-19 dataset contains 349 CT images with clinical findings of COVID-19 from 216 patients. These images were selected by a senior radiologist at Tongji Hospital, Wuhan, China, during the outbreak of the disease between January and April 2020 (https://github.com/UCSD-AI4H/COVID-CT). More details about these 349 COVID-19 images are given in Ref. 38.

Moreover, we collected a set of 397 non-COVID-19 CT slice images from 397 patients (36 from Lung Nodule Analysis,39 195 from MedPix,40 136 from PubMed Central,41 and 30 from Radiopaedia42), as detailed in Ref. 38. To tune our DTL models, we used another set of 100 slice images labeled with GGO, consolidation, and PE from 60 COVID-19 patients, obtained from the COVID-19 Radiology Data Collection and Preparation for Artificial Intelligence of the Italian Society of Medical and Interventional Radiology (SIRM).43 These labeled images had previously been de-identified by radiologists; therefore, no institutional review board or Health Insurance Portability and Accountability Act approval was required for our study. Details on the acquisition protocol can be found in Ref. 44. For ROI labeling, the images were in JPG format, resized to , converted to grayscale, and then compiled into a single NIfTI file. The segmentation was performed by radiologists using the MedSeg tool37 to delineate ROIs corresponding to GGO, consolidation, and PE findings. In some cases, a whole-abnormal-tissue label was used for findings that did not fit into any of the three ROI categories.

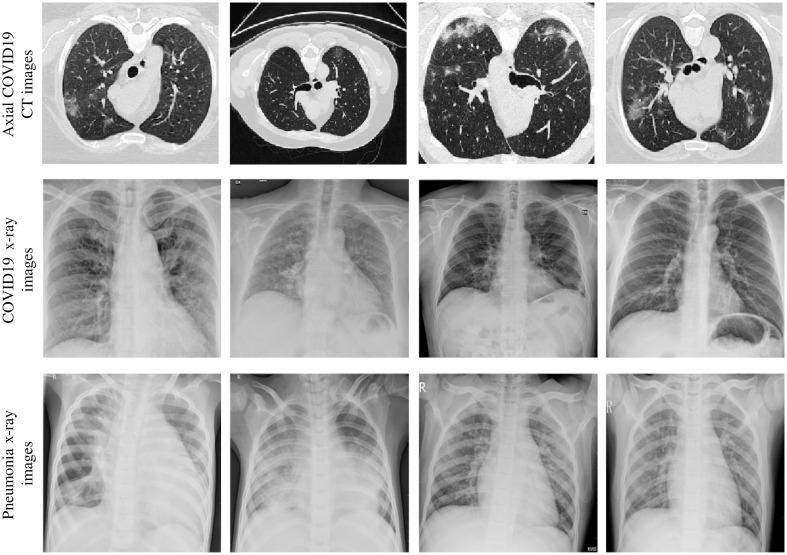

Moreover, our study also leverages 657 chest x-ray images collected from multiple sources: 219 x-ray images of COVID-19-infected patients from the COVID chest x-ray Dataset (http://github.com/ieee8023/covid-chestxray-dataset), the SIRM, Radiopaedia, and the Radiological Society of North America;45 and 219 x-rays of normal subjects and 219 of patients with pneumonia (i.e., viral and bacterial) from a publicly available Kaggle dataset.32,46,47 These chest x-ray images were in JPG format and were obtained from multiple sites with various scanner models, pixel spacings, and contrasts. To account for these differences, we resampled all images to a common resolution (i.e., ) with a size of . All CT and x-ray images were also normalized to the [0, 255] range. Figure 2 shows examples of COVID-19 chest CT images, and x-ray images of COVID-19 and non-COVID-19 pneumonia.

Fig. 2.

Examples of COVID-19 in CT and x-ray images. First row: axial COVID-19 CT images with lesions in different positions and sizes. Second row: COVID-19 x-ray images. Third row: pneumonia x-ray images.

2.2. Deep Convolutional Neural Networks

Deep CNNs have demonstrated impressive performance in various image classification tasks, in particular when large sets of images are available.27,28 Various CNN architectures have been proposed for different applications in computer vision,48 big data, and biomedical imaging.49 At a high level, CNN architectures comprise a repeated stack of convolution and pooling layers, followed by one or more fully connected layers.50 Convolution layers apply learned filters to extract spatial features from an input image. These features encode different levels of abstraction, with initial layers capturing local image patterns and texture, and deeper layers extracting high-level features representing the global structure. To add nonlinearity, a non-saturating activation function such as the rectified linear unit30 is typically employed; such a function helps alleviate the vanishing gradient problem when training deep networks. Pooling layers (e.g., maximum or average) are typically added after each convolution block to reduce the spatial dimension of feature maps and make the network more robust to small image translations. CNNs for classification also have fully connected layers at the end of the network, followed by an output layer (e.g., softmax) that converts logits into class probabilities. During training, convolutional filters and fully connected layer weights are updated using the backpropagation algorithm.
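The layer sequence described above can be illustrated with a minimal NumPy sketch of one forward pass through a toy CNN: a bank of 3×3 filters, ReLU, 2×2 max pooling, a fully connected layer, and softmax. It is only an illustration under stated assumptions: the image size, filter count, and random weights are hypothetical, training by backpropagation is omitted, and it is not one of the six architectures used in this study (whose processing was done in MATLAB).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN convolution layers compute."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Non-saturating activation: max(x, 0)."""
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/cols that do not fill a window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

def softmax(logits):
    """Convert logits to class probabilities (max subtracted for numerical stability)."""
    e = np.exp(logits - logits.max())
    return e / e.sum()

def forward(image, kernels, fc_weights, fc_bias):
    """conv -> ReLU -> max pool per filter, then flatten -> fully connected -> softmax."""
    maps = [max_pool(relu(conv2d_valid(image, k))) for k in kernels]
    features = np.concatenate([m.ravel() for m in maps])
    return softmax(features @ fc_weights + fc_bias)

# Toy example: one 8x8 grayscale input, four random 3x3 filters, two output classes.
rng = np.random.default_rng(0)
image = rng.random((8, 8))
kernels = rng.standard_normal((4, 3, 3))
# Each filter: 8x8 -> 6x6 after conv -> 3x3 after pooling = 9 values; 4 filters -> 36 features.
fc_weights = 0.1 * rng.standard_normal((36, 2))
fc_bias = np.zeros(2)
probs = forward(image, kernels, fc_weights, fc_bias)
```

Each pooling stage halves the spatial size here, which is the dimension reduction the paragraph refers to; stacking more conv/pool blocks before the fully connected layer gives the deeper, more abstract features of real architectures.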

2.3. Proposed Transfer Learning Approach

Transfer learning is a powerful strategy that enables deep neural networks to be trained quickly and effectively with a limited amount of labeled data. The basic idea is to take a network pretrained on a large available dataset and use its features as a representation for learning a new task, without retraining from scratch. Transferred features can be used directly as input to a new model or adapted to the new task via fine-tuning.

Following this strategy, our method uses six well-known CNN architectures, i.e., AlexNet, GoogleNet, NASNet-Mobile, DenseNet, DarkNet, and ResNet18, pretrained for image classification on the ImageNet dataset. This dataset contains over 14 million natural images belonging to about 20 thousand categories.51 Although the CT and x-ray images in our study are very different from those in this dataset, we argue that the relevant information for detecting COVID-19 lies in local changes in texture and that this information can be captured effectively with a general set of low-level features. To adapt these pretrained networks to the task of differentiating between COVID-19 and pneumonia or normal lung images, we replace all layers following the last convolution block (i.e., fully connected and softmax) with new layers of the correct size (e.g., 2 classes for CT images and 3 classes for x-ray images), and fine-tune the modified networks using training examples of the new tasks.
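The head-replacement step can be sketched in NumPy under clearly hypothetical assumptions: a fixed random projection followed by ReLU stands in for the pretrained convolutional blocks (no ImageNet weights are involved), images are toy 16×16 arrays, and the learning rate, momentum, and epoch count are illustrative values rather than the study's settings. The new fully connected layer is sized to the number of target classes (2 for CT, 3 for x-ray), randomly initialized, and trained with mini-batch SGD with momentum on a softmax cross-entropy loss. For brevity, only the new head is updated here, whereas the study reports updating all network parameters.

```python
import numpy as np

rng = np.random.default_rng(0)

# HYPOTHETICAL stand-in for a pretrained backbone: a fixed random projection + ReLU.
# In the study, this role is played by the convolutional blocks of an ImageNet-pretrained CNN.
N_PIXELS, FEATURE_DIM = 16 * 16, 64
backbone_w = rng.standard_normal((N_PIXELS, FEATURE_DIM)) / 16.0

def backbone(x):
    """Map flattened images of shape (n, N_PIXELS) to features of shape (n, FEATURE_DIM)."""
    return np.maximum(x @ backbone_w, 0.0)

def new_head(n_classes, seed=1):
    """Randomly initialized fully connected layer replacing the original classifier head."""
    r = np.random.default_rng(seed)
    return {"W": 0.01 * r.standard_normal((FEATURE_DIM, n_classes)), "b": np.zeros(n_classes)}

def softmax(z):
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def train_head(x, y, n_classes, lr=0.1, momentum=0.9, epochs=50, batch_size=10):
    """Train only the new head with mini-batch SGD with momentum on softmax cross-entropy."""
    head = new_head(n_classes)
    velocity = {k: np.zeros_like(v) for k, v in head.items()}
    feats = backbone(x)  # frozen backbone: features are computed once
    shuffle_rng = np.random.default_rng(2)
    for _ in range(epochs):
        order = shuffle_rng.permutation(len(x))
        for start in range(0, len(x), batch_size):
            idx = order[start:start + batch_size]
            f, t = feats[idx], y[idx]
            grad_logits = softmax(f @ head["W"] + head["b"])
            grad_logits[np.arange(len(idx)), t] -= 1.0  # d(loss)/d(logits) = p - one_hot
            grads = {"W": f.T @ grad_logits / len(idx), "b": grad_logits.mean(axis=0)}
            for k in head:
                velocity[k] = momentum * velocity[k] - lr * grads[k]
                head[k] += velocity[k]
    return head

def predict(head, x):
    return (backbone(x) @ head["W"] + head["b"]).argmax(axis=1)

# Toy two-class data (dark vs. bright 16x16 images), standing in for CT classes.
n = 100
y = np.repeat([0, 1], n // 2)
x = np.where(y[:, None] == 1,
             rng.uniform(0.7, 1.0, (n, N_PIXELS)),
             rng.uniform(0.0, 0.3, (n, N_PIXELS)))
head = train_head(x, y, n_classes=2)
train_acc = float((predict(head, x) == y).mean())
```

Keeping the backbone frozen, as here, corresponds to using transferred features directly; back-propagating through the convolutional layers as well gives the full fine-tuning described in the text.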

For training, we randomly initialized the weights of the new fully connected layers and employed stochastic gradient descent with momentum to update all network parameters. We set the batch size to 10, the learning rate to , and the number of epochs to 10. The dataset was split into three independent subsets containing different subjects, for training (429 CT patients; 460 x-ray patients), validation (61 CT patients; 66 x-ray patients), and testing (123 CT patients; 131 x-ray patients). To reduce overfitting,52 we augmented the training dataset using the following image transformations: random flipping, rotation, translation, and scaling.
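The four augmentations listed above can be combined into a single affine warp. The sketch below uses SciPy's `scipy.ndimage.affine_transform` rather than the MATLAB tooling used in the study; the choice of a horizontal flip only and the rotation, shift, and scale ranges in the hypothetical `random_augment` helper are assumptions, since the text does not report them.

```python
import numpy as np
from scipy.ndimage import affine_transform

def affine_augment(img, angle_deg=0.0, shift=(0.0, 0.0), scale=1.0, flip=False):
    """Optional horizontal flip, then rotation and isotropic scaling about the image
    centre, then translation by `shift` (rows, cols). Output keeps the input shape."""
    img = np.asarray(img, dtype=float)
    if flip:
        img = np.fliplr(img)
    theta = np.deg2rad(angle_deg)
    forward = scale * np.array([[np.cos(theta), -np.sin(theta)],
                                [np.sin(theta),  np.cos(theta)]])
    # affine_transform samples the input at (matrix @ o + offset) for each output
    # coordinate o, so we pass the inverse of the forward rotation/scaling.
    inverse = np.linalg.inv(forward)
    centre = (np.array(img.shape, dtype=float) - 1.0) / 2.0
    offset = centre - inverse @ (centre + np.asarray(shift, dtype=float))
    # Bilinear interpolation; pixels mapped from outside the image are set to 0.
    return affine_transform(img, inverse, offset=offset, order=1, mode="constant", cval=0.0)

def random_augment(img, rng, max_angle=10.0, max_shift=5.0, scale_range=(0.9, 1.1)):
    """Draw random flip/rotation/translation/scaling parameters (ranges are ASSUMED)."""
    return affine_augment(
        img,
        angle_deg=rng.uniform(-max_angle, max_angle),
        shift=rng.uniform(-max_shift, max_shift, size=2),
        scale=rng.uniform(*scale_range),
        flip=bool(rng.integers(0, 2)),
    )
```

Applying `random_augment` afresh to each training image in every epoch yields a different variant each time, while validation and test images are left untouched.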

2.4. Evaluation Metrics

The performance of the tested models was evaluated on test images using the area under the receiver operating characteristic (ROC) curve (AUC), accuracy, and the confusion matrix. We measured performance separately for the prediction tasks using CT and x-ray images. The statistical significance of performance differences was assessed using the Wilcoxon test.53 For multiple comparisons, we used the Holm–Bonferroni method to correct the obtained p-values.54 All processing and analysis steps were performed using MATLAB's Deep Learning, Statistics and Machine Learning toolboxes.
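For reference, the three evaluation measures can be computed as in the following NumPy sketch (not the MATLAB functions used in the study). The AUC is computed from its rank interpretation, i.e., the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one, with ties counted as one half; this pairwise form uses O(n_pos × n_neg) memory, which is acceptable at test-set sizes like those here. How a multiclass AUC was obtained for the three-class x-ray task is not stated; a one-vs-rest average would be one assumption.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Counts with rows = true class and columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def accuracy(y_true, y_pred):
    """Fraction of correctly classified samples (global accuracy)."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

def roc_auc(y_true, scores):
    """Binary ROC AUC = P(score of a random positive > score of a random negative),
    counting ties as 1/2 (the normalized Mann-Whitney U statistic)."""
    y_true = np.asarray(y_true).astype(bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    diff = pos[:, None] - neg[None, :]  # every positive-negative pair
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / (len(pos) * len(neg)))
```

For example, `roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])` evaluates to 0.75, since three of the four positive/negative pairs are ranked correctly.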

3. Results

As mentioned before, our experiments use a dataset of 746 CT images (COVID-19 = 349 images from 216 patients; non-COVID-19 = 397 images from 397 patients) and 657 chest x-ray images (219 COVID-19, 219 normal, and 219 pneumonia). To assess the ROIs (i.e., GGO, consolidation, and PE), we added 100 CT images from 60 patients with COVID-19, containing a total of 95 GGO, 80 consolidation, and 25 PE ROIs.

In Table 1, we observe test accuracies ranging from 70% to 79% for AlexNet, GoogleNet, and ResNet18, and from 80% to 82.80% for DarkNet, DenseNet, and NASNet-Mobile. The table also reports each model's baseline accuracy and the effect of adding ROIs on predicting COVID-19.

Table 1.

Test accuracy (%) of the tested models for classifying COVID-19 versus non-COVID-19 CT images with different finding labels.

| CNNs | Baseline | + GGO | + Consolidation | + PE | + All ROIs |
|---|---|---|---|---|---|
| AlexNet | 70.00 | 73.40* | 75.90** | 73.40* | 78.80** |
| GoogleNet | 72.40 | 75.90* | 72.40 | 72.40 | 74.40* |
| DenseNet | 80.80 | 79.30 | 79.30 | 80.80 | 77.80 |
| NASNet-Mobile | 80.30 | 82.30 | 78.80 | 80.80 | 82.30* |
| DarkNet | 82.30 | 80.80 | 82.80 | 80.30 | 82.30 |
| ResNet18 | 79.00 | 78.30 | 79.80 | 77.80 | 80.80 |

Note: * and ** denote significant results (p-values corrected following Holm–Bonferroni).

Note: Bold values represent the maximum value for each CNN model.

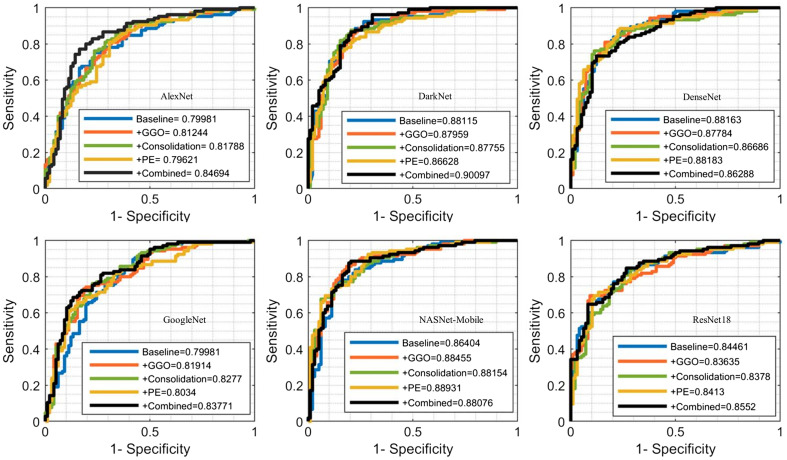

We find that incorporating GGO ROIs into the training images improved the accuracy of the AlexNet, GoogleNet, and NASNet-Mobile models. On the other hand, combining training images with consolidation or PE ROIs increased the accuracy only for the AlexNet model. Considering all ROIs together, we found that the accuracy increased for AlexNet, GoogleNet, and NASNet-Mobile. Next, we computed the ROC AUC of all six models for predicting COVID-19 using baseline images alone and combined with GGO, consolidation, PE, and all ROI labels. The highest AUC, 90.09%, was obtained by the DarkNet model using the combined ROIs (Fig. 3). Except for the DenseNet model, AUC increases when baseline images are combined with ROIs. With DarkNet, AUCs of 88.45%, 88.15%, and 88.89% are achieved with the three single-ROI combinations.

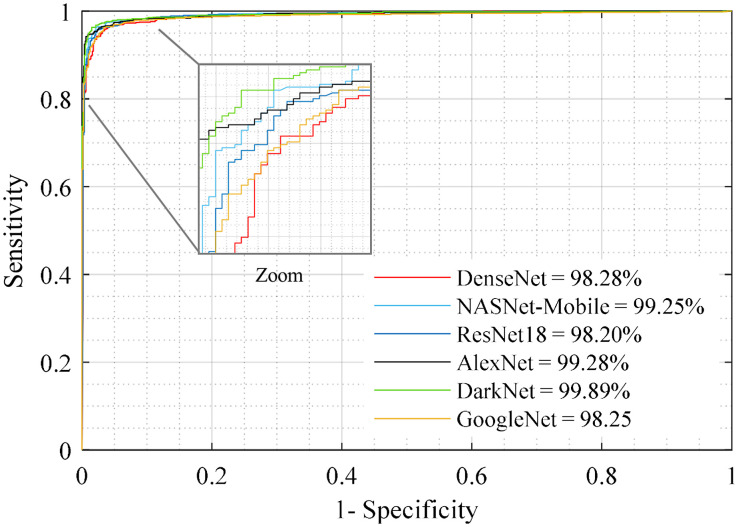

Fig. 3.

Receiver operating characteristic (ROC) curves and AUC values for predicting COVID-19 from CT images using deep transfer learning models.

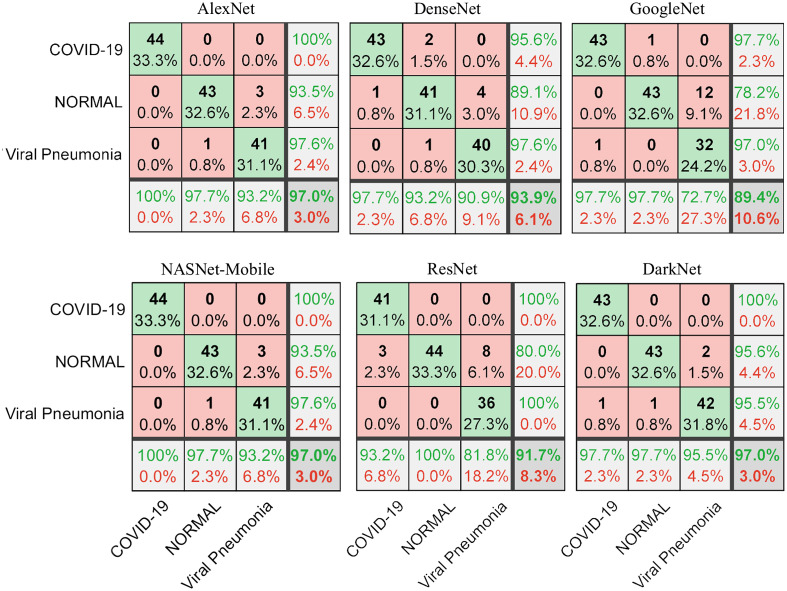

Figure 4 shows the confusion matrices of the six DTL models on the task of distinguishing COVID-19 from normal and pneumonia x-ray images in the test set (20%). AlexNet and NASNet-Mobile yield the highest accuracy: 97% for predicting all three classes and 100% for differentiating COVID-19 samples from the normal and pneumonia classes.

Fig. 4.

Confusion matrices on the test set (20%) for classifying COVID-19, normal, and pneumonia x-ray images.

To measure the impact of DTL on COVID-19 analysis, we combined CT and x-ray images into two groups: COVID-19 (CT: 276 patients + x-ray: 219 patients) and non-COVID-19 (CT: 397 patients + x-ray: 219 normal subjects + 219 pneumonia patients). We applied five-fold cross-validation (CV) to predict COVID-19 from these combined CT and x-ray images. Splits in each CV fold are based on patients, to avoid sharing similar images between training and test sets. In each fold, 15% of the training examples were used as a validation set to choose hyperparameters. We then measured the average accuracy and AUC across the five folds (Table 2 and Fig. 5). DTL models achieve accuracies of 96.66% to 99.09% and AUCs of 98.12% to 99.89%. The DarkNet architecture shows the highest accuracy and AUC, 99.09% and 99.89%, respectively; its difference from AlexNet, GoogleNet, DenseNet, and ResNet18 is significant (corrected p < 0.05), whereas its difference from NASNet-Mobile is not (corrected p = 0.08) (Table 3).
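A patient-level split such as the one described above can be sketched as grouped k-fold CV. This is a minimal sketch, assuming every image carries a patient (or subject) identifier; the function name `grouped_kfold` and the seed are hypothetical, and the 15% validation portion is drawn per patient here, which is an assumption since the text states it as a fraction of training examples.

```python
import numpy as np

def grouped_kfold(patient_ids, n_splits=5, val_fraction=0.15, seed=0):
    """Yield (train_idx, val_idx, test_idx) image-index arrays such that, within each
    fold, all images of a given patient fall in exactly one of the three sets."""
    patient_ids = np.asarray(patient_ids)
    rng = np.random.default_rng(seed)
    patients = rng.permutation(np.unique(patient_ids))  # shuffle patients, not images
    folds = np.array_split(patients, n_splits)
    for k in range(n_splits):
        test_p = folds[k]
        rest = np.concatenate([folds[j] for j in range(n_splits) if j != k])
        n_val = int(round(val_fraction * len(rest)))
        val_p, train_p = rest[:n_val], rest[n_val:]
        yield (np.flatnonzero(np.isin(patient_ids, train_p)),
               np.flatnonzero(np.isin(patient_ids, val_p)),
               np.flatnonzero(np.isin(patient_ids, test_p)))

# Example: 20 hypothetical patients with 3 images each.
ids = np.repeat(np.arange(20), 3)
folds = list(grouped_kfold(ids))
```

Because patients rather than images are assigned to folds, each image is tested exactly once across the five folds, and near-duplicate slices from one patient never appear on both sides of a split.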

Table 2.

Average over five CV folds (%) for predicting COVID-19 versus non-COVID-19 (CT + x-ray images).

| CNNs | Accuracy (%) | AUC (%) |
|---|---|---|
| AlexNet | 97.04 | 99.28 |
| GoogleNet | 96.84 | 98.25 |
| DenseNet | 96.66 | 98.12 |
| NASNet-Mobile | 98.72 | 99.25 |
| DarkNet | 99.09 | 99.89 |
| ResNet18 | 96.80 | 98.20 |

Fig. 5.

ROC curves for predicting COVID-19 from combined CT + x-ray images using DTL models.

Table 3.

Corrected p-values between CNN classifiers for predicting COVID-19 from other viral pneumonia.

| CNNs | AlexNet | GoogleNet | DenseNet | NASNet-Mobile | DarkNet | ResNet18 |
|---|---|---|---|---|---|---|
| AlexNet | — | — | — | — | — | — |
| GoogleNet | 0.21 | — | — | — | — | — |
| DenseNet | 0.24 | 0.53 | — | — | — | — |
| NASNet-Mobile | 0.08 | 0.04 | 0.04 | — | — | — |
| DarkNet | 0.03 | 0.02 | 0.02 | 0.08 | — | — |
| ResNet18 | 0.44 | 0.45 | 0.43 | 0.03 | 0.04 | — |
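Table 3 reports p-values after Holm–Bonferroni correction over the 15 pairwise comparisons among six classifiers. A minimal sketch of the Holm step-down adjustment is given below in plain Python. The raw p-values it consumes would come from the pairwise Wilcoxon tests (e.g., `scipy.stats.wilcoxon` in Python), which are not reproduced here, and the example inputs are illustrative values, not taken from the study.

```python
def holm_bonferroni(p_values):
    """Holm step-down adjusted p-values, returned in the original order.

    Sort ascending, multiply the k-th smallest (k = 0, 1, ...) by (m - k),
    carry a running maximum so adjusted values never decrease, and cap at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for k, i in enumerate(order):
        running_max = max(running_max, min(1.0, (m - k) * p_values[i]))
        adjusted[i] = running_max
    return adjusted

# Illustrative raw p-values (not taken from the study).
raw = [0.01, 0.04, 0.03]
adjusted = holm_bonferroni(raw)
significant = [p < 0.05 for p in adjusted]
```

Here the smallest p-value is multiplied by 3, the next by 2, and the largest by 1, giving adjusted values of about 0.03, 0.06, and 0.06, so only the first comparison remains significant at the 0.05 level.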

Table 4 compares our results with those of previous works. Our approach yields higher performance than the existing literature when x-ray scans are used, with an increase in accuracy.

Table 4.

Summary of CNN performance metrics (%) for COVID-19 diagnosis using CT and/or x-ray scans.

| AI models | Evaluation | Accuracy (%) | AUC (%) | Imaging |
|---|---|---|---|---|
| Yang et al.55 | | 89.00 | 98.00 | CT |
| Loey et al.56 | | 82.91 | — | CT |
| Maghdid et al.57 | | 94.10 to 94.00 | — | CT + x-ray |
| Li et al.14 | | — | 96.00 | CT |
| Our work (i.e., DarkNet) | Training/validation/test | 82.80a | 90.00 | CT |
| Our work (i.e., DarkNet) | Training/validation/test | 97.00b | — | x-ray |
| Our work (i.e., DarkNet) | Five-fold CV | 99.09a | 99.89 | CT + x-ray |

a 2 classes: COVID-19 versus non-COVID-19.

b 3 classes: COVID-19 versus normal versus pneumonia.

4. Discussion

The diagnostic value of chest CT and x-ray is mainly related to the detection of abnormal tissues (lesions) that are not missed by radiography in the early stage. Detecting these abnormalities helps characterize lesions for further clinical classification and treatment. In this context, deep learning algorithms can be used to improve radiologists' sensitivity in COVID-19 diagnosis. Specifically, these algorithms have recently demonstrated their potential for screening and detecting COVID-19 in CT and x-ray images.14,58–61 Together, these studies demonstrate the importance of artificial intelligence in facilitating the prediction of COVID-19 from CT and x-ray images.60,62–68

We considered DTL models as a noninvasive technique to detect the presence of COVID-19. Our results indicate that these models can differentiate COVID-19 from non-COVID-19 in CT and x-ray test images, with a highest accuracy of 97% on x-ray images. Using five-fold CV, the DarkNet model demonstrated the highest performance, with an accuracy and AUC of 99.09% and 99.89%, respectively. This finding is consistent with previous studies that used deep learning to predict, detect, and screen COVID-19 patients.26,59,60,69 For example, pretrained CNNs (ResNet50, Inception V3, and Inception-ResNetV2) have been applied to predict COVID-19 from chest x-ray images. Also using deep learning, Ref. 29 achieved an AUC of 99.4% for detecting COVID-19 versus non-COVID-19. Likewise, a modified pretrained AlexNet model applied to x-ray and CT images obtained an accuracy of 94%.57 In Ref. 69, a CNN model with 17 convolutional layers achieved an accuracy of 87% for multiclass classification (COVID-19 versus normal versus other pneumonia). In Ref. 70, a deep model for COVID-19 detection (COVID-Net) gave 92.4% accuracy in classifying normal, non-COVID pneumonia, and COVID-19 classes. Comparing CT and x-ray findings, the results in Ref. 22 suggest that chest x-ray could be helpful for monitoring and prognosis but is not recommended for screening.

Compared with previous studies, our findings show the importance of ROIs, namely regions corresponding to consolidation, in predicting COVID-19. These results are promising for detecting, classifying, and predicting COVID-19 despite the small number of images used. Furthermore, our results demonstrate the usefulness of transfer learning for extracting multiscale texture patterns in COVID-19 CT images.71

So far, AI algorithms applied to COVID-19 chest CT and x-ray scans have shown potential to improve diagnosis by reducing subjectivity and variability.72 The detection of common findings such as GGO, consolidation, and crazy-paving appearance73 can also be affected by the timing of the examination relative to symptom onset and by pre-existing clinical characteristics of the patient. For example, it was found that patients with negative findings on initial chest CT scans later showed rounded peripheral GGO on follow-up scans.12 Similar observations were made in Refs. 8 and 74. Moreover, as reported in Refs. 18 and 75, the appearance of GGO and consolidations may vary over time, which explains the discrepancy in reported sensitivity. Other studies report high sensitivity in diagnosing COVID-19 from CT scans. In addition, some studies have demonstrated the usefulness of CT scans for monitoring abnormalities in asymptomatic COVID-19 patients.76,77 For instance, 58 asymptomatic COVID-19 cases showed abnormal CT findings, predominantly GGO, and were confirmed with nucleic acid testing.77,78 On the other hand, Kim et al.79 showed that chest CT screening of patients with suspected disease had a low positive predictive value (range, 1.5% to 30.7%).

This work has some limitations that could be addressed in future work. We considered only 846 chest CT and 657 chest x-ray images; including a larger cohort from different regions of the world could provide a more comprehensive understanding of COVID-19. Moreover, clinical demographics of patients, including age, sex, treatments, and overall survival, were not available for every case and were thus not considered in this study.

5. Conclusions

We developed and investigated six DTL models that use CT and x-ray images to predict COVID-19. Our results showed that using ROIs of consolidation, GGO, and PE in CT images yields the highest accuracy in predicting COVID-19. Furthermore, our findings suggest that DTL models applied to CT and x-ray images could serve as an effective tool for identifying patients who may have contracted COVID-19. Specifically, the DarkNet model performed best among the tested DTL models. With these automatic models, future studies could reveal additional insights on radiomic markers to assess COVID-19 progression, thereby contributing toward improved diagnosis and treatment of this disease.

Acknowledgments

This work was supported by Foreign Young Talents Program (Grant No. QN20200233001).

Biographies

Ahmad Chaddad received his PhD in engineering systems from the University of Lorraine, Metz, France, in 2012. He worked for seven years at McGill University, École de technologie supérieure (ÉTS), the University of Texas MD Anderson Cancer Center, and Villanova University. In 2020, he joined the School of Artificial Intelligence, Guilin University of Electronic Technology, as a professor. His current research interests include AI and radiomics analysis. He has authored over 70 research papers.

Lama Hassan received her PhD in biological sciences from the University of Limoges, Limoges, France, in 2016. She worked at McGill University and CHU Sainte-Justine, Montreal, Canada. Her main research interests focus on molecular and cellular biology. She has authored over 10 research papers.

Christian Desrosiers received his PhD in computer engineering from Polytechnique Montreal in 2008. He was a postdoctoral researcher at the University of Minnesota on the topic of machine learning. In 2009, he joined the Department of Software and IT Engineering, ÉTS, University of Quebec, as a professor. His main research interests focus on machine learning, image processing, computer vision, and medical imaging.

Disclosures

No conflicts of interest, financial or otherwise, are declared by the authors.

Contributor Information

Ahmad Chaddad, Email: ahmadchaddad@guet.edu.cn.

Lama Hassan, Email: lama.hassan-85@hotmail.com.

Christian Desrosiers, Email: christian.desrosiers@etsmtl.ca.

References

- 1.“Naming the coronavirus disease (COVID-19) and the virus that causes it,” https://www.who.int/emergencies/diseases/novel-coronavirus-2019/technical-guidance/naming-the-coronavirus-disease-(covid-2019)-and-the-virus-that-causes-it (accessed 14 April 2020).

- 2.Gorbalenya A. E., et al. , “The species severe acute respiratory syndrome-related coronavirus: classifying 2019-nCoV and naming it SARS-CoV-2,” Nat. Microbiol. 5(4), 536–544 (2020). 10.1038/s41564-020-0695-z [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.“WHO Director-General’s opening remarks at the media briefing on COVID-19–11 March 2020,” https://www.who.int/dg/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19—11-march-2020 (accessed 14 April 2020).

- 4.Huang C., et al. , “Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China,” The Lancet 395(10223), 497–506 (2020). 10.1016/S0140-6736(20)30183-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Lu H., Stratton C. W., Tang Y.-W., “Outbreak of pneumonia of unknown etiology in Wuhan, China: the mystery and the miracle,” J. Med. Virol. 92(4), 401–402 (2020). 10.1002/jmv.25678 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Organization W. H., “Clinical management of severe acute respiratory infections when novel coronavirus is suspected: what to do and what not to do,” https://www.who.int/csr/disease/coronavirus_infections/InterimGuidance_ClinicalManagement_NovelCoronavirus_11Feb13u.pdf (2014).

- 7.Zhu N., et al. , “A novel coronavirus from patients with pneumonia in China, 2019,” N. Engl. J. Med. 382(8), 727–733 (2020). 10.1056/NEJMoa2001017 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Xie X., et al. , “Chest CT for typical coronavirus disease 2019 (COVID-19) pneumonia: relationship to negative RT-PCR testing,” Radiology 296(2), E41–E45 (2020). 10.1148/radiol.2020200343 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Fang Y., et al. , “Sensitivity of Chest CT for COVID-19: comparison to RT-PCR,” Radiology 296(2), E115–E117 (2020). 10.1148/radiol.2020200432 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Ai T., et al. , “Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases,” Radiology 296(2), E32–E40 (2020). 10.1148/radiol.2020200642 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. “ACR recommendations for the use of chest radiography and computed tomography (CT) for suspected COVID-19 infection,” https://www.acr.org/Advocacy-and-Economics/ACR-Position-Statements/Recommendations-for-Chest-Radiography-and-CT-for-Suspected-COVID19-Infection (accessed 14 April 2020).

- 12. Chung M., et al., “CT imaging features of 2019 novel coronavirus (2019-nCoV),” Radiology 295(1), 202–207 (2020). 10.1148/radiol.2020200230

- 13. Wynants L., et al., “Prediction models for diagnosis and prognosis of covid-19 infection: systematic review and critical appraisal,” BMJ 369, m1328 (2020). 10.1136/bmj.m1328

- 14. Li L., et al., “Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: evaluation of the diagnostic accuracy,” Radiology 296(2), E65–E71 (2020). 10.1148/radiol.2020200905

- 15. Li X., et al., “CT imaging changes of corona virus disease 2019 (COVID-19): a multi-center study in Southwest China,” J. Transl. Med. 18(1), 154 (2020). 10.1186/s12967-020-02324-w

- 16. Bai H. X., et al., “Performance of radiologists in differentiating COVID-19 from non-COVID-19 viral pneumonia at chest CT,” Radiology 296(2), E46–E54 (2020). 10.1148/radiol.2020200823

- 17. Salehi S., et al., “Coronavirus disease 2019 (COVID-19): a systematic review of imaging findings in 919 patients,” Am. J. Roentgenol. 215(1), 87–93 (2020). 10.2214/AJR.20.23034

- 18. Bernheim A., et al., “Chest CT findings in coronavirus disease-19 (COVID-19): relationship to duration of infection,” Radiology 295(3), 200463 (2020). 10.1148/radiol.2020200463

- 19. Zhao W., et al., “CT scans of patients with 2019 novel coronavirus (COVID-19) pneumonia,” Theranostics 10(10), 4606 (2020). 10.7150/thno.45016

- 20. Wang X., et al., “A weakly-supervised framework for COVID-19 classification and lesion localization from chest CT,” IEEE Trans. Med. Imaging 39(8), 2615–2625 (2020). 10.1109/TMI.2020.2995965

- 21. Kligerman S., et al., “Radiologic, pathologic, clinical, and physiologic findings of electronic cigarette or vaping product use-associated lung injury (EVALI): evolving knowledge and remaining questions,” Radiology 294(3), 491–505 (2020). 10.1148/radiol.2020192585

- 22. Wong H. Y. F., et al., “Frequency and distribution of chest radiographic findings in patients positive for COVID-19,” Radiology 296(2), E72–E78 (2020). 10.1148/radiol.2020201160

- 23. Abdollahi B., El-Baz A., Frieboes H. B., “Overview of deep learning algorithms applied to medical images,” in Big Data in Multimodal Medical Imaging, pp. 225–237, Chapman and Hall/CRC, Boca Raton (2019).

- 24. Chaddad A., et al., “Predicting survival time of lung cancer patients using radiomic analysis,” Oncotarget 8(61), 104393 (2017). 10.18632/oncotarget.22251

- 25. Qi X., et al., “Machine learning-based CT radiomics model for predicting hospital stay in patients with pneumonia associated with SARS-CoV-2 infection: a multicenter study,” Ann. Transl. Med. 8(14), 859 (2020). 10.21037/atm-20-3026

- 26. Apostolopoulos I. D., Mpesiana T. A., “Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks,” Phys. Eng. Sci. Med. 43, 635–640 (2020). 10.1007/s13246-020-00865-4

- 27. Luz E., et al., “Towards an effective and efficient deep learning model for COVID-19 patterns detection in x-ray images,” https://ui.adsabs.harvard.edu/abs/2020arXiv200405717L/abstract.

- 28. Shi F., et al., “Large-scale screening to distinguish between COVID-19 and community-acquired pneumonia using infection size-aware classification,” Phys. Med. Biol. 66(6), 065031 (2021). 10.1088/1361-6560/abe838

- 29. Gozes O., et al., “Rapid AI development cycle for the Coronavirus (COVID-19) pandemic: initial results for automated detection and patient monitoring using deep learning CT image analysis,” arXiv:2003.05037 (2020).

- 30. Shan F., et al., “Lung infection quantification of COVID-19 in CT images with deep learning,” arXiv:2003.04655 (2020).

- 31. Shi F., et al., “Review of artificial intelligence techniques in imaging data acquisition, segmentation, and diagnosis for COVID-19,” IEEE Rev. Biomed. Eng. 14, 4–15 (2021). 10.1109/RBME.2020.2987975

- 32. Kermany D. S., et al., “Identifying medical diagnoses and treatable diseases by image-based deep learning,” Cell 172(5), 1122–1131.e9 (2018). 10.1016/j.cell.2018.02.010

- 33. Ardakani A. A., et al., “COVIDiag: a clinical CAD system to diagnose COVID-19 pneumonia based on CT findings,” Eur. Radiol. 31(1), 121–130 (2021). 10.1007/s00330-020-07087-y

- 34. Elmokadem A. H., et al., “Diagnostic performance of chest CT in differentiating COVID-19 from other causes of ground-glass opacities,” Egypt. J. Radiol. Nucl. Med. 52(1), 12 (2021). 10.1186/s43055-020-00398-6

- 35. Sun Q., et al., “Deep learning vs. radiomics for predicting axillary lymph node metastasis of breast cancer using ultrasound images: don’t forget the peritumoral region,” Front. Oncol. 10, 53 (2020). 10.3389/fonc.2020.00053

- 36. Chaddad A., et al., “Deep radiomic analysis based on modeling information flow in convolutional neural networks,” IEEE Access 7, 97242–97252 (2019). 10.1109/ACCESS.2019.2930238

- 37. COVID-19 CT Segmentation Dataset, http://www.medicalsegmentation.com/covid19/ (accessed 7 April 2020).

- 38. Zhao J., et al., “COVID-CT-dataset: a CT scan dataset about COVID-19,” arXiv:2003.13865 (2020).

- 39. “LUNA16 - grand challenge,” grand-challenge.org, https://luna16.grand-challenge.org/ (accessed 19 May 2020).

- 40. “MedPix,” https://medpix.nlm.nih.gov/home (accessed 19 May 2020).

- 41. “Home - PMC - NCBI,” https://www.ncbi.nlm.nih.gov/pmc/ (accessed 19 May 2020).

- 42. Bell D. J., “COVID-19 | radiology reference article | Radiopaedia.org,” Radiopaedia, https://radiopaedia.org/articles/covid-19-3 (accessed 19 May 2020).

- 43. SIRM COVID-19 Database, https://www.sirm.org/category/senza-categoria/covid-19/ (accessed 2 April 2020).

- 44. MedSeg, https://www.medseg.ai/ (accessed 3 April 2020).

- 45. Cohen J. P., ieee8023/covid-chestxray-dataset, GitHub repository (Jupyter Notebook) (2020).

- 46. “Chest x-ray images (pneumonia),” https://kaggle.com/paultimothymooney/chest-xray-pneumonia (accessed 6 May 2020).

- 47. Alqudah A. M., Qazan S., “Augmented COVID-19 x-ray images dataset,” Mendeley (2020).

- 48. Liu L., et al., “Deep learning for generic object detection: a survey,” Int. J. Comput. Vision 128(2), 261–318 (2020). 10.1007/s11263-019-01247-4

- 49. Duncan J. S., Insana M. F., Ayache N., “Biomedical imaging and analysis in the age of big data and deep learning [scanning the issue],” Proc. IEEE 108(1), 3–10 (2020). 10.1109/JPROC.2019.2956422

- 50. Chollet F., Deep Learning mit Python und Keras: Das Praxis-Handbuch vom Entwickler der Keras-Bibliothek, MITP-Verlags GmbH & Co. KG, Wachtendonk, Germany (2018).

- 51. Russakovsky O., et al., “ImageNet large scale visual recognition challenge,” Int. J. Comput. Vision 115(3), 211–252 (2015). 10.1007/s11263-015-0816-y

- 52. Shorten C., Khoshgoftaar T. M., “A survey on image data augmentation for deep learning,” J. Big Data 6(1), 60 (2019). 10.1186/s40537-019-0197-0

- 53. Kruskal W. H., “Historical notes on the Wilcoxon unpaired two-sample test,” J. Am. Stat. Assoc. 52(279), 356–360 (1957). 10.1080/01621459.1957.10501395

- 54. Holm S., “A simple sequentially rejective multiple test procedure,” Scand. J. Stat. 6, 65–70 (1979).

- 55. Yang X., et al., “COVID-CT-dataset: a CT scan dataset about COVID-19,” arXiv:2003.13865 (2020).

- 56. Loey M., Smarandache F., Khalifa N. E. M., “A deep transfer learning model with classical data augmentation and CGAN to detect COVID-19 from chest CT radiography digital images,” Neural Comput. Appl., 1–13 (2020). 10.1007/s00521-020-05437-x

- 57. Maghdid H. S., et al., “Diagnosing COVID-19 pneumonia from x-ray and CT images using deep learning and transfer learning algorithms,” arXiv:2004.00038 (2020).

- 58. Ting D. S. W., et al., “Digital technology and COVID-19,” Nat. Med. 26(4), 459–461 (2020). 10.1038/s41591-020-0824-5

- 59. Shi W., et al., “Deep learning-based quantitative computed tomography model in predicting the severity of COVID-19: a retrospective study in 196 patients,” SSRN Scholarly Paper ID 3546089, Social Science Research Network, Rochester, NY (2020).

- 60. Qi X., et al., “Chest x-ray image phase features for improved diagnosis of COVID-19 using convolutional neural network,” Int. J. CARS 16, 197–206 (2021). 10.1007/s11548-020-02305-w

- 61. Dong D., et al., “The role of imaging in the detection and management of COVID-19: a review,” IEEE Rev. Biomed. Eng. 14, 16–29 (2021). 10.1109/RBME.2020.2990959

- 62. Fang M., et al., “CT radiomics can help screen the coronavirus disease 2019 (COVID-19): a preliminary study,” Sci. China Inf. Sci. 63(7), 172103 (2020). 10.1007/s11432-020-2849-3

- 63. Zhu Y., et al., “Clinical and CT imaging features of 2019 novel coronavirus disease (COVID-19),” J. Infection (2020). 10.1016/j.jinf.2020.02.022

- 64. Zhao W., et al., “Relation between chest CT findings and clinical conditions of coronavirus disease (COVID-19) pneumonia: a multicenter study,” Am. J. Roentgenol. 214(5), 1072–1077 (2020). 10.2214/AJR.20.22976

- 65. Guan C. S., et al., “Imaging features of coronavirus disease 2019 (COVID-19): evaluation on thin-section CT,” Acad. Radiol. 27(5), 609–613 (2020). 10.1016/j.acra.2020.03.002

- 66. Liu H., et al., “Clinical and CT imaging features of the COVID-19 pneumonia: focus on pregnant women and children,” J. Infection 80(5), e7–e13 (2020). 10.1016/j.jinf.2020.03.007

- 67. Brunese L., et al., “Explainable deep learning for pulmonary disease and coronavirus COVID-19 detection from x-rays,” Comput. Methods Programs Biomed. 196, 105608 (2020). 10.1016/j.cmpb.2020.105608

- 68. Fu L., et al., “A novel machine learning-derived radiomic signature of the whole lung differentiates stable from progressive COVID-19 infection: a retrospective Cohort study,” J. Thorac. Imaging 35(6), 361–368 (2020). 10.1097/RTI.0000000000000544

- 69. Ozturk T., et al., “Automated detection of COVID-19 cases using deep neural networks with x-ray images,” Comput. Biol. Med. 121, 103792 (2020). 10.1016/j.compbiomed.2020.103792

- 70. Wang L., Wong A., “COVID-Net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest x-ray images,” Sci. Rep. 10, 19549 (2020). 10.1038/s41598-020-76550-z

- 71. Barstugan M., Ozkaya U., Ozturk S., “Coronavirus (COVID-19) classification using CT images by machine learning methods,” arXiv:2003.09424 (2020).

- 72. Abelaira M. D. C., et al., “Use of conventional chest imaging and artificial intelligence in COVID-19 infection. A review of the literature,” Open Resp. Arch. 3(1), 100078 (2021). 10.1016/j.opresp.2020.100078

- 73. Cellina M., et al., “COVID-19 pneumonia—ultrasound, radiographic, and computed tomography findings: a comprehensive pictorial essay,” Emerg. Radiol., 1–8 (2021). 10.1007/s10140-021-01905-6

- 74. Caruso D., et al., “Chest CT features of COVID-19 in Rome, Italy,” Radiology 296(2), E79–E85 (2020). 10.1148/radiol.2020201237

- 75. Pan F., et al., “Time course of lung changes at chest CT during recovery from coronavirus disease 2019 (COVID-19),” Radiology 295(3), 715–721 (2020). 10.1148/radiol.2020200370

- 76. Lin C., et al., “Asymptomatic novel coronavirus pneumonia patient outside Wuhan: The value of CT images in the course of the disease,” Clin. Imaging 63, 7–9 (2020). 10.1016/j.clinimag.2020.02.008

- 77. Meng H., et al., “CT imaging and clinical course of asymptomatic cases with COVID-19 pneumonia at admission in Wuhan, China,” J. Infection 81(1), e33–e39 (2020). 10.1016/j.jinf.2020.04.004

- 78. Youssef I., et al., “Covert COVID-19: cone beam computed tomography lung changes in an asymptomatic patient receiving radiation therapy,” Adv. Radiat. Oncol. 5(4), 715–721 (2020). 10.1016/j.adro.2020.04.029

- 79. Kim H., Hong H., Yoon S. H., “Diagnostic performance of CT and reverse transcriptase polymerase chain reaction for coronavirus disease 2019: a meta-analysis,” Radiology 296, E145–E155 (2020). 10.1148/radiol.2020201343