Abstract

Model fit indices are increasingly recommended and used to select the number of factors in an exploratory factor analysis. Growing evidence suggests that the recommended cutoff values for common model fit indices are not appropriate for use in an exploratory factor analysis context. A particularly prominent problem in scale evaluation is the ubiquity of correlated residuals and imperfect model specification. Our research focuses on a scale evaluation context and the performance of four standard model fit indices: root mean square error of approximation (RMSEA), standardized root mean square residual (SRMR), comparative fit index (CFI), and Tucker–Lewis index (TLI), and two equivalence test-based model fit indices: RMSEAt and CFIt. We use Monte Carlo simulation to generate and analyze data based on a substantive example using the Positive and Negative Affect Schedule (N = 1,000). We systematically vary the number and magnitude of correlated residuals, as well as nonspecific misspecification, to evaluate the impact on model fit indices in fitting a two-factor exploratory factor analysis. Our results show that all fit indices, except SRMR, are overly sensitive to correlated residuals and nonspecific error, resulting in solutions that are overfactored. SRMR performed well, consistently selecting the correct number of factors; however, previous research suggests it does not perform well with categorical data. In general, we do not recommend using model fit indices to select the number of factors in a scale evaluation framework.

Keywords: factor analysis, model fit, latent variable modeling, fit indices, Monte Carlo simulation, exploratory factor analysis

This best answer to this perennial question is not an attempt to say how many factors there are in the universe of psychological content—for the answer to this theoretical question is obviously that the number of factors in any psychological domain is infinite. I am just presenting an answer which will provide one with a basis for finding that number of factors which may be yielded necessarily, reliably, and meaningfully—from the data at hand.

—Kaiser (1960, p. 146)

Factor analysis has long been an important statistical tool in the social sciences. Whether in the guise of exploratory (EFA) or confirmatory factor analysis (CFA), factor analysis has played a prominent role in many of the model-based statistical practices, which have been increasing in popularity (Rodgers, 2010). One area where factor analysis of both sorts is ubiquitous is scale evaluation, a process that spans from the development of new scales to the assessment of existing scales in new contexts or with changes. A critical aspect of scale evaluation, for both reliability and validity, is the empirical establishment of dimensionality. One must know how many things one is measuring to have any hope of knowing what one is measuring. Similarly, it is essential to know which items relate to which of the constructs one is measuring. Not only are these details incredibly important to any argument for the validity of any particular score use and interpretation, but understanding the dimensionality of what we are measuring is also important for developing clear theory regarding relationships among variables.

CFA, as a subset of the more general structural equation modeling (SEM) framework, has a long history of using various omnibus model fit indices to support choice among various models as well as the ultimate reasonableness of the chosen model (West et al., 2014). Current trends (and software implementation) seem to suggest that the trio of root mean square error of approximation (RMSEA; Browne & Cudeck, 1992; Steiger & Lind, 1980), comparative fit index (CFI; Bentler, 1990), and Tucker–Lewis index (TLI; Tucker & Lewis, 1973) are among the most popular of these indices. Standardized root mean squared residual (SRMR) is also frequently studied and reported. Until recently, it was unusual to find EFA and CFA in the same software package, which led to different surrounding ecosystems of standard practices for the two variants of the common factor model. While both rely on the same statistical model (i.e., the Thurstonian common factor model), in practice there is a tangible difference in how one goes about implementing them in software.

Common practice in SEM is to use model fit indices to select preferred models, based on certain recommended cutoffs. However, research has shown that using fixed cutoffs is problematic across the variety of circumstances in which structural equation modeling is applied (Heene et al., 2011; Marsh et al., 2004; McNeish et al., 2018; Sellbom & Tellegen, 2019; Sharma et al., 2004). Chen et al. (2008) showed that the traditional cutoff for RMSEA (.05) does not universally control Type I error or power at a specific level; rather, these rates depend on characteristics of the model (e.g., degrees of freedom). Model fit indices can be sensitive to the size of unique variances in confirmatory factor models, such that they underreport model misfit when unique variances are particularly high, making global cutoffs inappropriate (Heene et al., 2011). In the context of CFA, some researchers have shown that the typical cutoffs for a variety of fit measures do not perform well when using diagonally weighted least squares; however, they were able to provide recommended cutoffs based on knowable factors in a study (e.g., data skew, sample size; Nye & Drasgow, 2011). Despite this impressive roster of concerns regarding the use of fixed model fit cutoffs, some have made attempts to develop cutoffs specific to EFA to select the number of factors (Garrido et al., 2016).

More recently, an alternative method for calculating cutoff values for some fit indices (RMSEA and CFI) has been proposed (Yuan et al., 2016). These alternative fit indices address a very important limitation of the conventional fit index cutoffs, which is that even when an observed fit index is below a cutoff (e.g., for RMSEA), this does not necessarily provide strong evidence that the population value is below that cutoff. These alternatives take an equivalence testing approach, whereby the results of the test provide direct evidence that the population fit index is within a certain distance from a prespecified value corresponding to perfect fit (typically 0 or 1). These methods typically use the conventional cutoff values to determine the largest acceptable deviation away from a minimum tolerable size of misspecification. Thus, a significant result from this test would allow us to claim that population-level misfit is smaller than or equal to this minimum tolerable size of misspecification. The result from the proposed analysis given a specified level of confidence (e.g., 95%) is what is called a T-size statistic (e.g., RMSEAt), which provides the largest deviation from perfect fit for which we would be able to reject the null hypothesis that the population fit is greater than this value (i.e., we can be 95% confident that the population RMSEA is less than RMSEAt). Typically, an analyst would look for RMSEAt to be below a prespecified cutoff (e.g., .05).

Proponents of this method rightly point out that applying the previous cutoff criteria (e.g., .05 for RMSEA) under this new equivalence testing procedure may be overly conservative. Yuan et al. (2016) derived methods for adjusting these cutoff values based on sample size and model degrees of freedom, using the conventional cutoff values as a baseline. This newly proposed method has been shown to perform better than conventional cutoffs in the context of measurement invariance (Finch & French, 2018; Yuan & Chan, 2016) and CFA (Marcoulides & Yuan, 2017; Shi et al., 2019).

Popular SEM packages (e.g., Mplus, LISREL) have added functionality for conducting EFA. This provides an opportunity to examine if common practices used in CFA could be useful for EFA. One practice that researchers have begun to examine is the use of SEM-based omnibus model fit indices to help with the “number of factors problem” in EFA (Clark & Bowles, 2018; Garrido et al., 2016; Preacher et al., 2013). Compared with CFA, where one has nearly complete freedom to control individual parameters, in EFA the control of the user is somewhat limited. Essentially, there is just one question: How many factors should I estimate? This has been a challenging problem since the model was created and has generated an extensive literature focused on trying to solve it (for a review, see Preacher & MacCallum, 2003). Many of these approaches rely on eigenvalues of the observed (or reduced) correlation matrix, which provide approximate information about the dimensionality of the matrix, by suggesting the “amount” of information that can be explained by subsequent factors/components. Two very popular, though intensely problematic, approaches are the eigenvalue >1 rule and the scree plot. Under the eigenvalue >1 rule, the number of eigenvalues that are greater than 1 is the upper bound of the number of factors (Kaiser, 1960). Though this method as proposed defines an upper bound on the number of factors, in practice researchers use this upper bound as the selected number of factors. Previous simulation research has repeatedly shown that the eigenvalue >1 rule over- or underfactors depending on the circumstance (Cattell & Vogelmann, 1977; Hakstian et al., 1982; Tucker et al., 1969). These same studies also examined the scree plot approach, where the eigenvalues are plotted and the researcher chooses the number of factors that has the last “significant” drop off (Cattell, 1966).
This approach, though very subjective, has been shown to perform reasonably well (Cattell & Vogelmann, 1977; Hakstian et al., 1982; Tucker et al., 1969). Another method, also based on eigenvalues, which seems to have the most empirical support currently is parallel analysis (Dinno, 2009; Glorfield, 1995; Horn, 1965; but see Yang & Xia, 2015). Parallel analysis generates random data sets which are the same size (variables and sample size) as that being analyzed and then subjects these data to an EFA. The eigenvalues of the real data are compared with the average eigenvalues of the simulated random data and (similar to the eigenvalue >1 rule) the upper bound for the number of factors is the number of real-data eigenvalues greater than the corresponding simulated-data eigenvalues. Just as with the eigenvalue >1 rule, in practice most researchers select the upper bound as the number of selected factors.

Researchers have begun comparing eigenvalue-based approaches (e.g., parallel analysis, eigenvalue >1 rule) to model fit measures in terms of their ability to select an appropriate number of factors (Garrido et al., 2016). These studies have found that the fit measures performed poorly when compared with parallel analysis; however, CFI and TLI performed the best among the fit indices, followed by RMSEA and SRMR. In addition, many factors can affect the ability of fit indices to select the correct number of factors. For example, Clark and Bowles (2018) found that higher factor loadings tend to improve the ability of fit indices to select the correct number of factors, and higher factor intercorrelations and cross-loadings tend to reduce the ability of fit indices to select the correct number of factors (typically underfactoring). Sample sizes that are too small, especially in combination with many indicators per factor, can result in overfactoring (Garrido et al., 2016).

This leads us to wonder: How useful are model fit measures for selecting the number of factors in a scale evaluation related EFA under more realistic conditions? One major issue in simulation research is that often the data generating model is identical to the model being tested, which is unrealistic in scale evaluation contexts. In the remainder of this article, we describe a simulation conducted to assess this question in depth.

Before delving into the specific methods of this article, we take a step back to acknowledge the difficulty of the number of factors problem. We by no means believe that the world of psychological phenomena is in fact organized with a simple factor structure like that proposed in the Thurstonian model. However, we do believe these models can be useful for describing and developing an understanding of psychological phenomena. We believe that there is no true answer to the number of factors question, or if there is, that answer is unknowable. However, in our simulations we aim to capture useful pockets of variation we then describe as factors, which can be used for modeling purposes and reliably recovered across different samples. Throughout this article, we examine methods for recapturing meaningful variance through factor models, adopting the mentality of Henry Kaiser as quoted at the beginning of this article on factors that are necessary, reliable, and meaningful (Kaiser, 1960).

Motivation for Study Characteristics

Although factor analysis is used in many applications other than scale evaluation, we focus here on its use in assessing and developing scales for several reasons. First, in our experience scale-related use of factor analysis is one of, if not the, leading uses of factor analytic techniques. Second, there are certain characteristics that go along with scale-related uses of factor analysis which warrant consideration. For example, item-level data are often categorical, which requires some adjustment from the usual process. Previous research has explored the performance of fit indices in selecting number of factors for items that are categorical (Clark & Bowles, 2018; Garrido et al., 2016). Clark and Bowles (2018) found that fit indices tend to avoid underfactored solutions (solutions with too few factors) but will often select solutions with too many factors. Garrido et al. (2016) similarly found that the fit indices did not perform well in selecting number of factors with categorical indicators. Given these results, one may wonder if using fit indices is ever appropriate, or perhaps the issues are primarily with the categorical nature of the data. Clark and Bowles (2018) generated continuous data as a conceptual “control” condition, and using an appropriate cutoff for the fit indices, the correct model was selected most of the time. We chose to begin our examination with continuous data for two reasons. First, in an ideal case the “corrections” that are applied to categorical data are meant to mimic what we would have seen had the data been continuous. If these corrections function well—which is debatable—then the result we expect to see should sync up, regardless of the data generating mechanism. Second, and perhaps more critical, if the use of fit measures does not perform well in the “idealized” situation where our data are continuous, is there any rational expectation they would function better once categorical data are added to the mix?

Another common occurrence in scale evaluation is item redundancy (or near redundancy). In item response theory (IRT) this would be viewed as local dependence. In the factor analysis context, this can be modeled explicitly using correlated residuals (though other options exist as well). We do not mean to suggest that residual covariance is solely a problem in scale-related applications of factor analysis, merely that it is a very prominent issue in practice when using factor analysis in a scale-related application. Important for our investigation, these residual covariances are not accounted for when conducting EFA and thus also not taken into account when selecting the number of factors in the model. Item covariances (when unmodeled) may influence the process of selecting number of factors, and we explore this possibility in the simulation study below.

This addition of correlated residuals, which we believe increases the relevance of the simulation to the enterprise of scale evaluation, does add one very notable complication. Technically, one could model a correlated residual as a common factor shared by the two items in question (with the loadings constrained to be equal to identify the model). This complicates the question about “the right number of factors.” We feel that there are two reasonable variants of the question and suspect one is far more common. One variant of the question is “What is the technically correct number of common factors?” and the other is “What is the number of substantively relevant common factors?” These will, in many cases, potentially have different answers. Although many approaches are “built” to assess the former question, in our experience the latter question is far more common in the minds of applied researchers. It is this question that we focus on for the remainder of this study, with an acknowledgment that it is not the only possible question of interest.

Another important aspect of our simulation is a manipulation of the degree to which the data we generated fit the model. While many simulation studies ignore misspecification entirely, it is to our minds a critically important aspect of any simulation. The data we have in the real world will not correspond to any of our models exactly. To have as much fidelity to real life as possible in our simulation, the study design was driven by a real application and includes nonspecific misspecification (more on this below). We next describe the design of the simulation before describing the results and discussing their implications for practitioners and methodologists.

Method

The aim of this simulation was to study how certain factors affect model fit indices when fitting a two-factor EFA. We generated data under a two-factor structure with varying degrees of nonspecific misspecification and correlated residuals, examining the performance of several model fit indices to select the “correct” number of factors under these varying conditions. By identifying if there are conditions in which the model fit indices do not indicate good fit for the two-factor model, which we know to be the generating model, we can identify conditions where using these indices would lead to incorrect conclusions about number of factors in an EFA.

Generating Model

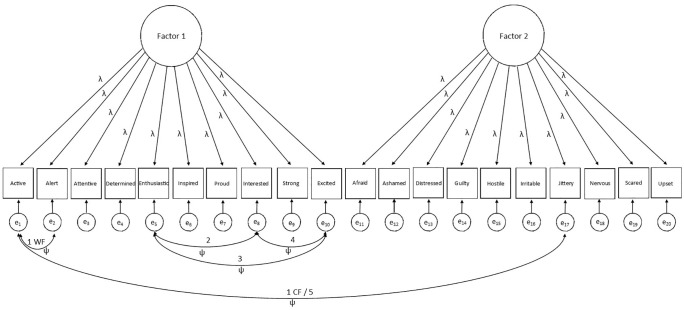

The data were generated based on the two-factor model with correlated residuals presented in Ortuño-Sierra et al. (2015). This study examined the factor structure of the 20-item Spanish translation of the Positive and Negative Affect Schedule measured on 1,000 adolescents and young adults. We focused specifically on the two-factor structure that is typical for the Positive and Negative Affect Schedule, with positive affect items loading onto one factor and negative affect items loading onto the second factor. We kept the factor structure very simple, with the 10 positive items loading 0.6 on the first factor and 0 on the second factor, and the 10 negative items loading 0 on the first factor and 0.6 on the second (see Figure 1).
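The simple structure described above can be turned into a model-implied correlation matrix. The following is a minimal sketch, not the script used in the study. It assumes uncorrelated factors (the factor correlation is not stated in this section), unit item variances, and that a residual correlation of magnitude r corresponds to a residual covariance of r times the residual variance. The function name and arguments are hypothetical.

```python
def implied_correlation(n_items=20, loading=0.6, factor_corr=0.0, resid_corrs=None):
    """Model-implied correlation matrix (list of lists) for a two-factor
    simple structure. factor_corr=0.0 is an assumption, not a value from the text."""
    half = n_items // 2
    # First half of the items load on factor 1, second half on factor 2.
    lam = [[loading, 0.0] if i < half else [0.0, loading] for i in range(n_items)]
    phi = [[1.0, factor_corr], [factor_corr, 1.0]]
    # Residual variance that gives every item unit variance.
    theta_var = 1.0 - loading ** 2
    sigma = [[0.0] * n_items for _ in range(n_items)]
    for i in range(n_items):
        for j in range(n_items):
            common = sum(lam[i][a] * phi[a][b] * lam[j][b]
                         for a in range(2) for b in range(2))
            sigma[i][j] = common + (theta_var if i == j else 0.0)
    # Add correlated residuals, given as {(i, j): residual correlation}.
    for (i, j), r in (resid_corrs or {}).items():
        cov = r * theta_var  # assumed conversion from correlation to covariance
        sigma[i][j] += cov
        sigma[j][i] += cov
    return sigma


# One within-factor correlated residual (e1 with e2; 0-based items 0 and 1), magnitude .3.
sigma = implied_correlation(resid_corrs={(0, 1): 0.3})
```

Under these assumptions, two items on the same factor correlate 0.6 × 0.6 = 0.36, items on different factors correlate 0, and the residual correlation raises the correlation of the affected pair above 0.36.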

Figure 1.

Generating model.

Note. Numbers 1 - 5 indicate order in which correlated residuals were added to the population model. A model with 1 within-factor correlation included a correlation between e1 and e2. A model with 1 cross-factor correlation included a correlation between e1 and e17. A model with 4 correlated residuals included correlations between e1 and e2, e5 and e8, e5 and e10, and e8 and e10.

Ortuño-Sierra et al. (2015) noted a number of correlated residuals in their investigation, which we used as the added correlated residuals in our population model. Previous research found there tends to be additional correlation among the variables interested, enthusiastic, and excited; additionally, the two variables active and alert tend to have residual correlation (Zevon & Tellegen, 1982). Ortuño-Sierra et al. (2015) noted an additional residual correlation between the items jittery and active, believing this correlation occurred because of the similarity in activity level, particularly in the Spanish translations. We systematically included each of these correlated residuals in the creation of the population correlation matrix, varying the magnitude of the correlations in order to examine their effect on model fit when choosing number of factors in an EFA. See Figure 1 for a visualization of the generating model used in the simulations.

Fit Measures

RMSEA

RMSEA measures the discrepancy between the observed and estimated covariance matrix scaled by the degrees of freedom (Browne & Cudeck, 1992; Steiger & Lind, 1980). RMSEA is considered an absolute fit index, as the fitted model is not directly compared with a baseline model. A number of recommendations exist for interpreting RMSEA, with the two most common from Browne and Cudeck (1992; less than 0.05 for close fit and less than 0.08 for reasonable fit) and Hu and Bentler (1998).
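As an illustration of how the index is scaled by the degrees of freedom, here is a minimal sketch of one common sample formula for RMSEA, together with the standard degrees-of-freedom count for an unrestricted factor model. Neither formula is quoted from the article. Software differs on whether N or N − 1 appears in the denominator, and the chi-square value in the example is hypothetical.

```python
import math


def efa_df(n_items, n_factors):
    """Degrees of freedom of an exploratory factor model with
    n_items indicators and n_factors factors."""
    return ((n_items - n_factors) ** 2 - (n_items + n_factors)) // 2


def rmsea(chi_square, df, n_obs):
    """One common sample RMSEA: excess chi-square over df, scaled by df
    and sample size (N - 1 is used here; some programs use N)."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n_obs - 1)))


df_two_factor = efa_df(20, 2)  # 151 for 20 items and 2 factors
example = rmsea(chi_square=300.0, df=df_two_factor, n_obs=1000)  # hypothetical chi-square
```

Because the numerator is truncated at zero, any model whose chi-square does not exceed its degrees of freedom has an RMSEA of exactly 0.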

RMSEAt

RMSEAt is an equivalence testing statistic calculated from the observed RMSEA, sample size, model degrees of freedom, and α-level (Yuan et al., 2016). We evaluate if RMSEAt is less than the derived cutoff values using scripts from Marcoulides and Yuan (2017). We calculated cutoffs based on the original cutoffs used for RMSEA: .05 (close fit) and .08 (reasonable fit) and used an α-level of .05.
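One way to write the T-size RMSEA under the usual noncentral chi-square approximation is sketched below. The notation (T, n, G, δ_t) is introduced here for illustration and is not taken from the article, and whether n equals N or N − 1 depends on the software convention.

```latex
% T      : observed likelihood-ratio test statistic of the fitted model
% df     : model degrees of freedom;  n : sample size (N or N - 1, by convention)
% G(. | df, delta) : CDF of the noncentral chi-square distribution
% delta_t is the noncentrality for which T sits at the lower alpha quantile
\mathrm{RMSEA}_t = \sqrt{\frac{\delta_t}{n \cdot df}},
\qquad \text{where } \delta_t \text{ solves } G\!\left(T \mid df, \delta_t\right) = \alpha .
```

Under this reading, with α = .05 the T-size RMSEA coincides with the upper limit of the familiar 90% confidence interval for RMSEA.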

SRMR

The SRMR is also an absolute fit index. SRMR is a function of the differences between the observed and predicted correlation matrix, where lower scores indicate a closer fit. Hu and Bentler (1998) recommend that good fit for SRMR is less than .08.
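To make the definition concrete, the sketch below computes one common form of SRMR for correlation matrices: the square root of the mean squared residual over the unique elements. This is an illustrative version only. Programs differ in the details (for example, whether diagonal elements are counted), and the function name and toy matrices are hypothetical.

```python
import math


def srmr(observed, implied):
    """Root mean squared difference between the observed and model-implied
    correlation matrices, over the lower triangle including the diagonal."""
    p = len(observed)
    total = 0.0
    for i in range(p):
        for j in range(i + 1):
            total += (observed[i][j] - implied[i][j]) ** 2
    return math.sqrt(total / (p * (p + 1) / 2))


# Toy two-variable example with a single residual correlation of .10.
observed = [[1.0, 0.5], [0.5, 1.0]]
implied = [[1.0, 0.4], [0.4, 1.0]]
value = srmr(observed, implied)
```

Unlike RMSEA, this quantity involves neither the chi-square statistic nor the degrees of freedom, only the residual correlations themselves.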

TLI/CFI

The TLI (Tucker & Lewis, 1973) and CFI (Bentler, 1990) are both incremental fit indices that use different but similar approaches to measuring the discrepancy between a baseline model and the fitted model. In the case of the number of factors problem, the baseline model is a model with no factors. TLI and CFI differ in the way they compare the observed statistic to the degrees of freedom, where the TLI uses a ratio and the CFI uses a difference. CFI and TLI have similar recommended cutoffs, where values greater than 0.90 are considered indicative of “good fit” and values greater than 0.95 are considered “excellent” (Hu & Bentler, 1998).
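The ratio-versus-difference distinction can be seen in the standard textbook formulas, sketched below. The formulas are not quoted from the article, and the chi-square values in the example are hypothetical. The baseline degrees of freedom for 20 items with no factors is 20 × 19 / 2 = 190.

```python
def cfi(chi_m, df_m, chi_b, df_b):
    """Comparative fit index: compares the differences (chi-square minus df)
    of the fitted model (m) and the baseline model (b)."""
    d_m = max(chi_m - df_m, 0.0)
    d_b = max(chi_b - df_b, 0.0)
    denom = max(d_m, d_b)
    return 1.0 if denom == 0 else 1.0 - d_m / denom


def tli(chi_m, df_m, chi_b, df_b):
    """Tucker-Lewis index: compares the ratios (chi-square over df)
    of the baseline model (b) and the fitted model (m)."""
    ratio_b = chi_b / df_b
    ratio_m = chi_m / df_m
    return (ratio_b - ratio_m) / (ratio_b - 1.0)


# Hypothetical chi-square values for a two-factor EFA of 20 items.
example_cfi = cfi(chi_m=300.0, df_m=151, chi_b=5000.0, df_b=190)
example_tli = tli(chi_m=300.0, df_m=151, chi_b=5000.0, df_b=190)
```

In this sketch CFI is bounded between 0 and 1 by construction, whereas TLI is not truncated and can fall outside that range.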

CFIt

CFIt is an equivalence testing statistic calculated from the observed CFI, sample size, model degrees of freedom, and α-level (Yuan et al., 2016). We evaluate if CFIt is greater than the derived cutoff values using scripts from Marcoulides and Yuan (2017). We calculated cutoffs based on the original cutoffs used for CFI: 0.90 (good fit) and 0.95 (excellent fit) and used an α-level of .05.

Adding Nonspecific Misspecification

In real data analysis, the idea that a two-factor structure would hold exactly in the population is unrealistic. Therefore, we simulated matrices for which the population-level factor structure is close to, but slightly perturbed from, the two-factor model. This seemed the most realistic for understanding how using model fit indices to select number of factors would work in scale evaluation contexts. To do this, we used the method described by Cudeck and Browne (1992), which generates covariance matrices for Monte Carlo simulations when researchers are interested in the performance of a method and the model (e.g., the two-factor model) does not hold exactly in the population. The method combines a perturbation matrix and the implied population covariance matrix from the two-factor model to create a new covariance matrix with desirable properties. In particular, this new matrix has the same maximum likelihood parameter estimates as the original population covariance matrix. This means that by adding the perturbation matrix, we in no way change or bias the maximum likelihood solutions. The perturbation matrix is generated by specifying a degree of discrepancy desired between the original population covariance matrix and the new covariance matrix. We manipulated discrepancy with respect to population RMSEA. For example, by selecting a perturbation matrix such that population RMSEA = .02, the new calculated matrix would be one such that the maximum likelihood estimates are exactly the same as the original population matrix, but the RMSEA is .02. This means that even with the population data, the fit would not be perfect. See Cudeck and Browne (1992) for a complete description of this method. All calculations were completed in R using a script adapted from Sun (2015).

Simulation Conditions

We used population correlation matrices based on the original factor structure from Ortuño-Sierra et al. (2015) with the added nonspecific misspecification and correlated residuals. We generated matrices with no correlated residuals, one cross-factor correlated residual, one, two, three, and four within-factor correlated residuals, and five correlated residuals (four within-factor and one cross-factor; see Figure 1). The magnitudes of the correlated residuals were either 0, .1, .2, .3, .4, or .5 with all correlated residuals being the same magnitude within each condition. The degree of nonspecific misspecification (population level RMSEA, ϵ) was set to 0, .02, .04, .06, .08, or .10. All factors were completely crossed (number of correlated residuals × magnitude of correlated residuals × degree of nonspecific misspecification), with 100 samples in each of the 252 conditions. Note, when the number of correlated residuals is zero, we cannot systematically alter the magnitude of these correlated residuals. As such, these conditions were all generated, though they resulted in the same population correlation matrix and should not systematically vary from each other.
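The fully crossed design can be enumerated to confirm the condition count. This is a minimal sketch. The labels for the correlated-residual patterns are hypothetical shorthand for the seven patterns listed above.

```python
from itertools import product

# Seven correlated-residual patterns (labels are illustrative shorthand).
residual_patterns = [
    "none", "1 cross", "1 within", "2 within",
    "3 within", "4 within", "4 within + 1 cross",
]
magnitudes = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]        # size of each correlated residual
epsilons = [0.0, 0.02, 0.04, 0.06, 0.08, 0.10]     # population RMSEA of the perturbation

conditions = list(product(residual_patterns, magnitudes, epsilons))
n_conditions = len(conditions)      # 7 * 6 * 6 = 252
n_datasets = n_conditions * 100     # 100 replications per condition = 25,200
```

The total of 25,200 data sets is consistent with the 25,188 degrees of freedom reported for the linear models in the Results, assuming that figure is the residual degrees of freedom after estimating an intercept and the 11 terms listed in Table 1 (25,200 − 12 = 25,188).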

The sample data sets were generated by taking the product of a data set with 1,000 observations on 20 standard normal variables and the Cholesky decomposition of the perturbed correlation matrix. We generated 100 replications from each of 252 population correlation matrices.
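The Cholesky-based generation step can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' R script. The small 3 × 3 matrix stands in for the 20 × 20 perturbed correlation matrix, and the seed and function names are hypothetical.

```python
import math
import random


def cholesky(matrix):
    """Lower-triangular factor L with L L' = matrix (positive definite input)."""
    n = len(matrix)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(matrix[i][i] - s)
            else:
                low[i][j] = (matrix[i][j] - s) / low[j][j]
    return low


def simulate(corr, n_obs, rng):
    """Draw n_obs rows whose population correlation matrix is corr."""
    low = cholesky(corr)
    p = len(corr)
    data = []
    for _ in range(n_obs):
        z = [rng.gauss(0.0, 1.0) for _ in range(p)]  # independent standard normals
        # x = L z has covariance L L' = corr
        data.append([sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(p)])
    return data


corr = [[1.0, 0.36, 0.0], [0.36, 1.0, 0.0], [0.0, 0.0, 1.0]]  # toy stand-in matrix
sample = simulate(corr, 1000, random.Random(2024))
```

Multiplying each row of standard normal draws by the Cholesky factor is equivalent to the matrix product described above. The sample correlations will differ from the population values by sampling error, which is the variability the 100 replications per condition are meant to capture.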

Analysis

Each data set was analyzed in Mplus using one- and two-factor EFA models. The EFA models did not include any estimated correlated residuals. This process was automated using R and MplusAutomation, a package that creates Mplus input files, runs them, and aggregates results (RMSEA, TLI, CFI, SRMR) from the output files (Hallquist & Wiley, 2018).

Results

Data Visualization

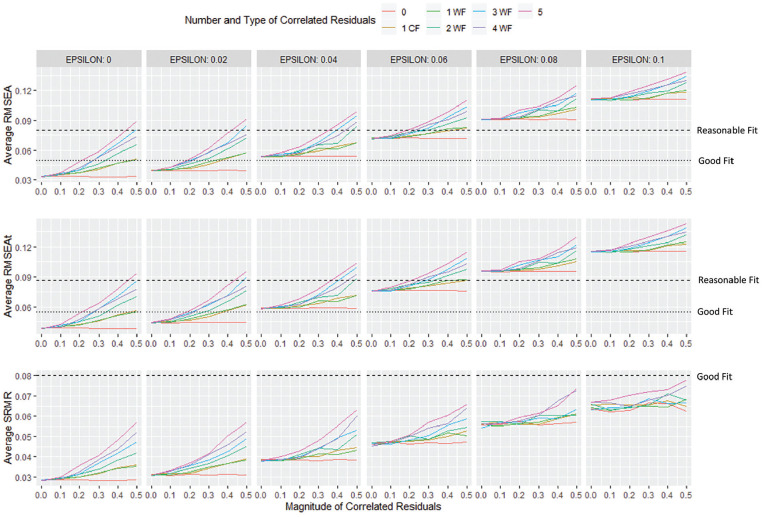

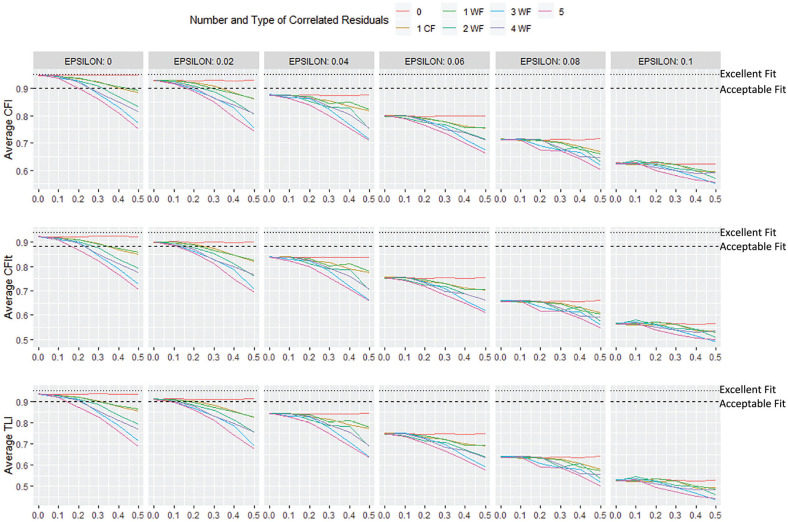

Figures 2 and 3 present graphs of the average RMSEA, RMSEAt, SRMR, CFI, CFIt, and TLI for the two-factor solution as a function of the number, type, and magnitude of the correlated residuals, and the degree of nonspecific misspecification. Based on these graphs, it is clear that model fit gets worse (increase in RMSEA and SRMR and decrease in CFI and TLI) when there are more correlated residuals and the magnitudes of these correlations are higher. As expected, increasing nonspecific misspecification decreases model fit.

Figure 2.

Simulation results for average model fit for RMSEA, RMSEAt, and SRMR.

Note. RMSEA = root mean square error of approximation; RMSEAt = equivalence test-based RMSEA; SRMR = standardized root mean square residual.

Figure 3.

Simulation results for average model fit for CFI, CFIt, and TLI.

Note. CFI = comparative fit index; CFIt = equivalence test-based CFI; TLI = Tucker–Lewis index.

Based on these plots, even when the model fits perfectly at the population level (ϵ = 0), a few correlated residuals of a reasonable magnitude will cause the fit of the two-factor EFA to be poor by the recommended cutoffs. One exception to this is SRMR, discussed more below. This means that even when there is no nonspecific misspecification, many researchers who expect correlated errors will choose too many factors since model fit for the correct model (in this case two factors) is not good enough. The conditions with one within-factor correlated residual and those with one cross-factor correlated residual seem to hang together, suggesting that whether the correlated residual is within or across factors has little impact on model fit for this specific model.

As the degree of nonspecific misspecification increases, model fit across indices gets worse. Correctly specifying the number of factors in a model is important for achieving good fit in a model; however, other factors also influence model fit, which may preclude fit from being a good criterion for selecting number of factors. In a case when the population model differs from the two-factor model even slightly (e.g., ϵ = .04), there is little hope for researchers to select the correct number of factors, because the model fit indices are either too high (RMSEA) or too low (TLI and CFI), even in the case with no correlated residuals. This issue gets particularly bad as the number and magnitude of the correlated residuals get larger. Even though all these data sets are generated from the two-factor model, the fit of the model depends on other aspects such as the nonspecific misspecification and number and size of correlated residuals.

Linear Model Analyses

Although the data visualization provides clear results, these are plots of the averages across the 100 replications in each condition, and so it is important to take into account sampling variability that could occur during the simulation. We fit linear models to the raw simulation results, to examine trends in the model fit by the manipulated factors in the simulation.

We ran a regression model predicting each fit index from number of correlated residuals, magnitude of correlated residuals, degree of nonspecific misspecification (ϵ), whether the correlated residuals were cross- or within-factor, and all possible interactions. As cross-factor correlated residuals were only tested with one correlated residual, all interactions involving number of correlated residuals and type of correlated residuals (within- or cross-factor) were not estimable. The variable for magnitude of correlated residuals was centered around .10 and the variable for number of correlated residuals was centered around 1, to increase interpretability of conditional effects. Below are the results of these analyses for each of the model fit indices. An α-level of .05 was used to determine statistical significance.

RMSEA

The overall model had an adjusted R² of .95 (unadjusted R² also .95). With 25,188 degrees of freedom, the overall model fit was significant, as were all main effects and interactions, with specific exceptions. Due to the very high power, it is not worthwhile to report and discuss all significant effects but rather to focus on the nonsignificant effects, as we can be confident these are very small or nearly zero, given the power of this simulation study. Most notably, when there is one correlated residual, the magnitude of the correlated residual is .1, and ϵ = 0, the effect of cross- versus within-factor variables is not significant (b = −7.64 × 10⁻⁵, p = .77). This means that for the case of a single correlated residual with a small correlation (.1), whether the correlated residual was within the same factor or different factors did not affect RMSEA. Additionally, the degree of nonspecific misspecification did not interact with the type of correlated residual (within- or cross-factor; b = 2.36 × 10⁻³, p = .59), conditional on the magnitude of the correlated residuals being .10. This means that the impact of the degree of nonspecific misspecification on RMSEA did not differ whether the correlated residual was across or within factors. All additional factors were significant, p < .003.

Table 1.

RMSEA Linear Model Results.

| Factor | Coefficient estimate | SE | MSR | p |
|---|---|---|---|---|
| NCR | 9.90E-04 | 5.59E-05 | 0.70 | <.0001 |
| M | 3.50E-02 | 5.59E-04 | 1.59 | <.0001 |
| EPSILON | 7.83E-01 | 2.10E-03 | 15.59 | <.0001 |
| CF | −7.64E-05 | 2.66E-04 | 1.21E-05 | .7741 |
| NCR × M | 2.17E-02 | 2.46E-04 | 0.50 | <.0001 |
| NCR × EPSILON | −3.67E-03 | 9.23E-04 | 0.04 | <.0001 |
| M × EPSILON | −1.76E-01 | 9.23E-03 | 0.10 | <.0001 |
| M × CF | 3.51E-03 | 1.17E-03 | 2.16E-06 | .0027 |
| EPSILON × CF | 2.36E-03 | 4.40E-03 | 1.91E-04 | .5920 |
| NCR × M × EPSILON | −1.18E-01 | 4.06E-03 | 0.03 | <.0001 |
| M × EPSILON × CF | −6.69E-02 | 1.93E-02 | 4.23E-04 | .0005 |

Note. All effects have one degree of freedom. NCR = number of correlated residuals; M = magnitude of correlated residuals; EPSILON = degree of nonspecific misspecification; CF = indicator variable for cross factor correlated residuals; RMSEA = root mean square error of approximation; MSR = mean squares of regression.

RMSEAt

Results for RMSEAt were largely identical to RMSEA (see Table 2). The overall model had an unadjusted and adjusted R² of .95.

Table 2.

RMSEAt Linear Model Results.

| Factor | Coefficient estimate | SE | MSR | p |
|---|---|---|---|---|
| NCR | 9.65E-04 | 5.58E-05 | 0.69 | <.0001 |
| M | 3.44E-02 | 5.58E-04 | 1.57 | <.0001 |
| EPSILON | 7.76E-01 | 2.10E-03 | 15.38 | <.0001 |
| CF | −9.52E-05 | 2.66E-04 | 6.60E-06 | .6653 |
| NCR × M | 2.17E-02 | 2.46E-04 | 0.50 | <.0001 |
| NCR × EPSILON | −3.38E-03 | 9.22E-04 | 0.03 | <.0001 |
| M × EPSILON | 3.36E-03 | 1.17E-03 | 0.09 | <.0001 |
| M × CF | 3.36E-03 | 1.17E-03 | 5.39E-07 | .0041 |
| EPSILON × CF | 2.64E-03 | 4.39E-03 | 1.68E-04 | .5484 |
| NCR × M × EPSILON | −1.15E-01 | 4.06E-03 | 0.03 | <.0001 |
| M × EPSILON × CF | −6.56E-02 | 1.93E-02 | 4.07E-04 | .0001 |

Note. All effects have one degree of freedom. NCR = number of correlated residuals; M = magnitude of correlated residuals; EPSILON = degree of nonspecific misspecification; CF = indicator variable for cross factor correlated residuals; MSR = mean squares of regression; RMSEAt = equivalence test-based RMSEA.

SRMR

Results for SRMR were similar to RMSEA (see Table 3). The overall model had an unadjusted and adjusted R² of .95. Unlike RMSEA, all factors in the model were significant (p < .025).

Table 3.

SRMR Linear Model Results.

| Factor | Coefficient estimate | SE | MSR | p |
|---|---|---|---|---|
| NCR | 3.41E-04 | 2.76E-05 | 0.19 | <.0001 |
| M | 1.46E-02 | 2.76E-04 | 0.33 | <.0001 |
| EPSILON | 3.65E-01 | 1.04E-03 | 3.39 | <.0001 |
| CF | −3.03E-04 | 1.31E-04 | 6.52E-04 | .0211 |
| NCR × M | 1.22E-02 | 1.22E-04 | 0.14 | <.0001 |
| NCR × EPSILON | 1.62E-03 | 4.56E-04 | 0.01 | .0003 |
| M × EPSILON | −8.64E-02 | 4.56E-03 | 0.03 | <.0001 |
| M × CF | 3.64E-03 | 5.78E-04 | 2.39E-05 | <.0001 |
| EPSILON × CF | 1.41E-02 | 2.17E-03 | 7.54E-05 | <.0001 |
| NCR × M × EPSILON | −7.78E-02 | 2.01E-03 | 0.01 | <.0001 |
| M × EPSILON × CF | −6.20E-02 | 9.55E-03 | 3.63E-04 | <.0001 |

Note. All effects have one degree of freedom. SRMR = standardized root mean squared residual; NCR = number of correlated residuals; M = magnitude of correlated residuals; EPSILON = degree of nonspecific misspecification; CF = indicator variable for cross factor correlated residuals; MSR = mean squares of regression.

CFI, CFIt, and TLI

Results for CFI and CFIt were very similar to those of RMSEA and SRMR (see Tables 4 and 5), so we discuss only the differences. The models for CFI and CFIt had an adjusted and unadjusted R² of .94. Unlike RMSEA, the three-way interaction between magnitude of correlated residuals, type of correlated residuals, and degree of nonspecific misspecification was not significant (CFI: b = 0.149, p = .108; CFIt: b = 0.187, p = .062). Results for TLI were practically identical to those of CFI. The overall model for TLI had an adjusted R² of .94.

Table 4.

CFI Linear Model Results.

| Factor | Coefficient estimate | SE | MSR | p |
|---|---|---|---|---|
| NCR | −3.23E-03 | 2.68E-04 | 7.65 | <.0001 |
| M | −0.13 | 2.68E-03 | 20.49 | <.0001 |
| EPSILON | −3.29 | 0.01 | 278.60 | <.0001 |
| CF | 3.57E-04 | 1.28E-03 | 0.01 | .7796 |
| NCR × M | −0.08 | 1.18E-03 | 5.60 | <.0001 |
| NCR × EPSILON | 0.01 | 4.42E-03 | 0.69 | .0104 |
| M × EPSILON | 0.61 | 0.04 | 1.48 | <.0001 |
| M × CF | −0.01 | 0.01 | 3.88E-03 | .0104 |
| EPSILON × CF | −0.03 | 0.02 | 1.05E-04 | .1832 |
| NCR × M × EPSILON | 0.53 | 0.02 | 0.65 | <.0001 |
| M × EPSILON × CF | 0.15 | 0.09 | 2.10E-03 | .1080 |

Note. All effects have one degree of freedom. NCR = number of correlated residuals; M = magnitude of correlated residuals; EPSILON = degree of nonspecific misspecification; CF = indicator variable for cross factor correlated residuals; MSR = mean squares of regression; CFI = comparative fit index.

Table 5.

CFIt Linear Model Results.

| Factor | Coefficient estimate | SE | MSR | p |
|---|---|---|---|---|
| NCR | −3.74E-03 | 2.90E-04 | 8.71 | <.0001 |
| M | −0.15 | 2.90E-03 | 24.12 | <.0001 |
| EPSILON | −3.64 | 0.01 | 336.14 | <.0001 |
| CF | 2.14E-04 | 1.38E-03 | 0.02 | .8768 |
| NCR × M | −0.09 | 1.28E-03 | 6.32 | <.0001 |
| NCR × EPSILON | 0.02 | 4.79E-03 | 1.01 | .0004 |
| M × EPSILON | 0.77 | 0.05 | 2.21 | <.0001 |
| M × CF | −0.02 | 0.01 | 0.01 | .0014 |
| EPSILON × CF | −0.03 | 0.02 | 1.15E-05 | .1899 |
| NCR × M × EPSILON | 0.63 | 0.02 | 0.90 | <.0001 |
| M × EPSILON × CF | 0.19 | 0.10 | 3.30E-03 | .0627 |

Note. All effects have one degree of freedom. NCR = number of correlated residuals; M = magnitude of correlated residuals; EPSILON = degree of nonspecific misspecification; CF = indicator variable for cross factor correlated residuals; MSR = mean squares of regression; CFIt = equivalence test-based CFI.

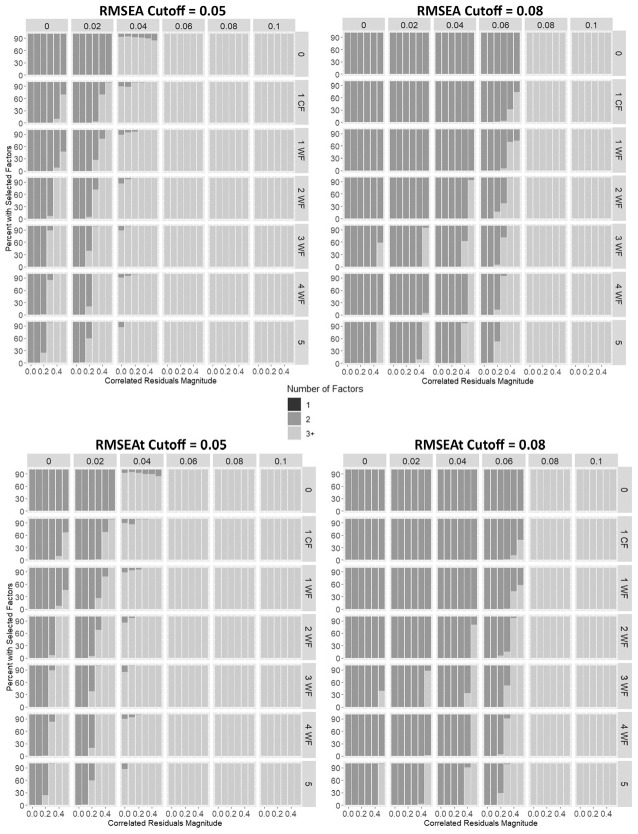

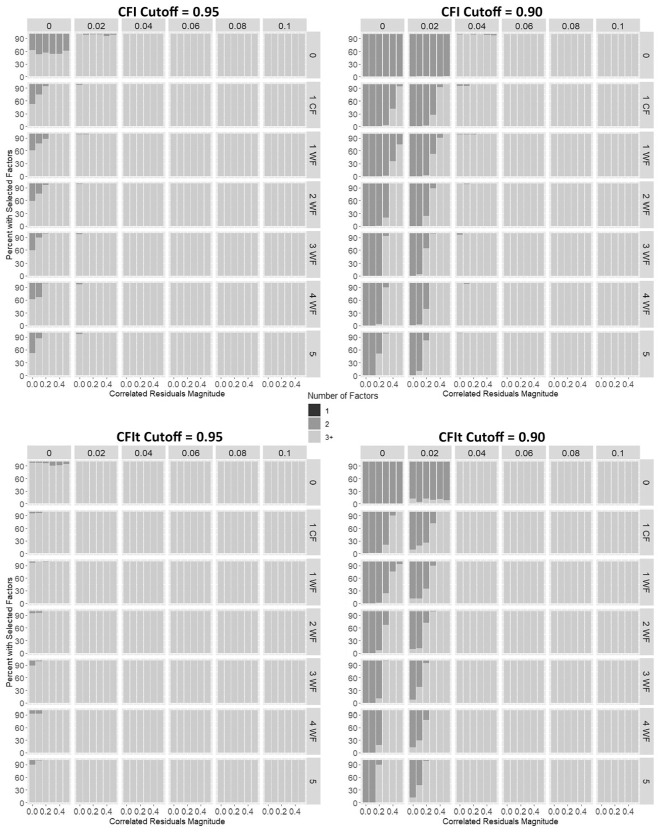

Number of Factors Selected

Based on the results of the simulations, the number of factors that would be selected from each data set was calculated for each of the six fit criteria (RMSEA, RMSEAt, SRMR, CFI, CFIt, TLI) using the recommended cutoffs for those criteria. The results of these analyses are presented in Figures 4 to 6. The number of factors selected is determined by fitting a one-factor model and a two-factor model only. If the one-factor model falls below the recommended cutoff, one factor is selected. If the one-factor model does not fall below the cutoff but the two-factor model does, two factors are selected. However, if neither the one- nor the two-factor model falls below the cutoff, three or more factors are selected. We chose not to run three-factor models, because nonconvergence interfered with collecting information from the simulations.
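The decision rule described above can be written as a short function. This is a minimal sketch rather than the code used in the study: the function name, the argument names, and the use of non-strict inequalities at the cutoff are assumptions. The `lower_is_better` flag is also an assumption, added because smaller values indicate better fit for RMSEA and SRMR, whereas larger values indicate better fit for CFI and TLI. The fit values in the usage lines are made up for illustration.

```python
def select_n_factors(fit_one, fit_two, cutoff, lower_is_better=True):
    """Return 1, 2, or 3, where 3 stands for "three or more" factors.

    fit_one, fit_two: fit index values for the one- and two-factor models.
    cutoff: recommended cutoff for the index (e.g., .05 for RMSEA).
    lower_is_better: True for RMSEA/SRMR, False for CFI/TLI (assumed flag).
    """
    def acceptable(value):
        # Treating a value exactly at the cutoff as acceptable is an assumption.
        return value <= cutoff if lower_is_better else value >= cutoff

    if acceptable(fit_one):
        return 1
    if acceptable(fit_two):
        return 2
    # Neither model meets the cutoff; no three-factor model is fitted.
    return 3


# Hypothetical fit values, for illustration only.
rmsea_choice = select_n_factors(0.12, 0.04, cutoff=0.05)
cfi_choice = select_n_factors(0.70, 0.93, cutoff=0.95, lower_is_better=False)
print(rmsea_choice, cfi_choice)
```

Because the three-factor model is never fitted, a return value of 3 only indicates that neither of the two fitted models met the cutoff.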

Figure 4.

Simulation results for number of factors selected using model fit (RMSEA) and recommended cutoff scores (conventional and equivalence test).

Note. RMSEA = root mean square error of approximation.

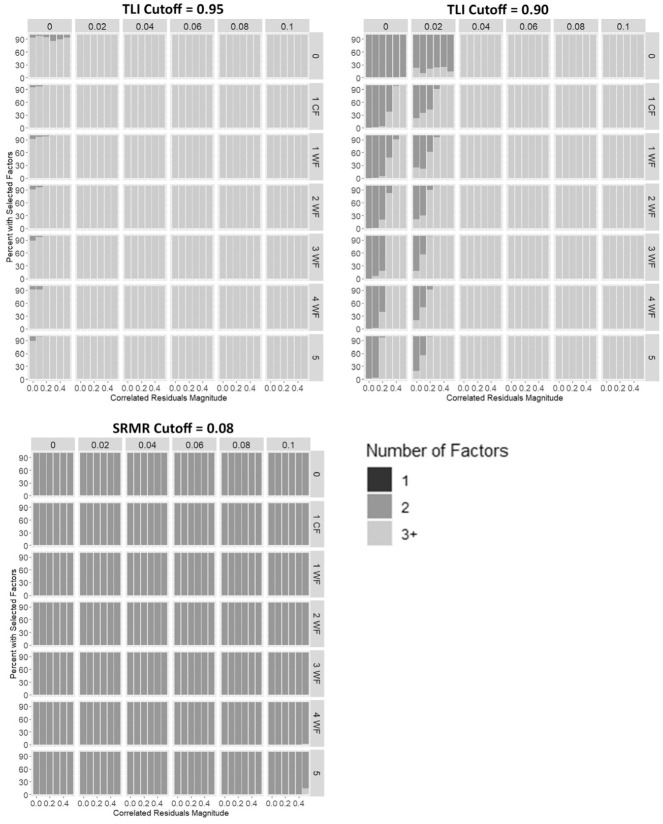

Figure 6.

Simulation results for number of factors selected using different model fit (SRMR and TLI) and conventional recommended cutoff scores.

Note. SRMR = standardized root mean square residual; TLI = Tucker–Lewis index.

Across all methods, either a two-factor solution or a solution with three or more factors was selected. Particularly for the more stringent cutoffs (e.g., RMSEA = .05, CFI/TLI = .95), three or more factors would be selected in most of the samples. The conventional cutoffs and the equivalence test approaches for RMSEA were very similar. The equivalence test approach for CFI overfactored more frequently than the conventional CFI approach. A notable result is that no simulated samples selected too few factors (i.e., one factor). A particularly interesting result is that SRMR overwhelmingly chose a two-factor solution, and only in the most extreme cases (five correlated residuals of magnitude .5 with ε = .1) did SRMR choose too many factors. Based on these results, the recommended cutoff values for RMSEA, RMSEAt, CFI, CFIt, and TLI tend to choose too many factors.

RMSEA, RMSEAt, CFI, CFIt, and TLI are sensitive to population nonspecific misspecification, the number of correlated residuals, and the magnitude of the correlated residuals, all of which affect the ability of these methods to select the correct number of factors. This makes these methods unfit for the number-of-factors selection problem, because in real data analysis these characteristics are unknown. The simulation presented here suggests that SRMR performs well at selecting the correct number of factors; however, previous research has shown that SRMR does not perform well for categorical data (Garrido et al., 2016).

Discussion

Though using model fit indices for selecting number of factors in an EFA may seem intuitive, growing evidence suggests that this approach is problematic in a variety of ways. Previous work has examined the performance of model fit indices under a variety of conditions including sample size, number of response categories, number of factors, number of items per factor, item distributions, interfactor correlation, factor loadings, and presence of cross-loadings (Clark & Bowles, 2018; Garrido et al., 2016). This study examines the performance of model fit indices in selecting the correct number of factors under a combination of unique conditions: continuous data, correlated residuals, and nonspecific misspecification. We believe these factors, and the performance of the tested methods under these conditions, have particularly important implications for scale evaluation. Our results largely show that model fit indices are inappropriate for selecting number of factors in an EFA.

Previous research has found results similar to those of this study. Garrido et al. (2016) found that model fit indices did not perform comparably to previously preferred methods when analyzing categorical data using polychoric correlations. Clark and Bowles (2018) found similar results with dichotomous data and tetrachoric correlations, suggesting that model fit indices were not well suited to selecting the number of factors. The results of the current study extend the previous results by examining performance with continuously generated data, suggesting that even under the ideal circumstance of continuous data, model fit indices are not appropriate for selecting the number of factors.

This study examines a more recently proposed adjustment to the conventional fit indices and their cutoffs, by examining RMSEAt and CFIt as equivalence testing procedures (Yuan et al., 2016; Marcoulides & Yuan, 2017). This is the first study to examine the potential of these newly proposed indices for the purposes of selecting number of factors in an EFA. These new proposed metrics performed very similarly, and no worse than the existing standard cutoffs. However, we believe that future research should explore these new metrics more in depth. In particular, they are designed to be more accurate across a larger range of sample sizes and model complexities; however, these were not factors that were manipulated in this study. Future research should examine if these fit indices can outperform other metrics across models that vary in sample size and complexity in an EFA context.

This study is the first to experimentally manipulate correlated residuals as part of the data generating model in examining the performance of any method for selecting the number of factors. Our results suggest that correlated residuals can have a large impact on model fit, which is not surprising. However, the downstream effects of this impact on the number of factors selected had not previously been examined. Our results are clear: even a few small correlated residuals can reduce fit to a point where model fit indices will overfactor. Our results suggest that most model fit indices are sensitive to correlated residuals; however, previous research has not examined how other methods of factor selection perform under these conditions as well. Future research should examine how eigenvalue-based methods (e.g., parallel analysis) perform under these conditions. This research would help us better understand if correlated residuals cause a unique problem for model fit indices or if this issue spans across factor selection methods.

Simulation studies often aim to examine how different inferential methods perform under a variety of controlled but realistic conditions. Because the researcher has complete control over the data generating process, the results of simulations often suggest a “best case scenario,” which does not reflect the noisy and multifaceted data generating process we see reflected in real data. This noisy process has direct implications for using statistics such as model fit indices: when the population model is very complex, there is dwindling hope that we will ever have “adequate” model fit, even with the population data. Methodologists have made many attempts to create complex data generating models in order to better approximate the performance of different methods in real data. Clark and Bowles (2018) built in cross-loadings and added minor factors in their data generation process while investigating the performance of model fit. They found that cross-loadings improved the ability of the model to select the correct number of factors, but minor factors largely did not influence the results. In our study, we take an alternative approach to creating a noisy data generating process: Cudeck and Browne’s (1992) method for adding nonspecific misspecification into a covariance matrix. Using this method, we found that nonspecific misspecification does indeed influence model fit, often reducing the model fit such that the solutions are overfactored. Examining Figures 2 to 5 makes it clear how much the performance of these methods can vary depending on the level of misspecification. Under perfectly specified conditions (e.g., leftmost columns of Figures 4 and 5), model fit indices perform quite well in selecting the correct number of factors; however, small misspecifications such as correlated residuals or nonspecific misspecification can quickly affect the performance of these methods. Under realistic conditions, we cannot recommend model fit indices to be used to select the number of factors in an EFA.

Figure 5.

Simulation results for number of factors selected using model fit (CFI) and recommended cutoff scores (conventional and equivalence test).

Note. CFI = comparative fit index.

Similar to Clark and Bowles (2018), we found that the fit indices tend to select models with too many factors rather than too few; however, SRMR was one particular exception, where the fit of the two-factor model was typically classified as “adequate.” Figure 6 shows that SRMR was largely accurate in selecting the correct number of factors under our simulation conditions (but see Garrido et al., 2016).

The costs of over- and underfactoring need to be weighed when choosing a method for selecting the number of factors. Previous research has argued that underfactoring has more deleterious effects than overfactoring (Cattell, 1978; Fabrigar et al., 1999; Fava & Velicer, 1992; Gorsuch, 1983; Wood et al., 1996). One of the primary arguments for this is that by building in too many factors the model is more realistic, capturing all potential sources of variance. This is particularly important in a general modeling exercise (as compared with scale evaluation), as the ultimate goal is to create a model that fits well according to the same model fit indices used in this study (e.g., RMSEA). In a modeling framework, including a minor factor in the structural model likely has little cost, and can account for variance in the measurement model with minimal contribution to the structural model. Particularly when factors are correlated, underfactoring can lead to claims that items are measuring the same thing, when in fact they are not. Additionally, underfactoring may muddy the interpretative waters, as combining measures of two related constructs into one construct may make it difficult to conceptualize what the true underlying latent factor is. Overfactoring, however, is not without cost. Small and very specific factors can result in a subset of very repetitive items, which, due to a lack of breadth of content coverage, add very little to the theoretical model (Ozer & Reise, 1994). In general, we believe that a more careful balance between over- and underfactoring needs to be struck in a scale evaluation context as compared with other contexts, such as modeling.

In scale evaluation, the purpose is to create a measure that can be used by other researchers that supports valid inferences and generates reliable scores. Both these goals are challenged when the selected number of factors is too few or too many. When too many factors are selected in the original scale development process, the original model is likely overfitting the data and will not be replicable (which also makes valid inference nearly impossible). It may be more practical in a scale evaluation context to define major factors and ignore minor factors in favor of parsimony and reproducibility. As such, we believe that while model fit indices protect from underfactoring in the factor selection process (Clark & Bowles, 2018), they are too prone to overfactoring to be appropriate for scale evaluation contexts. Other methods perform better in terms of balancing under- and overfactoring, and we recommend that those methods (e.g., parallel analysis, minimum average partial test) continue to be preferred in scale evaluation contexts.

By introducing correlated residuals, one could argue that we are adding additional factors, and thus the model fit indices were accurate in their selection of factors. However, we expect in these circumstances a substantive researcher would be uninterested in capturing these minor factors, and that methods that prioritize the selection of major factors would be preferable. Ultimately, the goal in scale evaluation is to select factors that are interpretable in the scientific context and replicable. Though by introducing correlated residuals into the model, equivalent models with minor factors are technically true, we argue that their substantive meaning is limited. On progressing to a CFA, researchers would be more likely to include these types of covariances as correlated residuals rather than minor factors. Ultimately, we believe that methods that succeed at capturing the major factors in a model and minimizing the inclusion of minor factors will be most beneficial in applications to scale evaluation.
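The equivalence mentioned above, between a model with a correlated residual and a model with an additional minor factor, can be checked numerically. The sketch below is illustrative and is not the study's data generating code. It uses the 20 items and loadings of 0.6 described for the simulation, but the split of 10 items per factor, the interfactor correlation of .3, the residual correlation of .3, and the choice of items 1 and 2 are assumptions made for the example. It also assumes a positive residual covariance, so that the minor factor loadings are real.

```python
import numpy as np

n_items, loading = 20, 0.6   # values described for the simulation
phi = 0.3                    # hypothetical interfactor correlation
resid_corr = 0.3             # hypothetical residual correlation

# Two major factors with 10 items each (assumed split).
Lambda = np.zeros((n_items, 2))
Lambda[:10, 0] = loading
Lambda[10:, 1] = loading
Phi = np.array([[1.0, phi], [phi, 1.0]])

unique_var = 1.0 - loading**2            # unique variance of each item
resid_cov = resid_corr * unique_var      # residual covariance for items 1 and 2

# Model A: two factors plus one correlated residual between items 1 and 2.
Theta_a = np.eye(n_items) * unique_var
Theta_a[0, 1] = Theta_a[1, 0] = resid_cov
Sigma_a = Lambda @ Phi @ Lambda.T + Theta_a

# Model B: the same two factors plus an orthogonal minor factor on which
# only items 1 and 2 load, with diagonal (uncorrelated) residuals.
minor = np.zeros((n_items, 1))
minor[[0, 1], 0] = np.sqrt(resid_cov)
Lambda_b = np.hstack([Lambda, minor])
Phi_b = np.eye(3)
Phi_b[:2, :2] = Phi
theta_b = np.full(n_items, unique_var)
theta_b[[0, 1]] -= resid_cov             # variance moved into the minor factor
Sigma_b = Lambda_b @ Phi_b @ Lambda_b.T + np.diag(theta_b)

print(np.allclose(Sigma_a, Sigma_b))
```

Both parameterizations imply the same population covariance matrix, so the data alone cannot distinguish a correlated residual from a two-item minor factor; the choice between them rests on substantive grounds.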

This simulation study is limited in several ways. The factors manipulated and the methods chosen were meant to fill a particularly large gap in the literature exploring the performance of fit indices in selecting the number of factors in a factor analysis. In particular, the simulation study only examines a two-factor model and only examines one sample size (1,000), a fixed number of items (20), and fixed factor loadings (0.6). Garrido et al. (2016) examined the impact of these factors and more on the performance of these fit indices with categorical data. Clark and Bowles (2018) examined factor loadings and number of items. Though these factors have been investigated in the past, future research may explore the interaction among these factors and those manipulated in this study (correlated residuals and nonspecific model misspecification) in order to better understand the interplay of the many complex conditions under which factor analysis can occur. Additional factors may be important to consider in future, particularly within the context of scale evaluation. For example, presence of some high- and low-loading items is very common, as items need to be pared down over the course of development. Previous research suggests that the reliability of latent factors can often be in contradiction with the fit of the model, where models with poor measurement can fit well and models with strong measurement can fit poorly (McNeish et al., 2018; Stanley & Edwards, 2016). Future research should examine the unique role that varied factor loadings may play in the ability of fit indices to select the correct number of factors and how this may interact with other factors.

Implications for Practitioners

Model fit indices perform too unpredictably under realistic conditions to warrant use in selecting the number of factors in an EFA. Though SRMR performed very well in our simulation, the results of other simulation studies suggest that this fit index may not perform well in other circumstances. Garrido et al. (2016) found SRMR to be the most inconsistent method, in particular with regard to sample size. They found that optimal cutoffs for small sample sizes resulted in suboptimal cutoffs for large sample sizes, and vice versa. These results suggest that the high performance of SRMR in this simulation may be due to the alignment of sample size and cutoff value; thus, even though it performed well in this study, we cannot recommend its use across a variety of circumstances. Eigenvalue-based methods (e.g., parallel analysis) continue to be the preferred methods for selecting the number of factors in an EFA, particularly in a scale evaluation framework, as they balance over- and underfactoring very well.

While we sympathize with researchers who would like statistical tools to be as straightforward as possible to use, the current tools (e.g., EFA and CFA) are not particularly straightforward. To date, the search for a perfect decision rule to solve the number of factors problem has been met with failure. We suspect this shall remain true in perpetuity. Given that, we add our voice to the chorus of scholars who have opined against the search for (and even use of) automatic criteria when it comes to determining the number of factors. Researchers must consider statistical, substantive, and pragmatic goals and make an informed and defensible decision.

Implications for Methodologists

Though the focus of this simulation was to provide practical advice on the use of model fit indices to select number of factors, two important methodological implications emerged. Both are related to the issue of sensitivity to misspecification.

Our simulation results suggest that SRMR is not as sensitive to certain types of misspecification (e.g., correlated residuals, nonspecific misspecification) as other model fit indices (RMSEA, CFI, TLI). Some previous work has examined the relative sensitivity of fit indices to different factors such as the number of variables (Kenny & McCoach, 2003), type of model (Fan & Sivo, 2007), and fake responding (Lombardi & Pastore, 2012). However, the present research suggests that there may be additional factors to which fit indices are differentially sensitive. Once these factors are identified, researchers may be able to select the fit index that is most sensitive to the types of misspecification that are most important to detect in a given circumstance.

An additional issue of sensitivity arises from our simulation studies, which suggest that given a specific amount of nonspecific misspecification, the impact of correlated residuals can differ greatly. This suggests that different types of misspecification can interact, and that sensitivity of model fit indices to misspecification may vary across levels of other types of misspecification. This can be clearly seen in Figures 2 and 3, where the impact of the magnitude of the correlated residuals is greatest when there is little nonspecific misspecification. However, when nonspecific misspecification is high, the impact of magnitude of correlated residuals is smaller. Future research should delve into the potential interaction among types of misspecification, and their impact on model fit indices. Typically, we might expect that the same misspecification would affect model fit equally across levels of other misspecification, but the current simulation suggests that this may not be the case.

Acknowledgments

We extend our appreciation to Dr. Steve Reise for feedback on this article.

Based on its original conceptualization by Kaiser (1960), this method cannot overfactor as it defines an upper bound for the number of factors.

Footnotes

Authors’ Note: Portions of this study have been presented at the National Council for Measurement in Education, San Antonio, TX, April 2017.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This material is based on work supported by the National Science Foundation Graduate Research Fellowship Program under Grant DGE-1343012.

ORCID iD: Amanda K. Montoya https://orcid.org/0000-0001-9316-8184

References

- Bentler P. M. (1990). Comparative fit indexes in structural models. Psychological Bulletin, 107(2), 238-246. 10.1037/0033-2909.107.2.238 [DOI] [PubMed] [Google Scholar]

- Browne M. W., Cudeck R. (1992). Alternative ways of assessing model fit. Sociological Methods & Research, 21(2), 230-258. 10.1177/0049124192021002005 [DOI] [Google Scholar]

- Cattell R. B. (1966). The scree test for the number of factors. Multivariate Behavioral Research, 1(2), 245-276. 10.1207/s15327906mbr0102_10 [DOI] [PubMed] [Google Scholar]

- Cattell R. B. (1978). The scientific use of factor analysis in behavioral and life sciences. Plenum. [Google Scholar]

- Cattell R. B., Vogelmann S. (1977). A comprehensive trial of the scree and KG criteria for determining the number of factors. Multivariate Behavioral Research, 12(3), 289-325. 10.1207/s15327906mbr1203_2 [DOI] [PubMed] [Google Scholar]

- Chen F., Curran P. J., Bollen K. A., Kirby J., Paxton P. (2008). An empirical evaluation of the use of fixed cutoff points in RMSEA test statistic in structural equation models. Sociological Methods & Research, 36(4), 462-494. 10.1177/0049124108314720 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clark D. A., Bowles R. P. (2018). Model fit and item factor analysis: Overfactoring, underfactoring, and a program to guide interpretation, Multivariate Behavioral Research, 53(4), 544-558. 10.1080/00273171.2018.1461058 [DOI] [PubMed] [Google Scholar]

- Cudeck R., Browne M. W. (1992). Constructing a covariance matrix that yields a specified minimizer and a specified minimum discrepancy function value. Psychometrika, 57(3), 357-369. [Google Scholar]

- Dinno A. (2009). Exploring the sensitivity of Horn’s parallel analysis to the distributional form of random data, Multivariate Behavioral Research, 44(3), 362-388. 10.1080/00273170902938969 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fabrigar L. R., Wegener D. T., MacCallum R. C., Strahan E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272-299. 10.1037/1082-989X.4.3.272 [DOI] [Google Scholar]

- Fan X., Sivo S. A. (2007). Sensitivity of fit indices to model misspecification and model types. Multivariate Behavioral Research, 42(3), 509-529. 10.1080/00273170701382864 [DOI] [Google Scholar]

- Fava J. L., Velicer W. F. (1992). The effects of overextraction on factor and component analysis. Multivariate Behavioral Research, 27(3), 387-415. 10.1037/1082-989X.4.3.272 [DOI] [PubMed] [Google Scholar]

- Finch W. H., French B. F. (2018). A simulation investigation of the performance of invariance assessment using equivalence testing procedures. Structural Equation Modeling: A Multidisciplinary Journal, 25(5), 673-686. 10.1080/10705511.2018.1431781 [DOI] [Google Scholar]

- Garrido L. E., Abad F. J., Ponsoda V. (2016). Are fit indices really fit to estimate the number of factors with categorical variables? Some cautionary findings via Monte Carlo simulation. Psychological Methods, 21(1), 93-111. 10.1037/met0000064 [DOI] [PubMed] [Google Scholar]

- Glorfield L. W. (1995). An improvement on Horn’s parallel analysis methodology for selecting the correct number of factors to retain. Educational and Psychological Measurement, 55(3), 377-393. 10.1177/0013164495055003002 [DOI] [Google Scholar]

- Gorsuch R. L. (1983). Factor analysis. Lawrence Erlbaum. [Google Scholar]

- Hakstian A. R., Rogers W. T., Cattell R. B. (1982). The behavior of number-of-factors rules with simulated data. Multivariate Behavioral Research, 17(2), 193-219. 10.1207/s15327906mbr1702_3 [DOI] [PubMed] [Google Scholar]

- Hallquist M. N., Wiley J. F. (2018). MplusAutomation: An R package for facilitating large-scale latent variable analyses in Mplus. Structural Equation Modeling, 25(4), 621-638. 10.1080/10705511.2017.1402334. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heene M., Hilbert S., Draxler C., Ziegler M., Buhner M. (2011). Masking misfit in confirmatory factor analysis by increasing unique variances: A cautionary note on the usefulness of cutoff values of fit indices. Psychological Methods, 16(3), 319-336. 10.1037/a0024917 [DOI] [PubMed] [Google Scholar]

- Horn J. L. (1965). A rationale and test for the number of factors in factor analysis. Psychometrika, 30(2), 179-185. 10.1007/BF02289447 [DOI] [PubMed] [Google Scholar]

- Hu L.-T., Bentler P. M. (1998). Fit indices in covariance structure modeling: Sensitivity to underparameterized model misspecification. Psychological Methods, 3(4), 424-453. 10.1037/1082-989X.3.4.424 [DOI] [Google Scholar]

- Kaiser H. F. (1960). The application of electronic computers to factor analysis. Educational and Psychological Measurement, 20(1), 141-151. 10.1177/001316446002000116 [DOI] [Google Scholar]

- Kenny D. A., McCoach D. B. (2003). Effect of the number of variables on measures of fit in structural equation modeling. Structural Equation Modeling: A Multidisciplinary Journal, 10(3), 333-351. 10.1207/S15328007SEM1003_1 [DOI] [Google Scholar]

- Lombardi L., Pastore M. (2012). Sensitivity of fit indices to fake perturbation of ordinal data: A sample by replacement approach. Multivariate Behavioral Research, 47(4), 519-546. 10.1080/00273171.2012.692616 [DOI] [PubMed] [Google Scholar]

- Marcoulides K. M., Yuan K.-H. (2017). New ways to evaluate goodness of fit: A note on using equivalence testing to assess structural equation models. Structural Equation Modeling: A Multidisciplinary Journal, 24(1), 148-153. 10.1080/10705511.2016.1225260 [DOI] [Google Scholar]

- Marsh H. W., Hau K.-T., Wen Z. (2004). In search of golden rules: Comment on hypothesis-testing approaches to setting cutoff values for fit indexes and dangers in overgeneralizing Hu and Bentler’s (1999) findings. Structural Equation Modeling: A Multidisciplinary Journal, 11(3), 320-341. 10.1207/s15328007sem1103_2 [DOI] [Google Scholar]

- McNeish D., An J., Hancock G. R. (2018). The thorny relation between measurement quality and fit index cut-offs in latent variable models. Journal of Personality Assessment, 100(1), 43-52. 10.1080/00223891.2017.1281286 [DOI] [PubMed] [Google Scholar]

- Nye C. D., Drasgow F. (2011). Assessing goodness of fit: Simple rules of thumb simply do not work. Organizational Research Methods, 14(3), 548-570. 10.1177/1094428110368562 [DOI] [Google Scholar]

- Ortuño-Sierra J., Santarén-Rosell M., Pérez de, Albéniz A., Fonseca-Pedrero E. (2015). Dimensional structure of the Spanish version of the Positive and Negative Affect Schedule (PANAS) in adolescents and young adults. Psychological Assessment, 27(3), e1-e9. 10.1037/pas0000107 [DOI] [PubMed] [Google Scholar]

- Ozer D. J., Reise S. P. (1994). Personality assessment. Annual Review of Psychology, 45, 357-388. 10.1146/annurev.ps.45.020194.002041 [DOI] [Google Scholar]

- Preacher K. J., MacCallum R. C. (2003). Repairing Tom Swift’s electric factor analysis machine. Understanding Statistics, 2(1), 13-43. 10.1207/S15328031US0201_02 [DOI] [Google Scholar]

- Preacher K. J., Zhang G., Kim C., Mels G. (2013). Choosing the optimal number of factors in exploratory factor analysis: A model selection perspective. Multivariate Behavioral Research, 48(1), 28-56. 10.1080/00273171.2012.710386 [DOI] [PubMed] [Google Scholar]

- Rodgers J. L. (2010). The epistemology of mathematical and statistical modeling: A quiet methodological revolution. American Psychologist, 65(1), 1-12. 10.1037/a0018326 [DOI] [PubMed] [Google Scholar]

- Sellbom M., Tellegen A. (2019). Factor analysis in psychological assessment research: Common pitfalls and recommendations. Psychological Assessment, 31(12), 1428-1441. 10.1037/pas0000623

- Sharma S., Mukherjee S., Kumar A., Dillon W. R. (2004). A simulation study to investigate the use of cutoff values for assessing model fit in covariance structure models. Journal of Business Research, 58(7), 935-943. 10.1016/j.jbusres.2003.10.007

- Shi D., Song H., DiStefano C., Maydeu-Olivares A., McDaniel H. L., Jiang Z. (2019). Evaluating factorial invariance: An interval estimation approach using Bayesian structural equation modeling. Multivariate Behavioral Research, 54(2), 224-245. 10.1080/00273171.2018.1514484

- Stanley L. M., Edwards M. C. (2016). Reliability and model fit. Educational and Psychological Measurement, 76(6), 976-985. 10.1177/0013164416638900

- Steiger J. H., Lind J. C. (1980, May). Statistically-based tests for the number of common factors [Paper presentation]. Annual Spring Meeting of the Psychometric Society, Iowa City, IA, United States.

- Sun Y. (2015). Constructing a misspecified item response model that yields a specified estimate and a specified model misfit value (Doctoral dissertation), The Ohio State University. https://etd.ohiolink.edu/!etd.send_file?accession=osu1449097866&disposition=inline

- Tucker L. R., Koopman R. F., Linn R. L. (1969). Evaluation of factor analytic research procedures by means of simulated correlation matrices. Psychometrika, 34(4), 421-459. 10.1007/BF02290601

- Tucker L. R., Lewis C. (1973). A reliability coefficient for maximum likelihood factor analysis. Psychometrika, 38(1), 1-10. 10.1007/BF02291170

- West S. G., Taylor A. B., Wu W. (2014). Model fit and model selection in structural equation modeling. In Hoyle R. H. (Ed.), Handbook of structural equation modeling (pp. 209-231). Guilford Press.

- Wood J. M., Tataryn D. J., Gorsuch R. L. (1996). Effects of under- and overextraction on principal axis factor analysis with varimax rotation. Psychological Methods, 1(4), 354-365. 10.1037/1082-989X.1.4.354

- Yang Y., Xia Y. (2015). On the number of factors to retain in exploratory factor analysis for ordered categorical data. Behavior Research Methods, 47(3), 756-772. 10.3758/s13428-014-0499-2

- Yuan K.-H., Chan W. (2016). Measurement invariance via multigroup SEM: Issues and solutions with Chi-square-difference tests. Psychological Methods, 21(3), 405-426. 10.1037/met0000080

- Yuan K.-H., Chan W., Marcoulides G. A., Bentler P. M. (2016). Assessing structural equation models by equivalence testing with adjusted fit indexes. Structural Equation Modeling: A Multidisciplinary Journal, 23(3), 319-330. 10.1080/10705511.2015.1065414

- Zevon M. A., Tellegen A. (1982). The structure of mood change: An idiographic/nomothetic analysis. Journal of Personality and Social Psychology, 43(1), 111-122. 10.1037/0022-3514.43.1.111