Abstract

Segmentation is crucial in medical imaging analysis to help extract regions of interest (ROI) from different imaging modalities. The aim of this study is to develop and train a 3D convolutional neural network (CNN) for skull segmentation in magnetic resonance imaging (MRI). Fifty-eight gold standard volumetric labels were created from computed tomography (CT) scans as standard tessellation language (STL) models. These STL models were converted into matrices and overlaid on the 58 corresponding MR images to create the MRI gold standard labels. The CNN was trained and tested with these 58 MR images and achieved a mean ± standard deviation (SD) Dice similarity coefficient (DSC) of 0.7300 ± 0.04. In a further investigation, the brain region was removed from the images using a second 3D CNN, trained on MR images only, combined with manual corrections. When this new, brain-free dataset was presented to the first CNN, the mean ± SD DSC rose to 0.7826 ± 0.03. This paper thus provides a framework for segmenting the skull in MRI using a CNN and CT-derived STL models.

Keywords: Convolutional Neural Network, Standard Tessellation Language, Image Segmentation, MRI, CT

1. Introduction

Image segmentation is the process of partitioning an image into multiple regions to simplify it into something more meaningful, so that regions of interest (ROI) can be located easily. In medical imaging and analysis, the ROI identified by segmentation can represent various structures in the body, such as pathologies, tissues, bones, organs, and prostheses.

Magnetic resonance imaging (MRI) and computed tomography (CT) are the most common medical imaging systems used to reveal relevant structures for automated processing of scanned data. Both techniques are excellent at providing non-invasive diagnostic images of organs and structures inside the body. However, CT is not favorable for routine anatomical imaging of the head, since each scan exposes the patient to a small dose of ionizing radiation, putting the patient at risk of developing diseases such as cancer. For instance, a study in [1] pointed out that the risk of developing leukemia and brain tumors increases with radiation exposure from CT scans. MRI, on the other hand, has difficulty distinguishing different tissues because of its low signal-to-noise ratio. Additionally, because bone produces a weak magnetic resonance signal, MRI struggles to differentiate bone tissue from other structures. Specifically, since different bone tissues tend to differ more in appearance from one another than from the adjacent muscle tissue, segmentation approaches must be robust to these structural variations [2]. Thus, bone segmentation from MRI is a challenging problem. Current biomedical image segmentation methods take advantage of deep learning with convolutional neural networks (CNNs) [3]; for example, [4] trained a large deep CNN to classify over 1 million high-resolution images, achieving top-1 and top-5 test error rates of 37.5% and 17.0%, much better than the previous state of the art.

In a CNN, the neurons of each layer are connected to local regions of the previous layer through shared weights (convolutional filters). During training, these layers learn to extract features (such as horizontal or vertical edges) from the training images, which allows the CNN to perform tasks such as segmentation by recognizing these features in new images. The advantage of CNNs over other techniques is that the convolutional filters are not hand-crafted: they are learned and adapted automatically during optimization, building up increasingly high-level descriptions of the image.
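As a minimal illustration of what a single convolutional filter computes, the NumPy sketch below applies one 3 × 3 × 3 kernel to a toy volume that contains an intensity step. The kernel is hand-set here for clarity; in a CNN, such weights would instead be learned during training. The volume, the kernel, and the helper name `conv3d_valid` are illustrative assumptions, not part of the network used in this study.

```python
import numpy as np


def conv3d_valid(volume, kernel):
    """Naive 3D cross-correlation with 'valid' padding, as in a CNN convolution layer."""
    kz, ky, kx = kernel.shape
    oz = volume.shape[0] - kz + 1
    oy = volume.shape[1] - ky + 1
    ox = volume.shape[2] - kx + 1
    out = np.zeros((oz, oy, ox))
    for z in range(oz):
        for y in range(oy):
            for x in range(ox):
                # Weighted sum of the neighbourhood currently under the kernel.
                out[z, y, x] = np.sum(volume[z:z + kz, y:y + ky, x:x + kx] * kernel)
    return out


# Toy volume: intensity steps from 0 to 1 halfway along the first axis (an "edge").
volume = np.zeros((6, 5, 5))
volume[3:] = 1.0

# Hand-set edge detector along the first axis (a learned filter could end up similar).
kernel = np.zeros((3, 3, 3))
kernel[0] = -1.0
kernel[2] = 1.0

response = conv3d_valid(volume, kernel)
print(response[:, 0, 0])  # [0. 9. 9. 0.]
```

Because the kernel subtracts the first slice of its window from the last one, the response is non-zero only where the window straddles the step, which is the sense in which a filter "detects" an edge.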

With recent advances in graphics processing units and improvements in computational efficiency, CNNs have achieved excellent results in biomedical image segmentation, where deep learning approaches can be applied efficiently. CNNs have been extensively applied to medical imaging tasks, including brain and spine segmentation [5], acute brain hemorrhage diagnosis [6], vessel segmentation [7], skull stripping in brain MRI [8], knee bone and cartilage segmentation [9], segmentation of craniomaxillofacial bony structures [10], proximal femur segmentation [11], and cardiac image segmentation [12].

A review of deep learning techniques by Garcia-Garcia et al. [13] highlights a promising deep learning framework for segmentation tasks known as UNet [14]. UNet is a CNN with a down-sampling encoding path followed by an up-sampling decoding path that restores the resolution of the output, and it has shown high performance on biomedical images [7].

Although numerous MRI segmentation techniques are described in the literature, few have focused on segmenting the skull in MRI. One approach is based on mathematical morphology [15]. The authors first remove the brain using a surface extraction algorithm and mask the scalp using thresholding and mathematical morphology. During skull segmentation, mathematical morphology is used to exclude background voxels whose intensities are similar to those of the skull. Using thresholding and morphological operations, the inner and outer skull boundaries are identified, and the results are masked with the scalp and brain volumes to obtain closed, non-intersecting skull boundaries. Applying this method to 44 images, the authors achieved mean Dice coefficients of 0.7346, 0.6918, and 0.6337 for CT shifts of 1 mm, 2 mm, and 3 mm, respectively.

Wang et al. [16] used statistical shape information from 15 subjects: the anatomy of interest is delineated in the CT data and used to construct an active shape model of the skull surfaces. Automatic landmarking on the coupled surfaces is optimized by minimizing a description length that includes local thickness information. This method achieved a Dice coefficient of 0.7500 for the one calvarium segmented. A support vector machine (SVM) combining local and global features is used in [17]. A feature vector is constructed for each voxel in the image, with the voxel itself providing the first entry; the remaining entries combine the intensities of a set of nearby voxels with statistical moments of the local surroundings. This feature vector is then passed to a trained SVM that classifies each voxel as either skull or non-skull. Using this SVM, the authors obtained a mean Dice coefficient of 0.7500 (minimum 0.68, maximum 0.81) on 10 patients from a dataset of 40 patients.
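To make the per-voxel feature construction of [17] more concrete, the following sketch builds one feature vector per voxel: the voxel's own intensity, the intensities of its six face-adjacent neighbours, and the mean and variance of its 3 × 3 × 3 neighbourhood. The exact neighbour set and statistical moments used in [17] are not given here, so these choices (and the helper `voxel_features`) are assumptions. The resulting matrix could then be passed to an SVM classifier such as scikit-learn's `sklearn.svm.SVC`.

```python
import numpy as np

# Hypothetical neighbour set: the six face-adjacent voxels, as (dz, dy, dx) offsets.
OFFSETS = [(-1, 0, 0), (1, 0, 0), (0, -1, 0), (0, 1, 0), (0, 0, -1), (0, 0, 1)]


def voxel_features(volume):
    """Return an (N, 9) matrix with one row per voxel:
    [own intensity, 6 neighbour intensities, local mean, local variance]."""
    v = np.asarray(volume, dtype=float)
    nz, ny, nx = v.shape
    padded = np.pad(v, 1, mode="edge")  # replicate borders so edge voxels have neighbours

    def shifted(dz, dy, dx):
        # Value of the neighbour at offset (dz, dy, dx), for every voxel at once.
        return padded[1 + dz:1 + dz + nz, 1 + dy:1 + dy + ny, 1 + dx:1 + dx + nx]

    feats = [v] + [shifted(*o) for o in OFFSETS]
    # First and second statistical moments of the 3 x 3 x 3 neighbourhood.
    window = np.stack([shifted(dz, dy, dx)
                       for dz in (-1, 0, 1) for dy in (-1, 0, 1) for dx in (-1, 0, 1)])
    feats += [window.mean(axis=0), window.var(axis=0)]
    return np.stack([f.ravel() for f in feats], axis=1)
```

Each row corresponds to one voxel in C (row-major) order, so predicted labels can be reshaped back to the volume shape after classification.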

Ref. [18] introduced a shape model based on convolutional restricted Boltzmann machines (cRBM) for skull segmentation. This technique incorporates the cRBM shape model into the Statistical Parametric Mapping 8 (SPM8) segmentation framework [19,20] and was applied to 23 images, reaching a median Dice score of 0.7344 for T1-weighted images and 0.7446 for combined T1- and T2-weighted images.

Most recently, [21] analyzed three skull segmentation methods and identified multiple factors that improve segmentation quality. Using a dataset from 10 patients, they concluded that FSL [22] and SPM12 [23] produced improved skull segmentations, with mean Dice coefficients of 0.76 and 0.80, respectively.

These techniques achieve a mean DSC of about 0.75 on small datasets. However, this effectiveness may be compromised for larger datasets, or when the methods are extended to images collected from different MRI devices, where the images suffer from noise and the parameter values vary.

Arguably, one of the most important components in machine learning and deep learning is the set of ground truth labels. Careful data collection and high-quality ground truth labels for training and testing a model are imperative for a successful deep learning project, but they come at a cost in computational effort and can be very time-consuming [24]. Minnema et al. [25] introduced a CNN for skull segmentation in CT scans that showed a high overlap with the gold standard segmentation. In their image processing step, a 3D surface model representing the label was created in the standard tessellation language (STL) file format, a well-established way to represent 3D models [26,27,28,29]. To obtain the STL files from CT, a high-quality gold standard STL model was segmented manually by an experienced medical engineer. The results showed a difference of only about one voxel between the gold standard segmentation and the CNN segmentation, with a mean Dice similarity coefficient of 0.9200.

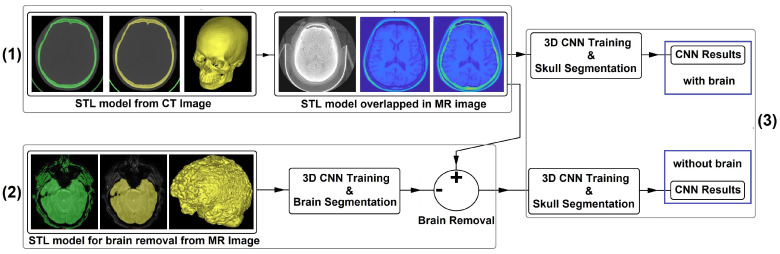

Therefore, this work applies a deep learning approach, more precisely UNet, to skull segmentation, with the ground truth labels created from CT images in the STL file format. Figure 1 presents a schematic overview of the proposed study. First, STL models are created from 58 CT scans. After being converted into matrices, these models are overlaid on the MR images to create the gold standard and the first dataset. Dataset 1 is then used to create the first CNN. To improve accuracy, 62 MR images are used to generate brain STL models, which are converted into matrices to create a set of brain gold standard labels and a second dataset. A brain segmentation algorithm based on a second CNN is created and, through this CNN model and manual corrections, the brain is removed from dataset 1. Finally, this new dataset is presented again to the first CNN topology.

Figure 1.

(1) STL models are produced from 58 CT scans and then overlapped with the MR images to create the first dataset. (2) 62 MR images are used to create brain STL models, and a brain segmentation algorithm is created. The brain segmentation algorithm is combined with manual corrections to remove the brain from dataset 1 to create dataset 2. (3) Finally, these 2 datasets are compared using the same CNN topology.

2. Materials and Methods

2.1. Dataset

We searched The Cancer Imaging Archive (TCIA) data collections [30] for reliable datasets that contain both CT and MRI from the same patient with minimal variation in the coronal, sagittal, and transverse planes. Fifty-eight volumetric CT and MR images that meet these criteria were selected from four datasets:

CPTAC-GBM [31]: this dataset contains images from the National Cancer Institute's Clinical Proteomic Tumor Analysis Consortium Glioblastoma Multiforme cohort. It includes CR, CT, MR, and SC imaging modalities from 66 participants, totaling 164 studies;

HNSCC [32,33,34]: this collection contains CT, MR, PT, RT, RTDOSE, RTPLAN, and RTSTRUCT data from 627 subjects, in a total of 1177 studies;

TCGA-HNSC [35]: The Cancer Genome Atlas Head-Neck Squamous Cell Carcinoma collection contains 479 studies from 227 participants across CT, MR, PET, RTDOSE, RTPLAN, and RTSTRUCT modalities;

ACRIN-FMISO [36,37,38]: the ACRIN 6684 multi-center clinical trial collection contains 423 studies from 45 participants using CT, MR, and PET modalities.

2.2. Data Processing I

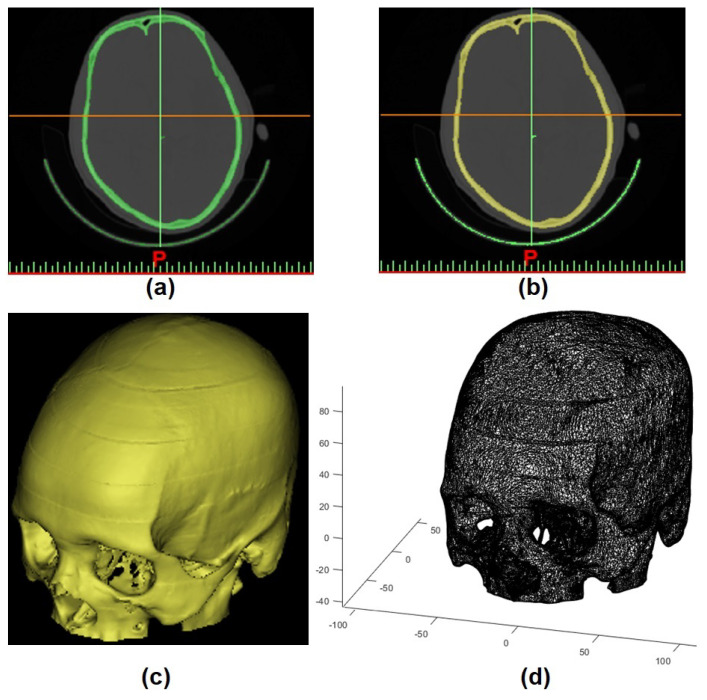

As this study uses CT scans to create the ground truth labels, the first step was to generate the STL models. To do so, the CT images were imported into Mimics Medical Imaging Software (Materialise, Leuven, Belgium). Using a global threshold set individually for each scan, region growing, and manual corrections, the 3D mesh was built, from which the STL model was constructed (Figure 2a–c).

Figure 2.

(a) thresholding applied in CT scan, (b) region growing, (c) 3D mesh model (STL model), and (d) STL model converted into matrix.
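In this study, thresholding and region growing were carried out interactively in Mimics. As an illustration only, the Python sketch below reproduces the general idea on a CT volume in Hounsfield units (HU): voxels at or above a global threshold form a candidate mask, and region growing keeps only the voxels 6-connected to a chosen seed, which discards isolated bright voxels such as noise. The threshold and seed are hypothetical inputs, not the settings used here, and `threshold_region_grow` is a hypothetical helper.

```python
from collections import deque

import numpy as np


def threshold_region_grow(ct_hu, threshold, seed):
    """Keep voxels >= threshold that are 6-connected to the seed voxel (z, y, x)."""
    candidate = np.asarray(ct_hu) >= threshold  # global thresholding
    grown = np.zeros(candidate.shape, dtype=bool)
    if not candidate[seed]:
        return grown  # seed lies outside the thresholded region: nothing to grow
    steps = [(-1, 0, 0), (1, 0, 0), (0, -1, 0), (0, 1, 0), (0, 0, -1), (0, 0, 1)]
    grown[seed] = True
    queue = deque([seed])
    while queue:  # breadth-first flood fill over candidate voxels
        z, y, x = queue.popleft()
        for dz, dy, dx in steps:
            n = (z + dz, y + dy, x + dx)
            # Stay inside the volume and only step onto unvisited candidate voxels.
            if all(0 <= n[k] < candidate.shape[k] for k in range(3)) and candidate[n] and not grown[n]:
                grown[n] = True
                queue.append(n)
    return grown
```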

Then, to convert the geometric information (STL model) into a discrete voxel grid (matrix), voxelisation was performed using [39] in MATLAB R2019b (Figure 2d).
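Reference [39] is cited as the voxelisation tool. The following Python sketch illustrates one common way such tools work, namely ray-parity filling, and is not necessarily the algorithm of [39]. For every (x, y) column of voxel centres, a ray is cast along z, its crossings with the mesh triangles are computed from barycentric coordinates, and voxel centres lying between successive entry and exit crossings are marked as inside. It assumes a closed (watertight) mesh such as an exported STL model; `voxelise` and the toy `unit_cube_triangles` mesh are hypothetical helpers.

```python
import numpy as np


def voxelise(triangles, shape, origin, spacing):
    """Ray-parity voxelisation of a closed triangle mesh.

    triangles: (T, 3, 3) array of vertex coordinates (x, y, z).
    shape: (nx, ny, nz) grid size; origin and spacing place the grid in mesh units.
    Returns a boolean (nx, ny, nz) array, True for voxel centres inside the mesh.
    """
    tri = np.asarray(triangles, dtype=float)
    origin = np.asarray(origin, dtype=float)
    spacing = np.asarray(spacing, dtype=float)
    xs, ys, zs = (origin[k] + (np.arange(shape[k]) + 0.5) * spacing[k] for k in range(3))
    vol = np.zeros(shape, dtype=bool)

    a, b, c = tri[:, 0], tri[:, 1], tri[:, 2]
    # Twice the signed area of each triangle projected onto the xy-plane.
    det = (b[:, 0] - a[:, 0]) * (c[:, 1] - a[:, 1]) - (c[:, 0] - a[:, 0]) * (b[:, 1] - a[:, 1])
    keep = np.abs(det) > 1e-12  # faces parallel to z are never crossed by a z-ray
    a, b, c, det = a[keep], b[keep], c[keep], det[keep]

    for i, x in enumerate(xs):
        for j, y in enumerate(ys):
            # Barycentric coordinates of (x, y) inside each projected triangle.
            l1 = ((b[:, 0] - x) * (c[:, 1] - y) - (c[:, 0] - x) * (b[:, 1] - y)) / det
            l2 = ((c[:, 0] - x) * (a[:, 1] - y) - (a[:, 0] - x) * (c[:, 1] - y)) / det
            l3 = 1.0 - l1 - l2
            hit = (l1 >= -1e-12) & (l2 >= -1e-12) & (l3 >= -1e-12)
            if not hit.any():
                continue
            # Height at which the ray crosses each hit triangle.
            z_hits = l1[hit] * a[hit, 2] + l2[hit] * b[hit, 2] + l3[hit] * c[hit, 2]
            # Merge duplicates where the ray passes through an edge shared by two triangles.
            z_hits = np.unique(np.round(z_hits, 9))
            # Successive crossings alternate entry/exit; centres in between are inside.
            for z_in, z_out in zip(z_hits[0::2], z_hits[1::2]):
                vol[i, j, :] |= (zs > z_in) & (zs < z_out)
    return vol


def unit_cube_triangles():
    """Toy closed mesh: the unit cube [0, 1]^3 as 12 triangles."""
    quads = [
        [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)], [(0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)],
        [(0, 0, 0), (1, 0, 0), (1, 0, 1), (0, 0, 1)], [(0, 1, 0), (1, 1, 0), (1, 1, 1), (0, 1, 1)],
        [(0, 0, 0), (0, 1, 0), (0, 1, 1), (0, 0, 1)], [(1, 0, 0), (1, 1, 0), (1, 1, 1), (1, 0, 1)],
    ]
    return np.array([[q[0], q[1], q[2]] for q in quads] + [[q[0], q[2], q[3]] for q in quads], dtype=float)


mask = voxelise(unit_cube_triangles(), shape=(8, 8, 8), origin=(-0.5, -0.5, -0.5), spacing=(0.25, 0.25, 0.25))
print(mask.sum())  # 64: a 4 x 4 x 4 block of voxel centres lies inside the cube
```

Rays that graze a silhouette edge or vertex exactly can still be miscounted; some voxelisers reduce such errors by casting rays along more than one axis and combining the results.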

To generate the MRI labels, the STL models extracted from the CT ground truth were overlaid on each MRI slice of the three MRI sequences (T1, T2, and FLAIR) using a combination of manual translations, rotations, and scaling. These manual alignments were followed by visual inspection and fine adjustment to ensure good quality (Figure 3). T2-weighted scans were included because cerebro-spinal fluid (CSF) appears bright on T2-weighted images, so the border between the skull and the CSF can be delineated more clearly; T2-weighted data have also given good results in [18,21].
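The alignment itself was performed manually. Purely to illustrate what combining translation, rotation, and scaling means for the mesh, the sketch below composes them into one 4 × 4 homogeneous matrix and applies it to STL vertex coordinates before voxelisation. The parameter names, the rotation order (about x, then y, then z), uniform scaling, and the units are all assumptions; `similarity_matrix` and `transform_vertices` are hypothetical helpers.

```python
import numpy as np


def similarity_matrix(angles_deg=(0.0, 0.0, 0.0), scale=1.0, translation=(0.0, 0.0, 0.0)):
    """4x4 homogeneous matrix: uniform scale, rotate about x, then y, then z, then translate."""
    ax, ay, az = np.radians(angles_deg)
    rx = np.array([[1, 0, 0], [0, np.cos(ax), -np.sin(ax)], [0, np.sin(ax), np.cos(ax)]])
    ry = np.array([[np.cos(ay), 0, np.sin(ay)], [0, 1, 0], [-np.sin(ay), 0, np.cos(ay)]])
    rz = np.array([[np.cos(az), -np.sin(az), 0], [np.sin(az), np.cos(az), 0], [0, 0, 1]])
    m = np.eye(4)
    m[:3, :3] = (rz @ ry @ rx) * scale  # rx acts first on a column vector
    m[:3, 3] = translation
    return m


def transform_vertices(vertices, matrix):
    """Apply a 4x4 homogeneous transform to an (..., 3) array of vertex coordinates."""
    v = np.asarray(vertices, dtype=float)
    homogeneous = np.concatenate([v, np.ones(v.shape[:-1] + (1,))], axis=-1)
    return (homogeneous @ matrix.T)[..., :3]
```

Because the function accepts any array whose last axis has length 3, a whole (T, 3, 3) triangle array from an STL file can be transformed in one call.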

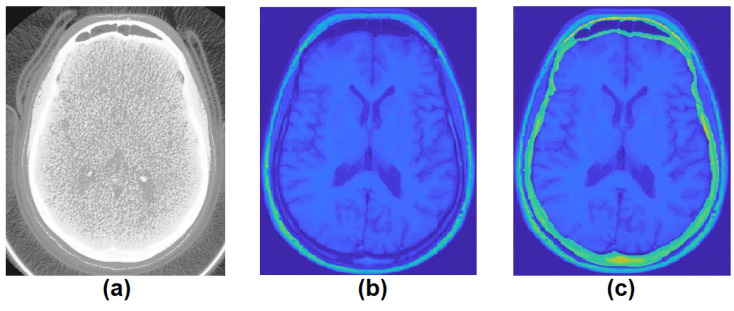

Figure 3.

(a) CT scan, (b) MRI, and (c) STL model extracted from CT scan overlapped in MRI.

Finally, all images were normalized to the range [0, 1] and then resized to 256 × 256 using nearest-neighbor interpolation to reduce processing time.
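A minimal sketch of this preprocessing step, assuming per-image min-max scaling (the exact normalisation formula is not stated) and operating on one 2D slice at a time; `normalise_and_resize` is a hypothetical helper. Nearest-neighbour sampling copies existing pixel values rather than averaging them, so a binary label map resized the same way stays binary.

```python
import numpy as np


def normalise_and_resize(slice_2d, size=(256, 256)):
    """Min-max normalise a 2D slice to [0, 1], then resize it by nearest-neighbour sampling."""
    img = np.asarray(slice_2d, dtype=float)
    lo, hi = img.min(), img.max()
    img = (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)
    # Map each output pixel centre back to the input pixel that contains it.
    rows = np.minimum(((np.arange(size[0]) + 0.5) * img.shape[0] / size[0]).astype(int), img.shape[0] - 1)
    cols = np.minimum(((np.arange(size[1]) + 0.5) * img.shape[1] / size[1]).astype(int), img.shape[1] - 1)
    return img[np.ix_(rows, cols)]
```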

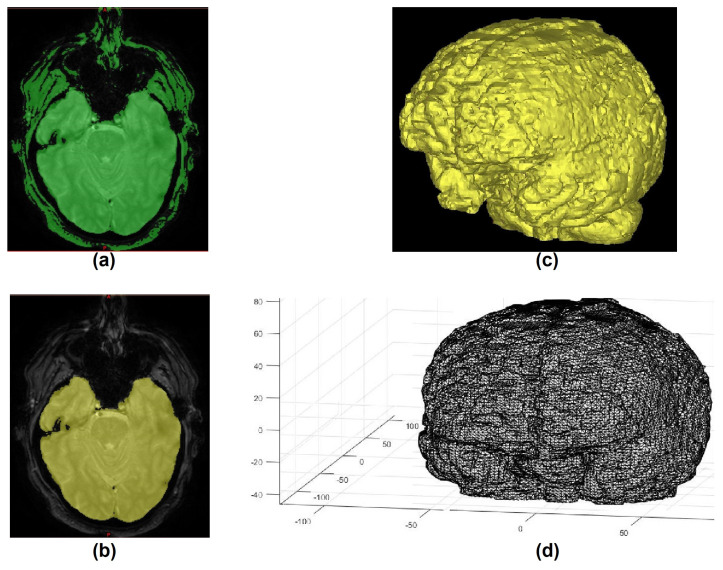

2.3. Data Processing II

To obtain a second comparison that could improve accuracy, the information content of the dataset was reduced by removing regions of non-interest, namely the gray and white matter. To do so, 62 volumetric MR images were randomly chosen, and brain gold standard labels were created from them in a manner similar to that explained in Section 2.2. Brain labels are straightforward to create from MRI because the brain is easily identified in MR images. Processing starts with thresholding, followed by region growing, and then the creation and extraction of the STL models from the MRI (Figure 4).

Figure 4.

(a) thresholding applied in MRI, (b) region growing, (c) 3D mesh model (STL model), and (d) STL model converted into matrix.

2.4. CNN Architecture and Implementation Details

The deep learning framework chosen in this paper is UNet, introduced by Ronneberger et al. [14]. This type of CNN was chosen because it works well with very few training images, yields precise segmentations, and has been used in a number of recent biomedical image segmentation applications [5,9,10,11,40]. The network keeps a large number of feature channels in the upsampling path, which helps propagate context information to the higher-resolution layers. As a result, the expansive path is more or less symmetric to the contracting path, yielding a U-shaped architecture.

In our implementation, we adopted a 3D UNet originally developed for brain tumor segmentation in MRI [41]. To counter the class imbalance that affects a conventional cross-entropy loss, a weighted multiclass Dice loss function was used as the overall loss of the network [42]. Table 1 shows the implementation parameters chosen for skull segmentation. The parameters were chosen to avoid computational errors, improve the robustness and generalization ability of the CNN, and obtain good accuracy on the training set explored in this work.
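A minimal sketch of a class-weighted multiclass Dice loss, assuming the generalised Dice formulation in which each class is weighted by the inverse square of its ground-truth volume; whether [42] uses exactly this weighting is an assumption, and `weighted_dice_loss` is a hypothetical helper. With a large background class and a thin skull class, such weights keep the skull term from being dominated by the background.

```python
import numpy as np


def weighted_dice_loss(probs, onehot, eps=1e-8):
    """Generalised (class-weighted) multiclass Dice loss.

    probs, onehot: arrays of shape (num_classes, ...) holding predicted class
    probabilities and the one-hot ground truth. Each class is weighted by the
    inverse square of its ground-truth volume, so a small class is not swamped.
    """
    p = np.asarray(probs, dtype=float)
    t = np.asarray(onehot, dtype=float)
    p = p.reshape(p.shape[0], -1)
    t = t.reshape(t.shape[0], -1)
    w = 1.0 / (t.sum(axis=1) ** 2 + eps)  # per-class weights
    intersection = (w * (p * t).sum(axis=1)).sum()
    union = (w * (p + t).sum(axis=1)).sum()
    return 1.0 - 2.0 * intersection / (union + eps)
```

A class that is absent from the ground truth receives a very large weight (about 1/eps), so practical implementations usually guard against empty classes.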

Table 1.

Skull Segmentation Implementation Details.

| Parameter | Value |
|---|---|
| Optimizer | Adam |
| Encoder Depth | 4 |
| Filter Size | 3 |
| Number of First Encoder Filters | 15 |
| Patch Per Image | 1 |
| Mini Batch Size | 128 |
| Initial Learning Rate | 5 × |

The CNN model was run on Intel i7-9700 (3.00 GHz) workstations with 64 GB of RAM and two NVIDIA graphics cards with 8 GB of VRAM each (RTX 2070 SUPER and RTX 2080). The code was implemented in MATLAB R2019b.

2.5. Model Performance Evaluation and Statistical Analysis

To evaluate the CNN segmentation, the Dice similarity coefficient (DSC) [43], symmetric volume difference (SVD) [44], Jaccard similarity coefficient (JSC) [45], volumetric overlap error (VOE) [46], and Hausdorff distance (HD) [47] were used.

The Dice similarity coefficient is a spatial overlap index that ranges from 0, indicating no spatial overlap between two binary segmentations, to 1, indicating complete overlap [48]. SVD is the corresponding Dice-based error measure, quantifying the symmetric difference between the segmentation and the ground truth. JSC is a similarity ratio defined as the intersection of the ground truth and machine segmentation regions over their union; it ranges from 0% to 100% similarity. VOE is the error measure corresponding to JSC. Finally, to measure segmentation accuracy in terms of the distance between the predicted segmentation boundary and the ground truth, the Hausdorff distance based on Euclidean distance is used.
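For a predicted mask A and a ground-truth mask B, the overlap measures above can be written as DSC = 2|A ∩ B| / (|A| + |B|) and JSC = |A ∩ B| / |A ∪ B|, while HD is the larger of the two directed distances (the maximum over points of one set of the distance to the nearest point of the other). The sketch below assumes SVD = 1 − DSC and VOE = 1 − JSC, which is consistent with the DSC and SVD columns of Table 6 differing only in sign, and computes HD over all foreground voxel coordinates rather than extracted boundaries, a simplification that can give different values from boundary-based implementations. `overlap_metrics` and `hausdorff` are hypothetical helpers.

```python
import numpy as np


def overlap_metrics(pred, truth):
    """DSC, SVD, JSC and VOE for two binary masks (assumed not both empty)."""
    a = np.asarray(pred, dtype=bool)
    b = np.asarray(truth, dtype=bool)
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    dsc = 2.0 * inter / (a.sum() + b.sum())
    jsc = inter / union
    return {"DSC": dsc, "SVD": 1.0 - dsc, "JSC": jsc, "VOE": 1.0 - jsc}


def hausdorff(pred, truth, spacing=(1.0, 1.0, 1.0)):
    """Symmetric Euclidean Hausdorff distance between the foreground voxels of two non-empty masks."""
    pa = np.argwhere(pred) * np.asarray(spacing)
    pb = np.argwhere(truth) * np.asarray(spacing)
    d = np.sqrt(((pa[:, None, :] - pb[None, :, :]) ** 2).sum(axis=-1))  # all pairwise distances
    return max(d.min(axis=1).max(), d.min(axis=0).max())
```

The brute-force distance matrix grows as |A| × |B|, so for full-resolution volumes a boundary-only point set or SciPy's `scipy.spatial.distance.directed_hausdorff` would be more practical.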

3. Results and Discussion

3.1. Performance Analysis

The 58 volumetric CT and MR images were randomly split into 49 for training and 9 for validation/testing. Table 2 presents the statistical analysis of the 9 test images after training and testing 10 times for 600 epochs. DSCs, SVDs, JSCs, VOEs, and HDs were calculated from the gold standard labels and predicted labels. The skull DSCs range from 0.6847 to 0.8056, with a mean ± SD of 0.7300 ± 0.04, and the background DSCs range from 0.9654 to 0.9833, with a mean ± SD of 0.9740 ± 0.007.

Table 2.

Statistical Analysis of the first dataset.

| DSC (Skull) | DSC (Background) | SVD (Skull) | JSC (Skull) | JSC (Background) | VOE (Skull) | HD (Skull) |
|---|---|---|---|---|---|---|

To improve the results by removing regions of non-interest, a second 3D UNet, for brain segmentation, was trained using the same software and equipment as before. The two approaches differ in the image modality from which the gold standard is created and in the 3D UNet parameters. The brain segmentation approach does not require overlaying CT and MRI scans to create MRI gold standard labels; instead, it creates the labels from the volumetric MRI itself, through the same processing presented previously.

Using the same TCIA data collections, 62 different volumetric MRI were used to create the brain dataset, of which 53 were used for training, 3 for validation, and 6 for testing. Table 3 shows the CNN implementation details for brain segmentation, and Table 4 presents the statistical analysis of the 6 test images after 10 rounds of testing. DSCs, SVDs, JSCs, VOEs, and HDs were calculated from the brain gold standard labels and the predicted brain labels.

Table 3.

Brain Segmentation Implementation Details.

| Parameter | Value |
|---|---|
| Optimizer | Adam |
| Encoder Depth | 3 |
| Filter Size | 5 |
| Number of First Encoder Filters | 7 |
| Patch Per Image | 2 |
| Mini Batch Size | 128 |
| Initial Learning Rate | |

Table 4.

Statistical Analysis of the brain segmentation.

| DSC (Brain) | DSC (Background) | SVD (Brain) | JSC (Brain) | JSC (Background) | VOE (Brain) | HD (Brain) |
|---|---|---|---|---|---|---|

These results show a mean accuracy of 0.9244 ± 0.04; however, the brain must be extracted as completely as possible. Therefore, after the CNN was applied to the 58 initial volumetric MRI, the labels it generated were manually corrected using the MATLAB program created by [49] to optimize brain removal.

After the gray and white matter were removed, the modified 58 volumetric images (49 for training and 9 for validation/testing) were presented to the CNN using the same parameters shown in Table 1; the statistical analysis of the 9 test images is shown in Tables 5 and 6.
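Conceptually, building dataset 2 amounts to masking each MR volume with its corrected brain label. A minimal sketch, assuming the brain voxels are simply set to a constant fill value of 0 (the darkest intensity after [0, 1] normalisation); the study does not state which fill value was used, and `remove_brain` is a hypothetical helper. The skull labels themselves are left unchanged.

```python
import numpy as np


def remove_brain(mri, brain_mask, fill_value=0.0):
    """Return a copy of the MRI volume with brain voxels replaced by fill_value."""
    out = np.array(mri, dtype=float, copy=True)  # leave the original volume untouched
    out[np.asarray(brain_mask, dtype=bool)] = fill_value
    return out
```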

Table 5.

Statistical Analysis of the second dataset.

| DSC (Skull) | DSC (Background) | SVD (Skull) | JSC (Skull) | JSC (Background) | VOE (Skull) | HD (Skull) |
|---|---|---|---|---|---|---|

Table 6.

Differences between Dataset 2 and Dataset 1 (Dataset 2 minus Dataset 1).

| DSC (Skull) | DSC (Background) | SVD (Skull) | JSC (Skull) | JSC (Background) | VOE (Skull) | HD (Skull) |
|---|---|---|---|---|---|---|
| 0.0231 | 0.0024 | −0.0231 | 0.0331 | 0.0046 | −0.0331 | −8.36 |
| 0.0390 | 0.0040 | −0.0390 | 0.0533 | 0.0077 | −0.0533 | −12.43 |
| 0.0371 | 0.0038 | −0.0371 | 0.0503 | 0.0074 | −0.0503 | −3.09 |
| 0.0686 | 0.0060 | −0.0686 | 0.0909 | 0.0116 | −0.0909 | −8.96 |
| 0.0727 | 0.0081 | −0.0727 | 0.0937 | 0.0155 | −0.0937 | −21.68 |
| 0.0573 | 0.0086 | −0.0573 | 0.0711 | 0.0162 | −0.0711 | −5.29 |
| 0.0687 | 0.0083 | −0.0687 | 0.0850 | 0.0157 | −0.0850 | −13.91 |
| 0.0616 | 0.0099 | −0.0616 | 0.0755 | 0.0187 | −0.0755 | −15.94 |
| 0.0463 | 0.0065 | −0.0463 | 0.0555 | 0.0124 | −0.0555 | −9.94 |

Tables 5 and 6 indicate that reducing the information contained in the images, here by removing the brain, helps improve the segmentation of the skull bones. The DSC improvement ranged from 2.31% to 7.27% for the skull and from 0.24% to 0.99% for the background, indicating that removing this information directly affects skull segmentation. Overall, the mean DSC for skull and background improved from 0.7300 ± 0.04 and 0.9740 ± 0.007 to 0.7826 ± 0.03 and 0.9804 ± 0.004, respectively, with a decrease in the standard deviation.
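The ranges quoted above can be checked directly against Table 6. The short sketch below recomputes them from the two DSC columns and also shows that the mean per-image difference agrees, up to rounding, with the change in mean DSC (0.7826 − 0.7300 for the skull and 0.9804 − 0.9740 for the background).

```python
import numpy as np

# Per-image DSC differences (Dataset 2 minus Dataset 1), copied from Table 6.
dsc_skull_diff = np.array([0.0231, 0.0390, 0.0371, 0.0686, 0.0727, 0.0573, 0.0687, 0.0616, 0.0463])
dsc_background_diff = np.array([0.0024, 0.0040, 0.0038, 0.0060, 0.0081, 0.0086, 0.0083, 0.0099, 0.0065])

for name, diff in [("skull", dsc_skull_diff), ("background", dsc_background_diff)]:
    # Range and mean of the per-image improvements, expressed in percent of DSC.
    print(f"{name}: {100 * diff.min():.2f}% to {100 * diff.max():.2f}%, mean {100 * diff.mean():.2f}%")
# skull: 2.31% to 7.27%, mean 5.27%
# background: 0.24% to 0.99%, mean 0.64%
```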

The DSCs from Table 2 in the present study are comparable to or marginally lower than those reported in [15,16,17,18], which achieved DSCs of (0.7346, 0.6918, 0.6337), 0.75, 0.75, and (0.7344, 0.7446), respectively, and lower than those reported by [21] (0.76 and 0.80). However, differences between these studies must be interpreted with caution because of differences in the databases, computational methods, and so forth.

This discrepancy may be attributed to the size of the dataset used. Refs. [40,50] reported mean Dice coefficients of 0.9189 and 0.9800 for CNN-based CT skull segmentation with datasets of 195 and 199 images, respectively, and [50] attributed this high DSC to the dataset size and image resolution when compared with another study [25]. Thus, the size of the dataset may contribute to higher DSC values. In addition, the geometric disparities, variations, and deformities between skulls become more evident as the dataset grows. As the related works used small datasets, this aspect may have contributed to the high DSCs they reported.

Furthermore, we used four distinct datasets [31,32,35,36] acquired with different CT and MRI devices and a variety of parameters. These datasets include variations in age, ethnicity, and medical history. In addition, several patients had undergone surgical treatment of the head, which may have altered the skeletal structure of the skull; in total, 40 images have part of the skull removed due to brain abnormalities. These removals may have affected segmentation performance.

Table 6 shows that, relative to the initial values (Dataset 1), JSC increased and HD decreased, while the error measures SVD and VOE decreased; all of these changes indicate better segmentation. A plausible explanation is that, with the brain removed, there is less opportunity for mismatch between the ground truth and the predicted segmentation in the brain region.

One drawback of the presented method lies in the creation of the gold standard STL models. An expert manually corrected the models by edge smoothing or noise residue removal, which may cause the CNN to learn any defects the expert introduced. Furthermore, during the conversion from STL model into label (voxelisation), some skull voxels may have been erroneously labeled as background. Another disadvantage concerns the number of training images: acquiring usable CT and MR data from the same patient is difficult because of alignment issues, and is commonly limited by ethical and privacy considerations and regulations.

The results in this article contribute to the long-standing search for a technique for bone segmentation in MRI; however, the DSC of the proposed method (0.7826 ± 0.03) does not exceed the performance of current CT techniques (DSC of 0.9189 ± 0.0162 [40], 0.9200 ± 0.0400 [25], and 0.9800 ± 0.013 [50]).

3.2. Comparison between UNet, UNet++, and UNet3+

Further comparison is needed to see how the DSC behaves across different deep learning methods. UNet was compared with UNet++ [51], an encoder-decoder network in which the encoder and decoder sub-networks are connected through a series of dense skip pathways, and UNet3+ [52], a deep learning approach that uses full-scale aggregated feature maps to learn hierarchical representations, combining low-level details with high-level semantics from feature maps at various scales. Table 7 compares the UNet, UNet++, and UNet3+ architectures in terms of segmentation accuracy, measured by the Dice similarity coefficient, on datasets 1 and 2. The parameters for each CNN were identical: an encoder depth of 3, a mini-batch size of 16, an initial learning rate of 0.005, and training for 100 epochs.

Table 7.

Comparison between UNet, UNet++, and UNet3+ in four samples.

| | Dataset 1 | | | Dataset 2 | | |
|---|---|---|---|---|---|---|
| Samples | UNet | UNet++ | UNet3+ | UNet | UNet++ | UNet3+ |
| A | | | | | | |
| B | | | | | | |
| C | | | | | | |
| D | | | | | | |

As seen in Table 7, UNet3+ outperformed UNet and UNet++, with average improvements over UNet of 1.51% and 0.26% on datasets 1 and 2, respectively. UNet took about an hour to train for 100 epochs, whereas UNet3+ took about 2.5 times longer. Therefore, where the longer training time is acceptable, UNet3+ may be used to slightly improve segmentation results.

4. Conclusions

This study presents a 3D CNN developed for skull segmentation in MRI, in which the training labels were derived from CT scans of the same patients in the standard tessellation language format. The method initially achieved a DSC of 0.7300 ± 0.04 for the skull and 0.9740 ± 0.007 for the background; after removal of the gray and white matter, the mean DSC reached 0.7826 ± 0.03 and 0.9804 ± 0.004, respectively. Because of the limited number of datasets tested, further research may be undertaken to improve the mean DSC. In summary, the present method is a step toward CNN-based bone extraction in MRI, with the goal of reaching average DSC rates similar to those obtained in CT scans.

Acknowledgments

Data used in this publication were generated by the National Cancer Institute Clinical Proteomic Tumor Analysis Consortium (CPTAC). The results shown in this paper are in part based upon data generated by the TCGA Research Network http://cancergenome.nih.gov/ (accessed on 1 March 2021).

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CNN | convolutional neural network |
| CR | computed radiography |
| cRBM | convolutional restricted Boltzmann machine |
| CSF | cerebro-spinal fluid |
| CT | computed tomography |
| DSC | Dice similarity coefficient |
| FN | false negatives |
| FP | false positives |
| GB | gigabyte |
| HD | Hausdorff distance |
| JSC | Jaccard similarity coefficient |
| MRI | magnetic resonance imaging |
| PT or PET | positron emission tomography |
| ROI | regions of interest |
| RT | radiotherapy |
| RTDOSE | radiotherapy dose |
| RTPLAN | radiotherapy plan |
| RTSTRUCT | radiotherapy structure set |
| SC | secondary capture |
| SD | standard deviation |
| SPM8 | statistical parametric mapping 8 |
| STL | standard tessellation language |
| SVD | symmetric volume difference |
| SVM | support vector machine |
| TP | true positives |
| VOE | volumetric overlap error |
| VRAM | video random access memory |

Author Contributions

Conceptualization, R.D.C.d.S.; Project administration, T.R.J.; Writing—review and editing, V.A.C. All authors have read and agreed to the published version of the manuscript.

Funding

No external funding was used for this study.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets presented in this study are openly available at TCIA: https://www.cancerimagingarchive.net/ (accessed on 1 March 2021).

Conflicts of Interest

The authors declare no conflict of interest.

Disclosure Statement

This study does not contain any experiments with human participants performed by any of the authors.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Meulepas J.M., Ronckers C.M., Smets A.M., Nievelstein R.A., Gradowska P., Lee C., Jahnen A., van Straten M., de Wit M.C.Y., Zonnenberg B., et al. Radiation Exposure From Pediatric CT Scans and Subsequent Cancer Risk in the Netherlands. JNCI J. Natl. Cancer Inst. 2018;111:256–263. doi: 10.1093/jnci/djy104.

- 2. Migimatsu T., Wetzstein G. Automatic MRI Bone Segmentation. 2015. Unpublished.

- 3. Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M., Van Der Laak J.A., Van Ginneken B., Sánchez C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 4. Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012;25:1097–1105. doi: 10.1145/3065386.

- 5. Kolařík M., Burget R., Uher V., Říha K., Dutta M.K. Optimized High Resolution 3D Dense-U-Net Network for Brain and Spine Segmentation. Appl. Sci. 2019;9:404. doi: 10.3390/app9030404.

- 6. Ker J., Singh S.P., Bai Y., Rao J., Lim T., Wang L. Image Thresholding Improves 3-Dimensional Convolutional Neural Network Diagnosis of Different Acute Brain Hemorrhages on Computed Tomography Scans. Sensors. 2019;19:2167. doi: 10.3390/s19092167.

- 7. Livne M., Rieger J., Aydin O.U., Taha A.A., Akay E.M., Kossen T., Sobesky J., Kelleher J.D., Hildebr K., Frey D., et al. A U-Net Deep Learning Framework for High Performance Vessel Segmentation in Patients with Cerebrovascular Disease. Front. Neurosci. 2019;13. doi: 10.3389/fnins.2019.00097.

- 8. Hwang H., Rehman H.Z.U., Lee S. 3D U-Net for Skull Stripping in Brain MRI. Appl. Sci. 2019;9:569. doi: 10.3390/app9030569.

- 9. Ambellan F., Tack A., Ehlke M., Zachow S. Automated segmentation of knee bone and cartilage combining statistical shape knowledge and convolutional neural networks. Med. Image Anal. 2019;52:109–118. doi: 10.1016/j.media.2018.11.009.

- 10. Dong N., Li W., Roger T., Jianfu L., Peng Y., James X., Dinggang S., Qian W., Yinghuan S., Heung-Il S., et al. Segmentation of Craniomaxillofacial Bony Structures from MRI with a 3D Deep-Learning Based Cascade Framework. Mach. Learn. Med. Imaging. 2017;10541:266–273. doi: 10.1007/978-3-319-67389-9_31.

- 11. Deniz C., Siyuan X., Hallyburton S., Welbeck A., Babb J., Honig S., Cho K., Chang G. Segmentation of the Proximal Femur from MR Images Using Deep Convolutional Neural Networks. Sci. Rep. 2018;8. doi: 10.1038/s41598-018-34817-6.

- 12. Chen C., Qin C., Qiu H., Tarroni G., Duan J., Bai W., Rueckert D. Deep Learning for Cardiac Image Segmentation: A Review. Front. Cardiovasc. Med. 2020;7. doi: 10.3389/fcvm.2020.00025.

- 13. Garcia-Garcia A., Orts-Escolano S., Oprea S., Villena-Martinez V., Garcia-Rodriguez J. A review on deep learning techniques applied to semantic segmentation. arXiv. 2017. arXiv:1704.06857.

- 14.Ronneberger O., Fischer P., Brox T. Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015. Springer; Cham, Switzerland: 2015. U-Net: Convolutional Networks for Biomedical Image Segmentation; pp. 234–241. [DOI] [Google Scholar]

- 15.Dogdas B., Shattuck D., Leahy R. Segmentation of skull and scalp in 3-D human MRI using mathematical morphology. Hum. Brain Mapp. 2005;26:273–285. doi: 10.1002/hbm.20159. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Wang D., Shi L., Chu W., Cheng J., Heng P. Segmentation of human skull in MRI using statistical shape information from CT data. J. Magn. Reson. Imaging. 2009;30:490–498. doi: 10.1002/jmri.21864.

- 17. Sjölund J., Järlideni A., Andersson M., Knutsson H., Nordström H. Skull Segmentation in MRI by a Support Vector Machine Combining Local and Global Features; Proceedings of the 22nd International Conference on Pattern Recognition; Stockholm, Sweden. 24–28 August 2014; pp. 3274–3279.

- 18. Puonti O., Leemput K., Nielsen J., Bauer C., Siebner H., Madsen K., Thielscher A. Proceedings of the Medical Imaging 2018: Image Processing. Volume 10574. International Society for Optics and Photonics; Bellingham, WA, USA: 2018. Skull segmentation from MR scans using a higher-order shape model based on convolutional restricted Boltzmann machines.

- 19. Ashburner J., Friston K.J. Unified Segmentation. NeuroImage. 2005;26:839–851. doi: 10.1016/j.neuroimage.2005.02.018.

- 20. UCL Queen Square Institute of Neurology. Statistical Parametric Mapping (SPM8). Available online: https://www.fil.ion.ucl.ac.uk/spm/software/spm8/ (accessed on 17 September 2020).

- 21. Nielsen J.D., Madsen K.H., Puonti O., Siebner H.R., Bauer C., Madsen C.G., Saturnino G.B., Thielscher A. Automatic skull segmentation from MR images for realistic volume conductor models of the head: Assessment of the state-of-the-art. NeuroImage. 2018;174:587–598. doi: 10.1016/j.neuroimage.2018.03.001.

- 22. Smith S.M., Jenkinson M., Woolrich M.W., Beckmann C.F., Behrens T.E., Johansen-Berg H., Bannister P.R., De Luca M., Drobnjak I., Flitney D.E., et al. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage. 2004;23:S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- 23. UCL Queen Square Institute of Neurology. Statistical Parametric Mapping (SPM12). Available online: https://www.fil.ion.ucl.ac.uk/spm/software/spm12/ (accessed on 17 February 2021).

- 24. Yamashita R., Nishio M., Do R., Togashi K. Convolutional Neural Networks: An overview and application in radiology. Insights Imaging. 2018;9:611–629. doi: 10.1007/s13244-018-0639-9.

- 25. Minnema J., Eijnatten M., Kouw W., Diblen F., Mendrik A., Wolff J. CT Image Segmentation of Bone for Medical Additive Manufacturing using a Convolutional Neural Network. Comput. Biol. Med. 2018;103:130–139. doi: 10.1016/j.compbiomed.2018.10.012.

- 26. Ferraiuoli P., Taylor J.C., Martin E., Fenner J.W., Narracott A.J. The Accuracy of 3D Optical Reconstruction and Additive Manufacturing Processes in Reproducing Detailed Subject-Specific Anatomy. J. Imaging. 2017;3. doi: 10.3390/jimaging3040045.

- 27. Im C.H., Park J.M., Kim J.H., Kang Y.J., Kim J.H. Assessment of Compatibility between Various Intraoral Scanners and 3D Printers through an Accuracy Analysis of 3D Printed Models. Materials. 2020;13:4419. doi: 10.3390/ma13194419.

- 28. Di Fiore A., Stellini E., Savio G., Rosso S., Graiff L., Granata S., Monaco C., Meneghello R. Assessment of the Different Types of Failure on Anterior Cantilever Resin-Bonded Fixed Dental Prostheses Fabricated with Three Different Materials: An In Vitro Study. Appl. Sci. 2020;10. doi: 10.3390/app10124151.

- 29. Zubizarreta-Macho Á., Triduo M., Pérez-Barquero J.A., Guinot Barona C., Albaladejo Martínez A. Novel Digital Technique to Quantify the Area and Volume of Cement Remaining and Enamel Removed after Fixed Multibracket Appliance Therapy Debonding: An In Vitro Study. J. Clin. Med. 2020;9:1098. doi: 10.3390/jcm9041098.

- 30. Clark K., Vendt B., Smith K., Freymann J., Kirby J., Koppel P., Moore S., Phillips S., Maffitt D., Pringle M., et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging. 2013;26:1045–1057. doi: 10.1007/s10278-013-9622-7.

- 31. National Cancer Institute Clinical Proteomic Tumor Analysis Consortium (CPTAC). Radiology Data from the Clinical Proteomic Tumor Analysis Consortium Glioblastoma Multiforme [CPTAC-GBM] Collection [Dataset]. Cancer Imaging Arch. 2018. doi: 10.7937/k9/tcia.2018.3rje41q1.

- 32. Grossberg A., Elhalawani H., Mohamed A., Mulder S., Williams B., White A.L., Zafereo J., Wong A.J., Berends J.E., AboHashem S., et al. Anderson Cancer Center Head and Neck Quantitative Imaging Working Group HNSCC [Dataset]. Cancer Imaging Arch. 2020. doi: 10.7937/k9/tcia.2020.a8sh-7363.

- 33. Grossberg A., Mohamed A., Elhalawani H., Bennett W., Smith K., Nolan T., Williams B., Chamchod S., Heukelom J., Kantor M., et al. Imaging and Clinical Data Archive for Head and Neck Squamous Cell Carcinoma Patients Treated with Radiotherapy. Sci. Data. 2018;5:180173. doi: 10.1038/sdata.2018.173.

- 34. Elhalawani H., Mohamed A.S., White A.L., Zafereo J., Wong A.J., Berends J.E., AboHashem S., Williams B., Aymard J.M., Kanwar A., et al. Matched computed tomography segmentation and demographic data for oropharyngeal cancer radiomics challenges. Sci. Data. 2017;4:170077. doi: 10.1038/sdata.2017.77.

- 35. Zuley M.L., Jarosz R., Kirk S., Lee Y., Colen R., Garcia K., Aredes N.D. Radiology Data from The Cancer Genome Atlas Head-Neck Squamous Cell Carcinoma [TCGA-HNSC] collection. Cancer Imaging Arch. 2016. doi: 10.7937/K9/TCIA.2016.LXKQ47MS.

- 36. Kinahan P., Muzi M., Bialecki B., Coombs L. Data from ACRIN-FMISO-Brain. Cancer Imaging Arch. 2018. doi: 10.7937/K9/TCIA.2018.vohlekok.

- 37. Gerstner E.R., Zhang Z., Fink J.R., Muzi M., Hanna L., Greco E., Prah M., Schmainda K.M., Mintz A., Kostakoglu L., et al. ACRIN 6684: Assessment of Tumor Hypoxia in Newly Diagnosed Glioblastoma Using 18F-FMISO PET and MRI. Clin. Cancer Res. 2016;22:5079–5086. doi: 10.1158/1078-0432.CCR-15-2529.

- 38. Ratai E.M., Zhang Z., Fink J., Muzi M., Hanna L., Greco E., Richards T., Kim D., Andronesi O.C., Mintz A., et al. ACRIN 6684: Multicenter, phase II assessment of tumor hypoxia in newly diagnosed glioblastoma using magnetic resonance spectroscopy. PLoS ONE. 2018;13. doi: 10.1371/journal.pone.0198548.

- 39. Pati S., Ravi B. Voxel-based representation, display and thickness analysis of intricate shapes; Proceedings of the Ninth International Conference on Computer Aided Design and Computer Graphics (CAD-CG’05); Hong Kong, China. 7–10 December 2005.

- 40. Dalvit Carvalho da Silva R., Jenkyn T.R., Carranza V.A. Convolutional Neural Network and Geometric Moments to Identify the Bilateral Symmetric Midplane in Facial Skeletons from CT Scans. Biology. 2021;10:182. doi: 10.3390/biology10030182.

- 41. Çiçek Ö., Abdulkadir A., Lienkamp S., Brox T., Ronneberger O. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation; Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016, Lecture Notes in Computer Science; Athens, Greece. 17–21 October 2016.

- 42. Sudre C., Li W., Vercauteren T., Ourselin S., Cardoso M. Generalised Dice Overlap as a Deep Learning Loss Function for Highly Unbalanced Segmentations; Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: Third International Workshop; Quebec City, QC, Canada. 14 September 2017; pp. 240–248.

- 43. Dice L. Measures of the Amount of Ecologic Association Between Species. Ecology. 1945;26:297–302. doi: 10.2307/1932409.

- 44. Schenk A., Prause G., Peitgen H.O. Efficient semiautomatic segmentation of 3D objects in medical images; Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2000, Lecture Notes in Computer Science, 2000; Pittsburgh, PA, USA. 11–14 October 2000; pp. 186–195.

- 45. Jaccard P. Distribution de la flore alpine dans le bassin des Dranses et dans quelques régions voisines. Bull. Soc. Vaudoise Des Sci. Nat. 1901;37:241–272. doi: 10.5169/seals-266440.

- 46. Rusko L., Bekes G., Fidrich M. Automatic segmentation of the liver from multi- and single-phase contrast-enhanced CT images. Med. Image Anal. 2009;13:871–882. doi: 10.1016/j.media.2009.07.009.

- 47. Karimi D., Salcudean S. Reducing the Hausdorff Distance in Medical Image Segmentation With Convolutional Neural Networks. IEEE Trans. Med. Imaging. 2020;39:499–513. doi: 10.1109/TMI.2019.2930068.

- 48. Zou K.H., Warfield S.K., Bharatha A., Tempany C.M., Kaus M.R., Haker S.J., Wells W.M., III, Jolesz F.A., Kikinis R. Statistical validation of image segmentation quality based on a spatial overlap index. Acad. Radiol. 2004;11:178–189. doi: 10.1016/S1076-6332(03)00671-8.

- 49. Wang G. Paint on an BW Image (Updated Version), MATLAB Central File Exchange. Available online: https://www.mathworks.com/matlabcentral/fileexchange/32786-paint-on-an-bw-image-updated-version (accessed on 10 September 2020).

- 50. Kodym O., Španěl M., Herout A. Segmentation of defective skulls from CT data for tissue modelling. arXiv. 2019;1911.08805.

- 51. Zhou Z., Siddiquee M.M.R., Tajbakhsh N., Liang J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In: Stoyanov D., editor. Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. DLMIA 2018, ML-CDS 2018. Lecture Notes in Computer Science. Volume 11045. Springer; Cham, Switzerland: 2018.

- 52. Huang H., Lin L., Tong R., Hu H., Zhang Q., Iwamoto Y., Han X., Chen Y.W., Wu J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation; Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); Barcelona, Spain. 4–8 May 2020; pp. 1055–1059.


Data Availability Statement

The datasets presented in this study are openly available from The Cancer Imaging Archive (TCIA) at https://www.cancerimagingarchive.net/ (accessed on 1 March 2021).