Abstract

We analyze the impact of common stereoscopic 3D (S3D) depth distortions on S3D optic flow in virtual reality (VR) environments. The depth distortion is introduced by mismatches between the image acquisition and display parameters. The results show that such S3D distortions induce large S3D optic flow distortions and may even induce partial or full optic flow reversal within a certain depth range, depending on the viewer’s moving speed and the magnitude of the S3D distortion introduced. We hypothesize that the S3D optic flow distortion may be a source of intra-sensory conflict that contributes to visually induced motion sickness (VIMS) in S3D.

Introduction

With the recent growth of interest in virtual reality (VR), many solutions to the visual discomfort that occurs while exploring VR worlds presented in stereoscopic 3D (S3D) have been proposed. Such discomfort symptoms are considered to be critical issues that may prevent potential users from embracing VR devices, as users who experience these symptoms may be reluctant to try the devices again. Our work presented here is motivated by the need to eliminate or reduce visually induced motion sickness (VIMS) in S3D systems.

The most common explanation for motion sickness symptoms is based on the inter-sensory motion conflict theory. When motion signals from different sensory systems, such as visual and vestibular, are conflicting with each other, they may cause motion sickness. This theory has been used to explain many real-world motion sickness experiences such as carsickness and seasickness. This theory also may explain motion sickness induced in VR, as users are usually stationary while exploring VR worlds with full virtual and visual motion.

However, there is currently no tested theory or data to explain the finding that S3D presentations induce more motion sickness than 2D presentations, even with the same content, and even though the visual information is considered to be truthfully replicated in S3D.

We have been exploring explanations based on the intra-sensory motion conflict, where the visual motion information does not match with the naturally expected visual motion information. We propose that such intra-sensory conflict could increase overall motion sickness by adding another layer of conflict on top of the existing motion conflict among sensory systems [1]. Since the perception of self-motion (vection) through the visual system is mainly driven by the optic flow of the presented scene [2], we hypothesize that discrepancies between the optic flow generated in S3D and the optic flow experienced in the real world may be a source of additional visually induced motion sickness (VIMS) in S3D VR.

Theories of motion sickness in VR

When a person walks and turns in the real world, his/her vestibular and proprioceptive sensory systems send motion signals to the brain in addition to the efferent copy of the motor commands, while the visual system monitors the optic flow, which confirms the individual’s current state of motion. However, when a person “walks” in a VR environment, only the optic flow indicates self-motion while the other sensory systems provide no motion signals. This conflict is believed to cause motion sickness.

The inter-sensory motion conflict theory [3, 4] states that conflicts between the motion signals received by two (or more) sensory systems, arising during the information integration process that determines the state of self-motion, cause motion sickness. Although this theory suggests a plausible underlying mechanism for the onset of motion sickness in VR, it does not explain the reduction of motion sickness symptoms through repeated exposure (habituation).

The sensory rearrangement theory [5, 6] expands on the inter-sensory motion conflict theory to include the concept of a comparator that matches current sensory inputs with the motion information expected or learned from prior experience. Repeated exposure to a certain type of motion signal conflict will eventually be registered as a new type of “normal” experience, such that the comparator no longer generates a motion conflict signal. One example of such a response is the vestibular gain adaptation under conditions of optical magnification [7–9].

The sensory rearrangement theory supports other possible causes of motion conflict, such as intra-sensory motion conflict [8], where the VIMS may be induced by a distorted optic flow that has never been experienced before or is not consistent with the expected optic flow.

Elevated VIMS in S3D compared to 2D viewing

Elevated VIMS symptoms resulting from S3D viewing compared to 2D viewing have been reported in numerous studies [10–17]. For example, in a study on motion sickness of movie viewers [12], one group watched a movie in 2D, while another group watched the same movie in S3D. More viewers in the S3D condition experienced motion sickness than did viewers in the 2D condition. This suggests that the stereoscopic depth added in the S3D viewing condition may lead to more VIMS.

Geometric S3D space distortions

Various image distortions in S3D [18, 19] have been discussed in the context of the accuracy of images projected to each eye (e.g. straight lines should not look curved). Other studies [1, 20] pointed out that the corresponding parameters between S3D capturing (or rendering) and displaying are frequently mismatched, and the mismatches introduce S3D space distortions that affect the perception of size-and-depth relations in the reconstructed world.

When such a space distortion is introduced, viewers may experience perceptual illusions such as those of the Alice in Wonderland syndrome [21], where the viewer perceives the displayed world to be either larger (macropsia), smaller (micropsia), closer (pelopsia), or farther (teleopsia) than it should be. However, if the viewer does not actively interact with the reconstructed S3D world (e.g. while watching a stationary scene), these percepts may not cause any motion sickness symptoms because other 2D depth cues such as perspective, size, texture, and occlusion correctly follow the distorted space, although the reconstructed scene may look unfamiliar or unnatural in some sense.

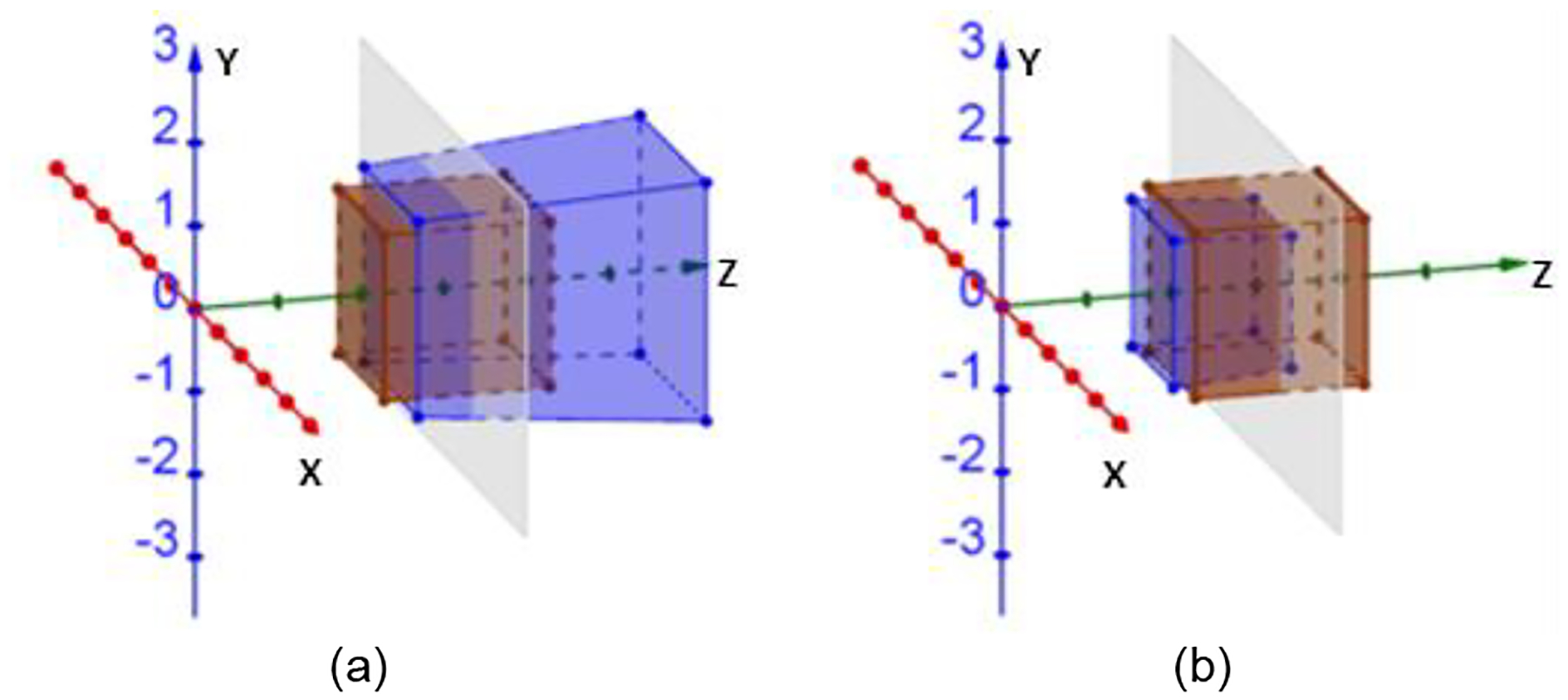

Different types of distortions can co-exist in a single S3D reconstruction and different levels of parameter mismatches may result in compounded non-linear changes of various distortion patterns. Fig. 1 shows a type of S3D distortion caused by mismatch between the virtual camera convergence distance and viewer screen distance. Various other parameter mismatches potentially causing S3D distortion were discussed and demonstrated in [20].

Figure 1.

Patterns of S3D space distortion when the virtual camera convergence distance and viewer screen distance are mismatched (from [20]). The brown cubes are orthoscopic representations of a cube located at the screen distance in the original virtual world. The gray plane represents the display screen. The purple cubes represent the reproductions of the cube when the virtual camera convergence distance is (a) shorter than the display screen distance, and (b) longer than the display screen distance. The cube will be displayed as an extended or compressed hexahedron, respectively.

Perception of motion in distorted S3D space

Although the modeling work described above showed various patterns of S3D distortion in a stationary scene, it did not address how such S3D space distortions affect the perception of self-motion in the S3D world. For instance, when the user moves forward in a distorted S3D space, objects at near or far distances seem to approach the viewer slower or faster than the speed expected from the natural depth-to-size relationship. Furthermore, since the monocular depth cues remain veridical in the stereo-depth-distorted scene, the objects may appear to be compressed or expanded along the depth direction (depth wobbling) as the user moves forward, which violates the natural stability of (apparently) rigid objects. In this case, the viewer will experience an optic flow that rarely happens in the real world.

Note that unlike the usual 2D projection distortions (e.g. lens distortions or keystone distortions), the stereo-disparity-based S3D spatial distortions are barely noticeable from a stationary viewpoint. Because the distortions occur in the depth direction, straight lines still appear straight in the distorted S3D world when it is observed from a stationary viewpoint.

In a pseudoscopic condition (i.e. reversed disparity condition), for example, where the left eye view is displayed to the right eye and the right eye view is displayed to the left eye, each individual view may show no 2D distortion, but when those images are viewed in stereo, the viewer sees an extreme (reversed) depth distortion if the stereo cues dominate the percept [22].

Distorted perception of self-motion and VIMS

We hypothesize that unfamiliar optic flow presented to the VR users in distorted S3D space may be a reason for an increased VIMS in S3D.

Similar issues may result from the prismatic (or other optical) effects of refractive ophthalmic lenses in real-world practice [23, 24]. For example, when people are fitted with new glasses that have a different optical power or lens design (i.e. base curve), they may need time to adapt to the new lenses (sometimes up to 2 weeks). During the adaptation period, they may experience motion sickness-like symptoms such as nausea and dizziness. Such symptoms may be much more severe and require a longer period of adaptation when the patients are fitted with progressive addition lenses, which introduce more complex and spatially variable geometric distortions. These distortions differ from the S3D distortions in that optical lenses may distort both the stereo depth and the monocular 2D images.

Since almost all S3D contents are produced by using a set of S3D parameters chosen by the producers and are not customized for each user or user’s display environment, S3D space distortion may be frequently introduced. In addition, since users spend a limited amount of time (e.g. 2–3 hours for watching a movie) with a reconstructed S3D world, it is expected that full adaptation to the S3D distortions will not be achieved in that period. The initial discomfort caused by VIMS may be severe enough to prevent further use, and thus fail to lead to habituation even if it is possible.

Here, we analyze and demonstrate the types of optic flow distortion that occur in such mismatched S3D.

Methods

Using the S3D space distortion models proposed in [20], we computed the S3D optic flow in distorted S3D worlds, which may occur while a VR user is in “motion”, and analyzed their effects.

The sample VR environment

Fig. 2 shows a schematic of the VR environment setup for the S3D optic flow analysis where 20 spherical objects are aligned along the forward depth direction 1m to the left side of the viewer’s path and spaced 1m apart (as if they are on the wall of a corridor, or a road with trees on one side). The viewer is assumed to move forward at 1m/s, 2m/s, or 3m/s. In this configuration, the objects initially cover the viewer’s lateral visual field from 2.8° (20th farthest object) to 45° (1st nearest object).

Figure 2.

Schematic of the VR world where objects are positioned along the depth direction and the viewer “moves” forward at 1m/s, 2m/s, or 3m/s.

Note that since the objects are aligned along the depth direction (i.e. the viewer’s moving direction), near and far objects are located at higher and lower eccentricity, respectively, where the vanishing point is located at the center of the visual field (i.e. eccentricity=0°). This setup simplifies the full-scale optic flow analysis into an analysis of the one-dimensional lateral motion of each object at different distances. For the full-scale optic flow, one may imagine that the indicated speed and space distortion occur along all radial directions from the viewer.
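The geometry of the sample world can be checked with a few lines of Python. This is a minimal sketch, assuming each object is treated as a point, the first object is 1m ahead of the viewer, and eccentricity is measured from the straight-ahead direction; the constant and function names are illustrative and do not come from the original analysis.

```python
import math

LATERAL_OFFSET_M = 1.0  # objects sit 1 m to the side of the viewer's path
SPACING_M = 1.0         # objects are spaced 1 m apart in depth
NUM_OBJECTS = 20        # assumption: the nearest object is 1 m ahead


def eccentricity_deg(depth_m, lateral_m=LATERAL_OFFSET_M):
    """Eccentricity of a point at the given depth and lateral offset."""
    return math.degrees(math.atan2(lateral_m, depth_m))


depths = [SPACING_M * (i + 1) for i in range(NUM_OBJECTS)]
eccentricities = [eccentricity_deg(z) for z in depths]

for z, ecc in zip(depths, eccentricities):
    print(f"depth {z:4.1f} m -> eccentricity {ecc:5.2f} deg")
```

Under these assumptions the nearest object lies at 45° and the farthest at roughly 2.9°, and the mapping from depth to eccentricity is clearly non-linear.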

Optic flow analysis for S3D depth distortion

In a conventional 2D optic flow diagram, the apparent object’s motion is described by the angular velocity of objects in the visual field. However, such 2D optic flow has a limited ability to illustrate individual object’s shape transformations in the depth direction.

For example, if virtual objects move toward the viewer along the radial axis while simultaneously shrinking in size following the inverse of the linear perspective ratio, their apparent size will not change. The eccentricity of the object will not change either because the object’s motion occurs along the same eccentricity. In this case, an ordinary 2D optic flow diagram shows no apparent motion in the visual field. However, even in that case, human stereoscopic vision fusing the two 2D optic flows can determine that the object is approaching because its angular disparity is increasing.
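The radial-approach example can be illustrated numerically. The sketch below is an assumption-laden illustration rather than the analysis used here: it takes a 6.5cm eye separation, treats the object as a point with a size proportional to its distance, and uses the angle subtended at the object by the two eyes as a stand-in for angular disparity. All names are hypothetical.

```python
import math

IPD_M = 0.065  # assumed eye separation


def vergence_deg(x, z, ipd=IPD_M):
    """Angle subtended at point (x, z) by the two eyes at (+-ipd/2, 0)."""
    to_left_eye = math.atan2(x + ipd / 2, z)
    to_right_eye = math.atan2(x - ipd / 2, z)
    return math.degrees(to_left_eye - to_right_eye)


def snapshot(radial_distance_m, ecc_deg, size_per_m=0.1):
    """Return (eccentricity, angular size, vergence) in degrees."""
    ecc = math.radians(ecc_deg)
    x = radial_distance_m * math.sin(ecc)
    z = radial_distance_m * math.cos(ecc)
    # The object shrinks in proportion to its distance from the viewer.
    size_m = size_per_m * radial_distance_m
    angular_size = math.degrees(2 * math.atan(size_m / (2 * radial_distance_m)))
    return math.degrees(math.atan2(x, z)), angular_size, vergence_deg(x, z)


far = snapshot(4.0, 20.0)
near = snapshot(2.0, 20.0)
print("far :", far)
print("near:", near)
```

The eccentricity and angular size are identical in the two snapshots, so a monocular 2D flow diagram registers no motion, while the binocular angle grows as the object approaches.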

To overcome this limitation of the conventional 2D optic flow diagram, the linear velocities of objects were computed in xyz Euclidean coordinates instead of angular velocities, and the computed velocities were then associated with the corresponding locations in the visual field. In this way, the speed changes in distorted depth (i.e. compressed or expanded space) can be compared with the object motions in S3D space without any depth distortion. An angular-velocity-based optic flow is still needed to fully describe the actual 3D motion of an object, but even without it, describing optic flow in linear speed is an effective way to show the impact of S3D depth distortion, because the S3D depth distortion does not affect the angular position of an object; it acts only along the depth direction.

Applying the S3D optic flow analysis

The S3D optic flow analysis was applied to the following S3D rendering and display related parameter pair mismatches between: 1) virtual camera separation and viewer eye separation, 2) camera field of view and virtual screen field of view, 3) camera convergence distance and virtual screen distance.

In a typical VR head-mounted display (HMD), for instance, the virtual camera and viewer eye separation mismatch may be introduced when an S3D scene is rendered with a virtual camera separation matching an average interpupillary distance (IPD) but shown to viewers with a smaller or larger IPD without any corresponding rendering adjustment. The camera and virtual screen field of view mismatch may be introduced when an S3D scene is rendered with fixed camera frustums but displayed on a virtual screen of a different size in the HMD hardware. The camera convergence and virtual screen distance mismatch may be introduced if the virtual cameras render the scene with a certain convergence distance but the virtual screen is located at a different distance.

Note that it is a common misconception that only the parallel-axis camera setup, but not the converging camera setup, properly renders the binocular scene, mainly driven by the concerns of inducing keystone distortions and accompanying vertical disparity [18]. The important point here is whether the camera axis and display screen orientation are matched or not [1]. If the convergence of camera (optical) axis is achieved by employing asymmetric frustums (for virtual-world) or a sensor-shift (for real-world), the captured/rendered images do not induce any 2D projection distortions when presented on fronto-parallel display screens.
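As an illustration of convergence through asymmetric frustums, the following sketch computes off-axis frustum bounds for a pair of parallel virtual cameras whose zero-disparity plane coincides with a fronto-parallel screen. It is a generic off-axis projection setup, not code from any particular HMD API; the function name, parameter names, and example values are assumptions.

```python
from typing import NamedTuple


class Frustum(NamedTuple):
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(eye_x, screen_width, screen_height, screen_distance, near):
    """Frustum bounds on the near plane for an eye offset by eye_x.

    The screen is centered on the midline and parallel to the image plane,
    so the camera axis stays perpendicular to it (no toe-in, no keystone).
    """
    scale = near / screen_distance
    left = (-screen_width / 2 - eye_x) * scale
    right = (screen_width / 2 - eye_x) * scale
    bottom = (-screen_height / 2) * scale
    top = (screen_height / 2) * scale
    return Frustum(left, right, bottom, top, near)


IPD_M = 0.065  # assumed camera separation
left_eye = off_axis_frustum(-IPD_M / 2, 1.0, 0.6, 2.0, 0.1)
right_eye = off_axis_frustum(+IPD_M / 2, 1.0, 0.6, 2.0, 0.1)
print(left_eye)
print(right_eye)
```

The two frustums are mirror images of each other, and both cover exactly the same screen rectangle, which is why the rendered pair converges at the screen distance without a rotated image plane.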

Those parameter mismatches do not require any display-viewer interactions. Therefore, eye movements within the S3D scene while the viewer is standing still do not affect the perception of the scene since the depth structure is maintained [1].

Results

S3D optic flow in orthoscopic reproduction

Fig. 3a shows the distance from the viewer to the objects and the visual eccentricity of the objects in the sample world of Fig. 2, where the nearer objects are located at higher eccentricities and farther objects are located at lower eccentricities. The distance-to-eccentricity relation in the sample VR world is non-linear in nature. For each of the objects, the displacement over a 0.1 s interval was computed for a viewer assumed to move forward at 1m/s, 2m/s, and 3m/s.

Figure 3.

(a) Apparent position of the objects in various depth, and (b) angular speed and (c) linear speed of the objects from the viewer perspective in an S3D world without any space distortion (i.e. orthoscopic reproduction). Viewer is assumed to move forward at 1m/s, 2m/s, and 3m/s.

In our sample VR world, as a viewer moves forward, the retinal projections of all objects move toward larger eccentricities, where the nearer objects (at larger eccentricities) appear to move faster than the farther objects (at smaller eccentricities), indicating that conventional motion parallax is in place (as shown in Fig. 3b).
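The motion parallax trend can be reproduced with a simple finite-difference calculation. This is a sketch under the same point-object assumptions as the sample world (objects 1m to the side, 1m to 20m ahead) and is not the code used to generate Fig. 3b; the function and variable names are illustrative.

```python
import math

LATERAL_OFFSET_M = 1.0  # lateral offset of the objects from the viewer's path
DT_S = 0.1              # interval over which displacement is measured


def angular_speed_deg_per_s(depth_m, viewer_speed_mps,
                            dt=DT_S, lateral_m=LATERAL_OFFSET_M):
    """Change in eccentricity per second while the viewer moves forward."""
    before = math.atan2(lateral_m, depth_m)
    after = math.atan2(lateral_m, depth_m - viewer_speed_mps * dt)
    return math.degrees(after - before) / dt


depths = [float(d) for d in range(1, 21)]
angular_speeds = {
    v: [angular_speed_deg_per_s(z, v) for z in depths]
    for v in (1.0, 2.0, 3.0)
}

for v, speeds in angular_speeds.items():
    print(f"V={v} m/s: nearest {speeds[0]:.1f} deg/s, "
          f"farthest {speeds[-1]:.3f} deg/s")
```

For every viewer speed the angular speed is positive (eccentricity grows) and falls off steeply with depth, which is the conventional motion parallax pattern described above.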

Fig. 3c shows the linear speed of objects (computed by the displacement divided by the duration) in the viewer’s visual field in an ideal S3D world without any space distortion (i.e. orthoscopic reproduction). Both angular (Fig. 3b) and linear (Fig. 3c) optic flow plots can be considered as a ground truth or baseline of the S3D optic flow that people experience in real-world condition or distortion-free reproductions of the S3D world.

Fig. 4 shows the linear speed of the object motion for the same forward movements, but with various S3D distortions introduced by mismatched parameter pairs between the capture and display processes. The magnitude of each parameter mismatch is expressed as a ratio between the corresponding parameters, as described in [20]: 1) the camera and eye separations (Ks), 2) the camera and screen FOVs (Kw), and 3) the camera convergence and screen distances (Kd). In all cases, a ratio of 1 indicates a perfectly matched condition (as in Fig. 3c).

Figure 4.

Apparent linear speed of the objects from the viewer perspective when a viewer moves forward in an S3D world with space distortion (non-orthoscopic reproduction), where the S3D distortions were introduced by the mismatch between (a-b) the camera and eye separation, (c-d) the camera and screen FOV, and (e-f) the camera convergence and screen distance. The plots on the left side represent the parameter mismatch conditions that result in S3D space compression, while the plots on the right side represent the parameter mismatch conditions that result in S3D space expansion. Note that the vertical range of Fig. 4f covers a 6× larger speed range.

For Fig. 4a–b, the virtual camera separation is assumed to be 6.5cm, while the viewer eye separation is 5.5cm (Ks=0.85) or 7.5cm (Ks=1.15). For Fig. 4c–d, the camera FOV is assumed to be 90°, while the screen FOV is 80° (Kw=0.90) or 100° (Kw=1.10). For Fig. 4e–f, the camera convergence distance is assumed to be 2m, while the virtual screen distance is 1m (Kd=0.75) or 3m (Kd=1.50).

As can be seen in Fig. 4, the S3D space distortion affects the apparent speed of objects at all distances, and the amount of speed error increases as the viewer motion increases. More dramatic optic flow distortion occurs in conditions with expanded S3D space (i.e. right column plots in Fig. 4, as shown in Fig. 1a) compared to the compressed S3D space (i.e. left column plots in Fig. 4, as shown in Fig. 1b). The larger effects occur at lower eccentricities, where the optic flow motion is slower than at higher eccentricities.

However, the compressed S3D space conditions (Fig. 4a, 4c, 4e) violate the expected trend of the depth motion: far objects (at smaller eccentricities) approach more slowly than objects at near and mid distances. In the normal undistorted condition, as shown in Fig. 3c, the linear speed of approaching objects is expected to increase monotonically as the eccentricity decreases. When the reconstructed S3D space is expanded, such a monotonic increase in linear speed is observed in most cases (such as Fig. 4b and Fig. 4d), except for the condition in which the space distortion is caused by the screen distance and convergence distance mismatch (Fig. 4f). In this case, the direction of motion may be reversed (i.e. negative speed values), indicating that objects appear to move backward, away from a viewer who is moving forward toward them. In other words, the space expands along the depth direction faster than the viewer is displaced in a given time.

Note that the ‘Dolly zoom’ or ‘Contra-zoom’ technique used in 2D movies (e.g. the Hitchcock movie “Vertigo”, 1958) [25] induces a similar perceptual depth/speed distortion, where objects at certain distances move ‘relatively’ slower (compressed) or faster (expanded) than they should. This is a monocular effect and is often applied to stationary scenes. Our analysis indicates that similar effects may occur in S3D motion scenes due to mismatched parameters.

To emphasize the impact of S3D space distortion on optic flow, the differences between the orthoscopic condition (Fig. 3c) and the non-orthoscopic conditions (Fig. 4) were computed (Fig. 5). In those plots, positive and negative values indicate slower and faster optic flow in the distorted S3D world than what it would have been in the natural (orthoscopic) condition, respectively. They do not show the absolute speeds of the objects.

Figure 5.

The amount of linear optic flow distortion computed as the differences between orthoscopic condition (Fig. 3c) and non-orthoscopic conditions (Fig. 4). Positive and negative values indicate slower and faster optic flow in distorted S3D world than perfect replication of the real-world condition, respectively.

In the mismatch between camera and eye separation (Fig. 5a) and the mismatch between screen and camera FOV (Fig. 5b), the patterns of S3D optic flow distortion are similar. If the reconstructed S3D space is compressed or expanded, the optic flow of far objects (i.e. at small eccentricity in our sample VR world) is most affected when a VR user moves. However, since the S3D optic flow distortion changes gradually along the depth direction, and the apparent speed of objects is governed by the linear perspective rule, the S3D optic flow distortion of very far objects may not be noticeable, even if it is large.

The problem might instead arise at mid-range distances, where the distortion of the S3D optic flow may be noticeable. Note that in our sample VR world, the eccentricity of an object located 1m in front of the viewer is 45.0°, while the eccentricity of an object 6m away is 9.5° (Fig. 3a). Over this depth range, the speed of viewer motion may also become a dominant factor: as the viewer’s moving speed increases, the amount of distortion increases and may surpass the viewer’s detection threshold, allowing the viewer to detect the S3D optic flow distortion (Fig. 5a–b).

The patterns of S3D optic flow distortion are more complex in the case of the mismatch between the screen distance and virtual convergence distance (Fig. 5c). When a user moves slowly in the virtual world (i.e. V=1m/s), S3D space compression (Kd<1) causes relatively little apparent speed distortion for objects at all depths, as they all move slightly slower than expected. However, when the same viewer motion happens in the expanded (Kd>1) S3D world condition, the S3D space distortion causes large S3D optic flow distortions for near objects. As the user’s speed increases, a larger speed error will be introduced along the depth direction for near objects, and even larger errors will be introduced in the expanded S3D space. In addition, an abrupt speed sign change (a vertical asymptote in Fig. 4f and Fig. 5c) occurs at about 11° eccentricity, which is at a distance of approximately 6m in our sample VR world.

The asymptote shown in this condition is not unique to the screen and convergence distance mismatch condition. In fact, such an asymptote occurs in all mismatch conditions discussed here, depending on the magnitude of the parameter mismatch. With the given (and usual) parameter mismatch ranges, the asymptotes are positioned at much farther distances. However, the problem of the relative optic flow reversal (i.e. a reversal of space expansion direction relative to the viewer motion) in the screen distance and convergence distance mismatch is particularly important because such a large amount of optic flow distortion can easily be introduced in practice. In many HMD designs, the virtual screen distance is optically set at a few meters or at infinity, while the virtual cameras that render the VR scene are configured with either parallel axes (i.e. converged at infinite distance) or converging axes (i.e. converged at a certain distance). With these parameters, the ratio between the two parameters can be extremely low (i.e. Kd≈0) or high (i.e. Kd=∞). Note again that the parameter ratio for the orthoscopic condition is equal to one (Kd=1).

Fig. 6 shows a few snapshots of the optic flow simulation, where the space is dynamically filled with a cloud of random dots. It differs from the sample VR world (Fig. 2) because the dots float at different distances and fill the full visual field while the viewer moves forward. In this simulation, the speed and direction of each dot’s apparent motion are marked by the length and direction of its tail, respectively. All dots have the same size and are designed to maintain their initial size regardless of their distance from the viewer (i.e. the dots do not follow the linear perspective rule), so that each dot represents a point in space, not an object with physical volume. The same size rule is applied to the tail construction. However, the length of the tail depends on the relative distance to the viewer (i.e. the tails follow the linear perspective rule) to illustrate the motion of the dots from the viewer’s perspective.

Figure 6.

Example snapshots of optic flow generated by the same number of random cloud points in 3D space when a viewer moves forward at 5m/s. A yellow tail attached to each point represents the direction and speed of the point. (a) With no space distortion, radial expansion optic flow is induced. (b) With a medium level of space compression, the optic flow at far distances starts reversing (i.e. faster reduction of speed compared to the viewer’s moving speed). (c) With a high level of S3D space compression, the optic flow appears completely reversed. The demo video can be found on YouTube using the following link (https://youtu.be/1Xj0RvkV-wY).

The apparent effect of optic flow distortion is much more easily conveyed in a video. A demo video clip can be found on YouTube using the following link (https://youtu.be/1Xj0RvkV-wY).
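For readers who wish to experiment, the undistorted case of the random-dot simulation (Fig. 6a) can be approximated with a short sketch. It assumes a simple pinhole projection, a static dot cloud, and a 0.1 s step, and it does not implement the distortion models of [20], so the compressed cases in Fig. 6b–c are not reproduced. All names and the dot distribution are illustrative assumptions.

```python
import random


def project(x, y, z):
    """Pinhole projection onto an image plane at unit distance."""
    return x / z, y / z


def flow_tails(points, viewer_speed_mps, dt):
    """Return (image position, image displacement) for each 3D point.

    The viewer moves forward along +z, so each point's depth shrinks by
    viewer_speed_mps * dt while its x and y coordinates are unchanged.
    """
    step = viewer_speed_mps * dt
    tails = []
    for x, y, z in points:
        u0, v0 = project(x, y, z)
        u1, v1 = project(x, y, z - step)
        tails.append(((u0, v0), (u1 - u0, v1 - v0)))
    return tails


rng = random.Random(0)  # fixed seed so the sketch is repeatable
points = [
    (rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0), rng.uniform(2.0, 30.0))
    for _ in range(200)
]
tails = flow_tails(points, viewer_speed_mps=5.0, dt=0.1)

# In undistorted space every tail points away from the image center.
outward = all(u * du + v * dv >= 0.0 for (u, v), (du, dv) in tails)
print("radial expansion:", outward)
```

Because the tail is computed after projection, its length shrinks with distance, which corresponds to the tails following the linear perspective rule in the simulation described above.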

Conclusion

Using S3D space distortion models, we demonstrated that parameter mismatches between the capture and display procedures introduce S3D optic flow distortions. In general, the amount of S3D optic flow distortion 1) increases as the viewer’s virtual moving speed increases, and 2) is larger in S3D expansion conditions than in compression conditions. Also, the S3D optic flow distortion 3) may violate the linear perspective rule along the depth direction, and 4) in some extreme but practical conditions, the direction of the S3D optic flow may be reversed.

Since this kind of S3D optic flow distortion with respect to the viewer’s self-motion hardly ever occurs in the real world, the viewer may experience a completely unfamiliar stereoscopic optic flow, which may be conflicting with the 2D optic flow behavior in the scene in each eye. Considering the intra-sensory conflicts with these visual motion signals (with an emphasis on vection), we postulate that the increased VIMS reported in S3D compared to 2D condition may be explained by S3D space distortions.

For the developers of S3D HMDs and the corresponding application programming interfaces (APIs), this analysis highlights the importance of parameter matching between the optical configuration of the device and the supporting APIs, where some viewer-initiated parameters for the display (e.g. eye separation, screen FOV, or screen distance) should be individually adjustable and should directly affect the render parameters.

This hypothesis on the relation between S3D distortion and VIMS should be verified empirically. We are currently conducting a study in which we compare the level of VIMS induced by various S3D distortion conditions. The results of this study will assist future VR environment design, hopefully, to reduce VIMS.

Author Biography

Alex D. Hwang received BS in mechanical engineering from the University of Colorado Boulder (1999), and received MS (2003) and Ph.D. in computer science from the University of Massachusetts Boston (2010). Since then, he has worked at Schepens Eye Research Institute in Boston MA as a postdoctoral research fellow. He became an Investigator and is appointed as an Instructor of Ophthalmology at Harvard Medical School (2015). His work has focused on bioengineering and low vision rehabilitation.

Eli Peli received BSc and MSc in electrical engineering from Technion-Israel Institute of Technology (IIT), Haifa, Israel (1979), and OD from New England College of Optometry, Boston, MA (1983). Since then he has been at the Schepens Eye Research Institute where he is a senior scientist and the Moakley Scholar in Aging Eye Research, and Professor of Ophthalmology at Harvard Medical School. Dr. Peli is a fellow of the Optical Society of America, the Society for Information Display, the International Society of Optical Engineering (SPIE), the American Academy of Optometry, and the Association for Research in Vision and Ophthalmology.

References

- [1] Hwang AD and Peli E, “Instability of the perceived world while watching 3D stereoscopic imagery: A likely source of motion sickness symptoms,” I-Perception, vol. 5, pp. 515–35, 2014.

- [2] Gibson JJ, “Optical motions and transformations as stimuli for visual perception,” Psychological Review, vol. 64(5), p. 288, 1957.

- [3] Steele JE, “Motion sickness and spatial perception. A theoretical study,” Aeronautical Systems Div Wright-Patterson AFB OH, 1961.

- [4] Oman CM, “Sensory conflict in motion sickness: an observer theory approach,” 1989.

- [5] Reason JT, “Motion sickness adaptation: a neural mismatch model,” Journal of the Royal Society of Medicine, vol. 71, pp. 819–828, 1978.

- [6] Yardley L, “Motion sickness and perception: A reappraisal of the sensory conflict approach,” British Journal of Psychology, vol. 83, pp. 449–471, 1992.

- [7] Demer JL, Porter FI, Goldberg J, Jenkins HA, and Schmidt K, “Adaptation to telescopic spectacles: Vestibulo-ocular reflex plasticity,” Investigative Ophthalmology & Visual Science, vol. 30, pp. 159–170, 1989.

- [8] Clendaniel RA, Lasker DM, and Minor LB, “Horizontal vestibuloocular reflex evoked by high-acceleration rotations in the squirrel monkey. IV. Responses after spectacle-induced adaptation,” Journal of Neurophysiology, vol. 86, pp. 1594–1611, 2001.

- [9] Crane BT and Demer JL, “Effect of adaptation to telescopic spectacles on the initial human horizontal vestibulo-ocular reflex,” Journal of Neurophysiology, vol. 83, pp. 38–49, 2000.

- [10] Hakkinen J, Pölönen M, Takatalo J, and Nyman G, “Simulator sickness in virtual display gaming: a comparison of stereoscopic and non-stereoscopic situations,” Proceedings of MobileHCI’06, September 12–15, 2006, Helsinki, Finland, 2006.

- [11] Solimini AG, Mannocci A, and Di Thiene D, “A pilot application of a questionnaire to evaluate visually induced motion sickness in spectators of tri-dimensional (3D) movies,” Italian Journal of Public Health, vol. 8, p. 197, 2011.

- [12] Solimini AG, Mannocci A, Di Thiene D, and La Torre G, “A survey of visually induced symptoms and associated factors in spectators of three dimensional stereoscopic movies,” BMC Public Health, vol. 12, p. 779, 2012.

- [13] Yang S, Schlieski T, Selmins B, Cooper SC, Doherty RA, Corriveau PJ, et al., “Stereoscopic viewing and reported perceived immersion and symptoms,” Optom Vis Sci, vol. 89(7), pp. 1068–80, 2012.

- [14] Solimini AG, “Are there side effects to watching 3D movies? A prospective crossover observational study on visually induced motion sickness,” PLoS One, vol. 8, p. e56160, 2013.

- [15] Zhang L, Zhang YQ, Zhang JS, Xu L, and Jonas JB, “Visual fatigue and discomfort after stereoscopic display viewing,” Acta Ophthalmol, vol. 91, pp. e149–53, March 2013.

- [16] Zeri F and Livi S, “Visual discomfort while watching stereoscopic three-dimensional movies at the cinema,” Ophthalmic Physiol Opt, vol. 35, pp. 271–82, May 2015.

- [17] Braschinsky M, Raidvee A, Sabre L, Zmachinskaja N, Zukovskaja O, Karask A, et al., “3D Cinema and Headache: The First Evidential Relation and Analysis of Involved Factors,” Frontiers in Neurology, vol. 7, p. 30, 2016.

- [18] Woods A, Docherty T, and Koch R, “Image Distortions in Stereoscopic Video Systems,” Proc. of SPIE Stereoscopic Displays and Applications 1993, pp. 36–48, 1993.

- [19] Doyen D, Sacré J, and Blondé L, “Correlation between a perspective distortion in a S3D content and the visual discomfort perceived,” in Stereoscopic Displays and Applications XXIII, vol. 8288, p. 82881L, 2012.

- [20] Gao Z, Hwang AD, Zhai G, and Peli E, “Correcting geometric distortions in stereoscopic 3D imaging,” PLoS One, vol. 13, p. e0205032, 2018.

- [21] Todd J, “The syndrome of Alice in Wonderland,” Canadian Medical Association Journal, vol. 73, p. 701, 1955.

- [22] Peli E, “Binocular depth reversals despite familiarity cues: An artifact? (Technical Comments),” Science, vol. 249, pp. 565–566, August 3, 1990.

- [23] Tuan KM and Jones R, “Adaptation to the prismatic effects of refractive lenses,” Vision Research, vol. 37(13), pp. 1851–1857, 1997.

- [24] Moodley VR, Kadwa F, Nxumalo B, Penciliah S, Ramkalam B, and Zama A, “Induced prismatic effects due to poorly fitting spectacle frames,” African Vision and Eye Health, vol. 70, pp. 168–174, 2011.

- [25] “Dolly zoom” (https://en.wikipedia.org/wiki/Dolly_zoom)