Abstract

We conducted a systematic literature review to assess how conversational agents have been used to facilitate chronic disease self-management. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) framework was used. Literature was searched across five databases, and we included full-text articles that contained primary research findings for text-based conversational agents focused on self-management for chronic diseases in adults. 1,606 studies were identified, and 12 met inclusion criteria. Outcomes were largely focused on usability of conversational agents, and participants mostly reported positive attitudes with some concerns related to privacy and shallow content. In several studies, there were improvements on the Patient Health Questionnaire (p<0.05), Generalized Anxiety Disorder Scale (p=0.004), Perceived Stress Scale (p=0.048), Flourishing Scale (p=0.032), and Overall Anxiety Severity and Impairment Scale (p<0.05). There is early evidence that suggests conversational agents are acceptable, usable, and may be effective in supporting self-management, particularly for mental health.

Introduction

Sixty percent of U.S. adults have chronic diseases, such as hypertension and diabetes1, which account for 86%2 of the $2.6 trillion in annual health care expenditures in the U.S.3 Chronic diseases can be controlled through self-management, which refers to an individual’s ability to manage symptoms, treatments, physical and psychosocial consequences of diseases, and lifestyle changes4. Successful self-management requires sufficient knowledge of the disease and the necessary skills to manage and prevent complications, slow disease progression, and improve health outcomes4. Given the near ubiquity of mobile phones, with 96% of U.S. adults owning a mobile phone and 81% owning a smartphone in 20195, mobile health (mHealth) approaches to facilitate self-management are a promising supplement or alternative to traditional programs. In particular, short message service (SMS) interventions have shown improvements across a range of self-management behaviors, such as medication adherence, smoking cessation, and physical activity6-9. Patients have reported SMS apps to be highly acceptable and easy to use, though receiving tailored text messages in real-time could improve the user experience10,11. This suggests that conversational interfaces, which can provide automated two-way communication and evidence-based tailored responses, may have potential advantages over current self-management interventions.

Conversational agents, also known as dialogue systems or chatbots, are systems that communicate with users in natural language (text or speech) and can complete specific tasks or mimic “chat” characteristics of human-human interactions12. Conversational agents have the ability to mirror a therapeutic process, such as cognitive behavioral therapy or motivational interviewing13. This could promote goal setting, positive feedback, self-monitoring, overcoming obstacles to self-management, and education, which are important components in chronic disease self-management. Text-based conversational agents can engage users about sensitive or stigmatized topics and have the ability to show empathy, which can help to alleviate negative symptoms or emotions14,15. For example, a conversational agent could be paired with wearable devices to detect the onset of a disease exacerbation and provide the user with appropriate therapy to help manage their symptoms16.

Conversational syntax and semantics could also reveal rich linguistic information about the user, which may allow for a better understanding of the person’s health status15. Given the potential to deliver self-management components through a conversational agent, the objective of this systematic review was to assess how text-based conversational agents have been used for chronic disease self-management.

Methods

Search Strategy.

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were used as a framework17. The literature search was conducted within five electronic databases (PubMed, Scopus, Embase, Cumulative Index to Nursing and Allied Health Literature, and Association for Computing Machinery Digital Library). We prioritized sensitivity over specificity to ensure that all potentially relevant studies were included. The search was limited to papers published prior to the search date of October 1, 2018. Search terms included variations of: “conversational agent, virtual coach, chatbot, dialogue system, and health.” Search terms could appear anywhere in the title, abstract, or keywords. Bibliographies of included publications were also searched to identify additional relevant literature. All studies were imported from EndNote X8 into Covidence systematic review software to screen studies. Duplicate studies across databases were removed.

Eligibility Criteria.

The seven inclusion criteria were: (1) full-text journal articles or conference proceedings, (2) published in English, (3) contained primary research findings, and (4) included a text-based conversational agent in (5) the context of self-management for (6) chronic diseases in (7) adults 18 years and older. Editorials, letters, design papers, conference abstracts, and study protocols were excluded. For this study, “conversational agent” was defined as an autonomous system that can communicate with users bi-directionally in text12. Thus, automated text messages for reminders or appointments and question and answer systems were not considered conversational agents. Since the focus was on text-based conversational agents, only studies that contained agents with both text-based input and output were included, though the system could also contain speech-based input or output. “Wizard of Oz” studies, which involved a human simulating the response of the system, were included since these studies could potentially reveal valuable information about the nature of interaction and functionalities of the system in relation to self-management.

Self-management was defined as an individual’s ability to manage symptoms, treatments, physical and psychosocial consequences, and lifestyle changes4. We adapted the list of self-management skills from Lorig et al (2003), which has been used in numerous studies and in the Stanford Chronic Disease Self-Management Program18,19. Studies that did not contain at least one of these self-management skills were excluded. We included conversational agents targeted towards one or more of the 19 chronic diseases defined by the Centers for Medicare and Medicaid Services20. Because chronic diseases are defined as conditions that last longer than one year, we also included additional prevalent conditions and populations where self-management is important that were not in the Centers for Medicare and Medicaid Services list, such as obesity and mental health conditions (post-traumatic stress disorder, substance use/addiction). We also included studies for older adults who often have chronic diseases.

Study Selection.

Two reviewers (AG, ZX) independently rated each title and abstract as “potentially relevant” or “not relevant” based upon the abstract potentially meeting the inclusion criteria. Then, each reviewer examined the full text of the studies rated as “potentially relevant” and applied the inclusion criteria again. Any disagreements were discussed with an adjudicator (AC). Cohen’s kappa statistic21 was calculated to measure inter-rater agreement between the two reviewers for title and abstract screening and for full-text review.
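As an illustration of how the agreement statistic is computed, the following minimal Python sketch calculates Cohen’s kappa from two reviewers’ screening decisions. The function name `cohens_kappa` and the example ratings are hypothetical and are not data from this review; the sketch only shows the standard formula, kappa = (p_o − p_e) / (1 − p_e), where p_o is observed agreement and p_e is agreement expected by chance.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over labels of the product of marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (p_o - p_e) / (1 - p_e)


# Hypothetical screening decisions for ten abstracts (illustration only).
reviewer_1 = ["relevant", "relevant", "not", "not", "not",
              "not", "relevant", "not", "not", "not"]
reviewer_2 = ["relevant", "not", "not", "not", "not",
              "not", "relevant", "not", "not", "not"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 3))
```

In this made-up example the reviewers agree on 9 of 10 abstracts, but because most abstracts are “not relevant” the chance-corrected agreement is lower than the raw 90%.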

Data Extraction and Synthesis.

One reviewer (AG) extracted data from the abstract, main text, and supplemental material into a standardized form, and another reviewer (ZX) examined the form for accuracy. Chronic diseases were grouped into mutually exclusive categories based on the primary disease or population the conversational agent was targeted towards. Self-management skills and attributes of the conversational agent were grouped into one or more categories based on the description of the conversational agent provided in the study. The extracted data were cross tabulated to show a comparison of key concepts across studies.

Risk of Bias.

We assessed the risk of bias within each study using the Agency for Healthcare Research and Quality internal validity quality evaluation form22. One reviewer (AG) evaluated and rated each study’s risk of bias as good, fair, or poor, and another reviewer (ZX) reviewed the form for consistency. We did not assess the validity of the literacy measurement used in each study as there was limited data across studies22.

Results

Study Selection.

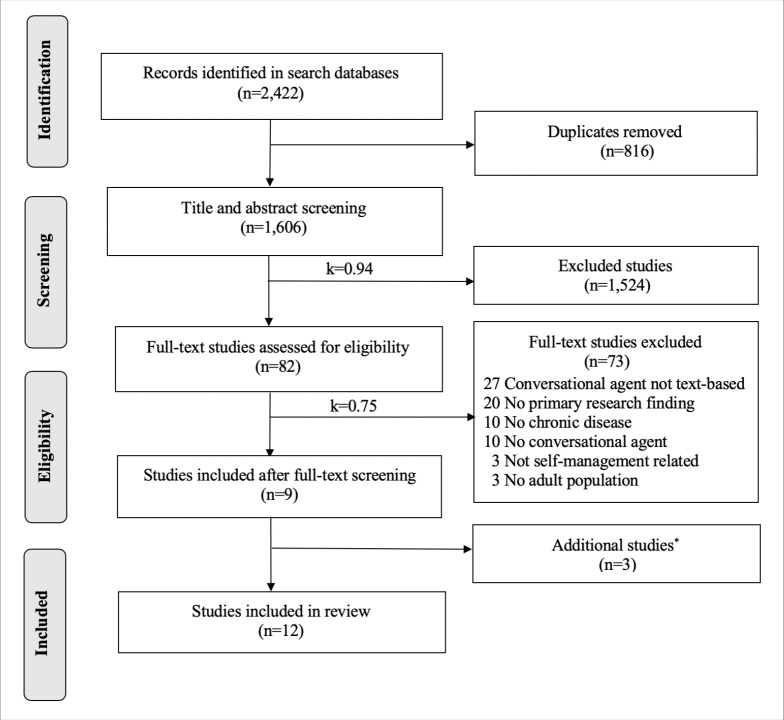

The database search identified 2,422 studies, and 1,606 studies were screened after removing duplicates. Of these, 82 studies were included in the full-text review, and 12 studies were included in the final review, covering 12 unique conversational agents (see Figure 1). The kappa statistic was 0.94 (strong agreement) for title and abstract screening and 0.75 (moderate agreement) for full-text screening.
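The counts at each stage imply the number of records removed between stages. The short Python sketch below derives those differences from the figures reported above; the variable names are our own, and the full-text exclusion count is deliberately not derived because some included studies were added through reference searching (see the note under Figure 1).

```python
# Counts reported in the text for each stage of the selection process.
identified = 2422   # records returned by the database search
screened = 1606     # records left after duplicate removal
full_text = 82      # records carried forward to full-text review

# Differences between consecutive stages.
duplicates_removed = identified - screened
excluded_at_title_abstract = screened - full_text

print(duplicates_removed, excluded_at_title_abstract)
```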

Figure 1.

PRISMA diagram.

*The three studies were included through a review of reference citations from primary studies.

Study Characteristics.

The final review included studies23-34 published from 2012-2018 from seven countries. There was a range of study designs and methodologies, including five randomized controlled trials, five quasi-experimental studies, and two non-experimental studies (see Table 1). The majority were pilot studies and study duration ranged from two weeks to four months. The total number of participants ranged from 10 to 401, and participant ages were between 18 and 92 years.

Table 1.

Study methodology and outcomes of reviewed studies.

| Study | Study Design and Methodology | Participant Characteristics | Outcomes |
| --- | --- | --- | --- |
| Baskar et al, 201523 | Quasi-experimental + interviews. 11 older adults and 2 health professionals played the role of a fictitious persona while interacting with the agent for ~10 minutes followed by an interview. | Age: N/A; Gender: 55% female; Race: N/A; Baseline clinical characteristics: N/A | |
| Elmasri et al, 201624 | Quasi-experimental + interviews. 17 participants interacted with the agent to explore their alcohol consumption for ~10 minutes followed by a survey and interview. | Age: 18-25; Gender: 41% female; Race: N/A; Baseline clinical characteristics: <5 drinks/day | |
| Fitzpatrick et al, 201726 | RCT. 70 participants were randomly assigned to Woebot or directed to a National Institute of Mental Health ebook for 2 weeks. Surveys were completed at baseline and post-intervention. | Age: 18-28; Gender: 67% female; Race: 79% Caucasian; Baseline clinical characteristics: 46% had moderately-severe or severe PHQ-9 depression scores; 74% had severe GAD-7 anxiety scores | |
| Gaffney et al, 201325 | RCT. 48 participants were randomly assigned to MYLO or ELIZA to discuss a current problem for ~20 minutes. Surveys were completed at baseline, post-intervention, and 2-week follow-up. | Age: 18-32; Gender: 79% female; Race: N/A; Baseline clinical characteristics: N/A | |
| Kazemi et al, 201427 | Focus groups. 26 participants were placed into one of four focus group sessions to determine their views of mHealth technology to deliver an alcohol-related intervention. | Age: 18-20; Gender: 73% female; Race: 70% Caucasian; Baseline clinical characteristics: N/A | |
| Ly et al, 201728 | RCT + interviews. 28 participants were randomly assigned to Shim or a wait list control group for 2 weeks. Surveys were completed at baseline and post-intervention. 9 participants from the intervention group were selected for a semi-structured interview. | Age: 20-49; Gender: 54% female; Race: N/A; Baseline clinical characteristics: N/A | |
| Schroeder et al, 201829 | RCT. 84 participants were randomized into two messaging groups (semi-personalized messages or non-personalized messages). Participants completed weekly surveys over the 4-week study. | Age: 18-63; Gender: 89% female; Race: N/A; Baseline clinical characteristics: 83% had an anxiety disorder on the Overall Anxiety Severity and Impairment Scale; 68% had moderate to severe range of depression on PHQ-9 | |
| Stein et al, 201730 | Quasi-experimental. 159 participants interacted with Lark for up to 16 weeks followed by a survey. | Age: 18-76; Gender: 75% female; Race: N/A; Baseline clinical characteristics: BMI >25 kg/m² | |
| Tsiourti et al, 201431 | Focus group + interviews. 20 older adults and 14 health professionals participated in two focus groups and an interview to assess acceptance and expectations. | Age: 65-92; Gender: 65% female; Race: N/A; Baseline clinical characteristics: N/A | |
| van Heerden et al, 201732 | Quasi-experimental + interviews. 10 participants interacted with Lwazi/Nolwazi for ~25 minutes and provided feedback. | Age: 30 (average); Gender: 50% female; Race: N/A; Baseline clinical characteristics: N/A | |
| Wang et al, 201833 | Quasi-experimental. 401 participants were placed into WeChat groups with an agent or received smoking cessation tips over the 8-week study. Participants completed weekly surveys. | Age: 33 (average); Gender: 40% female; Race: N/A; Baseline clinical characteristics: Smoked in the past 7 days | |
| Watson et al, 201234 | RCT. 70 participants were given a pedometer and randomly assigned to a conversational agent or access to a website for 12 weeks. Surveys were completed at baseline and post-intervention. | Age: 42 (average); Gender: 84% female; Race: 76% Caucasian; Baseline clinical characteristics: BMI between 25-35 kg/m² | |

*Abbreviations: N/A: data not available in manuscript; RCT: randomized controlled trial; BMI: body mass index

Evaluation Measures.

The majority of studies (10 of 12) evaluated usability, which was primarily measured by assessing users’ attitudes towards using the conversational agent through questionnaires, interviews, or focus groups. Across the studies, the majority of participants reported moderate to high satisfaction with the agents, although only two studies used a validated questionnaire to assess satisfaction specifically. These two studies reported an average satisfaction of 3.6 out of 4 on the Client Satisfaction Survey24 and 82 out of 100 on the System Usability Scale29. Several studies incorporated the perceptions of healthcare professionals, who expressed concerns about the large amount of information presented to older adults, cautioned that use could reduce independence, and stressed the importance of the chatbot guiding rather than directing older adults23,31. Ten studies evaluated usage of the agent, which was measured by the amount of time interacting with the agent or the number of sessions with the agent. In laboratory-based studies, participants interacted with the conversational agent for between 10 and 26 minutes on average23-25,32. In non-laboratory-based experimental studies, the majority of participants interacted with the agent for at least 50% of the study period26,28-30. Seven studies evaluated clinical outcomes, which were primarily self-reported. There were significant improvements in the PHQ-9 (p<0.05)26,29, GAD-7 (p=0.004)26, Overall Anxiety Severity and Impairment Scale (p<0.05)29, Flourishing Scale (p=0.032)28, and Perceived Stress Scale (p=0.048)28 among intervention groups. Only one study evaluated knowledge and found 100% of participants in the intervention group reported that they learned something new compared to 77% in the control group26.

Conversational Agent Attributes.

Conversational agents were focused on a variety of populations and chronic conditions such as depression25,26,28,29, substance use24,27,33, older adults23,31, diabetes30, overweight/obesity34, and HIV/AIDS32 (Table 2). The majority of conversational agents were targeted towards mental health and assisted with managing symptoms or problem solving by helping users cope with their emotions and teaching them how to respond if their feelings worsened24-29,33. For self-management skills, agents focused on skills such as maintaining a healthy lifestyle23,24,27,28,30-34, managing symptoms26,29,32, talking with friends and family31-33, problem solving25,29,34, working with the care team31,32, participating in social activities27,33, managing medications31, and community resources27. The majority of conversational agents were chatbots23-31,33 and almost half used a frame-based system26,28-30,32 to keep track of information the user provided and information the system still needed.
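To make the idea of a frame-based system concrete, the following Python sketch shows one way an agent might track which pieces of information the user has provided and which it still needs. It is a simplified illustration under our own assumptions: the `Frame` class, the slot names (`mood`, `sleep_hours`), and the prompts are hypothetical and are not taken from any of the reviewed agents.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    """Slots the agent wants filled before it can respond (hypothetical)."""
    required: tuple = ("mood", "sleep_hours")
    filled: dict = field(default_factory=dict)

    def update(self, slot, value):
        # Record information the user has provided, in any order.
        if slot not in self.required:
            raise KeyError(f"unknown slot: {slot}")
        self.filled[slot] = value

    def missing(self):
        # Information the system still needs, in the order it would ask.
        return [s for s in self.required if s not in self.filled]


# Hypothetical prompts, one per slot.
PROMPTS = {
    "mood": "How are you feeling today?",
    "sleep_hours": "About how many hours did you sleep last night?",
}


def next_prompt(frame):
    """Ask for the first missing slot, or close out once the frame is full."""
    missing = frame.missing()
    if missing:
        return PROMPTS[missing[0]]
    return "Thanks, I have what I need."


frame = Frame()
frame.update("sleep_hours", 6)   # the user volunteers sleep before being asked
print(next_prompt(frame))        # the agent asks only for what is still missing
```

In contrast, a finite-state design would move the user through a fixed sequence of questions regardless of what had already been volunteered.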

Table 2.

Conversational agent attributes.

| Study | Chronic Disease or Population | Type | Theoretical Framework or Evidence Base | Self-Management Skills |
| --- | --- | --- | --- | --- |
| Baskar et al, 201523 | Older Adults | Chatbot (finite-state) | N/A | |
| Elmasri et al, 201624 | Substance Use | Chatbot (finite-state) | Alcohol Use Disorders Identification Test | |
| Fitzpatrick et al, 201726 | Depression | Chatbot (frame-based) | Cognitive behavior therapy | |
| Gaffney et al, 201325 | Depression | Chatbot (finite-state) | Perceptual control theory | |
| Kazemi et al, 201427* | Substance Use | Chatbot** | Ecological momentary interventions, motivational interviewing, transtheoretical model of change | |
| Ly et al, 201728 | Depression | Chatbot (frame-based) | Cognitive behavior therapy | |
| Schroeder et al, 201829 | Depression | Chatbot (frame-based) | Dialectical behavior therapy | |
| Stein et al, 201730 | Diabetes | Chatbot (frame-based) | Cognitive behavior therapy, Diabetes Prevention Program | |
| Tsiourti et al, 201431 | Older Adults | Chatbot** | N/A | |
| van Heerden et al, 201732 | HIV/AIDS | Task-oriented (frame-based) | CDC guidelines for HIV counseling in non-clinical setting | |
| Wang et al, 201833 | Substance Use | Chatbot (finite-state) | PubMed medical information retrieval dataset | |
| Watson et al, 201234 | Overweight or Obesity | Task-oriented (finite-state) | Behavioral and social cognitive theory | |

*Kazemi et al, 2018 was used to extract conversational agent attributes35.

**Dialogue management system was N/A.

Most conversational agents had a mixed dialogue initiative25,26,28-30,33, allowing either the user or the system to lead the conversation. The majority of agents had a mobile app interface, which included custom apps or SMS platforms such as Telegram or WeChat26-28,30,32,33. Nearly all were based on theories for behavior change or contained evidence-based content24-30,32-34. Across studies, cognitive behavioral therapy was most commonly used to guide the language the agent used and the direction of the conversation based on the user’s utterances. Few described design principles used to develop the content or types of interactions, with only two studies reporting the use of human-centered design31 or the Integrate, Design, Assess, and Share (IDEAS) framework27,35.

Risk of Bias.

Risk of bias of one study was rated as good, five as fair, and six as poor. There was selection bias across studies with the majority using a convenience sample or not adequately describing participant characteristics. Of the six non-laboratory based experimental studies, participant completion ranged from 56 to 96%, and only three studies conducted an intent-to-treat analysis26,28,34. Measurement bias included self-reported measurements and questionnaires that were not valid or reliable, which may be due to the lack of established instruments to measure the quality of interactions with a conversational agent. Many studies did not assess potential confounders such as the participant’s duration of the condition, comorbidities, health literacy, motivation, and use of technology.

Discussion

Principal Findings.

While limited, early evidence suggests that conversational agents are acceptable, usable, and may be effective in supporting patients in self-management of chronic diseases, particularly for mental health conditions such as depression or substance use. Outcomes from reviewed studies were mainly evaluated on usability, usage, and self-reported clinical measurements. Overall, participants reported a positive attitude and moderate to high satisfaction with agents, but there were concerns about privacy, the potential to reduce independence, and repetitive or shallow content. Several studies reported improvements in patient-reported outcome scores for the conversational agent intervention compared with control groups26,28,29. However, the lack of methodological rigor and the heterogeneity in study design make it challenging to interpret results within and across studies.

In this systematic review, we extended prior reviews by assessing the theoretical frameworks, features, content, and design principles of text-based conversational agents for self-management in those with chronic diseases. There was diverse content across the conversational agents. In contrast to the mHealth literature9,10, we found that the majority of conversational agents were based upon theoretical grounding. Agents primarily leveraged the self-management skill of maintaining a healthy lifestyle, but few provided information about community resources or assistance with managing medications. Similar to digital health solutions, conversational agents vary considerably, and it is unclear how their effectiveness may differ based on sociodemographic and clinical characteristics as these were not captured across all studies. Not surprisingly, very few studies reported using established design principles, such as participatory or user-centered design, and none used heuristic evaluation methods.

Comparison to Prior Work.

To our knowledge, there have been no systematic reviews on the use of conversational agents for chronic disease self-management, but other health-related reviews have been conducted with similar findings36-41. We found that the existing literature primarily contains small scale studies that focus on mental health conditions36-40. Many of these studies are in the development or pilot phase and do not contain samples that are generalizable across other populations. Study participants from research included in this review were predominantly young, female, Caucasian, and owned a smartphone, which is not necessarily representative of U.S. patients with chronic diseases. Excluding populations that may benefit the most from using these technologies, such as older adults or those with physical limitations, from design and usability studies could widen disparities. Despite our focus on chronic diseases, the longest study duration was only four months30, and no agents accounted for time since diagnosis to allow for more complex or nuanced interactions. Providing improved continuity and information to users comes with a tradeoff, as agent-based systems are computationally intensive and require more sophisticated natural language capabilities, large training datasets, and a deeper semantic representation. Ideally, sophisticated dialogue and user models that prioritize safety and efficacy and can handle natural language inputs should take into account the users’ goals, intentions, dialogue history, and context.

In accordance with prior reviews, we also found that studies lacked a comprehensive understanding of patients’ needs37,38. Patients may require varying levels of support depending on sociodemographic characteristics, health status, and cultural factors, and these needs largely remain implicit and unaddressed in the existing body of research36,39. For example, patients with low intrinsic motivation may benefit more from human support than those motivated to work independently, and understanding user motivation could contribute to the optimal timing and type of support provided by conversational agents39. While there were no studies that explicitly assessed patient motivation, two studies measured levels of engagement or experience with technology at baseline25,31. These findings suggest the timing and nature of support and how individual characteristics affect one’s ability to self-manage health and use conversational technologies should be design considerations.

Implications for Healthcare and Research.

The use of conversational agents for chronic disease self-management also has a number of potential applications for clinical care and research. In clinical settings, conversational agents could facilitate social, emotional, relational, and task support that could connect users to health professionals, resources, information, and peers. However, safety protocols are needed prior to leveraging conversational agents in clinical care settings, particularly for agents with unconstrained natural language input capabilities42. For example, if the system does not accurately understand the user’s input, it could provide inappropriate medical information, which may result in an adverse outcome. Confirming user input, having a default fallback intent for out-of-domain utterances, or constraining user input to menu choices may mitigate some patient safety concerns. Each of these safeguards constrains the user experience, so that cost must be weighed against the relative risks to patient safety.
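The safeguards described above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not the design of any reviewed agent or commercial platform: the keyword matcher `classify` stands in for a real natural language understanding component, and the intent names, keywords, and the 0.5 confidence threshold are all hypothetical.

```python
# Hypothetical intents, each defined by a small keyword set.
INTENTS = {
    "log_medication": {"took", "medication", "pill"},
    "report_symptom": {"feel", "pain", "dizzy"},
}
FALLBACK = "fallback"


def classify(utterance, intents):
    """Toy keyword matcher standing in for a real NLU component."""
    words = set(utterance.lower().split())
    best_intent, best_score = None, 0.0
    for intent, keywords in intents.items():
        # Score is the fraction of the intent's keywords present in the input.
        score = len(words & keywords) / len(keywords)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent, best_score


def route(utterance, threshold=0.5):
    """Return (intent, reply), using a fallback for low-confidence input."""
    intent, score = classify(utterance, INTENTS)
    if intent is None or score < threshold:
        # Default fallback: do not guess; constrain the user to menu choices.
        return FALLBACK, ("Sorry, I didn't understand. Please choose: "
                          "1) log medication 2) report a symptom.")
    # Confirm the interpretation before acting on it.
    return intent, f"I understood this as '{intent}'. Is that right? (yes/no)"


print(route("I took my pill"))
print(route("what is the weather"))
```

The threshold controls the tradeoff discussed above: raising it sends more utterances to the menu-style fallback, which is safer but less conversational.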

The field of health conversational agents, which lies at the intersection of computational linguistics and health informatics43, will likely accelerate with the adoption of conversational developer platforms, such as Amazon Lex44, Facebook Wit.ai45, Google Dialogflow46, and Microsoft Bot Framework47. As mobile phones become increasingly integrated into people’s lives, conversational agents will likely be deployable on new and existing mobile apps, such as social media, messaging platforms, or even other emerging technologies. However, many of these platforms do not meet the requirements of the Health Insurance Portability and Accountability Act (HIPAA) to protect health-related data, which is a potential limitation of implementing conversational agents for use in clinical care. Currently, Amazon Alexa is the only platform that provides a HIPAA-compliant environment to build apps that transmit and receive protected health information, though it is only available to select developers at this time48. Further, the Food and Drug Administration has not made an explicit statement regarding the level of enforcement for health-related conversational agents. It is likely that conversational agents would be regulated like mobile apps, where mobile app entities are not considered medical device manufacturers unless the app delivers care or makes care decisions49. In addition to regulatory and legal provisions, reimbursement mechanisms and the ability to incorporate actionable information from agents into the clinical workflow remain unexplored. Ethical concerns related to the use of assistive technologies, such as the quality of information, over-reliance, and potentially further exacerbating health disparities if user-centered design principles are not utilized, are also barriers to using conversational agents to support self-management.

Limitations.

This review has several limitations. We did not include voice-based conversational agents because they offer a different user experience than text-based agents15. Also, we only included the features, content, theoretical frameworks, and design principles of the conversational agents that were explicitly stated in the manuscripts, so it is possible that some of the characteristics may not be reflected in our review. We rated the majority of papers as poor or fair quality due to the lack of rigor in many of the studies, which suggests the conclusions may not be generalizable given the high degree of bias. Due to the heterogeneity of study designs, we could not assess the strength of evidence in a meta-analysis.

Conclusions

Given the growing burden of chronic diseases in the U.S., text-based conversational agents to support self-management could assist patients to move beyond passively consuming information to actively engaging in disease management. Currently, conversational agents seem to have elementary dialogue management systems that do not take into account the users’ preferences, goals, or history, and the design may need to evolve to better meet user needs. The rich linguistic data generated from agents could also provide additional insights into the patient’s emotional or physical state, could facilitate decision making and self-management for the patient, and provide valuable information for the care team. At this early stage, there are only a few conversational agents that are targeted towards chronic diseases, and most are focused on depression and self-management through maintaining a healthy lifestyle. Future research should assess the influence of more sophisticated dialogue and interactions and leverage established user-centered design principles to tailor agents to support self-management of chronic diseases. Additional investigation is needed to rigorously assess the characteristics of agents that may be most useful for self-management based on the user’s motivation and context, health status, and psychosocial attributes. Safety, privacy, ethical, and regulatory issues should also be addressed as conversational agents are implemented into real-world care settings.

Acknowledgements

AG is supported by the National Institutes of Health National Library of Medicine training grant (5T15LM012500-03). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.


References

- 1.Buttorff C, Ruder T, Bauman M. Multiple chronic conditions in the United States [Internet] 2017. [cited 22 May 2019]. Available from: https://www.rand.org/pubs/tools/TL221.html .

- 2.Gerteis J, Izrael D, Deitz D, LeRoy L, Ricciardi R, Miller T, et al. Multiple chronic conditions chartbook. Agency for Healthcare Research and Quality [Internet] 2014. [cited 1 May 2019]. Available from: https://www.ahrq.gov/professionals/prevention-chronic-care/decision/mcc/resources.html .

- 3.Centers for Medicare and Medicaid Services National health expenditures 2010: sponsor highlights [Internet] 2010. [cited 22 May 2019]. Available from: https://www.cms.gov/Research-Statistics-Data-and- Systems/Statistics-Trends-and-Reports/NationalHealthExpendData/

- 4.Barlow J, Wright C, Sheasby J, Turner A, Hainsworth J. Self-Management approaches for people with chronic conditions: a review. Patient Educ Couns. 2002;48(2):177–87. doi: 10.1016/s0738-3991(02)00032-0. [DOI] [PubMed] [Google Scholar]

- 5.Pew Research Center. Mobile fact sheet [Internet] 2019. [cited 22 May 2019]. Available from: http://www.pewinternet.org/fact-sheet/mobile/

- 6.Thakkar J, Kurup R, Laba TL, Santo K, Thiagalingam A, Rodgers A, et al. Mobile telephone text messaging for medication adherence in chronic disease: a meta-analysis. JAMA Intern Med. 2016;176(3):340–9. doi: 10.1001/jamainternmed.2015.7667. [DOI] [PubMed] [Google Scholar]

- 7.Whittaker R, McRobbie H, Bullen C, Rodgers A, Gu Y. Mobile phone-based interventions for smoking cessation. Cochrane Database Syst Rev. 2016;4(CD006611) doi: 10.1002/14651858.CD006611.pub4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Williams AD. Use of a text messaging program to promote adherence to daily physical activity guidelines: a review of the literature. Bariatric Nursing and Surgical Patient Care. 2012;7(1) [Google Scholar]

- 9.Arambepola C, Ricci-Cabello I, Manikavasagam P, Roberts N, French DP, Farmer A. The impact of automated brief messages promoting lifestyle changes delivered via mobile devices to people with type 2 diabetes: a systematic literature review and meta-analysis of controlled trials. J Med Internet Res. 2016;18(4):e86. doi: 10.2196/jmir.5425. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Alessa T, Abdi S, Hawley MS, de Witte L. Mobile apps to support the self-management of hypertension: systematic review of effectiveness, usability, and user satisfaction. JMIR Mhealth Uhealth. 2018;6(7):e10723. doi: 10.2196/10723. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Hamine S, Gerth-Guyette E, Faulx D, Green BB, Ginsburg AS. Impact of mHealth chronic disease management on treatment adherence and patient outcomes: a systematic review. J Med Internet Res. 2015;17(2):e52. doi: 10.2196/jmir.3951.

- 12.Jurafsky D, Martin J. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition. 2nd ed. Upper Saddle River, NJ: Pearson Prentice Hall; 2009.

- 13.Bickmore T, Gruber A, Picard R. Establishing the computer-patient working alliance in automated health behavior change interventions. Patient Educ Couns. 2005;59(1):21–30. doi: 10.1016/j.pec.2004.09.008.

- 14.Klein J, Moon Y, Picard RW. This computer responds to user frustration: theory, design, and results. Interacting with Computers. 2002;14(2):119–140.

- 15.Guy I. Searching by talking: analysis of voice queries on mobile web search. Proceedings of the 39th International ACM SIGIR conference on Research and Development in Information Retrieval. 2016. pp. 35–44.

- 16.Kappas A, Kuster D, Basedow C, Dente P. A validation study of the Affectiva Q-Sensor in different social laboratory situations. 53rd Annual Meeting of the Society for Psychophysiological Research. 2013.

- 17.Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700. doi: 10.1136/bmj.b2700.

- 18.Lorig K, Holman H, Sobel D, Laurent D, Gonzalez V, Minor M. Living a Healthy Life with Chronic Conditions: For Ongoing Physical and Mental Health Conditions. 4th ed. Boulder, CO: Bull Publishing Company; 2013.

- 19.Lorig KR, Holman H. Self-management education: history, definition, outcomes, and mechanisms. Ann Behav Med. 2003;26(1):1–7. doi: 10.1207/S15324796ABM2601_01.

- 20.Centers for Medicare and Medicaid Services. Chronic conditions [Internet] 2019. [cited 2019 May 22]. Available from: https://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/Chronic-Conditions/CC_Main.html

- 21.Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20:37–46.

- 22.Berkman ND, Sheridan SL, Donahue KE, Halpern DJ, Viera A, Crotty K, et al. Health literacy interventions and outcomes: an updated systematic review. Evidence Report/Technology Assessment No. 199. AHRQ Publication No. 11-E006; 2011.

- 23.Baskar J, Lindgren H. Human-Agent dialogues on health topics - an evaluation study. Communications in Computer and Information Science. 2015;524:28–39.

- 24.Elmasri D, Maeder A. A conversational agent for an online mental health intervention. Brain Informatics and Health: International Conference. 2016. pp. 243–251.

- 25.Gaffney H, Mansell W, Edwards R, Wright J. Manage Your Life Online (MYLO): a pilot trial of a conversational computer-based intervention for problem solving in a student sample. Behav Cogn Psychother. 2014;42(6):731–46. doi: 10.1017/S135246581300060X.

- 26.Fitzpatrick K, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR Ment Health. 2017;4(2):e19. doi: 10.2196/mental.7785.

- 27.Kazemi DM, Cochran AR, Kelly JF, Cornelius JB, Belk C. Integrating mHealth mobile applications to reduce high risk drinking among underage students. Health Education Journal. 2013;73(3).

- 28.Ly KH, Ly A, Andersson G. A fully automated conversational agent for promoting mental well-being: A pilot RCT using mixed methods. Internet Interv. 2017;10:39–46. doi: 10.1016/j.invent.2017.10.002.

- 29.Schroeder J, Wilkes C, Rowan K, Toledo A, Paradiso A, Czerwinski M, et al. Pocket skills: a conversational mobile web app to support dialectical behavioral therapy. Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. 2018. pp. 1–15.

- 30.Stein N, Brooks K. A fully automated conversational artificial intelligence for weight loss: longitudinal observational study among overweight and obese adults. JMIR Diabetes. 2017;2(2):e28. doi: 10.2196/diabetes.8590.

- 31.Tsiourti C, Joly E, Wings C, Moussa MB, Wac K. Virtual assistive companions for older adults: qualitative field study and design implications. Proceedings of the 8th International Conference on Pervasive Computing Technologies for Healthcare. 2014. pp. 57–64.

- 32.van Heerden A, Ntinga X, Vilakazi K. The potential of conversational agents to provide a rapid HIV counseling and testing services. International Conference on the Frontiers and Advances in Data Science. 2017.

- 33.Wang H, Zhang Q, Ip M, Lau JT. Social media-based conversational agents for health management and interventions. Computer. 2018;51(8):26–33.

- 34.Watson A, Bickmore T, Cange A, Kulshreshtha A, Kvedar J. An internet-based virtual coach to promote physical activity adherence in overweight adults: randomized controlled trial. J Med Internet Res. 2012;14(1):e1. doi: 10.2196/jmir.1629.

- 35.Kazemi DM, Borsari B, Levine MJ, Lamberson KA, Dooley B. REMIT: Development of a mHealth theory-based intervention to decrease heavy episodic drinking among college students. Addiction Research and Theory. 2018;26(5):377–385. doi: 10.1080/16066359.2017.1420783.

- 36.Scholten MR, Kelders SM, van Gemert-Pijnen JE. Self-guided web-based interventions: scoping review on user needs and the potential of embodied conversational agents to address them. J Med Internet Res. 2017;19(11):e383. doi: 10.2196/jmir.7351.

- 37.Laranjo L, Dunn AG, Tong HL, Kocaballi AB, Chen J, Bashir R, et al. Conversational agents in healthcare: a systematic review. J Am Med Inform Assoc. 2018;25(9):1248–1258. doi: 10.1093/jamia/ocy072.

- 38.Hoermann S, McCabe KL, Milne DN, Calvo RA. Application of synchronous text-based dialogue systems in mental health interventions: systematic review. J Med Internet Res. 2017;19(8):e267. doi: 10.2196/jmir.7023.

- 39.Provoost S, Lau HM, Ruwaard J, Riper H. Embodied conversational agents in clinical psychology: a scoping review. J Med Internet Res. 2017;19(5):e151. doi: 10.2196/jmir.6553.

- 40.Vaidyam AN, Wisniewski H, Halamka JD, Kashavan MS, Torous JB. Chatbots and conversational agents in mental health: a review of the psychiatric landscape. Can J Psychiatry. 2019;64(7):456–464. doi: 10.1177/0706743719828977.

- 41.Kramer LL, ter Stal S, Mulder BC, de Vet E, van Velsen L. Developing embodied conversational agents for coaching people in a healthy lifestyle: scoping review. J Med Internet Res. 2020;22(2):e14058. doi: 10.2196/14058.

- 42.Bickmore TW, Trinh H, Olafsson S, O’Leary TK, Asadi R, Rickles NM, et al. Patient and consumer safety risks when using conversational assistants for medical information: an observational study of Siri, Alexa, and Google Assistant. J Med Internet Res. 2018;20(9):e11510. doi: 10.2196/11510.

- 43.Bickmore T, Giorgino T. Health dialog systems for patients and consumers. Journal of Biomedical Informatics. 2006;39(5):556–571. doi: 10.1016/j.jbi.2005.12.004.

- 44.Amazon. Lex [Internet] 2020. [cited 2020 March 3]. Available from: https://aws.amazon.com/lex/

- 45.Wit.ai, Inc. Natural language for developers [Internet] 2020. [cited 2020 March 3]. Available from: https://wit.ai/

- 46.Google. Dialogflow [Internet] 2020. [cited 2020 March 3]. Available from: https://dialogflow.com/

- 47.Microsoft. Bot framework [Internet] 2020. [cited 2020 March 3]. Available from: https://dev.botframework.com/

- 48.Amazon. Introducing new Alexa healthcare skills. [Internet] 2020. [cited 2020 March 3]. Available from: https://developer.amazon.com/blogs/alexa/post/ff33dbc7-6cf5-4db8-b203-99144a251a21/introducing-new-alexa-healthcare-skills

- 49.Food and Drug Administration (US). Mobile medical applications [Internet] 2018. [cited 2019 May 22]. Available from: https://www.fda.gov/MedicalDevices/DigitalHealth/MobileMedicalApplications/ucm255978.htm