Key Points

Question

Using computational methods based on computer vision analysis, can a smartphone or tablet be used in real-world settings to reliably detect early symptoms of autism spectrum disorder?

Findings

In this study, a mobile device application deployed on a smartphone or tablet and used during a pediatric visit detected distinctive eye-gaze patterns in toddlers with autism spectrum disorder compared with typically developing toddlers. These patterns were characterized by reduced attention to social stimuli and deficits in coordinating gaze with speech sounds.

Meaning

These methods may have potential for developing scalable autism screening tools, exportable to natural settings, and enabling data sets amenable to machine learning.

Abstract

Importance

Atypical eye gaze is an early-emerging symptom of autism spectrum disorder (ASD) and holds promise for autism screening. Current eye-tracking methods are expensive and require special equipment and calibration. There is a need for scalable, feasible methods for measuring eye gaze.

Objective

Using computational methods based on computer vision analysis, we evaluated whether an app deployed on an iPhone or iPad that displayed strategically designed brief movies could elicit and quantify differences in eye-gaze patterns of toddlers with ASD vs typical development.

Design, Setting, and Participants

A prospective study in pediatric primary care clinics was conducted from December 2018 to March 2020, comparing toddlers with and without ASD. Caregivers of 1564 toddlers were invited to participate during a well-child visit. A total of 993 toddlers (63%) completed study measures. Enrollment criteria were aged 16 to 38 months, healthy, English- or Spanish-speaking caregiver, and toddler able to sit and view the app. Participants were screened with the Modified Checklist for Autism in Toddlers–Revised With Follow-up during routine care. Children were referred by their pediatrician for diagnostic evaluation based on results of the checklist or if the caregiver or pediatrician was concerned. Forty toddlers subsequently were diagnosed with ASD.

Exposures

A mobile app displayed on a smartphone or tablet.

Main Outcomes and Measures

Computer vision analysis quantified eye-gaze patterns elicited by the app, which were compared between toddlers with ASD vs typical development.

Results

Mean age of the sample was 21.1 months (range, 17.1-36.9 months), and 50.6% were boys, 59.8% White individuals, 16.5% Black individuals, 23.7% other race, and 16.9% Hispanic/Latino individuals. Distinctive eye-gaze patterns were detected in toddlers with ASD, characterized by reduced gaze to social stimuli and to salient social moments during the movies, and previously unknown deficits in coordination of gaze with speech sounds. The area under the receiver operating characteristic curve discriminating ASD vs non-ASD using multiple gaze features was 0.90 (95% CI, 0.82-0.97).

Conclusions and Relevance

The app reliably measured both known and new gaze biomarkers that distinguished toddlers with ASD vs typical development. These novel results may have potential for developing scalable autism screening tools, exportable to natural settings, and enabling data sets amenable to machine learning.

This case-control study examines whether a mobile app that displays strategically designed brief movies can elicit and quantify differences in eye-gaze patterns of toddlers with autism spectrum disorder (ASD) vs those with typical development.

Introduction

Humans have neural circuits that direct their gaze to salient social information, including others’ faces and eyes, facial expressions, voices, and gestures.1 A cortical network involving the inferior occipital gyrus, fusiform gyrus, superior temporal sulcus, and amygdala underlies perception of facial features and expressions,2 which interacts with regions involving reward, such as the ventral striatum, contributing to attentional preferences.3 Infants’ early attention to other people promotes learning about the social world through interaction with others.4 Autism spectrum disorder (ASD) is a neurodevelopmental condition characterized by decreased spontaneous visual attention to social stimuli, evident by 6 months of age.5 Diminished social visual engagement with others influences the early development of social brain circuitry and has been hypothesized to contribute to later deficits in social and communicative abilities associated with autism.6 Early intervention that promotes social engagement can potentially mitigate the cascading effects of reduced social attention on brain and behavioral development, leading to improved outcomes.7,8 Thus, there is a need for reliable, feasible methods for measuring gaze to facilitate early ASD detection.

Current methods for measuring gaze require specialized expensive equipment, calibration, and trained personnel, limiting their use for universal ASD screening, especially in low-resource settings.9,10 Caregiver-report ASD screening questionnaires include questions about social attention, but they require literacy and have lower performance with caregivers from minority racial/ethnic backgrounds and lower education.11,12 This disparity is notable, given documented delays in ASD screening and diagnosis of Black children.13

We developed a digital assessment tool for gaze monitoring that can be delivered on widely available devices by designing an app consisting of brief movies shown on an iPhone or iPad while the child’s behavior is recorded via the frontal camera embedded in the device, and using computer vision analysis to quantify eye-gaze patterns. The movies were strategically designed to assess social attention based on a priori hypotheses. No calibration, special equipment, or controlled environment was needed. We demonstrated that this tool reliably measures both known and novel social attention gaze biomarkers that distinguish toddlers with ASD vs typical development.

Methods

Participants

A total of 1564 caregivers were invited to participate in the study during a well-child primary care visit. Inclusion criteria were aged 16 to 38 months, not ill, and caregiver language of English or Spanish. Exclusionary criteria were sensory or motor impairment that precluded sitting or viewing the app. A total of 993 toddlers (63%) met enrollment criteria and completed study measures (see study flow diagram in eFigure 1 in the Supplement). Mean age of the study sample was 21.1 months (range, 17.1-36.9 months), and 50.6% were boys. Caregiver-reported race/ethnicity, in accordance with National Institutes of Health categories, was 59.8% White individuals, 16.5% Black individuals, 23.7% other race, and 16.9% Hispanic/Latino individuals. A total of 75.7% of caregivers had a college degree, 19.6% had at least a high school education, and 4.6% did not have a high school education. Reasons for exclusion were not meeting enrollment criteria, not interested, needing to take care of a sibling, not enough time, child too upset, and incomplete app or clinical information. Caregivers and legal guardians provided written informed consent, and the study was approved by the Duke University Health System institutional review board.

Measures

Modified Checklist for Autism in Toddlers–Revised With Follow-up

Caregivers completed the Modified Checklist for Autism in Toddlers–Revised With Follow-up (M-CHAT-R/F)11 during the well-child visit when the app was administered. M-CHAT-R/F consists of 20 questions about the presence or absence of autism symptoms, with a total score of 0 to 2 indicating low risk. Medium-risk scores (3-7) require follow-up questions.

Referral Determination and Diagnostic Assessments

Children whose scores revealed risk for ASD on the M-CHAT-R/F or whose caregiver or physician expressed a concern subsequently were referred by their physician for a diagnostic evaluation conducted by a licensed and research-reliable psychologist. The evaluation included the Autism Diagnostic Observation Schedule–Second Edition (ADOS-2)14 and Mullen Scales of Early Learning.15

Groups

Demographic and clinical characteristics of 3 subgroups are shown in eTable 1 in the Supplement. The 3 subgroups were defined as follows: the ASD group (n = 40) were children referred for evaluation and who met Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition criteria for ASD. Seventeen of 40 children (42.5%) had delayed nonverbal cognitive ability, defined as a score of 30 or less on the Mullen Visual Reception scale. The developmental delay/language delay group (n = 17) had “at risk” scores on the M-CHAT-R/F or had physician or caregiver concern, were referred for evaluation, were administered the ADOS-2 and Mullen scales, and were determined not to meet criteria for ASD. All children scored at least 9 points below the mean on at least 1 Mullen subscale (SD = 10). The typical development group (n = 936) was defined as having a high likelihood of typical development based on a negative M-CHAT-R/F score and no concern raised by the physician or caregiver, or having been referred according to a positive M-CHAT-R/F score (n = 1) or physician or caregiver concern (n = 7) and determined by the psychologist according to the ADOS-2 and Mullen scales to be developing typically. Although it is possible that a child in the typical development group had ASD, developmental or language delay, or both, it was not feasible to administer the ADOS-2 and Mullen scales to all children. Because of the low number of participants with developmental delay or language delay, descriptive results for this group are provided in eFigures 2 to 4 in the Supplement.

Digital App

The app was delivered in 4 clinics during a well-child visit (3 used an iPhone 8 Plus; 1 used a 9.7-in iPad). Caregivers held their child on their lap in well-lit rooms while brief, engaging movies were presented on the device set on a tripod approximately 60 cm (iPad) and approximately 30 cm (iPhone) away from the child. Caregivers were asked to refrain from instructing their child. The frontal camera recorded the child’s behavior at resolutions of 1280 × 720 (iPad) and 1920 × 1080 (iPhone), 30 frames per second. App administration took less than 10 minutes, with each movie no longer than 60 seconds. Some children (1 typical development, 23 ASD, and 11 developmental delay/language delay group) who had failed the M-CHAT-R/F received a second administration of either the iPad or iPhone app (the version they did not receive in the clinic) during their diagnostic evaluation. Repeating analyses using independent samples did not change any results (described in the Supplement).

Four movies (2 per device) assessing social preference depicted a person on one side and a toy (played with by the person) on the opposite side of the screen. A distractor toy on a shelf was in the top corner on the side of the screen with the toy. Two movies showed a man blowing bubbles (“blowing bubbles”) with a wand (iPad) or a bubble gun (iPhone). Two showed a woman spinning a top (iPad: “spinning top”) or a pinwheel (iPhone: “spinning pinwheel”). Similar to a probe used in the ADOS-2, at one point, the person paused expectantly before enthusiastically blowing bubbles (eFigure 3 in the Supplement). The dependent variable was percentage of frames with the child looking to the right side of the screen (percentage right), reflecting the amount of time spent looking at the person or the toys, depending on which side of the screen displayed the person and toys (counterbalanced).

One movie, displayed on the iPad only, assessed attention to speech and depicted 2 women facing each other, 1 on each side of the screen, who talked about a trip to the park (“fun at the park”; illustrated in eFigure 4 in the Supplement). A clock was shown in the upper right-hand corner. Typically developing 6- to 11-month-old infants shift their gaze in accordance with the flow of conversation when shown similar stimuli.16 We measured the time correlation between the conversation and the toddler’s gaze. English or Spanish (n = 65, 6.5%) versions of the app were shown, and actors were diverse in racial/ethnic background.

Two control movies without people or language were shown, one that displayed bubbles cascading down homogeneously across the screen with a gurgling sound (“floating bubbles” on both devices) and a second in which a puppy’s face appeared and the puppy barked in a repeating pattern: twice on the right and once on the left side of the screen (“puppy” on the iPad).

The percentages of assessments to which the child attended for the majority of the app administration were 95% and 87% for the iPhone and 95% and 93% for the iPad for the typical development and ASD groups, respectively.

Gaze Detection

Face Detection and Landmark Extraction

Each video was run through a face detection and recognition algorithm.17 Human supervision was used to verify the result of the face-tracking algorithm for fewer than 10 frames per video when the automatic tracking confidence was below a predefined threshold. Low tracking confidence is commonly associated with extreme head poses or rapid movement. For each frame in which the child’s face was detected, IntraFace was used to extract 49 facial landmarks and the head pose angles (yaw, pitch, and roll).18

Attention Filtering

A filter was applied to obtain valid gaze data that combined 3 rules: eyes were open, estimated by the eye aspect ratio as computed from the landmarks; estimated gaze was at or close to the area of the screen; and the face was relatively steady to filter frames in which quick head movements occurred.
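The three rules can be illustrated with a minimal sketch. It assumes the widely used eye aspect ratio definition over six eye-contour landmarks, gaze expressed in screen-normalized coordinates, and head steadiness approximated by the frame-to-frame change in yaw. The function names, the landmark ordering, and every threshold are hypothetical and are not taken from the study.

```python
import math

def eye_aspect_ratio(eye):
    """Eye aspect ratio from six (x, y) landmarks p1..p6 around one eye.

    Assumed ordering: p1 and p4 are the horizontal corners; p2, p3 lie on
    the upper lid and p6, p5 on the lower lid directly below them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def is_valid_frame(eye, gaze_xy, yaw_deg, prev_yaw_deg,
                   ear_min=0.2, screen_margin=0.25, max_yaw_step=5.0):
    """Combine the three rules. All thresholds are illustrative guesses."""
    # Rule 1: eyes open (aspect ratio collapses toward 0 when the lid closes).
    eyes_open = eye_aspect_ratio(eye) >= ear_min
    # Rule 2: gaze at or close to the screen, taken here as [0, 1] x [0, 1].
    x, y = gaze_xy
    on_screen = (-screen_margin <= x <= 1 + screen_margin
                 and -screen_margin <= y <= 1 + screen_margin)
    # Rule 3: head relatively steady between consecutive frames.
    steady = abs(yaw_deg - prev_yaw_deg) <= max_yaw_step
    return eyes_open and on_screen and steady
```

A frame is kept only when all three checks pass, so a blink, a glance away from the device, or a quick head turn each removes the frame from the gaze analysis.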

Gaze Features

To estimate gaze, we used iTracker.19 Raw gaze data were preprocessed with the attention filter, excluding frames in which the child was likely not attending to the movies. The Otsu20 method was applied to the valid-gaze horizontal coordinate to split the gaze data into 2 clusters (left and right). A silhouette score was used to assess how well separated the 2 clusters were.21 This measure compares the distance of samples within the same cluster with the distance to the neighbors in a different cluster. When 2 clusters are compact and far from each other, the distance to the neighbors in the same cluster will be smaller than the distance to samples outside of the cluster, leading to a silhouette score near its maximum. The clustering measure range is –1 to 1; the closer the measure is to 1, the stronger the separation and definition of the clusters.
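As a rough illustration of these two steps, the sketch below splits a list of horizontal gaze coordinates with Otsu's criterion and scores the split with the mean silhouette coefficient. It is written in plain Python for scalar data and is not the study's implementation: the threshold is searched over midpoints between sorted samples rather than histogram bins, the cluster above the threshold is assumed to be the right side of the screen, and all function names are hypothetical.

```python
from statistics import mean

def otsu_threshold(values):
    """Threshold maximizing between-class variance (Otsu's criterion)."""
    xs = sorted(values)
    n = len(xs)
    best_t, best_var = None, -1.0
    for i in range(1, n):
        if xs[i] == xs[i - 1]:
            continue  # no threshold can separate identical values
        w0, w1 = i / n, (n - i) / n
        between = w0 * w1 * (mean(xs[:i]) - mean(xs[i:])) ** 2
        if between > best_var:
            best_var, best_t = between, (xs[i - 1] + xs[i]) / 2.0
    if best_t is None:
        raise ValueError("need at least two distinct gaze values")
    return best_t

def silhouette_score_1d(values, labels):
    """Mean silhouette coefficient for two clusters of scalar values."""
    clusters = {c: [v for v, l in zip(values, labels) if l == c] for c in (0, 1)}
    scores = []
    for v, l in zip(values, labels):
        own, other = clusters[l], clusters[1 - l]
        if len(own) == 1:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        a = sum(abs(v - u) for u in own) / (len(own) - 1)  # within-cluster
        b = sum(abs(v - u) for u in other) / len(other)    # to other cluster
        scores.append((b - a) / max(a, b))
    return mean(scores)

def gaze_features(gaze_x):
    """Return (percentage right, silhouette score) for valid gaze frames."""
    t = otsu_threshold(gaze_x)
    labels = [1 if x > t else 0 for x in gaze_x]  # 1 = right (assumed)
    percent_right = 100.0 * sum(labels) / len(labels)
    return percent_right, silhouette_score_1d(gaze_x, labels)
```

A child whose gaze sits firmly on one side or the other yields two tight, distant clusters and a silhouette score near 1, whereas gaze scattered across the screen yields a lower score even when the percentage right is similar.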

Gaze-Speech Correlation

For the “fun at the park” movie, we labeled frames during which the person on the left or right was talking, obtaining a square-wave binary signal. The horizontal gaze coordinate was compared with the binary signal to assess the correlation between gaze and the alternating speech. We filtered those frames for valid gaze data and performed a binarization of the horizontal gaze coordinate (thresholding the signal), followed by a median filter for smoothing. The binary horizontal gaze coordinate was compared with the speech signal, and, allowing a time shift of no more than 2 seconds, the correlation between the signals was calculated.
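The procedure can be sketched as follows, under several assumptions that the text does not specify: the inputs are already restricted to valid-gaze frames and aligned frame by frame, the binarization threshold is the screen midpoint, the median kernel spans 5 frames, gaze is allowed to lag (but not lead) speech, and the reported value is the maximum Pearson correlation over the allowed lags. All names and defaults are hypothetical.

```python
from statistics import median

def median_filter(signal, k=5):
    """Median smoothing with an odd kernel and edge replication."""
    half = k // 2
    padded = [signal[0]] * half + list(signal) + [signal[-1]] * half
    return [median(padded[i:i + k]) for i in range(len(signal))]

def pearson(a, b):
    """Pearson correlation; returns 0.0 when either signal is constant."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / (va * vb) ** 0.5

def gaze_speech_correlation(gaze_x, speech, threshold=0.5,
                            fps=30, max_shift_s=2.0, kernel=5):
    """Best correlation between binarized gaze and the speech square wave.

    speech[t] is 1 while the right-hand speaker talks and 0 otherwise.
    """
    if len(gaze_x) != len(speech):
        raise ValueError("gaze and speech must have one value per frame")
    gaze_bin = median_filter([1 if x > threshold else 0 for x in gaze_x], kernel)
    best = -1.0
    for lag in range(int(max_shift_s * fps) + 1):
        g = gaze_bin[lag:]                  # gaze at frame t + lag
        s = speech[:len(speech) - lag]      # speech at frame t
        if len(g) < 2:
            break
        best = max(best, pearson(g, s))
    return best
```

With these choices, a child who reliably looks at whoever is speaking, even with a short reaction delay, scores close to 1, while gaze that stays on one side throughout scores 0.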

Statistical Analysis

Statistical differences between groups were computed with a 2-tailed Mann-Whitney U test, using the Python function scipy.stats.mannwhitneyu with the “alternative” parameter set to “two-sided.” Effect size r was evaluated with the rank-biserial correlation.22 A tree classifier was used to assess individual gaze features and their combination.23 The scikit-learn implementation sklearn.tree.DecisionTreeClassifier was used with input parameters “criterion = gini” (impurity metric), “max_depth = 2” for a single stimulus and “max_depth = 3” when combining multiple stimuli, “class_weight = balanced,” and “min_samples_leaf = 5.” Statistics were computed in Python with SciPy low-level functions version 1.4.1, Statsmodels version 0.10.1, and scikit-learn version 0.23.2.24,25,26 Significance was set at .05, and receiver operating characteristic curves and areas under the curve (AUCs) were obtained. CIs were computed with the Hanley and McNeil27 method at the 95% level.
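To make the effect size and CI formulas concrete, the following plain-Python sketch computes the Mann-Whitney U statistic by pair counting, converts it to a rank-biserial correlation, and derives a Hanley and McNeil interval for an AUC. It is an illustration of the standard formulas rather than the study's code (which used SciPy); the function names are hypothetical, and the sign of r depends on which group is passed first.

```python
import math

def mann_whitney_u(x, y):
    """U for x: number of (x_i, y_j) pairs with x_i > y_j; ties count 0.5."""
    return sum(1.0 if a > b else 0.5 if a == b else 0.0
               for a in x for b in y)

def rank_biserial(x, y):
    """Rank-biserial correlation r = 2U / (n_x * n_y) - 1, in [-1, 1]."""
    return 2.0 * mann_whitney_u(x, y) / (len(x) * len(y)) - 1.0

def hanley_mcneil_ci(auc, n_pos, n_neg, z=1.96):
    """Approximate CI for an AUC (Hanley and McNeil standard error)."""
    q1 = auc / (2.0 - auc)
    q2 = 2.0 * auc ** 2 / (1.0 + auc)
    var = (auc * (1.0 - auc)
           + (n_pos - 1) * (q1 - auc ** 2)
           + (n_neg - 1) * (q2 - auc ** 2)) / (n_pos * n_neg)
    se = math.sqrt(var)
    return max(0.0, auc - z * se), min(1.0, auc + z * se)
```

Because U divided by the number of pairs equals the AUC of the raw feature, r is simply 2 × AUC − 1, which links the effect sizes reported for single features to the classifier AUCs. The interval widens as either group shrinks, which is why the smaller group largely determines its width.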

Results

Social Preference Movies

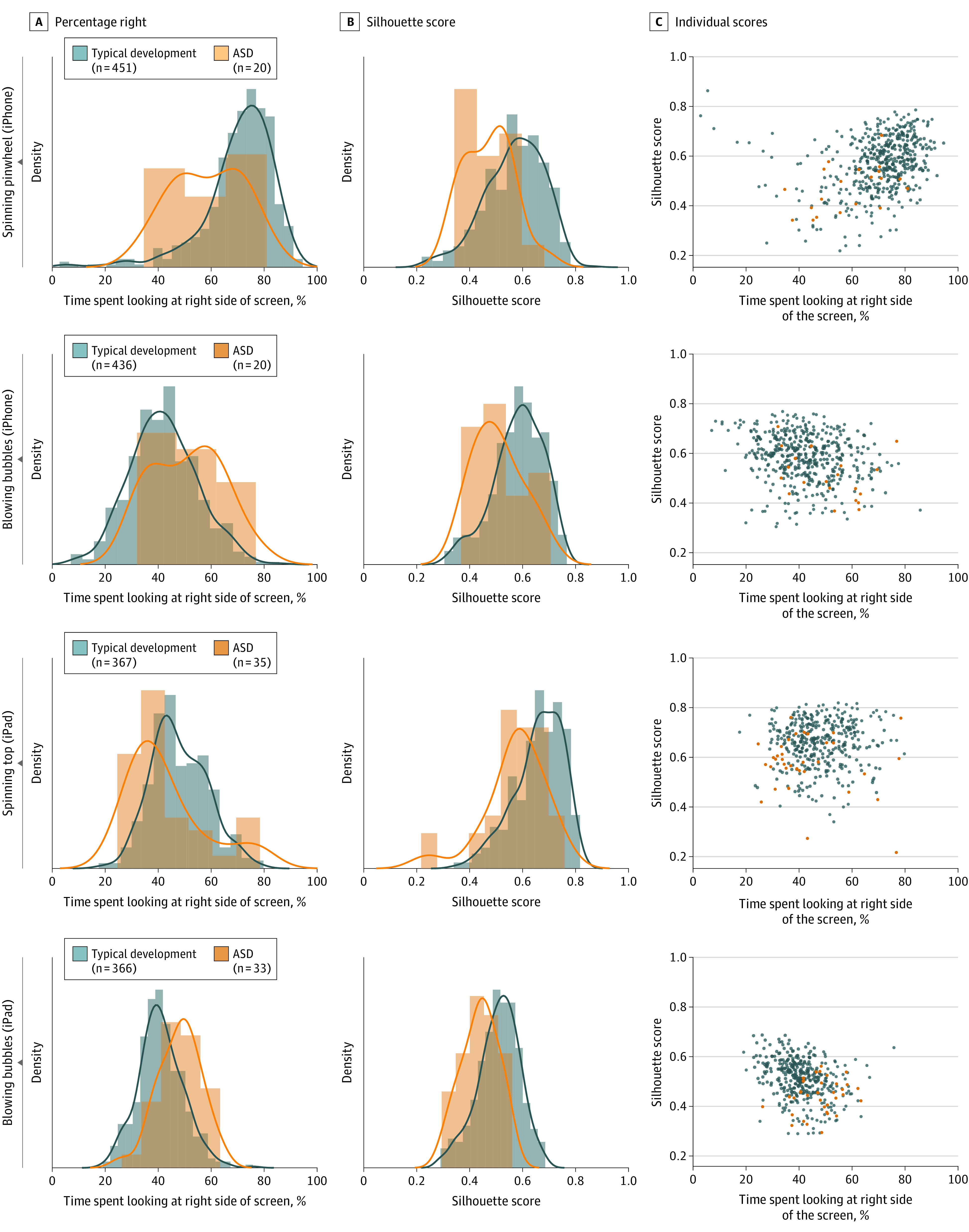

Figure 1 displays the distributions of percentage right gaze for the social preference movies and corresponding silhouette analyses. Analyses of percentage right and silhouette scores revealed significant differences between the typical development and ASD groups (“spinning pinwheel” [iPhone]: P < .001, r = 0.51 for percentage right, and P < .001, r = 0.52 for silhouette score; “blowing bubbles” [iPhone]: P = .007, r = 0.35 for percentage right, and P < .001, r = 0.44 for silhouette score; “spinning top” [iPad]: P = .001, r = 0.33 for percentage right, and P < .001, r = 0.46 for silhouette score; “blowing bubbles” [iPad]: P < .001, r = 0.47 for percentage right, and P < .001, r = 0.51 for silhouette score). Because there was a 3.3-month mean age difference for the typical development vs ASD groups (P < .001), we repeated these analyses with age-matched groups. We identified subgroups of matched typical development participants with a mean age of 22.7 and 23.0 months for the iPhone and iPad, respectively. Gaze differences between toddlers with ASD vs typical development in percentage right and silhouette scores for each of the movies remained significant (“spinning pinwheel” [iPhone]: P < .001, r = 0.49/P < .001, r = 0.51; “blowing bubbles” [iPhone]: P = .03, r = 0.29/P = .002, r = 0.41; “spinning top” [iPad]: P = .007, r = 0.29/P < .001, r = 0.44; “blowing bubbles” [iPad]: P < .001, r = 0.46/P < .001, r = 0.53).

Figure 1. Social vs Nonsocial Gaze Preference.

Gaze data for 4 movies that depicted a person on one side of the screen playing with toys located on the opposite side of the screen. A, Distribution of percentage right scores (percentage of time spent looking at the right side of the screen) for each movie. B, Distribution of silhouette scores for each movie. C, Scatterplots displaying individual participant percentage right (horizontal axis) and silhouette scores (vertical axis). For “spinning pinwheel” (iPhone), the person is on the right side of the screen; for “blowing bubbles” (iPhone), the person is on the left side; for “spinning top” (iPad), the person is on the right side; and for “blowing bubbles” (iPad), the person is on the left side. ASD indicates autism spectrum disorder.

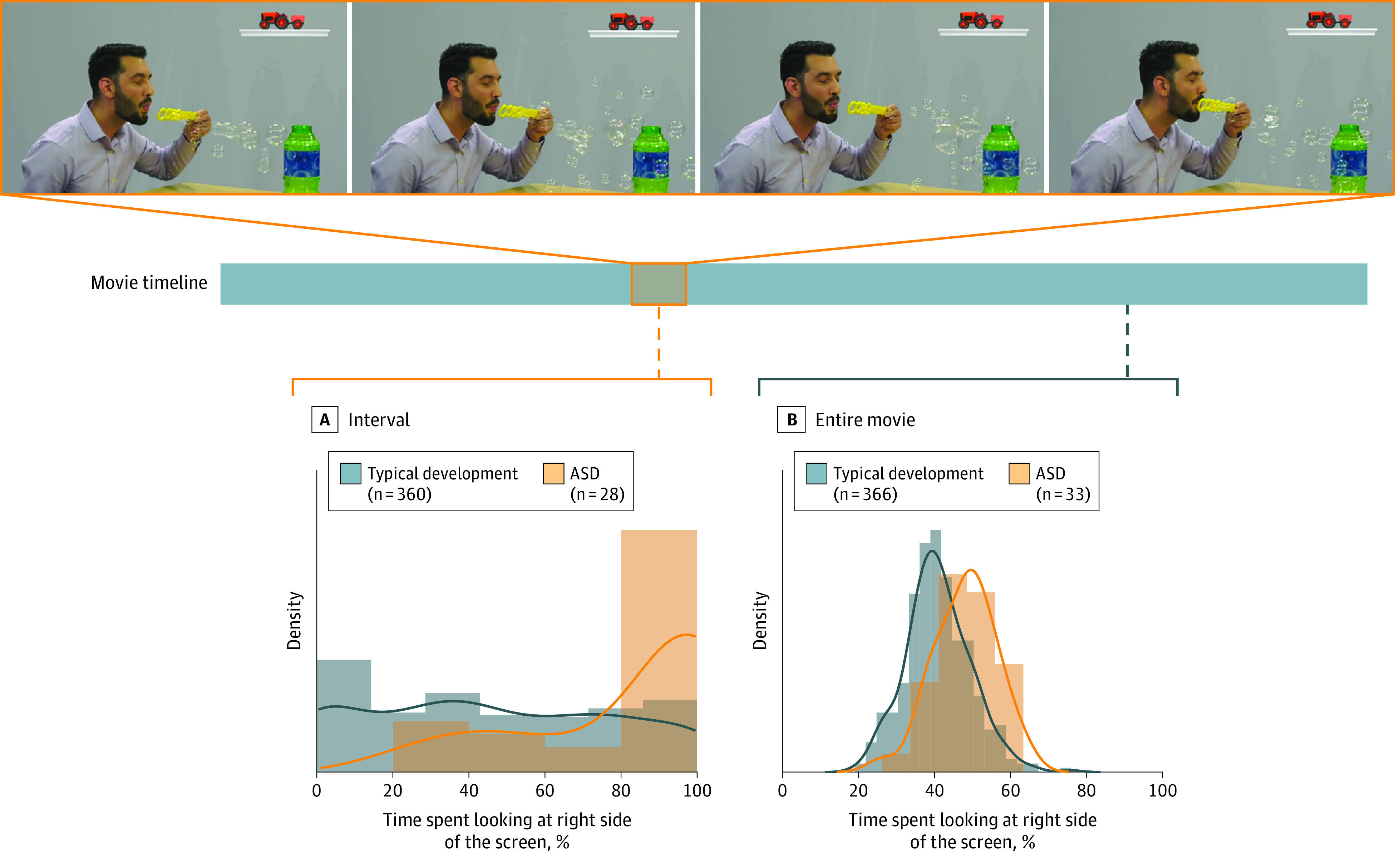

During “blowing bubbles” (iPad), at one point, the person paused expectantly before enthusiastically blowing bubbles, paralleling probes used in the ADOS-2. Consistent with our hypothesis, when the percentage right values were aggregated during this specific interval, the effect size for group differences increased from medium to large (Figure 2) (effect size increased from 0.35 to 0.53 for iPhone and 0.47 to 0.59 for iPad). Compared with children with typical development, those with ASD focused their gaze to a greater degree on the toys than on the person during this interval.

Figure 2. Gaze Patterns During Salient Social Moments.

Children’s gaze behavior was measured during a salient segment of the movie, during which the person paused expectantly before enthusiastically blowing bubbles. Displayed are the percentage right scores reflecting attentional preference for the toys (right side) vs the person (left side) for toddlers with typical development (blue) and autism spectrum disorder (orange) during this interval of the movie (A) and the entire movie (B).

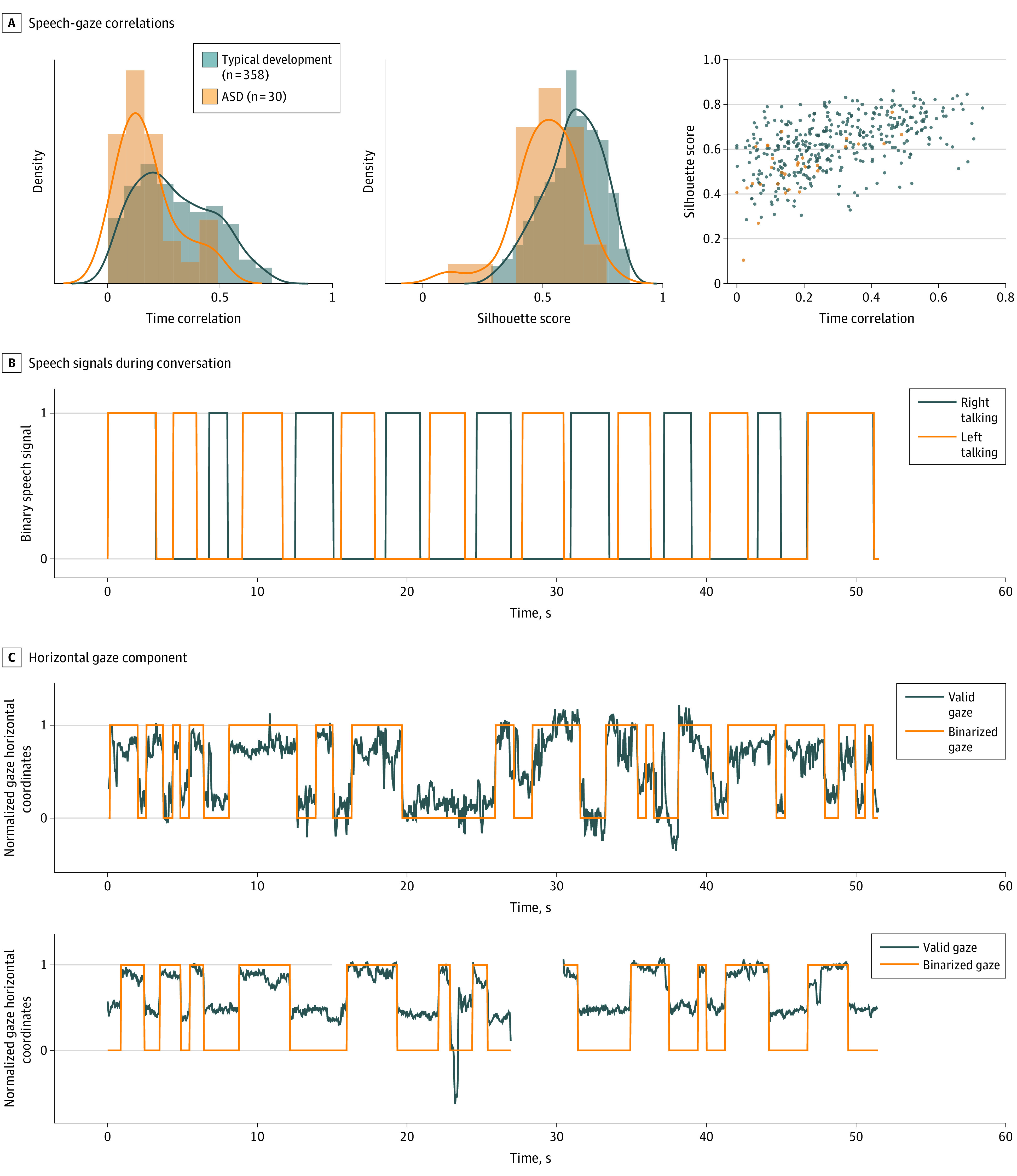

Coordination of Gaze With Conversation

Analysis of the movie “fun at the park” revealed significant differences between the ASD and typical development groups for gaze-speech time correlations (P < .001, r = 0.42) and left-right clusters silhouette scores (P < .001, r = 0.45). Figure 3 illustrates the time correlation and silhouette score distributions for the ASD and typical development groups and shows an example of the temporal gaze pattern for a toddler with typical development and a toddler with ASD. Whereas children with typical development showed a tight correlation between their gaze and the conversational flow, the toddlers with ASD showed a variable, less coordinated pattern. When this analysis of the time correlation and silhouette scores was repeated using age-matched samples, significant group differences remained (P < .001, r = 0.48 and P < .001, r = 0.49, respectively).

Figure 3. Gaze-Speech Coordination.

Results from a movie designed to assess gaze patterns when the child watched a conversation between 2 people displayed on opposite sides of the screen (illustrated in eFigure 4 in the Supplement). A, Distributions of speech-gaze time correlations and silhouette scores and scatterplot jointly showing individual data for these 2 measures for children with autism spectrum disorder (ASD) (orange) and typical development (blue). B, Alternating speech signal between 2 women having a conversation shown in the movie. C, Alternative gaze patterns in relationship to the conversational speech sounds for a child with autism spectrum disorder and a child with typical development.

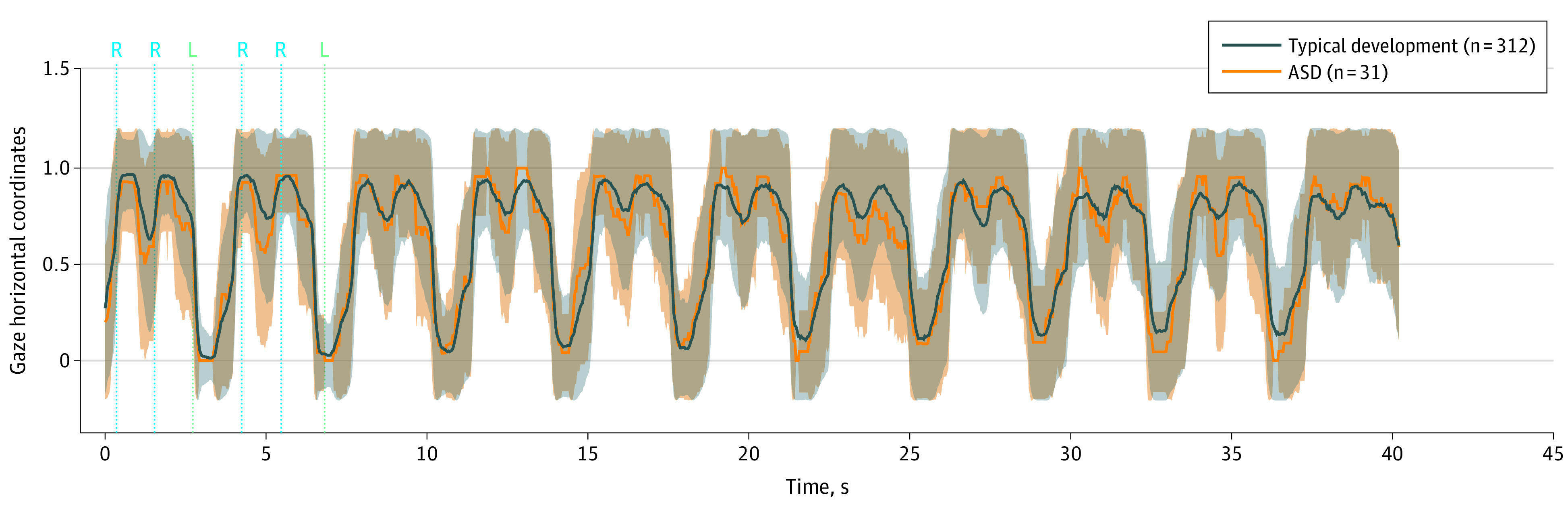

Control Movies

Analysis of the control movie “puppy” revealed that both the typical development and ASD groups alternated their gaze to the right and left side of the screen when the puppy appeared. As shown in Figure 4, both groups showed a clear right, right, left gaze-shift pattern, and significant group differences were not found for this movie (P = .07, r = 0.20). Similarly, a significant group difference in percentage right scores was not found for the second control movie, “floating bubbles” (P = .90, r = 0.17).

Figure 4. Gaze Shifts to Nonhuman Stimuli (Puppy).

In this control movie, a puppy’s face appeared twice on the right and once on the left. Shown are the mean (SD) gaze horizontal coordinates (lines represent means and shaded areas represent SDs) as the puppy’s face appeared in the right-right-left pattern on an iPad for children with typical development (blue) and autism spectrum disorder (ASD) (orange). On the vertical axis, 1 is right and 0 is left.

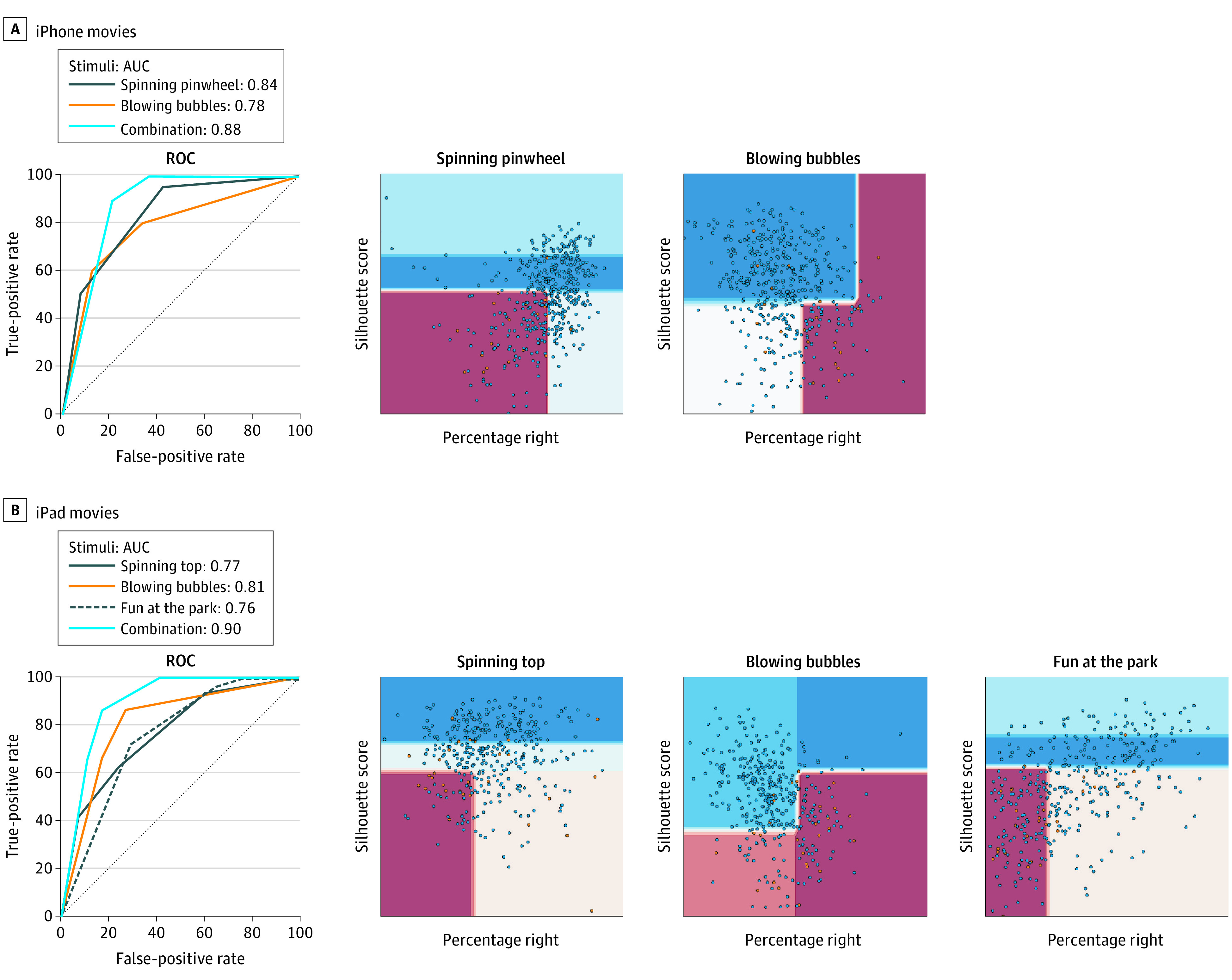

Combining Multiple Types of Gaze Features

We hypothesized that using multiple gaze features would improve group discrimination. We compared the performance of simple tree classifiers using the gaze features from individual movies and combined across movies. We fit the models to evaluate the accuracy of discrimination between ASD vs typical development. Figure 5 presents the receiver operating characteristic curves and AUC obtained for models trained for individual gaze features and their combination. The receiver operating characteristic shows the proportion of children with typical development incorrectly classified in the ASD group vs the proportion of children in the ASD group correctly classified as being diagnosed with ASD.
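The curve described here plots the false-positive rate against the true-positive rate as the decision threshold is lowered. The sketch below shows one way to compute those points and the trapezoidal AUC from classifier scores in plain Python; it is illustrative only, with hypothetical names and the assumption that a higher score means a more ASD-like prediction.

```python
def roc_curve_points(scores, labels):
    """(false-positive rate, true-positive rate) at each distinct threshold.

    labels: 1 = ASD, 0 = typical development.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, l in zip(scores, labels) if s >= t and l == 1)
        fp = sum(1 for s, l in zip(scores, labels) if s >= t and l == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points

def auc_trapezoid(points):
    """Area under a piecewise-linear ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

A shallow decision tree outputs only as many distinct scores as it has leaves, so its ROC curve has only a handful of vertices.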

Figure 5. Combining Gaze Features.

Receiver operating characteristic (ROC) curves and areas under the curve (AUCs) obtained for models trained on percentage right scores and gaze-speech correlations for each movie and their combination for the data collected on the iPhone (A) and iPad (B). Receiver operating characteristics appear nonsmooth due to the small number of levels in the model. On the right, the per-feature classification boundaries associated with the gaze features extracted from each movie are shown, as automatically computed by the classification strategy. A shallow tree was used to learn to classify from the data; the portions of the feature space associated with a higher risk of autism spectrum disorder (ASD) are shown colored.

On the iPhone, the AUCs for “spinning pinwheel” and “blowing bubbles” were 0.84 (95% CI, 0.72-0.94) and 0.78 (95% CI, 0.66-0.90), respectively. When the model was fitted combining the features of both movies, the AUC improved to 0.88 (95% CI, 0.78-0.98). On the iPad, the AUCs associated with “spinning top,” “blowing bubbles,” and “fun at the park” were 0.77 (95% CI, 0.67-0.87), 0.81 (95% CI, 0.72-0.91), and 0.76 (95% CI, 0.65-0.86), respectively. When these features were combined, AUC performance increased to 0.90 (95% CI, 0.82-0.97). eTable 2 in the Supplement provides the AUCs obtained for the models based on combining gaze features separately by sex and racial/ethnic background. The AUC values remained relatively consistent for these subgroups; however, CIs were larger owing to smaller samples.

Discussion

We demonstrated for the first time, to our knowledge, that an app deployed on relatively low-cost, widely available devices can reliably measure gaze and detect early ASD symptoms related to social attention. Eye-gaze data can be collected from toddlers sitting on a caregiver’s lap in pediatric clinics and quantified without any training, special setup, equipment, or calibration. Strategically designed, brief movies, shown on a tablet or smartphone, elicited distinctive patterns of gaze in toddlers with ASD, characterized by reduced preference for social stimuli, lower attentional focus on salient social segments of the movie, and previously unknown deficits in the ability to coordinate their gaze with the speech sounds of others. These results have broad scientific and clinical implications for human behavioral research. They open vast possibilities for gathering large data sets on eye-tracking biomarkers in real-world settings, amenable to big data analysis and machine learning, and applicable to a wide range of psychiatric and medical conditions characterized by atypical gaze patterns.28,29

The temporal resolution of computer vision analysis allowed detection of previously unknown deficits in gaze-speech coordination in toddlers with ASD. Whereas 6- to 11-month-old typically developing infants visually track the conversation between 2 people, toddlers with ASD showed less-coordinated gaze patterns.16 We also found differences in how toddlers with ASD pay attention to salient social moments in the movies, a feature that increased discrimination between toddlers with and without ASD (effect size increased from medium to large). Although any one feature showed overlap between toddlers with and without ASD, a computational approach was used to measure and integrate multiple features, obtaining significantly lower overlap between the groups. We plan to combine multiple gaze features with other behavioral features that can be measured with computer vision analysis using the same app, such as facial expressions,30,31 response to name,32,33 and sensorimotor behaviors.34 Because these behaviors develop during the first year of postnatal life, this approach could detect ASD risk behaviors during infancy.

Strengths and Limitations

A strength of this study is diversity of the sample, recruited from community-based clinics without a known risk factor, such as an older sibling with ASD, or prior ASD diagnosis. This is comparable to how screening tools would be used in real-world settings such as primary care. This study was not designed to evaluate this novel approach as a screening method for ASD. Our goal is to assess multiple types of behavioral features, including gaze, and use all features in combination to evaluate the sensitivity and specificity of the app as a screening tool. A limitation of this study is that the sample size was too small to reliably evaluate the effects of sex, race/ethnicity, and caregiver education. Larger samples and longitudinal information about child diagnosis are needed to evaluate the positive predictive value of this novel approach for detecting ASD risk. We hope a digital screening tool can be complementary to other assessment approaches and ultimately increase the accuracy, exportability, accessibility, and scalability of ASD screening, allowing improved risk detection and earlier intervention.35

eFigure 1. Study consort diagram

eTable 1. Demographic and clinical characteristics

eFigure 2. Social versus nonsocial gaze preference in toddlers with ASD, TD, and DDLD

eFigure 3. Gaze patterns of toddlers with ASD, TD, and DDLD during salient moments

eFigure 4. Correlation between gaze and speech in children with ASD, TD, and DDLD

Reanalyses of social attention data using independent samples

eTable 2. Model performance for combined features by sex, race, and ethnicity

eReference

References

- 1.Kennedy DP, Adolphs R. The social brain in psychiatric and neurological disorders. Trends Cogn Sci. 2012;16(11):559-572. doi: 10.1016/j.tics.2012.09.006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4(6):223-233. doi: 10.1016/S1364-6613(00)01482-0 [DOI] [PubMed] [Google Scholar]

- 3.Adolphs R. Cognitive neuroscience of human social behaviour. Nat Rev Neurosci. 2003;4(3):165-178. doi: 10.1038/nrn1056 [DOI] [PubMed] [Google Scholar]

- 4.Reynolds GD, Roth KC. The development of attentional biases for faces in infancy: a developmental systems perspective. Front Psychol. 2018;9:222. doi: 10.3389/fpsyg.2018.00222 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Chawarska K, Macari S, Shic F. Decreased spontaneous attention to social scenes in 6-month-old infants later diagnosed with autism spectrum disorders. Biol Psychiatry. 2013;74(3):195-203. doi: 10.1016/j.biopsych.2012.11.022 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Webb SJ, Jones EJ, Kelly J, Dawson G. The motivation for very early intervention for infants at high risk for autism spectrum disorders. Int J Speech Lang Pathol. 2014;16(1):36-42. doi: 10.3109/17549507.2013.861018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Dawson G, Jones EJ, Merkle K, et al. Early behavioral intervention is associated with normalized brain activity in young children with autism. J Am Acad Child Adolesc Psychiatry. 2012;51(11):1150-1159. doi: 10.1016/j.jaac.2012.08.018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Jones EJH, Dawson G, Kelly J, Estes A, Webb SJ. Parent-delivered early intervention in infants at risk for ASD: effects on electrophysiological and habituation measures of social attention. Autism Res. 2017;10(5):961-972. doi: 10.1002/aur.1754 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Chita-Tegmark M. Social attention in ASD: a review and meta-analysis of eye-tracking studies. Res Dev Disabil. 2016;48:79-93. doi: 10.1016/j.ridd.2015.10.011 [DOI] [PubMed] [Google Scholar]

- 10.Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Defining and quantifying the social phenotype in autism. Am J Psychiatry. 2002;159(6):895-908. doi: 10.1176/appi.ajp.159.6.895 [DOI] [PubMed] [Google Scholar]

- 11.Chlebowski C, Robins DL, Barton ML, Fein D. Large-scale use of the modified checklist for autism in low-risk toddlers. Pediatrics. 2013;131(4):e1121-e1127. doi: 10.1542/peds.2012-1525 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Khowaja MK, Hazzard AP, Robins DL. Sociodemographic barriers to early detection of autism: screening and evaluation using the M-CHAT, M-CHAT-R, and Follow-Up. J Autism Dev Disord. 2015;45(6):1797-1808. doi: 10.1007/s10803-014-2339-8 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Constantino JN, Abbacchi AM, Saulnier C, et al. Timing of the diagnosis of autism in African American children. Pediatrics. 2020;146(3):e20193629. doi: 10.1542/peds.2019-3629 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Gotham K, Risi S, Pickles A, Lord C. The Autism Diagnostic Observation Schedule: revised algorithms for improved diagnostic validity. J Autism Dev Disord. 2007;37(4):613-627. doi: 10.1007/s10803-006-0280-1 [DOI] [PubMed] [Google Scholar]

- 15.Mullen E. Mullen Scales of Early Learning. Western Psychological Services Inc; 1995. [Google Scholar]

- 16.Augusti EM, Melinder A, Gredebäck G. Look who’s talking: pre-verbal infants’ perception of face-to-face and back-to-back social interactions. Front Psychol. 2010;1:161. doi: 10.3389/fpsyg.2010.00161 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. King DE. Dlib-ml: a machine learning toolkit. J Mach Learn Res. 2009;10:1755-1758.

- 18. De la Torre F, Chu W, Xiong X, Vicente F, Ding X, Cohn J. IntraFace. Presented at: IEEE International Conference on Automatic Face and Gesture Recognition; May 4-8, 2015; Ljubljana, Slovenia.

- 19. Krafka K, Khosla A, Kellnhofer P, et al. Eye-tracking for everyone. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016:2176-2184.

- 20. Otsu N. A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern. 1979;9(1):62-66. doi: 10.1109/TSMC.1979.4310076

- 21. Rousseeuw PJ. Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. J Comput Appl Math. 1987;20:53-65. doi: 10.1016/0377-0427(87)90125-7

- 22. Cureton EE. Rank-biserial correlation. Psychometrika. 1956;21(3):287-290. doi: 10.1007/BF02289138

- 23. Breiman L, Friedman J, Olshen R, Stone C. Classification and Regression Trees. Wadsworth; 1984.

- 24. Jones E, Oliphant T, Peterson P, et al. SciPy: Open Source Scientific Tools for Python. 2001. http://www.scipy.org

- 25. Seabold S, Perktold J. Statsmodels: econometric and statistical modeling with Python. Presented at: Proceedings of the 9th Python in Science Conference; June 28-July 3, 2010; Austin, Texas.

- 26. Vallat R. Pingouin: statistics in Python. J Open Source Softw. 2018;3(31):1026. doi: 10.21105/joss.01026

- 27. Hanley JA, McNeil BJ. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982;143(1):29-36. doi: 10.1148/radiology.143.1.7063747

- 28. Levy DL, Sereno AB, Gooding DC, O’Driscoll GA. Eye tracking dysfunction in schizophrenia: characterization and pathophysiology. Curr Top Behav Neurosci. 2010;4:311-347. doi: 10.1007/7854_2010_60

- 29. Tao L, Wang Q, Liu D, Wang J, Zhu Z, Feng L. Eye tracking metrics to screen and assess cognitive impairment in patients with neurological disorders. Neurol Sci. 2020;41(7):1697-1704. doi: 10.1007/s10072-020-04310-y

- 30. Carpenter KLH, Hashemi J, Campbell K, et al. Digital behavioral phenotyping detects atypical pattern of facial expression in toddlers with autism. Autism Res. 2021;14(3):488-499.

- 31. Egger HL, Dawson G, Hashemi J, et al. Automatic emotion and attention analysis of young children at home: a ResearchKit autism feasibility study. NPJ Digit Med. 2018;1:20. doi: 10.1038/s41746-018-0024-6

- 32. Campbell K, Carpenter KL, Hashemi J, et al. Computer vision analysis captures atypical attention in toddlers with autism. Autism. 2019;23(3):619-628. doi: 10.1177/1362361318766247

- 33. Perochon S, Di Martino M, Aiello R, et al. A scalable computational approach to assessing response to name in toddlers with autism. J Child Psychol Psychiatry. Published online February 28, 2021. doi: 10.1111/jcpp.13381

- 34. Dawson G, Campbell K, Hashemi J, et al. Atypical postural control can be detected via computer vision analysis in toddlers with autism spectrum disorder. Sci Rep. 2018;8(1):17008. doi: 10.1038/s41598-018-35215-8

- 35. Dawson G, Sapiro G. Potential for digital behavioral measurement tools to transform the detection and diagnosis of autism spectrum disorder. JAMA Pediatr. 2019;173(4):305-306. doi: 10.1001/jamapediatrics.2018.5269

Supplementary Materials

eFigure 1. Study CONSORT diagram

eTable 1. Demographic and clinical characteristics

eFigure 2. Social versus nonsocial gaze preference in toddlers with ASD, TD, and DDLD

eFigure 3. Gaze patterns of toddlers with ASD, TD, and DDLD during salient moments

eFigure 4. Correlation between gaze and speech in children with ASD, TD, and DDLD

Reanalyses of social attention data using independent samples

eTable 2. Model performance for combined features by sex, race, and ethnicity

eReference