Abstract

To support the successful adoption of digital measures into internal decision making and evidence generation for medical product development, we present a unified lexicon to aid communication throughout this process, and highlight key concepts including the critical role of participant engagement in development of digital measures. We detail the steps of bringing a successful proof of concept to scale, focusing on key decisions in the development of a new digital measure: asking the right question, optimized approaches to evaluating new measures, and whether and how to pursue qualification or acceptance. Building on the V3 framework for establishing verification and analytical and clinical validation, we discuss strategic and practical considerations for collecting this evidence, illustrated with concrete examples of trailblazing digital measures in the field.

Keywords: Digital measures, Digital endpoints, Acceptance, V3, Qualification, Patient centricity

Key Points

A common, unifying lexicon, including key terminology relevant to evaluation and regulatory acceptance and/or qualification, is necessary for the successful development of digital measures for use in medical product development.

Early and continuous patient and stakeholder engagement is critical to defining a concept of interest that will remain relevant throughout the digital measure development process.

Establishing proof of concept is a key step in de-risking further investment into developing a digital measure.

Where regulatory acceptance and/or qualification is required, early engagement with regulators is critical.

The evidence and approach required for digital measure evaluation mirror those required for regulatory acceptance and/or qualification of an endpoint.

Evaluation in the absence of a high-quality comparator measure is highly challenging but also highly impactful, and it is essential for medicinal product innovation in underserved indications and populations.

Introduction

Maturation of the digital health field, and of digital measures in particular, requires that successful proofs of concept evolve into tools that can serve as the basis for decision making in clinical development. Frameworks have been proposed for the overall digital measure development and evaluation process [1] and, specifically, for evaluating whether a digital measure is fit for purpose [2]. We build on those works by providing current examples of digital health measurement tools, and we extend those frameworks by presenting key decisions and challenges at different stages of this journey, together with practical considerations at each step. Our aim is to help apply those frameworks in practice and to help more relevant, high-quality digital measures make the transition from proof of concept to scale. Here, we use examples to support the development of digital clinical endpoints for medical product development through collaboration and shared industry best practices, and we make recommendations for accelerating the process. Previously, industry often perceived adding novel digital measures to a trial as too risky. Given the COVID-19 crisis, we argue that it is now too risky not to [3]. As our reliance on digital drug development tools increases, so too must the rigor and consistency of their evaluation.

The Digital Measurement Lexicon

The lack of a common, broadly accepted lexicon for digital measures often causes confusion when developing digital measures of health. In particular, discussion is confused by the conflation of measure, outcome, and endpoint [4, 5], and by overlap between the development, evaluation, acceptance, and qualification of a new measure.

For the purpose of this article, we use digital measure as an all-inclusive term, encompassing all stages of maturity, settings, and technologies [4]. We do, however, restrict the term to measures arising from “connected digital products” [6] and focus specifically on sensor-derived measures; i.e., we include active tests captured via a mobile platform and continuous, passive data collected from wearable technology, but exclude electronic patient-reported outcomes and other subjective measures collected from mobile platforms.

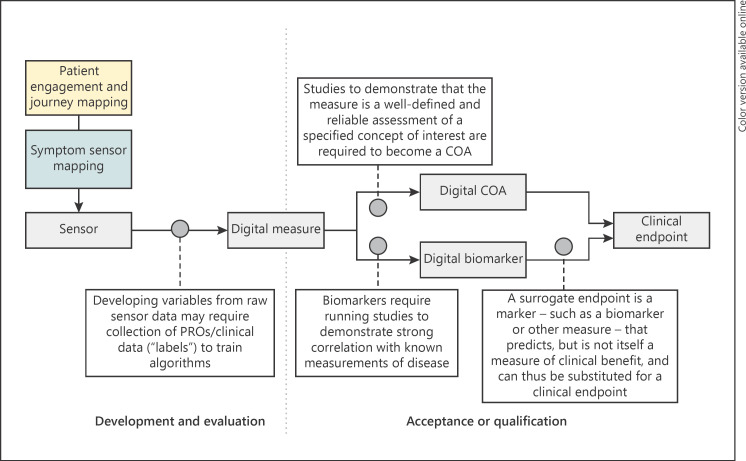

Depending on the level of maturity, measures differ in what they enable us to infer and state about an individual's health. Put simply, getting from a basic measurement collected from a technology to a robust decision-making tool for clinical care or research requires multiple stages of longitudinal validation alongside existing clinical practice [1]. Figure 1 summarizes how these stages relate to each other as we move from defining an initial concept, through initial implementation, and finally to the endpoint.

Fig. 1.

From sensor to endpoint: the process of developing digital measures. Key phases of patient and stakeholder engagement, digital measure development and evaluation, and acceptance and qualification are outlined. Key practical differences are highlighted. Left: development and evaluation; participant engagement defines the key COI, sensor mapping defines how the COI might be captured, and this initial proof of concept is evaluated as a robust digital measure. This is typically done in a separate observational/cross-sectional study or directly as an exploratory aim in a clinical trial (or substudy). Right: acceptance and/or qualification: the developed measure is assessed to be fit for purpose, enabling it to be used as a basis for clinical decision making, e.g., for inclusion decisions, or promotion from exploratory to secondary endpoint. This process typically involves longitudinal clinical studies where the novel measure can be directly compared to the existing standard practice and/or gold standard measures. https://www.fda.gov/drugs/development-approval-process-drugs/drug-development-tools-ddts.

Development refers to the earliest phases of maturity, starting with participant engagement and the establishment of basic feasibility and proof of concept. A key early result is defining meaningful aspects of health (MAH) and mapping these to concepts of interest (COI) that the new digital measure should address [7]. Evaluation is the structured assessment of whether the proposed digital measure is suitable for addressing a specific COI. A successfully evaluated, robust digital measure can be used as an exploratory measure in clinical development or as a basis for internal decision making. We reference the V3 evaluation framework of verification, analytical validation, and clinical validation as best practice for digital measure evaluation [2]. Note that our definition of evaluation focuses on the measure itself, not on the ability of a technology to capture a measure; this “technical” evaluation has been covered in depth elsewhere [1]. Use of a digital measure for decision making in clinical development requires regulatory interaction and either acceptance or qualification [8, 9]. In line with US Food and Drug Administration (FDA) guidance [8], we distinguish acceptance, which is tied to an individual drug or biologic application, from qualification, a separate and general process that allows use of the measure across applications, potentially without further justification. Qualified and accepted examples are listed in the FDA compendium (note that this compendium is not restricted to digital measures) [10].

When acceptance or qualification is pursued, a digital measure will be classified as either a biomarker or a clinical outcome assessment (COA). A biomarker is a defined measure of normal or pathogenic processes, or of response to therapy, whereas a COA reflects how a given patient feels, functions, or survives. This classification depends heavily on the context of use (COU) and the COI, and indeed a given measure can be classified as either, depending on the COU. For example, gait speed as a measure of function and independence would be a COA, but as a predictor of later mortality it would be a biomarker.

Table 1 defines the different measurement development stages and types, giving digital examples alongside canonical, non-digital examples for comparison. In this rapidly moving field, it should be noted that a truly accepted lexicon has not yet been established, and many of these terms can be expected to evolve further.

Table 1.

Phases of digital measurement, definition, and examples

| Term | Phase | Definition | Canonical example | Digital example |
|---|---|---|---|---|
| Proof of concept | Development | Measurement from a digital device that has not yet begun formal evaluation | N/A | Passive monitoring of patients with Parkinson disease [11] or individuals with mild cognitive impairment [12]; smartphone-based eye tracking in autism spectrum disorders [13]; movement patterns correlating with mood disorder classification [14] |
| Digital measure | Evaluation | Measurement from a digital device that has been assessed as verified and analytically and clinically validated | N/A | Gait speed during active minutes [15]; corridor gait speed in older adults at home [16] |
| Clinical outcome assessment | Acceptance or qualification | A well-defined and reliable assessment of a concept of interest that describes or reflects how a patient feels, functions, or survives | Self-reported 36-Item Short Form Survey physical function index [17] | FLOODLIGHT [18], mPOWER [19], Cognition Kit [20], PARADE [21] |
| Biomarker | Acceptance or qualification | A defined characteristic that is measured as an indicator of normal biological processes, pathogenic processes, or a response to an exposure or intervention, including therapeutic interventions | Thalamic cholinergic innervation and postural sensory integration function and gait speed reduction in Parkinson disease [22, 23] | Median gait speed in sarcopenia, where the metric has been shown to predict other outcomes [24, 25]; GPS mobility in schizophrenia [26] |
| Surrogate endpoint | Acceptance or qualification | A statistically defined event or outcome that can be measured objectively to determine whether the intervention being studied is beneficial | 40-m improvement on the 6-min walk test in pulmonary hypertension [27, 28] | Submaximal real-world gait speed in DMD, which has been shown to be sensitive to treatment response and to correlate with a mobility COA [29, 30] |

Having established our lexicon, we now address practical issues arising in the development and evaluation, and in the acceptance or qualification, of a new digital measure.

Continuous Patient and Stakeholder Engagement

Evaluating a new measure and gaining acceptance or qualification is a long journey that, depending on the degree of novelty, can take as long as drug discovery itself [3, 29, 30]. We define measure development success as creating a measure that (1) matters to patients, reflecting aspects of their health and condition that are meaningful to them, and (2) ultimately succeeds in supporting the clinical development of new interventions that address patients' needs. Such success depends on getting the first steps of the process right: patient engagement in defining the COI and in implementing the digital measure is critical [31, 32].

Recent work has detailed the process of collecting participant experience and converting that information into specific COI [5]. In Table 2, we recap the key concept definitions and reconcile them with the framework for digital measures outlined above.

Table 2.

Key concepts in mapping participant experience to digital measures

| Concept | Definition | Patient input | Considerations |
|---|---|---|---|
| MAH | Aspect of a disease that a patient: (1) does not want to become worse, (2) wants to improve, or (3) wants to prevent | What do you wish that you could do, but your condition prevents you from doing it? What part of your life is most frustratingly impacted by your condition? | May be shared across some conditions and diseases |
| COI | Simplified or narrowed element that can be practically measured | What are the symptoms that most impact your ability to do these activities? | Patients may have different symptoms. Symptoms may vary over time. Symptom relevance may vary over time. |
| Measure | Specific measurable characteristics | Do these measures make sense to you? | Measures may be relevant to multiple symptoms. Assess the technical specifications of the sensor and whether it is suitable for measuring this outcome in this population. |
| Endpoint | Precisely defined, statistically analyzed variables with demonstrated relevance to clinical benefit | How much change do we need to see in this symptom before it really starts to make a positive difference in your life? | Sensors may support multiple measures and endpoints |

Reproduced and extended with permission from Manta et al. [5]

Patient and stakeholder engagement should include all people with direct experience of the disease in question, spanning all relevant groups, including patients, caregivers, and healthcare practitioners. Each of these groups should in turn include all relevant demographics, in order to reveal whether those demographics are differentially affected by the disease or otherwise have differing needs. For example, a recent qualitative study showed that technology adoption among older, low-income immigrant adults was heavily influenced by their cultural background [33].

There is an active body of research seeking to improve the science of patient engagement in biomedical research [7]. We cannot hope to summarize that work here, but medical product manufacturers seeking to follow European Medicines Agency (EMA) [9] and FDA Center for Drug Evaluation and Research (CDER) [34] guidance on participant involvement should invest in qualitative and quantitative research on patient needs, in addition to the widespread practice of key opinion leader interviews. However, substantive (rather than superficial) patient and stakeholder engagement remains rare in biomedical research [35], and this extends to the development of digital measures [36, 37].

Poor patient and stakeholder engagement in the selection and development of new digital measures of health is particularly disappointing because highly engaged patient advocacy groups, representing a broad range of patient needs, already exist in many therapeutic areas. Beyond self-organizing, many of these organizations have already taken large strides in articulating the needs of their communities and in encouraging and enabling research to meet those needs. Excellent examples include the Michael J. Fox Foundation [38], which has actively guided investigators toward key topics and played a major role in putting Parkinson disease and related neurodegenerative conditions at the forefront of digital health research; the Parent Project Muscular Dystrophy [39], whose work helped bring focus to research on this rare condition, ultimately shaping regulatory policy [40] and directly contributing to digital measure development [29, 30]; and the American Association of Heart Failure Nurses, which has clearly articulated unmet needs from the patient perspective, including symptom recognition and coordination of care [41].

Identifying unmet patient needs is critical because it defines the question you are trying to answer. Patient and stakeholder engagement may highlight needs unmet by current standard practice in several ways: by improving our basic understanding of the disease (e.g., a subpopulation of patients may have a distinct set of needs not addressed by standard practice), by defining a new COI (e.g., a symptom underrepresented in clinical development decision making), or by identifying existing COI whose current assessment is burdensome or lacks face validity [32, 35, 42]. Equally, as for trial inclusion, it is important to consult diverse and representative cohorts so that specific demographics are not under- or misrepresented [34].

Patient engagement will often identify MAH that are not addressed by currently available interventions or measures. These situations provide high-impact opportunities to develop new digital measures that target MAH more directly than traditional and potentially less meaningful endpoints. Next, measure developers map MAH to COI, which in turn provide the basis for developing a measure [7]. Many COI are relatively easy to convert into simple measures, but others tend toward higher-level concepts related to health-related quality of life. In the former case, COI related to restrictions in activities of daily living [43] or to upper limb mobility limitations can be measured directly in performance tests [44]. In the latter case, the anxiety arising from being a patient cannot simply be reduced to a single measurement concept.

Working in partnership with patients and their caregivers is critical to defining a COI that will remain relevant throughout the long journey ahead [45].

Establishing Proof of Concept

Once a guiding COI has been established, initial investigations typically seek to de-risk further investment by demonstrating, in a smaller-scale proof of concept, that the proposed approach and its expected benefits are more than hypothetical [46]. At this stage it may not be obvious which data streams are most informative, requiring a head-to-head design that compares data from several sensors [47], or it may not even be clear that person-generated health data (PGHD) can address the question at all [48, 49]. The available technologies may not be mature enough to deploy in clinical development (e.g., they may lack sufficient security provisions or may not comply with the General Data Protection Regulation (GDPR), which would exclude their use at European sites) [50], or the data collection protocol may still need to be established [51]. Lastly, these smaller studies can demonstrate that the proposed approach is feasible and has face validity with patients, and that patients, sites, and caregivers can reasonably be expected to shoulder the additional burden of contributing PGHD [52]. All trials ultimately depend on patients contributing data and adhering to protocols; early engagement of patients and their representative groups therefore minimizes the chance that the proposed approach proves unacceptable or otherwise lacks the support of intended trial participants.

Note that we use the term person-generated health data [53], an update of the original term patient-generated health data [54]. This seemingly small change highlights that digital health, in particular through consumer health devices, can capture data from before a person becomes a patient and offer insights into, for example, early risk signals.

Proof of concept can be established in cross-sectional observational studies or substudies [51], or as an exploratory variable in a smaller (phase 2a or 2b) longitudinal investigational study [25]. Timelines and resources tend to dictate which approach is taken. Separate studies are independent by definition and allow a protocol focused solely on beginning a deeper evaluation of the measure, but they require sufficient planning and time. Integrating the measure into a larger study risks failure if the timelines or budget of the “main” study are threatened, but, if successful, it offers a faster path to the evidence package required by the V3 evaluation framework [2], collecting data to explore clinical validation and even analytical validation and verification [55].

Evidence Generation for Evaluation

A successful proof of concept is a key step, yet several hurdles lie ahead in establishing a more complete evaluation of a new digital measure. The burden of evaluation has been conceptualized as a 2-dimensional matrix that groups strategies according to whether the COI/setting is novel and whether the measurement is novel [3]. In practice, these categories differ in the tools available for evidence generation, which in turn strongly influences how straightforward it will be to establish analytical and clinical validation of your new measure.

Does a High-Quality Measure that Assesses the COI Already Exist?

This is the “simplest” situation: your measure is an attempt to improve on an established measure in the same setting. Analytical validation can be established through a study design that allows simultaneous capture of both the established measure and the new measure. Clinical validation is established by demonstrating equivalence to the established measure, which has itself been shown to be clinically valid. Examples in this category often seek to lower the burden (e.g., the new measure is easier to capture) [13, 56] or to improve statistical performance (e.g., the new measure demonstrates lower inter-test variability) [29].
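As an illustration of the simultaneous-capture design described above, agreement between an established and a new measure is often summarized with a Bland-Altman analysis: the mean difference (bias) and the 95% limits of agreement. The sketch below is a minimal, hypothetical example assuming paired gait-speed readings in m/s; the function name `bland_altman` and all data values are invented for illustration and are not taken from the cited studies.

```python
from statistics import mean, stdev


def bland_altman(reference, new):
    """Return (bias, lower, upper): mean difference and 95% limits of agreement.

    Differences are new - reference; the limits are bias +/- 1.96 * SD of the
    differences, which assumes the differences are roughly normally distributed.
    """
    if len(reference) != len(new) or len(reference) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [n - r for r, n in zip(reference, new)]
    bias = mean(diffs)
    sd = stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical paired gait speeds (m/s) captured simultaneously:
# a timed walk (established measure) and a wearable estimate (new measure).
timed_walk = [1.10, 0.95, 1.30, 0.80, 1.05]
wearable = [1.12, 0.93, 1.33, 0.83, 1.04]

bias, lower, upper = bland_altman(timed_walk, wearable)
print(f"bias = {bias:.3f} m/s, 95% limits of agreement = ({lower:.3f}, {upper:.3f})")
```

What bias and limits count as acceptable is a judgment tied to the context of use and is best prespecified; the calculation itself does not decide it.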

A related concept is to capture PGHD during an established performance assessment. Such performance outcomes are typically assessed as part of a battery of related tests (e.g., the Short Physical Performance Battery [57]), which requires considerable time and effort from patients and sites. Digital measures can reduce this burden and add value in several ways. For example, they can enable subscores to be derived and additional insights to be extracted from an assessment (e.g., deriving gait parameters from a Timed-Up-and-Go test) [29, 58]. Similarly, data from one test can be used to predict or recapitulate the results of another; for example, the results of physical performance tests that contain both sit-to-stand transitions and walking can be derived by combining sit-to-stand data captured in a 5-times-sit-to-stand test with walking data from a gait test [59].

Alternatively, a COI and an established measure may exist, but in a different indication. Again, using the established measure as a reference can quickly establish analytical validity, allowing the protocol to focus otherwise on collecting evidence of clinical validity. This mirrors how an established outcome measure can gain traction in other indications over time and become a generally accepted measure of a COI or MAH [60].

Does Standard Clinical Practice Address Your MAH?

In some cases, there is no directly comparable measure with the same COI, but the higher-level MAH is assessed, for example, by clinician- or patient-reported scales. Although head-to-head study designs are then not possible, indirect comparison is (e.g., comparing a monthly COA with a digital measure derived from passive PGHD collected continuously during the 2 weeks before the COA) [25, 30]. In this situation, establishing clinical validity (meaningfulness) may be more straightforward than establishing analytical validity (algorithm performance), which may require additional observational studies or specialist protocols that are difficult to implement within a larger study. Most commonly, this applies to establishing a continuous, real-world version of a clinical assessment, i.e., transitioning data capture from a controlled to an uncontrolled environment [51].
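A minimal sketch of the indirect comparison described above, assuming daily summaries of passive PGHD (here, a hypothetical daily step count) and monthly COA scores: each COA visit is paired with the median of the preceding 14 days of data, and the association is quantified with a Spearman rank correlation. The window length, the median summary, the helper names, and all data are illustrative assumptions.

```python
from datetime import date, timedelta
from statistics import mean, median


def window_summary(daily, visit, days=14, summary=median):
    """Summarize daily PGHD values from the `days` days before a visit (visit day excluded)."""
    start = visit - timedelta(days=days)
    values = [v for d, v in daily.items() if start <= d < visit]
    return summary(values) if values else None


def _average_ranks(xs):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    rx, ry = _average_ranks(x), _average_ranks(y)
    mx, my = mean(rx), mean(ry)
    num = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    den = (sum((a - mx) ** 2 for a in rx) * sum((b - my) ** 2 for b in ry)) ** 0.5
    return num / den


# Hypothetical data: a slowly declining daily step count and four monthly COA scores.
first_day = date(2024, 1, 1)
daily_steps = {first_day + timedelta(days=i): 8000 - 20 * i for i in range(120)}
visits = [date(2024, 1, 29), date(2024, 2, 26), date(2024, 3, 25), date(2024, 4, 22)]
coa_scores = [42, 40, 37, 35]

digital = [window_summary(daily_steps, v) for v in visits]
rho = spearman(digital, coa_scores)
print(digital, rho)
```

With four visits from one simulated participant, this only shows the mechanics; a real analysis would pool participants and would typically need to account for repeated measures within individuals.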

Such indirect comparison designs are common in the development of digital measures because many of the MAH that participant engagement brings into defining the COI lend themselves to COA rather than to biomarkers [7]. Thus, many digital measures, especially actively performed tests, are structured similarly to COA, consisting of several subtests that together provide an overall picture of a patient's disease state. Examples, generally combining subjective and objective assessments, include PARADE [21] and the Cognition Kit [20].

Combining participant-reported symptoms and rating scales with PGHD from sensor technologies is a further extension of this concept [48]; indeed, several platforms add passive assessment capabilities as an extension, using the original active measure as the reference (e.g., the FLOODLIGHT [18] and mPOWER [19] platforms). One possible next step is to replace, prompt, or augment COA items and subscales with digital measures derived from PGHD. PGHD has been shown to predict subjective mobility [47], stress [61, 62], and fatigue [63] outcomes accurately, demonstrating the potential of this idea.

Does an Effective Intervention Exist in Your Indication?

If both the COI and the setting in which the new measure is deployed are novel, establishing analytical and clinical validity will be highly challenging, as no reference measure exists. Viewed from another perspective, this also means that no tools yet exist to meet these particular patient needs, which points to the use cases where digital measures can have the greatest impact.

For truly novel digital measures, a critically important aid to development and evaluation is an existing effective treatment, which enables evidence of clinical validity to be generated by showing that the new measure captures positive responses to the intervention. Examples include the mPOWER platform capturing improvement after patients with Parkinson disease reported taking medication (typically levodopa and dopamine agonists) [64], and improvements in submaximal gait speed in patients with Duchenne muscular dystrophy following steroid treatment [29].

Such self-reported medication intake can be used as “soft” annotations (as opposed to binary, “hard” annotations such as mortality) to develop new composite digital measures. Here, soft annotations (or “labels” in machine learning terminology) mark points in a PGHD time course at which a signal change is statistically likely; they can be used to train an algorithm on the assumption that symptom severity will change immediately following treatment. This approach was used to develop the mobile Parkinson disease score [65].
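The labeling idea can be sketched as follows, under assumptions of our own: feature windows that start in the hour before a self-reported dose are labeled pre-dose (0), those starting 30 minutes to 2 hours after it are labeled post-dose (1), and all others are left unlabeled. The labels are “soft” in the sense used above, because they rest on self-report and on an assumed response time course. The offsets, the tapping-speed feature, the helper names, and the data are hypothetical, and this is not the method used to build the mobile Parkinson disease score. As a simple single-feature check, the area under the ROC curve (AUC) estimates how well the feature separates the two labels; training a multi-feature model on such labels is the step described in the text and is not shown.

```python
from datetime import datetime, timedelta


def label_windows(window_starts, dose_times,
                  pre=timedelta(hours=1),
                  post=(timedelta(minutes=30), timedelta(hours=2))):
    """Label each window 0 (pre-dose), 1 (post-dose), or None (unlabeled).

    A window starting within `pre` before a self-reported dose is labeled 0;
    one starting between post[0] and post[1] after a dose is labeled 1.
    The first matching dose determines the label.
    """
    labels = []
    for t in window_starts:
        label = None
        for d in dose_times:
            if d - pre <= t < d:
                label = 0
                break
            if d + post[0] <= t < d + post[1]:
                label = 1
                break
        labels.append(label)
    return labels


def auc(scores, labels):
    """Chance that a random post-dose window scores above a random pre-dose window (ties count 0.5)."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    if not pos or not neg:
        raise ValueError("need both pre-dose and post-dose windows")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical morning: 30-minute windows, one self-reported dose at 08:00,
# and a tapping-speed feature (taps/s) computed per window.
windows = [datetime(2024, 5, 1, 6, 0) + timedelta(minutes=30 * i) for i in range(12)]
doses = [datetime(2024, 5, 1, 8, 0)]
tapping = [3.1, 3.0, 2.9, 3.0, 3.2, 3.8, 4.0, 3.9, 3.6, 3.4, 3.3, 3.2]

labels = label_windows(windows, doses)
print(labels, auc(tapping, labels))
```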

An existing, effective intervention is also often key to demonstrating that a new measure is sensitive to change, which is required when pursuing acceptance and qualification [9].
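Sensitivity to change is often quantified with a responsiveness index such as the standardized response mean (SRM): the mean within-person change divided by the standard deviation of that change. The sketch below assumes hypothetical paired gait speeds before and after an effective treatment; the function name and data are illustrative, and we do not imply that any regulator requires this particular statistic.

```python
from statistics import mean, stdev


def standardized_response_mean(baseline, follow_up):
    """SRM = mean of paired changes divided by the SD of those changes."""
    if len(baseline) != len(follow_up) or len(baseline) < 2:
        raise ValueError("need at least two paired observations")
    changes = [f - b for b, f in zip(baseline, follow_up)]
    return mean(changes) / stdev(changes)


# Hypothetical gait speeds (m/s) before and after a treatment known to be effective.
before = [0.90, 1.00, 0.85, 0.95, 1.10]
after = [1.00, 1.08, 0.97, 1.00, 1.20]
srm = standardized_response_mean(before, after)
print(f"SRM = {srm:.2f}")
```

A single-arm pre/post change cannot separate treatment effects from time or practice effects, so a control or comparator condition is usually needed to attribute the change to treatment.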

Evaluation without Robust References

In underserved indications and populations, the answer to all of the above questions may be no. Evaluation in the absence of a high-quality comparator measure and an effective intervention is highly challenging yet, as stated previously, highly impactful; the development, evaluation, and validation of novel digital measures is essential for medicinal product innovation in these populations. Examples include work on orphan or rare conditions, or on settings where data generation is otherwise challenging and previous work is sparse. A worked example focusing on Alzheimer disease is provided below.

One option for developing digital measures that address the MAH of these patients is a ground-up approach that uses psychometric methods to establish utility and clinical validity [45, 66, 67].

Alternatively, new measures can be established through natural history studies [68, 69]. Digital health approaches enable highly dispersed patients to connect with each other and to participate in research [70], giving researchers the tools to run the remote and emerging trial designs required to monitor patients in their home environment over long periods [71]. Digital health tools can rapidly establish baseline measurements and the natural history course for these patients, both of which can be used to establish the MAH in an underserved population.
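To illustrate how continuously collected data can yield both a baseline and a natural history course, the sketch below takes one participant's hypothetical weekly in-home gait speeds, computes a baseline as the mean over the first 14 days, and estimates the annual rate of change by ordinary least squares. The linear trend, the 14-day baseline, the function name, and the data are assumptions made for illustration.

```python
from statistics import mean


def baseline_and_annual_slope(days, values, baseline_days=14):
    """Baseline: mean of values recorded before day `baseline_days`.
    Slope: ordinary least-squares change per year across the whole record."""
    if len(days) != len(values):
        raise ValueError("days and values must have the same length")
    base = [v for d, v in zip(days, values) if d < baseline_days]
    if not base or len(set(days)) < 2:
        raise ValueError("need baseline data and at least two distinct days")
    md, mv = mean(days), mean(values)
    sxy = sum((d - md) * (v - mv) for d, v in zip(days, values))
    sxx = sum((d - md) ** 2 for d in days)
    return mean(base), sxy / sxx * 365.25  # per-day slope scaled to per-year


# Hypothetical weekly in-home gait speed (m/s) for one participant over a year,
# declining linearly by 0.0002 m/s per day.
days = list(range(0, 365, 7))
gait = [1.0 - 0.0002 * d for d in days]
baseline, slope = baseline_and_annual_slope(days, gait)
print(f"baseline = {baseline:.4f} m/s, slope = {slope:.4f} m/s per year")
```

Natural history trajectories are often non-linear and vary between individuals, so real analyses typically pool participants, for example with mixed-effects models.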

A concrete example is Alzheimer disease, a condition with no curative or preventive therapies and very few options for managing symptoms [72]. Diagnosis of Alzheimer disease is particularly challenging, and many emerging digital tools are not yet ready for use in trials, especially those relying on uncontrolled data capture [50]. Natural history studies, in particular “living laboratory” studies that allow unobtrusive, continuous remote data capture in this vulnerable population [73, 74], are starting to make progress. Proof-of-concept and evaluated digital measures that predict early cognitive decline have been derived from PGHD describing computer use [75] and mouse movements [76], as well as from gait speed captured in corridors [16] and mediolateral sway captured by sensorized weighing scales [49]. Developing digital measures in this setting has required substantial investment, but it addresses a significant need.

Pursuing Acceptance and/or Qualification

A thorough approach to evaluating a new digital measure already lays much of the foundation for acceptance and/or qualification. A measure that meets the evaluation criteria outlined in V3 [2] is fit for purpose [4] and should be accepted for a given COU. As stated earlier, use of the digital measure for regulatory decision making in clinical development requires regulatory interaction and either acceptance or qualification [8, 9].

When a new digital measure has been evaluated and shown to be a meaningful assessment of health, it may already fulfill its purpose, for example, for internal decision making, or as a robust exploratory outcome that generates supporting information for market approval of an intervention or substantiates marketing claims. Collecting evidence that supports marketing before market authorization has been identified as potentially having a large impact on the efficiency of clinical development [77].

However, the initial motivation of the measure developer, especially pharmaceutical or sensor technology companies, is often rooted in inadequate tools for decision making in phase 3: for example, the monitoring of adverse events or relevant MAH may be unsatisfactory, or diagnosing or including patients may be challenging.

Thus, although exploratory digital outcomes are rapidly gaining traction across clinical development, and especially in phase 2 and 4 studies [6], the ultimate aim often remains that the measure can be used in decision making for market approval. Reaching this aim requires that health authorities agree that the measure can be a basis for evidence generation, either accepting a measure for use in a specific drug or biologic application or qualifying a measure for general use in addressing a specific COI and COU [8].

Health authority guidelines highlight the importance of early engagement to refine potential applications and identify potential legal and regulatory issues. Both the EMA Innovation Task Force (ITF) [78, 79] and the FDA CDER Drug Development Tool (DDT) [80] guidelines provide paths to initiating this conversation by submitting a letter of intent [81]. Typically, discussions will focus on the intended use and early data covering verification and analytical validation. Ideally, this is an iterative discussion that starts before evidence pertaining to clinical validation is generated, as stated in guidance from the FDA [8] and the EMA [9]. Examples can also be found in publicly available briefing books [82].

Though pursuing acceptance and qualification requires additional effort from the researcher, becoming a basis for decision making in clinical development is the only true way to ensure that the unmet needs defined in the earliest stage of measure development are met by those studies.

The Importance of Collaboration

Finally, it is important to emphasize the critical role of collaboration in digital measure development. Digital health is a highly multifaceted field, necessitating collaboration across and between many different roles and even creating new ones [83]. Digital health bridges communities and should involve all stakeholders, especially those most directly impacted, i.e., the patients themselves, as we outlined above.

Seen from the perspective of clinical development, digital measures can be regarded as tools that enable the development of products and other assets, rather than as assets themselves. This view of digital measures as precompetitive tools is increasingly accepted and advocated [37, 74, 84, 85].

Not only are the impact and acceptance of measures enhanced when all stakeholders collaborate in their development; the path from proof of concept to endpoint is navigable only when we come together to face its challenges.

Conclusion

Digital health has come far in recent years. From schools of engineering to medical schools, many faculties now offer graduate courses with a specific focus on digital health, and many more postgraduates are pursuing research in the field. Alongside academia, industry has contributed to a groundswell of proofs of concept and is increasingly incorporating digital measures into clinical development [6]. These examples demonstrate the potential of digital measures to impact many MAH, and we are beginning to see the first proofs of concept that have transitioned into a basis for decision making in clinical development [30]. Contributing to decision making remains critical to ensuring that the needs defined through patient and stakeholder engagement are met by clinical development, and it raises the chances that new treatments addressing those needs will reach those patients.

To help accelerate this process, we clarify some of the digital health lexicon relevant to digital measures and build on the V3 framework [2], discussing key strategic and practical considerations when developing, evaluating, and seeking acceptance or qualification of new digital measures. Developing a new digital measure is a serious undertaking, often requiring effort and time similar to bringing a new therapy through clinical development; we therefore highlight the importance of participant engagement and, if acceptance or qualification is deemed necessary for achieving key milestones, of early regulatory engagement. Evaluation can also be made more efficient by a commitment to incorporating digital measures into earlier phases of clinical trials, by publishing validation studies and data sets, and by improving collaboration across and between industry and vendors.

The majority of examples provided here pertain to digital measures deployed as efficacy endpoints. Additional opportunities for digital health to improve care lie in extending these measures for use as diagnostics and for patient identification and inclusion [86]. Delayed diagnosis and insufficiently precise inclusion criteria have been cited as possible causes of low and slow approval rates in neurological and psychiatric disorders, as well as in chronic, high-prevalence cardiovascular conditions [87, 88].

To maintain and advance momentum in digital measure development and deployment, patient groups, researchers, and companies must collaborate on intentional measure selection and robust evaluation in pursuit of acceptance or qualification of these novel tools. For most pharmaceutical companies, digital measure development is a precompetitive endeavor, and the utility and acceptance of measures are enhanced by multistakeholder collaboration. Indeed, the skills required for successful measure selection, development, and deployment rarely reside in one organization, necessitating a collaborative approach [3, 8, 24, 30, 85, 89]. Recent progress in digital measures for Parkinson disease highlights this point [84]. If we work together, digital health measurement can become a mainstay of clinical development, maximally benefiting patients while speeding up and reducing the cost of therapy development.

Search Strategy and Selection Criteria

References for this Review were identified through searches of PubMed and Google Scholar, prioritizing recent and original articles. Articles were also identified through searches of publicly available material from the FDA and EMA. The final reference list was generated on the basis of novelty, originality and relevance to the broad scope of this Review.

Conflict of Interest Statement

The authors declare the following competing interests: I.C., B.P.-L., and D.S. are employees of, and own employee stock options in, Evidation Health Inc. A.V.D. is an employee of, and owns employee stock in, Takeda Pharmaceuticals. I.C. is a member of the Digital Medicine Society and the Editorial Board of Karger Digital Biomarkers and has lectured on digital health at ETH Zürich and FHNW Muttenz. A.V.D. is a member and serves on the Strategic Advisory Board of the Digital Medicine Society. B.P.-L. consults for Bayer and is on the Scientific Leadership Board of the Digital Medicine Society.

Funding Sources

This work received no external funding.

Author Contributions

I.C. defined the initial concept with D.S. and A.V.D. I.C., B.P.-L., and J.C.G. contributed to researching the literature. D.S. designed the figure. All of the authors contributed to discussions of the article content, as well as to writing, editing, and reviewing of this paper.

Acknowledgement

The authors would like to acknowledge Christine Manta for permission to reproduce Figure 1.

References

1. Coravos A, Doerr M, Goldsack J, Manta C, Shervey M, Woods B, et al. Modernizing and designing evaluation frameworks for connected sensor technologies in medicine. npj Digit Med. 2020 Mar;3(1):1–10. doi: 10.1038/s41746-020-0237-3.

2. Goldsack JC, Coravos A, Bakker JP, Bent B, Dowling AV, Fitzer-Attas C, et al. Verification, analytical validation, and clinical validation (V3): the foundation of determining fit-for-purpose for Biometric Monitoring Technologies (BioMeTs). npj Digit Med. 2020 Apr;3(1):1–15. doi: 10.1038/s41746-020-0260-4.

3. Goldsack JC, Izmailova ES, Menetski JP, Hoffmann SC, Groenen PM, Wagner JA. Remote digital monitoring in clinical trials in the time of COVID-19. Nat Rev Drug Discov. 2020 Jun;19(6):378–9. doi: 10.1038/d41573-020-00094-0.

4. Coravos A, Goldsack JC, Karlin DR, Nebeker C, Perakslis E, Zimmerman N, et al. Digital Medicine: A Primer on Measurement. Digit Biomark. 2019 May;3(2):31–71. doi: 10.1159/000500413.

5. Manta C, Patrick-Lake B, Goldsack JC. Digital Measures That Matter to Patients: A Framework to Guide the Selection and Development of Digital Measures of Health. Digit Biomark. 2020 Sep;4(3):69–77. doi: 10.1159/000509725.

6. Marra C, Chen JL, Coravos A, Stern AD. Quantifying the use of connected digital products in clinical research. npj Digit Med. 2020 Apr;3(1):1–5. doi: 10.1038/s41746-020-0259-x.

7. Walton MK, Powers JH 3rd, Hobart J, Patrick D, Marquis P, Vamvakas S, et al.; International Society for Pharmacoeconomics and Outcomes Research Task Force for Clinical Outcomes Assessment. Clinical Outcome Assessments: Conceptual Foundation-Report of the ISPOR Clinical Outcomes Assessment - Emerging Good Practices for Outcomes Research Task Force. Value Health. 2015 Sep;18(6):741–52. doi: 10.1016/j.jval.2015.08.006.

8. Center for Drug Evaluation and Research. Drug Development Tools (DDT) Qualification Programs [Internet]. US Food and Drug Administration; 2020 Dec [cited 2020 May 27]. Available from: https://www.fda.gov/drugs/development-approval-process-drugs/drug-development-tool-ddt-qualification-programs.

9. European Medicines Agency, Human Medicines Division. Questions and Answers: Qualification of digital technology-based methodologies to support approval of medicinal products [Internet]. 2020 Jun [cited 2020 May 28]. Available from: https://www.ema.europa.eu/en/documents/other/questions-answers-qualification-digital-technology-based-methodologies-support-approval-medicinal_en.pdf.

10. Center for Drug Evaluation and Research. Clinical Outcome Assessment Compendium [Internet]. US Food and Drug Administration; 2019 [cited 2020 May 28]. Available from: https://www.fda.gov/drugs/development-resources/clinical-outcome-assessment-compendium.

11. Kabelac Z, Tarolli CG, Snyder C, Feldman B, Glidden A, Hsu CY, et al. Passive Monitoring at Home: A Pilot Study in Parkinson Disease. Digit Biomark. 2019 Apr;3(1):22–30. doi: 10.1159/000498922.

12. Rawtaer I, Mahendran R, Kua EH, Tan HP, Tan HX, Lee TS, et al. Early Detection of Mild Cognitive Impairment With In-Home Sensors to Monitor Behavior Patterns in Community-Dwelling Senior Citizens in Singapore: Cross-Sectional Feasibility Study. J Med Internet Res. 2020 May;22(5):e16854. doi: 10.2196/16854.

13. Strobl MA, Lipsmeier F, Demenescu LR, Gossens C, Lindemann M, De Vos M. Look me in the eye: evaluating the accuracy of smartphone-based eye tracking for potential application in autism spectrum disorder research. Biomed Eng Online. 2019 May;18(1):51. doi: 10.1186/s12938-019-0670-1.

14. Jacobson NC, Weingarden H, Wilhelm S. Digital biomarkers of mood disorders and symptom change. npj Digit Med. 2019 Feb;2(1):1–3. doi: 10.1038/s41746-019-0078-0.

15. Patel MS, Foschini L, Kurtzman GW, Zhu J, Wang W, Rareshide CA, et al. Using Wearable Devices and Smartphones to Track Physical Activity: Initial Activation, Sustained Use, and Step Counts Across Sociodemographic Characteristics in a National Sample. Ann Intern Med. 2017 Nov;167(10):755–7. doi: 10.7326/M17-1495.

16. Kaye J, Mattek N, Dodge H, Buracchio T, Austin D, Hagler S, et al. One walk a year to 1000 within a year: continuous in-home unobtrusive gait assessment of older adults. Gait Posture. 2012 Feb;35(2):197–202. doi: 10.1016/j.gaitpost.2011.09.006.

17. Laddu DR, Wertheim BC, Garcia DO, Woods NF, LaMonte MJ, Chen B, et al.; Women's Health Initiative Investigators. 36-Item Short Form Survey (SF-36) Versus Gait Speed As Predictor of Preclinical Mobility Disability in Older Women: The Women's Health Initiative. J Am Geriatr Soc. 2018 Apr;66(4):706–13. doi: 10.1111/jgs.15273.

18. Creagh AP, Simillion C, Scotland A, Lipsmeier F, Bernasconi C, Belachew S, et al. Smartphone-based remote assessment of upper extremity function for multiple sclerosis using the Draw a Shape Test. Physiol Meas. 2020 Jun;41(5):054002. doi: 10.1088/1361-6579/ab8771.

19. Bot BM, Suver C, Neto EC, Kellen M, Klein A, Bare C, et al. The mPower study, Parkinson disease mobile data collected using ResearchKit. Sci Data. 2016 Mar;3(1):160011. doi: 10.1038/sdata.2016.11.

20. Cormack F, McCue M, Taptiklis N, Skirrow C, Glazer E, Panagopoulos E, et al. Wearable Technology for High-Frequency Cognitive and Mood Assessment in Major Depressive Disorder: Longitudinal Observational Study. JMIR Ment Health. 2019 Nov;6(11):e12814. doi: 10.2196/12814.

21. Hamy V, Garcia-Gancedo L, Pollard A, Myatt A, Liu J, Howland A, et al. Developing Smartphone-Based Objective Assessments of Physical Function in Rheumatoid Arthritis Patients: the PARADE Study. Digit Biomark. 2020 Apr;4(1):26–43. doi: 10.1159/000506860.

22. Müller ML, Albin RL, Kotagal V, Koeppe RA, Scott PJ, Frey KA, et al. Thalamic cholinergic innervation and postural sensory integration function in Parkinson's disease. Brain. 2013 Nov;136(Pt 11):3282–9. doi: 10.1093/brain/awt247.

23. Bohnen NI, Frey KA, Studenski S, Kotagal V, Koeppe RA, Scott PJ, et al. Gait speed in Parkinson disease correlates with cholinergic degeneration. Neurology. 2013 Oct;81(18):1611–6. doi: 10.1212/WNL.0b013e3182a9f558.

24. Mobilise-D. Home [Internet]. [cited 2020 May 26]. Available from: https://www.mobilise-d.eu/

25. Mueller A, Hoefling HA, Muaremi A, Praestgaard J, Walsh LC, Bunte O, et al. Continuous Digital Monitoring of Walking Speed in Frail Elderly Patients: Noninterventional Validation Study and Longitudinal Clinical Trial. JMIR Mhealth Uhealth. 2019 Nov;7(11):e15191. doi: 10.2196/15191.

26. Depp CA, Bashem J, Moore RC, Holden JL, Mikhael T, Swendsen J, et al. GPS mobility as a digital biomarker of negative symptoms in schizophrenia: a case control study. npj Digit Med. 2019 Nov;2(1):1–7. doi: 10.1038/s41746-019-0182-1.

27. U.S. Department of Health and Human Services, Food and Drug Administration, Center for Drug Evaluation and Research (CDER), Center for Biologics Evaluation and Research (CBER). Guidance for Industry: Expedited Programs for Serious Conditions – Drugs and Biologics [Internet]. 2014 May [cited 2020 May 26]. Available from: https://www.fda.gov/media/86377/download.

28. Fleming TR, Powers JH. Biomarkers and Surrogate Endpoints In Clinical Trials. Stat Med. 2012 Nov;31(25):2973. doi: 10.1002/sim.5403.

29. Servais L, Gidaro T, Seferian A, Gasnier E, Daron A, Ulinici A, et al. Maximal stride velocity detects positive and negative changes over 6-month-time period in ambulant patients with Duchenne muscular dystrophy. Neuromuscul Disord. 2019 Oct;29:S105.

30. Haberkamp M, Moseley J, Athanasiou D, de Andres-Trelles F, Elferink A, Rosa MM, et al. European regulators' views on a wearable-derived performance measurement of ambulation for Duchenne muscular dystrophy regulatory trials. Neuromuscul Disord. 2019 Jul;29(7):514–6. doi: 10.1016/j.nmd.2019.06.003.

31. Clinical Trials Transformation Initiative. Use Case for Developing Novel Endpoints Generated Using Mobile Technology: Duchenne Muscular Dystrophy [Internet]. 2016 Mar [cited 2020 May 28]. Available from: https://www.ctti-clinicaltrials.org/files/usecase-duchenne.pdf.

32. US Food and Drug Administration, Center for Drug Evaluation and Research, Office of New Drugs. Roadmap to Patient-Focused Outcome Measurement in Clinical Trials [Internet]. [cited 2020 May 28]. Available from: https://www.fda.gov/media/87004/download.

33. Berridge C, Chan KT, Choi Y. Sensor-Based Passive Remote Monitoring and Discordant Values: Qualitative Study of the Experiences of Low-Income Immigrant Elders in the United States. JMIR Mhealth Uhealth. 2019 Mar;7(3):e11516. doi: 10.2196/11516.

34. U.S. Department of Health and Human Services, Food and Drug Administration, Center for Drug Evaluation and Research (CDER), Center for Biologics Evaluation and Research (CBER). Enhancing the Diversity of Clinical Trial Populations – Eligibility Criteria, Enrollment Practices, and Trial Designs: Guidance for Industry [Internet]. 2019 Jun [cited 2020 May 27]. Available from: https://www.fda.gov/media/127712/download.

35. Planner C, Bower P, Donnelly A, Gillies K, Turner K, Young B. Trials need participants but not their feedback? A scoping review of published papers on the measurement of participant experience of taking part in clinical trials. Trials. 2019 Jun;20(1):381. doi: 10.1186/s13063-019-3444-y.

36. Wakefield BJ, Turvey CL, Nazi KM, Holman JE, Hogan TP, Shimada SL, et al. Psychometric Properties of Patient-Facing eHealth Evaluation Measures: Systematic Review and Analysis. J Med Internet Res. 2017 Oct;19(10):e346. doi: 10.2196/jmir.7638.

37. Izmailova ES, Wagner JA, Ammour N, Amondikar N, Bell-Vlasov A, Berman S, et al. Remote Digital Monitoring for Medical Product Development. Clin Transl Sci. 2020 Aug;3:55.

38. Our Agenda [Internet]. The Michael J. Fox Foundation for Parkinson's Research; [cited 2020 May 26]. Available from: https://www.michaeljfox.org/our-agenda.

39. Parent Project Muscular Dystrophy (PPMD): Fighting to End Duchenne [Internet]. Parent Project Muscular Dystrophy; [cited 2020 Jun 1]. Available from: https://www.parentprojectmd.org/

40. Furlong P, Bridges JF, Charnas L, Fallon JR, Fischer R, Flanigan KM, et al. How a patient advocacy group developed the first proposed draft guidance document for industry for submission to the U.S. Food and Drug Administration. Orphanet J Rare Dis. 2015 Jun;10(1):82. doi: 10.1186/s13023-015-0281-2.

41. AAHFN advocacy – American Association of Heart Failure Nurses [Internet]. [cited 2020 May 26]. Available from: https://www.aahfn.org/page/advocacy.

42. Voqui J. Clinical Outcome Assessment Implementation in Clinical Trials [Internet]. 2015 Sep [cited 2020 May 28]. Available from: https://www.fda.gov/media/94051/download.

43. Katz S. Assessing self-maintenance: activities of daily living, mobility, and instrumental activities of daily living. J Am Geriatr Soc. 1983 Dec;31(12):721–7. doi: 10.1111/j.1532-5415.1983.tb03391.x.

44. Ricotti V, Selby V, Ridout D, Domingos J, Decostre V, Mayhew A, et al. Respiratory and upper limb function as outcome measures in ambulant and non-ambulant subjects with Duchenne muscular dystrophy: A prospective multicentre study. Neuromuscul Disord. 2019 Apr;29(4):261–8. doi: 10.1016/j.nmd.2019.02.002.

45. Houts CR, Patrick-Lake B, Clay I, Wirth RJ. The Path Forward for Digital Measures: Suppressing the Desire to Compare Apples and Pineapples. Digit Biomark. 2020 Nov;4(Suppl 1):3–12. doi: 10.1159/000511586.

46. Clay I. Impact of Digital Technologies on Novel Endpoint Capture in Clinical Trials. Clin Pharmacol Ther. 2017 Dec;102(6):912–3. doi: 10.1002/cpt.866.

47. Bahej I, Clay I, Jaggi M, De Luca V. Prediction of Patient-Reported Physical Activity Scores from Wearable Accelerometer Data: A Feasibility Study. In: Converging Clinical and Engineering Research on Neurorehabilitation III. Cham: Springer; 2018. pp. 668–72.

48. Chen R, Jankovic F, Marinsek N, Foschini L, Kourtis L, Signorini A, et al. Developing Measures of Cognitive Impairment in the Real World from Consumer-Grade Multimodal Sensor Streams. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. ACM; 2019.

49. Leach JM, Mancini M, Kaye JA, Hayes TL, Horak FB. Day-to-Day Variability of Postural Sway and Its Association With Cognitive Function in Older Adults: A Pilot Study. Front Aging Neurosci. 2018 May;10:126. doi: 10.3389/fnagi.2018.00126.

50. Piau A, Wild K, Mattek N, Kaye J. Current State of Digital Biomarker Technologies for Real-Life, Home-Based Monitoring of Cognitive Function for Mild Cognitive Impairment to Mild Alzheimer Disease and Implications for Clinical Care: Systematic Review. J Med Internet Res. 2019 Aug;21(8):e12785. doi: 10.2196/12785.

51. Keppler AM, Nuritidinow T, Mueller A, Hoefling H, Schieker M, Clay I, et al. Validity of accelerometry in step detection and gait speed measurement in orthogeriatric patients. PLoS One. 2019 Aug;14(8):e0221732. doi: 10.1371/journal.pone.0221732.

52. Doerr M, Maguire Truong A, Bot BM, Wilbanks J, Suver C, Mangravite LM. Formative Evaluation of Participant Experience With Mobile eConsent in the App-Mediated Parkinson mPower Study: A Mixed Methods Study. JMIR Mhealth Uhealth. 2017 Feb;5(2):e14. doi: 10.2196/mhealth.6521.

53. Duke-Margolis Center for Health Policy working group. Determining Real-World Data's Fitness for Use and the Role of Reliability [Internet]. 2019 Sep [cited 2020 Aug 17]. Available from: https://healthpolicy.duke.edu/sites/default/files/2019-11/rwd_reliability.pdf.

54. What are patient-generated health data? [Internet]. [cited 2020 Aug 17]. Available from: https://www.healthit.gov/topic/otherhot-topics/what-are-patient-generated-health-data.

55. Midaglia L, Mulero P, Montalban X, Graves J, Hauser SL, Julian L, et al. Adherence and Satisfaction of Smartphone- and Smartwatch-Based Remote Active Testing and Passive Monitoring in People With Multiple Sclerosis: Nonrandomized Interventional Feasibility Study. J Med Internet Res. 2019 Aug;21(8):e14863. doi: 10.2196/14863.

56. Svensson T, Chung UI, Tokuno S, Nakamura M, Svensson AK. A validation study of a consumer wearable sleep tracker compared to a portable EEG system in naturalistic conditions. J Psychosom Res. 2019 Nov;126:109822. doi: 10.1016/j.jpsychores.2019.109822.

57. Guralnik JM, Simonsick EM, Ferrucci L, Glynn RJ, Berkman LF, Blazer DG, et al. A short physical performance battery assessing lower extremity function: association with self-reported disability and prediction of mortality and nursing home admission. J Gerontol. 1994 Mar;49(2):M85–94. doi: 10.1093/geronj/49.2.m85.

58. Smith E, Walsh L, Doyle J, Greene B, Blake C. The reliability of the quantitative timed up and go test (QTUG) measured over five consecutive days under single and dual-task conditions in community dwelling older adults. Gait Posture. 2016 Jan;43:239–44. doi: 10.1016/j.gaitpost.2015.10.004.

59. Towards fully instrumented and automated assessment of motor function tests. IEEE Conference Publication [Internet]. [cited 2020 May 27]. Available from: https://ieeexplore.ieee.org/abstract/document/8333375.

60. Bohannon RW, Crouch R. Minimal clinically important difference for change in 6-minute walk test distance of adults with pathology: a systematic review. J Eval Clin Pract. 2017 Apr;23(2):377–81. doi: 10.1111/jep.12629.

61. Smets E, Velazquez ER, Schiavone G, Chakroun I, D'Hondt E, De Raedt W, et al. Large-scale wearable data reveal digital phenotypes for daily-life stress detection. npj Digit Med. 2018 Dec;1(1):1–10. doi: 10.1038/s41746-018-0074-9.

62. Sano A, Picard RW. Stress recognition using wearable sensors and mobile phones. In: 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction. 2013. pp. 671–6.

63. Luo H, Lee PA, Clay I, Jaggi M, De Luca V. Assessment of Fatigue Using Wearable Sensors: A Pilot Study. Digit Biomark. 2020 Nov;4(Suppl 1):59–72. doi: 10.1159/000512166.

64. Neto EC, Bot BM, Perumal T, Omberg L, Guinney J, Kellen M, et al. Personalized hypothesis tests for detecting medication response in Parkinson disease patients using iPhone sensor data. Pac Symp Biocomput. 2016;21:273–84.

65. Zhan A, Mohan S, Tarolli C, Schneider RB, Adams JL, Sharma S, et al. Using Smartphones and Machine Learning to Quantify Parkinson Disease Severity: The Mobile Parkinson Disease Score. JAMA Neurol. 2018 Jul;75(7):876–80. doi: 10.1001/jamaneurol.2018.0809.

66. Perski O, Lumsden J, Garnett C, Blandford A, West R, Michie S. Assessing the Psychometric Properties of the Digital Behavior Change Intervention Engagement Scale in Users of an App for Reducing Alcohol Consumption: Evaluation Study. J Med Internet Res. 2019 Nov;21(11):e16197. doi: 10.2196/16197.

67. Dagum P. Digital biomarkers of cognitive function. npj Digit Med. 2018 Mar;1(1):1–3. doi: 10.1038/s41746-018-0018-4.

68. Karas M, Marinsek N, Goldhahn J, Foschini L, Ramirez E, Clay I. Predicting Subjective Recovery from Lower Limb Surgery Using Consumer Wearables. Digit Biomark. 2020 Nov;4(Suppl 1):73–86. doi: 10.1159/000511531.

69. Ramirez E, Marinsek N, Bradshaw B, Kanard R, Foschini L. Continuous Digital Assessment for Weight Loss Surgery Patients. Digit Biomark. 2020 Mar;4(1):13–20. doi: 10.1159/000506417.

70. Center for Drug Evaluation and Research. From our perspective: Encouraging drug development for rare diseases [Internet]. US Food and Drug Administration; 2019 Aug [cited 2020 May 27]. Available from: https://www.fda.gov/drugs/news-events-human-drugs/our-perspective-encouraging-drug-development-rare-diseases.

71. Innovative Medicines Initiative. Shortening the path to Rare Disease diagnosis by using new born genetic screening and digital technologies [Internet]. 2015-2020 [cited 2020 May 27]. Available from: https://www.imi.europa.eu/sites/default/files/uploads/documents/apply-for-funding/future-topics/DraftTopic_RareDiseasesScreening_v6April.pdf.

72. Treatments [Internet]. Alzheimer's Disease and Dementia. [cited 2020 Jun 1]. Available from: https://alz.org/alzheimers-dementia/treatments.

73. Kaye J, Reynolds C, Bowman M, Sharma N, Riley T, Golonka O, et al. Methodology for Establishing a Community-Wide Life Laboratory for Capturing Unobtrusive and Continuous Remote Activity and Health Data. J Vis Exp. 2018 Jul 27;(137):56942. doi: 10.3791/56942.

74. Beattie Z, Miller LM, Almirola C, Au-Yeung WM, Bernard H, Cosgrove KE, et al. The Collaborative Aging Research Using Technology Initiative: An Open, Sharable, Technology-Agnostic Platform for the Research Community. Digit Biomark. 2020 Nov;4(Suppl 1):100–18. doi: 10.1159/000512208.

75. Kaye J, Mattek N, Dodge HH, Campbell I, Hayes T, Austin D, et al. Unobtrusive measurement of daily computer use to detect mild cognitive impairment. Alzheimers Dement. 2014 Jan;10(1):10–7. doi: 10.1016/j.jalz.2013.01.011.

76. Seelye A, Hagler S, Mattek N, Howieson DB, Wild K, Dodge HH, et al. Computer mouse movement patterns: A potential marker of mild cognitive impairment. Alzheimers Dement (Amst). 2015 Dec;1(4):472–80. doi: 10.1016/j.dadm.2015.09.006.

77. Marwaha S, Ruhl M, Shorkey P. Doubling Pharma Value with Data Science [Internet]. 2018 Feb [cited 2021 Jan 14]. Available from: https://www.bcg.com/publications/2018/doubling-pharma-value-with-data-science-b.

78. Innovation in medicines [Internet]. European Medicines Agency; 2018 Sep [cited 2020 May 27]. Available from: https://www.ema.europa.eu/en/human-regulatory/research-development/innovation-medicines.

79. Cerreta F, Ritzhaupt A, Metcalfe T, Askin S, Duarte J, Berntgen M, et al. Digital technologies for medicines: shaping a framework for success. Nat Rev Drug Discov. 2020 Sep;19(9):573–4. doi: 10.1038/d41573-020-00080-6.

80. Center for Drug Evaluation and Research. Drug Development Tool (DDT) qualification process [Internet]. US Food and Drug Administration; 2019 Oct [cited 2020 May 27]. Available from: https://www.fda.gov/drugs/drug-development-tool-ddt-qualification-programs/drug-development-tool-ddt-qualification-process.

81. Fitt H. Qualification of novel methodologies for medicine development [Internet]. European Medicines Agency; 2020 May [cited 2020 May 28]. Available from: https://www.ema.europa.eu/en/human-regulatory/research-development/scientific-advice-protocol-assistance/qualification-novel-methodologies-medicine-development-0.

82. Daumer M, Lederer C, Aigner G, Neuhaus A, Schneider M, Clay I, et al. Briefing Book for the EMA Qualification of novel methodologies for drug development [Internet]. 2017 Feb 1 [cited 2020 May 27]. Available from: https://www.fda.gov/media/124857/download.

83. Goldsack JC, Zanetti CA. Defining and Developing the Workforce Needed for Success in the Digital Era of Medicine. Digit Biomark. 2020 Nov;4(Suppl 1):136–42. doi: 10.1159/000512382.

84. Stephenson D, Alexander R, Aggarwal V, Badawy R, Bain L, Bhatnagar R, et al. Precompetitive Consensus Building to Facilitate the Use of Digital Health Technologies to Support Parkinson Disease Drug Development through Regulatory Science. Digit Biomark. 2020 Nov;4(Suppl 1):28–49. doi: 10.1159/000512500.

85. Rochester L, Mazzà C, Mueller A, Caulfield B, McCarthy M, Becker C, et al. A Roadmap to Inform Development, Validation and Approval of Digital Mobility Outcomes: The Mobilise-D Approach. Digit Biomark. 2020 Nov;4(Suppl 1):13–27. doi: 10.1159/000512513.

86. Cohen AB, Ray Dorsey E, Mathews SC, Bates DW, Safavi K. A digital health industry cohort across the health continuum. npj Digit Med. 2020 May;3(1):1–10. doi: 10.1038/s41746-020-0276-9.

87. Wong CH, Siah KW, Lo AW. Estimation of clinical trial success rates and related parameters. Biostatistics. 2019 Apr;20(2):273–86. doi: 10.1093/biostatistics/kxx069.

88. Hay M, Thomas DW, Craighead JL, Economides C, Rosenthal J. Clinical development success rates for investigational drugs. Nat Biotechnol. 2014 Jan;32(1):40–51. doi: 10.1038/nbt.2786.

89. Ranjan Y, Rashid Z, Stewart C, Conde P, Begale M, Verbeeck D, et al.; Hyve; RADAR-CNS Consortium. RADAR-Base: Open Source Mobile Health Platform for Collecting, Monitoring, and Analyzing Data Using Sensors, Wearables, and Mobile Devices. JMIR Mhealth Uhealth. 2019 Aug;7(8):e11734. doi: 10.2196/11734.