Abstract

Echocardiography (echo) is a critical tool in diagnosing various cardiovascular diseases. Despite its diagnostic and prognostic value, interpretation and analysis of echo images are still widely performed manually by echocardiographers. A plethora of algorithms has been proposed to analyze medical ultrasound data using signal processing and machine learning techniques. These algorithms provided opportunities for developing automated echo analysis and interpretation systems. The automated approach can significantly assist in decreasing the variability and burden associated with manual image measurements. In this paper, we review the state-of-the-art automatic methods for analyzing echocardiography data. Particularly, we comprehensively and systematically review existing methods of four major tasks: echo quality assessment, view classification, boundary segmentation, and disease diagnosis. Our review covers three echo imaging modes, which are B-mode, M-mode, and Doppler. We also discuss the challenges and limitations of current methods and outline the most pressing directions for future research. In summary, this review presents the current status of automatic echo analysis and discusses the challenges that need to be addressed to obtain robust systems suitable for efficient use in clinical settings or point-of-care testing.

Keywords: Echocardiography, Ultrasound, Doppler, Cardiovascular Diseases, 2D Echo, Supervised Learning, Unsupervised Learning, Deep Learning, Image Processing, Echo Datasets, Point-of-care Testing

1. Introduction

Cardiovascular disease (CVD) is the leading cause of mortality in the United States and globally [1]. CVD is diagnosed using several imaging techniques: echocardiography (echo), cardiac magnetic resonance imaging (CMR), multiple gated acquisition scan (MUGA), and computed tomography (CT). Of these techniques, echo is the most commonly used as it is noninvasive, portable, inexpensive, and widely available [2]. Transthoracic echocardiogram (TTE), a very safe and common type of echocardiogram, involves using a transducer to transmit ultrasound waves to the heart and converting the reflected waves (echoes) into images. The recorded echo data can be either a single shot (static image) at a specific point in the cardiac cycle or a video sequence spanning several cardiac cycles. A single cardiac cycle starts with ventricular contraction (systole) and ends with ventricular relaxation (diastole). Different echo modes can be obtained using TTE [2], namely M-mode, B-mode, and Doppler, each with purpose-specific characteristics. These modes are typically used in an integrated fashion to provide better visualization and diagnosis of various cardiac conditions. Descriptions of echo modes can be found in Appendix A.

Existing approaches for analyzing echo data can be broadly divided into manual and automated. In the manual approach, echocardiographers manually select good-quality end-systole and end-diastole frames, delineate the desired region, and then measure cardiac indices. Examples of common cardiac indices include ejection fraction (B-mode), peak velocity (spectral Doppler), and posterior wall thickness (M-mode). A complete list of cardiac indices can be found in [2]. This manual approach has three limitations. First, it is error-prone and suffers from high intra- and inter-reader variability [3], [4]. Manual estimation of cardiac indices is more challenging and prone to larger variability in the case of fetuses/infants [5] and animals [6] due to their small cardiac size and unclear boundaries. Second, manual delineation is a tedious task requiring a significant amount of time. This time commitment, paired with insufficient access to technicians, increases the workload, which might lead to fatigue and distraction, and therefore to inaccurate or delayed diagnoses [7]. Third, cardiological expertise is a heavily burdened resource and often unavailable in low-resource settings.

Automated echo analysis systems can provide a timely, less subjective, and inexpensive alternative to the manual approach. Such systems can control intra- and inter-reader variability, greatly reduce the workload, and address the shortage of cardiological expertise in low-resource settings. This paper provides a comprehensive and systematic review of existing automated methods for four major echo tasks, namely quality assessment, mode/view classification, segmentation, and CVD diagnosis. The review covers three clinically used echo imaging modes, which are B-mode, Doppler, and M-mode. Previous reviews focus on other modalities (e.g., MRI), single mode (B-mode), specific task (e.g., segmentation), or algorithm (e.g., deep learning).

For example, Litjens et al. [8] present existing deep learning algorithms for analyzing CT and echo modalities. The paper focuses mainly on convolutional neural networks (CNNs) for classification and fully convolutional neural networks (FCNs) for segmentation applied to B-mode images. Similarly, Meiburger et al. [9] review existing FCN segmentation methods applied to B-mode ultrasound images of the heart, abdomen, liver, gynecology, and prostate. Other reviews of automated segmentation methods applied to MRI and CT can be found in [10], [11], [12]. A more focused review of segmentation methods applied to B-mode fetal echocardiography is presented in [13]. For CVD diagnosis, Alsharqi et al. [14] present machine learning methods applied to B-mode echo for disease classification. Similarly, Sudarshan et al. [15] present a review of machine learning methods applied to 2D echocardiography (classification) and provide a summary of the most commonly used features for identifying a specific cardiac disease (infarcted myocardium tissue characterization).

Contrary to previous reviews, this paper presents the first comprehensive and systematic review of automated methods for major echo tasks. It makes the following contributions:

It presents the current status and challenges of existing automated echo analysis methods (Section 2).

It provides a summary of the metrics used to assess the performance of various echo tasks (Section 3).

It systematically and comprehensively reviews existing automated methods covering four major tasks: echo quality assessment (Section 4.2), view/mode classification (Section 4.3), segmentation (Section 4.4), and CVD diagnosis (Section 4.5).

The review covers all echo modes, namely B-mode, M-mode, and Doppler. It also provides a summary of the most commonly used clinical and nonclinical features for identifying different CVD from different echo modes.

It presents descriptions of existing publicly available echo datasets (Section 5).

It highlights the most pressing directions for future research (Section 6).

Section 7 concludes the paper.

2. Background

2.1. Echo Analysis: Artifacts and Challenges

The quality of echo data depends highly on the scanning technique and configurations. Because most echo artifacts result from improper configuration and acquisition, echo images of a specific cardiac tissue acquired by different operators/vendors or under different configurations can have different visual appearances. These variations can confuse cardiologists and make the image interpretation task challenging. The main artifacts in B-mode and M-mode echo are side lobe, mirroring, refraction, and shadowing artifacts [16]. The main artifacts of Doppler echo are aliasing, mirroring, spectral broadening, and blooming [16]. A robust automated echo image analysis system should account for these variations and artifacts by using a variety of datasets, collected under different machine configurations and operator settings, for algorithm development and model training.

Another major challenge of echo analysis is the presence of speckle noise. Speckle noise, which has a granular appearance, is a multiplicative noise that occurs when several waves of the same frequency and different phases and amplitude interfere with each other. This type of noise can greatly degrade the quality of the image, and therefore, the quality of the automated algorithms. Several despeckling techniques have been proposed to reduce the effect of speckle noise while preserving structure and contextual features as well as other useful information. We refer the reader to [17], [18], [19], [20] for reviews of traditional despeckling techniques and [21], [22] for deep learning-based despeckling techniques.
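
The multiplicative nature of speckle can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the unit-mean gamma distribution, the `looks` parameter, and the function name `add_speckle` are hypothetical choices made here and are not taken from any of the reviewed works.

```python
import numpy as np

def add_speckle(image, looks=4, seed=0):
    """Multiply a clean image by unit-mean gamma noise (an assumed speckle model)."""
    rng = np.random.default_rng(seed)
    # Gamma(shape=looks, scale=1/looks) has mean 1, so average brightness is preserved.
    noise = rng.gamma(shape=looks, scale=1.0 / looks, size=image.shape)
    return image * noise, noise

clean = np.full((64, 64), 100.0)   # hypothetical uniform tissue region
noisy, noise = add_speckle(clean)

# Taking logarithms turns the multiplicative model into an additive one:
# log(noisy) = log(clean) + log(noise).
log_residual = np.log(noisy) - np.log(clean)
```

Because the noise scales with the underlying intensity, brighter regions show larger absolute fluctuations, which is one reason additive-noise filters tend to be applied after a log transform rather than directly.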

2.2. Current Status of Automated Echo Analysis

Existing automated echo analysis systems perform one of the following tasks:

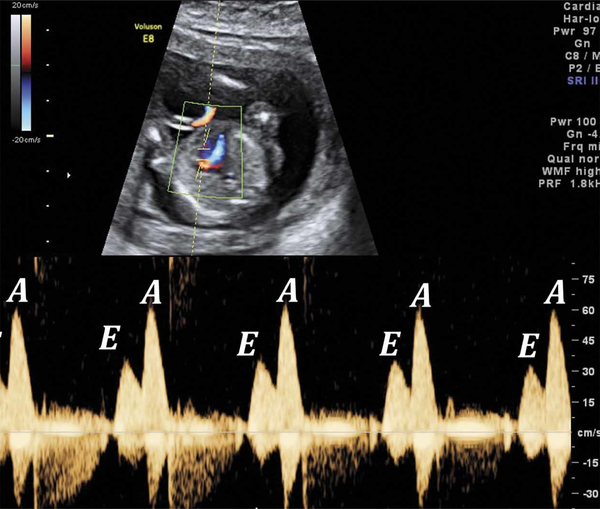

Quality assessment provides a quality score for an echo frame in real-time or classifies the acquired echo frame as low-quality (unmeasurable) or good-quality. Automating this task facilitates subsequent tasks because it automatically removes unmeasurable echo cases. For example, automated quality assessment can be used to exclude low-quality B-mode images with unclear boundaries or unmeasurable Doppler images with overlapped peaks (e.g., overlapping E and A peaks of mitral valve flow).

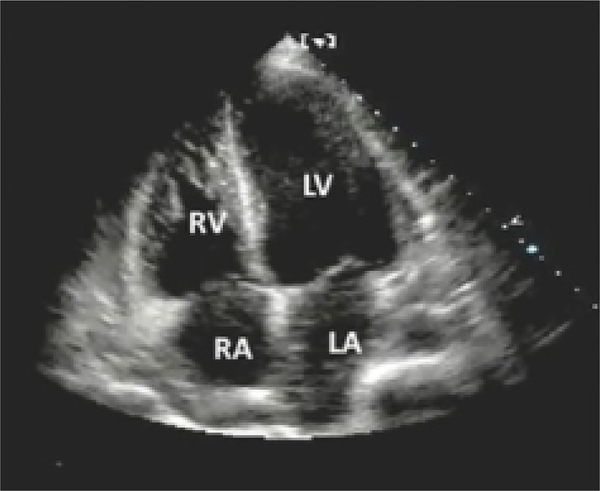

Mode/View classification is the categorization of acquired echo data into different modes (B-mode, M-mode, Doppler) or cardiac views. Each mode of echo can be recorded from different views. For example, a comprehensive B-mode acquisition involves imaging the heart from different windows or views by positioning the transducer in different locations [2]. The most common B-mode views include [2]: Parasternal Long Axis and Short Axis views (PLAX and PSAX), Apical Two-chamber view (A2C), Apical Three-chamber view (A3C), Apical Four-chamber view (A4C), Apical Five-chamber view (A5C), Subcostal Long and Short Axis Views (SCLX and SCSX), and Suprasternal Notch View (SSN). Similarly, Doppler can be acquired, using continuous wave (CW) or pulsed wave (PW), from different locations to measure the function of different valves (e.g., aorta valve [AV], mitral valve [MV]). This task can greatly enhance subsequent tasks because it allows view-specific segmentation and diagnosis.

Boundary segmentation task involves delineating the boundary or segmenting the area of a desired region. This region can be a cardiac chamber in B-mode images, wall in M-mode images, or a spectral envelope in Doppler images. The segmented region is then used to extract features or cardiac indices followed by CVD classification. Automating this task provides fast, accurate, and objective segmentation over the whole cardiac cycle with a minimum time cost.

CVD classification is the detection or prediction of a specific cardiac disease based on image features or calculated cardiac indices. Fully automated machine-assisted or machine-based screening and diagnostic systems have significant potential for providing high-quality and cost-efficient health care to patients in low-resource settings.

The first step for all above-mentioned tasks is the detection or localization of the region of interest (ROI). Accurate ROI detection is an important step that increases the performance and decreases the computational complexity of the method because it removes irrelevant regions that confuse the algorithm. ROI detection in the case of B-mode images involves cropping the anatomical area from the background (e.g., waveforms and text), while ROI detection in Doppler images involves cropping the Doppler signal region. As shown in the tables (Table 2 – Table 5), the majority of existing works manually localize the ROI in echo images prior to further analysis. Other works use semi-automated or fully automated methods to detect the ROI in echo images. However, these fully automated methods are view-specific and built with specific assumptions (e.g., distinct chamber shape or fixed locations of the Doppler signal), and hence might fail if these assumptions are violated.

TABLE 2:

Quantitative comparison of automated methods for echo quality assessment. A2C (apical 2 view), A3C (apical 3 view), A4C (apical 4 view), PLAXA (parasternal long axis view, aortic valve), PLAXPM (parasternal long axis view, papillary muscle), GHT (Generalized Hough Transform), CNN (Convolutional neural network), TPR (true positive rate), CC (correlation coefficient).

| Work | ROI Method | Mode & View | Method | System & Data | Train & Test | Ground Truth | Performance |
|---|---|---|---|---|---|---|---|
| [27] | NA | B-mode: A4C | Model-based: B-splines to model four chambers; goodness-of-fit | GE Vivid E9 system; 95 videos | Train: 4 patients, 35 cases; Test: 2 patients, 60 cases | Scores by 2 cardiologists: Good, fair, and poor | TPR (Section 3.1): Good quality: 22%; Fair quality: 20%; Poor quality: 15% |
| [28] | NA | B-mode: PLAX | Model-based: GHT applied to input image, compared with an atlas created from manually segmented images | GE Vivid 7 system; 133 images; 35 patients | Train: 89 images to create PLAX atlas; Test: 44 images | Scores by expert sonographer: Good (score 3), Poor (score 0) | CC (Section 3.2): 0.84 correlation between manual and automated scores |
| [29] | NA | B-mode: A4C | Deep Learning: Customized regression CNN | NA system; 2,904 A4C images | Train: 80%, 2,344 images; Test: 20%, 560 images | Scores by expert cardiologist: Good and Poor | Mean Absolute Error (MAE): 0.87 ± 0.72 |
| [30] | NA | B-mode: A2C, A3C, A4C, PLAXA, PLAXPM | Deep Learning: Customized regression CNN | Different GE and Philips systems; 2,450 cines: A2C (478), A3C (455), A4C (575), PLAXA (480), PLAXPM (462) | Train: 80%, # videos per view = 935, Total (4,675); Test: 20%, # videos per view = 228, Total (1,144); 20-frame videos | Scores by physicians: A2C (0–8), A3C (0–7), A4C (0–10), PLAXA (0–4), PLAXPM (0–5); scores normalized | View accuracy: T: cases per view; A-M: auto-hand; A2C (86±9), A3C (89±9), A4C (83±14), PLAXA (84±12), PLAXPM (83±13) |

TABLE 5:

Summary of automated CVD classification methods for B-mode and Doppler. LV (left ventricle), WMA (wall motion abnormalities), A2C-A3C-A4C (apical 2–3-4 view), CW (continuous wave), CAD (coronary artery disease), DCM (dilated cardiomyopathy), HCM (Hypertrophic cardiomyopathy), ATH (physiological hypertrophy in athletes), MI (myocardial infarction), AS (aortic stenosis), AR (aortic regurgitation), GLCM (gray level co-occurrence matrix), GLRLM (gray level run length matrix ), GLDS (gray level difference statistics), SM (statistical feature matrix), LCP (Local Configuration Pattern), PCA (Principal Component Analysis), LDA (Linear Discriminant Analysis), DCT (Discrete cosine transform), DT (Decision Tree), RF (Random Forest), NN (neural network), SVM (support vector machine), k-NN (k-nearest neighbors), IG (information gain), KS-test (Kolmogorov-Smirnov test), mRMR (Max-Relevancy and Min-Redundancy Feature).

| Work | ROI | Objective | Data & Labels | Method | Features & Markers | Top Features | Performance |
|---|---|---|---|---|---|---|---|
| [103] | NA | LV WMA Detection; A2C, A4C B-mode | 129 patients, 65 patients (train), 64 patients (test); LV contours and abnormality scores by 2 expert readers | LV modeling using PCA; shape modes describe variations in the population; LDA classifier | Features: statistical parameters extracted from shape models; Biomarkers: NA | 8 PCA parameters | Avg. accuracy (correctly classified cases): 88.9 |
| [104] | NA | LV WMA Detection; A2C, A3C, A4C B-mode | Data of normal & abnormal (hypokinetic, akinetic, dyskinetic, aneurysm) patients; 220/125, train/test; abnormality scores | Hand-initialized dual-contours (endocardium and epicardium) tracked over time; Bayesian networks (binary) | Features extracted from contour: circumferential strain, radial strain, local, global, and segmental volume markers | 6 features (global & local) based on KS-test | Sensitivity (Section 3.1): 80 to 90 |
| [105] | Manual | LV WMA Detection; A2C & A4C B-mode | Data of 10 healthy subjects and 14 ischemic patients; 336 segments: 55% normal, 13% hypokinetic, 31% akinetic; 220/125, train/test; abnormality scores | Affine registration and B-spline snake to model LV; threshold classifier | Novel regional index computed from control points of B-spline snake; Biomarkers: NA | New Quantitative Regional Index | Agreement between 2 experts and automated: Absolute, 83; Relative, 99 |
| [106] | Manual | CAD risk assessment; B-mode | Stroke-risk (>0.9mm) to label patients as: High risk CAD (9), Low risk CAD (6); 1508 frames high risk, 1357 frames low risk; ROIs by 2 experts | 56 grayscale features extracted: GLCM, GLRLM, GLDS, SM, invariant moment; SVM classifier; k-fold cross validation | Derived 6 Feature Combinations: FC1, FC2, FC3, FC4, FC5, FC6; Biomarkers: NA | Best feature set was chosen based on classification accuracy (FC6) | Avg. accuracy (Section 3.1): 94.95; AUC: 0.95 |
| [111] | NA | MI stage detection; A4C B-mode | WMSI & LVEF to label patients as: normal (40), 200 moderate (40), severe (40); 600 images, 200 per class; age: 21–75 | Curvelet Transform and LCP features; LDA, SVM, DT, NB, kNN, NN for classification; 10-fold cross validation | 17,850 LCP features extracted from 46,200 CT coefficients; Biomarkers: NA | mRMR method: 30 coefficients, 6 features; proposed Myocardial Infarction Risk Index (MIRI) | Accuracy: 98.99; sensitivity: 98.48; specificity: 100% (SVM, RBF) |
| [114] | Auto. Fuzzy c-means (FCM) | DCM & HCM detection; LV, PSAX, B-mode | Data of 20 normal, 30 DCM, and 10 HCM patients; 60 (4–6 seconds) videos, 46 fps | LV segmentation by FCM clustering; shape & statistical (PCA & DCT) features; NN, SVM & combined k-NN for classification | DCT & PCA features; Biomarkers: EF, EDV, ESV, mass, septal thickness | PCA features are better than DCT and LV biomarkers | TPR: 92.04 (normal, abnormal) (NN) |
| [115] | NA | Distinguish HCM & ATH; LV, A4C, B-mode | 139 male subjects, 77 with ATH, 62 with HCM; poor quality images excluded | TomTec software for LV speckle tracking; ensemble of NN, SVM, RF for classification; 10-fold cross validation | Speckle-tracking based geometric (e.g., volume) & mechanical (e.g., velocity) parameters | Based on info. gain (IG): Volume (0.24), MLVS (0.134), ALS (0.13) | Sensitivity: overall (87), adjusted for age (96); Specificity: overall (82), adjusted for age (77) |
| [83] | NA | AR assessment; CW, Doppler | 9 male & 2 female subjects with mild, moderate, severe AR; 22 images; 3 age groups: G1 (20–35), G2 (36–50), G3 (51–65); ground truth by experts | Envelope delineation: filtering, morphological operations, thresholding, edge detection | Parameters computed from detected envelope: peak velocity, pressure gradient, pressure half time | Pressure half time (PHT) | High CC between automated and manual: r=0.95 |
| [63] | NA | Valve dysfunction quantification; CW, Doppler | 60 patients: 30 with aortic/mitral stenosis; 20 with normal sinus rhythm; 10 with atrial fibrillation; ground truth: manual indices by expert | Envelope delineation using active contour | Doppler indices computed from detected envelope: Peak velocity (PV), Mean velocity (MV), Velocity time integral (VTI) | Mean velocity (MV) | B & A, LOA (Section 3.2): (−3.9 to +0.5), (−4.6 to −1.4), (−3.6 to +4.4) for PV, MV, and VTI (acceptable) |

3. Evaluation Metrics

This section summarizes different metrics used to evaluate the performance of different echo tasks. We broadly divide these metrics into classification evaluation metrics and segmentation evaluation metrics.

3.1. Classification Evaluation Metrics

Classification metrics are derived from the confusion matrix, which shows the number of correct and incorrect classifications as compared to the ground truth labels [23]. Examples of derived metrics include accuracy, error rate (ER), true positive rate (TPR), and true negative rate (TNR).

Accuracy represents the number of instances (e.g., images or pixels) that are correctly classified divided by the total number of instances in the dataset: $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, where TP, TN, FP, and FN represent true positive, true negative, false positive, and false negative, respectively. ER measures the percentage of incorrect classifications. It is obtained by dividing the number of incorrectly classified instances by the total number of instances, which is equivalent to subtracting the accuracy percentage from 100. TPR (a.k.a. recall or sensitivity) measures the percentage of actual positive examples that are correctly classified. TNR (a.k.a. specificity) measures the percentage of actual negatives that are correctly classified. The ROC (Receiver Operating Characteristic) curve [23] is another evaluation tool that is commonly used in medical applications. ROC plots the false positive rate (FPR) on the X-axis and TPR on the Y-axis at different threshold settings of the classifier. The closer the curve climbs toward the top-left corner, the better the classification performance. The area under the ROC curve, known as AUC, is used to measure the quality of the classification models. The value of AUC ranges from 0 to 1, where 1 indicates perfect classification and 0.5 corresponds to random guessing.
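
The confusion-matrix metrics described above can be sketched in a few lines of Python. This is a minimal illustration, not code from any reviewed work: the function names and the example counts are hypothetical, and the AUC is computed through its rank-based interpretation (the probability that a randomly chosen positive receives a higher score than a randomly chosen negative) rather than by sweeping thresholds.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, error rate, TPR, and TNR from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    return {
        "accuracy": accuracy,
        "error_rate": 1.0 - accuracy,   # same as (fp + fn) / total
        "tpr": tp / (tp + fn),          # recall / sensitivity
        "tnr": tn / (tn + fp),          # specificity
    }

def roc_auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical counts and classifier scores, for illustration only.
m = classification_metrics(tp=40, tn=45, fp=5, fn=10)
auc = roc_auc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0])
```

With these example counts, accuracy is 0.85, TPR is 0.8, and TNR is 0.9; three of the four positive-negative score pairs are ordered correctly, so the AUC is 0.75.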

3.2. Segmentation Evaluation Metrics

Roughly, segmentation evaluation metrics can be classified as similarity-based metrics, distance-based metrics, and statistical-based metrics.

Similarity-based metrics measure the similarity between the automatically segmented region and the manually segmented region. This region can be the left ventricle (LV) cavity in B-mode images or the spectral envelope in Doppler images. Examples of the most common similarity-based metrics include Jaccard similarity index (JSI) and Dice similarity index (DSI). JSI evaluates the segmentation performance using TP, FP, and FN counts as follows [24]: $\mathrm{JSI} = \frac{TP}{TP + FP + FN}$, where TP represents the pixels that are correctly classified as the target cardiac region, FP represents the background pixels that are falsely classified as the target region, and FN represents cardiac pixels that are falsely classified as background. DSI is another similarity-based metric that measures the similarity or intersection between the automatically labeled pixels and manually labeled pixels. Mathematically, this metric is formulated as follows [24]: $\mathrm{DSI} = \frac{2\,TP}{2\,TP + FP + FN}$. The main difference between JSI and DSI is that DSI counts TP twice while JSI counts TP once. The value of both JSI and DSI ranges from 0 to 1, where 0 indicates complete dissimilarity and 1 indicates complete similarity. Intersection over Union (IoU), which divides the area of overlap between two regions by the area of their union, is another name for JSI.
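
As a minimal sketch of how these overlap metrics are computed on binary masks, the following Python snippet counts TP, FP, and FN pixel-wise. The function name `overlap_metrics` and the toy 3×3 masks are assumptions made for illustration.

```python
import numpy as np

def overlap_metrics(pred_mask, true_mask):
    """Return (JSI, DSI) for two binary masks of the same shape."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    tp = np.count_nonzero(pred & true)    # target pixels found by both
    fp = np.count_nonzero(pred & ~true)   # background labeled as target
    fn = np.count_nonzero(~pred & true)   # target labeled as background
    # Note: both ratios are undefined when the two masks are empty.
    jsi = tp / (tp + fp + fn)
    dsi = 2 * tp / (2 * tp + fp + fn)
    return jsi, dsi

# Toy masks: automatic segmentation (pred) vs. manual segmentation (true).
pred = np.array([[1, 1, 0], [1, 1, 0], [0, 0, 0]])
true = np.array([[0, 1, 1], [0, 1, 1], [0, 0, 0]])
jsi, dsi = overlap_metrics(pred, true)
```

Here TP = FP = FN = 2, giving JSI = 1/3 and DSI = 1/2. The two indices are monotonically related through DSI = 2·JSI / (1 + JSI), so they always rank segmentations in the same order.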

A single evaluation category may not be enough to evaluate the performance of a segmentation algorithm. In addition, similarity-based metrics only report the degree of overlap and do not consider the location of, or distance between, the segmentation and the ground truth. Distance-based metrics, on the other hand, consider how far apart the segmentation and ground truth are from each other. Average Contour Distance (ACD) and Average Surface Distance (ASD) are two distance-based metrics that are commonly used to evaluate region segmentation. Both ACD and ASD are measured in millimeters (mm) [24].
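
A distance-based metric can be sketched as follows. The exact definition of ACD varies between papers; the version below is an assumption made here, namely the symmetric mean of nearest-point distances between two contours, scaled by an isotropic pixel spacing. The function name and the `spacing_mm` parameter are hypothetical.

```python
import numpy as np

def average_contour_distance(contour_a, contour_b, spacing_mm=1.0):
    """Symmetric mean nearest-point distance between two contours, in mm.

    Each contour is a sequence of (row, col) pixel coordinates; spacing_mm is
    the assumed isotropic pixel size.
    """
    a = np.asarray(contour_a, dtype=float)
    b = np.asarray(contour_b, dtype=float)
    # Pairwise Euclidean distances between every point of a and every point of b.
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    a_to_b = d.min(axis=1).mean()   # average distance from a to its nearest point on b
    b_to_a = d.min(axis=0).mean()   # and the reverse direction
    return spacing_mm * 0.5 * (a_to_b + b_to_a)

# Two short contours that are 3 pixels apart, with 0.5 mm pixels.
auto_contour = [(0, 0), (0, 1)]
manual_contour = [(3, 0), (3, 1)]
acd = average_contour_distance(auto_contour, manual_contour, spacing_mm=0.5)
```

In this toy case every point lies 3 pixels from the other contour, so the result is 1.5 mm. Unlike the overlap indices, the value grows with the size of the boundary error, even when two regions do not overlap at all.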

Statistical-based metrics are used to measure the correlation between the automatic and manual segmentation. Specifically, statistical-based metrics evaluate the accuracy of segmentation by measuring the correlation between the cardiac indices calculated from the automatic segmentation and the manual indices. Correlation coefficient (CC) and Bland-Altman agreement (B&A) are two important statistical metrics that are commonly used to evaluate the performance of cardiac segmentation. CC measures the correlation between two sets of data. The mathematical formula of CC, which can be found in [25], returns a value that ranges from −1 to 1, where 1 indicates a perfect positive correlation, −1 indicates a perfect negative correlation, and 0 indicates no correlation. B&A measures the agreement between two sets of measurements using the mean difference and the limits of agreement. The mathematical formulation of B&A and a comparison with the CC metric can be found in [26].
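
The two statistical metrics can be sketched with the Python standard library. This is an illustrative sketch under stated assumptions: CC is taken to be the Pearson coefficient, the limits of agreement use the common mean ± 1.96 standard deviations convention, and the ejection fraction values are made up.

```python
from statistics import mean, stdev

def pearson_cc(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def bland_altman(x, y):
    """Return (bias, lower limit, upper limit) of the paired differences x - y."""
    diffs = [a - b for a, b in zip(x, y)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)   # sample standard deviation
    return bias, bias - half_width, bias + half_width

# Hypothetical ejection fractions (%) from automatic and manual segmentation.
auto_ef = [55.0, 60.0, 48.0, 62.0, 35.0]
manual_ef = [57.0, 59.0, 50.0, 65.0, 36.0]
r = pearson_cc(auto_ef, manual_ef)
bias, lower, upper = bland_altman(auto_ef, manual_ef)

# A constant offset leaves CC at 1 but shows up as bias in Bland-Altman.
shifted = [v + 10.0 for v in manual_ef]
r_shifted = pearson_cc(manual_ef, shifted)
bias_shifted, _, _ = bland_altman(manual_ef, shifted)
```

The last three lines show why the two metrics are usually reported together: a method that overestimates every measurement by the same amount is perfectly correlated with the reference, yet its Bland-Altman bias reveals the systematic disagreement.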

4. Automated Echo Analysis

As computing technology and machine intelligence algorithms evolve, automated analysis of echocardiograms has the potential to improve clinical workflows and enhance diagnostic accuracy. This section provides a comprehensive review of existing automated methods for four tasks: echo quality assessment, mode/view classification, boundary segmentation, and CVD classification. The automated methods for these tasks can be divided, based on the underlying algorithm, into low level image processing-based methods, deformable model-based methods, statistical model-based methods, conventional machine learning-based methods, and deep learning-based methods. Table 1 summarizes the advantages and disadvantages of these five algorithm categories.

TABLE 1.

Strengths and limitations of algorithm categories

| Algorithm Category | Strengths | Limitations |
|---|---|---|
| Low Level Image Processing (e.g., Thresholding and Edge Detection) | Simple implementation; low computational complexity | Sensitive to the image’s noise and artifacts; perform poorly when applied to obscured images and images with unclear boundaries, non-uniform regional intensities, and confusing structures |
| Deformable Models (e.g., Active Contour Model) | Can segment any shape; highly flexible | Sensitive to the initial contour location/shape; perform poorly when the shape varies widely; tend to become computationally complex |
| Statistical Models (e.g., Active Appearance Model) | Use intensity and shape information; highly effective | Require proper initialization; expensive manual shape annotations; perform poorly when the shape varies widely; local minimum trap |
| Conventional Machine Learning (e.g., Random Forest Trees) | Good to high performance; good interpretability | Look at specific handcrafted features; bias of the engineer who designs the method; require a set of annotated data |
| Deep Learning (e.g., Convolutional Neural Network) | Superior performance | Require a large set of annotated data; long tuning/training process; lack of interpretability |

4.1. Literature Review Design

To ensure the reproducibility of this review, we present our search and selection strategies. A flowchart of our literature review is depicted in Figure 1.

Fig. 1.

Flowchart of our review. The histogram associated with each mode represents the number of automated works for each task.

4.1.1. Search Strategy

We did a systematic review of automated echocardiography using PubMed, IEEE Xplore, Google Scholar, Google Datasets, ACM Digital Library, CiteSeer, PLOS ONE, and Scopus search engines. We searched for scientific conferences, journal articles, technical reports, and dataset papers published up to February 2020, and retrieved relevant literature by using a combination of keyword terms. Examples of these terms include cardiac imaging; automated echo analysis and interpretation; echo review/survey; echo mode/view classification; echo disease classification; echo quality assessment; image-based analysis echo; machine learning-based analysis echo; deep learning-based analysis echo; chamber/envelope/wall segmentation echo; and echocardiographic datasets. Terms related to echocardiographic hardware and other cardiac imaging modalities (e.g., CT and MRI) are excluded because they are outside the scope of this review. We retrieved, using this search strategy, a total of 193 studies.

4.1.2. Selection Strategy

We included a study if all of the following criteria were fulfilled: (1) the full text is written in English; (2) the study includes a clear description of the technical method and the dataset used; (3) the study is published as a full conference paper, journal article, open access article, or technical report; and (4) the study was published in 2004 or later, because interest and publications in automated echocardiography analysis using image processing and machine learning began to rise around that time. We screened the retrieved papers independently and excluded the ones that failed to adhere to these criteria. We included, using this strategy, a total of 94 papers in this systematic review. The selected papers were loaded into EndNote X8 and categorized into different groups.

4.2. Quality Assessment

Unlike other cardiac imaging modalities, the diagnostic accuracy of echocardiography is highly dependent on the image quality at the acquisition stage, and this quality in turn depends highly on the technician’s expertise. Automated echo quality assessment provides a quality score for a given image or categorizes the image as low- or good-quality. These methods can aid during echo acquisition by providing real-time feedback and automatically rejecting low-quality cases. We divide existing automated quality assessment methods into two categories: model-based methods and deep learning-based methods. Table 2 summarizes current automated methods for echo quality assessment.

4.2.1. Model-based Methods

One of the first automated methods for assessing echo quality is presented in [27]. The proposed method models each of the four chambers (left ventricle [LV], right ventricle [RV], left atrium [LA], right atrium [RA]) of the A4C view with non-uniform rational B-splines (NURBS) using 12 control points. Then, the NURBS models for all chambers are joined by similarity transforms to create a complete view model. Finally, the model goodness-of-fit is used to calculate a quality score. The proposed method is tuned using 35 B-mode (A4C) echo videos recorded from 4 healthy volunteers. The recorded videos range from good quality to completely erroneous. Each of the recorded videos is scored as having good, fair, or poor quality by 2 cardiologists. The proposed method improved the quality of the recorded A4C images from poor to fair or good in 89% of cases (8 of 9).

Another B-mode echo quality assessment method is presented in [28]. The presented method assesses the quality by comparing the structure of a representative atlas (model) with the structure of the input image. The structure of the PLAX atlas is generated from 89 manually segmented images, while the structure of the input image is generated using thresholding and the Generalized Hough Transform (GHT). The proposed method is evaluated using echo data (133 PLAX images) of 35 normal and hypertrophic patients. Each image is scored by an expert sonographer as having poor, moderate, or good visibility. The automatically generated scores achieved good correlation with manual ratings (correlation coefficient = 0.84).

Although model-based methods for echo quality assessment can achieve good performance, these methods are view-specific because they require generating a specific model or template for each view. In addition, the accurate generation of the template relies heavily on human experts or the image’s contrast. For example, the methods of Snare et al. [27] and Pavani et al. [28] are each designed for a specific B-mode view (A4C [27] or PLAX [28]), the latter requires manual annotation [28], and both rely heavily on the presence of sharp edges in the image; i.e., they would fail when applied to low-contrast images.

4.2.2. Deep Learning-Based Methods

Abadi et al. [29] proposed a regression CNN architecture for assessing the quality of B-mode videos (A4C view). The proposed architecture is composed of two convolutional layers, each followed by Rectified Linear Units (ReLU), two pooling layers, and two fully connected layers. The loss function (L2 norm) outputs the Euclidean distance between the network score and the manual quality score. The proposed regression CNN architecture is trained using stochastic gradient descent (SGD), a batch size of 16, a momentum of 0.95, weight decay of 0.02, and an initial learning rate of 0.0002. The architecture is trained using 2,344 end-systolic A4C frames. Evaluation on a test set of 560 frames yielded a mean absolute error (MAE) of 0.87 ± 0.72.
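
The loss and the reported evaluation figure can be made concrete with a small sketch. It is not the authors' code: the function names and scores are hypothetical, and reading the "±" term as the standard deviation of the absolute errors is an assumption, since the source does not define it.

```python
from statistics import mean, pstdev

def l2_loss(predicted, manual):
    """Euclidean distance between network scores and manual quality scores."""
    return sum((p - m) ** 2 for p, m in zip(predicted, manual)) ** 0.5

def mae_with_spread(predicted, manual):
    """Mean absolute error and (assumed) population std of the absolute errors."""
    abs_errors = [abs(p - m) for p, m in zip(predicted, manual)]
    return mean(abs_errors), pstdev(abs_errors)

# Hypothetical quality scores for four frames.
predicted = [3.0, 1.0, 4.0, 2.0]
manual = [4.0, 1.0, 2.0, 2.0]
loss = l2_loss(predicted, manual)
mae, spread = mae_with_spread(predicted, manual)
```

The L2 loss penalizes large deviations quadratically during training, whereas MAE is reported in the same units as the quality score, which makes it easier to interpret at evaluation time.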

Abadi et al. [30] extend their previous work [29] to include other cardiac views, namely A2C, A3C, A4C, PSAX at the aortic valve, and PSAX at the papillary muscle, and to operate on echo cine loops instead of static frames. The proposed multi-stream network architecture consists of five regression models that share the same weights across the first few layers. The last layers of the architecture are view-specific. Similar to [29], the loss function (L2 norm) for each view computes the Euclidean distance between the network score and the manual quality score. The architecture is trained using the Adam optimizer and random initialization. This method, which is trained using 4,675 cine loops, achieved a mean quality score accuracy of 85% ± 12 when applied to 1,144 testing cine loops.

In summary, there has been little effort [27], [28], [29], [30] to create automated methods for B-mode echo quality assessment. In the case of M-mode, we are not aware of any automated method for assessing the quality of the acquired images. As for Doppler, we are only aware of a recent deep learning-based method presented by Zamzmi et al. in [31]. The proposed method, which was trained on labeled images (good- and bad-quality) representing a wide range of real-world clinical variation, achieved 88.9% overall accuracy. We refer the reader to [31] for a detailed description of the method and presentation of the results.

Existing methods for assessing B-mode echo quality can be divided into model-based methods and deep learning-based methods. As shown in Table 2, deep learning-based methods [29], [30] achieved better performance than model-based methods [27], [28]. The higher performance of deep learning-based methods could be attributed to the larger datasets used in these studies [29], [30] as well as to more complex feature extraction and model learning. In addition, the deep learning-based method proposed in [30] is evaluated on a dataset collected from different US machines under different configurations, in contrast to the methods presented in [27], [28]. Such a setting for data collection makes it more likely that the proposed method would be clinically relevant. Another advantage of deep learning-based methods is that they do not require the user to build a model or template for each view.

In the future, we expect to see more deep learning methods that extend existing B-mode quality assessment methods and that cover all echo modes and views collected using multiple vendors under different configurations. We also expect the quality assessment task to be integrated into acquisition software to provide a quality score for recorded echo frames in real time.

4.3. View Classification

Mode or view classification is the categorization of echo images into different cardiac modes (e.g., B-mode) or views (e.g., A4C). Automating this task offers two main benefits. First, it facilitates the organization, storage, and retrieval of echo images. Second, it is important for automating subsequent tasks. For example, measuring the function of a specific valve requires knowing the view beforehand because different views show different valves. We broadly categorize existing methods for mode/view classification into conventional machine learning-based methods and deep learning-based methods. Table 3 provides a summary and quantitative comparison of these methods.

TABLE 3:

Quantitative comparison of automated methods for mode/view classification. A2C-A3C-A4C-A5C (apical 2-3-4-5 chamber views), PLAX (parasternal long axis view), PSAX (parasternal short axis view), PSA (parasternal short axis), PSAM-PSAP (PSA of mitral and papillary), IVC (inferior vena cava), SC2C-SC4C-SCLX (subcostal 2–4 chamber and long axis), GSAT (Gray-Level Symmetric Axis Transform), SVM (support vector machines), TPR (True Positive Rate).

| Work | ROI Method | Mode & View | Method | System & Data | Train & Test | Ground Truth | Performance |
|---|---|---|---|---|---|---|---|
| [32] | NA | B-mode: A2C, A4C, PLAX, PSAX, SC2C, SC4C, SCLX, other | Conv. ML method: GIST descriptor, probabilistic SVM | Philips CX50; 33Hz; 270 videos, 5–10 heartbeats | Train: 2700, Test: 2700 frames | Domain expert annotation for all views | TPR (Section 3.1): A2C-A4C (100), SAX (100), LAX (98), SC2 (96), SC4 (100), SCL (64), other (96) |
| [35] | LV Detectors (MLBoost) in all views | B-mode: A2C, A4C, PLAX, PSAX | Conv. ML method: Fusion of LV detectors; multi-class boosting | System: NA; 1303 videos, A2C (371), A4C (574), PSAX (203), PLAX (155) | Train: 1080; Test: A2C (61), A4C (96), PSAX (28), PLAX (38) | Manually localized LV regions | TPR (Section 3.1): A2C (93.5), A4C (97.9), PSAX (96.4), PLAX (97.4) |
| [36] | GSAT Detector | B-mode: A4C, PLAX, PSAX | Conv. ML method: Relational structures, Markov Random Field, multi-class SVM | System: NA; 15 normal vid., 2657 i-frames; 6 abnormal, 552 i-frames | Train: 2657, leave-one-out; Test: 552 | Domain expert annotation for all views | Average precision (Section 3.1): 88.35% |
| [37] | Manual | B-mode: A2C, A3C, A4C, A5C, SAB, SAP, PLA, PSAM | Conv. ML method: Optical flow, edge-filtered map, SIFT features, SVM | System: NA; 113 vid., 25 Hz, 320×240 pix., 2470 frames | Leave-one-out | Manual labeling | TPR (Section 3.1): A2C (51), A3C (54), A4C (93), A5C (61), SAB (1.0), SAP (93), PLA (88), PSAM (71) |
| [46] | NA | B-mode: A2C, A3C, A4C, PLAX, PSAX, IVC, other | Deep learning: VGG-based CNN with 6 classes; ADAM, 64 batch, 1 × 10−5 learning rate, 10–20 epochs; 2 hr training (GTX 1080), 600 ms runtime | System: NA; > 4000 studies | Train: 40,000 images; Test: IVC (159), A2C (555), A3C (174), A4C (756), PLAX (515), PSAX (458) | Manual labeling | TPR (Section 3.1): IVC (100), A2C (94), A3C (93), A4C (98), PLAX (99), PSAX (99.5) |
| [49] | NA | B-mode: A2C, A4C, PLAX, PSAX, ALAX, SC4C, SCVC, unknown | Deep learning: Inception-based CNN with 7 classes; Adam, 10−4 rate, 64 mini-batch, 100 epochs | GE Vivid E9, 4582 vid., 205 patients, avg. age: 64; GE Vivid E7, 2559 vid., 265 patients, avg. age: 49 | Train: 4582 vids., 256,649 frames; Test: 2559 vids., 229,951 frames | Manual labeling | Overall accuracy: Frame (98.3 ± 0.6), Video (98.9 ± 0.6); runtime (4.4 ± 0.3) ms (GPU) |
| [50] | NA | B-mode: 12 apical, parasternal, subcostal, suprasternal views | Deep learning: Lightweight VGG, DenseNet, and ResNet based models; ADAM, 1−4 rate, 300 batch | Philips, GE, and Siemens systems; 3,151 patients, 16,612 cines, 807,908 frames | Patient level split: Train (60%), Valid (20%), Test (20%) | Manual labeling by senior cardiologist | Overall accuracy: 88.1%; fusion of 3 models |
| [51] | NA | B-mode: A2C, A3C, A4C, A5C, PLAX, PSAX, PSAM, PSAP | Deep learning: Spatial CNN, input: raw image; Temporal CNN, input: acceleration image | GE Vivid 7 or E9; 432 vid.; age: 7–85; 434×636, 26fps; 341×415, 26fps | Train: 280, Test: 152; Re-sized: 227×227×26 frames | Clinicians in 2 hospitals labeled 8 views | TPR (Section 3.1): A2C:100, A3C:100, A4C:100, A5C:71.4, PLAX:96, PSAX:95, PSAM:88, PSAP:75 |

4.3.1. Conventional Machine Learning-Based Methods

These methods use handcrafted features extracted from a detected ROI with conventional machine learning classifiers to perform view classification. For example, Wu et al. [32] proposed a global approach that uses the GIST descriptor with support vector machines (SVM) for classifying 8 B-mode views: PSAX, PLAX, A2C, A4C, SC4C, SC2C, SCLX, and other. The GIST descriptor computes the spectral energy of the image and outputs a single feature vector. It uses blocks (4 pixels × 4 pixels) that contain several oriented Gabor filters to model the structure of the image. The final feature vector that represents the entire image is generated by moving these blocks over the image to generate spectrograms and then concatenating the generated spectrograms. The extracted feature vectors for all images are used to train a probabilistic SVM. The proposed method achieved 98.51% overall accuracy when evaluated on a testing set. Other methods that use descriptors similar to GIST with SVM can be found in [33] (scale-invariant feature transform [SIFT] descriptor) and [34] (histogram of oriented gradients [HOG] descriptor).

An earlier machine learning-based method for view classification is presented in [35]. The first stage of this method involves training LV detectors for four B-mode views (A4C, A2C, PLAX, PSAX) using a previous approach that incorporates Haar-wavelet type local features and a boosting learning technique. Then, global templates (A4C/A2C template, PLAX template, and PSAX template) are constructed based on the detected LV regions and sent to multi-class classifiers. Each classifier is trained using the training images provided by its detector. The final classification is obtained by fusing the classes of all views. The proposed method achieved a classification accuracy of over 96% when evaluated on a testing set. This method requires a consistent presence of the LV in all views, which limits its use to cases that satisfy this constraint.

Instead of building individual LV detectors for each view, Ebadollahi et al. [36] proposed a method that detects the location of chambers using a generic detection approach (GSAT detector). The method models the spatial relationships among cardiac chambers to detect different views. For each view, the chambers’ spatial relationships and the statistical variations of their properties are modeled using a Markov Random Field (MRF) relational graph. The method depends on the assumption that if two images contain the same chambers and each chamber is surrounded by similar chambers, then the probability that these two images belong to the same view is high. Each model or “cardiac constellation” is assigned a vector of energies according to the different view-models. The energy vectors obtained from all the training images are used to build a multi-class SVM. Evaluating the proposed method using leave-one-video-out cross-validation (LOOCV) achieved up to 88.35% average precision. The dataset used for training and testing the method contains 15 normal echo videos, 6 abnormal echo videos, and 10 B-mode views: 2 PLAX views, 4 PSAX views, and 4 apical views. The normal cases are used for training and testing, and the abnormal cases are used only for testing. The main limitation of this method is its sensitivity to the ROI detector, noise, and image transformations.

The methods presented in [32], [33], [34], [35], [36] use spatial features extracted from static images instead of videos. In [37], Kumar et al. incorporate temporal or motion information with spatial features to classify 8 B-mode views: A2C, A3C, A4C, A5C, PLAX, PSAX, PSAP, and PSAM. The method starts by manually locating the ROI in all videos and then aligning these videos (affine transform) using the extreme corner points of the fan sector. Then, optical flow is applied to each frame to obtain the motion magnitude. Because motion in an echo video is only useful when it is associated with anatomical structures, the motion magnitude images are filtered using an edge map computed on image intensity. After obtaining the edge-filtered motion maps, several landmark points are detected using the SIFT descriptor. Once the salient features are detected and encoded for each frame, the salient features of all frames in the training dataset are used to construct a hierarchical dictionary. This dictionary is used to train a kernel-based SVM. To classify a new input video, the trained classifier provides a label for each frame in the video, and majority voting is used to decide the final class label of the video. The proposed method, which is trained using 113 videos, achieved 51%−100% correct classification rates when evaluated on a testing set. The main strength of this method is that it does not require constructing spatial and temporal models for each cardiac view. Other conventional methods for view classification are presented in [38] (bag of visual words with SVM), [39] (gradient features and logistic trees), [40] (visual features and boosting), [41] (B-spline and thresholding), and [42] (histogram features and neural network).
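The frame-to-video decision rule described for [37] can be sketched in a few lines. This is a minimal illustration, assuming per-frame labels are already available from some classifier; the function name and the tie-breaking behavior (first label encountered wins) are assumptions, not details taken from [37].

```python
from collections import Counter


def classify_video(frame_labels):
    """Return the most frequent per-frame label as the video-level label."""
    if not frame_labels:
        raise ValueError("video has no frame predictions")
    counts = Counter(frame_labels)
    # most_common orders equal counts by first occurrence, so ties are
    # resolved in favor of the label seen first (an assumed convention).
    label, _ = counts.most_common(1)[0]
    return label


# Hypothetical per-frame predictions for one video.
frame_predictions = ["A4C", "A4C", "A2C", "A4C", "PLAX", "A4C"]
video_label = classify_video(frame_predictions)
```

Voting over frames makes the video-level label robust to a few misclassified frames, which matters for views that look alike in parts of the cardiac cycle.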

Instead of using handcrafted features with traditional classifiers, convolutional neural networks (CNNs) can provide objective features extracted directly from the image at multiple levels of abstraction while preserving the spatial relationships between image pixels. These networks have achieved state-of-the-art performance in different medical domains, including echocardiography.

4.3.2. Deep Learning-Based Methods

Recent works utilize CNN architectures for feature extraction and classification. Examples of CNN architectures that have been used for cardiac view classification include VGG [43], DenseNet [44], and ResNet [45].

For example, Zhang et al. [46] used a VGG CNN [43] for distinguishing 6 B-mode views: A2C, A3C, A4C, PLAX, PSAX, and other. Prior to feature extraction and classification, each frame is converted to grayscale and re-sized (224 × 224). The re-sized image is then sent to VGG [43]. The output of the network is the view that has the highest softmax probability. The entire network is trained using 40,000 pre-processed images with the ADAM optimizer, a 1 × 10−5 learning rate, a mini-batch size of 64, and 20 epochs. Testing the trained network using a cross-validation protocol achieved excellent accuracy (e.g., 99% accuracy for A4C views). Zhang et al. expanded their work in [47] to distinguish 23 different echo views. The code and model weights for both works are available online [46], [47]. Similarly, Madani et al. [48] used a VGG-based [43] method to distinguish 15 different echo views: 12 views from B-mode (e.g., PLAX and A4C), M-mode, and two Doppler views (CWD and PWD). The final layer of VGG-16 performs classification using a softmax function with 15 nodes. The network is trained using RMSprop optimization over 45 epochs. The overall test accuracy of distinguishing the 12 B-mode views is above 97%. The accuracies of distinguishing CWD, PWD, and M-mode views are 98%, 83%, and 99%, respectively.

Another recent architecture for cardiac view classification is presented in [49]. The proposed cardiac view classification (CVC) architecture is designed and trained to distinguish 7 B-mode views, namely A2C, A4C, PLAX, PSAX, SC4C, SCVC, and ALAX, as well as an unknown view. The pre-processing stage involves normalizing the image and down-sampling it to 128 × 128. The input image is then sent to a series of deep learning blocks, where each block consists of a convolution layer, a max-pooling layer, an inception layer, and a concatenation layer. This network is trained over a maximum of 100 epochs using the Adam optimizer and a mini-batch size of 64. Cross-entropy and mean absolute error (MAE) loss functions are used for computing the error and updating the weights, which are initialized using the Uniform method. Ten-fold patient-based cross-validation is used for training and validation (265,649 frames), while an independent set of unseen data (229,951 frames) is used for testing. The proposed CVC network achieved 97.4% and 98.5% overall accuracies for frame-level and sequence-level view classification, respectively.
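Patient-based cross-validation, as used in [49], keeps all frames of a patient in the same fold so that no patient contributes to both training and validation. The sketch below shows one simple way to build such folds; the round-robin assignment, the function names, and the toy patient IDs are assumptions for illustration and are not taken from [49].

```python
from collections import defaultdict


def patient_level_folds(frame_patient_ids, n_folds=10):
    """Assign frame indices to folds so each patient lies in exactly one fold."""
    frames_by_patient = defaultdict(list)
    for frame_idx, patient_id in enumerate(frame_patient_ids):
        frames_by_patient[patient_id].append(frame_idx)

    folds = [[] for _ in range(n_folds)]
    # Round-robin over sorted patient IDs (assumed strategy); a real study
    # might shuffle patients or balance folds by frame count instead.
    for i, patient_id in enumerate(sorted(frames_by_patient)):
        folds[i % n_folds].extend(frames_by_patient[patient_id])
    return folds


def train_val_split(folds, val_fold):
    """Use one fold for validation and all remaining folds for training."""
    val = list(folds[val_fold])
    train = [idx for k, fold in enumerate(folds) if k != val_fold
             for idx in fold]
    return train, val


# Hypothetical data: 6 frames from 3 patients, 3 folds for brevity.
patient_ids = ["p1", "p1", "p2", "p3", "p3", "p2"]
folds = patient_level_folds(patient_ids, n_folds=3)
train_idx, val_idx = train_val_split(folds, val_fold=0)
```

Splitting by patient rather than by frame avoids the optimistic bias that arises when nearly identical frames from the same video appear on both sides of the split.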

A lightweight cardiac view classifier was recently introduced by Vaseli et al. [50] to distinguish 12 B-mode views. Several lightweight deep learning models are built based on three CNN architectures: VGG-16 [43], DenseNet [44], and ResNet [45]. These lightweight models contain approximately 1% of the parameters of the regular deep models and are 6 times faster at run-time. The training parameters for all the deep and lightweight models can be found in [50]. These models are trained and evaluated using 16,612 echo videos collected from 3,151 patients. Combining the three lightweight models achieved an accuracy of 88.1% in classifying 12 cardiac views.

Instead of only extracting deep features from static images, Gao et al. [51] fused the outputs of spatial and temporal networks to classify the cardiac view of echo videos. The spatial CNN takes a 227 × 227 frame as input and sends it to 7 convolutional layers for deep feature extraction. The temporal CNN takes as input the acceleration image, generated by applying optical flow twice, and sends this image to 7 convolutional layers for feature extraction. Then, the outputs of the spatial and temporal CNNs are fused together. The final view classification is obtained by a linear combination of both CNNs’ scores using a softmax function, which provides the probabilities of 8 classes (A2C, A3C, A4C, A5C, PLAX, PSAX, PSAM, and PSAP). Both networks are trained using random initialization, a 0.01 learning rate, and 120 epochs. Evaluating the proposed method on 152 echo videos achieved 92.1% accuracy. The accuracy of view classification using only the spatial CNN is 89.5%.
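The late-fusion step described for [51] can be illustrated as follows. This is a sketch under stated assumptions: the equal fusion weight, the function names, and the score values are hypothetical, and the view names follow Table 3; the actual combination weights used in [51] are not given here.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(spatial_scores, temporal_scores, views,
                      spatial_weight=0.5):
    """Linearly combine two score vectors, apply softmax, pick the top view."""
    if not (len(spatial_scores) == len(temporal_scores) == len(views)):
        raise ValueError("score vectors and view list must have equal length")
    fused = [spatial_weight * s + (1.0 - spatial_weight) * t
             for s, t in zip(spatial_scores, temporal_scores)]
    probs = softmax(fused)
    best = max(range(len(views)), key=lambda i: probs[i])
    return views[best], probs


VIEWS = ["A2C", "A3C", "A4C", "A5C", "PLAX", "PSAX", "PSAM", "PSAP"]
# Hypothetical raw scores from the spatial and temporal networks.
spatial = [0.2, 0.1, 2.5, 1.9, 0.0, -0.3, 0.1, 0.0]
temporal = [0.0, 0.3, 1.0, 2.0, 0.1, 0.0, -0.2, 0.1]

view, probs = fuse_and_classify(spatial, temporal, VIEWS)
spatial_only_view, _ = fuse_and_classify(spatial, temporal, VIEWS,
                                         spatial_weight=1.0)
```

In this toy case the spatial scores alone favor A4C, while the fused scores favor A5C, which illustrates how motion information can change the decision between visually similar views.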

Table 3 provides a summary of automated view classification methods. As shown in the table, deep learning-based methods for view classification achieved excellent performance comparable to human inter-observer performance, and outperformed conventional methods in various views (e.g., A3C, A5C, PSAM, [37] vs [51]). In addition, deep learning-based methods are evaluated using larger datasets collected by different machines as compared to the conventional machine learning-based methods. These results suggest the superiority of deep learning-based methods in the presence of relatively large datasets and indicate better generalizability of these methods across machines and settings. Deep learning methods, however, suffer from interpretability and transparency issues (black box).

To summarize, the majority of current automated methods focus on detecting different views of B-mode. Only a few works [31], [48] include other echo modes such as M-mode and Doppler. Because automated view classification is critical to obtaining a fully automated and real-time system that can be used efficiently in clinical practice, future research should focus on developing automated and lightweight view classification for all echo modes (B-mode, M-mode, and Doppler).

4.4. Boundary Segmentation

The current practice for cardiac boundary segmentation requires technicians to perform manual delineation and then use the traced boundaries to compute structural and functional indices. This practice is tedious, error-prone, and subject to high intra- and inter-reader variation. In this section, we review automated methods for segmentation in B-mode, Doppler, and M-mode images, and provide a summary in Table 4.

TABLE 4:

Quantitative comparison of state-of-the-art segmentation methods. LV (Left Ventricle), A2C-A4C (apical 2–4 view), PLAX (parasternal long axis view), PSAX (parasternal short axis view), CW (continuous wave), PW (pulse wave), MV (mitral valve), AV (aortic valve), ED (end-diastole), ES (end-systole), RMSE (root mean square error), SRAD (speckle reduction anisotropic diffusion), PPG (peak pressure gradient), PHT (pressure half time), EPV (E-Wave Peak Velocity), EDT (E-Wave deceleration time), APV (A-Wave Peak Velocity), and ADU (A-Wave duration).

| Work | ROI Method | Mode & View | Method | System & Data | Ground Truth | Performance |
|---|---|---|---|---|---|---|
| [52] | Semi-automated | B-mode: A4C, LV | Low Level Image Processing: Pre-processing module (filtering & morphological operations); Segmentation module (watershed and contour correction) | ATL HDI 3000; 12 volunteers, 12 vid., 44fps, 900 frames | Manual contours by a specialist | CC (Section 3.2): 0.87; High (0.99±0.01), Average (0.90±0.024), Low (0.73±0.101) |
| [54] | Automated (k-means) | B-mode: A4C, all chambers | Low Level Image Processing: SRAD filtering, thresholding, edge detection | System: NA; 20 volunteers, 25 videos | Manual contours by a specialist | ** |
| [60] | NA | B-mode: A2C, LV | Deformable Model: Active contour, coupled optimization function | System: NA; 61 volunteers, 85 ED images | Manual contours for 85 images by echo expert | ** |
| [61] | NA | B-mode: PLAX, PSAX, LV | Deformable Model: Smoothing, Hough transform, active contour | System: NA; 11 volunteers, 15 ED images | Manual contours for 85 images by echo expert | ** |
| [62] | Manual | B-mode: A2C, A4C, LV | Deformable Model: Control points located manually (initial contour), B-spline snake (final contour) | System: GE Vivid 3; 50 ES and ED images | Manual contours and cardiac indices by echo expert | RMSE between auto and manual: LV area: 1.5, LV volume: 6.8, Ejection fraction: 4.6 |
| [72] | Manual | B-mode: A4C, LV | Statistical Model: Global despeckling, active appearance model training | System: NA; synthetic and clinical echo images, 56 normal fetuses | Manual contours by cardiologist | Pixel accuracy (Section 3.1): Synthetic (84.12%), Clinical (84.39%) |
| [76] | NA | B-mode: A4C, all chambers | Conventional Machine Learning: Adaptive Group Dictionary Learning, dictionary initialization, sparse group representation, pixel classification | System: NA; 40 clinical images of 50 normal fetuses | Manual contours by cardiologist | ** |
| [46] | NA | B-mode: A2C, A4C, PLAX, PSAX; all chambers | Deep Learning (Pixel Segmentation): 4 U-net CNN models trained using images and masks (A2C = 198, A4C = 168, PSAX = 72, PLAX = 128); Augmentation (cropping & blackout); Training: 2 hours on Nvidia GTX 1080; Runtime: 110ms per image on average | System: NA; Train: 566 images and masks; Test: 557 images | Manual segmentation of all chambers | ** IOU value (Section 3.2): 55% to 92% for all views and chambers |
| [82] | Manual | Spectral Doppler; long strips | Low Level Image Processing: Objective thresholding method, morphological operations, biggest-gap algorithm for peak detection | GE Vivid 5; 25 CW & PW normal images | Manual velocity time integral & peak velocity by cardiologist | CC (Section 3.2): velocity-integral (0.94), peak-velocity (0.98) |
| [83] | Detection based on axes fixed locations | Spectral CW Doppler | Low Level Image Processing: Noise filtering & contrast adjustment, Canny edge detector, envelope smoothing, peak detector | System: NA; 22 images; 11 normal subjects; 3 age groups | Manual peak velocity, PPG, and PHT by a cardiologist | CC (Section 3.2): Age G1 (20–35): 0.985, Age G2 (36–50): 0.922, Age G3 (51–65): 0.833 |
| [84] | Manual | Spectral CW Doppler | Low Level Image Processing: Texture filters (entropy, range, and standard deviation), thresholding, morphological operations | System: NA; 20 CW images; 25 patients with AR | Manual envelope contours by a cardiologist | ** |
| [63] | NA | Spectral Doppler, MV & AV | Deformable Model: Speckle resistant gradient vector flow, generalized gradient vector flow field | Philips devices; 30 patients, 10 with atrial fibrillation, 20 normal | Manual velocity time integral, peak velocity, & border contours by 2 experts | CC and B&A (Section 3.2): see [63] for complete results |
| [93] | NA | Spectral CW Doppler | Model-based: Reference image calculated from all training images (model), mapping or registration from input to reference | GE Vivid 7; 59 CW images; 30 normal volunteers | Manual envelope delineation by echo expert | ** |
| [96] | Automated; 3 trained detectors | Spectral Doppler: MV | Conventional Machine Learning: E peak detector (left root), A velocity detector (right root), peak detector; training shape inference model (mapping from image to its shape) | System: NA; 255 training, 43 testing | Manual Doppler indices (EPV, EDT, APV, ADU) by 2 sonographers | CC (Section 3.2): EPV (0.987), EDT (0.821), APV (0.986), ADU (0.481) |

** indicates that the performance is reported by superimposing the automated segmentation on raw images. We refer the reader to the actual papers for visualization of the results, as including these images would require obtaining permission from the publisher.

4.4.1. B-mode, Chamber Segmentation

We categorized the methods of chamber segmentation into five categories (Table 1): low level image processing-based methods, deformable model-based methods, statistical model-based methods, conventional machine learning-based methods, and deep learning-based methods.

Low Level Image Processing-based Methods:

Melo et al. [52] proposed a low level image processing-based method for segmenting the LV chamber. The proposed method has two main modules: a pre-processing module and a segmentation module. The pre-processing module takes a raw image, performs filtering and morphological operations, and sends the processed image to the segmentation module. This module uses the watershed algorithm for segmenting the LV border (PLAX view). After detecting the LV border, several structural indices, such as LV area, are computed. The proposed method is evaluated using videos of 12 healthy volunteers and measured using eight different metrics [52]. Amorim et al. [53] use a method similar to [52] for segmenting the LV border in PLAX images. The main difference between [52] and [53] is that Amorim et al. [53] apply the watershed algorithm to a composite image obtained by combining the images of three cardiac cycles; this exploits the similarity of corresponding frames from different cycles. As visually reported in [53], using the composite image led to increased delineation accuracy.

Instead of segmenting a specific chamber, John and Jayanthi [54] presented a low level image processing-based method for segmenting all cardiac chambers. The method starts by converting a 2D echo video (2 to 3 seconds) to grayscale frames. It then applies a Speckle Reducing Anisotropic Diffusion (SRAD) filter to remove speckle noise from the image. To approximate the chamber locations, the k-means algorithm is applied to create clusters of pixels with similar intensities, followed by thresholding using an empirically determined value. Visual results demonstrated good agreement between the contour obtained by the proposed method and the manual contour. This method fails to segment frames that have low contrast or dropouts on LV internal walls.
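The clustering-then-thresholding idea can be sketched on a toy image. The code below is a simplified illustration, not the implementation of [54]: it omits SRAD filtering, uses two clusters with a deterministic initialization, assumes chamber (blood pool) pixels are darker than the surrounding tissue, and uses a hypothetical threshold of 100 on 8-bit intensities.

```python
def kmeans_1d(values, k=2, n_iter=20):
    """Plain 1-D k-means on intensities; returns (centroids, labels)."""
    lo, hi = min(values), max(values)
    # Deterministic start: centroids evenly spaced over the intensity range.
    centroids = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    labels = [0] * len(values)
    for _ in range(n_iter):
        # Assign every value to its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(v - centroids[c]))
                  for v in values]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return centroids, labels


def chamber_mask(image, threshold):
    """Mark pixels whose cluster centroid is darker than `threshold`."""
    flat = [p for row in image for p in row]
    centroids, labels = kmeans_1d(flat, k=2)
    width = len(image[0])
    mask_flat = [1 if centroids[lab] < threshold else 0 for lab in labels]
    return [mask_flat[r * width:(r + 1) * width] for r in range(len(image))]


# Toy 4x4 grayscale frame: a dark cavity surrounded by bright tissue.
image = [
    [200, 190, 185, 195],
    [180, 20, 30, 190],
    [185, 25, 15, 200],
    [195, 190, 180, 185],
]
mask = chamber_mask(image, threshold=100)
```

Because the decision depends only on intensity, a frame with low contrast or wall dropouts would blur the two clusters, which is consistent with the failure cases noted above.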

Other low level image processing-based methods for chamber segmentation can be found in [55] (watershed algorithm), [56] (Otsu thresholding and edge detection), [57] (thresholding and morphological operations), [58] (watershed algorithms), and [59] (thresholding and morphological operations). The methods of this category are easy to implement and have low computational complexity as compared to the methods of other categories. However, these methods are highly sensitive to the signal-to-noise ratio (SNR). In addition, these methods perform poorly, and might completely fail, in detecting the border in images with obscure boundaries, non-uniform regional intensities, and confusing anatomical structures (e.g., valve).

Deformable Model-Based Methods:

Chen et al. [60] generate the active contour of the LV by solving a coupled optimization function that combines shape and intensity priors. The first optimization part is the weighted sum of the energy of the geometric contours of similar shapes. Minimizing this energy provides the initial contour and the transformation that aligns it to the prior shape. The geometric contours of all shapes, which are used to generate the prior shape, are obtained by manually tracing the cardiac boundaries of 85 images captured from 61 patients at end-diastole (ED). The second optimization part provides the optimal estimate of the weight by maximizing the mutual information of the image geometry (MIIG). Solving both parts generates the final LV segmentation. The visual results demonstrate that the proposed method can provide LV contours that are close to the contours provided by experts. They also show that MIIG provides a better description than mutual information (MI) because MIIG takes into account the neighborhood intensity distribution. MIIG, however, has a significant computational cost. A simpler active contour-based method is presented in [61]. The method combines the Hough transform and active contour to detect the LV in PSAX and PLAX images. The Hough transform is used to generate the initial LV shape. Active contour is then used to generate, via energy minimization, the final exact shape of the LV. The detected LV border is used to calculate the following indices: LV areas in PSAX and PLAX views, LV volume, LV mass, and wall thickness.

Conventional active contour methods suffer from slow convergence. In [62], Marsousi et al. used a B-spline snake algorithm for segmenting the endocardial boundary of the LV chamber. The presented method does not require expensive optimization computation and is faster than conventional active contour methods. The main limitation of this method lies in the selection of the initial contour; i.e., if the selected initial contour lies far from the actual boundary, more iterations of balloon force or Gradient Vector Flow [63] must be executed, which introduces error and greatly increases the time complexity. To avoid this problem, the method requires experts to manually select some points inside the LV chamber. To automate the point selection, Marsousi et al. extend their method in [64] to select the best initial contour using a novel active ellipse model. Particularly, the intersection point of all chambers in the A4C view is detected at the nearest point to the mass center. After detecting the point, an initial ellipse is placed on the top-left side of the point and then grown until it fits the boundary. This method is tested using 20 A2C and A4C images collected from normal and abnormal cases. A comparison between this approach [64] and the previous approach [62] is performed using Dice’s Coefficient (90.66 ± 5.17 [62] and 92.30 ± 4.45 [64]) and computational time (1.52 ± 0.82 [62] and 0.63 ± 0.29 [64]).
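Dice’s Coefficient, used above to compare [62] and [64], measures the overlap between an automatic and a manual segmentation as twice the size of their intersection divided by the sum of their sizes. A minimal sketch on binary masks follows; the toy masks and the convention of returning 1.0 for two empty masks are assumptions for illustration.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice = 2 * |A and B| / (|A| + |B|) for two binary masks."""
    flat_a = [p for row in mask_a for p in row]
    flat_b = [p for row in mask_b for p in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for a, b in zip(flat_a, flat_b) if a and b)
    size_sum = sum(1 for a in flat_a if a) + sum(1 for b in flat_b if b)
    if size_sum == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return 2.0 * intersection / size_sum


# Hypothetical automatic and manual LV masks (1 = inside the chamber).
auto_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
manual_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
dice = dice_coefficient(auto_mask, manual_mask)
```

The value lies between 0 and 1; multiplying by 100 gives the percentage form in which the results above are reported.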

Other deformable-based methods for chamber segmentation can be found in [65] (Speckle resistant Gradient Vector Flow and B-spline), [66] (variational level set approach), [67] (k-means and active contour), [68] (constrained level-set), [69] (phase-based level set evolution), [70] (phase-based level set evolution), and [71] (active contour model and SIFT). Although deformable-based methods provide accurate segmentation, these methods are view-specific and hence do not perform well with widely varying shapes. In addition, these methods are highly sensitive to the initial contours and tend to become computationally complex.

Statistical Model-Based Methods:

One of the first works that uses a statistical model for LV segmentation is presented in [72]. The proposed framework consists of three main stages: global despeckling, Active Appearance Model (AAM) training, and LV segmentation. The global despeckling reduces speckle noise while maintaining the important image features. The second stage involves generating an AAM that represents the shape and texture of all end-diastole (ED) and end-systole (ES) images in the training set. To model the shape from the training images, the manually labeled contour and four landmark points in each image are used to align and register the images in the training set. The appearance model of the AAM is generated using a weighted concatenation of three parts: the intensities of the original image shape, the intensities of the denoised image shape, and the mean gradient at each of the four landmark points. The final AAM is constructed from the eigenvectors corresponding to the largest eigenvalues obtained by applying PCA to the combined model (shape and texture model). The third stage involves positioning the model in a new target image by solving an optimization problem. The proposed approach is tested using two fetal datasets: synthetic fetal echo images and clinical fetal echo images. The overall segmentation accuracies of the proposed method are 84.12% and 84.39% for synthetic and clinical images, respectively. The visual results demonstrate the superiority of the proposed method as compared to methods that use active shape models (ASM) [73], [74] as well as conventional AAM and constrained AAM [75].

Statistical model-based methods for chamber segmentation are view-specific and cannot handle large variations in chamber shape and appearance. Also, these methods can easily be trapped in local minima and require manual annotation.

Conventional Classification-Based Methods:

The methods in this category utilize traditional machine learning approaches for labeling each pixel as chamber or background.

A machine learning-based method for fetal chamber segmentation is presented in [76]. The method starts by initializing a dictionary D0 as a random matrix and computing the sparse coefficients of this matrix (X0) from the training samples using Orthogonal Matching Pursuit (OMP). To generate a compact dictionary, sub-dictionaries (atoms) with utilization ratios less than a pre-determined threshold are discarded, followed by updating the atom indices and coefficients to obtain a new group dictionary. After learning the group dictionary D, a new test sample is converted to two sets of sparse coefficients, Xout and Xin, with respect to the Dout and Din sub-dictionaries, where the out and in subscripts indicate the area outside and inside the chambers. The corresponding reconstruction residues Rout and Rin are then calculated using a proposed reconstruction residue function.

The final boundary is obtained by classifying each pixel in the sample image as one or zero using the calculated minimum reconstruction residue. The proposed method, called Adaptive Group Dictionary Learning, is evaluated using 40 clinical fetal echocardiograms. The experimental results demonstrate the efficiency of the proposed method as compared to previous machine learning-based methods [77], [78]. The construction of only two sub-dictionaries limits the proposed method to images that have two intensity patterns, and suggests that it might fail when applied to images with several intensity patterns.
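The minimum-residue decision rule can be illustrated with a minimal sketch. It rests on several assumptions that are not taken from [76]: each pixel is represented by a small patch vector, the residue is the plain Euclidean reconstruction error, and sparse coding is reduced to a single matching-pursuit step (one atom per patch) rather than full OMP. The names `one_atom_residue`, `classify_patch`, `D_IN`, and `D_OUT` are hypothetical.

```python
import math

def normalize(atom):
    """Scale an atom to unit Euclidean norm."""
    norm = math.sqrt(sum(a * a for a in atom))
    return [a / norm for a in atom]

def one_atom_residue(sample, dictionary):
    """Smallest reconstruction residue when `sample` is coded with one atom.

    This is a single matching-pursuit step (an assumption, not full OMP):
    for a unit-norm atom, the best coefficient is its inner product with
    the sample.
    """
    best = float("inf")
    for atom in dictionary:
        unit = normalize(atom)
        coeff = sum(s * u for s, u in zip(sample, unit))
        residue = math.sqrt(sum((s - coeff * u) ** 2 for s, u in zip(sample, unit)))
        best = min(best, residue)
    return best

def classify_patch(sample, d_in, d_out):
    """Return 1 (inside the chamber) if D_in reconstructs the patch better, else 0."""
    r_in = one_atom_residue(sample, d_in)
    r_out = one_atom_residue(sample, d_out)
    return 1 if r_in < r_out else 0

# Toy sub-dictionaries (hypothetical): flat patches inside, textured patches outside.
D_IN = [[1.0, 1.0, 1.0, 1.0]]
D_OUT = [[1.0, -1.0, 1.0, -1.0], [1.0, 1.0, -1.0, -1.0]]

flat_patch = [0.2, 0.2, 0.2, 0.2]
textured_patch = [0.9, -0.8, 1.0, -0.9]
labels = [classify_patch(flat_patch, D_IN, D_OUT),
          classify_patch(textured_patch, D_IN, D_OUT)]
```

With only two sub-dictionaries, every patch is forced into one of two appearance classes, which is the limitation noted above.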

Deep Learning-Based Methods:

Semantic CNNs divide the image into different objects by labeling each pixel with the class of its enclosing object. These networks consist of only convolution and pooling layers organized in an encoder-decoder structure.

In [46], four separate semantic U-net models [79] are trained for segmenting the cardiac structures in PLAX, PSAX, A2C, and A4C views. The numbers of training samples (images and masks) for each model are 128, 72, 168, and 198 for PLAX, PSAX, A4C, and A2C, respectively. The training data for all models are augmented using cropping and blacking out techniques. All the models are trained using the ADAM optimizer with a learning rate of 1 × 10⁻⁴, a weight decay of 1 × 10⁻⁶, a dropout of 0.8 in the middle layers, a mini-batch size of 5, and 150 epochs. The trained models achieved good to excellent performance with IoU values that range from 73% to 92%. The segmented cardiac chambers for each image are used to compute geometric dimensions, volumes, mass, longitudinal strain, and ejection fraction. These indices are then used for assessing cardiac structure and function. As discussed in the paper, the proposed automated framework showed superior performance as compared to manual measurements across all cardiac indices. Recent studies that use U-net and FCN for chamber segmentation can be found in [80], [81].
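For reference, the intersection over union (IoU) score used to report segmentation quality can be computed as in the following minimal sketch. The masks are assumed to be binary and of equal size; the function name `iou` and the toy masks are illustrative and are not taken from [46].

```python
def iou(pred_mask, true_mask):
    """Intersection over union of two binary masks given as nested lists of 0/1."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly; IoU is defined as 1.0 in that case.
    return intersection / union if union else 1.0

# Toy 3x3 masks (hypothetical): 3 pixels overlap out of 5 labeled in either mask.
predicted = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
reference = [[0, 1, 1],
             [0, 1, 0],
             [0, 1, 0]]
score = iou(predicted, reference)
```

An IoU of 1 indicates perfect overlap, and values are often reported as percentages.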

Machine learning-based methods (conventional and modern) for chamber segmentation showed excellent performance and outperformed human experts. However, building robust machine learning-based methods requires relatively large and well-annotated datasets. Also, these methods, especially deep learning-based ones, can be computationally expensive. Further, these methods may segment pixels outside the desired cardiac region due to the lack of model constraints. Finally, existing deep learning-based methods lack interpretability; i.e., they do not interpret nonlinear features or show the important human-recognizable clinical features.

4.4.2. Doppler, Envelopes Segmentation

The accurate tracing of spectral envelopes and estimation of maximum velocities in Doppler images have great clinical significance. Next, we review existing methods for spectral envelope segmentation and provide a summary in Table 4.

Low Level Image Processing-Based Methods:

Zolgharni et al. [82] presented a thresholding-based method to detect spectral envelopes in long Doppler strips that span several heartbeats. Analyzing long Doppler strips allows additional velocity measures to be extracted and leads to a better understanding of cardiac function. The method starts by manually locating the Doppler region (ROI) followed by converting pixels to velocity on the vertical axis and pixels to time on the horizontal axis. The baseline (zero velocity) is then determined and used to separate the negative Doppler profiles. Positive Doppler profiles are detected using a proposed objective thresholding method. The generated binary images are further processed to remove small connected areas. Finally, maximum velocity profiles are obtained using the biggest-gap algorithm as follows. A column vector is scanned from left to right to find a gap (cluster of consecutive black pixels). The largest gap from the top is selected as a point on the profile. The final output of the biggest-gap algorithm represents the maximum velocity envelope. This envelope is further smoothed using a low-pass first-order Butterworth filter. To extract Doppler indices from the spectral envelopes, a Gaussian model is fitted to the velocity profile and used to calculate peak velocity and velocity time integral. The automated measurements of velocity time integral showed strong correlation (r = 0.94) and good Bland-Altman agreement (SD = 6.9%) with the expert values. Similarly, the automated measurements of peak velocity showed strong correlation (r = 0.98) with the expert values.
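One possible reading of the biggest-gap step is sketched below. It assumes that the columns of the thresholded image are processed in turn, that the longest run of black pixels in a column is the background above the Doppler signal, and that the envelope point is the first pixel below that run. These details, the function names, and the pixel-to-velocity scale are assumptions for illustration and may differ from the implementation in [82].

```python
def biggest_gap_envelope(binary_image):
    """For each column, return the row index where the largest black gap ends.

    `binary_image` is a list of rows (row 0 at the top) holding 0 (black) or
    1 (white). The returned row is the first pixel below the longest run of
    zeros, or the image height if that run reaches the bottom.
    """
    height = len(binary_image)
    width = len(binary_image[0])
    envelope = []
    for col in range(width):
        best_len, best_end = 0, 0
        run_len = 0
        for row in range(height):
            if binary_image[row][col] == 0:
                run_len += 1
                # Strict comparison keeps the topmost gap when lengths tie.
                if run_len > best_len:
                    best_len, best_end = run_len, row + 1
            else:
                run_len = 0
        envelope.append(best_end)
    return envelope

def rows_to_velocity(envelope_rows, baseline_row, velocity_per_pixel):
    """Convert envelope rows to velocities measured upward from the baseline row."""
    return [(baseline_row - row) * velocity_per_pixel for row in envelope_rows]

# Toy 6x4 binary image (hypothetical); column 1 contains an isolated noise pixel.
image = [[0, 0, 0, 0],
         [0, 1, 0, 0],
         [0, 0, 1, 0],
         [1, 0, 1, 0],
         [1, 0, 1, 0],
         [1, 1, 1, 0]]
rows = biggest_gap_envelope(image)
# Assumed calibration: baseline at the bottom edge, 10 cm/s per pixel.
velocities = rows_to_velocity(rows, baseline_row=6, velocity_per_pixel=10.0)
```

In column 1 the isolated white pixel splits the background into two gaps, and choosing the longer one keeps the envelope on the true signal instead of the noise.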

Another low level image processing-based method is presented in [83]. The proposed method extracts three Doppler indices, namely peak pressure gradient, peak velocity, and pressure half time, from the spectral envelopes for the purpose of assessing the severity of aortic regurgitation (AR). The method starts by locating the Doppler ROI based on the fixed locations of the vertical and horizontal axes (specific assumption). Before applying the edge detector, several pre-processing operations such as noise filtering and contrast adjustment are performed. Then, the Canny edge detector is applied to segment the spectral envelope. Once the envelope is segmented, the horizontal and vertical axes are converted into time and velocity. Finally, the curve is scanned to detect the highest peak value, which is used to compute the peak pressure gradient and pressure half time. To evaluate the performance of the proposed method, the automatic indices, computed from Doppler images of 11 subjects, are compared with human assessment. The results supported the feasibility of using the proposed algorithm in assessing the severity of AR, as it showed strong correlation with human assessment for three age groups: 0.98 correlation for group 1 (20–35 years old), 0.92 correlation for group 2 (36–50 years old), and 0.83 correlation for group 3 (51–60 years old).
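The two pressure-related indices can be derived from a sampled velocity envelope as in the sketch below. It assumes the simplified Bernoulli equation (gradient in mmHg equal to 4v², with v in m/s) and defines the pressure half time as the time after the peak at which the gradient falls to half of its peak value, which corresponds to the velocity falling to the peak velocity divided by √2. Whether [83] uses exactly these definitions is an assumption, and the function names and sample values are illustrative.

```python
import math

def peak_pressure_gradient(peak_velocity_m_s):
    """Simplified Bernoulli equation: gradient in mmHg from velocity in m/s."""
    return 4.0 * peak_velocity_m_s ** 2

def pressure_half_time(times_s, velocities_m_s):
    """Time after the peak for the pressure gradient to halve, or None if it never does.

    The gradient halves when the velocity falls to peak / sqrt(2).
    """
    peak_index = max(range(len(velocities_m_s)), key=lambda i: velocities_m_s[i])
    peak = velocities_m_s[peak_index]
    if peak <= 0:
        return None
    target = peak / math.sqrt(2.0)
    for i in range(peak_index + 1, len(velocities_m_s)):
        v_prev, v_curr = velocities_m_s[i - 1], velocities_m_s[i]
        if v_curr <= target:
            # Linear interpolation between the two samples that bracket the target.
            fraction = (v_prev - target) / (v_prev - v_curr)
            crossing = times_s[i - 1] + fraction * (times_s[i] - times_s[i - 1])
            return crossing - times_s[peak_index]
    return None

# Toy decelerating envelope (hypothetical values): time in seconds, velocity in m/s.
times = [0.0, 0.1, 0.2, 0.3, 0.4]
velocities = [4.0, 3.6, 3.2, 2.8, 2.4]
gradient = peak_pressure_gradient(max(velocities))
half_time = pressure_half_time(times, velocities)
```

Both indices depend directly on the detected peak, so an error in the traced envelope propagates to them.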

Texture analysis is a low level image operation that involves detecting regions in a given image based on their texture content (i.e., spatial variation in pixel intensities). Applying texture filters to an image returns a filtered image. Each pixel of this new image is a statistical representation of a neighborhood around this pixel in the original image. Biradar et al. [84] proposed to use combinations of three texture filters, which are entropy, range, and standard deviation, to detect the envelope in CW Doppler images. The filtered image is then thresholded and processed morphologically using erosion and dilation operations. The proposed method is evaluated using CW images of 25 patients suffering from aortic regurgitation. The experimental results showed that using a combination of entropy, range, and standard deviation filters can accurately delineate the spectral boundaries of CW Doppler images.
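The three texture filters can be sketched as local statistics over a square window, as shown below. The window size, the border handling (clipping the window at the image edges), and the function names are assumptions for illustration; the way the filter outputs are combined and thresholded in [84] is not reproduced here.

```python
import math
from collections import Counter

def neighborhood(image, row, col, radius):
    """Pixel values in the square window around (row, col), clipped at the borders."""
    rows = range(max(0, row - radius), min(len(image), row + radius + 1))
    cols = range(max(0, col - radius), min(len(image[0]), col + radius + 1))
    return [image[r][c] for r in rows for c in cols]

def range_stat(values):
    """Difference between the largest and smallest value in the window."""
    return max(values) - min(values)

def std_stat(values):
    """Population standard deviation of the window."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))

def entropy_stat(values):
    """Shannon entropy (bits) of the intensity histogram of the window."""
    counts = Counter(values)
    total = len(values)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

def texture_filter(image, stat, radius=1):
    """Replace each pixel by a statistic of its neighborhood."""
    return [[stat(neighborhood(image, r, c, radius)) for c in range(len(image[0]))]
            for r in range(len(image))]

# Toy image (hypothetical): dark background above a bright spectral region.
image = [[0, 0, 0, 0],
         [0, 0, 0, 0],
         [9, 9, 9, 9],
         [9, 9, 9, 9]]
range_map = texture_filter(image, range_stat)
std_map = texture_filter(image, std_stat)
entropy_map = texture_filter(image, entropy_stat)
```

All three maps are zero inside uniform regions and respond only near the boundary between the two regions, which is why thresholding the filtered image can isolate the envelope.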

Other low level image processing-based methods for detecting velocity profiles can be found in [85] (thresholding and edge detection), [86] (Otsu thresholding), [87] (empirical thresholding and Random sample consensus), [88] (thresholding and edge detection), [89] (local adaptive thresholding), and [90] (thresholding and edge detection). Low level image processing-based methods can detect spectral envelopes of different blood flows without any pre-training and with a minimum amount of data. However, these methods are very sensitive to the image noise and artifacts as well as the image contrast and intensity patterns.

Deformable-Based Methods:

Gaillard et al. [63] investigated the use of active contours for detecting Doppler spectral envelopes. The initial snake is generated using an automated method presented in [65]. The final snake of the envelope is then found by minimizing the internal and external energy functions using the generalized gradient vector flow field (GGVF) [63]. The detected envelopes are used to extract several indices (e.g., velocity time integral). These indices are strongly correlated (r = 0.99) with the indices computed manually by human experts. Other contour-based methods for cardiac and Doppler segmentation are presented in [91], [92]. As shown in these works, the shape and location of the initial contour greatly impact the segmentation process; additionally, these methods might require manual annotations and tend to be computationally expensive.

Model-Based Methods:

Kalinic et al. [93] proposed a model-based method for segmenting the velocity profile in CW images. The segmentation process consists of two main steps: a registration step and a transferring step. The registration step generates a set of parameters that describe the geometric transformation of the target-reference mapping. The reference image (model) is chosen to be the least different from the rest of the images in the dataset. This reference image is chosen by calculating the mutual mappings of all the images as described in [93]. After selecting the reference image, the velocity profile of this image is segmented manually by a cardiologist. The velocity profile of a new target image can then be obtained by geometrically transferring the profile of the reference image (model) to the target image using the parameters obtained in the registration step. The proposed method is evaluated using 59 velocity profiles extracted manually from CW images [93]. Instead of manually selecting the reference image, Kalinic et al. [94] extended [93] and used an atlas generated from CW Doppler images of healthy volunteers to register a new target image. The atlas image (reference image) is the statistical average of all images, constructed using the arithmetic image averaging operation as discussed in [94]. A detailed presentation of the atlas model and discussion of results can be found in [94]. A recent model-based method that uses an atlas for constructing the spectral envelope is presented in [95].
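The atlas construction and the transferring step can be illustrated with a minimal sketch. Arithmetic image averaging is implemented as a pixel-wise mean of images that are assumed to be already aligned and of equal size. The transfer function assumes, purely for illustration, that the registration parameters reduce to a scale and a shift along the time and velocity axes; the actual transformation model in [93] and [94] may be more general, and all names here are hypothetical.

```python
def average_atlas(images):
    """Pixel-wise arithmetic mean of equally sized, already aligned images."""
    count = len(images)
    height, width = len(images[0]), len(images[0][0])
    return [[sum(img[r][c] for img in images) / count for c in range(width)]
            for r in range(height)]

def transfer_profile(profile, scale_t, shift_t, scale_v, shift_v):
    """Map (time, velocity) points of the reference profile onto a target image.

    Assumes an axis-aligned scale-and-shift transform (hypothetical parameters).
    """
    return [(scale_t * t + shift_t, scale_v * v + shift_v) for t, v in profile]

# Toy data (hypothetical): two aligned 1x2 images and a two-point reference profile.
atlas = average_atlas([[[0, 2]], [[4, 6]]])
reference_profile = [(0.0, 1.0), (1.0, 2.0)]
target_profile = transfer_profile(reference_profile, scale_t=2.0, shift_t=0.5,
                                  scale_v=1.5, shift_v=0.0)
```

Because the manual tracing is performed only once on the reference or atlas image, the quality of every transferred profile depends on the accuracy of the registration.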

Although model-based methods have been successfully used in spectral envelope segmentation, these methods have difficulty handling the Doppler variations among patients and various disease types. Furthermore, they require manual annotation and can become computationally expensive.

Machine Learning-Based Methods:

Park et al. [96] introduced a learning-based method for detecting the spectral envelope of mitral valve (MV) inflow. The method starts by training a series of detectors to detect a left root point (E velocity), a right root point (A velocity), a single triangle box (E and A velocities overlapped), and a double triangle box (E and A velocities separated). Each of these detectors, which are trained using negative and positive examples, provides a label and a detection probability. After identifying the region of interest using these detectors, the triangle shape is inferred using a shape inference algorithm [96]. Given training images and their corresponding shapes, this algorithm learns a non-parametric regression function that gives a mapping from an image to its shape. Once the shape profiles are generated, the best shape among all candidates is selected as the final spectral envelope shape. Finally, four flow measurements [96] are computed from the detected envelopes. This method is evaluated using 298 Doppler images and compared with manually traced envelopes. The experimental results presented in [96] demonstrated the superiority of the proposed method as compared to a previous method [97]. Known limitations of machine learning-based methods are: 1) the need for a large number of manually labeled images and 2) the subjectivity and difficulty of extracting the best set of features.

In summary, we presented above several automated methods for spectral envelope segmentation. Existing methods for Doppler segmentation have different limitations that need to be addressed to obtain robust and practical automated clinical applications. For example, current methods are sensitive to image noise and variations, and are designed for a specific Doppler profile. Future research, therefore, should focus on developing segmentation methods that are robust to noise, image variations, and different blood flows. Another future direction would be to automate Doppler gate localization to speed up the acquisition process, increase the quality of the recorded Doppler, and enhance the segmentation performance. Finally, an interesting direction for future research would be to investigate the use of recent deep learning methods for spectral envelope segmentation.

In addition to the aforementioned methods, we refer the reader to automated non-image methods applied directly to the raw signal for maximum velocity estimation [98], [99], [100], [101]. These methods are highly affected by the signal-to-noise levels and the transducer configurations. Further, they can only be applied to the original Doppler signal during the acquisition.

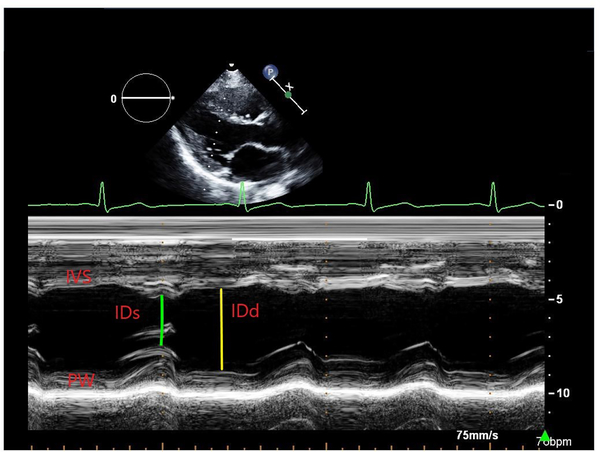

4.4.3. M-mode, Wall Segmentation

M-mode echo is used to provide an accurate assessment of small cardiac structures with rapid motions (e.g., valves). Assessing cardiac function from M-mode requires accurately delineating wall boundaries followed by estimating different indices (e.g., left ventricular dimension at end-systole). This process is challenging due to the presence of image artifacts and false echoes between the cardiac walls. In contrast to B-mode and Doppler images, only a few methods have been proposed to delineate wall boundaries in M-mode images.