Practice-based learning and improvement (PBLI) Milestones focus on 2 themes: evidence-based and informed practice (PBLI-1) and reflective practice and commitment to personal growth (PBLI-2).1 The Harmonized Milestones 2.0 were developed with the understanding that graduate medical education programs need methods to assess trainees' development in these complex areas. Self-directed lifelong learning is a key aspect of medical professionalism and an important skill for maintaining proficiency in the ever-advancing field of medicine.2,3 The American Board of Medical Specialties values meaningful participation in PBLI that includes aspirational expectations for continuing learning.4,5 This article provides guidance on assessing the PBLI Milestones and offers resources for trainee development.

PBLI-1: Evidence-Based and Informed Practice

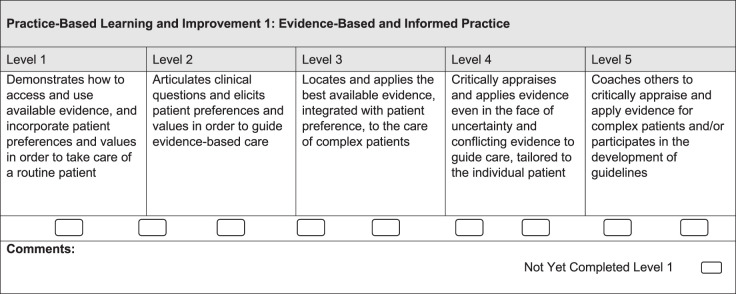

The Evidence-Based and Informed Practice subcompetency highlights the principles of evidence-based medicine, focusing on integrating the best available evidence with patient values and clinical expertise when making clinical decisions (Figure 1).1 All clinicians need these skills as a foundational component of lifelong learning. Several articles examine how to assess the use of evidence-based medicine across a variety of learner types (Table 1). Some authors have conducted a needs assessment or summarized the available tools.6,7 Most of the tools are multiple-choice and/or short-answer tests that evaluate the learner's knowledge of the tenets of evidence-based medicine.8–12 The most studied of these tests is the UCSF-Fresno Medical Education tool, a 7-question written test evaluating how to ask a clinical question, assess the hierarchy of evidence, and understand basic statistical and methodological concepts.9,10 The Fresno tool has been validated in several populations of learners and could be used to assess whether a trainee has reached Level 2 of the PBLI-1 Milestone.

Figure 1.

Harmonized Evidence-Based Medicine and Informed Practice Milestones

Table 1.

Summary of Literature to Assess Learners in Evidence-Based Medicine

| Author(s) (Year) | Target Audience | Assessment |
| --- | --- | --- |
| Bhutiani et al (2016)8 | Third-year medical students | Objective structured clinical examination |
| Bougie et al (2015)9 | Obstetrics and gynecology residents in all programs in Canada | Self-assessment; standardized written questions |
| Epling et al (2018)6 | Family medicine program directors in all programs in the United States | Program director needs assessment |
| Haspel (2010)13 | Transfusion medicine residents in a university-based program | Journal club curriculum |
| Lentscher and Batig (2017)14 | Obstetrics and gynecology residents in a military program | Structured journal club |
| Patell et al (2020)10 | Internal medicine residents in both university- and community-based programs | Multiple-choice evidence-based medicine test |
| Smith et al (2016)11 | Third-year medical students | Fresno evidence-based medicine test |
| So et al (2019)15 | Foot/ankle residents in 2 community-based programs | Structured review instrument for journal club |
| Thomas and Kreptul (2015)7 | Family medicine residents | Systematic review of available tools |
| Tilson (2010)12 | Physical therapy doctorate students | Validation of Fresno test |

Fewer published approaches evaluate the day-to-day clinical application of evidence-based medicine and informed practice. Structured journal clubs are one approach; however, this tactic is retrospective and does not assess an individual trainee's ability to apply findings to a specific patient's needs.13–15 Although direct observation and chart-stimulated recall have not been studied for this purpose, they are potential approaches to assess whether a trainee has reached Level 3 or 4 of this Milestone. Level 5 can be assessed using a portfolio of evidence-based guidelines the trainee has created or documentation of the trainee mentoring others in evidence-based medicine.

PBLI-2: Reflective Practice and Commitment to Personal Growth

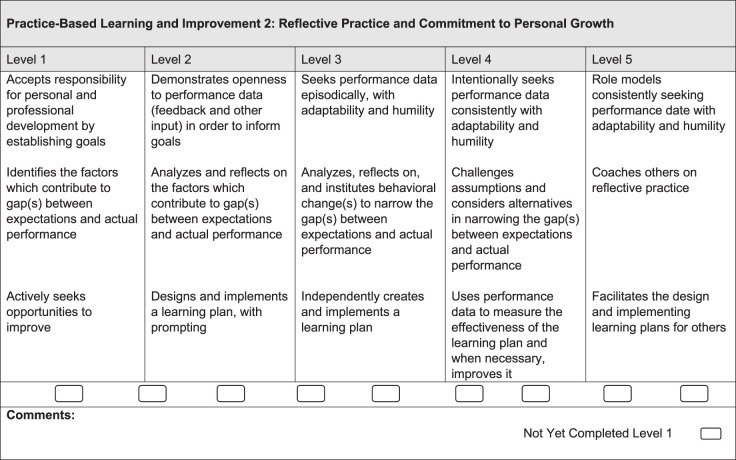

An important goal of medical educators is to foster the development of reflective lifelong learners.3 The Harmonized Reflective Practice and Commitment to Personal Growth subcompetency acknowledges this critical skill and uses 3 streams of behavior to differentiate seeking feedback, addressing gaps, and intentionally developing a learning plan (Figure 2).1 Although tools for formative and summative assessment of this complex framework are lacking, a number of studies have described best-practice characteristics of learning plans and strategies for implementing them.

Figure 2.

Harmonized Reflective Practice and Commitment to Personal Growth Milestones

Individualized Learning Plans

Physicians do not always effectively incorporate an individualized learning plan (ILP) and learning goals into their daily work. Lack of time and limited understanding of the skills needed to be a self-directed learner are barriers to self-directed learning and the use of ILPs.16–19 Residents can struggle to identify specific goals and formulate an effective plan to achieve them.18 Literature exists on how best to teach this skill and how to make the process meaningful to the learner.20–22 However, the most effective way to develop self-directed lifelong learning skills remains unclear. Previous research indicates that individual characteristics are more strongly associated with self-directed learning than program characteristics.23 A conceptual model for self-directed learning based on the I-SMART mnemonic (Important, Specific, Measurable, Accountable, Realistic, Timeline) emphasizes the aspects of the goal itself that lead to success.24 There is initial evidence that the type of learning goal residents identify is associated with success in achieving that goal.25,26 A supportive learning environment, however, also appears to be crucial.27 Residents identified key aspects of the learning environment in a longitudinal block that facilitated their success: (1) flexibility to closely align learning goals with clinical activities; (2) adequate time to work on goals; and (3) faculty oversight and support of their learning goals.24,26

Performance Dashboards

Performance dashboards can display a trainee's progress along the Milestones and identify areas of concern and strength. Multiple elements can be pulled from the electronic health record, learning management systems, and administrative data to create performance dashboards (Table 2). Dashboards have been developed in multiple specialties to track progress, provide real-time feedback, and document operative autonomy in surgical cases.28–30 Performance data can help assess whether a trainee has reached Level 2 or 3 in the first stream of the PBLI-2 Milestone. Performance data, whether displayed on a dashboard or as individual elements, are necessary for reflective practice but are not sufficient on their own: data alone do not drive improvement. Rather, performance data create transparency about expectations and actual performance, which should lead to analysis, reflection, and ultimately improvement.

Performance dashboards also have limitations. In a study comparing feedback that included performance metric scorecards with standard feedback, emergency medicine residents reported greater satisfaction with the feedback process when the metrics were included, but their clinical productivity and efficiency did not change.31 Performance metrics may also be difficult to obtain or may reflect outcomes outside the trainee's control. For example, the emergency department metric of “left without being seen” has a number of causes, many of which are unrelated to trainee performance. Choosing dashboard metrics that will continue to be tracked once the trainee enters autonomous practice may make the data feel more relevant and better prepare trainees for that transition.

Table 2.

Sources of Trainee Data for Performance Dashboards

| Clinical Data | Educational Data |
| --- | --- |
| Chart audits | Online module completion |
| Procedure/case logs | Direct observation evaluations |
| Medical record completeness and deficiencies | Attendance data |
| Case volumes; appointment volumes | Scholarly output |
| Quality/safety indicators (readmission rates, complication rates) | Rotation evaluations |
| Patient evaluations/patient experience scores | Semiannual program evaluations |

Assessing PBLI Along the Developmental Continuum

The PBLI Milestones echo qualities of reflection and insight that are fundamental to self-directed lifelong learning and to clinical practice at any stage of professional development. Trainees who do not incorporate constructive feedback into practice can be frustrating for program directors. One way to help a trainee become a self-directed learner is to work with them to develop and implement an ILP under the supervision of, and with assistance from, a faculty coach. The coach can act as a guide, resource, and expert who provides feedback and course correction.21 The ILP can also serve as a litmus test for the Clinical Competency Committee (CCC) to gauge the trainee's insight.

Eva and Regehr drew a distinction among self-assessment (an ability), assessment and reflection (pedagogical strategies), and self-monitoring (immediate and contextual responses).32 When assigned an ILP, some trainees will refuse (“I don't need to do this” or “I don't have the time to do this”), some will deflect (“I'm being treated unfairly”), and some will concede (“I can't do this”). These responses help gauge a trainee's capacity to improve and likelihood of achieving competence in PBLI. Trainees who cannot demonstrate insight are unlikely to meet the academic standards of the program or to become self-directed lifelong learners. The CCC can use this information to inform its recommendations to the program director.

Conclusions

Evidence-based medicine, ILPs, and performance dashboards help clinicians apply evidence to patient care, recognize areas for improvement, and identify when gaps have been closed. Programs must evaluate the feasibility of implementing PBLI assessment tools and the impact of the clinical learning and working environment on PBLI.33 Continued research is needed to develop and test PBLI assessment strategies and build a robust set of tools.

References

1. Edgar L, Roberts S, Holmboe E. Milestones 2.0: a step forward. J Grad Med Educ. 2018;10(3):367–369. doi: 10.4300/JGME-D-18-00372.1.
2. Fallat ME, Glover J. Professionalism in pediatrics: statement of principles. Pediatrics. 2007;120(4):895–897. doi: 10.1542/peds.2007-2229.
3. Lehmann LS, Sulmasy LS, Desai S. Hidden curricula, ethics, and professionalism: optimizing clinical learning environments in becoming and being a physician: a position paper of the American College of Physicians. Ann Intern Med. 2018;168(7):506–508. doi: 10.7326/M17-2058.
4. Miller SH. American Board of Medical Specialties and repositioning for excellence in lifelong learning: maintenance of certification. J Contin Educ Health Prof. 2005;25(3):151–156. doi: 10.1002/chp.22.
5. Moyer VA. Maintenance of certification and pediatrics milestones-based assessment: an opportunity for quality improvement through lifelong assessment. Acad Pediatr. 2014;14(2 suppl):6–7. doi: 10.1016/j.acap.2013.11.012.
6. Epling J, Heidelbaugh J, Woolever D, et al. Examining an evidence-based medicine culture in residency education. Fam Med. 2018;50(10):751–755. doi: 10.22454/FamMed.2018.576501.
7. Thomas RE, Kreptul D. Systematic review of evidence-based medicine tests for family physician residents. Fam Med. 2015;47(2):107–117.
8. Bhutiani M, Sullivan WM, Moutsios S, et al. Triple-jump assessment model for use of evidence-based medicine. MedEdPORTAL. 2016;12:10373. doi: 10.15766/mep_2374-8265.10373.
9. Bougie O, Posner G, Black AY. Critical appraisal skills among Canadian obstetrics and gynaecology residents: how do they fare? J Obstet Gynaecol Can. 2015;37(7):639–647. doi: 10.1016/S1701-2163(15)30203-6.
10. Patell R, Raska P, Lee N, et al. Development and validation of a test for competence in evidence-based medicine. J Gen Intern Med. 2019;35(5):1530–1536. doi: 10.1007/s11606-019-05595-2.
11. Smith AB, Semler L, Rehman EL, et al. A cross-sectional study of medical student knowledge of evidence-based medicine as measured by the Fresno test of evidence-based medicine. J Emerg Med. 2016;50(5):759–764. doi: 10.1016/j.jemermed.2016.02.006.
12. Tilson JK. Validation of the modified Fresno test: assessing physical therapists' evidence-based practice knowledge and skills. BMC Med Educ. 2010;10:38. doi: 10.1186/1472-6920-10-38.
13. Haspel RL. Implementation and assessment of a resident curriculum in evidence-based transfusion medicine. Arch Pathol Lab Med. 2010;134(7):1054–1059. doi: 10.1043/2009-0328-OA.1.
14. Lentscher JA, Batig AL. Appraising medical literature: the effect of a structured journal club curriculum using the lancet handbook of essential concepts in clinical research on resident self-assessment and knowledge in milestone-based competencies. Mil Med. 2017;182(11/12):e1803–e1808. doi: 10.7205/MILMED-D-17-00059.
15. So E, Hyer CF, Richardson M, et al. How does a structured review instrument impact learning at resident journal club? J Foot Ankle Surg. 2019;58(5):920–929. doi: 10.1053/j.jfas.2019.01.016.
16. Li ST, Favreau MA, West DC. Pediatric resident and faculty attitudes toward self-assessment and self-directed learning: a cross-sectional study. BMC Med Educ. 2009;9:16. doi: 10.1186/1472-6920-9-16.
17. Li ST, Burke AE. Individualized learning plans: basics and beyond. Acad Pediatr. 2010;10(5):289–292. doi: 10.1016/j.acap.2010.08.002.
18. Stuart E, Sectish TC, Huffman LC. Are residents ready for self-directed learning? A pilot program of individualized learning plans in continuity clinic. Ambul Pediatr. 2005;5(5):298–301. doi: 10.1367/A04-091R.1.
19. Kiger ME, Riley C, Stolfi A, Morrison S, Burke A, Lockspeiser T. Use of individualized learning plans to facilitate feedback among medical students. Teach Learn Med. 2020;32(4):399–409. doi: 10.1080/10401334.2020.1713790.
20. Nothnagle M, Anandarajah G, Goldman RE, Reis S. Struggling to be self-directed: residents' paradoxical beliefs about learning. Acad Med. 2011;86(12):1539–1544. doi: 10.1097/ACM.0b013e3182359476.
21. Reed S, Lockspeiser T, Burke AE, et al. Practical suggestions for the creation and use of meaningful learning goals in graduate medical education. Acad Pediatr. 2016;16(1):20–24. doi: 10.1016/j.acap.2015.10.005.
22. Lockspeiser T, Li ST, Burke AE, et al. In pursuit of the meaningful use of learning goals in pediatric residency: a qualitative study of pediatric residents. Acad Med. 2016;91(6):839–846. doi: 10.1097/ACM.0000000000001015.
23. Li ST, Paterniti DA, Tancredi DJ, et al. Resident self-assessment and learning goal development: evaluation of resident-reported competence and future goals. Acad Pediatr. 2015;15(4):367–373. doi: 10.1016/j.acap.2015.01.001.
24. Li ST, Paterniti DA, Co JP, West DC. Successful self-directed lifelong learning in medicine: a conceptual model derived from qualitative analysis of a national survey of pediatric residents. Acad Med. 2010;85(7):1229–1236. doi: 10.1097/ACM.0b013e3181e1931c.
25. Lockspeiser TM, Schmitter PA, Lane JL, Hanson JL, Rosenberg AA, Park YS. Assessing residents' written learning goals and goal writing skill: validity evidence for the learning goal scoring rubric. Acad Med. 2013;88(10):1558–1563. doi: 10.1097/ACM.0b013e3182a352e6.
26. Li S-TT, Paterniti DA, Tancredi DJ, Co JPT, West DC. Is residents' progress on individualized learning plans related to the type of learning goal set? Acad Med. 2011;86(10):1293–1299. doi: 10.1097/ACM.0b013e31822be22b.
27. Li ST, Tancredi DJ, Co JP, West DC. Factors associated with successful self-directed learning using individualized learning plans during pediatric residency. Acad Pediatr. 2010;10(2):124–130. doi: 10.1016/j.acap.2009.12.007.
28. Durojaiye AB, Snyder E, Cohen M, Nagy P, Hong K, Johnson PT. Radiology resident assessment and feedback dashboard. Radiographics. 2018;38(5):1443–1453. doi: 10.1148/rg.2018170117.
29. Krueger CA, Rivera JC, Bhullar PS, Osborn PM. Developing a novel scoring system to objectively track orthopaedic resident educational performance and progression. J Surg Educ. 2020;77(2):454–460. doi: 10.1016/j.jsurg.2019.09.009.
30. Cooney CM, Cooney DS, Bello RJ, Bojovic B, Redett RJ, Lifchez SD. Comprehensive observations of resident evolution: a novel method for assessing procedure-based residency training. Plast Reconstr Surg. 2016;137(2):673–678. doi: 10.1097/01.prs.0000475797.69478.0e.
31. Mamtani M, Shofer FS, Sackeim A, Conlon L, Scott K, Mills AM. Feedback with performance metric scorecards improves resident satisfaction but does not impact clinical performance. AEM Educ Train. 2019;3(4):323–330. doi: 10.1002/aet2.10348.
32. Eva K, Regehr G. “I'll never play professional football” and other fallacies of self-assessment. J Contin Educ Health Prof. 2008;28(1):14–19. doi: 10.1002/chp.150.
33. Ludmerer KM. Four fundamental educational principles. J Grad Med Educ. 2017;9(1):14–17. doi: 10.4300/JGME-D-16-00578.1.