Abstract

Past research indicates that spontaneous mimicry facilitates the decoding of others’ emotions, leading to enhanced social perception and interpersonal rapport. Individuals with schizophrenia (SZ) show consistent deficits in emotion recognition and expression that are associated with poor social functioning. Given the prominence of blunted affect in schizophrenia, spontaneous facial mimicry may also be impaired. However, studies assessing automatic facial mimicry in schizophrenia have yielded mixed results, so it is unknown whether emotion recognition deficits and impaired automatic facial mimicry are related in schizophrenia. SZ and demographically matched controls (CO) participated in a dynamic emotion recognition task. Electromyographic activity in the muscles responsible for producing facial expressions was recorded during the task to assess spontaneous facial mimicry. SZ showed deficits in emotion identification compared to CO, but there was no group difference in how well spontaneous facial mimicry predicted the avatars’ expressed emotions. In CO, facial mimicry supported accurate emotion recognition, whereas in SZ the two were decoupled. The finding of intact facial mimicry in SZ has important clinical implications. For instance, clinicians might be able to improve patients’ social functioning by teaching them to pair specific patterns of facial muscle activation with distinct emotion words.

Keywords: Emotion recognition, Facial mimicry, fEMG, Shared experiences, Schizophrenia

1. Introduction

Abnormalities of emotional experience were recognized as central to schizophrenia by Kraepelin and Bleuler, who believed “flat” and “inappropriate” affect to be core features of the illness (see Trémeau, 2006 for a review). Although there is a long history of emotion research in schizophrenia, much of the past work has focused on facial emotion recognition performance and anhedonia (Horan et al., 2005). Less is known about the relationship between the conscious experience of emotion and the unconscious activation of bodily responses, which might also be altered in schizophrenia. Interestingly, while some aspects of emotional functioning appear to be altered in individuals with schizophrenia, others might be intact. For instance, although emotion perception and expression have consistently been found to be impaired, emotional experience seems largely unaffected in schizophrenia (for a review see Kring and Elis, 2013). Thus, while people with schizophrenia report subjective emotional experiences similar to those reported by control individuals, they have difficulty recognizing the emotional facial expressions of others and exhibit atypical psychophysiological responses to emotional stimuli. Producing and recognizing emotional expressions is crucial to communicating internal feelings and intentions (Ekman et al., 1979). The social function of emotions (i.e., communicating affective states and understanding the experiences of others) therefore appears to be compromised in schizophrenia.

Appropriate emotional responses within a social interaction are crucial to successful communication and to navigating interpersonal relationships. Research suggests that the facial expression of emotions is reduced in individuals with schizophrenia, even when they report experiencing an emotion (Berenbaum and Oltmanns, 1992; Kring and Moran, 2008). However, studies using facial electromyography (fEMG) found that the recruitment of the facial muscles underlying emotional expressions is intact in this population (Kring et al., 1999). Together, these results suggest that although observable emotional expressions are reduced in schizophrenia, the engagement of the facial muscles associated with these expressions is not. In other words, overt facial expressions are diminished, but the underlying motor process seems intact.

The ability to recognize the emotional states of others is also critical to social functioning and predicts functional outcome (Hooker and Park, 2002). Emotion detection and identification have consistently been found to be impaired across the schizophrenia spectrum: in individuals at ultra-high risk for psychosis (Amminger et al., 2012a; Cohen et al., 2015; Gooding and Tallent, 2003; Phillips and Seidman, 2008), in first-episode patients (Herbener et al., 2005), and in individuals with schizophrenia (Edwards et al., 2002; Kohler et al., 2003, 2010). Importantly, emotion recognition deficits in schizophrenia are independent of medication effects and of demographic factors such as race and gender (Kohler et al., 2010). These deficits also appear to be independent of illness stage, already contributing to social functioning impairments in emerging psychotic illness (Amminger et al., 2012b).

Research suggests that the ability to identify emotions in others might rely on automatic motor processes such as spontaneous bodily coordination (Lakin and Chartrand, 2003; Moody et al., 2018; Prochazkova and Kret, 2017). For instance, facial mimicry, the automatic process by which individuals match others’ facial expressions during social interactions (Dimberg, 1990), has been shown to contribute to emotion recognition (Goldman and Sripada, 2005; Niedenthal et al., 2010). When facial mimicry is blocked with a muscle paralytic, healthy individuals’ ability to identify facial expressions of emotion is impaired (Oberman et al., 2007; Neal and Chartrand, 2011). Furthermore, a specific pattern of muscle activation can induce an emotion (Prigent et al., 2014), suggesting that automatically mimicking others’ facial expressions allows us to share their emotional experience. The facial feedback hypothesis further posits that facial synchrony promotes empathy (Adelmann and Zajonc, 1999), highlighting the importance of mimicry for social connectedness. In sum, the automatic mimicry of others’ facial expressions mediates emotional contagion, social functioning, and embodied affect. Deficits in facial mimicry could therefore contribute to emotion identification deficits.

Although it is well established that individuals with schizophrenia exhibit decreased spontaneous facial expressions, studies investigating facial mimicry in this population remain sparse and have yielded mixed results (Kring, 1999). Indeed, facial mimicry has been found to be both intact (Chechko et al., 2016) and impaired (Varcin et al., 2010) in individuals with schizophrenia, so it remains unclear whether spontaneous mimicry is altered in this disorder. Interestingly, intentional imitation of facial expressions has been found to be impaired in schizophrenia (Schwartz et al., 2006), and broader deficits in action imitation have been linked to altered brain activation in this population (Thakkar et al., 2014). Similar disruptions of automatic facial mimicry could explain deficits in emotion recognition and broader social deficits in individuals with schizophrenia.

Deficits in emotion recognition are well established in the literature; however, the ability of people with schizophrenia to automatically mimic the facial expressions of others remains unclear. Furthermore, to our knowledge, automatic facial mimicry and emotion identification have never been tested simultaneously in schizophrenia. In the current study, we assessed performance on a novel dynamic emotion recognition task while monitoring facial mimicry using fEMG. Given past findings, we expected individuals with schizophrenia to be less accurate than matched controls on the emotion recognition task. With respect to facial mimicry measured by fEMG, we expected individuals with schizophrenia to show reduced activity of the zygomaticus major and corrugator supercilii muscles. Although findings on facial mimicry in schizophrenia are mixed, we tested the hypothesis that deficits in facial synchrony underlie impaired emotion recognition in schizophrenia.

2. Methods

2.1. Participants

Twenty-one individuals who met the DSM-5 criteria for schizophrenia (SZ) were recruited from an outpatient day facility in Nashville, TN. Diagnosis was confirmed with the Structured Clinical Interview for DSM-5, Research Version (SCID-5-RV; First et al., 2015). Symptoms were assessed with the Brief Psychiatric Rating Scale (BPRS; Overall and Gorham, 1962), the Scale for the Assessment of Positive Symptoms (SAPS; Andreasen, 1984), and the Scale for the Assessment of Negative Symptoms (SANS; Andreasen, 1989). All SZ participants were taking antipsychotic medication at the time of testing. Twenty-three demographically matched controls (CO) with no history of DSM-5 disorders were recruited by advertisement from the same community. Exclusion criteria for both groups were a history of head injury or seizures, substance use or abuse, neurological disease (e.g., stroke, tumors), or an estimated IQ below 85. Intelligence was estimated using the North American Adult Reading Test, Revised (Blair and Spreen, 1989). Handedness was assessed using the Edinburgh Handedness Inventory (Oldfield, 1971). All participants had normal or corrected-to-normal vision. Groups were matched on age, sex, and handedness. Participants provided written informed consent after a full explanation of study procedures and were paid for their participation. Demographic and clinical information is summarized in Table 1.

Table 1.

Participant demographic and clinical information.

| | SZ (N = 21) Mean (SD) | CO (N = 23) Mean (SD) | Test statistic | p value |
|---|---|---|---|---|
| Male/female | 12/9 | 10/13 | χ2 = 0.65 | p = 0.42 |
| Age | 47.90 (7.83) | 45.65 (8.17) | t = −1.00 | p = 0.33 |
| Handedness^a | +70.00 (38.41) | +79.55 (29.40) | t = 0.91 | p = 0.37 |
| Years of education | 13.05 (2.04) | 15.74 (2.12) | t = 4.30 | p < 0.001* |
| Estimated IQ^b | 101.61 (8.41) | 107.25 (8.66) | t = 2.19 | p = 0.034* |
| Chlorpromazine equivalent dose (mg/day)^c | 336.77 (276.62) | N/A | N/A | N/A |
| BPRS | 21.05 (7.95) | N/A | N/A | N/A |
| SAPS | 21.48 (15.64) | N/A | N/A | N/A |
| SANS | 36.90 (7.95) | N/A | N/A | N/A |

\* p < 0.05.

^a Edinburgh Handedness Inventory (Oldfield, 1971). Scores range from −100 (completely left-handed) to +100 (completely right-handed).

^b Estimated from the National Adult Reading Test, Revised (NART-R; Blair and Spreen, 1989).

^c All patients were medicated. Antipsychotic dosage was converted to chlorpromazine equivalents (Andreasen et al., 2010).

2.2. Emotion recognition and rating application (ERRATA)

ERRATA, a novel dynamic avatar-based facial emotion recognition task, was used in this study. ERRATA was designed to display the faces of a diverse set of avatars with varying emotional expressions and emotional intensities. A total of 12 avatars differing in age (child, adult, elderly), gender (male, female), and race/ethnicity (Asian, African, Caucasian, Hispanic, Indian) were created using characters from Mixamo (www.mixamo.com) and animated in Maya (www.autodesk.com), following the procedure detailed by Bekele et al. (2014) and Wade et al. (2018). Each avatar was designed to display seven emotional expressions (joy, surprise, sadness, fear, disgust, contempt, and anger) at three levels of intensity (low, medium, and extreme), resulting in 252 unique combinations of avatar, emotion, and intensity (12 × 7 × 3). These 252 combinations were randomly distributed across three blocks of 84 unique but equivalent trials, each block containing the same number of combinations of avatars, emotions, and intensities. Participants were randomly assigned to one of these blocks. As such, each participant completed 84 ERRATA trials (i.e., 4 trials per emotion at each intensity). See Fig. 1 for examples of avatars from ERRATA.
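To make the counterbalancing concrete, the sketch below shows one way the 252 combinations could be split into three equivalent blocks of 84 trials. It is an illustration under stated assumptions, not the authors' procedure: the avatar IDs are hypothetical placeholders, and the balancing rule (randomly relabel the avatars, then send combination (a, e, i) to block (a + e + i) mod 3) is our own choice. That rule guarantees 4 trials per emotion-intensity cell and 7 trials per avatar in every block.

```python
import itertools
import random

# Stimulus factors from the text; avatar IDs are hypothetical placeholders.
AVATARS = [f"avatar_{i:02d}" for i in range(12)]
EMOTIONS = ["joy", "surprise", "sadness", "fear", "disgust", "contempt", "anger"]
INTENSITIES = ["low", "medium", "extreme"]
N_BLOCKS = 3


def build_blocks(seed=None):
    """Split all 12 x 7 x 3 = 252 combinations into three balanced 84-trial blocks.

    Assumed balancing rule (illustrative only): randomly relabel the avatars,
    then send combination (a, e, i) to block (label[a] + e + i) % 3. Each block
    then holds 4 avatars per emotion-intensity cell and 7 trials per avatar.
    """
    rng = random.Random(seed)
    label = list(range(len(AVATARS)))
    rng.shuffle(label)  # random relabelling varies which avatars share a block
    blocks = [[] for _ in range(N_BLOCKS)]
    for a, e, i in itertools.product(range(len(AVATARS)), range(len(EMOTIONS)),
                                     range(len(INTENSITIES))):
        block_index = (label[a] + e + i) % N_BLOCKS
        blocks[block_index].append((AVATARS[a], EMOTIONS[e], INTENSITIES[i]))
    for block in blocks:
        rng.shuffle(block)  # randomize trial order within each block
    return blocks
```

Random assignment of a participant to a block then reduces to picking `blocks[random.randrange(N_BLOCKS)]`. The modular rule is used here only because it makes the "equivalent blocks" property easy to check; a constrained random shuffle would serve equally well.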

Fig. 1.

Examples of avatar facial expressions presented during ERRATA.

2.3. Physiological monitoring

The BIOPAC MP150 (BIOPAC Systems Inc., Goleta, CA, USA) physiological monitoring system was used to record two electromyograms (EMG) sampled at 1000 Hz. Facial EMG (fEMG) electrodes (Ag/AgCl, 10 mm diameter contact area) were attached over the corrugator supercilii and zygomaticus major regions on the left side of the face. The corrugator sensors were placed above the participant’s left eyebrow, one aligned with the pupil when the participant looked straight ahead and the other directly above the tear duct. The zygomatic sensors were placed one third of the distance from the left corner of the mouth to the lower crease where the ear attaches to the head. Before the electrodes were attached, the skin was cleaned with alcohol and rubbed with a cotton ball to reduce impedance. The fEMG signals were transmitted wirelessly to the amplifier.

To collect the event markers used for data segmentation in the offline analyses, a socket module was developed to transmit task-related event markers (e.g., trial start/stop) from the ERRATA task to the fEMG recording program. Eighty-four fEMG segments (one per trial) were extracted for each participant, each containing only the facial muscle activity recorded while the participant was looking at the avatar. Because BIOPAC records raw signals, the fEMG data required preprocessing. First, a local optimized median filter was used to remove outliers. The data were then processed with a bandpass filter and a notch filter to remove noise. A set of features was subsequently extracted from the preprocessed fEMG segments. Frequency features reflecting the intensity of muscle activity (the median, mean, and standard deviation of the fEMG frequency-domain data) were computed, as were burst features, which count muscle activity bursts per unit of time. To account for individual differences in baseline muscle tone, a corresponding set of baseline fEMG features was computed and subtracted from the task features.
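The preprocessing chain above can be sketched in NumPy as follows. It is a rough illustration, not the authors' pipeline: the median-filter window, the 20-450 Hz pass band, the 60 Hz notch, and the burst threshold are all assumed values, and simple FFT masking stands in for the unspecified band-pass and notch filter designs. The "frequency features" are interpreted here as the median, mean, and standard deviation of the magnitude spectrum.

```python
import numpy as np

FS = 1000  # sampling rate in Hz, as reported for the BIOPAC MP150 recordings


def median_despike(x, k=3):
    """Sliding median filter with an odd window k (assumed value) to suppress outliers."""
    pad = k // 2
    padded = np.pad(x, pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, k)
    return np.median(windows, axis=1)


def fft_bandpass_notch(x, fs=FS, band=(20.0, 450.0), notch=60.0, notch_half_width=2.0):
    """Zero FFT bins outside `band` and within `notch_half_width` Hz of `notch`.

    The cut-offs are assumptions; the text does not report the filter settings.
    """
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    keep = (freqs >= band[0]) & (freqs <= band[1]) & (np.abs(freqs - notch) > notch_half_width)
    return np.fft.irfft(spectrum * keep, n=len(x))


def preprocess(x):
    """Outlier removal followed by band-pass and notch filtering."""
    return fft_bandpass_notch(median_despike(np.asarray(x, dtype=float)))


def extract_features(x, fs=FS, burst_factor=3.0):
    """Return [median, mean, SD of the magnitude spectrum, bursts per second]."""
    magnitude = np.abs(np.fft.rfft(x))
    rectified = np.abs(x)
    above = rectified > burst_factor * np.median(rectified)  # hypothetical burst threshold
    n_bursts = np.count_nonzero(above[1:] & ~above[:-1])  # count rising edges
    duration_s = len(x) / fs
    return np.array([np.median(magnitude), np.mean(magnitude), np.std(magnitude),
                     n_bursts / duration_s])


def baseline_corrected_features(trial_raw, baseline_raw):
    """Task features minus baseline features for one trial segment."""
    return extract_features(preprocess(trial_raw)) - extract_features(preprocess(baseline_raw))
```

Applying `baseline_corrected_features` to each of a participant's 84 trial segments, with the 3-min rest recording as `baseline_raw`, yields one feature vector per trial. In practice a causal or zero-phase IIR design (e.g., Butterworth) would usually replace the FFT mask.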

2.4. Procedure

Participants received detailed information regarding the experimental procedure and signed a consent form. The wireless sensors were placed on the participant’s face and checked for signal strength. Participants were then comfortably seated approximately 60 cm in front of an LCD monitor. They began the experiment by sitting quietly for 3 min to allow collection of baseline fEMG data, after which they performed the ERRATA task. Facial EMG was recorded while the avatar was displayed. On each trial, the participant saw the neutral expression of an avatar for 2.5 s, immediately followed by a transition to an emotional expression lasting 2 s. A menu then appeared on the screen prompting the participant to select the emotion expressed by the avatar from a list of seven emotion words. Response latency was recorded for each trial. After selecting an emotion, the participant rated their confidence in the response on a continuous sliding scale from 0 (Very Unsure) to 10 (Very Confident). Each participant completed 84 ERRATA trials. A video recording of a participant completing the ERRATA task, available in Supplement 1, shows an overt example of spontaneous facial mimicry of the avatar’s expressed emotion.

2.5. Data analysis

2.5.1. ERRATA

A logistic regression analysis was used to model the odds of correct recognition and how these odds changed across emotion, intensity, and group (SZ or CO). Results are expressed as odds ratios (ORs). Group differences in performance, as well as potential interactions of group, emotion, and intensity, were further examined. Error patterns were analyzed by generating a confusion matrix by emotion for each group. Chi-square tests were performed on these error distributions to determine whether the pattern of errors for each emotion differed between SZ and CO. Logistic regression was also used to model the odds of correct emotion recognition as a function of response latency and confidence rating. Associations between performance and clinical measures (i.e., symptom ratings and medication dosage) were evaluated with Pearson correlation coefficients. A Holm correction was applied to account for multiple comparisons.
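Two computations recur in these analyses: converting an odds ratio into a percent change in odds, and Holm's step-down adjustment of p values. A minimal pure-Python sketch of both follows. The function names are ours, and the sketch does not fit the logistic models themselves.

```python
def odds_change_percent(odds_ratio):
    """Percent change in odds implied by an odds ratio (e.g., 0.69 -> about -31%)."""
    return (odds_ratio - 1.0) * 100.0


def holm_adjust(pvalues):
    """Holm step-down adjusted p values, returned in the original input order.

    The j-th smallest of m p values (1-based j) is multiplied by (m - j + 1);
    a running maximum keeps the adjusted values monotone, and they are capped at 1.
    """
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):  # rank is 0-based, so the multiplier is m - rank
        candidate = min(1.0, (m - rank) * pvalues[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted
```

For example, `odds_change_percent(0.69)` returns approximately −31, which is how an OR of 0.69 translates into odds 31% lower, and `holm_adjust([0.01, 0.04, 0.03])` returns approximately `[0.03, 0.06, 0.06]`.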

2.5.2. Electromyography

To investigate the relationship between fEMG data, participants’ responses, and the emotions expressed by the avatars, we used machine learning (ML) techniques. Specifically, fEMG features were used to predict both the avatars’ expressed emotions and participants’ responses. ML was chosen to capture potential higher-order nonlinear relationships that classical statistical analyses (e.g., t-test, ANOVA) often overlook. Importantly, our interest lay in comparing the performance of the ML algorithms between the SZ and CO groups rather than in the absolute prediction accuracies achieved.

Because fEMG data are particularly good at differentiating the valence of emotional states (Cacioppo et al., 1986), we used the Affective Norms for English Words (ANEW; Bradley and Lang, 1999) to group emotions with similar valence. Accordingly, anger, disgust, and fear were combined into a single class, denoted ADF. Surprise was removed because of its ambiguous valence. The labels used in ML model development were therefore contempt, joy, sadness, and ADF.
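This relabelling amounts to a simple lookup. The sketch below assumes that trials arrive as (features, emotion) pairs with lowercase emotion names. It also assumes, without confirmation from the text, that the same mapping is applied to participants' selected emotions in the second set of experiments, so that trials on which "surprise" was selected are dropped as well.

```python
# Valence-based grouping described in the text: anger, disgust and fear -> "ADF".
VALENCE_GROUPS = {
    "anger": "ADF",
    "disgust": "ADF",
    "fear": "ADF",
    "contempt": "contempt",
    "joy": "joy",
    "sadness": "sadness",
    # "surprise" is deliberately absent, so those trials are excluded.
}


def to_ml_labels(trials):
    """Map (features, emotion) pairs to the four ML classes, dropping surprise trials."""
    labelled = []
    for features, emotion in trials:
        label = VALENCE_GROUPS.get(emotion)
        if label is not None:
            labelled.append((features, label))
    return labelled
```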

Two sets of ML experiments were run using the fEMG data of SZ and CO. In the first set, fEMG features were used to predict the actual emotions expressed by the avatars. In the second set, fEMG features reflecting participants’ spontaneous facial mimicry were used to predict the emotions they selected. To guard against overfitting, which yields optimistically biased accuracy estimates when the same samples are used for training and testing, all experiments used a fivefold cross-validation approach. In this approach, the data samples were divided into five disjoint subsets; each subset in turn served as the test set while the remaining four were used to train the model. Seven commonly used ML algorithms were tested in each set of experiments. Multiple algorithms were used to capture potential nonlinear relationships and to ensure that any group differences in prediction results stemmed from the data rather than from a particular algorithm. The performance of all seven algorithms was then compared between the two groups for each set of experiments, with a Holm correction applied.
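The fivefold procedure can be sketched as follows. This is a minimal NumPy illustration, assuming that one group's trial features are stacked in `X` (one row per trial) with class labels in `y`. The nearest-centroid classifier is a deliberately simple stand-in and is not one of the seven algorithms in Table 2; any of those could be passed in as `fit_predict`.

```python
import numpy as np


def kfold_indices(n, k=5, seed=0):
    """Shuffle sample indices and split them into k disjoint test folds."""
    idx = np.random.default_rng(seed).permutation(n)
    return np.array_split(idx, k)


def nearest_centroid_fit_predict(X_train, y_train, X_test):
    """Toy stand-in classifier: assign each test sample to the closest class mean."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    sq_dists = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[sq_dists.argmin(axis=1)]


def cross_validated_accuracy(X, y, fit_predict, k=5, seed=0):
    """Pooled accuracy over k folds; each fold is tested once, trained on the other k-1."""
    folds = kfold_indices(len(y), k, seed)
    correct = 0
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        predictions = fit_predict(X[train_idx], y[train_idx], X[test_idx])
        correct += int((predictions == y[test_idx]).sum())
    return correct / len(y)
```

Running the same function separately on SZ and CO data, once with displayed-emotion labels and once with selected-emotion labels, mirrors the two sets of experiments. Keeping the fold seed fixed across groups makes the comparison depend on the data rather than on a lucky split.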

3. Results

3.1. ERRATA performance

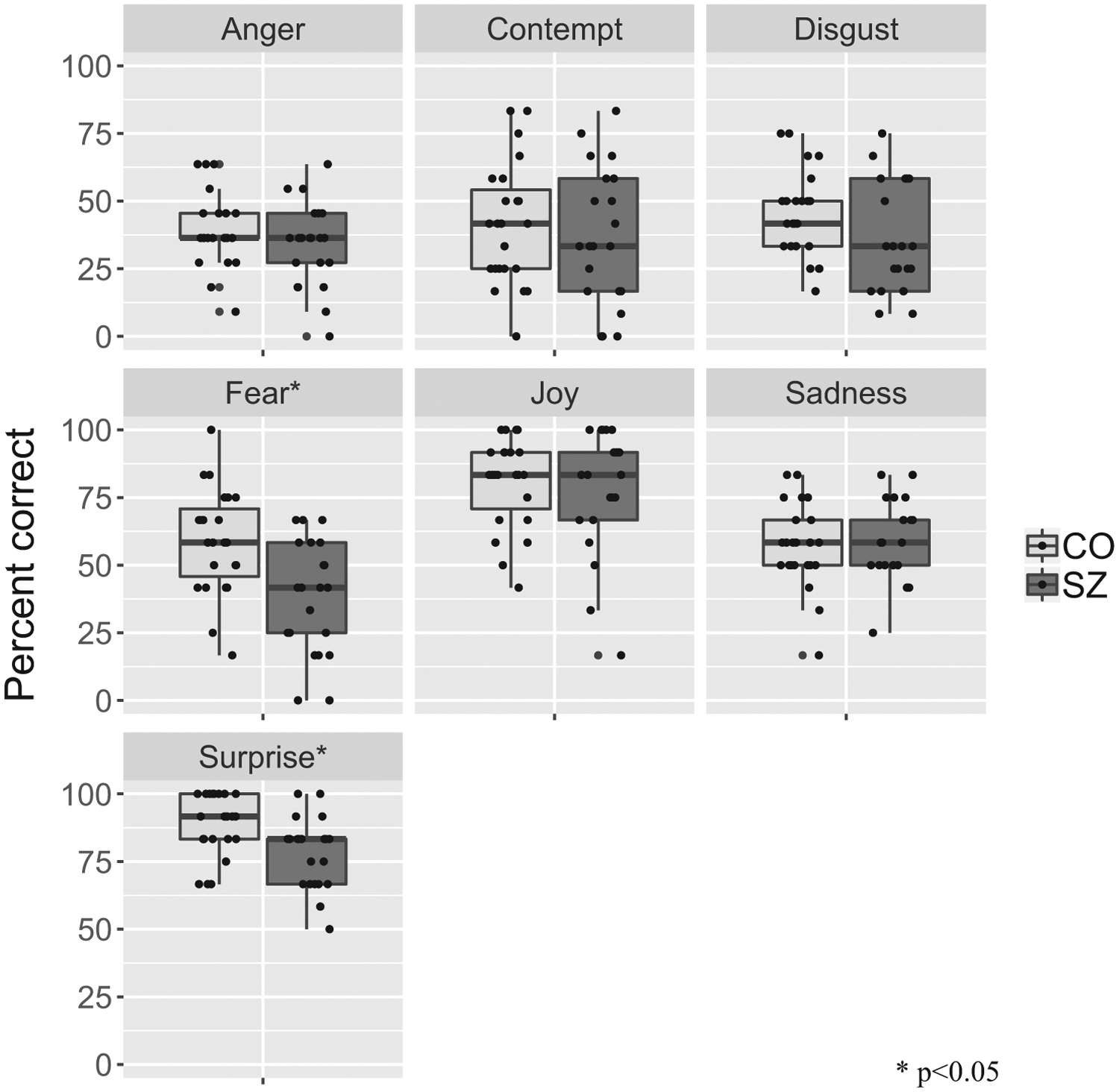

As expected, SZ were less accurate than CO at recognizing the avatars’ facial expressions on ERRATA across emotions (SZ: 51.3% correct, CO: 58.9% correct; OR = 0.69, p < 0.001). Thus, the odds of SZ correctly identifying an emotion were 31% lower than those of CO. When examined per emotion, recognition rates were significantly lower in SZ than in CO for fear (OR = 0.43, p < 0.001) and surprise (OR = 0.45, p = 0.011), but not for anger, contempt, joy, or sadness (all p > 0.05). After correction for multiple comparisons, the group difference in recognition of disgust was only a trend (OR = 0.67, p = 0.12). Fig. 2 summarizes these results.

Fig. 2.

ERRATA performance per emotion for SZ and CO.

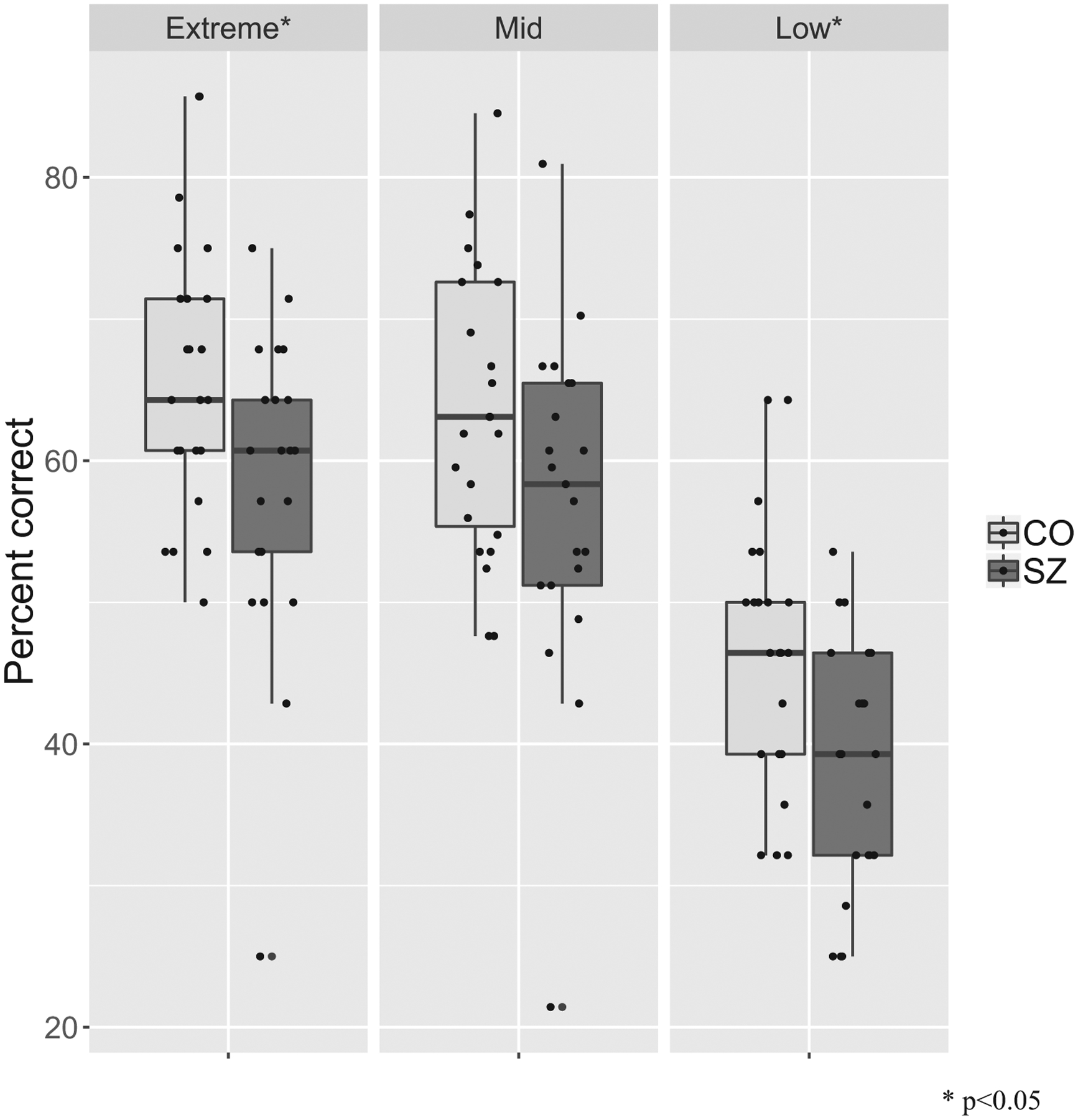

As expected, participants performed better when emotions were displayed at higher intensity. Compared to trials with extreme intensity (62.4% correct), participants performed significantly worse on trials with low intensity (42.6% correct; OR = 0.46, p < 0.001) but not on trials with mid intensity (61.2% correct; OR = 0.31, p = 0.31). SZ performed significantly worse than CO at the low (SZ: 38.4% correct, CO: 46.4% correct; OR = 0.72, p = 0.037) and extreme levels of intensity (SZ: 58.3% correct, CO: 66.1% correct; OR = 0.72, p = 0.037). The group difference was a trend at the mid level of intensity (SZ: 57.7% correct, CO: 64.4% correct; OR = 0.75, p = 0.10; see Fig. 3).

Fig. 3.

ERRATA performance per intensity level in SZ and CO.

Response latency (OR = 0.95, p < 0.001) and confidence rating (OR = 1.21, p < 0.001) significantly predicted the odds of correct recognition across all participants: faster responses and higher confidence ratings were associated with higher odds of a correct response. These relationships did not differ between groups. Lower response latency predicted better performance in both SZ (OR = 0.95, p < 0.001) and CO (OR = 0.95, p < 0.001), and higher confidence ratings predicted better performance in both SZ (OR = 1.17, p < 0.001) and CO (OR = 1.21, p < 0.001).

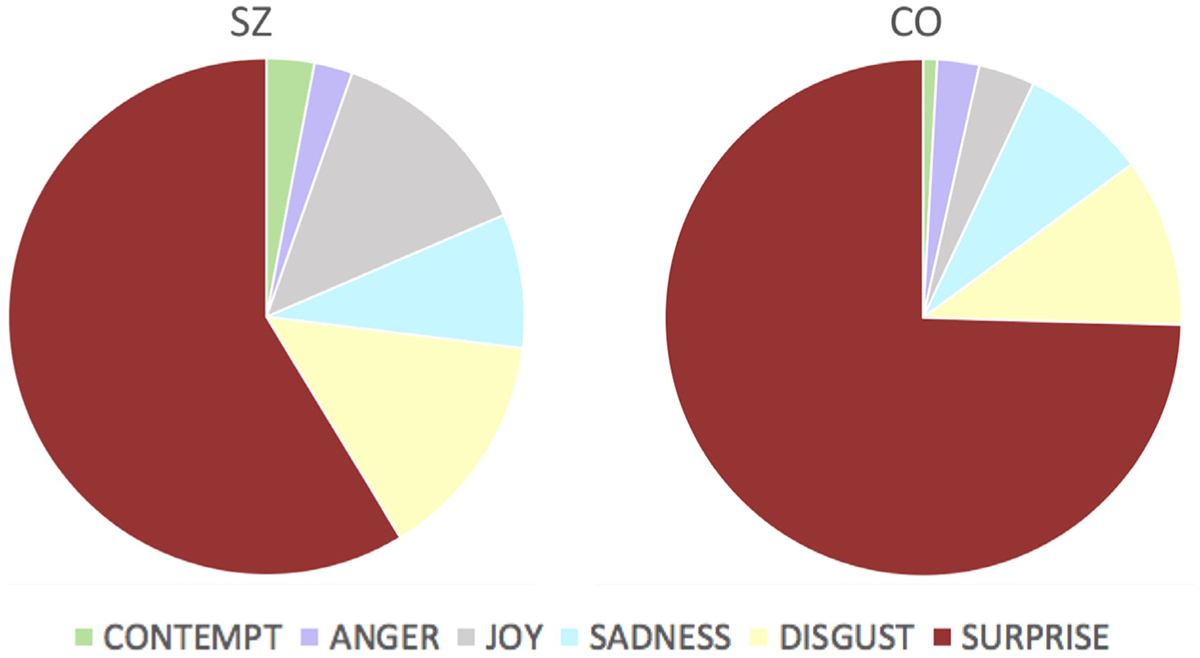

Examining the pattern of errors, we found that SZ and CO differed in the distribution of errors for disgust (χ2 = 13.7, df = 6, p = 0.033), fear (χ2 = 34.8, df = 6, p < 0.001), sadness (χ2 = 16.17, df = 6, p = 0.013), and surprise (χ2 = 15.2, df = 6, p = 0.019), but not for anger, contempt, or joy. For all four of these emotions, the errors made by SZ were less consistent than those made by CO. For instance, misidentifying fear as surprise accounted for 74.6% of the errors made by CO on fear trials, but only 58.7% of the errors made by SZ on these trials. Fig. 4 illustrates this example.
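The error-distribution comparison is a chi-square test of homogeneity on a 2 (group) × k (response category) table of counts. The exact table layout is not stated. A df of 6 would correspond to 7 response categories, and that layout is assumed here. The pure-Python sketch below computes the statistic, and also the p value through the closed-form chi-square tail that holds for even degrees of freedom. No study counts are reproduced, because the raw confusion-matrix counts are not reported.

```python
import math


def chi2_homogeneity(counts_a, counts_b):
    """Pearson chi-square statistic and df for a 2 x k table of response counts.

    Response categories with zero total count are dropped before testing;
    both groups must have at least one response.
    """
    cols = [(a, b) for a, b in zip(counts_a, counts_b) if a + b > 0]
    total_a = sum(a for a, _ in cols)
    total_b = sum(b for _, b in cols)
    n = total_a + total_b
    stat = 0.0
    for a, b in cols:
        col_total = a + b
        for observed, row_total in ((a, total_a), (b, total_b)):
            expected = row_total * col_total / n
            stat += (observed - expected) ** 2 / expected
    return stat, len(cols) - 1


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square p value; this closed form is valid only for even df.

    P(X > x) = exp(-x/2) * sum_{j=0}^{df/2 - 1} (x/2)**j / j!
    """
    if df % 2:
        raise ValueError("this closed form requires an even number of degrees of freedom")
    half = x / 2.0
    term, total = 1.0, 1.0
    for j in range(1, df // 2):
        term *= half / j
        total += term
    return math.exp(-half) * total
```

As a consistency check, `chi2_sf_even_df(13.7, 6)` gives about 0.033 and `chi2_sf_even_df(15.2, 6)` about 0.019, in line with the p values reported above for disgust and surprise.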

Fig. 4.

Errors made by SZ (left) and CO (right) in the recognition of fear.

ERRATA performance was significantly correlated with the severity of negative symptoms (r = −0.46, p = 0.03) but not with positive symptoms (r = −0.098, p = 0.66) or overall symptoms (r = 0.03, p = 0.89). Thus, the more severe the negative symptoms, the poorer the ERRATA performance in the SZ group. Medication dosage did not significantly correlate with ERRATA performance (r = 0.13, p = 0.56), suggesting that the emotion recognition deficit in SZ is not explained by antipsychotic dosage.

3.2. Electromyography

The accuracies of the different ML algorithms for each set of experiments are presented in Table 2. Higher predictive accuracy indicates a stronger relationship between the independent variables (fEMG features) and the dependent variables (emotions displayed or selected). When fEMG data were used to predict the actual emotions displayed by the avatars, the ML models for CO and SZ achieved similar accuracies (p = 0.443). In other words, fEMG data from SZ and CO had comparable predictive power when the actual emotion was used as the label. This result indirectly suggests that the facial muscle activity automatically produced by participants while looking at the avatar’s expressed emotion (i.e., facial mimicry) does not differ between SZ and CO. However, when fEMG data were used to predict the participants’ selected emotions, the CO models significantly outperformed the SZ models (p < 0.05). That is, fEMG data recorded during ERRATA predicted the emotion selected by CO better than the emotion selected by SZ. The predictive value of these models per se was not the focus of our analysis; rather, their purpose was to investigate the relationship between fEMG data and displayed or selected emotions, and how this relationship differed between groups. We nonetheless note that nearly all models predicted the corresponding labels well above chance (25%).

Table 2.

Overall accuracies (%) of different ML algorithms using actual emotions displayed by the avatar (displayed emotion) and emotions selected by participants (selected emotion) as labels.

| Label used | Group | Support vector machine | Random forest | Logistic regression | Bayesian Gaussian mixture model | k nearest neighbor | Artificial neural network | Decision tree |
|---|---|---|---|---|---|---|---|---|
| Displayed emotion | CO | 49.3 | 49.4 | 49.3 | 49.3 | 47.3 | 49.4 | 36.3 |
| | SZ | 49.3 | 49.3 | 49.2 | 49.3 | 48.0 | 49.7 | 37.9 |
| Selected emotion\* | CO | 46.1 | 46.1 | 45.7 | 46.0 | 39.4 | 46.0 | 31.4 |
| | SZ | 42.3 | 40.6 | 34.8 | 42.3 | 30.1 | 42.5 | 26.1 |

Note: Chance = 25%.

\* Group difference is significant at p < 0.05.

4. Discussion

SZ’s impaired performance on ERRATA is in line with a large body of literature documenting emotion identification deficits in this population (for a review see Kohler et al., 2010). Importantly, our results also replicate those of previous studies using dynamic emotional facial stimuli (Archer et al., 1994; Johnston et al., 2010). SZ performed worse than CO at all levels of emotional intensity, significantly so at the low and extreme levels. Although performance was not impaired for every emotion, no discernible pattern emerged across emotions. Similarly, no discernible error pattern was identified, although the distribution of errors made by SZ and CO differed for some emotions and not others. However, the errors made by SZ were less consistent than those made by CO. Whereas CO largely confused fear with surprise, which is a common mistake (Roy-Charland et al., 2014), SZ made more uncommon, “true” errors. Our results further show that emotion recognition deficits in SZ are linked to the severity of negative, but not positive, symptoms and that these deficits are not related to antipsychotic dosage. In sum, these findings corroborate the well-documented and characteristic emotion recognition impairments in schizophrenia.

A major new finding of this study is that while spontaneous facial mimicry is intact in SZ, emotion recognition is impaired. The present study demonstrates that fEMG data from SZ and CO have the same predictive power when the avatars’ actual expressed emotions are used as labels. This result suggests that, when looking at the avatars’ facial expressions, SZ exhibit micro-expressions similar to those of CO. Contrary to our expectations, this finding provides evidence for intact spontaneous facial mimicry in SZ. However, when fEMG features were used to predict the participants’ selected emotions, predictive accuracies were higher for CO than for SZ. Together, these results suggest that ML algorithms can use SZ’s fEMG data to predict the emotion displayed by the avatar as accurately as for CO, but not the emotion the participant identified. This might indicate that SZ have difficulty transforming unconsciously perceived emotions (facial mimicry) into conscious thoughts and explicit words (selected emotion).

Facial mimicry, a bottom-up automatic process, has been shown to facilitate and accelerate affect recognition in control individuals (Stel and van Knippenberg, 2008). Additionally, empathy is thought to originate from shared representations of people’s emotional states (Singer and Lamm, 2009). Our results suggest that the foundational, automatic level of these shared representations (spontaneous facial mimicry) is intact in SZ. However, SZ still have difficulty accurately translating these shared representations into words. Tschacher et al. (2017) make the case for a false inference model of embodiment in schizophrenia, arguing that symptoms arise from false beliefs about, and interpretations of, sensory signals. In light of this model, our findings suggest that emotion recognition deficits in schizophrenia might result from a false interpretation of one’s own facial muscle activity. Crook et al. (2012) further showed that top-down modulation of social perception, which is disrupted in schizophrenia, is critical to social functioning. Thus, emotion recognition impairments might stem from a higher-order, top-down deficit.

Although our study focused on the automatic imitation of facial expressions, the literature also reveals impairments in conscious imitation in schizophrenia. Evidence suggests that individuals with schizophrenia display deficits in the conscious imitation of manual and facial movements (Matthews et al., 2012; Park et al., 2008), and Thakkar et al. (2014) found that the mirror neuron system underlying conscious imitation is affected in schizophrenia. More research is needed to determine whether this higher-order deficit also underlies SZ’s difficulty translating adequate facial mimicry into conscious emotion recognition. Although the present results argue against the hypothesis that emotion recognition deficits in schizophrenia stem from impaired spontaneous facial mimicry, they cannot elucidate the neurobiological origin of these deficits.

To understand the clinical implications of our results, we turn to embodied cognition theory (Barsalou et al., 2003), which posits that social stimuli produce facial and bodily changes both directly and indirectly, through mimicry. These embodied responses in turn become stimuli that can induce affective changes that propagate to other cognitive processes. In other words, embodiment (e.g., facial expressions) is both a response and a stimulus that mediates many aspects of cognition and social functioning. Processing has been found to be disrupted when feedback from the body and face does not match ongoing cognitive processes, suggesting that higher cognition relies on embodied states. According to Barsalou et al. (2003), embodiment-cognition compatibility is essential to social functioning. Our main finding empirically highlights a mismatch between bodily signals and cognition in schizophrenia. Our results therefore contribute to understanding the nature of social deficits in SZ and have important clinical implications: clinicians might be able to improve patients’ social functioning by teaching them to pair specific patterns of facial muscle activation with distinct emotion words.

Some limitations should be noted. First, our relatively small sample did not allow us to analyze men and women separately, although the contribution of facial mimicry to emotion recognition has previously been found to differ by gender (Stel and van Knippenberg, 2008). However, our SZ and CO groups were matched on biological sex, suggesting that any sex effects would have been similar in both groups. Our sample size also did not allow us to examine the link between spontaneous facial mimicry and emotion recognition ability at the individual level; instead, we used group-level analyses to draw preliminary conclusions. Furthermore, all SZ participants were medicated, which limited our ability to test the potential effects of antipsychotic drugs on emotion recognition ability. However, our analyses revealed no link between medication dosage and ERRATA performance, suggesting that emotion recognition deficits in SZ are not due to antipsychotic dosage. Finally, there might be different subtypes of individuals with schizophrenia based on their symptom profiles. Our sample deliberately included a wide variety of positive and negative symptom profiles to increase the generalizability of the findings. However, the variability of our SZ group in both ERRATA performance and facial mimicry suggests that there might be patient subtypes that our design did not account for.

In sum, we found that although facial mimicry, an automatic process facilitating emotion recognition, is intact in SZ, their ability to identify others’ emotions is impaired. SZ’s adequate ability to spontaneously imitate facial expressions has important clinical implications. The present findings suggest that higher-order, conscious processes might underlie emotion recognition deficits in SZ; this hypothesis remains to be tested empirically.

Supplementary Material

Acknowledgments

This work was supported by NARSAD, MH106748 and the Gertrude Conaway Vanderbilt Endowment fund. We acknowledge and thank Alena Gizdić, Jacqueline Roig, Andrea Prada, Justin Hong, and Eunsol Chon for their help with data collection. We also thank our participants for their time and dedication.

Footnotes

Declaration of conflict of interest

The authors report no conflict of interest.

Supplementary materials

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.psychres.2019.03.035.

References

- Adelmann PK, Zajonc RB, 1989. Facial efference and the experience of emotion. Annu. Rev. Psychol 40, 249–280.

- Amminger GP, Schäfer MR, Papageorgiou K, Klier CM, Schlögelhofer M, Mossaheb N, Werneck-Rohrer S, Nelson B, McGorry PD, 2012a. Emotion recognition in individuals at clinical high-risk for schizophrenia. Schizophr. Bull 38 (5), 1030–1039.

- Amminger GP, Schäfer MR, Klier CM, Schlögelhofer M, Mossaheb N, Thompson A, Bechdolf A, Allott K, McGorry PD, Nelson B, 2012b. Facial and vocal affect perception in people at ultrahigh risk of psychosis, first-episode schizophrenia and healthy controls. Early Interv. Psychiatry 6 (4), 450–454.

- Andreasen NC, 1984. Scale for the assessment of positive symptoms. Br. J. Psychiatry 155 (S7), 49–55.

- Andreasen NC, 1989. Scale for the assessment of negative symptoms. Br. J. Psychiatry 155 (S7), 49–52.

- Andreasen NC, Pressler M, Nopoulos P, Miller D, Ho BC, 2010. Antipsychotic dose equivalents and dose-years: a standardized method for comparing exposure to different drugs. Biol. Psychiatry 67 (3), 255–262.

- Archer J, Hay DC, Young AW, 1994. Movement, face processing and schizophrenia: evidence of a differential deficit in expression analysis. Br. J. Clin. Psychol 33 (4), 517–528.

- Barsalou LW, Niedenthal PM, Barbey AK, Ruppert JA, 2003. Social embodiment. Psychol. Learn. Motiv 43, 43–92.

- Bekele E, Bian D, Zheng Z, Peterman JS, Park S, Sarkar N, 2014. Responses during facial emotional expression recognition tasks using virtual reality and static IAPS pictures for adults with schizophrenia. VAMR Part II LNCS 8526, 225–235.

- Berenbaum H, Oltmanns TF, 1992. Emotional experience and expression in schizophrenia and depression. J. Abnorm. Psychol 101 (1), 37–44.

- Blair JR, Spreen O, 1989. Predicting premorbid IQ: a revision of the National Adult Reading Test. Clin. Neuropsychol 3 (2), 129–136.

- Bradley MM, Lang PJ, 1999. Affective Norms for English Words (ANEW): Instruction Manual and Affective Ratings. Technical report C-1, The Center for Research in Psychophysiology, University of Florida.

- Cacioppo JT, Petty RE, Losch ME, Kim HS, 1986. Electromyographic activity over facial muscle regions can differentiate the valence and intensity of affective reactions. J. Pers. Soc. Psychol 50 (2), 260–268.

- Chechko N, Pagel A, Otte E, Koch I, Habel U, 2016. Intact rapid facial mimicry as well as generally reduced mimic responses in stable schizophrenia patients. Front. Psychol 7, 773.

- Cohen AS, Mohr C, Ettinger U, Chan RCK, Park S, 2015. Schizotypy as an organizing framework for social and affective sciences. Schizophr. Bull 41 (suppl_2), S427–S435.

- Cook JL, Barbalat G, Blakemore SJ, 2012. Top-down modulation of the perception of other people in schizophrenia and autism. Front. Hum. Neurosci 6, 175.

- Dimberg U, 1990. Facial electromyography and emotional reactions. Psychophysiology 27, 481–494.

- Edwards J, Jackson HJ, Pattison PE, 2002. Emotion recognition via facial expression and affective prosody in schizophrenia: a methodological review. Clin. Psychol. Rev 22 (6), 789–832.

- Ekman P, Oster H, 1979. Facial expressions of emotion. Annu. Rev. Psychol 30, 527–554.

- First MB, Williams JBW, Karg RS, Spitzer RL, 2015. Structured Clinical Interview for DSM-5—Research Version (SCID-5-RV). Arlington, VA: American Psychiatric Association.

- Goldman AI, Sripada CS, 2005. Simulationist models of face-based emotion recognition. Cognition 94 (3), 193–213.

- Gooding DC, Tallent KA, 2003. Spatial, object, and affective working memory in social anhedonia: an exploratory study. Schizophr. Res 63 (3), 247–260.

- Herbener ES, Hill SK, Marvin RW, Sweeney JA, 2005. Effects of antipsychotic treatment on emotion perception deficits in first-episode schizophrenia. Am. J. Psychiatry 162 (9), 1746–1748.

- Hooker C, Park S, 2002. Emotion processing and its relationship to social functioning in schizophrenia patients. Psychiatry Res 112 (1), 41–50.

- Horan WP, Kring AM, Blanchard JJ, 2005. Anhedonia in schizophrenia: a review of assessment strategies. Schizophr. Bull 32 (2), 259–273.

- Johnston PJ, Enticott PG, Mayes AK, Hoy KE, Herring SE, Fitzgerald PB, 2010. Symptom correlates of static and dynamic facial affect processing in schizophrenia: evidence of a double dissociation. Schizophr. Bull 36 (4), 680–687.

- Kohler CG, Turner TH, Bilker WB, Brensinger CM, Siegel SJ, Kanes SJ, Gur RE, Gur RC, 2003. Facial emotion recognition in schizophrenia: intensity effects and error pattern. Am. J. Psychiatry 160 (10), 1768–1774.

- Kohler CG, Walker JB, Martin EA, Healey KM, Moberg PJ, 2010. Facial emotion perception in schizophrenia: a meta-analytic review. Schizophr. Bull 36 (5), 1009–1019.

- Kring AM, 1999. Emotion in schizophrenia: old mystery, new understanding. Curr. Dir. Psychol. Sci 8 (5), 160–163.

- Kring AM, Elis O, 2013. Emotion deficits in people with schizophrenia. Annu. Rev. Clin. Psychol 9, 409–433.

- Kring AM, Kerr SL, Earnst KS, 1999. Schizophrenic patients show facial reactions to emotional facial expressions. Psychophysiology 36 (2), 186–192.

- Kring AM, Moran EK, 2008. Emotional response deficits in schizophrenia: insights from affective science. Schizophr. Bull 34 (5), 819–834.

- Lakin J, Chartrand T, 2003. Using nonconscious behavioral mimicry to create affiliation and rapport. Psychol. Sci 14 (4), 334–339.

- Matthews N, Gold BJ, Sekuler R, Park S, 2012. Gesture imitation in schizophrenia. Schizophr. Bull 39 (1), 94–101.

- Moody EJ, Reed CL, Van Bommel T, App B, McIntosh DN, 2018. Emotional mimicry beyond the face? Rapid face and body responses to facial expressions. Soc. Psychol. Pers. Sci 9 (7), 844–852.

- Neal DT, Chartrand TL, 2011. Embodied emotion perception: amplifying and dampening facial feedback modulates emotion perception accuracy. Soc. Psychol. Pers. Sci 2 (6), 673–678.

- Niedenthal PM, Mermillod M, Maringer M, Hess U, 2010. The Simulation of Smiles (SIMS) model: embodied simulation and the meaning of facial expression. Behav. Brain Sci 33 (6), 417–433.

- Oberman LM, Winkielman P, Ramachandran VS, 2007. Face to face: blocking facial mimicry can selectively impair recognition of emotional expressions. Soc. Neurosci 2 (3–4), 167–178.

- Oldfield RC, 1971. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9 (1), 97–113.

- Overall JE, Gorham DR, 1962. The brief psychiatric rating scale. Psychol. Rep 10 (3), 799–812.

- Park S, Matthews N, Gibson C, 2008. Imitation, simulation, and schizophrenia. Schizophr. Bull 34 (4), 698–707.

- Phillips LK, Seidman LJ, 2008. Emotion processing in persons at risk for schizophrenia. Schizophr. Bull 34 (5), 888–903.

- Prigent E, Amorim MA, Leconte P, Pradon D, 2014. Perceptual weighting of pain behaviours of others, not information integration, varies with expertise. Eur. J. Pain 18 (1), 110–119.

- Prochazkova E, Kret ME, 2017. Connecting minds and sharing emotions through mimicry: a neurocognitive model of emotional contagion. Neurosci. Biobehav. Rev 80, 99–114.

- Roy-Charland A, Perron M, Beaudry O, Eady K, 2014. Confusion of fear and surprise: a test of the perceptual-attentional limitation hypothesis with eye movement monitoring. Cogn. Emot 28 (7), 1214–1222.

- Schwartz BL, Mastropaolo J, Rosse RB, Mathis G, Deutsch SI, 2006. Imitation of facial expressions in schizophrenia. Psychiatry Res 145 (2–3), 87–94.

- Singer T, Lamm C, 2009. The social neuroscience of empathy. Ann. NY Acad. Sci 1156 (1), 81–96.

- Stel M, van Knippenberg A, 2008. The role of facial mimicry in the recognition of affect. Psychol. Sci 19 (10), 984–985.

- Thakkar KN, Peterman JS, Park S, 2014. Altered brain activation during action imitation and observation in schizophrenia: a translational approach to investigating social dysfunction in schizophrenia. Am. J. Psychiatry 171 (5), 539–548.

- Trémeau F, 2006. Review of emotion deficits in schizophrenia. Dialogues Clin. Neurosci 8, 59–70.

- Tschacher W, Giersch A, Friston K, 2017. Embodiment and schizophrenia: a review of implications and applications. Schizophr. Bull 43 (4), 745–753.

- Varcin KJ, Bailey PE, Henry JD, 2010. Empathic deficits in schizophrenia: the potential role of rapid facial mimicry. J. Int. Neuropsychol. Soc 16 (4), 621–629.

- Wade J, Nichols HS, Ichinose M, Bian D, Bekele E, Snodgress M, Amat A, Granholm E, Park S, Sarkar N, 2018. Extraction of emotional information via visual scanning patterns: a feasibility study of participants with schizophrenia and neurotypical individuals. ACM Trans. Access. Comput 11 (4), Article 23.
