Abstract

Purpose

To automatically detect lymph nodes involved in lymphoma on fluorine 18 (18F) fluorodeoxyglucose (FDG) PET/CT images using convolutional neural networks (CNNs).

Materials and Methods

In this retrospective study, baseline disease of 90 patients with lymphoma was segmented on 18F-FDG PET/CT images (acquired between 2005 and 2011) by a nuclear medicine physician. An ensemble of three-dimensional patch-based, multiresolution pathway CNNs was trained using fivefold cross-validation. Performance was assessed using the true-positive rate (TPR) and number of false-positive (FP) findings. CNN performance was compared with agreement between physicians by comparing the annotations of a second nuclear medicine physician to the first reader in 20 of the patients. Patient TPR was compared using Wilcoxon signed rank tests.

Results

Across all 90 patients, a range of 0–61 nodes per patient was detected. At an average of four FP findings per patient, the method achieved a TPR of 85% (923 of 1087 nodes). Performance varied widely across patients (TPR range, 33%–100%; FP range, 0–21 findings). In the 20 patients labeled by both physicians, a range of 1–49 nodes per patient was detected and labeled. The second reader identified 96% (210 of 219) of the nodes labeled by the first reader and labeled an average of 3.7 additional nodes per patient. In the same 20 patients, the CNN achieved a 90% (197 of 219) TPR at 3.7 FP findings per patient.

Conclusion

An ensemble of three-dimensional CNNs detected lymph nodes at a performance nearly comparable to differences between two physicians’ annotations. This preliminary study is a first step toward automated PET/CT assessment for lymphoma.

© RSNA, 2020

Summary

An ensemble of three-dimensional convolutional neural networks, implemented to detect lymph nodes with lymphoma involvement in a group of 90 adult patients with lymphoma, achieved a detection performance nearly comparable to the differences between two physicians’ annotations.

Key Points

■ The model implemented achieved a detection sensitivity of 85% (923 of 1087 nodes) at an average of four FP findings per patient in adult lymphoma.

■ In a subset of 20 patients segmented by two nuclear medicine physicians, model performance was nearly comparable to the disagreements between two physicians.

■ Computer-aided detection methods in lymphoma can improve patient diagnosis and staging or can be used for disease quantification and prognostication.

Introduction

Whole-body fluorine 18 (18F) fluorodeoxyglucose (FDG) PET/CT imaging is a crucial component of care for patients with lymphoma and has an important role in diagnosis, staging, therapy response assessment, and remission assessment (1,2). Currently, patients with lymphoma are assessed using qualitative criteria, such as Deauville scores, for treatment response assessment (3). The prognostic power of PET can be increased through quantification of disease (4–11). However, comprehensive quantification of PET images is infeasible in routine clinical settings due to the requirement of careful delineation of disease, which is challenging and time-consuming, especially for patients with a heavy disease burden. Automated and reproducible methods are needed for comprehensive PET quantitation of lymphoma in a clinical setting and would ultimately enable better guidance for lymphoma therapies.

Previous work in the automated detection of lymphoma on 18F-FDG PET/CT images has focused on classifying areas of high 18F-FDG uptake or has been limited to small patient populations (12–16). One such study trained support vector machine classifiers on handcrafted features of high-uptake areas obtained using thresholding in 10 patients with lymphoma (12). This technique was improved upon by classifying supervoxels using convolutional neural networks (CNNs) in 11 patients with lymphoma (13). A similar method was tested in 48 patients with lymphoma, in which the PET image was divided into supervoxels and clustered based on handcrafted features (16). A drawback of these methods is that classifying high-uptake regions (often identified using a PET threshold) disregards low-uptake disease. Furthermore, the proportion of total lesions that were correctly identified and the number of false-positive (FP) detections were not reported.

The purpose of this preliminary study was to demonstrate a fully automated machine learning–based method for localization of lymph nodes on 18F-FDG PET/CT images using CNNs and trained using physician contours. The algorithm was trained and tested in a dataset of patients with heterogeneous lymphoma disease. Performance of our method is benchmarked against the variability between physician labels in a subset of patients.

Materials and Methods

Study Design

This retrospective study included patients who were recruited in one of two separate prospective multicenter imaging trials. The first, described in Hutchings et al, included 126 patients with newly diagnosed Hodgkin lymphoma, stages II-IV, scanned between 2005 and 2011 at various imaging centers in the United States and Europe (17). All patients from Hutchings et al scanned at Mount Sinai Medical Center (MSSM) (n = 24; mean age, 38 years; age range, 19–66 years; 14 women) and in Denmark (n = 39; mean age, 37 years; age range, 16–76 years; 21 male patients) were included in the current study. The second trial, described in Mylam et al, included 112 patients with newly diagnosed diffuse large B-cell lymphoma (DLBCL), stages II-IV, scanned between 2005 and 2011 at various Nordic and U.S. participating imaging centers (18). All patients from Mylam et al scanned at MSSM were included in the current study (n = 27 patients; mean age, 61 years; age range, 23–83 years; 18 women).

The primary endpoint of both Hutchings et al and Mylam et al was to assess the prognostic value of PET after one cycle of chemotherapy. This retrospective study was limited to baseline images only and did not include outcome data. Baseline images were transferred to the imaging laboratory of the University of Wisconsin-Madison for analyses. Retrospective analysis was approved for expedited institutional review board review with no requirement of additional consent from the patients by the respective local ethics committees at each academic site. A total of 90 patients were included in this study.

PET/CT Imaging, Segmentation, and Labeling

All patients underwent 18F-FDG PET/CT imaging before treatment (baseline). Whole-body 18F-FDG PET/CT images were acquired after a 6-hour fast and approximately 60 to 90 minutes after intravenous injection of FDG. Images were obtained by one of three PET/CT scanners: the GE Discovery LS, GE Discovery STE (GE Healthcare, Waukesha, Wis), and Siemens Biograph (Siemens Healthcare, Erlangen, Germany).

Lymphoma lesions were segmented manually on baseline PET/CT images by a nuclear medicine physician (physician 1 with 11 years of experience) on a MIM workstation (MIM Software, Cleveland, Ohio). Segmented lesions were labeled according to physician confidence in malignancy status (1, definitely malignant; 2, likely malignant; 3, equivocal; or 4, likely benign). Lymph node locations were categorized as head and/or neck, chest (including axillary region), abdomen, and pelvis. Malignant and likely malignant nodes were combined and used as the ground truth mask. Extranodal disease thought to be related to lymphoma (eg, bone marrow or spleen) was segmented but not used for algorithm training.

The 90 patients were randomly ordered, and images in the first 20 patients (11 with Hodgkin lymphoma, nine with DLBCL) were chosen to be annotated by a second nuclear medicine physician (physician 2, with more than 30 years of experience) using the same labeling scheme as physician 1. This patient subset was used to assess interphysician variability to draw comparisons with algorithm performance. Readers were provided only the PET and CT images and were blinded to all other clinical information. Physicians 1 and 2 were M.W.K. and S.B.P., respectively.

Image Preprocessing

PET and CT images were resampled to a common, cubic voxel size of 2 mm using linear resampling. Images were normalized to zero mean and unit variance (excluding image values outside of the patient). Patient data were split into five folds for cross-validation such that each fold used 65% of patient data for training, 15% for validation, and 20% for testing.
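The normalization and data split described above can be sketched as follows. This is a minimal illustration and not the study's code: the function names, the use of the population standard deviation, the random seed, and the way validation patients are drawn from the non-test folds are all assumptions. The fold sizes (58 training, 14 validation, and 18 test patients) follow from the 90 patients and the stated proportions.

```python
import random
from statistics import fmean, pstdev


def normalize_inside_patient(values, inside_patient):
    """Scale voxel values to zero mean and unit variance using only in-patient voxels."""
    body = [v for v, inside in zip(values, inside_patient) if inside]
    mean, std = fmean(body), pstdev(body)  # population std is an assumption
    return [(v - mean) / std for v in values]


def five_fold_splits(patient_ids, n_folds=5, n_validation=14, seed=0):
    """Return one (train, validation, test) triple per fold; each patient is tested once."""
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[i::n_folds] for i in range(n_folds)]
    splits = []
    for k, test in enumerate(folds):
        # Patients outside the test fold are divided into validation and training.
        rest = [p for j, fold in enumerate(folds) if j != k for p in fold]
        splits.append((rest[n_validation:], rest[:n_validation], test))
    return splits


splits = five_fold_splits(range(90))
print([len(part) for part in splits[0]])  # [58, 14, 18]
```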

Network Architecture and Training

A three-dimensional, multiresolution pathway CNN, DeepMedic (19), was implemented. The network consists of eight convolutional layers (kernel size, 3 × 3 × 3), followed by two fully connected layers implemented as 1 × 1 × 1 convolutions and a final classification layer (Fig 1). Three resolution pathways were implemented, one at a normal image resolution, and two pathways that downsample the image by factors of three and five (increasing the effective field of view by the same amount), which allows the network to consider context in addition to fine detail. Patches of size 25 × 25 × 25 voxels were extracted for training using class balancing of 50% positive, 50% negative samples per patient. A dropout rate of 5% was used for convolutional layers, and 50% dropout was used for the final two fully connected layers.
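As an illustration of the class balancing described above, the sketch below draws patch centers so that half lie on lesion voxels and half on non-lesion voxels for one patient. It is a hypothetical helper and not part of DeepMedic: the function name, the coordinate lists, and the fallback for a patient without lesion voxels are assumptions.

```python
import random


def sample_patch_centers(foreground, background, n_patches, rng):
    """Pick patch centers so that half fall on lesion voxels and half on other voxels."""
    # Fallback (assumption): if a patient has no lesion voxels, sample background only.
    n_positive = n_patches // 2 if foreground else 0
    n_negative = n_patches - n_positive
    centers = [(rng.choice(foreground), 1) for _ in range(n_positive)]
    centers += [(rng.choice(background), 0) for _ in range(n_negative)]
    rng.shuffle(centers)
    return centers


# Toy voxel coordinates (x, y, z) for one patient.
lesion_voxels = [(10, 10, 10), (11, 10, 10)]
other_voxels = [(0, 0, 0), (40, 40, 40), (5, 60, 20)]
centers = sample_patch_centers(lesion_voxels, other_voxels, 10, random.Random(0))
print(sum(label for _, label in centers), "of", len(centers), "patches are lesion-centered")
```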

Figure 1:

DeepMedic network design, adapted for lymph node detection based on Kamnitsas et al (19). A dual-resolution pathway is shown. Three resolution pathways were implemented in this study.

Augmentation using image rotations and intensity shifts was performed during training. The network was trained by minimizing the cross-entropy using RMSProp (20). A decaying learning rate was implemented. Each CNN was trained on an NVIDIA Tesla V100 GPU (Nvidia, Santa Clara, Calif). For each fold of cross-validation, three identical CNNs were trained from scratch with random initialization. An ensemble of the three models’ outputs, calculated as the intersection of all three single CNN outputs, was used for the final inference in the test patients.
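The intersection ensemble can be illustrated with a short sketch. It assumes, without confirmation from the text, that each network's probability map is binarized at the same threshold before the intersection is taken. Under that assumption the result is identical to thresholding the voxel-wise minimum of the three maps. The function name and toy values are hypothetical.

```python
def ensemble_mask(probability_maps, threshold):
    """Voxel-wise intersection of the thresholded outputs of several networks."""
    masks = [[p >= threshold for p in pmap] for pmap in probability_maps]
    # A voxel is kept only if every network marks it as positive.
    return [all(voxel) for voxel in zip(*masks)]


# Flattened toy probability maps from three networks (four voxels each).
cnn_outputs = [
    [0.9, 0.8, 0.2, 0.6],
    [0.7, 0.4, 0.1, 0.9],
    [0.8, 0.9, 0.3, 0.5],
]
print(ensemble_mask(cnn_outputs, threshold=0.5))  # [True, False, False, True]
```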

Comparing Whole-Body to Region-specific CNN Training

Models were trained using whole-body images. In addition, models were trained using images cropped to focus only above the diaphragm or only below the diaphragm. Based on convergence of the validation datasets, whole-body networks were trained for 70 epochs while cropped networks were trained for 35 epochs. The performance of whole-body CNNs was compared with performance of region-specific CNNs.

Impact of Imaging Centers

To assess the impact of using data from multiple imaging centers with different scanners and evaluate the impact of using an ensemble of networks, three training regimens were compared: (a) ensemble CNN trained using fivefold cross-validation on all patients, as described above (3CNN), (b) a single CNN trained using fivefold cross-validation on all patients (1CNN), and (c) a model trained using only MSSM patients (1CNNMSSM). Performance was assessed for each regimen by testing results in all 90 patients using cross-validation. Performance was also broken down by imaging site (MSSM vs Denmark).

Impact of Patient Number

To assess the impact of the number of patients included during training, networks were trained using fivefold cross-validation with varying patient numbers (five, 10, 20, 30, 40, 58, and 72 randomly sampled patients). Performance was assessed for each network by testing results in all 90 patients.

Statistical Analysis

For all calculated metrics, median and interquartile range are reported. Performance was assessed on the test set using true-positive rate (TPR, percentage of segmented nodes correctly identified) and number of FP findings (positive findings located > 10 mm away from a segmented lymph node) per patient (21). Positive findings that were ranked as equivocal or likely benign by the physician were not counted as FP findings by the model, as was physician-segmented extranodal disease.
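A minimal sketch of this per-patient scoring is given below. The text does not state how the distance between a finding and a node was measured, so the sketch assumes centroid-to-centroid distance in millimeters. The function name and the toy coordinates are hypothetical.

```python
import math


def score_patient(detections, malignant_nodes, ignored_regions=(), max_dist_mm=10.0):
    """Return (true-positive nodes, false-positive findings) for one patient."""

    def near(point, others):
        return any(math.dist(point, other) <= max_dist_mm for other in others)

    # A malignant node counts as detected if any finding lies within the distance limit.
    true_positives = sum(near(node, detections) for node in malignant_nodes)
    # Findings near equivocal or benign nodes or extranodal disease are not counted as FP.
    false_positives = sum(
        not near(d, malignant_nodes) and not near(d, ignored_regions) for d in detections
    )
    return true_positives, false_positives


malignant = [(0, 0, 0), (50, 0, 0)]   # centroids of definitely or likely malignant nodes
ignored = [(0, 100, 0)]               # e.g. an equivocal node
found = [(3, 4, 0), (0, 104, 0), (200, 0, 0)]
tp, fp = score_patient(found, malignant, ignored)
print(tp, fp, tp / len(malignant))  # 1 1 0.5
```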

By varying the CNN output probability threshold, detection free-response receiver operating characteristic (FROC) curves were computed, which compare TPR against FP findings per patient (21). To compare performance of the different CNNs, the TPR was calculated for each patient at the operating point that resulted in an average of four FP findings per patient, which was approximately the level of interphysician variability. Differences in TPR across training methods were assessed using Wilcoxon signed rank or rank sum tests, with P less than .05 considered significant. The impact of lymph node size and tracer uptake on performance was assessed by implementing PET volume cutoffs of 0.5 and 1.0 cm3, and maximum standardized uptake value (SUVmax) cutoffs of 2.5 and 5 g/mL.
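The FROC construction and the choice of operating point can be sketched as follows. This is an illustration under assumptions: each candidate finding is taken to carry a single score and either the index of the node it matches or None for a false positive, and the operating point is taken as the highest-TPR point whose mean FP count does not exceed the target. The data structure and function names are hypothetical.

```python
def froc_points(patients, thresholds):
    """Return (threshold, mean FP per patient, pooled TPR) for each threshold."""
    total_nodes = sum(p["n_nodes"] for p in patients)
    points = []
    for t in thresholds:
        hits = fps = 0
        for p in patients:
            kept = [(s, node) for s, node in p["findings"] if s >= t]
            hits += len({node for _, node in kept if node is not None})
            fps += sum(1 for _, node in kept if node is None)
        points.append((t, fps / len(patients), hits / total_nodes))
    return points


def operating_point(points, target_fp):
    """Highest-TPR point whose mean FP count per patient does not exceed the target."""
    feasible = [pt for pt in points if pt[1] <= target_fp]
    return max(feasible, key=lambda pt: pt[2])


# Toy data: findings are (score, matched node index or None for a false positive).
patients = [
    {"n_nodes": 2, "findings": [(0.9, 0), (0.6, 1), (0.7, None), (0.3, None)]},
    {"n_nodes": 1, "findings": [(0.8, 0), (0.4, None)]},
]
curve = froc_points(patients, thresholds=[0.2, 0.5, 0.75])
print(operating_point(curve, target_fp=1.0))  # (0.5, 0.5, 1.0)
```

In the study the target was an average of four FP findings per patient; the toy example uses a target of one only because it contains so few findings.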

Variability across physicians was quantified by calculating the proportion of lymph nodes identified by physician 1 that were also identified by physician 2, and the number of additional nodes identified by physician 2. These numbers capture interobserver variability and were compared in the subset of 20 patients to the model’s TPR and FP findings rate in the same population.

CNN training and testing were performed in TensorFlow (https://www.tensorflow.org/). All other analyses were performed using MATLAB version R2018a (MathWorks, Natick, Mass).

Results

Patient and lymph node information is shown in Tables 1 and 2, respectively. A total of 1087 lymph nodes scored as malignant (definitely or likely malignant) were identified, while 145 nodes were annotated as benign (equivocal or likely benign). Five patients had no disease identified above the diaphragm, while 51 patients had no disease identified below the diaphragm. There were 18 patients with annotated extranodal disease, including lesions in the bone marrow, spleen, breast, and liver. Total training time per CNN averaged approximately 68 hours, while full inference took an average of 24 seconds ± 5 (standard deviation). Thus, a full inference using the 3CNN method on a single GPU would take less than 2 minutes.

Table 1:

Patient Characteristics across the Three Patient Populations

Table 2:

Lymph Node Characteristics across the Three Patient Populations

Detection Performance for All Patients

For all definitely and likely malignant nodes, the TPR was 85% (923 of 1087) at four FP findings per patient, achieved using the 3CNN model. The 3CNN method performed better than the 1CNN method, with the 1CNN method achieving a TPR of 79% (859 of 1087) at four FP findings per patient (Wilcoxon signed rank test, P < .001, Table 3). FROC curves of both the 3CNN and 1CNN are shown in Figure 2, A.

Table 3:

Impact of Training Method on Test Set Performance for the Three Methods Tested

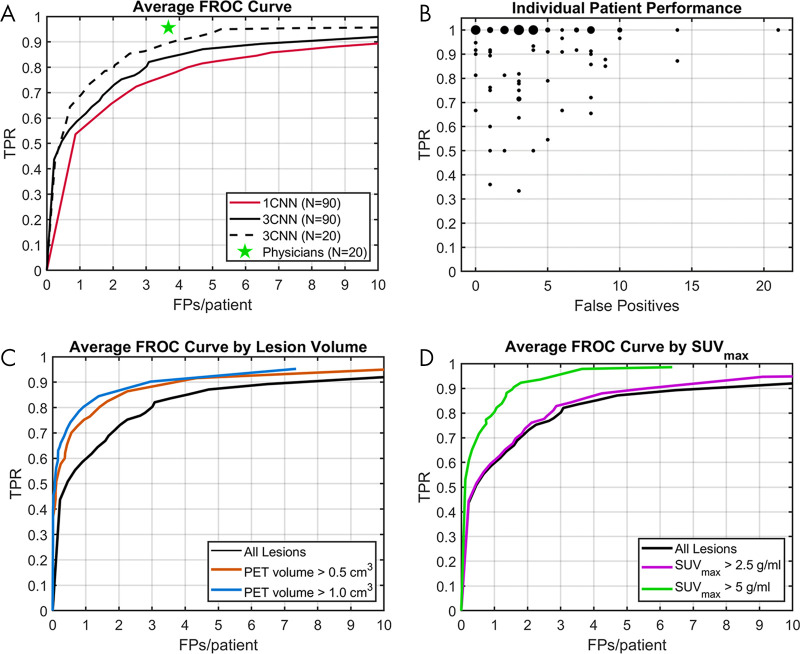

Figure 2:

Test set performance of convolutional neural network (CNN) methods. A, Free-response receiver operating characteristic (FROC) curves comparing the 3CNN method to the 1CNN method, with interobserver variability as measured from two nuclear medicine physician readings in 20 patients. B, True-positive rate (TPR) and false-positive (FP) findings for individual patients for the 3CNN method. Plot markers are scaled based on frequency for which patients had the same performance. C, FROC curves comparing performance of 3CNN method by lesion PET volume. D, FROC curves comparing performance of 3CNN method by lesion SUVmax. SUVmax = maximum standardized uptake value.

Performance of the 3CNN method varied widely across patients, with TPR ranging from 33% to 100% and FP findings from 0 to 21 (Fig 2, B). However, the 3CNN achieved a median patient TPR of 100% and median of three FP findings per patient. Results were skewed by three outlier patients who had greater than 10 FP findings due to substantial uptake of 18F-FDG in brown adipose tissue, located in areas commonly occupied by lymph nodes. Examples of performance in several patients, including two patients with 10 or more FP findings in brown adipose tissue, are shown in Figure 3.

Figure 3:

Ten different examples of ensemble convolutional neural network (CNN) output. Maximum intensity projections (MIPs) of PET images with overlaying MIPs of the 3CNN output show true-positive findings (green), false-positive findings (red), and false-negative findings (blue). A-H show patients of varying disease burden and varying performance. I-J show patients with an above average number of false-positive findings who had significant 18F fluorodeoxyglucose uptake of brown fat in the neck, shoulders, and ribs.

Comparison of Whole-Body and Region-specific Training

A total of 732 malignant nodes were labeled as head and/or neck and chest (above diaphragm), and 355 malignant nodes were labeled as abdomen and pelvis (below diaphragm). An FROC curve for both the 1CNN and 3CNN methods for whole-body and region-specific–trained networks is shown in Figure 4. Performance of the whole-body–trained 3CNN had a TPR of 86% (629 of 732) at two FP findings per patient above the diaphragm, compared with a TPR of 79% (578 of 732) at two FP findings per patient for 3CNN trained on images cropped above the diaphragm (P = .001). For performance below the diaphragm, the whole-body–trained 3CNN had a TPR of 78% (277 of 355) at two FP findings per patient, compared with a TPR of 71% (252 of 355) at two FP findings per patient for 3CNN trained on the cropped images (P = .2).

Figure 4:

Comparison of performance for whole-body convolutional neural network (CNN) training and region-specific CNN training. Performance of a CNN trained on whole-body images was compared with that of a network trained on images cropped, A, above the diaphragm and, B, below the diaphragm. Results are shown for both the 1CNN and 3CNN methods. FP = false positive, TPR = true-positive rate.

Comparison with Interphysician Variability

In the 20 patients labeled by both physicians, physician 1 identified 219 definitely or likely malignant nodes (median, 5.5 per patient; range, 1–49 per patient). Physician 2 identified 96% (210 of 219; range, 40%–100% per patient) of the nodes identified by physician 1 with an average of 3.7 additional nodes (range, 0–13 per patient) labeled per patient. Performance of the 3CNN in these patients at 3.7 FP findings per patient (range, 0–15 per patient) had a TPR of 90% (197 of 219; range, 60%–100% per patient). The FROC curve for the 3CNN model in these 20 patients, with comparison for interphysician variability, is shown in Figure 2, A. Interphysician variability was higher for disease above the diaphragm (96% [148 of 154] agreement at 3.3 additional nodes per patient) than it was for disease below the diaphragm (92% [60 of 65] at 0.3 additional nodes per patient). This is likely due to the large majority (70.3% [154 of 219]) of lesions for these 20 patients being located above the diaphragm.

Detection Performance by Lymph Node Size and Uptake

Performance in nodes with a PET volume of greater than 0.5 cm3 (77.8% [846 of 1087]) and volume greater than 1 cm3 (65.8% [715 of 1087]) was improved compared with performance across all nodes, with a TPR of 91% at four FP findings per patient for both volume cutoffs (Fig 2, C). There were 1071 of 1087 (98.5%) nodes with SUVmax of greater than 2.5 g/mL, for which performance was slightly improved at a TPR of 87% at four FP findings per patient (Fig 2, D). Substantially improved performance was found for nodes with SUVmax of greater than 5 g/mL (n = 842), with a TPR of 98% at four FP findings per patient.

Detection Performance by Imaging Site

Table 3 shows the impact of using images from different imaging sites on model performance. Detection performance in the Denmark population was not significantly different from performance in the MSSM population for any of the CNN training methods (P > .1, Wilcoxon rank sum test). No significant differences in TPR were found when comparing 1CNN to 1CNNMSSM using a Wilcoxon signed rank test across any population of patients (P > .2). TPR in the Denmark population was 78% for 1CNN, compared with 77% for 1CNNMSSM (P = .6), and 76% for 1CNN (P = .36).

Detection Performance by Number of Training Patients

Boxplots of patient-level TPR values at four FP findings per patient for all models of varying training patient number are shown in Figure 5. Improvements in detection performance leveled off after training with 40 or more patients. No significant differences (P > .05) were observed between a model trained with 40 patients and the 1CNN model trained with 58 patients. Likewise, model performance did not significantly improve when training with 72 patients (using the validation group as additional training patients).

Figure 5:

Impact of number of training patients on detection performance. Boxplots show median and interquartile range of patient-level true-positive rate (TPR) across all 90 patients as a function of training patient number, operating at an average of four false-positive findings per patient. Dots represent outliers, which are determined by points lying further than 1.5 times the interquartile range from the edge of the box.
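The outlier rule in the caption can be sketched as below. The quartile convention is an assumption: Python's inclusive method is used here, and other software (including MATLAB) may compute quartiles slightly differently and therefore flag slightly different points. The function name and the toy values are hypothetical.

```python
from statistics import quantiles


def boxplot_outliers(values, whisker=1.5):
    """Return values lying more than whisker * IQR beyond the box edges."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - whisker * iqr, q3 + whisker * iqr
    return [v for v in values if v < low or v > high]


# Toy patient-level TPR values in percent.
tpr_values = [100, 100, 100, 90, 95, 85, 100, 33]
print(boxplot_outliers(tpr_values))  # [33]
```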

Discussion

In this preliminary work, we demonstrated the feasibility of using a CNN to automatically identify diseased lymph nodes on whole-body PET/CT images in patients with lymphoma. A performance of 85% detection TPR was achieved at an average FP rate of four per patient, with a majority of patients showing three FP findings or fewer. In a subset of 20 patients, the method’s accuracy was nearly comparable to the level of agreement between two physicians. Performance was even better in high uptake, larger lesions, and better above the diaphragm than below the diaphragm.

Few previous studies have aimed to detect lymphoma on 18F-FDG PET/CT images (13–16). A recent study used a classification of supervoxels based on a clustering method (16). However, their method resulted in 39% ± 33 of the predicted disease volume being falsely positive. For comparison, our method resulted in 8.9% ± 19.8 of the predicted disease volume being falsely positive. Our method did not rely on any a priori assumptions of lesion uptake. Instead, this information, including location of organs with high physiologic 18F-FDG uptake, was implicitly learned during CNN training.

There were several factors contributing to the increased detection performance found above the diaphragm compared with below. First, there were fewer patients with disease below the diaphragm (39 of 90) than there were with disease above the diaphragm (85 of 90). Furthermore, physiologic 18F-FDG radiotracer distributions are more heterogeneous below the diaphragm due to the kidneys, ureter, and bowel. These findings are similar to those of a previous study by Shin et al, which found worse performance in automatically detecting enlarged abdominal lymph nodes compared with mediastinal nodes on contrast material–enhanced CT images (22). The poorer performance is likely due to abdominal nodes being surrounded by more similar-looking objects, whereas mediastinal nodes are more easily distinguishable (22).

An interesting result of this study is the improved performance using whole-body images for CNN training compared with region-specific, cropped images. Because the patch size from the lowest resolution pathway was roughly 7 inches in each direction, and because each patch is evaluated independently by the model, information in the chest would not influence CNN classification in the abdomen, and vice versa. Whole-body training results in roughly twice as many training samples, which could allow the network to be more generalizable and reduce the possibility of overfitting. This suggests that features learned above the diaphragm are useful below the diaphragm, and vice versa. However, a simpler explanation for this finding is that cropping may adversely impact performance in lymph nodes near the diaphragm, occluding potentially useful information on the other side of the diaphragm.

In deep learning, it is unknown how many training patient cases are needed for a given task, with numbers varying considerably depending on the task difficulty and training sample heterogeneity. For example, Dou et al used only 10–20 patients for training and validation (23), while Kline et al used 2000 patients (24). We investigated the sensitivity of our model’s performance to the number of training patients and found that performance leveled off when using 40 patients or more. It is uncertain if using a much larger dataset could improve performance, or if the model is ultimately limited by the consistency of the physician labels. In addition, training and testing the model using data from different imaging centers did not appear to have an impact on the results of this study. TPRs were nearly identical for the Denmark and MSSM groups, despite the sites having different scanner models. Even when training with data from one group and testing in the other group, results were mostly unchanged from when the model was trained with both groups.

True-positive findings were located in many different locations throughout the body. Likewise, FP and false-negative findings were located throughout the body. FP findings were often, though not always, located in areas of higher physiologic 18F-FDG uptake. A large number of these FP findings were located in patients with brown adipose tissue uptake of 18F-FDG. Due to the small number of patients with brown adipose tissue uptake, the network was apparently unable to learn to distinguish brown adipose tissue uptake from lymph node uptake, despite having the CT as an input.

A limitation of the study was the interphysician variability in lesion labeling, which was only assessed in a subset of 20 patients. This variability is largely explained by the difficulty of the task, as labeling and segmenting all malignant and possibly benign lymph nodes is outside the typical workflow of clinical evaluations. Furthermore, disease was often widespread, making it easy to miss small lesions, especially when adjacent to large clusters of disease. Ideally, histopathologic validation would be obtained for each lesion, but this is clearly impractical given the extent of disease. Thus, interphysician variability was used as a target to account for uncertainties and inconsistencies in the ground truth labels. Furthermore, this study relied primarily on a single physician’s labels for training. Ideally, a consensus of expert labels would have been used to train the model, but this was not possible given how long it took to label all disease (approximately 50 hours). Last, extranodal disease in the spleen and bone marrow was excluded for this study and must be considered in future work for a comprehensive assessment of lymphoma.

This preliminary study is a first step toward developing a standalone tool for lymphoma detection or toward extracting quantitative imaging features for diagnosis and response assessment. Future work toward clinical implementation includes identifying an acceptable operating point for clinical use, expanding and validating in larger independent datasets, and confirming FP findings.

Acknowledgments

We would also like to thank NVIDIA for their support through their academic GPU seed grant program.

This work was supported by GE Healthcare.

Disclosures of Conflicts of Interest: A.J.W. Activities related to the present article: disclosed money paid to institution for research support by GE Healthcare. Activities not related to the present article: disclosed no relevant relationships. Other relationships: disclosed no relevant relationships. M.W.K. disclosed no relevant relationships. S.B.P. disclosed no relevant relationships. M.H. disclosed no relevant relationships. R.J. Activities related to the present article: disclosed grant money paid to institution by the National Cancer Institute, in part supported by the UW Carbone Cancer Center. Activities not related to the present article: disclosed no relevant relationships. Other relationships: disclosed patent issued by WARF and patent pending with AIQ Solutions. L.K. Activities related to the present article: disclosed no relevant relationships. Activities not related to the present article: disclosed money paid for consultancy by Roche, Genentech. Other relationships: disclosed no relevant relationships. T.J.B. Activities related to the present article: disclosed no relevant relationships. Activities not related to the present article: disclosed no relevant relationships. Other relationships: institution receives research support from GE Healthcare.

Abbreviations:

- CNN = convolutional neural network

- DLBCL = diffuse large B-cell lymphoma

- FDG = fluorodeoxyglucose

- FP = false positive

- FROC = free-response receiver operating characteristic

- MSSM = Mount Sinai Medical Center

- SUV = standardized uptake value

- TPR = true-positive rate

References

- 1. Barrington SF, Mikhaeel NG. When should FDG-PET be used in the modern management of lymphoma? Br J Haematol 2014;164(3):315–328.

- 2. Barrington SF, Meignan M. Time to Prepare for Risk Adaptation in Lymphoma by Standardizing Measurement of Metabolic Tumor Burden. J Nucl Med 2019;60(8):1096–1102.

- 3. Meignan M, Gallamini A, Haioun C. Report on the First International Workshop on Interim-PET-Scan in Lymphoma. Leuk Lymphoma 2009;50(8):1257–1260.

- 4. Ben Bouallègue F, Tabaa YA, Kafrouni M, Cartron G, Vauchot F, Mariano-Goulart D. Association between textural and morphological tumor indices on baseline PET-CT and early metabolic response on interim PET-CT in bulky malignant lymphomas. Med Phys 2017;44(9):4608–4619.

- 5. Ceriani L, Milan L, Martelli M, et al. Metabolic heterogeneity on baseline 18FDG-PET/CT scan is a predictor of outcome in primary mediastinal B-cell lymphoma. Blood 2018;132(2):179–186.

- 6. Milgrom SA, Elhalawani H, Lee J, et al. A PET Radiomics Model to Predict Refractory Mediastinal Hodgkin Lymphoma. Sci Rep 2019;9(1):1322.

- 7. Decazes P, Becker S, Toledano MN, et al. Tumor fragmentation estimated by volume surface ratio of tumors measured on 18F-FDG PET/CT is an independent prognostic factor of diffuse large B-cell lymphoma. Eur J Nucl Med Mol Imaging 2018;45(10):1672–1679 [Published correction appears in Eur J Nucl Med Mol Imaging 2018;45(10):1838–1839.].

- 8. Mettler J, Müller H, Voltin CA, et al. Metabolic Tumour Volume for Response Prediction in Advanced-Stage Hodgkin Lymphoma. J Nucl Med 2018 Jun 7 [Epub ahead of print].

- 9. Cottereau AS, El-Galaly TC, Becker S, et al. Predictive Value of PET Response Combined with Baseline Metabolic Tumor Volume in Peripheral T-Cell Lymphoma Patients. J Nucl Med 2018;59(4):589–595.

- 10. Cottereau AS, Hapdey S, Chartier L, et al. Baseline Total Metabolic Tumor Volume Measured with Fixed or Different Adaptive Thresholding Methods Equally Predicts Outcome in Peripheral T Cell Lymphoma. J Nucl Med 2017;58(2):276–281.

- 11. Kostakoglu L, Chauvie S. PET-Derived Quantitative Metrics for Response and Prognosis in Lymphoma. PET Clin 2019;14(3):317–329.

- 12. Bi L, Kim J, Feng D, Fulham M. Multi-stage Thresholded Region Classification for Whole-Body PET-CT Lymphoma Studies. In: Golland P, Hata N, Barillot C, Hornegger J, Howe R, eds. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2014. MICCAI 2014. Lecture Notes in Computer Science, vol 8673. Cham, Switzerland: Springer, 2014; 569–576.

- 13. Bi L, Kim J, Kumar A, Wen L, Feng D, Fulham M. Automatic detection and classification of regions of FDG uptake in whole-body PET-CT lymphoma studies. Comput Med Imaging Graph 2017;60:3–10.

- 14. Grossiord É, Talbot H, Passat N, Meignan M, Najman L. Automated 3D lymphoma lesion segmentation from PET/CT characteristics. 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), 2017; 174–178.

- 15. Yu Y, Decazes P, Lapuyade-Lahorgue J, Gardin I, Vera P, Ruan S. Semi-automatic lymphoma detection and segmentation using fully conditional random fields. Comput Med Imaging Graph 2018;70:1–7.

- 16. Hu H, Decazes P, Vera P, Li H, Ruan S. Detection and segmentation of lymphomas in 3D PET images via clustering with entropy-based optimization strategy. Int J CARS 2019;14(10):1715–1724.

- 17. Hutchings M, Kostakoglu L, Zaucha JM, et al. In vivo treatment sensitivity testing with positron emission tomography/computed tomography after one cycle of chemotherapy for Hodgkin lymphoma. J Clin Oncol 2014;32(25):2705–2711.

- 18. Mylam KJ, Kostakoglu L, Hutchings M, et al. (18)F-fluorodeoxyglucose-positron emission tomography/computed tomography after one cycle of chemotherapy in patients with diffuse large B-cell lymphoma: results of a Nordic/US intergroup study. Leuk Lymphoma 2015;56(7):2005–2012.

- 19. Kamnitsas K, Ledig C, Newcombe VFJ, et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal 2017;36:61–78.

- 20. Hinton G, Srivastava N, Swersky K. Neural Networks for Machine Learning, Lecture 6a: Overview of mini-batch gradient descent. https://www.cs.toronto.edu/~tijmen/csc321/slides/lecture_slides_lec6.pdf. Accessed April 7, 2020.

- 21. Roth HR, Lu L, Seff A, et al. A new 2.5D representation for lymph node detection using random sets of deep convolutional neural network observations. Med Image Comput Assist Interv 2014;17(Pt 1):520–527.

- 22. Shin HC, Roth HR, Gao M, et al. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans Med Imaging 2016;35(5):1285–1298.

- 23. Dou Q, Yu L, Chen H, et al. 3D deeply supervised network for automated segmentation of volumetric medical images. Med Image Anal 2017;41:40–54.

- 24. Kline TL, Korfiatis P, Edwards ME, et al. Performance of an Artificial Multi-observer Deep Neural Network for Fully Automated Segmentation of Polycystic Kidneys. J Digit Imaging 2017;30(4):442–448.