Abstract

Traumatic brain injury (TBI) is an extremely complex condition due to heterogeneity in injury mechanism, underlying conditions, and secondary injury. Pre-clinical and clinical researchers face challenges with reproducibility that negatively impact translation and therapeutic development for improved TBI patient outcomes. To address this challenge, TBI Pre-clinical Working Groups expanded upon previous efforts and developed common data elements (CDEs) to describe the most frequently used experimental parameters. The working groups created 913 CDEs to describe study metadata, animal characteristics, animal history, injury models, and behavioral tests. Use cases applied a set of commonly used CDEs to address and evaluate the degree of missing data resulting from combining legacy data from different laboratories for two different outcome measures (Morris water maze [MWM]; RotorRod/Rotarod). Data were cleaned and harmonized to Form Structures containing the relevant CDEs and subjected to missing value analysis. For the MWM dataset (358 animals from five studies, 44 CDEs), 50% of the CDEs contained at least one missing value, while for the Rotarod dataset (97 animals from three studies, 48 CDEs), over 60% of CDEs contained at least one missing value. Overall, 35% of values were missing across the MWM dataset, and 33% of values were missing for the Rotarod dataset, demonstrating both the feasibility and the challenge of combining legacy datasets using CDEs. The CDEs and the associated forms created here are available to the broader pre-clinical research community to promote consistent and comprehensive data acquisition, as well as to facilitate data sharing and formation of data repositories. In addition to addressing the challenge of standardization in TBI pre-clinical studies, this effort is intended to bring attention to the discrepancies in assessment and outcome metrics among pre-clinical laboratories and ultimately accelerate translation to clinical research.

Keywords: big data, common data elements, missing value analysis, pre-clinical, reproducibility

Introduction

Development of novel therapeutics to treat diseases and disorders of the central nervous system (CNS) is an extremely challenging research endeavor. The difficulty and complexity of successful therapeutic translation is illustrated by the United States Food and Drug Administration's (FDA) relatively low approval rate for new CNS drugs in recent years (e.g., 8% from 2010–2014).1 Research to develop novel pharmacological interventions for the treatment of traumatic brain injury (TBI) faces similar challenges. Despite decades of pre-clinical TBI research and demonstration of successful treatments in animal models, the field is still plagued by poor clinical translation.2,3 In fact, despite the success of many pre-clinical studies and over 30 clinical trials, no TBI drug treatment has progressed beyond phase III trials to FDA approval.2,4 This lack of translation continues to spark an active international discussion in which TBI researchers are seeking strategies to improve translation from bench to bedside. Many elements of translational science are under active consideration, including improving clinical trial design,2 better defining patient heterogeneity,5 and improving the rigor and reproducibility of pre-clinical studies.6

Indeed, there has been a clarion call from the biomedical research community to raise standards for pre-clinical research by improving rigor, reproducibility, and transparency,7–11 and efforts to do this for TBI research are ongoing.12–15 Specific areas of attention in research design include improving the statistical power of pre-clinical studies, reducing the risk of exaggerated effect sizes and low reproducibility,16 improving internal and external validity in pre-clinical research,17 and using techniques to limit bias.18 Similarly, better reporting and sharing of key experimental variables are needed to increase reproducibility and provide more robust pre-clinical platforms.12,13,19–21 A related concern is that many animal studies have low statistical power,16 and reporting of key methodological variables is inconsistent, with many studies failing to report them.22–25 To this end, several initiatives in pre-clinical research communities, including TBI,12 spinal cord injury (SCI),21 and epilepsy,26–28 have developed common data elements (CDEs).

CDEs facilitate standardization of datasets and database creation, enabling investigators to systematically collect, analyze, report, and share data across the research community. A data element (DE) is a logical unit of data pertaining to a single measure, or to a piece of information that supports a measure, such that each DE is reduced to a single parameter described by multiple attributes (descriptors). Expert consensus on a set of DEs with common data structures for a given research field gives rise to CDEs, which provide reference content standards and can be assembled into a data dictionary. Each CDE is identified by a variable name that links it to the corresponding data in the dataset, in addition to other attributes, such as a title, description, unit of measure, permissible values, type of element (e.g., numeric, alphanumeric), instructions, and references. Data collection Form Structures are then built from CDEs to facilitate systematic assembly of data. See Supplementary Table S1 for a glossary of related terms. CDE standards are intended to be dynamic and can evolve over time, ultimately promoting consistent and universal data collection and reporting.
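To make the notion of a CDE as "a single parameter with multiple attributes" concrete, the sketch below models a CDE as a small Python record holding a few of the attributes named above (variable name, title, description, datatype, unit of measure, permissible values) and checks a raw spreadsheet value against it. This is an illustrative sketch only: the class, the method, and the variable names `AnimalSexType` and `AnesthesiaDuration` are hypothetical and are not the identifiers used in the NLM CDE Repository or FITBIR.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class CommonDataElement:
    """Hypothetical CDE record holding a subset of the 18 attributes."""

    variable_name: str  # links the CDE to a column in the dataset
    title: str
    description: str
    datatype: str  # e.g., "numeric" or "alphanumeric"
    unit_of_measure: Optional[str] = None
    permissible_values: Tuple[str, ...] = ()  # empty tuple means free-form entry

    def is_valid(self, value: str) -> bool:
        """Check one raw spreadsheet value against this CDE definition."""
        if self.permissible_values:
            return value in self.permissible_values
        if self.datatype == "numeric":
            try:
                float(value)
            except ValueError:
                return False
        return True


# Illustrative CDEs loosely based on Table 1; variable names are hypothetical.
ANIMAL_SEX = CommonDataElement(
    variable_name="AnimalSexType",
    title="Animal sex type",
    description="Sex of the animal as determined by observation",
    datatype="alphanumeric",
    permissible_values=("Male", "Female", "Other"),
)
ANESTHESIA_DURATION = CommonDataElement(
    variable_name="AnesthesiaDuration",
    title="Anesthesia duration",
    description="Duration of time the subject was anesthetized",
    datatype="numeric",
    unit_of_measure="minutes",
)

print(ANIMAL_SEX.is_valid("Female"))            # True
print(ANIMAL_SEX.is_valid("F"))                 # False: not a permissible value
print(ANESTHESIA_DURATION.is_valid("45"))       # True
print(ANESTHESIA_DURATION.is_valid("45 min"))   # False: unit belongs in its own attribute
```

Keeping the unit as a separate attribute, rather than inside the value, mirrors the reduction of each DE to a single parameter.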

In this report, we expand upon the previous TBI pre-clinical CDE initiative12 to better define and harmonize experimental variables and data across individual studies and among laboratories. Specifically, we provide updates on the development of pre-clinical TBI CDEs, make new data collection tools available to the pre-clinical TBI research community, and demonstrate proof-of-concept use case studies that examine data missingness across multiple experiments from different laboratories. We posit that using CDEs to define experimental variables more carefully will provide a standard lexicon for researchers and improve the rigor, reproducibility, and ultimately the translational potential of pre-clinical research in TBI.

Methods

CDE development

Initial efforts in the pre-clinical TBI CDE initiative identified 167 CDEs describing animal characteristics, animal history, assessments and outcome measures, and pre-clinical TBI injury models.12 Here, we further develop and expand upon these CDEs, define new CDEs, provide the CDE Data Dictionary and Form Structures online, and demonstrate the use of these tools in a missing value analysis of two different multi-study datasets (Fig. 1). Three working groups of content experts were formed to develop the CDEs and guide their use: 1) General Health and Affective Disturbances; 2) Cognitive and Motor Function; and 3) Large Animal Outcomes. Each group had co-chairs and met bi-monthly for approximately 12 months, with monthly meetings of the chairs and agency facilitators. Some working group members were part of the previous pre-clinical CDE initiative; new members were chosen for this expanded effort to ensure further diversity of expertise. National Institutes of Health (NIH) and Department of Defense representatives facilitated the process and also provided perspective from the clinical CDE effort.29,30

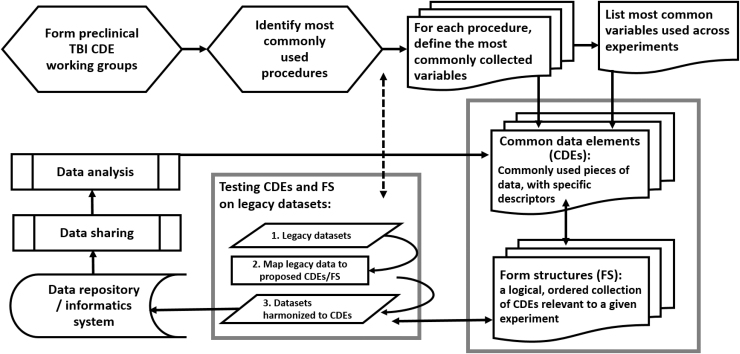

FIG. 1.

Pre-clinical Traumatic Brain Injury (TBI) Common Data Element (CDE) Working Group Workflow Process. Working groups were formed to identify the most commonly used procedures in pre-clinical TBI research. The most commonly collected variables were identified as well as those that were commonly used across experimental procedures. These variables were defined into CDEs and then logical groups of CDEs were made into Form Structures (FS). Once FS were created, reviews of the CDEs prompted revisions, such as clarification of permissible values or consistency with similar CDEs. Legacy data were cleaned and mapped to the CDEs and harmonized within FS. The final FS were validated in and uploaded to a data repository. Data can then be shared and analysis can be performed. Further iterative changes to CDEs are made continually during the process. The demonstration of the platform using legacy data is invaluable to the process and permits improvements to be made based on real-world scenarios.

Working group members focused on identification of data elements that are relevant to pre-clinical TBI studies by drawing from their own expertise and the literature, specifically concentrating on the frequency of use among different research groups and how often a particular outcome measure or variable appeared in published studies. Where appropriate, pre-clinical CDEs were defined in parallel with companion clinical CDEs, to maximize translatability (e.g., Injury Elapsed Time). At least two working group members collaborated on the initial draft and several rounds of group discussion and edits were conducted. The resulting CDEs were available for two rounds of public review (https://fitbir.nih.gov/content/preclinical-common-data-elements) that led to additional revisions in response to suggestions from the research community.

The resulting set of 913 unique CDEs consists of data elements from three general groups: Animal and Study Metadata, Injury Models, and Assessments and Outcomes (Fig. 2). CDEs are described by 18 attributes (variable name, description, datatype, permissible values, etc.). The Animal and Study Metadata CDEs comprise a Main Group of eight CDEs that are intended to be included in every form, Animal Characteristics (six CDEs), Animal History (71 CDEs), and All Tests Common (e.g., test equipment information, room environment variables) (84 CDEs; Table 1). The remaining CDEs are distributed among the Injury Models and the Assessments and Outcomes. For this effort, CDEs were completed for five injury models (205 CDEs) as well as for General Health and Neurological Function (six assessments, 93 CDEs), Affective Disturbance: Depression/Anxiety (10 tests, 293 CDEs)/Social Interaction (five tests, 361 CDEs), Cognition and Motor: Learning/Memory (seven tests, 306 CDEs)/Sensory/Motor (eight tests, 199 CDEs), and Large Animal Outcomes (three tests, 115 CDEs; Fig. 2). Note that some CDEs are repeated (reused) across tests because they are cross-cutting common CDEs; therefore, the sum of CDEs across these groupings exceeds the count of 913 unique CDEs. Collectively, the CDEs make up the pre-clinical CDE data dictionary, which is available through the CDE Repository at the National Library of Medicine within the NINDS collection (NLM: https://cde.nlm.nih.gov/form/search?selectedOrg=NINDS&classification=Preclinical%20TBI; https://fitbir.nih.gov).

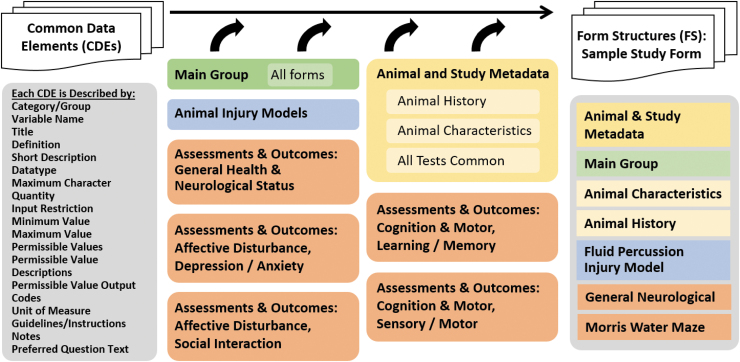

FIG. 2.

Pre-clinical Traumatic Brain Injury (TBI) Common Data Element (CDE) Groups and Form Structure Procedure. The CDEs are described by a set of attributes (left) that describe the data element and inform the user about the input format, permissible values, units of measure, and similar information necessary to ensure consistent data entry. In addition, there are instructions, guidelines, and references as appropriate. CDEs are organized into several groupings, shown in the center. The Main Group is intended to go on every form and is considered essential to every pre-clinical study. The Animal and Study Metadata includes groups on Animal History, Animal Characteristics, and All Tests Common. The Injury Models CDEs are organized by injury model type, but forms may contain other elements, such as Animal History and All Tests Common. Form Structures can then be built from the various groups of CDEs. For example, an FS for a study that uses fluid percussion injury and the Morris water maze would be built from the building blocks in the various forms. Color image is available online.

Table 1.

General Study and Animal Subject Metadata

| # | Title | Short description | Permissible values, value type |
|---|---|---|---|
| Main group | | | |
| 1 | GUID | Global Unique ID, which uniquely identifies a subject | Autogenerated |
| 2 | Subject identifier number | An identification number assigned to the participant/subject within a given protocol or a study | Free-form text, alphanumeric |
| 3 | Study protocol name | Name of study protocol | Free-form text, alphanumeric |
| 4 | Injury date time | Date (and time, if applicable and known) of injury | Free-form text, ISO 8601 |
| 5 | Animal species type | Type of animal species being studied | Mice; rats; ferrets; pigs; primates; drosophila |
| 6 | Animal sex type | Type of animal species sex as determined by observation | Male; female; other* |
| 7 | Animal birth date | Date (and time, if applicable and known) the animal participant/subject was born | Free-form text, ISO 8601 |
| 8 | Animal subject injury group assignment type | Type of injury group assignment for an animal subject | Anesthesia controls; injured; naive; sham injured; other, specify* |
| Animal characteristics (select CDEs) | | | |
| 1 | Animal strain type | A free text describing a type of strain of animal species | Free-form text, alphanumeric |
| 2 | Animal genetic modifications | A free text describing animal genetic modification(s) | Free-form text, alphanumeric |
| 3 | Animal vendor type | Animal vendor type | Archer Farms Inc.; Charles River; Harlan; Jackson Labs; Sinclair Bio Resources; Taconic; Thomas D. Morris Inc.; None; Unknown; Other, specify* |
| Animal history (select CDEs) | | | |
| 1 | Animal weight measurement value | Value of measurement of animal weight. Should be used in combination with Animal weight unit of measure. | Free-form text, numericǂ |
| 2 | Change in body weight measurement | The change in absolute body weight from day of injury to time of measurement | Free-form text, numericǂ |
| 3 | Animal subject housing type | Type of animal subject pre-injury housing including individual or group housing | Multiple; single; split cage housing; unknown; other, specify* |
| 4 | Anesthetic type | Type of anesthetic given to a subject | Bupivacaine; chloral hydrate; diethyl ether; isoflurane; ketamine; ketamine/medetomidine; ketamine/xylazine; lidocaine; none; other, specify* |
| 5 | Anesthesia duration | Duration of time (in minutes) of the subject being anesthetized | Free-form text, numeric |
| 6 | Animal injury number | The number of traumatic event | Free-form text, numeric |
| 7 | Animal injury total number | Number of injury exposures for a given subject | Free-form text, numeric |

CDEs are shown by the variable name, short description, and permissible values, which are three of the 18 attributes. Main Group CDEs are given; these CDEs are intended to be included in every Form Structure. Selected CDEs from the Animal Characteristics and Animal History groups are also shown. Note that some CDEs that may fit in a particular CDE grouping (e.g., Animal Characteristics) are considered part of the required Main group (e.g., Animal Species, Animal Sex). *An Other or Other, specify designation requires a corresponding CDE with “Variable Name Other.” ǂFor CDEs needing a unit of measure, there is a corresponding CDE for “Variable Name Unit of Measure.”

CDEs, Common Data Elements.
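The two footnote rules of Table 1 (an “Other, specify” choice needs a companion “Other” CDE, and a numeric measurement needs a companion “Unit of Measure” CDE) lend themselves to an automated check during data entry or validation. The sketch below is a hypothetical illustration, not the FITBIR validation tool: it assumes companion columns are named by appending `Other` or `UnitOfMeasure` to the parent variable name, and all variable names shown are invented for the example.

```python
from typing import Dict, List, Set

OTHER_CHOICES = {"other", "other, specify"}


def companion_errors(row: Dict[str, str], needs_unit: Set[str]) -> List[str]:
    """List companion-CDE problems in one data row.

    row: maps CDE variable names to raw cell values ("" means an empty cell).
    needs_unit: variable names whose values require a unit-of-measure companion.
    Companion names are assumed to be the variable name plus "Other" or
    "UnitOfMeasure"; the real repository naming may differ.
    """
    errors = []
    for name, value in row.items():
        # An "Other" or "Other, specify" choice must be explained in <name>Other.
        if (value.strip().lower() in OTHER_CHOICES
                and not row.get(name + "Other", "").strip()):
            errors.append(f"{name}: 'Other' selected but {name}Other is empty")
        # A filled measurement must state its unit in <name>UnitOfMeasure.
        if (name in needs_unit and value.strip()
                and not row.get(name + "UnitOfMeasure", "").strip()):
            errors.append(f"{name}: value given but {name}UnitOfMeasure is empty")
    return errors


# Hypothetical row with an unexplained "Other, specify" anesthetic.
row = {
    "AnestheticType": "Other, specify",
    "AnestheticTypeOther": "",
    "AnimalWeightValue": "312",
    "AnimalWeightValueUnitOfMeasure": "g",
}
print(companion_errors(row, needs_unit={"AnimalWeightValue"}))
```

Here the weight passes because its unit is filled in, while the anesthetic entry is flagged because the companion free-text field is empty.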

The Forms (or Form Structures, FS) are analogous to clinical Case Report Forms (CRFs) and are composed of selected CDEs, with a corresponding data dictionary that defines the CDEs. At the Form level, CDEs are designated as Required, Recommended, or Optional to indicate the level of need for data harmonization among datasets. Some FS contain Categories/Groups that organize related subsets of CDEs (e.g., Software and Scoring, Testing Conditions). FS are created using the building blocks from the Main group of CDEs and relevant CDEs from the Animal and Study Metadata groups, Injury Models, and Assessments and Outcomes (Fig. 2). Many CDEs appear in more than one FS to maintain a common lexicon across FS; therefore, the total number of CDEs summed across the 46 FS created is 1664 (Table 2). For example, the Animal Characteristics FS contains CDEs from the Main Group and the Animal Characteristics CDEs, for a total of 13 CDEs. Similarly, each Injury Model and each Assessment and Outcome has an FS with groups of CDEs specific to that model or test, as well as CDEs from the Main Group, Animal Characteristics, Animal History, and All Tests Common group (e.g., Light Dark Cycle Type, Trial Number). For the purposes of community dissemination, the 46 FS created are posted in the CDE Repository for download and use in several different export formats (e.g., REDCap, FHIR, JSON) (https://cde.nlm.nih.gov/form/search?selectedOrg=NINDS&classification=Preclinical%20TBI; https://fitbir.nih.gov). Researchers can export forms as well as create forms from the available CDEs and field codes from the NIH CDE repository (www.youtube.com/watch?v=LowLJh29-4M&t=1s).
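The building-block assembly of Form Structures, and the reason the summed element count (1664) exceeds the number of unique CDEs (913), can be sketched in a few lines. In this hypothetical example every form starts from the same Main Group and a shared “All Tests Common” block, adds its own test-specific CDEs, and assigns each CDE a form-level designation. The block contents, the variable names, and the rule that unlisted CDEs default to Optional are assumptions made for illustration; only the eight-CDE size of the Main Group and its required status come from the text.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical building blocks (variable names are illustrative, not the
# repository's identifiers). The Main Group has eight CDEs, as in Table 1.
MAIN_GROUP = [
    "GUID", "SubjectIdNum", "StudyProtocolName", "InjuryDateTime",
    "AnimalSpeciesType", "AnimalSexType", "AnimalBirthDate",
    "AnimalInjuryGroupAssignmentType",
]
ALL_TESTS_COMMON = ["LightDarkCycleType", "TrialNumber", "RoomTemperature"]


def build_form(name: str, test_specific: Iterable[str],
               required: Iterable[str] = (),
               recommended: Iterable[str] = ()) -> Tuple[str, Dict[str, str]]:
    """Assemble a Form Structure from shared blocks plus test-specific CDEs.

    Main Group CDEs are always Required; any CDE not listed as Required or
    Recommended defaults to Optional (an assumption for this sketch).
    """
    required, recommended = set(required), set(recommended)
    designation = {}
    for cde in MAIN_GROUP + ALL_TESTS_COMMON + list(test_specific):
        if cde in MAIN_GROUP or cde in required:
            designation[cde] = "Required"
        elif cde in recommended:
            designation[cde] = "Recommended"
        else:
            designation[cde] = "Optional"
    return name, designation


forms = [
    build_form("Morris Water Maze", ["MWMTankDiameter", "MWMLatency"],
               required=["MWMLatency"]),
    build_form("Rotarod", ["RotarodLatency", "RotarodRPM"],
               recommended=["RotarodRPM"]),
]

# Elements counted per form versus unique CDEs across forms: shared blocks are
# reused, so the first total exceeds the second (cf. 1664 vs. 913 in the text).
total_elements = sum(len(d) for _, d in forms)
unique_cdes = len({cde for _, d in forms for cde in d})
print(total_elements, unique_cdes)  # 26 15
```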

Table 2.

Form Structures

| # | Form Structure | General description | No. of CDEs |
|---|---|---|---|
| General study and animal metadata | | | |
| 1 | Main group | Common CDEs for all forms | 8 |
| 2 | Animal characteristics | Inherent animal features | 13 |
| 3 | Animal history | Experimental measurements and conditions, pertaining to the animal | 79 |
| Animal injury models | | | |
| 1 | Fluid percussion injury model | Fluid impact to dura mater, diffuse | 32 |
| 2 | Controlled cortical impact injury model | Piston impact to dura mater, focal | 29 |
| 3 | Penetrating ballistic-like brain injury model | Penetrating injury, focal to path | 21 |
| 4 | Advanced blast simulator (ABS) model | Shock tube, mimic blast overpressure | 57 |
| 5 | Blast-induced injury model | Blast overpressure | 66 |
| Animal assessments and outcomes: general health | | | |
| 1 | Body conditioning score | General impairment, health status | 11 |
| 2 | Change in body weight | General impairment, health status, injury severity | 4 |
| 3 | Morbidity/mortality | Injury severity, health status | 8 |
| Animal assessments and outcomes: neurological status | | | |
| 1 | Neurological deficit score | Neurological function | 21 |
| 2 | Neurological Severity Score (small animals) | Neurological function | 31 |
| 3 | Righting reflex | Vestibular motor, assessment of consciousness | 18 |
| Affective disturbance: depression/anxiety | | | |
| 1 | Elevated plus maze | Anxiety | 35 |
| 2 | Elevated zero maze | Anxiety | 35 |
| 3 | Forced swim test | Depression | 18 |
| 4 | Learned helplessness | Depression, helplessness, despair-like, affect | 22 |
| 5 | Marble burying | Naturalistic, anxiety, repetitive-like behavior, obsessive compulsive behavior | 34 |
| 6 | Open field test | Anxiety, depression, motor, activity level | 71 |
| 7 | Predatory odor test | Anxiety | 13 |
| 8 | Sucrose preference test | Depression, despair-like, affect, anhedonia | 13 |
| 9 | Startle response | Anxiety/PTSD | 19 |
| 10 | Tail suspension | Depression, helplessness, despair-like, affect | 33 |
| Affective disturbance: social interaction | | | |
| 1 | Partition Test | Aggression, impulsivity, dominance, impaired social interaction | 76 |
| 2 | Social Interaction Resident Intruder Test | Aggression, impulsivity, dominance, impaired social interaction | 95 |
| 3 | Three-Chamber Test | Aggression, impulsivity, dominance, impaired social interaction | 79 |
| 4 | Tube Dominance Test | Aggression, impulsivity, dominance, impaired social interaction | 32 |
| 5 | Urine Open Field Test | Aggression, dominance | 79 |
| Cognition and motor: learning/memory | | | |
| 1 | Alternating (Attentional) Set Shift | Frontal lobe function, attentional function | 24 |
| 2 | Barnes maze | Spatial learning and memory, working memory | 71 |
| 3 | Conditioned Place Preference | Contextual learning, addiction | 55 |
| 4 | Contextual Fear Conditioning | Associative learning (cue and context) | 39 |
| 5 | Morris Water Maze | Spatial learning and memory, working memory | 46 |
| 6 | Novel Object Recognition | Recognition memory, working memory | 22 |
| 7 | Radial Arm Maze | Spatial learning and memory, working memory | 49 |
| Cognition and motor: sensory/motor | | | |
| 1 | Angle Board/Inclined Plane | Motor function, balance | 11 |
| 2 | Beam Walk | Gross motor function | 29 |
| 3 | Cylinder Test | Motor skills | 18 |
| 4 | Grip Strength | Sensorimotor | 9 |
| 5 | Hole Poke Test | Implicit learning, procedural learning, spatial learning, perseverance, motor function | 53 |
| 6 | Rotor Rod / Rotarod | Motor coordination, balance, motor skill learning | 48 |
| 7 | Sticky Paper Test | Sensorimotor, proprioception | 22 |
| 8 | String Test | Motor skills, balance | 9 |
| Large animal assessments | | | |
| 1 | Neurological Severity Scale (large animals) | Neurological status and function | 26 |
| 2 | Human Approach Test | Motivation, social interaction | 71 |
| 3 | Neurocognitive Test | Memory, cognitive processing | 18 |

The 46 Form Structures that were developed are shown here. The form category is given along with the form name, the general description, and number of CDEs. Note that the total number of elements among all the forms (1664) is more than the number of unique CDEs (913) because of CDEs used across forms.

CDEs, Common Data Elements; PTSD, post-traumatic stress disorder.

Use case dataset: Selection and characteristics

To demonstrate the utility of the CDEs, we gathered datasets from several laboratories of working group members and colleagues and piloted the workflow process, from data cleaning, harmonization, and submission to export for analysis. The analysis comprised two proof-of-concept use case studies: 1) rat studies from multiple labs using different injury models (fluid percussion injury, FPI; controlled cortical impact, CCI; and penetrating ballistic-like brain injury, PBBI) and the same primary outcome measure (Morris water maze, MWM); and 2) a smaller dataset that used the same injury model (FPI) and the same primary outcome measure (RotorRod or Rotarod). The selection criteria for the datasets were: 1) the study was already published or derived from published studies; 2) data were available in electronic format; 3) rodent injury models covered in the CDEs were used; and 4) either MWM or Rotarod was a primary outcome measure. There were six different labs and seven different datasets, as follows: 1) MWM: five datasets, all rat studies, three injury models (CCI, FPI, PBBI), a total of 358 subjects, 44 total CDEs for each dataset, 1851 total cases (animals × behavior trials/animal), and 85,146 total values (cases × number of CDEs); and 2) Rotarod: three datasets for Rotarod/Rotor Rod, two in mice and one in rats, all using the FPI injury model, a total of 97 subjects, 48 total CDEs, 453 total cases (animals × behavior trials/animal), and 21,744 total values (cases × number of CDEs) (see Supplementary Table S2 for a summary of lab information). We refer to these datasets as legacy data.
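The dataset sizes above follow from two multiplications: a case is one animal at one behavior trial, so the number of cases is the sum of trials over animals, and the number of values is the number of cases times the number of CDE columns in the Form Structure. The minimal sketch below spells this out; the function name and the toy trial counts are hypothetical.

```python
from typing import List, Tuple


def dataset_size(trials_per_animal: List[int], n_cdes: int) -> Tuple[int, int]:
    """Return (cases, values) for a long-format dataset.

    cases:  one row per animal per behavior trial, summed over animals
    values: cases multiplied by the number of CDE columns in the Form Structure
    """
    cases = sum(trials_per_animal)
    return cases, cases * n_cdes


# Hypothetical toy example: three animals tested on 5, 5, and 4 trials
# with a 48-CDE Form Structure.
print(dataset_size([5, 5, 4], n_cdes=48))  # (14, 672)
```

Applied to the Rotarod totals reported above, 453 cases × 48 CDEs gives the 21,744 values listed.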

Data cleaning and harmonization

Prior to uploading, legacy data underwent cleaning and harmonization according to CDE definitions. Cleaning included procedures such as simple search-and-replace operations (e.g., “rat” to “rats”), spell checking, and removal of extra spaces. Dates were converted to ISO 8601 format, and injury elapsed time data were converted from hours to minutes to match the CDE definition. In addition, some reasonable extrapolations were made, mostly for behavioral test dates. For example, because the behavioral tests were all performed at a specified number of days post-injury, as defined in the study design, missing behavioral test dates were extrapolated from the injury date (when known) and the study design. Files were manually checked for values that appeared incorrect (e.g., out of normal range); values confirmed to be wrong, or whose validity could not be determined, were removed from the submission. Legacy data were entered into the appropriate spreadsheet (i.e., FS, comma-separated value [csv] format) and harmonized to the corresponding CDEs. Harmonization entailed mapping (or matching) the collected data categories to the appropriate CDEs and entering the values into the corresponding spreadsheet column. Some data were further separated into basic units to facilitate mapping to the simplest CDE level (e.g., 50 mg/kg intraperitoneally is a dose quantity, a dose unit, and a dose route).
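The cleaning and harmonization steps described above are mostly small, mechanical transformations. The sketch below shows one plausible way to script them: normalizing a species label, converting a legacy date to ISO 8601, converting hours to minutes, extrapolating a missing test date from the injury date and the days post-injury in the study design, and splitting a dose string into quantity, unit, and route. The function names, the species map, and the assumption that legacy dates are written month/day/year are all hypothetical; the cleaning in this study was described only at the level of the text above, partly manual.

```python
import re
from datetime import date, datetime, timedelta

# Hypothetical normalization map in the spirit of the "rat" to "rats" example.
SPECIES_MAP = {"rat": "rats", "mouse": "mice"}
DOSE_PATTERN = re.compile(r"^\s*([\d.]+)\s*(\S+)\s+(.+?)\s*$")


def normalize_species(raw: str) -> str:
    """Trim, collapse extra spaces, lowercase, and map to the permissible value."""
    text = " ".join(raw.split()).lower()
    return SPECIES_MAP.get(text, text)


def to_iso8601(raw: str, source_format: str = "%m/%d/%Y") -> str:
    """Convert a legacy date string (assumed month/day/year) to ISO 8601."""
    return datetime.strptime(raw.strip(), source_format).date().isoformat()


def hours_to_minutes(hours: str) -> float:
    """Recalculate an injury elapsed time recorded in hours as minutes."""
    return float(hours) * 60


def extrapolate_test_date(injury_date_iso: str, days_post_injury: int) -> str:
    """Infer a missing behavioral test date from the injury date and study design."""
    injury = date.fromisoformat(injury_date_iso)
    return (injury + timedelta(days=days_post_injury)).isoformat()


def split_dose(raw: str):
    """Split e.g. '50 mg/kg intraperitoneally' into (quantity, unit, route)."""
    match = DOSE_PATTERN.match(raw)
    if match is None:
        raise ValueError(f"unrecognized dose string: {raw!r}")
    quantity, unit, route = match.groups()
    return float(quantity), unit, route


print(normalize_species("  Rat "))               # rats
print(to_iso8601("03/15/2016"))                  # 2016-03-15
print(hours_to_minutes("24"))                    # 1440.0
print(extrapolate_test_date("2016-03-15", 14))   # 2016-03-29
print(split_dose("50 mg/kg intraperitoneally"))  # (50.0, 'mg/kg', 'intraperitoneally')
```

Raising an error on an unparseable dose string, rather than guessing, matches the practice of removing values whose validity could not be confirmed.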

Form generation and data submission

The finalized sets of CDEs for MWM and Rotarod were gathered into the logical FS. The FS for MWM contained 44 CDEs, of which 20 were created specifically to capture key parameters of the MWM test and 24 were taken from the set of variables commonly used across multiple studies (Supplementary Table S3). The CDEs include, among others, injury group (e.g., injured, sham, naïve), time elapsed since injury, species, sex, injury date, tank diameter, water depth, water and room temperature, platform height, duration of each trial, swim speed, latency, and percent time in target quadrant. Overall, for MWM, six CDEs were associated with “data collected” (i.e., dependent variables), and the remaining 38 CDEs were independent variables related to animal and experimental descriptors. The number of rows assigned to each subject equaled the number of days the animal underwent water maze assessment, so that the latency to find the platform on each day of testing was entered in a single column for the corresponding CDE. For example, if there were 4 days of MWM acquisition testing followed by a probe trial on Day 5, there were five rows for each subject. Similarly, the Rotarod FS includes 48 CDEs, of which 15 were created specifically to capture key parameters of the Rotarod test and 33 were taken from the set of variables commonly used across multiple pre-clinical TBI experiments (Supplementary Table S4). The Rotarod FS contained three CDEs in the “data collected” category and 45 CDEs associated with animal and experimental independent variables.
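The row layout described above (one row per subject per testing day, with a single latency column) is a long-format table. The sketch below builds such rows for one hypothetical subject with four acquisition days and a probe trial on Day 5, repeating the time-invariant values on every row, and writes them to csv with Python's standard `csv.DictWriter`. The subject identifier and all column names are hypothetical stand-ins for the actual CDE variable names.

```python
import csv
import io
from typing import Dict, List


def to_long_rows(subject: Dict[str, str], daily_results: List[dict]) -> List[dict]:
    """Return one row per test day; time-invariant values repeat on every row."""
    return [{**subject, "TestDay": day, **result}
            for day, result in enumerate(daily_results, start=1)]


# Hypothetical subject and column names; 4 acquisition days, probe trial on Day 5.
subject = {"GUID": "TBI_HYPOTHETICAL01", "AnimalSexType": "Male",
           "InjuryGroup": "Injured"}
acquisition = [{"LatencySec": s} for s in (55.0, 41.2, 30.5, 22.8)]
probe = [{"TargetQuadrantPct": 37.5}]
rows = to_long_rows(subject, acquisition + probe)

# Column order = first appearance; cells a row does not use are left empty.
fieldnames = list(dict.fromkeys(key for row in rows for key in row))
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="")
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```

This yields five rows for the subject. In this particular layout, acquisition rows leave the probe-only column empty and the probe row leaves the latency column empty, so some empty cells arise from the table structure rather than from unrecorded data.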

Each animal subject was assigned a Globally Unique Identifier (GUID), provided by Federal Interagency Traumatic Brain Injury Research (FITBIR) Operations, that was entered into the FS in addition to the subject ID that was given by the investigator. Once the data were mapped into the FS and cleaned, the FS was validated and submitted to the FITBIR demo site (a temporary workspace for the working group and pilot study) using the validation and submission tools on the FITBIR website.

Statistical analysis

We determined how well the CDEs aligned with each uploaded dataset by performing a descriptive missing value analysis (MVA module; SPSS v.25, IBM), which characterizes the extent to which data were complete and the nature of the “missingness.”21,31 Each cell of the uploaded spreadsheet was categorized as “complete” if it contained a value and as a “missing value” if it did not. This classification is the foundation of the statistical method known as “missing values analysis,” in which the pattern of missingness is characterized so that mitigation strategies can be devised to recover missing data or to impute (estimate) missing values within a particular confidence interval.31 Each dataset (MWM, Rotarod) represents a number of studies within and across labs. As described above, the data were structured so that repeated measures were on separate rows of the dataset; thus, each animal had multiple rows. Each of these rows is referred to in this missing values analysis as a “case.” To accurately assess the extent of completion, all identifying/demographic values that do not vary across time (e.g., sex) were repeated on every row for each animal. The level of completion was assessed in three dimensions: the number of variables with complete data across all cases, the number of cases with complete data across all variables, and the total number of values that were complete (variables × cases). Finally, the number of variables in each dataset with no data across all cases was reported.
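The three completion dimensions, the count of entirely empty variables, and the number of distinct missingness patterns can be reproduced descriptively with a short script. The sketch below is an illustrative stand-in, not the SPSS MVA module used in the study: it treats a cell as missing when it is absent, `None`, or blank, and defines a pattern as the set of present/missing flags across all variables for one case, which may differ from how SPSS tabulates patterns. The variable names and toy rows are hypothetical.

```python
from typing import Dict, List


def missingness_summary(rows: List[Dict], variables: List[str]) -> Dict:
    """Summarize completeness of a long-format dataset.

    rows: one dict per case; a cell is missing if absent, None, or blank.
    variables: the CDE columns of the Form Structure.
    """
    def present(row: Dict, var: str) -> bool:
        value = row.get(var)
        return value is not None and str(value).strip() != ""

    n_cases, n_vars = len(rows), len(variables)
    complete_vars = [v for v in variables if all(present(r, v) for r in rows)]
    empty_vars = [v for v in variables if not any(present(r, v) for r in rows)]
    complete_cases = sum(all(present(r, v) for v in variables) for r in rows)
    missing_values = sum(not present(r, v) for r in rows for v in variables)
    # A pattern is the tuple of present/missing flags for one case.
    patterns = {tuple(present(r, v) for v in variables) for r in rows}
    return {
        "pct_variables_complete": 100 * len(complete_vars) / n_vars,
        "pct_cases_complete": 100 * complete_cases / n_cases,
        "pct_values_missing": 100 * missing_values / (n_cases * n_vars),
        "empty_variables": empty_vars,
        "n_patterns": len(patterns),
    }


# Hypothetical toy dataset: three cases, four CDE columns.
variables = ["Sex", "BirthDate", "Latency", "RoomLux"]
rows = [
    {"Sex": "Male", "Latency": 50.1},
    {"Sex": "Male", "Latency": 40.0},
    {"Sex": "Female", "BirthDate": "2016-01-04", "Latency": ""},
]
print(missingness_summary(rows, variables))
```

On this toy dataset, 25% of variables are complete, no case is complete, half of all values are missing, `RoomLux` is entirely empty, and there are two distinct patterns.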

Results

Missing data analysis: Morris water maze

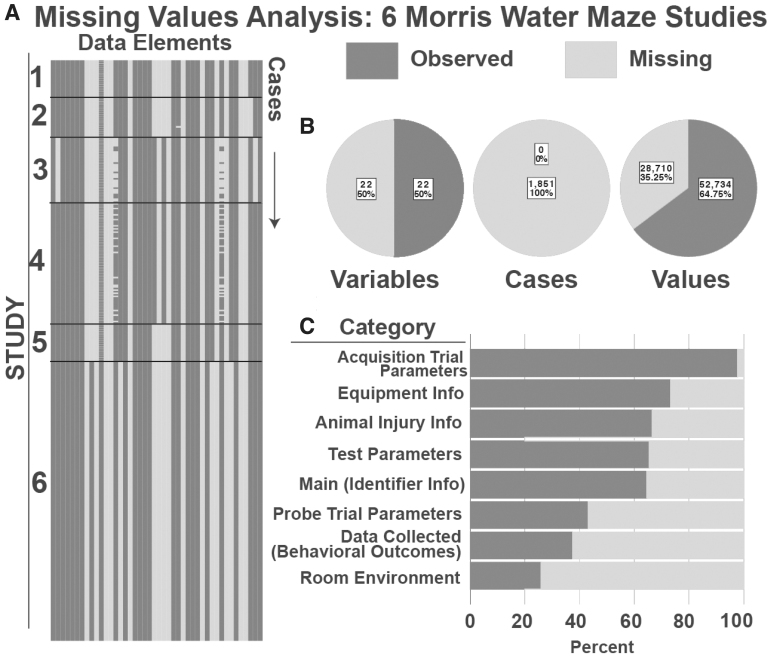

Results of the missingness analysis across the full set of data elements for MWM data pooled across the five contributing laboratories are shown in Figure 3. Analysis across data elements revealed that 50% of the MWM data elements were complete, indicating that half of the data elements (spreadsheet columns) contained at least one missing value. Analysis of cases (individual animals at individual time-points; spreadsheet rows) revealed that 100% of the animals had at least one missing data element. Yet, when considering the total number of values (data elements × cases), only a minority of values were missing (35.25%). Examination of the overall matrix of data elements × cases revealed that several variables were not collected at all in these legacy studies. Of the 20 MWM-specific CDEs, five contained no data in any of the MWM studies, indicating that 25% of the CDEs defined specifically for MWM were not used at all; these included three intended for data collection and two describing MWM test parameters or equipment specifics. Of the 24 cross-cutting CDEs, eight were blank across all MWM studies (38%), including two from the Main group (animal birth date and injury date time), two from equipment information (test computer and apparatus model), as well as elements for room illumination level, time point before injury, and alternative (other) elements. In addition, 11 distinct patterns of missingness could be identified in the MWM dataset. This suggests that many of the workgroup-defined MWM data elements are not in common use by the TBI labs participating in the current pilot study. However, a number of elements were collected universally across labs, suggesting the feasibility of implementing CDEs for MWM in legacy datasets. The percentage of missing values for each CDE in the MWM missing value analysis is given in Supplementary Table S3.

FIG. 3.

Missing Values Analysis for Morris Water Maze (MWM) Studies. (A) Visualization of missingness from five MWM datasets. Each column represents a data element, each row represents an animal at a particular experimental time-point (defined as a “case”). Thus, each individual animal is represented by multiple rows. (B) Summary of Missingness. Breakdown of missing and complete data by variable, case, and value; 47.5% of variables were filled completely by all cases; 0% of cases were complete, meaning that in each case, at least one variable had a missing value. When assessing all values across variables and cases in total, 38.2% were missing and 61.8% were complete. (C) Percent of missingness for each CDE category in the MWM form structure.

Missing data analysis: Rotarod

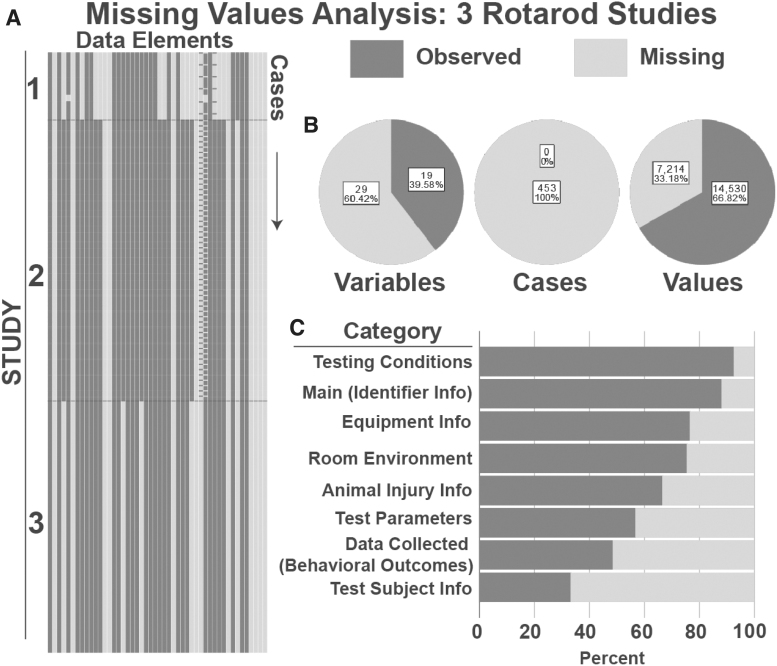

Results of the missingness analysis across the full set of data elements for Rotarod data pooled across the two contributing laboratories are shown in Figure 4. Analysis across data elements revealed that 39.6% of the Rotarod data elements were complete, indicating that a majority (60.4%) of data elements (spreadsheet columns) contained at least one missing value. Analysis of cases (individual animals at individual time-points; spreadsheet rows) revealed that 100% of the animals had at least one missing data element. Analysis of the total number of values (data elements × cases) revealed that 33.18% were missing across the three studies. Examination of the overall matrix (data elements × cases) revealed six distinct patterns of missingness and showed that several variables were not collected. From the CDE use perspective, of the 15 Rotarod-specific CDEs, only three were blank in all Rotarod studies, indicating that 20% of these CDEs were not used in legacy studies. Of the 33 CDEs commonly used across multiple pre-clinical tests, eight were blank across all Rotarod studies (24% of these cross-cutting CDEs). Of the 24 elements that contained at least one missing value, three were CDEs in the “data collected” category, namely the Rotarod RPM value (100% missing), the Rotarod mean distance value (53.6% missing), and the Rotarod latency time (2% missing); the other 21 were variables associated with groups such as animal information (e.g., animal birth date) and experimental variables (e.g., trial duration value). The CDEs with 100% missing values included three Rotarod-specific variables, five animal information variables, two test parameter variables, two equipment information variables, and one room environment variable.
Overall, this analysis suggests that most of the workgroup-defined Rotarod data elements were in common use in this pilot study, although additional legacy datasets should be queried to establish consensus. The percentage of missing values for each CDE in the Rotarod analysis is given in Supplementary Table S4.
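The three levels of missingness reported for Figures 3 and 4 (data elements, cases, and total values), together with the count of distinct missingness patterns and the list of never-collected elements, can all be read off the cases × data-elements matrix. The sketch below is a minimal, dependency-free illustration assuming missing entries are encoded as `None`; it is not the software used for this analysis, and the column names in the usage lines are hypothetical placeholders rather than actual CDE variable names.

```python
from collections import Counter
from typing import Optional, Sequence


def missingness_summary(
    columns: Sequence[str], rows: Sequence[Sequence[Optional[object]]]
) -> dict:
    """Summarize missingness in a cases x data-elements matrix.

    Each row is one case (an animal at one time-point), each column one data
    element; None marks a missing value.
    """
    n_cases, n_vars = len(rows), len(columns)
    is_missing = [[value is None for value in row] for row in rows]

    # Variable level: columns with no missing value in any case
    complete_vars = sum(
        1 for j in range(n_vars) if not any(row[j] for row in is_missing)
    )
    # Case level: rows with no missing value in any column
    complete_cases = sum(1 for row in is_missing if not any(row))
    # Value level: missing cells over all cells (cases x data elements)
    missing_values = sum(sum(row) for row in is_missing)
    # Distinct missingness patterns (unique True/False row signatures)
    patterns = Counter(tuple(row) for row in is_missing)
    # Data elements left blank by every case (never collected)
    never_collected = [
        name for j, name in enumerate(columns) if all(row[j] for row in is_missing)
    ]

    return {
        "pct_vars_complete": 100 * complete_vars / n_vars,
        "pct_cases_complete": 100 * complete_cases / n_cases,
        "pct_values_missing": 100 * missing_values / (n_cases * n_vars),
        "n_patterns": len(patterns),
        "never_collected": never_collected,
    }


# Hypothetical columns and three hypothetical cases
cols = ["latency_s", "mean_distance_m", "rpm", "trial_duration_s"]
cases = [[12.5, None, None, 300], [30.1, 4.2, None, 300], [None, 3.9, None, 300]]
print(missingness_summary(cols, cases))
# pct_vars_complete 25.0, pct_cases_complete 0.0, pct_values_missing ~41.7,
# n_patterns 3, never_collected ['rpm']
```

Because every case here lacks `rpm`, no case can be complete, which mirrors how a single never-collected element drives case-level completeness to 0% in both figures.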

FIG. 4.

Missing Values Analysis for Rotarod Studies. (A) Visualization of missingness from 2 Rotarod studies. Each column represents a data element, each row represents an animal at a particular experimental time-point (defined as a “case”). Thus, each individual animal is represented by multiple rows. (B) Summary of Missingness. Breakdown of missing and complete data by variable, case, and value; 38.8% of variables were filled completely by all cases; 0% of cases were complete, meaning that in each case, at least one variable had a missing value. When assessing all values across variables and cases in total, 41.9% were complete. (C) Percent of missingness for each CDE category in the Rotarod form structure.

Discussion

We present the development of CDEs for TBI pre-clinical research and demonstrate the feasibility and utility of managing and sharing data using the CDE platform. Working groups met regularly and focused on refining previously defined data elements, incorporating additional core CDEs, and creating new CDEs for commonly used behavioral outcome measures. In selecting and defining the CDEs, the working groups considered the level of experimental detail that is commonly reported across studies, the variables necessary for improved reporting rigor, and details that may enhance inter-investigator data harmonization moving forward. The result was 913 CDEs and 46 forms, all of which can be used by the TBI research community to analyze legacy data and to design prospective studies.

To demonstrate the utility of the CDEs, we collected seven pre-clinical datasets for two behavioral outcome measurements from six different laboratories and conducted a proof-of-concept exercise to map legacy data to the CDEs, upload the Form Structures to a data repository, and analyze the data for missing values among the datasets. In both datasets, at least half of the CDEs had at least one missing value and 100% of the animal subjects had at least one missing CDE, which is not surprising given the number of CDEs in each FS (44 for MWM and 48 for Rotarod) and the multiple trials per animal. When all values across a dataset are considered (number of subjects × number of CDEs × number of trials), only 35% of the MWM FS values and 33% of the Rotarod FS values were missing, indicating that investigators share a substantial common set of CDEs but that a number of elements identified by the working groups were not used or reported in the legacy datasets.
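Mapping legacy data to a Form Structure amounts to renaming each laboratory's columns to the corresponding CDE variable names and padding every form element the laboratory did not record; those padded elements are what appear as missing in Figures 3 and 4. The following is a minimal sketch assuming a hand-curated lookup table; apart from `AnimalHousingTyp`, which is named in the text, all CDE names, legacy headers, and values are hypothetical placeholders.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical lookup from one laboratory's legacy spreadsheet headers to CDE
# variable names. AnimalHousingTyp is named in the text; the other CDE names
# are placeholders for illustration only.
LEGACY_TO_CDE = {
    "housing": "AnimalHousingTyp",
    "latency (s)": "LatencyTimeVal",
    "dist (m)": "MeanDistanceVal",
}


def harmonize(
    record: Dict[str, object], form_structure: List[str], mapping: Dict[str, str]
) -> Tuple[Dict[str, Optional[object]], List[str]]:
    """Map one legacy record onto a form structure.

    Returns (harmonized, unmapped): harmonized holds every CDE in the form
    structure, with None wherever the legacy record supplied no value; unmapped
    lists legacy columns with no CDE target, which need manual review.
    """
    harmonized: Dict[str, Optional[object]] = {cde: None for cde in form_structure}
    unmapped: List[str] = []
    for column, value in record.items():
        cde = mapping.get(column)
        if cde is not None and cde in harmonized:
            harmonized[cde] = value
        else:
            unmapped.append(column)
    return harmonized, unmapped


form = ["AnimalHousingTyp", "LatencyTimeVal", "MeanDistanceVal", "TrialDurationVal"]
legacy_row = {"housing": "Group", "latency (s)": 42.0, "notes": "rerun"}
cde_row, needs_review = harmonize(legacy_row, form, LEGACY_TO_CDE)
# MeanDistanceVal and TrialDurationVal stay None; needs_review == ["notes"]
```

The `unmapped` list is the part that remains manual: someone still has to decide whether a leftover column belongs to an existing CDE, a new one, or nothing.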

It is not surprising that any given laboratory does not include all the data elements recommended by a group of peer experts. Working group discussions focused on the ideal set of variables for TBI studies, with an emphasis on common behavioral tests. Development of a comprehensive data dictionary ensures that a common language is proposed to the research community. As the ontology evolves, refinement of the CDEs will ensure that unnecessary or arbitrary data elements are removed and pertinent elements are added. It should be noted that several variables restrict responses to a set of pre-defined values (e.g., animal injury models, housing conditions) to standardize terms and ease searches. In these cases, it is typical to include a companion “other” element with a free-form text box to allow nonstandard data entry (e.g., AnimalHousingTyp and AnimalHousingTypOTH). The MWM FS contained two of these (both with 100% missing values) and the Rotarod FS contained five “other” CDEs, three of which had 100% missing values. Because these companion elements are only expected to be filled when a nonstandard response is chosen, they slightly inflate the apparent proportion of missing elements.
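One way to quantify that inflation is to count a blank companion “other” element as not applicable, rather than missing, unless its parent element actually holds the “other” choice. The sketch below is a hypothetical illustration, not the procedure used in this study: it assumes the parent's trigger value is the literal string `"Other"`, and `LatencyTimeVal` is a placeholder name.

```python
from typing import Dict, Optional, Tuple


def missing_counts(
    record: Dict[str, Optional[object]], other_parent: Dict[str, str]
) -> Tuple[int, int]:
    """Return (raw, adjusted) counts of missing values in one harmonized record.

    other_parent maps each companion free-text "other" element to its parent
    element. A blank companion counts as missing only when the parent holds
    "Other" (an assumed trigger value); otherwise it is not applicable.
    """
    raw = sum(1 for value in record.values() if value is None)
    adjusted = 0
    for name, value in record.items():
        if value is not None:
            continue
        parent = other_parent.get(name)
        if parent is not None and record.get(parent) != "Other":
            continue  # companion left blank because a standard value was chosen
        adjusted += 1
    return raw, adjusted


pairs = {"AnimalHousingTypOTH": "AnimalHousingTyp"}
row = {"AnimalHousingTyp": "Group", "AnimalHousingTypOTH": None, "LatencyTimeVal": None}
print(missing_counts(row, pairs))  # (2, 1)
```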

With respect to CDEs that were not used consistently across labs in this analysis, it is possible that they were not anticipated to be relevant in the original studies. Most of the CDEs in the FS were associated with independent variables, especially those describing test parameters, test conditions, and equipment. It is worth noting that the Rotarod datasets came mostly from the same laboratory (two of three studies, accounting for 88% of the cases), and while the percentage of missing CDEs was lower than in the MWM dataset, likely due to laboratory-specific protocols, the percentage of missing values was similar. The patterns of missingness enabled us to examine consistency among datasets as well as the degree of congruency between working group emphasis and actual use. These observations may guide further work to understand the sources of missing data (e.g., protocol differences, differences in data retention standards across studies).

Missing value analyses can define and highlight numerous systematic patterns of missingness. With larger datasets, it may be possible to use advanced analytical tools such as machine learning to identify missing data patterns and discover the sources of missingness, improving coherence across labs. There are many tools for dealing with missing values among datasets.31–33 For example, Little's test31 allows automated screening of whether data are “missing completely at random” (MCAR), that is, whether missingness is unrelated to the variables in the dataset. If missingness can instead be explained by other observed key variables, data are “missing at random” (MAR); if it depends on the unobserved values themselves, data are “missing not at random” (MNAR, also written NMAR). Systematic sources of missingness identified in this way can then become points for quality improvement in data collection protocols. When data are MAR, it may be possible to use imputation methods to estimate missing values and fill them in within a specified margin of uncertainty as a preprocessing step before further statistical analysis. If missing data patterns are completely uncorrelated with any other variable within a dataset (MCAR), a wide range of statistical approaches can help harmonize data using various data-synthesis methods (e.g., multiple imputation, expectation maximization). Large datasets (e.g., 100 studies with 10,000 subjects) are required to begin these analyses. While we chose to use a missing value analysis to demonstrate use of the pre-clinical CDEs on combined datasets, there are several other possible analyses that could take advantage of these tools (see CDE Use Cases box).
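The practical difference between MCAR and MAR can be seen in a small simulation. This is an illustrative sketch, not Little's test itself: it generates an outcome `y` that depends on a fully observed covariate `x` (true mean of `y` is 0), deletes `y` either completely at random or with a probability that depends on `x`, and compares complete-case means with a simple regression imputation. The sample size, missingness rates, and effect sizes are arbitrary assumptions.

```python
import random
from statistics import fmean


def simulate(n: int = 50_000, seed: int = 1) -> dict:
    """Compare MCAR and MAR missingness on a simulated outcome with true mean 0."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]   # fully observed covariate
    y = [xi + rng.gauss(0, 0.5) for xi in x]  # outcome that depends on x

    # MCAR: each y value is dropped with probability 0.3, independent of everything
    mcar_observed = [rng.random() >= 0.3 for _ in range(n)]
    # MAR: y is dropped with probability 0.6 only when the observed x is positive
    mar_observed = [not (xi > 0 and rng.random() < 0.6) for xi in x]

    def complete_case_mean(observed):
        # Mean of y using only the cases where y was recorded
        return fmean(yi for yi, seen in zip(y, observed) if seen)

    def regression_imputed_mean(observed):
        # Fit y = a + b*x by least squares on complete cases,
        # then replace each missing y with its predicted value
        xs = [xi for xi, seen in zip(x, observed) if seen]
        ys = [yi for yi, seen in zip(y, observed) if seen]
        mx, my = fmean(xs), fmean(ys)
        b = sum((xi - mx) * (yi - my) for xi, yi in zip(xs, ys)) / sum(
            (xi - mx) ** 2 for xi in xs
        )
        a = my - b * mx
        return fmean(
            yi if seen else a + b * xi for xi, yi, seen in zip(x, y, observed)
        )

    return {
        "mcar_complete_case": complete_case_mean(mcar_observed),
        "mar_complete_case": complete_case_mean(mar_observed),
        "mar_imputed": regression_imputed_mean(mar_observed),
    }


print(simulate())
# roughly: mcar_complete_case near 0, mar_complete_case near -0.34, mar_imputed near 0
```

Under MCAR, simply dropping incomplete cases leaves the mean unbiased; under MAR it does not, but modeling the missing values from the observed covariate recovers it. Single regression imputation still understates variability, which is why multiple imputation adds random residual draws and repeats the fill-in across several datasets.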

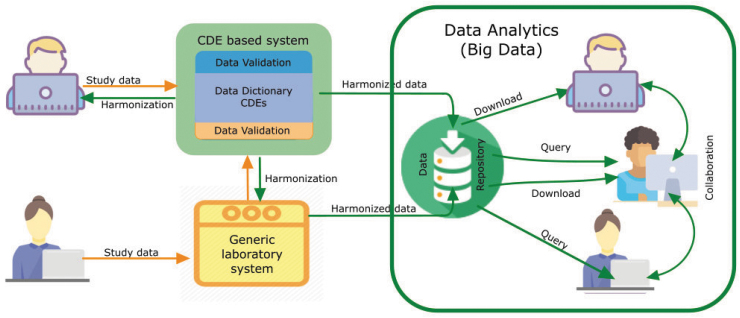

While we used the FITBIR platform for this feasibility study, there are no plans to open a pre-clinical FITBIR data repository; rather, we provide tools (CDEs, forms) that can be used in an individual laboratory or with another data repository (e.g., www.odc-tbi.org; Fig. 5). In addition, the FITBIR platform has recently developed a “Meta Study” option for the pre-clinical TBI research community to enhance data sharing and transparency (https://fitbir.nih.gov). Using this platform, investigators can enter information about a published or unpublished research project by selecting the “Create Meta Study” option and populating fields that define the study attributes (e.g., Model Type, Therapeutic Agent). Study documentation and data may also be uploaded, such as experimental protocols, data tables, and, for published research, a PDF of the associated publication. A URL can also be added to specify the online location of data stored in another repository. Other Meta Study features include the generation of a DOI (digital object identifier), which can be used for reference and reporting purposes, and a search tool that can help users locate studies with specific attributes.

FIG. 5.

CDE Use in Data Analytics. CDEs can be used prospectively, or retrospectively with independently collected data. The top-left scenario depicts a user who works prospectively with a CDE-based structured system, while the user on the bottom left uses independent, laboratory-specific data collection systems whose data are then harmonized with existing CDEs. These harmonized data can then be used by a variety of big data platforms. The use of CDE-based informatics systems in research can facilitate data harmonization, thus easing cross-study comparisons, data aggregation, and meta-analyses; simplifying staff training and study operations; improving overall study efficiency; and promoting interoperability between different systems. Color image is available online.

CDE Use Cases

Case 1: Reproducing methods between laboratories. A TBI investigator is unable to reproduce the degree of MWM deficits reported in a previously published study using the same injury model and species. The investigator queries a research database that uses CDEs and finds several TBI studies using MWM. No differences are found in how the experimental TBI was produced or in cortical lesion volumes, indicating comparable injury severity, but it is discovered that the published study used a 6 ft diameter water tank, while the investigator's tank was 3 ft in diameter. After switching to the larger tank, the investigator obtains MWM data similar to those of the published study.

Case 2: Checking novelty. An investigator reads about an interesting drug from another field and wants to determine whether it has been studied in TBI. After finding no published citations, she searches a pre-clinical CDE database and discovers unpublished findings that show no therapeutic efficacy. She examines the CDEs closely and observes that the treatment window may not have been optimal for the target mechanism. The investigator designs a new experiment based on this information.

Case 3: Meta-analyses. An investigator is testing the efficacy of a particular drug on cognitive performance after several TBI pre-clinical studies have published results using different behavioral assays and dosing parameters, with varying degrees of post-injury efficacy. To gain statistical power to detect drug efficacy, the investigator performs a meta-analysis on the combined results from multiple studies that have been uploaded to a CDE database.
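Case 3 presumes that per-study summaries (an effect size and its sampling variance) can be retrieved from a CDE database and pooled. Because pre-clinical studies differ in assays and dosing, a random-effects model is a common choice; the sketch below uses the DerSimonian–Laird estimator as one standard option. The choice of estimator is our assumption (the use case does not specify a method), and the input numbers are purely illustrative.

```python
from math import sqrt
from typing import Sequence, Tuple


def random_effects_pool(
    effects: Sequence[float], variances: Sequence[float]
) -> Tuple[float, float, float]:
    """DerSimonian-Laird random-effects pooling.

    effects: per-study effect sizes (e.g. standardized mean differences)
    variances: the corresponding within-study sampling variances
    Returns (pooled effect, standard error, between-study variance tau^2).
    """
    k = len(effects)
    w = [1.0 / v for v in variances]  # inverse-variance (fixed-effect) weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_re = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    sw_re = sum(w_re)
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sw_re
    return pooled, sqrt(1.0 / sw_re), tau2


# Two hypothetical studies with conflicting results and equal precision
print(random_effects_pool([0.0, 1.0], [0.1, 0.1]))  # approx (0.5, 0.5, 0.4)
```

The between-study variance `tau2` widens the pooled standard error when studies disagree, which is where CDE-level detail (e.g., tank diameter in Case 1) becomes useful for explaining that heterogeneity.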

Pre-clinical TBI research has many potential sources of variability, from animal models to behavioral assay procedures, which can be captured through the use of CDEs. The use of multiple animal models in the TBI pre-clinical community can be advantageous for representing clinical heterogeneity but comes with enormous variability in injury model parameters among labs and operators. There is a need to accurately and thoroughly report the details of the injury model and animal procedures for induction of TBI in order to identify potential confounders in the response.19,34 Sources of heterogeneity in injury response can also arise from animal strain, animal vendor, and genetic and physiological differences, all of which can be captured in CDEs. Other within-laboratory and between-laboratory sources of variability (some less studied), including husbandry conditions, procedure time of day, protocol execution, laboratory environmental conditions, measurement technique, and general procedural differences (e.g., length of anesthesia), are also likely to affect experimental outcomes and, if not reported, could reduce the reproducibility of results.

Designing studies in the context of CDEs and corresponding data collection forms will result in more thorough reporting of methods, including experimental injury parameters and outcome measures.38 Better definition of variables will provide TBI researchers with tools to evaluate the impact of experimental factors that may be important to the research design, and to consider key design elements that may explain differences in results from study to study.39 Rigor does not necessarily result in reproducibility, but it does allow the research community to identify factors that may explain heterogeneity.

Importantly, CDE creation is not intended to dictate how procedures and outcomes are performed or collected, but rather to provide a set of standardized, flexible tools to assemble the data. Over-standardization may yield results that reflect differences between laboratories and animal phenotypes rather than genuine scientific findings.35 Some recent work suggests that heterogeneity may improve reproducibility. In an analysis of single- versus multi-laboratory studies across 13 different interventions in pre-clinical models of stroke, breast cancer, and myocardial infarction, multi-laboratory studies predicted effect size up to 42% more accurately.36 Thoughtful standardization of some model parameters and careful implementation of deliberate heterogeneity may improve the reproducibility and validity of pre-clinical results.37

The use of CDEs within and across laboratories is not without challenges. As we observed, mapping legacy data to defined CDEs is time-consuming and largely manual. With respect to data sharing, maintaining data repositories is not trivial and requires data science expertise and financial resources. In addition to FITBIR (https://fitbir.nih.gov), pre-clinical data repositories such as the Alzheimer's Preclinical Efficacy Database (AlzPED, https://alzped.nia.nih.gov), the Open Data Commons for Spinal Cord Injury (www.odc-sci.org), and the Open Data Commons for Traumatic Brain Injury (www.odc-tbi.org) provide examples of data sharing platforms. Citation of both the original publication and the data location is essential to ensure proper credit and to encourage sharing of positive and negative data alike. Data use agreements are common in the biomedical sciences and can clearly designate terms for data ownership, transfer of ownership, proper citation, and public use. Despite these hurdles, the use of CDEs in pre-clinical research will ultimately facilitate sharing and experimental standardization, and aid in compliance with journal data reporting policies.

While the working group focused on small animal behavioral outcomes, we recommend that future efforts continue development of CDEs for large animal injury models as such models become more widely used (see Supplementary Fig. S1 for a pilot missing value analysis in a porcine TBI model). Large animals, such as the pig, are a necessary piece of the translation pipeline and can model human neuroanatomy and physiology more closely than rodents. Other domains that will require attention in CDE development include histopathology, physiological measurements (e.g., blood gases, blood pressure, heart rate, electroencephalogram, sleep), biofluid biomarkers, imaging, pharmacokinetics, and molecular and neurochemical assays. The broader goal of the working group was to continue the dialogue with the basic and clinical research communities to maximize the impact of pre-clinical data harmonization and guide the trajectory to purposeful translation.

In summary, we expect that the development of CDEs for pre-clinical TBI research will help to establish a well-defined lexicon for the collection, reporting, and sharing of pre-clinical data, with the goal of enhancing rigor, reproducibility, and transparency and accounting for differences within and between laboratories. Better reporting will facilitate comparison of results between studies, replication of published studies, and confirmation of findings, possibly yielding new interpretations and hypotheses. The tools described here are expected to foster large collaborative efforts that require data sharing, such as prospective multi-site studies, meta-analyses, and data-based modeling efforts, ultimately improving the translation of pre-clinical findings to clinical studies and treatments for TBI.

Supplementary Material

Acknowledgments

We thank the investigators who contributed data to the project (in addition to the author contributors): Shaun Carlson, University of Pittsburgh; Anthony Kline, University of Pittsburgh; Rachel K. Rowe, University of Arizona; and Deborah Shear, Walter Reed Army Institute of Research. We also thank Stephen Ahlers, Naval Medical Research Center, who contributed to CDE development, and Matthew McAuliffe and the BRICS staff, who contributed the FITBIR infrastructure.

Funding Information

Authors acknowledge NIH National Institute of Neurological Disorders and Stroke (NINDS, R21 NS096515-02S1 (JL), R01 NS091062-03S1 (CED)), NIH Center for Information Technology (CIT) and Biomedical Research Informatics Computing System (BRICS), and the Department of Defense for technical and financial contribution to the FITBIR program.

Author Disclosure Statement

A.S. Galanopoulou is co-Editor in Chief of Epilepsia Open and has received royalties for publications from Elsevier and Morgan & Claypool publishers. No competing financial interests exist.


References

- 1. McNamee, L.M., Walsh, M.J., and Ledley, F.D. (2017). Timelines of translational science: from technology initiation to FDA approval. PLoS One 12, e0177371.

- 2. Stein, D.G. (2015). Embracing failure: What the phase III progesterone studies can teach about TBI clinical trials. Brain Inj. 29, 1259–1272.

- 3. Maas, A.I., Menon, D.K., Adelson, P.D., Andelic, N., Bell, M.J., Belli, A., Bragge, P., Brazinova, A., Büki, A., and Chesnut, R.M. (2017). Traumatic brain injury: integrated approaches to improve prevention, clinical care, and research. Lancet Neurol. 16, 987–1048.

- 4. Hersh, D.S., Ansel, B.M., and Eisenberg, H.M. (2018). The future of clinical trials in traumatic brain injury, in: Controversies in Severe Traumatic Brain Injury Management. S.D. Timmons (ed). Springer: Berlin/Heidelberg, Germany, pp. 247–256.

- 5. Kent, D.M., Rothwell, P.M., Ioannidis, J.P., Altman, D.G., and Hayward, R.A. (2010). Assessing and reporting heterogeneity in treatment effects in clinical trials: a proposal. Trials 11, 85.

- 6. Begley, C.G. and Ioannidis, J.P. (2015). Reproducibility in science: Improving the standard for basic and preclinical research. Circ. Res. 116, 116–126.

- 7. Ioannidis, J.P. (2005). Why most published research findings are false. PLoS Med. 2, e124.

- 8. Dirnagl, U. (2006). Bench to bedside: the quest for quality in experimental stroke research. J. Cereb. Blood Flow Metab. 26, 1465–1478.

- 9. Howells, D.W., Sena, E.S., and Macleod, M.R. (2014). Bringing rigour to translational medicine. Nat. Rev. Neurol. 10, 37–43.

- 10. Wallach, J.D., Boyack, K.W., and Ioannidis, J.P. (2018). Reproducible research practices, transparency, and open access data in the biomedical literature, 2015–2017. PLoS Biol. 16.

- 11. Hillary, F.G. and Medaglia, J.D. (2019). What the Replication Crisis Means for Intervention Science. Elsevier: Amsterdam, the Netherlands.

- 12. Smith, D.H., Hicks, R.R., Johnson, V.E., Bergstrom, D.A., Cummings, D.M., Noble, L.J., Hovda, D., Whalen, M., Ahlers, S.T., and LaPlaca, M. (2015). Pre-clinical traumatic brain injury common data elements: toward a common language across laboratories. J. Neurotrauma 32, 1725–1735.

- 13. DeWitt, D.S., Hawkins, B.E., Dixon, C.E., Kochanek, P.M., Armstead, W., Bass, C.R., Bramlett, H.M., Buki, A., Dietrich, W.D., Ferguson, A.R., Hall, E.D., Hayes, R.L., Hinds, S.R., LaPlaca, M.C., Long, J.B., Meaney, D.F., Mondello, S., Noble-Haeusslein, L.J., Poloyac, S.M., Prough, D.S., Robertson, C.S., Saatman, K.E., Shultz, S.R., Shear, D.A., Smith, D.H., Valadka, A.B., VandeVord, P., and Zhang, L. (2018). Pre-clinical testing of therapies for traumatic brain injury. J. Neurotrauma 35, 2737–2754.

- 14. Kochanek, P.M., Dixon, C.E., Mondello, S., Wang, K.K.K., Lafrenaye, A., Bramlett, H.M., Dietrich, W.D., Hayes, R.L., Shear, D.A., Gilsdorf, J.S., Catania, M., Poloyac, S.M., Empey, P.E., Jackson, T.C., and Povlishock, J.T. (2018). Multi-center pre-clinical consortia to enhance translation of therapies and biomarkers for traumatic brain injury: operation brain trauma therapy and beyond. Front. Neurol. 9, 640.

- 15. Huie, J.R., Almeida, C.A., and Ferguson, A.R. (2018). Neurotrauma as a big-data problem. Curr. Opin. Neurol. 31, 702–708.

- 16. Ioannidis, J.P. (2008). Effect of formal statistical significance on the credibility of observational associations. Am. J. Epidemiol. 168, 374–383.

- 17. Henderson, V.C., Kimmelman, J., Fergusson, D., Grimshaw, J.M., and Hackam, D.G. (2013). Threats to validity in the design and conduct of preclinical efficacy studies: a systematic review of guidelines for in vivo animal experiments. PLoS Med. 10, e1001489.

- 18. Warner, D.S., James, M.L., Laskowitz, D.T., and Wijdicks, E.F. (2014). Translational research in acute central nervous system injury: lessons learned and the future. JAMA Neurol. 71, 1311–1318.

- 19. Kilkenny, C., Browne, W.J., Cuthill, I.C., Emerson, M., and Altman, D.G. (2010). Improving bioscience research reporting: the ARRIVE guidelines for reporting animal research. PLoS Biol. 8.

- 20. Tortella, F.C. (2016). Challenging the paradigms of experimental TBI models: from preclinical to clinical practice. Methods Mol. Biol. 1462, 735–740.

- 21. Fouad, K., Bixby, J.L., Callahan, A., Grethe, J.S., Jakeman, L.B., Lemmon, V.P., Magnuson, D.S.K., Martone, M.E., Nielson, J.L., Schwab, J.M., Taylor-Burds, C., Tetzlaff, W., Torres-Espin, A., and Ferguson, A.R. (2020). FAIR SCI ahead: the evolution of the Open Data Commons for pre-clinical spinal cord injury research. J. Neurotrauma 37, 831–838.

- 22. Kyzas, P.A., Denaxa-Kyza, D., and Ioannidis, J.P. (2007). Almost all articles on cancer prognostic markers report statistically significant results. Eur. J. Cancer 43, 2559–2579.

- 23. Landis, S.C., Amara, S.G., Asadullah, K., Austin, C.P., Blumenstein, R., Bradley, E.W., Crystal, R.G., Darnell, R.B., Ferrante, R.J., Fillit, H., Finkelstein, R., Fisher, M., Gendelman, H.E., Golub, R.M., Goudreau, J.L., Gross, R.A., Gubitz, A.K., Hesterlee, S.E., Howells, D.W., Huguenard, J., Kelner, K., Koroshetz, W., Krainc, D., Lazic, S.E., Levine, M.S., Macleod, M.R., McCall, J.M., Moxley, R.T., 3rd, Narasimhan, K., Noble, L.J., Perrin, S., Porter, J.D., Steward, O., Unger, E., Utz, U., and Silberberg, S.D. (2012). A call for transparent reporting to optimize the predictive value of preclinical research. Nature 490, 187–191.

- 24. Baker, D., Lidster, K., Sottomayor, A., and Amor, S. (2014). Two years later: journals are not yet enforcing the ARRIVE guidelines on reporting standards for pre-clinical animal studies. PLoS Biol. 12, e1001756.

- 25. Kenall, A., Edmunds, S., Goodman, L., Bal, L., Flintoft, L., Shanahan, D.R., and Shipley, T. (2015). Better reporting for better research: a checklist for reproducibility. BMC Neurosci. 16, 44.

- 26. Lapinlampi, N., Melin, E., Aronica, E., Bankstahl, J.P., Becker, A., Bernard, C., Gorter, J.A., Gröhn, O., Lipsanen, A., Lukasiuk, K., Löscher, W., Paananen, J., Ravizza, T., Roncon, P., Simonato, M., Vezzani, A., Kokaia, M., and Pitkänen, A. (2017). Common data elements and data management: Remedy to cure underpowered preclinical studies. Epilepsy Res. 129, 87–90.

- 27. Harte-Hargrove, L.C., French, J.A., Pitkänen, A., Galanopoulou, A.S., Whittemore, V., and Scharfman, H.E. (2017). Common data elements for preclinical epilepsy research: Standards for data collection and reporting. A TASK3 report of the AES/ILAE Translational Task Force of the ILAE. Epilepsia 58 Suppl. 4, 78–86.

- 28. Harte-Hargrove, L.C., Galanopoulou, A.S., French, J.A., Pitkänen, A., Whittemore, V., and Scharfman, H.E. (2018). Common data elements (CDEs) for preclinical epilepsy research: Introduction to CDEs and description of core CDEs. A TASK3 report of the ILAE/AES Joint Translational Task Force. Epilepsia Open 3, 13–23.

- 29. Wilde, E.A., Whiteneck, G.G., Bogner, J., Bushnik, T., Cifu, D.X., Dikmen, S., French, L., Giacino, J.T., Hart, T., Malec, J.F., Millis, S.R., Novack, T.A., Sherer, M., Tulsky, D.S., Vanderploeg, R.D., and von Steinbuechel, N. (2010). Recommendations for the use of common outcome measures in traumatic brain injury research. Arch. Phys. Med. Rehabil. 91, 1650–1660.e17.

- 30. Hicks, R., Giacino, J., Harrison-Felix, C., Manley, G., Valadka, A., and Wilde, E.A. (2013). Progress in developing common data elements for traumatic brain injury research: version two–the end of the beginning. J. Neurotrauma 30, 1852–1861.

- 31. Little, R. and Rubin, D. (2002). Statistical Analysis with Missing Data, 2nd ed. John Wiley and Sons: New York, NY.

- 32. Rubin, L.H., Witkiewitz, K., Andre, J.S., and Reilly, S. (2007). Methods for handling missing data in the behavioral neurosciences: don't throw the baby rat out with the bath water. J. Undergrad. Neurosci. Educ. 5, A71–A77.

- 33. Duricki, D.A., Soleman, S., and Moon, L.D. (2016). Analysis of longitudinal data from animals with missing values using SPSS. Nat. Protoc. 11, 1112–1129.

- 34. Watzlawick, R., Antonic, A., Sena, E.S., Kopp, M.A., Rind, J., Dirnagl, U., Macleod, M., Howells, D.W., and Schwab, J.M. (2019). Outcome heterogeneity and bias in acute experimental spinal cord injury: a meta-analysis. Neurology 93, e40–e51.

- 35. Voelkl, B. and Würbel, H. (2016). Reproducibility crisis: are we ignoring reaction norms? Trends Pharmacol. Sci. 37, 509–510.

- 36. Voelkl, B., Vogt, L., Sena, E.S., and Würbel, H. (2018). Reproducibility of preclinical animal research improves with heterogeneity of study samples. PLoS Biol. 16, e2003693.

- 37. Kent, T.A. and Mandava, P. (2016). Embracing biological and methodological variance in a new approach to pre-clinical stroke testing. Transl. Stroke Res. 7, 274–283.

- 38. Gulinello, M., Mitchell, H.A., Chang, Q., O'Brien, W.T., Zhou, Z., Abel, T., Wang, L., Corbin, J.G., Veeraragavan, S., Samaco, R.C., Andrews, N.A., Fagiolini, M., Cole, T.B., Burbacher, T.M., and Crawley, J.N. (2019). Rigor and reproducibility in rodent behavioral research. Neurobiol. Learn. Mem. 165, 106780.

- 39. Sheffield, C.L., Refolo, L.M., Petanceska, S.S., and King, R.J. (2017). A librarian's role in improving rigor in research – AlzPED: Alzheimer's disease preclinical efficacy database. Sci. Technol. Libraries 36, 296–308.
