Abstract

Here we present our Python toolbox “MR. Estimator” to reliably estimate the intrinsic timescale from electrophysiological recordings of heavily subsampled systems. Originally intended for the analysis of time series from neuronal spiking activity, our toolbox is applicable to a wide range of systems where subsampling (the difficulty of observing the whole system in full detail) limits our capability to record. Applications range from epidemic spreading to any system that can be represented by an autoregressive process. In the context of neuroscience, the intrinsic timescale can be thought of as the duration over which any perturbation reverberates within the network; it has been used as a key observable to investigate a functional hierarchy across the primate cortex and serves as a measure of working memory. It is also a proxy for the distance to criticality and quantifies a system’s dynamic working point.

1 Introduction

Recent discoveries in the field of computational neuroscience suggest a major role of the so-called intrinsic timescale for functional brain dynamics [1–8]. Intuitively, the intrinsic timescale characterizes the decay time of an exponentially decaying autocorrelation function (in this work and in many contexts, it is synonymous with the autocorrelation time). Exponentially decaying correlations are commonly found in recurrent networks (see e.g. Refs. [5, 9]), where the intrinsic timescale can be related to information storage and transfer [10–12]. More importantly, such decaying autocorrelations are also found in the network spiking dynamics recorded in the brain: there, the intrinsic timescale serves as a measure to quantify working memory [3, 4] and unravels a temporal hierarchy of processing in primates [1, 2].

Although autocorrelations and the intrinsic timescale can be derived from single neuron activity, they characterize the dynamics within the whole recurrent network. The single neuron basically serves as a readout for the local network activity. One can consider spiking activity in a recurrent network as a branching or spreading process, where each presynaptic spike triggers on average a certain number m of postsynaptic spikes [13–15]. Such a spreading process typically features an exponentially decaying autocorrelation function, and the associated time constant is in principle accessible from the activity of each unit. However, approaching the single-unit level, the magnitude of the autocorrelation function can be much smaller than expected, and can be disguised by noise.

In experiments, we approach this level: we typically sample only a small part of the system, sometimes only a single unit or a dozen units. This subsampling problem is especially severe in neuroscience, where even the most advanced electrode measurements can record at most a few thousand out of the billions of neurons in the brain [16, 17]. However, we recently showed that this spatial subsampling only biases the magnitude of the autocorrelation function (of autoregressive processes) and that, despite this bias, the associated intrinsic timescale can still be inferred by using multi-step regression (MR). Because the intrinsic timescale inferred by MR is invariant to spatial subsampling, one can infer it even when recording only a small set of units [5].

Here, we present our Python toolbox “MR. Estimator” that implements MR to estimate the intrinsic timescale of spiking activity, even for heavily subsampled systems. Since our method is based on spreading processes in complex systems, it is applicable beyond neuroscience, e.g. in epidemiology or the social sciences, for instance to estimate the timescale of epidemic spreading (from subsampled infection counts) [5] or the timescale of opinion spreading (from subsampled social networks) [18].

The main advantage of using our toolbox over a custom implementation to determine intrinsic timescales is that it provides a consistent method that can be adopted across studies. It supports trial structures, and we demonstrate how multiple trials can be combined to compensate for short individual trials. Lastly, when a trial structure is provided, the toolbox calculates confidence intervals by default.

In the following, we discuss how to apply the toolbox using a code example (Sec. 2). We then briefly focus on the neuroscience context (including a real-life example, Sec. 3) before we derive the MR estimator and discuss technical details such as the impact of short trials (Sec. 4). While of general interest, this section is not required for a general understanding of the toolbox. In the discussion (Sec. 5), we present selected examples where intrinsic timescales play an important role. Lastly, an overview of parameters and toolbox functions is given in Tables 1 and 2 at the end of the document.

Table 1. List of the most common parameters and functions where they are used.

For a full list of each function’s possible arguments, please refer to the online documentation [43].

| Symbol | Parameter description | Function | Example argument |
|---|---|---|---|
| k | Discrete time steps of correlation coefficients (shift between original and delayed time series) | full_analysis() | kmax = 1000 |
| | | coefficients() | steps = (1, 1000) |
| | | fit() | steps = (1, 1000) |
| | Unit of discrete time steps | full_analysis() | dtunit = 'ms' |
| | | coefficients() | dtunit = 'ms' |
| | | fit() | dtunit = 'ms' |
| Δt | Size of the discrete time steps in dtunits | full_analysis() | dt = 4 |
| | | coefficients() | dt = 4 |
| | | fit() | dt = 4 |
| rk | Correlation coefficients | fit() | data |
| | Method for calculating rk | full_analysis() | coefficientmethod = 'sm' |
| | | coefficients() | method = 'ts' |
| | Selecting fit functions | full_analysis() | fitfuncs = ['exp', 'offset', 'complex'] |
| | | fit() | fitfunc = 'exp' |
| α | Subsampling fraction | simulate_subsampling() | prob |
| | | simulate_branching() | subp |
| 〈At〉 | Activity (e.g. of a branching process) | simulate_branching() | a = 1000 |
| m | Branching parameter | simulate_branching() | m = 0.98 |
| h | External input | simulate_branching() | h = 100 |
| | Bootstrapping: number of samples, rng seed | full_analysis() | numboot = 100, seed = 101 |
| | | coefficients() | numboot = 100, seed = 102 |
| | | fit() | numboot = 100, seed = 103 |

Table 2. The (lengthy) descriptions of fit functions and coefficient methods can be abbreviated.

| Full name | Abbreviation |
|---|---|
| 'trialseparated' | 'ts' |
| 'stationarymean' | 'sm' |
| 'exponential' | 'e', 'exp' |
| 'exponential_offset' | 'eo', 'exp_offset', 'exp_off' |
| 'complex' | 'c', 'cplx' |

2 Workflow

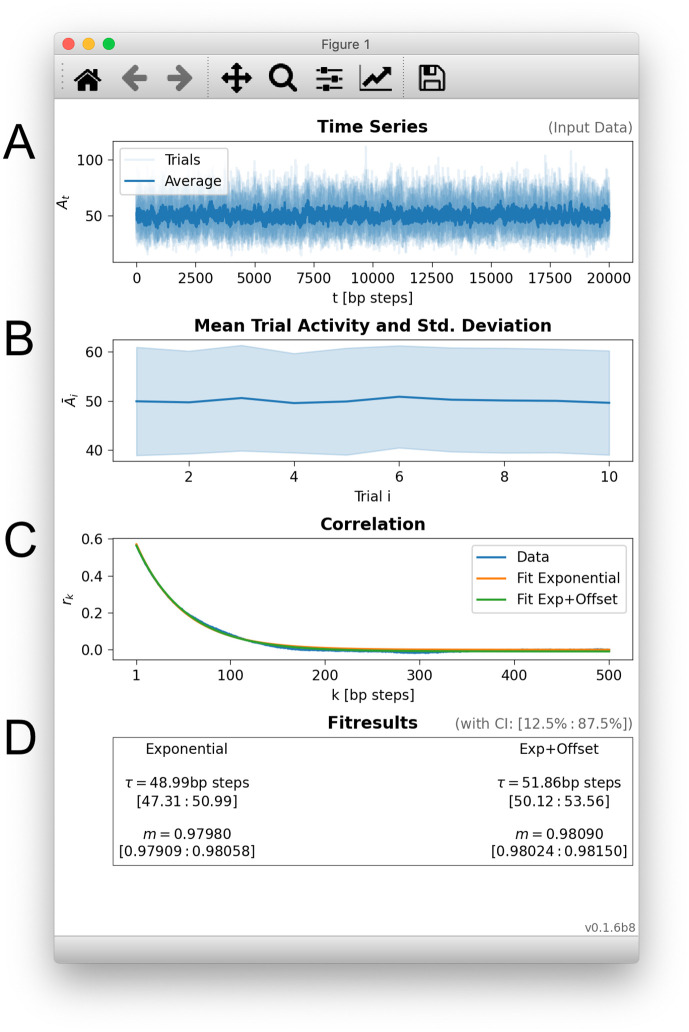

To illustrate a typical workflow, we now discuss an example script that generates an overview panel of results, as depicted in Fig 1. The discussed script and other examples are provided online [19].

Fig 1. The toolbox provides a full_analysis() function that performs all required steps and produces an overview panel.

A: Time series of the input data, here the activity At of ten trials of a branching process with m = 0.98 and τ = −Δt/ln(m) ≈ 49.5 steps (Δt is the step size of the branching process). B: Mean activity and standard deviation of activity for each trial. This display can reveal systematic drifts or changes across trials. C: Correlation coefficients rk are determined from the input data, and exponentially decaying autocorrelation functions are fitted to the rk. Several alternative fit functions can be chosen. D: The decay time of the autocorrelation function corresponds to the intrinsic timescale τ and allows one to infer the corresponding branching parameter m. The shown fit results contain confidence intervals in square brackets (75% by default).

In the example, we generate a time series from a branching process with a known intrinsic timescale (Fig 1A). At each discrete time step Δt of such a branching process, every active unit activates a random number of units (on average m units) for the next time step. As this principle holds for any unit, activity can spread like a cascade or avalanche over the system. Taking the perspective of the entire system, the current activity At (or number of active units) depends on the previous activity and the branching parameter m. The branching parameter is directly linked to the intrinsic timescale via τ = −Δt/ln(m): as m approaches one, τ grows to infinity (for the mathematical background, see Sec. 4). Because τ corresponds to the decay time of the autocorrelation function (Fig 1C), a larger τ causes a slower decay.
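To make the branching-process picture concrete, here is a minimal NumPy sketch; it is not the toolbox's own simulate_branching(). It assumes Poisson-distributed offspring and a Poisson external drive with rate h, so that A_{t+1} is Poisson-distributed with mean m·A_t + h; the function name simulate_driven_bp is hypothetical. It also evaluates τ = −Δt/ln(m) for m = 0.98.

```python
import numpy as np


def simulate_driven_bp(m, h, length, seed=None):
    """Hypothetical sketch of a driven branching process (one trial).

    Assumption: each active unit triggers Poisson(m) units and the external
    drive adds Poisson(h) units, so A_{t+1} ~ Poisson(m * A_t + h).
    """
    rng = np.random.default_rng(seed)
    activity = np.empty(length, dtype=np.int64)
    activity[0] = round(h / (1.0 - m))  # start at the stationary mean
    for t in range(1, length):
        activity[t] = rng.poisson(m * activity[t - 1] + h)
    return activity


m, dt = 0.98, 1.0
tau = -dt / np.log(m)  # intrinsic timescale in units of dt
print(f"tau = {tau:.1f} steps")  # tau = 49.5 steps

# h = 20 gives a stationary mean activity of h / (1 - m) = 1000
bp = simulate_driven_bp(m=m, h=20.0, length=20000, seed=1)
print(bp.mean())
```

The sample mean fluctuates around 1000 because consecutive values are strongly correlated when m is close to one.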

With this motivation in mind, the main task of the toolbox is to determine the correlation coefficients rk, which describe the autocorrelation function of the data, and to fit an analytic autocorrelation function to the determined rk, which then yields the intrinsic timescale. In the example, we determined rk with the toolbox’s default settings (Fig 1C) and fitted two alternative exponentially decaying functions to determine the intrinsic timescale (a plain exponential and an exponential that is shifted by an offset). The toolbox returns estimates and 75% confidence intervals for the branching parameter and the intrinsic timescale (Fig 1D); the estimates match the known values m = 0.98 and τ ≈ 49.5 steps that were used in the example. To demonstrate the effect of subsampling, we recorded only 5% of the events occurring in the branching process.

Listing 1. Example script (Python) that creates artificial data from a branching process and performs the multistep regression. An example to import experimental data is available online, along with detailed documentation explaining all function arguments [19].

```python
# load the toolbox
import mrestimator as mre

# enable matplotlib interactive mode so
# figures are shown automatically
mre.plt.ion()

# 1. -----------------------------------#
# example data from branching process
bp = mre.simulate_branching(m=0.98, a=1000, subp=0.05, length=20000,
                            numtrials=10, seed=43771)

# make sure the data has the right format
src = mre.input_handler(bp)

# 2. -----------------------------------#
# calculate autocorrelation coefficients,
# embed information about the time steps
rks = mre.coefficients(src, steps=(1, 500), dt=1, dtunit='bp steps',
                       method='trialseparated')

# 3. -----------------------------------#
# fit an autocorrelation function, here
# exponential (without and with offset)
fit1 = mre.fit(rks, fitfunc='exp')
fit2 = mre.fit(rks, fitfunc='exp_offset')

# 4. -----------------------------------#
# create an output handler instance
out = mre.OutputHandler([rks, fit1, fit2])

# save to disk
out.save('~/mre_example/result')

# 5. -----------------------------------#
# gives same output with other file title
out2 = mre.full_analysis(data=bp, dt=1, kmax=500, dtunit='bp steps',
                         coefficientmethod='trialseparated',
                         fitfuncs=['exp', 'exp_offset'],
                         targetdir='~/mre_example/')
```

1. Prepare data: After the toolbox is loaded, the input data needs to be in the right format: a 2D NumPy array [20–22]. To support a trial structure, the first index of the array corresponds to the trial (even when there is only one trial), and the second index corresponds to the time (in fixed time steps). All trials need to have the same length.

We provide an optional input_handler() that tries to guess the passed format and convert it automatically. For instance, it can check and convert data that is already loaded (as shown in Listing 1) or load files from disk, when a file path is provided.

2. Multiple regressions: Once the data is in the right format, multiple linear regressions are initiated by calling coefficients() (see Sec. 4.3 for more details). The function performs linear regressions between the original time series (src) and the same time series shifted by k time steps. It returns the slopes found by the regressions, which we call correlation coefficients rk (rks). Here, we calculate the correlation coefficients for steps 1 ≤ k ≤ 500. In Listing 1, the linear regression is performed for each trial separately, and the estimated rk are then averaged to obtain a joint estimate across all trials (trialseparated method). Confidence intervals are calculated using bootstrapping.

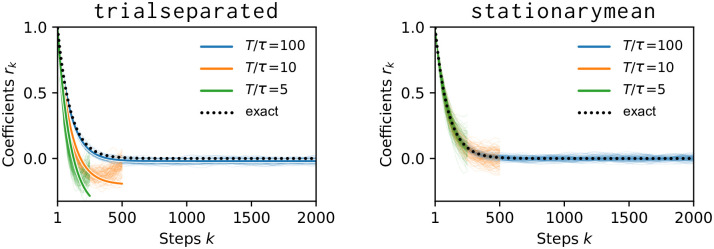

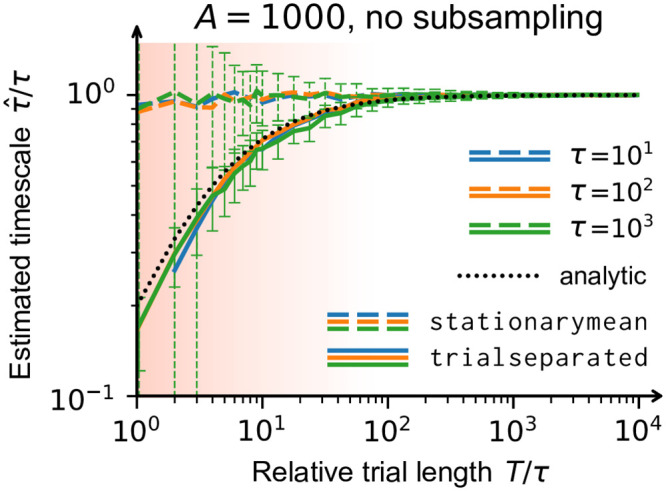

Please note that, independently of subsampling, the linear regression can be biased due to short trials [23, 24]. If the activity is stationary across trials, this issue can be circumvented by using the stationarymean method (see Sec. 4.3 and Fig 5).

Fig 5. Independent of subsampling, correlation coefficients can be biased if trials are short.

As a function of trial length, the autocorrelation time estimated by the toolbox (τ̂) is compared with the known value of a stationary, fully sampled branching process (τ). Each measurement featured 50 trials and was performed once with each method, trialseparated (solid lines) and stationarymean (dashed lines). For short time series (red shaded area), it is known analytically that the correlation coefficients are biased [23]. The bias propagates to the intrinsic timescale (black dotted line) and is consistent with the timescale obtained from the trialseparated method. The stationarymean method can compensate the bias if enough trials are available across which the activity is indeed stationary. Note that the improvement of the estimates scales directly with the number of trials, because the effective statistical information increases with each trial. Error bars (for clarity only depicted for τ = 10³): standard deviation across 100 simulations. For more details, see appendix B.

3. Fit the autocorrelation function: Next, we fit the correlation coefficients using a desired function (fitfunc). In order to estimate the intrinsic timescale, this function needs to decay exponentially. Motivated by recent experimental studies [1], the default function is exponential_offset (other options include an exponential and a complex fit with empirical corrections).

4. Visualize and store results: Multiple correlation coefficients and fits can be exported using an instance of OutputHandler. The save() function exports not only a plot but also a text file containing the full information that is required to reproduce it.

5. Wrapping up: For convenience, the full_analysis() function performs all steps with default parameters and displays an overview panel as shown in Fig 1.
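The fitting step can be illustrated independently of the toolbox. The following sketch assumes SciPy is available and uses scipy.optimize.curve_fit (not the toolbox's own fit()) to fit an exponential with offset, A·exp(−k/τ) + O, to synthetic coefficients; the amplitude, offset and noise level are made-up illustration values.

```python
import numpy as np
from scipy.optimize import curve_fit


def f_exp_offset(k, tau, A, O):
    """Exponential decay with offset, A * exp(-k / tau) + O (k and tau in steps)."""
    return A * np.exp(-k / tau) + O


# synthetic coefficients: tau = 49.5 steps, small amplitude as under
# subsampling, a small offset, and Gaussian noise (illustration values)
rng = np.random.default_rng(0)
k = np.arange(1, 501)
rk = f_exp_offset(k, 49.5, 0.05, 0.002) + rng.normal(0.0, 5e-4, k.size)

# bounded least-squares fit; tau is kept positive
popt, pcov = curve_fit(
    f_exp_offset, k, rk,
    p0=(20.0, 0.1, 0.0),
    bounds=([1.0, -np.inf, -np.inf], [np.inf, np.inf, np.inf]),
)
tau_hat, A_hat, O_hat = popt
m_hat = np.exp(-1.0 / tau_hat)  # branching parameter for dt = 1 step
print(f"tau = {tau_hat:.1f} steps, m = {m_hat:.4f}")
```

Bounding τ from below keeps the exponential from blowing up if an iteration would otherwise step to a negative timescale.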

3 Interpretation in a neuroscience context

Timescales of neural dynamics have been analyzed in various contexts and can be interpreted as reward memory [25] or as temporal receptive windows [26]. Here, however, we focus on the timescale of the decay of the autocorrelation function [1], which is thought to be related to the duration of integration in local circuits [2] or to working memory [3, 4]. As such, the intrinsic timescale represents a measure of how long information is kept (or can be integrated) in a local circuit; it ranges from 50 to 500 ms, and this diversity of timescales is believed to arise from differences in local connectivity [27, 28].

In the brain, the autocorrelation function is not only determined by the intrinsic timescale. If the spiking activity is dominated by a single timescale τ, the autocorrelation is expected to decay exponentially (see Sec. 4): $r_k \propto e^{-k\Delta t/\tau}$. Often, however, the autocorrelation is more complex. To take this into account, we provide a complex fit function, based on an empirical analysis of autocorrelation functions by König [29]:

$$
r_k = A\,e^{-k\Delta t/\tau} + B\,e^{-(k\Delta t/\tau_{\mathrm{osc}})^{\gamma}}\cos(2\pi\nu\,k\Delta t) + C\,e^{-(k\Delta t/\tau_{\mathrm{gs}})^{2}} + O \qquad (1)
$$

In addition to the exponential decay, the complex fit function features three terms that account for:

- Neural oscillations, reflected as an exponentially decaying cosine term: $B\,e^{-(k\Delta t/\tau_{\mathrm{osc}})^{\gamma}}\cos(2\pi\nu\,k\Delta t)$.
- Short-term dynamics of a neuron with a refractory period, reflected as a Gaussian decay: $C\,e^{-(k\Delta t/\tau_{\mathrm{gs}})^{2}}$.
- An offset O, which arises due to small non-stationarities of the recordings on timescales longer than a few seconds.
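A direct transcription of the complex fit function into NumPy may help when inspecting fits by hand. This is a sketch under the assumption that the function has the form A·exp(−lag/τ) + B·exp(−(lag/τ_osc)^γ)·cos(2πν·lag) + C·exp(−(lag/τ_gs)²) + O, with lag = kΔt; the function name and argument order are illustrative and not guaranteed to match the toolbox's internal definition.

```python
import numpy as np


def f_complex(lag, tau, A, O, tau_osc, B, gamma, nu, tau_gs, C):
    """Sketch of the complex fit function; lag = k * dt, timescales in the same unit.

    nu is a frequency in 1/unit (e.g. 6.1 Hz = 0.0061 per ms).
    """
    lag = np.asarray(lag, dtype=float)
    exponential = A * np.exp(-lag / tau)                    # intrinsic timescale
    oscillation = (B * np.exp(-(lag / tau_osc) ** gamma)   # damped oscillation
                   * np.cos(2 * np.pi * nu * lag))
    gaussian = C * np.exp(-(lag / tau_gs) ** 2)             # short-term, refractory
    return exponential + oscillation + gaussian + O         # plus offset


# evaluate on lags from 0 to 2 s in 1 ms steps (illustrative parameters)
lags_ms = np.arange(0.0, 2001.0)
r = f_complex(lags_ms, tau=1500.0, A=0.1, O=0.01, tau_osc=300.0, B=0.05,
              gamma=1.0, nu=6.1e-3, tau_gs=10.0, C=0.2)
```

At lag zero all terms contribute their full amplitude (A + B + C + O), while for very long lags only the offset O survives.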

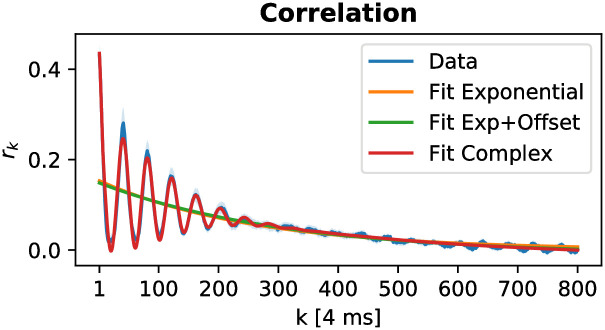

To illustrate the usefulness of the complex fit function, we analyze an openly available dataset of spiking activity in rat hippocampus [30]. We find an intrinsic timescale of around 1.5 seconds (which is similar to the timescales found in rat cortex [31]). One challenging characteristic of this dataset is the presence of theta oscillations (5–10 Hz) in the population activity, which carry over to the autocorrelation function. Because the complex fit function features an oscillatory term, it can capture these oscillations and still yield a solid estimate of the autocorrelation time. (Fits from functions without the oscillatory term deviate from the data and lead to biased estimates.) Additionally, by including this term in the fit, we also obtain an estimate of the oscillation frequency: in the shown example (Fig 2), we find ν = 6.1 Hz, which is well within the range of theta oscillations. This shows that our toolbox can handle complex neuronal dynamics of single-cell activity.

Fig 2. Example analysis of spiking activity from rat hippocampus during an open field task that demonstrates the usage of the complex fit function.

A short example code that analyzes the data [30] and produces this figure is listed in appendix A.

4 Technical details

4.1 Derivation of the multi-step regression estimator for autoregressive processes

The statistical properties of activity propagation in networks can be approximated by a stochastic process with an autoregressive representation [15, 18, 32], at least to leading order [14]. We will use this framework of autoregressive processes to derive the multi-step regression estimator and show that it is invariant under subsampling [5].

Here, we consider the class of stochastic processes with an autoregressive representation of first order. This process combines a stochastic, internal generation of activity with a stochastic, external input. The internal generation on average yields m new events per event, where m is called the branching parameter (using the terminology of the driven branching process) [33–35]. The external input is assumed to be an uncorrelated Poisson process with rate h (a generalization to non-stationary input can be found in Ref. [36]). For discrete time steps Δt, we denote the number of active units at time t with At and obtain the autoregressive representation

$$
\langle A_{t+1} \mid A_t \rangle = m\,A_t + h \qquad (2)
$$

where 〈⋅〉 denotes the expectation value. This autoregressive representation is the basis of our subsampling invariant method and makes it applicable to the full class of first-order autoregressive processes. From Eq (2), we can also see that one could determine m from a time series of a system’s activity by using linear regression. The linear regression estimate of m is

$$
\hat{m} = \frac{\mathrm{Cov}[A_{t+1}, A_t]}{\mathrm{Var}[A_t]} \qquad (3)
$$

This well-established approach [5, 33, 37, 38] only considers the pairs of activity that are separated by one time step: it measures the slope of the line that best describes the point cloud (At+1, At). Instead, the multi-step regression (MR) estimator considers all pairs of activity separated by increasing time differences k and thus estimates multiple regression slopes.
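As a minimal sketch of the single-step estimator, the slope Cov[A_{t+1}, A_t]/Var[A_t] can be computed directly and compared with an ordinary least-squares line fit; both give the same number. The synthetic data assume Poisson offspring and a Poisson drive, which is a modeling choice made here for illustration only.

```python
import numpy as np

# synthetic activity (modeling assumption): A_{t+1} ~ Poisson(m * A_t + h)
rng = np.random.default_rng(3)
m_true, h, length = 0.98, 20.0, 20000
A = np.empty(length)
A[0] = h / (1.0 - m_true)
for t in range(1, length):
    A[t] = rng.poisson(m_true * A[t - 1] + h)

# single-step regression slope of the point cloud (A_t, A_{t+1})
x, y = A[:-1], A[1:]
m_hat = np.cov(y, x)[0, 1] / np.var(x, ddof=1)

# cross-check with an ordinary least-squares line fit
slope = np.polyfit(x, y, deg=1)[0]
print(f"m_hat = {m_hat:.4f}, polyfit slope = {slope:.4f}")
```

Both the covariance and the variance use ddof=1 here, so their ratio is exactly the least-squares slope.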

Analogous to the case of k = 1 in Eq (3), we define the correlation coefficients rk as the slope of the line that best describes the point cloud (At+k, At)

$$
r_k = \frac{\mathrm{Cov}[A_{t+k}, A_t]}{\mathrm{Var}[A_t]} \qquad (4)
$$

For an autoregressive process that is fully sampled, these correlation coefficients become $r_k = m^k$. To show this, we first generalize Eq (2) using the geometric series (cf. Refs. [5, 36]):

$$
\langle A_{t+k} \mid A_t \rangle = m^k A_t + h\,\frac{1 - m^k}{1 - m} \qquad (5)
$$

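We then use the law of total expectation to obtain $\langle A_{t+k} A_t \rangle = \langle \langle A_{t+k} \mid A_t \rangle A_t \rangle$ and $\langle A_{t+k} \rangle = \langle \langle A_{t+k} \mid A_t \rangle \rangle$. This allows us to rewrite the covariance: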

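$$
\begin{aligned}
\mathrm{Cov}[A_{t+k}, A_t] &= \langle A_{t+k} A_t \rangle - \langle A_{t+k} \rangle \langle A_t \rangle && (6)\\
&= \langle \langle A_{t+k} \mid A_t \rangle A_t \rangle - \langle \langle A_{t+k} \mid A_t \rangle \rangle \langle A_t \rangle && (7)\\
&= m^k \langle A_t^2 \rangle + h\,\frac{1-m^k}{1-m} \langle A_t \rangle - m^k \langle A_t \rangle^2 - h\,\frac{1-m^k}{1-m} \langle A_t \rangle && (8)\\
&= m^k \left( \langle A_t^2 \rangle - \langle A_t \rangle^2 \right) = m^k\, \mathrm{Var}[A_t] && (9)
\end{aligned}
$$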

When we insert this result into Eq (4), we find that the correlation coefficients are related to the branching parameter as $r_k = m^k$, which enables the toolbox to estimate the branching parameter from recordings of processes that are subcritical (m < 1), critical (m = 1) or supercritical (m > 1).
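The derivation can be checked numerically. Applying ⟨A_{t+1}|A_t⟩ = m·A_t + h k times (valid by the tower property for this Markov process) should reproduce the geometric-series form m^k·A_t + h(1 − m^k)/(1 − m), and the slope of ⟨A_{t+k}|A_t⟩ with respect to A_t should equal m^k. The helper names below are hypothetical.

```python
import numpy as np


def iterate_conditional_mean(a_t, m, h, k):
    """Apply <A_{t+1}|A_t> = m * A_t + h k times (tower property)."""
    value = float(a_t)
    for _ in range(k):
        value = m * value + h
    return value


def closed_form(a_t, m, h, k):
    """Geometric-series form: m**k * A_t + h * (1 - m**k) / (1 - m), for m != 1."""
    return m**k * a_t + h * (1.0 - m**k) / (1.0 - m)


m, h = 0.98, 20.0
for k in (1, 10, 100):
    assert np.isclose(iterate_conditional_mean(500, m, h, k), closed_form(500, m, h, k))

# the slope of <A_{t+k}|A_t> with respect to A_t is m**k, hence r_k = m**k
k = 10
slope = closed_form(501, m, h, k) - closed_form(500, m, h, k)
print(np.isclose(slope, m**k))  # True
```

The constant input h only shifts the conditional mean; it does not enter the slope, which is why it drops out of the covariance.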

In the special case of stationary activity, where 〈At〉 = 〈At+k〉, the correlation coefficients can be further related to an autocorrelation time. In this case, the correlation coefficients, Eq (4), match the correlation function

$$
C(k) = \frac{\mathrm{Cov}[A_{t+k}, A_t]}{\mathrm{Var}[A_t]} = r_k \qquad (10)
$$

Note that we here consider the definition of the autocorrelation function normalized to the time-independent variance (other definitions are also common, e.g. a time-dependent Pearson correlation coefficient $\mathrm{Cov}[A_{t+k}, A_t] / (\mathrm{Std}[A_t]\,\mathrm{Std}[A_{t+k}])$). For stationary autoregressive processes, the correlation function decays exponentially, and we can introduce an autocorrelation time τ:

$$
\begin{aligned}
C(k) &= m^k = e^{k \ln m} && (11)\\
&= e^{-k\Delta t/\tau} && (12)
\end{aligned}
$$

We can thus identify a relation between the branching parameter m and the intrinsic timescale τ (or, more precisely, the autocorrelation time) via the time discretization Δt:

$$
\tau = -\frac{\Delta t}{\ln m} \qquad (13)
$$

It is important to note that τ is an actual physical observable, whereas m offers an interpretation of how the intrinsic timescale is generated: it sets the causal relation between two consecutive generations of activity. Whereas m depends on how we choose the bin size Δt of each time step, the intrinsic timescale τ is independent of bin size.
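The relation τ = −Δt/ln(m) and its inverse can be written as two small helper functions (names hypothetical). The sketch illustrates the statement above: for a fixed τ = 200 ms, the branching parameter changes with the bin size Δt, but converting back always returns the same τ. It also shows that coarsening the bins by a factor c maps m to m^c.

```python
import numpy as np


def tau_from_m(m, dt):
    """tau = -dt / ln(m), valid for 0 < m < 1."""
    return -dt / np.log(m)


def m_from_tau(tau, dt):
    """Inverse relation: m = exp(-dt / tau)."""
    return np.exp(-dt / tau)


tau = 200.0  # ms, a fixed physical timescale
for dt in (1.0, 4.0, 10.0):  # bin sizes in ms
    m = m_from_tau(tau, dt)
    print(f"dt = {dt:4.1f} ms: m = {m:.4f}, tau back = {tau_from_m(m, dt):.1f} ms")

# coarser bins by a factor c map m to m**c; tau stays the same
assert np.isclose(m_from_tau(tau, 4.0) ** 2.5, m_from_tau(tau, 10.0))
```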

4.2 Subsampling invariant estimation of the intrinsic timescale by multi-step regression

Subsampling describes the typical experimental constraint that often one can only observe a small fraction of the full system [5, 39, 40]. Given the full activity $A_t$, we denote the activity that is recorded under subsampling by $a_t$. We describe the amount of subsampling (the fraction of the system that is observed) through the sampling probability α, where α = 1 recovers the case of the fully sampled system.

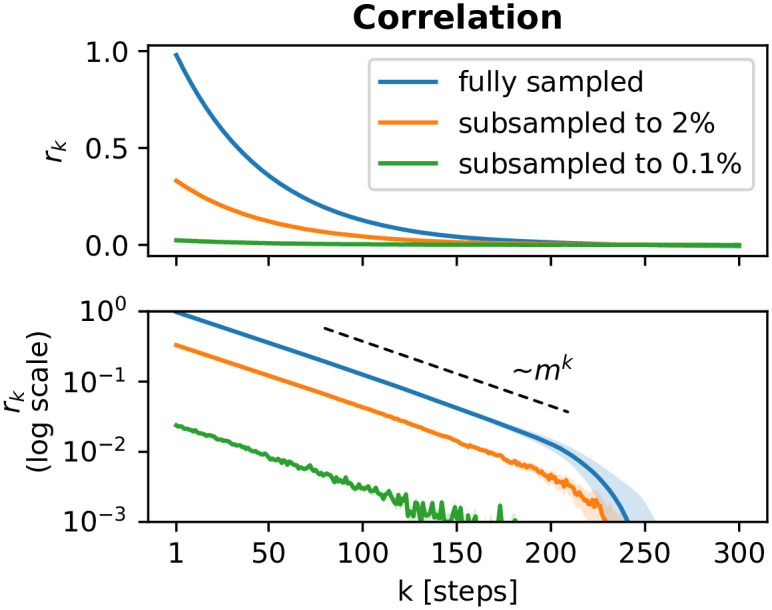

It can be shown that subsampling causes a bias b that only affects the amplitude of the autocorrelation function, but not the intrinsic timescale that characterizes the decay [5]. This is illustrated in Fig 3. By fitting both the exponential decay and the amplitude, the subsampling problem reduces to an additional free parameter in the least-squares fit of the correlation coefficients:

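$$
r_k = \frac{\mathrm{Cov}[a_{t+k}, a_t]}{\mathrm{Var}[a_t]} = b\,m^k = b\,e^{-k\Delta t/\tau}, \qquad b = \alpha^2\,\frac{\mathrm{Var}[A_t]}{\mathrm{Var}[a_t]} \qquad (14)
$$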

where $a_t$ is the (recorded) activity under subsampling and $A_t$ is the (unknown) activity that would hypothetically be observed under full sampling. As Eq (14) and Fig 3 show, the intrinsic timescale τ is independent of the sampling fraction α. In general, when measuring autocorrelations, Eq (10), by definition r0 = 1. Under subsampling, however, the amplitude of rk for k ≥ 1 decreases as fewer and fewer units of the system are observed. This can cause a severe underestimation of m in the single regression approach, Eq (3).
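The subsampling invariance can be reproduced with a short simulation. The sketch below makes several assumptions of its own: a Poisson-driven branching process, binomial subsampling with α = 0.05 (each event recorded independently), and, for brevity, a straight-line fit of log rk versus k instead of the nonlinear least-squares fit of b·m^k described in the text. The single-step coefficient r1 drops sharply under subsampling, while the slope-based estimate of m stays close to the true value.

```python
import numpy as np

rng = np.random.default_rng(42)
m, h, length, alpha = 0.98, 20.0, 100_000, 0.05

# fully sampled activity (modeling assumption): A_{t+1} ~ Poisson(m * A_t + h)
A = np.empty(length, dtype=np.int64)
A[0] = round(h / (1.0 - m))
for t in range(1, length):
    A[t] = rng.poisson(m * A[t - 1] + h)

# binomial subsampling: each event is recorded independently with probability alpha
a = rng.binomial(A, alpha)


def regression_coefficients(series, ks):
    """r_k as the regression slope of series[t + k] on series[t]."""
    s = np.asarray(series, dtype=float)
    out = []
    for k in ks:
        u, v = s[:-k], s[k:]
        du = u - u.mean()
        out.append(np.sum(du * (v - v.mean())) / np.sum(du * du))
    return np.array(out)


ks = np.arange(1, 51)
r_full = regression_coefficients(A, ks)
r_sub = regression_coefficients(a, ks)

# multi-step regression (simplified): log r_k = log b + k log m
m_full = np.exp(np.polyfit(ks, np.log(r_full), 1)[0])
m_sub = np.exp(np.polyfit(ks, np.log(r_sub), 1)[0])
print(f"single-step r_1: full {r_full[0]:.3f}, subsampled {r_sub[0]:.3f}")
print(f"multi-step m:    full {m_full:.3f}, subsampled {m_sub:.3f}")
```

The intercept of the log-linear fit estimates log b, so the subsampling bias is absorbed into the amplitude rather than the slope.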

Fig 3. The amplitude of correlation coefficients decreases under subsampling, whereas the intrinsic timescale τ and the branching parameter m (characterized by the slope of the rk on a logarithmic scale) are invariant.

Coefficients were determined by the toolbox for fully sampled and binomially subsampled branching processes [19].

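In order to formalize the estimation of correlation coefficients rk for subsampled activity, let us denote the set of all activity observations by $x = \{a_t\}$ and the observations k time steps later by $y = \{a_{t+k}\}$. If T is the total length of the recording, then we have T − k discretized time steps to work with. Then

$$
\hat{r}_k = \frac{\sum_{t=1}^{T-k} (x_t - \bar{x})(y_t - \bar{y})}{\sum_{t=1}^{T-k} (x_t - \bar{x})^2} \qquad (15)
$$

where we approximate the expectation values ⟨x⟩ and ⟨y⟩ using

$$
\bar{x} = \frac{1}{T-k} \sum_{t=1}^{T-k} x_t, \qquad \bar{y} = \frac{1}{T-k} \sum_{t=1}^{T-k} y_t.
$$

In other words, $\bar{x}$ is the mean of the observed time series and $\bar{y}$ is the mean of the shifted time series.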
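The single-series estimator translates almost literally into NumPy. This is a sketch for one time series (the name rk_single is hypothetical); for uncorrelated white noise, the estimate should be close to zero.

```python
import numpy as np


def rk_single(a, k):
    """Regression slope between x = a_t and y = a_{t+k}, t = 1..T-k."""
    a = np.asarray(a, dtype=float)
    if not 1 <= k <= len(a) - 2:
        raise ValueError("k must satisfy 1 <= k <= T - 2")
    x, y = a[:-k], a[k:]  # both contain T - k values
    x_bar, y_bar = x.mean(), y.mean()
    return np.sum((x - x_bar) * (y - y_bar)) / np.sum((x - x_bar) ** 2)


# uncorrelated white noise: r_k should be close to zero
noise = np.random.default_rng(0).normal(size=100_000)
print(rk_single(noise, 1))
```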

4.3 Different methods to estimate correlation coefficients

The drawback of the naive implementation, Eq (15), is that it is biased if T is rather short, which is often the case if the recording time was limited (for a recent discussion of this topic, see also Ref. [24]). In the case of short recordings, $\bar{x}$ and $\bar{y}$ are imprecise estimates of the expectation values $\langle a_t \rangle$ and $\langle a_{t+k} \rangle$, and using them biases $\hat{r}_k$. However, we can compensate the bias by combining multiple short recordings, if available.

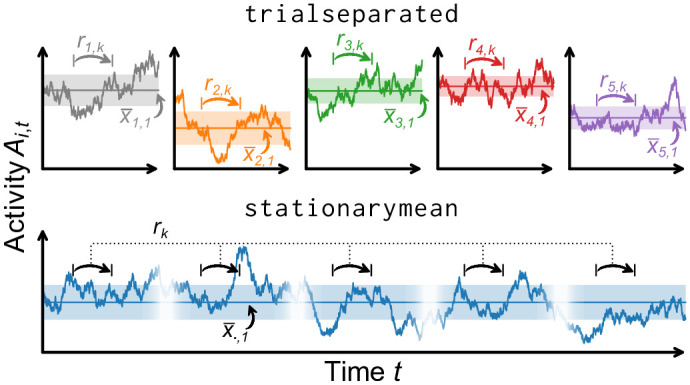

In practice, multiple recordings are often available: If individual recordings are repeated several times under the same conditions, we refer to these repetitions as trials. One typically assumes that across these trials, the expected value of activity is stationary. However, this is not necessarily the case because trial-to-trial variability might be systematic. Since this assumption has to be justified case-by-case, the toolbox offers two methods to calculate the correlation coefficients: the trialseparated and stationarymean method.

4.3.1 Trialseparated

The trialseparated method makes fewer assumptions about the data than the stationarymean method. Each trial provides a separate estimate of the correlation coefficients $\hat{r}_{i,k}$. Let us again denote the observations before (after) the time lag by $x_i$ ($y_i$), where index i denotes the i-th out of N total trials. All trials share the same number of time steps T. We can apply Eq (15) to each trial separately and thereafter average over the per-trial results:

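$$
\hat{r}_k = \frac{1}{N} \sum_{i=1}^{N} \hat{r}_{i,k} = \frac{1}{N} \sum_{i=1}^{N} \frac{\sum_{t=1}^{T-k} (x_{i,t} - \bar{x}_i)(y_{i,t} - \bar{y}_i)}{\sum_{t=1}^{T-k} (x_{i,t} - \bar{x}_i)^2} \qquad (16)
$$

with

$$
\bar{x}_i = \frac{1}{T-k} \sum_{t=1}^{T-k} x_{i,t}, \qquad \bar{y}_i = \frac{1}{T-k} \sum_{t=1}^{T-k} y_{i,t}.
$$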

As the expected activity $\langle a_t \rangle$ is estimated within each trial separately, this method is robust against changes in the activity from trial to trial. On the other hand, the trialseparated method suffers from short trial lengths, for which $\bar{x}_i$ and $\bar{y}_i$ become unreliable estimates of the expected activity.
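A sketch of the trialseparated estimate, assuming the data are a 2D array with one trial per row (the function name is hypothetical). Because each trial is centered on its own mean, adding a constant offset to one trial leaves its coefficients unchanged, which illustrates the robustness against trial-to-trial changes in activity mentioned above.

```python
import numpy as np


def rk_trialseparated(data, ks):
    """One regression slope per trial, then the average over trials.

    data: 2D array of shape (num_trials, T); ks: sequence of lags >= 1.
    Returns the averaged r_k and the per-trial r_{i,k}.
    """
    data = np.asarray(data, dtype=float)
    per_trial = np.empty((data.shape[0], len(ks)))
    for i, trial in enumerate(data):
        for j, k in enumerate(ks):
            x, y = trial[:-k], trial[k:]
            dx = x - x.mean()  # per-trial mean x_bar_i
            dy = y - y.mean()  # per-trial mean y_bar_i
            per_trial[i, j] = np.sum(dx * dy) / np.sum(dx * dx)
    return per_trial.mean(axis=0), per_trial


# two trials with identical fluctuations but a different baseline activity
rng = np.random.default_rng(1)
base = np.cumsum(rng.normal(size=1000))
data = np.vstack([base, base + 100.0])
rk, per_trial = rk_trialseparated(data, [1, 5, 20])
print(per_trial)  # both rows agree: the offset does not matter
```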

4.3.2 Stationarymean

The stationarymean method assumes the activity to be stationary across trials. Now, the expected activity $\langle a_t \rangle$ is estimated by $\bar{x}$ and $\bar{y}$, which use the full pool of recordings (containing all trials):

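$$
\hat{r}_k = \frac{\sum_{i=1}^{N} \sum_{t=1}^{T-k} (x_{i,t} - \bar{x})(y_{i,t} - \bar{y})}{\sum_{i=1}^{N} \sum_{t=1}^{T-k} (x_{i,t} - \bar{x})^2} \qquad (17)
$$

with

$$
\bar{x} = \frac{1}{N(T-k)} \sum_{i=1}^{N} \sum_{t=1}^{T-k} x_{i,t}, \qquad \bar{y} = \frac{1}{N(T-k)} \sum_{i=1}^{N} \sum_{t=1}^{T-k} y_{i,t}.
$$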

The two methods are illustrated in Fig 4, and the impact of the trial length on the estimated autocorrelation time is shown in Fig 5. For short trials (red shaded area), the stationarymean method provides precise estimates already for time series that are only about ten times as long as the autocorrelation time itself. The trialseparated method, on the other hand, is biased for short trials, but it makes less strict assumptions about the data. Thus, the trialseparated method should be used if one is confident that trial durations are long enough.
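The effect of short trials can be illustrated by comparing both estimators on many short trials. This sketch assumes a Gaussian AR(1) process with m = 0.98 (τ ≈ 49.5 steps) in place of a branching process, uses 200 trials of 500 steps each (about ten times τ), and implements the stationarymean estimate with pooled means; all names are hypothetical. With these settings, the trialseparated estimate of r10 should come out noticeably below m^10, whereas the stationarymean estimate should be close to it.

```python
import numpy as np


def rk_stationarymean(data, ks):
    """Means pooled over all trials, one joint regression per lag."""
    data = np.asarray(data, dtype=float)
    out = []
    for k in ks:
        x, y = data[:, :-k], data[:, k:]     # each of shape (N, T - k)
        dx, dy = x - x.mean(), y - y.mean()  # pooled means x_bar, y_bar
        out.append(np.sum(dx * dy) / np.sum(dx * dx))
    return np.array(out)


def rk_trialseparated(data, ks):
    """Per-trial means and slopes, averaged over trials."""
    data = np.asarray(data, dtype=float)
    out = []
    for k in ks:
        x, y = data[:, :-k], data[:, k:]
        dx = x - x.mean(axis=1, keepdims=True)
        dy = y - y.mean(axis=1, keepdims=True)
        out.append(np.mean(np.sum(dx * dy, axis=1) / np.sum(dx * dx, axis=1)))
    return np.array(out)


# 200 short trials (T = 500 steps, about 10 tau) of a stationary Gaussian AR(1)
rng = np.random.default_rng(7)
m, N, T = 0.98, 200, 500
data = np.empty((N, T))
data[:, 0] = rng.normal(0.0, 1.0 / np.sqrt(1.0 - m**2), N)  # stationary start
for t in range(1, T):
    data[:, t] = m * data[:, t - 1] + rng.normal(0.0, 1.0, N)

ks = [10]
ts, sm = rk_trialseparated(data, ks), rk_stationarymean(data, ks)
print(f"trialseparated {ts[0]:.3f}, stationarymean {sm[0]:.3f}, true {m**10:.3f}")
```

Within a single short trial, the sample mean follows the slow fluctuations, so subtracting it removes part of the correlation; pooling the mean across trials avoids this.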

Fig 4. Illustration of the two methods for determining the correlation coefficients rk from spiking activity At.

Both methods assume a trial structure of the data (discontinuous time series). Top: The trialseparated method calculates one set of correlation coefficients ri,k for every trial i (via linear regression). Bottom: The stationarymean method combines the information of all trials to perform the linear regression on a single, but larger, pool of data. This gives an estimate of rk that is bias-corrected for short trial lengths.

As a rule of thumb, if an a priori estimate of τ exists, we advise using trials that are at least 10 times longer than that estimate; the longer, the better. For example, to reliably detect τ ≈ 200 ms (for instance in prefrontal cortex), a time series of 2 s could suffice (when using the stationarymean method). Furthermore, as a consistency check, we recommend comparing the estimates obtained with both methods.
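The rule of thumb amounts to simple arithmetic. A sketch with hypothetical helper names, where the default factor of 10 is the recommendation from the text:

```python
import math


def min_trial_samples(tau_prior, dt, factor=10):
    """Fewest time steps T with T * dt >= factor * tau_prior (rule of thumb)."""
    return math.ceil(factor * tau_prior / dt)


def trials_long_enough(num_samples, dt, tau_prior, factor=10):
    """True if a trial of num_samples steps of size dt satisfies the rule."""
    return num_samples * dt >= factor * tau_prior


# tau of about 200 ms recorded in 4 ms bins: 500 bins, i.e. 2 s per trial
print(min_trial_samples(tau_prior=200.0, dt=4.0))  # 500
print(trials_long_enough(num_samples=400, dt=4.0, tau_prior=200.0))  # False
```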

4.4 Toolbox interface to estimate correlation coefficients

The correlation coefficients are calculated by calling the coefficients() function, with the method keyword.

```python
import numpy as np
import mrestimator as mre

# src: data in the trial structure, e.g. from mre.input_handler() as in Listing 1

# typical keyword arguments, steps from 1 to 500
rks = mre.coefficients(src, method='stationarymean', steps=(1, 500))

# create custom steps as a numpy array,
# here the odd steps from 1 to 749 (increment 2)
my_steps = np.arange(1, 750, 2)

# specify the created steps,
# step size dt and unit of the time-step
rks = mre.coefficients(src, method='stationarymean', steps=my_steps,
                       dt=1, dtunit='bp steps')
```

From the code example above, it is clear that one has to choose the k-values for which the coefficients are calculated. This choice needs to reflect the data: the chosen steps determine the range that can be fitted. If too few steps are included, the tail of the exponential is overlooked, whereas if too many steps are included, fluctuations may cause overfitting. A future version of the toolbox will give a recommendation; for now, this is implemented as a console warning.

The k-values can be specified with the steps argument, either by specifying a lower and upper threshold or by explicitly passing an array of desired values. To give the rk physical meaning, the function also takes the time bin size Δt (the duration of one step in k) and the time unit as arguments: dt and dtunit, respectively. These properties become part of the returned data structure CoefficientResult, so that the subsequent fit and plot routines can use them.

4.5 Toolbox data structure

Recordings are often repeated with similar conditions to create a set of trials. We took this into account and built the toolbox on the assumption that we always have a trial structure, even if there is only a single recording.

The trial structure is incorporated in a two-dimensional NumPy array [20–22], where the first index (i) labels the trial. The second index (t) specifies the time step of the trial’s activity recording $A_{i,t}$, where time is discretized and each time step has size Δt. All trials must have the same length and the same Δt (in other words, they should be recorded with the same sampling rate).

Because all further processing steps rely on this particular format, we provide input_handler(), which attempts to convert data structures into this format. input_handler() works with nested lists, NumPy arrays or strings containing file paths. Wildcards in the file path are expanded, and all matching files are imported. If a file has multiple columns, each column is taken to be a trial. To select which columns to import, specify for example usecols=(0,1,2), which would import the first three columns.
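For readers who prefer to prepare the trial structure themselves, the following NumPy sketch shows the target layout; it is not the implementation of input_handler(). It assumes a whitespace-separated text file with one column per trial, read here from an in-memory string.

```python
import io

import numpy as np

# hypothetical file content: three columns (= three trials), one row per time step
text = "0 2 1\n1 3 0\n4 1 2\n2 0 5\n"

# read the first three columns; loadtxt returns shape (T, num_columns)
columns = np.loadtxt(io.StringIO(text), usecols=(0, 1, 2))
trials = columns.T  # trial structure: shape (num_trials, T) = (3, 4)

# a single recording still becomes a 2D array with one trial: shape (1, T)
single = np.atleast_2d(np.array([5.0, 3.0, 4.0, 6.0]))

# nested lists convert cleanly only if all trials have the same length
nested = np.asarray([[1, 2, 3], [4, 5, 6]], dtype=float)
print(trials.shape, single.shape, nested.shape)  # (3, 4) (1, 4) (2, 3)
```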

4.6 Error estimation

The toolbox provides confidence intervals based on bootstrap resampling [41]. Resampling usually requires the original data to be cut into chunks (bins) that are recombined (drawing with replacement) to create new realizations, the so-called bootstrap samples. Because the toolbox works on the trial structure, the input data usually does not need to be modified: each trial becomes a bin that can then be drawn with replacement to contribute to the bootstrap sample. While this is a good choice if sufficient (∼100) trials are provided, using trials directly for resampling means that no error estimates are possible with a single trial. If no trial structure is available, such as for resting-state data, an easy workaround is to manually cut long time series into shorter chunks to artificially create the trial structure [19]. The error estimation via bootstrapping is implemented in the coefficients(), fit() and full_analysis() functions. All three take the numboot argument to specify how many bootstrap samples are created.
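Both ideas from this paragraph can be sketched in a few lines of NumPy: cutting one long recording into pseudo-trials, and resampling trials with replacement to obtain a percentile confidence interval. The function names are hypothetical, the per-trial statistic is a placeholder (in practice it could be the per-trial coefficient r_{i,k}), and the 75% level mirrors the default mentioned in the caption of Fig 1; the toolbox's internal procedure may differ in detail.

```python
import numpy as np


def chunk_into_trials(series, chunk_len):
    """Cut one long recording into equal-length pseudo-trials (remainder dropped)."""
    series = np.asarray(series)
    num = len(series) // chunk_len
    return series[: num * chunk_len].reshape(num, chunk_len)


def bootstrap_ci(per_trial_values, numboot=100, seed=101, level=0.75):
    """Percentile confidence interval of the trial average, resampling trials."""
    rng = np.random.default_rng(seed)
    vals = np.asarray(per_trial_values, dtype=float)
    # each bootstrap sample draws len(vals) trials with replacement
    idx = rng.integers(0, len(vals), size=(numboot, len(vals)))
    boot_means = vals[idx].mean(axis=1)
    return np.percentile(boot_means, [50 * (1 - level), 50 * (1 + level)])


series = np.random.default_rng(5).normal(size=10_050)
trials = chunk_into_trials(series, 1000)  # shape (10, 1000), last 50 samples dropped
per_trial = trials.mean(axis=1)           # placeholder for a per-trial statistic
lo, hi = bootstrap_ci(per_trial)
print(trials.shape, lo, hi)
```

Chunking discards the correlations across chunk boundaries, so chunks should be long compared with the expected τ, in line with the rule of thumb in Sec. 4.3.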

4.7 Getting help

Please visit the project on GitHub [42] and see our growing online documentation [43]. You can also call help() on every part of the toolbox:

```python
import mrestimator as mre

# as an example, create variables.
bp = mre.simulate_branching(m=0.98, a=10)

# try pressing tab e.g. after typing mre.c
rks = mre.coefficients(bp)

# help() prints the documentation,
# and works for variables and functions alike
help(rks)
help(mre.full_analysis)
```

5 Discussion

Our toolbox reliably estimates the intrinsic timescale from vastly different time series, from electrophysiological recordings to case numbers of epidemic spreading to any system that can be represented by an autoregressive process. Most importantly, it relies on the multi-step regression estimator, so that unbiased timescales are found even for heavily subsampled systems [5].

In this work, we also took a careful look at how a limited duration of the recordings, a common problem in all data-driven approaches, can bias our estimator [23, 24]. With extensive numerical simulations, we showed that the estimator is robust if conservatively formulated guidelines are followed. We can also bolster our previous claim [5] that the estimator is very data efficient. Moreover, short time series (trials) can be compensated by increasing the number of trials.

The toolbox thereby enables a systematic study of intrinsic timescales, which are important for a variety of questions in neuroscience [44]. Using the branching process as a simple model of neuronal activity, it is intuitive to think of the intrinsic timescale as the duration over which any perturbation reverberates (or persists) within the network [13, 45]. According to this intuition, different timescales should benefit different functional aspects of cortical networks [12, 46, 47].
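This intuition can be made concrete with the relation m = exp(−Δt/τ) (see also Appendix B). In a branching process, one extra spike is expected to cause m^k extra spikes k steps later, so the perturbation has decayed to 1/e after τ/Δt steps. The sketch below evaluates τ = −Δt/ln m and shows how τ grows as m approaches the critical value 1. The function names and the bin size Δt = 4 ms are illustrative assumptions.

```python
import math


def timescale(m, dt):
    """tau = -dt / ln(m), the inverse of m = exp(-dt / tau); requires 0 < m < 1."""
    if not 0.0 < m < 1.0:
        raise ValueError("a finite intrinsic timescale requires 0 < m < 1")
    return -dt / math.log(m)


def perturbation_response(m, steps):
    """Expected extra activity k steps after one additional spike: m**k."""
    return [m ** k for k in range(steps + 1)]


dt = 4.0  # ms, an illustrative bin size
for m in (0.9, 0.98, 0.99, 0.999):
    tau = timescale(m, dt)
    # close to criticality (m -> 1), tau approaches dt / (1 - m)
    print(f"m = {m}: tau = {tau:.1f} ms, dt/(1-m) = {dt / (1 - m):.1f} ms")
```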

Experimental evidence indeed shows different timescales for different cortical networks [5, 48]. It even suggests a temporal hierarchy of brain areas [1, 2, 49]; areas responsible for sensory integration feature short timescales, while areas responsible for higher-level cognitive processes feature longer timescales. For cognitive processes (for example during task-solving), the intrinsic timescale was further linked to working memory. In particular, working memory might be implemented through neurons with long timescales [3, 4, 50].

In general, recordings can exhibit multiple timescales simultaneously [51–53]. Such recordings can be analyzed with the toolbox by using a custom fit function (e.g. a sum of exponential functions, see Sec. 3). However, it is important to be aware of the possible pitfalls of fitting elaborate functions to empirical data [53, 54]. In our experience, most recordings exhibit a single dominant timescale.
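As an example of such a custom fit function, here is a sum of two exponentials written as a plain Python function. The name, the parameter order (lag k first, then the fit parameters) and the parameter values are assumptions for illustration; Sec. 3 describes how the toolbox expects custom functions to be passed. Writing each term as q**k with q = exp(−1/τ) keeps the function usable with a scalar k or with a NumPy array of lags, as long as the parameters themselves are scalars.

```python
import math


def two_timescales(k, tau1, a1, tau2, a2):
    """Hypothetical custom fit function: r_k = a1*exp(-k/tau1) + a2*exp(-k/tau2).

    k and the timescales must be given in the same units.
    """
    return a1 * math.exp(-1.0 / tau1) ** k + a2 * math.exp(-1.0 / tau2) ** k


# illustrative parameters: a fast (20 steps) and a slow (200 steps) component
params = dict(tau1=20.0, a1=0.3, tau2=200.0, a2=0.2)
for k in (0, 10, 50, 100, 400):
    print(k, round(two_timescales(k, **params), 4))
```

With four free parameters, the fast and slow components can trade off against each other when fitted to noisy coefficients, which is one reason to prefer the simplest fit function that describes the data.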

Lastly, it was theorized that biological recurrent networks can adapt their timescale in order to optimize their processing for a particular task [46, 55, 56]. For artificial recurrent networks, such a tuning capability was already shown to be attainable by operating around the critical point (of a dynamic second-order phase transition) [15, 32, 47, 57]. For instance, reducing the distance to criticality increases the information storage in these networks [10, 12]. At the same time, the observed intrinsic timescale increases. It is plausible that the mechanisms of near-critical, artificial systems also apply to cortical networks [58–60]. This and other hypotheses can now be reliably tested with our toolbox and properly designed experiments [8]. For applications of our approach and of the MR. Estimator toolbox, see e.g. Refs. [48, 61, 62] and Refs. [7, 36, 63], respectively.

6 Appendix

6.1 A Real-world Example

Listing 2. Minimal script that shows how to prepare real-world data [30, 64] and produces Fig 2 from the main text. Characteristic of this dataset are theta oscillations (5–10 Hz) that carry over to the autocorrelation function. We first create a time series of activity by time-binning the spike times. Then, we create an artificial trial structure to demonstrate error estimation and apply the built-in fit functions. Last, we print the frequency ν = 6.13 Hz of the theta oscillations as an example of how to access the different parameters of the complex fit. The full script is available on GitHub [19]; for further details, also see the online documentation [43].

import numpy as np
import mrestimator as mre

# helper function to convert a list of time stamps
# into a (binned) time series of activity
def bin_spike_times_unitless(spike_times, bin_size):
    # assumes spike_times is sorted in ascending order
    last_spike = spike_times[-1]
    # floor + 1 (instead of ceil) so that a spike lying exactly
    # on a bin edge still has a bin to go into
    num_bins = int(np.floor(last_spike / bin_size)) + 1
    res = np.zeros(num_bins)
    for spike_time in spike_times:
        target_bin = int(np.floor(spike_time / bin_size))
        res[target_bin] = res[target_bin] + 1
    return res

# load the spike times
res = np.loadtxt('./crcns/hc2/ec013.527/ec013.527.res.1')
# the .res.x files contain the time stamps of spikes detected
# by electrode x, sampled at 20 kHz, i.e. 0.05 ms per time step.
# we want 'spiking activity': spikes added up during a given
# time. usually, ∼4 ms time bins (windows) are a good first guess
act = bin_spike_times_unitless(res, bin_size=80)

# to get error estimates, we create 25 artificial trials by
# splitting the data. not recommended for non-stationary data
triallen = int(np.floor(len(act) / 25))
trials = np.zeros(shape=(25, triallen))
for i in range(0, 25):
    trials[i] = act[i * triallen:(i + 1) * triallen]

# now we could run the analysis and will get error estimates
# out = mre.full_analysis(trials, dt=4, dtunit='ms', kmax=800,
#                         method='trialseparated')
# however, in this dataset we will find theta oscillations.
# let's try the other fit functions, too.
out = mre.full_analysis(
    trials,
    dt=4,
    dtunit='ms',
    kmax=800,
    method='trialseparated',
    fitfuncs=['exponential', 'exponential_offset', 'complex'],
    targetdir='./',
    saveoverview=True,
)

# by assigning the result of mre.full_analysis(…) to a
# variable, we can use fit results for further processing:
# the oscillation frequency nu is fitted by the complex fit
# function as the 7th parameter (see online documentation).
# it is in units of 1/dtunit and we used 'ms'.
print(f"theta frequency: {out.fits[2].popt[6] * 1000} [Hz]")
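To check the unit bookkeeping in Listing 2, the following dependency-free sketch mirrors the binning helper. At 20 kHz one time step is 0.05 ms, so a 4 ms bin spans 80 steps, and a spike lying exactly on a bin edge is counted in the next bin. The function name `bin_spike_times` and the toy time stamps are hypothetical.

```python
from collections import Counter


def bin_spike_times(spike_times, bin_size):
    """Count spikes per bin of `bin_size` time steps (pure-Python sketch)."""
    if not spike_times:
        return []
    counts = Counter(int(t // bin_size) for t in spike_times)
    num_bins = int(max(spike_times) // bin_size) + 1  # the last spike always gets a bin
    return [counts.get(i, 0) for i in range(num_bins)]


sampling_rate_hz = 20_000  # .res time stamps: 20 kHz, i.e. 0.05 ms per step
bin_ms = 4.0
bin_size = round(bin_ms * 1e-3 * sampling_rate_hz)  # 80 time steps per bin

toy_spikes = [0, 79, 80, 250]  # time stamps in units of 0.05 ms
print(bin_size, bin_spike_times(toy_spikes, bin_size))  # prints: 80 [2, 1, 0, 1]
```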

The spiking data from rats were recorded by Mizuseki et al. [30] with experimental protocols approved by the Institutional Animal Care and Use Committee of Rutgers University. The data were obtained from the NSF-funded CRCNS data sharing website [64].

6.2 B Short Trials Cause Bias

The data shown in Fig 5 were created with the simulate_branching() function included in the toolbox. Every measurement was repeated 100 times and featured 50 trials, a target activity of 1000 and no subsampling (the bias investigated here is independent of subsampling). The colored lines correspond to the median across the 100 independent simulations. Error estimates from the toolbox were calculated but not plotted for clarity; in the red shaded area of Fig 5, the very short trials lead to low statistics (and large error bars). The error bars shown represent the standard deviation across the 100 simulations. The included steps k covered [1 : 20τ], if available, which corresponds to the fit range of the exponential with offset.

To further illustrate the bias observed in Fig 5, Fig 6 shows the correlation coefficients $r_k$ that the toolbox found with the two different methods. When trials are short, the coefficients found by the trialseparated method are offset and skewed. The stationarymean method finds the correct coefficients because the estimation profits from the trial structure: assuming stationarity, the mean is estimated across all trials instead of within each short trial. Since in experiments neither the true timescale is known nor whether the stationarity assumption holds, we suggest comparing the results of both methods: if they agree, this is a good indication that the trials are long enough.
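The difference between the two methods can be reproduced in a few lines of plain Python. This sketch is a simplified reading of the two methods, not the toolbox's code: `r1_trialseparated` estimates mean and variance within each trial and averages the per-trial coefficients, while `r1_stationarymean` pools one mean and one variance over all trials. A stationary AR(1) process with m = 0.99 (τ ≈ 100 time steps) and 50 trials of only 50 steps stands in for the branching process of Fig 5; all names are hypothetical.

```python
import random


def simulate_ar1_trials(m, num_trials, trial_len, seed=1):
    """Independent stationary AR(1) trials, x_{t+1} = m * x_t + unit Gaussian noise."""
    rng = random.Random(seed)
    stationary_sd = (1.0 / (1.0 - m * m)) ** 0.5
    trials = []
    for _ in range(num_trials):
        x = rng.gauss(0.0, stationary_sd)  # start each trial in the stationary state
        trial = [x]
        for _ in range(trial_len - 1):
            x = m * x + rng.gauss(0.0, 1.0)
            trial.append(x)
        trials.append(trial)
    return trials


def r1_trialseparated(trials):
    """Mean and variance estimated within each trial; per-trial r_1 are averaged."""
    estimates = []
    for trial in trials:
        mean = sum(trial) / len(trial)
        d = [v - mean for v in trial]
        cov = sum(a * b for a, b in zip(d, d[1:])) / (len(d) - 1)
        var = sum(a * a for a in d) / len(d)
        estimates.append(cov / var)
    return sum(estimates) / len(estimates)


def r1_stationarymean(trials):
    """One mean and one variance pooled over all trials (assumes stationarity)."""
    values = [v for trial in trials for v in trial]
    mean = sum(values) / len(values)
    products = [(a - mean) * (b - mean) for trial in trials for a, b in zip(trial, trial[1:])]
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return (sum(products) / len(products)) / var


m = 0.99  # tau = -dt / ln(m), roughly 100 time steps
trials = simulate_ar1_trials(m, num_trials=50, trial_len=50)
r_ts = r1_trialseparated(trials)
r_sm = r1_stationarymean(trials)
print(f"true m = {m}, trialseparated r_1 = {r_ts:.3f}, stationarymean r_1 = {r_sm:.3f}")
```

With trials this short (T/τ ≈ 0.5), the trial-separated value falls clearly below m, whereas the pooled estimate stays close to it, in line with the two panels of Fig 6.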

Fig 6. Correlation coefficients $r_k$ for τ = 10² (orange in Fig 5).

Individual background lines stem from the 100 independent repetitions. Left: Coefficients are shifted and skewed for short trial length T/τ when using the trialseparated method. The solid foreground lines are obtained from Eq. 4.07 of [23]. Right: With 50 trials and the stationarymean method, even very short (green) time series yield unbiased coefficients and, ultimately, precise estimates of the intrinsic timescale.

The black dashed line in Fig 5 is derived from the analytic solution Eq. 4.07 in Ref. [23], which gives the expectation value of the biased correlation coefficient as a function of the trial length T. For simplicity, we focus on the leading-order estimate of the branching parameter via the one-step autocorrelation function. Starting from Eq. 4.07 in Ref. [23],

| (18) |

| (19) |

cf. Eqs (4) and (11). Inserted into Eq (13) and with m = exp(−Δt/τ), we find

| (20) |

| (21) |

| (22) |

| (23) |

For τ ≫ Δt, we obtain to leading order

| (24) |

For Fig 5—where Δt = 1, x = T/τ and —this means that

| (25) |
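As a numerical companion to this derivation, the sketch below assumes the familiar leading-order result for the lag-one coefficient of an AR(1) process with estimated mean, E[r̂_1] ≈ m − (1 + 3m)/n for n samples. We take this to be the k = 1 case of Eq. 4.07 in Ref. [23]; that identification is our assumption. The sketch inserts m = exp(−Δt/τ) and converts back to a timescale via τ̂ = −Δt/ln r̂_1. Expanding for τ ≫ Δt (m ≈ 1 − Δt/τ, 1 + 3m ≈ 4) gives τ̂/τ ≈ x/(x + 4) with x = T/τ. This is our own estimate and need not coincide term by term with Eqs (24) and (25). The function name is hypothetical.

```python
import math


def biased_timescale(tau, trial_len, dt=1.0):
    """Timescale inferred from the expected lag-1 coefficient of one short trial.

    Assumes E[r_1] = m - (1 + 3m) / n with n = trial_len / dt samples and
    m = exp(-dt / tau); only meaningful while that expression stays positive.
    """
    m = math.exp(-dt / tau)
    n = trial_len / dt
    r1 = m - (1.0 + 3.0 * m) / n
    if r1 <= 0.0:
        raise ValueError("trial too short for the leading-order expression")
    return -dt / math.log(r1)


tau = 100.0
for x in (1, 10, 100, 1000):  # x = T / tau
    ratio = biased_timescale(tau, x * tau) / tau
    # for tau >> dt this approaches x / (x + 4)
    print(f"T/tau = {x}: tau_hat/tau = {ratio:.3f}, x/(x+4) = {x / (x + 4):.3f}")
```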

Acknowledgments

We thank Leandro Fosque, Gerardo Ortiz and John Beggs as well as Danylo Ulianych and Michael Denker for constructive discussion and helpful comments. We are grateful for careful proofreading and input from Jorge de Heuvel and Christina Stier.

Data Availability

Referenced scripts are available at https://github.com/Priesemann-Group/mrestimator/blob/v0.1.7/examples/paper. Simulation data are available at https://doi.org/10.12751/g-node.licm4y.

Funding Statement

FPS and JD were funded by the Volkswagen Foundation through the SMARTSTART Joint Training Program Computational Neuroscience. JZ is supported by the Joachim Herz Stiftung. All authors acknowledge funding by the Max Planck Society. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Murray JD, Bernacchia A, Freedman DJ, Romo R, Wallis JD, Cai X, et al. A Hierarchy of Intrinsic Timescales across Primate Cortex. Nat Neurosci. 2014;17:1661–1663. 10.1038/nn.3862

- 2. Chaudhuri R, Knoblauch K, Gariel MA, Kennedy H, Wang XJ. A Large-Scale Circuit Mechanism for Hierarchical Dynamical Processing in the Primate Cortex. Neuron. 2015;88:419–431. 10.1016/j.neuron.2015.09.008

- 3. Cavanagh SE, Towers JP, Wallis JD, Hunt LT, Kennerley SW. Reconciling Persistent and Dynamic Hypotheses of Working Memory Coding in Prefrontal Cortex. Nat Commun. 2018;9:3498. 10.1038/s41467-018-05873-3

- 4. Wasmuht DF, Spaak E, Buschman TJ, Miller EK, Stokes MG. Intrinsic Neuronal Dynamics Predict Distinct Functional Roles during Working Memory. Nat Commun. 2018;9:3499. 10.1038/s41467-018-05961-4

- 5. Wilting J, Priesemann V. Inferring Collective Dynamical States from Widely Unobserved Systems. Nat Commun. 2018;9:2325. 10.1038/s41467-018-04725-4

- 6. Watanabe T, Rees G, Masuda N. Atypical Intrinsic Neural Timescale in Autism. eLife. 2019;8:e42256. 10.7554/eLife.42256

- 7. Hagemann A, Wilting J, Samimizad B, Mormann F, Priesemann V. No Evidence That Epilepsy Impacts Criticality in Pre-Seizure Single-Neuron Activity of Human Cortex. ArXiv200410642 Phys Q-Bio. 2020.

- 8. Dehning J, Dotson NM, Hoffman SJ, Gray CM, Priesemann V. Hierarchy and task-dependence of intrinsic timescales across primate cortex. in prep.

- 9. Schuecker J, Goedeke S, Helias M. Optimal Sequence Memory in Driven Random Networks. Phys Rev X. 2018;8:041029.

- 10. Boedecker J, Obst O, Lizier JT, Mayer NM, Asada M. Information Processing in Echo State Networks at the Edge of Chaos. Theory Biosci. 2012;131:205–213. 10.1007/s12064-011-0146-8

- 11. Wibral M, Lizier JT, Priesemann V. Bits from Brains for Biologically Inspired Computing. Front Robot AI. 2015;2.

- 12. Cramer B, Stöckel D, Kreft M, Wibral M, Schemmel J, Meier K, et al. Control of Criticality and Computation in Spiking Neuromorphic Networks with Plasticity. Nat Commun. 2020;11:2853. 10.1038/s41467-020-16548-3

- 13. Beggs JM, Plenz D. Neuronal Avalanches in Neocortical Circuits. J Neurosci. 2003;23:11167–11177. 10.1523/JNEUROSCI.23-35-11167.2003

- 14. Zierenberg J, Wilting J, Priesemann V, Levina A. Description of Spreading Dynamics by Microscopic Network Models and Macroscopic Branching Processes Can Differ Due to Coalescence. Phys Rev E. 2020;101:022301. 10.1103/PhysRevE.101.022301

- 15. Wilting J, Priesemann V. 25 Years of Criticality in Neuroscience—Established Results, Open Controversies, Novel Concepts. Current Opinion in Neurobiology. 2019;58:105–111. 10.1016/j.conb.2019.08.002

- 16. Jun JJ, Steinmetz NA, Siegle JH, Denman DJ, Bauza M, Barbarits B, et al. Fully Integrated Silicon Probes for High-Density Recording of Neural Activity. Nature. 2017;551:232–236. 10.1038/nature24636

- 17. Stringer C, Pachitariu M, Steinmetz N, Reddy CB, Carandini M, Harris KD. Spontaneous Behaviors Drive Multidimensional, Brainwide Activity. Science. 2019;364:eaav7893. 10.1126/science.aav7893

- 18. Pastor-Satorras R, Castellano C, Van Mieghem P, Vespignani A. Epidemic Processes in Complex Networks. Rev Mod Phys. 2015;87:925–979. 10.1103/RevModPhys.87.925

- 19. Referenced scripts are available at https://github.com/Priesemann-Group/mrestimator/blob/v0.1.7/examples/paper.

- 20. Oliphant TE. NumPy: A Guide to NumPy. USA: Trelgol Publishing; 2006. Available from: http://www.numpy.org/.

- 21. van der Walt S, Colbert SC, Varoquaux G. The NumPy Array: A Structure for Efficient Numerical Computation. Comput Sci Eng. 2011;13:22–30. 10.1109/MCSE.2011.37

- 22. Harris CR, Millman KJ, van der Walt SJ, Gommers R, Virtanen P, Cournapeau D, et al. Array Programming with NumPy. Nature. 2020;585:357–362. 10.1038/s41586-020-2649-2

- 23. Marriott FHC, Pope JA. Bias in the Estimation of Autocorrelations. Biometrika. 1954;41:390–402. 10.1093/biomet/41.3-4.390

- 24. Grigera TS. Everything You Wish to Know about Correlations but Are Afraid to Ask. ArXiv200201750 Cond-Mat. 2020.

- 25. Bernacchia A, Seo H, Lee D, Wang XJ. A Reservoir of Time Constants for Memory Traces in Cortical Neurons. Nat Neurosci. 2011;14:366–372. 10.1038/nn.2752

- 26. Hasson U, Yang E, Vallines I, Heeger DJ, Rubin N. A Hierarchy of Temporal Receptive Windows in Human Cortex. J Neurosci. 2008;28:2539–2550. 10.1523/JNEUROSCI.5487-07.2008

- 27. Chaudhuri R, Bernacchia A, Wang XJ. A Diversity of Localized Timescales in Network Activity. eLife. 2014;3:e01239. 10.7554/eLife.01239

- 28. Helias M, Tetzlaff T, Diesmann M. The Correlation Structure of Local Neuronal Networks Intrinsically Results from Recurrent Dynamics. PLOS Computational Biology. 2014;10:e1003428. 10.1371/journal.pcbi.1003428

- 29. König P. A Method for the Quantification of Synchrony and Oscillatory Properties of Neuronal Activity. Journal of Neuroscience Methods. 1994;54:31–37. 10.1016/0165-0270(94)90157-0

- 30. Mizuseki K, Sirota A, Pastalkova E, Buzsáki G. Theta Oscillations Provide Temporal Windows for Local Circuit Computation in the Entorhinal-Hippocampal Loop. Neuron. 2009;64:267–280. 10.1016/j.neuron.2009.08.037

- 31. Meisel C, Klaus A, Vyazovskiy VV, Plenz D. The Interplay between Long- and Short-Range Temporal Correlations Shapes Cortex Dynamics across Vigilance States. J Neurosci. 2017;37:10114–10124. 10.1523/JNEUROSCI.0448-17.2017

- 32. Muñoz MA. Colloquium: Criticality and Dynamical Scaling in Living Systems. Rev Mod Phys. 2018;90:031001. 10.1103/RevModPhys.90.031001

- 33. Harris TE. The Theory of Branching Processes. Grundlehren Der Mathematischen Wissenschaften. Berlin Heidelberg: Springer-Verlag; 1963. Available from: https://www.springer.com/gp/book/9783642518683.

- 34. Heathcote CR. Random Walks and a Price Support Scheme. Aust J Stat. 1965;7:7–14. 10.1111/j.1467-842X.1965.tb00256.x

- 35. Pakes AG. The Serial Correlation Coefficients of Waiting Times in the Stationary GI/M/1 Queue. Ann Math Stat. 1971;42:1727–1734. 10.1214/aoms/1177693171

- 36. de Heuvel J, Wilting J, Becker M, Priesemann V, Zierenberg J. Characterizing Spreading Dynamics of Subsampled Systems with Non-Stationary External Input. ArXiv200500608 Q-Bio. 2020.

- 37. Wei CZ, Winnicki J. Estimation of the Means in the Branching Process with Immigration. Ann Stat. 1990;18:1757–1773.

- 38. Beggs JM. Neuronal avalanche. Scholarpedia. 2007;2(1):1344. 10.4249/scholarpedia.1344

- 39. Priesemann V, Munk MH, Wibral M. Subsampling Effects in Neuronal Avalanche Distributions Recorded in Vivo. BMC Neuroscience. 2009;10:40. 10.1186/1471-2202-10-40

- 40. Levina A, Priesemann V. Subsampling Scaling. Nat Commun. 2017;8:15140. 10.1038/ncomms15140

- 41. Efron B. The Jackknife, the Bootstrap, and Other Resampling Plans. SIAM; 1982.

- 42. The toolbox is available via pip and on GitHub https://github.com/Priesemann-Group/mrestimator.

- 43. Full online documentation is available at https://mrestimator.readthedocs.io.

- 44. Huang C, Doiron B. Once upon a (Slow) Time in the Land of Recurrent Neuronal Networks…. Current Opinion in Neurobiology. 2017;46:31–38. 10.1016/j.conb.2017.07.003

- 45. London M, Roth A, Beeren L, Häusser M, Latham PE. Sensitivity to Perturbations in Vivo Implies High Noise and Suggests Rate Coding in Cortex. Nature. 2010;466:123–127. 10.1038/nature09086

- 46. Wilting J, Dehning J, Pinheiro Neto J, Rudelt L, Wibral M, Zierenberg J, et al. Operating in a Reverberating Regime Enables Rapid Tuning of Network States to Task Requirements. Front Syst Neurosci. 2018;12. 10.3389/fnsys.2018.00055

- 47. Zierenberg J, Wilting J, Priesemann V, Levina A. Tailored Ensembles of Neural Networks Optimize Sensitivity to Stimulus Statistics. Phys Rev Research. 2020;2:013115. 10.1103/PhysRevResearch.2.013115

- 48. Wilting J, Priesemann V. Between Perfectly Critical and Fully Irregular: A Reverberating Model Captures and Predicts Cortical Spike Propagation. Cereb Cortex. 2019;29:2759–2770. 10.1093/cercor/bhz049

- 49. Demirtaş M, Burt JB, Helmer M, Ji JL, Adkinson BD, Glasser MF, et al. Hierarchical Heterogeneity across Human Cortex Shapes Large-Scale Neural Dynamics. Neuron. 2019;101:1181–1194.e13. 10.1016/j.neuron.2019.01.017

- 50. Loidolt M, Rudelt L, Priesemann V. Sequence Memory in Recurrent Neuronal Network Can Develop without Structured Input. bioRxiv. 2020; p. 2020.09.15.297580.

- 51. Chaudhuri R, He BJ, Wang XJ. Random Recurrent Networks Near Criticality Capture the Broadband Power Distribution of Human ECoG Dynamics. Cereb Cortex. 2018;28:3610–3622. 10.1093/cercor/bhx233

- 52. Okun M, Steinmetz NA, Lak A, Dervinis M, Harris KD. Distinct Structure of Cortical Population Activity on Fast and Infraslow Timescales. Cereb Cortex. 2019;29:2196–2210. 10.1093/cercor/bhz023

- 53. Zeraati R, Engel TA, Levina A. Estimation of Autocorrelation Timescales with Approximate Bayesian Computations. Neuroscience; 2020. Available from: http://biorxiv.org/lookup/doi/10.1101/2020.08.11.245944.

- 54. Shrager RI, Hendler RW. Some Pitfalls in Curve-Fitting and How to Avoid Them: A Case in Point. J Biochem Biophys Methods. 1998;36:157–173. 10.1016/S0165-022X(98)00007-4

- 55. Beggs JM. The Criticality Hypothesis: How Local Cortical Networks Might Optimize Information Processing. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences. 2008;366:329–343. 10.1098/rsta.2007.2092

- 56. Fontenele AJ, de Vasconcelos NAP, Feliciano T, Aguiar LAA, Soares-Cunha C, Coimbra B, et al. Criticality between Cortical States. Phys Rev Lett. 2019;122:208101. 10.1103/PhysRevLett.122.208101

- 57. Bertschinger N, Natschläger T. Real-Time Computation at the Edge of Chaos in Recurrent Neural Networks. Neural Comput. 2004;16:1413–1436. 10.1162/089976604323057443

- 58. Levina A, Herrmann JM, Geisel T. Dynamical Synapses Causing Self-Organized Criticality in Neural Networks. Nature Phys. 2007;3:857–860. 10.1038/nphys758

- 59. Hellyer PJ, Jachs B, Clopath C, Leech R. Local Inhibitory Plasticity Tunes Macroscopic Brain Dynamics and Allows the Emergence of Functional Brain Networks. NeuroImage. 2016;124:85–95. 10.1016/j.neuroimage.2015.08.069

- 60. Zierenberg J, Wilting J, Priesemann V. Homeostatic Plasticity and External Input Shape Neural Network Dynamics. Phys Rev X. 2018;8:031018.

- 61. Ma Z, Turrigiano GG, Wessel R, Hengen KB. Cortical Circuit Dynamics Are Homeostatically Tuned to Criticality In Vivo. Neuron. 2019;104:655–664.e4. 10.1016/j.neuron.2019.08.031

- 62. Beggs JM. The Critically Tuned Cortex. Neuron. 2019;104:623–624. 10.1016/j.neuron.2019.10.039

- 63. Skilling QM, Ognjanovski N, Aton SJ, Zochowski M. Critical Dynamics Mediate Learning of New Distributed Memory Representations in Neuronal Networks. Entropy. 2019;21:1043. 10.3390/e21111043

- 64. The data by Mizuseki et al. are available on crcns.org: 10.6080/K0Z60KZ9.