Summary

To formulate a machine learning (ML) model that establishes the structure-property correlation for a polymer's glass transition temperature (Tg), we collect a diverse set of nearly 13,000 real homopolymers from the largest polymer database, PoLyInfo. We train a deep neural network (DNN) model on 6,923 experimental Tg values, using Morgan fingerprint representations of the chemical structures of these polymers. Interestingly, the trained DNN model can reasonably predict the unknown Tg values of polymers with distinct molecular structures, as verified against molecular dynamics simulations and experimental results. With its transferability and generalization ability validated, the ML model is used for high-throughput screening of nearly one million hypothetical polymers. We identify more than 65,000 promising candidates with Tg > 200°C, which is 30 times more than the existing known high-temperature polymers (∼2,000 from PoLyInfo). The discovery of this large number of promising candidates will be of significant interest in the development and design of high-temperature polymers.

Keywords: machine learning, polymer, glass transition temperature, high-throughput screening, feature representation


Highlights

- Large datasets for polymer's glass transition temperature are collected
- Transferability of ML models depends on feature representations
- Molecular dynamics models and experimental results validate the formulated ML model
- Extensive promising candidates for high-temperature polymers are screened by ML model

The bigger picture

The design and development of high-temperature polymers has been an experimentally driven and trial-and-error process guided by experience, intuition, and conceptual insights. However, such an Edisonian approach is often costly, slow, biased toward certain chemical space domains, and limited to relatively small-scale studies, which may easily miss promising compounds. To overcome this challenge, we formulate a data-driven machine learning (ML) approach, integrated with high-fidelity molecular dynamics simulations, for quantitatively predicting the glass transition temperature of a polymer from its chemical structure and rapid screening of promising candidates for high-temperature polymers. Our work demonstrates that ML is a powerful method for the prediction and rapid screening of high-temperature polymers, particularly with growing large sets of experimental and computational data for polymeric materials.

Polymers with outstanding high-temperature properties have been identified as promising materials for aerospace, electronics, and automotive applications. However, the current design and development of high-temperature polymers has been an experimentally driven, trial-and-error process guided by experience, intuition, and conceptual insights. Therefore, we formulate a machine learning model that can quantitatively predict the glass transition temperature of a polymer from its chemical structure, so that more promising high-temperature polymers can be efficiently identified through high-throughput screening.

Introduction

Lightweight and high-strength polymers with outstanding high-temperature properties have been identified as promising materials for aerospace, electronics, and automotive applications.1, 2, 3 These high-temperature polymers are expected to have long-term durability at high temperatures, high thermal decomposition temperatures, or a high glass transition temperature (Tg). For example, polytetrafluoroethylene is a synthetic fluoropolymer of tetrafluoroethylene with a maximum service temperature >260°C, which has been widely used for non-stick coatings and insulation.4 Other successful high-temperature polymers include perfluoroalkoxy alkanes, polyether ether ketone (PEEK), and fluorinated ethylene propylene. The high-temperature properties of these polymers are realized through the heteroatoms in the polymer chain of thermoplastics.5, 6, 7 However, the molecular engineering and design of hydrocarbon polymers and other polymers with high-temperature properties remain to be explored. The current design and development of high-temperature polymers has been an experimentally driven, trial-and-error process guided by experience, intuition, and conceptual insights. For example, different experimental strategies have been developed to synthesize high-temperature hydrocarbon polymers, such as (1) enhancement of the tacticity of the polymer chains,8,9 (2) introduction of bulky pendant groups into the side chain,10, 11, 12 and (3) incorporation of cyclic structures into the backbone chain.13, 14, 15 Nevertheless, this Edisonian approach is often costly, slow, biased toward certain chemical space domains, and limited to relatively small-scale studies, which may easily miss promising compounds.16 Thus, a robust and reliable high-throughput screening method is essential for the discovery and design of high-temperature polymers.17

For high-temperature polymers, a critical property is the Tg,10,13,14 which determines the polymer's phase transition between a rubbery state and a glassy state, yielding orders of magnitude difference in elastic modulus.18 Tg is well known to be related to many factors, including molecular weight,19 chain stiffness,20 side groups,21 additives,22 and regularity.23 Considering these aspects, researchers have proposed theoretical correlations between the chemical structure and the Tg of polymers. These empirical methods are built upon the assumption that the chemical groups in the repeating units of the polymer chain contribute to the Tg additively with different weighting factors.24, 25, 26 For example, Van Krevelen and Te Nijenhuis18 and Hoftyzer and colleagues26 have proposed the “Molar Glass Transition Function,” based on nearly 600 experimental Tg values of polymers, with different group contributions and structural corrections to Tg. This approach provides an effective way to interpret Tg at the molecular level. However, this additive method is only applicable to polymers containing previously investigated chemical structures.18 Later, Dudowicz et al.27 formulated an analytic theory to estimate the Tg of polymer melts as a function of the relative rigidities of the chain backbone and side groups, monomer structure, polymer mass, and pressure, based on the generalized Lindemann criteria. This analytical theory can explain the general trends in the variation of Tg related to the microstructure of the polymer, e.g., the influences of side-chain length and of the relative rigidities between the side groups and the chain backbone. Nevertheless, it cannot be used to directly predict the Tg of a polymer from its chemical structure. Very recently, Xie et al.28 established a relationship between the Tg and the molecular structure of 32 conjugated polymers with a single adjustable parameter, an effective mobility value determined by the assigned atomic mobility for the repeating unit of conjugated polymers. The experimental results confirm that this effective mobility value is strongly correlated to the Tg of conjugated polymers, although they differ drastically in aromatic backbone and alkyl side-chain chemistry. Yet, quantitatively predicting a polymer's Tg from its chemical structure remains a significant challenge. We still lack a universal model that connects a polymer's Tg to its repeating unit and molecular structure.

With advancements in molecular simulation and high-performance computing, all-atom molecular dynamics (MD) simulations can reasonably predict a polymer's Tg,29 despite the limitations of computational cost, cooling rate, and uncertainty.30, 31, 32, 33 Nevertheless, it is not feasible to use these expensive MD simulations to explore the vast chemical space of polymers, defined by the almost infinite combinations of their chemical elements and molecular structures. With the growing number of polymer databases,16,30, 31, 32, 33 data-driven methods are emerging to build correlations between the chemical structure and the Tg of polymers, including the quantitative structure-property relationships (QSPR) method34, 35, 36 and machine learning (ML).37, 38, 39 For the QSPR method, a large array of molecular descriptors is extracted from the polymer's repeating unit, which applies to any chemical structure.40 For example, Katritzky et al. have extracted more than 400 constitutional, topological, geometrical, and quantum chemical descriptors for the repeating unit of the polymer.40 Subsequently, a multi-step linear regression analysis is adopted to fit these descriptors, leading to a good match between predicted and experimental Tg values for 88 homopolymers. Wu et al.41 encoded a descriptor vector of seven different fingerprints, such as standard, extended, hybridization, and MACCS, and their Bayesian linear model reported an R value of 0.916 for Tg prediction. Liu and Cao42 adopted an artificial neural network to predict the Tg of 113 polyacrylates and polystyrenes as a function of four molecular descriptors: the molecular average polarizability, the energy of the highest occupied molecular orbital, the total thermal energy, and the total entropy. Later, Cai et al.43 combined support vector regression with particle swarm optimization, using six quantum chemical descriptors as inputs, to predict Tg values for 32 methacrylate polymers. However, the QSPR method suffers from two major drawbacks: (1) it is expensive to quantify a large array of molecular descriptors, such as quantum chemical descriptors, which require time-consuming density-functional theory calculations; (2) the QSPR method might generate many parameters that are challenging to interpret physically, such as topological bond connectivity and the Kier shape index.40

Considering these aspects, several ML models have been established to predict a polymer's Tg directly from its chemical structure. For instance, Ramprasad and co-workers37, 38, 39,44 utilized three hierarchical levels of descriptors, including atomic level, QSPR, and morphological descriptors, for feature representation of polymers. They fitted their datasets of 451–1,321 polymers with the Gaussian process regression model in the polymer genome platform.38,45, 46, 47, 48 When using 1,321 polymers for training, their ML model reported a root-mean-square error of 27 K and an R² of 0.92.39 In addition to molecular descriptors as feature representation, ML models such as convolutional neural networks (CNNs) with image-based input have also been examined. For example, Miccio et al.49,50 converted the Simplified Molecular Input Line Entry System (SMILES) notations of 331 polymers into two-dimensional (2D) matrices (binary images) according to the presence or absence of the composing characters in the SMILES formulation. This approach can be used to predict the unknown Tg of polymers with average relative errors as low as 6%, and it does so without time-consuming calculations of molecular descriptors. Table 1 summarizes the database, feature representation, models, and prediction metrics from these theoretical, QSPR, and ML studies.

Table 1.

Summary of theoretical, QSPR, and machine learning (ML) models investigated in the literature

| Database | Features | Model | R² | Ref. |
|---|---|---|---|---|
| 600 | chemical groups | group contributions approach | N/A (a) | 18 |
| 32 | an effective mobility value | single adjustable parameter | N/A (b) | 28 |
| 113 | quantum chemical descriptors | artificial neural networks | 0.955 (c) | 42 |
| 37 | quantum chemical descriptors | support vector regression | 0.97 | 43 |
| 251 | descriptors | computational neural networks | 0.96 | 51 |
| 389 | descriptors | support vector regression | 0.78 | 52 |
| 133 | descriptors | random forest | N/A (d) | 53 |
| 88 | descriptors | multi-layer perceptron neural network | 0.96 | 54 |
| 77 | descriptors | support vector machine (SVM) | 0.92 | 55 |
| 54 | descriptors | artificial neural network | 0.91 | 56 |
| 52 | descriptors | artificial neural network | 0.978 (e) | 57 |
| 451 | hierarchy fingerprint | Gaussian process regression | 0.94 | 38 |
| 751 | hierarchy fingerprint | Gaussian process regression | 0.87 | 37 |
| 1,321 | hierarchy fingerprint | Gaussian process regression | 0.92 | 39 |
| 5,917 | combined fingerprint | Bayesian linear model | 0.916 (f) | 41 |
| 331 | SMILES-based binary images | convolutional neural network | N/A (g) | 49 |
| 234 | SMILES-based binary images | fully connected neural networks | N/A (h) | 50 |
| 6,923 + 5,690 + 1 million | descriptors; Morgan fingerprint; SMILES-based binary images | lasso regression; deep neural network; convolutional neural network | 0.80; 0.85; 0.87 | this work |

N/A, not applicable.

(a) About 80% of the calculated Tg values differed less than 20 K from the experimental values.

(b) Only root-mean-square error of 13°C was reported for all 32 alkylated conjugated polymers.

(c) R = 0.955 was reported for the prediction set.

(d) Only root-mean-square error of 4.76 K was reported for the test set of the model.

(e) R = 0.978 was reported for the test set.

(f) R = 0.916 was reported for the test set.

(g) The model performance was evaluated by relative error of 3%–8%.

(h) The model performance was evaluated by average relative errors of ∼3%.

Despite these extensive studies, we still face several significant challenges in creating ML models that directly predict a polymer's Tg from its chemical structure.16 Firstly, most of these data-driven models are built upon a small dataset of polymer Tg values with fewer than 1,000 data points, focusing on a certain category of polymers, such as polyacrylates and polystyrenes. It is very difficult to generalize these models to other classes of polymers because of the limited range of chemical space. Secondly, it is challenging to choose appropriate feature representations to describe the chemical structures of polymers. Molecular descriptors, fingerprints, and images have all been adopted for this purpose, but it is not clear which feature representation is the most appropriate for building a predictive ML model that explores a large chemical space of polymers. Finally, it is not straightforward to associate ML predictions of a polymer's Tg with physically meaningful quantities. Since most ML models are highly nonlinear with complicated architectures, it is difficult to pinpoint a specific set of physical quantities or chemical groups that are important in the prediction and design of a polymer's Tg.

To overcome the above challenges, we manually collected about 13,000 homopolymer structures from the largest polymer database, PoLyInfo.58 Copolymers formed by two types of monomers are not collected here because the effect of their different components on Tg requires extra consideration,59,60 and polymer composites are also excluded because their Tg is affected by the interplay between polymers and nanomaterials.61,62 Focusing on homopolymers allows us to concentrate on revealing the correlation between a polymer's chemical structure and its Tg. Among the roughly 13,000 homopolymers, 6,923 have experimental Tg values available and form dataset-1, as shown in Figure 1. The remaining 5,690 polymers without reported Tg values form dataset-2. Also, a benchmark database, named PI1M,63 with nearly one million hypothetical polymers generated by a recurrent neural network (RNN) model, is taken as dataset-3, for which the corresponding Tg values are unknown. Note that dataset-3 covers a similar chemical space to dataset-1 and dataset-2 because the RNN model is also trained on the PoLyInfo database, but it significantly populates regions where PoLyInfo data are sparse.63 Such a large and diverse dataset allows us to develop four representative ML models based on dataset-1, namely Lasso_Descriptor, Lasso_Fingerprint, DNN_Fingerprint, and CNN_Image, by using molecular descriptors, Morgan fingerprints, or images as inputs, and Lasso (least absolute shrinkage and selection operator), DNN (deep neural network), or CNN as the ML models. The predictivity and transferability of these ML models are tested on dataset-2 with distinct chemical substructures (Figure 1), in comparison with MD simulations and experimental results. Interestingly, our study reveals that the DNN_Fingerprint model can reasonably predict the Tg values of polymers from dataset-2, as the Morgan fingerprinting method64 can take into account the chemical connectivity and appearance of different substructures of a polymer's repeating unit. More importantly, we use these ML models to identify key molecular descriptors and chemical substructures that can significantly affect the polymer's Tg, providing physical insights into the prediction and design of the Tg of polymeric materials. We further examine the chemical functional groups of high-/low-Tg polymers and their common characteristics through Checkmol.65 We also identify strong correlations between these common functional groups and the key chemical substructures revealed by our ML models. Eventually, we apply the validated DNN_Fingerprint model for rapid screening of one million hypothetical polymers in PI1M (dataset-3), and identify more than 65,000 promising candidates for high-temperature polymers with Tg > 200°C. We then use MD simulations to validate the predicted Tg values of the top four high-temperature polymers, which are previously unexplored and have not been tested to date. Thus, our study demonstrates that ML is a powerful method for the prediction and rapid screening of high-temperature polymers, particularly with growing large sets of experimental and computational data for polymeric materials. The key molecular descriptors and chemical substructures informed by ML models, combined with identified chemical functional groups, are important design motifs for the molecular engineering of high-temperature polymers.

Figure 1.

Chemical space visualization of dataset-1, dataset-2, and dataset-3

(A) 2D visualization based on descriptors and fingerprints using the t-SNE algorithm. Dataset-1 has reported Tg values, and each data point is colored according to the corresponding Tg value. Dataset-2 and dataset-3 do not have reported Tg values and are colored yellow and red, respectively.

(B) Set diagram showing representative substructures in dataset-1 (green circle), dataset-2 (yellow circle), and dataset-3 (red circle) based on Morgan fingerprint. Some substructures are common for all datasets, while some others are unique to certain datasets.

Results and discussion

Dataset, feature representation, and chemical space

To formulate robust and predictive ML models for diverse polymers, we need to consider a larger dataset than previous studies did (cf. Table 1). Dataset-1 contains 6,923 polymers from the largest polymer database, PoLyInfo,58 as listed in Table 2. They are real polymers with experimentally measured Tg values reported in the literature. Thus, dataset-1 is well suited as a labeled dataset for ML model training. Experimentally measured Tg values depend on conditions such as the cooling or heating rate, or even the curing process and moisture content, so there cannot be an exact value for Tg.66, 67, 68, 69 Although there are variations in experimental measurements, the Tg reported under common experimental practice can be considered characteristic of the polymer alone and not of the measuring method.70 If measurement conditions are so extreme that the obtained Tg is not properly representative of the real value, such records will mislead all analysis, including ML model training.

Table 2.

Comparison of three datasets

A total of 5,690 real polymers in dataset-2 were collected from the same data source as dataset-1, but their Tg values were not previously reported. Dataset-3 is based on an ML-generated database, PI1M,63 with approximately one million hypothetical polymers. Note that PI1M is enumerated using a generative ML model, an RNN, based on PoLyInfo (dataset-1 plus dataset-2). These three datasets are regarded as similar to each other in terms of chemical space.63 The three collected datasets in Table 2 are more than one order of magnitude larger than most datasets from the literature in Table 1, covering a broader range of chemical space that involves various categories of polymers. The challenge of building ML models that generalize to all categories of polymers then becomes more straightforward to address with the collected large datasets.

All polymers' chemical formulas and structures are represented by the SMILES notation,71 which is a line notation for describing the structure of chemical species using short ASCII strings. For example, “∗C(C∗)C” represents the repeating unit for “poly(prop-1-ene).” It is worth noting that a special symbol “∗” is used to indicate the polymerization points for the repeating unit. From the same molecular block, such as “CCC,” the polymerization positions in ∗C(C∗)C take into account the bonding information between repeating units, and determine the spatial structure of the polymer chain. The chemical species contained in these three datasets include C, O, N, Cl, F, Br, I, S, Si, B, P, Sn, Fe, Na, Li, Ge, Se, K, Co, Ni, Ca, Cd, Pb, Zn, and Te.

One challenge when creating ML models for evaluating a polymer's Tg is choosing an appropriate feature representation to describe the chemical structures being studied. Representation options include descriptors, fingerprints, molecular graphs, molecular embeddings, quantum chemical quantities, images, etc. The effect of using different representations on Tg estimation has been demonstrated through systematic representation evaluation72 or separate model development.37, 38, 39,42,43,50, 51, 52, 53, 54, 55, 56, 57 In addition, the development of new representations remains critical for the development of high-performance ML models. To carry out a thorough study considering different types of representations, we explore three types of feature representation based on the SMILES notation of each polymer: molecular descriptors, Morgan fingerprints, and images, as presented in Figure 2. In terms of molecular descriptors, the feature-generating engine alvaDesc73 supports the calculation of about 5,305 descriptors within 32 categories, ranging from constitutional indices and ring descriptors to chirality descriptors.73,74 The ensemble of descriptors represents the physical and chemical characteristics of the polymers/molecules being studied and has been widely adopted in QSPR and ML models (Table 1). Thus, these molecular descriptors can provide physical information regarding charges, topological indices, functional groups, etc., of polymers. Among these 5,305 descriptors, 3,579 descriptors are available for all real polymers in dataset-1 and dataset-2. However, not all 3,579 descriptors are available for the one million hypothetical polymers in dataset-3. Around 5% of the hypothetical polymers in dataset-3 cannot be processed using alvaDesc, but this has little effect on the chemical space visualization based on molecular descriptors for dataset-3. We should emphasize that alvaDesc cannot process the ∗ symbol in the SMILES notation and, thus, it misses the chemical connectivity of the repeating units.

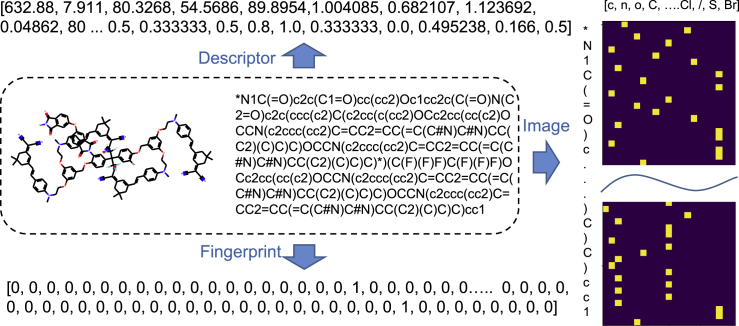

Figure 2.

Three types of feature representation calculated based on the polymer's SMILES notation for ML models: molecular descriptor, Morgan fingerprint, and image

In addition to molecular descriptors, we also choose the fingerprinting method (extended connectivity fingerprinting [ECFP])64 to numerically represent the chemical connectivity in a repeating unit of the polymer. Specifically, the fingerprinting method has a significant advantage over the traditional group contribution and molecular descriptor methods, where all the possible building blocks and molecular descriptors have to be defined a priori and remain static. The fingerprinting method, in contrast, is more dynamic and can evolve to include new chemical structures and connectivities.64 Essentially, to derive the ECFP of the repeating unit, we need to: (1) assign each atom an identifier, (2) update each atom's identifier based on its neighbors, (3) remove duplicates, and (4) fold the list of identifiers into a 2,048-bit vector (a Morgan fingerprint). In this case, we transform each polymer's SMILES notation into a binary “fingerprint” by using the Daylight-like fingerprinting algorithm as implemented in RDKit75 with radius 3 and 2,048 bits. Note that radius 3 is large enough to identify/encode large fragments of the chemical structure, with more than 45,000 distinct substructures detected from all datasets. Such a topology-based approach analyzes the various substructures of a molecule within a certain number of chemical bonds (here, 3), and then hashes each substructure into a 2,048-bit vector, as shown in Figure 2. When the 45,000 distinct substructures are hashed into 2,048 buckets, collisions are inevitable. The 1/0 (on/off) bit of a bucket then does not indicate the occurrence of a specific substructure but represents the occurrence of any of several substructures. Besides, the number of occurrences of a substructure is not recorded in these buckets. To avoid the drawbacks of using buckets, we directly record each substructure and its number of occurrences. This dictionary of substructures is further used for the training of our ML models. We should emphasize that our fingerprinting method is different from previous studies using the ECFP and Morgan fingerprinting,41,76,77 as we need to consider the number of occurrences of certain substructures in the training of ML models, to be discussed in the following section.
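As a rough illustration of steps (1) to (4) above, the following Python sketch runs a simplified Morgan-style update on a hand-coded graph of the poly(prop-1-ene) repeating unit ∗C(C∗)C. It is not the RDKit implementation used in this work: the function names `stable_hash` and `toy_ecfp`, the CRC32 hash, and the use of atom symbols as initial identifiers are all assumptions made for illustration, and the duplicate-removal step is reduced to merging identical identifiers. The function returns both the folded bit vector and a dictionary of identifier counts, mirroring the contrast between hashed buckets and directly recorded substructure occurrences. By the pigeonhole principle, folding about 45,000 distinct substructures into 2,048 bits places at least 22 of them in some bucket (45,000/2,048 ≈ 21.97).

```python
import zlib
from collections import Counter


def stable_hash(obj):
    """Deterministic 32-bit hash (illustrative choice; not RDKit's hash)."""
    return zlib.crc32(repr(obj).encode("utf-8"))


def toy_ecfp(atoms, bonds, radius=3, n_bits=2048):
    """Simplified Morgan-style fingerprint of a small molecular graph.

    atoms: list of atom symbols; bonds: list of (i, j) index pairs.
    Returns (bits, counts): the folded bit vector and the number of
    occurrences of every identifier seen at radius 0..radius.
    """
    neighbors = {i: [] for i in range(len(atoms))}
    for a, b in bonds:
        neighbors[a].append(b)
        neighbors[b].append(a)

    # Step 1: assign each atom an initial identifier (here: its symbol).
    ids = [stable_hash(symbol) for symbol in atoms]
    counts = Counter(ids)

    # Step 2: update each identifier from the atom's own identifier and
    # the sorted identifiers of its neighbors, once per unit of radius.
    for _ in range(radius):
        ids = [
            stable_hash((ids[i], tuple(sorted(ids[j] for j in neighbors[i]))))
            for i in range(len(atoms))
        ]
        counts.update(ids)

    # Step 3: identical identifiers are merged by the Counter keys.
    # Step 4: fold each distinct identifier into one of n_bits buckets.
    bits = [0] * n_bits
    for identifier in counts:
        bits[identifier % n_bits] = 1
    return bits, counts


# Repeating unit *C(C*)C written as an explicit graph (ASCII "*").
atoms = ["*", "C", "C", "*", "C"]
bonds = [(0, 1), (1, 2), (2, 3), (1, 4)]
bits, counts = toy_ecfp(atoms, bonds)
print("bits set:", sum(bits), "distinct identifiers:", len(counts))
```

The bit vector only records whether a bucket is occupied, whereas `counts` keeps every identifier and how often it occurs, which is the kind of information the dictionary of substructures described above retains.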

Based on the SMILES notation of polymers, we further define an ordered list of SMILES characters as a dictionary [“c”, “n”, “o”, “C”, “N”, “F”, “=”, “O”, “(”, “)”, “∗”, “[”, “]”, “1”, “2”, “3”, “#”, “Cl”, “/”, “S”, “Br”]. This dictionary creates a binary column for each character, with which the one-hot encoding algorithm78 transforms each polymer's SMILES into a sparse matrix (a 2D binary image in Figure 2). The dimensions of all images are 21 (the number of characters in the dictionary) × 310 (the length of the longest SMILES code in the dataset). The key points of the one-hot encoding algorithm are: (1) defining a reasonable dictionary is the premise of a good model; (2) simple polymers (represented by a short SMILES code) return much sparser matrices than complex polymers (represented by a long SMILES code). Consequently, any change of dataset could lead to changes in the dictionary and the corresponding images, significantly influencing the performance of a CNN model.
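The one-hot encoding described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in this work: the names `VOCAB`, `tokenize`, and `smiles_to_image` are hypothetical, the greedy matching of the two-letter tokens “Cl” and “Br” is an assumption about how the SMILES string is split, the ASCII “*” stands in for the polymerization symbol, and characters outside the dictionary are simply left as empty columns.

```python
# Dictionary of 21 SMILES tokens, in the order listed in the text.
VOCAB = ["c", "n", "o", "C", "N", "F", "=", "O", "(", ")", "*",
         "[", "]", "1", "2", "3", "#", "Cl", "/", "S", "Br"]
MAX_LEN = 310  # length of the longest SMILES code in the dataset


def tokenize(smiles):
    """Split a SMILES string into tokens, keeping 'Cl' and 'Br' whole."""
    tokens = []
    i = 0
    while i < len(smiles):
        pair = smiles[i:i + 2]
        if pair in ("Cl", "Br"):
            tokens.append(pair)
            i += 2
        else:
            tokens.append(smiles[i])
            i += 1
    return tokens


def smiles_to_image(smiles, vocab=VOCAB, max_len=MAX_LEN):
    """Return a len(vocab) x max_len binary matrix as nested lists.

    Row r, column c is 1 when the c-th token of the SMILES string is
    vocab[r]; tokens beyond max_len are truncated (an assumption).
    """
    row_of = {token: row for row, token in enumerate(vocab)}
    image = [[0] * max_len for _ in vocab]
    for col, token in enumerate(tokenize(smiles)[:max_len]):
        row = row_of.get(token)
        if row is not None:
            image[row][col] = 1
    return image


image = smiles_to_image("*C(C*)C")  # poly(prop-1-ene) repeating unit
print(len(image), "x", len(image[0]), "with", sum(map(sum, image)), "ones")
```

A seven-token SMILES string fills only seven of the 6,510 cells, which shows why short repeating units yield much sparser images than long ones.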

Using the molecular descriptors and Morgan fingerprints, similarities between different datasets can be compared in terms of their chemical space. To better visualize this space, the high-dimensional chemical spaces are reduced to a low-dimensional representation. By t-distributed stochastic neighbor embedding,79 the chemical spaces can be shown in 2D plots, as in Figure 1A. The top row of Figure 1A is for dataset-1, whose Tg values are marked with a color bar. The middle and bottom rows are for dataset-2 and dataset-3, respectively. We can see that, in both descriptor and fingerprint space, dataset-1 and dataset-2 are distributed randomly over similar regions. This random distribution suggests that dataset-1 and dataset-2 span similar chemical spaces. Dataset-3 is also found to fill a similar chemical space but significantly populates regions where PoLyInfo data (dataset-1 plus dataset-2) are sparse. Although Figure 1A shows similarities between dataset-1, dataset-2, and dataset-3, disparities still exist. For example, using Morgan fingerprints, we show some substructures of the polymers in dataset-1, dataset-2, and dataset-3 (Figure 1B). Besides the shared substructures enclosed in the overlapping area of the circles, all three datasets have their own unique substructures. As the ML models are trained on dataset-1, it is difficult for them to make an accurate prediction when they encounter a polymer from another dataset with new substructures. Compared with the performance on dataset-1, whether the ML models can be transferred well to the new dataset-2 and dataset-3 is therefore the greater concern. ML models with good transferability and generalization ability are of significant importance for the discovery and design of high-temperature polymers.

ML models for the chemistry-Tg relation of polymers

Four ML models trained on dataset-1 (listed in Table 3) involve the Lasso model, the DNN model, and the CNN model. Lasso is a least-squares regression model with a shrinkage penalty, through which it performs variable selection by forcing the coefficients of trivial variables to become zero. Thus, the variables that are strongly associated with the output are identified in a variable selection process. A DNN consists of connected units called nodes or neurons. Each node receives signals and applies a processing function to output new signals. Nodes are grouped into layers, and the layers are stacked between the input and output layers to form a complicated network architecture. A DNN is capable of learning complex relationships between input and output. A CNN is distinguished from a DNN by its superior performance on image input. The convolutional layers with filters or kernels are the core building blocks of a CNN. The optimized weights and biases in the convolutional layers can identify the presence of various features in the input, giving strong performance in image processing in particular. Although these ML algorithms are applicable to various kinds of problems, such as video recognition, image analysis, or natural language processing, their suitability and reliability are highly domain dependent. For the task of estimating a polymer's Tg from structural features, ML models require a proper feature representation that depicts polymer physics and chemistry to the greatest extent.
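The variable selection performed by the shrinkage penalty can be illustrated with a small coordinate-descent solver. This is a sketch under stated assumptions rather than the training code of this work: it minimizes (1/2n)·‖y − Xβ‖² + λ·‖β‖₁ without an intercept, and the names `soft_threshold` and `lasso_cd`, the penalty value, and the toy data are hypothetical.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t; values within [-t, t] become exactly 0."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_cd(X, y, lam, n_iter=100):
    """Coordinate descent for (1/2n)*||y - X b||^2 + lam*||b||_1."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho = 0.0  # correlation of feature j with the partial residual
            zj = 0.0   # squared norm of feature j
            for i in range(n):
                others = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - others)
                zj += X[i][j] ** 2
            beta[j] = soft_threshold(rho / n, lam) / (zj / n) if zj > 0 else 0.0
    return beta


# Toy data: the target depends on the first feature only; the second
# feature is a small alternating signal unrelated to the target.
X = [[1.0, 0.1], [2.0, -0.1], [3.0, 0.1], [4.0, -0.1]]
y = [2.0, 4.0, 6.0, 8.0]
beta = lasso_cd(X, y, lam=0.1)
print(beta)
```

In this toy case the coefficient of the uninformative feature is driven to exactly zero while the informative one is only slightly shrunk, which is the behavior that makes Lasso useful for identifying the features most strongly associated with the output.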

Table 3.

Four ML models trained on dataset-1

| Name | ML model | Features | R² (train/test) |
|---|---|---|---|
| Lasso_Descriptor | Lasso regression model | 3,579 descriptors | 0.80/0.71 |
| Lasso_Fingerprint | Lasso regression model | 2,048 fingerprints | 0.74/0.73 |
| DNN_Fingerprint | deep neural network | 2,048 fingerprints | 0.85/0.83 |
| CNN_Image | convolutional neural network | 310 × 21 binary images | 0.87/0.80 |

Here, descriptors or fingerprints are used as the input features for the Lasso regression models or DNN models. They have clear chemical or physical meanings for an organic molecule, but their calculation is usually time-consuming for a very large database of polymers. When polymers are represented as 2D images, the input is much easier to calculate.49,50 Therefore, a CNN model using images is also investigated for comparison. Through these ML models, we aim to discover critical physical and chemical features affecting Tg, and to establish a reliable model for screening high-temperature polymers. Lasso regression is suitable for feature selection, while DNN and CNN models are more powerful in establishing a correlation between the chemical structure and the Tg of polymers.76

The performances of these four ML models are illustrated by parity plots in Figures 3A–3D (see the supplemental experimental procedures Figures S1–S3 for model training details). On dataset-1, they all show good performance. The best one is the CNN_Image model, which produces an R² of 0.87/0.80 for the training/test sets. This indicates that, although there is no explicit physical meaning in the image representation, the CNN model is still able to establish a correlation between the image input and the physical property of polymers. The DNN and Lasso models also lead to high R² values of 0.74–0.87. Their performances are satisfactory, considering the large chemical diversity of the 6,923 polymers in dataset-1.

Figure 3.

Performance of four ML models

(A) The Lasso regression model using descriptors as input features (Lasso_Descriptor model).

(B) The Lasso regression model using fingerprints as input features (Lasso_Fingerprint model).

(C) The DNN model using fingerprints as input features (DNN_Fingerprint model).

(D) The CNN model using images as input features (CNN_Image model).

(E) Comparison between the MD-simulated and the ML-predicted Tg for 20 polymers randomly selected from dataset-2. The three dashed lines are the unity line and lines marking a mean absolute error of 40°C. The chemical structure of each of these 20 polymers is followed by its MD-simulated Tg value.

To examine the transferability of the ML models to new polymers, these four models are applied to dataset-2 to predict Tg values. The prediction accuracy of the ML models is further validated with MD simulations (see Figure S4 and Table S2 for the MD simulation details and results). Twenty polymers are randomly selected from dataset-2. Their MD-simulated and ML-predicted Tg values are compared in Figure 3E. The four ML models show different prediction performances on these polymers of dataset-2 (see Table S3 in the supplemental experimental procedures). The performances of the CNN_Image and Lasso_Descriptor models degrade markedly, to R² of −0.52 and 0.39, respectively, indicating poor transferability from dataset-1 to dataset-2. These two models, although well trained on dataset-1, are no longer accurate when given a new and different dataset. Because of their poorer generalization capabilities, the CNN_Image and Lasso_Descriptor models are not considered for high-temperature polymer screening in the following sections.
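
A negative R² may look surprising, so a minimal sketch is given here. It assumes the conventional definition of the coefficient of determination, R² = 1 − SS_res/SS_tot, which the text does not spell out; the function name `r2_score` and all Tg values are hypothetical and chosen only for illustration.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all reference values are equal")
    return 1.0 - ss_res / ss_tot


# Hypothetical reference and predicted Tg values in deg C (illustrative only).
tg_ref = [100.0, 150.0, 200.0, 250.0]
good_pred = [110.0, 140.0, 210.0, 240.0]
poor_pred = [250.0, 100.0, 260.0, 120.0]

r2_good = r2_score(tg_ref, good_pred)  # close to 1: predictions track the reference
r2_poor = r2_score(tg_ref, poor_pred)  # below 0: worse than predicting the mean Tg
print(round(r2_good, 3), round(r2_poor, 3))
```

Under this definition, a value below zero means the residual error exceeds the variance of the reference data, i.e., the model does worse than a constant prediction at the mean Tg.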

In contrast, the Lasso_Fingerprint and DNN_Fingerprint models demonstrate good performance on these randomly selected polymers, with R² of 0.63 and 0.53, respectively. Their small changes in R² from dataset-1 to dataset-2 suggest good transferability. Despite a slight degradation, the prediction performances are still satisfactory considering that (1) dataset-2 is not exactly the same as dataset-1 in terms of substructures (cf. Figure 1), and (2) uncertainties may exist, as the reference Tg values obtained by MD simulations can be higher than the true values because of the high cooling rate.31,32,80,81 To avoid the uncertainties from MD simulations, validation against experimental results is preferred. Thus, a newly reported experimental dataset is further used to verify the transferability of these two ML models. The experimental dataset contains 32 semiflexible (mostly conjugated) polymers28 that are new to our ML models. These 32 polymers differ drastically in their aromatic backbone and alkyl side-chain chemistry (Table S4 in the supplemental experimental procedures), making them an ideal experimental dataset to test our ML models. The predictions of the Lasso_Fingerprint and DNN_Fingerprint models lead to R² values of 0.20 and 0.68, respectively (see Figure S5 in the supplemental experimental procedures for detailed results). Thus, the performance of the Lasso_Fingerprint model degrades on this new experimental dataset. According to these results, the DNN_Fingerprint model performs consistently on different datasets, with excellent transferability confirmed by validation against both MD simulations and experimental results. Also, Morgan fingerprints are identified as a more appropriate feature representation for the ML model of polymer Tg than molecular descriptors and images.

As mentioned above, both molecular descriptors and images are representations of all the possible building blocks of a polymer's repeating unit, which must be defined a priori and remain static. In contrast, Morgan fingerprints are an inherently more dynamic representation, as they can evolve to include new chemical substructures once encountered. Also, according to previous theoretical models of the Tg values of polymers,18 the number of occurrences of these substructures plays an important role. Therefore, our Morgan fingerprints explicitly consider more than 45,000 distinct substructures and their frequency of occurrence, which allows us to study the effects of various substructures and their linkages on polymer Tg values. Combined with the powerful and transferable DNN model,82 the DNN_Fingerprint model trained on dataset-1 demonstrates the best overall performance across dataset-2 and a new experimental dataset of 32 conjugated polymers. We should emphasize that, if we derive the Morgan fingerprints only by hashing all the substructures into 2,048 bits, without considering their number of occurrences, the trained DNN model cannot reasonably predict the Tg values of these 32 conjugated polymers (see Figure S6 in the supplemental experimental procedures for detailed results). Extensive studies using molecular descriptors, fingerprints, or images alone (Table 1) have produced well-trained ML models that are applicable to a certain category of polymers, but how well these models predict other polymers has received little attention. Here, we demonstrate an appropriate feature representation through large dataset training, MD simulations, and experimental dataset verification, particularly from the perspective of the model's transferability and generalization. The Morgan fingerprints with their number of occurrences are found to be most suitable for Tg prediction, owing to the encoded information on substructures and polymerization.
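
The difference between a presence/absence fingerprint and one that keeps the number of occurrences can be illustrated with a small sketch. This is not the authors' featurization pipeline: it skips the cheminformatics step entirely and assumes the substructure identifiers and their counts are already known. The function `fold_fingerprint`, the modulo folding, and the two example repeating units are hypothetical.

```python
def fold_fingerprint(substructure_counts, n_bits=2048, use_counts=True):
    """Fold {substructure_id: occurrences} into a fixed-length vector.

    use_counts=False gives a presence/absence bit vector;
    use_counts=True keeps how many times each substructure occurs.
    """
    vector = [0] * n_bits
    for identifier, occurrences in substructure_counts.items():
        index = identifier % n_bits  # simple folding; collisions are possible
        if use_counts:
            vector[index] += occurrences
        else:
            vector[index] = 1
    return vector


# Hypothetical substructure identifiers and counts (not taken from the paper).
# The two units differ only in how often substructure 303 occurs,
# e.g., a short versus a long alkyl side chain.
short_side_chain = {101: 2, 202: 1, 303: 1}
long_side_chain = {101: 2, 202: 1, 303: 6}

bits_short = fold_fingerprint(short_side_chain, use_counts=False)
bits_long = fold_fingerprint(long_side_chain, use_counts=False)
counts_short = fold_fingerprint(short_side_chain, use_counts=True)
counts_long = fold_fingerprint(long_side_chain, use_counts=True)

print(bits_short == bits_long)      # the bit vectors cannot tell the two units apart
print(counts_short == counts_long)  # the count vectors can
```

In this toy case the two repeating units are indistinguishable to a model that sees only bits, which is one plausible reading of why the occurrence counts matter for polymers that differ mainly in side-chain length.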

Machine learns physical rules for polymer Tg values

One of the challenges in using ML models for property predictions of organic molecules and polymers is correlating these predictions with meaningful physical quantities.16,83 This is the major driving force of current research activities in interpretable artificial intelligence and ML methods.84,85,86 Although our DNN_Fingerprint model demonstrates the best predictivity and transferability, it uses the fingerprint representation of polymers, which makes it difficult to pinpoint a specific set of physical quantities that are important in the prediction of a polymer's Tg. In contrast, the Lasso_Descriptor and Lasso_Fingerprint models do not perform as well as the DNN models, but they are still useful for establishing reasonable correlations between a polymer's chemical structure and Tg, with R² > 0.7 (cf. Figure 3). Furthermore, the Lasso method has an advantage in feature selection and extraction.76,87 By applying L1-norm regularization to the weights, the weights of unimportant features are shrunk to zero, and only important features are left. The feature importance is directly indicated by the obtained weight for each feature.87,88
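
How L1-norm regularization removes unimportant features can be seen in a minimal coordinate-descent sketch. It assumes the common Lasso objective (1/(2n))·||y − Xw||² + α·||w||₁ without an intercept; the solver, the value of `alpha`, and the toy data are illustrative assumptions and do not reproduce the training setup used in this work.

```python
def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold; values inside the band become 0."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def lasso_coordinate_descent(X, y, alpha, n_iter=100):
    """Minimise (1/(2n)) * ||y - Xw||^2 + alpha * ||w||_1 (no intercept)."""
    n_samples, n_features = len(X), len(X[0])
    weights = [0.0] * n_features
    for _ in range(n_iter):
        for j in range(n_features):
            rho = 0.0   # correlation of feature j with the partial residual
            norm = 0.0  # squared norm of feature j
            for i in range(n_samples):
                pred_without_j = sum(
                    X[i][k] * weights[k] for k in range(n_features) if k != j
                )
                rho += X[i][j] * (y[i] - pred_without_j)
                norm += X[i][j] ** 2
            if norm == 0.0:
                weights[j] = 0.0
                continue
            weights[j] = soft_threshold(rho / n_samples, alpha) / (norm / n_samples)
    return weights


# Toy data (hypothetical): three orthogonal features; the target is
# 3.0 * feature0 + 0.05 * feature1 + 0.0 * feature2.
X = [[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]
y = [3.05, 2.95, -2.95, -3.05]

weights = lasso_coordinate_descent(X, y, alpha=0.1)
print(weights)  # only the first weight stays non-zero
```

The weakly contributing second feature and the irrelevant third feature both end up with a weight of exactly zero, while the dominant feature keeps a slightly shrunken weight, which is the behavior used above to rank descriptors and substructures.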

Focusing on molecular descriptors, the Lasso_Descriptor model finds 444 descriptors with non-zero weights. More than 50% of the total absolute weight is contributed by 61 features. These features are considered important in determining Tg. The top 10 physical descriptors are listed in Table 4 (see the full list in Table S1 of the supplemental experimental procedures). Descriptors such as “frequency of C-N at topological distance 2,” “number of heavy atoms,” and “number of total quaternary C(sp3)” are revealed to be principal features associated with the Tg of polymers. These structural and chemical parameters are expected to be the essential constituents of polymers in terms of Tg.
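
The ranking by absolute weight ratio can be sketched as follows. The helper names `weight_ratios` and `features_covering_share` and the five example weights are hypothetical; the sketch only illustrates the bookkeeping, assuming the ratio of a feature is its absolute weight divided by the sum of all absolute weights.

```python
def weight_ratios(weights):
    """Map each feature to |weight| / sum of |weights|."""
    total = sum(abs(w) for w in weights.values())
    if total == 0:
        return {name: 0.0 for name in weights}
    return {name: abs(w) / total for name, w in weights.items()}


def features_covering_share(weights, share=0.5):
    """Smallest set of top-|weight| features whose ratios add up to `share`."""
    ratios = weight_ratios(weights)
    ranked = sorted(ratios.items(), key=lambda item: item[1], reverse=True)
    selected, running = [], 0.0
    for name, ratio in ranked:
        if ratio == 0.0:
            break  # remaining features were removed by the L1 penalty
        selected.append(name)
        running += ratio
        if running >= share:
            break
    return selected


# Hypothetical Lasso weights for five descriptors (illustrative only).
lasso_weights = {
    "desc_a": 4.0,
    "desc_b": -3.0,
    "desc_c": 2.0,
    "desc_d": 0.0,
    "desc_e": -1.0,
}

ratios = weight_ratios(lasso_weights)
top_half = features_covering_share(lasso_weights, share=0.5)
print(ratios["desc_a"], top_half)
```

Here two of the five hypothetical descriptors already account for more than half of the total absolute weight, in the same sense that 61 of the 444 non-zero descriptors do in the Lasso_Descriptor model.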

Table 4.

The top 10 physical descriptors and their absolute weight ratio from the Lasso model

| Name | Description | Block | Ratio |
|---|---|---|---|
| AVS_B(i) | average vertex sum from Burden matrix weighted by ionization potential | 2D matrix-based descriptors | 0.0684 |
| NssCH2 | number of atoms of type ssCH2 | atom-type E-state indices | 0.0272 |
| F02[C-N] | frequency of C–N at topological distance 2 | 2D atom pairs | 0.0181 |
| nHM | number of heavy atoms | constitutional indices | 0.0145 |
| BIC2 | bond information content index (neighborhood symmetry of 2-order) | information indices | 0.0138 |
| NsCH3 | number of atoms of type sCH3 | atom-type E-state indices | 0.0137 |
| B03[F-F] | presence/absence of F–F at topological distance 3 | 2D atom pairs | 0.0120 |
| nCq | number of total quaternary C(sp3) | functional group counts | 0.0113 |
| nCrs | number of ring secondary C(sp3) | functional group counts | 0.0098 |
| C-006 | CH2RX | atom-centered fragments | 0.0097 |

Several topological descriptors, such as F02[C-N] and B03[F-F], appear among the top features, as they encode the spatial relationship of the polymer backbone, such as the molecular size and free volume. Using topological descriptors alone was considered sufficient for a Tg prediction model when dealing with a very limited dataset of 251 polymers.44 However, our Lasso_Descriptor model, dealing with a larger dataset, assigns the same level of importance to other factors, such as the functional group counts. Eleven functional groups (see the full list in Table S1 of the supplemental experimental procedures), such as “number of ring secondary C(sp3),” “number of hydroxyl groups,” and “number of primary amines (aromatic),” are identified as key factors affecting the Tg of polymers. They are no less significant than topological descriptors, and some critical functional groups are found to be good indicators for identifying high- or low-Tg polymers, as shown later.

Focusing on Morgan fingerprints, the Lasso_Fingerprint model examines local substructures in a similar way. Among the 124 most common substructures found in dataset-1, 85 substructures have non-zero weights, and 18 substructures contribute more than 50% of the total absolute weight. These 18 substructures with the highest absolute weight are presented in Figure 4. These substructures also provide physical insights into the Tg of polymers, including the importance of aromatic compounds89 (substructures 16406, 24417, 17135, 17618, 11337, 11881, and 4916) and functional groups containing oxygen and nitrogen atoms (substructures 16406, 17748, 426, 24417, 770, 11337, 23586, 11881, 4916, 7305, and 24993), which indicates the positive influence of hydrogen bonds on Tg.90 Also, some of these substructures are closely related to the important physical descriptors shown in Table 4, providing cross-validation between these two ML models.

Figure 4.

Substructures with the highest absolute weight based on Morgan fingerprint and Lasso ML model

The central atom of each substructure is highlighted in blue. Aromatic atoms are highlighted in yellow. The connectivity of atoms is highlighted in light gray.

Besides the physical insights revealed by the Lasso regression models, critical functional groups can also be identified for their contributions to polymer Tg values through a posteriori analysis. Here, we examine the polymers with high/low Tg values and their common characteristics (functional groups), and thereby gain insights into which physical quantities are important for enhancing/reducing their Tg values. We process all the polymers in dataset-1 through the Checkmol60 package and identify the functional groups occurring only in high-Tg (>200°C) and low-Tg (<50°C) polymers. These functional groups are listed in Table 5, where each functional group's key atom is highlighted with a red circle. For high-Tg polymers, we find that functional groups such as oxohetarene, lactam, amine, and enamine play critical roles in their high-temperature property. In contrast, functional groups such as disulfide, phosphoric acid, and acetal appear only in the low-Tg polymers. These observations are consistent with the key substructures discovered from the fingerprints (Figure 4). For example, the substructures with an oxygen (O) atom are revealed to be highly correlated with a polymer's Tg, and most of the exclusive functional groups also involve the O atom in either high- or low-Tg polymers, highlighting its important contribution to Tg. Therefore, it is evident that the ML models indeed capture the critical features affecting a polymer's Tg.
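
The a posteriori analysis amounts to simple set operations once each polymer has been annotated with its functional groups. The sketch below assumes that annotation is already available (e.g., exported from a functional-group tool); the function `exclusive_groups`, the record layout, and the five example polymers are hypothetical, and "exclusive" is interpreted here as not occurring in any polymer outside the given Tg class.

```python
def exclusive_groups(polymers, high_cut=200.0, low_cut=50.0):
    """Find functional groups seen only in high-Tg or only in low-Tg polymers.

    polymers: iterable of (tg_celsius, set_of_group_names).
    """
    high, low, other = set(), set(), set()
    for tg, groups in polymers:
        if tg > high_cut:
            high |= groups
        elif tg < low_cut:
            low |= groups
        else:
            other |= groups
    only_high = high - low - other
    only_low = low - high - other
    return only_high, only_low


# Hypothetical annotated polymers: (Tg in deg C, functional groups present).
polymers = [
    (310.0, {"lactam", "ether"}),
    (240.0, {"enamine", "ether"}),
    (120.0, {"ether", "ester"}),
    (20.0, {"disulfide", "ester"}),
    (-40.0, {"acetal", "ether"}),
]

only_high, only_low = exclusive_groups(polymers)
print(sorted(only_high), sorted(only_low))
```

Groups such as the ether in this toy example, which appear in every Tg class, are filtered out, so only groups confined to one end of the Tg range remain.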

Table 5.

Important functional groups recognized using the Checkmol package

| Within low-Tg polymers (<50°C) | Within high-Tg polymers (>200°C) |
|---|---|
| Orthocarboxylic acid derivative | Oxohetarene |
| Disulfide | Lactam |
| Phosphoric acid derivative | Tertiary aromatic amine |
| Phosphoric acid ester | Secondary aromatic amine |
| Phosphoric acid amide | Secondary mixed amine (aryl alkyl) |
| Acetal | Enamine |

These key features not only provide physical insights into how molecular structures influence a polymer's Tg, but also serve as design motifs that are important in the inverse molecular design of high-temperature polymers. For instance, generative ML models, such as variational autoencoders (VAE)91,92 and generative adversarial networks (GAN),93,94 when integrated with reinforcement learning (RL),95,96 can take into account the importance of these physical and chemical features. Such a strategy of combining a predictive ML model with a generative ML model has been used in the inverse molecular design of small drug-like molecules and organic molecules.97,98 Successful examples include the chemical VAE,99 ReLeaSE (reinforcement learning for structural evolution),100 and ORGANIC (objective-reinforced generative adversarial network for inverse-design chemistry).101 The generative ML model serves as an agent that generates molecules, while the predictive model acts as the external world that monitors the generation actions taken by the agent. According to the feedback, either a reward or a penalty is assigned. Through training, the agent, i.e., the generative model, learns to make good sequences of decisions in molecular generation toward a maximum reward. Therefore, our predictive ML model has the potential to be integrated into an inverse molecular design framework for high-temperature polymers or polymers with tailored Tg values.

High-throughput screening of high-temperature polymers

Since the DNN_Fingerprint model demonstrates the best transferability from dataset-1 to dataset-2 and to a new experimental dataset (32 conjugated polymers), we adopt this ML model for high-throughput screening to identify promising candidates for high-temperature polymers. Dataset-1, with 6,923 real polymers, has nearly 2,000 polymers with Tg larger than 200°C, as shown in Figure 5. These polymers have great potential for use in harsh, high-temperature environments, but more candidates are still desired, as many of these 2,000 polymers might not be easily synthesized and processed.1 Dataset-2 and dataset-3, with 5,690 real polymers and one million hypothetical polymers, respectively, form a promising candidate pool for the screening of high-Tg polymers. Here, we aim to identify the polymers with Tg values larger than 200°C, because the Tg of the high-temperature polymer PEEK is about 143°C.102 Almost all predicted Tg values for dataset-2 and dataset-3 remain in the same range as dataset-1 (−118°C to 495°C), as shown in Figure 5. Excitingly, the population of promising candidates is significantly increased. For example, dataset-1 has about 2,000 known polymers with Tg ≥ 200°C. Through our DNN_Fingerprint model, we find an additional 1,000 and 65,000 new candidates with Tg ≥ 200°C in dataset-2 and dataset-3, respectively. Thus, through this high-throughput screening, we find 30 times more promising candidates for high-temperature polymers than the 2,000 known high-temperature polymers in dataset-1. If we consider a harsher environment that requires Tg ≥ 300°C (comparable with the melting temperature of lead, 328°C), dataset-1, dataset-2, and dataset-3 have 309, 249, and 3,567 polymers, respectively, that can potentially satisfy this requirement.1 Again, our high-throughput screening method identifies 11 times more promising candidates from dataset-2 and dataset-3 compared with dataset-1.
The ML high-throughput screening for high-temperature polymers overcomes the challenges faced by theoretical analysis or MD simulations. Theoretical equations derived from small groups of polymers have difficulty handling polymers of different categories, and are therefore not applicable to all data points of the vast chemical space. MD simulations, although capable of computing Tg values of various kinds of polymers, are restricted by their computational cost given the vast number of candidates to be screened. In contrast, our high-throughput screening method processes the one million hypothetical polymers efficiently, with proven reliability for Tg estimation.
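
Once Tg predictions are available, the screening step itself reduces to thresholding and ranking, as in the following sketch. The function `screen_candidates`, the polymer identifiers, and the predicted values are hypothetical stand-ins; in practice the dictionary would hold the DNN_Fingerprint predictions for dataset-2 or dataset-3.

```python
def screen_candidates(predicted_tg, threshold=200.0):
    """Return (polymer_id, Tg) pairs with predicted Tg >= threshold, highest first."""
    hits = [(pid, tg) for pid, tg in predicted_tg.items() if tg >= threshold]
    return sorted(hits, key=lambda item: item[1], reverse=True)


# Hypothetical model outputs in deg C (illustrative only).
predicted_tg = {
    "hypo-0001": 152.0,
    "hypo-0002": 231.5,
    "hypo-0003": 412.0,
    "hypo-0004": 198.9,
    "hypo-0005": 305.0,
}

above_200 = screen_candidates(predicted_tg, threshold=200.0)
above_300 = screen_candidates(predicted_tg, threshold=300.0)
print(len(above_200), len(above_300))
```

Because each prediction is a single forward pass of the trained model followed by a comparison, the cost per candidate is negligible next to an MD simulation, which is what makes screening on the order of one million structures practical.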

Figure 5.

High-throughput screening of high-Tg polymers with the DNN_Fingerprint model

The Tg distributions of dataset-1, dataset-2, and dataset-3 are plotted in green, yellow, and red, respectively. The polymer samples on the right are followed by their predicted and true Tg values. For the sample in dataset-1 (green box), the true Tg is the collected experimental value. For the samples in dataset-2 (yellow box) and dataset-3 (red box), the true Tg is the MD-simulated value. More than 1,000 real polymers and 65,000 hypothetical polymers were discovered with Tg > 200°C.

We then focus our attention on the top four high-temperature polymers, with ML-predicted Tg > 400°C. These four polymers are unknown and hypothetical, although they share similar chemical structures with other known high-temperature polymers, e.g., aromatic rings, sulfone groups, oxygen linkages, and amine groups. Each of these groups is highlighted in our analysis of the ML models as being related to the high-temperature properties of polymers (Figure 4; Table 5). Without any assumptions or premises being built into the ML model, the structures of the screened top four high-temperature polymers follow the general rules controlling the Tg of polymers. The backbone structure with rigid benzene rings contributes to the stiffness of the chain, which is known to play a major role in determining the Tg of a polymer.50,103,104 Also, there are no long alkyl chains, which lead to a lower glass transition temperature.105 Although similar sulfur-containing polyimides, such as poly[(2,8-dimethyl-5,5-dioxodibenzothiophene-3,7-diamine)-alt-(biphenyl-3,3′:4,4′-tetracarboxylic dianhydride)] (polymer ID: P130369 in PoLyInfo), have been tested with Tg values as high as 490°C,106 the Tg values of these hypothetical polymers have not yet been reported. We take advantage of MD simulations to build all-atom molecular models for these hypothetical polymers and predict their Tg values (more details are given in the supplemental experimental procedures). As shown in Figure 5, our physics-based MD simulations confirm that these hypothetical polymers indeed have ultra-high Tg values. Furthermore, we find that the MD-predicted and ML-predicted Tg values are in relatively good agreement with each other (within the error of the prediction), indicating that the ML model could be used as a predictive tool for screening previously unexplored chemical spaces for high-temperature polymers.

The key substructures (Figure 4) and functional groups (Table 5) related to the high-Tg polymers are revealed based on dataset-1. Their important roles are further confirmed on the identified high-Tg polymers with ML-predicted Tg > 200°C from dataset-2 and dataset-3. The key substructures of high-Tg polymers in dataset-1 (2,268 polymers), dataset-2 (1,155 polymers), and dataset-3 (65,283 polymers) are compared in Figure 6A (more details are given in Table S5 of the supplemental experimental procedures). For example, the substructure "16406" (a center carbon connected to aromatic compounds and oxygen) is found in 15.04%, 16.54%, and 27.55% of high-Tg polymers in dataset-1, dataset-2, and dataset-3, respectively. This indicates that the contributions of this substructure to the high-Tg polymers are similar across these different datasets. As mentioned above, one of the most important contributions comes from substructure "23586", a single oxygen side chain, which is present in 53.40%, 53.16%, and 76.05% of high-Tg polymers in dataset-1, dataset-2, and dataset-3, respectively. Overall, the contributions of most of these 18 key substructures are quite similar across the different datasets. Their comparable influences also explain the good transferability of the ML model based on the Morgan fingerprints. The frequency of occurrence is also an important aspect because it reflects the probability of a substructure emerging during the inverse molecular design of high-Tg polymers. In terms of the functional groups, the six key functional groups exclusive to high-Tg polymers are compared in Figure 6B in a similar manner (also see Table S6 for detailed results). Interestingly, the six recognized functional groups are special ones found in only a few high-Tg polymers. For instance, the secondary aromatic amine functional group is identified in about 0.13% of the high-Tg polymers in dataset-1, while 3.32% of the high-Tg polymers in dataset-3 are found to have this functional group.
Although training dataset-1 contains a nearly negligible 0.13% of this functional group, its importance is successfully captured by the ML model using Morgan fingerprints and then demonstrated in dataset-3. In addition, we generally observe that polymers containing amine groups, oxygen along the backbone, and/or nitrogen rings demonstrate high-temperature properties.1 In short, our ML models for the chemistry-Tg relation of polymers seem to pinpoint meaningful physical-chemistry insights that can be used to enhance high-temperature performance and may be further utilized in the inverse molecular design of high-Tg polymers that have not been experimentally studied.

Figure 6.

Comparison of key substructures and functional groups in high-Tg (>200°C) polymers

(A) Comparison of the 18 substructures recognized in Figure 4.

(B) Comparison of the six high-Tg-related functional groups recognized in Table 5.

Concluding remarks

Quantitatively predicting a polymer's Tg from its chemical structure is a significant challenge in materials science and engineering, chemistry, and polymer science. Here, we use an ML-based approach to correlate a polymer's chemical structure with its Tg, taking advantage of a large and diverse dataset collected from PoLyInfo. The transferability and generalization ability of the ML models are a particular focus and are demonstrated by using a large dataset of different categories of polymers. We consider three different feature representations of a polymer's repeating unit, namely molecular descriptors, Morgan fingerprints, and images, and three different ML models, namely Lasso, DNN, and CNN. All of these ML models demonstrate comparable performances in training and testing on the experimentally available dataset-1. However, the DNN_Fingerprint model exhibits the best transferability to dataset-2, whose substructures are distinct from those of dataset-1. We attribute this excellent transferability to the dynamic representation of Morgan fingerprints, as they can evolve to include new substructures once encountered. Furthermore, our Morgan fingerprints take into account the chemical connectivity between neighboring repeating units and the frequency of occurrence of different substructures, which play important roles in determining a polymer's Tg. Although Morgan fingerprints ignore all high-order polymer descriptors, e.g., stereoregularity, polarity, and chain length, the DNN_Fingerprint model gives satisfactory predictions of the Tg values of unknown polymers from dataset-2 and dataset-3. As we have discussed, choosing the appropriate feature representation for polymeric materials remains an open question in the ML field, and the choice is also highly dependent on the specific application.16,17,48,83

Our ML approaches are designed with the specific goal of quickly predicting a polymer's Tg for an extremely large set of known (dataset-2) and hypothetical (dataset-3) polymers. Such high-throughput screening allows us to perform posterior correlations between high-Tg polymers and their common functional groups and chemical substructures. These observations allow us to identify physical quantities that are important in determining a polymer's Tg. For instance, our Lasso regression models reveal principal Tg-related features, including 61 molecular descriptors and 18 chemical substructures. Also, the functional groups exclusive to high-Tg (>200°C) or low-Tg (<50°C) polymers are further identified, which cross-validates our Lasso regression models. This allows us to determine which chemical elements and molecular structures are worth experimental study in the molecular engineering and design of high-temperature polymers, leading to a molecular understanding of a polymer's Tg. With the DNN_Fingerprint model for high-throughput screening of nearly one million hypothetical polymers, we find more than 65,000 promising candidates with Tg > 200°C, which is 30 times more than existing known high-temperature polymers (∼2,000 from dataset-1). The discovery of this large number of promising candidates will be of significant interest in the development and design of high-temperature polymers. The same task is very difficult to accomplish by screening with either theoretical equations or MD simulations because of their limitations in dealing with such large and diverse datasets. In summary, our study demonstrates that ML is a powerful method for the prediction and rapid screening of high-temperature polymers, particularly with growing large sets of experimental and computational data for polymeric materials.
The key molecular descriptors and chemical substructures identified by the ML models, combined with the identified chemical functional groups, are important design motifs for the molecular engineering of high-temperature or high-performance polymers in an inverse materials design task.

Experimental procedures

Resource availability

Lead contact

Ying Li is the lead contact of this study and can be reached by e-mail: yingli@engr.uconn.edu.

Materials availability

This study did not generate new unique reagents.

Data and code availability

Data and code are available at https://github.com/figotj/Polymer_Tg_.

Acknowledgments

We gratefully acknowledge financial support from the Air Force Office of Scientific Research through the Air Force's Young Investigator Research Program (FA9550-20-1-0183; program manager: Dr. Ming-Jen Pan) and the National Science Foundation (CMMI-1934829). Y.L. would like to give thanks for the support from 3M's Non-Tenured Faculty Award. This research also benefited in part from the computational resources and staff contributions provided by the Booth Engineering Center for Advanced Technology (BECAT) at UConn. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the US Department of Defense. The authors also acknowledge the Texas Advanced Computing Center (TACC) at The University of Texas at Austin (Frontera project and the National Science Foundation award 1818253) for providing HPC resources that have contributed to the research results reported within this paper.

Author contributions

Conceptualization, Y.L.; methodology, L.T., G.C., and Y.L.; software, L.T. and G.C.; validation, L.T.; formal analysis, L.T., G.C., and Y.L.; investigation, L.T.; resources, Y.L.; data curation, L.T.; writing—original draft, L.T.; writing—review & editing, L.T., G.C., and Y.L.; visualization, L.T.; supervision, Y.L.; funding acquisition, Y.L.

Declaration of interests

The authors declare no competing interests.

Published: April 9, 2021

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.patter.2021.100225.

References

- 1. Hergenrother P.M. The use, design, synthesis, and properties of high performance/high temperature polymers: an overview. High Perform. Polym. 2003;15:3–45.

- 2. Meador M.A. Recent advances in the development of processable high-temperature polymers. Annu. Rev. Mater. Sci. 1998;28:599–630.

- 3. Mittal K.L. Polyimides and Other High Temperature Polymers: Synthesis, Characterization and Applications. Vol. 3. CRC Press; 2005.

- 4. Sperati C.A., Starkweather H.W. Fluorine-containing polymers. II. Polytetrafluoroethylene. In: Fortschritte der Hochpolymeren-Forschung. Springer; 1961. pp. 465–495.

- 5. Petrie E. Extreme high temperature thermoplastics: gateway to the future or the same old trail. Pop. Plast. Packag. 2012;57:30–43.

- 6. Imai Y. Synthesis of novel organic-soluble high-temperature aromatic polymers. High Perform. Polym. 1995;7:337–345.

- 7. Li Q., Chen L., Gadinski M.R., Zhang S., Zhang G., Li H.U., Iagodkine E., Haque A., Chen L.-Q., Jackson T.N. Flexible high-temperature dielectric materials from polymer nanocomposites. Nature. 2015;523:576–579. doi: 10.1038/nature14647.

- 8. Kaminsky W., Rabe O., Schauwienold A.-M., Schupfner G., Hanss J., Kopf J. Crystal structure and propene polymerization characteristics of bridged zirconocene catalysts. J. Organomet. Chem. 1995;497:181–193.

- 9. McLain S.J., Feldman J., McCord E.F., Gardner K.H., Teasley M.F., Coughlin E.B., Sweetman K.J., Johnson L.K., Brookhart M. Addition polymerization of cyclopentene with nickel and palladium catalysts. Macromolecules. 1998;31:6705–6707.

- 10. Kobayashi S., Matsuzawa T., Matsuoka S.-i., Tajima H., Ishizone T. Living anionic polymerizations of 4-(1-adamantyl) styrene and 3-(4-vinylphenyl)-1,1ʹ-biadamantane. Macromolecules. 2006;39:5979–5986.

- 11. Fetters L.J., Morton M. Synthesis and properties of block polymers. I. Poly-α-methylstyrene-polyisoprene-poly-α-methylstyrene. Macromolecules. 1969;2:453–458.

- 12. Kobayashi S., Kataoka H., Goseki R., Ishizone T. Living anionic polymerization of 4-(1-adamantyl)-α-methylstyrene. Macromol. Chem. Phys. 2018;219:1700450.

- 13. Wang W., Schlegel R., White B.T., Williams K., Voyloy D., Steren C.A., Goodwin A., Coughlin E.B., Gido S., Beiner M. High temperature thermoplastic elastomers synthesized by living anionic polymerization in hydrocarbon solvent at room temperature. Macromolecules. 2016;49:2646–2655.

- 14. Nakahara A., Satoh K., Kamigaito M. Random copolymer of styrene and diene derivatives via anionic living polymerization followed by intramolecular Friedel–Crafts cyclization for high-performance thermoplastics. Polym. Chem. 2012;3:190–197.

- 15. Cai Y., Lu J., Zuo D., Li S., Cui D., Han B., Yang W. Extremely high glass transition temperature hydrocarbon polymers prepared through cationic cyclization of highly 3,4-regulated poly(phenyl-1,3-butadiene). Macromol. Rapid Commun. 2018;39:1800298. doi: 10.1002/marc.201800298.

- 16. Chen G., Shen Z., Iyer A., Ghumman U.F., Tang S., Bi J., Chen W., Li Y. Machine-learning-assisted de novo design of organic molecules and polymers: opportunities and challenges. Polymers. 2020;12:163. doi: 10.3390/polym12010163.

- 17. Batra R., Song L., Ramprasad R. Emerging materials intelligence ecosystems propelled by machine learning. Nat. Rev. Mater. 2020:1–24.

- 18. Van Krevelen D.W., Te Nijenhuis K. Properties of Polymers: Their Correlation with Chemical Structure; Their Numerical Estimation and Prediction from Additive Group Contributions. Elsevier; 2009.

- 19. Dalnoki-Veress K., Forrest J., Murray C., Gigault C., Dutcher J. Molecular weight dependence of reductions in the glass transition temperature of thin, freely standing polymer films. Phys. Rev. E. 2001;63:031801. doi: 10.1103/PhysRevE.63.031801.

- 20. Privalko V., Lipatov Y.S. Glass transition and chain flexibility of linear polymers. J. Macromol. Sci. Phys. 1974;9:551–564.

- 21. Yi L., Li C., Huang W., Yan D. Soluble aromatic polyimides with high glass transition temperature from benzidine containing tert-butyl groups. J. Polym. Res. 2014;21:572.

- 22. Huang Y.-J., Horng J.C. Effects of thermoplastic additives on mechanical properties and glass transition temperatures for styrene-crosslinked low-shrink polyester matrices. Polymer. 1998;39:3683–3695.

- 23. Hiemenz P.C., Lodge T.P. Polymer Chemistry. CRC Press; 2007.

- 24. Wiff D., Altieri M., Goldfarb I. Predicting glass transition temperatures of linear polymers, random copolymers, and cured reactive oligomers from chemical structure. J. Polym. Sci. Polym. Phys. Ed. 1985;23:1165–1176.

- 25. Barton J.M. Relation of glass transition temperature to molecular structure of addition copolymers. In: Journal of Polymer Science Part C: Polymer Symposia. Wiley Online Library; 1970. pp. 573–597.

- 26. Weyland H., Hoftyzer P., Van Krevelen D. Prediction of the glass transition temperature of polymers. Polymer. 1970;11:79–87.

- 27. Dudowicz J., Freed K.F., Douglas J.F. The glass transition temperature of polymer melts. J. Phys. Chem. B. 2005;109:21285–21292. doi: 10.1021/jp0523266.

- 28. Xie R., Weisen A.R., Lee Y., Aplan M.A., Fenton A.M., Masucci A.E., Kempe F., Sommer M., Pester C.W., Colby R.H. Glass transition temperature from the chemical structure of conjugated polymers. Nat. Commun. 2020;11:1–8. doi: 10.1038/s41467-020-14656-8.

- 29. Han J., Gee R.H., Boyd R.H. Glass transition temperatures of polymers from molecular dynamics simulations. Macromolecules. 1994;27:7781–7784.

- 30. Choi J., Yu S., Yang S., Cho M. The glass transition and thermoelastic behavior of epoxy-based nanocomposites: a molecular dynamics study. Polymer. 2011;52:5197–5203.

- 31. Patrone P.N., Dienstfrey A., Browning A.R., Tucker S., Christensen S. Uncertainty quantification in molecular dynamics studies of the glass transition temperature. Polymer. 2016;87:246–259.

- 32. Buchholz J., Paul W., Varnik F., Binder K. Cooling rate dependence of the glass transition temperature of polymer melts: molecular dynamics study. J. Chem. Phys. 2002;117:7364–7372.

- 33.Sharma P., Roy S., Karimi-Varzaneh H.A. Validation of force fields of rubber through glass-transition temperature calculation by microsecond atomic-scale molecular dynamics simulation. J. Phys. Chem. B. 2016;120:1367–1379. doi: 10.1021/acs.jpcb.5b10789. [DOI] [PubMed] [Google Scholar]

- 34.Katritzky A.R., Sild S., Lobanov V., Karelson M. Quantitative structure−property relationship (QSPR) correlation of glass transition temperatures of high molecular weight polymers. J. Chem. Inf. Comput. Sci. 1998;38:300–304. [Google Scholar]

- 35.Schut J., Bolikal D., Khan I., Pesnell A., Rege A., Rojas R., Sheihet L., Murthy N., Kohn J. Glass transition temperature prediction of polymers through the mass-per-flexible-bond principle. Polymer. 2007;48:6115–6124. doi: 10.1016/j.polymer.2007.07.048. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Camelio P., Cypcar C.C., Lazzeri V., Waegell B. A novel approach toward the prediction of the glass transition temperature: application of the EVM model, a designer QSPR equation for the prediction of acrylate and methacrylate polymers. J. Polym. Sci. A Polym. Chem. 1997;35:2579–2590. [Google Scholar]

- 37.Jha A., Chandrasekaran A., Kim C., Ramprasad R. Impact of dataset uncertainties on machine learning model predictions: the example of polymer glass transition temperatures. Model. Simul. Mater. Sci. Eng. 2019;27:024002. [Google Scholar]

- 38.Kim C., Chandrasekaran A., Huan T.D., Das D., Ramprasad R. Polymer genome: a data-powered polymer informatics platform for property predictions. J. Phys. Chem. C. 2018;122:17575–17585. [Google Scholar]

- 39.Ramprasad M., Kim C. Assessing and improving machine learning model predictions of polymer glass transition temperatures. arXiv. 2019 preprint arXiv:1908.02398. [Google Scholar]

- 40.Katritzky A.R., Kuanar M., Slavov S., Hall C.D., Karelson M., Kahn I., Dobchev D.A. Quantitative correlation of physical and chemical properties with chemical structure: utility for prediction. Chem. Rev. 2010;110:5714–5789. doi: 10.1021/cr900238d. [DOI] [PubMed] [Google Scholar]

- 41.Wu S., Kondo Y., Kakimoto M.-a., Yang B., Yamada H., Kuwajima I., Lambard G., Hongo K., Xu Y., Shiomi J. Machine-learning-assisted discovery of polymers with high thermal conductivity using a molecular design algorithm. Npj Comput. Mater. 2019;5:1–11. [Google Scholar]

- 42.Liu W., Cao C. Artificial neural network prediction of glass transition temperature of polymers. Colloid. Polym. Sci. 2009;287:811–818. [Google Scholar]

- 43.Pei J.F., Cai C.Z., Zhu Y.M., Yan B. Modeling and predicting the glass transition temperature of polymethacrylates based on quantum chemical descriptors by using hybrid PSO-SVR. Macromol. Theory Simul. 2013;22:52–60. [Google Scholar]

- 44.Kim C., Chandrasekaran A., Jha A., Ramprasad R. Active-learning and materials design: the example of high glass transition temperature polymers. MRS Commun. 2019;9:860–866. [Google Scholar]

- 45.Mannodi-Kanakkithodi A., Chandrasekaran A., Kim C., Huan T.D., Pilania G., Botu V., Ramprasad R. Scoping the polymer genome: a roadmap for rational polymer dielectrics design and beyond. Mater. Today. 2018;21:785–796. [Google Scholar]

- 46.Chandrasekaran A., Kim C., Ramprasad R. Machine Learning Meets Quantum Physics. Springer; 2020. Polymer genome: a polymer informatics platform to accelerate polymer discovery; pp. 397–412. [Google Scholar]

- 47.Doan Tran H., Kim C., Chen L., Chandrasekaran A., Batra R., Venkatram S., Kamal D., Lightstone J.P., Gurnani R., Shetty P. Machine-learning predictions of polymer properties with Polymer Genome. J. Appl. Phys. 2020;128:171104. [Google Scholar]

- 48.Chen L., Pilania G., Batra R., Huan T.D., Kim C., Kuenneth C., Ramprasad R. Polymer informatics: current status and critical next steps. arXiv. 2020 preprint arXiv:2011.00508. [Google Scholar]

- 49.Miccio L.A., Schwartz G.A. From chemical structure to quantitative polymer properties prediction through convolutional neural networks. Polymer. 2020:122341. [Google Scholar]

- 50.Miccio L.A., Schwartz G.A. Localizing and quantifying the intra-monomer contributions to the glass transition temperature using artificial neural networks. Polymer. 2020;203:122786. [Google Scholar]

- 51.Mattioni B.E., Jurs P.C. Prediction of glass transition temperatures from monomer and repeat unit structure using computational neural networks. J. Chem. Inf. Comput. Sci. 2002;42:232–240. doi: 10.1021/ci010062o. [DOI] [PubMed] [Google Scholar]

- 52.Higuchi C., Horvath D., Marcou G., Yoshizawa K., Varnek A. Prediction of the glass-transition temperatures of linear homo/heteropolymers and cross-linked epoxy resins. ACS Appl. Polym. Mater. 2019;1:1430–1442. [Google Scholar]

- 53.Pilania G., Iverson C.N., Lookman T., Marrone B.L. Machine-learning-based predictive modeling of glass transition temperatures: a case of polyhydroxyalkanoate homopolymers and copolymers. J. Chem. Inf. Model. 2019;59:5013–5025. doi: 10.1021/acs.jcim.9b00807. [DOI] [PubMed] [Google Scholar]

- 54.Palomba D., Vazquez G.E., Díaz M.F. Novel descriptors from main and side chains of high-molecular-weight polymers applied to prediction of glass transition temperatures. J. Mol. Graph. Model. 2012;38:137–147. doi: 10.1016/j.jmgm.2012.04.006. [DOI] [PubMed] [Google Scholar]

- 55.Yu X. Support vector machine-based QSPR for the prediction of glass transition temperatures of polymers. Fibers Polym. 2010;11:757–766. [Google Scholar]

- 56.Liu W. Prediction of glass transition temperatures of aromatic heterocyclic polyimides using an ANN model. Polym. Eng. Sci. 2010;50:1547–1557. [Google Scholar]

- 57.Ning L. Artificial neural network prediction of glass transition temperature of fluorine-containing polybenzoxazoles. J. Mater. Sci. 2009;44:3156–3164. [Google Scholar]

- 58.Otsuka S., Kuwajima I., Hosoya J., Xu Y., Yamazaki M. International Conference on Emerging Intelligent Data and Web Technologies, IEEE; 2011. In PoLyInfo: Polymer database for polymeric materials design; pp. 22–29. [Google Scholar]

- 59.Lee J.-C., Litt M.H. Glass transition temperature-composition relationship of oxyethylene copolymers with chloromethyl/(ethylthio) methyl, chloromethyl/(ethylsulfinyl) methyl, or chloromethyl/(ethylsulfonyl) methyl side groups. Polym. J. 2000;32:228–233. [Google Scholar]

- 60.Fox T.G. Influence of diluent and of copolymer composition on the glass temperature of a polymer system. Bull. Am. Phys. Soc. 1956;1:123. [Google Scholar]

- 61.Hadipeykani M., Aghadavoudi F., Toghraie D. A molecular dynamics simulation of the glass transition temperature and volumetric thermal expansion coefficient of thermoset polymer based epoxy nanocomposite reinforced by CNT: a statistical study. Phys. Stat. Mech. Appl. 2020;546:123995. [Google Scholar]

- 62.Hadipeykani M., Aghadavoudi F., Toghraie D. Thermomechanical properties of the polymeric nanocomposite predicted by molecular dynamics. ADMT J. 2019;12:25–32. [Google Scholar]

- 63.Ma R., Luo T. PI1M: a benchmark database for polymer informatics. J. Chem. Inf. Model. 2020;60:4684–4690. doi: 10.1021/acs.jcim.0c00726. [DOI] [PubMed] [Google Scholar]

- 64.Rogers D., Hahn M. Extended-connectivity fingerprints. J. Chem. Inf. Model. 2010;50:742–754. doi: 10.1021/ci100050t. [DOI] [PubMed] [Google Scholar]

- 65.Haider N. Functionality pattern matching as an efficient complementary structure/reaction search tool: an open-source approach. Molecules. 2010;15:5079–5092. doi: 10.3390/molecules15085079. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Baur E., Ruhrberg K., Woishnis W. William Andrew; 2016. Chemical Resistance of Commodity Thermoplastics. [Google Scholar]

- 67.Simatos D., Blond G., Roudaut G., Champion D., Perez J., Faivre A. Influence of heating and cooling rates on the glass transition temperature and the fragility parameter of sorbitol and fructose as measured by DSC. J. Therm. Anal. Calorim. 1996;47:1419–1436. [Google Scholar]

- 68.McKenna G.B. Looking at the glass transition: challenges of extreme time scales and other interesting problems. Rubber Chem. Technol. 2020;93:79–120. [Google Scholar]

- 69.Biron M. Thermosets and Composites. 2004. Detailed accounts of thermoset resins for moulding and composite matrices; pp. 183–327. [Google Scholar]

- 70.Rudin A., Choi P. Academic Press; 2012. The Elements of Polymer Science and Engineering. [Google Scholar]

- 71.Weininger D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 1988;28:31–36. [Google Scholar]

- 72.Ma R., Liu Z., Zhang Q., Liu Z., Luo T. Evaluating polymer representations via quantifying structure–property relationships. J. Chem. Inf. Model. 2019;59:3110–3119. doi: 10.1021/acs.jcim.9b00358. [DOI] [PubMed] [Google Scholar]

- 73.Mauri A. Ecotoxicological QSARs. Springer; 2020. alvaDesc: a tool to calculate and analyze molecular descriptors and fingerprints; pp. 801–820. [Google Scholar]

- 74.alvaDesc molecular descriptors. https://www.alvascience.com/alvadesc-descriptors/

- 75.Landrum G. Academic Press; 2013. RDKit: A Software Suite for Cheminformatics, Computational Chemistry, and Predictive Modeling. [Google Scholar]

- 76.Chen G., Shen Z., Li Y. A machine-learning-assisted study of the permeability of small drug-like molecules across lipid membranes. Phys. Chem. Chem. Phys. 2020;22:19687–19696. doi: 10.1039/d0cp03243c. [DOI] [PubMed] [Google Scholar]

- 77.Barnett J.W., Bilchak C.R., Wang Y., Benicewicz B.C., Murdock L.A., Bereau T., Kumar S.K. Designing exceptional gas-separation polymer membranes using machine learning. Sci. Adv. 2020;6:eaaz4301. doi: 10.1126/sciadv.aaz4301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Alkharusi H. Categorical variables in regression analysis: a comparison of dummy and effect coding. Int. J. Educ. 2012;4:202. [Google Scholar]

- 79.Maaten L.V.D., Hinton G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008;9:2579–2605. [Google Scholar]

- 80.Yu K.Q., Li Z.S., Sun J. Polymer structures and glass transition: a molecular dynamics simulation study. Macromol. Theory Simul. 2001;10:624–633. [Google Scholar]

- 81.Mohammadi M., Davoodi J. The glass transition temperature of PMMA: a molecular dynamics study and comparison of various determination methods. Eur. Polym. J. 2017;91:121–133. [Google Scholar]

- 82.Yosinski J., Clune J., Bengio Y., Lipson H. How transferable are features in deep neural networks? Adv. Neural Inf. Process. Syst. 2014:3320–3328. [Google Scholar]

- 83.Sivaraman G., Jackson N., Sanchez-Lengeling B., Vasquez-Mayagoitia A., Aspuru-Guzik A., Vishwanath V., de Pablo J. Machine Learning: Science and Technology; 2020. A machine learning workflow for molecular analysis: application to melting points. [Google Scholar]

- 84.Doshi-Velez F., Kim B. Towards a rigorous science of interpretable machine learning. arXiv. 2017 preprint arXiv:1702.08608. [Google Scholar]

- 85.Jiménez-Luna J., Grisoni F., Schneider G. Drug discovery with explainable artificial intelligence. Nat. Mach. Intell. 2020;2:573–584. [Google Scholar]

- 86.Molnar C. Lulu. com; 2020. Interpretable Machine Learning. [Google Scholar]

- 87.Fonti V., Belitser E. Feature selection using lasso. VU Amsterdam Research Paper in Business Analytics. 2017;30:1–25. [Google Scholar]

- 88.Muthukrishnan R., Rohini R. IEEE International Conference on Advances in Computer Applications (ICACA), IEEE; 2016. In LASSO: A feature selection technique in predictive modeling for machine learning; pp. 18–20. [Google Scholar]

- 89.Naito K., Miura A. Molecular design for nonpolymeric organic dye glasses with thermal stability: relations between thermodynamic parameters and amorphous properties. J. Phys. Chem. 1993;97:6240–6248. [Google Scholar]

- 90.Painter P.C., Graf J.F., Coleman M.M. Effect of hydrogen bonding on the enthalpy of mixing and the composition dependence of the glass transition temperature in polymer blends. Macromolecules. 1991;24:5630–5638. [Google Scholar]

- 91.Kusner M.J., Paige B., Hernández-Lobato J.M. Grammar variational autoencoder. arXiv. 2017 preprint arXiv:1703.01925. [Google Scholar]

- 92.Pu Y., Gan Z., Henao R., Yuan X., Li C., Stevens A., Carin L. Advances in Neural Information Processing Systems. 2016. Variational autoencoder for deep learning of images, labels and captions; pp. 2352–2360. [Google Scholar]