Randomized controlled trials (RCTs) are the gold-standard approach for evaluating the risks, benefits, and comparative effectiveness of critical care interventions. However, RCT-level evidence evaluating many intensive care therapies is scarce (1–4). Characteristics inherent to critical care pose unique challenges to RCTs, including time-sensitive eligibility windows, the complexity of interventions and baseline critical care, and real or perceived ethical concerns over withholding potentially lifesaving therapy from critically ill patients. Even when theoretically feasible, RCTs can be slow or prohibitively expensive, and these constraints are often compounded when evaluating treatment effects among subgroups or measuring rare outcomes. In these cases, analyses of existing observational data may provide rapid, actionable information at substantially lower cost. The increasing availability of “big data” in health care, combined with contemporary computational and analytic advances, has enabled a proliferation of observational research using electronic health records (5). Yet it has also prompted acrimonious debates about the role that observational research should play in the assessment of treatment effects (6).

Critics of observational causal inference studies often cite their lack of random treatment assignment as predisposing to confounded effect estimates (6, 7). Careful specification of causal pathways, measurement of and adjustment for relevant confounders, and attention to the role of unmeasured confounding are paramount in addressing bias arising from a lack of randomization (8, 9). While analytic methods like regression adjustment and propensity score matching are commonly used to adjust for confounding, other fundamental flaws in the design of observational causal inference studies are often ignored (10, 11). One important error is the failure to synchronize time anchors that determine eligibility, treatment assignment, and the start of follow-up (12–14). In an observational study, the timing of eligibility refers to the point at which a participant meets criteria to be included in the study. This includes any criteria necessary for inclusion in an observational registry or dataset (e.g., intensive care unit transfer, endotracheal intubation, or pulmonary hypertension diagnosis). The timing of treatment assignment refers to the point at which a patient is “assigned” (nonrandomly in most observational studies) to a treatment or treatment strategy. This might be the point at which a physician selects an induction agent at intubation, orders a certain vasopressor to treat shock, or initiates corticosteroid therapy for acute exacerbation of idiopathic pulmonary fibrosis. Finally, the beginning of follow-up marks the point at which a patient’s outcome would be counted in the study and attributed to the patient’s specific treatment strategy. In randomized controlled trials and properly conducted observational studies, eligibility assessment, treatment assignment, and the start of follow-up are synchronized (Figure 1), or differences in their timing are explicitly addressed in the analysis.

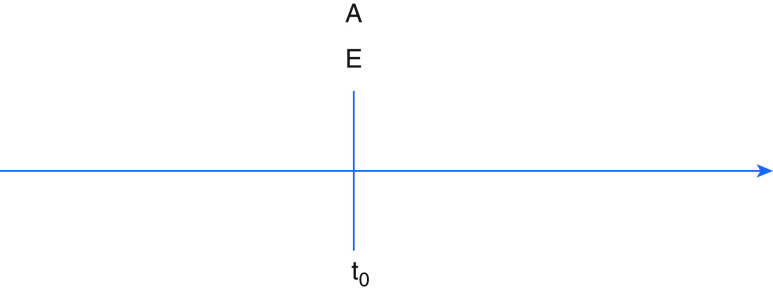

Figure 1.

Synchronization of eligibility and treatment group determination with start of follow-up (as in randomized controlled trial). A = treatment assignment; E = eligibility determination; t0 = start of follow-up.

To aid both readers and practitioners of observational research, we present three common misapplications of these time points in pulmonary and critical care research together with the biases they introduce. We then describe a design framework to overcome biases owing to discrepant time points.

Scenario 1: Treatment Assignment Placed after Eligibility Assessment and Start of Follow-Up

First, treatment assignment may occur after eligibility and the start of follow-up (Figure 2). Consider an observational study evaluating whether empiric anticoagulation reduces mortality among patients hospitalized with coronavirus disease (COVID-19) pneumonia. In this example, patients enter the cohort at the time of hospital admission and are assigned to the treatment group if they received empiric anticoagulation at any point during the hospitalization, which may not occur until days later. By design, patients included in the “anticoagulation” group cannot die during the period between admission and treatment assignment (because assignment occurs at the initiation of anticoagulation). As a result, deaths during this time can only be attributed to patients in the “no anticoagulation” group. Failing either to align assignment of anticoagulation with the start of follow-up or to account for the time-varying nature of treatment creates immortal time: follow-up during which, by design, treated patients cannot experience the outcome. When immortal time in the anticoagulation group is not addressed by the analysis, the resulting bias introduces a spurious survival advantage for anticoagulation. This scenario cannot arise in an intention-to-treat analysis of a corresponding RCT, in which treatment assignment is made at the time of enrollment and patients are analyzed in the groups to which they are randomized.

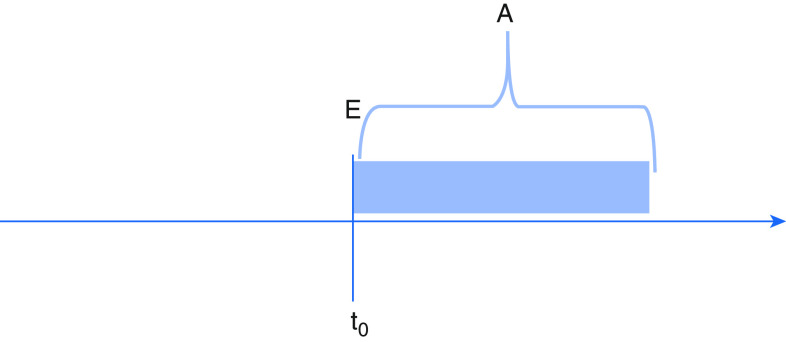

Figure 2.

Eligibility synchronized with start of follow-up, but treatment group assignment occurs after. A = treatment assignment; E = eligibility determination; t0 = start of follow-up.
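The mechanism in Scenario 1 can be illustrated with a small simulation. The sketch below is not drawn from any real study: the cohort size, the 28-day horizon, the survival distribution, and the function names (`simulate_cohort`, `naive_risks`, `time_varying_rates`) are all assumptions chosen for illustration. Death times are generated independently of treatment, so any apparent benefit of anticoagulation is an artifact of the design.

```python
import random

HORIZON = 28.0  # days of follow-up after admission (assumed)


def simulate_cohort(n=20000, seed=0):
    """Return (death_day, start_day) pairs; start_day is None if never treated.

    Death times are drawn independently of treatment, so the true effect is null.
    """
    rng = random.Random(seed)
    cohort = []
    for _ in range(n):
        death_day = rng.expovariate(1 / 60.0)  # mean survival of 60 days (assumed)
        # Half of patients are never treated; the rest are intended to start
        # anticoagulation on a random day within the first 10 days.
        start_day = rng.uniform(0.0, 10.0) if rng.random() < 0.5 else None
        cohort.append((death_day, start_day))
    return cohort


def received_treatment(death_day, start_day):
    """True if anticoagulation started while the patient was alive and in follow-up."""
    return start_day is not None and start_day < min(death_day, HORIZON)


def naive_risks(cohort):
    """Misaligned design: group by 'ever treated', count follow-up from admission."""
    deaths = {True: 0, False: 0}
    counts = {True: 0, False: 0}
    for death_day, start_day in cohort:
        treated = received_treatment(death_day, start_day)
        counts[treated] += 1
        deaths[treated] += death_day <= HORIZON
    return deaths[True] / counts[True], deaths[False] / counts[False]


def time_varying_rates(cohort):
    """Time-varying exposure: days before treatment start count as untreated."""
    deaths = {True: 0, False: 0}
    days = {True: 0.0, False: 0.0}
    for death_day, start_day in cohort:
        end = min(death_day, HORIZON)
        died = death_day <= HORIZON
        if received_treatment(death_day, start_day):
            days[False] += start_day       # pre-treatment time is untreated time
            days[True] += end - start_day
            deaths[True] += died
        else:
            days[False] += end
            deaths[False] += died
    return deaths[True] / days[True], deaths[False] / days[False]


cohort = simulate_cohort()
naive_treated, naive_untreated = naive_risks(cohort)
rate_treated, rate_untreated = time_varying_rates(cohort)
print(f"Naive 28-day risk: treated {naive_treated:.3f}, untreated {naive_untreated:.3f}")
print(f"Deaths per person-day: treated {rate_treated:.4f}, untreated {rate_untreated:.4f}")
```

Under these assumptions, the naive comparison shows lower mortality in the "anticoagulation" group even though treatment does nothing, whereas the person-time analysis yields similar death rates in treated and untreated time.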

Scenario 2: Eligibility Occurs after Treatment Assignment and Start of Follow-Up

Second, eligibility may be determined by characteristics occurring after treatment assignment and the start of follow-up (Figure 3). Consider a retrospective study evaluating whether early versus delayed antibiotics in the emergency department reduce mortality from culture-positive sepsis. Outcome ascertainment and follow-up might begin at emergency department arrival with treatment assignment (early or delayed antibiotics) occurring soon after. Culture-based eligibility, however, would not be established until one or more days later. Observational studies that establish eligibility using post-treatment factors such as culture results or discharge diagnoses commit several errors that introduce potential bias. First, because RCT eligibility criteria can only include information available to investigators at the time of prospective enrollment, observational studies that use “posterior” eligibility determination are susceptible to selection bias, and results are unlikely to replicate RCTs examining related questions. Second, and more importantly, they fail to address actionable clinical questions (because post-treatment data are not available to clinicians deciding at the bedside whether to treat). Finally, by basing eligibility on downstream factors that might themselves be affected by treatment, they risk arriving at incorrect estimates of treatment efficacy.

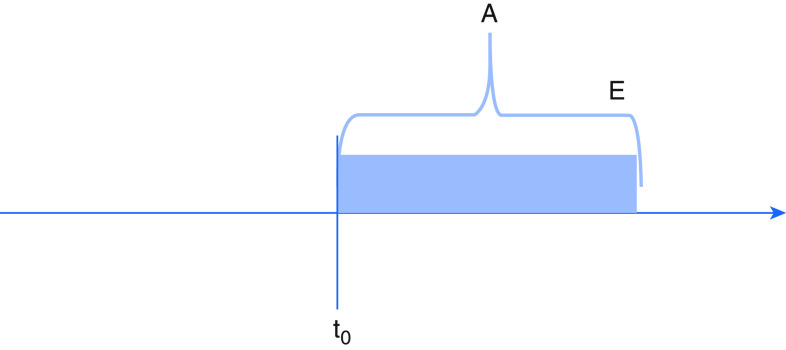

Figure 3.

Eligibility and treatment group assignment both determined after start of follow-up. A = treatment assignment; E = eligibility determination; t0 = start of follow-up.
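The last of the errors in Scenario 2, conditioning eligibility on a factor affected by treatment, can be sketched with a toy simulation. Everything here is hypothetical: the probabilities, the assumption that early antibiotics lower the chance of a positive culture, and the function names are illustrative choices, not estimates from any dataset. Antibiotic timing has no effect on mortality in the simulated data.

```python
import random


def simulate_sepsis_cohort(n=100000, seed=1):
    """Return (early, culture_positive, died) tuples for suspected-sepsis patients."""
    rng = random.Random(seed)
    patients = []
    for _ in range(n):
        severe = rng.random() < 0.5   # unmeasured illness severity
        early = rng.random() < 0.5    # antibiotic timing, independent of severity
        # Culture results arrive days later. Here positivity is more likely with
        # severe illness and less likely after early antibiotics (assumed values).
        p_positive = 0.20 + 0.50 * severe - 0.15 * early
        culture_positive = rng.random() < p_positive
        # Mortality depends on severity only: the true effect of timing is null.
        died = rng.random() < 0.05 + 0.50 * severe
        patients.append((early, culture_positive, died))
    return patients


def mortality_by_arm(patients):
    """Return (mortality with early antibiotics, mortality with delayed antibiotics)."""
    deaths = {True: 0, False: 0}
    counts = {True: 0, False: 0}
    for early, _, died in patients:
        counts[early] += 1
        deaths[early] += died
    return deaths[True] / counts[True], deaths[False] / counts[False]


patients = simulate_sepsis_cohort()

# Eligibility defined at baseline: everyone with suspected sepsis at arrival.
early_all, delayed_all = mortality_by_arm(patients)

# "Posterior" eligibility: restrict to patients whose cultures later turn positive.
culture_positive_only = [p for p in patients if p[1]]
early_pos, delayed_pos = mortality_by_arm(culture_positive_only)

print(f"Baseline eligibility: early {early_all:.3f}, delayed {delayed_all:.3f}")
print(f"Culture-positive only: early {early_pos:.3f}, delayed {delayed_pos:.3f}")
```

In this sketch the baseline-eligible cohort shows similar mortality in both arms, whereas the culture-positive subset makes early antibiotics look harmful, because early-treated patients who still have positive cultures are disproportionately the sickest. The direction of the distortion depends entirely on the assumed mechanism.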

Scenario 3: Follow-Up Begins after Eligibility and Treatment Assignment

Third, the start of follow-up might begin after both eligibility and treatment assignment (Figure 4). Consider a registry-based study evaluating the effectiveness of β-blockers in reducing acute exacerbations of chronic obstructive pulmonary disease (COPD). Patients with COPD are “assigned” to β-blocker treatment nonrandomly by a clinician’s decision and then later enroll in a registry. Follow-up and outcome ascertainment begin at registry enrollment when a study team member begins tracking the patient’s clinical trajectory. Including patients who have already received treatment (“prevalent users”) risks introducing selection bias. If, for example, the true effect of β-blockers is early harm, patients who receive β-blockers and die before registry inclusion will not have their outcomes counted. Results from this study will therefore be biased in favor of β-blockers because the patients who survive to registry enrollment, and thus appear in the study cohort, will have demonstrated tolerance to the treatment.

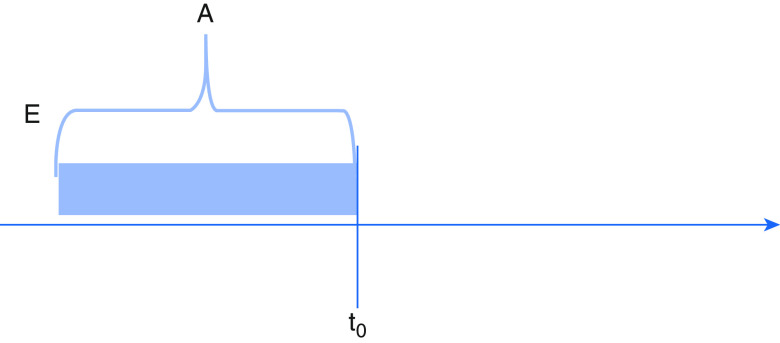

Figure 4.

Eligibility and treatment group both determined before start of follow-up. A = treatment assignment; E = eligibility determination; t0 = start of follow-up.
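The prevalent-user problem in Scenario 3 can also be shown with a toy simulation. The sketch below assumes, purely for illustration, that a frail subgroup is harmed by β-blockers before registry enrollment and that treatment has no effect afterward; the probabilities and function names are hypothetical.

```python
import random


def simulate_copd_patients(n=100000, seed=2):
    """Return (treated, died_before_registry, died_after_registry) tuples."""
    rng = random.Random(seed)
    patients = []
    for _ in range(n):
        frail = rng.random() < 0.3
        treated = rng.random() < 0.5  # treatment decision, independent of frailty
        # Between treatment start and registry enrollment, treatment harms
        # frail patients (assumed early harm).
        if frail:
            p_early = 0.50 if treated else 0.15
        else:
            p_early = 0.05
        died_early = rng.random() < p_early
        # After enrollment, risk depends on frailty only (no treatment effect).
        p_late = 0.30 if frail else 0.05
        died_late = (not died_early) and rng.random() < p_late
        patients.append((treated, died_early, died_late))
    return patients


def mortality_by_arm(patients, start_at_registry):
    """Return (treated mortality, untreated mortality) for the chosen time zero."""
    deaths = {True: 0, False: 0}
    counts = {True: 0, False: 0}
    for treated, died_early, died_late in patients:
        if start_at_registry:
            if died_early:
                continue  # never enrolled, so this death is never counted
            died = died_late
        else:
            died = died_early or died_late
        counts[treated] += 1
        deaths[treated] += died
    return deaths[True] / counts[True], deaths[False] / counts[False]


patients = simulate_copd_patients()
t_full, u_full = mortality_by_arm(patients, start_at_registry=False)
t_reg, u_reg = mortality_by_arm(patients, start_at_registry=True)
print(f"Follow-up from treatment start: treated {t_full:.3f}, untreated {u_full:.3f}")
print(f"Follow-up from registry entry:  treated {t_reg:.3f}, untreated {u_reg:.3f}")
```

With follow-up starting at treatment assignment, the simulated treatment is clearly harmful. With follow-up starting at registry enrollment, the treated group appears to do better, because frail patients who did not tolerate treatment died before they could be enrolled.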

A Modern Epidemiologic Strategy for Eliminating Bias owing to Misaligned Time Anchors

Observational causal inference research methods will continue to play a role in guiding clinical decisions in critical care when randomized trial results are not available or feasible. Our confidence in these data depends on the degree to which such studies produce accurate conclusions comparable to those from RCTs. Control of confounding (and attention to unmeasured confounding) is paramount to achieving such comparability. However, even well-controlled studies are vulnerable to bias when the design flaws described above are not explicitly mitigated or avoided. One strategy for mitigating these threats is to design the observational analysis to explicitly emulate a (hypothetical) pragmatic target trial. This intuitive technique, termed target trial emulation, is a modern epidemiological approach that reduces the likelihood of bias stemming from such design problems (15). In target trial emulation, investigators begin by posing a well-defined research question (e.g., “among eligible patients, what is the effect of treatment A versus treatment B on mortality?”) and specifying the characteristics of the hypothetical clinical trial they are seeking to replicate using observational data. Reliably captured, time-stamped data are important for ensuring that time anchors align closely enough to mirror a randomized trial. Investigators then select eligibility criteria for the target trial using baseline data only, which ensures that eligibility specification is aligned with the start of follow-up as would occur in the RCT and limits selection bias. Often, specification of a grace period (a time period after eligibility during which treatment initiation can happen) is necessary to reflect clinical practice. Analytical techniques (e.g., cloning, weighting, or time-dependent censoring) are used to account for this grace period without introducing immortal time bias.

In addition to providing a framework to aid synchronization of time anchors in observational research, the target trial emulation approach includes specification of other aspects of study design and analysis (e.g., outcomes, treatment strategies, follow-up, and causal contrasts) that help reduce bias and improve the interpretability of observational comparative effectiveness research. Indeed, Admon and colleagues demonstrated that applying target trial emulation to existing observational data produced results similar to those of a randomized trial regarding the effects of positive-pressure ventilation during tracheal intubation on oxygen saturation and severe hypoxemia (16).
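As one illustration of how a grace period can be handled, the sketch below implements only the cloning and artificial-censoring steps for two hypothetical strategies: "start treatment within the grace period" and "never start treatment." The data structures (`Patient`, `CloneRow`), the function `clone_and_censor`, and the arm labels are assumptions made for this example rather than part of any published tool. A full analysis would also need weighting (e.g., inverse probability of censoring weights) to account for the artificial censoring, which is omitted here.

```python
from dataclasses import dataclass
from typing import List, Optional

INF = float("inf")


@dataclass
class Patient:
    pid: int
    treat_day: Optional[float]  # days from time zero to treatment start; None if never
    death_day: Optional[float]  # days from time zero to death; None if alive


@dataclass
class CloneRow:
    pid: int
    arm: str
    end_day: float   # end of this clone's follow-up
    died: bool       # death observed while following the strategy
    censored: bool   # artificially censored for deviating from the strategy


def clone_and_censor(patients: List[Patient], grace_days: float,
                     horizon_days: float) -> List[CloneRow]:
    """Give every eligible patient one clone per strategy, all starting at time zero."""
    rows = []
    for p in patients:
        death = p.death_day if p.death_day is not None else INF
        treat = p.treat_day if p.treat_day is not None else INF
        if treat >= death:
            treat = INF  # treatment cannot start after death
        follow_up_end = min(death, horizon_days)

        # "Treat within grace period": a clone deviates if still untreated when
        # the grace period ends. "Never treat": a clone deviates when treatment starts.
        deviation_day = {
            "treat_within_grace": INF if treat <= grace_days else grace_days,
            "no_treatment": treat,
        }
        for arm, deviates_at in deviation_day.items():
            if deviates_at < follow_up_end:
                rows.append(CloneRow(p.pid, arm, deviates_at, False, True))
            else:
                rows.append(CloneRow(p.pid, arm, follow_up_end,
                                     death <= horizon_days, False))
    return rows


example = [
    Patient(1, treat_day=1.0, death_day=10.0),
    Patient(2, treat_day=None, death_day=2.0),
    Patient(3, treat_day=5.0, death_day=None),
    Patient(4, treat_day=None, death_day=None),
]
for row in clone_and_censor(example, grace_days=3.0, horizon_days=28.0):
    print(row)
```

Because every clone begins follow-up at time zero, a death during the grace period before treatment starts (patient 2 above) is counted under both strategies rather than only in the untreated group, which is how this design avoids creating immortal time.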

Standard use of design tools such as the Comparative Effectiveness Research Based on Observational Data to Emulate a Target Trial tool (17), together with reporting guidelines such as the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement (18), is also important to ensure transparent and complete reporting of how authors handle time anchors in observational designs. Prospective registration of observational comparative effectiveness studies should also be considered routine practice.

Conclusions

Given the challenges of conducting informative RCTs in critical care, observational data are an important source of evidence to guide clinical decisions. Observational researchers have begun to develop standardized methodology and reporting guidelines guided by causal inference principles that prioritize control of confounding. However, underrecognized errors in the synchronization of the time points that determine eligibility, treatment assignment, and the start of follow-up commonly affect observational analyses. Together with careful control of confounding, recognition of these errors and explicit efforts to avoid them will improve the quality and comparability of observational research.

Footnotes

Supported by the U.S. National Institutes of Health (NIH) National Institute of Nursing Research and National Library of Medicine (S.P.T. and M.A.K.), the Patient-Centered Outcomes Research Institute (M.A.K.), and the NIH National Heart, Lung, and Blood Institute (A.J.A.).

Author disclosures are available with the text of this article at www.atsjournals.org.

References

- 1. Santacruz CA, Pereira AJ, Celis E, Vincent JL. Which multicenter randomized controlled trials in critical care medicine have shown reduced mortality? A systematic review. Crit Care Med. 2019;47:1680–1691. doi: 10.1097/CCM.0000000000004000.

- 2. Vincent JL. We should abandon randomized controlled trials in the intensive care unit. Crit Care Med. 2010;38(Suppl):S534–S538. doi: 10.1097/CCM.0b013e3181f208ac.

- 3. Ospina-Tascón GA, Büchele GL, Vincent JL. Multicenter, randomized, controlled trials evaluating mortality in intensive care: doomed to fail? Crit Care Med. 2008;36:1311–1322. doi: 10.1097/CCM.0b013e318168ea3e.

- 4. Niven AS, Herasevich S, Pickering BW, Gajic O. The future of critical care lies in quality improvement and education. Ann Am Thorac Soc. 2019;16:649–656. doi: 10.1513/AnnalsATS.201812-847IP.

- 5. Semler MW, Bernard GR, Aaron SD, Angus DC, Biros MH, Brower RG, et al. Identifying clinical research priorities in adult pulmonary and critical care: NHLBI working group report. Am J Respir Crit Care Med. 2020;202:511–523. doi: 10.1164/rccm.201908-1595WS.

- 6. Collins R, Bowman L, Landray M, Peto R. The magic of randomization versus the myth of real-world evidence. N Engl J Med. 2020;382:674–678. doi: 10.1056/NEJMsb1901642.

- 7. Gerstein HC, McMurray J, Holman RR. Real-world studies no substitute for RCTs in establishing efficacy. Lancet. 2019;393:210–211. doi: 10.1016/S0140-6736(18)32840-X.

- 8. Lederer DJ, Bell SC, Branson RD, Chalmers JD, Marshall R, Maslove DM, et al. Control of confounding and reporting of results in causal inference studies: guidance for authors from editors of respiratory, sleep, and critical care journals. Ann Am Thorac Soc. 2019;16:22–28. [Published erratum appears in Ann Am Thorac Soc 16:283.]

- 9. Harhay MO, Au DH, Dell SD, Gould MK, Redline S, Ryerson CJ, et al. Methodologic guidance and expectations for the development and reporting of prediction models and causal inference studies. Ann Am Thorac Soc. 2020;17:679–682. doi: 10.1513/AnnalsATS.202002-141ED.

- 10. Forbes SP, Dahabreh IJ. Benchmarking observational analyses against randomized trials: a review of studies assessing propensity score methods. J Gen Intern Med. 2020;35:1396–1404. doi: 10.1007/s11606-020-05713-5.

- 11. Harhay MO, Donaldson GC. Guidance on statistical reporting to help improve your chances of a favorable statistical review. Am J Respir Crit Care Med. 2020;201:1035–1038. doi: 10.1164/rccm.202003-0477ED.

- 12. Hernán MA, Sauer BC, Hernández-Díaz S, Platt R, Shrier I. Specifying a target trial prevents immortal time bias and other self-inflicted injuries in observational analyses. J Clin Epidemiol. 2016;79:70–75. doi: 10.1016/j.jclinepi.2016.04.014.

- 13. Suissa S, Dell’Aniello S. Time-related biases in pharmacoepidemiology. Pharmacoepidemiol Drug Saf. 2020;29:1101–1110. doi: 10.1002/pds.5083.

- 14. Suissa S. Single-arm trials with historical controls: study designs to avoid time-related biases. Epidemiology. [online ahead of print] 28 Sep 2020.

- 15. Hernán MA, Robins JM. Using big data to emulate a target trial when a randomized trial is not available. Am J Epidemiol. 2016;183:758–764. doi: 10.1093/aje/kwv254.

- 16. Admon AJ, Donnelly JP, Casey JD, Janz DR, Russell DW, Joffe AM, et al. Emulating a novel clinical trial using existing observational data: predicting results of the PreVent study. Ann Am Thorac Soc. 2019;16:998–1007. doi: 10.1513/AnnalsATS.201903-241OC.

- 17. Zhang Y, Thamer M, Kshirsagar O, Hernán MA. Comparative effectiveness research based on observational data to emulate a target trial. 2019 [accessed 2020 Sep 16]. Available from: http://cerbot.org/

- 18. von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP; STROBE Initiative. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. J Clin Epidemiol. 2008;61:344–349. doi: 10.1016/j.jclinepi.2007.11.008.
