Abstract

Viral tests, including polymerase chain reaction (PCR) tests, are recommended to diagnose COVID-19 during the acute phase of infection. A diagnostic test should have high sensitivity; however, the sensitivity of the PCR test is strongly influenced by viral load, which changes over time. Because it is difficult to collect data before symptom onset, the current literature on the sensitivity of the PCR test before symptom onset is limited. In this study, we used a viral dynamics model to track, over time and including the presymptomatic period, the probability that PCR testing fails to detect a case. The model was parametrized using longitudinal viral load data collected from 30 hospitalized patients. The probability of failing to detect a case decreased toward symptom onset, reached its lowest value 2 days after symptom onset and increased afterwards. The probability was 1.0% (95% CI: 0.5 to 1.9) on the day of symptom onset and 60.2% (95% CI: 57.1 to 63.2) 2 days before symptom onset. Our study suggests that COVID-19 should be diagnosed by PCR testing with care, especially when the test is performed before or long after symptom onset. Further study is needed of patient groups with potentially different viral dynamics, such as asymptomatic cases.

Keywords: COVID-19, false-negative rate, PCR test, virus dynamics

1. Introduction

In persons with signs or symptoms consistent with COVID-19, or with a high likelihood of exposure (e.g. a history of close contact with a confirmed case or travel to an epicentre), viral testing combined with other tests (e.g. X-ray) is recommended to diagnose acute infection [1]. Viral tests (such as the polymerase chain reaction (PCR) test) detect the presence of SARS-CoV-2, the virus that causes COVID-19. Viral testing is also recommended to screen asymptomatic individuals, regardless of suspected exposure, for early identification of cases and for surveillance of prevalence and disease trends [1]. Antibody testing is another option, but it is mainly used to confirm past infection, because antibody levels take a few weeks after infection to reach detectable amounts [1,2].

PCR tests for SARS-CoV-2 vary according to the sampling process (i.e. sampling by patients or by healthcare workers [3]), the specimen type (upper and lower respiratory tract, saliva, blood, stool [4,5]), the collection kit, and the target genes and detection limits [6–8]. Further, test results can differ among runs, laboratories and PCR assays. Which specimen type is best is still debated. The choice of specimen type should be determined by the quality of the test (i.e. sensitivity and specificity) and by the safety and purpose of the test. For example, saliva samples can be self-collected, which reduces the risk of infection for healthcare workers and facilitates mass screening [9–12]. However, saliva samples from some patients can be thick, stringy and difficult to pipette [13]. Meanwhile, the viral load in nasal samples collected by patients was reported to be lower than that in nasopharyngeal swabs collected by health practitioners, resulting in lower sensitivity for patient-collected nasal samples [3].

In the context of controlling the COVID-19 pandemic, the probability of failing to detect a case appears to be the most important metric. Note that this probability is not the same as the false-negative rate. The false-negative rate is the probability of a negative result given that a swab contains viral genetic material, whereas the probability of failing to detect a case is the probability that an individual is infected (and potentially infectious) but provides a sample that is unlikely to contain detectable viral material, either because the sample was taken before viral shedding began or because the infection is at a stage where the viral load is below the threshold of consistent detection. Failing to detect a case leads to precautions and isolation being lifted for patients who are still infectious, thus increasing the risk of transmission in households and communities. In contrast, the probability of falsely detecting a case is generally considered negligible unless there are technical errors or reagent contamination [2].

The sensitivity of a PCR test is influenced by the sampling process and other factors, including the quality of sample collection [14]. Although not frequently discussed, sensitivity also depends on the timing of sample collection [2]. Viral load typically increases exponentially during the acute phase of infection, reaches a peak, and then declines until the virus is cleared. Because sensitivity depends on viral load, the probability of failing to detect a case changes with the temporal dynamics of viral load: it is high at the beginning of infection and long after infection, and low when the viral load is at its peak. For example, Kucirka et al. [15] and Borremans et al. [4] showed that the probability that SARS-CoV-2 tests fail to detect a case varies with time since exposure or symptom onset. The lowest rate was observed 3 days after symptom onset, which corresponds to the peak viral load observed in clinical data and estimated from mathematical models [16–21]. However, most of the data used by Kucirka et al. and Borremans et al. were collected after symptom onset. Furthermore, these authors estimated the probability of failing to detect a case before symptom onset using data from a single person, which may give a very poor estimate of the true probability. Kucirka et al. also did not account for the different types of tests used in the different studies, which is problematic because the detection limit varied between studies.

In the present study, we investigated the probability of failing to detect a case over time using a viral dynamics model rather than observed test results. This approach enabled us to investigate the probability of failing to detect a case even before symptom onset, by extrapolating the viral load before symptom onset from the model, and to derive the probability for different detection limits. First, we parametrized the viral dynamics model by fitting it to the data. Then, we ran simulations based on the parametrized model, added measurement error to create realistic viral load distributions, and computed the probability of failing to detect a case over time.

2. Results

2.1. Simulation to compute the probability of failing to detect a case over time

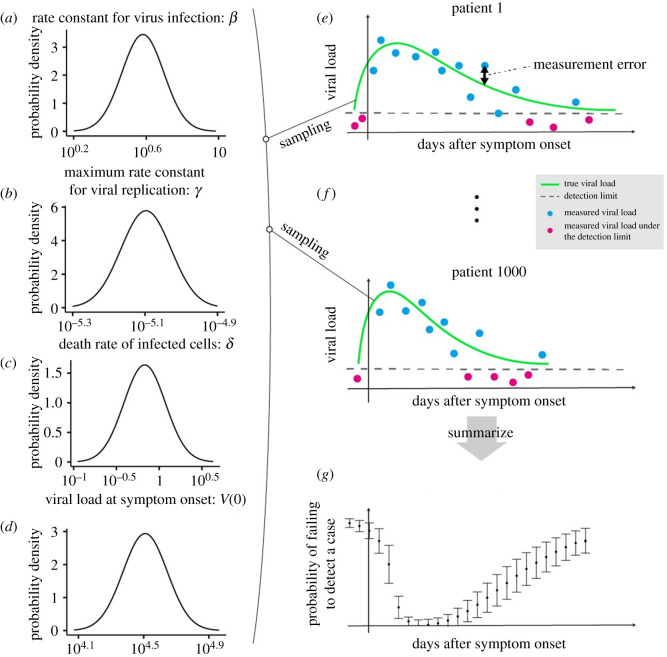

Using the parametrized viral dynamics model, we computed the viral load distribution over time, with days since symptom onset as the time scale. The fitted viral dynamics and the data are shown in the electronic supplementary material, figure S1 and table S1. We randomly resampled the parameter set (i.e. β, γ, δ and V(0)) from the estimated lognormal distributions, accounting for both the fixed-effect estimates and the variation in random effects, and ran the model (see Methods). We regarded the viral load obtained by running the model as the expected viral load. Each resampled viral load curve therefore corresponds to one patient; in other words, the parameter distributions include a random-effect component that accounts for individual variability. However, the viral load obtained from a PCR test is subject to measurement error. We therefore added measurement error to the expected viral load to obtain the measured viral load. We assumed that the error follows a normal distribution with a mean of zero and the variance, on the log10-transformed viral load, estimated during model fitting. In other words, we assumed that the errors are independent and identically distributed (i.e. uncorrelated both between patients and among repeated measurements within a patient). We repeated this process 1000 times to create the viral load distribution over time. The probability of failing to detect a case at day t (t ∈ {−2, …, 20}), denoted by p(t), is the proportion of simulated cases whose measured viral load is below the detection limit:

$$p(t) = \frac{1}{N}\sum_{i=1}^{N} I\left(\mathrm{VL}_i(t) < \mathrm{DL}\right),$$

where N = 1000 is the number of simulated patients, VL_i(t) is the measured viral load of individual i at time t, DL is the detection limit and I is the indicator function. The large-sample 95% confidence intervals (CIs) of the probability of failing to detect a case were computed by assuming a binomial distribution:

$$p(t) \pm 1.96\sqrt{\frac{p(t)\{1 - p(t)\}}{N}}.$$

Note that the detection limit varies with the test assay [7,22,23], ranging from 1 copy ml−1 to over 1000 copies ml−1. We used 100 copies ml−1 because it is roughly the median value we have seen in the literature. As a sensitivity analysis, we performed the same simulation with different detection limits (10 and 1000 copies ml−1). The computational process is summarized in figure 1.
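The last two steps of this procedure (adding measurement error, then computing p(t) and its large-sample CI) can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the expected log10 viral loads of the N simulated patients on a given day are already available as a list, takes the error standard deviation `sigma` as an input, and uses hypothetical function names and made-up example inputs. For p = 0.602 and N = 1000, `wald_ci` gives roughly (0.572, 0.632). Because the Wald interval can fall below 0 for very small p, the sketch clips it to [0, 1].

```python
import math
import random


def add_measurement_error(expected_log10_vl, sigma, rng):
    """Return measured log10 viral loads: expected value plus i.i.d. N(0, sigma^2) noise."""
    return [x + rng.gauss(0.0, sigma) for x in expected_log10_vl]


def prob_fail_to_detect(measured_log10_vl, detection_limit):
    """p(t): fraction of simulated patients whose measured load is below the detection limit.

    detection_limit is given in copies/ml; loads are compared on the log10 scale.
    """
    log10_dl = math.log10(detection_limit)
    below = sum(1 for x in measured_log10_vl if x < log10_dl)  # sum of I(VL < DL)
    return below / len(measured_log10_vl)


def wald_ci(p, n, z=1.96):
    """Large-sample (Wald) binomial CI for a proportion, clipped to [0, 1]."""
    half_width = z * math.sqrt(p * (1.0 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


if __name__ == "__main__":
    rng = random.Random(2020)
    # Hypothetical expected log10 loads for 1000 patients on one day (illustration only).
    expected = [rng.gauss(3.0, 1.0) for _ in range(1000)]
    measured = add_measurement_error(expected, sigma=0.5, rng=rng)
    for dl in (10, 100, 1000):  # detection limits considered in the text, copies/ml
        p = prob_fail_to_detect(measured, dl)
        lo, hi = wald_ci(p, len(measured))
        print(f"DL={dl}: p={p:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```

Because the same measured values are reused for every detection limit, p(t) can only stay the same or increase as the limit rises, which is the direction of the sensitivity analysis results.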

Figure 1.

Process of computing the probability of failing to detect a case. The parameter distributions are estimated by fitting the viral dynamics model to the viral load data extracted from clinical studies of SARS-CoV-2 (a–d). The parameter values are resampled from the estimated parameter distributions, and 1000 expected viral loads are computed by running the viral dynamics model (e,f). The green lines correspond to the computed expected viral load. The dashed grey line is the detection limit. The red and blue dots are the measured viral load, which is calculated by adding measurement error to the expected viral load. Then, we calculated the probability of failing to detect a case on day t as the proportion of negative measurements (red dots) among all the measurements on day t (g).

2.2. Time-dependent probability of failing to detect a case during SARS-CoV-2 infection

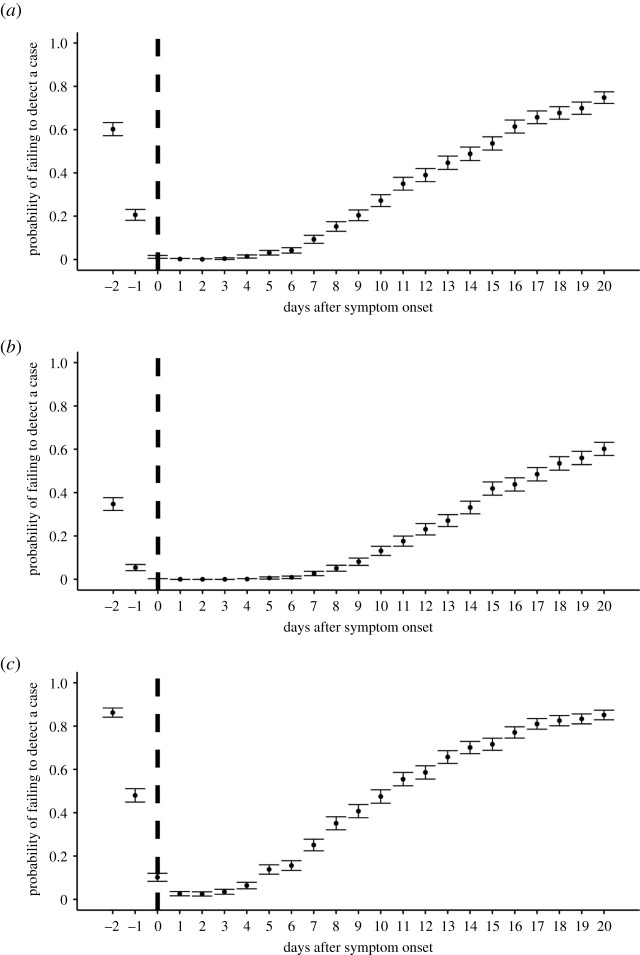

Figure 2a shows the computed probability of failing to detect a case over time with a detection limit of 100 copies ml−1. As expected from typical viral dynamics, the probability was high during the early phase of infection because of the low viral load, which is consistent with previous studies [4,15]. Before symptom onset, the probability of failing to detect a case was over 20% (60.2% (95% CI: 57.2% to 63.2%) at 2 days before symptom onset), suggesting that it is difficult to identify all presymptomatic cases with viral testing. The probability was lowest at 2 days after symptom onset, at 0.1% (95% CI: 0% to 0.3%), which corresponds to the timing of peak viral load. After that, the probability increased as the viral load declined and the virus was eliminated from patients. As a sensitivity analysis, we also computed the probability for different detection limits (figure 2b,c: detection limits of 10 and 1000 copies ml−1, respectively) and observed similar trends. The probability of failing to detect a case was higher with a higher detection limit: it exceeded 40% before symptom onset with a detection limit of 1000 copies ml−1.

Figure 2.

The probability of failing to detect a case over time with different detection limits (DLs). (a) DL = 100 copies ml−1, (b) DL = 10 copies ml−1, (c) DL = 1000 copies ml−1. The dots are the estimated probability of failing to detect a case at each time point and the bars correspond to the 95% CIs. The vertical dashed lines show the day of symptom onset.

3. Discussion

We computed the probability that a PCR test fails to detect a case over time using a viral dynamics model. The probability of failing to detect a case was high (over 20%) before symptom onset. The lowest probability appeared 2 days after symptom onset. After that, the probability increased as the virus was gradually cleared from the host. A similar time trend was observed for different detection limits; however, a higher detection limit yielded a higher probability of failing to detect a case.

We need to be careful in interpreting the probability of failing to detect a case before symptom onset as computed with our approach. We simply ran the model backward in time (hindcasting) without considering the timing of infection. Therefore, the computed viral load may not exist if the time point precedes infection. This becomes a serious issue when computing the probability well before symptom onset. For this reason, we show the probability of failing to detect a case only from 2 days before symptom onset onwards, because the 2.5th percentile of the incubation period was 2.2 days [15]. In other words, most of the simulated patients would already be infected and shedding virus 2.2 days before symptom onset. Further study may be needed to account for the timing of infection and obtain a more accurate estimate of the probability of failing to detect a case.
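The cut-off argument above can be made concrete: the share of cases already infected d days before symptom onset equals the probability that the incubation period is at least d days. The sketch below assumes a lognormal incubation period purely for illustration. Only the 2.5th percentile of 2.2 days comes from the text; the 5.1-day median and the lognormal shape are assumptions, and the function names are hypothetical. By construction it returns 0.975 at 2.2 days, and slightly more at 2 days.

```python
import math

Z_025 = -1.959963984540054  # 2.5th percentile of the standard normal distribution


def normal_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def lognormal_sigma(median, p025):
    """Log-scale SD of a lognormal with the given median and 2.5th percentile."""
    return (math.log(p025) - math.log(median)) / Z_025


def fraction_infected_before_onset(days_before_onset, median, sigma):
    """P(incubation period >= d): share of cases already infected d days before onset."""
    if days_before_onset <= 0:
        return 1.0
    z = (math.log(days_before_onset) - math.log(median)) / sigma
    return 1.0 - normal_cdf(z)


if __name__ == "__main__":
    MEDIAN_DAYS = 5.1  # ASSUMPTION for illustration; not a value reported in this paper
    sigma = lognormal_sigma(MEDIAN_DAYS, 2.2)  # 2.2 days = 2.5th percentile from the text
    for d in (1, 2, 2.2, 3, 5):
        print(d, round(fraction_infected_before_onset(d, MEDIAN_DAYS, sigma), 3))
```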

Providing an accurate probability of failing to detect a case is important for understanding both the epidemiology and the clinical characteristics of COVID-19. For example, the detected prevalence of COVID-19 in the general population based on PCR testing was recently reported for England [24]. These data provide a baseline for monitoring prevalence prospectively and will be useful in, for example, assessing the impact of countermeasures against the COVID-19 pandemic. However, the detected prevalence could be influenced by the probability of failing to detect a case. Because this probability depends on the time of specimen collection, recording the timing of the test (days since symptom onset) might help in estimating the true prevalence by accounting for the probability of failing to detect a case. Our estimated probability before symptom onset also has implications for contact tracing and quarantine, in which cases are tested before symptom onset; we do not recommend using PCR testing to rule out infection in those situations. Further, we do not recommend relying fully on PCR results for diagnosis, given the non-negligible probability of failing to detect a case depending on the timing of the test. Comprehensive medical examinations, such as chest X-ray and interviews about contact history, would complement the PCR test for acute cases.
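As a rough illustration of how test timing could feed into a prevalence estimate, the sketch below divides the observed positive fraction by the expected detection probability, averaged over an assumed distribution of days since symptom onset at the time of testing. This simple correction is not a method used in this study. It assumes perfect specificity (in line with the negligible false-positive probability noted in the Introduction) and known timing weights; all names and inputs are hypothetical.

```python
def expected_miss_probability(miss_prob_by_day, day_weights):
    """Weighted average of p(t) over the (assumed) distribution of test timing."""
    total = sum(day_weights.values())
    if total <= 0:
        raise ValueError("day_weights must sum to a positive number")
    return sum(miss_prob_by_day[d] * w for d, w in day_weights.items()) / total


def adjusted_prevalence(observed_positive_fraction, miss_prob_by_day, day_weights):
    """Observed positivity divided by (1 - expected miss probability); assumes no false positives."""
    p_miss = expected_miss_probability(miss_prob_by_day, day_weights)
    if p_miss >= 1.0:
        raise ValueError("expected miss probability must be below 1")
    return observed_positive_fraction / (1.0 - p_miss)


if __name__ == "__main__":
    # Hypothetical inputs: p(t) by day since onset and the share of infected people tested on each day.
    p_t = {-2: 0.6, 0: 0.01, 2: 0.001, 10: 0.1}
    weights = {-2: 0.1, 0: 0.3, 2: 0.4, 10: 0.2}
    print(adjusted_prevalence(0.005, p_t, weights))
```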

PCR tests have been extensively used in SARS-CoV-2 research because of their high sensitivity and specificity compared with other tests such as antibody and antigen tests. However, this does not diminish the value of other tests, and appropriate tests should be chosen depending not only on their sensitivity and specificity but also on the purpose of testing and the cost [25–27]. For example, frequency has been suggested to be more important than sensitivity for screening purposes [27]. For influenza, rapid molecular assays (i.e. nucleic acid amplification tests) and rapid influenza diagnostic tests (RIDTs) have been extensively used to diagnose outpatients [28]. A meta-analysis reported the sensitivity of the RIDT to be 62.3%, assessed using the PCR test as the gold standard (thus assuming 100% sensitivity for the PCR test) [29]. The sensitivity peaks around 2 to 3 days after symptom onset [29,30], which corresponds to the viral load peak [31] and is in line with our finding for SARS-CoV-2.

The strength of our approach is that we used viral dynamics rather than the observed probability of failing to detect a case, which enabled us to assess the probability at time points for which data were scarce, especially before symptom onset. One reason for the limited data before symptom onset is that people are rarely tested before symptom onset, as the test is more commonly used for diagnosis than for screening or surveillance. Although Kucirka et al. and Zhang et al. estimated the probability of failing to detect a case over time using observed test results, their estimates before symptom onset depended on a single set of data, which we do not consider reliable [15,32]. Another strength of our approach is that we can estimate the probability for different detection limits, because we estimated the distribution of viral load at each time point. Further, although we specifically computed the probability for SARS-CoV-2, the framework is applicable to other viruses causing acute respiratory infections, including influenza.

A few points need to be addressed in future studies. We used the viral load measured in upper respiratory specimens because such specimens are widely used for PCR testing. However, saliva may also be considered, because saliva specimens are easy to collect and safe for healthcare practitioners, and their viral load is sufficiently high compared with that of nasopharyngeal specimens, which are the gold standard [5,9,11,33]. It might be worth computing the probability of failing to detect a case for saliva specimens if their viral dynamics differ from those in upper respiratory specimens. Further, the probability might be computed for subgroups of the population. In our previous study, we found that viral load dynamics are highly variable among cases [17]: virus shedding continued for 10 days after symptom onset in some patients but for more than 30 days in others. Therefore, the probability of failing to detect a case should differ between such patient groups. If any biomarkers or demographic factors (e.g. age, sex, race/ethnicity) that differentiate viral dynamics are identified, they should be considered in computing the probability. We used only symptomatic cases in this study because data from asymptomatic cases were not available. However, the probability of failing to detect a case could differ between symptomatic and asymptomatic cases. Although the difference in the duration of virus shedding between symptomatic and asymptomatic cases remains controversial [34,35], viral load dynamics and the corresponding probability of failing to detect a case might depend on the presence or absence of symptoms. Likewise, the probability of failing to detect a case was nearly zero in the first week after symptom onset, which might be because the data included only hospitalized patients; indeed, viral load is known to be positively associated with disease severity [36–38]. Lastly, the viral dynamics model should be updated to account for new findings once available. For example, if a complex immune response proves important and is measured over time, such mechanisms should be incorporated into the model. As such data are still limited, we used the simplest model.

We computed the probability that the PCR test fails to detect a case over the time course of infection using a viral dynamics model. This probability needs to be considered in settings aimed at finding cases, such as screening, test and trace, and epidemiological surveillance.

4. Methods

4.1. Data

The longitudinal viral load data were extracted from four COVID-19 clinical studies [21,39–41]. The data include only symptomatic, hospitalized cases. Viral load was measured repeatedly, at intervals of a few days, after hospitalization. Some studies also measured viral load in other specimens (i.e. sputum, stool, blood); however, we used the data from upper respiratory specimens because (1) the upper respiratory tract is the primary target of infection, (2) these specimens are commonly used for diagnosis and (3) this ensures consistency of the data. Data from patients receiving antiviral treatment and from patients with fewer than two data points were excluded from the analysis. Ethics approval was obtained from the ethics committee of each medical/research institute for each study. Written informed consent was obtained from patients or their next of kin, as described in the original papers. We summarize the data in table 1.

Table 1.

Summary of data.

| papers | country | no. of included (excluded) cases | site of viral load data used | reporting value | detection limit (copies ml−1) | range of symptom onset | ageᶜ | sex (M : F) |
|---|---|---|---|---|---|---|---|---|
| Young et al. [40] | Singapore | 12 (6) | nasopharyngeal swab | cycle thresholdᵃ | 68.0 | 1/21–1/30 | 37.5 (31–56) | 6 : 6 |
| Zou et al. [39] | China | 8 (8) | nasal swab | cycle thresholdᵃ | 15.3 | 1/11–1/26 | 52.5 (28–78) | 3 : 5 |
| Kim et al. [41] | Korea | 2 (7) | nasopharyngeal and oropharyngeal swab | cycle thresholdᵃ | 68.0 | NA | NA | NA |
| Wölfel et al. [21] | Germany | 8 (1) | pharyngeal swab | viral load (copies/swab)ᵇ | 33.3 | 1/23–2/4 | NA | NA |

ᵃ Cycle threshold (Ct) values were converted using the formula log10(viral load [copies ml−1]) = −0.32 × Ct value [cycles] + 14.11 [39].

ᵇ One swab was assumed to correspond to 3 ml in Wölfel et al., according to the original paper.

ᶜ Median (range).
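The unit conversions in notes a and b of table 1 can be written as small helper functions. The slope, intercept and 3 ml swab volume come from the table notes; the function names are hypothetical, and the inverse (log10 load to Ct) is simply an algebraic rearrangement of the same formula.

```python
import math

CT_SLOPE = -0.32      # note a: change in log10 copies/ml per Ct cycle
CT_INTERCEPT = 14.11  # note a
SWAB_VOLUME_ML = 3.0  # note b: one swab assumed to be 3 ml (Wölfel et al.)


def ct_to_log10_copies_per_ml(ct):
    """Convert a cycle threshold value to log10 viral load (copies/ml)."""
    return CT_SLOPE * ct + CT_INTERCEPT


def log10_copies_per_ml_to_ct(log10_vl):
    """Inverse of ct_to_log10_copies_per_ml."""
    return (log10_vl - CT_INTERCEPT) / CT_SLOPE


def copies_per_swab_to_copies_per_ml(copies_per_swab):
    """Convert copies per swab to copies per ml, assuming a 3 ml swab."""
    return copies_per_swab / SWAB_VOLUME_ML


if __name__ == "__main__":
    print(ct_to_log10_copies_per_ml(30))
    print(log10_copies_per_ml_to_ct(math.log10(68.0)))
    print(copies_per_swab_to_copies_per_ml(100.0))
```

Under this formula, the 68 copies ml−1 detection limit corresponds to a Ct value of about 38.4, and 100 copies per swab corresponds to about 33.3 copies ml−1.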

4.2. A mathematical model for virus dynamics and parameter estimation using a nonlinear mixed-effects model

The following mathematical model describing viral dynamics was previously proposed in [18,42,43]:

$$\frac{df(t)}{dt} = -\beta f(t)V(t), \qquad \frac{dV(t)}{dt} = \gamma f(t)V(t) - \delta V(t),$$

where f(t) is the fraction of uninfected target cells at day t relative to that at day 0 (i.e. f(0) = 1), and V(t) is the amount of virus at day t. This two-dimensional model was derived from a three-dimensional model composed of viruses, uninfected cells and infected cells by assuming a quasi-steady state for the amount of virus [42]. This assumption is reasonable for most viruses causing acute infectious diseases because the clearance rate of the virus is typically much larger than the death rate of infected cells, as evidenced in vivo [42,44,45]. Note that, for practical purposes, time 0 corresponds to the day of symptom onset. The parameters β, γ and δ are the rate constant for virus infection, the maximum rate constant for viral replication and the death rate of infected cells, respectively. The viral load data from the four papers were fitted using a nonlinear mixed-effects model accounting for inter-individual variability in each parameter. Specifically, the parameter for individual k is given by $\theta_k = \theta e^{\pi_k}$, where θ is the fixed effect and π_k is the random effect, which follows the normal distribution $N(0, \sigma)$. Fixed-effect and random-effect parameters were estimated using the stochastic approximation expectation-maximization (SAEM) algorithm and the empirical Bayes method, respectively. The mixed-effects approach is commonly used to analyse longitudinal viral load data [46,47] because it can account for variability in parameters between cases and makes parameter estimation feasible for cases with limited data points. We used MONOLIX 2019R2 to implement parameter estimation [48]. To account for data points below the detection limits (15.3 copies ml−1 [39], 33.3 copies ml−1 [21] and 68 copies ml−1 [40,41]), the likelihood function treated data below the detection limit as censored [49].
Finally, we fitted a normal distribution with mean zero to the differences between the model-predicted and the observed log10-transformed viral loads to estimate the variance of the measurement error.
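A minimal sketch of the model df/dt = −βfV, dV/dt = γfV − δV and of drawing individual parameter sets as θ_k = θ·exp(π_k) is given below. It is not the MONOLIX implementation used in this study. It integrates forward from symptom onset with a classical fourth-order Runge-Kutta scheme (the backward extrapolation to 2 days before onset is omitted), treats σ in N(0, σ) as a standard deviation (an assumption), and uses hypothetical parameter values (`THETA`, `OMEGA`) chosen only to produce a plausible-looking curve, not the fitted estimates.

```python
import math
import random

# HYPOTHETICAL values for illustration only; not the estimates reported in this study.
THETA = {"beta": 1e-6, "gamma": 3.0, "delta": 0.6, "v0": 1e3}
OMEGA = {"beta": 0.3, "gamma": 0.2, "delta": 0.2, "v0": 0.5}  # assumed SDs of pi_k


def rhs(f, v, beta, gamma, delta):
    """Right-hand side: df/dt = -beta*f*V and dV/dt = gamma*f*V - delta*V."""
    return -beta * f * v, gamma * f * v - delta * v


def simulate(beta, gamma, delta, v0, t_end=20.0, dt=0.01):
    """Classical RK4 integration from t = 0 (symptom onset) with f(0) = 1 and V(0) = v0."""
    n_steps = int(round(t_end / dt))
    f, v = 1.0, v0
    times, fs, vs = [0.0], [f], [v]
    for step in range(1, n_steps + 1):
        k1 = rhs(f, v, beta, gamma, delta)
        k2 = rhs(f + 0.5 * dt * k1[0], v + 0.5 * dt * k1[1], beta, gamma, delta)
        k3 = rhs(f + 0.5 * dt * k2[0], v + 0.5 * dt * k2[1], beta, gamma, delta)
        k4 = rhs(f + dt * k3[0], v + dt * k3[1], beta, gamma, delta)
        f += dt / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0])
        v += dt / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1])
        times.append(step * dt)
        fs.append(f)
        vs.append(v)
    return times, fs, vs


def sample_individual(theta, omega, rng):
    """Draw theta_k = theta * exp(pi_k), pi_k ~ N(0, omega), i.e. a lognormal parameter."""
    return {name: value * math.exp(rng.gauss(0.0, omega[name])) for name, value in theta.items()}


if __name__ == "__main__":
    rng = random.Random(42)
    for _ in range(3):
        params = sample_individual(THETA, OMEGA, rng)
        times, fs, vs = simulate(**params)
        peak = max(range(len(vs)), key=vs.__getitem__)
        print(f"peak log10 V = {math.log10(vs[peak]):.2f} on day {times[peak]:.2f}")
```

A useful check on any integrator for this model is that V + (γ/β)f − (δ/β)ln f stays constant along every trajectory, since its time derivative is zero under the two equations.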

Contributor Information

Keisuke Ejima, Email: kejima@iu.edu.

Shingo Iwami, Email: siwami@kyushu-u.org.

Data accessibility

The codes and data used in this paper are available from the corresponding authors on reasonable request.

Authors' contributions

K.E. and S.I. conceived and designed the study. K.E., K.S.K., S.I. and S.Iw. analysed the data. K.E., K.S.K., S.I., Y.F., M.L., R.S.Z., K.A., T.W. and S.Iw. wrote the paper. All authors read and approved the final manuscript.

Competing interests

The authors have declared that no conflict of interest exists.

Funding

This study was supported in part by the Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Education 2019R1A6A3A12031316 (to K.S.K.); Grants-in-Aid for JSPS Scientific Research (KAKENHI) Scientific Research B (18KT0018 to S.Iw., 18H01139 to S.Iw. and 16H04845 to S.Iw.), Scientific Research S 15H05707 (to K.A.), Scientific Research in Innovative Areas (20H05042 to S.Iw., 19H04839 to S.Iw. and 18H05103 to S.Iw.); AMED JP20dm0307009 (to K.A.); AMED CREST 19gm1310002 (to S.Iw.); AMED Research Program on HIV/AIDS 19fk0410023s0101 (to S.Iw.); Research Program on Emerging and Re-emerging Infectious Diseases (19fk0108050h0003 to S.Iw., 19fk0108156h0001 to S.Iw., 20fk0108140s0801 to S.Iw. and 20fk0108413s0301 to S.Iw.); Program for Basic and Clinical Research on Hepatitis 19fk0210036h0502 (to S.Iw.); Program on the Innovative Development and the Application of New Drugs for Hepatitis B 19fk0310114h0103 (to S.Iw.); Moonshot R&D (grant nos. JPMJMS2021 (to K.A. and S.Iw.) and JPMJMS2025 (to S.Iw.)); JST MIRAI (to S.Iw.); Mitsui Life Social Welfare Foundation (to S.Iw.); Shin-Nihon of Advanced Medical Research (to S.Iw.); Life Science Foundation of Japan (to S.Iw.); SECOM Science and Technology Foundation (to S.Iw.); the Japan Prize Foundation (to S.Iw.); Daiwa Securities Health Foundation (to S.Iw.); and Foundation for the Fusion of Science and Technology (to S.Iw.). This research was supported through the MIDAS Coordination Center (MIDASSUGP2020-6) by a grant from the National Institute of General Medical Sciences (3U24GM132013-02S2 to K.E.).

References

- 1. Centers for Disease Control and Prevention. 2020. Overview of testing for SARS-CoV-2. https://www.cdc.gov/coronavirus/2019-ncov/hcp/testing-overview.html?CDC_AA_refVal=https%3A%2F%2Fwww.cdc.gov%2Fcoronavirus%2F2019-ncov%2Fhcp%2Fclinical-criteria.html#asymptomatic_without_exposure (accessed 23 June 2020).

- 2. Sethuraman N, Jeremiah SS, Ryo A. 2020. Interpreting diagnostic tests for SARS-CoV-2. JAMA 323, 2249-2251. (doi:10.1001/jama.2020.8259)

- 3. Tu Y-P, Jennings R, Hart B, Cangelosi GA, Wood RC, Wehber K, Verma P, Vojta D, Berke EM. 2020. Swabs collected by patients or health care workers for SARS-CoV-2 testing. N. Engl. J. Med. 383, 494-496. (doi:10.1056/NEJMc2016321)

- 4. Borremans B, Gamble A, Prager KC, Helman SK, Mcclain AM, Cox C, Savage V, Lloyd-Smith JO. 2020. Quantifying antibody kinetics and RNA detection during early-phase SARS-CoV-2 infection by time since symptom onset. eLife 9, e60122. (doi:10.7554/eLife.60122)

- 5. Iwasaki S, et al. 2020. Comparison of SARS-CoV-2 detection in nasopharyngeal swab and saliva. J. Infect. 81, e145-e147. (doi:10.1016/j.jinf.2020.05.071)

- 6. Iglói Z, Abou-Nouar ZA, Weller B, Matheeussen V, Coppens J, Koopmans M, Molenkamp R. 2020. Comparison of commercial realtime reverse transcription PCR assays for the detection of SARS-CoV-2. J. Clin. Virol. 129, 104510. (doi:10.1016/j.jcv.2020.104510)

- 7. van Kasteren PB, et al. 2020. Comparison of seven commercial RT-PCR diagnostic kits for COVID-19. J. Clin. Virol. 128, 104412. (doi:10.1016/j.jcv.2020.104412)

- 8. Arnaout R, Lee RA, Lee GR, Callahan C, Yen CF, Smith KP, Arora R, Kirby JE. 2020. SARS-CoV2 testing: the limit of detection matters. bioRxiv. 2020.06.02.131144.

- 9. Azzi L, et al. 2020. Saliva is a reliable tool to detect SARS-CoV-2. J. Infect. 81, e45-e50. (doi:10.1016/j.jinf.2020.04.005)

- 10. Wyllie AL, et al. 2020. Saliva or nasopharyngeal swab specimens for detection of SARS-CoV-2. N. Engl. J. Med. 383, 1283-1286. (doi:10.1056/NEJMc2016359)

- 11. Pasomsub E, Watcharananan SP, Boonyawat K, Janchompoo P, Wongtabtim G, Suksuwan W, Sungkanuparph S, Phuphuakrat A. 2021. Saliva sample as a non-invasive specimen for the diagnosis of coronavirus disease 2019: a cross-sectional study. Clin. Microbiol. Infect. 27, 285-e1. (doi:10.1016/j.cmi.2020.05.001)

- 12. Sapkota D, Thapa SB, Hasséus B, Jensen JL. 2020. Saliva testing for COVID-19? Br. Dent. J. 228, 658-659. (doi:10.1038/s41415-020-1594-7)

- 13. Landry ML, Criscuolo J, Peaper DR. 2020. Challenges in use of saliva for detection of SARS CoV-2 RNA in symptomatic outpatients. J. Clin. Virol. 130, 104567. (doi:10.1016/j.jcv.2020.104567)

- 14. Alsaleh AN, Whiley DM, Bialasiewicz S, Lambert SB, Ware RS, Nissen MD, Sloots TP, Grimwood K. 2014. Nasal swab samples and real-time polymerase chain reaction assays in community-based, longitudinal studies of respiratory viruses: the importance of sample integrity and quality control. BMC Infect. Dis. 14, 15. (doi:10.1186/1471-2334-14-15)

- 15. Kucirka LM, Lauer SA, Laeyendecker O, Boon D, Lessler J. 2020. Variation in false-negative rate of reverse transcriptase polymerase chain reaction-based SARS-CoV-2 tests by time since exposure. Ann. Intern. Med. 173, 262-267. (doi:10.7326/M20-1495)

- 16. Ejima K, et al. 2020. Inferring timing of infection using within-host SARS-CoV-2 infection dynamics model: are ‘Imported Cases’ truly imported? medRxiv. 2020.03.30.20040519.

- 17. Iwanami S, et al. 2020. Rethinking antiviral effects for COVID-19 in clinical studies: early initiation is key to successful treatment. medRxiv. 2020.05.30.20118067.

- 18. Kim KS, et al. 2020. Modelling SARS-CoV-2 dynamics: implications for therapy. medRxiv. 2020.03.23.20040493.

- 19. To KK-W, et al. 2020. Temporal profiles of viral load in posterior oropharyngeal saliva samples and serum antibody responses during infection by SARS-CoV-2: an observational cohort study. Lancet Infect. Dis. 20, 565-574. (doi:10.1016/S1473-3099(20)30196-1)

- 20. He X, et al. 2020. Temporal dynamics in viral shedding and transmissibility of COVID-19. Nat. Med. 26, 672-675. (doi:10.1038/s41591-020-0869-5)

- 21. Wölfel R, et al. 2020. Virological assessment of hospitalized patients with COVID-2019. Nature 581, 465-469. (doi:10.1038/s41586-020-2196-x)

- 22. Fung B, Gopez A, Servellita V, Arevalo S, Ho C, Deucher A, Thornborrow E, Chiu C, Miller S. 2020. Direct comparison of SARS-CoV-2 analytical limits of detection across seven molecular assays. J. Clin. Microbiol. 58, e01535-20. (doi:10.1128/JCM.01535-20)

- 23. Giri B, Pandey S, Shrestha R, Pokharel K, Ligler FS, Neupane BB. 2021. Review of analytical performance of COVID-19 detection methods. Anal. Bioanal. Chem. 413, 35-48. (doi:10.1007/s00216-020-02889-x)

- 24. Riley S, et al. 2020. Community prevalence of SARS-CoV-2 virus in England during May 2020: REACT study. medRxiv. 2020.07.10.20150524.

- 25. Quilty BJ, et al. 2021. Quarantine and testing strategies in contact tracing for SARS-CoV-2: a modelling study. Lancet Public Health 6, e175-e183. (doi:10.1016/S2468-2667(20)30308-X)

- 26. Boehme C, Hannay E, Sampath R. 2021. SARS-CoV-2 testing for public health use: core principles and considerations for defined use settings. Lancet Glob. Health 9, e247-e249. (doi:10.1016/S2214-109X(21)00006-1)

- 27. Larremore DB, Wilder B, Lester E, Shehata S, Burke JM, Hay JA, Tambe M, Mina MJ, Parker R. 2021. Test sensitivity is secondary to frequency and turnaround time for COVID-19 screening. Sci. Adv. 7, eabd5393. (doi:10.1126/sciadv.abd5393)

- 28. Uyeki TM, et al. 2018. Clinical practice guidelines by the Infectious Diseases Society of America: 2018 update on diagnosis, treatment, chemoprophylaxis, and institutional outbreak management of seasonal influenza. Clin. Infect. Dis. 68, e1-e47. (10.1093/cid/ciy866)

- 29. Chartrand C, Leeflang MM, Minion J, Brewer T, Pai M. 2012. Accuracy of rapid influenza diagnostic tests: a meta-analysis. Ann. Intern. Med. 156, 500-511. (10.7326/0003-4819-156-7-201204030-00403)

- 30. Tanei M, et al. 2014. Factors influencing the diagnostic accuracy of the rapid influenza antigen detection test (RIADT): a cross-sectional study. BMJ Open 4, e003885. (10.1136/bmjopen-2013-003885)

- 31. Boivin G, Goyette N, Hardy I, Aoki F, Wagner A, Trottier S. 2000. Rapid antiviral effect of inhaled zanamivir in the treatment of naturally occurring influenza in otherwise healthy adults. J. Infect. Dis. 181, 1471-1474. (10.1086/315392)

- 32. Zhang Z, et al. 2021. Insight into the practical performance of RT-PCR testing for SARS-CoV-2 using serological data: a cohort study. Lancet Microbe 2, e79-e87. (10.1016/S2666-5247(20)30200-7)

- 33. Wyllie AL, et al. 2020. Saliva is more sensitive for SARS-CoV-2 detection in COVID-19 patients than nasopharyngeal swabs. medRxiv. (10.1101/2020.04.16.20067835)

- 34. Long Q-X, et al. 2020. Clinical and immunological assessment of asymptomatic SARS-CoV-2 infections. Nat. Med. 26, 1200-1204. (10.1038/s41591-020-0965-6)

- 35. Lee S, et al. 2020. Clinical course and molecular viral shedding among asymptomatic and symptomatic patients with SARS-CoV-2 infection in a community treatment center in the Republic of Korea. JAMA Intern. Med. 180, 1447-1452. (10.1001/jamainternmed.2020.3862)

- 36. Magleby R, et al. In press. Impact of SARS-CoV-2 viral load on risk of intubation and mortality among hospitalized patients with coronavirus disease 2019. Clin. Infect. Dis. (10.1093/cid/ciaa851)

- 37. Zheng S, et al. 2020. Viral load dynamics and disease severity in patients infected with SARS-CoV-2 in Zhejiang province, China, January–March 2020: retrospective cohort study. BMJ 369, m1443. (10.1136/bmj.m1443)

- 38. Liu Y, Yan LM, Wan L, Xiang T-X, Le A, Liu J-M, Peiris M, Poon LLM. 2020. Viral dynamics in mild and severe cases of COVID-19. Lancet Infect. Dis. 20, 656-657. (10.1016/S1473-3099(20)30232-2)

- 39. Zou L, et al. 2020. SARS-CoV-2 viral load in upper respiratory specimens of infected patients. N. Engl. J. Med. 382, 1177-1179. (10.1056/NEJMc2001737)

- 40. Young BE, et al. 2020. Epidemiologic features and clinical course of patients infected with SARS-CoV-2 in Singapore. JAMA 323, 1488-1494. (10.1001/jama.2020.3204)

- 41. Kim ES, et al. 2020. Clinical course and outcomes of patients with severe acute respiratory syndrome coronavirus 2 infection: a preliminary report of the first 28 patients from the Korean cohort study on COVID-19. J. Korean Med. Sci. 35, e142. (10.3346/jkms.2020.35.e142)

- 42. Ikeda H, Nakaoka S, de Boer RJ, Morita S, Misawa N, Koyanagi Y, Aihara K, Sato K, Iwami S. 2016. Quantifying the effect of Vpu on the promotion of HIV-1 replication in the humanized mouse model. Retrovirology 13, 23. (10.1186/s12977-016-0252-2)

- 43. Perelson AS. 2002. Modelling viral and immune system dynamics. Nat. Rev. Immunol. 2, 28-36. (10.1038/nri700)

- 44. Martyushev A, Nakaoka S, Sato K, Noda T, Iwami S. 2016. Modelling Ebola virus dynamics: implications for therapy. Antiviral Res. 135, 62-73. (10.1016/j.antiviral.2016.10.004)

- 45. Nowak MA, May RM. 2000. Virus dynamics. Oxford, UK: Oxford University Press.

- 46. Gonçalves A, et al. 2020. Timing of antiviral treatment initiation is critical to reduce SARS-CoV-2 viral load. CPT: Pharmacometrics Syst. Pharmacol. 9, 509-514. (10.1002/psp4.12543)

- 47. Best K, Guedj J, Madelain V, De Lamballerie X, Lim S-Y, Osuna CE, Whitney JB, Perelson AS. 2017. Zika plasma viral dynamics in nonhuman primates provides insights into early infection and antiviral strategies. Proc. Natl Acad. Sci. USA 114, 8847-8852. (10.1073/pnas.1704011114)

- 48. Traynard P, Ayral G, Twarogowska M, Chauvin J. 2020. Efficient pharmacokinetic modeling workflow with the MonolixSuite: a case study of remifentanil. CPT: Pharmacometrics Syst. Pharmacol. 9, 198-210. (10.1002/psp4.12500)

- 49. Samson A, Lavielle M, Mentré F. 2006. Extension of the SAEM algorithm to left-censored data in nonlinear mixed-effects model: application to HIV dynamics model. Comput. Stat. Data Anal. 51, 1562-1574. (10.1016/j.csda.2006.05.007)

Data Availability Statement

The code and data used in this paper are available from the corresponding authors on reasonable request.