Abstract

Automated visual tracking of animals is rapidly becoming an indispensable tool for the study of behavior. It offers a quantitative methodology by which organisms’ sensing and decision-making can be studied in a wide range of ecological contexts. Despite this, existing solutions tend to be challenging to deploy in practice, especially when considering long and/or high-resolution video-streams. Here, we present TRex, a fast and easy-to-use solution for tracking a large number of individuals simultaneously using background-subtraction. It achieves real-time (60 Hz) tracking performance for up to approximately 256 individuals and estimates 2D visual-fields, outlines, and head/rear of bilateral animals, both in open- and closed-loop contexts. Additionally, TRex offers highly accurate, deep-learning-based visual identification of up to approximately 100 unmarked individuals, where it is between 2.5 and 46.7 times faster, and requires 2–10 times less memory, than comparable software (with relative performance increasing for more organisms/longer videos). It also provides interactive data-exploration within an intuitive, platform-independent graphical user-interface.

Research organism: D. melanogaster, Zebrafish, Other

Introduction

Tracking multiple moving animals (and multiple objects, generally) is important in various fields of research such as behavioral studies, ecophysiology, biomechanics, and neuroscience (Dell et al., 2014). Many tracking algorithms have been proposed in recent years (Ohayon et al., 2013, Fukunaga et al., 2015, Burgos-Artizzu et al., 2012, Rasch et al., 2016), often limited to/only tested with a particular organism (Hewitt et al., 2018, Branson et al., 2009) or type of organism (e.g. protists, Pennekamp et al., 2015; fly larvae and worms, Risse et al., 2017). Relatively few have been tested with a range of organisms and scenarios (Pérez-Escudero et al., 2014, Sridhar et al., 2019, Rodriguez et al., 2018). Furthermore, many existing tools only have a specialized set of features, struggle with very long or high-resolution (≥4 K) videos, or simply take too long to yield results. Existing fast algorithms are often severely limited with respect to the number of individuals that can be tracked simultaneously; for example, xyTracker (Rasch et al., 2016) allows for real-time tracking at 40 Hz while accurately maintaining identities, and thus is suitable for closed-loop experimentation (experiments where stimulus presentation can depend on the real-time behaviors of the individuals, for example Bath et al., 2014, Brembs and Heisenberg, 2000, Bianco and Engert, 2015), but has a limit of being able to track only five individuals simultaneously. ToxTrac (Rodriguez et al., 2018), a software comparable to xyTracker in its set of features, is limited to 20 individuals and relatively low frame-rates (≤25 fps). Others, while implementing a wide range of features and offering high-performance tracking, are costly and thus limited in access (Noldus et al., 2001). Perhaps with the exception of proprietary software, one major problem at present is the severe fragmentation of features across the various software solutions. For example, experimentalists must typically construct work-flows from many individual tools: one tool might be responsible for estimating the animals’ positions, another for estimating their posture, another one for reconstructing visual fields (which in turn probably also estimates animal posture, but does not export it in any way) and one for keeping identities – correcting results of other tools post-hoc. It can take a very long time to make them all work effectively together, adding what is often considerable overhead to behavioral studies.

TRex, the software released with this publication (available at trex.run under an Open-Source license), has been designed to address these problems, and thus to provide a powerful, fast and easy to use tool that will be of use in a wide range of behavioral studies. It allows users to track moving objects/animals, as long as there is a way to separate them from the background (e.g. static backgrounds, custom masks, as discussed below). In addition to the positions of individuals, our software provides other per-individual metrics such as body shape and, if applicable, head-/tail-position. This is achieved using a basic posture analysis, which works out of the box for most organisms, and, if required, can be easily adapted for others. Posture information, which includes the body center-line, can be useful for detecting for example courtship displays and other behaviors that might not otherwise be obvious from mere positional data. Additionally, with the visual sense often being one of the most important modalities to consider in behavioral research, we include the capability for users to obtain a computational reconstruction of the visual fields of all individuals (Strandburg-Peshkin et al., 2013; Rosenthal et al., 2015). This not only reveals which individuals are visible from an individual’s point-of-view, as well as the distance to them, but also which parts of others’ bodies are visible.

Included in the software package is a task-specific tool, TGrabs, that is employed to pre-process existing video files and which allows users to record directly from cameras capable of live-streaming to a computer (with extensible support from generic webcams to high-end machine vision cameras). It supports most of the above-mentioned tracking features (positions, posture, visual field) and provides access to results immediately while continuing to record/process. This not only saves time, since tracking results are available immediately after the trial, but makes closed-loop support possible for large groups of individuals (≤ 128 individuals). TRex and TGrabs are written in C++ but, as part of our closed-loop support, we are providing a Python-based general scripting interface which can be fully customized by the user without the need to recompile or relaunch. This interface allows for compatibility with external programs (e.g. for closed-loop stimulus-presentation) and other custom extensions.

The fast tracking described above employs information about the kinematics of each organism in order to try to maintain their identities. This is very fast and useful in many scenarios, for example where general assessments about group properties (group centroid, alignment of individuals, density, etc.) are to be made. However, when making conclusions about individuals instead, maintaining identities perfectly throughout the video is a critical requirement. Every tracking method inevitably makes mistakes, which, for small groups of two or three individuals or short videos, can be corrected manually – at the expense of spending much more time on analysis, which rapidly becomes prohibitive as the number of individuals to be tracked increases. To make matters worse, when multiple individuals stay out of view of the camera for too long (such as if individuals move out of frame, under a shelter, or occlude one another) there is no way to know who is whom once they re-emerge. With no baseline truth available (e.g. using physical tags as in Alarcón‐Nieto et al., 2018, Nagy et al., 2013; or marker-less methods as in Pérez-Escudero et al., 2014, Romero-Ferrero et al., 2019, Rasch et al., 2016), these mistakes cannot be corrected and accumulate over time, until eventually all identities are fully shuffled. To solve this problem (and without the need to mark, or add physical tags to individuals), TRex can, at the cost of spending more time on analysis (and thus not during live-tracking), automatically learn the identity of up to approximately 100 unmarked individuals based on their visual appearance. This machine-learning-based approach, herein termed visual identification, provides an independent source of information on the identity of individuals, which is used to detect and correct potential tracking mistakes without the need for human supervision.

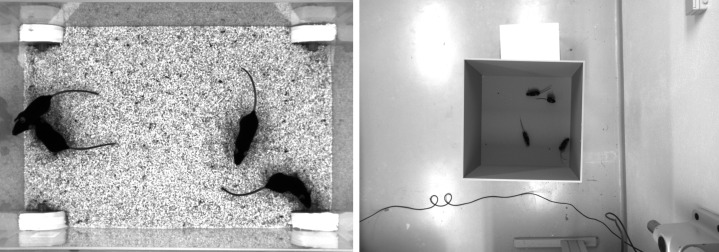

In this paper, we evaluate the most important functions of our software in terms of speed and reliability using a wide range of experimental systems, including termites, fruit flies, locusts, and multiple species of schooling fish (although we stress that our software is not limited to such species).

Specifically regarding the visual identification of unmarked individuals in groups, idtracker.ai is currently state-of-the-art, yielding high-accuracy (> 99% in most cases) in maintaining consistent identity assignments across entire videos (Romero-Ferrero et al., 2019). Similarly to TRex, this is achieved by training an artificial neural network to visually differentiate between individuals, and using identity predictions from this network to avoid/correct tracking mistakes. Both approaches work without human supervision, and are limited to approximately 100 individuals. Given that idtracker.ai is the only currently available tool with visual identification for such large groups of individuals, and also because of the quality of results, we will use it as a benchmark for our visual identification system. Results will be compared in terms of both accuracy and computation speed, showing TRex’ ability to achieve the same high level of accuracy but typically at far higher speeds, and with a much reduced memory requirement.

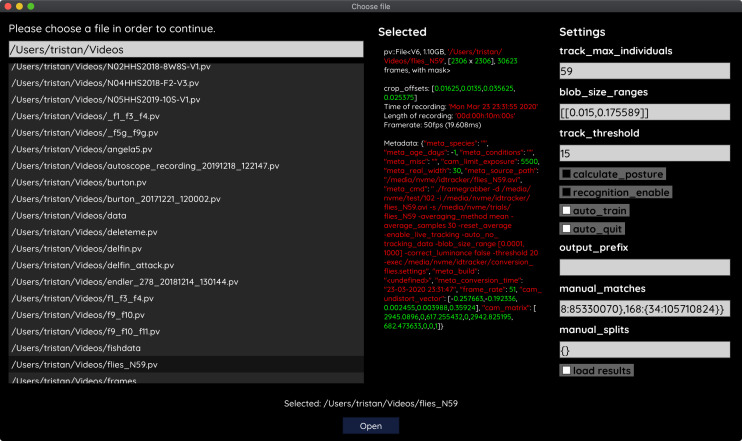

TRex is platform-independent, runs on all major operating systems (Linux, Windows, macOS), and offers complete batch processing support, allowing users to efficiently process entire sets of videos without requiring human intervention. All parameters can be accessed either through settings files, from within the graphical user interface (or GUI), or using the command-line. The user interface supports off-site access using a built-in web-server (although it is recommended to only use this from within a secure VPN environment). Available parameters are explained in the documentation directly as part of the GUI and on an external website (see below). Results can be exported to independent data-containers (NPZ, or CSV for plain-text type data) for further analyses in software of the user’s choosing. We will not go into detail regarding the many GUI functions: although they are of great utility to the researcher, they are only the means to easily apply the features presented herein. Some examples will be given in the main text and appendix, but a comprehensive collection of all of them, as well as detailed and up-to-date documentation, is available online at trex.run/docs.

Results

Our software package consists of two task-specific tools, TGrabs and TRex, with different specializations. TGrabs is primarily designed to connect to cameras and to be very fast. It employs the same program code as TRex to achieve real-time online tracking, such as could be employed for closed-loop experiments (the user can launch TGrabs from the opening dialog of TRex). However, its focus on speed comes at the cost of not having access to the rich GUI or more sophisticated (and thus slower) processing steps, such as deep-learning-based identification, that TRex provides. TRex focusses on the more time-consuming tasks, as well as visual data exploration, re-tracking existing results – but sometimes it simply functions as an easier-to-use graphical interface for tracking and adjusting parameters. Together they provide a wide range of capabilities to the user and are often used in sequence as part of the same work-flow. Typically, such a sequence can be summarized in four stages (see also Figure 1 for a flow diagram):

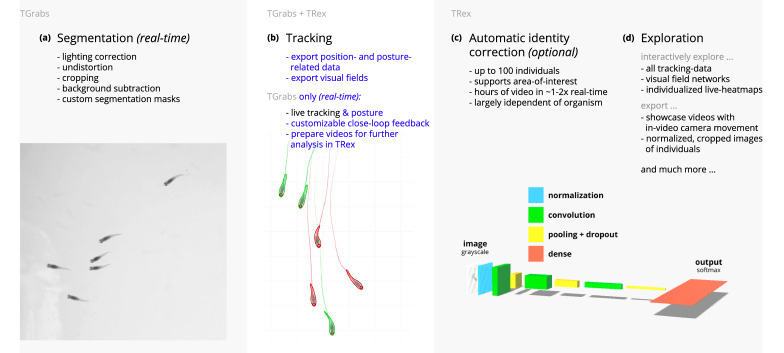

Figure 1. Videos are typically processed in four main stages, illustrated here each with a list of prominent features.

Some of them are accessible from both TRex and TGrabs, while others are software specific (as shown at the very top). (a) The video is either recorded directly with our software (TGrabs), or converted from a pre-recorded video file. Live-tracking enables users to perform closed-loop experiments, for which a virtual testing environment is provided. (b) Videos can be tracked and parameters adjusted with visual feedback. Various exploration and data presentation features are provided and customized data streams can be exported for use in external software. (c) After successful tracking, automatic visual identification can, optionally, be used to refine results. An artificial neural network is trained to recognize individuals, helping to automatically correct potential tracking mistakes. In the last stage, many graphical tools are available to users of TRex, a selection of which is listed in (d).

1. Segmentation in TGrabs. When recording a video or converting a previously recorded file (e.g. MP4, .AVI, etc.), it is segmented into background and foreground-objects (blobs), the latter typically being the entities to be tracked. Results are saved to a custom, non-proprietary video format (PV) (Figure 2a).

2. Tracking the video, either directly in TGrabs, or in TRex after pre-processing, with access to customizable visualizations and the ability to change tracking parameters on-the-fly. Here, we will describe two types of data available within TRex, 2D posture- and visual-field estimation, as well as real-time applications of such data (Figure 2b).

3. Automatic identity correction (Figure 2c), a way of utilizing the power of a trained neural network to perform visual identification of individuals, is available in TRex only. This step may not be necessary in many cases, but it is the only way to guarantee consistent identities throughout the video. It is also the most processing-heavy (and thus usually the most time-consuming) step, as well as the only one involving machine learning. All previously collected posture- and other tracking-related data are utilized in this step, placing it late in a typical workflow.

4. Data visualization is a critical component of any research project, especially for unfamiliar datasets, but manually crafting one for every new experiment can be very time-consuming. Thus, TRex offers a universal, highly customizable, way to make all collected data available for interactive exploration (Figure 2d) – allowing users to change many display options and recording video clips for external playback. Tracking parameters can be adjusted on the fly (many with visual feedback) – important for example when preparing a closed-loop feedback with a new species or setup.
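The segmentation stage listed above can be illustrated with a minimal sketch. This is not the TGrabs implementation: the function names `segment_blobs` and `centroid`, the fixed `threshold`, and the use of 4-connected flood filling are all assumptions made for illustration, and the frames are tiny nested lists of grayscale values rather than real video data.

```python
from collections import deque


def segment_blobs(frame, background, threshold=30):
    """Return a list of blobs; each blob is a list of (row, col) pixels.

    Illustrative only: `threshold` and 4-connectivity are assumptions.
    """
    height, width = len(frame), len(frame[0])
    # A pixel counts as foreground if it differs enough from the background.
    foreground = [
        [abs(frame[r][c] - background[r][c]) > threshold for c in range(width)]
        for r in range(height)
    ]
    visited = [[False] * width for _ in range(height)]
    blobs = []
    for r in range(height):
        for c in range(width):
            if not foreground[r][c] or visited[r][c]:
                continue
            # Flood-fill one 4-connected component of foreground pixels.
            queue = deque([(r, c)])
            visited[r][c] = True
            blob = []
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and foreground[ny][nx] and not visited[ny][nx]):
                        visited[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs


def centroid(blob):
    """Mean (row, col) position of a blob's pixels."""
    n = len(blob)
    return (sum(y for y, _ in blob) / n, sum(x for _, x in blob) / n)


# Toy example: a bright static background with two dark objects.
background = [[200] * 6 for _ in range(4)]
frame = [row[:] for row in background]
frame[0][0] = frame[0][1] = 50   # first object
frame[2][4] = frame[3][4] = 60   # second object

blobs = segment_blobs(frame, background)
centroids = [centroid(b) for b in blobs]
```

Only the foreground pixels and their positions need to be kept for later stages, which is the idea behind storing segmented results rather than full frames.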

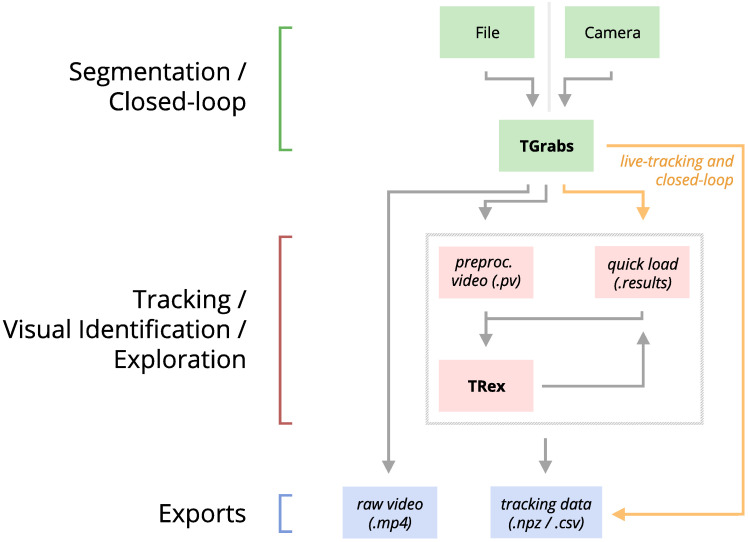

Figure 2. An overview of the interconnection between TRex, TGrabs and their data in- and output formats, with titles on the left corresponding to the stages in Figure 1.

Starting at the top of the figure, video is either streamed to TGrabs from a file or directly from a compatible camera. At this stage, preprocessed data are saved to a .pv file which can be read by TRex later on. Thanks to its integration with parts of the TRex code, TGrabs can also perform online tracking for limited numbers of individuals, and save results to a .results file (that can be opened by TRex) along with individual tracking data saved to numpy data-containers (.npz) or standard CSV files, which can be used for analysis in third-party applications. If required, videos recorded directly using TGrabs can also be streamed to a .mp4 video file which can be viewed in commonly available video players like VLC.

Figure 2—video 1. This video shows an overview of the typical chronology of operations when using our software.

Below we assess the performance of our software regarding four properties that are most important when using it (or in fact any tracking software) in practice: (i) the time it takes to perform tracking, (ii) the time it takes to perform automatic identity correction, (iii) the peak memory consumption when correcting identities (since this is where memory consumption is maximal), and (iv) the accuracy of the produced trajectories after visual identification.

While accuracy is an important metric and specific to identification tasks, time and memory are typically of considerable practical importance for all tasks. For example, tracking-speed may be the difference between only being able to run a few trials and producing more reliable results with a much larger number of trials. In addition, tracking speed can make a major difference as the number of individuals increases. Furthermore, memory constraints can be extremely prohibitive, making tracking over long video sequences and/or for a large number of individuals extremely time-consuming, or impossible, for the user.

In all of our tests, we used a relatively modest computer system, which could be described as a mid-range consumer or gaming PC:

- Intel Core i9-7900X CPU
- NVIDIA GeForce 1080 Ti
- 64 GB RAM
- NVMe PCIe x4 hard-drive
- Debian bullseye (debian.org)

As can be seen in the following sections (memory consumption, processing speeds, etc.) using a high-end system is not necessary to run TRex and, anecdotally, we did not observe noticeable improvements when using a solid state drive versus a normal hard drive. A video card (presently an NVIDIA card due to the requirements of TensorFlow) is recommended for tasks involving visual identification as such computations will take much longer without it – however, it is not required. We decided to employ this system due to having a relatively cheap, compatible graphics card, as well as to ensure that we have an easy way to produce direct comparisons with idtracker.ai – which according to their website requires large amounts of RAM (32 – 128 GB, idtrackerai online documentation) and a fast solid-state drive.

Table 1 shows the entire set of videos used in this paper, which have been obtained from multiple sources (credited under the table) and span a wide range of different organisms, demonstrating TRex’ ability to track anything as long as it moves occasionally. Videos involving a large number (> 100) of individuals are all the same species of fish since these were the only organisms we had available in such quantities. However, this is not to say that only fish could be tracked efficiently in these quantities. We used the full dataset with up to 1024 individuals in one video (Video 0) to evaluate raw tracking speed without visual identification and identity corrections (next sub-section). However, since such numbers of individuals exceed the capacity of the neural network used for automatic identity corrections (compare also Romero-Ferrero et al., 2019 who used a similar network), we only used a subset of these videos (videos 7 through 16) to look specifically into the quality of our visual identification in terms of keeping identities and its memory consumption.

Table 1. A list of the videos used in this paper as part of the evaluation of TRex, along with the species of animals in the videos and their common names, as well as other video-specific properties.

Videos are given an incremental ID, sorted by the number of individuals in the video, to make references more efficient in the following text. Individual quantities are given accurately, except for the videos with more than 100 individuals, where the exact number may be slightly higher or lower. These videos have been analyzed using TRex’ dynamic analysis mode that supports unknown quantities of animals. Videos 7 and 8, as well as 11–13, are available as part of the original idtracker paper (Pérez-Escudero et al., 2014). Many of the videos are part of yet unpublished data: Guppy videos have been recorded by A. Albi, videos with sunbleak (Leucaspius delineatus) have been recorded by D. Bath. The termite video has been kindly provided by H. Hugo and the locust video by F. Oberhauser. Due to the size of some of these videos (>150 GB per video), they have to be made available upon specific request. Raw versions of these videos (some trimmed), as well as full preprocessed versions, are available as part of the dataset published alongside this paper (Walter et al., 2020).

| ID | Species | Common name | # ind. | Fps (Hz) | Duration | Size (px²) |
|---|---|---|---|---|---|---|
| 0 | Leucaspius delineatus | Sunbleak | 1024 | 40 | 8 min 20 s | 3866 × 4048 |
| 1 | Leucaspius delineatus | Sunbleak | 512 | 50 | 6 min 40 s | 3866 × 4140 |
| 2 | Leucaspius delineatus | Sunbleak | 512 | 60 | 5 min 59 s | 3866 × 4048 |
| 3 | Leucaspius delineatus | Sunbleak | 256 | 50 | 6 min 40 s | 3866 × 4140 |
| 4 | Leucaspius delineatus | Sunbleak | 256 | 60 | 5 min 59 s | 3866 × 4048 |
| 5 | Leucaspius delineatus | Sunbleak | 128 | 60 | 6 min | 3866 × 4048 |
| 6 | Leucaspius delineatus | Sunbleak | 128 | 60 | 5 min 59 s | 3866 × 4048 |
| 7 | Danio rerio | Zebrafish | 100 | 32 | 1 min | 3584 × 3500 |
| 8 | Drosophila melanogaster | Fruit-fly | 59 | 51 | 10 min | 2306 × 2306 |
| 9 | Schistocerca gregaria | Locust | 15 | 25 | 1 hr 0 min | 1880 × 1881 |
| 10 | Constrictotermes cyphergaster | Termite | 10 | 100 | 10 min 5 s | 1920 × 1080 |
| 11 | Danio rerio | Zebrafish | 10 | 32 | 10 min 10 s | 3712 × 3712 |
| 12 | Danio rerio | Zebrafish | 10 | 32 | 10 min 3 s | 3712 × 3712 |
| 13 | Danio rerio | Zebrafish | 10 | 32 | 10 min 3 s | 3712 × 3712 |
| 14 | Poecilia reticulata | Guppy | 8 | 30 | 3 hr 15 min 22 s | 3008 × 3008 |
| 15 | Poecilia reticulata | Guppy | 8 | 25 | 1 hr 12 min | 3008 × 3008 |
| 16 | Poecilia reticulata | Guppy | 8 | 35 | 3 hr 18 min 13 s | 3008 × 3008 |
| 17 | Poecilia reticulata | Guppy | 1 | 140 | 1 hr 9 min 32 s | 1312 × 1312 |

Tracking: speed and accuracy

In evaluating the Tracking portion of TRex, the main focus lies with processing speed, while accuracy in terms of keeping identities is of secondary importance. Tracking is required in all other parts of the software, making it an attractive target for extensive optimization. Especially with regard to closed-loop, and live-tracking situations, there may be no room even to lose a millisecond between frames and thus risk dropping frames. We therefore designed TRex to support the simultaneous tracking of many (≥256) individuals quickly and achieve reasonable accuracy for up to 100 individuals – which are the two suppositions we will investigate in the following.

Trials were run without posture/visual-field estimation enabled, where tracking generally, and consistently, reaches speeds faster than real-time (processing times of 1.5–40 % of the video duration, 25–100 Hz) even for a relatively large number of individuals (77–94.77 % for up to 256 individuals, see Appendix 4—table 1). Videos with more individuals (> 500) were still tracked within a reasonable time of 235–358 % of the video duration. As would be expected from these results, we found that combining tracking and recording in a single step generally leads to higher processing speeds. The only situation where this was not the case was a video with 1024 individuals, which suggests that live-tracking (in TGrabs) handles cases with many individuals slightly worse than offline tracking (in TRex). Otherwise, 5–35 % shorter total processing times were measured (14.55 % on average, see Appendix 4—table 4), compared to running TGrabs separately and then tracking in TRex. These percentage differences, in most cases, reflect the ratio between the video duration and the time it takes to track it, suggesting that most time is spent – by far – on the conversion of videos. This additional cost can be avoided in practice when using TGrabs to record videos, by directly writing to a custom format recognized by TRex, and/or using its live-tracking ability to export tracking data immediately after the recording is stopped.
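To make the relationship between "percent of video duration" and real-time performance concrete, the following sketch converts a processing time into both measures. The function name `processing_ratio` and the example numbers are hypothetical and are not taken from the measurements reported here; a value below 100 % means tracking ran faster than real-time.

```python
def processing_ratio(n_frames, video_fps, processing_seconds):
    """Return (processing time as % of video duration, effective tracking rate in Hz)."""
    video_seconds = n_frames / video_fps
    percent_of_duration = 100.0 * processing_seconds / video_seconds
    effective_fps = n_frames / processing_seconds
    return percent_of_duration, effective_fps


# Hypothetical example: a 10 min video at 60 Hz that is tracked in 4 min.
percent, effective_fps = processing_ratio(
    n_frames=36000, video_fps=60, processing_seconds=240
)
# percent is below 100, so this hypothetical run is faster than real-time.
```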

We also investigated trials that were run with posture estimation enabled and we found that real-time speed could be achieved for videos with ≤128 individuals (see column ‘tracking’ in Appendix 4—table 4). Tracking speed, when posture estimation is enabled, depends more strongly on the size of individuals in the image.

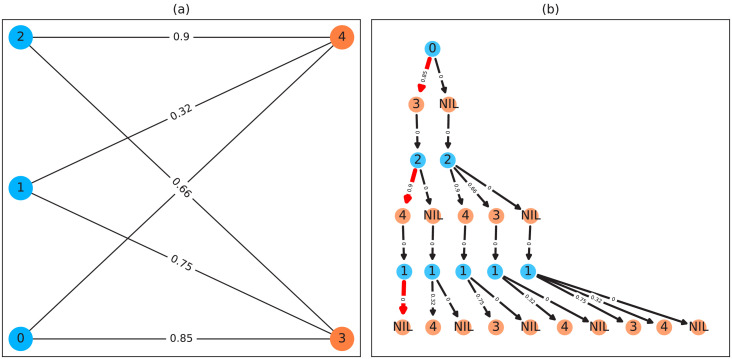

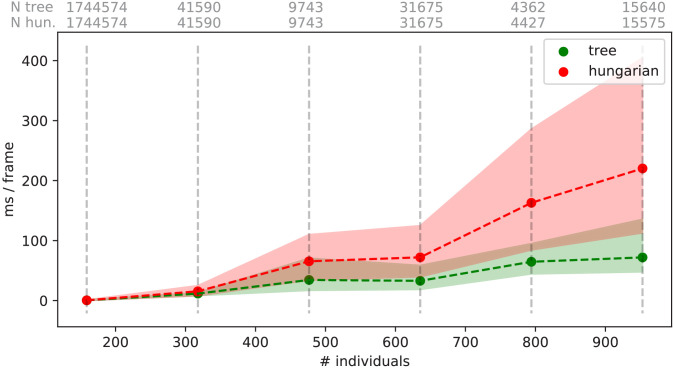

Generally, tracking software becomes slower as the number of individuals to be tracked increases, as a result of an exponentially growing number of combinations to consider during matching. TRex uses a novel tree-based algorithm by default (see Tracking), but circumvents problematic situations by falling back on using the Hungarian method (also known as the Kuhn-Munkres algorithm, Kuhn, 1955) when necessary. Comparing our mixed approach (see Tracking) to purely using the Hungarian method shows that, while both perform similarly for few individuals, the Hungarian method is easily outperformed by our algorithm for larger groups of individuals (as can be seen in Appendix 4—figure 3). This might be due to custom optimizations regarding local cliques of individuals, whereby we ignore objects that are too far away, and also as a result of our optimized pre-sorting. The Hungarian method has the advantage of not leading to combinatorial explosions in some situations – and thus has a lower maximum complexity while proving to be less optimal in the average case. For further details, see the appendix: Appendix D Matching an object to an object in the next frame.
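The idea of restricting matching to local cliques can be sketched as follows. This is neither the tree-based algorithm used by TRex nor the Hungarian method: it is an illustrative brute-force matcher, with hypothetical names (`cliques`, `best_assignment`, `match`) and a hypothetical `max_dist` cut-off, that shows how ignoring far-away objects splits one large assignment problem into small independent ones. The exhaustive search inside each group is exactly the part that explodes combinatorially when many individuals are close together.

```python
from itertools import permutations
from math import dist


def cliques(tracks, objects, max_dist):
    """Split tracks/objects into independent groups connected by proximity."""
    near = {t: [o for o in range(len(objects))
                if dist(tracks[t], objects[o]) <= max_dist]
            for t in range(len(tracks))}
    groups, seen = [], set()
    for start in near:
        if start in seen:
            continue
        group_t, group_o, stack = set(), set(), [start]
        while stack:
            t = stack.pop()
            if t in group_t:
                continue
            group_t.add(t)
            for o in near[t]:
                group_o.add(o)
                # Any other track that can reach this object joins the group.
                stack.extend(u for u in near
                             if o in near[u] and u not in group_t)
        seen |= group_t
        groups.append((sorted(group_t), sorted(group_o)))
    return groups, near


def best_assignment(group_t, group_o, near, tracks, objects):
    """Exhaustive search in one group: most matches first, then lowest cost."""
    # Padding with None allows a track to remain unassigned.
    candidates = group_o + [None] * len(group_t)
    best, best_key = {}, (0, 0.0)
    for perm in set(permutations(candidates, len(group_t))):
        pairs = {t: o for t, o in zip(group_t, perm) if o is not None}
        if any(o not in near[t] for t, o in pairs.items()):
            continue  # pair exceeds the distance cut-off
        cost = sum(dist(tracks[t], objects[o]) for t, o in pairs.items())
        key = (-len(pairs), cost)
        if key < best_key:
            best, best_key = pairs, key
    return best


def match(tracks, objects, max_dist):
    """Match previous track positions to detected objects, group by group."""
    groups, near = cliques(tracks, objects, max_dist)
    result = {}
    for group_t, group_o in groups:
        result.update(best_assignment(group_t, group_o, near, tracks, objects))
    return result


# Toy example: two nearby individuals, one distant one, one spurious object.
tracks = [(0.0, 0.0), (1.0, 0.0), (50.0, 50.0)]
objects = [(1.2, 0.0), (0.1, 0.0), (49.0, 50.0), (200.0, 200.0)]
groups, _ = cliques(tracks, objects, max_dist=5.0)
assignment = match(tracks, objects, max_dist=5.0)
```

In this toy case the problem decomposes into one group of two tracks and one group of a single track, and the spurious far-away object is never considered. A polynomial-time solver such as the Hungarian method could replace `best_assignment` for large groups.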

In addition to speed, we also tested the accuracy of our tracking method, with regard to the consistency of identity assignments, comparing its results to the manually reviewed data (the methodology of which is described in the next section). In order to avoid counting follow-up errors as ‘new’ errors, we divided each trajectory in the uncorrected data into ‘uninterrupted’ segments of frames, instead of simply comparing whole trajectories. A segment is interrupted when an individual is lost (for any of the reasons given in Preparing Tracking-Data) and starts again when it is reassigned to another object later on. We term these (re-)assignments ‘decisions’ here. Each segment of every individual can be uniquely assigned to a similar/identical segment in the baseline data and its identity. Following one trajectory in the uncorrected data, we can detect wrong decisions by checking whether the baseline identity associated with one segment of that trajectory changes in the next. We found that roughly 80 % of such decisions made by the tree-based matching were correct, even with relatively high numbers of individuals (100). For trajectories where no manually reviewed data were available, we used automatically corrected trajectories as a base for our comparison – we evaluate the accuracy of these automatically corrected trajectories in the following section. Even though we did not investigate accuracy in situations with more than 100 individuals, we suspect similar results since the property with the strongest influence on tracking accuracy – individual density – is limited physically and most of the investigated species school tightly in either case.
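The segment-based counting described above can be sketched in a few lines. The data layout is an assumption made for illustration: each uncorrected trajectory is represented only by the baseline identity of each of its consecutive uninterrupted segments, and the names `count_decisions` and `trajectories` are hypothetical.

```python
def count_decisions(segment_identities):
    """Count correct and wrong (re-)assignment decisions for one trajectory.

    `segment_identities` lists the baseline identity associated with each
    consecutive uninterrupted segment of the uncorrected trajectory.
    """
    correct = wrong = 0
    for previous, current in zip(segment_identities, segment_identities[1:]):
        if previous == current:
            correct += 1   # the same individual was picked up again
        else:
            wrong += 1     # the trajectory jumped to another individual
    return correct, wrong


# Toy example with two trajectories that swap identities once.
trajectories = {
    "track_0": ["A", "A", "B", "B"],
    "track_1": ["B", "B", "A", "A", "A"],
}
totals = [count_decisions(ids) for ids in trajectories.values()]
n_correct = sum(c for c, _ in totals)
n_wrong = sum(w for _, w in totals)
fraction_correct = n_correct / (n_correct + n_wrong)
```

Because each decision is only compared with the segment directly before it, a single swap is counted once: the segments that follow the swap in `track_0` are consistent with each other and therefore do not register as additional errors.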

Visual identification: accuracy

Since the goal of using visual identification is to generate consistent identity assignments, we evaluated the accuracy of our method in this regard. As a benchmark, we compare it to manually reviewed datasets as well as results from idtracker.ai for the same set of videos (where possible). In order to validate trajectories exported by either software, we manually reviewed multiple videos with the help of a tool within TRex that allows users to view each crossing and correct possible mistakes in-place. Assignments were deemed incorrect, and subsequently corrected by the reviewer, if the centroid of a given individual was not contained within the object it was assigned to (i.e. the individual was not part of the correct object). Double assignments per object are impossible due to the nature of the tracking method. Individuals were also forcibly assigned to the correct objects in case they were visible but not detected by the tracking algorithm. After manual corrections had been applied, ‘clean’ trajectories were exported – providing a per-frame baseline truth for the respective videos. A complete table of reviewed videos, and the percentage of reviewed frames per video, can be found in Table 2. For longer videos (> 1 hr), we relied entirely on a comparison between results from idtracker.ai and TRex. Their paper (Romero-Ferrero et al., 2019) suggests a very high accuracy of over 99.9 % correctly identified individual images for most videos, which should suffice for most relevant applications and provide a good baseline truth. As long as both tools produce sufficiently similar trajectories, we therefore know they have found the correct solution.

Table 2. Results of the human validation for a subset of videos.

Validation was performed by going through all problematic situations (e.g. individuals lost) and correcting mistakes manually, creating a fully corrected dataset for the given videos. This dataset may still have missing frames for some individuals, if they could not be detected in certain frames (as indicated by ‘of that interpolated’). This was usually a very low percentage of all frames, except for Video 9, where individuals tended to rest on top of each other – and were thus not tracked – for extended periods of time. This baseline dataset was compared to all other results obtained using the automatic visual identification by TRex and idtracker.ai to estimate correctness. We were not able to track Videos 9 and 10 with idtracker.ai, which is why correctness values are not available.

| Video | # ind. | Reviewed (%) | Of that interpolated (%) | % correct (TRex) | % correct (idtracker.ai) |
|---|---|---|---|---|---|
| 7 | 100 | 100.0 | 0.23 | 99.07 ± 0.013 | 98.95 ± 0.146 |
| 8 | 59 | 100.0 | 0.15 | 99.68 ± 0.533 | 99.94 ± 0.0 |
| 9 | 15 | 22.2 | 8.44 | 95.12 ± 6.077 | N/A |
| 10 | 10 | 100.0 | 1.21 | 99.7 ± 0.088 | N/A |
| 13 | 10 | 100.0 | 0.27 | 99.98 ± 0.0 | 99.96 ± 0.0 |
| 12 | 10 | 100.0 | 0.59 | 99.94 ± 0.006 | 99.63 ± 0.0 |
| 11 | 10 | 100.0 | 0.5 | 99.89 ± 0.009 | 99.34 ± 0.002 |

A direct comparison between TRex and idtracker.ai was not possible for Videos 9 and 10, where idtracker.ai frequently exceeded hardware memory-limits and caused the application to be terminated, or did not produce usable results within multiple days of run-time. However, we were able to successfully analyze these videos with TRex and evaluate its performance by comparing to manually reviewed trajectories (see below in Visual identification: accuracy). Due to the stochastic nature of machine learning, and thus the inherent possibility of obtaining different results in each run, as well as other potential factors influencing processing time and memory consumption, both TRex and idtracker.ai have been executed repeatedly (5x TRex, 3x idtracker.ai).

The trajectories exported by both idtracker.ai and TRex were very similar throughout (see Table 3). While occasional disagreements happened, similarity scores were higher than 98 % in all and higher than 99 % in most cases (i.e. less than 1 % of individuals have been differently assigned in each frame on average). Most difficulties that did occur were, after manual review, attributable to situations where multiple individuals cross over excessively within a short time-span. In each case that has been manually reviewed, identities switched back to the correct individuals – even after temporary disagreement. We found that both solutions occasionally experienced these same problems, which often occur when individuals repeatedly come in and out of view in quick succession (e.g. overlapping with other individuals). Disagreements were expected for videos with many such situations due to the way both algorithms deal differently with them: idtracker.ai assigns identities only based on the network output. In many cases, individuals continue to partly overlap even while already being tracked, which results in visual artifacts, can lead to unstable predictions by the network, and can cause idtracker.ai’s approach to fail. Comparing results from both idtracker.ai and TRex to manually reviewed data (see Table 2) shows that both solutions consistently provide high-accuracy results of above 99.5 % for most videos, but that TRex is slightly improved in most cases (Video 8 being the exception) while also having a better overall frame coverage per individual (99.65 % versus idtracker.ai’s 97.93 %, where 100 % would mean that all individuals are tracked in every frame; not shown). This suggests that the splitting algorithm (see appendix, Appendix K Algorithm for splitting touching individuals) is working to TRex’ advantage here.

Table 3. Evaluating comparability of the automatic visual identification between idtracker.ai and TRex.

Columns show various video properties, as well as the associated uniqueness score (see Guiding the training process) and a similarity metric. Similarity (% similar individuals) is calculated by comparing the positions for each identity exported by both tools, choosing the closest matches overall and counting the ones that are differently assigned per frame. An individual is classified as ‘wrong’ in a given frame if the Euclidean distance between the matched solutions from idtracker.ai and TRex exceeds 1 % of the video width. The column ‘% similar individuals’ shows percentage values, where a value of 99 % would indicate that, on average, 1 % of the individuals are assigned differently. To demonstrate how uniqueness corresponds to the quality of results, the last column shows the average uniqueness achieved across trials. A file containing all X and Y positions for each trial and each software combined into one very large table is available from Walter et al., 2020, along with the data in different formats.

| Video | # ind. | N TRex | % similar individuals | Final uniqueness |
|---|---|---|---|---|
| 7 | 100 | 5 | 99.8346 ± 0.5265 | 0.9758 ± 0.0018 |
| 8 | 59 | 5 | 98.6885 ± 2.1145 | 0.9356 ± 0.0358 |
| 13 | 10 | 5 | 99.9902 ± 0.3737 | 0.9812 ± 0.0013 |
| 11 | 10 | 5 | 99.9212 ± 1.1208 | 0.9461 ± 0.0039 |
| 12 | 10 | 5 | 99.9546 ± 0.8573 | 0.9698 ± 0.0024 |
| 14 | 8 | 5 | 98.8356 ± 5.8136 | 0.9192 ± 0.0077 |
| 15 | 8 | 5 | 99.2246 ± 4.4486 | 0.9576 ± 0.0023 |
| 16 | 8 | 5 | 99.7704 ± 2.1994 | 0.9481 ± 0.0025 |
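The similarity metric described for Table 3 can be illustrated with a short Python sketch. It assumes that identities from both tools have already been matched (the matching step itself is not shown), and the function name `percent_similar` as well as the toy trajectories are hypothetical and not part of either software.

```python
import math

def percent_similar(tracks_a, tracks_b, video_width, tolerance=0.01):
    """Mean percentage of individuals per frame whose matched positions
    lie within tolerance * video_width of each other.

    tracks_a, tracks_b: dict mapping identity -> list of (x, y) per frame.
    Identities are assumed to be matched already (same keys in both).
    """
    max_dist = tolerance * video_width
    n_frames = len(next(iter(tracks_a.values())))
    per_frame = []
    for f in range(n_frames):
        agree = 0
        for ident, path_a in tracks_a.items():
            ax, ay = path_a[f]
            bx, by = tracks_b[ident][f]
            # 'Wrong' only if the distance exceeds 1 % of the video width.
            if math.hypot(ax - bx, ay - by) <= max_dist:
                agree += 1
        per_frame.append(100.0 * agree / len(tracks_a))
    return sum(per_frame) / len(per_frame)

# Toy data: two individuals, four frames, video width of 1000 px.
tool_a = {0: [(0, 0), (10, 0), (20, 0), (30, 0)],
          1: [(100, 100)] * 4}
tool_b = {0: [(0, 0), (10, 0), (20, 0), (30, 0)],
          1: [(100, 100), (100, 105), (100, 150), (100, 100)]}

score = percent_similar(tool_a, tool_b, video_width=1000)
print(score)  # 87.5: one of two individuals disagrees in one of four frames
```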

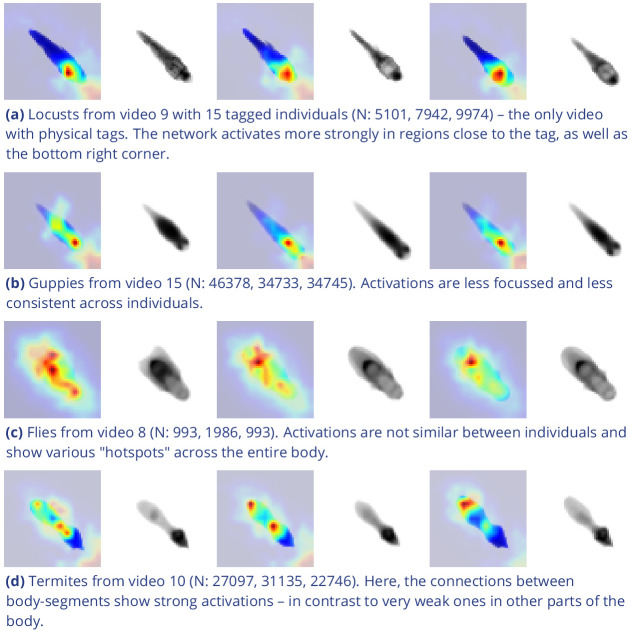

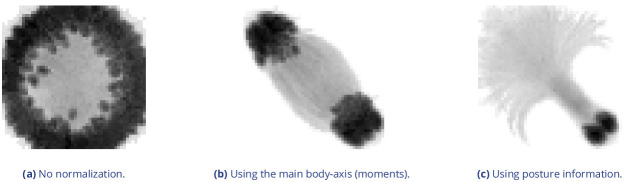

Additionally, while TRex could successfully track individuals in all videos without tags, we were interested to see the effect of tags (in this case QR tags attached to locusts, see Figure 3a) on network training. In Figure 3, we visualize differences in network activation, depending on the visual features available for the network to learn from, which are different between species (or due to physically added tags, as mentioned above). The ‘hot’ regions indicate larger between-class differences for that specific pixel (values are the result of activation in the last convolutional layer of the trained network, see figure legend). Differences are computed separately within each group and are not directly comparable between trials/species in value. However, the distribution of values, reflecting the network’s reactivity to specific parts of the image, is. Results show that the most apparent differences are found for the stationary parts of the body (not in absolute terms, but following normalization, as shown in Figure 4c). This makes sense for several reasons: (i) this part is the easiest to learn because it is in exactly the same position every time, (ii) larger individuals stretch further into the corners of a cropped image, making the bottom right of each image a source of valuable information (especially in Figure 3a and b), and (iii) details that often occur in the head-region (like the distance between the eyes) can also play a role here. ‘Hot’ regions in the bottom right corner of the activation images (e.g. in Figure 3d) suggest that the network also reacts to pixels that are explicitly not part of the individual itself but of other individuals; this likely corresponds to the network making use of size/shape differences between them.

Figure 3. Activation differences for images of randomly selected individuals from four videos, next to a median image of the respective individual – which hides thin extremities, such as legs in (a) and (c).

The captions in (a-d) detail the species per group and number of samples per individual. Colors represent the relative activation differences, with hotter colors suggesting bigger magnitudes, which are computed by performing a forward-pass through the network up to the last convolutional layer (using keract). The outputs for each identity are averaged and stretched back to the original image size by cropping and scaling according to the network architecture. Differences shown here are calculated per cluster of pixels corresponding to each filter, comparing average activations for images from the individual’s class to activations for images from other classes.

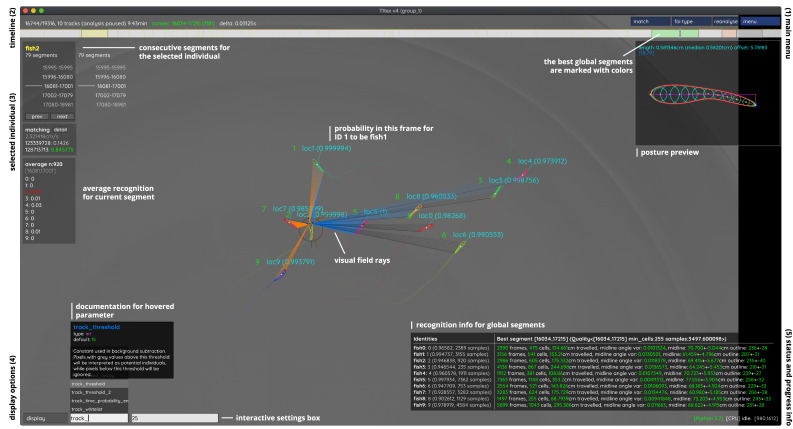

Figure 4. An overview of TRex’ main interface, which is part of the documentation at trex.run/docs.

Interface elements are sorted into categories in the four corners of the screen (labelled here in black). The omni-box on the bottom left corner allows users to change parameters on-the-fly, helped by a live auto-completion and documentation for all settings. Only some of the many available features are displayed here. Generally, interface elements can be toggled on or off using the bottom-left display options or moved out of the way with the cursor. Users can customize the tinting of objects (e.g. sourcing it from their speed) to generate interesting effects, which can be recorded for use in presentations. Additionally, all exportable metrics (such as border-distance, size, x/y, etc.) can also be shown as an animated graph for a number of selected objects. Keyboard shortcuts are available for select features such as loading, saving, and terminating the program. Remote access is supported and offers the same graphical user interface, for example in case the software is executed without an application window (for batch processing purposes).

As would be expected, distinct patterns can be recognized in the resulting activations after training as soon as physical tags are attached to individuals (as in Figure 3a). While other parts of the image are still heavily activated (probably to benefit from size/shape differences between individuals), tags are always at least a large part of where activations concentrate. The network seemingly makes use of the additional information provided by the experimenter, where that has occurred. This suggests that, while definitely not being necessary, adding tags probably does not worsen, and may even improve, training accuracy; in difficult cases, tags allow networks to exploit any source of inter-individual variation.

Visual identification: memory consumption

In order to generate comparable results between both tested software solutions, the same external script has been used to measure shared, private and swap memory of idtracker.ai and TRex, respectively. There are a number of ways with which to determine the memory usage of a process. For automation purposes, we decided to use a tool called syrupy, which can start and save information about a specified command automatically. We modified it slightly, so we could obtain more accurate measurements for Swap, Shared and Private separately, using ps_mem.

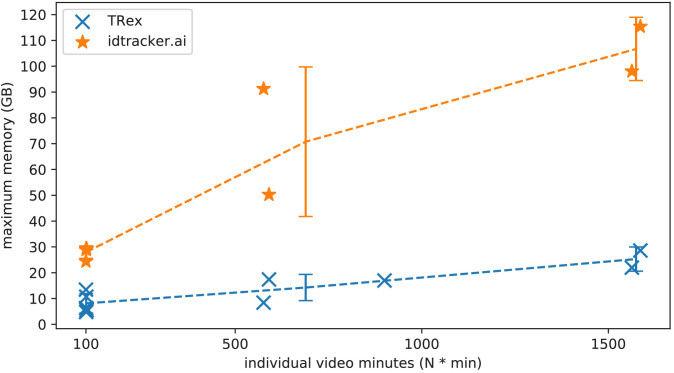

As expected, differences in memory consumption are especially prominent for long videos (4-7x lower maximum memory), and for videos with many individuals (2-3x lower). Since we already experienced significant problems tracking a long video (> 3 hr) of only eight individuals with idtracker.ai, we did not attempt to further study its behavior in long videos with many individuals. However, we would expect idtracker.ai memory usage to increase even more rapidly than is visible in Figure 5 since it retains a lot of image data (segmentation/pixels) in memory and we already had to ‘allow’ it to relay to hard-disk in our efforts to make it work for Videos 8, 14, and 16 (which slows down analysis). The maximum memory consumption across all trials was on average 5.01±2.54 times higher in idtracker.ai, ranging from 1.81 to 10.85 times the maximum memory consumption of TRex for the same video (see Table 4).
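The ratio of maximum memory consumption quoted above can be recomputed from the per-video maxima listed in Table 4. The following Python sketch does so under two assumptions that are ours rather than the text's: the values are taken at the precision printed in the table, and the spread is a population standard deviation.

```python
from statistics import mean, pstdev

# Maximum memory (GB) per video, copied from Table 4 as (TRex, idtracker.ai).
# Videos 9 and 10 are omitted because idtracker.ai produced no result (N/A).
max_memory_gb = {
    12: (6.31, 28.85),
    13: (4.79, 29.43),
    11: (5.49, 29.32),
    7: (13.45, 24.39),
    15: (8.41, 91.26),
    8: (17.41, 50.26),
    14: (21.99, 98.04),
    16: (28.62, 115.37),
}

# How many times more memory idtracker.ai needed at its peak, per video.
ratios = [idt / trex for trex, idt in max_memory_gb.values()]
ratio_mean = mean(ratios)
ratio_sd = pstdev(ratios)  # population SD (an assumption)

print(f"{ratio_mean:.2f} ± {ratio_sd:.2f}, "
      f"range {min(ratios):.2f} to {max(ratios):.2f}")
# prints "5.01 ± 2.54, range 1.81 to 10.85"
```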

Figure 5. The maximum memory used by TRex and idtracker.ai when tracking videos from a subset of all videos (the same videos as in Table 3).

Results are plotted as a function of video length (min) multiplied by the number of individuals. We have to emphasize here that, for the videos in the upper length regions of multiple hours (Videos 14 and 16), we had to set idtracker.ai to store segmentation information on disk, as opposed to in RAM. This uses less memory, but is also slower. For the video with flies we tried out both and also settled for on-disk, since otherwise the system ran out of memory. Even then, the curve still accelerates much faster for idtracker.ai, ultimately leading to problems with most computer systems. To minimize the impact that hardware compatibility has on research, we implemented switches limiting memory usage while always trying to maximize performance given the available data. TRex can be used on modern laptops and normal consumer hardware at slightly lower speeds, but without any fatal issues.

Table 4. Both TRex and idtracker.ai analyzed the same set of videos, while continuously logging their memory consumption using an external tool.

Rows have been sorted by , which seems to be a good predictor for the memory consumption of both solutions. idtracker.ai has mixed mean values, which, at low individual densities are similar to TRex’ results. Mean values can be misleading here, since more time spent in low-memory states skews results. The maximum, however, is more reliable since it marks the memory that is necessary to run the system. Here, idtracker.ai clocks in at significantly higher values (almost always more than double) than TRex.

| Video | # ind. | Length | Max. consec. | TRex memory (GB) | idtracker.ai memory (GB) |
|---|---|---|---|---|---|
| 12 | 10 | 10 min | 26.03 s | 4.88 ± 0.23, max 6.31 | 8.23 ± 0.99, max 28.85 |
| 13 | 10 | 10 min | 36.94 s | 4.27 ± 0.12, max 4.79 | 7.83 ± 1.05, max 29.43 |
| 11 | 10 | 10 min | 28.75 s | 4.37 ± 0.32, max 5.49 | 6.53 ± 4.29, max 29.32 |
| 7 | 100 | 1 min | 5.97 s | 9.4 ± 0.47, max 13.45 | 15.27 ± 1.05, max 24.39 |
| 15 | 8 | 72 min | 79.4 s | 5.6 ± 0.22, max 8.41 | 35.2 ± 4.51, max 91.26 |
| 10 | 10 | 10 min | 1391 s | 6.94 ± 0.27, max 10.71 | N/A |
| 9 | 15 | 60 min | 7.64 s | 13.81 ± 0.53, max 16.99 | N/A |
| 8 | 59 | 10 min | 102.35 s | 12.4 ± 0.56, max 17.41 | 35.3 ± 0.92, max 50.26 |
| 14 | 8 | 195 min | 145.77 s | 12.44 ± 0.8, max 21.99 | 35.08 ± 4.08, max 98.04 |
| 16 | 8 | 198 min | 322.57 s | 16.15 ± 1.6, max 28.62 | 49.24 ± 8.21, max 115.37 |

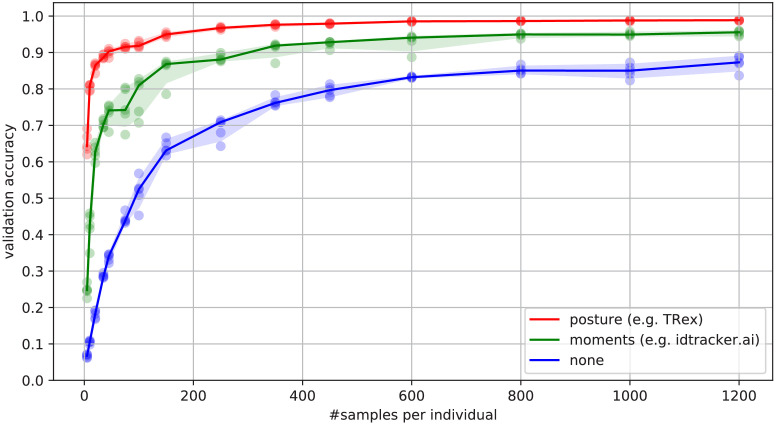

Overall memory consumption for TRex also contains posture data, which contributes a lot to RAM usage. Especially with longer videos, disabling posture can lower the hardware needs for running our software. If posture is to be retained, the user can still (more slightly) reduce memory requirements by changing the outline re-sampling scale (one by default), which adjusts the outline resolution between sub- and super-pixel accuracy. While analysis will be faster – and memory consumption lower – when posture is disabled (only limited by the matching algorithm, see Appendix 4—figure 3), users of the visual identification might experience a decrease in training accuracy or speed (see Figure 6).

Figure 6. Convergence behavior of the network training for three different normalization methods.

This shows the maximum achievable validation accuracy after 100 epochs for 100 individuals (Video 7), when sub-sampling the number of examples per individual. Tests were performed using a manually corrected training dataset to generate the images in three different ways, using the same, independent script (see Figure 8): Using no normalization (blue), using normalization based on image moments (green, similar to idtracker.ai), and using posture information (red, as in TRex). Higher numbers of samples per individual result in higher maximum accuracy overall, but – unlike the other methods – posture-normalized runs already reach an accuracy above the 90 % mark for ≥75 samples. This property can help significantly in situations with more crossings, when longer global segments are harder to find.

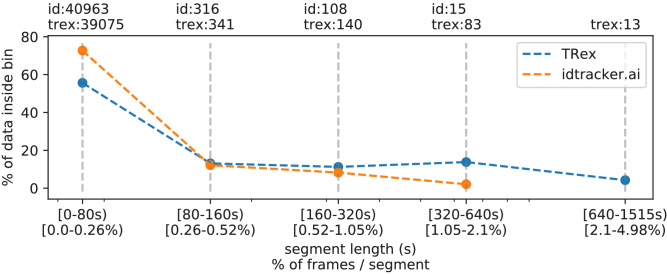

Visual identification: processing time

Automatically correcting the trajectories (to produce consistent identity assignments) means that additional time is spent on the training and application of a network, specifically for the video in question. Visual identification builds on some of the other methods described in this paper (tracking and posture estimation), naturally making it by far the most complex and time-consuming process in TRex. We thus evaluated how much time is spent on the entire sequence of all required processes. For each run of TRex and idtracker.ai, we saved precise timing information from start to finish. Since idtracker.ai reads videos directly and preprocesses them again each run, we used the same starting conditions with our software for a direct comparison:

A trial starts by converting/preprocessing a video in TGrabs and then immediately opening it in TRex, where automatic identity corrections are applied. TRex terminates automatically after satisfying a correctness criterion (a high uniqueness value; see Accumulation of additional segments and stopping-criteria). It then exports trajectories, as well as validation data (similar to idtracker.ai), concluding the trial. The sum of time spent within TGrabs and TRex gives the total amount of time for that trial. For the purpose of this test it would not have been fair to compare only TRex processing times to idtracker.ai, but it is important to emphasize that conversion could be skipped entirely by using TGrabs to record videos directly from a camera instead of opening an existing video file.

In Table 5, we can see that video length and processing times (in TRex) did not correlate directly. Indeed, a 1 min video (Video 7) took significantly longer than one that was 72 min long (Video 15). The reason for this initially counterintuitive result is that the process of learning identities requires sufficiently long video sequences: longer samples have a higher likelihood of capturing more of the total possible intra-individual variance, which helps the algorithm to more comprehensively represent each individual’s appearance. Longer videos naturally provide more material for the algorithm to choose from and, simply due to their length, have a higher probability of containing at least one higher quality segment that allows higher uniqueness-regimes to be reached more quickly (see Guiding the training process and H.2 Stopping-criteria). Thus, it is important to use sufficiently long video sequences for visual identification, and longer sequences can lead to better results, both in terms of quality and processing time.

Table 5. Evaluating time-cost for automatic identity correction – comparing to results from idtracker.ai.

Timings consist of preprocessing time in TGrabs plus network training in TRex, which are shown separately as well as combined (Ours (min)). The time it takes to analyze videos strongly depends on the number of individuals and how many usable samples per individual the initial segment provides. The length of the video factors in as well, as does the stochasticity of the gradient descent (training). idtracker.ai timings contain the whole tracking and training process from start to finish, using its terminal_mode (v3). Parameters have been manually adjusted per video and setting, to the best of our abilities, spending at most one hour per configuration. For videos 16 and 14, we had to set idtracker.ai to store segmentation information on disk (as compared to in RAM) to prevent the program from being terminated for running out of memory.

| Video | # ind. | Length | Sample | TGrabs (min) | TRex (min) | Ours (min) | idtracker.ai (min) |
|---|---|---|---|---|---|---|---|
| 7 | 100 | 1 min | 1.61 s | 2.03 ± 0.02 | 74.62 ± 6.75 | 76.65 | 392.22 ± 119.43 |
| 8 | 59 | 10 min | 19.46 s | 9.28 ± 0.08 | 96.7 ± 4.45 | 105.98 | 495.82 ± 115.92 |
| 9 | 15 | 60 min | 33.81 s | 13.17 ± 0.12 | 101.5 ± 1.85 | 114.67 | N/A |
| 11 | 10 | 10 min | 12.31 s | 8.8 ± 0.12 | 21.42 ± 2.45 | 30.22 | 127.43 ± 57.02 |
| 12 | 10 | 10 min | 10.0 s | 8.65 ± 0.07 | 23.37 ± 3.83 | 32.02 | 82.28 ± 3.83 |
| 13 | 10 | 10 min | 36.91 s | 8.65 ± 0.047 | 12.47 ± 1.27 | 21.12 | 79.42 ± 4.52 |
| 10 | 10 | 10 min | 16.22 s | 4.43 ± 0.05 | 35.05 ± 1.45 | 39.48 | N/A |
| 14 | 8 | 195 min | 67.97 s | 109.97 ± 0.05 | 70.48 ± 3.67 | 180.45 | 707.0 ± 27.55 |
| 15 | 8 | 72 min | 79.36 s | 32.1 ± 0.42 | 30.77 ± 6.28 | 62.87 | 291.42 ± 16.83 |
| 16 | 8 | 198 min | 134.07 s | 133.1 ± 2.28 | 68.85 ± 13.12 | 201.95 | 1493.83 ± 27.75 |

Compared to idtracker.ai, TRex (conversion + visual identification) shows both considerably lower computation times (2.5 to 46.7 times faster for the same video), as well as lower variance in the timings (79 % lower for the same video on average).
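As a worked example of how the combined timings in Table 5 relate to one another, the following Python sketch recomputes the ‘Ours (min)’ column as TGrabs plus TRex and derives a per-video ratio to idtracker.ai. It uses only the mean values printed in the table, so the resulting ratios describe means per video and need not coincide with factors derived from individual trials.

```python
# Mean processing times (min) from Table 5: (TGrabs, TRex, idtracker.ai).
# Videos 9 and 10 are omitted because idtracker.ai produced no result (N/A).
timings_min = {
    7: (2.03, 74.62, 392.22),
    8: (9.28, 96.7, 495.82),
    11: (8.8, 21.42, 127.43),
    12: (8.65, 23.37, 82.28),
    13: (8.65, 12.47, 79.42),
    14: (109.97, 70.48, 707.0),
    15: (32.1, 30.77, 291.42),
    16: (133.1, 68.85, 1493.83),
}

speedup = {}
for video, (tgrabs, trex, idtracker) in timings_min.items():
    ours = tgrabs + trex  # conversion + visual identification
    speedup[video] = idtracker / ours

# List videos from the smallest to the largest ratio of mean timings.
for video, factor in sorted(speedup.items(), key=lambda item: item[1]):
    print(f"Video {video}: {factor:.2f}x")
```

With these mean values, the smallest ratio is obtained for Video 12 and the largest for Video 16.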

Discussion

We have designed TRex to be a versatile and fast program that can enable researchers to track animals (and other mobile objects) in a wide range of situations. It maintains identities of up to 100 un-tagged individuals and produces corrected tracks, along with posture estimation, visual-field reconstruction, and other features that enable the quantitative study of animal behavior. Even videos that cannot be tracked by other solutions, such as videos with over 500 animals, can now be tracked within the same day of recording.

While all options are available from the command-line and a screen is not required, TRex offers a rich, yet straightforward to use, interface to local as well as remote users. Accompanied by the integrated documentation for all parameters, each stating purpose, type and value ranges, as well as a comprehensive online documentation, new users are provided with all the information required for a quick adoption of our software. Especially to the benefit of new users, we evaluated the parameter space using videos of diverse species (fish, termites, locusts) and determined which parameters work best in most use-cases to set their default values.

The interface is structured into groups (see Figure 4), categorized by the typical use-case:

The main menu, containing options for loading/saving, options for the timeline and reanalysis of parts of the video

Timeline and current video playback information

Information about the selected individual

Display options and an interactive ‘omni-box’ for viewing and changing parameters

General status information about TRex and the Python integration.

The tracking accuracy of TRex is at the state-of-the-art while typically being 2.5 to 46.7 times faster than comparable software and having lower hardware requirements, especially RAM. In addition to visual identification and tracking, it provides a rich assortment of additional data, including body posture, visual fields, and other kinematic as well as group-related information (such as derivatives of position, border and mean neighbor distance, group compactness, etc.), even in live-tracking and closed-loop situations.

Raw tracking (without visual identification) still achieved roughly 80 % accuracy per decision (as compared to > 99 % with visual identification). We have found that real-time performance can be achieved, even on relatively modest hardware, for all numbers of individuals ≤ 256 without posture estimation (≤ 128 with posture estimation). More than 256 individuals can be tracked as well, remarkably still delivering frame-rates of about 10 – 25 frames per second using the same settings.

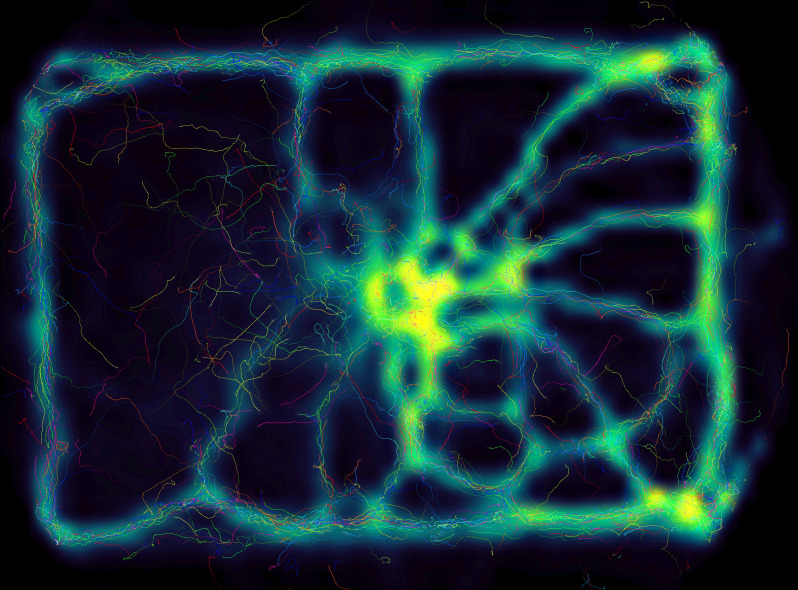

Not only does the increased processing-speeds benefit researchers, but the contributions we provide to data exploration should not be underestimated as well – merely making data more easily accessible right out-of-the-box, such as visual fields and live-heatmaps (see Appendix 1—figure 1), has the potential to reveal features of group- and individual behavior which have not been visible before. TRex makes information on multiple timescales of events available simultaneously, and sometimes this is the only way to detect interesting properties (e.g. trail formation in termites).

Since the software is already actively used within the Max Planck Institute of Animal Behavior, reported issues have been taken into consideration during development. However, certain theoretical, as well as practically observed, limitations remain:

Posture: While almost all shapes can be detected correctly (by adjusting parameters), some shapes, especially round shapes, are hard to interpret in terms of ‘tail’ or ‘head’. This means that only the other image alignment method (moments) can be used. However, this does introduce some limitations; for example, calculating visual fields is impossible.

Tracking: If the wrong direction is assumed, predictions can end up far away from where the object actually is. Objects are then ‘lost’ for a fixed amount of time (parameter). This can be ‘fixed’ by shortening this time-period, though this leads to different problems when the software does not wait long enough for individuals to reappear.

General: Barely visible individuals have to be tracked with the help of deep learning (e.g. using Caelles et al., 2017) and a custom-made mask per video frame, prepared in an external program of the user’s choosing.

Visual identification: All individuals have to be visible and separate at the same time, at least once, for identification to work at all. Visual identification, for example with very high densities of individuals, can thus be very difficult. This is a hard restriction to any software since finding consecutive global segments is the underlying principle for the successful recognition of individuals.

We will continue updating the software, increasingly addressing the above issues (and likely others), as well as potentially adding new features. During development, we noticed a couple of areas where improvements could be made, both theoretical and practical in nature. Specifically, incremental improvements in analysis speed could be made regarding visual identification by using the trained network more sporadically – for example it is not necessary to predict every image of very long consecutive segments, since, even with fewer samples, prediction values are likely to converge to a certain value early on. A likely more potent change would be an improved ‘uniqueness’ algorithm, which, during the accumulation phase, is better at predicting which consecutive segment will improve training results the most. This could be done, for example, by taking into account the variation between images of the same individual. Other planned extensions include:

(Feature): We want to have a more general interface available to users, so they can create their own plugins, work with the data in live-mode while applying their own filters, and, specifically, write plugins that can detect different species or annotate them in the video.

(Crossing solver): Additional method optimized for splitting overlapping, solid-color objects. The current method, simply using a threshold, is effective for many species but often produces large holes when splitting objects consisting of largely the same color.

To obtain the most up-to-date version of TRex, please download it at trex.run or update your existing installation according to our instructions listed on trex.run/docs/install.html.

Materials and methods

In the following sections, we describe the methods implemented in TRex and TGrabs, as well as their most important features in a typical order of operations (see Figure 1 for a flow diagram), starting out with a raw video. We will then describe how trajectories are obtained and end with the most technically involved features.

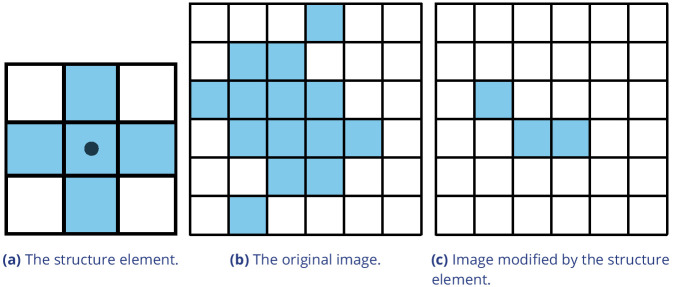

Segmentation

When an image is first received from a camera (or a video file), the objects of interest potentially present in the frame must be found and cropped out. Several technologies are available to separate the foreground from the background (segmentation). Various machine learning algorithms are frequently used to great effect, even for the most complex environments (Hughey et al., 2018; Robie et al., 2017; Francisco et al., 2019). These more advanced approaches are typically beneficial for the analysis of field-data or organisms that are very hard to see in video (e.g. very transparent or low contrast objects/animals in the scene). In these situations, where integrated methods might not suffice, it is possible to segment objects from the background using external, for example deep-learning based, tools (see next paragraph). However, for most laboratory experiments, simpler (and also much faster), classical image-processing methods yield satisfactory results. Thus, we provide as a generically useful capability background-subtraction, which is the default method by which objects are segmented. This can be used immediately in experiments where the background is relatively static. Backgrounds are generated automatically by uniformly sampling images from the source video(s) – different modes are available (min/max, mode and mean) for the user to choose from. More advanced image-processing techniques like luminance equalization (which is useful when lighting varies between images), image undistortion, and brightness/contrast adjustments are available in TGrabs and can enhance segmentation results – but come at the cost of slightly increased processing time. Importantly, since many behavioral studies rely on ≥4 K resolution videos, we heavily utilize the GPU (if available) to speed up most of the image-processing, allowing TRex to scale well with increasing image resolution.
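The principle of background-subtraction described above can be sketched in a few lines of plain Python. This is only an illustration on tiny nested-list images, not the GPU-accelerated implementation in TGrabs; the function names `build_background` and `segment`, the threshold value, and the toy frames are all hypothetical.

```python
from statistics import mean, mode

def build_background(frames, n_samples, method="mean"):
    """Estimate a static background from uniformly sampled frames.

    frames: list of 2D lists (rows of grayscale values 0..255).
    method: 'min', 'max', 'mode' or 'mean', applied per pixel.
    """
    reducers = {"min": min, "max": max, "mode": mode, "mean": mean}
    reduce_pixel = reducers[method]
    step = max(1, len(frames) // n_samples)
    sampled = frames[::step][:n_samples]
    height, width = len(sampled[0]), len(sampled[0][0])
    return [
        [reduce_pixel([frame[y][x] for frame in sampled]) for x in range(width)]
        for y in range(height)
    ]

def segment(frame, background, threshold):
    """Return (x, y) of pixels whose absolute difference from the
    background exceeds the threshold."""
    return [
        (x, y)
        for y, row in enumerate(frame)
        for x, value in enumerate(row)
        if abs(value - background[y][x]) > threshold
    ]

# A dark object (50) moving across a bright background (200).
frames = [
    [[50, 200, 200], [200, 200, 200]],
    [[200, 50, 200], [200, 200, 200]],
    [[200, 200, 50], [200, 200, 200]],
]
# 'max' suits dark objects on a bright background: the object never
# occupies the same pixel in every sampled frame.
background = build_background(frames, n_samples=3, method="max")
foreground = segment(frames[1], background, threshold=50)
print(foreground)  # [(1, 0)]
```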

TGrabs can generally find any object in the video stream, and subsequently pass it on to the tracking algorithm (next section), as long as either (i) the background is relatively static while the objects move at least occasionally, (ii) the objects/animals of interest have enough contrast to the background, or (iii) the user provides an additional binary mask per frame which is used to separate the objects of interest from the background, the typical means of doing this being by deep-learning based segmentation (e.g. Caelles et al., 2017). These masks are expected to be in a video-format themselves and correspond 1:1 in length and dimensions to the video that is to be analyzed. They are expected to be binary, marking individuals in white and background in black. Of course, these binary videos could be used on their own, but would not retain grey-scale information of the objects. This option has many possible applications; generally, it is useful whenever individuals are really hard to detect visually and need to be recognized by different software (e.g. a machine-learning-based segmentation like Maninis et al., 2018). Individual frames can then be connected using our software as a second step.

The detected objects are saved to a custom non-proprietary compressed file format (Preprocessed Video or PV, see appendix Appendix G The PV file format), that stores only the most essential information from the original video stream: the objects and their pixel positions and values. This format is optimized for quick random index access by the tracking algorithm (see next section) and stores other meta-information (like frame timings) utilized during playback or analysis. When recording videos directly from a camera, they can also be streamed to an additional and independent MP4 container format (plus information establishing the mapping between PV and MP4 video frames).

Tracking

Once animals (or, more generally, termed ‘objects’ henceforth) have been successfully segmented from the background, we can either use the live-tracking feature in TGrabs or open a pre-processed file in TRex, to generate the trajectories of these objects. This process uses information regarding an object’s movement (i.e. its kinematics) to follow it across frames, estimating future positions based on previous velocity and angular speed. It will be referred to as ‘tracking’ in the following text, and is a required step in all workflows.

Note that this approach alone is very fast, but, as will be shown, is subject to error with respect to maintaining individual identities. If that is required, there is a further step, outlined in Automatic visual identification based on machine learning below, which can be applied at the cost of processing speed. First, however, we will discuss the general basis of tracking, which is common to approaches that do, and do not, require identities to be maintained with high-fidelity. Tracking can occur for two distinct categories, which are handled slightly differently by our software:

There is a known number of objects

There is an unknown number of objects

The first case assumes that the number of tracked objects in a frame cannot exceed a certain expected number of objects (calculated automatically, or set by the user). This allows the algorithm to make stronger assumptions, for example regarding noise, where otherwise ‘valid’ objects (conforming to size expectations) are ignored due to their positioning in the scene (e.g. too far away from previously lost individuals). In the second case, new objects may be generated until all viable objects in a frame are assigned. While being more susceptible to noise, this is useful for tracking a large number of objects, where counting objects may not be possible, or where there is a highly variable number of objects to be tracked.

For a given video, our algorithm processes every frame sequentially, extending existing trajectories (if possible) for each of the objects found in the current frame. Every object can only be assigned to one trajectory, but some objects may not be assigned to any trajectory (e.g. in case the number of objects exceeds the allowed number of individuals) and some trajectories might not be assigned to any object (e.g. while objects are out of view). To estimate object identities across frames, we use an approach akin to the popular Kalman filter (Kalman, 1960) which makes predictions based on multiple noisy data streams (here, positional history and posture information). In the initial frame, objects are simply assigned from top-left to bottom-right. In all other frames, assignments are made based on probabilities (see appendix Appendix D Matching an object to an object in the next frame) calculated for every combination of object and trajectory. These probabilities represent the degree to which the program believes that ‘it makes sense’ to extend an existing trajectory with an object in the current frame, given its position and speed. Our tracking algorithm only considers assignments with probabilities larger than a certain threshold, generally constrained to a certain proximity around an object assigned in the previous frame.
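To make the idea of a kinematic prediction from previous velocity and angular speed more concrete, the following Python sketch extrapolates the next position from the three most recent positions. It is a deliberately simplified stand-in and not the predictor used in TRex (which is described in the appendix); the function name `predict_next` and the assumption of equally spaced frames are ours.

```python
import math

def predict_next(p0, p1, p2, dt):
    """Predict the position one time step after p2.

    p0, p1, p2: the three most recent (x, y) positions, equally spaced
    by dt (an assumption of this sketch).
    """
    v1 = ((p1[0] - p0[0]) / dt, (p1[1] - p0[1]) / dt)
    v2 = ((p2[0] - p1[0]) / dt, (p2[1] - p1[1]) / dt)
    speed = math.hypot(v2[0], v2[1])
    heading = math.atan2(v2[1], v2[0])
    # Angular speed: change in heading per unit time, wrapped to [-pi, pi).
    turn = heading - math.atan2(v1[1], v1[0])
    turn = (turn + math.pi) % (2 * math.pi) - math.pi
    angular_speed = turn / dt
    # Keep the speed constant and continue turning at the same rate.
    new_heading = heading + angular_speed * dt
    return (p2[0] + speed * dt * math.cos(new_heading),
            p2[1] + speed * dt * math.sin(new_heading))

straight = predict_next((0, 0), (1, 0), (2, 0), dt=1.0)
turning = predict_next((0, 0), (1, 0), (1, 1), dt=1.0)
print(straight)  # approximately (3.0, 0.0)
print(turning)   # approximately (0.0, 1.0) after a further left turn
```

A candidate object far from such a prediction would receive a low probability and, below the threshold mentioned above, would not be considered for that trajectory.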

Matching a set of objects in one frame with a set of objects in the next frame is representative of a typical assignment problem, which can be solved in polynomial time (e.g. using the Hungarian method, Kuhn, 1955). However, we found that, in practice, the computational complexity of the Hungarian method can constrain analysis speed to such a degree that we decided to implement a custom algorithm, which we term tree-based matching, which has a better average-case performance (see evaluation), even while having a comparatively bad worst-case complexity. Our algorithm constructs a tree of all possible object/trajectory combinations in the frame and tries to find a compatible (such that no objects/trajectories are assigned twice) set of choices, maximizing the sum of probabilities amongst these choices (described in detail in the appendix Appendix D Matching an object to an object in the next frame). Situations where a large number of objects are in close proximity of one another are problematic, since then the number of possible sets of choices grows exponentially. These situations are avoided by using a mixed approach: tree-based matching is used most of the time, but as soon as the combinatorial complexity of a certain situation becomes too great, our software falls back on using the Hungarian method. If videos are known to be problematic throughout (e.g. with > 100 individuals consistently very close to each other), the user may choose to use an approximate method instead (described in the appendix Appendix D), which simply iterates through all objects and assigns each to the trajectory for which it has the highest probability and subsequently does not consider whether another object has an even higher probability for that trajectory. While the approximate method scales better with an increasing number of individuals, it is ‘wrong’ (seeing as it does not consider all possible combinations), which is why it is not recommended unless strictly necessary. 
However, since it does not consider all combinations, making it more sensitive to parameter choice, it scales better for very large numbers of objects and produces results good enough for it to be useful in very large groups (see Appendix 4—table 2).
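
The difference between an exhaustive search and the approximate method can be made concrete with a toy example. The sketch below is a simplified reading of both ideas, not the implementation described in Appendix D: `tree_match` enumerates every compatible set of choices without pruning or a Hungarian fallback, and `greedy_match` lets each object take its best still-free trajectory. Both function names and the input layout are hypothetical.

```python
def greedy_match(probs):
    """Approximate method: each object takes its best still-free trajectory."""
    taken, result = set(), {}
    for obj in sorted({o for o, _ in probs}):
        options = [(p, t) for (o, t), p in probs.items()
                   if o == obj and t not in taken]
        if options:
            _, best_traj = max(options)
            result[obj] = best_traj
            taken.add(best_traj)
    return result

def tree_match(probs):
    """Depth-first search over all compatible object/trajectory choices."""
    objects = sorted({o for o, _ in probs})
    best = {"score": -1.0, "assignment": {}}

    def descend(i, used, current, score):
        if i == len(objects):
            if score > best["score"]:
                best["score"], best["assignment"] = score, dict(current)
            return
        obj = objects[i]
        for (o, t), p in probs.items():
            if o == obj and t not in used:
                current[obj] = t
                descend(i + 1, used | {t}, current, score + p)
                del current[obj]
        # Branch in which this object stays unassigned.
        descend(i + 1, used, current, score)

    descend(0, frozenset(), {}, 0.0)
    return best["assignment"]

# Keys are (object, trajectory); values are assignment probabilities.
probs = {("a", "T1"): 0.9, ("a", "T2"): 0.8,
         ("b", "T1"): 0.85, ("b", "T2"): 0.1}
print(greedy_match(probs))  # a grabs T1 first, leaving b with T2
print(tree_match(probs))    # the search prefers a -> T2, b -> T1
```

In this example the greedy result has a probability sum of 1.0, whereas the exhaustive search finds 1.65, which illustrates why the approximate method is only suggested when the exact search becomes too expensive.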

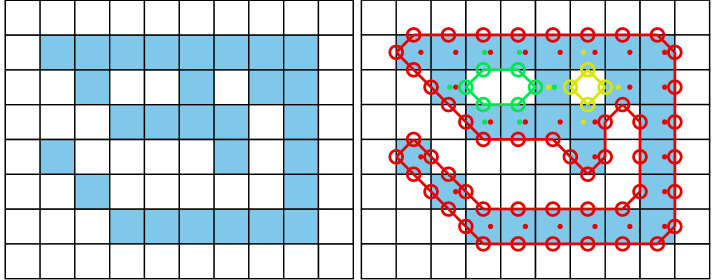

Situations where objects/individuals are touching, partly overlapping, or even completely overlapping, are an issue that all tracking solutions have to deal with in some way. The first problem is the detection of such an overlap/crossing, the second is its resolution. idtracker.ai, for example, deals only with the first problem: It trains a neural network to detect crossings and essentially ignores the involved individuals until the problem is resolved by movement of the individuals themselves. However, using such an image-based approach can never be fully independent of the species or even video (it has to be retrained for each specific experiment) while also being time-costly to use. In some cases the size of objects might indicate that they contain multiple overlapping objects, while other cases might not allow for such an easy distinction – for example when sexually dimorphic animals (or multiple species) are present at the same time. We propose a method that is similar to xyTracker in that it uses the object’s movement history to detect overlaps. If there are fewer objects in a region than would be expected by looking at previous frames, an attempt is made to split the biggest ones in that area. The size of that area is estimated using the maximal speed objects are allowed to travel per frame (parameter track_max_speed, see documentation). This, of course, requires relatively good predictions or, alternatively, high frame-rates relative to the object’s movement speeds (which are likely necessary anyway to observe behavior at the appropriate time-scales).
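
The detection step can be sketched as a simple count comparison. The code below assumes that the search radius has already been converted to pixels per frame (here called `max_dist_per_frame`, a hypothetical name derived from track_max_speed) and that blobs are given as dictionaries with a `center` and an `area`. It is an illustration of the idea, not the implementation used in TRex.

```python
import math

def blobs_to_split(prev_positions, blobs, center, max_dist_per_frame):
    """Return the largest blobs near `center`, one per missing individual.

    prev_positions: positions of individuals in the previous frame
    blobs:          [{"center": (x, y), "area": pixels}, ...] in the current frame
    """
    def near(p):
        return math.hypot(p[0] - center[0], p[1] - center[1]) <= max_dist_per_frame

    expected = sum(1 for p in prev_positions if near(p))
    found = [b for b in blobs if near(b["center"])]
    missing = expected - len(found)
    if missing <= 0:
        return []  # as many objects as expected: nothing to split
    # Fewer objects than expected: try the biggest ones first.
    return sorted(found, key=lambda b: b["area"], reverse=True)[:missing]
```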

By default, objects suspected to contain overlapping individuals are split by thresholding their background-difference image (see Appendix K), continuously increasing the threshold until the expected number (or more) of similarly sized objects are found. Grayscale values and, more generally, the shading of three-dimensional objects and animals often produce a natural gradient (see for example Figure 4), making this process surprisingly effective for many of the species we tested with. Even when there is almost no visible gradient and thresholding produces holes inside objects, objects are still successfully separated with this approach. Missing pixels from inside the objects can even be regenerated afterwards. The algorithm fails, however, if the remaining objects are too small or are too different in size, in which case the overlapping objects will not be assigned to any trajectory until all involved objects are found again separately in a later frame.
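
A minimal sketch of such a threshold sweep is given below. It works on a small grid of background-difference values and uses a plain 4-connected flood fill. The criteria for "too small" (`min_size`) and "too different in size" (`max_ratio`) are hypothetical placeholders, since the exact conditions are described in Appendix K rather than here.

```python
def components(mask):
    """4-connected components of a boolean grid, as lists of (y, x) pixels."""
    h, w = len(mask), len(mask[0])
    seen, result = set(), []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or (y, x) in seen:
                continue
            stack, comp = [(y, x)], []
            seen.add((y, x))
            while stack:
                cy, cx = stack.pop()
                comp.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny][nx] and (ny, nx) not in seen):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            result.append(comp)
    return result

def split_by_threshold(diff, expected, start=1, min_size=1, max_ratio=3.0):
    """Raise the threshold until `expected` (or more) similar parts appear."""
    for threshold in range(start, 256):
        mask = [[v >= threshold for v in row] for row in diff]
        parts = [c for c in components(mask) if len(c) >= min_size]
        if not parts:
            return None  # everything was thresholded away: splitting failed
        if len(parts) >= expected:
            sizes = [len(c) for c in parts]
            if max(sizes) <= max_ratio * min(sizes):
                return threshold, parts
    return None

# Two bright bodies joined by a darker bridge (column 2).
diff = [[9, 9, 3, 9, 9],
        [9, 9, 3, 9, 9]]
threshold, parts = split_by_threshold(diff, expected=2)
```

In the example, the bridge disappears once the threshold exceeds its value of 3, leaving two parts of four pixels each.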

After an object is assigned to a specific trajectory, two kinds of data (posture and visual-fields) are calculated and made available to the user, which will each be described in one of the following subsections. In the last subsection, we outline how these can be utilized in real-time tracking situations.

Posture analysis

Groups of animals are often modeled as systems of simple particles (Inada and Kawachi, 2002; Cavagna et al., 2010; Perez-Escudero and de Polavieja, 2011), a reasonable simplification which helps to formalize/predict behavior. However, intricate behaviors, like courtship displays, can only be fully observed once the body shape and orientation are considered (e.g. using tools such as DeepPoseKit, Graving et al., 2019, LEAP Pereira et al., 2019/SLEAP Pereira et al., 2020, and DeepLabCut, Mathis et al., 2018). TRex does not track individual body parts apart from the head and tail (where applicable), but even the included simple and fast 2D posture estimator already allows for deductions to be made about how an animal is positioned in space, bent and oriented – crucial for example when trying to estimate the position of eyes/antennae as part of an analysis, where this is required (e.g. Strandburg-Peshkin et al., 2013; Rosenthal et al., 2015). When detailed tracking of all extremities is required, TRex offers an option that allows it to interface with third-party software like DeepPoseKit (Graving et al., 2019), SLEAP (Pereira et al., 2020), or DeepLabCut (Mathis et al., 2018). This option (output_image_per_tracklet), when set to true, exports cropped and (optionally) normalized videos per individual that can be imported directly into these tools – where they might perform better than the raw video. Normalization, for example, can make it easier for machine-learning algorithms in these tools to learn where body-parts are likely to be (see Figure 6) and may even reduce the number of clicks required during annotation.

In TRex, the 2D posture of an animal consists of (i) an outline around the outer edge of a blob, (ii) a center-line (or midline for short) that curves with the body and (iii) positions on the outline that represent the front and rear of the animal (typically head and tail). Our only assumptions here are that the animal is bilateral with a mirror-axis through its center and that it has a beginning and an end, and that the camera-view is roughly perpendicular to this axis. This is true for most animals, but may not hold for example for jellyfish (with radial symmetry) or animals with different symmetries (e.g. radiolaria (protozoa) with spherical symmetry). Still, as long as the animal is not exactly circular from the perspective of the camera, the midline will follow its longest axis and a posture can be estimated successfully. The algorithm implemented in our software is run for every (cropped out) image of an individual and processes it as follows:

(i) A tree-based approach follows edge pixels around an object in a clockwise manner. Drawing the line around pixels, as implemented here, instead of through their centers, as done in comparable approaches, helps with very small objects (e.g. one single pixel would still be represented as a valid outline, instead of a single point).

(ii) The pointiest end of the outline is assumed, by default, to be either the tail or the head (based on curvature and area between the outline points in question). Assignment of head vs. tail can be set by the user, seeing as some animals might have ‘pointier’ heads than tails (e.g. termite workers, one of the examples we employ). Posture data coming directly from an image can be very noisy, which is why the program offers options to simplify outline shapes using an Elliptical Fourier Transform (EFT, see Iwata et al., 2015; Kuhl and Giardina, 1982) or smoothing via a simple weighted average across points of the curve (inspired by common subdivision techniques, see Warren and Weimer, 2001). The EFT allows for the user to set the desired level of approximation detail (via the number of elliptic Fourier descriptors, EFDs) and thus make it ‘rounder’ and less jittery. Using an EFT with just two descriptors is equivalent to fitting an ellipse to the animal’s shape (as, for example, xyTracker does), which is the simplest supported representation of an animal’s body.

(iii) The reference-point chosen in (ii) marks the start for the midline-algorithm. It walks both left and right from this point, always trying to move approximately the same distance on the outline (with limited wiggle-room), while at the same time minimizing the distance from the left to the right point. This works well for most shapes and also automatically yields distances between a midline point and its corresponding two points on the outline, estimating thickness of this object’s body at this point.
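
Two of the ingredients above, smoothing by weighted averaging and picking the pointiest end, can be sketched in a few lines. This is a simplification under stated assumptions: the outline is a closed list of distinct (x, y) points, and "pointiness" is measured only by the interior angle at each point, whereas the text also mentions the area between outline points. The function names are hypothetical.

```python
import math

def smooth_outline(points, weight=0.5, passes=1):
    """Blend each point with the mean of its two neighbours on a closed outline."""
    pts = list(points)
    for _ in range(passes):
        n = len(pts)
        pts = [
            (
                (1 - weight) * pts[i][0]
                + weight * 0.5 * (pts[i - 1][0] + pts[(i + 1) % n][0]),
                (1 - weight) * pts[i][1]
                + weight * 0.5 * (pts[i - 1][1] + pts[(i + 1) % n][1]),
            )
            for i in range(n)
        ]
    return pts

def pointiest_index(points):
    """Index of the outline point with the sharpest interior angle."""
    n = len(points)

    def angle(i):
        ax = points[i - 1][0] - points[i][0]
        ay = points[i - 1][1] - points[i][1]
        bx = points[(i + 1) % n][0] - points[i][0]
        by = points[(i + 1) % n][1] - points[i][1]
        cos = (ax * bx + ay * by) / (math.hypot(ax, ay) * math.hypot(bx, by))
        return math.acos(max(-1.0, min(1.0, cos)))

    return min(range(n), key=angle)

# A kite-like outline whose right-hand tip is the sharpest corner.
outline = [(0, 0), (4, 1), (10, 0), (4, -1)]
tip = pointiest_index(outline)
```

Whether the selected tip is treated as head or tail would then depend on the user setting described in (ii).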

Compared to the tracking itself, posture estimation is a time-consuming process and can be disabled. It is, however, required to estimate – and subsequently normalize – an animal’s orientation in space (e.g. required later in Automatic visual identification based on machine learning), or to reconstruct their visual field as described in the following sub-section.

Reconstructing 2D visual fields

Visual input is an important modality for many species (e.g. fish Strandburg-Peshkin et al., 2013, Bilotta and Saszik, 2001 and humans Colavita, 1974). Due to its importance in widely used model organisms like zebrafish (Danio rerio), we decided to include the capability to conduct a two-dimensional reconstruction of each individual’s visual field as part of the software. The requirements for this are successful posture estimation and that individuals are viewed from above, as is usually the case in laboratory studies.

The algorithm makes use of the fact that outlines have already been calculated during posture estimation. Eye positions are estimated to be evenly distanced from the ‘snout’ and will be spaced apart depending on the thickness of the body at that point (the distance is based on a ratio, relative to body-size, which can be adjusted by the user). Eye orientation is also adjustable, which influences the size of the stereoscopic part of the visual field. We then use ray-casting to intersect rays from each of the eyes with all other individuals as well as the focal individual itself (self-occlusion). Individuals not detected in the current frame are approximated using the last available posture. Data are organized as a multi-layered 1D-image of fixed size for each frame, with each image representing angles from −180° to 180° for the given frame. Simulating a limited field-of-view would thus be as simple as cropping parts of these images off the left and right sides. The different layers per pixel encode:

identity of the occluder

distance to the occluder

body-part that was hit (distance from the head on the outline in percent).
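
The layered 1D-image can be pictured with the following sketch. It assumes that the image spans the full circle around one eye and approximates ray-casting by projecting outline points of other individuals into angular bins, keeping the nearest hit per bin. The body-part layer uses the point index along the outline as a stand-in for the distance from the head. All names and the image width are hypothetical.

```python
import math

def visual_field(eye, heading, others, width=512):
    """Build three 1D layers (identity, distance, body part) over the full circle.

    eye:     (x, y) position of the eye
    heading: viewing direction of the eye in radians
    others:  {identity: outline points ordered starting at the head}
    """
    ids = [None] * width
    dist = [math.inf] * width
    part = [None] * width
    for identity, outline in others.items():
        for k, (x, y) in enumerate(outline):
            # Angle of this outline point relative to the viewing direction,
            # wrapped into [-pi, pi).
            rel = math.atan2(y - eye[1], x - eye[0]) - heading
            rel = (rel + math.pi) % (2 * math.pi) - math.pi
            col = min(width - 1, int((rel + math.pi) / (2 * math.pi) * width))
            d = math.hypot(x - eye[0], y - eye[1])
            if d < dist[col]:  # keep only the nearest occluder per column
                ids[col], dist[col] = identity, d
                part[col] = 100.0 * k / len(outline)
    return ids, dist, part

def crop_fov(layer, fov_degrees):
    """Simulate a limited field of view by cropping both sides of a layer."""
    keep = round(len(layer) * fov_degrees / 360.0)
    start = (len(layer) - keep) // 2
    return layer[start:start + keep]
```

Cropping with `crop_fov(ids, 180)` would, for example, keep only the half of the image centered on the viewing direction.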

While the individuals viewed from above on a computer screen look two-dimensional, one major disadvantage of any 2D approach is, of course, that it is merely a projection of the 3D scene. Any visual field estimator has to assume that, from an individual’s perspective, other individuals act as occluders in all instances (see Figure 7). This may only be partly true in the real world, depending on the experimental design, as other individuals may be able to move slightly below, or above, the focal individual’s line-of-sight, revealing otherwise occluded conspecifics behind them. We therefore support multiple occlusion-layers, allowing second-order and Nth-order occlusions to be calculated for each individual.

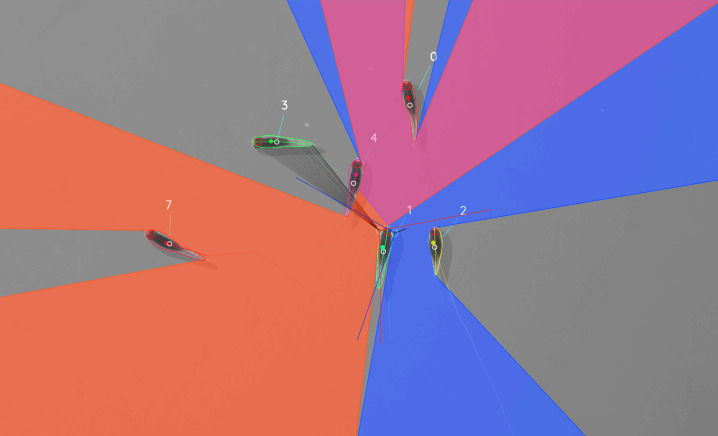

Figure 7. Visual field estimate of the individual in the center (zoomed in, the individuals are approximately 2 – 3 cm long, Video 15).

Right (blue) and left (orange) fields of view intersect in the binocular region (pink). Most individuals can be seen directly by the focal individual (1, green), which has a wide field of view per eye. Individual 3 on the top-left is not detected by the focal individual directly and is not part of its first-order visual field. However, second-order intersections (visualized by gray lines here) are also saved and accessible through a separate layer in the exported data.

Figure 7—video 1. A clip from Video 15, showing TRex’ visual-field estimation for Individual 1 https://youtu.be/yEO_3lpZIzU.

Realtime tracking option for closed-loop experiments

Live tracking is supported, as an option to the user, during the recording, or conversion, of a video in TGrabs. When closed-loop feedback is enabled, TGrabs focusses on maintaining stable recording frame-rates and may not track recorded frames if tracking takes too long. This is done to ensure that the recorded file can later be tracked again in full/with higher accuracy (thus no information is lost) if required, and to help the closed-loop feedback to stay synchronized with real-world events.

During development we worked with a mid-range gaming computer and Basler cameras at 90 fps and 2048² px resolution, where drawbacks did not occur. Running the program on hardware with specifications below our recommendations (see Results), however, may affect frame-rates as described below.

TRex loads a prepared Python script, handing down an array of data per individual in every frame. Which data fields are being generated and sent to the script is selected by the script. Available fields are:

Position

Midline information

Visual field.
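
To give an impression of what such a script might look like, the sketch below defines a per-frame callback that triggers a stimulus whenever individuals enter a circular zone. The function names, the field names, and the layout of the per-individual data are hypothetical and do not reproduce the actual interface expected by TRex; the documentation should be consulted for that.

```python
# Hypothetical field names the script would request from the tracker.
REQUESTED_FIELDS = ["position", "midline", "visual_field"]

# Centre x, centre y, and radius of a stimulus zone (hypothetical, in px).
ZONE = (100.0, 100.0, 50.0)

def in_zone(position, zone=ZONE):
    """True if the position lies inside the circular zone."""
    cx, cy, r = zone
    return (position[0] - cx) ** 2 + (position[1] - cy) ** 2 <= r ** 2

def update(frame_index, individuals, trigger):
    """Hypothetical per-frame callback.

    individuals: {identity: {"position": (x, y), ...}}
    trigger:     callable that receives the identities inside the zone
    """
    inside = sorted(
        identity
        for identity, data in individuals.items()
        if in_zone(data["position"])
    )
    if inside:
        trigger(inside)
    return inside
```

Keeping the callback free of blocking calls matters here, since slow scripts reduce the number of frames available for feedback.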

If the script (or any other part of the recording process) takes too long to execute in one frame, consecutive frames may be dropped until a stable frame-rate can be achieved. This scales well for all computer-systems, but results in fragmented tracking data, causing worse identity assignment, and reduces the number of frames and quality of data available for closed-loop feedback. However, since even untracked frames are saved to disk, these inaccuracies can be fixed in TRex later. Alternatively, if live-tracking is enabled but closed-loop feedback is disabled, the program maintains detected objects in memory and tracks them in an asynchronous thread (potentially introducing wait time after the recording stops). When the program terminates, the tracked individuals’ data are exported – along with a results file that can be loaded by the tracker at a later time.
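
The effect of frame dropping can be pictured with a toy model in which a frame is only tracked if the previous one has finished processing by the time it arrives. This is a simplified illustration with hypothetical processing times, not the scheduler used by TGrabs.

```python
def tracked_frames(processing_times, frame_interval):
    """Return the indices of frames that would be tracked under a fixed budget.

    processing_times: seconds needed to track each frame (hypothetical values)
    frame_interval:   seconds between camera frames, e.g. 1 / 90
    """
    tracked, busy_until = [], 0.0
    for index, cost in enumerate(processing_times):
        arrival = index * frame_interval
        if arrival < busy_until:
            continue  # tracker still busy: frame is recorded but not tracked
        tracked.append(index)
        busy_until = arrival + cost
    return tracked

# One slow frame (index 1) causes the following two frames to be skipped.
print(tracked_frames([0.5, 2.5, 0.5, 0.5, 0.5], frame_interval=1.0))
```

The gaps in the returned indices correspond to the fragmented tracking data mentioned above, which can be filled in by re-tracking the saved video afterwards.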

In order to make this interface easy to use for prototyping and to debug experiments, the script may be changed during its run-time and will be reloaded if necessary. Errors in the Python code lead to a temporary pause of the closed-loop part of the program (not the recording) until all errors have been fixed.

Additionally, thanks to Python being a fully-featured scripting language, it is also possible to call and send information to other programs during real-time tracking. Communication with external programs may be necessary whenever easy-to-use Python interfaces are not available, for example for the hardware being used by the experimenter.

Automatic visual identification based on machine learning