It’s too new. Made too fast. Don’t know enough about long-term possible effects.

Don’t trust the system—or the motives of the government, big business, or the big funders.

Don’t tell me what to do. It’s my choice.

It’s not natural, it’s going to manipulate my DNA.

These are among the myriad of concerns and conspiracy theories driving COVID-19 vaccine hesitancy and refusal. These concerns vary across population groups, political contexts, and personal and collective histories. They particularly thrive in the context of uncertain science and a dynamic and ever-evolving new virus.

One of the strongest underlying drivers that determines whether individuals or groups are vaccine confident or vaccine hesitant is the level of trust—or distrust—in the individuals and institutions that discover, develop, and deliver vaccines. Higher levels of trust mean willingness to accept a level of risk for a greater benefit. Those with lower levels of trust are less likely to accept even the smallest perceived risk.1

The messenger matters as much as the message. Particularly in vulnerable populations characterized by attributes associated with race, economic status, and immigrant status, familiar community members or local leaders are likely to be more trustworthy than those from outside the community. Successful initiatives have involved bringing relevant community members together with health authorities to cocreate communication and engagement strategies.

There is a confusing landscape of evolving scientific information, as well as mis- and disinformation, about COVID-19. Vaccines have been a particular focus of conspiracies and mis- and disinformation. The threat of viral misinformation spread through social media was recognized well before this pandemic struck.2 The World Health Organization named “vaccine hesitancy” as one of the top 10 global health threats in 2019 and pointed to the risks of an “infodemic.” To combat this threat, public health organizations have created content designed as corrective information (e.g., “myth busters”). Although a welcome addition to the arsenal of countermisinformation efforts, such debunking strategies are inadequate to address the deep-seated emotions and drivers of dissent.

Vaccine information does not exist in isolation. Vaccine views exist in a contentious landscape, whereby people make sense of this information in terms of political, cultural, and social values that set the stage for whether individuals or communities trust or distrust authority. As human beings, we are constantly trying to make sense of our environment. Mysterious and unexpected events, such as the COVID-19 pandemic, seem to come out of nowhere, defying explanation. Under these circumstances, it is only rational for members of the public to ask, “Why is this happening?” Unfortunately, there are no easy answers; the state of scientific knowledge is simply too limited, albeit evolving. These uncertain times are fertile ground for rumors and conspiracy theories, allowing outlandish and implausible claims that seek to explain misfortune to become compelling.

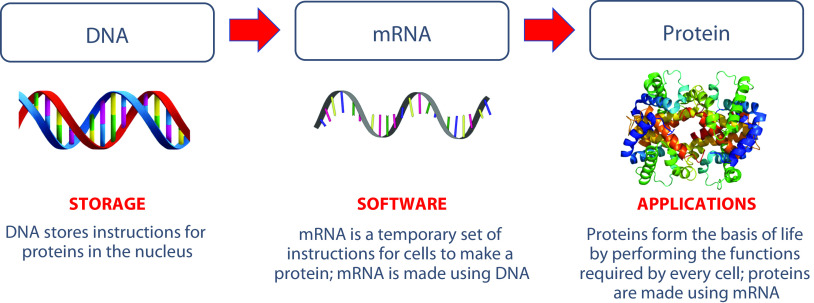

Consider the case of someone who has been exposed to misinformation claiming that a COVID-19 mRNA vaccine can “change your DNA.” Like most misinformed rumors, this false claim is rooted in a misinterpretation of the truth. Specifically, this misinformation originated as a consequence of a metaphor used by Moderna—one of the primary manufacturers of COVID-19 mRNA vaccines—which states that they “set out to create an mRNA technology platform that functions very much like an operating system on a computer . . . the ‘program’ . . . is our mRNA drug.”3

This statement is accompanied by an explanatory figure (Figure 1) making explicit the analogy between computer software and mRNA, describing mRNA medicines as “the software of life.”

FIGURE 1—Image That Could Be Misinterpreted to Falsely Suggest That mRNA Vaccines Program (and Therefore Change) One’s DNA

Source. Image adapted from Moderna.3

Correctly interpreting this metaphor requires university-level knowledge of biology and computer science. This information is easy to misinterpret. If one takes seriously the idea that mRNA is the software of the human body, then the vaccine can easily be misconstrued as an attempt to “program” vaccinated individuals: to rob them of their autonomy.

What makes this misinformation compelling? First, it must be plausible that pharmaceutical companies seek to challenge the autonomy of the average person. To many, this narrative is plausible: it is precisely the narrative that those trying to undermine confidence in vaccines are promoting on social media.4 Second, the misinformation contains a gist—a compelling, simple, bottom-line meaning—that interprets the facts in light of political, cultural, and social values held in long-term memory by its audience.5 In the midst of a pandemic marked by repeated restrictions on movement, the value of personal autonomy is even more pronounced. Under these circumstances, in which individuals may feel their personal autonomy under threat, it is not only reasonable but rational to experience fear and anxiety at the prospect of being vaccinated.

Attempts to debunk this rumor are likely to be ineffective unless they provide a more compelling gist. Consider that a simple search on Google using the terms “vaccine mRNA” immediately yields a “COVID-19 alert” with several “common questions,” including “Could an mRNA vaccine change my DNA?” Clicking on this question yields the following answer: “An mRNA vaccine—the first COVID-19 vaccine to be granted emergency use authorization (EUA) by the FDA [US Food and Drug Administration]—cannot change your DNA” (https://bit.ly/3uzxpnP). This decontextualized factual statement might lead this person to draw several erroneous conclusions: (1) mRNA vaccines are new, (2) the first mRNA vaccine was granted an emergency use authorization by the FDA and therefore might not have been tested fully, and (3) several people are asking Google whether mRNA vaccines can change one’s DNA, lending the question social validity. Even a more detailed factual response, such as the statement provided by the Centers for Disease Control and Prevention that “mRNA from the vaccine never enters the nucleus of the cell and does not affect or interact with a person’s DNA” (https://bit.ly/2Pc95tn) may be misconstrued because it assumes that the listener possesses, and can contextualize, knowledge of cell biology. Worse, these points do not address the fundamental concern of the vaccine-hesitant individual: a perceived threat to their value of personal autonomy.

Decades of empirical research in experimental psychology, and especially medical decision making,6 demonstrate conclusively that providing detailed factual information is not an effective antidote to vaccine safety concerns. When asked in an experimental or didactic setting, individuals may repeat these facts in the short term but, on their own, easily forget them, so the facts have relatively small impacts on intentions or behaviors. This same research shows that messages are more compelling when they communicate a gist or simple bottom-line meaning that helps the listener make sense of the message.

To adequately address misinformation and build trust, it is crucial to directly address the concerns of audiences. In the words of a recent article published in the Proceedings of the National Academy of Sciences, “Science communication needs to shift from an emphasis on disseminating rote facts to achieving insight, retaining its integrity but without shying away from emotions and values.”5(p1) Thus, rather than simply debunking the decontextualized claim that “mRNA vaccines can change your DNA”—which, if done inappropriately, may simply trigger a negative response to authority—health communicators must understand the context that gave rise to the claim and recontextualize their response in terms of the values of the vaccine-hesitant individual.

For example, one might point out that the software metaphor is actually an inaccurate description—rather than forcing the human body to follow a program, it is actually the vaccine that will give the body the ability to defend itself naturally. This statement is factual, meaningful, and relevant to the listener’s core values of autonomy and self-reliance.

The above example highlights the importance of empathy. A decontextualized debunking strategy does not engage with the substance of the listener’s concern; it assumes that the debunker’s job is simply to educate or otherwise fill an information gap. A more effective response to misinformation is more compassionate; it starts from the premise that the misinformed individual has legitimate concerns and feelings. Listening plays an important role in understanding those concerns. Seeking to get the gist of their concerns—that is, to understand what they mean, how it makes them feel, and why it is important to them—demonstrates a motive of caring and can contribute to building trust.7

The implication is that simply responding to misinformation with factual corrections is not likely to turn the tide of public dissent. There are deeper issues at play: building trust means changing perceptions of risk and requires being responsive to felt needs and concerns, putting facts in context, and ultimately building relationships.

This is just the beginning: our handling of the COVID-19 vaccine rollout will be foundational for future vaccine confidence as several new vaccines are introduced around the world. A recent study conducted in Africa found that 42% of respondents reported that they were exposed to a lot of misinformation. Correcting misinformation, one piece at a time, is important for today, but only if we address the underlying issues driving misinformation will we be able to build vaccine confidence for the longer term.

We still have time to get it right.

ACKNOWLEDGMENTS

This work was supported by a grant from the John S. and James L. Knight Foundation to the George Washington University Institute for Data, Democracy, and Politics.

CONFLICTS OF INTEREST

H. J. Larson has received research grants from GlaxoSmithKline (GSK), Johnson & Johnson, and Merck and serves on the Merck Vaccine Confidence Advisory Board. She has received honoraria for speaking at training seminars hosted by GSK and Merck. D. A. Broniatowski received a speaking honorarium from the UN Shot@Life Foundation—a nonprofit organization that promotes childhood vaccination.

REFERENCES

1. Karafillakis E, Larson HJ. ADVANCE consortium. The benefit of the doubt or doubts over benefits? A systematic literature review of perceived risks of vaccines in European populations. Vaccine. 2017;35(37):4840–4850. doi: 10.1016/j.vaccine.2017.07.061.

2. Larson HJ. The biggest pandemic risk? Viral misinformation. Nature. 2018;562(7727):309. doi: 10.1038/d41586-018-07034-4.

3. Moderna. mRNA platform: enabling drug discovery & development. Available at: https://www.modernatx.com/mrna-technology/mrna-platform-enabling-drug-discovery-development. Accessed March 30, 2021.

4. Broniatowski DA, Jamison AM, Johnson NF et al. Facebook pages, the “Disneyland” measles outbreak, and promotion of vaccine refusal as a civil right, 2009–2019. Am J Public Health. 2020;110(S3):S312–S318. doi: 10.2105/AJPH.2020.305869.

5. Reyna VF. A scientific theory of gist communication and misinformation resistance, with implications for health, education, and policy. Proc Natl Acad Sci U S A. 2020. doi: 10.1073/pnas.1912441117. Epub ahead of print.

6. Reyna VF. A theory of medical decision making and health: fuzzy trace theory. Med Decis Making. 2008;28(6):850–865. doi: 10.1177/0272989X08327066.

7. Larson HJ. Stuck: How Vaccine Rumors Start—and Why They Don’t Go Away. New York, NY: Oxford University Press; 2020.