Abstract

Background:

Colorectal cancer is a serious threat to patients' lives, and treatment decisions depend on accurate preoperative staging. Magnetic resonance imaging (MRI) plays an important role in the preoperative examination of patients with rectal cancer, and artificial intelligence (AI) has made significant achievements in image analysis in recent years. Introducing AI into MRI interpretation could establish a stable platform for image recognition and judgment within a short period. This study aimed to establish an automatic diagnostic platform for predicting the preoperative T stage of rectal cancer using a deep neural network.

Methods:

Data from 183 patients with rectal cancer were collected retrospectively. The platform was built with a faster region-based convolutional neural network (Faster R-CNN) and evaluated using receiver operating characteristic (ROC) curves.

Results:

An automatic diagnosis platform for rectal cancer T-staging based on MRI was established. The areas under the ROC curve (AUCs) were 0.99 in the horizontal plane, 0.97 in the sagittal plane, and 0.98 in the coronal plane. By stage, the AUCs for T1, T2, T3, and T4 were 1.00, 1.00, 1.00, and 1.00 in the horizontal plane; 0.96, 0.97, 0.97, and 0.97 in the coronal plane; and 0.95, 0.99, 0.96, and 1.00 in the sagittal plane.

Conclusion:

An AI platform based on Faster R-CNN may be an effective and objective tool for predicting the T stage of rectal cancer.

Trial registration:

chictr.org.cn: ChiCTR1900023575; http://www.chictr.org.cn/showproj.aspx?proj=39665.

Keywords: Magnetic resonance imaging, Rectal neoplasm, TNM staging, Artificial intelligence, Convolutional neural networks

Introduction

Colorectal cancer is one of the most common malignant tumors of the digestive tract worldwide; its incidence ranks third and its mortality fourth among all malignant tumors.[1] Colorectal cancer is also common in China,[2] where it seriously shortens life expectancy and impairs the quality of life of affected individuals.

Comprehensive, surgery-based treatment has greatly prolonged survival and improved the quality of life of patients with rectal cancer. However, not all patients with rectal cancer are candidates for surgery, and even when surgery is an option, the choice of surgical procedure can strongly affect the patient's quality of life and prognosis.[3,4] Accurate preoperative staging therefore plays an important role in selecting treatment for patients with rectal cancer.[3–6] Postoperative pathological diagnosis is the gold standard for determining the disease status, prognosis, and treatment plan of patients with advanced rectal cancer, but it becomes available only after surgery. This delay can lead to discrepancies between preoperative and postoperative treatment plans, and overstaging or understaging caused by an inaccurate preoperative diagnosis can cause permanent, irreversible harm to patients who have already undergone surgery. Advances in imaging have provided strong support for the preoperative diagnosis of rectal cancer.[7] At present, endoscopic ultrasound (EUS), computed tomography (CT), magnetic resonance imaging (MRI), and other auxiliary examinations are used for the preoperative staging of rectal cancer,[8] and various strategies can be used to keep preoperative imaging staging highly consistent with postoperative pathological staging.[6] Although EUS can reach an accuracy of 91% for the preoperative T-staging of some rectal tumors,[9] MRI is less technically demanding for clinical staff than EUS[10] and has fewer restrictions in application: it can achieve more accurate staging of obstructing tumors and of tumors whose location is out of reach and cannot be evaluated by EUS.
Compared with CT, MRI offers high soft-tissue resolution and high preoperative diagnostic accuracy.[11] MRI has therefore become the most valuable method for the preoperative staging of rectal cancer worldwide,[12,13] and high-resolution MRI has improved the imaging of soft tissue and tumors. With continuous improvements in imaging technology and in radiologists' diagnostic experience, the accuracy of preoperative diagnosis has greatly improved. However, China's large population, the very large volume of image data, and a shortage of radiologists mean that practicing radiologists in different regions and hospitals face heavy workloads and highly stressful working environments, and their diagnostic abilities vary. Because MRI interpretation is operator-dependent, it sometimes leads to misdiagnosis, so reducing errors must take a more prominent role in preoperative diagnosis.[14] As a computational technology, artificial intelligence (AI) processes data with a stable error rate, high speed, and high precision. By applying deep learning to imaging features and their corresponding diagnostic results, AI can achieve image-reading and diagnostic capabilities similar to those of radiologists.
At present, AI technology already helps clinicians interpret images for diagnosis and has solved problems in many areas.[15,16] Applying AI to image interpretation can help address the shortage of radiologists in China, speed up image reading, reduce errors caused by human factors, and improve the overall accuracy of imaging diagnosis.[14] To improve the accuracy of preoperative diagnosis in patients with rectal cancer and reduce radiologists' workload, we used deep learning to establish an automatic T-staging diagnosis system for rectal cancer, trained on a large number of rectal cancer MR images combined with postoperative pathological diagnoses. The accuracy of the system was then evaluated with data from a verification group to assess the feasibility of its clinical application. This article describes how the system was established and reports its learning results.

Methods

This study was approved by the Ethics Committee of the Affiliated Hospital of Qingdao University (No. QYFYYWZLL26093). Because the study was retrospective and the data were analyzed anonymously, the requirement for informed consent was waived.

Establishment of the database for rectal cancer T-staging

We enrolled 183 patients with rectal cancer from the Chinese Colorectal Cancer Database (CCCD) who were treated at the Affiliated Hospital of Qingdao University between July 2016 and July 2017. The inclusion criteria were: rectal cancer confirmed pathologically by preoperative colonoscopic biopsy, with no concurrent malignant tumors in other organs; preoperative high-resolution pelvic (rectal) dynamic MRI and thin-slice contrast-enhanced scanning at our hospital; radical surgical resection; and complete postoperative pathological findings. The exclusion criteria were: rectal space-occupying lesions not characterized preoperatively or postoperatively; no surgery; no radical tumor resection; and rectal malignancies of other origins. A total of 183 patients (121 men and 62 women) were included; their age and sex distributions are shown in Table 1. According to preoperative colonoscopy and MRI reports, 59 patients had lower, 91 had middle, and 33 had upper rectal cancer. Compared with the postoperative pathological results, our radiologists' imaging diagnoses were correct in only 62% of cases. For data grouping, the data were randomized, and 80% were randomly assigned to the training set; the remaining 20% formed the verification set.

Table 1.

Baseline clinical characteristics of the rectal cancer patients.

| Characteristic | T1 | T2 | T3 | T4 |
| --- | --- | --- | --- | --- |
| Age (years) | 59.2 ± 10.4 | 65.1 ± 9.7 | 61.3 ± 10.0 | 60.6 ± 9.7 |
| <60 years | 41 | 27 | 42 | 50 |
| ≥60 years | 59 | 73 | 58 | 50 |
| Sex | | | | |
| Male | 72 | 81 | 65 | 65 |
| Female | 28 | 19 | 35 | 35 |
| Tumor size (cm) | 5.8 ± 0.9 | 4.5 ± 0.5 | 3.8 ± 1.2 | 2.5 ± 0.8 |
| <5 | 41 | 52 | 56 | 60 |
| ≥5 | 59 | 48 | 44 | 40 |
| Tumor location | | | | |
| High | 18 | 28 | 20 | 30 |
| Middle | 41 | 45 | 51 | 40 |
| Low | 41 | 27 | 29 | 30 |
| CEA (ng/mL) | 3.2 ± 14.2 | 2.5 ± 5.6 | 5.6 ± 2.0 | 10.2 ± 13.2 |
| Normal | 82 | 59 | 45 | 30 |
| Elevated | 18 | 41 | 55 | 70 |
| CA19–9 (U/mL) | 16.0 ± 5.0 | 17.5 ± 9.6 | 15.6 ± 8.0 | 14.5 ± 5.0 |
| Normal | 100 | 86 | 82 | 55 |
| Elevated | 0 | 14 | 18 | 45 |

Continuous variables are expressed as mean ± standard deviation. Categorical variables given are the number of patients unless indicated otherwise. CA19–9: Carbohydrate antigen 19–9; CEA: Carcinoembryonic antigen.

MRI was performed on a GE Signa 3.0T HDX MR scanner with a multichannel phased-array coil. Patients were scanned in the supine position, with the center of the magnetic field positioned at the superior edge of the pubis. The main scanning parameters and sequences are listed in Table 2. To reduce the influence of projection orientation on T-staging, images from three planes (sagittal, coronal, and horizontal) were used together for learning, providing more complete information on the tumor.[17] Because the three planes complement each other, this approach improves diagnostic accuracy. In total, 10,800 preoperative MR images from the 183 patients were included in the rectal cancer T-staging database for platform establishment and verification.

Table 2.

Scanning parameters for the database of the rectal cancer T-staging automatic identification platform.

| Projection orientation | Sequence | Repetition time (ms) | Echo time (ms) | Slice gap (mm) | Slice thickness (mm) | Matrix | Field of view (cm) | Number of excitations |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Sagittal view | T2WI | 2000–4000 | 60–120 | 1 | 6 | 320 × 256 | 32–44 | 1 |
| Coronal view | T2WI | 2000–4000 | 60–120 | 2 | 5 | 320 × 192 | 36–44 | 2 |
| Horizontal view | T2WI | 2000–4000 | 60–120 | 1.5 | 6 | 320 × 192 | 36–52 | 2 |

T2WI: T2-weighted images.

All surgically removed specimens included in the study underwent pathological T-staging according to the tumor-node-metastasis (TNM) staging criteria for rectal cancer of the American Joint Committee on Cancer. Two senior gastrointestinal radiologists read the images, marked the regions of interest, and cross-checked each other's work. When an image could not be classified, the two radiologists decided jointly, and a gastroenterologist made the final determination. Labeling software was used to annotate the MR images of the included patients. During labeling, intestinal contents and air were avoided to minimize interference with the results. The imaging plane showing the deepest tumor infiltration was selected, and the entire intestinal wall was labeled. Labels were based on the T stage from each patient's postoperative pathological diagnosis. All data were randomized; 80% of the images (approximately 8640 MR images) were randomly assigned to the training set, and the remaining 20% (approximately 2160 MR images) formed the verification set.
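The random 80/20 split described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the seed is hypothetical, and because the text describes randomizing both "the data" and "the images", the sketch supports both an image-level split and an optional patient-level split (via a hypothetical `group_of` function) that keeps all images of one patient in the same set. With 10,800 images, an image-level split gives exactly 8640 training and 2160 verification images.

```python
import random
from collections import defaultdict


def split_80_20(items, seed=0, group_of=None):
    """Randomly split items into ~80% training and ~20% verification.

    If group_of is given (e.g. a function returning a patient ID), whole
    groups are assigned together so no patient appears in both sets.
    """
    rng = random.Random(seed)  # hypothetical fixed seed for reproducibility
    if group_of is None:
        shuffled = list(items)
        rng.shuffle(shuffled)
        n_train = round(0.8 * len(shuffled))
        return shuffled[:n_train], shuffled[n_train:]
    groups = defaultdict(list)
    for item in items:
        groups[group_of(item)].append(item)
    keys = sorted(groups)  # deterministic order before shuffling
    rng.shuffle(keys)
    n_train_groups = round(0.8 * len(keys))
    train = [it for k in keys[:n_train_groups] for it in groups[k]]
    val = [it for k in keys[n_train_groups:] for it in groups[k]]
    return train, val


images = list(range(10800))  # stand-ins for the 10,800 MR images
train, val = split_80_20(images, seed=42)
print(len(train), len(val))  # 8640 2160
```

An image-level split is simpler but can place slices from the same patient in both sets; the patient-level option avoids that leakage at the cost of slightly uneven image counts.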

Data analysis of MR images for rectal cancer T-staging

The learning process for establishing the automatic diagnosis platform for rectal cancer T-staging is as follows:

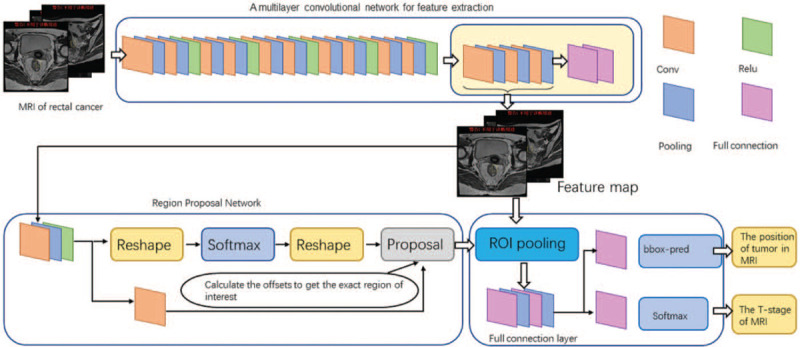

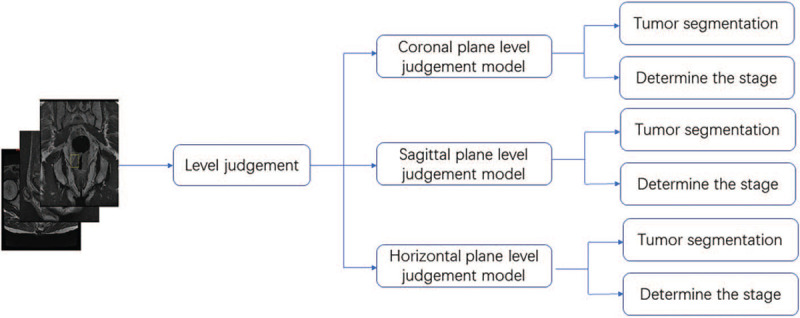

Because the faster region-based convolutional neural network (Faster R-CNN) algorithm is widely used in AI,[18] we built the platform on Faster R-CNN. As shown in Figure 1, Faster R-CNN consists of convolutional layers, a region proposal network (RPN), region of interest (ROI) pooling, and classification. The randomly assigned 80% of the data served as the training set. All images were uniformly scaled to 512 × 557 pixels and normalized so that the pixels of each channel had a mean of 0 and a variance of 1 before being fed into the convolutional layers. Faster R-CNN was trained in four alternating steps. First, the RPN was trained for the region proposal task on images annotated with T-stage information, initialized with a VGG16 model. Second, a detection network, also initialized with VGG16 and not sharing layers with the RPN, was trained using the proposals from the trained RPN. Third, the detection network was used to initialize the RPN; the convolutional layers were fixed and only the RPN layers were fine-tuned. Finally, with the convolutional layers still fixed, the detection layers of the network were fine-tuned. This process yielded four learning models: one model that identifies the imaging plane and three plane-specific models that automatically delineate the tumor and determine its T stage. The learning process is shown in Figure 2.
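The resizing and per-channel normalization step can be sketched with NumPy as below. This is a minimal sketch under assumptions not stated in the source: nearest-neighbor interpolation, 512 taken as the height and 557 as the width, and mean and standard deviation computed per image rather than over the whole training set.

```python
import numpy as np


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbor resize of an (H, W, C) array by index mapping."""
    in_h, in_w = img.shape[:2]
    rows = (np.arange(out_h) * in_h / out_h).astype(int)
    cols = (np.arange(out_w) * in_w / out_w).astype(int)
    return img[rows][:, cols]


def normalize_per_channel(img, eps=1e-8):
    """Z-score each channel so it has mean 0 and (almost exactly) variance 1."""
    img = img.astype(np.float64)
    mean = img.mean(axis=(0, 1), keepdims=True)
    std = img.std(axis=(0, 1), keepdims=True)
    return (img - mean) / (std + eps)  # eps guards against constant images


def preprocess(img, out_h=512, out_w=557):
    """Assumed order: resize first, then normalize."""
    if img.ndim == 2:  # single-channel MR slice -> (H, W, 1)
        img = img[:, :, np.newaxis]
    return normalize_per_channel(resize_nearest(img, out_h, out_w))


rng = np.random.default_rng(0)
x = preprocess(rng.integers(0, 4096, size=(320, 256)))  # synthetic slice
print(x.shape)  # (512, 557, 1)
```

Note that z-scoring fixes only the mean and variance of each channel; it does not make the pixel distribution normal.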

Figure 1.

Composition of the Faster R-CNN used to build the platform. The convolutional layers extract image features: the input is the whole image and the output is a set of feature maps. The RPN proposes candidate regions. ROI pooling converts inputs of different sizes into outputs of fixed length. The classification and regression layers produce the final output. Faster R-CNN: Faster region-based convolutional neural network; ROI: Region of interest; RPN: Region proposal network; Conv: Convolutional layers; Relu: Rectified linear unit layers; Pooling: Pooling layers; Full connection: Fully connected layers.
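The ROI pooling step in the caption above, which turns regions of different sizes into fixed-size outputs, can be illustrated with a simplified NumPy sketch of ROI max pooling. The 7 × 7 output grid is an assumption (a common choice with VGG16 backbones), and the floor/ceil bin boundaries are a simplification rather than any particular library's implementation.

```python
import numpy as np


def roi_max_pool(feature_map, roi, out_size=(7, 7)):
    """Max-pool one ROI of a (C, H, W) feature map into a fixed (C, oh, ow) grid.

    roi = (x0, y0, x1, y1) in feature-map cells, end-exclusive and non-empty.
    """
    x0, y0, x1, y1 = roi
    oh, ow = out_size
    region = feature_map[:, y0:y1, x0:x1]
    h, w = region.shape[1:]
    # Split the ROI into an oh x ow grid of (possibly overlapping) bins.
    ys = np.linspace(0, h, oh + 1)
    xs = np.linspace(0, w, ow + 1)
    out = np.empty((feature_map.shape[0], oh, ow), dtype=feature_map.dtype)
    for i in range(oh):
        r0 = int(np.floor(ys[i]))
        r1 = max(int(np.ceil(ys[i + 1])), r0 + 1)  # every bin gets >= 1 row
        for j in range(ow):
            c0 = int(np.floor(xs[j]))
            c1 = max(int(np.ceil(xs[j + 1])), c0 + 1)  # and >= 1 column
            out[:, i, j] = region[:, r0:r1, c0:c1].max(axis=(1, 2))
    return out


fm = np.arange(2 * 14 * 14, dtype=float).reshape(2, 14, 14)  # toy feature map
big = roi_max_pool(fm, (0, 0, 14, 14))   # 14 x 14 region
small = roi_max_pool(fm, (2, 3, 5, 6))   # 3 x 3 region
print(big.shape, small.shape)  # (2, 7, 7) (2, 7, 7)
```

Both regions yield the same output shape, which is what allows the downstream fully connected classification and regression layers to accept proposals of any size.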

Figure 2.

The learning process for establishing the automatic diagnosis platform for rectal cancer T-staging. Images from the training group were input into the software. The learning process has two major steps and produces four learning models, which together identify the imaging plane, delineate the ROIs, and predict the T stage. ROIs: Regions of interest.

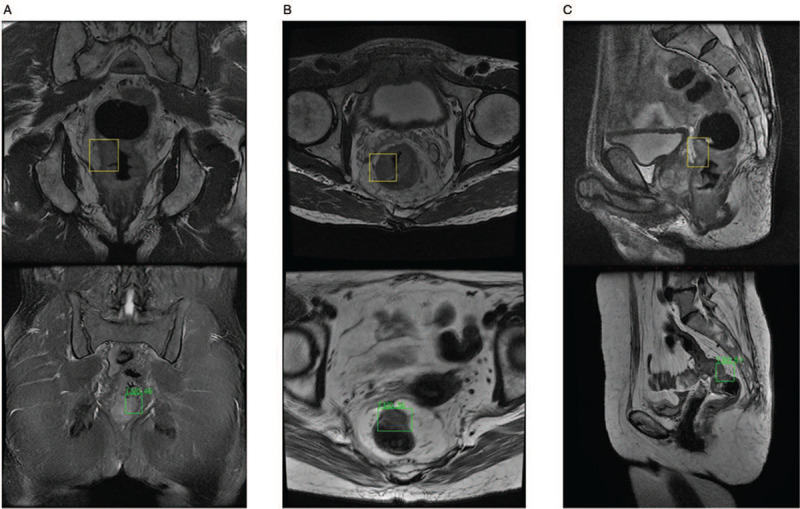

Verification of the automatic diagnosis platform for rectal cancer T-staging: 20% of the data were randomly assigned to the verification set. The patient images were input into the platform, which determined the plane in which each image was acquired and the T stage to which it belonged. The tumor delineations and T stages output by the platform were then compared with the radiologists' diagnoses and with the T stages from postoperative pathology, and the number of correct assessments made by the platform was counted (Figure 3). The AI learning results were evaluated with receiver operating characteristic (ROC) curves and the area under the curve (AUC).
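The two-stage flow described above (identify the plane first, then run that plane's staging model) can be sketched as a simple dispatch function. All names here are hypothetical stand-ins for the platform's four trained models, and reporting the highest-confidence region as the image's T stage is an assumption; the source does not say how multiple detected regions are combined.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]      # (x0, y0, x1, y1) in pixels
Detection = Tuple[Box, str, float]   # (tumor box, T stage, confidence)


def diagnose(
    image: Any,
    classify_plane: Callable[[Any], str],
    stage_models: Dict[str, Callable[[Any], List[Detection]]],
) -> Tuple[str, Optional[Detection]]:
    """Route an image to its plane-specific staging model (hypothetical API)."""
    plane = classify_plane(image)  # e.g. "coronal"
    if plane not in stage_models:
        raise ValueError(f"no staging model for plane {plane!r}")
    detections = stage_models[plane](image)
    if not detections:
        return plane, None  # no tumor region proposed
    # Assumption: report the highest-confidence region as the image's T stage.
    return plane, max(detections, key=lambda d: d[2])


# Dummy models standing in for the trained detectors.
models = {
    "coronal": lambda img: [((10, 10, 50, 60), "T3", 0.91), ((5, 5, 20, 20), "T2", 0.40)],
    "horizontal": lambda img: [],
    "sagittal": lambda img: [((0, 0, 30, 30), "T1", 0.77)],
}
plane, best = diagnose("scan-001", lambda img: "coronal", models)
print(plane, best[1])  # coronal T3
```

Keeping the plane classifier separate lets each staging model specialize in one projection, matching the description of one plane model plus three plane-specific models.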

Figure 3.

Inputs to and outputs from the T-stage automatic identification platform in the three planes: (A) coronal, (B) horizontal, and (C) sagittal images. In each panel, the upper image was annotated by the radiologist for the AI platform to learn from, and the lower image shows the platform's identification result. The platform performed well in automatically delineating the tumor area and determining the tumor stage in all imaging planes. AI: Artificial intelligence.

Statistical analysis

Some measurement and count data in this study were analyzed with SPSS 20.0 statistical software (SPSS Statistics for Windows, IBM, Armonk, NY, USA). The machine learning results were analyzed in Python 3.7 (freely available at https://www.python.org/), and the classification_report function of the metrics module was used to generate multiclass reports.

For each category, the numbers of true positives and false positives were counted at each probability threshold, and the resulting true-positive and false-positive rates were used to plot the ROC curves. The micro-averaged AUC for each of the three imaging planes was obtained by calculating the area under the corresponding ROC curve.
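The per-stage (one-vs-rest) and micro-averaged AUC calculation described above can be sketched with NumPy alone, without assuming which Python library the authors used. Each distinct score is treated as a threshold, true- and false-positive rates are accumulated, and the area is computed with the trapezoidal rule. Micro-averaging here means pooling every (image, stage) decision into one binary problem; the label encoding (0 to 3 for T1 to T4) is an assumption.

```python
import numpy as np


def roc_points(y_true, scores):
    """(fpr, tpr) at every distinct score threshold, from strictest to loosest."""
    y = np.asarray(y_true, dtype=bool)
    s = np.asarray(scores, dtype=float)
    order = np.argsort(-s, kind="mergesort")
    y, s = y[order], s[order]
    # Last index of each run of tied scores marks one threshold.
    idx = np.r_[np.nonzero(np.diff(s))[0], s.size - 1]
    tps = np.cumsum(y)[idx]
    fps = (idx + 1) - tps
    tpr = np.r_[0.0, tps / tps[-1]]
    fpr = np.r_[0.0, fps / fps[-1]]
    return fpr, tpr


def auc(fpr, tpr):
    """Trapezoidal area under an ROC curve."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))


def per_stage_and_micro_auc(labels, probs):
    """labels: (n,) ints in 0..K-1; probs: (n, K) predicted stage probabilities.

    Each stage must have at least one positive and one negative example.
    """
    probs = np.asarray(probs, dtype=float)
    onehot = np.eye(probs.shape[1], dtype=bool)[np.asarray(labels)]
    per_stage = [auc(*roc_points(onehot[:, k], probs[:, k])) for k in range(probs.shape[1])]
    micro = auc(*roc_points(onehot.ravel(), probs.ravel()))
    return per_stage, micro


labels = [0, 1, 2, 3]  # T1..T4
probs = np.eye(4)      # perfectly confident, always correct
print(per_stage_and_micro_auc(labels, probs))  # ([1.0, 1.0, 1.0, 1.0], 1.0)
```

Tied scores are grouped into a single threshold, so a classifier that outputs the same probability for everything gets an AUC of 0.5, as expected.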

Results

Learning results

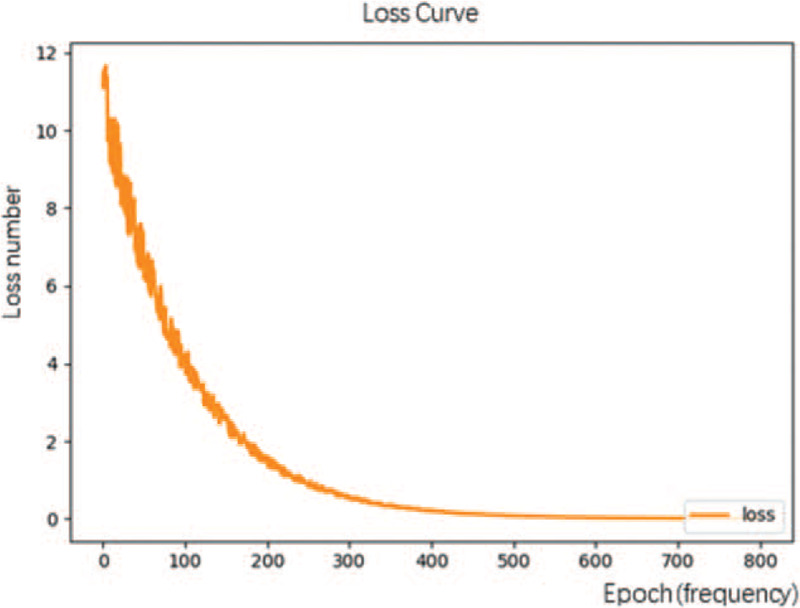

The plane identification model was trained for 50 epochs. For the tumor recognition and staging models, the initial learning rate was set to 0.0002, and the model for each plane was trained for 700 epochs. Figure 4 shows the loss curve of the learning process: as training proceeds, the value of the loss function gradually decreases.
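The reported training settings and the four-step alternating schedule from the Methods can be collected into a small configuration object, sketched below with Python dataclasses. The numeric values are those reported in the text; all field names and step labels (such as `shared_conv` and `step3`) are hypothetical, and the source does not say whether the 700 epochs per plane were divided among the four steps.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrainingStep:
    """One step of the four-step alternating Faster R-CNN training."""
    trains: str                   # network whose weights are updated
    init_from: str                # source of its initial weights
    frozen: Tuple[str, ...] = ()  # layer groups kept fixed in this step


# Hypothetical labels for the four steps described in the Methods.
FOUR_STEP_SCHEDULE = (
    TrainingStep(trains="rpn", init_from="vgg16"),
    TrainingStep(trains="detector", init_from="vgg16"),  # uses step-1 proposals
    TrainingStep(trains="rpn", init_from="detector", frozen=("shared_conv",)),
    # Shared convolutional layers carried over from step 3 stay fixed.
    TrainingStep(trains="detector", init_from="step3", frozen=("shared_conv",)),
)


@dataclass(frozen=True)
class PlatformConfig:
    """Reported hyperparameters; field names are illustrative only."""
    input_size: Tuple[int, int] = (512, 557)
    plane_model_epochs: int = 50
    staging_initial_lr: float = 0.0002
    staging_epochs_per_plane: int = 700
    planes: Tuple[str, ...] = ("coronal", "horizontal", "sagittal")
    t_stages: Tuple[str, ...] = ("T1", "T2", "T3", "T4")
    schedule: Tuple[TrainingStep, ...] = FOUR_STEP_SCHEDULE

    def total_staging_epochs(self) -> int:
        # One staging model per plane, each trained for the same number of epochs.
        return self.staging_epochs_per_plane * len(self.planes)


cfg = PlatformConfig()
print(cfg.total_staging_epochs())  # 2100
```

Freezing the dataclasses keeps a training run's settings immutable once created, which makes it easier to log exactly which configuration produced a given model.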

Figure 4.

Loss curve of the T-stage automatic identification platform. The value of the loss function gradually decreases as the number of training iterations increases.

Platform verification results

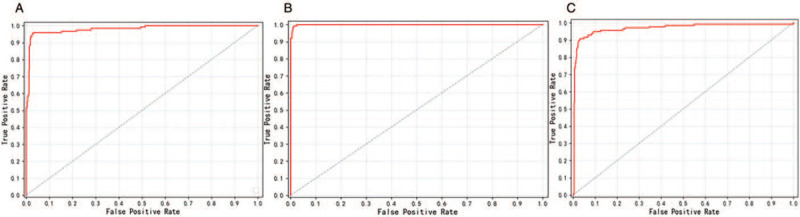

After 50 epochs of training, the plane recognition model reached 100% accuracy in identifying the image plane, indicating that the platform could correctly distinguish the projection plane of the rectal cancer MR images. In the T-staging phase, the platform achieved the following AUC values (Figure 5): coronal plane, 0.98; horizontal plane, 0.99; and sagittal plane, 0.97. The platform performed well in every plane, and all three values were higher than the 86% accuracy of manual MRI diagnosis.

Figure 5.

The ROC curves of T-stage automatic identification platform in different planes. (A) Machine learning ROC curve for coronal images, AUC = 0.98; (B) machine learning ROC curve for horizontal images, AUC = 0.99; and (C) machine learning ROC curve for sagittal images, AUC = 0.97. Based on the AUC values, the automatic diagnosis platform for T-staging based on the Faster R-CNN deep neural network can achieve the diagnostic level of clinical radiologists in all three planes. AUC: Area under the curve; Faster R-CNN: Faster region-based convolutional neural network; ROC: receiver operating characteristic.

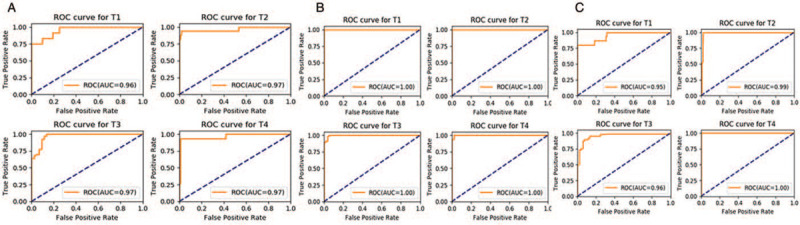

We evaluated the learning results for each stage in each plane (Figure 6): coronal plane, AUC (T1) = 0.96, AUC (T2) = 0.97, AUC (T3) = 0.97, AUC (T4) = 0.97; horizontal plane, AUC (T1) = 1.00, AUC (T2) = 1.00, AUC (T3) = 1.00, AUC (T4) = 1.00; sagittal plane, AUC (T1) = 0.95, AUC (T2) = 0.99, AUC (T3) = 0.96, AUC (T4) = 1.00. The platform performed well for every stage in every plane. Based on these results, the diagnostic platform built on Faster R-CNN reached the level of a highly qualified radiologist in rectal cancer T-staging.

Figure 6.

The ROC curves of T-stage automatic identification platform for different stages at different planes: in the coronal plane (A), AUC (T1) = 0.96, AUC (T2) = 0.97, AUC (T3) = 0.97, AUC (T4) = 0.97; in the horizontal plane (B), AUC (T1) = 1.00, AUC (T2) = 1.00, AUC (T3) = 1.00, AUC (T4) = 1.00; and in the sagittal plane (C), AUC (T1) = 0.95, AUC (T2) = 0.99, AUC (T3) = 0.96, AUC (T4) = 1.00. AUC: Area under the curve; ROC: receiver operating characteristic.

Discussion

Rectal cancer has high incidence and mortality rates, so this study has considerable social value. The high incidence of rectal cancer and our hospital's many years of experience with high-resolution pelvic MRI allowed us to obtain large quantities of clinical data for analysis. Because radiologists often use T2-weighted MR images in the preoperative diagnosis of rectal cancer and these images have better quality than T1-weighted images,[19,20] we selected high-resolution T2-weighted MR images for this study. Although we discussed alternative treatment plans with the patients before surgery, some patients with a preoperative diagnosis of T3 disease and indications for neoadjuvant chemoradiotherapy, as well as some patients with a preoperative diagnosis of T4 disease, still chose surgical resection because of limited education or understanding of the disease; these cases allowed us to obtain clinical data for the corresponding stages. The automated diagnostic platform constructed in this study assesses all T stages of rectal cancer well. It also performs well for T1-stage rectal cancer, which helps address the weakness of MRI in distinguishing T1 from T2 rectal cancer. The combination of AI and medical big data has greatly improved the efficiency and accuracy of medical care. Researchers have previously achieved promising results with deep learning for skin, prostate, and breast cancers, and for rectal cancer, AI has been used experimentally to diagnose metastatic lymph nodes on MR images with satisfactory results.[21,22] This study used the more advanced Faster R-CNN algorithm,[18] mainly because its learning process resembles the process radiologists follow when reading images.
Earlier detection approaches, such as histogram of oriented gradients (HOG) features combined with a support vector machine (SVM) classifier and the deformable part model (DPM), take longer to train, learn only shallow features, and generalize poorly to complex scenes. Faster R-CNN is a newer object detection algorithm that introduces the RPN to reduce computational cost, and it performs well in both calculation speed and detection accuracy; we therefore chose Faster R-CNN. We used postoperative pathological results as labels and thereby corrected some common causes of overstaging or understaging, such as image quality, body position during imaging, and human factors. Basing T-staging labels on pathological diagnoses further improved the AI diagnostic system for rectal cancer. Learning from the “gold standard” for rectal cancer diagnosis and disease evaluation further reduced the likelihood of errors by this platform in preoperative diagnosis, leading to a more accurate understanding of the patient's condition before surgery and more effective preoperative treatment plans. Compared with improving imaging accuracy or building radiologists' diagnostic experience, training AI can achieve better results in a shorter period. In image processing, normalization should be used to obtain relatively uniform input data and to improve the convergence speed and accuracy of data processing; normalization also makes it possible to process data from different centers.[21,22]

Although this automatic T-staging system achieved good results, the study has several limitations. All patients in the learning set underwent radical surgery; the set included no patients unsuitable for surgery, such as those with disease too advanced for resection, and no patients with earlier-stage disease treated by endoscopic submucosal dissection or transanal resection of rectal lesions. The absence of these patients from the learning process may lead to inaccurate MRI assessments for similar patients. In addition, the relationship between the depth of invasion and the pathological stage differs for tumors at different distances from the anus,[23] which may affect the accuracy of AI-based imaging assessment. Restaging after neoadjuvant chemoradiotherapy is equally important for tumor treatment and for planning surgery, but MRI, CT, and EUS have limited accuracy for restaging after chemotherapy; in future work, we will attempt to use machine learning to improve restaging accuracy. Finally, the proportions of patients differ considerably between stages, which may bias the model, so recognition of individual stages may be inaccurate in clinical use.

In summary, we designed and created an automatic diagnosis platform for rectal cancer T-staging that produces results comparable to those of radiologists. Because the platform is trained on pathological diagnoses, it has higher accuracy than previous recognition platforms based on radiological diagnoses alone. As a combination of medicine and engineering, the platform achieved high experimental efficacy. However, because patients' conditions vary widely, its resistance to interference is still insufficient, so it can serve only as an auxiliary tool for radiologists. The combination of AI and medical imaging has produced a synergistic (“1 + 1 > 2”) effect.[5] For the computing field, this is a novel application that broadens the scope and application scenarios of AI, extends AI-driven productivity into a new field, and strengthens the role of computational technology. For medicine, the proposed method substantially improves the efficiency and accuracy of image reading by radiologists, makes better use of imaging data, allows imaging examinations to better support clinical treatment, determines tumor stage accurately, and makes more personalized and precise treatment plans possible. AI nevertheless requires input from professional clinicians and authoritative clinical data; otherwise, its treatment recommendations may be misguided, and mistrust of AI may force doctors to repeat clinical work.

Funding

The study was supported by the National Natural Science Foundation of China (No. 81802888); the Key Research and Development Project of Shandong Province (No. 2018GSF118206; No. 2018GSF118088); and the Natural Science Foundation of Shandong Province (No. ZR2019PF017).

Conflicts of interest

None.

Footnotes

How to cite this article: Wu QY, Liu SL, Sun P, Li Y, Liu GW, Liu SS, Hu JL, Niu TY, Lu Y. Establishment and clinical application value of an automatic diagnosis platform for rectal cancer T-staging based on a deep neural network. Chin Med J 2021;134:821–828. doi: 10.1097/CM9.0000000000001401

References

1. Ferlay J, Soerjomataram I, Dikshit R, Eser S, Mathers C, Rebelo M, et al. Cancer incidence and mortality worldwide: Sources, methods and major patterns in GLOBOCAN 2012. Int J Cancer 2015; 136:E359–E386. doi: 10.1002/ijc.29210.

2. Zheng R, Sun K, Zhang S, Zeng H, Zou X, Chen R, et al. Report of cancer epidemiology in China (in Chinese). Chin J Oncol 2019; 41:19–28. doi: 10.3760/cma.j.issn.0253-3766.2019.01.005.

3. Wang H, Fu C. Value of preoperative accurate staging of rectal cancer and the effect on the treatment strategy choice (in Chinese). Chin J Pract Surg 2014; 34:37–40. doi: 10.3969/j.issn.1674-9316.2016.02.058.

4. Cui L. Selection of surgical methods for low rectal cancer (in Chinese). J Surg Con Pract 2010; 15:96–99. doi: 10.3969/j.issn.1001-5779.2020.04.012.

5. Liu B, He K, Zhi G. The impact of big data and artificial intelligence on the future medical model (in Chinese). J Med Philos 2018; 39:1–4. doi: 10.12014/j.issn.1002-0772.2018.11b.01.

6. Guillem JG, Cohen AM. Current issues in colorectal cancer surgery. Semin Oncol 1999; 26:505–513.

7. Balyasnikova S, Brown G. Optimal imaging strategies for rectal cancer staging and ongoing management. Curr Treat Options Oncol 2016; 17:32. doi: 10.1007/s11864-016-0403-7.

8. Wang X, Gao Y, Li J, Wu J, Wang B, Ma X, et al. Diagnostic accuracy of endoscopic ultrasound, computed tomography, magnetic resonance imaging, and endorectal ultrasonography for detecting lymph node involvement in patients with rectal cancer: a protocol for an overview of systematic reviews. Medicine (Baltimore) 2018; 97:e12899. doi: 10.1097/MD.0000000000012899.

9. Marone P, de Bellis M, D’Angelo V, Delrio P, Passananti V, Di Girolamo E, et al. Role of endoscopic ultrasonography in the loco-regional staging of patients with rectal cancer. World J Gastrointest Endosc 2015; 7:688–701. doi: 10.4253/wjge.v7.i7.688.

10. Edelman BR, Weiser MR. Endorectal ultrasound: its role in the diagnosis and treatment of rectal cancer. Clin Colon Rectal Surg 2008; 21:167–177. doi: 10.1055/s-2008-1080996.

11. Ou Y, Zhang H, Yuan X, Yang C, Zhou C. Value of MRI staging of rectal carcinoma: Compared non-enhancement with enhancement MRI (in Chinese). Chin J Med Imag Tech 2003; 19:585–587. doi: 10.3321/j.issn:1003-3289.2003.05.025.

12. Schmoll HJ, Van Cutsem E, Stein A, Valentini V, Glimelius B, Haustermans K, et al. ESMO Consensus Guidelines for management of patients with colon and rectal cancer. A personalized approach to clinical decision making. Ann Oncol 2012; 23:2479–2516. doi: 10.1093/annonc/mds236.

13. Jhaveri KS, Sadaf A. Role of MRI for staging of rectal cancer. Expert Rev Anticancer Ther 2009; 9:469–481. doi: 10.1586/era.09.13.

14. Xiao Y, Liu S. Artificial intelligence will change the future of imaging medicine (in Chinese). J Technol Finance 2018; 10:11–15. doi: 10.3969/j.issn.2096-4935.2018.10.006.

15. Harangi B. Skin lesion classification with ensembles of deep convolutional neural networks. J Biomed Inform 2018; 86:25–32. doi: 10.1016/j.jbi.2018.08.006.

16. Bejnordi BE, Veta M, van Diest PJ, van Ginneken B, Karssemeijer N, Litjens G, et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. JAMA 2017; 318:2199–2210. doi: 10.1001/jama.2017.14585.

17. Kaur H, Choi H, You YN, Rauch GM, Jensen CT, Hou P, et al. MR imaging for preoperative evaluation of primary rectal cancer: practical considerations. Radiographics 2012; 32:389–409. doi: 10.1148/rg.322115122.

18. Ren S, He K, Girshick R, Sun J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell 2017; 39:1137–1149. doi: 10.1109/TPAMI.2016.2577031.

19. Zhang XM, Zhang HL, Yu D, Dai Y, Bi D, Prince MR, et al. 3-T MRI of rectal carcinoma: preoperative diagnosis, staging, and planning of sphincter-sparing surgery. AJR Am J Roentgenol 2008; 190:1271–1278. doi: 10.2214/AJR.07.2505.

20. Brown G, Daniels IR, Richardson C, Revell P, Peppercorn D, Bourne M. Techniques and trouble-shooting in high spatial resolution thin slice MRI for rectal cancer. Br J Radiol 2005; 78:245–251. doi: 10.1259/bjr/33540239.

21. Zhou YP, Li S, Zhang XX, Zhang ZD, Gao YX, Ding L, et al. High definition MRI rectal lymph node aided diagnostic system based on deep neural network. Chin J Surg 2019; 57:108–113. doi: 10.3760/cma.j.issn.0529-5815.2019.02.007.

22. Lu Y, Yu Q, Gao Y, Zhou Y, Liu G, Dong Q, et al. Identification of metastatic lymph nodes in MR imaging with Faster region-based convolutional neural networks. Cancer Res 2018; 78:5135–5143. doi: 10.1158/0008-5472.CAN-18-0494.

23. Liu Y, Yao H. Re-evaluation of the clinical significance of TNM staging of mid-low rectal cancer (in Chinese). Chin J Gastrointest Surg 2014; 17:530–533. doi: 10.3760/cma.j.issn.1671-0274.2014.06.003.