Abstract

We herein report a study on the intelligent control of microfluidic systems using reinforcement learning. Integrated microvalves are utilized to realize a variety of microfluidic functional modules, such as switching of the flow path, micropumping, and micromixing. The application of artificial intelligence to the control of microvalves can potentially expand the versatility of microfluidic systems. As a preliminary attempt toward this goal, we investigated the application of a reinforcement learning algorithm to microperistaltic pumps. First, we assumed a Markov property for the operation of diaphragms in the microperistaltic pump. Thereafter, the components of the Markov decision process were defined for adaptation to the micropump. To acquire the pumping sequence that maximizes the flow rate, the reward was defined as the flow rate obtained in a state transition of the microvalves. The present system successfully determined, empirically, the optimal sequence, which reflects physical characteristics of the system components that the authors had not recognized. Therefore, it was demonstrated that reinforcement learning can be applied to microperistaltic pumps and is promising for the operation of larger and more complex microsystems.

I. INTRODUCTION

The emergence of deep learning and the development of deep reinforcement learning have made it possible for artificial intelligence (AI) to make decisions in higher-dimensional states and action spaces.1,2 In the field of microfluidics or biochemistry, for example, AI technologies have been applied to flow cytometry3 and the segmentation of cell images.4 In addition, there are applications of flow design, such as determining the channel structure to obtain the desired fluid distribution from an image,5 estimating flow velocity and physical properties by learning droplet shapes,6 and estimating the shape of droplets in emulsions.7 More details of the research examples in these fields are summarized in the following reviews.8,9 Among the existing AI technologies, reinforcement learning is a type of machine learning technique in which the optimal policy to address continuous decision-making problems is learned through trial and error.10 A typical example is an AI that has learned the skills to surpass humans in video games11 and Go.12 In the field of microfluidics, reinforcement learning is applied for microdroplet control on digital microfluidic devices13 and the optimization of microchannel structures.14 Dressler et al. realized super stable droplet generation by applying reinforcement learning for the control of a syringe pump.15 This study is particularly notable because it proves that the application of intelligent control to a microfluidic system can realize the adaptability of the microfluidic system to environmental changes. 
Microvalves are one of the most basic elements in microfluidics and act as switches to turn a flow on or off.16,17 By controlling multiple integrated microvalves, a variety of microfluidic functions have been realized, such as simple switching of the flow path,18 dynamic switching of the task mode,19 micromixing,20 and micropumping.21 Therefore, we consider the application of reinforcement learning to integrated microvalves as a key attempt to potentially realize a more flexible and versatile microfluidic system that is dynamically and autonomously adaptable to environmental changes. Accordingly, we investigated the applicability of reinforcement learning to a microperistaltic pump, which is a functional module comprising minimally integrated microvalves. Specifically, the optimal operation sequence to achieve the maximum flow rate was determined. We assumed that the operation of a microperistaltic pump satisfied the Markov property. Reinforcement learning was applied to the decision-making problem of determining the optimal next state from a given state in a series of actuation sequences. A method that did not require exploration by the agent was employed because the environmental changes in the experimental system were insignificant. In addition, we determined that a simple algorithm would be sufficient for this demonstration and that advanced algorithms such as deep Q-networks were not necessary. First, we modeled and formulated the operation of the pump based on the Markov decision process (MDP). Subsequently, a learning system consisting of a pump, a microscope, and a computer for the calculation processing of reinforcement learning was constructed. The flow rate generated by the diaphragm as it transitions between two phases was sampled using this system. To obtain an actuation sequence for maximizing the flow rate, reinforcement learning was applied using the sampled flow rate as a reward in the MDP. In addition, the flow rates of the obtained sequence and the conventional sequence were compared to evaluate this method.

II. MATERIALS AND METHODS

A. Adaptation of the Markov decision process

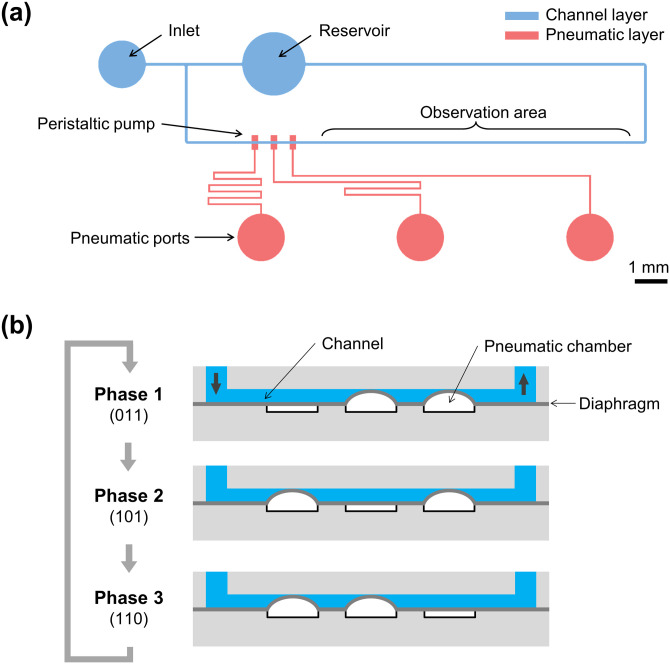

Figure 1(a) shows the structure of a microperistaltic pump. The microchannel extending from both ends of the pump was connected via a reservoir and formed a closed channel. The channel is filled with liquid, and the flow rate is measured by the moving distance of a microbead arranged in the observation area. If the experimental system satisfies the Markov property, it can be formulated as an MDP, and a policy can be acquired based on dynamic programming. The MDP is defined by a state set S, an action set A, a state transition probability function P, and a reward function R. The policy is optimized to maximize the rewards given by the environment for states and actions. This method is effective for a control target that is complicated and variable because the learning is performed based on rewards.

FIG. 1.

(a) Structure of the microperistaltic pump device. (b) Diaphragm actuation sequence of a microperistaltic pump.

Figure 1(b) shows the actuation sequence of the pump. We modeled a microperistaltic pump consisting of three diaphragms and applied reinforcement learning. The open/closed (O/C) states of the diaphragms were represented as logic 0/1. Logic 1 indicates that the pneumatic chamber is pressurized and the diaphragm is raised. Logic 0 indicates that the pneumatic chamber is depressurized and the diaphragm is lowered. There are eight phases in the case of three diaphragms, and the liquid is pumped by sequentially transitioning among these phases. Figure 1(b) shows an example of a three-phase sequence. We assume that the Markov property is satisfied in the system; that is, the flow rate generated by a transition of the diaphragms is determined only by the current phase of the diaphragms and the phase after the transition. Here, the components of the MDP in the microperistaltic pump are defined as follows:

State $s$: Current phase of the diaphragm,

Action $a$: The next phase of the diaphragm, and

Reward $R(s, a)$: The flow rate when acting $a$ in state $s$.

Reward and transition functions have to be defined to acquire the action policy with the use of dynamic programming. The moving distances of the microbeads were sampled for all combinations of $s$ and $a$. Based on the sampled values, the flow rate corresponding to a specific action in a specific state was defined and utilized as the reward function. In this study, the flow rate was defined as the volume of fluid moved per action. The flow rate V was calculated as the product of the moving distance x of the microbead and the cross-sectional area of the microchannel. In addition, the transition function $P(s' \mid s, a)$ (where $s'$ is the state after the transition) in the MDP was omitted because it was assumed that the state transition of the pump is determined only by the current state and the action. It is empirically known that the operation of a diaphragm is not probabilistic; the diaphragm reliably changes to the expected state. Based on this definition, the expression of the value was obtained from the Bellman equation

$$Q(s, a) = R(s, a) + \gamma \max_{a'} Q(s', a') \tag{1}$$

Here, $\gamma$ is the discount rate and $a'$ is the action in state $s'$.

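The algorithm is as follows:

1. The value function $Q(s, a)$ was initialized to 0 for all states and actions.

2. $Q'(s, a)$ was updated for all states and actions using the following formula:

$$Q'(s, a) = R(s, a) + \gamma \max_{a'} Q(s', a'), \tag{2}$$

where $Q'$ is the value function after updating.

3. If a convergence condition

$$\max_{s, a} \left| Q'(s, a) - Q(s, a) \right| < \varepsilon \tag{3}$$

is satisfied, where $\varepsilon$ is the convergence threshold, obtain the policy by

$$\pi(s) = \arg\max_{a} Q'(s, a) \tag{4}$$

and end the program. Otherwise, execute

$$Q(s, a) \leftarrow Q'(s, a) \tag{5}$$

and go to step 2.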
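The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' program: it uses the bead displacements of Table I directly as the reward (the paper multiplies them by the channel cross-sectional area, a positive constant that does not change the greedy policy), and the names `value_iteration`, `eps`, and `max_iter`, as well as the threshold value, are assumptions made for the example. Because the transition is deterministic, the next state is simply the chosen action.

```python
# Bead displacements from Table I (um). Row = current state s, column = action a
# (the next state). State k corresponds to the 3-bit pattern of k, e.g. 6 -> (1,1,0).
R = [
    [2.1, 91.2, 75.5, 139.9, 109.9, 157.4, 216.3, 249.1],
    [-57.1, -1.0, -225.9, -3.4, -180.6, -3.4, -136.6, -5.4],
    [-40.2, 204.4, -3.1, 202.7, -155.2, 203.0, 8.8, 202.7],
    [-88.7, 2.0, -239.2, -0.6, -408.6, -0.8, -224.7, -3.3],
    [-45.4, 165.1, 168.7, 339.2, -2.1, 161.8, 181.5, 346.1],
    [-83.7, 2.0, -169.6, 0.1, -164.1, -0.3, 24.8, 96.0],
    [-100.0, 208.2, 2.7, 206.2, -177.9, 207.6, -0.4, 204.0],
    [-117.0, 4.2, -188.4, 1.7, -350.8, 1.7, -164.9, -0.7],
]


def value_iteration(R, gamma, eps=1e-6, max_iter=100_000):
    """Return (Q, policy, iterations) for a deterministic MDP in which s' = a."""
    n = len(R)
    Q = [[0.0] * n for _ in range(n)]  # step 1: initialize Q(s, a) to 0
    for k in range(1, max_iter + 1):
        # step 2: Q'(s, a) = R(s, a) + gamma * max_a' Q(s', a'), with s' = a
        best = [max(row) for row in Q]
        Q_new = [[R[s][a] + gamma * best[a] for a in range(n)] for s in range(n)]
        residual = max(abs(Q_new[s][a] - Q[s][a]) for s in range(n) for a in range(n))
        Q = Q_new  # Q <- Q' (the assignment of the "otherwise" branch)
        if residual < eps:  # step 3: convergence condition
            break
    # greedy policy: pi(s) = argmax_a Q(s, a)
    policy = [max(range(n), key=lambda a: Q[s][a]) for s in range(n)]
    return Q, policy, k


Q, policy, n_iter = value_iteration(R, gamma=0.90)
for s, a in enumerate(policy):
    print(f"state {s:03b} -> next state {a:03b}")
```

Running the sketch with a discount rate of 0.90 prints one preferred next state per current state; the absolute Q-values differ from those of a volume-based reward by the constant area factor only.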

B. Design and fabrication of the device

A polydimethylsiloxane (PDMS)-based device was fabricated using soft lithography.22 A negative photoresist (SU-8 3050; Nippon Kayaku Co., Ltd., Japan) was spin coated on a silicon wafer and soft baked at 95 °C for 30 min. The thickness of the photoresist layer was 28.5 μm. Then, the wafer was exposed to UV light at 250 mJ/cm² through a photomask printed with the channel pattern. It was post-baked at 95 °C for 5 min and then developed. To seal the diaphragm, a modeling wax (file-A-wax) with a thickness of 30 μm and a width of 100 μm was placed on the diaphragm part of the channel layer. The substrate was heated on a hot plate at 130 °C for 10 s to shape the cross section of the flow channel into an arc. After mold fabrication, a silicone rubber sheet with a thickness of 2 mm was attached to the mold as a spacer to adjust the device thickness. The PDMS base and curing agent (SYLGARD 184; The Dow Chemical Co., USA) were mixed at a ratio of 10:1 (w:w) and applied onto the mold. It was cured by heating at 75 °C for 90 min. The PDMS was peeled from the mold and cut to its outer shape, and its chambers were punched out. The vent hole connected to the reaction chamber was punched out using an 18-gauge syringe needle. The diaphragm was made by spin coating PDMS mixed at a ratio of 10:0.7 onto a silicon wafer to a thickness of 23.7 μm. The diaphragm and the pneumatic layer were treated with vacuum plasma and bonded by heating on a hot plate at 75 °C for 15 min. This layer and the channel layer were bonded using a similar method.

C. Design of the learning system

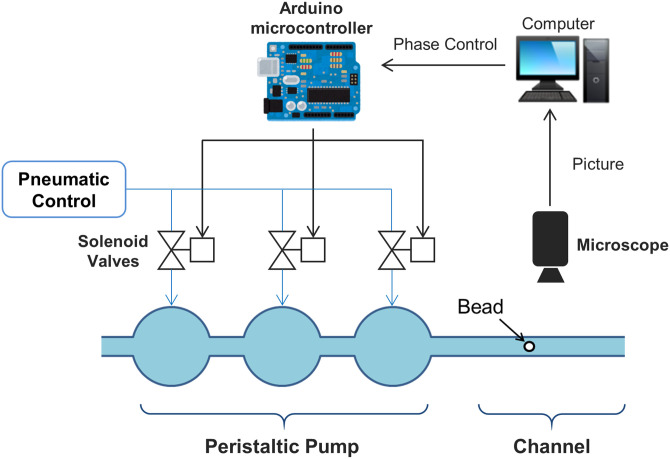

Figure 2 shows the learning system. The system consists of a microperistaltic pump, pneumatic control, a solenoid valve (USG3-6-1-DC12V; CKD Corporation, Japan) for driving the diaphragm, an Arduino microcontroller (Arduino Uno, Arduino SRL, Italy) for operating the valve, a computer for executing the reinforcement learning program, a microscope for observing the beads, and a CMOS (complementary metal–oxide–semiconductor) camera (VC-320; Gazo Co., Ltd.). The device was connected to a pneumatic chamber and a diaphragm pump to control the air pressure via a solenoid valve. The solenoid valve was driven by the control signal of an Arduino microcontroller. The pressure applied to the pneumatic chamber was 50 kPa. The channel of the device was filled with Tween20 (170-6531; Bio-Laboratories, Inc., USA) diluted to 0.05% (v/v) in Milli-Q water. Then, a 20 μm polystyrene bead (No. 18329; Polysciences Inc., USA) was placed in the observation area of the device. Images were captured by using a CMOS camera attached to a microscope connected to a computer, and the positions of the beads were measured in real time by extracting the outline of the beads using OpenCV 4.0. The observation area was fixed during the experiment because the relative position of the microfluidic device and microscope was also fixed. The learning algorithm was executed on the computer by utilizing the sampled moving distance of the microbead. Then, the pumping sequence was implemented by the Arduino microcontroller based on the calculated result.

FIG. 2.

Structure of the learning system.

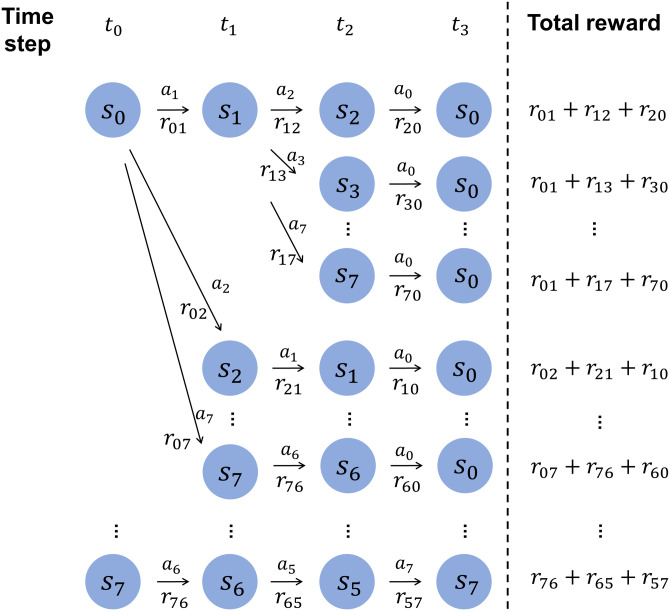

D. Sampling and simulating method of the flow rate

In the flow rate sampling process, the diaphragm was first shifted to (000) to reset the state. Subsequently, the diaphragm was transitioned to state $s$, and the bead position was measured. Thereafter, the state was transitioned to $s'$, and the bead position was measured in the same way. The volume of fluid that had moved was calculated from the difference between the bead positions measured before and after the transition and the cross-sectional area of the flow channel. Thus, the flow rate generated when the action $a$ was taken in the state $s$ could be sampled. The flow rates were sampled for all combinations of $s$ and $a$ and used as the reward function $R(s, a)$. For the evaluation of the obtained sequences, we compared the flow rates measured in experiments and simulations. Figure 3 shows a tree diagram of the state transitions for each sequence. The flow rate generated by the pump was determined based on the combination of state transitions. Also, the flow rate generated in any sequence can be simulated by summing all the sampled flow rates in the series of transitions. Here, the state transition frequency was sufficiently slow compared to the opening and closing behavior of the diaphragm. Therefore, we assumed that the sum of the moving distances of the microbeads could be approximated as the flow rate. For example, in the case of a three-phase sequence of (011)(101)(110), the flow rate generated per circulation can be obtained by summing the flow rate generated by three transitions of (011) → (101), (101) → (110), and (110) → (011).
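The summation described above can be illustrated with a short Python sketch. It is only an example under stated assumptions: the table holds the bead displacements of Table I (in μm) rather than volumes, and the helper name `simulate_cycle` is hypothetical. A phase written as a bit string such as "011" is mapped to the row or column index by reading it as a binary number.

```python
# Bead displacements from Table I (um); R[s][a] is the displacement sampled for
# the transition from state s to next state a.
R = [
    [2.1, 91.2, 75.5, 139.9, 109.9, 157.4, 216.3, 249.1],
    [-57.1, -1.0, -225.9, -3.4, -180.6, -3.4, -136.6, -5.4],
    [-40.2, 204.4, -3.1, 202.7, -155.2, 203.0, 8.8, 202.7],
    [-88.7, 2.0, -239.2, -0.6, -408.6, -0.8, -224.7, -3.3],
    [-45.4, 165.1, 168.7, 339.2, -2.1, 161.8, 181.5, 346.1],
    [-83.7, 2.0, -169.6, 0.1, -164.1, -0.3, 24.8, 96.0],
    [-100.0, 208.2, 2.7, 206.2, -177.9, 207.6, -0.4, 204.0],
    [-117.0, 4.2, -188.4, 1.7, -350.8, 1.7, -164.9, -0.7],
]


def simulate_cycle(sequence, R):
    """Sum the sampled displacements over one circulation of a phase sequence.

    Returns (total displacement per cycle, mean displacement per transition).
    """
    idx = [int(phase, 2) for phase in sequence]  # "011" -> 3
    # Pair each phase with the following one, wrapping around to close the cycle.
    transitions = zip(idx, idx[1:] + idx[:1])
    total = sum(R[s][a] for s, a in transitions)
    return total, total / len(idx)


total_conv, mean_conv = simulate_cycle(["011", "101", "110"], R)
total_rl, mean_rl = simulate_cycle(["000", "110", "001"], R)
print(f"(011)(101)(110): {total_conv:.1f} um per cycle, {mean_conv:.1f} um per transition")
print(f"(000)(110)(001): {total_rl:.1f} um per cycle, {mean_rl:.1f} um per transition")
```

Multiplying these displacements by the cross-sectional area of the channel would give the simulated volume per cycle.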

FIG. 3.

Method for the flow rate estimation of a three-phase pumping sequence. Here, the subscripts indicate the states of the diaphragms. For example, the subscript 011 indicates state (0, 1, 1), and a reward with the subscripts 000 and 010 indicates the reward obtained when the transition from (0, 0, 0) to (0, 1, 0) is carried out.

III. RESULTS AND DISCUSSION

The flow rate generated by the transition between the two states was sampled by the system. Table I lists the sampled moving distances of the microbeads. The actuation sequence was calculated by the reinforcement learning algorithm using the sampled flow rate as a reward function. The total time required for the learning process is calculated as the sum of the time required for sampling and the time required by the computer for calculation. The sampling took approximately 10 min, and the calculation took up to 10 s on a general laptop PC (Intel Core i3-5005U, 12 GB RAM). When the discount rate was set lower, future rewards were valued less, so that short-term rewards were favored and the converged sequence became shorter. Here, the total flow rate obtained over a longer series of transitions is of more significance than the flow rate obtained in a single state transition. Because a higher discount rate takes a longer series of transitions into account, the discount rate should be set close to 1. Thus, it is important to set a suitable parameter to obtain the target output.

TABLE I.

Moving distances of the microbead corresponding to the state transition of the diaphragms. Unit: (μm).

| State index | State / Action (next state) | 0: (0,0,0) | 1: (0,0,1) | 2: (0,1,0) | 3: (0,1,1) | 4: (1,0,0) | 5: (1,0,1) | 6: (1,1,0) | 7: (1,1,1) |
|---|---|---|---|---|---|---|---|---|---|
| 0 | (0,0,0) | 2.1 | 91.2 | 75.5 | 139.9 | 109.9 | 157.4 | 216.3 | 249.1 |
| 1 | (0,0,1) | −57.1 | −1.0 | −225.9 | −3.4 | −180.6 | −3.4 | −136.6 | −5.4 |
| 2 | (0,1,0) | −40.2 | 204.4 | −3.1 | 202.7 | −155.2 | 203.0 | 8.8 | 202.7 |
| 3 | (0,1,1) | −88.7 | 2.0 | −239.2 | −0.6 | −408.6 | −0.8 | −224.7 | −3.3 |
| 4 | (1,0,0) | −45.4 | 165.1 | 168.7 | 339.2 | −2.1 | 161.8 | 181.5 | 346.1 |
| 5 | (1,0,1) | −83.7 | 2.0 | −169.6 | 0.1 | −164.1 | −0.3 | 24.8 | 96.0 |
| 6 | (1,1,0) | −100.0 | 208.2 | 2.7 | 206.2 | −177.9 | 207.6 | −0.4 | 204.0 |
| 7 | (1,1,1) | −117.0 | 4.2 | −188.4 | 1.7 | −350.8 | 1.7 | −164.9 | −0.7 |

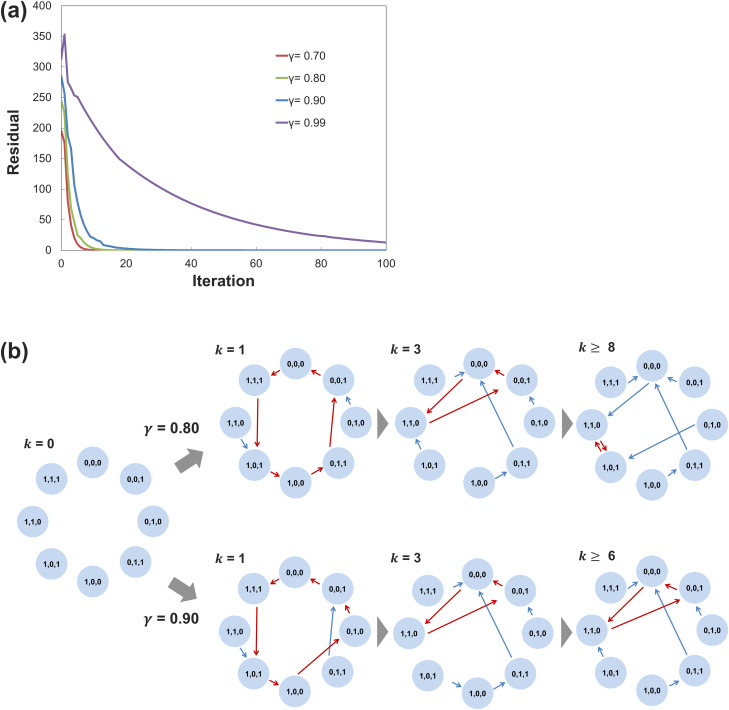

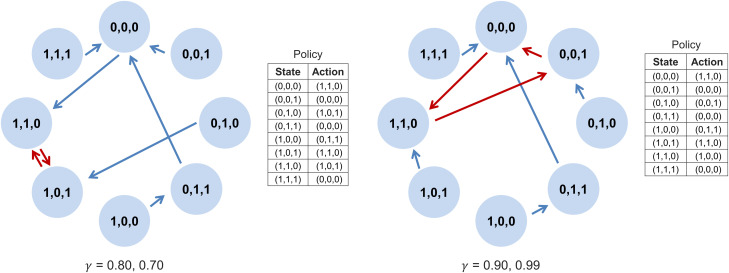

Figure 4(a) shows the maximum residual in each iteration at certain discount rates. At the discount rate , the convergence condition was achieved within 100 iterations. Here, the convergence threshold is set sufficiently small compared to the mean of the absolute value of the rewards. At , the convergence condition was not reached by 100 iterations, but it was achieved at approximately 700 iterations. Figure 4(b) shows the progress of policy convergence at . The policy converged within a few iterations. The arrows in the figure show the direction of the transitions to be followed under the policy. Figure 5 shows the acquired policies at each discount rate. Regardless of which state was selected as the initial state, the operation converged to the cycle (110)(101) or (000)(110)(001) by executing the actions that follow the policy. The cases of and converged to the same policy, respectively. Table II lists the Q-values when γ = 0.90. The agent executed the action with the maximum value in each state. Here, the highest amount of bead movement was achieved in the transition (100)(011). However, the converged sequence did not include this transition because there was an operation sequence that had a higher total bead moving distance than the sequences containing (100)(011).
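How a policy leads every initial state into one repeating cycle, and how the iteration count grows with the discount rate, can be checked with the following Python sketch. It is an illustration under assumptions rather than the authors' code: the reward is the bead displacement of Table I, the threshold `eps = 1e-3` and the discount rates 0.50, 0.90, and 0.99 are chosen here for demonstration only, and the helper names are hypothetical.

```python
# Bead displacements from Table I (um); row = state s, column = action a (next state).
R = [
    [2.1, 91.2, 75.5, 139.9, 109.9, 157.4, 216.3, 249.1],
    [-57.1, -1.0, -225.9, -3.4, -180.6, -3.4, -136.6, -5.4],
    [-40.2, 204.4, -3.1, 202.7, -155.2, 203.0, 8.8, 202.7],
    [-88.7, 2.0, -239.2, -0.6, -408.6, -0.8, -224.7, -3.3],
    [-45.4, 165.1, 168.7, 339.2, -2.1, 161.8, 181.5, 346.1],
    [-83.7, 2.0, -169.6, 0.1, -164.1, -0.3, 24.8, 96.0],
    [-100.0, 208.2, 2.7, 206.2, -177.9, 207.6, -0.4, 204.0],
    [-117.0, 4.2, -188.4, 1.7, -350.8, 1.7, -164.9, -0.7],
]


def value_iteration(R, gamma, eps=1e-3, max_iter=100_000):
    """Return (greedy policy, iterations used); transitions are deterministic (s' = a)."""
    n = len(R)
    Q = [[0.0] * n for _ in range(n)]
    for k in range(1, max_iter + 1):
        best = [max(row) for row in Q]
        Q_new = [[R[s][a] + gamma * best[a] for a in range(n)] for s in range(n)]
        residual = max(abs(Q_new[s][a] - Q[s][a]) for s in range(n) for a in range(n))
        Q = Q_new
        if residual < eps:
            break
    policy = [max(range(n), key=lambda a: Q[s][a]) for s in range(n)]
    return policy, k


def limit_cycle(policy, start):
    """Follow the policy from `start` until a state repeats; return the repeating part."""
    first_seen, path, s = {}, [], start
    while s not in first_seen:
        first_seen[s] = len(path)
        path.append(s)
        s = policy[s]
    cycle = path[first_seen[s]:]
    i = cycle.index(min(cycle))  # rotate so that equal cycles compare equal
    return cycle[i:] + cycle[:i]


policy, _ = value_iteration(R, gamma=0.90)
cycles = {start: limit_cycle(policy, start) for start in range(len(R))}
iters = {g: value_iteration(R, gamma=g)[1] for g in (0.50, 0.90, 0.99)}

for start, cycle in cycles.items():
    print(f"start {start:03b}: cycle " + "".join(f"({s:03b})" for s in cycle))
print("iterations to converge:", iters)
```

The residual of value iteration shrinks roughly by a factor of the discount rate per iteration, which is why discount rates closer to 1 need many more iterations to meet the same threshold.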

FIG. 4.

(a) Residuals of the value function $Q$. (b) Progress of policy convergence at different discount rates ($\gamma$) from the initial state. k indicates the number of iterations. The circle indicates the state of the pump, and the arrow indicates the action (the state to be changed next) given by the policy. When transitioning from any initial state according to the policy, it cycles through two or three states (red arrow).

FIG. 5.

Calculated policy function π (s).

TABLE II.

Q-values when converged at γ = 0.90.

| State index | State / Action (next state) | 0: (0,0,0) | 1: (0,0,1) | 2: (0,1,0) | 3: (0,1,1) | 4: (1,0,0) | 5: (1,0,1) | 6: (1,1,0) | 7: (1,1,1) |
|---|---|---|---|---|---|---|---|---|---|
| 0 | (0,0,0) | 840.6 | 783.3 | 830.4 | 797.6 | 922.4 | 828.5 | 932.3 | 856.8 |
| 1 | (0,0,1) | 798.7 | 718.1 | 617.3 | 696.3 | 717.0 | 714.8 | 682.8 | 676.9 |
| 2 | (0,1,0) | 810.7 | 863.3 | 774.8 | 842.0 | 735.0 | 860.7 | 785.6 | 824.0 |
| 3 | (0,1,1) | 776.4 | 720.2 | 607.9 | 698.3 | 555.8 | 716.6 | 620.5 | 678.4 |
| 4 | (1,0,0) | 807.0 | 835.5 | 896.3 | 938.5 | 843.2 | 831.6 | 907.7 | 925.4 |
| 5 | (1,0,1) | 779.9 | 720.2 | 657.1 | 698.8 | 728.7 | 717.0 | 796.9 | 748.6 |
| 6 | (1,1,0) | 768.4 | 866.0 | 778.9 | 844.5 | 718.9 | 864.0 | 779.1 | 824.9 |
| 7 | (1,1,1) | 756.4 | 721.8 | 643.8 | 699.9 | 596.7 | 718.4 | 662.8 | 680.2 |

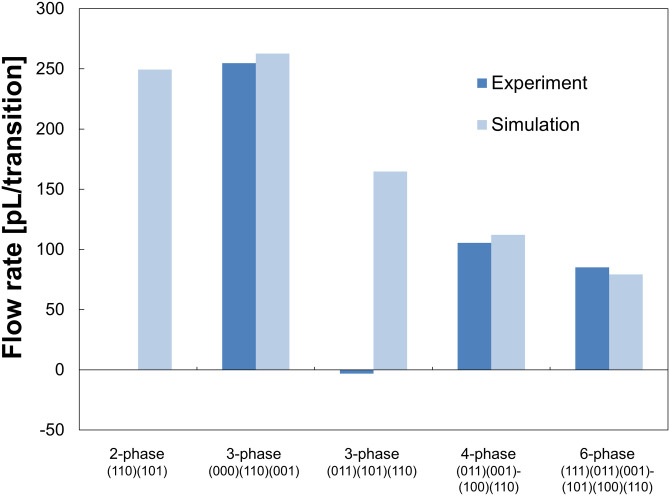

To evaluate the flow rate of the acquired sequence, it was compared with the flow rates of the conventional actuation sequences. Figure 6 shows the measured and simulated flow rates for each sequence. The mean flow rate per transition in each sequence can be estimated by summing up the flow rates occurring in each transition in one cycle and dividing by the phase number of the sequence. The flow rate of the sequence (000)(110)(001) obtained by reinforcement learning increased twofold compared to the conventional four- or six-phase sequence.23 In addition, the predicted and measured values were in good agreement. For the sequence (110)(101), which converged at , and for the conventional three-phase sequence (011)(101)(110), the simulated and measured values show significant inconsistency. In the case of the two-phase sequence (110)(101), it was evident that no flow occurred because the left diaphragm was always closed. This is due to the speed difference between the opening and closing behavior of the diaphragms, as explained below.

FIG. 6.

Comparison of simulation and experiment flow rate with each actuation sequence. These flow rates indicate the averaged flow rate per single transition, which is calculated from the measured total flow rate with a transition frequency of 3 Hz.

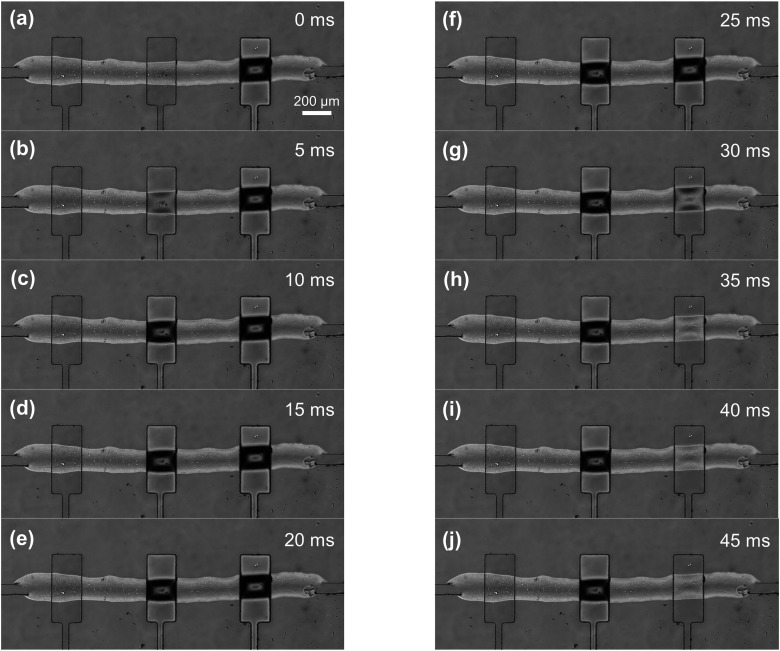

Figure 7 shows the observed results along with the transition process of diaphragms. The center diaphragm was closed approximately 15 ms after the start of the transition. On the contrary, the diaphragm on the right released the seal of the channel in 30 ms. Thereafter, the diaphragm was completely opened after 45 ms. This difference in the opening and closing speeds of the diaphragm was mainly caused by the difference in response speed of the solenoid valve that controls the pressure of the diaphragm. Another impact is that the movement of the diaphragm to the rest position depends on the elasticity of PDMS.21 For the convenience of the discussion, we define the physical state of the diaphragms corresponding to the numerical state (110) as [CCO] (referring to closed, closed, open). In addition, if the state is indicated as [CcC], the small letter “c” indicates the inconsistency between the numerical state and the physical state of the center diaphragm. Based on the observed behavior of the diaphragm, it was determined that the physical transition of the obtained two-phase sequence (110)(101) was [CCO][CcC]. The reason why the center diaphragm is indicated with “c” in the second [CcC] is that the outer diaphragms closed first. Therefore, the fluid was not supplied, and the physically closed state of the center diaphragm was always maintained.

FIG. 7.

Step-by-step observation of the transition of the diaphragm from (001) to (010). (a) Initial state (001). (b)–(e) Center diaphragm closes and the state of the diaphragms becomes (011). (f)–(j) Right diaphragm opens.

When the flow rate was sampled, (110)(101) became [CCO][CcC], in which the deformation of the right diaphragm contributes to pumping. Additionally, under sampling, (101)(110) became [COC][CCO], an operation in which the numerical transition and the physical transition agree. In this transition, the deformations of the center diaphragm and the right diaphragm canceled each other, and pumping did not occur, whereas a backward flow of the liquid occurred in the actual pumping experiment. Numerically, a high value was obtained by switching back and forth between these states, which is considered to be why the sequence converged to this cycle. Convergence to the two-phase sequence was caused by the delayed behavior of the solenoid valve; therefore, this problem can be solved by replacing the solenoid valve with one that has a smaller difference between its opening and closing speeds. However, it is sometimes difficult to eliminate the difference between the real environment and the modeled learning system. To avoid this, it is important to employ an algorithm that explores the real environment. In contrast, when the sequence converged to (000)(110)(001), the maximum flow rate was obtained (Figs. 4 and 6). The transition (110)(001) is included in this sequence; if the physical transition and the numerical transition perfectly coincide, it becomes [CCO][OOC], so a significant flow rate is not likely to be obtained. However, owing to the difference in the opening/closing speed of the diaphragm, the transition became [CCO][ccC][OOC], and the deformation of the right diaphragm contributed to pumping. Therefore, it can be interpreted that the learning system had empirically found the usefulness of this transition. Additionally, this sequence has another unique characteristic in that it includes the phase (000), which is not typically used. The device has a closed flow path; in other words, no difference in the head pressure exists between both ends of the pump. 
Consequently, backflow does not occur even when all the diaphragms are released. Thus, the transition to (000) does not adversely affect pumping. This shows that a sequence considering the characteristics of the system was obtained.

Moreover, it was confirmed that the flow rate of the conventional three-phase sequence (011)(101)(110) was extremely low when compared with the other sequences. This fact indicates a significant inconsistency with the simulation. This inconsistency was caused by the following process. First, at the time of the transition of (011)(101), the physical transition became [OCC][CcC][CcC] owing to the difference in the opening/closing speed of the diaphragm, indicating that the center diaphragm is always closed. Thereafter, as a result of the transition of (101)(110), the fluid did not move from the center to the right chamber, and the right diaphragm flowed backward from the right channel. Thus, owing to the inconsistency between the numerical state and the physical state due to the past operation history, it is presumed that a flow rate consistent with the simulation was not obtained in the conventional three-phase sequence. Conversely, from Fig. 6, it is confirmed that the simulated and measured values of the three sequences, which were not affected by the delay of the diaphragm operation, agree well with each other.

The flow rates for all three-phase sequence combinations were simulated. It was confirmed that the acquired sequence had the highest flow rate of all the three-phase sequences and converged to the optimal solution. These results show that reinforcement learning can be applied to the operation of the pump by assuming a Markov property for the operation of the microperistaltic pump and formulating it based on the MDP. Additionally, the use of reinforcement learning is expected to improve the adaptability of the system to environmental changes. An additional experiment (refer to the supplementary material) was conducted to demonstrate this adaptation capability. In this experiment, the environment was changed by replacing the solenoid valves in the middle of the learning process. We observed that agents adapt to environmental changes by changing their policies. In addition, this method can be applied to several microvalves if the reward function is defined by the sampled flow rate. However, it is expected that the result of the calculation sometimes may not converge to an optimal behavior due to violation of the Markov property, as demonstrated in this study. To obtain optimal results in a large-scale integrated system, the application of a method that explores the environment is speculated to be effective because, in the real environment, the reward (amount of bead movement) from the transition (110)(101) is reduced. The agent would then estimate the value of this action to be low, which prevents convergence to the above sequence.
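The exhaustive comparison of three-phase sequences mentioned at the start of this paragraph can be sketched as follows. This is a hedged illustration, not the authors' simulation: it assumes that a three-phase sequence consists of three distinct phases, uses the bead displacements of Table I as the per-transition flow, and the function name `best_three_phase` is hypothetical.

```python
from itertools import permutations

# Bead displacements from Table I (um); row = state s, column = action a (next state).
R = [
    [2.1, 91.2, 75.5, 139.9, 109.9, 157.4, 216.3, 249.1],
    [-57.1, -1.0, -225.9, -3.4, -180.6, -3.4, -136.6, -5.4],
    [-40.2, 204.4, -3.1, 202.7, -155.2, 203.0, 8.8, 202.7],
    [-88.7, 2.0, -239.2, -0.6, -408.6, -0.8, -224.7, -3.3],
    [-45.4, 165.1, 168.7, 339.2, -2.1, 161.8, 181.5, 346.1],
    [-83.7, 2.0, -169.6, 0.1, -164.1, -0.3, 24.8, 96.0],
    [-100.0, 208.2, 2.7, 206.2, -177.9, 207.6, -0.4, 204.0],
    [-117.0, 4.2, -188.4, 1.7, -350.8, 1.7, -164.9, -0.7],
]


def best_three_phase(R):
    """Enumerate all cycles of three distinct phases and return the best one.

    Returns (sequence as a tuple of state indices, total displacement per cycle).
    """
    best_seq, best_total = None, float("-inf")
    for seq in permutations(range(len(R)), 3):
        if seq[0] != min(seq):
            continue  # skip rotations of a cycle that has already been counted
        s0, s1, s2 = seq
        total = R[s0][s1] + R[s1][s2] + R[s2][s0]
        if total > best_total:
            best_seq, best_total = seq, total
    return best_seq, best_total


best_seq, best_total = best_three_phase(R)
print("best three-phase sequence:", "".join(f"({s:03b})" for s in best_seq))
print(f"displacement per cycle: {best_total:.1f} um")
```

With only eight phases the search covers 112 distinct cycles, so brute force is cheap here; the search space grows rapidly with the number of valves and the sequence length, which is where a learning-based approach becomes relevant.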

A. Future outlook

The method proposed in this study can be extended to a larger-scale integrated microfluidic system by extending the state-action space and by defining the reward function. This means that the system can be scaled by changing the reward function itself according to the purpose. For example, physical scale expansion, such as an increase in the number of valves, can be realized by redefining the reward function according to the number of valves in the system. In addition to simple pumping, more complex functions can be realized by the aforementioned extension. In particular, the acquisition of pumping sequences optimized for lower pulsation and the micromanipulation of small objects in a microfluidic system can be realized by the proper design of the reward function. Moreover, versatile and scalable microfluidic systems with highly integrated microvalves are realized using a multi-layered microfluidic chip.24,25 Additionally, a microfluidic system that adapts its behavior to changes in the environment in real time can be realized by employing an algorithm that includes exploration. By applying the proposed method to these integrated microvalves, a flexible microfluidic system that can autonomously adapt its behavior to a variety of tasks becomes possible. On the other hand, the increase in the complexity of the system and in exploration results in an increase in the learning cost; therefore, the learning efficiency must be improved. Simulation is effective for ensuring efficient learning and efficient development of a real environment. However, differences between the real and virtual environments sometimes make it difficult to train the agent properly, as reported in this paper.

IV. CONCLUSION

In this study, we assumed that the operation of a microperistaltic pump satisfies the Markov property and applied reinforcement learning to the continuous decision-making process of the optimal phase in a microperistaltic pump. We measured the flow rates generated from all combinations of transitions between the two states of the system. Then, reinforcement learning was applied to the system with the flow rate as a reward value. Convergence and acquired learning sequences at several discount rates were discussed. As a result of reinforcement learning, the agent obtained a three-phase sequence of (000)(110)(001). The flow rate of this sequence was twice that of the conventional sequence. Additionally, an analysis of the operating characteristics of the diaphragm was performed. It was confirmed that the acquired sequence selected the optimal phase transition considering the unique operating characteristics. These results demonstrate the effectiveness of reinforcement learning in optimizing the actuation sequence. Reinforcement learning is effective for realizing intelligent control that takes the physical characteristics of the system into account. In addition, it can extend its applications to the ever-changing environmental conditions of the microfluidic platform. In this study, a relatively simple task was chosen because the purpose of this study was to demonstrate the application of reinforcement learning to a microvalve on a device. Thus, for a simple task such as that in this study, one need only calculate all the combinations. However, in the application to a more complex system, the environmental adaptation capability using reinforcement learning contributes to the stable operation of the system. Adaptation to environmental changes using reinforcement learning has already been demonstrated by Dressler et al.15 In addition, to demonstrate the adaptation capability to environmental changes, we conducted an additional experiment. This indicates that reinforcement learning is capable of responding to environmental changes. Therefore, we believe that this is an important attempt to demonstrate the concept of applying reinforcement learning to small-scale devices that integrate microvalves. However, as shown in this study, the state transition affected by the operation delay of the diaphragm was included in the acquired sequence. Consequently, there was a significant inconsistency between the simulated and measured values. Hence, these facts indicate that the assumption that the flow rate generated by each transition is determined only by the current state and the state after the transition is not fully satisfied. Therefore, for some transitions, the influence of this delay must be taken into account, for example, by adding the past state to the variables of the reward function. In addition, it is hypothesized that this problem can be solved using an algorithm that explores the real environment.

SUPPLEMENTARY MATERIAL

See the supplementary material for the result of the additional experiment when the environment was changed.

DATA AVAILABILITY

The data that support the findings of this study are available from the corresponding author upon reasonable request.

REFERENCES

- 1. Arulkumaran K., Deisenroth M. P., Brundage M., and Bharath A. A., “Deep reinforcement learning: A brief survey,” IEEE Signal Process. Mag. 34(6), 26–38 (2017). doi:10.1109/MSP.2017.2743240

- 2. Henderson P., Islam R., Bachman P., Pineau J., Precup D., and Meger D., “Deep reinforcement learning that matters,” in Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI, 2018).

- 3. Chen C. L., Mahjoubfar A., Tai L.-C., Blaby I. K., Huang A., Niazi K. R., and Jalali B., “Deep learning in label-free cell classification,” Sci. Rep. 6, 21471 (2016). doi:10.1038/srep21471

- 4. Valen D. A. V., Kudo T., Lane K. M., Macklin D. N., Quach N. T., DeFelice M. M., Maayan I., Tanouchi Y., Ashley E. A., and Covert M. W., “Deep learning automates the quantitative analysis of individual cells in live-cell imaging experiments,” PLoS Comput. Biol. 12(11), e1005177 (2016). doi:10.1371/journal.pcbi.1005177

- 5. Stoecklein D., Lore K. G., Davies M., Sarkar S., and Ganapathysubramanian B., “Deep learning for flow sculpting insights into efficient learning using scientific simulation data,” Sci. Rep. 7, 46368 (2017). doi:10.1038/srep46368

- 6. Hadikhani P., Borhani N., Mohammad S., Hashemi H., and Psaltis D., “Learning from droplet flows in microfluidic channels using deep neural networks,” Sci. Rep. 9, 8114 (2019). doi:10.1038/s41598-019-44556-x

- 7. Khor J. W., Jean N., Luxenberg E. S., Ermon S., and Tang S. K. Y., “Using machine learning to discover shape descriptors for predicting emulsion stability in a microfluidic channel,” Soft Matter 15(6), 1361–1372 (2019). doi:10.1039/C8SM02054J

- 8. Galan E. A., Zhao H., Wang X., Dai Q., Huck W. T. S., and Ma S., “Intelligent microfluidics: The convergence of machine learning and microfluidics in materials science and biomedicine,” Matter 3(6), 1893–1922 (2020). doi:10.1016/j.matt.2020.08.034

- 9. Riordon J., Sovilj D., Sanner S., Sinton D., and Young E. W. K., “Deep learning with microfluidics for biotechnology,” Trends Biotechnol. 37(3), 310–324 (2019). doi:10.1016/j.tibtech.2018.08.005

- 10. Sutton R. S. and Barto A. G., Reinforcement Learning: An Introduction (MIT Press, 1998).

- 11. Mnih V., Kavukcuoglu K., Silver D., Rusu A. A., Veness J., Bellemare M. G., Graves A., Riedmiller M., Fidjeland A. K., Ostrovski G., Petersen S., Beattie C., Sadik A., Antonoglou I., King H., Kumaran D., Wierstra D., Legg S., and Hassabis D., “Human-level control through deep reinforcement learning,” Nature 518, 529–533 (2015). doi:10.1038/nature14236

- 12. Silver D., Huang A., Maddison C. J., Guez A., Sifre L., Driessche G. V. D., Schrittwieser J., Antonoglou I., Panneershelvam V., Lanctot M., Dieleman S., Grewe D., Nham J., Kalchbrenner N., Sutskever I., Lillicrap T., Leach M., Kavukcuoglu K., Graepel T., and Hassabis D., “Mastering the game of Go with deep neural networks and tree search,” Nature 529, 484–489 (2016). doi:10.1038/nature16961

- 13. Liang T.-C., Zhong Z., Bigdeli Y., Ho T.-Y., Chakrabarty K., and Fair R., “Adaptive droplet routing in digital microfluidic biochips using deep reinforcement learning,” Proc. Mach. Learn. Res. 119, 6050–6060 (2020).

- 14. Lee X. Y., Balu A., Stoecklein D., Ganapathysubramanian B., and Sarkar S., “A case study of deep reinforcement learning for engineering design: Application to microfluidic devices for flow sculpting,” J. Mech. Des. 141(11), 111401 (2019). doi:10.1115/1.4044397

- 15. Dressler O. J., Howes P. D., Choo J., and deMello A. J., “Reinforcement learning for dynamic microfluidic control,” ACS Omega 3(8), 10084–10091 (2018). doi:10.1021/acsomega.8b01485

- 16. Lau A. T. H., Yip H. M., Ng K. C. C., Cui X., and Lam R. H. W., “Dynamics of microvalve operations in integrated microfluidics,” Micromachines 5(1), 50–65 (2014). doi:10.3390/mi5010050

- 17. Qian J.-Y., Hou C.-W., Li X.-J., and Jin Z.-J., “Actuation mechanism of microvalves: A review,” Micromachines 11(2), 172 (2020). doi:10.3390/mi11020172

- 18. Chen Y., Tian Y., Xu Z., Wang X., Yu S., and Dong L., “Microfluidic droplet sorting using integrated bilayer micro-valves,” Appl. Phys. Lett. 109, 143510 (2016). doi:10.1063/1.4964644

- 19. Nguyen T. V., Duncan P. N., Ahrar S., and Hui E. E., “Semi-autonomous liquid handling via on-chip pneumatic digital logic,” Lab Chip 12, 3991–3994 (2012). doi:10.1039/c2lc40466d

- 20. Hong J. W., Studer V., Hang G., Anderson W. F., and Quake S. R., “A nanoliter-scale nucleic acid processor with parallel architecture,” Nat. Biotechnol. 22, 435–439 (2004). doi:10.1038/nbt951

- 21. Unger M. A., Chou H.-P., Thorsen T., Scherer A., and Quake S. R., “Monolithic microfabricated valves and pumps by multilayer soft lithography,” Science 288, 113–116 (2000). doi:10.1126/science.288.5463.113

- 22. Duffy D. C., McDonald J. C., Schueller O. J. A., and Whitesides G. M., “Rapid prototyping of microfluidic systems in poly(dimethylsiloxane),” Anal. Chem. 70, 4974–4984 (1998). doi:10.1021/ac980656z

- 23. Jang L.-S., Li Y.-J., Lin S.-J., Hsu Y.-C., Yao W.-S., Tsai M.-C., and Hou C.-C., “A stand-alone, peristaltic micropump based on piezoelectric actuation,” Biomed. Microdevices 9, 185–194 (2007). doi:10.1007/s10544-006-9020-8

- 24. Thorsen T., Maerkl S. J., and Quake S. R., “Microfluidic large-scale integration,” Science 298(5593), 580–584 (2002). doi:10.1126/science.1076996

- 25. Fidalgo L. M. and Maerkl S. J., “A software-programmable microfluidic device for automated biology,” Lab Chip 11, 1612 (2011). doi:10.1039/c0lc00537a
