Abstract

Background and Aim

Endoscopic differentiation between malignant and benign gastric ulcers (GU) affects further evaluation and prognosis. The aim of our study was to evaluate a deep learning algorithm for discrimination between benign and malignant GU in a database of endoscopic ulcer images.

Methods

We retrospectively collected images of GU from consecutive upper gastrointestinal endoscopies performed between 2011 and 2019 at the Sheba Medical Center. All ulcers had a corresponding histopathology result of either benign peptic ulcer or gastric adenocarcinoma. A convolutional neural network (CNN) was trained to classify the images as benign or malignant. Endoscopies from 2011 to 2017 were used for training (2011–2015) and validation (2016–2017). Hyper-parameters, image augmentation and pre-training on images obtained from Google Images were evaluated on the validation data. Held-out data from 2018 to 2019 were used to test the final model.

Results

Overall, the Sheba dataset included 1978 GU images: 1894 images of benign GU and 84 images of malignant ulcers. The final CNN model showed an AUC of 0.91 (95% CI 0.85–0.96) for detecting malignant ulcers. At a cut-off probability of 0.5, the network showed a sensitivity of 92% and a specificity of 75% for malignant ulcers.

Conclusion

Our study demonstrates the applicability of a CNN model for automated evaluation of gastric ulcer images for malignant potential. Following further research, the algorithm may improve the accuracy of differentiating benign from malignant ulcers during endoscopy and assist in patient stratification, allowing accelerated patient management and an individualized approach to surveillance endoscopy.

Keywords: gastric ulcer, malignant, benign, artificial intelligence, AI, deep learning

Introduction

Gastric ulcer is a common medical condition, with a yearly incidence of more than 5 per 1000 adults.1 The reported malignancy rate in endoscopically diagnosed gastric ulcers varies substantially, ranging between 2.4% and 21%.2,3

Gastric cancer is the third leading cause of cancer death worldwide.4 Adenocarcinoma is the histologic type in 95% of cases, and prognosis is poor, with a 5-year overall survival rate of less than 30% in most countries.5

Early detection of malignant ulcers is highly important for timely treatment and improved survival. Endoscopic characteristics suggesting malignant potential are therefore widely used in common practice, and the term “endoscopically suspicious appearance” is used in daily practice as well as in the literature.6,7 These characteristics include a dirty base, elevated ulcer borders and irregular ulcer borders.7

A dirty base is characterized by areas of necrosis of different colors. Ulcer borders are considered elevated if they are raised compared to the ulcer base, and irregular borders are defined as asymmetrical borders.7 These characteristics are, however, not well defined and are prone to high inter-observer variability.

Automated image analysis falls within computer vision, a multi-disciplinary field that centers on enabling computers to understand digital images.8 In the past few years, deep learning algorithms known as convolutional neural networks (CNNs), a form of artificial intelligence (AI), have transformed the computer vision field, achieving high accuracy in various image analysis tasks, including medical image analysis.9,10

We hypothesized that AI may yield better patient stratification and improve detection of malignant GU during endoscopy.

Therefore, the aim of our study was to evaluate the accuracy of a CNN for detection of malignant ulcers on gastroscopy image sets from individual patients. If proven accurate and feasible, implementation of AI could allow better detection of GU at high risk for malignancy and enhance patient evaluation and treatment.

Methods

Study Design

We retrospectively retrieved endoscopic images of benign and malignant gastric ulcers taken during upper endoscopies. The endoscopies were conducted at the Department of Gastroenterology at the Chaim Sheba Medical Center between 2011 and 2019, after informed consent was obtained. Intravenous sedation was given in various combinations of midazolam (2–5 mg), fentanyl (0.05–0.1 mg) and propofol (up to 1 mg/kg) according to patient requirement and endoscopist preference.

Clinical indications for upper endoscopy varied but mainly included upper abdominal pain or discomfort, anemia, vomiting, weight loss, upper gastrointestinal bleeding, or any combination of these symptoms.

All included images had a corresponding biopsy result and were taken at the time of ulcer diagnosis. Images were identified and evaluated by two experienced gastroenterologists, and only high-quality consensus images (see below) were included for analysis.

Patients’ medical charts were reviewed to confirm the diagnosis of benign ulcers.

The images were obtained during upper endoscopy using Olympus gastroscopes – high definition system 180 and 190-series (Olympus, Japan).

A convolutional neural network (CNN) was trained and tested for the ability to detect images with malignant ulcers.

This retrospective study was approved by the local (Chaim Sheba Medical Center) ethics committee. The requirement for informed consent was waived by the IRB. All data were anonymized, and patient confidentiality was fully secured in compliance with the Declaration of Helsinki.

Study Cohort

We searched our department’s electronic medical records for consecutive endoscopies with a morphological diagnosis of gastric ulcer. Images taken during endoscopy were evaluated by two experienced endoscopists, and only high-quality consensus images were included for analysis: each image had to show the ulcer clearly, including its entire base and margins, so that ulcer size and morphology could be fully assessed. Biopsy results were then obtained for all included patients.

To enrich the data, we also obtained publicly available images using the Google Images search engine. Combined automated and manual searches were performed using the search terms: gastric ulcer/stomach ulcer/gastric carcinoma/gastric adenocarcinoma/gastric malignancy/gastric tumor/gastric malignant ulcer/stomach malignancy/stomach malignant ulcer/stomach tumor/gastric ulcer benign/stomach ulcer benign AND endoscopy/upper endoscopy/procedure/EGD. No other limits were applied. The search results were reviewed manually. More than 1000 images were initially identified, of which 100 were selected: 50 classified as benign gastric ulcers and 50 classified as malignant gastric ulcers. Images were selected using the quality criteria described above; only high-quality images clearly showing the ulcer, including its entire base and margins, were included for analysis.

No biopsy results were available for these images. We therefore used the public dataset strictly for pre-training the CNN.

Software and Hardware

The models were written in Python (ver. 3.6.5, 64-bit). We used the Keras library (ver. 2.1.5) with TensorFlow (ver. 1.5.0) as the backend for the CNN models. Experiments were conducted on a machine with an Intel i7 CPU and two GeForce GTX 1080 Ti GPUs.

Data and Model Preparation

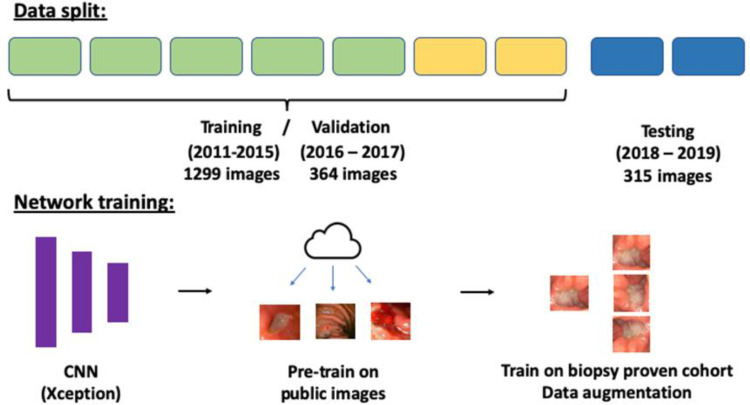

Data from 2011 to 2017 were used for training and validation: training on images from 2011–2015 and validation on images from 2016–2017. Held-out images from 2018 to 2019 were used to test the final model.

To maintain patient-level separation, if a patient had more than one endoscopy, all of that patient’s studies were assigned the date of the last endoscopy. In this way, each patient’s endoscopies fell exclusively into the training, validation or test set.
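The patient-level chronological split described above can be sketched in Python as follows. This is a minimal illustration rather than the study's code: the record layout (hypothetical keys `patient_id`, `study_id` and `date`) and the helper names `assign_split` and `patient_level_split` are assumptions; only the year ranges and the "last endoscopy date" rule come from the text.

```python
from collections import defaultdict
from datetime import date


def assign_split(year):
    """Map an endoscopy year to a data split using the year ranges in the text."""
    if 2011 <= year <= 2015:
        return "train"
    if 2016 <= year <= 2017:
        return "validation"
    if 2018 <= year <= 2019:
        return "test"
    raise ValueError(f"year {year} is outside the 2011-2019 study period")


def patient_level_split(studies):
    """Group study IDs by split, keeping each patient's studies together.

    `studies` is a list of dicts with the hypothetical keys 'patient_id',
    'study_id' and 'date' (a datetime.date). Every study of a patient is
    assigned that patient's LAST endoscopy date before splitting.
    """
    last_date = {}
    for s in studies:
        pid = s["patient_id"]
        if pid not in last_date or s["date"] > last_date[pid]:
            last_date[pid] = s["date"]

    splits = defaultdict(list)
    for s in studies:
        split = assign_split(last_date[s["patient_id"]].year)
        splits[split].append(s["study_id"])
    return dict(splits)


# Usage: patient P1's 2014 study follows the patient's 2018 study into the test set.
studies = [
    {"patient_id": "P1", "study_id": "S1", "date": date(2014, 3, 2)},
    {"patient_id": "P1", "study_id": "S2", "date": date(2018, 6, 9)},
    {"patient_id": "P2", "study_id": "S3", "date": date(2016, 1, 20)},
]
print(patient_level_split(studies))  # {'test': ['S1', 'S2'], 'validation': ['S3']}
```

Assigning the last date, rather than the first, means an earlier study of a patient who was later scanned in 2018–2019 can never sit in the training set while the same patient appears in the test set.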

For the experiments, we used the Xception CNN.11 Xception showed a high top-1 accuracy (0.790) on the ImageNet challenge.12 We have recently shown good results for Xception in classification of Crohn’s disease ulcers on video capsule endoscopy.13,14

Preprocessing included normalization by dividing each RGB pixel value by 255. Images were rescaled to 299×299×3 to fit the pre-trained Xception model. To counter class imbalance, we up-sampled the malignant ulcer images at a rate of 20:1. Up-sampling was performed only in the training set.
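The preprocessing and up-sampling steps can be sketched with NumPy as below. This is an illustration under stated assumptions: nearest-neighbour resizing stands in for whatever resizing the study used (not stated), and "20:1" is read as each malignant training image appearing 20 times, which roughly offsets the image-level imbalance of 1894:84 (about 22.5:1). The function names `preprocess` and `upsample_minority` are hypothetical.

```python
import numpy as np

TARGET_SIZE = 299  # Xception's default input size: 299 x 299 x 3


def preprocess(image):
    """Resize an HxWx3 uint8 image to 299x299x3 and scale pixel values to [0, 1].

    Nearest-neighbour resizing keeps this sketch NumPy-only; the resizing
    method used in the study is not stated (assumption).
    """
    h, w = image.shape[:2]
    rows = np.arange(TARGET_SIZE) * h // TARGET_SIZE
    cols = np.arange(TARGET_SIZE) * w // TARGET_SIZE
    resized = image[rows[:, None], cols[None, :]]  # shape (299, 299, 3)
    return resized.astype(np.float32) / 255.0  # divide each RGB value by 255


def upsample_minority(images, labels, rate=20, minority_label=1, seed=0):
    """Repeat each minority-class (malignant) example so it appears `rate` times.

    Apply to the TRAINING set only; validation and test sets stay untouched.
    """
    images = np.asarray(images)
    labels = np.asarray(labels)
    minority = labels == minority_label
    extra_images = np.repeat(images[minority], rate - 1, axis=0)
    extra_labels = np.repeat(labels[minority], rate - 1, axis=0)
    all_images = np.concatenate([images, extra_images], axis=0)
    all_labels = np.concatenate([labels, extra_labels], axis=0)
    order = np.random.default_rng(seed).permutation(len(all_labels))  # shuffle
    return all_images[order], all_labels[order]
```

Up-sampling only the training set keeps the validation and test metrics computed on the true, imbalanced class distribution.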

ImageNet pre-trained weights were used for all networks; these weights derive from a model trained on the 1.2 million everyday color images of ImageNet.12 Training hyper-parameters were 10 epochs, batch size 8, and Adam optimization with a learning rate of 10⁻⁶. Softmax was used as the output activation function.

CNN Experiments

The training/validation split was used to evaluate two experiments: I) the added value of pre-training on publicly available images and II) the added value of data augmentation.

I) The added value of pre-training on publicly available data was evaluated. The Google Images search engine was used to download 50 images of benign gastric ulcers and 50 images of malignant gastric ulcers. These images were used in a first pre-training stage of the network.

II) The following image augmentation techniques were evaluated on the training/validation data: random horizontal flipping, rotation (90, 180, 270 degrees), contrast and brightness adjustments (range of ±10–20 RGB points) and zooming in and out (range of ±10–20%).
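The augmentation family listed above can be sketched in NumPy as follows. The ranges follow the text (up to ±20 RGB points, ±20% zoom), but the exact parameterization is an assumption: contrast is modeled here as a multiplicative factor around the image mean (the text gives contrast only in RGB points), and zoom uses nearest-neighbour sampling about the image centre. The name `augment` and its parameters are hypothetical, not the study's Keras configuration.

```python
import numpy as np


def augment(image, rng, max_shift=20.0, contrast_range=0.1, max_zoom=0.2):
    """Return a randomly augmented float copy of an HxWx3 image in [0, 255].

    max_shift: brightness shift bound in RGB points (text: 10-20).
    contrast_range: contrast scale bound; the text gives contrast only in RGB
        points, so expressing it as a multiplicative factor is an assumption.
    max_zoom: zoom bound as a fraction (text: 10-20%).
    """
    out = image.astype(np.float32)

    # Random horizontal flip (left-right mirror).
    if rng.random() < 0.5:
        out = out[:, ::-1, :]

    # Rotation by a random multiple of 90 degrees: 0, 90, 180 or 270.
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))

    # Brightness: add one uniform shift to every pixel and channel.
    out = out + rng.uniform(-max_shift, max_shift)

    # Contrast: scale deviations from the image mean.
    mean = out.mean()
    out = (out - mean) * rng.uniform(1 - contrast_range, 1 + contrast_range) + mean

    # Zoom in/out about the image centre with nearest-neighbour sampling;
    # zooming out replicates the border pixels.
    h, w = out.shape[:2]
    z = rng.uniform(1 - max_zoom, 1 + max_zoom)
    rows = np.round((np.arange(h) - (h - 1) / 2) / z + (h - 1) / 2)
    cols = np.round((np.arange(w) - (w - 1) / 2) / z + (w - 1) / 2)
    rows = np.clip(rows, 0, h - 1).astype(int)
    cols = np.clip(cols, 0, w - 1).astype(int)
    out = out[rows[:, None], cols[None, :]]

    return np.clip(out, 0, 255)
```

Usage: call `augment(img, np.random.default_rng())` on each training image every epoch so the network sees a different variant each time; for non-square images the 90-degree rotations swap height and width.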

Study design is presented in Figure 1.

Figure 1.

Study design.

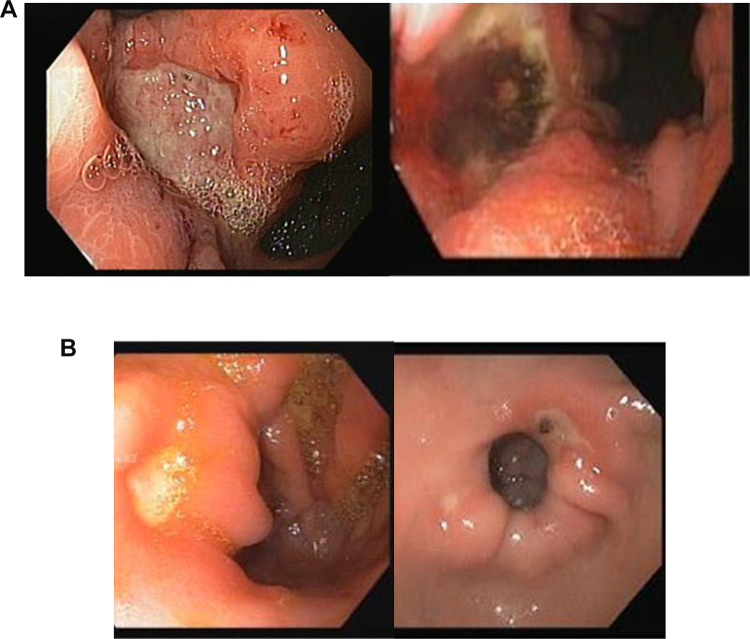

Figure 2 demonstrates the morphologic characteristics of malignant (A) and benign (B) ulcers.

Figure 2.

Morphologic characteristics of malignant (A) and benign (B) ulcers. (A) Malignant gastric ulcers. Ulcers are usually large (more than 1 cm) with irregular borders. Borders are elevated compared to the base, and base discoloration is commonly present. (B) Benign gastric ulcers. Ulcers are usually smaller (less than 1 cm), with a clean base and regular flat borders.

Statistical Analysis

Receiver operating characteristic (ROC) curves were plotted for each training/validation experiment by varying the operating threshold. The mean validation AUCs of the experiments were compared, and the best-performing configuration was used to define the final model, which was then tested on the held-out data.
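To make "varying the operating threshold" concrete, the sketch below builds an empirical ROC curve by sweeping the threshold through every distinct predicted probability and integrates it with the trapezoidal rule. It is a plain NumPy illustration, not the study's code; `roc_points` and `roc_auc` are hypothetical names, and a library routine such as scikit-learn's would normally be used instead.

```python
import numpy as np


def roc_points(y_true, y_score):
    """(FPR, TPR) pairs obtained by sweeping the decision threshold
    down through every distinct predicted probability."""
    y_true = np.asarray(y_true, dtype=bool)
    y_score = np.asarray(y_score, dtype=float)
    n_pos, n_neg = y_true.sum(), (~y_true).sum()
    fpr, tpr = [0.0], [0.0]  # threshold above every score: nothing called positive
    for t in np.unique(y_score)[::-1]:  # thresholds from high to low
        pred = y_score >= t
        tpr.append((pred & y_true).sum() / n_pos)
        fpr.append((pred & ~y_true).sum() / n_neg)
    return np.array(fpr), np.array(tpr)


def roc_auc(y_true, y_score):
    """Area under the ROC curve by the trapezoidal rule."""
    fpr, tpr = roc_points(y_true, y_score)
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Each threshold yields one (FPR, TPR) point; tied scores move both rates at once, which the trapezoid credits as half a correct ranking.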

The metrics for the final model included area under the curve (AUC), accuracy, sensitivity, specificity, false positive rate (FPR), positive predictive value (PPV), negative predictive value (NPV) and F1 score for detecting malignant ulcers. The network’s final layer is a softmax activation function, so, similar to logistic regression, each image is assigned a probability between 0 and 1. As in logistic regression, 0.5 is the default cut-off probability: images with a probability higher than 0.5 are classified as malignant, and those with a probability lower than 0.5 as benign. Different cut-off values trade sensitivity against specificity; we evaluated the metrics at cut-off probabilities of 0.3, 0.5 and 0.7.

Bootstrapping (1000 resamples) was used to calculate 95% confidence intervals (CI) for the metrics.
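A percentile bootstrap for the AUC might look like the sketch below. Several details are assumptions not stated in the text: resampling is done at the image level (not the patient level), resamples containing only one class are skipped because the AUC is undefined for them, and the AUC is computed in its Mann-Whitney form (the probability that a random malignant image outscores a random benign one). The names `auc_mann_whitney` and `bootstrap_ci` are hypothetical.

```python
import numpy as np


def auc_mann_whitney(y_true, y_score):
    """AUC as P(score of a random malignant image > score of a random benign one),
    counting ties as 1/2; equivalent to the area under the empirical ROC curve."""
    y = np.asarray(y_true, dtype=bool)
    s = np.asarray(y_score, dtype=float)
    pos, neg = s[y], s[~y]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def bootstrap_ci(y_true, y_score, metric=auc_mann_whitney,
                 n_resamples=1000, alpha=0.05, seed=0):
    """Point estimate plus a percentile bootstrap (1 - alpha) CI.

    Images are resampled with replacement; resamples that contain only one
    class are skipped because AUC is undefined for them (assumption).
    """
    y = np.asarray(y_true)
    s = np.asarray(y_score)
    rng = np.random.default_rng(seed)
    stats = []
    while len(stats) < n_resamples:
        idx = rng.integers(0, len(y), size=len(y))
        if np.unique(y[idx]).size < 2:
            continue
        stats.append(metric(y[idx], s[idx]))
    low, high = np.percentile(stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return metric(y, s), (float(low), float(high))
```

With few malignant images, as here, the interval is dominated by how many positives each resample happens to draw, which is one reason the reported CIs are fairly wide.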

Results

Study Population

Overall, our dataset included 1202 endoscopies conducted in 1091 patients. Of these patients, 1039 had benign ulcers and 52 had malignant ulcers. Patients with benign ulcers were older than those with malignant ulcers (68.3 ± 15.7 vs 64.3 ± 18.7 years, p = 0.132) and were more frequently women (45.0% vs 34.6%, p = 0.134); neither difference was statistically significant.

Only patients with biopsy-proven gastric adenocarcinoma were included in the malignant group.

For each endoscopy, 1–2 images were available in our electronic medical records, yielding a total of 1978 images: 1894 of benign ulcers and 84 of malignant ulcers.

Pre-Training Using Publicly Available Images

Without pre-training on the public dataset, Xception showed an AUC of 0.79 (95% CI: 0.66–0.90). With pre-training on the public dataset retrieved from Google Images, Xception showed an AUC of 0.84 (95% CI: 0.76–0.92).

Image Augmentation

Image augmentation experiments were conducted without pre-training on the Google Images dataset, using the training/validation split. Overall, image augmentation increased the validation AUC from 0.79 to 0.83. Table 1 presents the results of the experiments with different image augmentation techniques.

Table 1.

Results of Image Augmentation Experiments

| Image Augmentation | AUC (95% CI) |
|---|---|
| None | 0.79 (0.66–0.90) |
| Horizontal flipping; Rotation (90, 180, 270 degrees) | 0.83 (0.74–0.90) |
| Horizontal flipping; Rotation (90, 180, 270 degrees); Brightness and contrast (±10 RGB points); Zooming in and out (±10%) | 0.82 (0.73–0.89) |
| Horizontal flipping; Rotation (90, 180, 270 degrees); Brightness and contrast (±20 RGB points); Zooming in and out (±20%) | 0.83 (0.75–0.90) |

Final Model

For the final model, we pre-trained on the Google Images data and used the full data augmentation. Images from 2011–2017 were used to train the network, and images from 2018–2019 were used for testing. The final model showed an AUC of 0.91 (95% CI 0.85–0.96).

The final model’s metrics at different cut-off values are presented in Table 2. At a cut-off probability of 0.5, the model showed a sensitivity of 92% and a specificity of 75%.

Table 2.

Final Model’s Metrics Table

| Cut-Off Probability | Sensitivity | Specificity | PPV | NPV | F1 Score |
|---|---|---|---|---|---|
| 0.3 | 1.0 | 0.33 | 0.06 | 1.0 | 0.11 |
| 0.5 | 0.92 | 0.75 | 0.13 | 1.0 | 0.22 |
| 0.7 | 0.42 | 0.95 | 0.26 | 0.98 | 0.32 |

Abbreviations: PPV, positive predictive value; NPV, negative predictive value.
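The combination of low PPV and near-perfect NPV in Table 2 is what Bayes’ theorem predicts when malignant images are rare. As an illustration only, the worked example below assumes a prevalence π equal to the overall image-level proportion of malignant images in the dataset (84/1978 ≈ 0.042); the actual test-set prevalence is not reported here, so the figures are approximate. With sensitivity Se and specificity Sp:

$$\mathrm{PPV}=\frac{\mathrm{Se}\,\pi}{\mathrm{Se}\,\pi+(1-\mathrm{Sp})(1-\pi)},\qquad \mathrm{NPV}=\frac{\mathrm{Sp}\,(1-\pi)}{\mathrm{Sp}\,(1-\pi)+(1-\mathrm{Se})\,\pi}$$

At the 0.5 cut-off (Se = 0.92, Sp = 0.75):

$$\mathrm{PPV}\approx\frac{0.92\times 0.042}{0.92\times 0.042+0.25\times 0.958}=\frac{0.0386}{0.0386+0.2395}\approx 0.14,\qquad \mathrm{NPV}\approx\frac{0.75\times 0.958}{0.75\times 0.958+0.08\times 0.042}\approx 0.995$$

These values are close to the reported 0.13 and 1.0: at this prevalence, even a sensitive classifier produces mostly false positives among its positive calls, while a negative call is very reliable.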

Discussion

Early detection and differentiation between benign and malignant gastric ulcers are important for timely cancer workup and treatment. Accurate characterization during endoscopy of ulcers suspicious for malignancy is therefore highly valuable for patient management. Herein, we showed that a CNN has potential for classifying gastric ulcer images taken during endoscopy as benign or malignant. The algorithm detected malignant ulcers with an AUC of 0.91.

Due to the high mortality rates associated with gastric cancer,4,5 and the relatively high proportion of malignancy among endoscopically diagnosed gastric ulcers,2,3 many studies have assessed the yield and sensitivity of endoscopic evaluation for the diagnosis of malignancy.

According to the literature, both the endoscopist’s impression of a benign appearance at the time of the procedure and ulcer morphology were significant predictors of the malignant or benign nature of gastric ulcers (P<0.001 for both).6

Specific morphologic characteristics of malignancy have been described in detail in many publications.7,15–18 These characteristics consist of a few parameters, mainly discoloration of the ulcer base and the specific structure and appearance of the ulcer borders, along with their relation to the surrounding mucosa.

Each of these parameters alone was shown to have high sensitivity for the diagnosis of malignancy: ulcer base discoloration 79%, elevated ulcer border 94% and irregular ulcer border 91%. The corresponding specificities were 93%, 82% and 89%, respectively.7

Ulcer diameter, as estimated by the endoscopist during the procedure, was also shown to correlate with malignant potential: 86% of ulcers larger than 3 cm were malignant, compared with only 2% of ulcers smaller than 1 cm (p<0.001).7

The experienced endoscopist’s judgment combines all these morphological characteristics into a diagnostic estimation (benign versus malignant ulcer) with a very high diagnostic yield.6,7

Thus, when performed by a single highly experienced endoscopist using white light imaging, endoscopy had a reported sensitivity of 0.98 and specificity of 0.84 for detecting malignant gastric ulcers (positive predictive value 0.84 and negative predictive value 0.98).7 These results are, however, highly operator-dependent, and less experienced endoscopists may not reach such high sensitivity and specificity.

Given the diagnostic value of morphologic characteristics and the importance of the endoscopist’s evaluation, applying a CNN model seemed promising, since experience is evidently a major factor in malignancy detection.

In our study, we achieved an AUC of 0.91, indicating high accuracy for detection of malignancy in endoscopic images of gastric ulcers, with a sensitivity of 92% and a specificity of 75%. Importantly, these results are close to those reported in the literature for a single highly experienced endoscopist.7 A recent study by Hirasawa et al using AI for detection of early and advanced gastric cancer19 reached an overall sensitivity of 92% for malignancy detection using a CNN, with a positive predictive value of 30.6%. In that study, conducted in Japan, more than 13,000 endoscopic images of gastric cancer were used for training. With further training, we expect improved results with higher sensitivity and specificity.

Another recent study used a CNN to determine invasion depth, distinguishing early gastric cancer from deeper submucosal invasion,20 and reached a sensitivity and specificity of 76% and 95%, respectively. These two studies were performed in Japan and China, where gastric cancer prevalence is much higher than in Western countries and screening programs for early detection are in place.4,5 To our knowledge, our study is the first to use a CNN for gastric cancer in a Caucasian population in a Western country.

Notably, as described in the Methods, all ulcer diagnoses were pathologically confirmed, since only images from patients with available pathology results were included in the study. This strengthens our results and reduces the risk of bias due to misdiagnosis. Patients’ medical records were also reviewed to avoid bias from false-negative biopsies and to exclude delayed cancer diagnosis.

The literature diverges regarding appropriate follow-up for patients with endoscopically diagnosed gastric ulcers. While current European and British guidelines advise follow-up gastroscopy for all gastric ulcers until full healing is achieved in order to exclude malignancy,21 the American ASGE guidelines recommend follow-up endoscopy only in selected cases.22 This conflict reflects the poor prognosis of late-diagnosed gastric cancer on the one hand, and the added risk and low cost-effectiveness of repeated endoscopies on the other. Recent studies assessing the yield of follow-up endoscopy for benign-appearing gastric ulcers found no additive value in detecting malignancy in these cases,7,23 emphasizing the need for accurate, high-quality endoscopic diagnosis. Naturally, the diagnosis must be pathologically confirmed.

We believe that, following further validation and training, neural network-based systems can facilitate decision-making and assist in characterizing and differentiating between benign and malignant ulcers. Such a system could serve as an experienced second-opinion reader and enhance diagnostic accuracy. This will hopefully lead to faster management of patients with malignant disease on the one hand, and to a reduction in unindicated follow-up endoscopies on the other.

Our study had limitations. First, this was a single-center retrospective study.

The retrospective design might have introduced selection bias; differences in image quality among endoscopists and over the years with different endoscopes may lead to bias between representative and non-representative data. However, the study covers a decade of endoscopies, and the included images were taken by all members of the gastroenterology department (24 physicians), so it reflects a wide and diverse range of patients and endoscopists. Second, our cohort had a relatively small group of malignant ulcers, and a larger dataset may improve accuracy. We addressed the small dataset by using Google Images to obtain data for a pre-training step. This showed promising results and may be considered in future studies. Biopsy results were not available for the public dataset; however, it was used strictly as a preliminary pre-training stage, and all images used for validation and testing of the models had a corresponding biopsy result from our institution. We downloaded 100 images for the public dataset, close to the number of patients with malignant lesions in our cohort; a larger number of images could be downloaded from Google Images. Third, we performed a chronological train/validation/test split while maintaining strict patient-level separation. This prevents chronological data leakage. Other forms of data split, such as a random train/validation/test split or n-fold cross-validation, may be good alternatives.

Fourth, our study did not address T staging of the malignant ulcers. Our aim was to differentiate malignant from benign GU at the first endoscopy, and we therefore did not include further evaluation such as EUS or CT. This subject merits further investigation.

Lastly, there are numerous ways of applying neural networks to this problem: other “off-the-shelf” networks or specifically designed networks could be used, and numerous other architectural and hyper-parameter decisions could be made. Despite these limitations, our proof-of-concept study shows possible clinical benefits of applying a CNN to gastric ulcers, with sensitivity and specificity close to those reported in the literature for highly experienced endoscopists. An interesting validation would be to have an independent group of endoscopists classify the same images that were used to test the algorithm. This merits further investigation.

In conclusion, our study demonstrates the applicability of a CNN model for automated evaluation of gastric ulcer images for malignant potential. Following further validation, the algorithm may improve the accuracy of differentiating benign from malignant ulcers during endoscopy and assist in patient stratification, allowing accelerated patient management and an individualized approach to surveillance endoscopy. Ideally, this would be performed in real time during endoscopy, with specific marks indicating suspicious areas in the GU where targeted biopsies can yield an accurate diagnosis. A similar technique is currently used commercially for polyp detection during colonoscopy and has been shown to increase the polyp detection rate.24

Funding Statement

The study had no financial support.

Abbreviations

GU, gastric ulcers; CNN, convolutional neural network; AI, artificial intelligence; AUC, area under the curve; FPR, false positive rate; PPV, positive predictive value; NPV, negative predictive value.

Author Contributions

A(D)L conceived the study, was involved in data acquisition and analysis and drafted the manuscript. EK and YF designed the study, were involved in data analysis and drafted the manuscript. A(S)L and NA were involved in data acquisition and analysis. All authors participated in critical revision of the manuscript for important intellectual content and all have approved the final draft submitted. All authors contributed to data analysis, drafting or revising the article, have agreed on the journal to which the article will be submitted, gave final approval of the version to be published, and agree to be accountable for all aspects of the work.

Disclosure

The abstract of this paper was presented as a poster with interim findings at the UEGW (United European Gastroenterology Week) 2020 Conference. The poster’s abstract was published in “Poster Abstracts”, UEG Week 2020 Poster Presentations, United European Gastroenterology Journal, Volume 8, Issue 8_suppl, pages 144–887. Article first published online: October 10, 2020; issue published: October 1, 2020. https://doi.org/10.1177/2050640620927345. None of the authors have any conflicts of interest to declare.

References

- 1. Everhart JE, Byrd-Holt D, Sonnenberg A. Incidence and risk factors for self-reported peptic ulcer disease in the United States. Am J Epidemiol. 1998;147(6):529–536. doi: 10.1093/oxfordjournals.aje.a009484

- 2. Lv SX, Gan JH, Ma XG, et al. Biopsy from the base and edge of gastric ulcer healing or complete healing may lead to detection of gastric cancer earlier: an 8 years endoscopic follow-up study. Hepatol Gastroenterol. 2012;59(115):947–950. doi: 10.5754/hge10692

- 3. Amorena Muro E, Borda Celaya F, Martínez-Peñuela V, et al. [Analysis of the clinical benefits and cost-effectiveness of performing a systematic second-look gastroscopy in benign gastric ulcer]. Gastroenterol Hepatol. 2009;32(1):2–8. Spanish. doi: 10.1016/j.gastrohep.2008.07.002

- 4. Fitzmaurice C, Akinyemiju TF, Al Lami FH, et al. Global, regional, and national cancer incidence, mortality, years of life lost, years lived with disability, and disability-adjusted life-years for 29 cancer groups, 1990 to 2016: a systematic analysis for the global burden of disease study. JAMA Oncol. 2018;4(11):1553–1568. doi: 10.1001/jamaoncol.2018.2706

- 5. Allemani C, Weir HK, Carreira H, et al. Global surveillance of cancer survival 1995–2009: analysis of individual data for 25,676,887 patients from 279 population-based registries in 67 countries (CONCORD-2). Lancet. 2015;385(9972):977–1010. doi: 10.1016/S0140-6736(14)62038-9

- 6. Selinger CP, Cochrane R, Thanaraj S, et al. Gastric ulcers: malignancy yield and risk stratification for follow-up endoscopy. Endosc Int Open. 2016;4(6):E709–14. doi: 10.1055/s-0042-106959

- 7. Gielisse EA, Kuyvenhoven JP. Follow-up endoscopy for benign-appearing gastric ulcers has no additive value in detecting malignancy: it is time to individualise surveillance endoscopy. Gastric Cancer. 2015;18(4):803–809. doi: 10.1007/s10120-014-0433-4

- 8. Klang E. Deep learning and medical imaging. J Thorac Dis. 2018;10(3):1325–1328. doi: 10.21037/jtd.2018.02.76

- 9. Soffer S, Ben-Cohen A, Shimon O, et al. Convolutional neural networks for radiologic images: a radiologist’s guide. Radiology. 2019;290(3):590–606. doi: 10.1148/radiol.2018180547

- 10. Barash Y, Klang E. Automated quantitative assessment of oncological disease progression using deep learning. Ann Transl Med. 2019;7:S379. doi: 10.21037/atm.2019.12.101

- 11. Chollet F. Xception: deep learning with depthwise separable convolutions. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 21–26 July 2017.

- 12. Russakovsky O, Deng J, Su H, et al. ImageNet large scale visual recognition challenge. arXiv e-prints; 2014. Available from: https://ui.adsabs.harvard.edu/abs/2014arXiv1409.0575R. Accessed April 28, 2021.

- 13. Klang E, Barash Y, Margalit RY, et al. Deep learning algorithms for automated detection of Crohn’s disease ulcers by video capsule endoscopy. Gastrointest Endosc. 2019;91:606–613.e2. doi: 10.1016/j.gie.2019.11.012

- 14. Soffer S, Klang E, Shimon O, et al. Deep learning for wireless capsule endoscopy: a systematic review and meta-analysis. Gastrointest Endosc. 2020;92:831–839.e8. doi: 10.1016/j.gie.2020.04.039

- 15. Mizutani T, Araki H, Saigo C, et al. Endoscopic and pathological characteristics of Helicobacter pylori infection-negative early gastric cancer. Digest Dis. 2020;1–10.

- 16. Kodama M, Okimoto T, Mizukami K, et al. Endoscopic and immunohistochemical characteristics of gastric cancer with versus without Helicobacter pylori eradication. Digestion. 2018;97(4):288–297. doi: 10.1159/000485504

- 17. Chen CY, Kuo YT, Lee CH, et al. Differentiation between malignant and benign gastric ulcers: CT virtual gastroscopy versus optical gastroendoscopy. Radiology. 2009;252(2):410–417. doi: 10.1148/radiol.2522081249

- 18. Majima A, Dohi O, Takayama S, et al. Linked color imaging identifies important risk factors associated with gastric cancer after successful eradication of Helicobacter pylori. Gastrointest Endosc. 2019;90(5):763–769. doi: 10.1016/j.gie.2019.06.043

- 19. Hirasawa T, Aoyama K, Tanimoto T, et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer. 2018;21(4):653–660. doi: 10.1007/s10120-018-0793-2

- 20. Zhu Y, Wang QC, Xu MD, et al. Application of convolutional neural network in the diagnosis of the invasion depth of gastric cancer based on conventional endoscopy. Gastrointest Endosc. 2019;89(4):806–15.e1. doi: 10.1016/j.gie.2018.11.011

- 21. Internal Clinical Guidelines Team. National Institute for Health and Care Excellence: Clinical Guidelines. Dyspepsia and Gastro-Oesophageal Reflux Disease: Investigation and Management of Dyspepsia, Symptoms Suggestive of Gastro-Oesophageal Reflux Disease, or Both. London: National Institute for Health and Care Excellence (UK); 2014.

- 22. Banerjee S, Cash BD, Dominitz JA, et al. The role of endoscopy in the management of patients with peptic ulcer disease. Gastrointest Endosc. 2010;71(4):663–668. doi: 10.1016/j.gie.2009.11.026

- 23. Saini SD, Eisen G, Mattek N, et al. Utilization of upper endoscopy for surveillance of gastric ulcers in the United States. Am J Gastroenterol. 2008;103(8):1920–1925. doi: 10.1111/j.1572-0241.2008.01945.x

- 24. Hoerter N, Gross SA, Liang PS. Artificial intelligence and polyp detection. Curr Treat Options Gastroenterol. 2020;21. doi: 10.1007/s11938-020-00274-2