Abstract

Background:

The COVID-19 pandemic accelerated the widespread adoption of digital pathology (DP) for primary diagnosis in surgical pathology. This paradigm shift is likely to influence how we function routinely in the postpandemic era. We present learnings from early adoption of DP for a live digital sign-out from home in a risk-mitigated environment.

Materials and Methods:

We aimed to validate DP for remote reporting from home in a real-time environment and evaluate the parameters influencing the efficiency of a digital workflow. Eighteen pathologists prospectively validated DP for remote use on 567 biopsy cases including 616 individual parts from 7 subspecialties from March 21, 2020, to June 30, 2020. The slides were digitized using a Roche Ventana DP200 whole-slide scanner and reported from the pathologists' respective homes in a risk-mitigated environment.

Results:

Following re-review of glass slides, there was no major discordance and 1.2% (n = 7/567) minor discordance. The deferral rate was 4.5%. All pathologists reported from their respective homes from laptops with an average network speed of 20 megabits per second.

Conclusion:

We successfully validated and adopted a digital workflow for remote reporting with available resources and were able to provide our patients with undisrupted access to subspecialty expertise during these unprecedented times.

Keywords: Digital pathology, remote reporting, whole-slide imaging

INTRODUCTION

Digital pathology (DP) represents a third revolution in surgical pathology. Whole-slide imaging (WSI) enables sequential digitization with high-resolution image acquisition and digital assembly of the entire tissue section on a glass slide, which in turn supports navigation and analysis akin to conventional microscopy. The utility of WSI in extending quality diagnostic services to remote/underserved regions, second opinion, seeking expert consultations in subspecialties, peer review, tumor boards, education, and quality assurance is proven beyond contention. Adoption of DP into routine diagnostics promises improved turnaround time (TAT) with efficient digital workflow and easy archival of diagnostic material. Literature on noninferiority of WSI in rendering primary diagnosis in surgical pathology has substantially evolved, even addressing niche and complex areas within some subspecialties.[1,2,3,4,5,6,7,8,9,10,11,12,13]

The COVID-19 pandemic posed significant challenges to oncology services amid a global lockdown situation. Tata Memorial Centre (India's largest tertiary oncology center), which caters to more than 70,000 new patients with cancer every year, was quick to adopt a measured de-escalation of services using a proactive and multipronged approach.[14] Adoption of a digital workflow was necessary because of the changes in institutional staffing norms to accommodate social distancing, cross-sectoral skill adjustments leading to additional shared responsibilities, travel restrictions due to the lockdown and reduced workforce due to staff in quarantine/isolation. As a result, this evolving pandemic situation presented DP a novel opportunity for rendering primary diagnosis from remote sites and offered patients the much needed critical access to subspecialty expert opinions and efficient TAT.

Guidance to laboratories on the use of DP for remote reporting in response to this health emergency has been published in several countries in recent months.[15,16,17,18,19] This study presents learnings from early adoption of DP for reporting from home for cancer patients during an ongoing pandemic and its prospective validation as an emergency response during this period. We aimed to validate the DP system for review from home and primary diagnosis of surgical pathology biopsies in a real-time environment, to test the feasibility of a digital workflow for primary diagnosis, and to evaluate factors influencing its performance.

MATERIALS AND METHODS

This study was based at the Department of Pathology at the Tata Memorial Hospital, Mumbai, India, a high-volume tertiary oncology center, and included real-time digital reporting from home. The participating 18 pathologists included 6 generalists and 12 subspecialty experts in various stages of their respective careers and experience with DP. Six pathologists had already validated DP for primary diagnosis in surgical pathology in their subspecialties.[20,21] Before engaging in live digital sign-out from a remote site, formal training was undertaken to familiarize the pathologists with the image viewer software and digital workflow. A standard operating procedure and a user manual were issued. A common training set consisted of 10 randomly selected, previously signed out cases from head-and-neck (HN) pathology, breast (BR) pathology, and gastrointestinal (GI) pathology. Digital slides along with their respective histopathology reports were made available for all pathologists to assess the feasibility of remote reporting from home. These cases were not included in the validation set for analysis. The study was undertaken following the Institutional Ethics Committee review.

Case selection and whole-slide imaging

This study included biopsy specimens and cell block preparations for primary diagnosis over a duration from March 21, 2020, to June 30, 2020. Cytology smears were not included in the study. Subspecialties included HN pathology, BR pathology, GI pathology, thoracic pathology (TH), bone and soft tissue pathology, gynecologic pathology, and genitourinary pathology. The cases followed standard laboratory operating protocol including bar-coded accessioning, specimen tracking through gross evaluation, tissue processing, embedding, microtomy, staining, and case handling. Request forms were scanned and integrated digitally with the reporting software. Standard laboratory quality control measures were followed through these work levels. Additional prescanning quality checks performed by the scanning technologist included correct placement of slide labels, tissue and coverslips on the glass slide, absence of overhanging of any of the former, slide chippings, air bubbles, or ink markings. Slides not meeting required levels of quality for digitization were rectified wherever possible before exclusion.

All slides and tissue section levels were scanned for every case including those with multiple parts and corresponding frozen section (FS) slides. Biopsies from different/multiple sites for a given case were considered as individual parts. Subsequent additional requests of serial deep cuts, recuts after tissue repositioning, special stains, and immunohistochemistry (IHC) slides were associated with digital cases. Scanning was performed by trained technologists on a VENTANA DP200 whole-slide scanner (Hemel Hempstead, UK) using automated or manual selection of area of interest (AOI). All biopsy hematoxylin and eosin (H&E)-stained and IHC slides were scanned at ×20 (0.46 μm/pixel) unless a rescan at ×40 (0.25 μm/pixel) was requested. Special stained sections were scanned at ×40 by default.

Scanning technologists ensured adequate calibration and performed primary real-time quality checks, which included verification of the pathology identity number, tissue coverage of all bits present on the glass slide from the macroslide images, and adequate digital image quality; slides failing these checks were rescanned. Scanned WSIs were managed using the associated image management software (IMS), uPath version 1.0 (Tucson, Arizona, United States of America).

Cases were previewed by trainee pathologists who carried out secondary quality checks and ensured minimal slide preparation artifacts (at cutting and staining levels) and that all the tissue bits were scanned. In addition, preliminary reports were prepared in the reporting software similar to routine workflow pattern. Cases were assigned to pathologists according to a predetermined schedule for reporting from home.

Remote digital sign-out

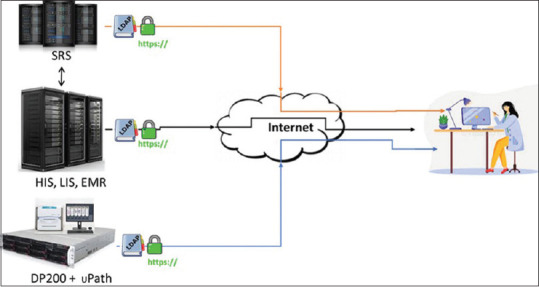

The digital infrastructure consisted of the electronic medical records (EMR), Roche uPath image management system (IMS), and an in-house developed pathology synoptic reporting system (SRS) synchronized with the laboratory information system (LIS). Each of these databases was individually accessible through the Internet using the HTTPS protocol coupled with timed Lightweight Directory Access Protocol authentication. The pathologists accessed the EMR for clinical information and used the web-based image viewer software for reviewing digital slides from a remote site/home. Real-time digital sign-out was enabled by the web-based reporting platform [Figure 1]. The patient ID and the laboratory ID are generated by an automated data management system which ensures that the mapping between the two IDs is error free. The EMR, LIS, and SRS are synchronized at the database level and provide pathology ID level linkage between LIS and SRS and patient ID level linkage between SRS and EMR, allowing the user to move between systems for the same patient without error. Since the IMS was not integrated with the other systems during the study, the patient and pathology IDs on the IMS were verified visually by the reporting pathologist. This also included verification of the macro-image of the scanned slide bearing patient and laboratory IDs, to ensure that the pathologist did not inadvertently have two different patients open on different information systems.

Figure 1.

Digital environment for reporting from remote site. SRS: Synoptic reporting system, HIS: Hospital information system, LIS: Laboratory information system, EMR: Electronic medical record

Risk mitigation measures included preview of cases by trainee pathologists, case-based risk assessment while making a digital diagnosis, requesting digital slides of higher resolution (rescan at ×40) if required, deferrals for glass slide evaluation, and second opinions from colleagues working either remotely or on-site. Requests for review with previous diagnostic material, additional cuts, special stains, and IHC were recorded in spreadsheets along with other case-related data.

The hardware (RAM, processor, monitor size, screen resolution, color bit depth, color format, and navigation tools) and network specifications (web browser and Internet connection speed) at remote workstations were collated. Other data collated included TAT per case, if access to clinical data was adequate, quality of digital image including presence of artifacts on glass or virtual slides, average time spent on case sign-out, level of confidence for the diagnosis rendered, and network-related issues such as WSI latency (the lag in image loading after opening the digital image), wherever relevant. Qualitative feedback data collated included IMS-related features such as arrangement of cases, ease of navigation, zooming, annotation and quantification tools, comparison between multiple slides, digital image retrieval, and perception of digital image in comparison to microscopy. The overall preferred mode for diagnosis during the ongoing pandemic was noted along with the preferred tool for navigation.

Glass slide review and concordance

Blinded re-review of glass slides for all cases was recorded after a minimum interval of 2 weeks. Diagnostic reads on glass slides were recorded in a controlled environment with de-identified clinical data, identical to that available at the time of digital sign-out. Diagnostic data recorded included all relevant data elements in addition to the top-line diagnosis and interpretation of ancillary tests. Time spent on arriving at a diagnosis was noted along with level of confidence for the glass slide diagnosis similar to digital slide evaluation, in order to eliminate bias arising from complexity of case.

Intra-observer concordance rate was calculated for paired digital and glass diagnostic reads. Major discordances were defined as differences with significant clinical impact including misclassification of cases between benign and malignant pathologies (major harm, major morbidity and moderate harm, moderate morbidity). Minor discordances were defined as those with minimal clinical impact (minor harm, minor morbidity and minimal harm, no morbidity).[1,3,22,23] Concordances were adjudicated by a referee pathologist not participating in sign-out in the study. Blinded consensus diagnosis was established as the reference diagnosis for discordant cases. Establishment of noninferiority of WSI versus microscopy was based on 4% cutoff when compared with reference diagnosis.[3]

RESULTS

A total of 594 cases were included for digital sign-out from home from March 21, 2020, to June 30, 2020. Following 27 case deferrals for glass sign-out (deferral rate, 4.5%), a total of 567 cases (616 individual parts and 1426 slides) were included for data analysis [Tables 1 and 2]. The study set comprised 776 H&E slides (including 27 FS slides), 618 IHC slides (in 191 cases), and 32 slides with special histochemical stains (in 12 cases). The number of slides examined per case ranged from 1 to 14. In cases where IHC or special stains were requested, the mean number of slides was 4.

Table 1.

Distribution of cases and deferrals by subspecialty

| Subspecialty | Total cases | Deferred | Cases digitally signed out | Parts | Slides |
|---|---|---|---|---|---|
| HN | 214 | 6 | 208 | 220 | 361 |
| BR | 87 | 4 | 83 | 91 | 366 |
| GI | 122 | 7 | 115 | 117 | 225 |
| TH | 82 | 5 | 77 | 77 | 191 |
| GY | 52 | 3 | 49 | 53 | 168 |
| GU | 25 | 1 | 24 | 47 | 74 |
| BST | 12 | 1 | 11 | 11 | 41 |
| Total | 594 | 27 | 567 | 616 | 1426 |

HN: Head and neck, BR: Breast, GI: Gastrointestinal, TH: Thoracic pathology, BST: Bone and soft tissue, GY: Gynecologic pathology, GU: Genitourinary

Table 2.

Deferred cases

| Site | Reason |
|---|---|
| Oral cavity, esophagus | Invasion assessment of a scant focus (2) |
| Lung | Unusual morphology of metastasis in a known case |
| Oral cavity, cervix | Grading of dysplasia (2) |
| Mediastinum | Grey zone between Hodgkin lymphoma and primary mediastinal B-cell lymphoma |
| Hard palate, urinary bladder, lung | Grading of tumor (3) |
| Ascitic fluid | Scant atypical cells (3) |
| Gallbladder | Scant focus of adenocarcinoma |
| Colorectum, lung, nasal cavity | Poorly differentiated malignant tumor (3) |
| Colorectum | Inflammatory atypia versus dysplasia |
| Ascitic fluid | Presence of nucleated RBCs |
| Liver biopsy | Carcinoma inconclusive on IHC |
| Breast | Fixation artifacts |
| Breast | IHC internal control unsatisfactory (2) |
| Breast, lung | Pixelation and WSI latency (2) |
| Lung | Neoplastic versus reactive spindle cell proliferation |
| Lung | Benign versus low-grade neoplasm |
| Endometrium | Proliferative changes versus hyperplasia |

*Numbers in brackets indicate number of cases with the same reason for deferral. IHC: Immunohistochemistry, RBCs: Red blood cells, WSI: Whole-slide imaging

The rescan rate was 2.3% for the 1426 slides scanned and was due to incomplete tissue inclusion for scanning (n = 3), macro-focus failure (n = 6), image quality (n = 22), tissue detection error (n = 1), and slide-related factors (n = 1). Rescan at ×40 along with special stains was requested on three occasions during sign-out in cases with inflammatory pathology and suspected fungal etiology. The median file size at ×20 equivalent resolution was 269 MB (range, 8 MB–1.5 GB) with a median scan time of 62 s (range, 15–267 s). The median file size and scan time were higher for slides scanned at ×40 equivalent resolution (454 MB and 75 s).
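The rescan figures above can be cross-checked with a few lines of arithmetic. The sketch below simply tallies the reasons listed in the text and divides by the number of slides scanned; the variable names are illustrative and not part of the study.

```python
# Rescan reasons and counts as listed in the text; names are illustrative.
rescan_reasons = {
    "incomplete tissue inclusion": 3,
    "macro-focus failure": 6,
    "image quality": 22,
    "tissue detection error": 1,
    "slide-related factors": 1,
}
slides_scanned = 1426  # total slides in the study set

rescans = sum(rescan_reasons.values())
rescan_rate = 100 * rescans / slides_scanned

print(f"Rescanned slides: {rescans}")
print(f"Rescan rate: {rescan_rate:.1f}%")
```

Image quality accounts for two-thirds of the rescans in this tally, which is the category most directly addressed by the prescanning and real-time quality checks described in the Methods.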

Twelve subspecialized pathologists and six generalists reported on cases from seven subspecialties [Table 3]. The cases generated a total of 1232 reads from 616 diagnoses on individual parts on WSI and glass slides. The case types represented a standard cross section expected in a routine surgical pathology practice in a tertiary oncology center [Tables 4 and 5].

Table 3.

Distribution of subspecialized and general pathologists across subspecialties

| Subspecialty | Pathologists |
|---|---|
| HN | A*, E, G*, I*, J, L*, N*, P, Q |
| GI | C, E, G*, H*, J*, N, O |
| BR | A*, B*, F, K*, O |
| TH | C, F, J*, P*, R |
| GY | D*, E, I*, K, M*, Q, R, U |
| GU | F, K*, M*, R |
| BST | C, D*, E, N |

*Indicates subspecialty expertise. HN: Head and neck, BR: Breast, GI: Gastrointestinal, TH: Thoracic pathology, BST: Bone and soft tissue, GY: Gynecologic pathology, GU: Genitourinary

Table 4.

Subspecialties with distribution of cases by site and corresponding diagnostic parts

| Subspecialty | Site | Total parts |
|---|---|---|
| HN | Oral cavity | 168 |
| | Oropharynx | 10 |
| | Larynx | 9 |
| | Nasopharynx | 1 |
| | Maxilla | 1 |
| | Orbit, eye | 3 |
| | Lymph node | 10 |
| | Lung | 5 |
| | External auditory canal | 2 |
| | Temporal region | 4 |
| | Chest wall | 2 |
| | Skin | 2 |
| | Thyroid | 1 |
| | Others | 2 |
| TH | Lung | 34 |
| | Pleural fluid | 11 |
| | Lymph node | 12 |
| | Mediastinum | 1 |
| | Rib | 3 |
| | Abdominal wall | 1 |
| | Scapula | 1 |
| | Esophagus, GE junction | 9 |
| | Liver | 5 |
| GI | Colorectum | 11 |
| | Gallbladder | 19 |
| | Ampulla/periampullary region | 3 |
| | Pancreas | 8 |
| | Liver | 27 |
| | Stomach, GE junction | 5 |
| | Ascitic fluid | 15 |
| | Abdominopelvic region | 9 |
| | Omentum | 4 |
| | Lymph node | 8 |
| | Lung, pleural fluid | 4 |
| | Ovary | 2 |
| | Esophagus | 1 |
| | Duodenum | 1 |
| GY | Cervix, endocervix, vaginal vault | 23 |
| | Ovary, omentum | 6 |
| | Endometrium | 2 |
| | Ascitic fluid | 16 |
| | Lymph node | 2 |
| | Kidney | 1 |
| | Pleural fluid | 3 |
| BR | Breast | 62 |
| | Skin | 6 |
| | Lung | 2 |
| | Pleural fluid | 3 |
| | Chest wall | 1 |
| | Lymph node | 4 |
| | Abdominal wall | 1 |
| | Ascitic fluid | 4 |
| | Omentum | 2 |
| | Liver | 4 |
| | Endometrium | 1 |
| | Esophagus | 1 |
| GU | Urinary bladder, ureteric orifice | 24 |
| | Kidney | 5 |
| | Penis | 2 |
| | Prostate | 12 |
| | Iliac fossa | 1 |
| | Lung | 1 |
| | Endometrium | 1 |
| | Rectum | 1 |
| BST | Bone | 7 |
| | Soft tissue | 1 |
| | Lymph node | 1 |
| | Skin | 1 |
| | Lung | 1 |

HN: Head and neck, BR: Breast, GI: Gastrointestinal, TH: Thoracic pathology, BST: Bone and soft tissue, GY: Gynecologic pathology, GU: Genitourinary, GE: Gastroesophageal

Table 5.

Subspecialties and distribution of individual parts by diagnostic category

| Subspecialty | Diagnostic category | Total parts |
|---|---|---|
| HN | Negative for malignancy | 22 |
| | Reactive lymphoid tissue | 2 |
| | Inflammation including granulomatous type | 15 |
| | Benign | 5 |
| | Dysplasia | 10 |
| | Atypical squamous proliferation | 8 |
| | Suspicious for carcinoma | 6 |
| | Carcinoma | 138 |
| | Malignancy/metastasis | 11 |
| | Necrosis only/inadequate/nonrepresentative biopsy | 3 |
| BR | Negative for malignancy | 3 |
| | Inflammation | 3 |
| | Benign | 9 |
| | DCIS | 1 |
| | Invasive breast carcinoma | 53 |
| | Malignancy/metastasis | 21 |
| | Necrosis | 1 |
| GI | Negative for malignancy | 6 |
| | Inflammation including granulomatous type | 4 |
| | Benign | 3 |
| | Suspicious for adenocarcinoma | 1 |
| | Malignancy/metastasis | 101 |
| | Inadequate | 1 |
| GY | Benign | 3 |
| | CIN/CIS | 2 |
| | Suspicious for metastasis | 1 |
| | Metastasis | 19 |
| | Carcinoma | 25 |
| | Inflammation | 3 |
| BST | Granuloma | 1 |
| | Sarcoma | 3 |
| | Metastasis | 5 |
| | Plasma cell neoplasm | 2 |
| TH | Negative for malignancy | 3 |
| | Reactive | 1 |
| | Granuloma | 5 |
| | Carcinoma | 37 |
| | Malignancy/metastasis | 31 |
| GU | Negative for malignancy | 11 |
| | Necrosis | 1 |
| | Inflammation | 2 |
| | Carcinoma | 25 |
| | Atypical small acinar proliferation | 1 |
| | Germ cell tumor | 1 |
| | Benign | 6 |

HN: Head and neck, BR: Breast, GI: Gastrointestinal, TH: Thoracic pathology, BST: Bone and soft tissue, GY: Gynecologic pathology, GU: Genitourinary, DCIS: Ductal carcinoma in-situ, CIN/CIS: Cervical intraepithelial neoplasia/Carcinoma in-situ

Fourteen participating pathologists preferred digital sign-out from home during the COVID-19 pandemic, both to comply with social distancing norms and because of the travel constraints on attending to a limited workload. All pathologists used laptops for digital sign-out from home, with monitor sizes ranging from 10 to 15.6” and screen resolutions from 1366 × 768 to 2736 × 1824. The displays used by the pathologists had a color depth of 8 bits per color in an sRGB color space. The processors were Intel i3/i5/i7 units running at 1.5–2.9 GHz. The RAM configuration ranged from 3 GB to 16 GB. Four pathologists used a mouse for navigation, whereas the rest used a trackpad. However, overall 10 pathologists preferred to use a mouse for navigation. Network bandwidth ranged from 4 to 80 megabits per second (Mbps) with an average download speed of 20 Mbps.
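To give a sense of scale for these bandwidths, the following sketch estimates how long it would take to move one whole slide file of the reported median size (269 MB at ×20) over the slowest, average, and fastest connections in the study. It assumes 1 MB = 8 megabits and no protocol overhead, and the function name is hypothetical. Web-based slide viewers typically stream only the image tiles in view rather than the whole file, so these numbers are an upper bound for orientation and not a measurement of the latency pathologists experienced.

```python
def full_transfer_seconds(file_size_mb: float, bandwidth_mbps: float) -> float:
    """Seconds to move a whole file, assuming 1 MB = 8 megabits and no overhead."""
    return file_size_mb * 8 / bandwidth_mbps


MEDIAN_FILE_MB = 269  # median x20 file size reported in the Results

# Slowest, average, and fastest bandwidths reported for the home workstations.
for mbps in (4, 20, 80):
    seconds = full_transfer_seconds(MEDIAN_FILE_MB, mbps)
    print(f"{mbps:>2} Mbps: about {seconds:.0f} s for a {MEDIAN_FILE_MB} MB slide")
```

Under these assumptions the same slide takes roughly twenty times longer to transfer at 4 Mbps than at 80 Mbps, which illustrates why bandwidth is a plausible contributor to the WSI latency recorded by some readers.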

Twelve pathologists preferred digital annotation and quantification for sign-out. Eight pathologists rated panning and zooming features as convenient and simple to use. Similarly, eight pathologists preferred the digital format of arrangement of cases. Five out of six pathologists preferred comparison of AOI on digital slides over that on glass slides. Nine pathologists perceived that the digital slides closely resembled the glass slides. All pathologists rated the ease of retrieval of digital slides as advantageous compared to manual retrieval of glass slides in routine workflow.

Time spent on digital sign-out included both diagnostic time taken for interpretation of case and nondiagnostic time which included modification of preliminary report and finalization of report. This time ranged from 3 to 25 min per case with a median of 5 min. Although not directly comparable since the evaluation on glass slides did not include the nondiagnostic component of time spent, the time for glass slide diagnosis ranged from 30 s to 10 min, with over 90% of cases requiring time under 4 min for interpretation. The overall average TAT for the study cases was 5 days, with an average reduction in TAT by 1 day (in comparison to cross-sectional data from previous year). The average TAT for cases with IHC was 6 days and 3 days for cases without IHC.

The 567 cases included in the study comprised paired WSI and glass reads which showed no major discordance in diagnosis. Minor discordances were recorded in seven cases (1.2%), amounting to an overall diagnostic concordance rate of 98.8% between WSI for remote primary diagnosis and glass slides. The discordances were identified in four subspecialties including HN, GU, BR, and TH. Upon blinded re-review of the discordant cases, the glass reads were identified to be concordant with the reference diagnoses. The mean difference in diagnostic accuracy between WSI and microscopy with the reference diagnosis was <4%. Clinical information required to make the diagnosis was adequate in all discordant cases. WSI latency was recorded during sign-out of case 1. Level of confidence was scored 2 (on a scale of 1–3) in cases 1 and 5 [Table 6]. Level of confidence was scored 2 in 48 other reads (46 WSI reads and 2 glass reads).
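As an illustration of how these rates sit relative to the 4% noninferiority cutoff, the sketch below computes a 95% Wilson score interval for the observed minor (7/567) and major (0/567) discordance proportions. This is not the authors' analysis, which compared WSI and glass reads against the reference diagnosis; the interval method, the function name, and the choice of z = 1.96 are assumptions made here for illustration.

```python
from math import sqrt


def wilson_interval(events: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 for about 95%)."""
    p = events / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the boundaries.
    return max(0.0, centre - half), min(1.0, centre + half)


CASES = 567        # paired WSI and glass reads analysed
MARGIN = 0.04      # noninferiority cutoff cited in the Methods

minor_lo, minor_hi = wilson_interval(7, CASES)   # minor discordances
major_lo, major_hi = wilson_interval(0, CASES)   # major discordances

print(f"Minor discordance: {7 / CASES:.1%} (95% CI {minor_lo:.1%} to {minor_hi:.1%})")
print(f"Major discordance: 0.0% (95% CI upper bound {major_hi:.1%})")
print(f"Upper bounds below {MARGIN:.0%} margin: {minor_hi < MARGIN and major_hi < MARGIN}")
```

Under these assumptions both upper bounds fall below the 4% margin, which is consistent with the conclusion stated in the text.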

Table 6.

Discordances between whole-slide imaging and glass reads

| Case | Site/diagnosis | WSI | Glass | Reference | Discordance |
|---|---|---|---|---|---|
| 1 | High-grade urothelial carcinoma of urinary bladder | Lamina propria invasion is not seen | Focal lamina propria invasion is seen | Lamina propria invasion is seen | Minor |
| 2 | Invasive breast carcinoma | HER2/neu equivocal (score 2+) by IHC | HER2/neu negative (score 1+) by IHC | HER2/neu negative (score 1+) by IHC | Minor |
| 3 | Breast | Invasive breast carcinoma Grade 2. Modified RB score 3+2+2=7 | Invasive breast carcinoma Grade 3. Modified RB score 3+3+2=8 | Invasive breast carcinoma Grade 3. Modified RB score 3+3+2=8 | Minor |
| 4 | Lung | Adenocarcinoma | Adenosquamous carcinoma | Adenosquamous carcinoma | Minor |
| 5 | Oral cavity | Atypical squamous proliferation, suspicious for squamous cell carcinoma | Squamous cell carcinoma | Squamous cell carcinoma | Minor |
| 6 | Oral cavity | Atypical squamous proliferation, suspicious for squamous cell carcinoma | Squamous cell carcinoma | Squamous cell carcinoma | Minor |
| 7 | Oral cavity | Atypical squamous proliferation, suspicious for squamous cell carcinoma | Consistent with squamous cell carcinoma | Squamous cell carcinoma | Minor |

WSI: Whole-slide imaging, IHC: Immunohistochemistry, HER2: Human epidermal growth factor receptor 2, RB: Richardson-Bloom

Despite a higher deferral rate for cell blocks (6.6%; n = 4/61, accounting for 4 of the 27 deferrals), there was absolute concordance for diagnosis in the cell blocks signed out digitally (n = 57), even in cases with scanty atypical cell clusters (n = 3), effusions with reactive mesothelial proliferation (n = 11), and in cases suggestive of metastasis from a second primary (n = 4).
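The 6.6% figure is reproduced when the denominator is taken as all cell blocks received, that is, the 57 signed out digitally plus the 4 deferred. The short check below makes that reading explicit; the assumption that these two groups make up all cell blocks in the study is ours, and the variable names are illustrative.

```python
signed_out_cell_blocks = 57  # cell blocks with concordant digital diagnoses
deferred_cell_blocks = 4     # cell blocks deferred to glass
total_deferrals = 27         # all deferrals in the study

# Assumption: every cell block was either signed out digitally or deferred.
all_cell_blocks = signed_out_cell_blocks + deferred_cell_blocks

cell_block_deferral_rate = 100 * deferred_cell_blocks / all_cell_blocks
share_of_all_deferrals = 100 * deferred_cell_blocks / total_deferrals

print(f"Cell-block deferral rate: {cell_block_deferral_rate:.1f}%")
print(f"Share of all deferrals: {share_of_all_deferrals:.1f}%")
```

On this reading, cell blocks were deferred at 6.6% against 4.5% for the study overall, while making up about 15% of all deferrals.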

Confirmation of acid-fast bacilli morphology detected in a single case on WSI required evaluation of glass slide by an on-site attending pathologist before digital sign-out. There was an overall concordance between WSI and glass reads for the presence or absence of microorganisms in all cases (n = 11). Evaluation of Congo red stain for amyloid in a single case required microscopy under polarized light which was negative.

DISCUSSION

The switch to a digital workflow including pathology review of cases from home was necessitated to accommodate preventative measures such as social distancing while imparting maximum flexibility to the reporting pathologists during the lockdown. The processes of verifying the performance of a DP system and validating it for an intended purpose have been amply discussed in published literature. The existing literature including large preclinical validation studies substantiates the noninferiority of WSI technology for rendering primary diagnosis.[1,2,3,6,7] With growing interest in the use of DP for routine diagnostics, a number of laboratories have reported successful validation and incorporated WSI for primary diagnosis in at least a significant proportion of cases.[7,24,25,26,27,28,29]

The utility of telepathology in the pre-COVID era has been largely restricted to intraoperative FS diagnosis, remote cytology evaluation, and inter-pathologist consultation for second opinions.[30,31,32,33,34,35,36,37,38] A few previous guidelines for telepathology and reporting from home have addressed general issues relevant when DP is used to report remotely from home, including security, standards, liability and areas of governance, confidentiality, record keeping, result transmission, and audit. However, they do not address validation of DP for diagnosis from a remote site.[39,40] A feasibility study for distance reporting in pathology by Vodovnik et al. concluded that DP is an efficient and reliable means of providing remote pathology services in surgical pathology and cytology. The study included 950 cases and evaluated network speeds, operational workflows, workstation displays, and TAT.[41] Vodovnik et al. also validated diagnostic concordance between WSI and microscopy for primary diagnosis (retrospective study) and a complete remote DP service including remote reporting, fine-needle aspiration cytology clinics, FSs, diagnostic sessions with residents, and autopsy services.[28,42]

In light of the COVID-19 public health emergency, the temporary waiver of CMS regulations along with FDA nonbinding recommendations has enabled the very relevant digital switch in surgical pathology, with the deployment of DP systems where such devices do not create an undue risk.[17] The recent College of American Pathologists (CAP) guidance on remote sign-out addresses patient privacy, medicolegal considerations, diagnostic procedures that can be reported from a remote site, validation before engaging in remote sign-out, and the need for SOPs in individual laboratories. The CAP maintains recommendations on validation of a DP system for diagnostic use. Verification of a smaller number of previously signed out cases from the pathologists' individual homes was suggested as an alternative to complete validation before engaging in live sign-out. The guidance addressed the need to adopt practical alternatives to the recommended 2-week washout period and suggested that laboratories consider washout periods of any duration that may be deemed more practical while attempting to control for recall bias.[18,19] However, the guidance from the Digital Pathology Committee of the Royal College of Pathologists recognized that pathologists, even with limited DP experience, may be able to confidently report some or many cases digitally without undertaking a formal 1–2-month validation, and may do so using a risk mitigation approach.[15]

India has a nascent policy on telemedicine, and the updated guidance in response to the COVID-19 health emergency does not cover telepathology for routine diagnostic services. Validation for intended use is critical to ensure that images and data transmitted over an integrated digital platform are adequate for rendering a correct opinion. Remote reporting of digital slides might be challenging for pathologists who have not validated DP for primary diagnosis. A few of our participating pathologists had prior experience with validation of WSI systems for primary diagnosis, which set the stage for carrying out primary diagnostic services remotely.[20,21] Since the guidelines across different countries were still evolving when we switched to remote/home diagnostic services using DP, we undertook orientation and training sessions to make the pathologists more comfortable with using DP remotely. We adopted a risk mitigation approach for the remote digital workflow which included preview of cases by trainee pathologists, case-based risk assessment while making a digital diagnosis, requesting digital slides of higher resolution (rescan at ×40) wherever required, deferrals for glass slide evaluation, and second opinions from colleagues working either remotely or on-site. Data security was layered and included timed-out password-protected logins, firewall-enabled servers, and data encryption. It can be strengthened further using multifactor authentication, a virtual private network (VPN), etc., according to local regulatory requirements. We employed a digital platform requiring separate access to each of the EMR, SRS, and IMS data systems since they were not yet integrated at the time of this study. The manual verification of patient ID before remote sign-out was of utmost importance but could be carried out easily because of the reduced workload.
A fully integrated digital platform would, however, be the most ideal way to sustain a remote sign-out practice, especially on single-monitor setups.

This study constitutes a prospective validation of digital workflow in a real-time environment with live digital sign-out from a remote site and substantiates the diagnostic equivalence of WSI to microscopy. Literature documents true intra-observer variability in surgical pathology with error rates or major discrepancies ranging from 1.5% to 6%.[22,43,44] Based on an a priori power study for noninferiority of WSI review at a 4% margin and a 0.05 significance level, Bauer et al. calculated that 450 cases (225 per group) would need to be reviewed to establish noninferiority. In that study, 2 pathologists reviewed 607 cases and recorded major and minor discrepancy rates of 1.65% and 2.31%, respectively, for WSI and 0.99% and 4.93% for microscopic slide review.[3] We analyzed 567 cases for diagnostic accuracy and recorded no major discordance and a 1.2% minor discrepancy rate with WSI. Diagnoses on glass slide review were concordant with the reference diagnoses in discrepant cases. Extrapolating from the a priori power calculation by Bauer et al., the present study reinforces the noninferiority of WSI for primary diagnosis even in a remote setting. Vodovnik et al. evaluated operational feasibility for distance reporting in surgical pathology on 950 cases but did not include diagnostic concordance in their analysis.[41] Recently, Hanna et al. prospectively validated a DP system and complete workflow from sample accessioning to final reporting from a CLIA-licensed facility in response to the COVID-19 health emergency. The study evaluated 108 cases (254 parts) and included biopsies, resection specimens, and referral material with a 2-day washout period between WSI and glass review, to comply with the laboratory TAT.[45] In comparison to the above-referenced studies, the present study included an adequate number of cases to establish diagnostic concordance and noninferiority of WSI for remote reporting in surgical pathology for primary diagnosis.
The immediate recall of prior diagnostic impressions on WSI was adequately addressed by including a 2-week washout period.
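
The sample-size figure quoted above from Bauer et al. can be approximated with the standard normal-approximation formula for a noninferiority comparison of two proportions. The sketch below is illustrative only: the 4% margin and the 0.05 significance level come from the text, whereas the 3% baseline discrepancy rate, the 80% power, the one-sided test, and the function name are assumptions introduced here and are not details reported in this article.

```python
import math
from statistics import NormalDist


def noninferiority_cases_per_group(baseline_rate, margin, alpha=0.05, power=0.80):
    """Approximate cases per group for a noninferiority test of two proportions.

    Uses the normal approximation with a one-sided significance level and
    assumes both review modalities share the same true discrepancy rate.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha)  # one-sided critical value
    z_beta = NormalDist().inv_cdf(power)       # quantile for the desired power
    variance = 2 * baseline_rate * (1 - baseline_rate)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / margin ** 2)


# Assumed inputs: 3% baseline discrepancy rate and 80% power (not stated in the text).
per_group = noninferiority_cases_per_group(baseline_rate=0.03, margin=0.04)
print(per_group, 2 * per_group)
```

Under these assumed inputs, the sketch gives 225 cases per group (450 in total), which agrees with the figure cited above; different assumptions about the baseline rate or power would change the result.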

Deferral rates of 0.21%–2.3% have been recorded for primary diagnosis in surgical pathology using WSI.[2,12,41] The prospective model of the present study, which included live digital sign-out, accounts for a higher deferral rate. However, there were no changes in the preliminary impressions in 63% (n = 17) of the deferred cases. The remaining ten deferrals were justified either by a change in the initial impression made on WSI (n = 2) or by the need for evaluation on glass slides for further diagnostic workup, mostly owing to the complexity of the cases (n = 8).

The absolute major concordance in our study testifies to the safety of remote diagnosis in a risk-mitigated environment. The minor discordances were mainly attributable to intra-observer variability arising from either interpretative diagnostic criteria or pathologist error. The discordance in the transurethral resection of bladder tumor (TURBT) specimen, which showed high-grade urothelial carcinoma and in which focal lamina propria invasion was missed, was probably attributable at least in part to the diagnostic modality, through an error in screening compounded by WSI latency. Effective navigation tools play an important role, especially in screening fragmented tissue specimens such as TURBT and curettage samples. Slower network speed, large image size, and higher screen resolution are important determinants of WSI latency.

Digital workstations for routine diagnostics are commonly associated with calibrated high-quality displays, including medical-grade monitors with high contrast, brightness, and resolution, and operate over fast network connections. In contrast, home computers and laptops typically have lower resolution displays, on which assessment of pathological features may be perceived as challenging. There is a paucity of evidence to support minimum display requirements for digital reporting at remote sites. At this stage, it is important to note that equivalence of display only translates to color accuracy and is not the sole factor accounting for diagnostic accuracy. In a previous study evaluating remote reporting from home, Vodovnik et al. displayed the digital images of 950 routine cases on a 14” (1600 × 900) laptop or, whenever available, a 3840 × 2160 LED television screen.[41] In the recent study by Hanna et al., the remote reader sessions used single-screen or dual-monitor displays ranging in size from 13.3 to 42” and in resolution from 1280 × 800 to 3840 × 2160 pixels.[45] Pathologists in our study used laptops with screen sizes ranging from 10 to 15.6” and resolutions from 1366 × 768 to 2736 × 1824. The scale of image display on smaller screens limited easy navigation during digital interpretation. Currently, in the absence of sufficient data to suggest minimum display requirements, a Point-of-Use Quality Assurance Tool has been developed for remote assessment of the compatibility of viewing conditions for review of digital slides.[46] A network speed of >20 Mbps has been found to be compatible with consumer-grade monitors with lower resolution displays, typically ranging between 2 and 4 megapixels, for remote digital sign-out.[15,41,45] In our study, issues with remote sign-out were associated with network speeds below 10 Mbps. No significant association could be established between other hardware specifications and diagnostic concordance. Better navigation tools, however, contributed to easier adaptability to remote digital sign-out.
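
To relate the display resolutions quoted above to the 2 to 4 megapixel range, pixel dimensions can be converted to megapixels directly. The snippet below is a simple arithmetic sketch; the resolutions are those reported in the text, while the helper name and the labels are illustrative.

```python
def megapixels(width, height):
    """Return the pixel count of a display in megapixels (millions of pixels)."""
    return width * height / 1_000_000


# Resolutions quoted in the discussion; the labels are descriptive only.
displays = {
    "Vodovnik et al., laptop": (1600, 900),
    "Hanna et al., lowest": (1280, 800),
    "Hanna et al., highest": (3840, 2160),
    "Present study, lowest": (1366, 768),
    "Present study, highest": (2736, 1824),
}

for label, (width, height) in displays.items():
    print(f"{label}: {width} x {height} = {megapixels(width, height):.2f} MP")
```

On this arithmetic, the laptops used in the present study span roughly 1.05 to 4.99 megapixels, so the lowest-resolution screens fall below the 2 to 4 megapixel range cited above.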

Although the pathologists took longer for WSI evaluation and digital sign-out than for glass slide evaluation, the digital workflow eliminated the intermediate steps dependent on secretarial staff for report preparation before sign-out and helped to achieve an overall gain in efficiency, quantifiable as reduced TAT. The real-time digital workflow allowed the flexibility to adjust time for same-day sign-out from a remote site, as opposed to sign-out from the hospital premises at a later time to accommodate staggered workdays during the pandemic. The efficiency of the digital workflow in rendering undisrupted diagnostic services was particularly pronounced during the COVID-19 public health emergency.

Our study acknowledges a few limitations. Cases from the hematopathology and neuropathology subspecialties were not included, at the discretion of the respective specialists. Biopsies were the only specimen type included; they were prioritized in these times of exceptional service pressure because the primary diagnosis dictates further clinical management. Inclusion of resection specimens for routine remote reporting would further test the versatility of DP. The data security measures employed can be further enhanced using a VPN and multifactor authentication. DP for primary diagnosis remains challenged in instances requiring polarized microscopy. Similarly, digital confirmation of microorganisms requires higher resolution digital images or scanning at multiple z-levels.[47]

CONCLUSION

DP helped us face the COVID-19 pandemic, a time when the conventional rulebook was replaced by measures dealing exclusively with the emergency. Careful re-assessment of existing infrastructure and need-based repurposing helped in the quick adoption of DP and efficient management of our laboratory workflow. This study also validates a DP system and digital workflow for primary diagnosis from a remote site, with no major discordance, and demonstrates the efficiency of the workflow. It reinforces the noninferiority of WSI when compared with microscopy even in a remote setting and provides evidence for safe and efficient diagnostic services when carried out in a risk-mitigated environment. The study adds value to the growing body of validation data on remote reporting, specifically in these unprecedented times.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

Acknowledgments

The authors would like to acknowledge Mr. Pratik Rambade, Mr. Anil Singh, Mr. Pravin Valvi, and Mr. Manoj Chavan for the technical support. We also thank Ms. Anuradha Dihingia and Mr. Sameer Shaikh (Roche Diagnostics, India) for their valuable support.

Footnotes

Available FREE in open access from: http://www.jpathinformatics.org/text.asp?2021/12/1/3/306286

REFERENCES

- 1. Mukhopadhyay S, Feldman MD, Abels E, Ashfaq R, Beltaifa S, Cacciabeve NG, et al. Whole slide imaging versus microscopy for primary diagnosis in surgical pathology: A multicenter blinded randomized noninferiority study of 1992 cases (Pivotal study). Am J Surg Pathol. 2018;42:39–52. doi: 10.1097/PAS.0000000000000948.

- 2. Borowsky AD, Glassy EF, Wallace WD, Kallichanda NS, Behling CA, Miller DV, et al. Digital whole slide imaging compared with light microscopy for primary diagnosis in surgical pathology: A multicenter, double-blinded, randomized study of 2045 cases. Arch Pathol Lab Med. 2020;144:1245–53. doi: 10.5858/arpa.2019-0569-OA.

- 3. Bauer TW, Schoenfield L, Slaw RJ, Yerian L, Sun Z, Henricks WH. Validation of whole slide imaging for primary diagnosis in surgical pathology. Arch Pathol Lab Med. 2013;137:518–24. doi: 10.5858/arpa.2011-0678-OA.

- 4. Campbell WS, Lele SM, West WW, Lazenby AJ, Smith LM, Hinrichs SH. Concordance between whole-slide imaging and light microscopy for routine surgical pathology. Hum Pathol. 2012;43:1739–44. doi: 10.1016/j.humpath.2011.12.023.

- 5. Fónyad L, Krenács T, Nagy P, Zalatnai A, Csomor J, Sápi Z, et al. Validation of diagnostic accuracy using digital slides in routine histopathology. Diagn Pathol. 2012;7:35. doi: 10.1186/1746-1596-7-35.

- 6. Goacher E, Randell R, Williams B, Treanor D. The diagnostic concordance of whole slide imaging and light microscopy: A systematic review. Arch Pathol Lab Med. 2017;141:151–61. doi: 10.5858/arpa.2016-0025-RA.

- 7. Snead DR, Tsang YW, Meskiri A, Kimani PK, Crossman R, Rajpoot NM, et al. Validation of digital pathology imaging for primary histopathological diagnosis. Histopathology. 2016;68:1063–72. doi: 10.1111/his.12879.

- 8. Al-Janabi S, Huisman A, Vink A, Leguit RJ, Offerhaus GJ, Ten Kate FJ, et al. Whole slide images for primary diagnostics in dermatopathology: A feasibility study. J Clin Pathol. 2012;65:152–8. doi: 10.1136/jclinpath-2011-200277.

- 9. Al-Janabi S, Huisman A, Vink A, Leguit RJ, Offerhaus GJ, Ten Kate FJ, et al. Whole slide images for primary diagnostics of gastrointestinal tract pathology: A feasibility study. Hum Pathol. 2012;43:702–7. doi: 10.1016/j.humpath.2011.06.017.

- 10. Al-Janabi S, Huisman A, Nikkels PG, Ten Kate FJ, Van Diest PJ. Whole slide images for primary diagnostics of paediatric pathology specimens: A feasibility study. J Clin Pathol. 2013;66:218–23. doi: 10.1136/jclinpath-2012-201104.

- 11. Al-Janabi S, Huisman A, Jonges GN, Ten Kate FJ, Goldschmeding R, van Diest PJ. Whole slide images for primary diagnostics of urinary system pathology: A feasibility study. J Ren Inj Prev. 2014;3:91. doi: 10.12861/jrip.2014.26.

- 12. Williams BJ, Hanby A, Millican-Slater R, Nijhawan A, Verghese E, Treanor D. Digital pathology for the primary diagnosis of breast histopathological specimens: An innovative validation and concordance study on digital pathology validation and training. Histopathology. 2018;72:662–71. doi: 10.1111/his.13403.

- 13. Evans AJ, Salama ME, Henricks WH, Pantanowitz L. Implementation of whole slide imaging for clinical purposes: Issues to consider from the perspective of early adopters. Arch Pathol Lab Med. 2017;141:944–59. doi: 10.5858/arpa.2016-0074-OA.

- 14. Pramesh C, Badwe RA. Cancer management in India during Covid-19. N Engl J Med. 2020;382:e61. doi: 10.1056/NEJMc2011595.

- 15. Williams BJ, Brettle D, Aslam M, Barrett P, Bryson G, Cross S, et al. Guidance for remote reporting of digital pathology slides during periods of exceptional service pressure: An emergency response from the UK Royal College of Pathologists. J Pathol Inform. 2020;11:12. doi: 10.4103/jpi.jpi_23_20.

- 16. Clinical Laboratory Improvement Amendments (CLIA) Laboratory Guidance During COVID-19 Public Health Emergency. 2020. [Last accessed on 2020 Aug 11]. Available from: https://www.cms.gov/medicareprovider-enrollment-and-certific ationsurveycertificationgeninfopolicy-and-memos-states-and/clinicallaboratory-improvement-amendments-clia-laboratory-guidance-duringcovid-19-public-health .

- 17. Enforcement Policy for Remote Digital Pathology Devices During the Coronavirus Disease 2019 (COVID-19) Public Health Emergency. Guidance for Industry, Clinical Laboratories, Healthcare Facilities, Pathologists, and Food and Drug Administration Staff. 2020. [Last accessed on 2020 Aug 11]. Available from: https://www.fda.gov/regulatory-information/search-fdaguidancedocuments/enforcement-policy-remote-digital-pathologydevices-during-coronavirus-disease-2019-covid-19-public .

- 18. College of American Pathologists. COVID-19-Remote Sign-Out Guidance. 2020. [Last accessed on 2020 Aug 11]. Available from: https://www.documents.cap.org/documents/COVID19-Remote-Sign-Out-Guidance-vFNL.pdf .

- 19. College of American Pathologists. Remote Sign-Out FAQs. 2020. [Last accessed on 2020 Aug 11]. Available from: https://www.documents.cap.org/documents/Remote-Sign-Out-FAQs-FINAL.pdf .

- 20. Rao V, Subramanian P, Sali A, Menon S, Desai S. Validation of whole slide imaging for primary surgical pathology diagnosis of prostate biopsies. Indian J Pathol Microbiol. 2021. doi: 10.4103/IJPM.IJPM_855_19.

- 21. Rajaganesan S, Kumar R, Rao V, Pai T, Mittal N, Shahay A, et al. Performance assessment of various digital pathology whole slide imaging systems in real-time clinical setting. J Pathol Inform. 2020;11:1.

- 22. Raab SS, Nakhleh RE, Ruby SG. Patient safety in anatomic pathology: Measuring discrepancy frequencies and causes. Arch Pathol Lab Med. 2005;129:459–66. doi: 10.5858/2005-129-459-PSIAPM.

- 23. Williams BJ, DaCosta P, Goacher E, Treanor D. A systematic analysis of discordant diagnoses in digital pathology compared with light microscopy. Arch Pathol Lab Med. 2017;141:1712–8. doi: 10.5858/arpa.2016-0494-OA.

- 24. Stathonikos N, Veta M, Huisman A, van Diest PJ. Going fully digital: Perspective of a Dutch academic pathology lab. J Pathol Inform. 2013;4:15. doi: 10.4103/2153-3539.114206.

- 25. Stathonikos N, Nguyen TQ, Spoto CP, Verdaasdonk MA, van Diest PJ. Being fully digital: Perspective of a Dutch academic pathology laboratory. Histopathology. 2019;75:621–35. doi: 10.1111/his.13953.

- 26. Thorstenson S, Molin J, Lundström C. Implementation of large-scale routine diagnostics using whole slide imaging in Sweden: Digital pathology experiences 2006-2013. J Pathol Inform. 2014;5:14. doi: 10.4103/2153-3539.129452.

- 27. Fraggetta F, Garozzo S, Zannoni GF, Pantanowitz L, Rossi ED. Routine digital pathology workflow: The Catania experience. J Pathol Inform. 2017;8:51. doi: 10.4103/jpi.jpi_58_17.

- 28. Vodovnik A, Aghdam MR. Complete routine remote digital pathology services. J Pathol Inform. 2018;9:36. doi: 10.4103/jpi.jpi_34_18.

- 29. Retamero JA, Aneiros-Fernandez J, del Moral RG. Complete digital pathology for routine histopathology diagnosis in a multicenter hospital network. Arch Pathol Lab Med. 2020;144:221–8. doi: 10.5858/arpa.2018-0541-OA.

- 30. Pantanowitz L, Wiley CA, Demetris A, Lesniak A, Ahmed I, Cable W, et al. Experience with multimodality telepathology at the University of Pittsburgh Medical Center. J Pathol Inform. 2012;3:45. doi: 10.4103/2153-3539.104907.

- 31. Thrall MJ, Rivera AL, Takei H, Powell SZ. Validation of a novel robotic telepathology platform for neuropathology intraoperative touch preparations. J Pathol Inform. 2014;5:21. doi: 10.4103/2153-3539.137642.

- 32. Wilbur DC, Madi K, Colvin RB, Duncan LM, Faquin WC, Ferry JA, et al. Whole-slide imaging digital pathology as a platform for teleconsultation: A pilot study using paired subspecialist correlations. Arch Pathol Lab Med. 2009;133:1949–53. doi: 10.1043/1543-2165-133.12.1949.

- 33. Sirintrapun SJ, Rudomina D, Mazzella A, Feratovic R, Alago W, Siegelbaum R, et al. Robotic telecytology for remote cytologic evaluation without an on-site cytotechnologist or cytopathologist: A tale of implementation and review of constraints. J Pathol Inform. 2017;8:32. doi: 10.4103/jpi.jpi_26_17.

- 34. Chong T, Palma-Diaz MF, Fisher C, Gui D, Ostrzega NL, Sempa G, et al. The California telepathology service: UCLA's experience in deploying a regional digital pathology subspecialty consultation network. J Pathol Inform. 2019;10:31. doi: 10.4103/jpi.jpi_22_19.

- 35. Chen J, Jiao Y, Lu C, Zhou J, Zhang Z, Zhou C. A nationwide telepathology consultation and quality control program in China: Implementation and result analysis. Diagn Pathol. 2014;9(Suppl 1):S2. doi: 10.1186/1746-1596-9-S1-S2.

- 36. Têtu B, Perron É, Louahlia S, Paré G, Trudel MC, Meyer J. The Eastern Québec telepathology network: A three-year experience of clinical diagnostic services. Diagn Pathol. 2014;9(Suppl 1):S1. doi: 10.1186/1746-1596-9-S1-S1.

- 37. Mpunga T, Hedt-Gauthier BL, Tapela N, Nshimiyimana I, Muvugabigwi G, Pritchett N, et al. Implementation and validation of telepathology triage at cancer referral center in rural Rwanda. J Glob Oncol. 2016;2:76–82. doi: 10.1200/JGO.2015.002162.

- 38. Evans AJ, Chetty R, Clarke BA, Croul S, Ghazarian DM, Kiehl TR, et al. Primary frozen section diagnosis by robotic microscopy and virtual slide telepathology: The University Health Network experience. Semin Diagn Pathol. 2010;27:160–6. doi: 10.1053/j.semdp.2009.09.006.

- 39. Rashbass JF. Telepathology: Guidance from the Royal College of Pathologists. London, UK: The Royal College of Pathologists; 2013.

- 40. Lowe JT. Guidance for Cellular Pathologists on Reporting at Home. 3rd ed. London, UK: The Royal College of Pathologists; 2014.

- 41. Vodovnik A. Distance reporting in digital pathology: A study on 950 cases. J Pathol Inform. 2015;6:18. doi: 10.4103/2153-3539.156168.

- 42. Vodovnik A, Aghdam MR, Espedal DG. Remote autopsy services: A feasibility study on nine cases. J Telemed Telecare. 2018;24:460–4. doi: 10.1177/1357633X17708947.

- 43. Tsung JS. Institutional pathology consultation. Am J Surg Pathol. 2004;28:399–402. doi: 10.1097/00000478-200403000-00015.

- 44. Frable WJ. Surgical pathology second reviews, institutional reviews, audits, and correlations: What's out there. Error or diagnostic variation? Arch Pathol Lab Med. 2006;130:620–5. doi: 10.5858/2006-130-620-SPRIRA.

- 45. Hanna MG, Reuter VE, Ardon O, Kim D, Sirintrapun SJ, Schüffler PJ, et al. Validation of a digital pathology system including remote review during the COVID-19 pandemic. Mod Pathol. 2020;33:2115–27. doi: 10.1038/s41379-020-0601-5.

- 46. Wright AI, Clarke EL, Dunn CM, Williams BJ, Treanor DE, Brettle DS. A point-of-use quality assurance tool for digital pathology remote working. J Pathol Inform. 2020;11:17. doi: 10.4103/jpi.jpi_25_20.

- 47. Rhoads DD, Habib-Bein NF, Hariri RS, Hartman DJ, Monaco SE, Lesniak A, et al. Comparison of the diagnostic utility of digital pathology systems for telemicrobiology. J Pathol Inform. 2016;7:10. doi: 10.4103/2153-3539.177687.