Abstract

In many problems, a sensible estimator of a possibly multivariate monotone function may fail to be monotone. We study the correction of such an estimator obtained via projection onto the space of functions monotone over a finite grid in the domain. We demonstrate that this corrected estimator has no worse supremal estimation error than the initial estimator, and that analogously corrected confidence bands contain the true function whenever the initial bands do, at no loss to band width. Additionally, we demonstrate that the corrected estimator is asymptotically equivalent to the initial estimator if the initial estimator satisfies a stochastic equicontinuity condition and the true function is Lipschitz and strictly monotone. We provide simple sufficient conditions in the special case that the initial estimator is asymptotically linear, and illustrate the use of these results for estimation of a G-computed distribution function. Our stochastic equicontinuity condition is weaker than standard uniform stochastic equicontinuity, which has been required for alternative correction procedures. This allows us to apply our results to the bivariate correction of the local linear estimator of a conditional distribution function known to be monotone in its conditioning argument. Our experiments suggest that the projection step can yield significant practical improvements.

MSC2020 subject classifications: Primary 62G20, secondary 60G15

Keywords: Asymptotic linearity, confidence band, kernel smoothing, projection, shape constraint, stochastic equicontinuity

1. Introduction

1.1. Background

In many scientific problems, the parameter of interest is a component-wise monotone function. In practice, an estimator of this function may have several desirable statistical properties, yet fail to be monotone. This often occurs when the estimator is obtained through the pointwise application of a statistical procedure over the domain of the function. For instance, we may be interested in estimating a conditional cumulative distribution function θ0, defined pointwise as θ0(a, y) = P0(Y ≤ y ∣ A = a) over its domain of pairs (a, y). Here, Y may represent an outcome and A an exposure. The map y ↦ θ0(a, y) is necessarily monotone for each fixed a. In some scientific contexts, it may be known that a ↦ θ0(a, y) is also monotone for each y, in which case θ0 is a bivariate component-wise monotone function. An estimator of θ0 can be constructed by estimating the regression function (a, y) ↦ $E_{P_0}[I(Y \le y) \mid A = a]$ for each (a, y) on a finite grid using kernel smoothing, and performing suitable interpolation elsewhere. For some types of kernel smoothing, including the Nadaraya-Watson estimator, the resulting estimator is necessarily monotone as a function of y for each value of a, but not necessarily monotone as a function of a for each value of y. For other types of kernel smoothing, including the local linear estimator, which often has smaller asymptotic bias than the Nadaraya-Watson estimator, the resulting estimator need not be monotone in either component.
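To make this construction concrete, the following minimal sketch computes a local linear estimate of (a, y) ↦ E[I(Y ≤ y) ∣ A = a] on a grid and counts the adjacent grid pairs at which it decreases in each argument. It is an illustration under stated assumptions only: the Gaussian kernel, the bandwidth h = 0.1, the simulated data and the helper names `local_linear_weights` and `local_linear_cond_cdf` are hypothetical choices, not those used elsewhere in this article.

```python
import numpy as np

def local_linear_weights(a_obs, a, h):
    """Local linear smoothing weights at the point a (Gaussian kernel, bandwidth h)."""
    u = a_obs - a
    k = np.exp(-0.5 * (u / h) ** 2)
    s0, s1, s2 = k.sum(), (k * u).sum(), (k * u ** 2).sum()
    # The weights sum to one and satisfy sum_i w_i (a_i - a) = 0, but some may be negative.
    return k * (s2 - u * s1) / (s0 * s2 - s1 ** 2)

def local_linear_cond_cdf(a_obs, y_obs, a_grid, y_grid, h):
    """Local linear estimates of P(Y <= y | A = a); rows index a_grid, columns index y_grid."""
    ind = (y_obs[:, None] <= y_grid[None, :]).astype(float)  # I(Y_i <= y) for each grid y
    return np.array([local_linear_weights(a_obs, a, h) @ ind for a in a_grid])

rng = np.random.default_rng(0)
a_obs = rng.uniform(0.0, 1.0, 300)
y_obs = -a_obs + rng.normal(0.0, 0.5, 300)  # so P(Y <= y | A = a) is non-decreasing in a
a_grid = np.linspace(0.0, 1.0, 21)
y_grid = np.linspace(-2.0, 1.0, 31)
est = local_linear_cond_cdf(a_obs, y_obs, a_grid, y_grid, h=0.1)

# Number of adjacent grid pairs at which the estimate decreases in each argument.
violations_in_a = int((np.diff(est, axis=0) < 0).sum())
violations_in_y = int((np.diff(est, axis=1) < 0).sum())
print(violations_in_a, violations_in_y)
```

Because the local linear weights can be negative, the estimate need not be monotone in y either, even though each column of the indicator matrix is monotone in y.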

Whenever the function of interest is component-wise monotone, failure of an estimator to itself be monotone can be problematic. This is most apparent if the monotonicity constraint is probabilistic in nature, that is, if the parameter mapping is monotone under all possible probability distributions. This is the case, for instance, if θ0 is a distribution function. In such settings, returning a function estimate that fails to be monotone is nonsensical, like reporting a probability estimate outside the interval [0, 1]. However, even if the monotonicity constraint is based on scientific knowledge rather than probabilistic constraints, failure of an estimator to be monotone can be an issue. For example, if the parameter of interest represents average height or weight among children as a function of age, scientific collaborators would likely be unsatisfied if presented with an estimated curve that was not monotone. Finally, as we will see, there are often finite-sample performance benefits to ensuring that the monotonicity constraint is respected.

Whenever this phenomenon occurs, it is natural to seek an estimator that respects the monotonicity constraint but nevertheless remains close to the initial estimator, which may otherwise have good statistical properties. A monotone estimator can be naturally constructed by projecting the initial estimator onto the space of monotone functions with respect to some norm. A common choice is the L2-norm, which amounts to using multivariate isotonic regression to correct the initial estimator.

1.2. Contribution and organization of the article

In this article, we discuss correcting an initial estimator of a multivariate monotone function by computing the isotonic regression of the estimator over a finite grid in the domain, and interpolating between grid points. We also consider correcting an initial confidence band by using the same procedure applied to the upper and lower limits of the band. We provide three general results regarding this simple procedure.

- Building on the results of Robertson, Wright and Dykstra (1988) and Chernozhukov, Fernández-Val and Galichon (2009), we demonstrate that the corrected estimator is at least as good as the initial estimator, meaning:

- its uniform error over the grid used in defining the projection is less than or equal to that of the initial estimator for every sample;

- its uniform error over the entire domain is less than or equal to that of the initial estimator asymptotically;

- the corrected confidence band contains the true function on the projection grid whenever the initial band does, at no cost in terms of average or uniform band width.

- We provide high-level sufficient conditions under which the uniform difference between the initial and corrected estimators is $o_P(r_n^{-1})$ for a generic sequence $r_n \to \infty$.

- We provide simpler lower-level sufficient conditions in two special cases:

- when the initial estimator is uniformly asymptotically linear, in which case the appropriate rate is $r_n = n^{1/2}$;

- when the initial estimator is kernel-smoothed with bandwidth $h_n$, in which case the appropriate rate is $r_n = (n h_n)^{1/2}$ for univariate kernel smoothing.

We apply our theoretical results to two sets of examples: nonparametric efficient estimation of a G-computed distribution function for a binary exposure, and local linear estimation of a conditional distribution function with a continuous exposure.

Other authors have considered the correction of an initial estimator using isotonic regression. To name a few, Mukarjee and Stern (1994) used a projection-like procedure applied to a kernel smoothing estimator of a regression function, whereas Patra and Sen (2016) used the projection procedure applied to a univariate cumulative distribution function in the context of a mixture model. These articles addressed the properties of the projection procedure in their specific applications. In contrast, we provide general results that are broadly applicable.

1.3. Alternative projection procedures

The projection approach is not the only possible correction procedure. Dette, Neumeyer and Pilz (2006), Chernozhukov, Fernández-Val and Galichon (2009), and Chernozhukov, Fernández-Val and Galichon (2010) studied a correction based on monotone rearrangements. However, monotone rearrangements do not generalize to the multivariate setting as naturally as projections — for example, Chernozhukov, Fernández-Val and Galichon (2009) proposed averaging a variety of possible multivariate monotone rearrangements to obtain a final monotone estimator. In contrast, the L2 projection of an initial estimator onto the space of component-wise monotone functions is uniquely defined, even in the context of multivariate functions.

Daouia and Park (2013) proposed an alternative correction procedure that consists of taking a convex combination of upper and lower monotone envelope functions, and they demonstrated conditions under which their estimator is asymptotically equivalent in supremum norm to the initial estimator. There are several differences between our contributions and those of Daouia and Park (2013). For instance, Daouia and Park (2013) did not study correction of confidence bands, which we consider in Section 2.3, or the important special case of asymptotically linear estimators, which we consider in Section 3.1. Our results in these two sections apply equally well to our correction procedure and to the correction procedure considered by Daouia and Park (2013).

Perhaps the most important theoretical contribution of our work beyond that of existing research is the weaker form of stochastic equicontinuity that we require for establishing asymptotic equivalence of the initial and projected estimators. In contrast, Daouia and Park (2013) explicitly required the usual uniform asymptotic equicontinuity, while application of the Hadamard differentiability results of Chernozhukov, Fernández-Val and Galichon (2010) requires weak convergence to a tight limit, which is stronger than uniform asymptotic equicontinuity. Our weaker condition allows us to use our general results to tackle a broader range of initial estimators, including kernel smoothed estimators, which are typically not uniformly asymptotically equicontinuous at useful rates, but nevertheless can frequently be shown to satisfy our condition. We discuss this in detail in Section 3.2. We illustrate this general contribution in Section 4.2 by studying the bivariate correction of a conditional distribution function estimated using local linear regression, which would not be possible using the stronger asymptotic equicontinuity condition. In numerical studies, we find that the projected estimator and confidence bands can offer substantial finite-sample improvements over the initial estimator and bands in this example.

2. Main results

2.1. Definitions and statistical setup

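Let $\mathcal{M}$ be a statistical model of probability measures on a probability space $(\mathcal{X}, \mathcal{B})$. Let $\theta: \mathcal{M} \to \ell^\infty(\mathcal{T})$ be a parameter of interest on $\mathcal{M}$, where $\mathcal{T} := [0, 1]^d$ and $\ell^\infty(\mathcal{T})$ is the Banach space of bounded functions from $\mathcal{T}$ to $\mathbb{R}$ equipped with supremum norm $\|\cdot\|_{\mathcal{T}}$. We have specified this particular $\mathcal{T}$ for simplicity, but the results established here apply to any bounded rectangular domain $\mathcal{T} \subset \mathbb{R}^d$. For each $P \in \mathcal{M}$, denote by θP the evaluation of θ at P and note that θP is a bounded real-valued function on $\mathcal{T}$. For any $t \in \mathcal{T}$, denote by $\theta_P(t)$ the evaluation of θP at t.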

For any vector $t \in \mathbb{R}^d$ and 1 ≤ j ≤ d, denote by $t_j$ the jth component of t. Define the partial order ≤ on $\mathbb{R}^d$ by setting t ≤ t′ if and only if $t_j \le t'_j$ for each 1 ≤ j ≤ d. A function $f: \mathcal{T} \to \mathbb{R}$ is called (component-wise) monotone non-decreasing if t ≤ t′ implies that f(t) ≤ f(t′). Denote $\|t\| = \max_{1 \le j \le d} |t_j|$ for any vector $t \in \mathbb{R}^d$. Additionally, denote by $\Theta \subset \ell^\infty(\mathcal{T})$ the convex set of bounded monotone non-decreasing functions from $\mathcal{T}$ to $\mathbb{R}$. For concreteness, we focus on non-decreasing functions, but all results established here apply equally to non-increasing functions.

Let $\mathcal{M}_0 := \{P \in \mathcal{M} : \theta_P \in \Theta\} \subseteq \mathcal{M}$ and suppose that $\mathcal{M}_0$ is nonempty. Generally, this inclusion is strict only if, rather than being implied by the rules of probability, the monotonicity constraint stems at least in part from prior scientific knowledge. Also, define Θ0 := {θ ∈ Θ : θ = θP for some $P \in \mathcal{M}_0$} ⊆ Θ. We are primarily interested in settings where Θ0 = Θ, since in this case there is no additional knowledge about θ encoded by $\mathcal{M}_0$, and in particular there is no danger of yielding a corrected estimator that is compatible with no $P \in \mathcal{M}_0$.

Suppose that observations X1, X2, …, Xn are sampled independently from an unknown distribution $P_0 \in \mathcal{M}_0$, and that we wish to estimate θ0 := θP0 based on these observations. Suppose that, for each $t \in \mathcal{T}$, we have access to an estimator θn(t) of θ0(t) based on X1, X2, …, Xn. We note that the assumption that the data are independent and identically distributed is not necessary for Theorems 1 and 2 below. For any suitable $f: \mathcal{X} \to \mathbb{R}$, we define $Pf := \int f(x)\, P(dx)$ and $\mathbb{P}_n f := n^{-1}\sum_{i=1}^n f(X_i)$, where $\mathbb{P}_n$ is the empirical distribution based on X1, X2, …, Xn.

The central premise of this article is that θn(t) may have desirable statistical properties for each t or even uniformly in t, but that θn as an element of $\ell^\infty(\mathcal{T})$ may not fall in Θ for any finite n or even with probability tending to one. Our goal is to provide a corrected estimator $\theta_n^*$ that necessarily falls in Θ, and yet retains the statistical properties of θn. A natural way to accomplish this is to define $\theta_n^*$ as the closest element of Θ to θn in some norm on $\ell^\infty(\mathcal{T})$. Ideally, we would prefer to take $\theta_n^*$ to minimize $\|\theta - \theta_n\|_{\mathcal{T}}$ over θ ∈ Θ. However, this is not tractable for two reasons. First, optimization over the entirety of $\mathcal{T}$ is an infinite-dimensional optimization problem, and is hence frequently computationally intractable. To resolve this issue, for each n, we let $\mathcal{T}_n$ be a finite rectangular lattice in $\mathcal{T}$ over which we will perform the optimization, and define $\|\theta\|_{\mathcal{T}_n} := \max_{t \in \mathcal{T}_n} |\theta(t)|$ as the supremum norm over $\mathcal{T}_n$. Second, while it is now computationally feasible to define $\theta_n^*$ as a minimizer over θ ∈ Θ of the finite-dimensional objective function $\|\theta - \theta_n\|_{\mathcal{T}_n}$, this objective function is challenging to optimize due to its non-differentiability. Instead, we define

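$$\theta_n^* := \operatorname*{argmin}_{\theta \in \Theta} \sum_{t \in \mathcal{T}_n} \left[\theta(t) - \theta_n(t)\right]^2. \tag{2.1}$$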

The squared-error objective function is smooth in its arguments. In dimension d = 1, $\theta_n^*$ thus defined is simply the isotonic regression of θn on the grid $\mathcal{T}_n$, which has a closed-form representation as the left derivative of the greatest convex minorant of the so-called cumulative sum diagram. Furthermore, since , many of our results also apply to .
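For d = 1 this closed form can be sketched directly. The sketch below assumes equally weighted grid points t1 < ⋯ < tm and uses hypothetical function names; it computes the fitted values as slopes of the greatest convex minorant of the cumulative sum diagram {(k, θn(t1) + ⋯ + θn(tk))} via a lower convex hull. For comparison, it also computes one minimizer of the supremum-norm objective, namely the midpoint of the running maximum and the reverse running minimum of θn.

```python
import numpy as np

def isotonic_via_gcm(y):
    """Equal-weight L2 isotonic (non-decreasing) regression via the greatest convex minorant."""
    y = np.asarray(y, dtype=float)
    # Cumulative sum diagram: (0, 0), (1, y_1), (2, y_1 + y_2), ...
    points = [(0, 0.0)] + [(k + 1, s) for k, s in enumerate(np.cumsum(y))]
    hull = []  # lower convex hull (Andrew's monotone chain); x-coordinates are already sorted
    for px, py in points:
        while len(hull) >= 2:
            (x0, y0), (x1, y1) = hull[-2], hull[-1]
            # Drop the last hull vertex unless it makes a strict left turn.
            if (x1 - x0) * (py - y0) - (y1 - y0) * (px - x0) <= 0:
                hull.pop()
            else:
                break
        hull.append((px, py))
    fit = np.empty_like(y)
    for (x0, y0), (x1, y1) in zip(hull[:-1], hull[1:]):
        fit[x0:x1] = (y1 - y0) / (x1 - x0)  # slope of the minorant on this segment
    return fit

def minimax_isotonic(y):
    """One minimizer of max_t |theta(t) - y(t)| over non-decreasing theta."""
    y = np.asarray(y, dtype=float)
    running_max = np.maximum.accumulate(y)               # max_{s <= t} y(s)
    reverse_min = np.minimum.accumulate(y[::-1])[::-1]   # min_{s >= t} y(s)
    return 0.5 * (running_max + reverse_min)

theta_n = np.array([0.0, 2.0, 1.0, 1.0, 1.0])
l2_fit = isotonic_via_gcm(theta_n)       # 0, 1.25, 1.25, 1.25, 1.25
sup_fit = minimax_isotonic(theta_n)      # 0, 1.5, 1.5, 1.5, 1.5
print(l2_fit, sup_fit)
```

The two fits differ, but the squared-error fit always lies between the running maximum and the reverse running minimum. Consequently, its supremum distance to θn (0.75 here) is at most twice the minimax value (0.5 here).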

We note that $\theta_n^*$ is only uniquely defined on $\mathcal{T}_n$. To completely characterize $\theta_n^*$, we must monotonically interpolate function values between elements of $\mathcal{T}_n$. We will permit any monotonic interpolation that satisfies a weak condition. By the definition of a rectangular lattice, every $t \in \mathcal{T}$ can be assigned a hyper-rectangle whose vertices $\{s_1, s_2, \ldots, s_{2^d}\}$ are elements of $\mathcal{T}_n$ and whose interior has empty intersection with $\mathcal{T}_n$. If multiple such hyper-rectangles exist for t, such as when t lies on the boundary of two or more such hyper-rectangles, one can be assigned arbitrarily. We will assume that, for $t \in \mathcal{T} \setminus \mathcal{T}_n$,

$$\theta_n^*(t) = \sum_{k=1}^{2^d} \lambda_{k,n}(t)\, \theta_n^*(s_k)$$

for weights $\lambda_{1,n}(t), \lambda_{2,n}(t), \ldots, \lambda_{2^d,n}(t) \in [0, 1]$ such that $\sum_k \lambda_{k,n}(t) = 1$. In words, we assume that $\theta_n^*(t)$ is a convex combination of the values of $\theta_n^*$ on the vertices of the hyper-rectangle containing t. A simple interpolation approach consists of setting $\theta_n^*(t) := \theta_n^*(t')$ with t′ the element of $\mathcal{T}_n$ closest to t, and choosing any such element if there are multiple elements of $\mathcal{T}_n$ equally close to t. This particular scheme satisfies our requirement.
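Both interpolation schemes in the sketch below return a convex combination of the neighbouring lattice values, so they preserve monotonicity. The sketch assumes the lattice is given by one sorted coordinate vector per axis; the helper names are hypothetical. In d = 1, linear interpolation via NumPy's `np.interp` returns a convex combination of the two neighbouring grid values. On a rectangular lattice, a point nearest to t in the supremum norm can be found coordinate by coordinate.

```python
import numpy as np

def linear_interp_1d(t, grid, fitted):
    """Piecewise linear interpolation of projected values on a sorted 1-D grid (d = 1)."""
    return np.interp(t, grid, fitted)

def nearest_lattice_value(t, axes, fitted):
    """Projected value at a lattice point nearest to t in the supremum norm.

    axes: list of d sorted 1-D coordinate arrays defining the rectangular lattice;
    fitted: d-dimensional array of projected values on that lattice.
    The per-axis nearest index minimizes each coordinate distance, hence the maximum;
    np.argmin breaks ties by taking the lower index.
    """
    idx = tuple(int(np.argmin(np.abs(np.asarray(ax) - tj))) for ax, tj in zip(axes, t))
    return fitted[idx]

grid = np.array([0.0, 0.5, 1.0])
fitted = np.array([0.0, 1.0, 3.0])  # monotone values on the grid
fine = np.linspace(0.0, 1.0, 101)
interp_vals = linear_interp_1d(fine, grid, fitted)

axes = [np.array([0.0, 0.5, 1.0]), np.array([0.0, 1.0])]
fitted_2d = np.add.outer(np.arange(3.0), 10.0 * np.arange(2.0))  # monotone in both arguments
print(nearest_lattice_value((0.3, 0.8), axes, fitted_2d))
```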

Finally, for each n, we let ℓn(t) ≤ un(t) denote lower and upper endpoints of a confidence band for θ0(t). We then define $\ell_n^*$ and $u_n^*$ as the corrected versions of ℓn and un using the same projection and interpolation procedure defined above for obtaining $\theta_n^*$ from θn.

In dimension d = 1, $\theta_n^*$, $\ell_n^*$ and $u_n^*$ can be obtained on $\mathcal{T}_n$ via the Pool Adjacent Violators Algorithm (Ayer et al., 1955), as implemented in the R command isoreg (R Core Team, 2018). In dimension d = 2, the corrections can be obtained using the algorithm described in Bril et al. (1984), which is implemented in the R command biviso in the package Iso (Turner, 2015). In dimension d ≥ 3, Kyng, Rao and Sachdeva (2015) provide algorithms for computing the isotonic regression based on embedding the points in a directed acyclic graph. Alternatively, general-purpose algorithms for minimization of quadratic criteria over convex cones have been developed and implemented in the R package coneproj, and may be used in this case (Meyer, 1999; Liao and Meyer, 2014).
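Outside R, the d = 2 case can be handled with generic tools. The following sketch does not implement the algorithm of Bril et al. (1984). It instead uses Dykstra's alternating projection algorithm, relying on the fact that a function on a rectangular lattice is component-wise monotone exactly when it is non-decreasing along every row and every column. Each of those two projections is a collection of univariate isotonic regressions computed by the Pool Adjacent Violators Algorithm. The names `pava` and `isotonic_grid_2d`, as well as the iteration limit and tolerance, are hypothetical, untuned choices.

```python
import numpy as np

def pava(y):
    """Equal-weight isotonic (non-decreasing) regression of a 1-D array by pooling adjacent violators."""
    means, sizes = [], []
    for v in np.asarray(y, dtype=float):
        means.append(v)
        sizes.append(1)
        # Merge the last two blocks while they violate monotonicity.
        while len(means) > 1 and means[-2] > means[-1]:
            m2, s2 = means.pop(), sizes.pop()
            m1, s1 = means.pop(), sizes.pop()
            means.append((s1 * m1 + s2 * m2) / (s1 + s2))
            sizes.append(s1 + s2)
    return np.repeat(means, sizes)

def isotonic_grid_2d(values, max_iter=10000, tol=1e-12):
    """L2 projection of a 2-D array onto arrays non-decreasing along both axes (Dykstra's algorithm)."""
    x = np.asarray(values, dtype=float).copy()
    p = np.zeros_like(x)  # Dykstra increment for the row-monotone cone
    q = np.zeros_like(x)  # Dykstra increment for the column-monotone cone
    for _ in range(max_iter):
        z = np.apply_along_axis(pava, 1, x + p)      # make each row non-decreasing
        p_new = x + p - z
        x_new = np.apply_along_axis(pava, 0, z + q)  # make each column non-decreasing
        q_new = z + q - x_new
        change = max(np.abs(x_new - x).max(), np.abs(p_new - p).max(), np.abs(q_new - q).max())
        x, p, q = x_new, p_new, q_new
        if change < tol:  # the iterates and increments have stabilized
            break
    return x

print(isotonic_grid_2d([[0.0, 0.0], [0.0, -1.0]]))  # every entry equals -0.25
```

Stopping only when the iterate and both increments have stabilized matters: a stabilized Dykstra iterate is the projection onto the intersection, whereas an iterate that merely happens to be monotone need not be.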

2.2. Properties of the projected estimator

The projected estimator $\theta_n^*$ is the isotonic regression of θn over the grid $\mathcal{T}_n$. Hence, many existing finite-sample results on isotonic regression can be used to deduce properties of $\theta_n^*$. Theorem 1 below collects a few of these properties, building upon the results of Barlow et al. (1972) and Chernozhukov, Fernández-Val and Galichon (2009). We denote by $\omega_n := \sup_{t \in \mathcal{T}} \min_{s \in \mathcal{T}_n} \|t - s\|$ the mesh of $\mathcal{T}_n$ in $\mathcal{T}$.

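Theorem 1. (i) It holds that $\|\theta_n^* - \theta_0\|_{\mathcal{T}_n} \le \|\theta_n - \theta_0\|_{\mathcal{T}_n}$.

(ii) If ωn = oP(1) and θ0 is continuous on $\mathcal{T}$, then $\|\theta_n^* - \theta_0\|_{\mathcal{T}} \le \|\theta_n - \theta_0\|_{\mathcal{T}_n} + o_P(1)$.

(iii) If there exists some α > 0 for which $\sup_{s, t \in \mathcal{T}:\, \|t - s\| \le \delta} |\theta_0(t) - \theta_0(s)| = O(\delta^\alpha)$ as δ → 0, then $\|\theta_n^* - \theta_0\|_{\mathcal{T}} \le \|\theta_n - \theta_0\|_{\mathcal{T}_n} + O_P(\omega_n^\alpha)$.

(iv) If θ0(t) ∈ [ℓn(t), un(t)] for all $t \in \mathcal{T}_n$, then $\theta_0(t) \in [\ell_n^*(t), u_n^*(t)]$ for all $t \in \mathcal{T}_n$.

(v) It holds that $\frac{1}{|\mathcal{T}_n|}\sum_{t \in \mathcal{T}_n} [u_n^*(t) - \ell_n^*(t)] = \frac{1}{|\mathcal{T}_n|}\sum_{t \in \mathcal{T}_n} [u_n(t) - \ell_n(t)]$ and $\|u_n^* - \ell_n^*\|_{\mathcal{T}_n} \le \|u_n - \ell_n\|_{\mathcal{T}_n}$.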

Theorem 1 is proved in Appendix A.1. We remark briefly on the implications of Theorem 1. Part (i) says that the estimation error of $\theta_n^*$ over the grid $\mathcal{T}_n$ is never worse than that of θn, whereas parts (ii) and (iii) provide bounds on the estimation error of $\theta_n^*$ on all of $\mathcal{T}$ in supremum norm. In particular, part (ii) indicates that $\theta_n^*$ is uniformly consistent on $\mathcal{T}$ as long as θn is uniformly consistent on $\mathcal{T}_n$, θ0 is continuous on $\mathcal{T}$, and ωn = oP(1). Part (iii) provides an upper bound on the uniform rate of convergence of $\theta_n^* - \theta_0$, and indicates that if θ0 is known to lie in a Hölder class, then ωn can be chosen so as to guarantee that the estimation error of $\theta_n^*$ on all of $\mathcal{T}$ is asymptotically no worse than the estimation error of θn on $\mathcal{T}_n$ in supremum norm. We note that parts (i)–(iii) also hold for the Lp norm with respect to the uniform measure, for any p ∈ [1, ∞). Part (iv) guarantees that the isotonized band $[\ell_n^*, u_n^*]$ never has worse coverage than the original band over $\mathcal{T}_n$. Finally, part (v) states that the potential increase in coverage comes at no cost to the average or supremum width of the bands over $\mathcal{T}_n$. We note that parts (i), (iv) and (v) hold for each n.
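Parts (i), (iv) and (v) of Theorem 1 are easy to check numerically. The sketch below makes several hypothetical choices: d = 1, a true function θ0(t) = t² on an equally spaced grid, an initial estimator perturbed by bounded noise, and a band of varying half-width that covers θ0 everywhere on the grid. It projects all three functions with an equal-weight Pool Adjacent Violators Algorithm and then compares the quantities appearing in the theorem.

```python
import numpy as np

def pava(y):
    """Equal-weight isotonic (non-decreasing) regression by pooling adjacent violators."""
    means, sizes = [], []
    for v in np.asarray(y, dtype=float):
        means.append(v)
        sizes.append(1)
        while len(means) > 1 and means[-2] > means[-1]:
            m2, s2 = means.pop(), sizes.pop()
            m1, s1 = means.pop(), sizes.pop()
            means.append((s1 * m1 + s2 * m2) / (s1 + s2))
            sizes.append(s1 + s2)
    return np.repeat(means, sizes)

rng = np.random.default_rng(1)
t = np.linspace(0.0, 1.0, 200)
theta0 = t ** 2                                      # monotone truth (hypothetical)
theta_n = theta0 + rng.uniform(-0.05, 0.05, t.size)  # non-monotone initial estimate
half_width = 0.1 + 0.04 * np.sin(6.0 * t)            # at least 0.06, so the band covers theta0
lower, upper = theta_n - half_width, theta_n + half_width

theta_star, lower_star, upper_star = pava(theta_n), pava(lower), pava(upper)

err_initial = np.max(np.abs(theta_n - theta0))
err_projected = np.max(np.abs(theta_star - theta0))                      # part (i): never larger
covered = bool(np.all((lower_star <= theta0) & (theta0 <= upper_star)))  # part (iv)
mean_width = ((upper - lower).mean(), (upper_star - lower_star).mean())  # part (v): equal
max_width = ((upper - lower).max(), (upper_star - lower_star).max())     # part (v): not larger
print(err_initial, err_projected, covered, mean_width, max_width)
```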

While comprehensive in scope, Theorem 1 does not rule out the possibility that $\theta_n^*$ performs strictly better, even asymptotically, than θn, or that the band $[\ell_n^*, u_n^*]$ is asymptotically strictly more conservative than [ℓn, un]. In order to construct confidence intervals or bands with correct asymptotic coverage, a stronger result is needed: it must be that $\|\theta_n^* - \theta_n\|_{\mathcal{T}} = o_P(r_n^{-1})$, where rn is a diverging sequence such that $r_n\|\theta_n - \theta_0\|_{\mathcal{T}}$ converges in distribution to a non-degenerate limit distribution. Then, we would have that $r_n\|\theta_n^* - \theta_0\|_{\mathcal{T}}$ converges in distribution to this same limit, and hence confidence bands constructed using approximations of this limit distribution would have correct coverage when centered around $\theta_n^*$, as we discuss below.

We consider the following conditions on θ0 and the initial estimator θn:

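(A) there exists a deterministic sequence rn tending to infinity such that, for all δ > 0,

$$\sup_{s, t \in \mathcal{T}:\, \|t - s\| < \delta r_n^{-1}} r_n \left| [\theta_n(t) - \theta_0(t)] - [\theta_n(s) - \theta_0(s)] \right| \to_P 0;$$

(B) there exists K1 < ∞ such that ∣θ0(t) − θ0(s)∣ ≤ K1∥t − s∥ for all t, $s \in \mathcal{T}$;

(C) there exists K0 > 0 such that K0∥t − s∥ ≤ ∣θ0(t) − θ0(s)∣ for all t, $s \in \mathcal{T}$ with s ≤ t.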

Based on these conditions, we have the following result.

Theorem 2. If (A)–(C) hold and $\omega_n = o_P(r_n^{-1})$, then $\|\theta_n^* - \theta_n\|_{\mathcal{T}} = o_P(r_n^{-1})$.

The proof of Theorem 2 is presented in Appendix A.2. This result indicates that the projected estimator is uniformly asymptotically equivalent to the original estimator in supremum norm at the rate rn.

Condition (A) is related to, but notably weaker than, uniform stochastic equicontinuity (van der Vaart and Wellner, 1996, p. 37). In particular, (A) follows if the process $\{r_n[\theta_n(t) - \theta_0(t)] : t \in \mathcal{T}\}$ converges weakly to a tight limit in the space $\ell^\infty(\mathcal{T})$. However, the latter condition is sufficient but not necessary for (A) to hold. This is important for application of our results to kernel smoothing estimators, which typically do not converge weakly to a tight limit, but for which condition (A) nevertheless often holds. We discuss this at length in Section 4.2. The results of Daouia and Park (2013) (see in particular condition (C3) therein) and Chernozhukov, Fernández-Val and Galichon (2010) rely on uniform stochastic equicontinuity in demonstrating asymptotic equivalence of their correction procedures, which essentially limits the applicability of their procedures to estimators that converge weakly to a tight limit in $\ell^\infty(\mathcal{T})$.

Condition (B) constrains θ0 to be Lipschitz. Condition (C) constrains the variation of θ0 from below, and is slightly more restrictive than a requirement for strict monotonicity. If, for instance, θ0 is differentiable, then (C) is satisfied if all first-order partial derivatives of θ0 are bounded away from zero. Condition (C) excludes, for instance, situations in which θ0 is differentiable with null derivative over an interval. In such cases, $\theta_n^*$ may have strictly smaller variance on these intervals than θn because $\theta_n^*$ will pool estimates across the flat region while θn may not. Hence, in such cases, $\theta_n^*$ may asymptotically improve on θn, so that $\theta_n^*$ and θn are not asymptotically equivalent at the rate rn. Theoretical results in these cases would be of interest, but are beyond the scope of this article.

In addition to conditions (A)–(C), Theorem 2 requires that the mesh ωn of $\mathcal{T}_n$ tend to zero in probability faster than $r_n^{-1}$. Since $\mathcal{T}_n$ is chosen by the user, this is not a problem in practice as long as rn (or an upper bound thereof) is known. Furthermore, except in irregular problems, the rate of convergence is typically not faster than $n^{-1/2}$, and hence it is typically sufficient to set $\omega_n = c_n n^{-1/2}$ for some $c_n = o(1)$. We note, however, that the computational complexity of obtaining the isotonic regression of θn over $\mathcal{T}_n$ increases as ωn decreases. Hence, in cases where the rate of convergence of the initial estimator is strictly slower than $n^{-1/2}$, it may be preferable to choose ωn more carefully based on a precise determination of rn. We expect this to be especially true in the context of large d and n.
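The trade-off between mesh and computational cost can be made concrete with a little arithmetic. The sketch below assumes the hypothetical choice cn = 1/log n (any cn = o(1) would do) and an equally spaced lattice on [0, 1]^d that includes the endpoints. With spacing 1/(m − 1) along each axis, every point of [0, 1]^d lies within half a spacing of the lattice in supremum norm, so the mesh is 1/(2(m − 1)) and the lattice has m^d points.

```python
import math

def points_per_axis(omega):
    """Smallest m such that an equally spaced m-point grid on [0, 1] has mesh 1/(2(m-1)) <= omega."""
    return math.ceil(1.0 / (2.0 * omega)) + 1

def suggested_lattice(n, d, c_n=None):
    """Mesh omega_n = c_n * n^(-1/2), points per axis, and total lattice size m^d."""
    if c_n is None:
        c_n = 1.0 / math.log(n)  # hypothetical choice of c_n = o(1)
    omega = c_n / math.sqrt(n)
    m = points_per_axis(omega)
    return omega, m, m ** d

for n in (100, 1000, 10000):
    for d in (1, 2, 3):
        omega, m, size = suggested_lattice(n, d)
        print(f"n={n:>6} d={d} omega={omega:.5f} points/axis={m} lattice size={size}")
```

The lattice size grows polynomially in n for fixed d but exponentially in d, which is why a careful choice of ωn matters most for large d and n.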

We note that conditions (A)–(C) and $\omega_n = o_P(r_n^{-1})$ also imply that $\|\theta_n^* - \theta_n\|_{p} = o_P(r_n^{-1})$ for any p ∈ [1, ∞), where $\|\cdot\|_p$ is the Lp norm on $\ell^\infty(\mathcal{T})$ with respect to the uniform measure on $\mathcal{T}$. However, it may be possible to relax conditions (A)–(C) for the purpose of demonstrating Lp asymptotic equivalence of $\theta_n^*$ and θn for p < ∞. It is not clear whether our method of proof of Theorem 2 is amenable to such weakening. We have chosen to focus on uniform asymptotic equivalence in part for its use in constructing uniform confidence bands for θ0, as we discuss in the next section.

2.3. Construction of confidence bands

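Suppose there exists a fixed function $\gamma_\alpha: \mathcal{T} \to (0, \infty)$ such that ℓn and un satisfy:

(a) $\|r_n(\theta_n - \ell_n) - \gamma_\alpha\|_{\mathcal{T}} \to_P 0$;

(b) $\|r_n(u_n - \theta_n) - \gamma_\alpha\|_{\mathcal{T}} \to_P 0$;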

(c) $P_0\left[r_n|\theta_n(t) - \theta_0(t)| \le \gamma_\alpha(t) \text{ for all } t \in \mathcal{T}\right] \to 1 - \alpha$.

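As an example of a confidence band that satisfies conditions (a)–(c), suppose that $\sigma_0: \mathcal{T} \to (0, \infty)$ is a scaling function and cα is a fixed constant such that, as n tends to infinity,

$$P_0\left[\sup_{t \in \mathcal{T}} \frac{r_n|\theta_n(t) - \theta_0(t)|}{\sigma_0(t)} \le c_\alpha\right] \to 1 - \alpha.$$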

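If σn is an estimator of σ0 satisfying $\|\sigma_n - \sigma_0\|_{\mathcal{T}} \to_P 0$ and cα,n is an estimator of cα such that $c_{\alpha,n} \to_P c_\alpha$, then the Wald-type band defined by lower and upper endpoints $\ell_n(t) := \theta_n(t) - c_{\alpha,n}\sigma_n(t)/r_n$ and $u_n(t) := \theta_n(t) + c_{\alpha,n}\sigma_n(t)/r_n$ satisfies (a)–(c) with γα = cασ0. However, the latter conditions can also be satisfied by other types of bands, such as those constructed with a consistent bootstrap procedure.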
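A Wald-type band and its corrected version can be sketched as follows. The sketch assumes d = 1 and that θn, σn, cα,n and rn have already been computed on the grid; the numbers below are hypothetical placeholders, and `wald_band` is a hypothetical helper name. The corrected band is obtained by applying the same equal-weight isotonic regression (here, the Pool Adjacent Violators Algorithm) to each endpoint separately.

```python
import numpy as np

def pava(y):
    """Equal-weight isotonic (non-decreasing) regression by pooling adjacent violators."""
    means, sizes = [], []
    for v in np.asarray(y, dtype=float):
        means.append(v)
        sizes.append(1)
        while len(means) > 1 and means[-2] > means[-1]:
            m2, s2 = means.pop(), sizes.pop()
            m1, s1 = means.pop(), sizes.pop()
            means.append((s1 * m1 + s2 * m2) / (s1 + s2))
            sizes.append(s1 + s2)
    return np.repeat(means, sizes)

def wald_band(theta_n, sigma_n, c_alpha_n, r_n):
    """Endpoints theta_n -/+ c_{alpha,n} * sigma_n / r_n of a Wald-type band."""
    half = c_alpha_n * np.asarray(sigma_n, dtype=float) / r_n
    return theta_n - half, theta_n + half

theta_n = np.array([0.10, 0.25, 0.22, 0.40, 0.38, 0.55])  # hypothetical initial estimates
sigma_n = np.array([0.30, 0.35, 0.40, 0.42, 0.45, 0.45])  # hypothetical scale estimates
lower, upper = wald_band(theta_n, sigma_n, c_alpha_n=2.5, r_n=np.sqrt(400))

theta_star = pava(theta_n)                   # corrected point estimate
lower_star, upper_star = pava(lower), pava(upper)  # corrected band endpoints
print(theta_star, lower_star, upper_star)
```

Projecting each endpoint separately, rather than re-centring the original half-widths around the corrected estimate, is what yields the guarantees of Theorem 1(iv)–(v).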

Under conditions (a)–(c), the confidence band [ℓn, un] has asymptotic coverage 1 − α. When conditions (A) and (B) also hold, the corrected band $[\ell_n^*, u_n^*]$ has the same asymptotic coverage as the original band [ℓn, un], as stated in the following result.

Corollary 1. If (A)–(B) and (a)–(c) hold, γα is uniformly continuous on $\mathcal{T}$, and $\omega_n = o_P(r_n^{-1})$, then the band $[\ell_n^*, u_n^*]$ has asymptotic coverage 1 − α.

The proof of Corollary 1 is presented in Appendix A.3. We also note that Theorem 2 implies that Wald-type confidence bands constructed around θn have the same asymptotic coverage if they are constructed around $\theta_n^*$ instead.

3. Refined results under additional structure

In this section, we provide more detailed conditions that imply condition (A) in two special cases: when θn is asymptotically linear, and when θn is a kernel smoothing-type estimator.

3.1. Special case I: asymptotically linear estimators

Suppose that the initial estimator θn is uniformly asymptotically linear (UAL): for each $t \in \mathcal{T}$, there exists $\phi_{0,t}: \mathcal{X} \to \mathbb{R}$ depending on P0 such that ∫ ϕ0,t dP0 = 0, and

$$\theta_n(t) - \theta_0(t) = \frac{1}{n}\sum_{i=1}^n \phi_{0,t}(X_i) + R_{n,t} \tag{3.1}$$

for a remainder term Rn,t with $n^{1/2}\sup_{t \in \mathcal{T}} |R_{n,t}| \to_P 0$. The function ϕ0,t is the influence function of θn(t) under sampling from P0. It is desirable for θn to have representation (3.1) because this implies its uniform weak consistency as well as the pointwise asymptotic normality of $n^{1/2}[\theta_n(t) - \theta_0(t)]$ for each $t \in \mathcal{T}$. If in addition the collection $\{\phi_{0,t} : t \in \mathcal{T}\}$ of influence functions forms a P0-Donsker class, then $\{n^{1/2}[\theta_n(t) - \theta_0(t)] : t \in \mathcal{T}\}$ converges weakly in $\ell^\infty(\mathcal{T})$ to a Gaussian process with covariance function Σ0 : (t, s) ↦ ∫ ϕ0,t(x)ϕ0,s(x) dP0(x). Uniform asymptotic confidence bands based on θn can then be formed by using appropriate quantiles from any suitable approximation of the distribution of the supremum of the limiting Gaussian process.
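One common way to approximate such quantiles is to simulate the Gaussian process on the grid with an estimated covariance. The sketch below assumes that estimated influence function values ϕn,t(Xi) are available as an n × m array; the helper name and its defaults are hypothetical. It estimates Σ0 on the grid by the empirical covariance, draws from the corresponding multivariate normal distribution, and returns the (1 − α)-quantile of the supremum of the standardized process. This quantile can serve as cα,n in a Wald-type band with rn = n^{1/2}. The usage example relies on the fact that the empirical distribution function is exactly linear, with influence function x ↦ I(x ≤ t) − F0(t).

```python
import numpy as np

def sup_gaussian_critical_value(phi, alpha=0.05, n_draws=20000, seed=0):
    """phi: (n, m) array with phi[i, j] an estimate of the influence function phi_{0,t_j}(X_i)."""
    n, m = phi.shape
    centred = phi - phi.mean(axis=0)
    sigma_n = centred.T @ centred / n  # estimate of Sigma_0 on the grid
    sd = np.sqrt(np.diag(sigma_n))
    rng = np.random.default_rng(seed)
    draws = rng.multivariate_normal(np.zeros(m), sigma_n, size=n_draws)
    # (1 - alpha)-quantile of the supremum over the grid of the standardized Gaussian process
    c_alpha = np.quantile(np.max(np.abs(draws) / sd, axis=1), 1.0 - alpha)
    return c_alpha, sd

# Usage: uniform band for a distribution function estimated by the empirical CDF.
rng = np.random.default_rng(1)
n = 500
x = rng.uniform(size=n)
t_grid = np.linspace(0.05, 0.95, 19)
F_n = (x[:, None] <= t_grid).mean(axis=0)
phi = (x[:, None] <= t_grid).astype(float) - F_n
c_alpha, sd = sup_gaussian_critical_value(phi)
lower = F_n - c_alpha * sd / np.sqrt(n)
upper = F_n + c_alpha * sd / np.sqrt(n)
print(c_alpha)
```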

We introduce two additional conditions:

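(A1) the collection $\{\phi_{0,t} : t \in \mathcal{T}\}$ of influence curves is a P0-Donsker class;

(A2) Σ0 is uniformly continuous in the sense that

$$\lim_{\delta \to 0}\ \sup_{s, t \in \mathcal{T}:\, \|t - s\| < \delta} \left[\Sigma_0(t, t) - 2\Sigma_0(t, s) + \Sigma_0(s, s)\right] = 0.$$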

Whenever θn is uniformly asymptotically linear, Theorem 2 can be shown to hold under (A1), (A2), (B) and (C), as implied by the theorem below. The validity of (A1) and (A2) can be assessed by scrutinizing the influence function ϕ0,t of θn(t) for each $t \in \mathcal{T}$. This renders the verification of these conditions relatively simple once uniform asymptotic linearity has been established.

Theorem 3. For any UAL estimator θn, (A1)–(A2) together imply (A).

The proof of Theorem 3 is provided in Appendix A.4. In Section 4.1, we illustrate the use of Theorem 3 for the estimation of a G-computed distribution function.

We note that conditions (A1)–(A2) are actually sufficient to establish uniform asymptotic equicontinuity, which as discussed above is stronger than (A). Therefore, Theorem 3 can also be used to prove asymptotic equivalence of the majorization/minorization correction procedure studied in Daouia and Park (2013).

3.2. Special case II: kernel smoothed estimators

For certain parameters, asymptotically linear estimators are not available. In particular, this is the case when the parameter of interest is not sufficiently smooth as a mapping of P0. For example, density functions, regression functions, and conditional quantile functions do not permit asymptotically linear estimators in a nonparametric model when the exposure is continuous. In these settings, a common approach to nonparametric estimation is kernel smoothing.

Recent results suggest that, as a process, the only possible weak limit of $\{r_n[\theta_n(t) - \theta_0(t)] : t \in \mathcal{T}\}$ in $\ell^\infty(\mathcal{T})$ is zero when θn is a kernel smoothed estimator. For example, in the case of the Parzen-Rosenblatt density estimator with bandwidth hn, Theorem 3 of Stupfler (2016) implies that if

then $\{r_n[\theta_n(t) - \theta_0(t)] : t \in \mathcal{T}\}$ converges weakly to zero in $\ell^\infty(\mathcal{T})$, whereas if cn → c ∈ (0, ∞], then it does not converge weakly to a tight limit in $\ell^\infty(\mathcal{T})$. As a result, $\{r_n[\theta_n(t) - \theta_0(t)] : t \in \mathcal{T}\}$ only satisfies uniform stochastic equicontinuity for rn such that cn → 0. However, for any such rate rn, $r_n^{-1}$ is slower than the pointwise and uniform rates of convergence of θn − θ0. As a result, θn and $\theta_n^*$ may not be asymptotically equivalent at the uniform rate of convergence of θn − θ0, so that confidence intervals and regions based on the limit distribution of θn − θ0, but centered around $\theta_n^*$, may not have correct coverage. We note that, while Stupfler (2016) establishes formal results for the Parzen-Rosenblatt estimator, we expect that the results therein extend to a variety of kernel smoothed estimators.

As a result of the lack of uniform stochastic equicontinuity of rn(θn − θ0) for useful rates rn, establishing (A) is much more difficult for kernel smoothed estimators than for asymptotically linear estimators. However, since (A) is weaker than uniform stochastic equicontinuity, it may still be possible. Here, we provide alternative sufficient conditions that imply condition (A) and that we have found useful for studying a kernel smoothed estimator θn.

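When the initial estimator θn is kernel smoothed, we can often show that

$$r_n[\theta_n(t) - \theta_0(t)] = a_n b_0(t) + R_n(t), \tag{3.2}$$

where $b_0: \mathcal{T} \to \mathbb{R}$ is a deterministic bias, (an) is a sequence of positive constants, and $R_n: \mathcal{T} \to \mathbb{R}$ is a random remainder term. We then have that

$$\sup_{s, t \in \mathcal{T}:\, \|t - s\| < \delta r_n^{-1}} r_n\left|[\theta_n(t) - \theta_0(t)] - [\theta_n(s) - \theta_0(s)]\right| \le a_n \sup_{s, t \in \mathcal{T}:\, \|t - s\| < \delta r_n^{-1}} |b_0(t) - b_0(s)| + \sup_{s, t \in \mathcal{T}:\, \|t - s\| < \delta r_n^{-1}} |R_n(t) - R_n(s)|.$$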

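If b0 is uniformly continuous on $\mathcal{T}$ and an = O(1), or b0 is uniformly α-Hölder on $\mathcal{T}$ and $a_n = o(r_n^{\alpha})$, then the first term on the right-hand side tends to zero. Attention may then be turned to demonstrating that the second term vanishes in probability. It appears difficult to provide a general characterization of the form of Rn that encompasses kernel smoothed estimators. However, in our experience, it is frequently the case that Rn(t) involves terms of the form $\mathbb{G}_n \nu_{n,t}$, where $\mathbb{G}_n := n^{1/2}(\mathbb{P}_n - P_0)$ and $\nu_{n,t}: \mathcal{X} \to \mathbb{R}$ is a deterministic function for each n ∈ {1, 2, …} and $t \in \mathcal{T}$. In the course of demonstrating that

$$\sup_{s, t \in \mathcal{T}:\, \|t - s\| < \delta r_n^{-1}} |R_n(t) - R_n(s)| \to_P 0,$$

a rate of convergence for

$$\sup_{s, t \in \mathcal{T}:\, \|t - s\| < \delta r_n^{-1}} \left|\mathbb{G}_n(\nu_{n,t} - \nu_{n,s})\right|$$

is then required. Defining $\mathcal{F}_{n,\eta} := \{\nu_{n,t} - \nu_{n,s} : s, t \in \mathcal{T}, \|t - s\| < \eta\}$ for each η > 0, this is equivalent to establishing a rate of convergence for the local empirical process $\sup_{\xi \in \mathcal{F}_{n,\delta/r_n}} |\mathbb{G}_n \xi|$. Such rates can be established using tail bounds for empirical processes. We briefly comment on two approaches to obtaining such tail bounds.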

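We first define bracketing and covering numbers of a class of functions; see van der Vaart and Wellner (1996) for a comprehensive treatment. We denote by $\|F\|_{P,2} = [P(F^2)]^{1/2}$ the L2(P) norm of a given P-square-integrable function $F: \mathcal{X} \to \mathbb{R}$. The bracketing number $N_{[\,]}(\varepsilon, \mathcal{F}, L_2(P))$ of a class of functions $\mathcal{F}$ with respect to the L2(P) norm is the smallest number of ε-brackets needed to cover $\mathcal{F}$, where an ε-bracket is any set of functions {f : ℓ ≤ f ≤ u} with ℓ and u such that ∥ℓ − u∥P,2 < ε. The covering number $N(\varepsilon, \mathcal{F}, L_2(Q))$ of $\mathcal{F}$ with respect to the L2(Q) norm is the smallest number of ε-balls in L2(Q) required to cover $\mathcal{F}$. The uniform covering number is the supremum of $N(\varepsilon\|F\|_{Q,2}, \mathcal{F}, L_2(Q))$ over all discrete probability measures Q such that ∥F∥Q,2 > 0, where F is an envelope function for $\mathcal{F}$. The bracketing and uniform entropy integrals for $\mathcal{F}$ with respect to F are then defined as

$$J_{[\,]}(\delta, \mathcal{F}, L_2(P)) := \int_0^\delta \sqrt{1 + \log N_{[\,]}(\varepsilon\|F\|_{P,2}, \mathcal{F}, L_2(P))}\, d\varepsilon, \qquad J(\delta, \mathcal{F}) := \sup_Q \int_0^\delta \sqrt{1 + \log N(\varepsilon\|F\|_{Q,2}, \mathcal{F}, L_2(Q))}\, d\varepsilon.$$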
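As a small worked example of these definitions, consider the class of half-line indicators x ↦ I(x ≤ t) with envelope F ≡ 1 and a continuous distribution P. Choosing cut points so that each interval carries probability mass below ε² gives the standard bound N[](ε, ℱ, L2(P)) ≤ 2/ε² for 0 < ε < 1. Since the entropy integral is increasing in the bracketing number, J[](1, ℱ, L2(P)) is bounded by ∫₀¹ (1 + log(2/ε²))^{1/2} dε. The sketch below evaluates this bound with a midpoint rule; the function name is hypothetical.

```python
import numpy as np

def entropy_integral_bound(log_n_bound, delta=1.0, n_points=200000):
    """Midpoint-rule value of int_0^delta sqrt(1 + log N(eps)) d eps, given a bound on log N."""
    step = delta / n_points
    eps = (np.arange(n_points) + 0.5) * step
    return np.sqrt(1.0 + log_n_bound(eps)).sum() * step

# Half-line indicators with envelope F = 1: log N_[](eps) <= log(2 / eps^2) for 0 < eps < 1.
J_bound = entropy_integral_bound(lambda eps: np.log(2.0 / eps ** 2))
print(round(J_bound, 3))
```

The logarithmic singularity at ε = 0 is integrable, so the bound is finite (roughly 1.87). In particular, J[](1, ℱ, L2(P)) = O(1) for this class, which is the type of condition used in the first approach below.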

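We discuss two approaches to controlling $E_0 \sup_{\xi \in \mathcal{F}_{n,\delta/r_n}} |\mathbb{G}_n \xi|$ using these integrals. Suppose that $\mathcal{F}_{n,\eta}$ has envelope function Fn,η in the sense that ∣ξ(x)∣ ≤ Fn,η(x) for all $\xi \in \mathcal{F}_{n,\eta}$ and $x \in \mathcal{X}$. The first approach is useful when ∥Fn,δ/rn∥P0,2 can be adequately controlled. Specifically, if either $J_{[\,]}(1, \mathcal{F}_{n,\delta/r_n}, L_2(P_0))$ or $J(1, \mathcal{F}_{n,\delta/r_n})$ is O(1), then $E_0 \sup_{\xi \in \mathcal{F}_{n,\delta/r_n}} |\mathbb{G}_n \xi| \le M_\delta \|F_{n,\delta/r_n}\|_{P_0,2}$ for all n and some constant Mδ ∈ (0, ∞) not depending on n, by Theorems 2.14.1 and 2.14.2 of van der Vaart and Wellner (1996).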

The second approach we consider is useful when the envelope functions do not shrink in expectation, but the functions in $\mathcal{F}_{n,\delta/r_n}$ still get smaller in the sense that $\sup_{\xi \in \mathcal{F}_{n,\delta/r_n}} P_0 \xi^2$ tends to zero. For example, if νn,t is defined as νn,t(x) := I(0 ≤ x ≤ t) for each $x \in \mathbb{R}$, t ∈ [0, 1], and n, then Fn,η : x ↦ I(0 ≤ x ≤ 1) is the natural envelope function for $\mathcal{F}_{n,\eta}$ for all n and η, so that ∥Fn,δ/rn∥P0,2 does not tend to zero. However, if the density p0 corresponding to P0 is bounded above by $\bar{p}_0 < \infty$, then $\sup_{\xi \in \mathcal{F}_{n,\delta/r_n}} P_0 \xi^2 \le \bar{p}_0\, \delta / r_n$, which does tend to zero. In these cases, the basic tail bounds in Theorems 2.14.1 and 2.14.2 of van der Vaart and Wellner (1996) are too weak. Sharper, but slightly more complicated, bounds may be used instead. Specifically, if Fn,δ/rn ≤ C < ∞ for all n large enough and either

are , then by Lemma 3.4.2 of van der Vaart and Wellner (1996) and Theorem 2.1 of van der Vaart and Wellner (2011). Analogous statements hold if these expressions are .

In some cases, both of these approaches must be used to control different terms arising within Rn(t), as for the conditional distribution function discussed in Section 4.2.

4. Illustrative examples

4.1. Example 1: Estimation of a G-computed distribution function

We first demonstrate the use of Theorem 3 in the particular problem in which we wish to draw inference on a G-computed distribution function. Suppose that the data unit is the vector X = (Y, A, W), where Y is an outcome, A ∈ {0, 1} is an exposure, and W is a vector of baseline covariates. The observed data consist of independent draws X1, X2, …, Xn from $P_0 \in \mathcal{M}$, where $\mathcal{M}$ is a nonparametric model.

For $P \in \mathcal{M}$ and a0 ∈ {0, 1}, we define the parameter value θP,a0 pointwise as θP,a0(t) := EP{P(Y ≤ t ∣ A = a0, W)}, the G-computed distribution function of Y evaluated at t, where the outer expectation is over the marginal distribution of W under P. We are interested in estimating θ0,a0 := θP0,a0. This parameter is often of interest as an interpretable marginal summary of the relationship between Y and A that accounts for the potential confounding induced by W. Under certain causal identification conditions, θ0,a0 is the distribution function of the counterfactual outcome Y(a0) defined by the intervention that deterministically sets exposure to A = a0 (Robins, 1986; Gill and Robins, 2001).

For each t, the parameter P ↦ θP,a0(t) is pathwise differentiable in a nonparametric model, and its nonparametric efficient influence function φP,a0,t at P is given by

$$\varphi_{P,a_0,t}(y, a, w) = \frac{I(a = a_0)}{g_P(a_0 \mid w)}\left\{I(y \le t) - Q_P(t \mid a_0, w)\right\} + Q_P(t \mid a_0, w) - \theta_{P,a_0}(t),$$

where gP(a0 ∣ w) := P(A = a0 ∣ W = w) is the propensity score and QP(t ∣ a0, w) := P(Y ≤ t ∣ A = a0, W = w) is the conditional exposure-specific distribution function, as implied by P (van der Laan and Robins, 2003). Given estimators gn and Qn of g0 := gP0 and Q0 := QP0, respectively, several approaches can be used to construct, for each t, an asymptotically linear estimator of θ0,a0(t) with influence function ϕ0,a0,t = φP0,a0,t. For example, the use of either optimal estimating equations or the one-step correction procedure leads to the doubly-robust augmented inverse-probability-of-weighting estimator

$$\theta_{n,a_0}(t) := \frac{1}{n}\sum_{i=1}^{n}\left[\frac{I(A_i = a_0)}{g_n(a_0 \mid W_i)}\left\{I(Y_i \le t) - Q_n(t \mid a_0, W_i)\right\} + Q_n(t \mid a_0, W_i)\right],$$

as discussed in detail in van der Laan and Robins (2003). Under conditions on gn and Qn, including consistency at fast enough rates, θn,a0(t) is asymptotically efficient relative to the nonparametric model. In this case, θn,a0(t) satisfies (3.1) with influence function ϕ0,a0,t. However, there is no guarantee that θn,a0 is monotone.
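To make the augmented inverse-probability-of-weighting formula concrete, the following Python sketch evaluates it at a single t. It is a minimal sketch that assumes the fitted propensity score and the fitted conditional exposure-specific distribution function are supplied as plain callables (`g_n` and `q_n`, hypothetical names); how these nuisances are fitted is left open. The toy usage, with made-up data and deliberately crude nuisance fits, illustrates why monotonicity can fail: observations with A = a0 receive an inverse-probability weight above one, so the fitted conditional distribution function enters their contribution with a negative net coefficient.

```python
from typing import Callable, List, Sequence


def aipw_cdf(
    t: float,
    y: Sequence[float],
    a: Sequence[int],
    w: Sequence[object],
    a0: int,
    g_n: Callable[[int, object], float],
    q_n: Callable[[float, int, object], float],
) -> float:
    """Augmented IPW estimate of the G-computed distribution function at t.

    g_n(a0, w): fitted propensity score P(A = a0 | W = w) (hypothetical callable).
    q_n(t, a0, w): fitted P(Y <= t | A = a0, W = w) (hypothetical callable).
    """
    total = 0.0
    for yi, ai, wi in zip(y, a, w):
        q = q_n(t, a0, wi)
        ipw = (1.0 if ai == a0 else 0.0) / g_n(a0, wi)  # inverse-probability weight
        total += ipw * ((1.0 if yi <= t else 0.0) - q) + q  # residual term + plug-in term
    return total / len(y)


# Toy usage with made-up data and deliberately crude nuisance fits.
y = [0.1, 0.5, 0.9, 0.3]
a = [1, 1, 1, 0]
w: List[object] = [None] * 4
est = [
    aipw_cdf(t, y, a, w, 1, lambda a0, wi: 0.5, lambda s, a0, wi: s)
    for t in (0.2, 0.4)
]
# est is about [0.4, 0.3]: the estimate decreases from t = 0.2 to t = 0.4.
```

The estimator is computed pointwise in t, which is exactly the setting in which a post hoc monotone correction is needed.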

In the context of this example, we can identify simple sufficient conditions under which conditions (A)–(B), and hence the asymptotic equivalence of the initial and isotonized estimators of the G-computed distribution function, are guaranteed. Specifically, we find this to be the case when both:

there exists η > 0 such that g0(a0 ∣ W) ≥ η almost surely under P0;

there exist non-negative real-valued functions K1, K2 such that

for all t, , and such that, under P0, K1(W) is strictly positive with non-zero probability and K2(W) has finite second moment.

We conducted a simulation study to validate our theoretical results in the context of this particular example. For sample sizes 100, 250, 500, 750, and 1000, we generated 1000 random datasets as follows. We first simulated a bivariate covariate W with independent components W1 and W2, distributed respectively as a Bernoulli variate with success probability 0.5 and a uniform variate on (−1, 1). Given W = (w1, w2), exposure A was simulated from a logistic regression model with

Given W = (w1, w2) and A = a, Y was simulated as the inverse-logistic transformation of a normal variate with mean 0.2 − 0.3a − 4w2 and variance 0.3.
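Because the outcome model is fully specified, the true G-computed distribution function in this design does not depend on the exposure model. Given A = a0 and W, logit(Y) is normal with mean 0.2 − 0.3a0 − 4W2 and variance 0.3, so θ0,a0(t) = E[Φ({logit(t) − 0.2 + 0.3a0 + 4W2}/0.3^{1/2})], where Φ is the standard normal distribution function and W2 is uniform on (−1, 1); W1 does not enter. The Python sketch below approximates this expectation with a midpoint rule (the quadrature and the function names are our choices). It also makes visible why θ0,0(t) ≤ θ0,1(t): the mean of logit(Y) is smaller under a0 = 1.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal distribution function Phi."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def true_g_cdf(t: float, a0: int, m: int = 4000) -> float:
    """theta_{0,a0}(t) for the simulation design, for 0 < t < 1.

    logit(Y) | A = a0, W ~ Normal(0.2 - 0.3 a0 - 4 w2, variance 0.3), W2 ~ Uniform(-1, 1);
    W1 does not enter the outcome model. The expectation over W2 uses the
    midpoint rule with m nodes.
    """
    logit_t = math.log(t / (1.0 - t))
    sd = math.sqrt(0.3)
    total = 0.0
    for k in range(m):
        w2 = -1.0 + (k + 0.5) * 2.0 / m  # midpoint of the k-th subinterval of (-1, 1)
        mean = 0.2 - 0.3 * a0 - 4.0 * w2
        total += normal_cdf((logit_t - mean) / sd)
    return total / m  # uniform density 1/2 times subinterval width 2/m


grid = [0.1 * k for k in range(1, 10)]
theta_0 = [true_g_cdf(t, 0) for t in grid]
theta_1 = [true_g_cdf(t, 1) for t in grid]
```

A truth computed this way is what the discrepancies (ii) and (iii) below are measured against.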

For each simulated dataset, we estimated θ0,0(t) and θ0,1(t) for t equal to each outcome value observed between 0.1 and 0.9. To do so, we used the estimator described above, with the propensity score and the conditional exposure-specific distribution function estimated using correctly-specified parametric models. We employed two correction procedures for the estimators θn,0 and θn,1. First, we projected θn,0 and θn,1 separately onto the space of monotone functions. Second, noting that θ0,0(t) ≤ θ0,1(t) for all t, so that (a, t) ↦ θ0,a(t) is component-wise monotone for this particular data-generating distribution, we considered the projection of (a, t) ↦ θn,a(t) onto the space of bivariate monotone functions on the product of {0, 1} and the grid of t values. For each simulation and each projection procedure, we recorded the maximal absolute differences between (i) the initial and projected estimates, (ii) the initial estimate and the truth, and (iii) the projected estimate and the truth. We also recorded the maximal widths of the initial and projected confidence bands.
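The two projection procedures can be sketched in Python as follows. The univariate projection with equal weights is computed by the pool-adjacent-violators algorithm. For the bivariate projection we swap in Dykstra's alternating-projection algorithm, cycling between row-wise and column-wise pool-adjacent-violators fits; this generic iterative method converges to the least-squares projection onto the intersection of the two constraint sets, but it is chosen here for brevity and is not necessarily how the projections in this study were computed (dedicated algorithms exist, such as Algorithm AS 206 of Bril et al. (1984) for two independent variables). Rows index a, columns index the grid of t values; the iteration cap and stopping tolerance are arbitrary choices.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def pava(values: Sequence[float]) -> List[float]:
    """Least-squares projection of a sequence onto nondecreasing sequences (equal weights)."""
    sums: List[float] = []  # block sums
    counts: List[int] = []  # block sizes
    for v in values:
        sums.append(float(v))
        counts.append(1)
        # Pool adjacent blocks while the previous block mean exceeds the last one.
        while len(sums) > 1 and sums[-2] * counts[-1] > sums[-1] * counts[-2]:
            s, c = sums.pop(), counts.pop()
            sums[-1] += s
            counts[-1] += c
    fitted: List[float] = []
    for s, c in zip(sums, counts):
        fitted.extend([s / c] * c)
    return fitted


def _project_rows(m: Matrix) -> Matrix:
    return [pava(row) for row in m]  # nondecreasing in t within each a


def _project_cols(m: Matrix) -> Matrix:
    cols = [pava(col) for col in zip(*m)]  # nondecreasing in a within each t
    return [list(row) for row in zip(*cols)]


def bivariate_isotonic(theta: Matrix, max_iter: int = 1000, tol: float = 1e-12) -> Matrix:
    """Dykstra's algorithm: projection onto matrices nondecreasing along rows and columns."""
    r, c = len(theta), len(theta[0])
    x = [[float(v) for v in row] for row in theta]
    p = [[0.0] * c for _ in range(r)]  # Dykstra correction for the row constraint
    q = [[0.0] * c for _ in range(r)]  # Dykstra correction for the column constraint
    for _ in range(max_iter):
        y = _project_rows([[x[i][j] + p[i][j] for j in range(c)] for i in range(r)])
        p = [[x[i][j] + p[i][j] - y[i][j] for j in range(c)] for i in range(r)]
        x_new = _project_cols([[y[i][j] + q[i][j] for j in range(c)] for i in range(r)])
        q = [[y[i][j] + q[i][j] - x_new[i][j] for j in range(c)] for i in range(r)]
        change = max(abs(x_new[i][j] - x[i][j]) for i in range(r) for j in range(c))
        x = x_new
        if change < tol:
            break
    return x


def max_abs_diff(u: Matrix, v: Matrix) -> float:
    """Maximal absolute difference over the grid, as recorded in (i)-(iii)."""
    return max(abs(s - t) for ru, rv in zip(u, v) for s, t in zip(ru, rv))


theta_n = [[0.3, 0.2], [0.1, 0.4]]  # rows: a = 0, 1; columns: two values of t
theta_star = bivariate_isotonic(theta_n)  # approximately [[0.2, 0.2], [0.2, 0.4]]
```

In this toy input both constraints are violated, and the projection pools the three offending entries to their common mean 0.2 while leaving the largest entry untouched.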

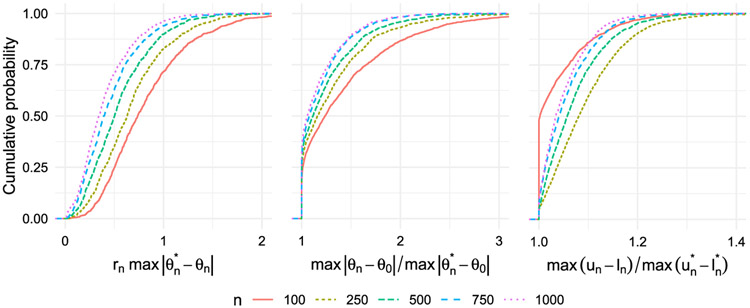

Figure 1 displays the results of this simulation study, with output from the univariate and bivariate projection approaches summarized in the top and bottom rows, respectively. The left column displays the empirical distribution of the root-n-scaled maximal absolute discrepancy between θn and θn* for all sample sizes studied. This plot confirms that the discrepancy between these two estimators indeed decreases faster than $n^{-1/2}$, as our theory suggests. Furthermore, for each n, the discrepancy is larger for the bivariate projection.

Fig 1.

Summary of simulation results for G-computed distribution function. Each plot shows cumulative distributions of a particular discrepancy over 1000 simulated datasets for different values of n. Left panel: maximal absolute difference between the initial and isotonic estimators over the grid used for projecting, scaled up by root-n. Middle panel: ratio of the maximal absolute difference between the initial estimator and the truth and the maximal absolute difference between the isotonic estimator and the truth. Right panel: ratio of the maximal width of the initial confidence band and the maximal width of the isotonic confidence band. The top row shows the results for the univariate projection, and the bottom row shows the results for the bivariate projection.

The middle column of Figure 1 displays the empirical distribution function of the ratio between the maximal discrepancy between θn and θ0 and that between θn* and θ0. This plot confirms that θn* is always at least as close to θ0 as θn is over the projection grid. The maximal discrepancy between θn and θ0 can be more than 25% larger than that between θn* and θ0 in the univariate case, and up to 50% larger in the bivariate case.

The right column of Figure 1 displays the empirical distribution function of the ratio between the maximal width of the initial uniform 95% influence function-based confidence band and that of the isotonic band. For large samples, the maximal widths are often close, but for smaller samples, the initial confidence bands can be up to 50% wider than the isotonic bands, especially in the bivariate case. The empirical coverage of both bands is provided in Table 1. The coverage of the isotonic band is identical to that of the initial band in the univariate case, and slightly higher than that of the initial band in the bivariate case.

Table 1.

Coverage of 95% confidence bands for the true counterfactual distribution function.

| d | Band | n = 100 | n = 250 | n = 500 | n = 750 | n = 1000 |
|---|---|---|---|---|---|---|
| 1 | Initial band | 92.5 | 94.1 | 96.0 | 94.5 | 95.5 |
| 1 | Monotone band | 92.5 | 94.1 | 96.0 | 94.5 | 95.5 |
| 2 | Initial band | 93.9 | 94.0 | 95.0 | 94.6 | 94.9 |
| 2 | Monotone band | 95.7 | 95.9 | 95.5 | 95.3 | 95.1 |

4.2. Example 2: Estimation of a conditional distribution function

We next demonstrate the use of Theorem 2 with dimension d = 2 for drawing inference on a conditional distribution function. Suppose that the data unit is the vector X = (A, Y), where Y is an outcome and A is now a continuous exposure. The observed data consist of independent draws (A1, Y1), (A2, Y2), … , (An, Yn) from P0 ∈ ℳ, where ℳ is a nonparametric model. We define the parameter value θP pointwise as θP(t1, t2) := P(Y ≤ t1 ∣ A = t2). Thus, θP(t1, t2) is the conditional distribution function of Y at t1 given A = t2. The map (t1, t2) ↦ θP(t1, t2) is necessarily monotone in t1 for each fixed t2, and in some settings, it may be known that it is also monotone in t2 for each fixed t1. This parameter completely describes the conditional distribution of Y given A, and can be used to obtain the conditional mean, conditional quantiles, or any other conditional parameter of interest.

For each t1, the true function θ0(t1, t2) = θP0 (t1, t2) may be written as the conditional mean of I(Y ≤ t1) given A = t2. Hence, any method of nonparametric regression can be used to estimate t2 ↦ θ0(t1, t2) for fixed t1, and repeating such a method over a grid of values of t1 yields an estimator of the entire function. We expect that our results would apply to many of these methods. Here, we consider the local linear estimator (Fan and Gijbels, 1996), which may be expressed as

where K is a symmetric and bounded kernel function, hn → 0 is a sequence of bandwidths, and

for j ∈ {0, 1, 2}. Under regularity conditions on the true distribution function θ0, the marginal density f0 of A, the bandwidth sequence hn, and the kernel function K, for any fixed (t1, t2), θn satisfies

where $V_K := \int x^2 K(x)\,dx$ is the variance of K, $S_K := \int K(x)^2\,dx$, and b0(t1, t2) and v0(t1, t2) depend on the derivatives of θ0 and on f0. If hn is chosen to be of order $n^{-1/5}$, the rate that minimizes the asymptotic mean integrated squared error of θn relative to θ0, then $n^{2/5}[\theta_n(t_1, t_2) - \theta_0(t_1, t_2)]$ converges in law to a normal random variate with mean VK b0(t1, t2) and variance SK v0(t1, t2). Under stronger regularity conditions, the rate of convergence in the uniform norm can be shown to be $(nh_n/\log n)^{1/2}$ (Härdle, Janssen and Serfling, 1988).
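As a heuristic check on the rates above, suppose only that the (integrated) mean squared error of θn is the sum of a squared bias of order hn⁴ and a variance of order (nhn)⁻¹, written below with generic positive constants C1 and C2 (our notation, standing in for the specific constants in the limit result above):

$$
\mathrm{MSE}(h) \approx C_1 h^4 + \frac{C_2}{n h},
\qquad
\frac{d}{dh}\,\mathrm{MSE}(h) = 4 C_1 h^3 - \frac{C_2}{n h^2} = 0
\;\Longleftrightarrow\;
h = \left(\frac{C_2}{4 C_1 n}\right)^{1/5} \asymp n^{-1/5}.
$$

With $h_n \asymp n^{-1/5}$ we get $n h_n \asymp n^{4/5}$, so $(n h_n)^{1/2} \asymp n^{2/5}$, while the bias is of order $h_n^2 \asymp n^{-2/5}$. Bias and standard deviation are therefore of the same order, which is why the normal limit of $n^{2/5}[\theta_n(t_1, t_2) - \theta_0(t_1, t_2)]$ has a non-zero mean.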

Theorem 3 cannot be used to establish (A) in this problem, since θn is not an asymptotically linear estimator. Furthermore, as discussed above, recent results suggest that the scaled process {rn[θn(t) − θ0(t)]} does not converge weakly to a tight limit in the space of bounded functions for any useful rate rn. Despite this lack of weak convergence, condition (A) can be verified directly in this example, under smoothness conditions on θ0 and f0, using the tail bounds for empirical processes outlined in Section 3.2. Denoting by θ0′ and θ0″ the first and second derivatives of θ0 with respect to its second argument, we define

and , where is the derivative of f0. We then introduce the following conditions on θ0, f0, and K:

(d) exists and is continuous on , and as δ → 0, ;

(e) , exists and is continuous on , and ;

(f) K is a Lipschitz function supported on [−1, 1] satisfying condition (M) of Stupfler (2016).
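For a concrete kernel meeting the support and Lipschitz requirements in (f), consider the Epanechnikov kernel K(u) = 0.75(1 − u²) on [−1, 1]. This is our choice for illustration, and we do not check condition (M) of Stupfler (2016) here. The Python sketch below computes its constants VK = ∫ u²K(u) du = 1/5 and SK = ∫ K(u)² du = 3/5, which enter the asymptotic mean and variance of θn, by composite Simpson's rule; since the integrands are polynomials of degree at most 4 on [−1, 1], the quadrature error is negligible.

```python
from typing import Callable


def epanechnikov(u: float) -> float:
    """Epanechnikov kernel: symmetric, bounded, Lipschitz, supported on [-1, 1]."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def simpson(f: Callable[[float], float], lo: float, hi: float, n: int = 2000) -> float:
    """Composite Simpson's rule with n (even) subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * f(lo + k * h)
    return total * h / 3.0


mass = simpson(epanechnikov, -1.0, 1.0)                     # integrates to 1
v_k = simpson(lambda x: x * x * epanechnikov(x), -1.0, 1.0)  # V_K = 1/5
s_k = simpson(lambda x: epanechnikov(x) ** 2, -1.0, 1.0)     # S_K = 3/5
```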

We also define

We then have the following result.

Proposition 1. If (d)–(f) hold, and , then

Proposition 1 is a key step in proving the following result, which formally establishes the asymptotic equivalence, at the rate $r_n = (nh_n)^{1/2}$, of the local linear estimator of a conditional distribution function and its correction obtained via isotonic regression.

Proposition 2. If (d)–(f) hold and , then (A) holds for the local linear estimator with rn = (nhn)1/2.

The proofs of Propositions 1 and 2 are provided in Appendix A.5. These results may also be of interest in their own right for establishing other properties of the local linear estimator.

As with the first example, we conducted a simulation study to validate our theoretical results. For sample sizes n ∈ {100, 250, 500, 750, 1000}, we generated 1000 random datasets as follows. We first simulated A as a Beta(2, 3) variate. Given A = a, Y was simulated as the inverse-logistic transformation of a normal variate with mean 0.5 × [1 + (a − 1.2)²] and variance one.
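For reference, this design gives the true conditional distribution function in closed form. Since logit(Y) given A = a is normal with mean μ(a) = 0.5[1 + (a − 1.2)²] and variance one, θ0(y, a) = Φ(logit(y) − μ(a)) for y ∈ (0, 1), where Φ is the standard normal distribution function. Because A takes values in (0, 1), where μ is strictly decreasing, θ0 is strictly increasing in a as well as in y, so the bivariate monotonicity constraint holds in this simulation. A Python sketch (function and grid names are ours):

```python
import math


def mu(a: float) -> float:
    """Mean of logit(Y) given A = a in the simulation design."""
    return 0.5 * (1.0 + (a - 1.2) ** 2)


def true_cond_cdf(y: float, a: float) -> float:
    """theta_0(y, a) = P(Y <= y | A = a) = Phi(logit(y) - mu(a)), for 0 < y < 1."""
    z = math.log(y / (1.0 - y)) - mu(a)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


y_grid = [0.1 * k for k in range(1, 10)]
a_grid = [0.05 * k for k in range(1, 20)]  # points in (0, 1), the support of Beta(2, 3)
table = [[true_cond_cdf(y, a) for a in a_grid] for y in y_grid]  # rows: y, columns: a
```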

For each simulated dataset, we estimated θ0(y, a) for each (y, a) in an equally spaced square grid of mesh $\omega_n = n^{-4/5}$. For each unique y in this grid, we estimated the function a ↦ θ0(y, a) using the local linear estimator, as implemented in the R package KernSmooth (Wand, 2015; Wand and Jones, 1995). For each value of y in the grid, we computed the optimal bandwidth based on the direct plug-in methodology of Ruppert, Sheather and Wand (1995) as implemented by the dpill function, and we then set our bandwidth as the average of these y-specific bandwidths. We constructed initial confidence bands using a variable-width nonparametric bootstrap (Hall and Kang, 2001).
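The estimation step can be sketched in Python as follows: for each grid value y, the indicators I(Yi ≤ y) are regressed on Ai by local linear regression at each grid value a. This is a minimal sketch that assumes an Epanechnikov kernel and a fixed, user-supplied bandwidth; it does not reproduce KernSmooth or the dpill plug-in bandwidth selector used in the simulations. Nothing in the construction forces the fitted values to be monotone in a or in y, or even to lie in [0, 1], because local linear weights can be negative.

```python
from typing import List, Sequence


def epanechnikov(u: float) -> float:
    # Illustrative kernel choice (Lipschitz, supported on [-1, 1]).
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def local_linear(x: Sequence[float], z: Sequence[float], x0: float, h: float) -> float:
    """Local linear estimate of E[Z | X = x0]: the intercept b0 minimizing
    sum_i K((x_i - x0) / h) * (z_i - b0 - b1 * (x_i - x0))**2 over (b0, b1)."""
    s0 = s1 = s2 = t0 = t1 = 0.0
    for xi, zi in zip(x, z):
        d = xi - x0
        k = epanechnikov(d / h)
        s0 += k
        s1 += k * d
        s2 += k * d * d
        t0 += k * zi
        t1 += k * d * zi
    det = s0 * s2 - s1 * s1
    if det <= 0.0:
        raise ValueError("fewer than two distinct design points near x0")
    return (s2 * t0 - s1 * t1) / det  # solves the 2 x 2 normal equations for b0


def cond_cdf_grid(
    y: Sequence[float],
    a: Sequence[float],
    y_grid: Sequence[float],
    a_grid: Sequence[float],
    h: float,
) -> List[List[float]]:
    """theta_n(y0, a0) on a grid: local linear regression of I(Y <= y0) on A at a0."""
    out: List[List[float]] = []
    for y0 in y_grid:
        indicators = [1.0 if yi <= y0 else 0.0 for yi in y]
        out.append([local_linear(a, indicators, a0, h) for a0 in a_grid])
    return out
```

A useful sanity check on `local_linear` is that it reproduces linear functions exactly, including at the boundary of the design, which is one reason local linear smoothing often has smaller bias than the Nadaraya-Watson estimator.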

We first note that, for all sample sizes considered, over 99% of simulations had monotonicity violations in both the y- and a-directions. Figure 2 displays the results of this simulation study. The left exhibit of Figure 2 confirms that the discrepancy between θn and θn* decreases faster than $(nh_n)^{-1/2}$, as our theory suggests. The middle exhibit indicates that in roughly 50% of simulations, the maximal estimation errors of θn and θn* differ by less than 5%, but even for n = 1000, in roughly 25% of simulations, θn* offers at least a 25% improvement in estimation error. In smaller samples, the estimation error of θn* is less than half that of θn in 5–10% of simulations. The rightmost exhibit indicates that the projected confidence bands regularly reduce the maximal width of the initial bands by 10–20%. Finally, the empirical coverage of the uniform 95% bootstrap-based bands and their projected versions is provided in Table 2. As before, the projected band is never less conservative than the initial band; here it is strictly more conservative at every n, and the difference in coverage diminishes as n grows. However, the initial bands in this example are anti-conservative, even at n = 1000, likely due to the slower rate of convergence, and the corrected bands offer a much more substantial improvement in this example than in the first.

Fig 2.

Summary of simulation results for conditional distribution function. The three columns display the same results as those in Figure 1.

Table 2.

Coverage of 95% confidence bands for the true conditional distribution function.

| Band | n = 100 | n = 250 | n = 500 | n = 750 | n = 1000 |
|---|---|---|---|---|---|
| Initial band | 37.6 | 64.9 | 83.2 | 86.3 | 89.7 |
| Monotone band | 60.8 | 80.4 | 90.3 | 92.3 | 93.9 |

5. Discussion

Many estimators of function-valued parameters in nonparametric and semiparametric models are not guaranteed to respect shape constraints on the true function. A simple and general solution to this problem is to project the initial estimator onto the constrained parameter space over a grid whose mesh goes to zero fast enough with sample size. However, this introduces the possibility that the projected estimator has different properties than the original estimator. In this paper, we studied the important shape constraint of multivariate component-wise monotonicity. We provided results indicating that the projected estimator is generically no worse than the initial estimator, and that if the true function is strictly increasing and the initial estimator possesses a relatively weak type of stochastic equicontinuity, the projected estimator is uniformly asymptotically equivalent to the initial estimator. We provided especially simple sufficient conditions for this latter result when the initial estimator is uniformly asymptotically linear, and provided guidance on establishing the key condition for kernel smoothed estimators.

We studied the application of our results in two examples: estimation of a G-computed distribution function, for use in understanding the effect of a binary exposure on an outcome when the exposure-outcome relationship is confounded by recorded covariates, and of a conditional distribution function, for use in characterizing the marginal dependence of an outcome on a continuous exposure. In numerical studies, we found that the projected estimator yielded improvements over the initial estimator. The improvements were especially strong in the latter example.

In our examples, we only studied corrections in dimensions d = 1 and d = 2. In future work, it would be interesting to consider corrections in dimensions higher than 2. For example, for the conditional distribution function, it would be of interest to study multivariate local linear estimators for a continuous exposure A taking values in $\mathbb{R}^{d-1}$ for d > 2. Since tailored algorithms for computing the isotonic regression do not yet exist for d > 2, it would also be of interest to determine whether a version of Theorem 2 could be established for the relaxed isotonic estimator proposed by Fokianos, Leucht and Neumann (2017). Alternatively, it is possible that the uniform stochastic equicontinuity currently required by Chernozhukov, Fernández-Val and Galichon (2010) and Daouia and Park (2013) for asymptotic equivalence of the rearrangement- and envelope-based corrections, respectively, could be relaxed along the lines of our condition (A). Finally, our theoretical results do not give the exact asymptotic behavior of the projected estimator or projected confidence band when the true function possesses flat regions. This is also an interesting topic for future research.

Acknowledgements

The authors gratefully acknowledge the constructive comments of the editors and anonymous reviewers as well as grant support from the National Institute of Allergy and Infectious Diseases (NIAID) and the National Heart, Lung and Blood Institute (NHLBI) of the National Institutes of Health.

Supported by NIAID grant UM1AI068635.

Supported by NIAID grant R01AI074345.

Supported by NHLBI grant R01HL137808.

Appendix A: Technical proofs

A.1. Proof of Theorem 1

Part (i) follows from Corollary B to Theorem 1.6.1 of Robertson, Wright and Dykstra (1988). For parts (ii) and (iii), we note that by assumption

for every , where Σk λk,n(t) = 1, and for each k, and ∥sk − t∥ ≤ 2ωn. By part (i), the first term is bounded above by . The second term is bounded above by γ(2ωn), where we define

If θ0 is continuous on , then it is also uniformly continuous since is compact. Therefore, γ(δ) → γ(0) = 0 as δ → 0, so that γ(2ωn) →P 0 if ωn →P 0. If γ(δ) = o(δα) as δ → 0, then .

Part (iv) follows from the proof of Proposition 3 of Chernozhukov, Fernández-Val and Galichon (2009), which applies to any order-preserving monotonization procedure. For the first statement of (v), by their definition as minimizers of the least-squares criterion function, we note that , and similarly for . The second statement of (v) follows from a slight modification of Theorem 1.6.1 of Robertson, Wright and Dykstra (1988). As stated, the result says that for any convex function and monotone function ψ, where θ* is the isotonic regression of θ over . A straightforward adaptation of the proof indicates that , where now and are the isotonic regressions of θ1 and θ2 over , respectively. As in Corollary B, taking G(x) = ∣x∣p and letting p → ∞ yields that . Applying this with θ1 = un and θ2 = ℓn establishes the second portion of (v). □
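The two properties of isotonic regression used in parts (i) and (v), order preservation and non-expansiveness in the supremum norm, can be checked numerically. The Python sketch below is a sanity check on random inputs, not a proof; the pool-adjacent-violators implementation (equal weights, univariate grid), the seed, and the sizes are our choices. It verifies the consequences stated in the theorem: the projected estimate is no farther from a monotone truth, and projected band limits still contain the truth whenever the initial limits do, with maximal width no larger than before.

```python
import random
from typing import List, Sequence


def pava(values: Sequence[float]) -> List[float]:
    """Least-squares projection onto nondecreasing sequences (equal weights)."""
    sums: List[float] = []
    counts: List[int] = []
    for v in values:
        sums.append(float(v))
        counts.append(1)
        while len(sums) > 1 and sums[-2] * counts[-1] > sums[-1] * counts[-2]:
            s, c = sums.pop(), counts.pop()
            sums[-1] += s
            counts[-1] += c
    return [s / c for s, c in zip(sums, counts) for _ in range(c)]


def sup_dist(u: Sequence[float], v: Sequence[float]) -> float:
    return max(abs(x - y) for x, y in zip(u, v))


def check_properties(trials: int = 500, size: int = 15, seed: int = 1) -> bool:
    """Random check of sup-norm contraction and order preservation."""
    rng = random.Random(seed)
    eps = 1e-12  # slack for floating-point rounding
    for _ in range(trials):
        truth = sorted(rng.uniform(-1.0, 1.0) for _ in range(size))  # monotone truth
        est = [v + rng.gauss(0.0, 0.3) for v in truth]  # typically non-monotone estimate
        lower = [v - rng.uniform(0.0, 0.5) for v in truth]  # band containing the truth
        upper = [v + rng.uniform(0.0, 0.5) for v in truth]
        est_iso, lower_iso, upper_iso = pava(est), pava(lower), pava(upper)
        if sup_dist(est_iso, truth) > sup_dist(est, truth) + eps:
            return False  # projection moved farther from the monotone truth
        if any(l > t + eps or t > u + eps for l, t, u in zip(lower_iso, truth, upper_iso)):
            return False  # projected band no longer contains the truth
        if sup_dist(lower_iso, upper_iso) > sup_dist(lower, upper) + eps:
            return False  # maximal band width increased
    return True
```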

A.2. Proof of Theorem 2

We prove Theorem 2 via three lemmas, which may be of interest in their own right. The first lemma controls the size of deviations in θn over small neighborhoods, and does not hinge on condition (C) holding.

Lemma 1. If (A)–(B) hold and , then

Proof of Lemma 1. In view of the triangle inequality,

The first term is by (A), whereas the second term is by (B). □

The second lemma controls the size of neighborhoods over which violations in monotonicity can occur. Henceforth, we define

In this lemma we again require (A) but now require (C) rather than (B).

Lemma 2. If (A) and (C) hold, then .

Proof of Lemma 2. Let ϵ > 0 and ηn := ϵ/rn. Suppose that κn > ηn. Then, there exist s, with s < t and ∥t − s∥ > ηn such that θn(s) ≥ θn(t). We claim that there must also exist s*, with s* < t* and ∥t* − s*∥ ∈ [ηn/2, ηn] such that θn(s*) ≥ θn(t*). To see this, let J = ⌊∥t − s∥/(ηn/2)⌋ − 1, and note that J ≥ 1. Define tj := s+(jηn/2)(t − s)/∥t − s∥ for j = 0, 1, … , J, and set tJ+1 := t. Thus, tj < tj+1 and ∥tj+1 − tj∥ ∈ [ηn/2, ηn] for each j = 0, 1, … , J. Since then , it must be that θn(tj+1) ≤ θn (tj) for at least one j. This proves the claim.
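The chaining step above is elementary geometry and can be checked directly. The Python sketch below (names ours; the Euclidean norm is assumed) builds the points tj as defined in the proof, with J = ⌊∥t − s∥/(ηn/2)⌋ − 1, and confirms that consecutive points are ordered componentwise with gaps in [ηn/2, ηn].

```python
import math
from typing import List, Sequence


def chain(s: Sequence[float], t: Sequence[float], eta: float) -> List[List[float]]:
    """Points s = t_0, t_1, ..., t_{J+1} = t along the segment from s to t.

    Requires ||t - s|| > eta. Consecutive points are eta/2 apart, except the last
    gap, which lies in [eta/2, eta] by the choice of J.
    """
    dist = math.dist(s, t)
    if dist <= eta:
        raise ValueError("need ||t - s|| > eta")
    J = math.floor(dist / (eta / 2.0)) - 1  # J >= 1 because dist > eta
    unit = [(ti - si) / dist for si, ti in zip(s, t)]  # direction from s to t
    pts = [[si + j * (eta / 2.0) * ui for si, ui in zip(s, unit)] for j in range(J + 1)]
    pts.append([float(ti) for ti in t])
    return pts


pts = chain([0.0, 0.0], [1.0, 0.5], eta=0.3)
gaps = [math.dist(p, q) for p, q in zip(pts, pts[1:])]
```

If s ≤ t componentwise, every coordinate of the direction is non-negative, so the chain is monotone in each coordinate, which is what lets the proof transfer a violation between s and t to a nearby pair.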

We now have that κn > ηn implies that there exist s, with s < t and ∥t − s∥ ∈ [ηn/2, ηn] such that θn(s) ≥ θn(t). This further implies that

by condition (C). Finally, this allows us to write

By condition (A), this probability tends to zero for every ϵ > 0, which completes the proof. □

Our final lemma bounds the maximal absolute deviation between θn* and θn over the grid in terms of the supremal deviations of θn over neighborhoods smaller than κn. This lemma does not depend on any of the conditions (A)–(C).

Lemma 3. It holds that .

Proof of Lemma 3. By Theorem 1.4.4 of Robertson, Wright and Dykstra (1988), for any ,

where, for any finite set S, $\theta_n(S) := |S|^{-1}\sum_{s \in S}\theta_n(s)$ denotes the average of θn over S. The sets U range over the collection of upper sets of the grid that contain t, where a subset U of the grid is called an upper set if t1 ∈ U and t1 ≤ t2 imply t2 ∈ U. The sets L range over the collection of lower sets of the grid that contain t, where a subset L of the grid is called a lower set if t1 ∈ L and t2 ≤ t1 imply t2 ∈ L.
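To make this max-min representation concrete, the following brute-force Python sketch evaluates it on a tiny grid by enumerating all upper and lower sets under the componentwise order. It is exponential in the grid size and is meant only as an illustration (names ours). On the small examples at the end, it returns the pool-adjacent-violators fit in one dimension and the least-squares projection in a 2 × 2 example.

```python
from typing import Dict, FrozenSet, List, Tuple

Point = Tuple[int, ...]


def leq(s: Point, t: Point) -> bool:
    """Componentwise partial order on grid points."""
    return all(si <= ti for si, ti in zip(s, t))


def minmax_isotonic(theta: Dict[Point, float]) -> Dict[Point, float]:
    """Isotonic regression via the max-min formula (brute force, tiny grids only)."""
    points = list(theta)
    subsets: List[FrozenSet[Point]] = [
        frozenset(p for k, p in enumerate(points) if (mask >> k) & 1)
        for mask in range(1, 2 ** len(points))
    ]
    uppers = [S for S in subsets if all(u in S for s in S for u in points if leq(s, u))]
    lowers = [S for S in subsets if all(l in S for s in S for l in points if leq(l, s))]
    fitted: Dict[Point, float] = {}
    for t in points:
        # max over upper sets U containing t of min over lower sets L containing t
        fitted[t] = max(
            min(sum(theta[p] for p in U & L) / len(U & L) for L in lowers if t in L)
            for U in uppers
            if t in U
        )
    return fitted


one_d = minmax_isotonic({(0,): 1.0, (1,): 3.0, (2,): 2.0, (3,): 4.0})
# one_d == {(0,): 1.0, (1,): 2.5, (2,): 2.5, (3,): 4.0}
two_d = minmax_isotonic({(0, 0): 0.3, (0, 1): 0.2, (1, 0): 0.1, (1, 1): 0.4})
# two_d is approximately {(0, 0): 0.2, (0, 1): 0.2, (1, 0): 0.2, (1, 1): 0.4}
```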

Let Ut := {s : s ≥ t} and Lt := {s : s ≤ t}. First, suppose there exists and s0 ∈ L0 with s0 > t and ∥t − s0∥ > κn. Then, we claim that there exists another lower set such that θn(Ut ∩ L0) > θn . If θn(Ut ∩ L0) > θn(t) = θn(Ut ∩ Lt), then satisfies the claim. Otherwise, if θn(Ut ∩ L0) ≤ θn(t), let

One can verify that , and since s0 ∈ L0 \ , is a strict subset of L0. Furthermore, by definition of κn, θn(s) > θn(t) for all s > t such that ∥t − s∥ > κn, and since θn(Ut ∩ L0) ≤ θn(t), removing these elements from L0 can only reduce the average, so that < θn(Ut ∩ L0). This establishes the claim. By an analogous argument, we can show that if there exists and s0 ∈ U0 with s0 < t and ∥t − s0∥ > κn, then there exists another upper set such that θn(U0 ∩ Lt) < .

Let and . Then,

Hence, . By the above argument, we have that both

Therefore, we find that

and thus, . Taking the maximum over yields the claim. □

The proof of Theorem 2 follows easily from Lemmas 1, 2 and 3.

Proof of Theorem 2. By construction, for each , we can write

where and ∥sj − t∥ ≤ 2ωn for all t, sj by definition. Thus, since Σj λj,n(t) = 1, it follows that

By Lemma 3, the first summand is bounded above by sup∥s−t∥≤κn ∣θn(s) − θn(t)∣, which is by Lemmas 1 and 2. The second summand is by Lemma 1. □

A.3. Proof of Corollary 1

We note that ℓn(t) ≤ θ0(t) ≤ un (t) if and only if

Therefore, by conditions (a)–(c), . Next, we let δ > 0 and note that

The first term tends to zero in probability by (A), the second by conditions (a)–(c), and the third by the assumed uniform continuity of γα. An analogous decomposition holds for un. Therefore, we can apply Theorem 2 with un and ℓn in place of θn to find that and . Finally, applying an analogous argument to the event ≤ θ0 ≤ as we applied to ℓn ≤ θ0 ≤ un above yields the result. □

A.4. Proof of Theorem 3

Let ϵ, δ, η > 0. By (3.1) and since ,

Condition (A2) implies that {} is uniformly mean-square continuous, in the sense that

Since is totally bounded in ∥ · ∥, this also implies that {} is totally bounded in the L2(P0) metric. This, in addition to (A1), implies that : {} converges weakly in to a Gaussian process with covariance function Σ0. Furthermore, (A2) implies that this limit process is a tight element of . By Theorem 1.5.4 of van der Vaart and Wellner (1996), {} is asymptotically tight. By Theorem 1.5.7 of van der Vaart and Wellner (1996), {} is thus asymptotically uniformly mean-square equicontinuous in probability, in the sense that there exists some δ0 = δ0(ϵ, η) > 0 such that

with $\rho(s, t) := \left[\int \{\phi_{0,t}(x) - \phi_{0,s}(x)\}^2 \, dP_0(x)\right]^{1/2}$. By (A2), $\sup_{\|t-s\| \le h} \rho(t, s) < \delta_0$ for some h > 0. Hence, for all n large, both $\delta n^{-1/2} \le h$ and

so that

and the proof is complete. □

A.5. Proof of Propositions 1 and 2

Below, we refer to van der Vaart and Wellner (1996) as VW. Throughout, the symbol ≲ should be interpreted to mean ‘bounded above, up to a multiplicative constant not depending on n, t, y or a.’

We first note that condition (M) of Stupfler (2016) guarantees that the class

is Vapnik–Chervonenkis (henceforth VC) with index 2. In addition, we define $K_j := \int u^j K(u)\,du$ and

Before proving Propositions 1 and 2, we state and prove a lemma we will use.

Lemma 4. If (d)–(f) hold, and , then

and for any δ > 0,

Proof of Lemma 4. We first show that . We have that

By the change of variables u = (a − t2)/hn, we have that

which, in view of the assumed uniform negligibility of , tends to zero uniformly over t2 faster than hn. For the second term, since K is uniformly bounded and the class

is P0-Donsker, as implied by condition (M) of Stupfler (2016), Theorem 2.14.1 of VW implies that

Then, since , this term is also oP(hn).

We next show that (). We have that

By the change of variables u = (a − t2)/hn, the first term equals

By the assumed uniform negligibility of and since hn = O(n−1/5), the first term tends to zero in probability uniformly over .

Turning to the second term in s1,n(t2), we will apply Theorem 2.14.1 of VW to obtain a tail bound for the supremum of this empirical process over the one-dimensional class indexed by t2. We note that, since K is bounded by some and supported on [−1, 1],

Therefore, the class of functions

has envelope . Furthermore, since (y, a) ↦ (a − t2) and K are both uniformly bounded VC classes of functions, and K is bounded, the class of functions possesses finite entropy integral. Hence, we have that

We now have that , which implies in particular that

Next, we show that . The proof of this is nearly identical to the preceding proof. We have that

By the change of variables u = (a − t2)/hn, the first term equals

By the uniform negligibility of , the first term is oP(hn) uniformly in t.

Analysis of the second term in s2,n is analogous to that of s1,n, except that the envelope function is now , so that the empirical process term is . We also note that supt2 ∣s2,n(t2)∣ = OP ((nhn)−1/2).

The above derivations imply that

We now proceed to the statements in the lemma. We write that

Since , we have that

and the result follows.

We omit the proof of the statement about s2,n, since it is almost identical to the above. For the statement about wn, by the above calculations, we have that

We write that

and the result follows.

We note that the above implies that

so that

Similarly, we have that sup∣t2−s2∣≤η ∣s0,n(t2) − s0,n(s2)∣ = oP (hn) and

Therefore, we find that

We can now write that

and

We can now prove Proposition 1.

Proof of Proposition 1. We define

Then, we have that θn(t1, t2) − θ0(t1, t2) = m1,n(t1, t2) + m2,n(t1, t2). We note that, since E0 [I(Y ≤ t1) ∣ A = a] = θ0(t1, a), (nhn)1/2m2,n(t1, t2) equals

Therefore, we can write that

We now proceed to analyze m1,n. We have that (nhn)1/2 m1,n(t1, t2) equals

The second term in m1,n may be further decomposed as

with and ℓt(y, a) := a − t2. By Lemma 4, we have that

and similarly for s1,n. We will use Theorem 2.14.2 of VW to obtain bounds for and . We first note that, since K is bounded and supported on [−1, 1] and θ0 is Lipschitz on , and . These will be our envelope functions for these classes. Next, since K is Lipschitz, we have that

Therefore, by VW Theorem 2.7.11, we have

where . Thus, by Theorem 2.14.2 of VW,

where we have used the fact that for all z small enough. A similar argument applies to . We thus have that the second summand in m1,n is bounded above up to a constant not depending on n and uniformly in t by

which is oP (1) since .

By the change of variables u = (a − t2)/hn, the first term in m1,n equals

Expanding the product, this is equal to

By the assumed negligibility of and as well as Lemma 4, the second through fourth summands tend to zero in probability uniformly over . The first term equals

By Lemma 4, the first summand tends to zero uniformly over . By symmetry of K, the sum of the second and third summands simplifies to

Once again, the second and third summands tend to zero uniformly over by Lemma 4. We have now shown that

which completes the proof. □

Finally, we prove Proposition 2.

Proof of Proposition 2. Since is uniformly continuous and ,

Therefore, it only remains to show that . Recalling that ℓt(y, a) := a − t2 and

we have that Rn(t) − Rn(s) equals

Focusing first on , we have for

The classes

are both uniformly bounded above and VC. Therefore, the uniform covering numbers of the class

are bounded up to a constant by ε−V for some V < ∞, so that the uniform entropy integral satisfies

for all η small enough, where . We also have P0 (νn,t,1)2 ≲ hn for all and all n large enough. Thus, Theorem 2.1 of van der Vaart and Wellner (2011) implies that

For , we have that

for all n large enough and all (y, a). We can therefore apply Theorem 2.7.11 of VW to conclude that for all ε small enough, where }, which implies that . Thus, we have that

Since P0 (νn,t,2)2 ≲ hn as well, by Lemma 3.4.2 of VW, we then have

Combining these two bounds with the last statement of Lemma 4 yields

Both terms tend to zero.

The analysis for is very similar. In this case, we have , so that, using the same approach as above, we get

and therefore, in view of Lemma 4,

which goes to zero in probability.

It remains to bound

For the former, we work on the terms and separately. For the first of these, we let . We have that ∥νn,t,1 − νn,s,1∥P0,2 is bounded above by

Therefore, it follows that

for all n large enough. In addition, has uniform covering numbers bounded up to a constant by ε−V for all n and δ because the classes {} and

are VC. Therefore, for all η small enough. Thus, Theorem 2.1 of van der Vaart and Wellner (2011) implies that

Turning to , we analogously define

By the Lipschitz property of θ0 and K, we have that

Therefore, up to a constant, an envelope function Fn,δ,2 for is given by . Next, we have, for any (t, s) and (t′, s′) in ,

with . Therefore, by Theorem 2.7.11 of VW, we have that

where . Since , we trivially have that . Thus, it follows that

Therefore, Theorem 2.14.2 of VW implies that

We now have that

Both terms tend to zero in probability.

We can address in a very similar manner. As before, we work on terms and separately. It is straightforward to see that the same line of reasoning as used above applies to each of these terms as well, yielding the same negligibility. □

Contributor Information

Ted Westling, Department of Mathematics and Statistics, University of Massachusetts Amherst, Amherst, Massachusetts, USA.

Mark J. van der Laan, Division of Biostatistics, University of California, Berkeley, Berkeley, California, USA

Marco Carone, Department of Biostatistics, University of Washington, Seattle, Washington, USA.

References

- Ayer M, Brunk HD, Ewing GM, Reid WT and Silverman E (1955). An Empirical Distribution Function for Sampling with Incomplete Information. Ann. Math. Statist 26 641–647.

- Barlow RE, Bartholomew DJ, Bremner JM and Brunk HD (1972). Statistical Inference Under Order Restrictions: The Theory and Application of Isotonic Regression. Wiley; New York.

- Bril G, Dykstra R, Pillers C and Robertson T (1984). Algorithm AS 206: Isotonic Regression in Two Independent Variables. J. R. Stat. Soc. Ser. C. Appl. Stat 33 352–357.

- Chernozhukov V, Fernández-Val I and Galichon A (2010). Quantile and Probability Curves Without Crossing. Econometrica 78 1093–1125.

- Chernozhukov V, Fernández-Val I and Galichon A (2009). Improving point and interval estimators of monotone functions by rearrangement. Biometrika 96 559–575.

- Daouia A and Park BU (2013). On Projection-type Estimators of Multivariate Isotonic Functions. Scandinavian Journal of Statistics 40 363–386.

- Dette H, Neumeyer N and Pilz KF (2006). A simple nonparametric estimator of a strictly monotone regression function. Bernoulli 12 469–490.

- Fan J and Gijbels I (1996). Local Polynomial Modelling and Its Applications. CRC Press, Boca Raton.

- Fokianos K, Leucht A and Neumann MH (2017). On Integrated L1 Convergence Rate of an Isotonic Regression Estimator for Multivariate Observations. arXiv e-prints arXiv:1710.04813.

- Gill RD and Robins JM (2001). Causal Inference for Complex Longitudinal Data: The Continuous Case. Ann. Statist 29 1785–1811.

- Hall P and Kang K-H (2001). Bootstrapping nonparametric density estimators with empirically chosen bandwidths. Ann. Statist 29 1443–1468.

- Härdle W, Janssen P and Serfling R (1988). Strong Uniform Consistency Rates for Estimators of Conditional Functionals. Ann. Statist 16 1428–1449.

- Kyng R, Rao A and Sachdeva S (2015). Fast, Provable Algorithms for Isotonic Regression in all ℓp-norms. In Advances in Neural Information Processing Systems 28 (Cortes C, Lawrence ND, Lee DD, Sugiyama M and Garnett R, eds.) 2719–2727. Curran Associates, Inc.

- Liao X and Meyer MC (2014). coneproj: An R Package for the Primal or Dual Cone Projections with Routines for Constrained Regression. Journal of Statistical Software 61 1–22.

- Meyer MC (1999). An extension of the mixed primal–dual bases algorithm to the case of more constraints than dimensions. Journal of Statistical Planning and Inference 81 13–31.

- Mukarjee H and Stern S (1994). Feasible Nonparametric Estimation of Multiargument Monotone Functions. Journal of the American Statistical Association 89 77–80.

- Patra RK and Sen B (2016). Estimation of a two-component mixture model with applications to multiple testing. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 78 869–893.

- Robertson T, Wright F and Dykstra R (1988). Order Restricted Statistical Inference. Wiley, New York.

- Robins J (1986). A new approach to causal inference in mortality studies with a sustained exposure period – application to control of the healthy worker survivor effect. Mathematical Modelling 7 1393–1512.

- Ruppert D, Sheather SJ and Wand MP (1995). An effective bandwidth selector for local least squares regression. Journal of the American Statistical Association 90 1257–1270.

- Stupfler G (2016). On the weak convergence of the kernel density estimator in the uniform topology. Electron. Commun. Probab 21 13 pp.

- R Core Team (2018). R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria.

- Turner R (2015). Iso: Functions to Perform Isotonic Regression. R package version 0.0-17.

- van der Laan MJ and Robins JM (2003). Unified methods for censored longitudinal data and causality. Springer Science & Business Media.

- van der Vaart AW and Wellner JA (1996). Weak Convergence and Empirical Processes. Springer-Verlag; New York.

- van der Vaart A and Wellner JA (2011). A local maximal inequality under uniform entropy. Electron. J. Statist 5 192–203.

- Wand M (2015). KernSmooth: Functions for Kernel Smoothing Supporting Wand & Jones (1995). R package version 2.23-15.

- Wand MP and Jones MC (1995). Kernel Smoothing. Chapman and Hall, London.