Abstract

In observational studies, potential confounders may distort the causal relationship between an exposure and an outcome. However, under some conditions, a causal dose-response curve can be recovered using the G-computation formula. Most classical methods for estimating such curves when the exposure is continuous rely on restrictive parametric assumptions, which carry significant risk of model misspecification. Nonparametric estimation in this context is challenging because in a nonparametric model these curves cannot be estimated at regular rates. Many available nonparametric estimators are sensitive to the selection of certain tuning parameters, and performing valid inference with such estimators can be difficult. In this work, we propose a nonparametric estimator of a causal dose-response curve known to be monotone. We show that our proposed estimation procedure generalizes the classical least-squares isotonic regression estimator of a monotone regression function. Like that estimator, it does not involve tuning parameters and is invariant to strictly monotone transformations of the exposure variable. We describe theoretical properties of our proposed estimator, including its irregular limit distribution and the potential for doubly-robust inference. Furthermore, we illustrate its performance via numerical studies, and use it to assess the relationship between BMI and immune response in HIV vaccine trials.

1. Introduction

1.1. Motivation and literature review

Questions regarding the causal effect of an exposure on an outcome are ubiquitous in science. If investigators are able to carry out an experimental study in which they randomly assign a level of exposure to each participant and then measure the outcome of interest, estimating a causal effect is generally straightforward. However, such studies are often not feasible, and data from observational studies must be relied upon instead. Assessing causality is then more difficult, in large part because of potential confounding of the relationship between exposure and outcome. Many nonparametric methods have been proposed for drawing inference about a causal effect using observational data when the exposure of interest is either binary or categorical – these include, among others, inverse probability weighted (IPW) estimators (Horvitz and Thompson, 1952), augmented IPW estimators (Scharfstein et al., 1999; Bang and Robins, 2005), and targeted minimum loss-based estimators (TMLE) (van der Laan and Rose, 2011).

In practice, many exposures are continuous, in the sense that they may take any value in an interval. A common approach to dealing with such exposures is to simply discretize the interval into two or more regions, thus returning to the categorical exposure setting. However, it is frequently of scientific interest to learn the causal dose-response curve, which describes the causal relationship between the exposure and outcome across a continuum of the exposure. Much less attention has been paid to continuous exposures. Robins (2000) and Zhang et al. (2016) studied this problem using parametric models, and Neugebauer and van der Laan (2007) considered inference on parameters obtained by projecting a causal dose-response curve onto a parametric working model. Other authors have taken a nonparametric approach instead. Rubin and van der Laan (2006) and Díaz and van der Laan (2011) discussed nonparametric estimation using flexible data-adaptive algorithms. Kennedy et al. (2017) proposed an estimator based on local linear smoothing. Finally, van der Laan et al. (2018) recently presented a general framework for inference on parameters that fail to be smooth enough as a function of the data-generating distribution and for which regular root-n estimation theory is therefore not available. This is indeed the case for the causal dose-response curve, and van der Laan et al. (2018) discussed inference on such a parameter as a particular example.

Despite a growing body of literature on nonparametric estimation of causal dose-response curves, to the best of our knowledge, existing methods do not permit valid large-sample inference and may be sensitive to the selection of certain tuning parameters. For instance, smoothing-based methods are often sensitive to the choice of a kernel function and bandwidth, and these estimators typically possess non-negligible asymptotic bias, which complicates the task of performing valid inference.

In many settings, it may be known that the causal dose-response curve is monotone in the exposure. For instance, exposures such as daily exercise performed, cigarettes smoked per week, and air pollutant levels are all known to have monotone relationships with various health outcomes. In such cases, an extensive literature suggests that monotonicity may be leveraged to derive estimators with desirable properties; the monograph of Groeneboom and Jongbloed (2014) provides a comprehensive overview. For example, in the absence of confounding, isotonic regression may be employed to estimate the causal dose-response curve (Barlow et al., 1972). The isotonic regression estimator does not require selection of a kernel function or bandwidth, is invariant to strictly increasing transformations of the exposure, and, upon centering and scaling by $n^{1/3}$, converges in law pointwise to a symmetric limit distribution with mean zero (Brunk, 1970). The latter property is useful since it facilitates asymptotically valid pointwise inference.

Nonparametric inference on a monotone dose-response curve when the exposure-outcome relationship is confounded is more difficult to tackle and is the focus of this manuscript. To the best of our knowledge, this problem has not been comprehensively studied before.

1.2. Parameter of interest and its causal interpretation

The prototypical data unit we consider is O = (Y, A, W), where Y is a response, A a continuous exposure, and W a vector of covariates. The support of the true data-generating distribution P0 is denoted by 𝒪 := 𝒴 × 𝒜 × 𝒲, where 𝒴 ⊆ ℝ, 𝒜 ⊆ ℝ is an interval, and 𝒲 ⊆ ℝᵖ. Throughout, the use of subscript 0 refers to evaluation at or under P0. For example, we write θ0 and F0 to denote θP0 and FP0, respectively, and E0 to denote expectation under P0.

Our parameter of interest is the so-called G-computed regression function from 𝒜 to ℝ, defined as

$$\theta_0(a) := E_0\left[E_0(Y \mid A = a, W)\right],$$

where the outer expectation is with respect to the marginal distribution Q0 of W. In some scientific contexts, θ0(a) may have a causal interpretation. Adopting the Neyman-Rubin potential outcomes framework, for each a ∈ 𝒜, we denote by Y(a) a unit’s potential outcome under exposure level A = a. The causal parameter m0(a) := E0[Y(a)] corresponds to the average outcome under assignment of the entire population to exposure level A = a. The resulting curve m0 : 𝒜 → ℝ is what we formally define as the causal dose-response curve. Under varying sets of causal conditions, m0(a) may be identified with functionals of the observed data distribution, such as the unadjusted regression function r0(a) := E0(Y ∣ A = a) or the G-computed regression function θ0(a).

Suppose that (i) each unit’s potential outcomes are independent of all other units’ exposures; and (ii) the observed outcome Y equals the potential outcome Y(A) corresponding to the exposure level A actually received. Identification of m0(a) further depends on the relationship between A and Y(a). If (i) and (ii) hold, and in addition, (iii) A and Y(a) are independent, and (iv) the marginal density of A is positive at a, then m0(a) = r0(a). Condition (iii) typically only holds in experimental studies (e.g., randomized trials). In observational studies, there are often common causes of A and Y(a) – so-called confounders of the exposure-outcome relationship – that induce dependence. In such cases, m0(a) and r0(a) do not generally coincide. However, if W contains a sufficiently rich collection of confounders, it may still be possible to identify m0(a) from the observed data. If (i) and (ii) hold, and in addition, (v) A and Y(a) are conditionally independent given W, and (vi) the conditional density of A given W is almost surely positive at A = a, then m0(a) = θ0(a). This is a fundamental result in causal inference (Robins, 1986; Gill and Robins, 2001). Whenever m0(a) = θ0(a), our methods can be interpreted as drawing inference on the causal dose-response parameter m0(a).

We note that the definition of the counterfactual outcome Y(a) presupposes that the intervention setting A = a is uniquely defined. In many situations, this stipulation requires careful thought. For example, in Section 6 we consider an application in which body mass index (BMI) is the exposure of interest. There is an ongoing scientific debate about whether such an exposure leads to a meaningful causal interpretation, since it is not clear what it means to intervene on BMI.

Even if the identifiability conditions stipulated above do not strictly hold or the scientific question is not causal in nature, when W is associated with both A and Y, θ0(a) often has a more appealing interpretation than the unadjusted regression function r0(a). Specifically, θ0(a) may be interpreted as the average value of Y in a population with exposure fixed at A = a but otherwise characteristic of the study population with respect to W. Because θ0(a) involves both adjustment for W and marginalization with respect to a single reference population that does not depend on the value a, the comparison of θ0(a) over different values of a is generally more meaningful than for r0(a).

When P0(A = a) = 0, the parameter P ↦ θP(a) is not pathwise differentiable at P0 with respect to the nonparametric model (Díaz and van der Laan, 2011). Heuristically, due to the continuous nature of A, θP(a) corresponds to a local feature of P. As a result, regular root-n rate estimators cannot be expected, and standard methods for constructing efficient estimators of pathwise differentiable parameters in nonparametric and semiparametric models (e.g., estimating equations, one-step estimation, targeted minimum loss-based estimation) cannot be used directly to target and obtain inference on θ0(a).

1.3. Contribution and organization of the article

We denote by FP : 𝒜 → [0, 1] the distribution function of A under P, by ℱθ the class of non-decreasing real-valued functions on 𝒜, and by ℱF the class of strictly increasing and continuous distribution functions supported on 𝒜. The statistical model we will work in is ℳ := {P : θP ∈ ℱθ, FP ∈ ℱF}, which consists of the collection of distributions for which θP is non-decreasing over 𝒜 and the marginal distribution of A is continuous with positive Lebesgue density over 𝒜.

In this article, we study nonparametric estimation and inference on the G-computed regression function a ↦ θ0(a) = E0[E0(Y ∣ A = a, W)] for use when A is a continuous exposure and θ0 is known to be monotone. Specifically, our goal is to make inference about θ0(a) for a ∈ 𝒜 using independent observations O1, O2, …, On drawn from P0 ∈ ℳ. This problem is an extension of classical isotonic regression to the setting in which the exposure-outcome relationship is confounded by recorded covariates. This is why we refer to the proposed method as causal isotonic regression. As mentioned above, to the best of our knowledge, nonparametric estimation and inference on a monotone G-computed regression function has not been comprehensively studied before. In what follows, we:

show that our proposed estimator generalizes the unadjusted isotonic regression estimator to the more realistic scenario in which there is confounding by recorded covariates;

investigate finite-sample and asymptotic properties of the proposed estimator, including invariance to strictly increasing transformations of the exposure, doubly-robust consistency, and doubly-robust convergence in distribution to a non-degenerate limit;

derive practical methods for constructing pointwise confidence intervals, including intervals that have valid doubly-robust calibration;

illustrate numerically the practical performance of the proposed estimator.

We note that in Westling and Carone (2019), we studied estimation of θ0 as one of several examples of a general approach to monotonicity-constrained inference. Here, we provide a comprehensive examination of estimation of a monotone dose-response curve. In particular, we establish novel theory and methods that have important practical implications. First, we provide conditions under which the estimator converges in distribution even when one of the nuisance estimators involved in the problem is inconsistent. This contrasts with the results in Westling and Carone (2019), which required that both nuisance parameters be estimated consistently. We also propose two estimators of the scale parameter arising in the limit distribution, one of which requires both nuisance estimators to be consistent, and the other of which does not. Second, we demonstrate that our estimator is invariant to strictly monotone transformations of the exposure. Third, we study the joint convergence of our proposed estimator at two points, and use this result to construct confidence intervals for causal effects. Fourth, we study the behavior of our estimator in the context of discrete exposures. Fifth, we propose an alternative estimator based on cross-fitting of the nuisance estimators, and demonstrate that this strategy removes the need for empirical process conditions required in Westling and Carone (2019). Finally, we investigate the behavior of our estimator in comprehensive numerical studies, and compare its behavior to that of the local linear estimator of Kennedy et al. (2017).

The remainder of the article is organized as follows. In Section 2, we concretely define the proposed estimator. In Section 3, we study theoretical properties of the proposed estimator. In Section 4, we propose methods for pointwise inference. In Section 5, we perform numerical studies to assess the performance of the proposed estimator, and in Section 6, we use this procedure to investigate the relationship between BMI and immune response to HIV vaccines using data from several randomized trials. Finally, we provide concluding remarks in Section 7. Proofs of all theorems are provided in the Supplementary Material.

2. Proposed approach

2.1. Review of isotonic regression

Since the proposed estimator of θ0(a) builds upon isotonic regression, we briefly review the classical least-squares isotonic regression estimator of r0(a). The isotonic regression rn of Y1, Y2, …, Yn on A1, A2, …, An is the minimizer in r of $\sum_{i=1}^n [Y_i - r(A_i)]^2$ over all monotone non-decreasing functions. This minimizer can be obtained via the Pool Adjacent Violators Algorithm (Ayer et al., 1955; Barlow et al., 1972), and can also be represented in terms of greatest convex minorants (GCMs). The GCM of a bounded function f on an interval [a, b] is defined as the pointwise supremum of all convex functions g on [a, b] such that g ≤ f. Letting Fn be the empirical distribution function of A1, A2, …, An, rn(a) can be shown to equal the left derivative, evaluated at Fn(a), of the GCM over the interval [0,1] of the linear interpolation of the so-called cusum diagram

$$\left\{\left(\frac{i}{n},\; \frac{1}{n}\sum_{j=0}^{i} Y_{(j)}\right) : i = 0, 1, \ldots, n\right\},$$

where $Y_{(0)} := 0$ and $Y_{(i)}$ is the value of Y corresponding to the observation with ith smallest value of A.
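The equivalence between the Pool Adjacent Violators Algorithm and the GCM of the cusum diagram can be checked numerically. The following NumPy sketch assumes distinct exposure values. It uses helper names of our own choosing (`pava`, `gcm_left_derivative`, `isotonic_via_cusum`), which are not from any library, and computes the isotonic regression both ways.

```python
import numpy as np


def pava(y):
    """Pool Adjacent Violators: least-squares non-decreasing fit to y (y ordered by A)."""
    means, sizes = [], []
    for yi in np.asarray(y, dtype=float):
        means.append(yi)
        sizes.append(1)
        # Merge the last two blocks while they violate monotonicity.
        while len(means) > 1 and means[-2] > means[-1]:
            m2, s2 = means.pop(), sizes.pop()
            m1, s1 = means.pop(), sizes.pop()
            means.append((s1 * m1 + s2 * m2) / (s1 + s2))
            sizes.append(s1 + s2)
    return np.repeat(means, sizes)


def gcm_left_derivative(x, y):
    """Left derivative of the GCM of the linear interpolation of (x[k], y[k]),
    x strictly increasing, evaluated at x[1], ..., x[-1]."""
    hull = [0]  # indices of the vertices of the lower convex hull
    for k in range(1, len(x)):
        # Drop the last vertex while it lies on or above the chord to point k.
        while len(hull) >= 2:
            i0, i1 = hull[-2], hull[-1]
            if (y[i1] - y[i0]) * (x[k] - x[i0]) >= (y[k] - y[i0]) * (x[i1] - x[i0]):
                hull.pop()
            else:
                break
        hull.append(k)
    slopes = np.empty(len(x) - 1)
    for i0, i1 in zip(hull[:-1], hull[1:]):
        slopes[i0:i1] = (y[i1] - y[i0]) / (x[i1] - x[i0])
    return slopes


def isotonic_via_cusum(A, Y):
    """r_n(A_i) as the left derivative at F_n(A_i) of the GCM of the cusum diagram."""
    order = np.argsort(A)
    n = len(A)
    x = np.arange(n + 1) / n                                # i / n
    y = np.concatenate([[0.0], np.cumsum(Y[order])]) / n    # cusum with Y_(0) := 0
    fit = np.empty(n)
    fit[order] = gcm_left_derivative(x, y)
    return fit


rng = np.random.default_rng(1)
A = rng.uniform(size=200)
Y = A**2 + rng.normal(scale=0.3, size=200)
order = np.argsort(A)
r_pava = np.empty(200)
r_pava[order] = pava(Y[order])
r_gcm = isotonic_via_cusum(A, Y)
print(np.max(np.abs(r_pava - r_gcm)))  # should be at floating-point noise level
```

PAVA is the usual way to compute the fit in practice. The GCM route is shown because it is the representation that the proposed estimator generalizes.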

The isotonic regression estimator rn has many attractive properties. First, unlike smoothing-based estimators, isotonic regression does not require the choice of a kernel function, bandwidth, or any other tuning parameter. Second, it is invariant to strictly increasing transformations of A. Specifically, if H : 𝒜 → ℝ is a strictly increasing function, and $r_n^H$ is the isotonic regression of Y1, Y2, …, Yn on H(A1), H(A2), …, H(An), it follows that $r_n^H(H(a)) = r_n(a)$ for all a. Third, rn is uniformly consistent on any strict subinterval of 𝒜. Fourth, $n^{1/3}[r_n(a) - r_0(a)]$ converges in distribution to $[4 r_0'(a)\sigma_0^2(a)/f_0(a)]^{1/3}\,\mathbb{W}$ for any interior point a of 𝒜 at which $f_0(a) := F_0'(a)$, $r_0'(a)$ and $\sigma_0^2(a) := \mathrm{Var}_0(Y \mid A = a)$ exist, and are positive and continuous in a neighborhood of a. Here, $\mathbb{W} := \operatorname{argmax}_{u \in \mathbb{R}}\{Z_0(u) - u^2\}$, where Z0 denotes a two-sided Brownian motion originating from zero, and $\mathbb{W}$ is said to follow Chernoff’s distribution. Chernoff’s distribution has been extensively studied. Among other properties, it is a log-concave and symmetric law centered at zero, has moments of all orders, and can be approximated by a $N(0, 0.5^2)$ distribution (Chernoff, 1964; Groeneboom and Wellner, 2001). It appears often in the limit distribution of monotonicity-constrained estimators.
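Chernoff’s distribution has no elementary closed form, but its definition as an argmax is easy to approximate by simulation. The sketch below uses a hypothetical helper `simulate_chernoff`; the grid step, truncation window and number of draws are arbitrary choices. It discretizes a two-sided Brownian motion on a grid and records the maximizer of Z0(u) − u². The sample standard deviation should come out close to 0.5, consistent with the normal approximation mentioned above.

```python
import numpy as np


def simulate_chernoff(n_sims=2000, half_width=2.0, step=1e-3, seed=0):
    """Approximate draws of argmax_u {Z0(u) - u^2}, with Z0 a two-sided Brownian motion, Z0(0) = 0.

    The argmax is taken over a grid on [-half_width, half_width]; both the grid and the
    truncation of the real line are approximations."""
    rng = np.random.default_rng(seed)
    m = int(round(half_width / step))
    u = np.arange(-m, m + 1) * step
    draws = np.empty(n_sims)
    for s in range(n_sims):
        # Two independent one-sided Brownian paths started at zero.
        right = np.concatenate([[0.0], np.cumsum(rng.normal(0.0, np.sqrt(step), m))])
        left = np.concatenate([[0.0], np.cumsum(rng.normal(0.0, np.sqrt(step), m))])
        z = np.concatenate([left[:0:-1], right])  # path values at u[0], ..., u[-1]
        draws[s] = u[np.argmax(z - u**2)]
    return draws


draws = simulate_chernoff()
print(draws.mean(), draws.std())  # mean near 0, standard deviation near 0.5
```

Multiplying such draws by the scale factor $[4 r_0'(a)\sigma_0^2(a)/f_0(a)]^{1/3}$ gives an approximation to the limit law of $n^{1/3}[r_n(a) - r_0(a)]$.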

2.2. Definition of proposed estimator

For any given P ∈ ℳ, we define the outcome regression pointwise as μP(a, ω) := EP(Y ∣ A = a, W = ω), and the normalized exposure density as gP(a, ω) := πP(a ∣ ω)/fP(a), where πP(a ∣ ω) is the evaluation at a of the conditional density function of A given W = ω and fP is the marginal density function of A under P. Additionally, we define the pseudo-outcome ξμ,g,Q(y, a, ω) as

$$\xi_{\mu,g,Q}(y, a, \omega) := \frac{y - \mu(a, \omega)}{g(a, \omega)} + \int \mu(a, \tilde{\omega})\, Q(d\tilde{\omega}).$$

As noted by Kennedy et al. (2017), E0[ξμ,g,Q0(Y, A, W) ∣ A = a] = θ0(a) if either μ = μ0 or g = g0. They used this fact to motivate an estimator θn,h(a) of θ0(a), defined as the local linear regression with bandwidth h > 0 of the pseudo-outcomes ξμn,gn,Qn(Y1, A1, W1), ξμn,gn,Qn(Y2, A2, W2), …, ξμn,gn,Qn(Yn, An, Wn) on A1, A2, …, An, where μn is an estimator of μ0, gn is an estimator of g0, and Qn is the empirical distribution function based on W1, W2, …, Wn. The study of this nonparametric regression problem is not standard because the pseudo-outcomes are dependent when the nuisance functions are estimated from the data. Nevertheless, Kennedy et al. (2017) showed that their estimator is consistent if either μn or gn is consistent. Additionally, under regularity conditions, they showed that if both nuisance estimators converge fast enough and the bandwidth tends to zero at rate $n^{-1/5}$, then $n^{2/5}[\theta_{n,h}(a) - \theta_0(a)] \to_d N(b_0(a), \nu_0(a))$, where b0(a) is an asymptotic bias depending on the second derivative of θ0, and ν0(a) is an asymptotic variance.
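The double-robustness property of the pseudo-outcome follows from a short calculation. It is sketched here under the assumption that μ and g are fixed (not estimated) functions with g bounded away from zero. The key fact is that the conditional distribution of W given A = a under P0 is g0(a, ω)Q0(dω), since π0(a ∣ ω)Q0(dω)/f0(a) = g0(a, ω)Q0(dω). Using E0(Y ∣ A = a, W) = μ0(a, W):

$$
\begin{aligned}
E_0\left[\xi_{\mu,g,Q_0}(Y, A, W) \mid A = a\right]
&= E_0\left[\frac{\mu_0(a, W) - \mu(a, W)}{g(a, W)} \,\Big|\, A = a\right] + \int \mu(a, \omega)\, Q_0(d\omega) \\
&= \int \left[\mu_0(a, \omega) - \mu(a, \omega)\right] \frac{g_0(a, \omega)}{g(a, \omega)}\, Q_0(d\omega) + \int \mu(a, \omega)\, Q_0(d\omega).
\end{aligned}
$$

Subtracting θ0(a) = ∫ μ0(a, ω) Q0(dω) gives

$$
E_0\left[\xi_{\mu,g,Q_0}(Y, A, W) \mid A = a\right] - \theta_0(a) = \int \left[\mu_0(a, \omega) - \mu(a, \omega)\right]\left[\frac{g_0(a, \omega)}{g(a, \omega)} - 1\right] Q_0(d\omega).
$$

The right-hand side is a product of the two nuisance errors, so it vanishes whenever μ = μ0 or g = g0.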

In our setting, θ0 is known to be monotone. Therefore, instead of using a local linear regression to estimate the conditional mean of the pseudo-outcomes, it is natural to consider as an estimator the isotonic regression of the pseudo-outcomes on A1, A2, … , An. Using the GCM representation of isotonic regression stated in the previous section, we can summarize our estimation procedure as follows:

Construct estimators μn and gn of μ0 and g0, respectively.

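For each a in the unique values of A1, A2, …, An, compute $F_n(a) := \frac{1}{n}\sum_{i=1}^n I_{(-\infty,a]}(A_i)$ and set

$$\Gamma_n(a) := \frac{1}{n}\sum_{i=1}^n I_{(-\infty,a]}(A_i)\,\frac{Y_i - \mu_n(A_i, W_i)}{g_n(A_i, W_i)} + \frac{1}{n^2}\sum_{i=1}^n\sum_{j=1}^n I_{(-\infty,a]}(A_i)\,\mu_n(A_i, W_j). \qquad (1)$$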

Compute the GCM $\bar{\Gamma}_n$ of the set of points {(0,0)} ∪ {(Fn(Ai), Γn(Ai)) : i = 1, 2, …, n} over [0,1].

Define θn(a) as the left derivative of $\bar{\Gamma}_n$ evaluated at Fn(a).
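Steps 2–4 above can be sketched in NumPy as follows. The sketch relies on one observation: Γn(a) in (1) equals (1/n) times the sum of the estimated pseudo-outcomes ξμn,gn,Qn(Yi, Ai, Wi) over observations with Ai ≤ a. Step 1 (fitting the nuisances) is not shown. The interface is hypothetical: `mu(a, w)` and `g(a, w)` are user-supplied, vectorized callables standing in for μn and gn, and `causal_isotonic_regression` and `gcm_left_derivative` are our own names.

```python
import numpy as np


def gcm_left_derivative(x, y):
    """Left derivative of the GCM of the linear interpolation of (x[k], y[k]),
    x strictly increasing, evaluated at x[1], ..., x[-1]."""
    hull = [0]  # indices of the vertices of the lower convex hull
    for k in range(1, len(x)):
        while len(hull) >= 2:
            i0, i1 = hull[-2], hull[-1]
            # Drop the last vertex while it lies on or above the chord to point k.
            if (y[i1] - y[i0]) * (x[k] - x[i0]) >= (y[k] - y[i0]) * (x[i1] - x[i0]):
                hull.pop()
            else:
                break
        hull.append(k)
    slopes = np.empty(len(x) - 1)
    for i0, i1 in zip(hull[:-1], hull[1:]):
        slopes[i0:i1] = (y[i1] - y[i0]) / (x[i1] - x[i0])
    return slopes


def causal_isotonic_regression(A, Y, W, mu, g):
    """Return the sorted unique exposure values and theta_n evaluated at each of them."""
    A, Y = np.asarray(A, dtype=float), np.asarray(Y, dtype=float)
    n = len(A)
    a_uniq, inv = np.unique(A, return_inverse=True)
    inv = inv.ravel()
    # theta_{mu_n}(a) = (1/n) sum_j mu_n(a, W_j) at each unique exposure value.
    plug = np.array([np.mean(mu(np.full(n, a), W)) for a in a_uniq])
    # Estimated pseudo-outcomes; Gamma_n(a) = (1/n) * sum of xi_i over A_i <= a.
    xi = (Y - mu(A, W)) / g(A, W) + plug[inv]
    counts = np.bincount(inv, minlength=len(a_uniq))
    F = np.concatenate([[0.0], np.cumsum(counts) / n])
    Gamma = np.concatenate([[0.0], np.cumsum(np.bincount(inv, weights=xi, minlength=len(a_uniq))) / n])
    # Left derivative of the GCM of {(0, 0)} and the points (F_n(a), Gamma_n(a)).
    return a_uniq, gcm_left_derivative(F, Gamma)


rng = np.random.default_rng(2)
n = 500
W = rng.normal(size=n)
A = 0.5 * W + rng.normal(size=n)                    # exposure depends on the confounder W
Y = np.tanh(A) + W + rng.normal(scale=0.5, size=n)  # here theta_0(a) = tanh(a)
mu = lambda a, w: np.tanh(a) + w                    # true outcome regression, for illustration only
g = lambda a, w: np.ones_like(a)                    # deliberately misspecified g_n = 1
grid, theta = causal_isotonic_regression(A, Y, W, mu, g)
```

In the example, gn is wrong but μn is correct, so by double robustness θn should still track tanh(a). In practice both callables would come from fitted models. The O(n²) loop over unique values is acceptable for a sketch but not tuned for large n.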

As in the work of Kennedy et al. (2017), while the proposed estimator θn can be defined as an isotonic regression, the asymptotic properties of our estimator do not appear to simply follow from classical results for isotonic regression because the pseudo-outcomes depend on the estimators μn, gn and Qn, which themselves depend on all the observations. However, θn is of generalized Grenander-type, and thus the asymptotic results of Westling and Carone (2019) can be used to study its asymptotic properties. To see that θn is a generalized Grenander-type estimator, we define ψP := θP ∘ FP⁻¹ and note that since θP and FP⁻¹ are increasing, so is ψP. Therefore, the primitive function $\Psi_P(t) := \int_0^t \psi_P(u)\,du$ is convex. Next, we define ΓP := ΨP ∘ FP, so that $\Gamma_P(a) = \int_{-\infty}^{a} \theta_P(u)\,F_P(du)$. The parameter ΓP(a0) is pathwise differentiable at P in ℳ for each a0, and its nonparametric efficient influence function is

$$D^*_{P,a_0}(y, a, \omega) := I_{(-\infty,a_0]}(a)\left[\frac{y - \mu_P(a, \omega)}{g_P(a, \omega)} + \theta_P(a)\right] + \int I_{(-\infty,a_0]}(u)\,\mu_P(u, \omega)\,F_P(du) - 2\,\Gamma_P(a_0).$$

Denoting by Pn any estimator of P0 compatible with estimators μn, gn, Fn and Qn of μ0, g0, F0 and Q0, respectively, the one-step estimator of Γ0(a) is given by $\Gamma_n(a) := \Gamma_{\mu_n,F_n,Q_n}(a) + \frac{1}{n}\sum_{i=1}^n D^*_{P_n,a}(O_i)$, where we define $\Gamma_{\mu,F,Q}(a) := \iint I_{(-\infty,a]}(u)\,\mu(u, \omega)\,F(du)\,Q(d\omega)$. This one-step estimator is equivalent to that defined in (1). We then define $\Psi_n := \Gamma_n \circ F_n^{-}$, where $F_n^{-}$ denotes the empirical quantile function of A, as our estimator of Ψ0, and ψn as the left derivative of the GCM of Ψn. Thus, we find that θn = ψn ∘ Fn is the estimator defined in steps 1–4. This form of the estimator was described in Westling and Carone (2019), where it was briefly discussed as one of several examples of a general strategy for nonparametric monotone inference.
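The claim that the one-step estimator coincides with (1) can be checked numerically. The sketch below takes the efficient influence function of ΓP(a0) to be I(a ≤ a0)[(y − μP(a, ω))/gP(a, ω) + θP(a)] + ∫ I(u ≤ a0) μP(u, ω) FP(du) − 2ΓP(a0). It evaluates that function under the empirical distributions Fn and Qn, adds it to the plug-in, and compares the result with formula (1). The nuisance functions `mu` and `g` are arbitrary, hypothetical choices, since the identity is purely algebraic.

```python
import numpy as np


def gamma_formula_1(a, A, Y, W, mu, g):
    """Gamma_n(a) computed directly from display (1)."""
    ind = (A <= a).astype(float)
    M = mu(A[:, None], W[None, :])  # M[i, j] = mu_n(A_i, W_j)
    return np.mean(ind * (Y - mu(A, W)) / g(A, W)) + np.sum(ind[:, None] * M) / len(A) ** 2


def gamma_one_step(a, A, Y, W, mu, g):
    """Plug-in Gamma_{mu_n,F_n,Q_n}(a) plus the empirical mean of D*_{P_n,a}(O_i)."""
    ind = (A <= a).astype(float)
    M = mu(A[:, None], W[None, :])       # M[i, j] = mu_n(A_i, W_j)
    theta_mu = M.mean(axis=1)            # int mu_n(A_i, w) Q_n(dw)
    plug_in = np.mean(ind * theta_mu)    # double integral against F_n x Q_n
    eif = (ind * ((Y - mu(A, W)) / g(A, W) + theta_mu)
           + (ind[:, None] * M).mean(axis=0)  # int 1{u <= a} mu_n(u, W_i) F_n(du)
           - 2.0 * plug_in)
    return plug_in + eif.mean()


rng = np.random.default_rng(3)
n = 300
W = rng.normal(size=n)
A = W + rng.normal(size=n)
Y = A + W + rng.normal(size=n)
mu = lambda a, w: np.sin(a) + a * w            # arbitrary outcome regression estimate
g = lambda a, w: np.exp(0.3 * np.tanh(a * w))  # arbitrary positive g_n
for a in (-1.0, 0.0, 1.5):
    print(gamma_formula_1(a, A, Y, W, mu, g), gamma_one_step(a, A, Y, W, mu, g))
```

The two columns should agree up to rounding. Algebraically, the three plug-in terms generated by the influence function cancel the −2ΓP(a0) correction except for one copy.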

If θ0(a) were only known to be monotone on a fixed sub-interval 𝒜0 ⊂ 𝒜, we would define FP(a) := P(A ≤ a ∣ A ∈ 𝒜0) as the marginal distribution function restricted to 𝒜0, and Fn as its empirical counterpart. Similarly, I(−∞,a](Ai) in (1) would be replaced with $I_{(-\infty,a] \cap \mathcal{A}_0}(A_i)$. In all other respects, our estimation procedure would remain the same.

Finally, as alluded to earlier, we observe that the proposed estimator generalizes classical isotonic regression in a way we now make precise. If it is known that A is independent of W (Condition 1), so that g0(a, ω) = 1 for all supported (a, ω), we may take gn = 1. If, furthermore, it is known that Y is independent of W given A (Condition 2), then we may construct μn such that μn(a, ω) = μn(a) for all supported (a, ω). Inserting gn = 1 and any such μn into (1), the two terms involving μn cancel, and we obtain that $\Gamma_n(a) = \frac{1}{n}\sum_{i=1}^n I_{(-\infty,a]}(A_i)\,Y_i$, which is the cusum diagram of Section 2.1. Thus θn(a) = rn(a) for each a. Hence, in this case, our estimator reduces to least-squares isotonic regression.

3. Theoretical properties

3.1. Invariance to strictly increasing exposure transformations

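An important feature of the proposed estimator is that, as with the isotonic regression estimator, it is invariant to any strictly increasing transformation of A. This is a desirable property because the scale of a continuous exposure is often arbitrary from a statistical perspective. For instance, if A is temperature, whether A is measured in degrees Fahrenheit, Celsius or Kelvin does not change the information available. In particular, if the parameters θ0 and $\theta_0^H$ correspond to using as exposure A and H(A), respectively, for H some strictly increasing transformation, then θ0 and $\theta_0^H$ encode exactly the same information about the effect of A on Y after adjusting for W. It is therefore natural to expect any sensible estimator to be invariant to the scale on which the exposure is measured.

Setting X := H(A) for a strictly increasing function H : 𝒜 → ℝ, we first note that the function $\theta_0^H : x \mapsto E_0[E_0(Y \mid X = x, W)] = \theta_0 \circ H^{-1}(x)$ is non-decreasing. Next, we define $\mu_0^H(x, \omega) := E_0(Y \mid X = x, W = \omega)$ and $g_0^H(x, \omega) := \pi_0^H(x \mid \omega)/f_0^H(x)$, where $\pi_0^H(x \mid \omega)$ is the evaluation at x of the conditional density function of X given W = ω and $f_0^H$ is the marginal density function of X under P0. We denote by $\mu_n^H$ and $g_n^H$ estimators of $\mu_0^H$ and $g_0^H$, respectively. The estimation procedure defined in the previous section, but using exposure X instead of A, then leads to the estimator $\theta_n^H := \psi_n^H \circ F_n^H$. Here $F_n^H$ is the empirical distribution function based on X1, X2, …, Xn, and $\psi_n^H$ is the left derivative of the GCM of the points $\{(0,0)\} \cup \{(F_n^H(X_i), \Gamma_n^H(X_i)) : i = 1, \ldots, n\}$ for

$$\Gamma_n^H(x) := \frac{1}{n}\sum_{i=1}^n I_{(-\infty,x]}(X_i)\,\frac{Y_i - \mu_n^H(X_i, W_i)}{g_n^H(X_i, W_i)} + \frac{1}{n^2}\sum_{i=1}^n\sum_{j=1}^n I_{(-\infty,x]}(X_i)\,\mu_n^H(X_i, W_j).$$

Suppose that $\mu_n^H(H(a), \omega) = \mu_n(a, \omega)$ and $g_n^H(H(a), \omega) = g_n(a, \omega)$ for all (a, ω). This mirrors the population identities $\mu_0^H(H(a), \omega) = \mu_0(a, \omega)$ and $g_0^H(H(a), \omega) = g_0(a, \omega)$, the latter holding because the Jacobian of H cancels in the density ratio. The assumption implies that the nuisance estimators μn and gn are themselves invariant to strictly increasing transformations of A. Since also $F_n^H(H(a)) = F_n(a)$ and $I_{(-\infty,H(a)]}(H(A_i)) = I_{(-\infty,a]}(A_i)$, we have that $\Gamma_n^H(H(a)) = \Gamma_n(a)$. The GCMs defining $\psi_n^H$ and ψn are therefore computed from the same set of points, so $\psi_n^H = \psi_n$. It follows that $\theta_n^H(H(a)) = \psi_n^H(F_n^H(H(a))) = \psi_n(F_n(a)) = \theta_n(a)$. In other words, the proposed estimator θn of θ0 is invariant to any strictly increasing transformation of the exposure variable.

We note that it is easy to ensure that these two identities hold. Set U := Fn(A), which is also equal to $F_n^H(X)$, and let $\bar{\mu}_n(u, \omega)$ be an estimator of the conditional mean of Y given (U, W) = (u, ω). Then, taking $\mu_n(a, \omega) := \bar{\mu}_n(F_n(a), \omega)$ and $\mu_n^H(x, \omega) := \bar{\mu}_n(F_n^H(x), \omega)$, the pair $(\mu_n, \mu_n^H)$ satisfies the desired property. Similarly, let $\bar{\pi}_n(u \mid \omega)$ be an estimator of the conditional density of U = u given W = ω and set $g_n(a, \omega) := \bar{\pi}_n(F_n(a) \mid \omega)$; we may then take $g_n^H(x, \omega) := \bar{\pi}_n(F_n^H(x) \mid \omega)$. This choice is natural because the conditional density of F0(A) given W = ω, evaluated at F0(a), equals π0(a ∣ ω)/f0(a) = g0(a, ω).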
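The construction above can be illustrated numerically. The following sketch builds μn on the rank scale U = Fn(A) and checks that the points (Fn(Ai), Γn(Ai)) entering the GCM are the same whether the exposure is A or H(A) = exp(A). Equal point sets imply equal GCMs and hence equal estimates. Everything here is a hypothetical illustration. `cusum_points` is our own name, $\bar{\mu}_n$ is a least-squares fit of Y on (1, U, W, U·W), and gn ≡ 1 is a deliberately simple working model. Any estimator of the conditional density of U given W would preserve invariance in the same way.

```python
import numpy as np


def cusum_points(A, Y, W):
    """Sorted points (F_n(A_i), Gamma_n(A_i)) with nuisances built on the rank scale U = F_n(A).

    Assumes distinct exposure values. mu_bar is a least-squares fit of Y on
    (1, U, W, U*W), a hypothetical working model; g_n is identically 1."""
    n = len(A)
    A_sorted = np.sort(A)
    F_n = lambda a: np.searchsorted(A_sorted, a, side="right") / n  # empirical CDF of A
    U = F_n(A)
    design = lambda u, w: np.column_stack([np.ones_like(u), u, w, u * w])
    beta, *_ = np.linalg.lstsq(design(U, W), Y, rcond=None)
    mu_n = lambda a, w: design(F_n(a), w) @ beta  # mu_n(a, w) := mu_bar(F_n(a), w)
    g_n = lambda a, w: np.ones_like(a)
    plug = np.array([mu_n(np.full(n, a), W).mean() for a in A])  # theta_{mu_n}(A_i)
    xi = (Y - mu_n(A, W)) / g_n(A, W) + plug                     # estimated pseudo-outcomes
    order = np.argsort(A)
    return U[order], np.cumsum(xi[order]) / n


rng = np.random.default_rng(4)
n = 200
W = rng.normal(size=n)
A = 0.5 * W + rng.normal(size=n)
Y = np.tanh(A) + W + rng.normal(scale=0.5, size=n)
x_A, y_A = cusum_points(A, Y, W)          # exposure A
x_H, y_H = cusum_points(np.exp(A), Y, W)  # exposure H(A) = exp(A)
print(np.max(np.abs(x_A - x_H)), np.max(np.abs(y_A - y_H)))  # both should be zero
```

If μn were instead fitted on the raw exposure scale, for example by a linear regression on A, the two point sets would generally differ. Invariance would then fail.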

3.2. Consistency

We now provide sufficient conditions under which consistency of θn is guaranteed. Our conditions require controlling the uniform entropy of certain classes of functions. For a uniformly bounded class of functions ℱ, a finite discrete probability measure Q, and any ε > 0, the ε-covering number N(ε, ℱ, L2(Q)) of ℱ relative to the L2(Q) metric is the smallest number of L2(Q)-balls of radius less than or equal to ε needed to cover ℱ. The uniform ε-entropy of ℱ is then defined as $\log \sup_Q N(\varepsilon, \mathcal{F}, L_2(Q))$, where the supremum is taken over all finite discrete probability measures. For a thorough treatment of covering numbers and their role in empirical process theory, we refer readers to van der Vaart and Wellner (1996).

Below, we state three sufficient conditions we will refer to in the following theorem.

(A1) There exist constants C, δ, K0, K1, K2 ∈ (0, +∞) and V ∈ [0, 2) such that, almost surely as n → ∞, μn and gn are contained in classes of functions ℱμ and ℱg, respectively, satisfying:

(a) ∣μ∣ ≤ K0 for all μ ∈ ℱμ, and K1 ≤ g ≤ K2 for all g ∈ ℱg;

(b) $\log \sup_Q N(\varepsilon, \mathcal{F}_\mu, L_2(Q)) \le C\varepsilon^{-V/2}$ and $\log \sup_Q N(\varepsilon, \mathcal{F}_g, L_2(Q)) \le C\varepsilon^{-V}$ for all ε ≤ δ.

(A2) There exist μ∞ ∈ ℱμ and g∞ ∈ ℱg such that $\|\mu_n - \mu_\infty\|_{L_2(F_0 \times Q_0)} \to_P 0$ and $\|g_n - g_\infty\|_{L_2(F_0 \times Q_0)} \to_P 0$.

(A3) There exist subsets S1, S2 and S3 of 𝒜 × 𝒲 such that P0(S1 ∪ S2 ∪ S3) = 1 and:

(a) μ∞(a, ω) = μ0(a, ω) for all (a, ω) ∈ S1;

(b) g∞(a, ω) = g0(a, ω) for all (a, ω) ∈ S2;

(c) μ∞(a, ω) = μ0(a, ω) and g∞(a, ω) = g0(a, ω) for all (a, ω) ∈ S3.

Under these three conditions, we have the following result.

Theorem 1 (Consistency). If conditions (A1)–(A3) hold, then $\theta_n(a) \to_P \theta_0(a)$ for any value a ∈ 𝒜 such that F0(a) ∈ (0,1), θ0 is continuous at a, and F0 is strictly increasing in a neighborhood of a. If, in addition, θ0 is uniformly continuous and F0 is strictly increasing on 𝒜, then $\sup_{a \in \mathcal{A}_0} |\theta_n(a) - \theta_0(a)| \to_P 0$ for any bounded strict subinterval 𝒜0 ⊂ 𝒜.

We note that in the pointwise statement of Theorem 1, F0(a) is required to be in the interior of [0, 1], and similarly, the uniform statement of Theorem 1 only covers strict subintervals of . This is due to the well-known boundary issues with Grenander-type estimators. Various remedies have been proposed in particular settings, and it would be interesting to consider these in future work (see, e.g., Woodroofe and Sun, 1993; Balabdaoui et al., 2011; Kulikov and Lopuhaä, 2006).

Condition (A1) requires that μn and gn eventually be contained in uniformly bounded function classes that are small enough for certain empirical process terms to be controlled. This condition is easily satisfied if, for instance, ℱμ and ℱg are parametric classes. It is also satisfied for many infinite-dimensional function classes. Uniform entropy bounds for many such classes may be found in Chapter 2.6 of van der Vaart and Wellner (1996). We note that there is an asymmetry between the entropy requirements for ℱμ and ℱg in part (b) of (A1). This is due to the term $\iint I_{(-\infty,a]}(u)\,\mu_n(u, \omega)\,F_n(du)\,Q_n(d\omega)$ appearing in Γn(a). To control this term, we use an upper bound of the form $\int_0^1 \sup_Q \log N(\varepsilon, \mathcal{F}, L_2(Q))\,d\varepsilon$ from the theory of empirical U-processes (Nolan and Pollard, 1987). This contrasts with the uniform entropy integral $\int_0^1 \sup_Q [\log N(\varepsilon, \mathcal{F}, L_2(Q))]^{1/2}\,d\varepsilon$ that bounds ordinary empirical processes indexed by a uniformly bounded class ℱ. Under part (b) of (A1), both integrals are finite because the integrands near zero are of order $\varepsilon^{-V/2}$ with V < 2. In Section 3.7, we consider the use of cross-fitting to avoid the entropy conditions in (A1).

Condition (A2) requires that μn and gn tend to limit functions μ∞ and g∞, and condition (A3) requires that either μ∞(a, ω) = μ0(a, ω) or g∞(a, ω) = g0(a, ω) for (F0 × Q0)-almost every (a, ω). If either (i) S1 and S3 are null sets or (ii) S2 and S3 are null sets, then condition (A3) is known simply as double-robustness of the estimator θn relative to the nuisance functions μ0 and g0: θn is consistent as long as μ∞ = μ0 or g∞ = g0. Doubly-robust estimators are at this point a mainstay of causal inference and have been studied for over two decades (see, e.g., Robins et al., 1994; Rotnitzky et al., 1998; Scharfstein et al., 1999; van der Laan and Robins, 2003; Neugebauer and van der Laan, 2005; Bang and Robins, 2005). However, (A3) is more general than classical double-robustness, as it allows neither μn nor gn to tend to their true counterparts over the whole domain, as long as at least one of μn or gn tends to the truth for almost every point in the domain.

3.3. Convergence in distribution

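We now study the convergence in distribution of $n^{1/3}[\theta_n(a) - \theta_0(a)]$ for fixed a. We first define, for any square-integrable functions h1, h2 : 𝒜 × 𝒲 → ℝ, ε > 0 and S ⊆ 𝒜 × 𝒲, the pseudo-distance

$$d(h_1, h_2; a, \varepsilon, S) := \sup_{|u - a| \le \varepsilon} \left( E_0\left[\{h_1(u, W) - h_2(u, W)\}^2\, I_S(u, W)\right] \right)^{1/2}. \qquad (2)$$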

We also denote by σ0²(a, ω) the conditional variance E0{[Y − μ0(A, W)]² ∣ A = a, W = ω} of Y given A = a and W = ω under P0. Below, we will refer to these two additional conditions:

(A4) There exists ε0 > 0 such that:

(a) max{d(μn, μ∞; a, ε0, S1), d(gn, g∞; a, ε0, S2)} = $o_P(n^{-1/3})$;

(b) max{d(μn, μ∞; a, ε0, S2), d(gn, g∞; a, ε0, S1)} = $o_P(1)$;

(c) d(μn, μ∞; a, ε0, S3) d(gn, g∞; a, ε0, S3) = $o_P(n^{-1/3})$.

(A5) F0, μ0, μ∞, g0, g∞ and σ0² are continuously differentiable in a neighborhood of a uniformly over ω ∈ 𝒲.

Under the conditions introduced so far, we have the following distributional result.

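Theorem 2 (Convergence in distribution). If conditions (A1)–(A5) hold, then

$$n^{1/3}\left[\theta_n(a) - \theta_0(a)\right] \to_d \left[4\tau_0(a)\right]^{1/3} \mathbb{W}$$

for any a ∈ 𝒜 such that F0(a) ∈ (0,1), where $\mathbb{W}$ follows the standard Chernoff distribution and

$$\tau_0(a) := \frac{\theta_0'(a)\,\kappa_0(a)}{f_0(a)}, \qquad \kappa_0(a) := E_0\left[\left\{\frac{Y - \mu_\infty(A, W)}{g_\infty(A, W)} + \theta_\infty(A) - \theta_0(A)\right\}^2 \,\Big|\, A = a\right],$$

with θ∞(a) denoting ∫ μ∞(a, ω)Q0(dω).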

We note that the limit distribution in Theorem 2 is the same as that of the standard isotonic regression estimator up to a scale factor. As noted above, when either (i) Y and W are independent given A or (ii) A is independent of W, the functions θ0 and r0 coincide. As such, we can directly compare the respective limit distributions of n1/3 [θn(a) – θ0(a)] and n1/3 [rn(a) – r0(a)] under these conditions. When both μ∞ = μ0 and g∞ = g0, rn(a) is asymptotically more concentrated than θn(a) in scenario (i), and less concentrated in scenario (ii). This is analogous to findings in linear regression, where including a covariate uncorrelated with the outcome inflates the standard error of the estimator of the coefficient corresponding to the exposure, while including a covariate correlated with the outcome but uncorrelated with the exposure deflates its standard error.
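The comparison in the two scenarios can be made explicit under a few assumptions. Assume μ∞ = μ0 and g∞ = g0, so that θ∞ = θ0. Assume also that the scale in Theorem 2 takes the form $[4\theta_0'(a)\kappa_0(a)/f_0(a)]^{1/3}$ with $\kappa_0(a) := E_0[\{(Y - \mu_\infty(A,W))/g_\infty(A,W) + \theta_\infty(A) - \theta_0(A)\}^2 \mid A = a]$. The isotonic regression scale is $[4r_0'(a)\sigma_0^2(a)/f_0(a)]^{1/3}$ with $\sigma_0^2(a) := \mathrm{Var}_0(Y \mid A = a)$. Because W given A = a has distribution g0(a, ω)Q0(dω),

$$\kappa_0(a) = E_0\left[\frac{\{Y - \mu_0(A, W)\}^2}{g_0(A, W)^2} \,\Big|\, A = a\right] = \int \frac{\sigma_0^2(a, \omega)}{g_0(a, \omega)}\, Q_0(d\omega).$$

In scenario (i), σ0²(a, ω) = σ0²(a) for all ω. Since ∫ g0(a, ω) Q0(dω) = 1, Jensen's inequality applied to x ↦ 1/x gives

$$\kappa_0(a) = \sigma_0^2(a) \int \frac{Q_0(d\omega)}{g_0(a, \omega)} \ \ge\ \frac{\sigma_0^2(a)}{\int g_0(a, \omega)\, Q_0(d\omega)} = \sigma_0^2(a).$$

In scenario (ii), g0 ≡ 1 and W given A = a has distribution Q0, so the law of total variance gives

$$\kappa_0(a) = \int \sigma_0^2(a, \omega)\, Q_0(d\omega) = E_0\left[\mathrm{Var}_0(Y \mid A, W) \mid A = a\right] \ \le\ \sigma_0^2(a).$$

Since θ0′ = r0′ in both scenarios, these inequalities translate directly into the stated ordering of the limit scales.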

Condition (A4) requires that, on the set S1 where μn is consistent but gn is not, μn converges faster than n−1/3 uniformly in a neighborhood of a, and similarly for gn on the set S2. On the set S3 where both μn and gn are consistent, only the product of their rates of convergence must be faster than n−1/3. Hence, a non-degenerate limit theory is available as long as at least one of the nuisance estimators is consistent at a rate faster than n−1/3, even if the other nuisance estimator is inconsistent. This suggests the possibility of performing doubly-robust inference for θ0(a), that is, of constructing confidence intervals and tests based on θn (a) with valid calibration even when one of μ0 and g0 is inconsistently estimated. This is explored in Section 4. Finally, as in Theorem 1, we allow that neither μn nor gn be consistent everywhere, as long as for (F0 × Q0)-almost every (a,ω) at least one of μn or gn is consistent.

We remark that if it is known that μn (a, ·) is consistent for μ0(a, ·) in an L2(Q0) sense at rate faster than n−1/3, the isotonic regression of the plug-in estimator θμn(a) := ∫ μn(a,ω)Qn(dω) – which can be equivalently obtained by setting gn(a, ·) = +∞ in the construction of θn(a) – achieves a faster rate of convergence to θ0(a) than does θn(a). This might motivate an analyst to use θμn (a) rather than θn(a) in such a scenario. However, the consistency of θμn(a) hinges entirely on the fact that μ∞ = μ0, and in particular, θμn (a) will be inconsistent if μ∞ ≠ μ0, even if g∞ = g0. Additionally, the estimator θμn (a) may not generally admit a tractable limit theory upon which to base the construction of valid confidence intervals, particularly when machine learning methods are used to build μn.

3.4. Grenander-type estimation without domain transformation

As indicated earlier, the isotonic regression estimator based on estimated pseudo-outcomes coincides with a generalized Grenander-type estimator for which the marginal exposure empirical distribution function is used as domain transformation. An alternative estimator could be constructed via Grenander-type estimation without the use of any domain transformation. Specifically, we let a−, a+ ∈ 𝒜 be fixed, and we define $\Theta_0(a) := \int_{a_-}^{a} \theta_0(u)\,du$. Under regularity conditions, for a ∈ [a−, a+], the one-step estimator of Θ0(a) given by

$$\Theta_n(a) := \frac{1}{n}\sum_{i=1}^n \left[ I_{[a_-, a]}(A_i)\,\frac{Y_i - \mu_n(A_i, W_i)}{\pi_n(A_i \mid W_i)} + \int_{a_-}^{a} \mu_n(u, W_i)\,du \right]$$

is asymptotically efficient, where πn is an estimator of π0, the conditional density of A given W under P0. The left derivative of the GCM of Θn over [a−, a+] defines an alternative estimator $\tilde{\theta}_n(a)$.

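It is natural to ask how $\tilde{\theta}_n$ compares to the estimator θn we have studied thus far. First, we note that, unlike θn, $\tilde{\theta}_n$ neither generalizes the classical isotonic regression estimator nor is invariant to strictly increasing transformations of A. Additionally, utilizing the transformation F0 fixes [0, 1] as the interval over which the GCM should be performed. If 𝒜 is known to be a bounded set, a− and a+ can be taken as the endpoints of 𝒜; otherwise, the domain [a−, a+] must be chosen in defining $\tilde{\theta}_n$. Turning to an asymptotic analysis, using the results of Westling and Carone (2019), it is possible to establish conditions akin to (A1)–(A5) under which $n^{1/3}[\tilde{\theta}_n(a) - \theta_0(a)] \to_d [4\tilde{\tau}_0(a)]^{1/3}\,\mathbb{W}$ with scale parameter

$$\tilde{\tau}_0(a) := \theta_0'(a)\, f_0(a)\, E_0\left[\left\{\frac{Y - \mu_\infty(A, W)}{\pi_\infty(A \mid W)}\right\}^2 \,\Big|\, A = a\right],$$

where π∞ is the limit of πn in probability. We denote by $[4\tau_0(a)]^{1/3}$ and $[4\tilde{\tau}_0(a)]^{1/3}$ the limit scaling factors of $n^{1/3}[\theta_n(a) - \theta_0(a)]$ and $n^{1/3}[\tilde{\theta}_n(a) - \theta_0(a)]$, respectively. If g∞ = π∞/f0 and μ∞ = μ0, then $\tilde{\tau}_0(a) = \tau_0(a)$, and $n^{1/3}[\theta_n(a) - \theta_0(a)]$ and $n^{1/3}[\tilde{\theta}_n(a) - \theta_0(a)]$ have the same limit distribution. If instead g∞ = π∞/f0 = g0 but μ∞ ≠ μ0, this is no longer the case. In fact, we can show that

$$\tilde{\tau}_0(a) - \tau_0(a) = \frac{\theta_0'(a)\,[\theta_\infty(a) - \theta_0(a)]^2}{f_0(a)} \ \ge\ 0.$$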

Hence, when the outcome regression estimator μn is inconsistent, gains in efficiency are achieved by utilizing the transformation, and the relative gain in efficiency is directly related to the amount of asymptotic bias in the estimation of μ0.
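The Grenander-type step used in this subsection, taking the left derivative of the greatest convex minorant (GCM) of a primitive estimate, can be sketched in a few lines. The Python sketch below is a minimal illustration under the assumption that the primitive Θn has already been evaluated on an increasing grid of points in [a−, a+]; the function name `gcm_left_derivative` and the grid representation are illustrative choices, not part of the method's specification. It builds the lower convex hull of the points and returns, at each grid point, the slope of the hull segment ending there.

```python
import numpy as np


def gcm_left_derivative(x, y):
    """Left derivatives of the greatest convex minorant (GCM) of points (x[k], y[k]).

    Assumes x is strictly increasing. slope[k] is the left derivative of the
    GCM at x[k] for k >= 1; slope[0] is set equal to slope[1] by convention.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    hull = [0]  # indices of the GCM vertices (lower convex hull)
    for k in range(1, len(x)):
        # Drop the last vertex while slope(i -> j) >= slope(j -> k),
        # i.e. while vertex j lies on or above the chord from i to k.
        while len(hull) >= 2:
            i, j = hull[-2], hull[-1]
            if (y[j] - y[i]) * (x[k] - x[j]) >= (y[k] - y[j]) * (x[j] - x[i]):
                hull.pop()
            else:
                break
        hull.append(k)
    slope = np.empty(len(x))
    for i, j in zip(hull[:-1], hull[1:]):
        # The GCM is linear between consecutive vertices, so every point in
        # (x[i], x[j]] has the same left derivative.
        slope[i + 1 : j + 1] = (y[j] - y[i]) / (x[j] - x[i])
    slope[0] = slope[1] if len(x) > 1 else np.nan
    return slope
```

For the cumulative-sum diagram of the data values 1, 3, 2, 4, namely the points (0, 0), (1, 1), (2, 4), (3, 6), (4, 10), the slopes at x = 1, …, 4 are 1, 2.5, 2.5, 4, which are the values that least-squares isotonic regression returns for that sequence.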

3.5. Discrete domains

In some circumstances, the exposure A is discrete rather than continuous. Our estimator works equally well in these cases since, as we show below, it is then asymptotically equivalent to the well-studied augmented IPW (AIPW) estimator. As a result, the large-sample properties of our estimator can be derived from those of the AIPW estimator, and asymptotically valid inference can be obtained using standard influence function-based techniques.

Suppose that A takes values in a finite set {a1, a2, …, am}, with a1 < a2 < ⋯ < am, and that f0,j := P0(A = aj) > 0 for all j ∈ {1, 2, …, m}. Our estimation procedure remains the same with one exception: in defining g0 := π0/f0, we now take π0 to be the conditional probability π0(aj ∣ ω) := P0(A = aj ∣ W = ω) rather than the corresponding conditional density, and we take f0 to be the marginal probability f0(aj) := P0(A = aj) = f0,j rather than the corresponding marginal density. We then set gn := πn/fn as the estimator of g0, where πn is any estimator of π0 and fn(aj) := n−1 Σi I(Ai = aj) is the empirical marginal probability of level aj. In all other respects, our estimation procedure is identical to that defined previously. With these definitions, we denote by ξn,i the estimated pseudo-outcome for observation i. Our estimator is then the isotonic regression of ξn,1, ξn,2, …, ξn,n on A1, A2, …, An. However, since for each i there is a unique j such that Ai = aj, this is equivalent to performing weighted isotonic regression of ξ̄n,1, ξ̄n,2, …, ξ̄n,m on a1, a2, …, am with weights fn(a1), fn(a2), …, fn(am), where ξ̄n,j denotes the average of the pseudo-outcomes ξn,i over the observations with Ai = aj. It is straightforward to see that

$$\bar{\xi}_{n,j} = \frac{1}{n}\sum_{i=1}^{n}\left\{\frac{I(A_i = a_j)}{\pi_n(a_j \mid W_i)}\left[Y_i - \mu_n(a_j, W_i)\right] + \mu_n(a_j, W_i)\right\},$$

which is exactly the AIPW estimator of θ0(aj). Therefore, in this case, our estimator reduces to the isotonic regression of the classical AIPW estimator constructed separately for each element of the exposure domain.

The large-sample properties of the AIPW estimator, which we denote by θ̂n(aj), including doubly-robust consistency and convergence in distribution at the regular parametric rate n−1/2, are well-established (Robins et al., 1994). Therefore, many properties of θn in this case can be determined using the results of Westling et al. (2018), which studied the behavior of the isotonic correction of an initial estimator. In particular, maxj |θn(aj) – θ0(aj)| ≤ maxj |θ̂n(aj) – θ0(aj)| as long as θ0 is non-decreasing on {a1, a2, …, am}. Uniform consistency of θ̂n over {a1, a2, …, am} thus implies uniform consistency of θn. Furthermore, if θ0 is strictly increasing on {a1, a2, …, am} and {n1/2 [θ̂n(a) – θ0(a)] : a ∈ {a1, …, am}} converges in distribution, then n1/2 maxj |θn(aj) – θ̂n(aj)| tends to zero in probability, so that large-sample standard errors for θ̂n(aj) are also valid for θn(aj). If θ0 is not strictly increasing on {a1, a2, …, am} but instead has flat regions, then θn is more efficient than θ̂n on these regions, and confidence intervals centered around θn but based upon the limit theory for θ̂n will be conservative.
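For a discrete exposure, the reduction described above suggests a direct implementation: compute the AIPW estimate at each level, then apply weighted pool-adjacent-violators with weights fn(aj). The Python sketch below is a minimal illustration under stated assumptions: the arrays `mu_hat[i, j]` and `pi_hat[i, j]` are assumed to hold μn(aj, Wi) and πn(aj ∣ Wi) from previously fitted regressions, and all function names are hypothetical.

```python
import numpy as np


def aipw_by_level(y, a, levels, mu_hat, pi_hat):
    """AIPW estimate of theta_0(a_j) for each exposure level a_j.

    mu_hat[i, j] and pi_hat[i, j] are assumed to hold mu_n(a_j, W_i) and
    pi_n(a_j | W_i), computed beforehand by any regression method.
    """
    y = np.asarray(y, dtype=float)
    a = np.asarray(a)
    mu_hat = np.asarray(mu_hat, dtype=float)
    pi_hat = np.asarray(pi_hat, dtype=float)
    est = np.empty(len(levels))
    for j, aj in enumerate(levels):
        ind = (a == aj).astype(float)
        est[j] = np.mean(ind * (y - mu_hat[:, j]) / pi_hat[:, j] + mu_hat[:, j])
    return est


def weighted_pava(values, weights):
    """Weighted least-squares non-decreasing fit (pool-adjacent-violators)."""
    blocks = []  # each block: [weighted mean, total weight, number of levels]
    for v, w in zip(values, weights):
        blocks.append([float(v), float(w), 1])
        # Pool the last two blocks while they violate monotonicity.
        while len(blocks) >= 2 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, c2 = blocks.pop()
            m1, w1, c1 = blocks.pop()
            blocks.append([(w1 * m1 + w2 * m2) / (w1 + w2), w1 + w2, c1 + c2])
    return np.concatenate([np.full(c, m) for m, _, c in blocks])


def causal_isotonic_discrete(y, a, levels, mu_hat, pi_hat):
    """Isotonic regression of the level-specific AIPW estimates, weighted by f_n(a_j)."""
    a = np.asarray(a)
    f_n = np.array([np.mean(a == aj) for aj in levels])
    return weighted_pava(aipw_by_level(y, a, levels, mu_hat, pi_hat), f_n)
```

Because isotonic regression is order-preserving, when θ0 is non-decreasing the corrected values are never farther from θ0, in maximum absolute error over the levels, than the AIPW estimates themselves.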

3.6. Large-sample results for causal effects

In many applications, in addition to the causal dose-response curve a ↦ m0(a) itself, causal effects of the form (a1, a2) ↦ m0(a1) – m0(a2) are of scientific interest as well. Under the identification conditions discussed in Section 1.2 applied to each of a1 and a2, such causal effects are identified with the observed-data parameter θ0(a1) – θ0(a2). A natural estimator for such a causal effect in our setting is θn(a1) – θn(a2). If the conditions of Theorem 1 hold for both a1 and a2, then the continuous mapping theorem implies that θn(a1) – θn(a2) converges in probability to θ0(a1) – θ0(a2). However, Theorem 2 only provides marginal distributional results and thus does not describe the joint convergence of Zn(a1, a2) := (n1/3 [θn(a1) – θ0(a1)], n1/3 [θn(a2) – θ0(a2)]); as such, it cannot be used to determine the large-sample behavior of n1/3 {[θn(a1) – θn(a2)] – [θ0(a1) – θ0(a2)]}. The following result demonstrates that such joint convergence can be expected under the aforementioned conditions, and that the bivariate limit distribution of Zn(a1, a2) has independent components.

Theorem 3 (Joint convergence in distribution). If conditions (A1)–(A5) hold for each a ∈ {a1, a2}, where a1 and a2 lie in the domain of A, and F0(a1), F0(a2) ∈ (0, 1), then Zn(a1, a2) converges in distribution to ([4τ0(a1)]1/3 𝕎1, [4τ0(a2)]1/3 𝕎2), where 𝕎1 and 𝕎2 are independent random variables following the standard Chernoff distribution and the scale parameter τ0 is as defined in Theorem 2.

Theorem 3 implies that, under the stated conditions, n1/3 {[θn(a1) – θn(a2)] – [θ0(a1) – θ0(a2)]} converges in distribution to [4τ0(a1)]1/3 𝕎1 – [4τ0(a2)]1/3 𝕎2.

3.7. Use of cross-fitting to avoid empirical process conditions

Theorems 1 and 2 reveal that the statistical properties of θn depend on the nuisance estimators μn and gn in two important ways. First, we require in condition (A1) that μn or gn fall in small enough classes of functions, as measured by metric entropy, in order to control certain empirical process remainder terms. Second, we require in conditions (A2)–(A3) that at least one of μn or gn be consistent almost everywhere (for consistency), and in condition (A4) that the product of their rates of convergence be faster than n−1/3 (for convergence in distribution). In observational studies, researchers can rarely specify a priori correct parametric models for μ0 and g0. This motivates use of data-adaptive estimators of these nuisance functions in order to meet the second requirement. However, such estimators often lead to violations of the first requirement, or it may be onerous to determine that they do not. Thus, because it may be difficult to find nuisance estimators that are both data-adaptive enough to meet required rates of convergence and fall in small enough function classes to make empirical process terms negligible, simultaneously satisfying these two requirements can be challenging in practice.

In the context of asymptotically linear estimators, it has been noted that cross-fitting nuisance estimators can resolve this challenge by eliminating empirical process conditions (Zheng and van der Laan, 2011; Belloni et al., 2018; Kennedy, 2019). We therefore propose employing cross-fitting of μn and gn in the estimation of Γ0 in order to avoid entropy conditions in Theorems 1 and 2. Specifically, we fix V ∈ {2, 3, …, n/2} and suppose that the indices {1, 2, …, n} are randomly partitioned into V sets 𝒱n,1, 𝒱n,2, …, 𝒱n,V. We assume for convenience that N := n/V is an integer and that |𝒱n,ν| = N for each ν, although our results also hold under a weaker condition on the fold sizes. For each ν ∈ {1, 2, …, V}, we define 𝒯n,ν := {1, 2, …, n} \ 𝒱n,ν as the training set for fold ν, and denote by μn,ν and gn,ν the nuisance estimators constructed using only the observations from 𝒯n,ν. We then define pointwise the cross-fitted estimator Γ°n of Γ0 as

| (3) |

Finally, the cross-fitted estimator θ°n of θ0 is constructed using steps 1–4 outlined in Section 2.2, with Γn replaced by the cross-fitted estimator Γ°n of Γ0 defined in (3).
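The fold bookkeeping behind cross-fitting can be sketched as follows. This is a minimal, hypothetical Python helper: `fit` stands for any user-supplied learner that returns a prediction function, and the sketch only shows how each observation's nuisance value comes from a model trained on the other folds, not how the cross-fitted estimator in (3) is assembled from these values. It uses `np.array_split`, which lets fold sizes differ by one, whereas the text assumes equal fold sizes for convenience.

```python
import numpy as np


def cross_fit_predictions(X, y, fit, V=5, seed=None):
    """Out-of-fold nuisance predictions.

    The indices 0..n-1 are randomly partitioned into V folds. For each fold,
    `fit` (a hypothetical learner: (X_train, y_train) -> predict function)
    is trained on the remaining folds and evaluated on the held-out fold.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), V)
    preds = np.empty(n)
    for val_idx in folds:
        train = np.ones(n, dtype=bool)
        train[val_idx] = False  # training set = complement of the held-out fold
        predict = fit(X[train], y[train])
        preds[val_idx] = predict(X[val_idx])
    return preds, folds
```

Any learner, including an ensemble, can be plugged in as `fit`, since no observation's prediction is produced by a model that was trained on that observation.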

As we now demonstrate, utilizing the cross-fitted estimator allows us to avoid the empirical process condition (A1b). We first introduce the following two conditions, which are analogues of conditions (A1) and (A2).

(B1) There exist constants C′, δ′, , , , ∈ (0, +∞) such that, almost surely as n → ∞ and for all ν, μn,ν and gn,ν are contained in classes of functions and , respectively, satisfying:

for all , and ≤ g ≤ for all g ∈; and for almost all a, ω.

(B2) There exist and such that maxν and .

We then have the following analogue of Theorem 1 establishing consistency of the cross-fitted estimator θ°n.

Theorem 4 (Consistency of the cross-fitted estimator). If conditions (B1)–(B2) and (A3) hold, then θ°n(a) converges in probability to θ0(a) for any a in the domain of A such that F0(a) ∈ (0,1), θ0 is continuous at a, and F0 is strictly increasing in a neighborhood of a. If θ0 is uniformly continuous and F0 is strictly increasing on the domain of A, then supa∈I |θ°n(a) – θ0(a)| converges in probability to zero for any bounded strict subinterval I of the domain of A.

For convergence in distribution, we introduce the following analogue of condition (A4).

(B4) There exists ε0 > 0 such that:

(a) maxν max{d(μn,ν, μ∞; a, ε0, S1), d(gn,ν, g∞; a, ε0, S2)} = oP(n−1/3);

(b) maxν max{d(μn,ν, μ∞; a, ε0, S2), d(gn,ν, g∞; a, ε0, S1)} = oP(1);

(c) maxν d(μn,ν, μ∞; a, ε0, S3)d(gn,ν, g∞; a, ε0, S3) = oP(n−1/3).

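We then have the following analogue of Theorem 2 for the cross-fitted estimator θ°n.

Theorem 5 (Convergence in distribution for the cross-fitted estimator). If conditions (B1), (B2), (A3), (B4), and (A5) hold, then n1/3 [θ°n(a) – θ0(a)] converges in distribution to [4τ0(a)]1/3 𝕎, where 𝕎 follows the standard Chernoff distribution and τ0 is as defined in Theorem 2, for any a in the domain of A such that F0(a) ∈ (0,1).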

The conditions of Theorems 4 and 5 are analogous to those of Theorems 1 and 2, with the important exception that the entropy condition (A1b) is no longer required. Therefore, the estimators μn,ν and gn,ν may be as data-adaptive as one desires without concern for empirical process terms, as long as they satisfy the boundedness conditions stated in (B1).

4. Construction of confidence intervals

4.1. Wald-type confidence intervals

The distributional results of Theorem 2 can be used to construct a confidence interval for θ0(a). Since the limit distribution of n1/3 [θn(a) – θ0(a)] is symmetric around zero, a Wald-type construction seems appropriate. Specifically, writing 𝕎 for a random variable following the standard Chernoff distribution and denoting by τn(a) any consistent estimator of τ0(a), a Wald-type 1 – α level asymptotic confidence interval for θ0(a) is given by

$$\left[\theta_n(a) - \left(\frac{4\tau_n(a)}{n}\right)^{1/3} q_{1-\alpha/2},\;\; \theta_n(a) + \left(\frac{4\tau_n(a)}{n}\right)^{1/3} q_{1-\alpha/2}\right],$$

where qp denotes the pth quantile of 𝕎. Quantiles of the standard Chernoff distribution have been numerically computed and tabulated on a fine grid (Groeneboom and Wellner, 2001), and are readily available in the statistical programming language R. Estimation of τ0(a) involves, either directly or indirectly, estimation of θ0′(a)/f0(a) and κ0(a). We focus first on the former.

We note that θ0′(a)/f0(a) = ψ0′(F0(a)) with ψ0 := θ0 ∘ F0−1. This suggests that we could either estimate θ0′ and f0 separately and consider the ratio of these estimators, or instead estimate ψ0′ directly and compose it with the estimator Fn of F0 already available. The latter approach has the desirable property that the resulting scale estimator is invariant to strictly monotone transformations of the exposure, and it is therefore the strategy we favor. To estimate ψ0′, we recall that the estimator ψn from Section 2 is a step function and is therefore not differentiable. A natural solution consists of computing the derivative of a smoothed version of ψn. We have found local quadratic kernel smoothing of the points {(uj, ψn(uj)) : j = 1, 2, …, K}, where the uj are the midpoints between consecutive jump points of ψn, to work well in practice.
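A minimal Python sketch of the smoothing step, assuming ψn is stored as a step function on [0, 1] through its breakpoints and constant values. The Epanechnikov kernel, the bandwidth argument `h`, and the helper names are illustrative assumptions; the text specifies only that a local quadratic kernel smoother is applied to the midpoint values. The slope coefficient of the weighted quadratic fit centered at u0 estimates ψ0′(u0); by the chain rule, evaluating it at u0 = Fn(a) targets θ0′(a)/f0(a).

```python
import numpy as np


def step_midpoints(knots, values):
    """Midpoints between consecutive breakpoints of a step function, with its value there.

    knots: increasing breakpoints 0 = k_0 < ... < k_K = 1;
    values[j]: constant value of the step function on (k_j, k_{j+1}].
    """
    knots = np.asarray(knots, dtype=float)
    return 0.5 * (knots[:-1] + knots[1:]), np.asarray(values, dtype=float)


def local_quadratic_slope(u, v, u0, h):
    """Derivative at u0 of a local quadratic fit of v on u (Epanechnikov weights, bandwidth h)."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    d = u - u0
    w = np.where(np.abs(d) < h, 0.75 * (1.0 - (d / h) ** 2), 0.0)
    design = np.column_stack([np.ones_like(d), d, d ** 2])
    sw = np.sqrt(w)
    # Weighted least squares via rescaled rows; needs at least 3 points inside the window.
    coef, *_ = np.linalg.lstsq(design * sw[:, None], v * sw, rcond=None)
    return coef[1]  # coefficient of (u - u0) is the slope at u0
```

Because a local quadratic fit reproduces quadratic functions exactly, the derivative of a smooth curve is recovered without the first-order bias that a local constant fit would introduce near curvature.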

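Theorem 3 can be used to construct Wald-type confidence intervals for causal effects of the form θ0(a1) – θ0(a2). We first construct estimates τn(a1) and τn(a2) of the scale parameters τ0(a1) and τ0(a2), respectively, and then compute an approximation qn,1–α/2 of the (1 – α/2)-quantile of [4τn(a1)]1/3 𝕎1 – [4τn(a2)]1/3 𝕎2, where 𝕎1 and 𝕎2 are independent standard Chernoff random variables, using Monte Carlo simulation, for example. Since this limit distribution is symmetric around zero, an asymptotic 1 – α level Wald-type confidence interval for θ0(a1) – θ0(a2) is then given by

$$\left[\theta_n(a_1) - \theta_n(a_2) - n^{-1/3} q_{n,1-\alpha/2},\;\; \theta_n(a_1) - \theta_n(a_2) + n^{-1/3} q_{n,1-\alpha/2}\right].$$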
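The tabulated Chernoff quantiles of Groeneboom and Wellner (2001) are preferable in practice, but a simulation-based approximation is easy to sketch and also provides the Monte Carlo quantile needed for the difference θ0(a1) – θ0(a2). The Python sketch below approximates a standard Chernoff draw as the maximizer of B(t) – t² over a fine grid, with B a two-sided standard Brownian motion. The grid half-width `t_max`, the step `dt`, and the number of Monte Carlo draws are assumptions that trade accuracy for speed, and the function names are hypothetical.

```python
import numpy as np


def sample_chernoff(size, t_max=2.5, dt=0.005, rng=None):
    """Approximate draws of argmax_t {B(t) - t^2}, B a two-sided standard Brownian motion."""
    rng = np.random.default_rng(rng)
    k = int(round(t_max / dt))
    t = np.arange(-k, k + 1) * dt
    draws = np.empty(size)
    for s in range(size):
        # Independent Brownian paths on t > 0 and t < 0, both started at B(0) = 0.
        right = np.concatenate(([0.0], np.cumsum(rng.normal(0.0, np.sqrt(dt), k))))
        left = np.cumsum(rng.normal(0.0, np.sqrt(dt), k))[::-1]
        path = np.concatenate((left, right))
        draws[s] = t[np.argmax(path - t ** 2)]
    return draws


def wald_ci(theta_n, tau_n, n, q):
    """theta_n -/+ (4 tau_n / n)^(1/3) q, with q the (1 - alpha/2) Chernoff quantile."""
    half = (4.0 * tau_n / n) ** (1.0 / 3.0) * q
    return theta_n - half, theta_n + half


def difference_ci(theta1, theta2, tau1, tau2, n, alpha=0.05, n_mc=4000, rng=None):
    """Wald-type interval for theta_0(a1) - theta_0(a2) using a Monte Carlo quantile."""
    rng = np.random.default_rng(rng)
    w1 = sample_chernoff(n_mc, rng=rng)
    w2 = sample_chernoff(n_mc, rng=rng)
    # Independent components, as in the joint limit of Theorem 3.
    limit = (4.0 * tau1) ** (1.0 / 3.0) * w1 - (4.0 * tau2) ** (1.0 / 3.0) * w2
    q = np.quantile(limit, 1.0 - alpha / 2.0)
    est = theta1 - theta2
    return est - q / n ** (1.0 / 3.0), est + q / n ** (1.0 / 3.0)
```

Because both limit distributions are symmetric around zero, only the upper quantile is needed in either interval.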

In the next two subsections, we discuss different strategies for estimating the scale factor κ0(a).

4.2. Scale estimation relying on consistent nuisance estimation

We first consider settings in which both μn and gn are consistent estimators, that is, g∞ = g0 and μ∞ = μ0. In such cases, we have that κ0(a) = E0[σ0²(a, W)/g0(a, W)], with σ0²(a, ω) denoting the conditional variance E0{[Y – μ0(a, W)]² ∣ A = a, W = ω}. Any regression technique could be used to estimate the conditional expectation of Zn := [Y – μn(A, W)]² given A and W, yielding an estimator σn² of σ0². A plug-in estimator of κ0(a) is then given by

$$\kappa_n(a) := \frac{1}{n}\sum_{i=1}^{n} \frac{\sigma_n^2(a, W_i)}{g_n(a, W_i)}.$$

Provided μn, gn and σn² are consistent estimators of μ0, g0 and σ0², respectively, κn(a) is a consistent estimator of κ0(a). We note that in the special case of a binary outcome, the fact that σ0²(a, ω) = μ0(a, ω)[1 – μ0(a, ω)] motivates the use of μn(a, ω)[1 – μn(a, ω)] as estimator of σ0²(a, ω), and thus eliminates the need for further regression beyond the construction of μn and gn. In practice, we typically recommend the use of an ensemble method, for example the SuperLearner (van der Laan et al., 2007), to combine a variety of regression techniques, including machine learning techniques, to minimize the risk of inconsistency of μn, gn and σn².
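For a binary outcome, the plug-in scale estimate needs only the two nuisance fits. The Python sketch below is a minimal illustration assuming the plug-in form κn(a) = n−1 Σi σn²(a, Wi)/gn(a, Wi), with σn² = μn(1 – μn) when no separate variance regression is supplied. The arrays `mu_a[i]` and `g_a[i]` are assumed to hold μn(a, Wi) and gn(a, Wi) at a fixed exposure value a, and the function name is hypothetical.

```python
import numpy as np


def kappa_plugin(mu_a, g_a, sigma2_a=None):
    """Plug-in scale estimate: mean over i of sigma_n^2(a, W_i) / g_n(a, W_i).

    If sigma2_a is omitted, the outcome is taken to be binary and
    sigma_n^2(a, W_i) = mu_n(a, W_i) * (1 - mu_n(a, W_i)).
    """
    mu_a = np.asarray(mu_a, dtype=float)
    g_a = np.asarray(g_a, dtype=float)
    if sigma2_a is None:
        sigma2_a = mu_a * (1.0 - mu_a)  # Bernoulli variance from the outcome regression
    return float(np.mean(np.asarray(sigma2_a, dtype=float) / g_a))
```

For a continuous outcome, `sigma2_a` would instead come from a regression of the squared residuals [Y – μn(A, W)]² on A and W, evaluated at (a, Wi).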

4.3. Doubly-robust scale estimation

As noted above, Theorem 2 provides the limit distribution of n1/3 [θn(a) – θ0(a)] even if one of the nuisance estimators is inconsistent, as long as the consistent nuisance estimator converges fast enough. We now show how we may capitalize on this result to provide a doubly-robust estimator of κ0(a). Since ψn is itself a doubly-robust estimator of ψ0, so is the proposed estimator of ψ0′, and hence so is the resulting estimator τn(a) of τ0(a) when it is combined with a doubly-robust estimator of κ0(a). This contrasts with the estimator of κ0(a) described in the previous section, which requires the consistency of both μn and gn.

To construct an estimator of κ0(a) consistent even if either μ∞ ≠ μ0 or g∞ ≠ g0, we begin by noting that κ0(a) = limh↓0 E0 [Kh (F0(A) – F0(a)) η∞(Y, A, W)], where Kh : u ↦ h−1 K(uh−1) for some bounded density function K with bounded support, and we have defined

Setting θμn(a) := ∫ μn(a, ω)Qn(dω), with Qn the empirical distribution based on W1, W2, …, Wn, we define

$$\kappa_{n,h}(a) := \frac{1}{n}\sum_{i=1}^{n} K_h\big(F_n(A_i) - F_n(a)\big)\,\eta_n(Y_i, A_i, W_i),$$

with ηn obtained by substituting θn, μn, gn and θμn for their population counterparts in the definition of η∞. Under conditions (A1)–(A5), it can be shown by standard kernel smoothing arguments that κn,hn(a) converges in probability to κ0(a) for any sequence hn → 0 with nhn → ∞. In particular, κn,hn(a) is consistent under the general form of doubly-robustness specified by condition (A3).

To determine an appropriate value of the bandwidth h in practice, we propose the following empirical criterion. We first define the integrated scale γ0 := ∫ κ0(a)F0(da), and construct the estimator γn(h) := ∫ κn,h(a)Fn(da) for any candidate h > 0. Furthermore, we observe that γ0 = E0[η∞(Y, A, W)], which suggests the use of the empirical estimator γ̄n := n−1 Σi ηn(Yi, Ai, Wi). This motivates us to define hn := argminh>0 |γn(h) – γ̄n|, that is, the value of h that makes γn(h) and γ̄n closest. The proposed doubly-robust estimator of κ0(a) is thus κn,dr(a) := κn,hn(a).
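The kernel estimator κn,h and the bandwidth criterion can be sketched directly. In this minimal Python sketch, the array `eta` is assumed to contain the values ηn(Yi, Ai, Wi), computed beforehand from the definition of η∞; the Epanechnikov kernel and the bandwidth grid are illustrative choices for the bounded, compactly supported density K, and the function names are hypothetical. Because exposures enter only through the empirical distribution function Fn, any strictly increasing transformation of A leaves the output unchanged.

```python
import numpy as np


def epanechnikov(u):
    """Bounded density with bounded support [-1, 1]."""
    return np.where(np.abs(u) < 1.0, 0.75 * (1.0 - u ** 2), 0.0)


def ecdf(sample, points):
    """Empirical distribution function F_n of `sample`, evaluated at `points`."""
    s = np.sort(np.asarray(sample, dtype=float))
    return np.searchsorted(s, np.asarray(points, dtype=float), side="right") / len(s)


def kappa_kernel(a_eval, A, eta, h):
    """kappa_{n,h}(a) = n^{-1} sum_i K_h(F_n(A_i) - F_n(a)) eta_i, for each a in a_eval."""
    u_obs = ecdf(A, A)
    u_eval = ecdf(A, np.atleast_1d(a_eval))
    kern = epanechnikov((u_obs[None, :] - u_eval[:, None]) / h) / h  # K_h(u) = K(u/h)/h
    return kern @ np.asarray(eta, dtype=float) / len(u_obs)


def select_bandwidth(A, eta, grid):
    """h_n = argmin_h |gamma_n(h) - mean(eta)|, with gamma_n(h) = n^{-1} sum_j kappa_{n,h}(A_j)."""
    target = np.mean(eta)
    gaps = [abs(kappa_kernel(A, A, eta, h).mean() - target) for h in grid]
    return grid[int(np.argmin(gaps))]
```

Once `h = select_bandwidth(A, eta, grid)` has been chosen, `kappa_kernel(a, A, eta, h)` evaluates the doubly-robust scale estimate at any exposure value a.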

We make two final remarks regarding this doubly-robust estimator of κ0(a). First, we note that this estimator only depends on A and a through the ranks Fn(A) and Fn(a). Hence, as before, our estimator is invariant to strictly monotone transformations of the exposure A. Second, we note that if μn(a, ω) = μn(a) does not depend on ω and gn = 1, κn,dr(a) tends to the conditional variance Var0(Y ∣ A = a), which is precisely the scale parameter appearing in standard isotonic regression.

4.4. Confidence intervals via sample splitting

As an alternative, we note here that the sample-splitting method recently proposed by Banerjee et al. (2019) could also be used to perform inference. Specifically, to implement their approach in our context, we randomly split the sample into m subsets of roughly equal size, perform our estimation procedure on each subset to form subset-specific estimates θn,1, θn,2, …, θn,m, and then define θ̄n(a) := m−1 Σj θn,j(a). Banerjee et al. (2019) demonstrated that if m > 1 is fixed, then under mild conditions θ̄n(a) has strictly better asymptotic mean squared error than θn(a), and that for moderate m,

$$\left[\bar{\theta}_n(a) - t_{1-\alpha/2,\,m-1}\,\frac{s_n(a)}{\sqrt{m}},\;\; \bar{\theta}_n(a) + t_{1-\alpha/2,\,m-1}\,\frac{s_n(a)}{\sqrt{m}}\right] \qquad (4)$$

forms an asymptotic 1 – α level confidence interval for θ0(a), where sn²(a) := (m – 1)−1 Σj [θn,j(a) – θ̄n(a)]² and t1–α/2,m–1 is the (1 – α/2)-quantile of the t-distribution with m – 1 degrees of freedom.
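A minimal Python sketch of the sample-splitting interval, assuming the subset-specific estimates θn,1(a), …, θn,m(a) have already been obtained by running the estimator on each subset. The splitting helper and function names are hypothetical, and `scipy.stats.t.ppf` supplies the t quantile.

```python
import numpy as np
from scipy import stats


def random_split(n, m, seed=None):
    """Randomly partition indices 0..n-1 into m subsets of roughly equal size."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), m)


def split_average_ci(subset_estimates, alpha=0.05):
    """Average of the m subset estimates and its t-based (1 - alpha) interval."""
    est = np.asarray(subset_estimates, dtype=float)
    m = len(est)
    center = est.mean()
    s = est.std(ddof=1)  # sample standard deviation across subsets
    half = stats.t.ppf(1.0 - alpha / 2.0, df=m - 1) * s / np.sqrt(m)
    return center, (center - half, center + half)
```

The t quantile with m – 1 degrees of freedom accounts for having only m subset estimates from which to estimate the spread, which is why the interval is wide when m is small.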

5. Numerical studies

In this section, we perform numerical experiments to assess the performance of the proposed estimators of θ0(a) and of the three approaches for constructing confidence intervals, which we also compare to that of the local linear estimator and associated confidence intervals proposed in Kennedy et al. (2017).

In our experiments, we simulate data as follows. First, we generate W ∈ ℝ⁴ as a vector of four independent standard normal variates. A natural next step would be to generate A given W. However, since our estimation procedure requires estimating the conditional density of U := F0(A) given W, we instead generate U given W, and then transform U to obtain A. This strategy makes it easier to construct correctly-specified parametric nuisance estimators in the context of these simulations. Given W = ω, we generate U from the distribution with conditional density function for . We note that for all u ∈ [0,1] and ω ∈ ℝ⁴, and also, that , so that U is marginally uniform. We then take A to be the evaluation at U of the quantile function of an equal-weight mixture of two normal distributions with means −2 and 2 and standard deviation 1, which implies that A is marginally distributed according to this bimodal normal mixture. Finally, conditionally upon A = a and W = ω, we simulate Y as a Bernoulli random variate with conditional mean function given by μ0(a, ω) := expit(), where w denotes (1, ω). We set , , and γ3 = 3 in the experiments we report on.
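The transformation from U to A can be sketched in Python as follows. Because the quantile function of a normal mixture has no closed form, this minimal sketch inverts the mixture distribution function by bisection using only the standard library's `math.erf`; the bracketing interval and tolerance are assumptions, and the function names are hypothetical. The conditional density of U given W is not reproduced here, so the sketch takes draws of U as given.

```python
import math


def mixture_cdf(x):
    """CDF of the equal-weight mixture of N(-2, 1) and N(2, 1)."""
    phi = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF
    return 0.5 * phi(x + 2.0) + 0.5 * phi(x - 2.0)


def mixture_quantile(u, lo=-12.0, hi=12.0, tol=1e-10):
    """Quantile function of the mixture at u in (0, 1), by bisection on its CDF."""
    if not 0.0 < u < 1.0:
        raise ValueError("u must lie strictly between 0 and 1")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mixture_cdf(mid) < u:
            lo = mid  # the quantile lies to the right of mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Given draws `u_draws` of U, the exposures would be `[mixture_quantile(u) for u in u_draws]`; since U is marginally uniform, A then follows the bimodal mixture marginally.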

We estimate the true confounder-adjusted dose-response curve θ0 using the causal isotonic regression estimator θn, the local linear estimator of Kennedy et al. (2017), and the sample-splitting version of θn proposed by Banerjee et al. (2019) with m = 5 splits. For the local linear estimator, we use the data-driven bandwidth selection procedure proposed in Section 3.5 of Kennedy et al. (2017). We consider three settings: both μn and gn consistent, only μn consistent, and only gn consistent. To construct a consistent estimator μn, we use a correctly specified logistic regression model, whereas to construct a consistent estimator gn, we use a maximum likelihood estimator based on a correctly specified parametric model. To construct an inconsistent estimator μn, we still use a logistic regression model but omit covariates W3, W4 and all interactions. To construct an inconsistent estimator gn, we posit the same parametric model as before but omit W3 and W4. In each setting, we construct pointwise confidence intervals for θ0 using the Wald-type construction described in Section 4 with both the plug-in and doubly-robust estimators of κ0(a). We expect intervals based on the doubly-robust estimator of κ0(a) to provide asymptotically correct coverage rates for θ0(a) in each of the three settings, but expect asymptotically correct coverage rates only in the first setting when the plug-in estimator of κ0(a) is used. We construct pointwise confidence intervals for the local linear estimator using the procedure proposed in Kennedy et al. (2017), and for the sample-splitting estimator using the procedure discussed in Section 4.4. We consider the performance of these inferential procedures for values of a between −3 and 3.

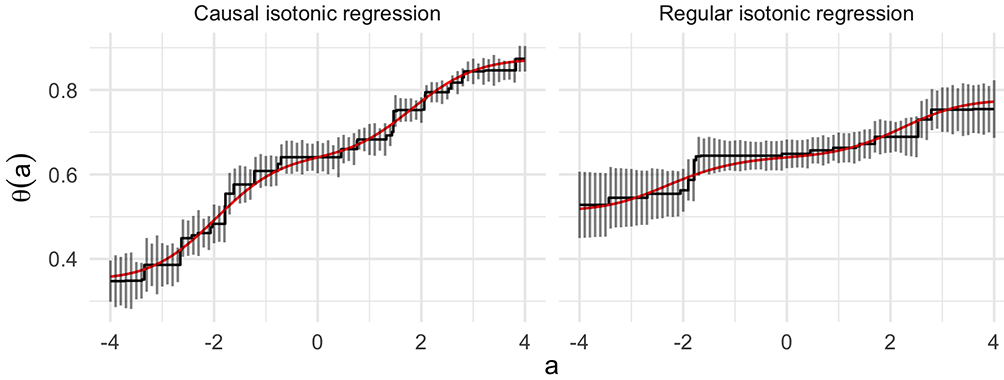

The left panel of Figure 1 shows a single sample path of the causal isotonic regression estimator based on a sample of size n = 5000 and consistent estimators μn and gn. Also included in that panel are asymptotic 95% pointwise confidence intervals constructed using the doubly-robust estimator of κ0(a). The right panel shows the unadjusted isotonic regression estimate based on the same data and corresponding 95% asymptotic confidence intervals. The true causal and unadjusted regression curves are shown in red. We note that θ0(a) ≠ r0(a) for a ≠ 0, since the relationship between Y and A is confounded by W, and indeed the unadjusted regression curve does not have a causal interpretation. Therefore, the marginal isotonic regression estimator will not be consistent for the true causal parameter. In this data-generating setting, the causal effect of A on Y is larger in magnitude than the marginal effect of A on Y in the sense that θ0(a) has greater variation over values of a than does r0(a).

Figure 1:

Causal isotonic regression estimate using consistent nuisance estimators μn and gn (left), and regular isotonic regression estimate (right). Pointwise 95% confidence intervals constructed using the doubly-robust estimator are shown as vertical bars. The true functions are shown in red.

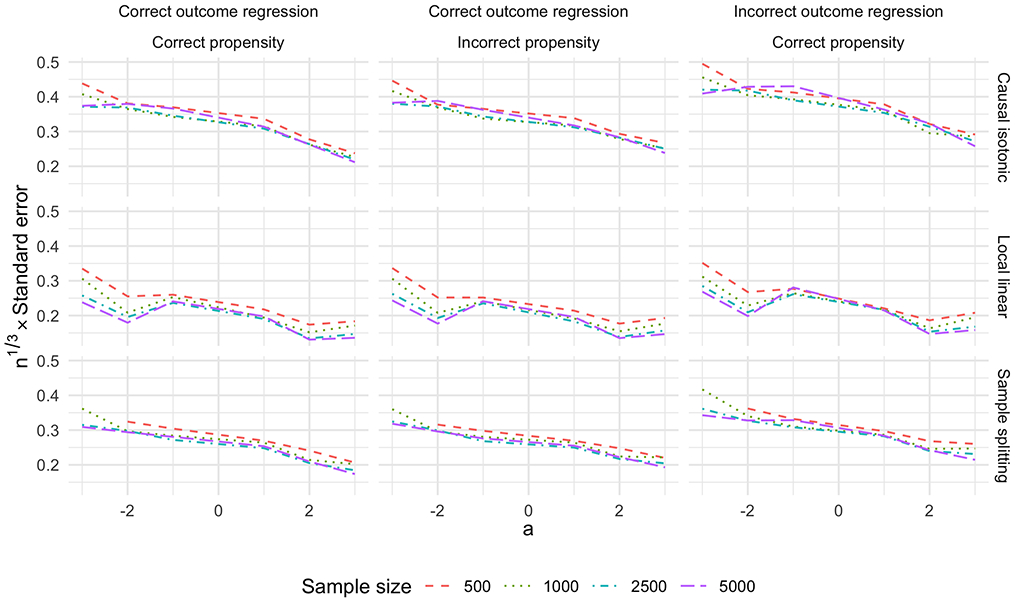

We perform 1000 simulations for each n ∈ {500, 1000, 2500, 5000}. Figure 2 displays the empirical standard error of the three considered estimators over these 1000 simulated datasets as a function of a and for each value of n. We first note that the standard error of the local linear estimator is smaller than that of θn, which is expected given the faster rate of convergence of the local linear estimator. The sample-splitting procedure also reduces the standard error of θn. Furthermore, the standard deviation of the local linear estimator appears to decrease faster than n−1/3, whereas the standard deviations of the estimators based on θn do not, in line with the theoretical rates of convergence of these estimators. We also note that inconsistent estimation of the propensity has little impact on the standard errors of any of the estimators, whereas inconsistent estimation of the outcome regression results in slightly larger standard errors.

Figure 2:

Standard error of the three estimators scaled by n1/3 as a function of n for different values of a and in contexts in which μn and gn are either consistent or inconsistent, computed empirically over 1000 simulated datasets of different sizes.

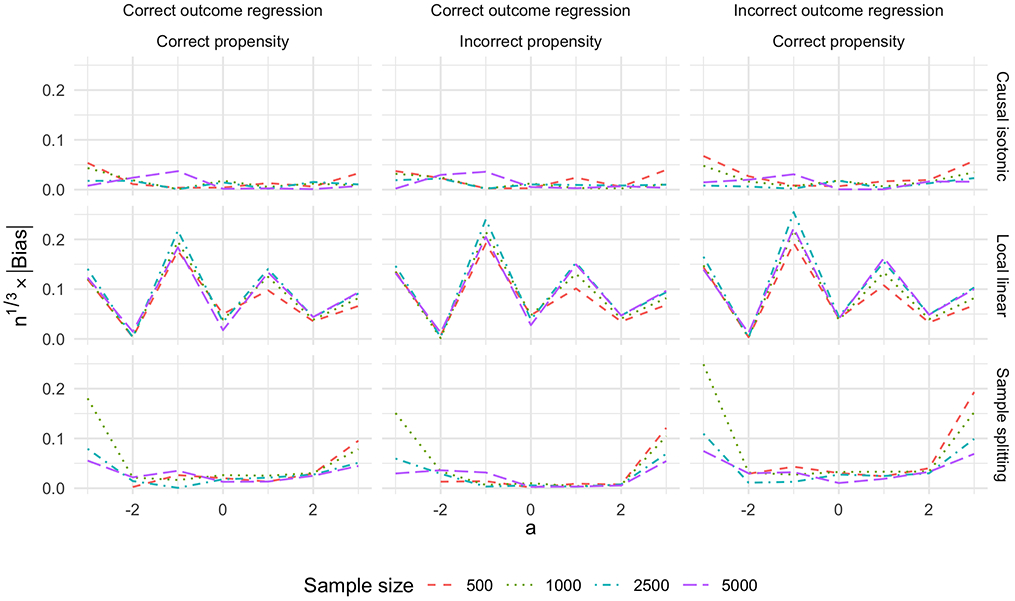

Figure 3 displays the absolute bias of the three estimators. For most values of a, the estimator θn proposed here has smaller absolute bias than the local linear estimator, and its absolute bias decreases faster than n−1/3. The absolute bias of the local linear estimator depends strongly on a and, in particular, is largest where the second derivative of θ0 is large in absolute value, in agreement with the large-sample theory described in Kennedy et al. (2017). The sample-splitting estimator has larger absolute bias than θn because averaging does not remove bias: it inherits the bias of θn computed on subsamples of size n/m. The bias is especially large for values of a in the tails of the marginal distribution of A.

Figure 3:

Absolute bias of the three estimators scaled by n1/3 as a function of n for different values of a and in contexts in which μn and gn are either consistent or inconsistent, computed empirically over 1000 simulated datasets of different sizes.

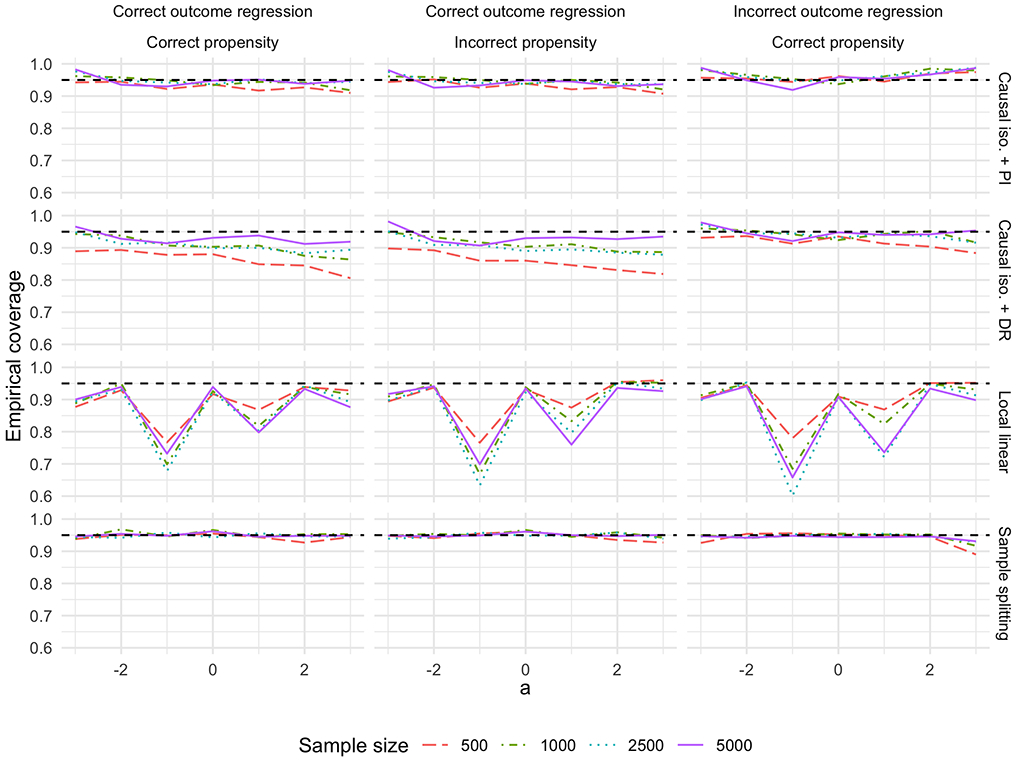

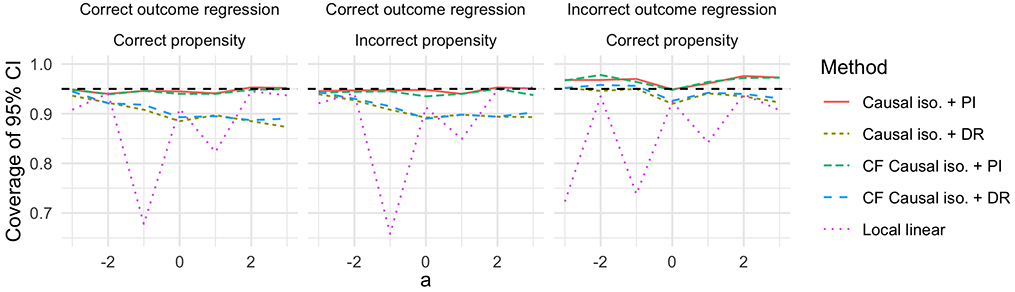

Figure 4 shows the empirical coverage of nominal 95% pointwise confidence intervals for a range of values of a for the four methods considered. For both the plug-in and doubly-robust intervals centered around θn, the coverage improves as n grows, especially for values of a in the tails of the marginal distribution of A. Under correct specification of outcome and propensity regression models, the plug-in method attains close to nominal coverage rates for a between −3 and 3 by n = 1000. When the propensity estimator is inconsistent, the plug-in method still performs well in this example, although we do not expect this to always be the case. However, when μn is inconsistent, the plug-in method is very conservative for positive values of a. The doubly-robust method attains close to nominal coverage in large samples as long as one of gn or μn is consistent. Compared to the plug-in method, the doubly-robust method requires larger sample sizes to achieve good coverage, especially for extreme values of a. This is because the doubly-robust estimator of κ0(a) has a slower rate of convergence than does the plug-in estimator, as illustrated by box plots of these estimators provided in the Supplementary Material.

Figure 4:

Observed coverage of pointwise 95% confidence intervals using θn and the plug-in method (top row), θn and the doubly-robust method (second row), the local linear estimator and associated intervals (third row), and the sample-splitting estimator (bottom row), considered for different values of a and computed empirically over 1000 simulated datasets of different sizes. Columns indicate whether μn and gn are consistent or not. Black dashed lines indicate the nominal coverage rate.

The confidence intervals associated with the local linear estimator have poor coverage for values of a where the bias of the estimator is large, which, as mentioned above, occurs when the second derivative of θ0 is large in absolute value. Overall, the sample splitting estimator has excellent coverage, except perhaps for values of a in the tails of the marginal distribution of A when n is small or moderate, in which case the coverage is near 90%.

We also conducted a small simulation study to illustrate the performance of the proposed procedures when machine learning techniques are used to construct μn and gn. To consistently estimate μ0, we used a Super Learner (van der Laan et al., 2007) with a library consisting of generalized linear models, multivariate adaptive regression splines, and generalized additive models. To consistently estimate g0, we used the method proposed by Díaz and van der Laan (2011) with covariate vector (W1, W2, W3, W4). To produce inconsistent estimators μn or gn, we used the same estimators but omitted covariates W1 and W2. We also considered the estimator obtained by cross-fitting these nuisance estimators, as discussed in Section 3.7, as well as the local linear estimator. Due to computational limitations, we performed 1000 simulations at sample size n = 1000 only. Figure 5 shows the coverage of nominal 95% confidence intervals. The plug-in intervals achieve very close to nominal coverage under consistent estimation of both nuisances, and also achieve surprisingly good coverage rates when the propensity is inconsistently estimated. The plug-in intervals are somewhat conservative when the outcome regression is inconsistently estimated. The doubly-robust method is anti-conservative under consistent estimation of both nuisances and also when the propensity is inconsistently estimated, with coverage rates mostly between 90 and 95%. Good coverage rates are achieved when the outcome regression is inconsistently estimated. These results suggest that the doubly-robust intervals may require larger sample sizes to achieve good coverage, particularly when machine learning estimators are used for μn and gn. The plug-in intervals appear to be relatively robust to moderate misspecification of models for the nuisance parameters in smaller samples. Histograms of the estimators of κ0(a) and (a) are provided in the Supplementary Material.
Confidence intervals based on the local linear estimator show a similar pattern to that in the previous simulation study, undercovering where the second derivative of the true function is large in absolute value. Cross-fitting had little impact on coverage.

Figure 5:

Observed coverage of pointwise 95% doubly-robust and plug-in confidence intervals using machine learning estimators based on simulated data including n = 1000 observations. Columns indicate whether μn and gn are consistent or not. Black dashed lines indicate the nominal coverage rate. CF stands for cross-fitted; PI for plug-in; DR for doubly-robust.

As noted above, we found in our numerical experiments that the plug-in estimator of the scale parameter was surprisingly robust to inconsistent estimation of the nuisance parameters, while intervals based on its doubly-robust estimator were anti-conservative even when the nuisance parameters were estimated consistently. This phenomenon can be explained in terms of the bias and variance of the two proposed scale estimators. On one hand, under inconsistent estimation of any nuisance function, the plug-in estimator of the scale parameter is biased, even in large samples. However, its variance decreases relatively quickly with sample size, since it is a simple empirical average of estimated functions. On the other hand, the doubly-robust estimator is asymptotically unbiased, but its variance decreases much more slowly with sample size. These trends can be observed in the figures provided in the Supplementary Material. In sufficiently large samples, the doubly-robust estimator is expected to outperform the plug-in estimator in terms of mean squared error when one of the nuisances is inconsistently estimated. However, the sample size required for this trade-off to significantly affect confidence interval coverage depends on the degree of inconsistency. While we did not see this trade-off occur at the sample sizes used in our numerical experiments, we expect the benefits of the doubly-robust confidence interval construction to become apparent in smaller samples in other settings.

6. BMI and T-cell response in HIV vaccine studies

The scientific literature indicates that, for several vaccines, obesity or BMI is inversely associated with immune responses to vaccination (see, e.g., Sheridan et al., 2012; Young et al., 2013; Jin et al., 2015; Painter et al., 2015; Liu et al., 2017). Some of this literature has investigated potential mechanisms of how obesity or higher BMI might lead to impaired immune responses. For example, Painter et al. (2015) concluded that obesity may alter cellular immune responses, especially in adipose tissue, which varies with BMI. Sheridan et al. (2012) found that obesity is associated with decreased CD8+ T-cell activation and decreased expression of functional proteins in the context of influenza vaccines. Liu et al. (2017) found that obesity reduced Hepatitis B immune responses through “leptin-induced systemic and B cell intrinsic inflammation, impaired T cell responses and lymphocyte division and proliferation.” Given this evidence of a monotone effect of BMI on immune responses, we used the methods presented in this paper to assess the covariate-adjusted relationship between BMI and CD4+ T-cell responses using data from a collection of clinical trials of candidate HIV vaccines. We present the results of our analyses here.

In Jin et al. (2015), the authors compared the rate of CD4+ T-cell response to HIV peptide pools among low (BMI < 25), medium (25 ≤ BMI < 30), and high (BMI ≥ 30) BMI participants, and found that low-BMI participants had a statistically significantly greater response rate than high-BMI participants using Fisher’s exact test. However, such a marginal assessment of the relationship between BMI and immune response can be misleading because there are known common causes, such as age and sex, of both BMI and immune response. For this reason, Jin et al. (2015) also performed a logistic regression of the binary CD4+ responses against sex, age, BMI (not discretized), vaccination dose, and number of vaccinations. In this adjusted analysis, they found a significant association between BMI and CD4+ response rate after adjusting for all other covariates (OR: 0.92; 95% CI: 0.86, 0.98; p = 0.007). However, such an adjusted odds ratio only has a formal causal interpretation under strong parametric assumptions. As discussed in Section 1.2, the covariate-adjusted dose-response function θ0 is identified with the causal dose-response curve without making parametric assumptions, and is therefore of interest for understanding the continuous covariate-adjusted relationship between BMI and immune responses.

We note that there is some debate in the causal inference literature about whether exposures such as BMI have a meaningful interpretation in formal causal modeling. In particular, some researchers suggest that causal models should always be tied to hypothetical randomized experiments (see, e.g., Bind and Rubin, 2019), and it is difficult to imagine a hypothetical randomized experiment that would assign participants to levels of BMI. From this perspective, it may therefore not be sensible to interpret θ0(a) in a causal manner in the context of this example. Nevertheless, as discussed in the introduction, we contend that θ0(a) is still of interest. In particular, it provides a meaningful summary of the relationship between BMI and immune response accounting for measured potential confounders. In this case, we interpret θ0(a) as the probability of immune response in a population of participants with BMI value a but sex, age, vaccination dose, number of vaccinations, and study with a similar distribution to that of the entire study population.

We pooled data from the vaccine arms of 11 phase I/II clinical trials, all conducted through the HIV Vaccine Trials Network (HVTN). Ten of these trials were previously studied in the analysis presented in Jin et al. (2015), and a detailed description of the trials is contained therein. The final trial in our pooled analysis is HVTN 100, in which 210 participants were randomized to receive four doses of the ALVAC-HIV vaccine (vCP1521). The ALVAC-HIV vaccine, in combination with an AIDSVAX boost, was found to have statistically significant vaccine efficacy against HIV-1 in the RV-144 trial conducted in Thailand (Rerks-Ngarm et al., 2009). CD4+ and CD8+ T-cell responses to HIV peptide pools were measured in all 11 trials using validated intracellular cytokine staining at HVTN laboratories. These continuous responses were converted to binary indicators of whether there was a significant change from baseline using the method described in Jin et al. (2015). We analyzed these binary responses at the first visit following administration of the last vaccine dose, either two or four weeks after the final vaccination depending on the trial. After accounting for missing responses from a small number of participants, our analysis datasets consisted of a total of n = 439 participants for the analysis of CD4+ responses and n = 462 participants for CD8+ responses. Here, we focus on analyzing CD4+ responses; we present the analysis of CD8+ responses in the Supplementary Material.

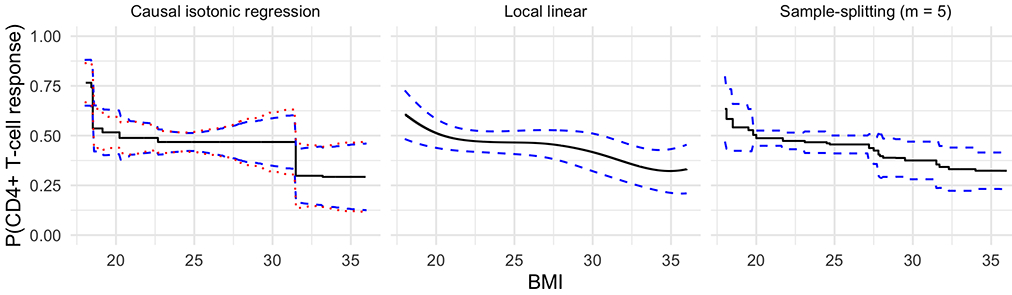

We assessed the relationship between BMI and T-cell response by estimating the covariate-adjusted dose-response function θ0 using our cross-fitted estimator, the local linear estimator of Kennedy et al. (2017), and the sample-splitting version of our estimator with m = 5 splits. We adjusted for sex, age, vaccination dose, number of vaccinations, and study. We estimated μ0 and g0 as in the machine learning-based simulation study described in Section 5, and constructed confidence intervals for our estimator using both the plug-in and doubly-robust estimators of the scale parameter κ0(a) described above.
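The cross-fitted estimator relies on nuisance estimates (here μn and gn) that are evaluated out of fold, so that no participant's nuisance prediction comes from a model trained on that participant. The sketch below illustrates only this generic cross-fitting pattern under stated assumptions: the fold count, the random fold assignment, and the helper names `cross_fit`, `fit`, and `mean_fit` are hypothetical choices rather than the implementation used in this analysis, and the toy learner (a training-set mean) stands in for the machine learning estimators of Section 5.

```python
import random
from typing import Callable, List

# A fitted nuisance model, represented as a map from a unit's index to its prediction.
Predictor = Callable[[int], float]


def cross_fit(n: int, n_folds: int, fit: Callable[[List[int]], Predictor],
              seed: int = 0) -> List[float]:
    """Out-of-fold predictions for units 0, ..., n - 1 (hypothetical helper).

    `fit(train_indices)` trains a nuisance model on the given units and returns a
    Predictor. Each unit's prediction comes from a model fit without that unit.
    """
    idx = list(range(n))
    random.Random(seed).shuffle(idx)              # random fold assignment
    folds = [idx[k::n_folds] for k in range(n_folds)]
    preds = [0.0] * n
    for k, held_out in enumerate(folds):
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        predictor = fit(train)                    # fit on the other folds only
        for i in held_out:
            preds[i] = predictor(i)               # predict on the held-out fold
    return preds


# Toy usage: the "nuisance" is just the mean outcome of the training units.
y = [1.0, 2.0, 3.0, 4.0]


def mean_fit(train: List[int]) -> Predictor:
    m = sum(y[t] for t in train) / len(train)
    return lambda i: m


print(cross_fit(len(y), n_folds=4, fit=mean_fit))  # leave-one-out means
```

With as many folds as units, the scheme reduces to leave-one-out; in practice a small number of folds is typical, and the resulting out-of-fold predictions are what the estimator would plug in for each participant.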

Figure 6 presents the estimated probability of a positive CD4+ T-cell response as a function of BMI, for BMI values between the 0.05 and 0.95 quantiles of the marginal empirical distribution of BMI, using our estimator (left panel), the local linear estimator (middle panel), and the sample-splitting estimator (right panel). Pointwise 95% confidence intervals are shown as dashed/dotted lines. The three methods yielded qualitatively similar results. The change in the probability of CD4+ response appears to be largest for BMI < 20 and BMI > 30. After adjusting for potential confounders, we estimated the probability of a positive CD4+ T-cell response to be 0.52 (95% doubly-robust CI: 0.44–0.59) for a BMI of 20, 0.47 (0.42–0.52) for a BMI of 25, 0.47 (0.32–0.62) for a BMI of 30, and 0.29 (0.12–0.47) for a BMI of 35. We estimated the difference between these probabilities for BMIs of 20 and 35 to be 0.22 (0.03–0.41).

Figure 6:

Estimated probabilities of CD4+ T-cell response and 95% pointwise confidence intervals as a function of BMI, adjusted for sex, age, number of vaccinations received, vaccine dose, and study. The left panel displays the estimator proposed here, the middle panel the local linear estimator of Kennedy et al. (2017), and the right panel the sample-splitting version of our estimator with m = 5 splits. In the left panel, the blue dashed lines are confidence intervals based on the plug-in estimator of the scale parameter, and the dotted lines are based on the doubly-robust estimator of the scale parameter.

7. Concluding remarks

The work we have presented in this paper lies at the interface of causal inference and shape-constrained nonparametric inference, and there are natural future directions building on developments in either of these areas. Inference on a monotone causal dose-response curve when outcome data are only observed subject to potential coarsening, such as censoring, truncation, or missingness, is needed to increase the applicability of our proposed method. To tackle such cases, it appears most fruitful to follow the general primitive strategy described in Westling and Carone (2019) based on a revised causal identification formula allowing such coarsening.

It would be useful to develop tests of the monotonicity assumption, as Durot (2003) did for regression functions. Such a test could likely be developed by studying the large-sample behavior of $\|\Psi_n - \bar{\Psi}_n\|_\infty$ under the null hypothesis that θ0 is monotone, where $\Psi_n$ and $\bar{\Psi}_n$ are the primitive estimator and its greatest convex minorant as defined in Section 2.2. Such a result would likely permit testing with a given asymptotic size when θ0 is strictly increasing, and asymptotically conservative inference otherwise. It would also be useful to develop methods for uniform inference. Uniform inference is difficult in this setting because $\{n^{1/3}[\theta_n(a) - \theta_0(a)] : a \in \mathcal{A}\}$ does not converge weakly, as a process in the space of bounded functions on $\mathcal{A}$, to a tight limit process. Indeed, Theorem 3 indicates that this process converges to an independent white noise process, which is not tight, so this convergence is not useful for constructing uniform confidence bands. Instead, it may be possible to extend the work of Durot et al. (2012) to our setting (and to other generalized Grenander-type estimators) by demonstrating that $\log n$ times a suitably scaled and centered supremum of $|\theta_n(a) - \theta_0(a)|$ converges in distribution to a non-degenerate limit, where the scaling involves a constant α0 depending upon P0, the centering is a deterministic sequence cn, and the supremum is taken over a suitable sequence of subsets increasing to $\mathcal{A}$. Developing procedures for uniform inference and tests of the monotonicity assumption are important areas for future research.
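As a rough illustration of the kind of statistic discussed above, the sketch below computes the greatest convex minorant of a cumulative sum diagram and the largest vertical gap between the diagram and its minorant; the gap is zero when the diagram is already convex. This is an assumption-laden stand-in: it uses the classical (unadjusted) regression cumulative sum diagram rather than the causal primitive Ψn of Section 2.2, the function names are hypothetical, and it says nothing about the null distribution that would be needed to calibrate a test.

```python
from itertools import accumulate
from typing import List, Sequence, Tuple


def greatest_convex_minorant(xs: Sequence[float], ys: Sequence[float]) -> List[float]:
    """Values at xs of the greatest convex minorant (GCM) of the points (xs[i], ys[i]).

    Assumes xs is strictly increasing.
    """
    # Vertices of the lower convex hull, via the lower half of Andrew's monotone chain.
    hull: List[int] = []
    for i in range(len(xs)):
        while len(hull) >= 2:
            a, b = hull[-2], hull[-1]
            cross = (xs[b] - xs[a]) * (ys[i] - ys[a]) - (ys[b] - ys[a]) * (xs[i] - xs[a])
            if cross <= 0:  # b lies on or above the chord from a to i
                hull.pop()
            else:
                break
        hull.append(i)
    # Between consecutive hull vertices the GCM is the straight chord;
    # at the vertices themselves it equals the data.
    gcm = [float(v) for v in ys]
    for a, b in zip(hull, hull[1:]):
        slope = (ys[b] - ys[a]) / (xs[b] - xs[a])
        for i in range(a + 1, b):
            gcm[i] = ys[a] + slope * (xs[i] - xs[a])
    return gcm


def sup_gap_to_gcm(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Largest vertical gap between the points and their GCM (zero if already convex)."""
    gcm = greatest_convex_minorant(xs, ys)
    return max(float(v) - g for v, g in zip(ys, gcm))


def cumsum_diagram(y_sorted_by_exposure: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Classical cumulative sum diagram: points (i/n, (y_1 + ... + y_i)/n), i = 0..n."""
    n = len(y_sorted_by_exposure)
    xs = [i / n for i in range(n + 1)]
    ys = [0.0] + [s / n for s in accumulate(y_sorted_by_exposure)]
    return xs, ys


# Hypothetical outcomes, already ordered by exposure.
print(sup_gap_to_gcm(*cumsum_diagram([0.1, 0.2, 0.5, 0.9])))  # increasing: diagram convex
print(sup_gap_to_gcm(*cumsum_diagram([0.9, 0.5, 0.2, 0.1])))  # decreasing: positive gap
```

Increasing outcomes give a convex diagram and a zero gap, while decreasing outcomes push the diagram above its minorant, which is the departure from monotonicity that a Kolmogorov-type statistic of this form is designed to detect.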

An alternative approach to estimating a causal dose-response curve is to use local linear regression, as Kennedy et al. (2017) did. As is true in the context of estimating classical univariate functions such as density, hazard, and regression functions, there are certain trade-offs between local linear smoothing and monotonicity-based methods. On the one hand, local linear regression estimators exhibit a faster $n^{-2/5}$ rate of convergence whenever optimal tuning rates are used and the true function possesses two continuous derivatives. However, the limit distribution involves an asymptotic bias term depending on the second derivative of the true function, so that confidence intervals based on optimally-chosen tuning parameters provide asymptotically correct coverage only for a smoothed parameter rather than the true parameter of interest. In contrast, monotonicity-constrained estimators such as the estimator proposed here exhibit an $n^{-1/3}$ rate of convergence whenever the true function is strictly monotone and possesses one continuous derivative, do not require choosing a tuning parameter, are invariant to strictly increasing transformations of the exposure, and their limit theory does not include any asymptotic bias (as illustrated by Theorem 2). We note that both estimators achieve the optimal rate of convergence for pointwise estimation of a univariate function under their respective smoothness constraints. In our view, the ability to perform asymptotically valid inference using a monotonicity-constrained estimator is one of the most important benefits of leveraging the monotonicity assumption rather than using smoothing methods. This advantage was evident in our numerical studies when comparing the isotonic estimator proposed here and the local linear method of Kennedy et al. (2017). Under-smoothing can be used to construct calibrated confidence intervals using kernel-smoothing estimators, but performing adequate under-smoothing in practice is challenging.
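The tuning-free and transformation-invariant character of monotonicity-constrained estimation is already visible in the classical least-squares isotonic regression estimator that our procedure generalizes. The sketch below implements that classical estimator with the pool-adjacent-violators algorithm for a non-decreasing fit, assuming no ties in the exposure; it performs no confounding adjustment and is not the causal estimator proposed here. The function names and the data in the usage example are hypothetical.

```python
import math
from typing import List, Sequence


def pava(y: Sequence[float]) -> List[float]:
    """Least-squares non-decreasing fit to y (already ordered by exposure)
    via the pool-adjacent-violators algorithm."""
    blocks: List[List[float]] = []  # each block: [block mean, block size]
    for v in y:
        blocks.append([float(v), 1])
        # Pool adjacent blocks while the newest one violates monotonicity.
        while len(blocks) >= 2 and blocks[-2][0] > blocks[-1][0]:
            m2, n2 = blocks.pop()
            m1, n1 = blocks.pop()
            blocks.append([(m1 * n1 + m2 * n2) / (n1 + n2), n1 + n2])
    fit: List[float] = []
    for mean, size in blocks:
        fit.extend([mean] * int(size))
    return fit


def isotonic_fit(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Isotonic regression of y on x, returned in the original order of the data.
    Only the ranks of x are used, so any strictly increasing transform of x
    gives the same fit."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    fitted_sorted = pava([y[i] for i in order])
    fitted = [0.0] * len(x)
    for rank, i in enumerate(order):
        fitted[i] = fitted_sorted[rank]
    return fitted


# Hypothetical exposures and binary outcomes (not data from the analysis above).
x = [23.8, 17.9, 31.2, 21.4, 35.7, 26.5]
y = [0, 0, 1, 1, 1, 1]
fit_raw = isotonic_fit(x, y)
fit_log = isotonic_fit([math.log(v) for v in x], y)  # strictly increasing transform
print(fit_raw)              # [0.5, 0.0, 1.0, 0.5, 1.0, 1.0]
print(fit_raw == fit_log)   # True
```

No bandwidth or other tuning parameter appears anywhere in the fit. For a non-increasing fit, one can apply the same routine to the negated outcomes and negate the result.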

The two methods for pointwise asymptotic inference we presented require estimation of the derivative θ0′(a) and the scale parameter κ0(a). We found that the plug-in estimator of κ0(a) had low variance but possibly large bias, depending on the degree of inconsistency of μn and gn, whereas the doubly-robust estimator of κ0(a) had high variance but low bias as long as either μn or gn was consistent. In practice, the low variance of the plug-in estimator often outweighed its bias, resulting in better coverage rates for intervals based on the plug-in estimator of κ0(a), especially in samples of small and moderate sizes. Whether a doubly-robust estimator of κ0(a) with smaller variance can be constructed is an important question to be addressed in future work. We found that sample splitting with as few as m = 5 splits provided doubly-robust coverage, and the sample-splitting estimator also had smaller variance than the original estimator, at the expense of some additional bias.
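One simple way to combine estimates from m disjoint subsamples, in the spirit of the divide-and-conquer approach of Banerjee, Durot and Sen (2019), is to average them and form a Student-t interval from their spread. The sketch below assumes exactly that construction; it is not necessarily the precise procedure used here, the function name `split_average_ci` is hypothetical, and the subsample estimates in the usage example are made-up numbers. The t quantile is passed in explicitly (about 2.776 for m = 5 and 95% coverage) to keep the example free of third-party dependencies.

```python
import math
import statistics
from typing import Sequence, Tuple


def split_average_ci(estimates: Sequence[float],
                     t_quantile: float) -> Tuple[float, float, float]:
    """Average m subsample estimates of theta(a) and return (center, lower, upper).

    t_quantile should be the (1 - alpha/2) quantile of Student's t distribution
    with m - 1 degrees of freedom.
    """
    m = len(estimates)
    if m < 2:
        raise ValueError("need at least two subsample estimates")
    center = statistics.fmean(estimates)
    std_err = statistics.stdev(estimates) / math.sqrt(m)
    return center, center - t_quantile * std_err, center + t_quantile * std_err


# Hypothetical estimates of theta(a) from m = 5 disjoint subsamples.
est = [0.44, 0.51, 0.47, 0.55, 0.49]
print(split_average_ci(est, t_quantile=2.776))  # t quantile for 4 df, 95% level
```

Averaging over subsamples reduces variance, while each subsample estimate is computed from only n/m observations, which is consistent with the variance and bias trade-off described above.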

It would be even more desirable to have inferential methods that do not require estimation of additional nuisance parameters or sample splitting. Unfortunately, the standard nonparametric bootstrap is not generally consistent in Grenander-type estimation settings, and although alternative bootstrap methods have been proposed, to our knowledge, all such proposals require the selection of critical tuning parameters (Kosorok, 2008; Sen et al., 2010). Likelihood ratio-based inference for Grenander-type estimators has proven fruitful in a variety of contexts (see, e.g., Banerjee and Wellner, 2001; Groeneboom and Jongbloed, 2015), and extending such methods to our context is also an area of significant interest for future work.

Supplementary Material

Contributor Information

Ted Westling, Department of Mathematics and Statistics, University of Massachusetts Amherst.

Peter Gilbert, Vaccine and Infectious Disease Division, Fred Hutchinson Cancer Research Center.

Marco Carone, Department of Biostatistics, University of Washington.

References

- Ayer M, Brunk HD, Ewing GM, Reid WT, and Silverman E (1955). An empirical distribution function for sampling with incomplete information. Ann. Math. Statist. 26(4), 641–647.

- Balabdaoui F, Jankowski H, Pavlides M, Seregin A, and Wellner J (2011). On the Grenander estimator at zero. Statistica Sinica 21(2), 873.

- Banerjee M, Durot C, and Sen B (2019). Divide and conquer in nonstandard problems and the super-efficiency phenomenon. Ann. Statist. 47(2), 720–757.

- Banerjee M and Wellner JA (2001). Likelihood ratio tests for monotone functions. Ann. Statist. 29(6), 1699–1731.

- Bang H and Robins JM (2005). Doubly robust estimation in missing data and causal inference models. Biometrics 61(4), 962–973.

- Barlow RE, Bartholomew DJ, Bremner JM, and Brunk HD (1972). Statistical Inference Under Order Restrictions: The Theory and Application of Isotonic Regression. New York: Wiley.

- Belloni A, Chernozhukov V, Chetverikov D, and Wei Y (2018). Uniformly valid post-regularization confidence regions for many functional parameters in Z-estimation framework. Ann. Statist. 46(6B), 3643–3675.

- Bind M-AC and Rubin DB (2019). Bridging observational studies and randomized experiments by embedding the former in the latter. Statistical Methods in Medical Research 28(7), 1958–1978.

- Brunk HD (1970). Estimation of isotonic regression. In Nonparametric Techniques in Statistical Inference (Proc. Sympos., Indiana Univ., Bloomington, Ind., 1969), pp. 177–197. London: Cambridge Univ. Press.

- Chernoff H (1964). Estimation of the mode. Annals of the Institute of Statistical Mathematics 16(1), 31–41.

- Díaz I and van der Laan MJ (2011). Super learner based conditional density estimation with application to marginal structural models. The International Journal of Biostatistics 7(1), 1–20.

- Durot C (2003). A Kolmogorov-type test for monotonicity of regression. Statistics & Probability Letters 63(4), 425–433.

- Durot C, Kulikov VN, and Lopuhaä HP (2012). The limit distribution of the L∞-error of Grenander-type estimators. The Annals of Statistics 40(3), 1578–1608.

- Gill RD and Robins JM (2001). Causal inference for complex longitudinal data: The continuous case. The Annals of Statistics 29(6), 1785–1811.

- Groeneboom P and Jongbloed G (2014). Nonparametric Estimation under Shape Constraints. Cambridge University Press.

- Groeneboom P and Jongbloed G (2015). Nonparametric confidence intervals for monotone functions. The Annals of Statistics 43(5), 2019–2054.

- Groeneboom P and Wellner JA (2001). Computing Chernoff's distribution. Journal of Computational and Graphical Statistics 10(2), 388–400.

- Horvitz DG and Thompson DJ (1952). A generalization of sampling without replacement from a finite universe. Journal of the American Statistical Association 47(260), 663–685.

- Jin X, Morgan C, et al. (2015). Multiple factors affect immunogenicity of DNA plasmid HIV vaccines in human clinical trials. Vaccine 33(20), 2347–2353.

- Kennedy EH (2019). Nonparametric causal effects based on incremental propensity score interventions. Journal of the American Statistical Association 114(526), 645–656.

- Kennedy EH, Ma Z, McHugh MD, and Small DS (2017). Non-parametric methods for doubly robust estimation of continuous treatment effects. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 79(4), 1229–1245.

- Kosorok MR (2008). Bootstrapping the Grenander estimator. In Balakrishnan N, Peña EA, and Silvapulle MJ (Eds.), Beyond Parametrics in Interdisciplinary Research: Festschrift in Honor of Professor Pranab K. Sen, Volume 1 of Collections, pp. 282–292. Institute of Mathematical Statistics.

- Kulikov VN and Lopuhaä HP (2006). The behavior of the NPMLE of a decreasing density near the boundaries of the support. The Annals of Statistics 34(2), 742–768.

- Liu F, Guo Z, and Dong C (2017). Influences of obesity on the immunogenicity of hepatitis B vaccine. Human Vaccines & Immunotherapeutics 13(5), 1014–1017.

- Neugebauer R and van der Laan M (2005). Why prefer double robust estimators in causal inference? Journal of Statistical Planning and Inference 129(1-2), 405–426.

- Neugebauer R and van der Laan MJ (2007). Nonparametric causal effects based on marginal structural models. Journal of Statistical Planning and Inference 137(2), 419–434.

- Nolan D and Pollard D (1987). U-processes: Rates of convergence. Ann. Statist. 15(2), 780–799.

- Painter SD, Ovsyannikova IG, and Poland GA (2015). The weight of obesity on the human immune response to vaccination. Vaccine 33(36), 4422–4429.