Abstract

Background

The heterogeneous nature of mood and anxiety disorders highlights a need for dimensionally based descriptions of psychopathology that inform better classification and treatment approaches. Following the Research Domain Criteria (RDoC) approach, the current investigation sought to derive constructs assessing positive and negative valence domains across multiple units of analysis.

Methods

Adults with clinically impairing mood and anxiety symptoms (N=225) completed comprehensive assessments across several units of analysis. Self-report assessments included nine questionnaires that assess mood and anxiety symptoms and traits reflecting the negative and positive valence systems. Behavioral assessments included emotional reactivity and distress tolerance tasks, during which skin conductance and heart rate were measured. Neuroimaging assessments included fear conditioning and a reward processing task. The latent variable structure underlying these measures was explored using sparse Bayesian group factor analysis (GFA).

Results

GFA identified 11 latent variables explaining 31.2% of the variance across tasks, none of which loaded across units of analysis or tasks. Instead, variance was best explained by individual latent variables for each unit of analysis within each task. Post-hoc analyses showed 1) associations with small effect sizes between latent variables derived separately from fMRI and self-report data and 2) that some latent variables are not directly related to individual valence system constructs.

Conclusions

The lack of latent structure across units of analysis highlights challenges of the RDoC approach, and suggests that while dimensional analyses work well to reveal within-task features, more targeted approaches are needed to reveal latent cross-modal relationships that could illuminate psychopathology.

Keywords: Research Domain Criteria (RDoC), Group Factor Analysis (GFA), Positive Valence Processing, Negative Valence Processing, Mood Disorders, Anxiety Disorders

Introduction

Mood and anxiety disorders are among the most common and debilitating mental health conditions worldwide (1,2). Diagnostic systems for these disorders have largely relied upon presenting signs and symptoms that do not necessarily reflect underlying neurobiological and behavioral systems (3). However, the phenotypic and etiological heterogeneity of Major Depressive Disorder (MDD) and anxiety disorders poses significant challenges to understanding biological and behavioral mechanisms, which may account for the partial effectiveness of extant treatments (e.g., 4,5).

These challenges have motivated the use of latent variable approaches (6) to help delineate distinct syndromes of mood and anxiety disorders that better reflect the underlying neurobiology. Latent variable approaches treat mental disorders as latent constructs that can be associated with measures from multiple units of analysis and multiple tasks (7–9). These approaches are consistent with the goal of the Research Domain Criteria (RDoC) framework – to develop a research classification system for mental disorders based upon both neurobiology and observable behavior (10,11). The RDoC approach aims to derive psychological constructs that are observable in multiple units of analysis, including but not limited to self-report, behavior, physiology, and neural circuits. The identification of such constructs may allow us to transcend traditional diagnostic groups and more adequately capture variation in clinical populations. This could lead to more accurate, personalized diagnostic measures for mental health that could inform more targeted treatments.

RDoC constructs of particular relevance to mood and anxiety disorders are the positive and negative valence systems (12). Low positive affect is linked to depression and some anxiety disorders, high negative affect is common to both anxiety and depression, and comorbid anxiety and depression are associated with more negative affect than either disorder alone (7,13–20). Additionally, psychophysiological and neurobiological data indicate that the positive and negative valence systems are closely tied to reward and threat sensitivity, respectively (21), making them amenable to investigation through reinforcement-based behavioral paradigms. The goal then, from the RDoC perspective, is to determine whether common measures of anxiety, depression, threat sensitivity, and reward sensitivity — extending across the self-report, behavioral, physiological, and neural domains — map onto shared latent constructs that reflect underlying positive and negative valence systems.

Despite this promising blueprint, however, previous results suggest limitations in the RDoC approach. For example, previous studies have failed to identify latent psychological constructs that cut across two critical units of analysis: behavioral tasks and self-report measures (22,23). Eisenberg et al. (22) found that self-report and task measures of self-regulation cluster in separate regions of a latent psychological space, suggesting a weak relation across the two units of analysis. This is consistent with correlational approaches that indicate a lack of coherence between behavioral and self-report measures for self-control (24,25), impulsivity (26), and cognitive empathy (27; see (28) for a review). Thus, it remains unclear whether unifying psychological constructs are observable across units of analysis and what the optimal analytic approaches are for identifying them.

Previous reports, however, have two important limitations. First, they have largely been restricted to behavioral and self-report levels of analysis. It therefore remains an open question whether expanding analyses to include neural and physiological data can enhance the identification of latent psychological constructs. Second, previous latent variable approaches have used methods like exploratory factor analysis (22) and principal component analysis (29) that are designed to account for correlational structure between individual variables, but that do not explicitly account for relationships between groups of variables. In this regard, group factor analysis (GFA; 30) provides a compelling alternative as it allows for examination of the latent variable structure underlying a set of experimental measures across multiple units of analysis, while rigorously accounting for relationships between the different groups of variables that compose each unit.

Here, we used GFA to analyze self-report, behavioral, physiological, and neural data from a population with significant mood and anxiety symptoms to (1) extract latent factors putatively reflecting underlying positive and negative valence systems, and (2) examine whether variables across different units of analysis map onto the same latent factor. We studied a clinical population with significant mood and anxiety symptoms whose members, importantly, were not required to meet DSM disorder criteria for study entry. By recruiting patients from primary care settings, we gained access to a generalizable sample of persons with anxiety and/or depressive symptoms without the filtering inherent to general psychiatric or other more specialized clinical settings.

Participants completed a battery of well-established tasks and self-report questionnaires that are heuristically aimed at quantifying positive and negative valence domains, as well as anxiety and depression symptoms. For physiology and behavior, we measured heart rate and subjective ratings to positive, negative, and neutral images from the International Affective Picture System (IAPS; 31,32). Further, we measured heart rate while participants performed the mirror tracing persistence task (MTPT), a measure of distress tolerance (33,34). For neural circuits, functional magnetic resonance imaging (fMRI) was used to measure brain activity while participants performed fear conditioning (35–37) and monetary incentive delay (MID; 38–40) tasks. These tasks targeted neural circuits for threat sensitivity and reward processing, respectively. We hypothesized that using a relatively broad range of tasks and units of analysis would improve the chances of identifying either cross-unit or cross-task latent variables reflecting the positive and negative valence systems that are putatively perturbed in anxiety and depression.

Methods

Sampling and Participants

We recruited participants from two clinics: UCSD Primary Care Clinics (N=101) and the UCLA Family Health Center (N=124). For inclusion and exclusion criteria and sample characteristics see supplemental methods section 1.1 and Tables S2, S3.

Procedures

Patients presented to the UCSD and UCLA clinics or responded to flyers and completed screening measures (the Overall Anxiety Severity and Impairment Scale, OASIS, and the Patient Health Questionnaire, PHQ-9) for eligibility. Individuals who accepted an invitation to participate in the study provided consent and underwent a structured diagnostic interview for DSM-5, the Mini International Neuropsychiatric Interview (MINI Version 7.0.0.0, used by permission of David Sheehan, MD). Subsequently, participants returned for a behavioral testing session and a neuroimaging testing session.

Nine scales were used to assess self-reported symptoms of positive and negative valence processing in the behavioral session (see Supplemental Materials for details). An International Affective Picture System (IAPS) task (31,32; Supplemental Materials) was used to measure physiological and behavioral responses to positively and negatively valenced stimuli. For images that represent pleasant, unpleasant, and neutral valence categories, we measured subjective valence and arousal ratings as well as heart rate (HR) and skin conductance response (SCR). Distress tolerance was indexed by the total duration of a frustrating computerized mirror tracing persistence task (MTPT; 34) that participants could opt out of at any time. Mean HR and the change of HR over the first minute (see Supplemental Materials) were included as physiological measures.

In the neuroimaging session, fear conditioning and monetary incentive delay (MID) tasks were conducted. To measure neural circuits associated with fear learning, participants completed a differential Pavlovian Fear Learning Task (41,42; Supplemental Materials) with two stages: fear acquisition and fear extinction. The MID task was used to probe neural responses to the anticipation and receipt of monetary reward and loss (38,39,43,44).

Group Factor Analysis

Group Factor Analysis (30) is an unsupervised learning technique that identifies latent variables, or “group factors” (GFs), across “blocks” of input variables that are assumed to be related due to an underlying construct. GFA extends traditional factor analysis, which identifies latent variables that describe relationships between individual variables in a dataset, by identifying additional latent variables that describe relationships between groups (blocks) of related variables under a sparsity constraint. Specifically, a sparse Bayesian estimation procedure identifies latent factors that either explain variance unique to a specific block or describe a robust relationship among all or a subset of blocks; for every block that a factor does not describe, the sparsity prior sets that factor's loadings to zero. Here, we treated separate tasks and separate units of analysis within tasks (e.g., behavioral versus physiological measures) as separate GFA blocks.
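
As a rough sketch of the model described above, GFA is commonly written as a factor model in which all blocks share the same latent factors but each block has its own loading matrix and noise level. The notation below ($M$ blocks, $K$ factors, and block-specific precisions $\alpha_{m,k}$ and $\tau_m$) is assumed here for illustration and may differ in detail from the parameterization in (30):

$$
\begin{aligned}
\mathbf{x}^{(m)}_n &= \mathbf{W}^{(m)} \mathbf{z}_n + \boldsymbol{\epsilon}^{(m)}_n, \qquad m = 1, \dots, M \\
\mathbf{z}_n &\sim \mathcal{N}(\mathbf{0}, \mathbf{I}_K), \qquad \boldsymbol{\epsilon}^{(m)}_n \sim \mathcal{N}(\mathbf{0}, \tau_m^{-1}\mathbf{I}) \\
w^{(m)}_{d,k} &\sim \mathcal{N}(0, \alpha_{m,k}^{-1})
\end{aligned}
$$

Under this sketch, a large $\alpha_{m,k}$ shrinks every loading of factor $k$ in block $m$ toward zero, so that factor is effectively switched off for that block. A factor with non-negligible loadings in only one block is block-specific, whereas a factor with loadings in several blocks captures structure shared across them.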

Table 1 illustrates how different measures from each task map onto constructs and subconstructs of the positive and negative valence systems according to RDoC guidelines. Among the 225 participants, 107 had missing or unusable data in at least one task due to factors such as participant dropout or poor signal-to-noise ratios for physiological measures. In our first GFA (Figure 1), which uses blocks of variables from different tasks, we used a subset of all of the available variable blocks in order to maximize the number of participants with complete data, yielding a total of 118 participants with complete data. The variable blocks included were fear acquisition fMRI, fear extinction fMRI, MID fMRI, MTPT behavior, MTPT heart rate, IAPS heart rate, and self-report symptoms (see Supplemental Table S1 for detailed information on missing data for each measurement). Larger sample sizes (from N=139 to N=220) were achieved in subsequent GFAs that were constrained to within-task variable blocks that contained fewer missing entries (see Within-task group factor analysis section and Supplemental Materials).
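
The trade-off between the number of variable blocks and the number of participants with complete data can be illustrated with a minimal sketch. The participant IDs, block names, and the `availability` layout below are hypothetical and are not taken from the study data; the point is only that each additional block can shrink the complete-case sample.

```python
def complete_cases(availability, blocks):
    """Return sorted IDs of participants with usable data in every requested block.

    availability: dict mapping participant ID -> set of block names with
    usable data (hypothetical layout, for illustration only).
    """
    required = set(blocks)
    return sorted(pid for pid, have in availability.items() if required <= have)


# Hypothetical toy data: three participants, four possible blocks.
availability = {
    "p01": {"MID_fMRI", "self_report", "IAPS_HR", "IAPS_SCR"},
    "p02": {"MID_fMRI", "self_report", "IAPS_HR"},
    "p03": {"self_report"},
}

core_blocks = ["MID_fMRI", "self_report", "IAPS_HR"]
with_scr = core_blocks + ["IAPS_SCR"]

print(len(complete_cases(availability, core_blocks)), "complete cases without SCR")
print(len(complete_cases(availability, with_scr)), "complete cases with SCR")
```

In this toy example, adding the skin conductance block removes a participant from the complete-case sample, which mirrors the reasoning for leaving some blocks out of the cross-task analysis.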

Table 1:

Mapping between RDoC constructs/subconstructs and GFA variable blocks. A total of 7 variable blocks were included in the cross-task GFA. IAPS: International Affective Picture System, HR: heart rate, SCR: skin conductance response, MTPT: mirror tracing persistence task, MID: monetary incentive delay.

| Construct/Subconstruct | Circuits | Physiology | Behavior | Self-Report |
|---|---|---|---|---|
| Negative Valence Systems | | | | |
| Acute Threat (“Fear”) | fear conditioning fMRI | IAPS HR/SCR | fear conditioning contingency ratings | fear conditioning and IAPS valence and arousal ratings |
| Potential Threat (“Anxiety”) | - | - | - | OASIS, GAD7, MASQ Anxious Arousal, BIS, MASQ General Distress, SPSRQ punishment sensitivity, AcSEAS |
| Loss | MID fMRI | - | - | PHQ9, PANAS negative, MASQ Depressive |
| Frustrative Nonreward | - | MTPT HR | MTPT duration | - |
| Positive Valence Systems | | | | |
| Reward Responsiveness | MID fMRI | IAPS HR/SCR | - | SPSRQ reward sensitivity, BAS reward responsiveness, TEPS anticipatory pleasure, TEPS consummatory pleasure, PANAS positive, MASQ Anhedonic, IAPS valence and arousal ratings |
| Reward Valuation | - | - | - | SPSRQ reward sensitivity, BAS drive, BAS fun seeking |

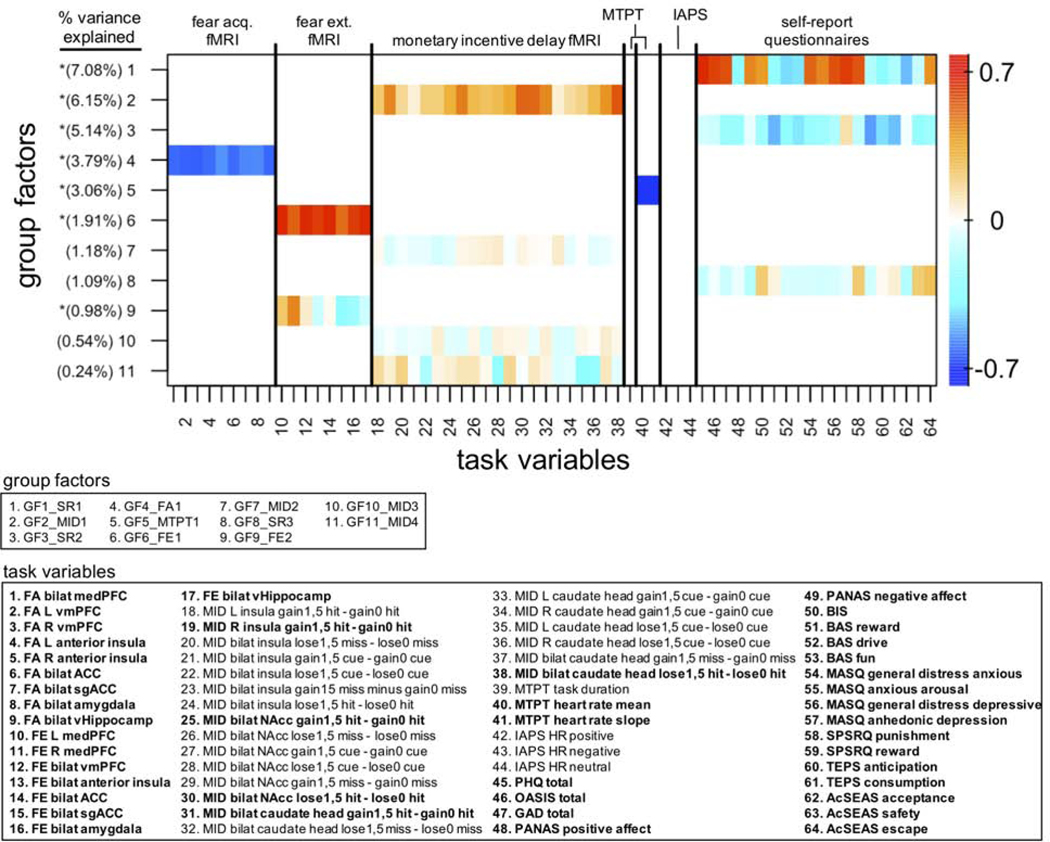

Figure 1.

GFA robust factor loadings (N=118). Heatmap colors indicate the weight of each task variable loading. Robust group factors are sorted in descending order by mean % variance explained across all groups. Asterisks indicate group factors that contained at least one task variable loading whose 95% credible interval did not contain zero. These task variables are bolded in the lower key beneath the heatmap. Group factor labels, indicating the variable block onto which each group factor loaded and the order by most variance explained, are given in the upper key beneath the heatmap. GF: group factor, MID: monetary incentive delay, SR: self-report, FA: fear acquisition, FE: fear extinction, MTPT: mirror tracing persistence task, IAPS: International Affective Picture System task, L: left hemisphere, R: right hemisphere, PHQ: Patient Health Questionnaire, OASIS: Overall Anxiety Severity and Impairment Scale, GAD: Generalized Anxiety Disorder questionnaire, PANAS: Positive and Negative Affect Schedule, BIS/BAS: Behavioral Inhibition System and Behavioral Activation System questionnaire, MASQ: Mood and Anxiety Symptom Questionnaire, SPSRQ: Sensitivity to Punishment and Sensitivity to Reward Questionnaire, TEPS: Temporal Experience of Pleasure Scale, AcSEAS: Acceptance, Safety, Escape/Avoidance Scale.

For fear conditioning, CS+ minus CS- contrasts from 7 regions of interest (ROIs) (ACC, vmPFC, dmPFC, sgACC, anterior insula, ventral hippocampus, amygdala), selected on the basis of meta-analyses (45,46), were included as separate GFA blocks for the acquisition and extinction phases. For the MID task, percent signal change scores from fMRI contrasts (e.g., gain versus no gain; see Supplemental Materials) in 3 previously identified ROIs (insula, NAcc, caudate head; 39,44) were used as a block. For all fMRI data, bilateral ROIs that did not show significantly different activations across subjects were merged (e.g., left and right amygdala; see Supplemental Materials for details).

Heart rate responses for the MTPT and IAPS tasks were each included as separate physiology blocks, while the single behavioral measure of MTPT duration was included as a behavioral block. Lastly, 20 subscale scores across 9 self-report questionnaires were entered as a block for self-report measures. Fear conditioning contingency ratings and IAPS SCR, which were omitted from the cross-task GFA in order to maximize the sample size, were included in subsequent within-task GFAs.

We did not attempt to increase GFA sample sizes through imputation due to the non-random nature of the missing data. Namely, all missing data occurred either for an entire unit of analysis (e.g., skin conductance) or for an entire task. However, as a supplemental control we reran the GFA with expectation maximization imputation and found a similar pattern of results (Supplemental Results 2.3, Figure S1).

Per the assumptions of factor analysis, all input variables were standardized to have a mean of zero and standard deviation of 1. To minimize the risk of identifying spurious GFs, GFA estimation was repeated 10 times with different random seeds to retain robust components consistent across sampling chains. Robust components were selected by optimizing two parameters in order to minimize cross-correlation among the resulting group factors and to maximize variance explained. The first parameter was a “correlation threshold”, which describes the minimum Pearson correlation between posterior means of GFs obtained from separate sampling chains in order for those GFs to be considered as the “same”. The second parameter was a “matching threshold”, which describes the minimum proportion of iterations that a single GF must be identified in, per a given correlation threshold, to be deemed robust. Although the GFA procedure is designed to extract orthogonal GFs (30), some random nonzero correlation will still occur. To ensure the orthogonality of extracted GFs, we used an iterative procedure that removed GFs contributing to any observed intercorrelation greater than that expected by chance (see Supplemental Materials). Data analyses were carried out using the statistical software R (47) and the GFA package (30).
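
The two-threshold selection rule described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the routine implemented in the GFA package: the function names, the list-of-lists data layout, and the use of the first chain as the reference are all hypothetical, and the absolute value of the correlation is used on the assumption that the sign of a factor is arbitrary.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant vector has no defined correlation
    return cov / sqrt(var_a * var_b)


def robust_factors(chains, corr_threshold=0.8, match_threshold=0.9):
    """Keep factors from the first chain that recur in enough chains.

    chains: list of sampling chains; each chain is a list of factors and each
    factor is a list of posterior-mean loadings (hypothetical layout).
    """
    robust = []
    for candidate in chains[0]:
        # Count chains (reference chain included) holding a matching factor.
        hits = sum(
            any(abs(pearson(candidate, f)) >= corr_threshold for f in chain)
            for chain in chains
        )
        if hits / len(chains) >= match_threshold:
            robust.append(candidate)
    return robust


# Hypothetical toy input: 3 chains, 2 factors each, 4 loadings per factor.
chains = [
    [[1.0, 0.5, 0.0, -0.5], [0.0, 1.0, 0.0, 1.0]],
    [[0.9, 0.6, 0.1, -0.4], [1.0, 1.0, 0.0, 0.0]],
    [[-1.0, -0.5, 0.0, 0.5], [0.2, 0.1, 0.9, 0.3]],
]
robust = robust_factors(chains, corr_threshold=0.8, match_threshold=0.9)
print(len(robust), "robust factor(s) retained")
```

Raising either threshold makes the rule stricter: a higher correlation threshold demands closer agreement between chains, and a higher matching threshold demands that the agreement hold in more chains.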

Results

Cross-Task Group Factor Analysis

Robust GFs from the cross-task GFA are shown in Figure 1, with each row representing factor loadings of one GF. We identified optimal correlation and matching thresholds of 0.8 and 0.9, respectively, using a grid search with values of 0.1, 0.3, 0.5, 0.6, 0.7, 0.8, and 0.9 for each parameter. A total of 11 robust GFs were extracted, explaining approximately 31.2% of the variance across variable blocks. Importantly, with each variable block restricted to one unit of analysis within one task, none of the extracted robust GFs loaded onto more than one block. In other words, the GFA failed to show any coherence across either units of analysis or tasks in latent variable space. To confirm that these findings were not specifically driven by the GFA algorithm, we conducted a classic principal component analysis (PCA; 29) with orthogonal rotation and found a similar pattern of factor loadings (Supplemental results section 2.4, Figure S3). However, because this frequentist approach lacks an equivalent of the GFA sparsity prior, it does produce factors with small-magnitude loadings that extend across variable blocks. Therefore, for further clarity, we visualized the significant pairwise correlations in our dataset through a graphical network modeling procedure. The resulting model confirms the sparse correlational structure between variable blocks in our dataset (Supplemental results section 2.5, Figure S4), but suggests that there may be some weak cross-modal relationships between individual variables in different blocks that were not identified by the GFA. Further, a quantitative analysis of the potential extent of valence processing for each GF suggests that some GFs may not be directly related to their target valence system constructs (Supplemental Results 2.9).

Within-Task Group Factor Analysis

Although only a subset of participants had complete data across all tasks (N=118 out of 225), we can achieve larger sample sizes and increase statistical power by examining the complete dataset acquired within a single task. We therefore computed additional within-task GFAs in order to (1) examine whether an increase in statistical power could lead to the identification of cross-unit robust GFs, and (2) test the stability of the GFs extracted from the cross-task GFA. To test stability we examined the correlation structure between GFs extracted from the cross-task and within-task GFAs (see supplemental results section 2.7 for comparison results).

Because we had measures from multiple units of analysis for the fear conditioning, IAPS, and MTPT tasks, we focus on those within-task GFAs here (Figure 2; but see Supplemental results section 2.6, Figure S5, for within-task GFAs for the MID and self-report tasks). Fear conditioning (N=158) data blocks included fMRI and contingency rating data from both the acquisition and extinction phases of conditioning. For the IAPS task (N=141), heart rate, SCR, and subjective ratings of image valence and arousal were entered as three separate blocks. For the MTPT (N=180), task duration and heart rate were entered as two separate blocks. The same procedure of extracting robust GFs was applied here as in the cross-task GFA.

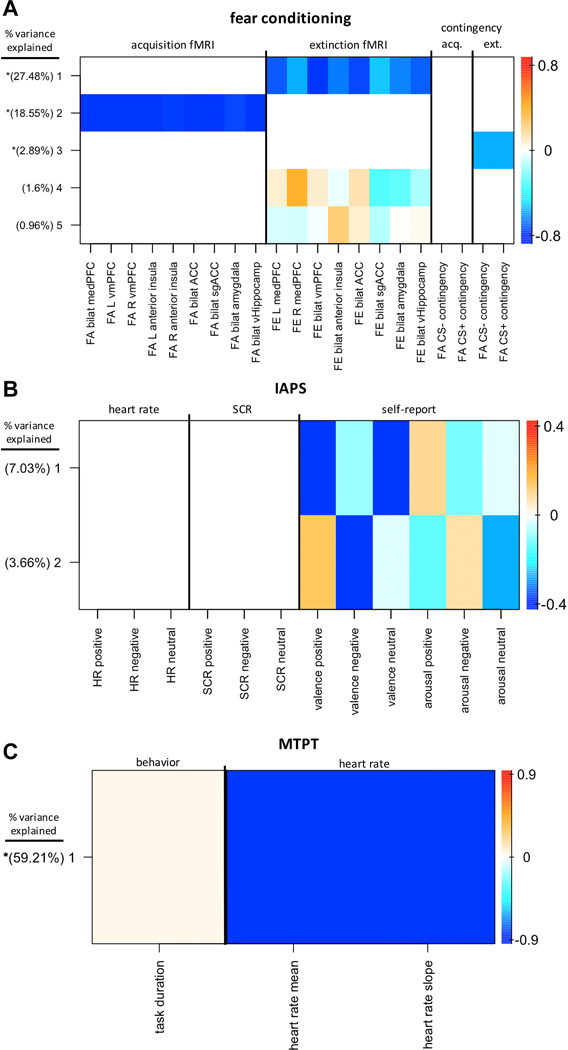

Figure 2.

Within-task group factor (GF) analysis factor loadings. (A) Fear conditioning (n = 158). (B) International Affective Picture System task (IAPS) (n = 141). (C) Mirror tracing persistence task (MTPT) (n = 180). Heatmap colors indicate the normalized weight of each variable loading. The x-axis indicates the variables included in each task-specific GF analysis. Extracted robust GFs and the percentage of within-task variance explained by each is shown on the y-axis. GFs are sorted in descending order by percent variance explained. Asterisks indicate GFs that contained at least one loading weight whose 95% credible interval did not contain 0. ACC, anterior cingulate cortex; acq., acquisition; bilat, bilateral; CS, conditioned stimulus; ext., extinction; FA, fear acquisition; FE, fear extinction; fMRI, functional magnetic resonance imaging; HR, heart rate; L, left; medPFC, medial prefrontal cortex; R, right; SCR, skin conductance response; sgACC, subgenual anterior cingulate cortex; vHippocamp, ventral hippocampus; vmPFC, ventromedial prefrontal cortex.

Despite the increases in sample size from the full GFA for the fear conditioning (+N=40) and IAPS (+N=21) within-task GFAs, no robust cross-unit GFs were identified in either case (Figure 2a,b). In fact, GFA identified no latent factors corresponding to physiological measures, either heart rate or SCR, on the IAPS task (Figure 2b). The MTPT GFA (+N=62), on the other hand, identified a single robust latent factor that loaded across behavioral (task duration) and physiological (heart rate) units of analysis. However, the mean variance explained was 0.17% and 88.73% within the task duration and heart rate variable blocks, respectively. Thus, while this latent factor technically contained loadings across behavioral and physiological levels of analysis, the physiological variables accounted for virtually all of the variance explained.

To further investigate the sparse cross-modal relationships at the individual variable level implied by our network analysis model (Figure S4), we examined the relationship between GFs identified in our within-task GFAs using linear regression (Supplemental Results 2.8, Table S4). Briefly, we found that three of the within-task fear conditioning GFs and one within-task MID GF were significantly associated with two of the within-task self-report GFs that were found to reflect valence processing (see Supplemental Results 2.9), all with small effect sizes (0.02 < Cohen’s f² < 0.15; Table S4). However, these results should be interpreted with caution, as they are exploratory in nature, and none of the associated p-values survive adjustment for multiple comparisons.
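
For orientation, Cohen's f² for a regression model is conventionally obtained from the proportion of variance explained as f² = R² / (1 − R²), with 0.02, 0.15, and 0.35 as the usual benchmarks for small, medium, and large effects. The sketch below assumes this global form; the manuscript does not state which variant was used, and the R² value in the example is hypothetical rather than taken from Table S4.

```python
def cohens_f2(r_squared):
    """Global Cohen's f^2 from a model's R^2 (assumed form: R^2 / (1 - R^2))."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("r_squared must lie in [0, 1)")
    return r_squared / (1.0 - r_squared)


def effect_label(f2):
    """Label an f^2 value using Cohen's conventional benchmarks."""
    if f2 < 0.02:
        return "negligible"
    if f2 < 0.15:
        return "small"
    if f2 < 0.35:
        return "medium"
    return "large"


# Hypothetical example: a regression explaining 3% of the variance.
example_f2 = cohens_f2(0.03)
print(round(example_f2, 4), effect_label(example_f2))
```

On these benchmarks, a model would need to explain roughly 13% of the variance before its effect counted as medium, which gives a sense of how modest the reported associations are.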

Discussion

This investigation used a sparse Bayesian group factor analysis to assess the latent variable structure underlying positive and negative valence processing across units of analysis in a population with anxiety or depressive symptoms. Valence processing was measured by a battery of tasks extending across four units of analysis: self-report, behavior, physiology, and neural circuits. Both cross- and within-task GFAs failed to identify robust latent variables that loaded onto measures spanning either multiple units of analysis or multiple tasks. Instead, variance was best explained by individual latent components for each unit of analysis within each task. However, exploratory post hoc regression analyses show some marginal relationships between valence-related within-task self-report GFs and GFs derived from the within-task fear conditioning and MID GFAs.

The failure to identify robust latent variables across units of analysis through GFA is in line with several previous studies (22–26,48). While we hypothesized that the inclusion of measurements from neural and physiological units of analysis might improve the chances of identifying cross-unit latent variables underlying anxiety and depression, we found no evidence for this hypothesis. These results extend previous concerns about the challenges of dimensional approaches in psychology (22,23). Below we consider potential non-mutually exclusive explanations for the lack of cross-unit and cross-task latent structure observed here.

First, while the tasks included here are designed to target the positive and negative valence systems, different tasks may actually tap into independent latent sub-constructs within these systems. For example, according to the current NIMH RDoC guidelines (12), five sub-constructs are nested within the main construct of the negative-valence system: Acute Threat (“Fear”), Potential Threat (“Anxiety”), Sustained Threat, Loss, and Frustrative Nonreward. The fear conditioning and MID tasks can be thought to map onto the Acute Threat and Loss sub-constructs, respectively (Table 1). Assuming this nested structure, the result that no GFA factors loaded onto both tasks may suggest that these different sub-constructs are relatively independent. It is also worth noting that the targeted sub-constructs here were asymmetric in terms of valence: four sub-constructs were in the negative area, while only two were in the positive area, the latter of which did not include a behavioral measure. While it is not clear that this would bias our analyses towards or away from identifying crossmodal latent constructs, future studies may benefit from targeting a more balanced set of constructs and corresponding tasks.

Even when looking within tasks with larger sample sizes, GFA failed to identify robust cross-unit latent variables (Figure 2). The only exception to this pattern was the cross-unit GF that loaded onto both heart rate and task duration for the MTPT (Figure 2, bottom). However, the stark contrast in variance explained between the two variable loadings (see Results), with variance explained by task duration being nearly zero (0.17% ± 0.05%), suggests that this GF essentially reflects physiological processing alone. Therefore, differences in targeted sub-constructs between tasks alone cannot explain the observed results.

Further, valence quantification analyses (Figure S7) suggest that some latent factors identified by GFA may reflect constructs outside of the positive and negative valence systems altogether. Specifically, the MID GF that explained the most variance in the cross-task GFA (GF2_MID1, Figure 1) was not found to reflect valence processing, but instead to reflect the distinction between anticipation and consumption in the MID task (Figure S7b,c). This is in contrast to the self-report GFs that explained the most variance in the cross-task GFA (GF1_SR1 and GF1_SR2, Figure 1), which were both shown to strongly reflect valence processing (Figure S7b). This is in line with previous studies that have found a lack of coherence in latent structure between self-report and behavioral measures (22–26,48).

Another possible explanation is that while a given task is theoretically associated with a given latent construct, measurements in that task can be influenced by state effects. For example, evidence has shown that exercise immediately before an experiment can improve performance on speeded-information processing and memory tasks (49). Further, cognitive performance varies across the day (e.g., drastic change in memory function in the morning versus later in the day) with large individual differences (50,51). Therefore, the measures used in the GFA here likely reflect a combination of many other factors in addition to the targeted positive and negative valence processing systems. Such state effects may have led to the identification of latent variables that have relatively low factor loadings and/or explain a relatively small portion of the total variance among experimental variables (e.g., see bottom half of the heatmap in Figure 1).

Previous studies have also cited differences in test-retest reliability between self-report and behavioral measures as a potential explanation for the lack of shared latent structure between the two types of measure (23,52). fMRI and physiological measures have notoriously low signal-to-noise ratios and low test-retest reliability (53,54), while self-report measures are known to be relatively stable (23,52). This issue may have contributed to the lack of cross-task structure observed between physiological variable blocks and other variable blocks in the current dataset, as physiological measures showed relatively low split-half reliability (Table S5). This is consistent with the lack of factor loadings on physiological variables observed in the cross- and within-task GFAs. Importantly, the sparse prior implemented by the GFA prevents noise in one variable block from interfering with the identification of relationships between other variable blocks (30), so any issues of poor reliability in the current physiological measures cannot explain the lack of latent structure observed between the neural, behavioral, and self-report variable blocks.

The lack of cross-modal latent structure may have been due to insufficient power to detect weak but consistent relationships across units of analysis. This could be due to the relatively low observations-to-features ratio of 118:64 used in the current cross-task GFA. However, previous simulations suggest that the current Bayesian approach to GFA can provide reliable estimates of latent factor structures over a range of observations-to-features ratios that includes the one used here (see Figure 4 in Klami et al., 2015 (30)). That the within-task GFAs with larger observations-to-features ratios (e.g., 158:21 for fear conditioning variables) still failed to show evidence for robust cross-unit factors further suggests that insufficient power was not a critical limiting factor.

Despite the failure of the current GFA procedure to identify robust cross-modal latent factors, our exploratory regression results suggest that more targeted future approaches may be able to identify some small effects that are relevant to the RDoC approach. However, given that the expected effects (roughly Cohen’s f²=0.03–0.04) are small, it is an open question whether these cross-modal relationships would be clinically relevant. In addition, small effects will be easily obscured by the limitations discussed above, such as state effects and poor signal-to-noise ratios. We therefore conclude that our data do not provide an outright indictment of the RDoC approach, but rather highlight its challenges, and point towards more targeted approaches that emphasize design features like construct coherence, conditions that minimize the influence of state effects, and greater numbers of repeated measures. Similarly, the current findings do not rule out the possibility that the RDoC approach and the DSM-based multimodal categorical approach may ultimately reveal complementary information (55) about how to better diagnose and treat depression and anxiety disorders moving forward.

Supplementary Material

Acknowledgments

The research reported and the preparation of this article were supported by National Institute of Mental Health (NIH-NIMH) grant R01 MH101453 to Martin Paulus, Murray Stein, Michelle Craske, Susan Bookheimer, and Charles Taylor. Dr. Paulus is also supported by grants from the National Institute on Drug Abuse (U01 DA041089) and the National Institute of General Medical Sciences (P20GM121312). We thank Boyang Fan, Natasha Sidhu, and Richard Kim for helping with data collection and data analysis.

Dr. Stein has in the past 3 years been a consultant for Actelion, Acadia Pharmaceuticals, Aptinyx, Bionomics, Epivario, GW Pharmaceuticals, Janssen, Jazz Pharmaceuticals, and Oxeia Biopharmaceuticals. Dr. Paulus is an advisor to Spring Care, Inc., a behavioral health startup, and has received royalties for an article about methamphetamine in UpToDate. Dr. Taylor declares that in the past 3 years he has been a paid consultant for Homewood Health and receives payment for editorial work for UpToDate.

Footnotes

Disclosures

All other authors have no biomedical financial disclosures or potential conflicts of interest to declare.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Unick GJ, Snowden L, Hastings J (2009): Heterogeneity in comorbidity between major depressive disorder and generalized anxiety disorder and its clinical consequences. J Nerv Ment Dis 197: 215–224.

- 2. Roy-Byrne PP, Davidson KW, Kessler RC, Asmundson GJG, Goodwin RD, Kubzansky L, et al. (2008): Anxiety disorders and comorbid medical illness. Gen Hosp Psychiatry 30: 208–225.

- 3. Hyman SE (2007): Can neuroscience be integrated into the DSM-V? Nat Rev Neurosci 8: 725–732.

- 4. Linde K, Kriston L, Rücker G, Jamil S, Schumann I, Meissner K, et al. (2015): Efficacy and acceptability of pharmacological treatments for depressive disorders in primary care: systematic review and network meta-analysis. Ann Fam Med 13: 69–79.

- 5. Loerinc AG, Meuret AE, Twohig MP, Rosenfield D, Bluett EJ, Craske MG (2015): Response rates for CBT for anxiety disorders: Need for standardized criteria. Clin Psychol Rev 42: 72–82.

- 6. Bollen KA (2002): Latent Variables in Psychology and the Social Sciences. Annu Rev Psychol 53: 605–634.

- 7. Prenoveau JM, Zinbarg RE, Craske MG, Mineka S, Griffith JW, Epstein AM (2010): Testing a hierarchical model of anxiety and depression in adolescents: a tri-level model. J Anxiety Disord 24: 334–344.

- 8. Prenoveau JM, Craske MG, Zinbarg RE, Mineka S, Rose RD, Griffith JW (2011): Are anxiety and depression just as stable as personality during late adolescence? Results from a three-year longitudinal latent variable study. J Abnorm Psychol 120: 832–843.

- 9. Monden R, Wardenaar KJ, Stegeman A, Conradi HJ, de Jonge P (2015): Simultaneous Decomposition of Depression Heterogeneity on the Person-, Symptom- and Time-Level: The Use of Three-Mode Principal Component Analysis. PLoS One 10: e0132765.

- 10. Cuthbert BN, Insel TR (2013): Toward the future of psychiatric diagnosis: the seven pillars of RDoC. BMC Med 11: 126.

- 11. Insel T, Cuthbert B, Garvey M, Heinssen R, Pine DS, Quinn K, et al. (2010): Research domain criteria (RDoC): toward a new classification framework for research on mental disorders. Am J Psychiatry 167: 748–751.

- 12. James W (2007): The Principles of Psychology. Cosimo, Inc.

- 13. Chorpita BF, Albano AM, Barlow DH (1998): The structure of negative emotions in a clinical sample of children and adolescents. J Abnorm Psychol 107: 74–85.

- 14. Chorpita BF (2002): The tripartite model and dimensions of anxiety and depression: an examination of structure in a large school sample. J Abnorm Child Psychol 30: 177–190.

- 15. Clark LA, Watson D (1991): Tripartite model of anxiety and depression: psychometric evidence and taxonomic implications. J Abnorm Psychol 100: 316–336.

- 16. Craske MG, Rauch SL, Ursano R, Prenoveau J, Pine DS, Zinbarg RE (2011): What is an anxiety disorder? Focus 9: 369–388.

- 17. Kashdan TB (2007): Social anxiety spectrum and diminished positive experiences: theoretical synthesis and meta-analysis. Clin Psychol Rev 27: 348–365.

- 18. Weinstock LM, Whisman MA (2006): Neuroticism as a common feature of the depressive and anxiety disorders: a test of the revised integrative hierarchical model in a national sample. J Abnorm Psychol 115: 68–74.

- 19. Craske MG, Meuret AE, Ritz T, Treanor M, Dour HJ (2016): Treatment for Anhedonia: A Neuroscience Driven Approach. Depress Anxiety 33: 927–938.

- 20. Craske MG, Meuret AE, Ritz T, Treanor M, Dour H, Rosenfield D (2019): Positive affect treatment for depression and anxiety: A randomized clinical trial for a core feature of anhedonia. J Consult Clin Psychol 87: 457–471.

- 21. Shankman SA, Klein DN, Tenke CE, Bruder GE (2007): Reward sensitivity in depression: a biobehavioral study. J Abnorm Psychol 116: 95–104.

- 22. Eisenberg IW, Bissett PG, Enkavi AZ, Li J, MacKinnon DP, Marsch LA, Poldrack RA (2019): Uncovering the structure of self-regulation through data-driven ontology discovery. Nat Commun 10. doi: 10.1038/s41467-019-10301-1

- 23. Frey R, Pedroni A, Mata R, Rieskamp J, Hertwig R (2017): Risk preference shares the psychometric structure of major psychological traits. Sci Adv 3: e1701381.

- 24. Saunders B, Milyavskaya M, Etz A, Randles D, Inzlicht M (2018): Reported Self-control is not Meaningfully Associated with Inhibition-related Executive Function: A Bayesian Analysis. Collabra: Psychology 4: 39.

- 25. Duckworth AL, Kern ML (2011): A Meta-Analysis of the Convergent Validity of Self-Control Measures. J Res Pers 45: 259–268.

- 26. Cyders MA, Coskunpinar A (2011): Measurement of constructs using self-report and behavioral lab tasks: is there overlap in nomothetic span and construct representation for impulsivity? Clin Psychol Rev 31: 965–982.

- 27. Murphy BA, Lilienfeld SO (2019): Are self-report cognitive empathy ratings valid proxies for cognitive empathy ability? Negligible meta-analytic relations with behavioral task performance. Psychol Assess 31: 1062–1072.

- 28. Dang J, King KM, Inzlicht M (2020): Why Are Self-Report and Behavioral Measures Weakly Correlated? Trends Cogn Sci 24: 267–269.

- 29. Paulus MP, Stein MB, Craske MG, Bookheimer S, Taylor CT, Simmons AN, et al. (2017): Latent variable analysis of positive and negative valence processing focused on symptom and behavioral units of analysis in mood and anxiety disorders. J Affect Disord 216: 17–29.

- 30. Klami A, Virtanen S, Leppäaho E, Kaski S (2015): Group Factor Analysis. IEEE Trans Neural Netw Learn Syst 26: 2136–2147.

- 31. Stratta P, Tempesta D, Bonanni RL, de Cataldo S, Rossi A (2014): Emotional reactivity in bipolar depressed patients. J Clin Psychol 70: 860–865.

- 32. Sloan DM, Strauss ME, Quirk SW, Sajatovic M (1997): Subjective and expressive emotional responses in depression. J Affect Disord 46: 135–141.

- 33. Renna ME, Chin S, Seeley SH, Fresco DM, Heimberg RG, Mennin DS (2018): The Use of the Mirror Tracing Persistence Task as a Measure of Distress Tolerance in Generalized Anxiety Disorder. J Ration Emot Cogn Behav Ther 36: 80–88.

- 34. Strong DR, Lejuez CW, Daughters S, Marinello M, Kahler CW, Brown RA, et al. (2003): The computerized mirror tracing task, version 1. Unpublished manual.

- 35. Gottfried JA, Dolan RJ (2004): Human orbitofrontal cortex mediates extinction learning while accessing conditioned representations of value. Nat Neurosci 7: 1144–1152.

- 36. Molchan SE, Sunderland T, McIntosh AR, Herscovitch P, Schreurs BG (1994): A functional anatomical study of associative learning in humans. Proc Natl Acad Sci U S A 91: 8122–8126.

- 37. Pittig A, Treanor M, LeBeau RT, Craske MG (2018): The role of associative fear and avoidance learning in anxiety disorders: Gaps and directions for future research. Neurosci Biobehav Rev 88: 117–140.

- 38. Knutson B, Westdorp A, Kaiser E, Hommer D (2000): FMRI visualization of brain activity during a monetary incentive delay task. Neuroimage 12: 20–27.

- 39. Knutson B, Heinz A (2015): Probing psychiatric symptoms with the monetary incentive delay task. Biol Psychiatry 77: 418–420.

- 40. Nusslock R, Almeida JR, Forbes EE, Versace A, Frank E, Labarbara EJ, et al. (2012): Waiting to win: elevated striatal and orbitofrontal cortical activity during reward anticipation in euthymic bipolar disorder adults. Bipolar Disord 14: 249–260.

- 41. Milad MR, Pitman RK, Ellis CB, Gold AL, Shin LM, Lasko NB, et al. (2009): Neurobiological Basis of Failure to Recall Extinction Memory in Posttraumatic Stress Disorder. Biol Psychiatry 66: 1075–1082.

- 42. Milad MR, Quirk GJ, Pitman RK, Orr SP, Fischl B, Rauch SL (2007): A Role for the Human Dorsal Anterior Cingulate Cortex in Fear Expression. Biol Psychiatry 62: 1191–1194.

- 43. Knutson B, Fong GW, Adams CM, Varner JL, Hommer D (2001): Dissociation of reward anticipation and outcome with event-related fMRI. Neuroreport 12: 3683–3687.

- 44. Wu CC, Samanez-Larkin GR, Katovich K, Knutson B (2014): Affective traits link to reliable neural markers of incentive anticipation. Neuroimage 84: 279–289.

- 45. Fullana MA, Harrison BJ, Soriano-Mas C, Vervliet B, Cardoner N, Àvila-Parcet A, Radua J (2016): Neural signatures of human fear conditioning: An updated and extended meta-analysis of fMRI studies. Mol Psychiatry 21: 500–508.

- 46. Fullana MA, Albajes-Eizagirre A, Soriano-Mas C, Vervliet B, Cardoner N, Benet O, et al. (2018): Fear extinction in the human brain: A meta-analysis of fMRI studies in healthy participants. Neurosci Biobehav Rev 88: 16–25.

- 47. R Core Team (2013): R: A language and environment for statistical computing. Retrieved from https://repo.bppt.go.id/cran/web/packages/dplR/vignettes/intro-dplR.pdf

- 48. McHugh RK, Daughters SB, Lejuez CW, Murray HW, Hearon BA, Gorka SM, Otto MW (2011): Shared Variance among Self-Report and Behavioral Measures of Distress Intolerance. Cognit Ther Res 35: 266–275.

- 49. Lambourne K, Tomporowski P (2010): The effect of exercise-induced arousal on cognitive task performance: a meta-regression analysis. Brain Res 1341: 12–24.

- 50. May CP, Hasher L, Stoltzfus ER (1993): Optimal Time of Day and the Magnitude of Age Differences in Memory. Psychol Sci 4: 326–330.

- 51. Yoon C, May CP, Hasher L (1998): Aging, circadian arousal patterns, and cognition. Cognition, Aging and Self-Reports. Psychology Press, pp 113–136.

- 52. Enkavi AZ, Eisenberg IW, Bissett PG, Mazza GL, MacKinnon DP, Marsch LA, Poldrack RA (2019): Large-scale analysis of test–retest reliabilities of self-regulation measures. Proc Natl Acad Sci U S A 116: 5472–5477.

- 53. Elliott ML, Knodt AR, Ireland D, Morris ML, Poulton R, Ramrakha S, et al. (2020): What Is the Test-Retest Reliability of Common Task-Functional MRI Measures? New Empirical Evidence and a Meta-Analysis. Psychol Sci 31: 792–806.

- 54. Schmidt LA, Santesso DL, Miskovic V, Mathewson KJ, McCabe RE, Antony MM, Moscovitch DA (2012): Test–retest reliability of regional electroencephalogram (EEG) and cardiovascular measures in social anxiety disorder (SAD). Int J Psychophysiol 84: 65–73.

- 55. Marin M-F, Hammoud MZ, Klumpp H, Simon NM, Milad MR (2020): Multimodal Categorical and Dimensional Approaches to Understanding Threat Conditioning and Its Extinction in Individuals With Anxiety Disorders. JAMA Psychiatry. doi: 10.1001/jamapsychiatry.2019.4833
