Abstract

Background:

Previous simulation studies evaluated either dry lab (DL) or virtual reality (VR) simulation, correlating simulator training with the performance of arthroscopic tasks. However, these studies did not compare simulation training with specific surgical procedures.

Purpose/Hypothesis:

To determine the effectiveness of a shoulder arthroscopy simulator program in improving performance during arthroscopic anterior labral repair. It was hypothesized that both DL and VR simulation would improve procedure performance but that VR simulation would be more effective, as measured by the validated Arthroscopic Surgery Skill Evaluation Tool (ASSET) Global Rating Scale.

Study Design:

Controlled laboratory study.

Methods:

Thirty-eight orthopaedic residents (postgraduate years [PGYs] 1 to 5) at a single institution were enrolled. Each resident completed a pretest shoulder stabilization procedure on a cadaveric model and was then randomized to 1 of 2 groups: VR or DL simulation. Participants then completed a 4-week arthroscopy simulation program followed by a posttest. Sports medicine–trained orthopaedic surgeons graded the completeness of the surgical repair at the time of the procedure, and a single blinded orthopaedic surgeon graded participants’ arthroscopy skills using the ASSET Global Rating Scale. Pretest and posttest procedure step and ASSET grades were compared within each simulator group and each PGY group using paired t tests.

Results:

There was no significant difference between the groups in pretest performance for either procedural step or ASSET scores. Procedural step scores improved when both simulator groups were pooled (P = .0424) but not within either simulation group alone. ASSET scores improved in both the DL (P = .0045) and VR (P = .0003) groups, with no significant difference between the groups.

Conclusion:

A 4-week simulation program can improve arthroscopic skills and performance during a specific surgical procedure. This study provides additional evidence of the benefits of simulator training in orthopaedic surgery for both novice and experienced arthroscopic surgeons. There was no statistically significant difference between the VR and DL models, which did not support the authors’ hypothesis that the VR simulator would be the more effective simulation tool.

Clinical Relevance:

There may be a role for simulator training in the teaching of arthroscopic skills and learning of specific surgical procedures.

Keywords: Arthroscopic Surgery Skill Evaluation Tool (ASSET), shoulder arthroscopy, simulation training

Orthopaedic surgical training has traditionally relied on an apprenticeship model coupled with didactic training. In this model, surgeons learn complex surgical tasks through practice and repetition, primarily on patients.4,9,14,21 This method has been criticized as inefficient, costly, and possibly unsafe for patients because inexperienced surgeons perform the procedures.3,11,14,23 The focus on patient safety and the establishment of resident work-hour restrictions have increased the need for alternative methods of resident education and surgical skills training. This is particularly important in knee and shoulder arthroscopy, which are among the most commonly performed orthopaedic procedures in the United States and account for 4 of the top 6 procedures performed by applicants for part 2 of the American Board of Orthopaedic Surgery’s certification examination.9

As with other surgical skills, the technical skills needed for arthroscopy are acquired through repetition under the direction and supervision of an experienced surgeon, a form of training that can be both inefficient and costly. Surgical arthroscopy simulation may provide valuable repetition for training in arthroscopic triangulation, instrument handling and familiarity, and hand-eye coordination, independent of the human factors inherent in actual surgical cases.4,12,13,21,23 Currently available simulations for arthroscopic training include cadaver operative labs, dry models, animal models, and computerized simulators. As the surgical training curriculum for orthopaedics (particularly for arthroscopy) evolves, evaluating the effectiveness of surgical simulation systems will be necessary to ensure effective training of future orthopaedic surgeons.5,9,27,30

While studies have validated the use of computerized surgical simulators and dry models by showing improvement in cadaveric procedures and even in diagnostic arthroscopic procedures in the operating room, we know of no previous studies comparing dry lab (DL) arthroscopy with virtual reality (VR) simulation.4,6,12,13,20,21,23 Therefore, the purpose of the current study was to evaluate the effectiveness of an arthroscopic DL versus a VR simulator in improving arthroscopic skills as measured by the Arthroscopic Surgery Skill Evaluation Tool (ASSET) scoring system. In addition, we evaluated the ability of these 2 training modalities to improve overall surgical performance during a labral repair, as previous simulation studies have not compared simulation training with specific surgical procedures.2,12,16,17,19,24,29 We hypothesized that both the arthroscopic DL and the VR simulator would lead to statistically significant improvements in overall arthroscopic performance, as reflected in ASSET scores, but that the VR simulator would show greater overall improvement. We further hypothesized that simulator training would improve the trainee’s ability to perform an arthroscopic Bankart repair in the cadaver lab.

Methods

This study was approved by our hospital’s institutional review board. All residents at a single orthopaedic residency program were offered enrollment over 2 years so as to include 2 consecutive intern classes. Residents were eligible if they were in training, or entering training, during the study period (July 1, 2015, to December 2016) and would remain at the enrolling location throughout the data-collection period. After enrollment, each participant received didactic training on the steps of an anterior shoulder stabilization procedure from a board-certified, sports medicine fellowship–trained orthopaedic surgeon (C.J.R.). The training materials were provided for review 2 weeks before the first study-related test. Each participant was also oriented to the 2 simulators used in the study: the VR simulator (VirtaMed ArthroS), which was rented for US$12,000 for the duration of the study and purchased after its conclusion, and the DL model (Sawbones), including the models, monitors, and implants, which was purchased by our institution.

Participants then completed a pretest in which they performed an arthroscopic anterior shoulder stabilization procedure on a cadaveric upper extremity hemispecimen. The mobile bioskills cadaver lab (The Surgical Training Institute) accommodated 10 specimens at a time (Figure 1). Before participants entered the lab, staff surgeons established anterior, posterior, and anterosuperior glenohumeral portals and created an anterior labral tear in each specimen using a tissue elevator. After this pretest cadaveric procedure, participants within each postgraduate year (PGY) group were separately randomized to either DL or VR simulator practice.

Figure 1.

Station setup of the mobile cadaver lab.

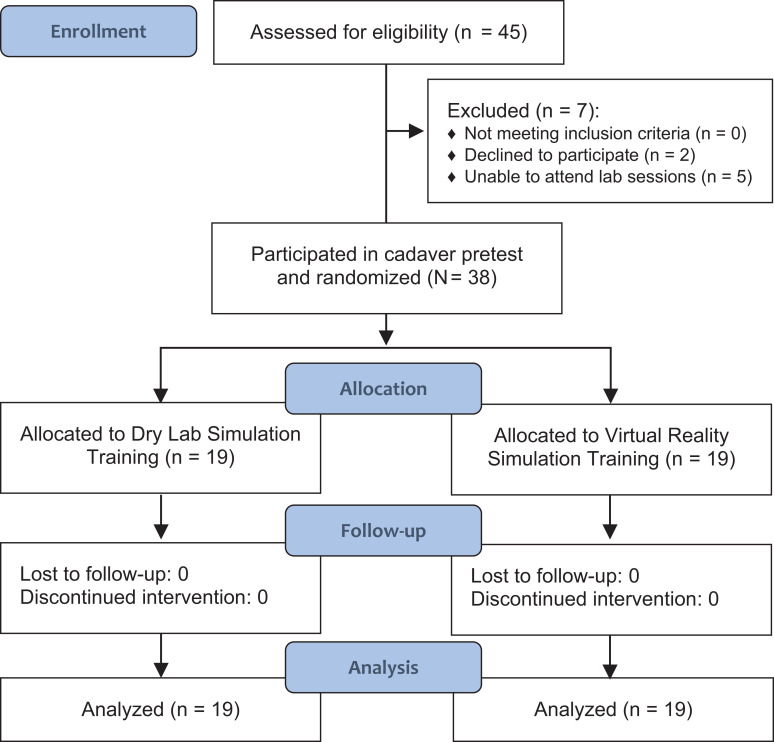

A total of 45 individuals were screened for the study. Two declined to participate, and 5 were unable to attend the posttest or laboratory sessions, leaving 38 enrolled participants. Participants were randomized evenly between the DL and VR groups (n = 19 each) using block randomization stratified by year of training; each participant drew a sealed envelope containing the group assignment. Randomization was administered by one of the associate study investigators (J.C.R.). All enrolled participants completed the study, and none were lost to follow-up. Figure 2 summarizes the enrollment process.
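The allocation scheme described above (permuted blocks within PGY strata, drawn from sealed envelopes) can be illustrated with a short Python sketch. The block size of 2, the function name `stratified_block_randomize`, and the participant labels are assumptions for illustration only; the study does not report its block size or how the envelopes were prepared.

```python
import random

# Two study arms, as described in the text.
ARMS = ("DL", "VR")


def stratified_block_randomize(strata, block_size=2, seed=None):
    """Assign participants to arms with permuted blocks inside each stratum.

    strata: dict mapping a stratum label (e.g., "PGY1") to a list of participant IDs.
    block_size: ASSUMED value; each block holds an equal number of DL and VR slots.
    Returns a dict mapping participant ID -> arm.
    """
    if block_size <= 0 or block_size % len(ARMS) != 0:
        raise ValueError("block_size must be a positive multiple of the number of arms")
    rng = random.Random(seed)
    assignment = {}
    for participants in strata.values():
        sequence = []
        # Build enough shuffled blocks (the "envelopes") to cover the stratum.
        while len(sequence) < len(participants):
            block = list(ARMS) * (block_size // len(ARMS))
            rng.shuffle(block)
            sequence.extend(block)
        # zip stops at the last participant, so an odd-sized stratum
        # leaves its final block partly unused.
        for pid, arm in zip(participants, sequence):
            assignment[pid] = arm
    return assignment


if __name__ == "__main__":
    # Stratum sizes from the Results; participant IDs are hypothetical.
    counts = {"PGY1": 14, "PGY2": 5, "PGY3": 5, "PGY4": 5, "PGY5": 9}
    strata = {pgy: [f"{pgy}-{i}" for i in range(n)] for pgy, n in counts.items()}
    result = stratified_block_randomize(strata, seed=2015)
    print(sum(arm == "VR" for arm in result.values()), "of", len(result), "assigned to VR")
```

Within each stratum the arms differ by at most 1 participant. Because 4 of the strata (PGY2 to PGY5) have odd sizes, the overall split depends on how their final partial blocks fall, so this sketch alone does not guarantee the even 19/19 allocation reported above.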

Figure 2.

Flowchart of the study enrollment process.

Before the first simulation session, each study group received a tutorial on the simulator to which it had been randomized (the VR simulator or the Sawbones model), conducted by a sports medicine–trained orthopaedic surgeon (C.J.R.). The VirtaMed simulator provided preloaded modules with automated postmodule feedback. The DL participants were instructed to complete a series of predetermined steps, beginning with a diagnostic arthroscopy, then establishing working and accessory portals, and finally performing a labral repair. Both groups were also instructed in arthroscopic knot-tying. A sports medicine–trained orthopaedic surgeon (C.J.R. or T.C.B.) was present for the initial practice session and for 1 session per week thereafter; during the remaining sessions, the surgeons were available for questions or additional instruction as needed.

Participants were then instructed to practice the anterior shoulder stabilization procedure (DL group) or the simulator modules (VR group) on their assigned simulator at least 3 times per week for 4 weeks, in sessions of 30 minutes. After the practice period, the cadaver lab was repeated, and each participant performed a posttest cadaveric procedure. Both the pretest and posttest procedures were video recorded to capture the participant’s hand movements and the arthroscopic camera view displayed on the monitor.
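Because participants were asked to practice at least 3 times per week, the protocol implies a minimum practice dose per participant:

```latex
\begin{aligned}
\text{minimum sessions} &= 3\,\frac{\text{sessions}}{\text{week}} \times 4\ \text{weeks} = 12\ \text{sessions}\\
\text{minimum practice time} &= 12\ \text{sessions} \times 30\ \frac{\text{min}}{\text{session}} = 360\ \text{min} = 6\ \text{h}
\end{aligned}
```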

Outcome Measures

Two grading scales were applied to each participant’s pretest and posttest performance. First, a list of the sequential steps required to complete the anterior shoulder stabilization was used to gauge each participant’s ability to complete the specific procedure. Each step was graded as complete or incomplete, with 1 point assigned per completed step, for a maximum of 32 points. Single-step items included identification of the labral defect, capsulolabral mobilization, glenoid preparation, and final repair evaluation. Because 3 suture anchors were required to complete the procedure, the following steps were graded in triplicate: anchor placement, suture passer placement, suture shuttling, and knot-tying. Procedure step grading was performed during the pretest and posttest by a sports medicine–trained staff orthopaedic surgeon (C.J.R. or T.C.B.) and was therefore not blinded.
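The binary checklist scoring can be sketched in Python. Only the items named in the text are encoded here (4 single-step items plus 4 steps repeated for each of 3 anchors, or 16 points); the remaining items of the 32-point checklist are not enumerated in the text, so this partial list and the helper names `checklist_items` and `procedure_step_score` are hypothetical.

```python
# Items named in the text; the full 32-point checklist has additional
# single-step items that the text does not enumerate (hypothetical partial list).
SINGLE_STEPS = [
    "identification of labral defect",
    "capsulolabral mobilization",
    "glenoid preparation",
    "final repair evaluation",
]
# Steps graded in triplicate, once per suture anchor.
PER_ANCHOR_STEPS = [
    "anchor placement",
    "suture passer placement",
    "suture shuttling",
    "knot-tying",
]
N_ANCHORS = 3


def checklist_items():
    """Return the partial checklist as a flat list of item names."""
    items = list(SINGLE_STEPS)
    for anchor in range(1, N_ANCHORS + 1):
        items.extend(f"{step} (anchor {anchor})" for step in PER_ANCHOR_STEPS)
    return items


def procedure_step_score(completed):
    """Award 1 point per completed item; incomplete items score 0."""
    items = checklist_items()
    unknown = set(completed) - set(items)
    if unknown:
        raise ValueError(f"unknown checklist items: {sorted(unknown)}")
    return sum(1 for item in items if item in completed)


if __name__ == "__main__":
    # Example: all single steps done, but only the first anchor completed.
    done = set(SINGLE_STEPS) | {f"{s} (anchor 1)" for s in PER_ANCHOR_STEPS}
    print(procedure_step_score(done), "of", len(checklist_items()), "listed items")
```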

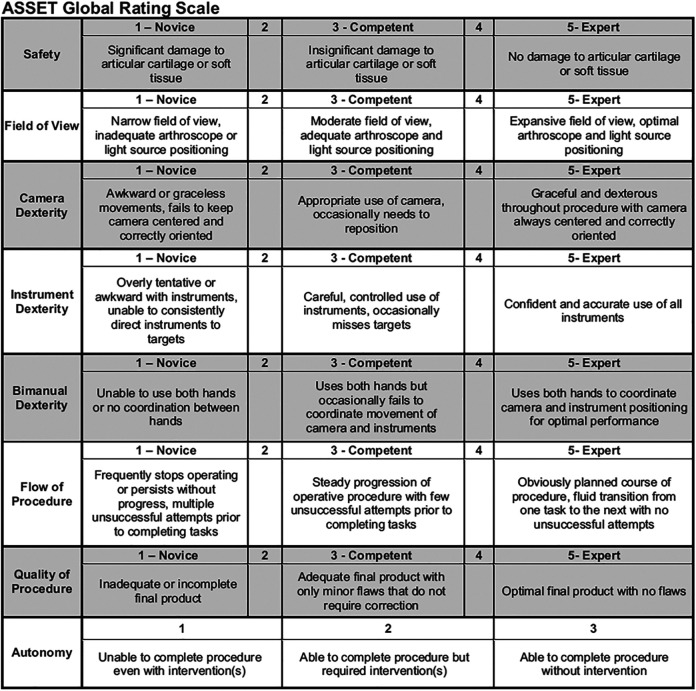

The ASSET Global Rating Scale was used to gauge the participants’ arthroscopy skills (Figure 3). Safety toward the articular cartilage, field of view, camera dexterity, instrument dexterity, bimanual dexterity, flow of procedure, and quality of procedure were each graded from Novice to Expert on a 5-point Likert scale, and the participant’s autonomy was graded on a 3-point Likert scale, for a maximum of 38 points.18 A single blinded staff surgeon (J.C.R.) performed all ASSET grading by reviewing the video recordings of the cadaveric procedures.
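The ASSET total is a simple sum of the domain ratings. This sketch assumes each Likert scale starts at 1 (so totals range from 8 to 38); the domain keys and the `asset_total` function are hypothetical names, not part of any published ASSET software.

```python
# The 7 ASSET domains rated on a 5-point scale, as listed in the text.
ASSET_DOMAINS = (
    "safety",
    "field_of_view",
    "camera_dexterity",
    "instrument_dexterity",
    "bimanual_dexterity",
    "flow_of_procedure",
    "quality_of_procedure",
)


def asset_total(ratings, autonomy):
    """Sum the 7 domain ratings (assumed 1-5) and autonomy (assumed 1-3); maximum 38."""
    missing = set(ASSET_DOMAINS) - set(ratings)
    if missing:
        raise ValueError(f"missing domain ratings: {sorted(missing)}")
    for domain in ASSET_DOMAINS:
        if not 1 <= ratings[domain] <= 5:
            raise ValueError(f"{domain} rating must be between 1 and 5")
    if not 1 <= autonomy <= 3:
        raise ValueError("autonomy rating must be between 1 and 3")
    return sum(ratings[domain] for domain in ASSET_DOMAINS) + autonomy
```

For example, a rating of 5 in every domain with full autonomy (3) gives 7 × 5 + 3 = 38, the maximum stated above.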

Figure 3.

ASSET (Arthroscopic Surgery Skill Evaluation Tool) Global Rating Scale.18

Statistical Analysis

Baseline characteristics of the VR and DL groups were compared using the Fisher exact test for categorical self-reported prior arthroscopy experience and the Student t test for mean pretest scores. Linear regression was used to assess whether self-reported prior arthroscopy experience was associated with higher pretest scores. Mean pretest and posttest procedure step and ASSET grades were compared within each simulator group and each PGY group using paired t tests, and the change in scores was tested for association with simulator group using linear regression. Participants with incomplete data were excluded from the data set. Analysis was performed using Stata 14.2/IC (StataCorp).
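The two core comparisons can be illustrated with a dependency-free Python sketch: a paired t statistic on posttest minus pretest differences, and the ordinary least squares coefficient for score change regressed on a 0/1 simulator indicator (VR = 1), which equals the difference in mean change between the groups. The function names are hypothetical; the study’s analysis was run in Stata, and in Python `scipy.stats.ttest_rel` and `scipy.stats.linregress` would also supply P values.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Paired t statistic and degrees of freedom for posttest - pretest differences."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


def group_coefficient(changes, is_vr):
    """OLS slope for score change regressed on a 0/1 indicator (VR = 1, DL = 0).

    With an intercept in the model, this slope equals
    mean(change | VR) - mean(change | DL).
    """
    xs = [1.0 if flag else 0.0 for flag in is_vr]
    x_bar, y_bar = mean(xs), mean(changes)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, changes))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("both groups must be represented")
    return sxy / sxx
```

The P value for the paired test is then read from a t distribution with the returned degrees of freedom (n − 1 pairs).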

Results

The 38 study participants consisted of 9 PGY5, 5 PGY4, 5 PGY3, 5 PGY2, and 14 PGY1 residents. As expected, self-reported overall arthroscopy and shoulder arthroscopy experience increased from PGY1 to PGY5. Table 1 shows the self-reported experience of the participants by PGY. There were no differences between the VR and DL simulator groups in baseline arthroscopy experience (P = .992), shoulder arthroscopy experience (P = .630), or prior simulator experience (P = .783).

Table 1.

Self-Reported Arthroscopy Experience by PGY (N = 38 Participants)ᵃ

| | PGY1 (n = 14) | PGY2 (n = 5) | PGY3 (n = 5) | PGY4 (n = 5) | PGY5 (n = 9) |
|---|---|---|---|---|---|
| Arthroscopy casesᵇ | | | | | |
| >100 | 0 | 0 | 0 | 0 | 4 (44) |
| 50-99 | 0 | 0 | 0 | 1 (20) | 5 (56) |
| 20-49 | 0 | 1 (20) | 3 (60) | 4 (80) | 0 |
| 1-19 | 11 (79) | 2 (40) | 2 (40) | 0 | 0 |
| No cases | 3 (21) | 2 (40) | 0 | 0 | 0 |
| Shoulder arthroscopy casesᶜ | | | | | |
| >20 | 0 | 0 | 0 | 0 | 1 (11) |
| 10-19 | 0 | 0 | 0 | 0 | 5 (56) |
| 1-9 | 6 (43) | 2 (40) | 4 (80) | 5 (100) | 3 (33) |
| No cases | 8 (57) | 3 (60) | 1 (20) | 0 | 0 |
| Simulator casesᶜ | | | | | |
| >10 | 1 (7) | 0 | 0 | 1 (20) | 3 (33) |
| 1-9 | 1 (7) | 2 (40) | 3 (60) | 4 (80) | 5 (56) |
| No cases | 12 (86) | 3 (60) | 2 (40) | 0 | 1 (11) |

ᵃData are reported as n (%). PGY, postgraduate year.

ᵇStatistically significant difference between PGY groups, P < .001.

ᶜStatistically significant difference between PGY groups, P < .01.

Table 2 shows the paired t test results comparing posttest with pretest procedure step scores. Mean baseline (pretest) procedure step scores did not differ between the VR and DL simulator groups (P = .8153), and self-reported prior arthroscopy experience was not associated with pretest procedure step scores (P = .4209). Procedure step scores improved when both study groups were pooled (P = .0424) but not within individual PGY groups or either simulator group.

Table 2.

Comparison of Procedure Step Scores Before and After Training Period by Simulator Training and PGY Groupᵃ

| | Pretest Score | Posttest Score | P Value |
|---|---|---|---|
| Simulator group | | | |
| DL (n = 19) | 20.5 ± 8.6 (16.4-24.7) | 23.5 ± 7.1 (20.0-26.9) | .1975 |
| VR (n = 19) | 21.2 ± 8.0 (17.3-25.0) | 25.1 ± 5.6 (22.4-27.7) | .1296 |
| Year group | | | |
| PGY1 (n = 14) | 17.6 ± 9.9 (11.9-23.3) | 22.6 ± 7.7 (18.2-27.1) | .1590 |
| PGY2 (n = 5) | 17.2 ± 8.2 (6.9-27.5) | 22.2 ± 2.4 (19.2-25.2) | .2481 |
| PGY3 (n = 5) | 21.2 ± 6.3 (13.4-29.0) | 21.6 ± 7.4 (12.4-30.8) | .9497 |
| PGY4 (n = 5) | 26.8 ± 2.3 (24.0-29.6) | 28.8 ± 2.0 (26.2-31.3) | .2826 |
| PGY5 (n = 9) | 24.3 ± 5.6 (20.0-28.6) | 26.9 ± 4.9 (23.1-30.7) | .3773 |
| All residents (N = 38) | 20.8 ± 8.2 (18.2-23.5) | 24.3 ± 6.4 (22.2-26.4) | **.0424** |

ᵃData are reported as mean ± SD (95% CI). Bolded P values indicate a statistically significant difference between pretest and posttest scores (P < .05). DL, dry lab; PGY, postgraduate year; VR, virtual reality.
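The 95% CIs in Table 2 follow from the reported mean, SD, and group size as mean ± t × SD/√n. A minimal sketch, with the critical value t(0.975, 18) ≈ 2.101 taken from standard tables rather than computed; the function name `ci95_from_summary` is hypothetical.

```python
import math

# Two-sided 95% critical value of Student's t with 18 df (n = 19), from standard tables.
T_975_DF18 = 2.101


def ci95_from_summary(mean_value, sd, n, t_crit):
    """Return (lower, upper) for mean ± t_crit * SD / sqrt(n)."""
    if n < 2 or sd < 0:
        raise ValueError("need n >= 2 and a non-negative SD")
    half_width = t_crit * sd / math.sqrt(n)
    return mean_value - half_width, mean_value + half_width


if __name__ == "__main__":
    # DL pretest row of Table 2: 20.5 ± 8.6, n = 19.
    low, high = ci95_from_summary(20.5, 8.6, 19, T_975_DF18)
    print(f"DL pretest 95% CI: {low:.1f}-{high:.1f}")
```

For the DL pretest row this gives 16.4 to 24.6, within 0.1 of the tabulated 16.4-24.7; the small gap presumably reflects rounding of the reported mean and SD.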

Baseline (pretest) ASSET means did not differ between the VR and DL groups (P = .9782). Self-reported experience of >50 arthroscopy procedures was associated with 13.4 higher log odds of an increased baseline ASSET score (P = .011). ASSET grades improved across all participants after the training period (P < .0001) and within the PGY1 and PGY5 groups. Participants in both the VR and DL simulator groups improved their ASSET scores; however, the magnitude of change after training did not differ between simulators (P ≥ .999). Table 3 shows the paired t test results comparing posttest with pretest ASSET scores.

Table 3.

Comparison of ASSET Scores Before and After Training Period by Simulator Training and PGY Groupᵃ

| | Pretest Score | Posttest Score | P Value |
|---|---|---|---|
| Simulator group | | | |
| DL (n = 19) | 18.0 ± 6.5 (14.9-21.1) | 23.1 ± 6.4 (19.9-26.2) | **.0045** |
| VR (n = 19) | 18.1 ± 5.3 (15.5-20.6) | 23.1 ± 5.7 (20.4-25.8) | **.0003** |
| Year group | | | |
| PGY1 (n = 14) | 13.7 ± 4.3 (11.2-16.2) | 19.6 ± 5.4 (16.5-22.7) | **.0062** |
| PGY2 (n = 5) | 16.0 ± 4.4 (10.5-21.5) | 19.8 ± 3.5 (15.5-24.1) | .2537 |
| PGY3 (n = 5) | 18.2 ± 1.9 (15.8-20.6) | 22.0 ± 4.7 (16.1-27.9) | .1592 |
| PGY4 (n = 5) | 22.2 ± 6.2 (14.5-29.9) | 26.2 ± 4.9 (20.2-32.2) | .0716 |
| PGY5 (n = 9) | 23.4 ± 4.2 (20.2-26.6) | 29.1 ± 3.9 (26.1-32.1) | **.0244** |
| All residents (N = 38) | 18.0 ± 5.8 (16.1-19.9) | 23.1 ± 6.0 (21.1-25.0) | **<.0001** |

ᵃData are reported as mean ± SD (95% CI). Bolded P values indicate a statistically significant difference between pretest and posttest scores (P < .05). DL, dry lab; PGY, postgraduate year; VR, virtual reality.

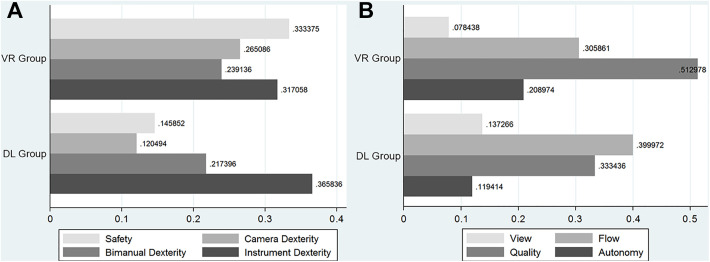

When individual ASSET components were compared, no differences were found between performance after VR versus DL simulation. Figure 4 shows the separate ASSET component means normalized to the pretest means; each component improved after both VR and DL training.

Figure 4.

Grading improvements by study group normalized to mean pretest score for the separate Arthroscopic Surgery Skill Evaluation Tool components for (A) dexterity and (B) quality. There were no statistically significant differences between the simulator groups. DL, dry lab; VR, virtual reality.

Discussion

The purpose of this study was to evaluate the effectiveness of an arthroscopic dry lab versus a virtual arthroscopic simulator in improving both arthroscopic skills and the ability to perform a specific arthroscopic procedure (ie, a labral repair). Previous studies have demonstrated the validity and interobserver reliability of the ASSET for simulation evaluation in both shoulders and knees.18,22 Our data align with those of previous studies showing improvement in ASSET scores after virtual reality simulator training.7,18,22,28 We also demonstrated improvement in ASSET scores with dry model simulators as the primary learning modality. There was no statistically significant difference between the VR and DL groups in overall ASSET score.

Our data showed that the novice (PGY1) and most experienced (PGY5) residents had the largest mean ASSET improvements after simulation training and were the only year groups whose improvement reached statistical significance. This suggests that simulation training may be a useful adjunct to standard orthopaedic training methods regardless of experience level, as different aspects of simulation could meet the evolving needs of progressively more experienced arthroscopic surgeons. While most simulator studies to date have focused on the novice arthroscopic surgeon, senior-level residents may still benefit from simulator training as they practice more technical skills or repeat procedure-specific steps. We attribute the improvements in PGY5 resident performance to the benefit of repetition in executing the procedure steps, and the improvements in PGY1 resident performance to the benefit of repetition in basic skill acquisition.
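The ranking of year groups by improvement can be checked directly from the mean ASSET scores in Table 3. This sketch only subtracts the reported group means; it does not test whether the changes differ between year groups, and the names `ASSET_MEANS` and `mean_changes` are illustrative.

```python
# Pretest and posttest mean ASSET scores by PGY group, copied from Table 3.
ASSET_MEANS = {
    "PGY1": (13.7, 19.6),
    "PGY2": (16.0, 19.8),
    "PGY3": (18.2, 22.0),
    "PGY4": (22.2, 26.2),
    "PGY5": (23.4, 29.1),
}


def mean_changes(means):
    """Posttest minus pretest mean for each group, rounded to the table's precision."""
    return {group: round(post - pre, 1) for group, (pre, post) in means.items()}


if __name__ == "__main__":
    changes = mean_changes(ASSET_MEANS)
    for group in sorted(changes, key=changes.get, reverse=True):
        print(f"{group}: +{changes[group]:.1f}")
```

The mean changes are 5.9 (PGY1) and 5.7 (PGY5) points, versus 3.8 to 4.0 points in the PGY2 to PGY4 groups.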

Previous studies have shown that both arthroscopic dry labs and virtual simulators produce subjective and objective improvement in arthroscopic skills, as well as in diagnostic arthroscopy in cadaveric models.4,10,12,15,21,25,26 To the best of our knowledge, this is the first study to compare dry labs with virtual simulators for resident and surgeon education in shoulder arthroscopy. Additionally, we are aware of no current literature linking virtual surgical simulators or dry labs to the performance of a specific surgical repair procedure.

Trends in the individual ASSET components further suggest that the 2 simulation modalities may confer different benefits. Score changes from baseline for safety, camera dexterity, and quality tended to be higher for residents who trained with the virtual reality simulator, whereas changes for instrument dexterity and flow tended to be higher for residents who trained with the dry lab simulator. Although these differences were not statistically significant, they could indicate a differential benefit of the training modalities. For example, the haptic feedback provided by a virtual simulator may help trainees acquire the skills needed to maneuver instruments safely in a joint space. Conversely, the ability to handle actual instruments, including implant instrumentation, is a benefit of dry lab simulators that may explain the trends in the instrument dexterity and procedural flow ASSET scores. However, the small sample size leaves the study vulnerable to type 2 error, and we were unable to detect statistically significant differences in these ASSET components.

Our data support our hypothesis that simulator training would improve the use of arthroscopic equipment and the specific technical skills, as measured by ASSET scoring, required to perform a common surgical procedure such as a Bankart labral repair. This agrees with the work of Angelo et al,1 who demonstrated that a proficiency-based progression program combined with simulator training improved surgical performance on cadaveric models with Bankart lesions. This is an important distinction because the ultimate goal of any simulator model is to improve the surgeon’s ability to perform specific surgical procedures and arthroscopic tasks. It remains unclear whether the skills and improvement gained from dry lab or virtual reality simulation transfer to in vivo surgery. Prior studies have shown that these skills transfer to improved in vivo performance of diagnostic arthroscopy but not of other surgical tasks.1,7,8,15 In addition, Dunn et al7 found that the improvement did not persist 12 months after simulation training. Further studies will be required to determine how much maintenance training is needed to keep surgeons performing at a higher level, both in diagnostic arthroscopy and in specific arthroscopic tasks beyond diagnostic steps. Future studies should also assess residents in the operating room on specific surgical procedures practiced with dry lab or virtual simulation, using the ASSET or other objective, competency-based grading systems, to determine when a resident should be allowed to perform these procedures under attending surgeon supervision.

Several studies have examined how virtual and dry lab simulation affects resident performance on the simulated procedures, but most have not specified a standardized schedule for how often and for how long the simulators should be used. In contrast, all participants in our study were instructed to complete three 30-minute sessions per week. Initial training was supervised by a sports medicine fellowship–trained orthopaedic surgeon; thereafter, VR participants worked through the simulator’s preloaded modules with automated postmodule feedback, DL participants completed a predetermined sequence of steps ending in a labral repair, both groups practiced arthroscopic knot-tying, and an attending surgeon was present for 1 session per week and available for questions or additional instruction during the others. This design was intended to mimic a theoretical standard curriculum that incorporates arthroscopic training into a weekly schedule. Further studies are needed to determine the optimal timing and duration of simulation training during residency, as well as how long the improvement in arthroscopic skill persists after simulation training stops. Based on our findings, residents at every level could benefit from additional virtual reality and/or dry lab training, both to learn basic skills and to prepare for cases later in their surgical education.

Finally, cost is a major contributing factor in all aspects of medical training and surgical care. We did not specifically address cost, because it is difficult to estimate the longevity of the dry lab models and the overall cost of periodic software updates for virtual reality simulators. Furthermore, many virtual simulators are on the market, and our results may not generalize to other virtual reality models. A direct cost comparison between the 2 modalities is therefore technically challenging. The virtual simulator is essentially a 1-time cost in the low 6 figures, with periodic software updates thereafter. The dry lab is much less expensive, requiring a $2000 to $3000 investment in cameras, monitors, and scope equipment, but it has ongoing costs for replacement shoulder models (both bone and soft tissue components) and for replacement implants, cameras, and scopes as needed.

This study has limitations. A post hoc power analysis showed that, apart from the outcomes that reached statistical significance (P < .05), namely the overall ASSET score (pooled across both groups) and the pooled procedure step score, more than 150 participants would be needed to achieve adequate power for the remaining outcomes. A sample of this size would be difficult to obtain in most residency programs because of class size; multicenter collaboration with other residency programs could increase the sample size in future studies. Because the study was underpowered, we could not determine whether certain individuals would benefit more than others based on prior skill level or year of training. Furthermore, the ASSET was not applied during any of the practice sessions to track improvement over time on the simulators; doing so might have provided feedback for individualized training and could be an area for further study. Additionally, we classified residents by year group rather than by objective prior arthroscopic experience for the comparisons.
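The text does not report the effect sizes, α, or power behind the estimate of more than 150 participants. For orientation only, the standard normal-approximation formula for a two-sided comparison of two means, n per group = 2(z₁₋α/₂ + z₁₋β)²/d², where d is the standardized difference, can be sketched as follows. The function name and the defaults α = .05 and power = 0.80 are assumptions, and this is not a reproduction of the study’s calculation.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Normal-approximation sample size per group for a two-sided comparison
    of two means: n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2,
    where d = (difference in means) / (common SD)."""
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    if not (0 < alpha < 1 and 0 < power < 1):
        raise ValueError("alpha and power must lie in (0, 1)")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


if __name__ == "__main__":
    for d in (1.0, 0.5, 0.25):
        print(f"d = {d}: {n_per_group(d)} per group")
```

Under these assumptions, d = 0.5 requires 63 participants per group; because n scales with 1/d², halving d to 0.25 roughly quadruples the requirement (252 per group).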

Objective measures such as the Accreditation Council for Graduate Medical Education (ACGME) case log system may be a better means of stratifying experience. However, this method has its own drawbacks: the trainee’s level of involvement in each case is unknown, so relying on ACGME case logs alone may inflate the perceived level of arthroscopic experience.

Conclusion

Simulator training, both with dry lab and virtual reality simulators, led to improvement in arthroscopic performance on simulated Bankart repairs in cadaveric models. This study found statistically significant improvement in both novice and more experienced arthroscopic surgeons after simulator training. While there is no clear benefit of one type of simulator versus another, each of the simulators does offer a different experience, which may influence different aspects of learning necessary skills. Further studies are needed to determine whether there is a difference between the surgical skill sets learned from each model type. Likewise, future studies will also need to determine if this improvement translates to in vivo situations.

Footnotes

Final revision submitted September 28, 2020; accepted November 23, 2020.

One or more of the authors has declared the following potential conflict of interest or source of funding: This study was funded by the Telemedicine and Advanced Technology Research Center (award No. D14-MSVP-I-14-J1-786). A.E.J. has received educational payments from Arthrex/Medinc, consulting fees from Pacira Pharmaceuticals and Sanofi-Aventis, and hospitality payments from DePuy. T.C.B. has received consulting fees from DePuy/Medical Device Business Systems and Fx Shoulder USA and hospitality payments from Wright Medical. C.J.R. has received educational payments from Arthrex and hospitality payments from DePuy, Medinc, Musculoskeletal Transplant Foundation, and Smith & Nephew. AOSSM checks author disclosures against the Open Payments Database (OPD). AOSSM has not conducted an independent investigation on the OPD and disclaims any liability or responsibility relating thereto.

Ethical approval for this study was obtained from the Brooke Army Medical Center institutional review board.

References

- 1. Angelo RL, Ryu RKN, Pedowitz RA, et al. A proficiency-based progression training curriculum coupled with a model simulator results in the acquisition of a superior arthroscopic Bankart skill set. Arthroscopy. 2015;31(10):1854–1871.

- 2. Belmont PJ Jr, Goodman GP, Waterman B, et al. Disease and nonbattle injuries sustained by a U.S. Army Brigade Combat Team during Operation Iraqi Freedom. Mil Med. 2010;175(7):469–476.

- 3. Bridges M, Diamond DL. The financial impact of teaching surgical residents in the operating room. Am J Surg. 1999;177(1):28–32.

- 4. Butler A, Olson T, Koehler R, Nicandri G. Do the skills acquired by novice surgeons using anatomic dry models transfer effectively to the task of diagnostic knee arthroscopy performed on cadaveric specimens? J Bone Joint Surg Am. 2013;95(3):e15(1–8).

- 5. Cannon WD, Eckhoff DG, Garrett WE Jr, Hunter RE, Sweeney HJ. Report of a group developing a virtual reality simulator for arthroscopic surgery of the knee joint. Clin Orthop Relat Res. 2006;442:21–29.

- 6. Ceponis PJM, Chan D, Boorman RS, Hutchison C, Mohtadi NGH. A randomized pilot validation of educational measures in teaching shoulder arthroscopy to surgical residents. Can J Surg. 2007;50(5):387–393.

- 7. Dunn JC, Belmont PJ, Lanzi J, et al. Arthroscopic shoulder surgical simulation training curriculum: transfer reliability and maintenance of skill over time. J Surg Educ. 2015;72(6):1118–1123.

- 8. Frank RM, Wang KC, Davey A, et al. Utility of modern arthroscopic simulator training models: a meta-analysis and updated systematic review. Arthroscopy. 2018;34(5):1650–1677.

- 9. Garrett WE Jr, Swiontkowski MF, Weinstein JN, et al. American Board of Orthopaedic Surgery Practice of the Orthopedic Surgeon: Part-II, certification examination case mix. J Bone Joint Surg Am. 2006;88(3):660–667.

- 10. Gomoll AH, O’Toole RV, Czarnecki J, Warner JJP. Surgical experience correlates with performance on a virtual reality simulator for shoulder arthroscopy. Am J Sports Med. 2007;35(6):883–888.

- 11. Gomoll AH, Pappas G, Forsythe B, Warner JJP. Individual skill progression on a virtual reality simulator for shoulder arthroscopy: a 3-year follow-up study. Am J Sports Med. 2008;36(6):1139–1142.

- 12. Henn RF III, Shah N, Warner JJP, Gomoll AH. Shoulder arthroscopy simulator training improves shoulder arthroscopy performance in a cadaveric model. Arthroscopy. 2013;29(6):982–985.

- 13. Howells NR, Gill HS, Carr AJ, Price AJ, Rees JL. Transferring simulated arthroscopic skills to the operating theatre: a randomised blinded study. J Bone Joint Surg Br. 2008;90(4):494–499.

- 14. Hui Y, Safir O, Dubrowski A, Carnahan H. What skills should simulation training in arthroscopy teach residents? A focus on resident input. Int J Comput Assist Radiol Surg. 2013;8(6):945–953.

- 15. Insel A, Carofino B, Leger R, Arciero R, Mazzocca AD. The development of an objective model to assess arthroscopic performance. J Bone Joint Surg Am. 2009;91(9):2287–2295.

- 16. Jones BH, Canham-Chervak M, Canada S, Mitchener TA, Moore S. Medical surveillance of injuries in the U.S. military: descriptive epidemiology and recommendations for improvement. Am J Prev Med. 2010;38(1)(suppl):S42–S60.

- 17. Kairys JC, McGuire K, Crawford AG, Yeo CJ. Cumulative operative experience is decreasing during general surgery residency: a worrisome trend for surgical trainees? J Am Coll Surg. 2008;206(5):804–813.

- 18. Koehler RJ, Amsdell S, Arendt EA, et al. The Arthroscopic Surgical Skill Evaluation Tool (ASSET). Am J Sports Med. 2013;41(6):1229–1237.

- 19. Mabrey JD, Gillogly SD, Kasser JR, et al. Virtual reality simulation of arthroscopy of the knee. Arthroscopy. 2002;18(6):e28.

- 20. Martin KD, Belmont PJ, Schoenfeld AJ, et al. Arthroscopic basic task performance in shoulder simulator model correlates with similar task performance in cadavers. J Bone Joint Surg Am. 2011;93(21):e1271–e1275.

- 21. Michelson JD. Simulation in orthopedic education: an overview of theory and practice. J Bone Joint Surg Am. 2006;88(6):1405–1411.

- 22. Middleton RM, Baldwin MJ, Akhtar K, Alvand A, Rees JL. Which global rating scale? A comparison of the ASSET, BAKSSS, and IGARS for the assessment of simulated arthroscopic skills. J Bone Joint Surg Am. 2016;98(1):75–81.

- 23. Modi CS, Morris G, Mukherjee R. Computer-simulation training for knee and shoulder arthroscopic surgery. Arthroscopy. 2010;26(6):832–840.

- 24. Owens BD, Harrast JJ, Hurwitz SR, Thompson TL, Wolf JM. Surgical trends in Bankart repair: an analysis of data from the American Board of Orthopaedic Surgery certification examination. Am J Sports Med. 2011;39(9):1865–1869.

- 25. Pedowitz RA, Esch J, Snyder S. Evaluation of a virtual reality simulator for arthroscopy skills development. Arthroscopy. 2002;18(6):e29.

- 26. Srivastava S, Youngblood PL, Rawn C, et al. Initial evaluation of a shoulder arthroscopy simulator: establishing construct validity. J Shoulder Elbow Surg. 2004;13(2):196–205.

- 27. Vitale MA, Kleweno CP, Jacir AM, et al. Training resources in arthroscopic rotator cuff repair. J Bone Joint Surg Am. 2007;89(6):1393–1398.

- 28. Waterman BR, Martin KD, Cameron KL, Owens BD, Belmont PJ Jr. Simulation training improves surgical proficiency and safety during diagnostic shoulder arthroscopy performed by residents. Orthopedics. 2016;39(3):e479–e485.

- 29. Wheeler JH, Ryan JB, Arciero RA, Molinari RN. Arthroscopic versus nonoperative treatment of acute shoulder dislocations in young athletes. Arthroscopy. 1989;5(3):213–217.

- 30. Zacchilli MA, Owens BD. Epidemiology of shoulder dislocations presenting to emergency departments in the United States. J Bone Joint Surg Am. 2010;92(3):542–549.