Abstract

Recognizing facial expressions is likely to be one of the earliest facilitators of social engagement, and difficulty with it is a core deficit that hinders individuals with autism spectrum disorder (ASD) in building social relationships. Mobile learning creates the possibility of a learning environment and visual stimuli that can adapt to informal learning contexts to improve emotion recognition in people with ASD. This study adopted features of mobile learning to support facial expression recognition in people with ASD. The proposed 3D Complex Facial Expression Recognition (3CFER) system was developed to address the deficit in facial expression recognition in this population. This study therefore explored how children with ASD performed in recognizing facial expressions using the 3CFER system, and what phenomena emerged when people with ASD used the learning system. Participants (n = 24, 16 males and 8 females, m = 11.3 years old) were randomly assigned to either a control or an experimental group, and took part in pre- and post-test sessions. The control group did not receive the system treatment, whereas the experimental group operated the system. The results showed that the experimental group improved significantly in recognizing facial expressions compared with the control group, and that surprise and shyness were the emotions they identified most easily. The performance of the mobile learning system was promising. In addition, the informal experience of recognizing facial expressions in different social contexts was meaningful learning for this population.

Keywords: Autistic spectrum disorders, Facial emotion recognition, 3D characters, Mobile learning

Introduction

The ability to perceive emotion from facial expressions is essential for a successful social interaction because this information helps to explain and anticipate other peoples’ actions. According to Darwin (1872), the recognition and understanding of emotional expressions is an innate ability; and it may therefore be necessary for the typical development of facial emotion recognition (Leppanen and Nelson 2006). Because of its primary role in social situations, deficits in recognizing facial expression are a key component of the social disabilities that have been observed in children with autism spectrum disorder (ASD; Collin et al. 2013). Children with ASD present a collection of problems that are directly related to the development of three domains of the basic functioning and are caused by a disorder of the brain’s development. These symptoms include difficulties in social interaction and communication development, repetitive patterns of behavior, thought, and interests (Kathy and Howard 2001). Due to these problems, individuals with ASD have difficulties with social interaction, including the use of inappropriate emotions and the maintenance of friendships (Machintosh and Dissanayake 2006). More importantly, they exhibit limited abilities to understand others’ mental conditions, and they find it difficult to interpret emotions through others’ facial expressions (Moore et al. 2005; Rieffe et al. 2007; Rutherford and McIntosh 2007; Wagner et al. 2005).

Many studies have revealed a broad recognition of facial expression deficits in both low-functioning (Celani et al. 1999; Hobson 1986) and high-functioning children with ASD (Hobson 1986; Lindner and Rosen 2006). A study (Corona et al. 1998) indicated that compared with typically developing individuals, those with ASD performed poorly on tasks that involved responding correctly after recognizing emotions. More specifically, high-functioning children with ASD seem to do well on recognizing facial expressions of the basic emotions of happiness, anger, sadness, and fear (Davies et al. 1994; Law Smith et al. 2010), but they have difficulty recognizing complex emotions in films and photographs, and are unable to match faces and voices indicating more complex emotions, such as surprise (Baron-Cohen et al. 1993), pride (Capps et al. 1992; Kasari et al. 1993), shame or embarrassment (Capps et al. 1992; Golan et al. 2006; Heerey et al. 2003), and jealousy (Bauminger 2004).

Previous studies have successfully explored the use of 3D representation of computer technology to teach facial expression with system-intervention to people with ASD and have generally used a desktop computer representation (Cheng and Chen 2010). To assess the understanding of these emotions in individuals with ASD using dynamic emotions, Golan and Baron-Cohen (2006) applied an intervention-system, ‘Mind Reading system: The Interactive Guide to Emotions.’ This system uses real humans to model facial expressions in a video format. They found that the dynamic visual conditioning led to improvements in facial expression and mental states for people with intellectual and developmental disabilities. Similar studies of Tardif et al. (2007) have examined the effects of slow motion of facial movement and the vocal sounds that correspond to such facial expressions in children with autism. More studies found that the performance of individuals with ASD was much better with dynamic facial expression stimuli than with static expressions for shorter periods of time (Gepner et al. 2001). Furthermore, the studies have found that individuals with ASD rely more on information from the lower portions of the face (i.e. mouth) in facial expressions (Neumann et al. 2006; Spezio et al. 2007), and also look more towards regions outside the core facial features when decoding emotions (Bal et al. 2010; Hernandez et al. 2009). The other studies (Baron-Cohen et al. 1997; Grossmann et al. 2000) had suggested that presenting only the eye regions during facial expressions or combining expressions with mismatching emotional words leads to a poorer performance for children with ASD. In the aforementioned studies, users had to sit in front of a desktop computer, without the comfort and freedom to select their own choice of locations.

A new line of research, emerging alongside the development of mobile technology, brings this technology into visual learning in ways that differ from classroom learning or learning in limited locations. This new field of study is called Mobile Learning (Sharples 2000). The technology offers a form of visual motion processing for people with ASD and provides a visually mediated treatment that may help individuals with autism to learn (Kathy and Howard 2001).

The benefits of using mobile learning technology for learning

Mobile technology has now become a focal point for providing new designs, new interfaces, and new interactions. It offers freedom of movement across locations, can be used within the school schedule or at the learner’s own pace, and allows tasks to be completed flexibly at any time (Pownell and Baily 2000; Reis and Escudeiro 2012). Mobile learning systems provide the learner with independent times for a specific learning activity, shared learning objectives/activities, and a suitable educational environment, e.g. the application of multimedia content, location-based learning materials, and serious games, thereby enhancing the learners’ enjoyment and motivation (Caudill 2007; Mayer 2003; Sharples 2000). Mobile learning offers the possibility to create learning environments in accordance with constructivist principles, in which the social contexts of people with ASD are central. Accordingly, in environment design, the models tend to associate personalization with individualization and with respect to customization, e.g. systemic and repetitive learning (Moore et al. 2005). The context-design can match scaled assessments of the learner’s interests, whereas personalization lets the learner interact with the material on the device (Sharples 2000). This type of learning environment could be helpful in special education because it facilitates tailoring of the educational content according to each individual’s learning differences (Korucu and Alkan 2011).

A similar study suggested that mobile learning systems would be useful for people with special education needs as a way of enhancing interactive skills or academic tasks (Cihak et al. 2010). In this sense, mobile learning creates the possibility of a learning environment and visual stimuli that can adapt its informal learning contexts to improve recognizing emotions for people with ASD (Xavier et al. 2015). The presented information is directly applicable to the situation at hand, and it is tailored for when users meet an embarrassing situation. Potentially, people with ASD could use this learning environment to share their learned information with others, or to practice resolving especially difficult circumstances, and then they could immediately receive feedback from a well-developed application.

This study extended the findings of a 3D-basic-emotions system intervention program (Cheng and Chen 2010) for people with ASD and modified it into a mobile learning system. The current study investigated the learning effects of a novel mobile complex-emotion learning system without location limits for this population, and explored the phenomena that emerged when people with ASD used a mobile emotional learning system. The proposed 3D Complex Facial Expression Recognition (3CFER) system was therefore developed to address the deficit in facial expression recognition in people with ASD. Thus, the main research question was how children with ASD performed in recognizing facial expressions using the 3CFER system. The following aims were explored: (a) to evaluate the learning effects of the 3CFER system used by people with ASD; (b) to investigate the recognition of complex emotions; and (c) to explore the phenomena of people with ASD using the learning system.

System development

The system architecture was designed on a client-server solution, and the 3CFER system was run on the Android 1.5 platform located on the server. The 3CFER system was loaded onto a mobile learning device (ViewSonic ViewPad 7e: 7-inch screen, Intel Oak Trail Atom Z670 Processor, 32 GB RAM, and Android 2.3 operating system). Each participant in the experimental group received a tablet computer during the study. Moreover, a user guide for the 3CFER system was provided for the educators.

Each user accessed the mobile learning platform through a unique login interface via the Internet to operate the 3CFER system. Some people with developmental disabilities are not keen on using assistive tools that involve an operating system; however, finger interactions can eliminate the need for an assistive tool for this special group (Shih et al. 2010). To this end, ‘drag & drop’ buttons were designed into the proposed system. All the participants’ responses were stored in a database system, which assisted educators in tracking the learning process of the individual users. All of the mobile learning technology used in the study had common features, such as a touch screen, a digital camera, and multimedia content (image, text, and audio). The 3D-animated characters displayed a slowed presentation of facial expressions (e.g. mouth opening and eyebrows frowning exaggeratedly; Cheng and Chen 2010), and the 3D social scenarios were built using 3D Max and Poser. The server and the database were structured using PHP and MySQL.

The content of the 3D Complex Facial Expression Recognition (3CFER) system

The learning environment adopted features of mobile learning, which involved the content dimension (e.g. the use of text, audio, and animation), the user model dimension (e.g. providing hints and user-centered learning design for impairments with ASD), the device dimension (e.g. multimedia or text-based representation), and the connectivity dimension (e.g. users can learn in their own time, suitable learning environments via the Internet; Goh and Kinshuk 2006). However, this development relates to the design principle of the mobile learning system, which allows learners to be engaged in activities that are meaningful to them.

Thus, the 3CFER system provided four 3D complex emotions (surprise, shyness, nervousness, and embarrassment). The designed facial features were enhanced in the eye region and lower portions (e.g. mouth) of the face, with gradual dynamic representations. The content of the system involved an animated series of social events and fun learning situations for improving the capability of recognizing facial expression (Golan et al. 2010). It was based on different social activities, in which the four emotions occur in response to events such as a social event (Ekman 1993), anticipated, or imagined, which were validated by a group of ASD experts for their suitability. The social event designs were also suggested by parents of children with ASD, as scenarios that their children might often encounter. Further, the 3CFER system provided immediate feedback on right or wrong answers. Some questions had one or two acceptable answers. If a participant selected a correct answer, the system generated the message, ‘Congratulations! You are excellent. You got the right answer!’. If the user selected a wrong answer, one of the following messages appeared with verbal sounds: ‘Please, attempt it again!’ or ‘John may not feel like that, you may choose another emotion!’. Each participant had two chances to select the appropriate answer before the next question appeared on the screen. The 3CFER system included three stages: (1) identifying a 3D-animated, complex facial expression (Stage 1); (2) specifying the social events that preceded the emotion (Stage 2); and (3) choosing a situation caused by an emotion (Stage 3).
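The feedback rule described in this paragraph (immediate feedback, with two attempts per question) can be sketched in a few lines. This is only an illustration and not the authors' implementation, which ran on a PHP and MySQL server: the function name `run_question`, its arguments, and its return format are hypothetical. Only the two-attempt limit and the feedback messages are taken from the description.

```python
# Messages quoted from the system description; the second retry message
# ("John may not feel like that...") is omitted here for brevity.
CORRECT_MSG = "Congratulations! You are excellent. You got the right answer!"
RETRY_MSG = "Please, attempt it again!"
MAX_ATTEMPTS = 2  # each participant had two chances per question


def run_question(acceptable_answers, responses):
    """Return (solved, messages) for one question.

    acceptable_answers: set of emotions counted as correct (assumed format).
    responses: the participant's selections in order (assumed format).
    """
    messages = []
    # Only the first MAX_ATTEMPTS selections are considered.
    for response in responses[:MAX_ATTEMPTS]:
        if response in acceptable_answers:
            messages.append(CORRECT_MSG)
            return True, messages
        messages.append(RETRY_MSG)
    return False, messages


solved, shown = run_question({"surprise"}, ["shyness", "surprise"])
print(solved, len(shown))
```

Passing a set of acceptable answers reflects the statement that some questions had more than one acceptable answer.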

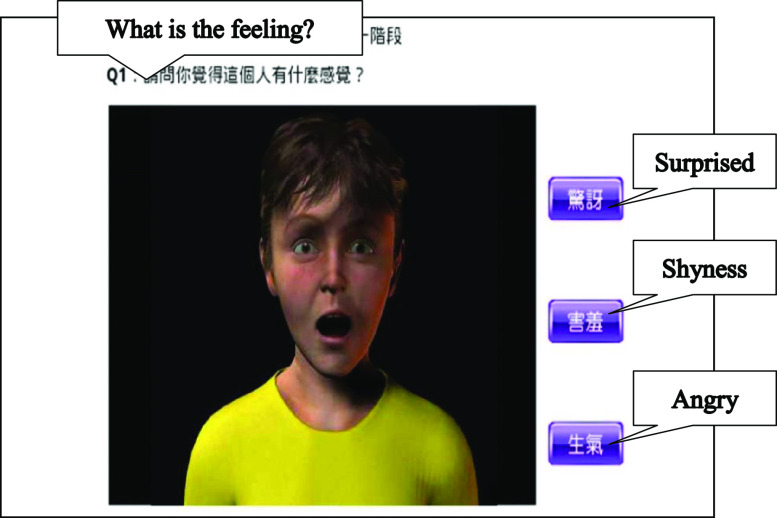

Stage 1: Identifying 3D-animated complex facial expressions

In this stage, participants were presented with four questions involving complex emotions, such as surprise, shyness, nervousness, and embarrassment (Fig. 1). The system presented a 3D-animated character expressing an emotion on the left side of the screen and three possible answers on the right side. The participants chose one appropriate answer and then another, if needed, for their response. The participants could select their answer using ‘drag & drop’ or ‘finger pointing’ if desired.

Figure 1. Example of Stage 1.

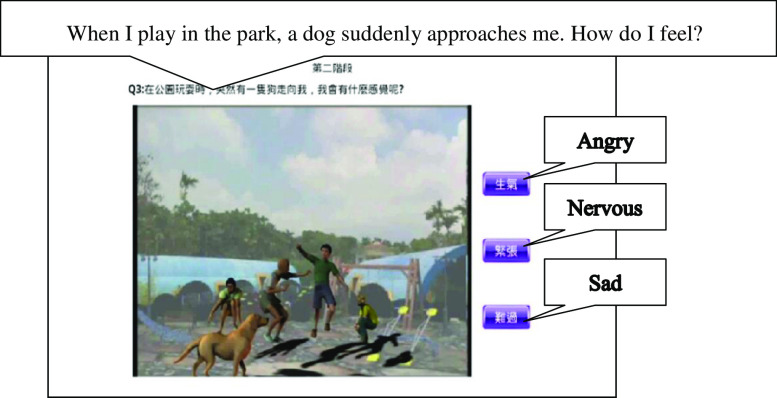

Stage 2: Specifying the social events that precede emotions (Fig. 2)

Figure 2. Example of Stage 2.

In this stage, a 3D-animated social event associated with a specific emotion is presented. Potential answers included four emotions based on the previous situation. In this task, the participants had to select the emotion that they felt was appropriate for the given situation. For example, ‘When I play in the park, a dog suddenly approaches me. How do I feel?’

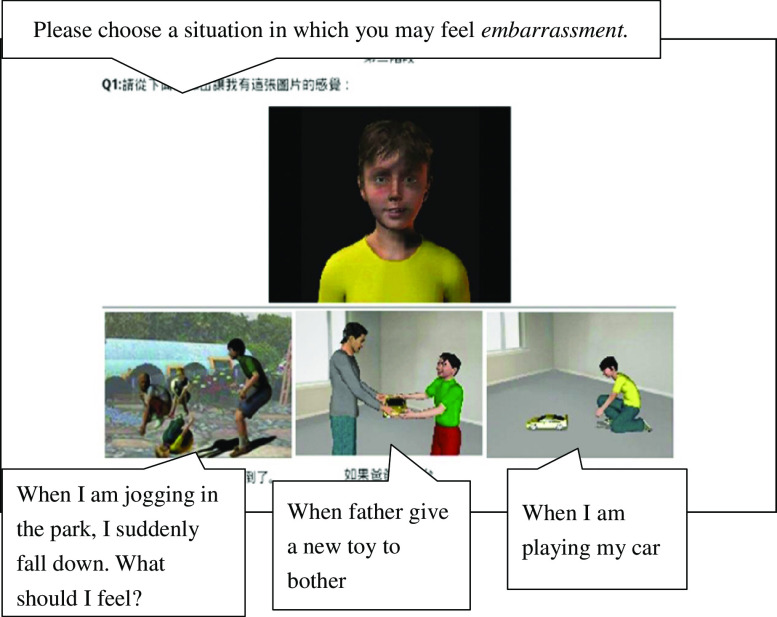

Stage 3: Choosing a situation caused by an emotion (Fig. 3)

Figure 3. Example of Stage 3.

In this task, a 3D character’s emotion is presented on screen, and the participant is asked to think of the possible social situations that may have been the cause of this emotion. For instance, the instruction, ‘Please choose a situation in which you may feel embarrassment,’ would then be followed by a list of different 3D animated situations that cause ‘embarrassment,’ such as ‘When I am jogging in the park, I suddenly fall down. What should I feel?’

Method

A pretest–post-test design was employed for this study. Participants were randomly assigned to either a control or an experimental group, and took part in the pre- and post-test sessions. The control group did not receive the system treatment; they used paper-based emotion pictures and designed social stories for recognizing emotions, based on Howlin et al. (1999). Conversely, the experimental group operated the system. The experimental group used the mobile emotional learning system three times during the experimental session, and the scores of the individual participants were recorded after each use. In the present study, we examined: (a) the scores of the two groups in recognizing complex emotions; (b) the frequency of errors made in recognizing complex emotions while operating the system; and (c) the phenomena of using the mobile emotional learning system in the experimental group.

Participants

Twenty-four participants with ASD were recruited from different schools with a special education program. They had been diagnosed according to the Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5; American Psychiatric Association 2013) from their records. Participants with verbal IQ (VIQ), performance IQ (PIQ), and full-scale IQ (FSIQ) over 70 were selected (experimental group: FSIQ, M = 81.08; VIQ, M = 81.42; PIQ, M = 79.25; control group: FSIQ, M = 79.67; VIQ, M = 79.5; PIQ, M = 77.33), as evaluated by the Wechsler Abbreviated Scale of Intelligence (WASI-IV; Wechsler 2008). The participants in the experimental group (8 males and 4 females) were aged between 9.2 and 12.7 years (M = 11.3), and the participants in the control group (8 males and 4 females) were aged between 9.5 and 12.6 years (M = 10.9). The details of each participant group are described in Table 1. The presence of an emotional disorder was evaluated using the Scale for Assessing Emotional Disturbance (SAED) for each participant (Epstein and Cullinan 1998). To protect participants’ rights to privacy and confidentiality, participant information was kept anonymous. Before the commencement of the study, consent was sought from the participants’ parents.

Table 1. Age and WASI-IV’s scores of participants in the experimental group (n = 12) and the control group (n = 12).

| | Mean (Experimental) | Mean (Control) | Minimum (Experimental) | Minimum (Control) | Maximum (Experimental) | Maximum (Control) |
|---|---|---|---|---|---|---|
| Chronological age | 11.3 | 10.9 | 9.2 | 9.5 | 12.7 | 12.6 |
| Verbal IQ | 81.42 | 79.5 | 76 | 76 | 88 | 84 |
| Performance IQ | 79.25 | 77.33 | 73 | 73 | 86 | 81 |
| Full-scale IQ | 81.08 | 77.33 | 75 | 75 | 88 | 84 |

Setting and design

Only the experimental group operated the 3CFER system over the three-week period of the study. The researcher explained to the participants how they could operate the system; the participants were unable to see their obtained scores while using the system. Educators were allowed to provide suitable help to participants after evaluating the participants’ stored answers in the database system. The 3CFER system was installed on three mobile learning devices so that three of the experimental group participants could use the system at the same time. All experimental sessions took five weeks to complete. The experimental group could choose a comfortable environment and an individualized timetable for using the system. The school campus was the most preferred environment chosen by this group (e.g. classroom, playground, or other acceptable areas within the school). Very few participants in the experimental group preferred to use the system at home. Participants interacted with the software at their own pace, with minimal supervision from the researchers. All of the responses (‘clicked’ answers) from the experimental group in different locations were sent to the database system during the system operation. The control group did not use the system.

Measurement

Complex-emotion scale

A complex-emotion (CE) scale was designed and then used to investigate the learning effects of the proposed system for each participant. The content of the CE scale was based on the Facial Affect Scoring Technique (FAST; Ekman et al. 1971). The CE scale contained 12 emotion-related questions, and it simulated events to elicit emotion and emotional states that required social judgment. Its validity was confirmed through assessment by a group of ASD experts. The CE scale comprised four complex-emotion pictures (CEP) and situation-pictures (SP) in the form of cards, which were used in the pre- and post-test sessions. The CE scale was administered in three sessions: (a) identifying the facial expressions from the CEP; (b) comprehending the provoked emotion from the CEP, and describing a social event in the SP (e.g. ‘When I play ball in the park, and my brother’s friend praises me by saying that my playing skills are very good, what should I feel?’); and (c) explaining a facial expression in the CEP that occurs as a result of a social situation in the SP (e.g. ‘What is the situation that caused this emotion?’). In order to simulate different social events and facial expressions, there were eight questions in each session. Each participant was asked four questions during each session, which were chosen randomly from the CE scale. Participants were instructed to pick up a CEP or SP card to answer the question. Each participant received verbal prompting once from the researcher if the participant gave a wrong answer or did not respond at all. If the participant was able to provide an appropriate answer, or a different narrative explanation that was reasonable or acceptable, the researcher proceeded to the next question. This procedure was intended to allow the CE scale, used in both the pre- and post-test sessions, to evaluate the participants’ performance in recognizing emotions precisely.

In the experiment, one researcher and two trained observers coded the appropriate/wrong responses, or no responses, of each participant. Both observers understood the purpose of the study.

Brief interview

After the post-test, each participant in the experimental group was briefly interviewed. Through these interviews, we aimed to further explore the participants’ learning experiences with the mobile learning system. We asked participants who had used the 3CFER system the following questions during the interview:

(1) Do you like this portable learning system? If not, why?

(2) If yes, which parts of the 3CFER system did you like?

(3) What was your experience using this mobile learning system?

(4) Do you wish to use this 3CFER system to learn more facial expressions in the future? Why?

Procedures

The experiment was conducted in four phases, and different materials were used in each phase:

(1) The pre-test session: As explained above, the CE scale was used to assess the recognition of the complex emotions in the two groups. Each participant was asked four randomly selected questions from the CE scale. This session proceeded as follows: the researcher showed a participant the SP, and the participants (in both groups) used the CEP to respond to the questions. The participant could choose which of the emotions was the most similar to what had happened in the social situation. This session was conducted in a quiet room at the special needs school. The researcher and the two observers recorded the participants’ responses for data analysis. This was done by considering the numbers of appropriate/wrong answers, and no responses, selected during the pre-session.

(2) The intervention session: The experimental group was provided with a tablet computer on which the 3CFER system had been installed. Each participant had the chance to practice using the system in advance. The researcher also provided assistance when the participants needed it. Participants in the experimental group used the 3CFER system three times, and each session lasted 40 min (Fig. 4). Each participant used the system over 21 days. Further, the understanding rate of each complex emotion was calculated during the operation of the 3CFER system and stored in the database system. No system intervention was given to the control group in this session; only the designed social stories and paper-based emotion pictures were provided as their treatment.

(3) The post-test session: As explained above, the post-test session followed the same procedure as the pre-test session. Again, the ability to recognize complex emotions was examined using the scores on the CE scale for both groups. All participants were instructed as follows: the researcher showed a participant the SP again, and the participant was required to choose the appropriate CEP caused by the social situation. The session was conducted in a quiet room of the special needs school.

(4) A brief interview was held with participants who had operated the 3CFER system. The researcher and observers noted each of the participant’s responses for further analysis.

Figure 4.

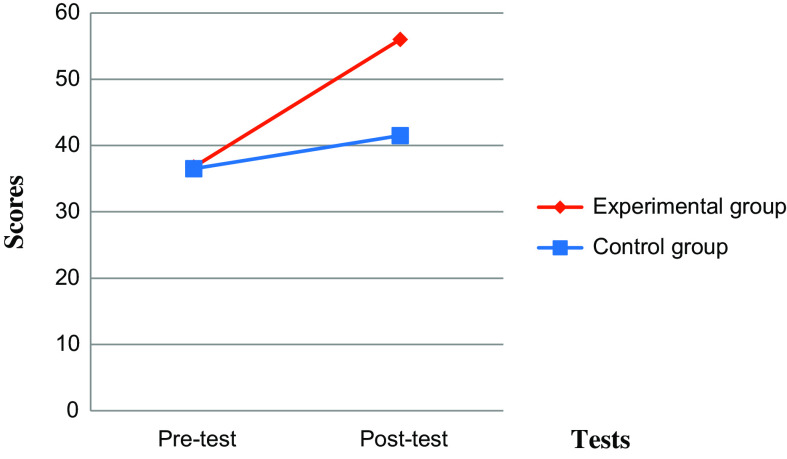

Comparison of pre-test and post-test scores for the experimental and control groups.

Data collection

The experimental and control groups were examined using the CE scale. In the pre- and post-test sessions, the frequencies of appropriate answers (scored 6 points), wrong answers (scored 3 points), and no responses (scored 0 points) were counted for each participant; the maximum total score was 72 points. The dependent variable, identifying and comprehending complex emotions, was a participant’s total score for recognizing complex emotions. This score was the sum of the scores for understanding the four emotions (as coded by the independent raters) received during the pre- and post-test sessions. For the experimental group, the participants’ appropriate and wrong answers, and the error rate, were also calculated during the operation of the 3CFER system.
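The scoring rule above can be illustrated with a short sketch. The point values and the 12-question length of the CE scale come from the text; the response labels, the function name `ce_total`, and the example response list are hypothetical.

```python
# Points per coded response, as described in the data collection section.
POINTS = {"appropriate": 6, "wrong": 3, "none": 0}
N_QUESTIONS = 12  # the CE scale contained 12 emotion-related questions


def ce_total(coded_responses):
    """Sum the points for one participant's coded responses."""
    return sum(POINTS[code] for code in coded_responses)


# Maximum possible score: every question answered appropriately.
max_score = N_QUESTIONS * POINTS["appropriate"]

# Hypothetical participant: 7 appropriate, 4 wrong, 1 no response.
example = ["appropriate"] * 7 + ["wrong"] * 4 + ["none"]
print(max_score, ce_total(example))
```

With 12 questions at 6 points each, the maximum of 72 points stated in the text follows directly.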

To calculate the reliability of the experimenters’ scoring of the participants’ responses, one researcher and two assistant observers watched and recorded the pre- and post-test sessions. The observers received individual training on the coding procedures and independently coded two sessions until at least 95% agreement was obtained. Inter-observer reliability was calculated as the number of agreements divided by the number of agreements plus disagreements multiplied by 100 (Tekin-Iftar et al. 2001). Inter-observer agreement data were recorded for the pre- and post-test sessions, with a criterion of 88% inter-observer agreement.
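The agreement formula quoted above (agreements divided by agreements plus disagreements, multiplied by 100) is simple enough to sketch directly. The function name and the example counts are hypothetical and are not data from the study.

```python
def percent_agreement(agreements, disagreements):
    """Inter-observer agreement as a percentage of all coded responses."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("no coded responses to compare")
    return 100 * agreements / total


# Hypothetical counts: two observers agree on 19 of 20 coded responses.
print(percent_agreement(19, 1))
```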

Data analysis

The understanding of complex emotions was compared using the average scores in the pre- and post-test for each group. To investigate the learning effects of recognizing complex emotions in both the intervention and control groups, a one-way ANOVA was employed to assess the differences between the pre- and post-test for each group. Analyses were performed using SPSS Version 18 for Windows. Furthermore, for the experimental group, the reductions in error variance associated with the individual differences were recorded in the database, e.g. participants’ appropriate/wrong answers were analyzed. Finally, key points from the interviews were identified via the content analysis of the participants’ experiences using the mobile emotional learning system.

Results

Understanding the complex emotions

The pre-test scores of both the experimental (M = 36.75) and the control group (M = 36.50) did not differ significantly. After using the system, the experimental group (M = 56) showed significant improvements in scores when compared with the control group (M = 41.5) on the post-test session (Table 2).

Table 2. Descriptive statistics for the groups’ pre-test and post-test achievement scores.

| Test | Groups | N | Mean | SD | SE |
|---|---|---|---|---|---|
| Pre-test | Experimental group | 12 | 36.75 | 3.415 | 0.986 |
| | Control group | 12 | 36.50 | 3.090 | 0.892 |
| | Total | 24 | 36.63 | 3.187 | 0.651 |
| Post-test | Experimental group | 12 | 56.00 | 6.439 | 1.859 |
| | Control group | 12 | 41.50 | 3.802 | 1.098 |
| | Total | 24 | 48.75 | 9.033 | 1.844 |

Notes: SD = standard deviation; SE = standard error of the mean.
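As a quick consistency check, the SE column of Table 2 can be recomputed from the SD and N columns using the usual relation SE = SD / sqrt(N). The sketch below assumes that this is how the reported SE values were obtained; the row labels are shorthand introduced here.

```python
import math

# (label, N, SD, reported SE) copied from Table 2
rows = [
    ("pre-test experimental", 12, 3.415, 0.986),
    ("pre-test control", 12, 3.090, 0.892),
    ("pre-test total", 24, 3.187, 0.651),
    ("post-test experimental", 12, 6.439, 1.859),
    ("post-test control", 12, 3.802, 1.098),
    ("post-test total", 24, 9.033, 1.844),
]


def standard_error(sd, n):
    """Standard error of the mean from a sample SD and sample size."""
    return sd / math.sqrt(n)


for label, n, sd, reported in rows:
    print(f"{label}: computed {standard_error(sd, n):.3f}, reported {reported}")
```

Each computed value agrees with the reported one to within rounding of the SD.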

The test of homogeneity of variance was not significant (pre-test, p = 0.593 > 0.05; post-test, p = 0.098 > 0.05) for both groups (Table 3), so a one-way ANOVA was conducted. The ANOVA showed that the two groups did not differ significantly in their understanding of the 3D complex emotions in the pre-test session (F(1, 22) = 0.035, p = 0.853 > 0.05). In contrast, there was a significant difference between the groups in their understanding of complex emotions in the post-test session (F(1, 22) = 45.127, p < 0.001; Table 4). The experimental group performed significantly better than the control group in the post-test session.

Table 3. Test of homogeneity of variance for the groups’ pre-test and post-test.

The mean difference is significant at the 0.05 level.

Table 4. One-way ANOVA for the achievement scores of the groups.

| Test | Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|---|
| Pre-test | Between groups | 0.375 | 1 | 0.375 | 0.035 | 0.853* |
| | Within groups | 233.250 | 22 | 10.602 | | |
| | Total | 233.625 | 23 | | | |
| Post-test | Between groups | 1261.500 | 1 | 1261.500 | 45.127 | 0.000* |
| | Within groups | 615.000 | 22 | 27.955 | | |
| | Total | 1876.500 | 23 | | | |

The mean difference is significant at the 0.05 level.
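The entries of Table 4 can be approximately reconstructed from the group sizes, means, and standard deviations in Table 2. The sketch below is a check under that assumption and not the authors' SPSS procedure; the function name `one_way_anova_from_summary` is hypothetical. Because the SDs in Table 2 are rounded, the within-group sums of squares and the F values match Table 4 only up to rounding.

```python
def one_way_anova_from_summary(groups):
    """One-way ANOVA from summary statistics.

    groups: list of (n, mean, sd) tuples, one per group.
    Returns (ss_between, ss_within, df_between, df_within, f_value).
    """
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n
    # Between-groups variation: group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-groups variation: recovered from each group's sample SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, df_between, df_within, f_value


# (n, mean, sd) for the experimental and control groups, from Table 2
pre = [(12, 36.75, 3.415), (12, 36.50, 3.090)]
post = [(12, 56.00, 6.439), (12, 41.50, 3.802)]

for label, groups in (("pre-test", pre), ("post-test", post)):
    ssb, ssw, dfb, dfw, f = one_way_anova_from_summary(groups)
    print(f"{label}: SS_between={ssb:.3f}, SS_within={ssw:.3f}, F({dfb}, {dfw})={f:.3f}")
```

With two groups of 12 participants, the degrees of freedom come out as 1 and 22, as listed in the df column of Table 4.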

Figure 4 shows the scores obtained by the experimental and control groups in the pre- and post-test sessions. The number of correct answers given by participants in the experimental group increased in the post-test compared with the pre-test.

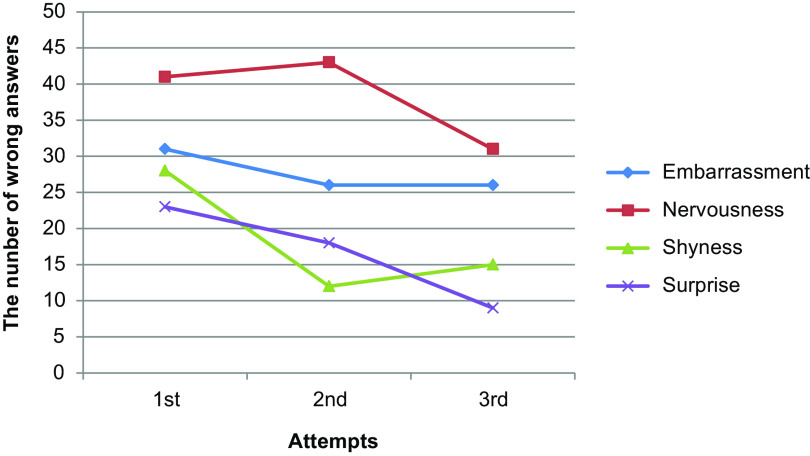

The mean numbers of incorrect responses for the experimental group are presented in Table 5. The numbers of wrong responses decreased across the intervention sessions. Specifically, the mean for the errors was 10 points in the first intervention session, and then decreased to 7 points by the third intervention session. Thus, there was a gradual reduction in wrong answers (error rate for the second session = −2.08, < 0; error rate for the third session = −1.83, < 0), over the intervention sessions.

Table 5. Error rate in the 3CFER group during the three intervention sessions.

| Session | Mean number of wrong answers chosen | SD of sample | Error rate |
|---|---|---|---|
| 1st | 10 | ±3.51 | – |
| 2nd | 7 | ±3.83 | −2.08 |
| 3rd | 6 | ±4.17 | −1.83 |

Understanding each complex emotion in the 3CFER system

The numbers of wrong answers for each emotion were analyzed. Figure 5 shows the mean number of wrong answers by the experimental group for each emotion over the three attempts during the intervention. It shows that the error rate had decreased by the third attempt at using the system. More specifically, the 3D-animated characters with surprise and shyness were more easily identified in this system context by users with ASD.

Figure 5. Number of wrong answers for each emotion by the experimental group using the 3CFER system.

Participants’ experience explored through interviews

Throughout the experiment, the researcher made careful observations and maintained records of the students’ performance. Most of the participants in the experimental group appeared excited, curious, and motivated, while operating the system. According to the short interview, most participants presented a positive attitude towards the 3CFER system. They were very excited when the researcher showed them the portable system, and they expressed their willingness to use the system again in the future. Only three participants remained quiet when the researcher asked whether they liked using the system. Some of them mentioned that they preferred using it at home, and said ‘My mom could see it,’ and ‘My grandmamma was copying facial expressions from it.’ Some of the participants in the experimental group said: ‘Many friends came to see me using it,’ ‘I used it after my class,’ and ‘I showed this (facial expression) to my classmate.’

Further, participants mentioned several advantages of the 3CFER system, including that the animated stories and facial expressions were interesting, funny, and understandable. They even imitated the facial expressions presented by the 3D characters. Regarding their favorite parts of the system, seven participants indicated that they liked to use Stage 2 because of the feedback provided (e.g. sound effects, applause) and the animated social events, which they liked to watch repeatedly. They commented that ‘It is a very funny story.’ Interestingly, one participant asked the researcher to turn the installed camera so that she could see her own face on the screen. Another commented that ‘the drag and drop function is fun, I am able to use it’; he also liked to listen to and watch the 3D scenarios. Moreover, most of the participants mentioned that they felt really good when using the mobile learning system and agreed that the system was fun. Two participants said, ‘My mother (or grandma) has these facial expressions sometimes.’ Four participants mentioned that they often received the warning feedback from the system and could not obtain the applauding sounds.

In the end, we asked participants whether they wished to use the 3CFER system to learn more facial expressions in the future and, if so, why. Most participants nodded and said that they would like to use it again to learn more facial expressions. One participant said that he wanted to see more stories and facial expressions. Overall, these findings are encouraging. The main aim of the study was to help children with ASD understand complex facial expressions by using the 3CFER system. The findings provided evidence that using the system had a significant effect on the understanding of 3D complex facial expressions among children with ASD.

Discussion

This study demonstrated that the learning effects of recognizing complex emotions were positive and that the system was socially accepted by most of the individuals in the experimental group, who performed well in comprehending the 3D animated complex emotions through the proposed system. They were able to recognize the complex facial expressions displayed by the 3D humanoid characters in different social situations. This demonstrated that the design of the instructional content (e.g. the 3D complex emotions and the repertoire of social activities), which was drawn from daily-living activities, was helpful for the experimental group. Similar findings have indicated that a well-established instructional procedure in the context of social activities might increase learners’ interest in learning (Dye et al. 2003; Ekman 1993). Another possible explanation is that repeated practice with the system was beneficial for recognizing emotions. Frequent practice supports improvement, and some individuals perform better with repetition (e.g. Cheng and Chen 2010; Golan and Baron-Cohen 2006; Silver and Oakes 2001).

More specifically, the 3D-animated characters showing surprise and shyness were the most easily identified in this system context by users with ASD. Some studies (Balconi et al. 2012) also indicated that children with autism recognized surprise more easily than other complex emotions (e.g. disgust), including work that used dynamic stimuli of varying intensities to detect subtle emotion recognition deficits in autism (Law Smith et al. 2010). In contrast, Golan et al. (2006) revealed that children with ASD had difficulty recognizing surprise; the difference in outcome may be because their study involved different materials and tools.

Furthermore, the mobile emotional learning system also stimulated participants’ motivation to learn the facial expressions, and their performance in using the system was outstanding during the experimental study. According to our observations in the interview session, some of the participants in the experimental group imitated the facial expressions when the 3D animated emotions were presented on the screen in slow motion, and then they tried to copy the facial expressions shown on the 3D characters to practice them (as reported by their parents and teachers). In this context, the 3D facial expressions were designed to play in slow motion; this, perhaps, motivated the children’s imitation of emotions. Moreover, two of them noticed that their grandmother or mother had emotions that were similar to those shown on the screen. This similarity might have stimulated the children with ASD to recall the emotions that they had witnessed. In addition, one of the participants requested that the camera of the mobile device be turned on to show her face on the screen, and this seemed to increase her interest in presenting a facial expression; this is notable because it is rare for children with ASD to request to see their own face onscreen.

The mobile emotional learning system was designed as an easy-to-use application with preferred stimuli. Its features brought many benefits: users with ASD interacted intuitively with the system’s touch, drag, and camera features, and they enjoyed the immediate feedback and visual information. A mobile learning system can help tailor the learning process to different impairments (e.g. in facial expression, sensory processing, or social interaction) for users with special education needs (Fernández-López et al. 2013). Consequently, most of the participants in the experimental group seemed to enjoy the system because the device could be carried to a comfortable place and used at a time that suited them. Some of them showed off the device and pointed out a specific emotion to others (including their friends and the researchers); this demonstrated that the location of learning can become a relevant part of the learning context for each participant (Baldauf et al. 2007). The system provides freedom of movement between different locations within a school (e.g. classroom, playground) or outside it (e.g. home, street). Participants can also work outside the classroom, without any teacher supervision or stress from other classmates (Norbrook and Scott 2003). The informal learning experiences of facial expression recognition offer meaningful learning opportunities for participants with ASD. This was particularly encouraging for social behavior in children with ASD because it involved sharing information with others, and these children typically lack the appropriate social skills for reciprocal friendship (Cheng and Huang 2012; Golan and Baron-Cohen 2006).

However, we interpreted our data only with respect to the learning effects of recognizing complex emotions with 3D characters and the experience of using a mobile learning system for children with ASD. We did not investigate whether the participants generalized the complex emotions to their daily lives. A few limitations of the present study need to be considered. Only a few of the participants initiated conversation with the researcher; most of them were shy and not talkative, and they responded to the researcher by nodding or moving their heads. To build a workable research relationship with this special group, further studies should consider spending a period of time establishing rapport with these children, which may lead to more conversation. The relatively small sample size may have limited the generalizability of the data. However, the skills that were learned from this system, and that could be applied to the real world, were the primary interest of this study. In our future studies, a larger number of participants will be sampled in order to collect enough data to meet the requirements for generalization. Additionally, the participants may have provided socially desirable answers while operating the system, and their ability to recognize facial expressions might have been taught in advance. This study only investigated the learning effects of using the proposed system. Yet, we still required basic cognitive and reading capabilities from each participant, which were helpful for understanding the meaning and content within the system. Additionally, more emotions (e.g. contempt, disgust, tenderness, and excitement) should be added to enhance the user experience. Such practice may help prevent children with ASD from experiencing vicious cycles of negative emotions, such as social anxiety and depression. Further research should consider the use of real people in virtual scenarios to help children with emotion deficits understand social emotions.

Conclusion

This article examined the utility of a mobile emotional learning system for individuals with ASD. Individuals with ASD can be taught to use such mobile emotional learning systems for a variety of purposes; specifically, the program can be used to enhance the comprehension of facial expressions, social activities, and reading abilities. Although the potential value of incorporating a mobile emotional learning system into education and rehabilitation for individuals with ASD is emerging, repeated practice in social activities and in presenting facial expressions is still required for several aspects of more complex emotions.

Conflict of interest

No potential conflict of interest was reported by the authors.

Funding

This work was financially supported by the Ministry of Science and Technology, Taiwan, ROC, under Contract number 100-2511-S-018-031.

Acknowledgments

We acknowledge the Ministry of Science and Technology, Taiwan, R.O.C. for the financial support under Contract No. 100-2511-S-018-031. More importantly, we are grateful to the students with ASD, their teachers, and their parents who participated in this study and generously volunteered their time.

References

- American Psychiatric Association. 2013. Diagnostic and statistical manual of mental disorders (DSM-5®). Arlington, VA: American Psychiatric Association Publishing.

- Bal, E., Harden, E., Lamb, D., Van Hecke, A. V., Denver, J. W. and Porges, S. W. 2010. Emotion recognition in children with autism spectrum disorders: Relations to eye gaze and autonomic state. Journal of Autism and Developmental Disorders, 40(3), 358–370.

- Balconi, M., Amenta, S. and Ferrari, C. 2012. Emotional decoding in facial expression, scripts and videos: A comparison between normal, autistic and Asperger children. Research in Autism Spectrum Disorders, 6(1), 193–203.

- Baldauf, M., Dustdar, S. and Rosenberg, F. 2007. A survey on context-aware systems. International Journal of Ad Hoc and Ubiquitous Computing, 2, 263–277.

- Baron-Cohen, S., Spitz, A. and Cross, P. 1993. Do children with autism recognize surprise? A Research Note. Cognition and Emotion, 7, 507–16.

- Baron-Cohen, S., Wheelwright, S. and Jolliffe, T. 1997. Is there a “Language of the Eyes”? Evidence from normal adults, and adults with autism or asperger syndrome. Visual Cognition, 4, 311–31.

- Bauminger, N. 2004. The expression and understanding of jealousy in children with autism. Development and Psychopathology, 16, 157–77.

- Capps, L., Yirmiya, N. and Sigman, M. 1992. Understanding of simple and complex emotions in non-retarded children with autism. Journal of Child Psychology and Psychiatry, 33(7), 1169–82.

- Caudill, J. G. 2007. The growth of m-learning and the growth of mobile computing: Parallel developments. The International Review of Research in Open and Distributed Learning, 8(2), 1–13.

- Celani, G., Battacchi, M. W. and Arcidiacono, L. 1999. The understanding of the emotional meaning of facial expressions in people with autism. Journal of Autism and Developmental Disorders, 29(1), 57–66.

- Cheng, Y. and Chen, S. 2010. Improving social understanding of individuals of intellectual and developmental disabilities through a 3D-facail expression intervention program. Research in Developmental Disabilities, 31(6), 1434–1442.

- Cheng, Y. and Huang, R. 2012. Using virtual reality environment to improve joint attention associated with pervasive developmental disorder. Research in Developmental Disabilities, 33, 1–12.

- Cihak, D., Fahrenkrog, C., Ayres, K. and Smith, C. 2010. The use of video modeling via a video iPod and a system of least prompts to improve transitional behaviors for students with autism spectrum disorders in the general education classroom. Journal of Positive Behavior Interventions, 12, 103–115.

- Collin, L., Bindra, J., Raju, M., Gillberg, C. and Minnis, H. 2013. Facial emotion recognition in child psychiatry: A systematic review. Research in Developmental Disabilities, 34, 1505–1520.

- Corona, R., Dissanayake, C., Arbelle, S., Wellington, P. and Sigman, M. 1998. Is affect aversive to young children with autism? Behavioural and cardiac responses to experimenter distress. Child Development, 69, 1494–1502.

- Darwin, C. 1872, reprinted 1998. The expression of the emotions in man and animals. 3rd ed. Ekman, P., ed. New York: Oxford University Press.

- Davies, S., Bishop, D., Manstead, A. S. R. and Tantam, D. 1994. Face perception in children with autism and asperger’s syndrome. Journal of Child Psychology and Psychiatry, 35, 1033–57.

- Dye, A., K’Odingo, J. A. and Solstad, B. 2003. Mobile education – A glance at the future. Available at: <http://www.dye.no/articles/a_glance_at_the_future/theoretical_foundation_for_mobile_education.html> [Accessed 20 July 2014].

- Ekman, P. 1993. Facial expression and emotion. American Psychologist, 48, 384–392.

- Ekman, P., Friesen, W. V. and Tomkins, S. S. 1971. Facial affect scoring technique (FAST): A first validity study. Semiotica, 3, 37–58.

- Epstein, M. H. and Cullinan, D. 1998. Scale for assessing emotional disturbance. Austin, TX: Pro-Ed.

- Fernández-López, A., Rodríguez-Fórtiz, M. J., Rodríguez-Almendros, M. L. and Martínez-Segura, M. J. 2013. Mobile learning technology based on iOS devices to support students with special education needs. Computers & Education, 61, 77–90.

- Gepner, B., Deruelle, D. and Grynfeltt, S. 2001. Motion and emotion: A novel approach to the study of face processing by young autistic children. Journal of Autism and Developmental Disorders, 31, 37–45.

- Goh, T. and Kinshuk, D. 2006. Getting ready for mobile learning adaptation perspective. Journal of Educational Multimedia and Hypermedia, 15(2), 175–198.

- Golan, O., Ashwin, E., Granader, Y., McClintock, S., Day, K., Leggett, V. and Baron-Cohen, S. 2010. Enhancing emotion recognition in children with autism spectrum conditions: An intervention using animated vehicles with real emotional faces. Journal of Autism and Developmental Disorders, 40(3), 269–279.

- Golan, O. and Baron-Cohen, S. 2006. Systemizing empathy: Teaching adults with asperger syndrome or high-functioning autism to recognize complex emotions using interactive multimedia. Development and Psychopathology, 18(2), 591–617.

- Golan, O., Baron-Cohen, S. and Hill, J. 2006. The Cambridge mindreading (CAM) face-voice battery: Testing complex emotion recognition in adults with and without asperger syndrome. Journal of Autism and Developmental Disorders, 36, 169–83.

- Grossmann, J. B., Klin, A., Carter, A. S. and Volkmar, F. R. 2000. Verbal bias in recognition of facial emotions in children with asperger syndrome. Journal of Child Psychology and Psychiatry, 41, 369–79.

- Heerey, E. A., Keltner, D. and Capps, L. M. 2003. Making sense of self-conscious emotion: Linking theory of mind and emotion in children with autism. Emotion, 3, 394–400.

- Hernandez, N., Metzger, A., Magne, R., Bonnet-Brilhault, F., Roux, S. and Barthelemy, C. 2009. Exploration of core features of a human face by healthy and autistic adults analyzed by visual scanning. Neuropsychologia, 47(4), 1004–1012.

- Hobson, R. P. 1986. The autistic child’s appraisal of expressions of emotion. Journal of Child Psychology and Psychiatry and Allied Disciplines, 27(3), 321–342.

- Howlin, P., Baron-Cohen, S. and Hadwin, J. 1999. Teaching children with autism to mind-read: A practical guide. West Sussex, England: John Wiley & Sons.

- Kasari, C., Sigman, M. D., Baumgartner, P. and Stipek, D. J. 1993. Pride and mastery in children with autism. Journal of Child Psychology and Psychiatry, 34(3), 353–362.

- Kathy, S. T. and Howard, G. 2001. Social stories, written text cues, and video feedback: Effects on social communication of children with autism. Journal of Applied Behavior Analysis, 34, 425–446.

- Korucu, A. T. and Alkan, A. 2011. Differences between m-learning (mobile learning) & e-learning, basic terminology & usage of m-learning in education. Procedia Social & Behavioral Sciences, 15, 1925–1930.

- Law Smith, M. J., Montagne, B., Perrett, D. I., Gill, M. and Gallagher, L. 2010. Detecting subtle facial emotion recognition deficits in high-functioning autism using dynamic stimuli of varying intensities. Neuropsychologia, 48, 2777–2781.

- Leppanen, J. M. and Nelson, C. A. 2006. The development and neural bases of facial emotion recognition. Advances in Child Development and Behavior, 34, 207–246.

- Lindner, J. L. and Rosen, L. A. 2006. Decoding of emotion through facial expression, prosody and verbal content in children and adolescents with asperger’s syndrome. Journal of Autism and Developmental Disorders, 36(6), 769–777.

- Machintosh, K. and Dissanayake, C. 2006. A comparative study of the spontaneous social interactions of children with high-functioning autism and children with Asperger’s disorder. Autism, 10, 199–220.

- Mayer, R. 2003. The promise of multimedia learning: Using the same instructional design methods across different media. Learning and Instruction, 13, 125–139.

- Moore, D., Cheng, Y., McGrath, P. and Powell, N. 2005. Collaborative virtual environment technology for people with autism. Focus on Autism and Other Developmental Disabilities, 20(4), 231–243.

- Neumann, D., Spezio, M. L., Piven, J. and Adolphs, R. 2006. Looking you in the mouth: Abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Social Cognitive and Affective Neuroscience, 1, 194–202.

- Norbrook, H. and Scott, P. 2003. Motivation in mobile modern foreign language learning. In: Attewell, J., Da Bormida, G., Sharples, M. and Savill-Smith, C., eds. MLEARN: Learning with mobile devices. London: Learning and Skills Development. pp. 50–51.

- Pownell, D. and Bailey, G. D. 2000. Handheld computing for educational leaders: A tool for organizing or empowerment. Learning and Leading with Technology, 27(8), 46–49, 59–60. PRO-ED.

- Reis, R. and Escudeiro, P. 2012. Portuguese education going mobile learning with M-Learning. Recent Progress in Data Engineering and Internet Technology, 2, 459–464.

- Rieffe, C., Meerum Terwogt, M. and Kotronopoulou, K. 2007. Awareness of single and multiple emotions in high-functioning children with autism. Journal of Autism and Developmental Disorders, 37, 455–465.

- Rutherford, M. D. and McIntosh, D. 2007. Rules versus prototype matching: Strategies of perception of emotional facial expressions in the autism spectrum. Journal of Autism and Developmental Disorders, 37(2), 187–196.

- Sharples, M. 2000. The design of personal mobile technologies for lifelong learning. Computers & Education, 34(3), 177–193.

- Shih, C.-H., Huang, H.-C., Liao, Y.-K., Shih, C.-T. and Chiang, M.-S. 2010. An automatic drag-and-drop assistive program developed to assist people with developmental disabilities to improve drag-and-drop efficiency. Research in Developmental Disabilities, 31, 416–425.

- Silver, M. and Oakes, P. 2001. Evaluation of a new computer intervention to teach people with autism or Asperger syndrome to recognize and predict emotions in others. Autism, 5, 299–316.

- Spezio, M. L., Adolphs, R., Hurley, R. S. and Piven, J. 2007. Abnormal use of facial information in high-functioning autism. Journal of Autism and Developmental Disorders, 37(5), 929–939.

- Tardif, C., Lainé, F., Rodriguez, M. and Gepner, B. 2007. Slowing down presentation of facial movements and vocal sounds enhances facial expression recognition and induces facial-vocal imitation in children with autism. Journal of Autism and Developmental Disorders, 37, 1469–1484.

- Tekin-Iftar, E., Kırcaali-Iftar, G., Birkan, B., Uysal, A., Yıldırım, S. and Kurt, O. 2001. Using a constant time delay to teach leisure skills to children with developmental disabilities. Mexican Journal of Behavior Analysis, 27, 337–362.

- Wagner, M., Kutash, K., Duchnowski, A. J., Epstein, M. H. and Sumi, W. C. 2005. The children and youth we serve: A national picture of the characteristics of students with emotional disturbances receiving special education. Journal of Emotional and Behavioral Disorders, 13, 79–96.

- Wechsler, D. 2008. Wechsler abbreviated scale of intelligence-Fourth Edition (WISC®-IV). San Antonio, TX: Harcourt Assessment.

- Xavier, J., Vignaud, V., Ruggiero, R., Bodeau, N., Cohen, D. and Chaby, L. 2015. A multidimensional approach to the study of emotion recognition in autism spectrum disorders. Frontiers in Psychology, 6, 1–9.