Abstract

Health care report cards involve comparisons of health care systems, hospitals or clinicians on performance measures. They are going to be an important feature of medical care in Canada in the new millennium as patients demand more information about their medical care. Although many clinicians are aware of this growing trend, they may not be prepared for all of its implications. In this article, we provide some historical background on health care report cards and describe a number of strategies to help clinicians survive and thrive in the report card era. We offer a number of tips ranging from knowing your outcomes first to proactively getting involved in developing report cards.

So far in this series, we have stressed that errors in clinical judgement may be subtle, unavoidable and widely shared. Lawyers, journalists, administrators and others with less clinical experience, however, sometimes take an alternative position and assert that such errors are easy to identify and signify an incompetent practitioner. At its worst, this perspective degenerates into selective reporting of simplistic anecdotes. At its best, this approach leads to careful quantitative assessments of performance through health care report cards at the system, hospital or practitioner level. For a physician, such report cards might include measurements of a patient's length of stay in hospital, patient mortality, delivery of Papanicolaou smears, prescriptions for β-blockers, vaccinations missed, surveys of patient satisfaction and so on.

Consider the following scenario. Dr. X is an active cardiac surgeon with years of experience at hospital Y. One morning, he reads the following headline in the newspaper, “Dr. X ranked as the best cardiac surgeon.” The newspaper article goes on to describe how he has the lowest patient mortality rate of all cardiac surgeons in his province. When he arrives at his office that day, the phone is ringing off the hook and reporters are calling from all the local media. He has trouble getting any work done that week, because he is bombarded by phone, fax and email messages from patients and their families requesting that he operate on them. He wonders what he did to deserve all the adulation.

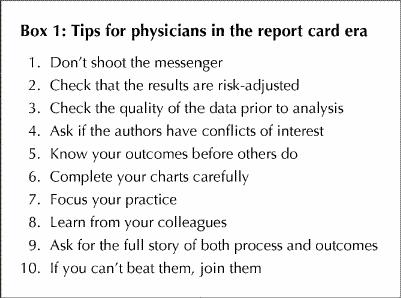

In this article, we discuss the topic of health care report cards and describe a number of strategies with which clinicians and hospitals in Canada can achieve positive results in the report card era. As clinical researchers affiliated with the Institute for Clinical Evaluative Sciences (ICES) in Toronto, who have been active in the development of health care report cards, we offer 10 tips to practising clinicians on how best to interpret and respond to the clinical report card era. These tips are intended to be practical (Box 1); they are not intended to be substitutes for other insights into clinical judgement that are covered elsewhere in this series.

Box 1.

1. Don't shoot the messenger

As far back as the 1920s, Dr. Ernest Codman of the Massachusetts General Hospital in Boston advocated the “end results” idea that surgeons should be accountable for their outcomes and that surgical results should be publicly disseminated. Dr. Codman's ideas were not popular with his colleagues, and he was eventually forced to leave the hospital. He then set up his own hospital, where he pursued his ideas, largely in isolation from the rest of the medical profession at that time. His unpopular ideas led him to disgrace, notoriety and near financial ruin.1 From the beginning, then, the idea of health care report cards has not always been well received by the medical community.

A more recent example of a health care report card is the New York State Cardiac Surgery Report. In 1991, newspapers published information on the mortality rates of patients of all cardiac surgeons performing coronary artery bypass graft (CABG) surgery in the state of New York. Although the Department of Health of New York State had initially refused to release the information, a journalist successfully sued the department for the data, with a judge agreeing that the “public had a right to know this information.” The publication of these data has been associated with a substantial decline in the mortality rate following CABG surgery in New York,2 although critics maintain that this decline reflects “upcoding” of the data and avoidance of high-risk patients.3

In Canada, many provinces are developing performance indicators and report cards for various aspects of the health care system.4 In Ontario, the ICES has published a number of practice atlases documenting substantial regional and interhospital variations in the rates and outcomes of several surgical and medical conditions.5,6,7 The Canadian Institute for Health Information (CIHI) has also published a national report card comparing the delivery of medical care among 63 communities throughout Canada, portions of which were featured in Maclean's, a national Canadian magazine.8 Given the intense interest in the development of performance reports on the part of multiple stakeholders, including senior policy-makers, the media and the public, report cards are almost certainly here to stay in Canada.

2. Check that the results are risk-adjusted

Fair outcome comparisons require that the analysis be adjusted for severity of illness and comorbidity. Otherwise, those who treated sicker patients might be unfairly penalized. Several methods are available for conducting these adjustments, but in general the principle is the same. Namely, data are obtained about important prognostic factors that influence the outcome of interest, and then statistical techniques are used to adjust for imbalances to “level the playing field.” The mortality rate after adjustment for case-mix differences is usually called the risk-adjusted mortality rate.
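The adjustment described above can be illustrated with a minimal sketch of one common approach, indirect standardization, in which a provider's ratio of observed to expected deaths is multiplied by the overall mortality rate. The sketch assumes that each patient already has a predicted probability of death from some previously fitted risk model; the `Patient` fields, the function name and the example numbers are all hypothetical and are not taken from any report card discussed in this article.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    provider: str          # hypothetical provider label
    died: bool             # observed outcome
    predicted_risk: float  # assumed output of a separate risk model (0 < p < 1)


def risk_adjusted_mortality(patients):
    """Return observed, expected and risk-adjusted rates for each provider.

    risk-adjusted rate = (observed deaths / expected deaths) * overall rate
    """
    overall_rate = sum(p.died for p in patients) / len(patients)

    # Accumulate observed deaths, expected deaths and patient counts.
    totals = {}
    for p in patients:
        observed, expected, count = totals.get(p.provider, (0, 0.0, 0))
        totals[p.provider] = (
            observed + int(p.died),
            expected + p.predicted_risk,
            count + 1,
        )

    results = {}
    for provider, (observed, expected, count) in totals.items():
        results[provider] = {
            "observed_rate": observed / count,
            "expected_rate": expected / count,
            "risk_adjusted_rate": (observed / expected) * overall_rate,
        }
    return results


# Illustrative data: surgeon A operates on sicker patients than surgeon B.
example = (
    [Patient("A", i < 3, 0.20) for i in range(20)]    # 3 deaths, 4 expected
    + [Patient("B", i < 2, 0.05) for i in range(20)]  # 2 deaths, 1 expected
)
for name, rates in risk_adjusted_mortality(example).items():
    print(name, rates)
```

In this made-up example, surgeon A has the higher crude mortality rate (15% v. 10%) but the lower risk-adjusted rate (about 9% v. 25%), because A's patients were expected to do worse. The result is only as good as the risk model that supplies the predicted probabilities.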

Providers whose outcomes are statistically more extreme than expected are deemed “outliers.” Although there is uncertainty regarding the optimal statistical method for defining an outlier,9 it is generally assumed that differences in outcomes for outliers are attributable to differences in quality of care. Overall, this stream of logic is most compelling if the outcome is salient, the differences are large and there are only a few outliers, each of whom is clearly different from the main group of providers.
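As noted, there is no agreed optimal method for defining an outlier, so the following is only a sketch of one simple possibility: comparing a provider's observed deaths with the number expected, using a normal approximation and a conventional 1.96 threshold. The function names, the threshold and the example figures are assumptions made for illustration; real report cards may use quite different methods.

```python
import math


def outlier_z_score(observed_deaths, predicted_risks):
    """Standardized difference between observed and expected deaths.

    Treats each patient's death as an independent event with the given
    predicted probability (an assumption), so the variance of the total
    is the sum of p * (1 - p).
    """
    expected = sum(predicted_risks)
    variance = sum(p * (1 - p) for p in predicted_risks)
    if variance == 0:
        raise ValueError("predicted risks must not all be 0 or 1")
    return (observed_deaths - expected) / math.sqrt(variance)


def classify(z, threshold=1.96):
    """Label a provider using a two-sided threshold (1.96 is roughly 95%)."""
    if z > threshold:
        return "higher than expected"
    if z < -threshold:
        return "lower than expected"
    return "within expected range"


# Illustrative provider: 200 patients, each with a 5% predicted risk.
risks = [0.05] * 200
for deaths in (2, 12, 18):
    print(deaths, classify(outlier_z_score(deaths, risks)))
```

With many providers, a few will cross any fixed threshold by chance alone, which is one reason the logic is most persuasive when outliers are few and clearly separated from the main group.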

3. Check the quality of the data prior to analysis

Many report cards are drawn from administrative databases rather than clinical registries. For example, the CIHI hospital discharge database has been used for several report cards in Canada. The CIHI database covers hospital discharges in 8 of the 10 Canadian provinces (all except Quebec and Manitoba). It contains information about patient demographics, the primary admission diagnosis, up to 15 secondary diagnoses, in-hospital procedures and in-hospital mortality. It is therefore a very rich source of information. Audits of the data elements in the CIHI database have suggested that they are very accurate for patient demographics and mortality, moderately accurate for primary diagnoses and major procedures, and least accurate for comorbidities, complications or other subtleties.10

Most researchers will attempt to validate the accuracy of the data that they are using prior to its inclusion in a report card, but they also welcome external help. For example, prior to the release of the ICES Cardiovascular Health and Services in Ontario atlas,7 our team of researchers at the ICES gave representatives of all hospitals in Ontario an opportunity to check that the information about patients with acute myocardial infarction at their hospital had been accurately coded and that any miscoded cases were excluded from the analysis. This allowed us to improve the quality of the data analyzed and also allowed hospital staff an opportunity to validate the information. Hospital staff were also given their final results, prior to their public release, so that they had time to prepare for media inquiries.

4. Ask if the authors have conflicts of interest

In general, the development of a health care report card should be conducted by independent investigators rather than by one of the individuals or hospitals being assessed. Otherwise, there are opportunities for temptation or accusations of bias on the part of those being studied. For example, it would be potentially misleading if one hospital were to issue a report indicating that their patients had the best mortality rate for a certain condition, if they controlled the data sources and the statistical methodology in the report. Report cards have the greatest credibility if they are developed by those without direct conflicts of interest.

A more subtle potential source of bias lies in the source of the outcomes being assessed. For example, research has shown that postoperative stroke rates after carotid endarterectomy are lower in studies in which surgeons report their own results compared with studies in which neurologists assess the incidence of postoperative stroke.11 Although this may be a reflection of the best surgeons reporting their results, the appearance of bias decreases the credibility of a report card based on a surgeon's assessment.

5. Know your outcomes before others do

Clinicians should monitor their own outcomes where possible, far ahead of other people. As is the case for report cards for students in academic training, the final marks should be no big surprise to any individual. Clinicians should also be aware of established norms in their particular field regarding acceptable outcome rates. For example, several surgical and medical specialties have published guidelines outlining recommended, minimally acceptable volume and outcome standards for several common procedures.12,13

Clinicians who wish to monitor their outcomes (such as the mortality rate for a particular diagnosis) can do so in one of several ways. For example, they could contact their hospital's medical records department and ask to review the data for all of the patients who were admitted with a particular condition over the past year for whom they were responsible. They could then request a profile of basic data for their patients, such as length of stay or their mortality rate. An alternative to using hospital discharge databases would be to participate in a clinical registry. For example, cardiovascular surgeons in Ontario have developed a common database that allows them to track information about their patients' clinical condition prior to surgery, as well as their postoperative outcomes.14
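For clinicians who obtain such a list of their own patients, the basic profile can be computed in a few lines. The sketch below assumes a hypothetical record layout (admission date, discharge date and in-hospital death); actual extracts from a medical records department will have their own field names and formats.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean, median


@dataclass
class Discharge:
    admitted: date
    discharged: date
    died_in_hospital: bool


def outcome_profile(records):
    """Summarize length of stay and in-hospital mortality for one clinician."""
    if not records:
        raise ValueError("at least one discharge record is required")
    stays = [(r.discharged - r.admitted).days for r in records]
    deaths = sum(r.died_in_hospital for r in records)
    return {
        "patients": len(records),
        "mean_length_of_stay_days": mean(stays),
        "median_length_of_stay_days": median(stays),
        "in_hospital_mortality_rate": deaths / len(records),
    }


# Illustrative records only; not real patient data.
my_patients = [
    Discharge(date(2001, 1, 1), date(2001, 1, 4), False),
    Discharge(date(2001, 2, 10), date(2001, 2, 15), False),
    Discharge(date(2001, 3, 1), date(2001, 3, 11), True),
    Discharge(date(2001, 4, 20), date(2001, 4, 26), False),
]
print(outcome_profile(my_patients))
```

A crude profile of this kind is not risk-adjusted, so it is best read alongside published norms and with the case mix of the practice in mind.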

For primary care physicians, we acknowledge that it may be much more difficult to monitor outcomes, because adverse events are very rare in the office setting, and there are less well-established performance standards. Nevertheless, there is some pilot work being done in this area (e.g., patient satisfaction ratings, vaccination rates), and it is likely that some standardized performance measurement tools will be developed in the future.

6. Complete your charts carefully

Researchers often rely on administrative databases or on other data available through chart review. Consequently, clinicians should complete their hospital discharge abstracts carefully to ensure that all admission diagnoses, comorbidities and complications are accurately and completely coded both on the discharge form and in the medical record. If the information is not recorded in the chart, the patient will be assumed not to have any comorbidities, which could adversely affect the clinician when patient comorbidities are statistically adjusted for in an outcomes analysis. Clinicians may also wish to conduct internal self-audits of their hospital's discharge database to ensure that the medical records personnel are recording all of the information in the chart accurately and completely.

7. Focus your practice

A large body of research has shown a strong relationship between the volume of many procedures and their outcomes.15,16 In general, practice makes perfect. For example, cancer surgery mortality rates are much lower in high-volume regional referral centres than in small community hospitals.17 Some clinicians still argue that these differences occur because low-volume providers operate on sicker patients, yet sophisticated analyses do not support this claim. Providers in remote geographical areas may be required to do a low volume of many procedures in order to maintain reasonable access for residents of a small community. However, many physicians who do a low volume of procedures also exist in large metropolitan areas. Clinicians should consider concentrating on doing a few procedures well rather than many procedures infrequently. An awareness of recommended volume standards for procedures may help.

8. Learn from your colleagues

A common initial response among providers whose institutions have fared poorly in an outcomes analysis is to question the validity of the data. Critics may state that the data are inaccurately coded, or that the risk adjustment is inadequate. Although this may be true in some isolated cases, a more useful response is to view a poor performance on a report card as a valuable opportunity to improve care. Clinicians should consult colleagues who have better results to find out how their treatment approaches differ, with the aim of learning strategies for improving their outcomes. They should also develop a formal quality improvement plan and continually monitor their progress before future report cards are released.

9. Ask for the full story of both process and outcomes

Many reports of outcomes have focused solely on the outcomes of care and have offered little direct information on the factors or processes that contributed to the differences observed in outcomes. Wherever possible, clinicians should expect health care report cards to provide some information on the processes of care that may be correlated with better outcomes. For example, it is more useful for hospitals to know that their β-blocker prescription rates for acute myocardial infarction are low than to know that their post–myocardial infarction mortality rate is high, because they can immediately develop strategies to increase their β-blocker prescription rates. It is much more difficult to know where to start improving quality when hospitals are just told that their mortality rates are high, with no other information.

10. If you can't beat them, join them

More clinicians need to become involved in report card development. If more members of the medical profession do not become involved, the development of health care report cards may be dominated by individuals with less clinical insight, and misleading conclusions are more likely to be drawn. Similarly, it is also important that clinicians who are interested in developing report cards do so while working with colleagues trained in statistics and epidemiology. Scientists trained in these disciplines can often offer important methodological advice and can ensure the statistical robustness of any analysis.

Articles to date in this series

Redelmeier DA, Ferris LE, Tu JV, Hux JE, Schull MJ. Problems for clinical judgement: introducing cognitive psychology as one more basic science. CMAJ 2001;164(3):358-60.

Redelmeier DA, Schull MJ, Hux JE, Tu JV, Ferris LE. Problems for clinical judgement: 1. Eliciting an insightful history of present illness. CMAJ 2001;164(5):647-51.

Redelmeier DA, Tu JV, Schull MJ, Ferris LE, Hux JE. Problems for clinical judgement: 2. Obtaining a reliable past medical history. CMAJ 2001;164(6):809-13.

Schull MJ, Ferris LE, Tu JV, Hux JE, Redelmeier DA. Problems for clinical judgement: 3. Thinking clearly in an emergency. CMAJ 2001;164(8):1170-5.

Footnotes

This article has been peer reviewed.

Acknowledgements: Dr. Tu is supported by a Canada Research Chair in Health Services Research. This article was supported in part by an operating grant from the Canadian Institutes of Health Research.

Competing interests: None declared.

Reprint requests to: Dr. Jack V. Tu, Institute for Clinical Evaluative Sciences, Rm. G106, 2075 Bayview Ave., Toronto ON M4N 3M5; fax 416 480-6048

References

- 1. Donabedian A. The end results of health care: Ernest Codman's contribution to quality assessment and beyond. Milbank Q 1989;67:233-56.

- 2. Hannan EL, Kilburn H Jr, Racz M, Shields E, Chassin MR. Improving the outcomes of coronary artery bypass surgery in New York State. JAMA 1994;271:761-6.

- 3. Green J, Wintfeld N. Report cards on cardiac surgeons: assessing New York State's approach. N Engl J Med 1995;332:1229-32.

- 4. Baker GR, Brooks N, Anderson G, Brown A, McKillop I, Murray M, et al. Healthcare performance measurement in Canada: Who's doing what? Hosp Q 1998;2:22-6.

- 5. Naylor CD, Anderson GM, Goel V. Patterns of health care in Ontario. The ICES practice atlas. vol. 1. Ottawa: Canadian Medical Association; 1994.

- 6. Goel V, Williams JI, Anderson GM, Blackstien-Hirsch P, Fooks C, Naylor CD, editors. Patterns of health care in Ontario. The ICES practice atlas. 2nd ed. Ottawa: Canadian Medical Association; 1996. p. 85-9.

- 7. Naylor CD, Slaughter P, editors. Cardiovascular health and services in Ontario: an ICES atlas. 1st ed. Toronto: ICES; 1999. p. 199-238.

- 8. Health report: the annual ranking. The best health care. Maclean's 2000;113(23):18-33.

- 9. Brophy JM, Joseph L. Practice variations, chance and quality of care [editorial]. CMAJ 1998;159(8):949-52. Available: www.cma.ca/cmaj/vol-159/issue-8/0949.htm

- 10. Williams JI, Young W. Appendix 1. A summary of studies on the quality of health care administrative databases in Canada. In: Goel V, Williams JI, Anderson GM, Blackstien-Hirsch P, Fooks C, Naylor CD, editors. Patterns of health care in Ontario. The ICES practice atlas. 2nd ed. Ottawa: Canadian Medical Association; 1996. p. 339-45.

- 11. Rothwell P, Warlow C. Is self-audit reliable? [letter]. Lancet 1995;346:1623.

- 12. ACC/AHA Task Force Report. Guidelines and indications for coronary artery bypass surgery: a report of the American College of Cardiology/American Heart Association Task Force on assessment of diagnostic and therapeutic cardiovascular procedures (Subcommittee on Coronary Artery Bypass Graft Surgery). J Am Coll Cardiol 1991;17:543.

- 13. Beebe HG, Clagett GP, DeWeese JA, Moore WS, Robertson JT, Sandok B, et al. Assessing risk associated with carotid endarterectomy. A statement for health professionals by an Ad Hoc Committee on Carotid Surgery Standards of the Stroke Council, American Heart Association. Circulation 1989;79:472-3.

- 14. Tu JV, Naylor CD. Coronary artery bypass mortality rates in Ontario. A Canadian approach to quality assurance in cardiac surgery. Steering Committee of the Provincial Adult Cardiac Care Network of Ontario. Circulation 1996;94:2429-33.

- 15. Hannan EL, Siu AL, Kumar D, Kilburn H Jr, Chassin MR. The decline in coronary artery bypass graft surgery mortality in New York State: the role of surgeon volume. JAMA 1995;273:209-13.

- 16. Grumbach K, Anderson GM, Luft HS, Roos LL, Brook R. Regionalization of cardiac surgery in the United States and Canada: geographic access, choice, and outcomes. JAMA 1995;274:1282-8.

- 17. Begg CB, Cramer LD, Hoskins WJ, Brennan MF. Impact of hospital volume on operative mortality for major cancer surgery. JAMA 1998;280:1747-51.