Abstract

The rapid spread of coronavirus disease 2019 (COVID-19) has had a devastating impact on global public health. Medical imaging has emerged as a useful tool for diagnosing the disease. However, computed tomography (CT) diagnosis of COVID-19 requires extensive clinical experience from experts. Therefore, rapid and accurate segmentation and detection of COVID-19 lesions is essential. This paper proposes a simple yet efficient and general-purpose network, called Sequential Region Generation Network (SRGNet), to jointly detect and segment the lesion areas of COVID-19. SRGNet makes full use of the supervised segmentation information and outputs multi-scale segmentation predictions. From these predicted segmentation maps, high-quality lesion-area proposals can be generated, reducing the diagnosis cost. In turn, the detection results refine the segmentation map through a post-processing procedure, which further improves the segmentation accuracy. Extensive experiments on our newly constructed COVID-19 dataset validate the superiority of SRGNet over state-of-the-art methods.

Keywords: COVID-19, Segmentation, Detection, Context enhancement, Edge loss

1. Introduction

As a serious pandemic, coronavirus disease 2019 (COVID-19) [1], [2] has infected 56,898,415 people and caused 1,360,381 deaths, according to the Center for Systems Science and Engineering (CSSE) at Johns Hopkins University (JHU) [3] (updated 19 November 2020). COVID-19 is caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), which spreads through respiratory droplets released by breathing, coughing, sneezing, and similar activities. Effective screening of infected patients is critical to the fight against COVID-19: 1) early diagnosis reduces the transmission of the virus because infected patients can be isolated early; 2) early treatment improves the chance of survival; and screening is also needed for confirmed patients to confirm that the virus has been cleared. One of the gold standards for diagnosing COVID-19 is polymerase chain reaction (PCR), which detects the ribonucleic acid (RNA) of SARS-CoV-2 in respiratory specimens, e.g., nasopharyngeal swabs, oropharyngeal swabs, and middle nasal turbinate swabs. However, PCR tests are in short supply, laborious, and time-consuming, and they require a complex manual process. In addition, PCR can only determine the presence or absence of COVID-19, whereas computed tomography (CT) can quantitatively analyze its progression.

Another way to screen for COVID-19 is radiographic examination of chest images, e.g., X-ray and CT images. Recent studies [4], [5], [6], [7], [8] have found that characteristic abnormalities exist in the chest radiographic images of infected patients. Some researchers [9], [10] suggest that chest CT should be considered the primary screening tool for COVID-19 in endemic areas. Compared with X-ray imaging, CT is widely used for screening because it provides a three-dimensional view of the lung. Recent studies [11], [12] have shown that typical signs of infection can be observed in CT images, e.g., ground-glass opacity (GGO) in the early stage and lung consolidation in the late stage. Therefore, a qualitative assessment of the infection and its longitudinal changes in CT scans can provide useful and essential information for combating COVID-19. However, manually delineating lung infections is tedious and time-consuming. Moreover, radiologists' annotation of infections is highly subjective and influenced by personal bias and clinical experience. Computer-aided systems are therefore needed to provide automatic and accurate radiographic interpretation in less time.

Recently, deep learning-based technology [13] has been widely used in COVID-19 image processing because of its powerful image feature extraction. COVID-Net [8] was proposed to detect COVID-19 from chest X-ray images. Wang et al. [14] adopted a deep learning method to extract the graphical features of COVID-19 for a clinical diagnostic test. Joaquin [15] applied transfer learning with ResNet50 to detect COVID-19. Butt et al. [16] developed a location-attention oriented model to calculate the infection probability of COVID-19. Tang et al. [17] presented a random forest method to assess severity based on quantitative features. Shi et al. [18] utilized an infection Size Aware Random Forest method (iSARF) to classify COVID-19. Shan et al. [19] devised a deep learning-based method to segment and quantify infection regions from CT scans. Although many deep learning-based methods have been proposed to assist in diagnosing COVID-19, few publications address both the segmentation and the detection of COVID-19 lesions in CT scans.

We aim to apply deep convolutional neural networks (CNNs) to the segmentation [20], [21] and detection of COVID-19 lesions in chest CT images. Fig. 1 shows COVID-19 infections in chest CT images, which contain ground-glass opacities in the early stage, pulmonary consolidation in the late stage, and mixed areas of both. A qualitative evaluation of the infection areas and their longitudinal changes in CT scans can provide useful information for fighting COVID-19, e.g., lesion location, lesion size, and disease severity. However, owing to the unique structure of infection regions, the boundaries of COVID-19 lesions are difficult to distinguish, which makes accurate segmentation difficult. In addition, manual delineation of COVID-19 infections is tedious, time-consuming, and often influenced by individual bias and clinical experience. During annotation, the boundaries of COVID-19 infected areas can be better visualized by adjusting the window width and window level, as shown in Fig. 1, which benefits the segmentation of COVID-19 lesions.

Fig. 1.

The chest CT images of three patients with COVID-19 pneumonia. The ground-glass opacities (GGOs) and pulmonary consolidation areas are highlighted in the top row. However, the infection regions in the bottom row are hard to distinguish with an inappropriate window width and window level. In this paper, we set the window width and window level to 1500 HU and −500 HU, respectively, as confirmed by an expert.

In this work, we present a lightweight yet accurate framework, termed Sequential Region Generation Network (SRGNet), which jointly detects and segments COVID-19 infections in chest CT images. SRGNet aims to fully mine the supervisory information of COVID-19 infection images. We first design a context enhancement module to increase the contextual information passed between the encoder and decoder. Then, a novel edge loss is designed to further augment the contextual information of the image. Bounding boxes are then generated from the segmentation maps across different layers, which reduces the computation of the detection task and mitigates the class-imbalance problem in detecting COVID-19. Lastly, the predicted boxes are fed forward to refine the segmentation results via post-processing.

To summarize, the main contributions of this paper are as follows: 1) We propose a new framework, termed SRGNet, to jointly detect and segment COVID-19 infections in CT images in a mutually reinforcing manner. 2) A novel edge auxiliary loss function is designed to enhance the spatial constraints of features. 3) We enhance the contextual information with context enhancement modules applied from low-level layers to high-level layers.

2. Related works

In this section, we introduce three categories of methods related to our work: semantic segmentation, object detection, and multi-task learning.

Semantic Segmentation: A fully convolutional network (FCN) was proposed in [26] for semantic segmentation, which classifies each pixel of the image. Following FCN, [27] introduced Atrous Spatial Pyramid Pooling (ASPP) with different dilation rates and dilated convolution, which enlarges the receptive field with only a small increase in parameters. Zhao et al. [28] proposed a pyramid pooling module that aggregates information at different scales from different subregions, improving the ability to capture contextual information. Badrinarayanan et al. [29] introduced a decoder network followed by a pixel-wise classification layer; the decoder's primary function is to restore the feature map to the full input resolution. Another standard model is U-Net [30], which contains a contracting path to capture context and a symmetric expanding path that enables precise localization. Furthermore, [31] extended U-Net to leverage information in multi-modal data: each available image modality is processed separately to exploit its unique information instead of combining the modalities at the input. Song [32] proposed a novel generative model-based framework for CT image segmentation together with a novel pixel-region loss function to handle the data imbalance problem. Fan et al. [33] proposed a lung infection segmentation network that automatically identifies infected regions in chest CT scans, using implicit attention and explicit edge attention to model the boundaries and enhance the representations. Boldsen et al. [34] utilized a machine learning algorithm with decision trees and spatial regularization to outline lesions. Abulnaga and Rubin [35] introduced the pyramid pooling method to combine global and local contextual information for segmenting lesions in CT images. Zhang et al. [36] employed a 3D deep convolutional network to learn 3D contextual information efficiently. However, 3D convolutional networks are computationally intensive and time-consuming when predicting infection areas. Liu et al. [37] proposed a novel deep residual attention convolutional neural network to accurately and simultaneously segment and quantify ischemic stroke and white matter hyperintensity (WMH) lesions in MRI images. Wang et al. [38] introduced a hybrid loss function that pays more attention to lesion regions and high-level contextual consistency.

Object Detection: Object detection is a fundamental task in computer vision that locates and classifies objects in an image. Object detection methods can generally be divided into two categories: one-stage and two-stage methods. One-stage methods, e.g., YOLO [39], SSD [40], DSSD [41], and YOLO v2 [42], directly predict object classes and locations with a single network, and are therefore faster than two-stage methods. Two-stage methods first generate a set of candidate region proposals and then classify them with a convolutional neural network. Among them, R-CNN [43], Fast R-CNN [44], and Faster R-CNN [45] are the most representative frameworks. R-CNN uses selective search to generate region proposals and then feeds them into classification and regression networks. Fast R-CNN feeds the whole image into a convolutional network and uses an RoI pooling layer to extract a fixed-size feature vector for each region proposal, leading to faster speed and better accuracy. Faster R-CNN is a more flexible and robust framework that replaces selective search with a region proposal network (RPN). With the increasing public availability of CT data, many detection variants for CT images have been proposed. For example, [46] presents a threshold-based method to separate candidate nodules from other structures in lung CT images. Xie et al. [47] adjusted the Faster R-CNN structure with two region proposal networks and a deconvolutional layer to detect nodule candidates. Wang and Wong [8] leveraged long-range connections to reduce the computational complexity of COVID-19 detection.

Multi-task Learning: Recently, many multi-task learning frameworks for detection and segmentation have been proposed. For example, Brazil et al. [22] implemented a segmentation infusion network that jointly supervises semantic segmentation and object detection in the last encoder layer; the additional supervision helps guide the features in shared layers to become more sophisticated for the object detector, which suggests that multi-task learning can be effectively optimized for both tasks. Mao et al. [23] proposed a hyperlearner network that improves CNN-based detection by learning from extra features. He et al. [24] added a branch that predicts a segmentation mask in parallel with the existing branch for bounding box recognition. Dvornik et al. [25] jointly performed object detection and semantic segmentation in a single forward pass, so that the two tasks benefit each other in accuracy. These frameworks [22], [23], [24], [25] are compared in Fig. 2. Unlike previous works, SRGNet performs semantic segmentation at multiple layers of both the encoder and decoder, and region proposals for detection are generated directly from the segmentation maps. Meanwhile, each predicted segmentation map serves as a partial input to the next segmentation prediction, and the detected lesions refine the segmentation result in post-processing.

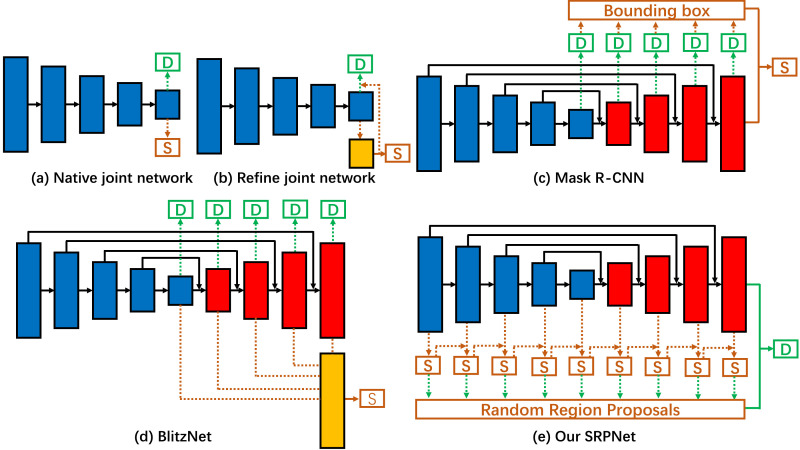

Fig. 2.

Different frameworks of joint object detection and semantic segmentation. (a) Both detection and segmentation are added to the last layer of the encoder [22]. (b) The segmentation branch is used to refine the detection branch [23]. (c) Each layer of the decoder detects objects at various scales and selects the ROI areas for segmentation [24]. (d) Each layer of the decoder detects objects, and the multi-scale features are combined for segmentation [25]. (e) Our proposed SRGNet, in which each layer of the encoder and decoder is used for segmentation, and region proposals for detection are generated directly from the segmentation maps. Meanwhile, each predicted segmentation map serves as a partial input to the next segmentation prediction. “S” and “D” denote segmentation and detection predictions, respectively.

3. The proposed method

The proposed method is a 2D patch-based deep learning framework that jointly detects and segments the lesion areas of COVID-19 in CT images. First, the network architecture is introduced in Section 3.1. Second, the context enhancement module is presented in Section 3.2; it enhances the context information of each pixel in the image feature through multiple convolutions to handle the coexistence of lesions of different sizes. Third, the loss functions for detection and segmentation are described in Section 3.3. Then, the random region proposal method is described in Section 3.4. Finally, a post-processing procedure is introduced in Section 3.5.

3.1. Model overview

Our key insight is that the structural information of the scene is explicitly useful for inferring lesions from a CT image, as lesions are closely associated with local structures and surface regions. Traditional deep learning-based methods, e.g., U-Net [30], suffer from unsatisfactory performance because they do not adequately exploit structural information, e.g., corners and edges.

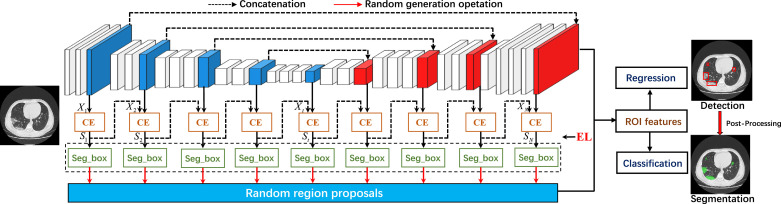

To address this, we design a hybrid model that fully explores the multi-scale structural information within a single image. An overview of the proposed SRGNet is shown in Fig. 3. The input of the network is a CT slice image, and our goal is to train a CNN that simultaneously locates and segments the COVID-19 lesion areas in this slice. We first produce a set of segmented features by applying a context enhancement module to the intermediate features, where each intermediate feature is the concatenation of the last layer of the corresponding block and the previous segmentation map. In this way, semantic information propagates through the second path of the network. Next, we further enhance the contextual information of the features with an edge auxiliary loss, which benefits both segmentation and detection. Then, we obtain bounding boxes directly from the sequence of segmentation maps, and a random region proposal method is used to train the detection network. Finally, a post-processing procedure refines the predicted segmentation results.

Fig. 3.

The proposed SRGNet. All the encoder and decoder blocks are used for segmentation, and the bounding boxes are generated directly from the segmentation maps. Meanwhile, each predicted segmentation map serves as a partial input to the next segmentation prediction. In this stage, we add context enhancement modules (CE) and an edge loss (EL) to enhance the contextual information of the features. Then, we increase the number of useful bounding boxes through random region proposals and extract the corresponding features from the last layer of the network. Finally, ROI features are resized directly from these features and used as input to train the detection network with a classification loss and a regression loss. During testing, the predicted bounding boxes are further used to refine the predicted segmentation results through post-processing.

3.2. Context enhancement

A key component of SRGNet is the Context Enhancement (CE) module, which enhances the contextual information of the intermediate features and outputs the segmented features.

CE enhances the local contextual information of each intermediate feature. Specifically, CE consists of a 3×3 convolution, a dilated convolution with dilation rate 2, and a depthwise separable convolution, which together enhance the contextual information of lesion areas. As shown in Fig. 4, a 3×3 convolution convolves each point with its 8 adjacent points, whereas a dilated convolution operates on adjacent points at larger intervals, which increases the receptive field of the network. With the same numbers of input and output channels, a 5×5 convolution requires about 2.78 times (25/9) the computation of a 3×3 convolution. We therefore embed a depthwise separable convolution in the module, which greatly reduces the computation of the CE module. For each block, the CE module takes the intermediate feature of that block as input and produces the output features of the 3×3 convolution, the dilated convolution, and the depthwise separable convolution. To further reduce the computation cost, we decrease the output channels of these three branches: the 3×3 convolution outputs half as many channels as the input feature, and the dilated and depthwise separable convolutions each output a quarter. Without loss of generality, we assume that each position is more relevant to its nearest positions than to distant ones; thus, we assign more output channels to the 3×3 convolution. Then, we concatenate the three outputs and increase the non-linearity with Leaky ReLU. The resulting enhanced contextual features are concatenated with the input of the CE module, so the feature after multi-scale local enhancement has twice as many channels as the input (the three branches contribute 1/2 + 1/4 + 1/4 = 1 times the input channels, plus the input itself). Finally, we apply a convolution to output the segmentation map with two channels.
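The channel split and the 2.78× figure above can be checked with a little arithmetic. The plain-Python sketch below assumes the CE input has a channel count divisible by 4, that each branch maps the input feature directly to its own output width, and that the depthwise separable branch is a 5×5 depthwise convolution followed by a 1×1 pointwise convolution; these assumptions and the helper names are illustrative, not taken from the paper's code.

```python
from dataclasses import dataclass


@dataclass
class CEChannelPlan:
    """Output widths of the CE branches for an input with c_in channels."""
    conv3x3: int       # 3x3 convolution branch: half of the input channels
    dilated: int       # dilated convolution branch (rate 2): a quarter
    dw_separable: int  # depthwise separable branch: a quarter
    output: int        # after concatenating the branches with the CE input


def plan_ce_channels(c_in: int) -> CEChannelPlan:
    if c_in % 4 != 0:
        raise ValueError("c_in must be divisible by 4 for the 1/2, 1/4, 1/4 split")
    conv3x3, dilated, dw = c_in // 2, c_in // 4, c_in // 4
    # The three branches sum to c_in channels; concatenating the input doubles it.
    return CEChannelPlan(conv3x3, dilated, dw, conv3x3 + dilated + dw + c_in)


def standard_conv_macs(k: int, c_in: int, c_out: int, h: int, w: int) -> int:
    """Multiply-accumulates of a k x k convolution (stride 1, 'same' padding)."""
    return k * k * c_in * c_out * h * w


def depthwise_separable_macs(k: int, c_in: int, c_out: int, h: int, w: int) -> int:
    """k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    return (k * k * c_in + c_in * c_out) * h * w


if __name__ == "__main__":
    print(plan_ce_channels(64))
    ratio = standard_conv_macs(5, 64, 16, 32, 32) / standard_conv_macs(3, 64, 16, 32, 32)
    print(f"5x5 vs 3x3 cost ratio: {ratio:.2f}")  # 25/9, printed as 2.78
```

For example, with a 64-channel input, `plan_ce_channels(64)` gives branch widths of 32, 16, and 16 and a 128-channel output, matching the twice-as-many-channels statement.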

Fig. 4.

The local enhancement module contains three convolutions: a 3×3 convolution, a dilated convolution with dilation rate 2, and a depthwise separable convolution.

3.3. Loss functions

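Semantic Segmentation. In addition to supervising the pixel-level labels of the lesion area, we add a multi-scale segmentation auxiliary loss to tune the contextual information of each segmentation map from the encoder and decoder modules. The $i$th stage uses the softmax cross-entropy loss and an edge loss, defined as:

$$\mathcal{L}_i = -\frac{1}{H W}\sum_{h=1}^{H}\sum_{w=1}^{W}\sum_{c=1}^{C} G_i(h,w,c)\,\log \sigma\big(P_i\big)(h,w,c) + \lambda\,\mathcal{L}_{\mathrm{edge}} \tag{1}$$

where $P_i$ denotes the predicted feature map of size $H \times W \times C$, $\sigma(\cdot)$ is the softmax over the channel dimension, $G_i$ denotes the corresponding one-hot ground truth, $C$ is the number of categories, $c$ is the category index, and $\lambda$ balances the softmax cross-entropy loss and the edge loss $\mathcal{L}_{\mathrm{edge}}$.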
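A minimal pure-Python sketch of the per-stage loss in Eq. (1) follows. It assumes the cross-entropy is averaged over all H×W pixels and that the edge term is computed separately and passed in as a number; the default `lam=0.02` follows the edge-loss weight given in the text, and the function names are hypothetical.

```python
import math
from typing import List

Logits = List[List[List[float]]]  # [H][W][C] raw class scores of one stage
Labels = List[List[int]]          # [H][W] ground-truth class indices


def softmax_cross_entropy(logits: Logits, labels: Labels) -> float:
    """Pixel-averaged softmax cross-entropy over an H x W map."""
    total, count = 0.0, 0
    for row_logits, row_labels in zip(logits, labels):
        for scores, y in zip(row_logits, row_labels):
            m = max(scores)  # subtract the max for numerical stability
            log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
            total += log_sum_exp - scores[y]  # equals -log softmax(scores)[y]
            count += 1
    return total / count


def stage_loss(logits: Logits, labels: Labels, edge_loss: float, lam: float = 0.02) -> float:
    """Eq. (1) sketch: cross-entropy plus lam times a precomputed edge-loss value."""
    return softmax_cross_entropy(logits, labels) + lam * edge_loss
```

With all-zero logits over two classes, every pixel contributes log 2, so `softmax_cross_entropy` returns about 0.693 regardless of the labels.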

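The edge loss (EL) is another crucial component of SRGNet, which enhances the spatial constraints of features. We adopt $\mathcal{L}_{\mathrm{edge}}$ as the auxiliary loss and set the balancing weight $\lambda$ in Eq. (1) to 0.02 to balance the pixel-level and spatial-level terms. $\mathcal{L}_{\mathrm{edge}}$ is defined on spatial edge maps, which are computed in the horizontal and vertical directions by introducing an offset parameter $\delta$. The edge maps are defined as:

$$E^{h}_{\delta}(i,j) = \big|\hat{Y}(i, j+\delta) - \hat{Y}(i, j)\big|, \qquad E^{v}_{\delta}(i,j) = \big|\hat{Y}(i+\delta, j) - \hat{Y}(i, j)\big| \tag{2}$$

where $i$ and $j$ denote the $i$th row and $j$th column of the matrix, and the superscripts $h$ and $v$ are short for horizontal and vertical, respectively. $\hat{Y}$ is a category map: $\hat{Y}^{P}$ and $\hat{Y}^{G}$ are generated directly from the prediction $P_i$ and the ground truth $G_i$ through an argmax operation over the channel dimension, and $\hat{Y}(i,j)$ denotes the category value at position $(i,j)$. In our scenario, this setting captures edges in the horizontal and vertical directions, which yield high activations over multiple offsets. The final loss is computed from the predicted and ground-truth edge maps with a normalized loss.

Based on these edge maps, we minimize the difference between the edge maps of the prediction (i.e., $E^{h,P}_{\delta}$ and $E^{v,P}_{\delta}$) and those of the ground truth (i.e., $E^{h,G}_{\delta}$ and $E^{v,G}_{\delta}$):

$$\mathcal{L}_{\mathrm{edge}} = \sum_{\delta=1}^{K} w_{\delta}\left(\frac{1}{N^{h}_{\delta}}\big\|E^{h,P}_{\delta}-E^{h,G}_{\delta}\big\|_{1} + \frac{1}{N^{v}_{\delta}}\big\|E^{v,P}_{\delta}-E^{v,G}_{\delta}\big\|_{1}\right) \tag{3}$$

where $N^{h}_{\delta}$ and $N^{v}_{\delta}$ are the numbers of pixels in the horizontal and vertical edge maps, respectively. In our experiments, $K$ is set to 3, and $w_1$, $w_2$, and $w_3$ are set to 2, 1, and 0.5, respectively.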
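The edge maps of Eq. (2) and the weighted, normalized loss of Eq. (3) can be sketched on small integer category maps as below. This is an illustration under stated assumptions: edge values are absolute differences of category values (for a two-class map these are simply 0 or 1), the normalized loss is a per-map mean absolute difference, and offsets δ = 1, 2, 3 receive weights 2, 1, and 0.5. Because argmax is not differentiable, the sketch only evaluates the loss value and does not show how gradients are obtained during training.

```python
from typing import List, Sequence, Tuple

Grid = List[List[int]]  # H x W map of category values (or edge values)


def argmax_map(scores: List[List[List[float]]]) -> Grid:
    """Collapse an H x W x C score map to category values via argmax over channels."""
    return [[max(range(len(p)), key=p.__getitem__) for p in row] for row in scores]


def edge_maps(cat: Grid, delta: int) -> Tuple[Grid, Grid]:
    """Horizontal and vertical edge maps: |category difference| at offset delta."""
    h, w = len(cat), len(cat[0])
    horiz = [[abs(cat[i][j + delta] - cat[i][j]) for j in range(w - delta)] for i in range(h)]
    vert = [[abs(cat[i + delta][j] - cat[i][j]) for j in range(w)] for i in range(h - delta)]
    return horiz, vert


def _mean_abs_diff(a: Grid, b: Grid) -> float:
    n = sum(len(row) for row in a)  # number of pixels in this edge map
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n


def edge_loss(pred_cat: Grid, gt_cat: Grid, weights: Sequence[float] = (2.0, 1.0, 0.5)) -> float:
    """Eq. (3) sketch: sum over delta = 1..K of w_delta * (horizontal + vertical terms)."""
    k = len(weights)
    if len(pred_cat) <= k or len(pred_cat[0]) <= k:
        raise ValueError("both map dimensions must exceed the largest offset K")
    loss = 0.0
    for delta, w_delta in enumerate(weights, start=1):
        ph, pv = edge_maps(pred_cat, delta)
        gh, gv = edge_maps(gt_cat, delta)
        loss += w_delta * (_mean_abs_diff(ph, gh) + _mean_abs_diff(pv, gv))
    return loss
```

In use, `argmax_map` would be applied to the stage prediction and to the one-hot ground truth, and the two resulting category maps passed to `edge_loss`.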

The total segmentation loss over all stages is a weighted sum of the stage losses $\mathcal{L}_i$ defined in Eq. (1):

$$\mathcal{L}_{\mathrm{seg}} = \sum_{i=1}^{S} \alpha_i\,\mathcal{L}_i \tag{4}$$

Here, $S$ is the number of stages, and the hyperparameters $\alpha_i$ balance the main segmentation loss and the auxiliary losses; $\alpha_i$ gradually increases as $i$ becomes larger. More details are discussed in the ablation study.
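Eq. (4) is a plain weighted sum. The sketch below makes the convention that the weights grow with the stage index explicit by rejecting decreasing weights; that check and any concrete weight values are assumptions, since the paper's α values are not given in this text.

```python
from typing import Sequence


def total_segmentation_loss(stage_losses: Sequence[float], alphas: Sequence[float]) -> float:
    """Eq. (4) sketch: sum_i alpha_i * L_i, with stages ordered from first to last."""
    if len(stage_losses) != len(alphas):
        raise ValueError("need exactly one weight per stage")
    # Assumed convention: weights do not decrease with the stage index.
    if any(later < earlier for earlier, later in zip(alphas, alphas[1:])):
        raise ValueError("alphas are expected to be non-decreasing")
    return sum(a * l for a, l in zip(alphas, stage_losses))
```

For instance, three stages with unit losses and hypothetical weights (0.25, 0.5, 1.0) give a total of 1.75.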

Object Detection. To train the region proposal network of the detection task, we assign a binary class label to each anchor, computed from the RoI features of the last layer. We assign positive labels to anchors whose Intersection-over-Union (IoU) with a ground-truth box is higher than 0.7 and negative labels to anchors whose IoU is lower than 0.3. Anchors that are neither positive nor negative do not contribute to training. With these considerations, we minimize the following objective function:

$$\mathcal{L}_{\mathrm{det}}\big(\{p_k\},\{t_k\}\big) = \frac{1}{N_{\mathrm{cls}}}\sum_{k} \mathcal{L}_{\mathrm{cls}}\big(p_k, p_k^{*}\big) + \lambda_{\mathrm{det}}\,\frac{1}{N_{\mathrm{reg}}}\sum_{k} p_k^{*}\,\mathcal{L}_{\mathrm{reg}}\big(t_k, t_k^{*}\big) \tag{5}$$

where $k$ is the anchor index, and $p_k$ and $p_k^{*}$ denote the predicted probability and the ground-truth label of anchor $k$, respectively; $p_k^{*}$ equals 1 if the anchor is positive and 0 otherwise. $t_k$ is the 4-parameter coordinate vector of a predicted bounding box, and $t_k^{*}$ is that of the ground-truth bounding box associated with a positive anchor. The classification loss $\mathcal{L}_{\mathrm{cls}}$ is the two-class cross-entropy loss, and the regression loss $\mathcal{L}_{\mathrm{reg}}$ is the smooth $L_1$ loss, as defined in [44]. The term $p_k^{*}\mathcal{L}_{\mathrm{reg}}$ means that the regression loss is activated only for positive anchors ($p_k^{*}=1$). The two terms are normalized by $N_{\mathrm{cls}}$ and $N_{\mathrm{reg}}$ and weighted by a balancing parameter $\lambda_{\mathrm{det}}$, which is set to 10. Note that the segmentation loss and the detection loss are trained separately: we first use the segmentation loss to learn the segmentation task, and once the network converges, we freeze the parameters of the segmentation task and use the detection loss to learn the parameters of the detection task.
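The anchor-labeling rule (IoU above 0.7 positive, below 0.3 negative, otherwise ignored) and Eq. (5) can be sketched in pure Python as follows. Boxes are assumed to be (x1, y1, x2, y2) corner tuples, and `n_reg` defaults to the number of labeled anchors, which is an assumption (Faster R-CNN normalizes the regression term by the number of anchor locations); all names are illustrative.

```python
import math
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_anchor(anchor: Box, gt_boxes: Sequence[Box], pos: float = 0.7, neg: float = 0.3) -> Optional[int]:
    """1 = positive, 0 = negative, None = ignored during training."""
    best = max((iou(anchor, g) for g in gt_boxes), default=0.0)
    if best > pos:
        return 1
    if best < neg:
        return 0
    return None


def smooth_l1(x: float) -> float:
    return 0.5 * x * x if abs(x) < 1.0 else abs(x) - 0.5


def detection_loss(p: Sequence[float], labels: Sequence[Optional[int]],
                   t: Sequence[Sequence[float]], t_star: Sequence[Sequence[float]],
                   lam: float = 10.0, n_reg: Optional[int] = None) -> float:
    """Eq. (5) sketch over labeled anchors; regression counts only for positives."""
    idx = [k for k, y in enumerate(labels) if y is not None]
    if not idx:
        return 0.0
    n_cls = len(idx)
    n_reg = n_reg or n_cls  # assumption: normalize by the number of labeled anchors
    eps = 1e-12  # avoid log(0)
    cls = -sum(math.log(p[k] + eps) if labels[k] == 1 else math.log(1.0 - p[k] + eps)
               for k in idx) / n_cls
    reg = sum(sum(smooth_l1(a - b) for a, b in zip(t[k], t_star[k]))
              for k in idx if labels[k] == 1) / n_reg
    return cls + lam * reg
```

With `lam=10.0`, a single unit error in one coordinate of a positive anchor adds 10 × 0.5 / `n_reg` to the loss.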

3.4. Random region proposals

The second key component of SRGNet is the random region proposal method, shown in Fig. 5. Bounding boxes are extracted directly from the segmented image: each module of the network outputs a segmentation map, and the bounding boxes are obtained directly from the segmented lesions, as follows:

$$B_i = \operatorname{Rect}(S_i) = \big\{(x_{ij},\, y_{ij},\, w_{ij},\, h_{ij})\big\}_{j=1}^{n_i} \tag{6}$$

where $S_i$ is the $i$th segmentation map, $j = 1, \dots, n_i$, and $n_i$ is the number of bounding boxes in the $i$th segmentation map. $\operatorname{Rect}(\cdot)$ denotes the rectangle operation, which returns the location of the bounding box of each lesion area. $x_{ij}$ and $y_{ij}$ denote the coordinates of the upper-left corner of the $j$th bounding box in the $i$th segmentation map, and $w_{ij}$ and $h_{ij}$ denote its width and height, respectively.
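One way to realize Rect(·) in Eq. (6) is to label connected lesion regions in a binary segmentation map and take the extent of each region. The sketch below assumes 4-connectivity and a 0/1 mask given as nested lists, and returns (x, y, w, h) tuples with (x, y) the upper-left corner; the paper does not specify the connectivity, and `rect` is a hypothetical name.

```python
from collections import deque
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h): upper-left corner, width, height


def rect(mask: List[List[int]]) -> List[Box]:
    """Return one bounding box per 4-connected lesion region of a binary mask."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] == 0 or seen[sy][sx]:
                continue
            # Breadth-first flood fill of the region that starts at (sy, sx).
            seen[sy][sx] = True
            queue = deque([(sy, sx)])
            y0, x0, y1, x1 = sy, sx, sy, sx
            while queue:
                y, x = queue.popleft()
                y0, x0, y1, x1 = min(y0, y), min(x0, x), max(y1, y), max(x1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((x0, y0, x1 - x0 + 1, y1 - y0 + 1))
    return boxes
```

Boxes are returned in the raster-scan order in which their regions are first encountered.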

Fig. 5.

Random region proposals. The left image shows the predicted segmentation result, and the middle image shows the bounding boxes generated directly from it. Finally, the random region proposal method randomly changes the coordinates, widths, and heights of the bounding boxes within a specific range to train the detection task (right image).

However, these bounding boxes are not sufficient for training. Therefore, we use the random region proposal (RRP) method to increase the number of bounding boxes:

$$\tilde{B}_i = \Phi(B_i) = \big\{(\tilde{x}_{ijk},\, \tilde{y}_{ijk},\, \tilde{w}_{ijk},\, \tilde{h}_{ijk}) \;\big|\; j = 1,\dots,n_i,\; k = 1,\dots,m\big\} \tag{7}$$

where $B_i$ is the set of $n_i$ bounding boxes obtained from the $i$th segmentation map, and $\Phi(\cdot)$ is the random region proposal function, which randomly changes the coordinates, heights, and widths of the bounding boxes within a certain range to train the detection model. In our experiments, the horizontal and vertical coordinates are randomly shifted within 10 pixels, and the widths and heights are randomly scaled by a factor between 0.8 and 1.2. $m$ indicates that each bounding box is expanded into $m$ bounding boxes, and $\tilde{x}_{ijk}$, $\tilde{y}_{ijk}$, $\tilde{w}_{ijk}$, and $\tilde{h}_{ijk}$ denote the location of the bounding boxes after expansion. Thus, for each segmentation map $S_i$, we obtain $n_i \times m$ bounding boxes. Finally, we use these bounding boxes as anchors, which effectively alleviates the class-imbalance problem when training the detection network.
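A sketch of Φ(·) in Eq. (7) using the stated ranges (shifts within ±10 pixels, scale factors in [0.8, 1.2]) is shown below. It assumes the shift applies to the upper-left corner, that shifts and scales are drawn independently and uniformly, and that boxes are not clipped to the image; `random_region_proposals` is a hypothetical name.

```python
import random
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h), (x, y) = upper-left corner


def random_region_proposals(boxes: List[Box], m: int, max_shift: float = 10.0,
                            scale_range: Tuple[float, float] = (0.8, 1.2),
                            rng: Optional[random.Random] = None) -> List[Box]:
    """Expand each box into m jittered boxes, so n input boxes yield n * m proposals."""
    rng = rng if rng is not None else random.Random()
    proposals = []
    for x, y, w, h in boxes:
        for _ in range(m):
            proposals.append((
                x + rng.uniform(-max_shift, max_shift),  # shift within +/- max_shift pixels
                y + rng.uniform(-max_shift, max_shift),
                w * rng.uniform(*scale_range),           # scale by a factor from scale_range
                h * rng.uniform(*scale_range),
            ))
    return proposals
```

Passing `rng=random.Random(seed)` makes the proposals reproducible, which is convenient when comparing training runs.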

3.5. Post-processing

In the test phase, we obtain bounding boxes directly from the last segmentation map. A number of anchor boxes are generated from these bounding boxes by specific offsets and scalings. These anchor boxes are regressed and classified by the detection network, and the three anchor boxes with the highest classification scores are used to calculate the overlap rates with the bounding boxes from segmentation. If the overlap rate is below a threshold, we regard the lesion in that bounding box as invalid.
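The test-time filtering step can be sketched as below. Overlap is measured as IoU, and `min_overlap=0.5` is only a placeholder because the threshold value is not given in this text; boxes are (x1, y1, x2, y2) tuples, and the function names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def keep_valid_lesions(seg_boxes: Sequence[Box], det_boxes: Sequence[Box],
                       det_scores: Sequence[float], top_k: int = 3,
                       min_overlap: float = 0.5) -> List[bool]:
    """Flag each segmentation-derived box as valid if it overlaps a top-k detection enough."""
    ranked = sorted(zip(det_scores, det_boxes), key=lambda sb: sb[0], reverse=True)
    top_boxes = [box for _, box in ranked[:top_k]]
    return [max((iou(s, d) for d in top_boxes), default=0.0) >= min_overlap for s in seg_boxes]
```

Lesions flagged `False` would then be erased from the segmentation map, which is one reading of how the detections refine the segmentation.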

4. Experimental results

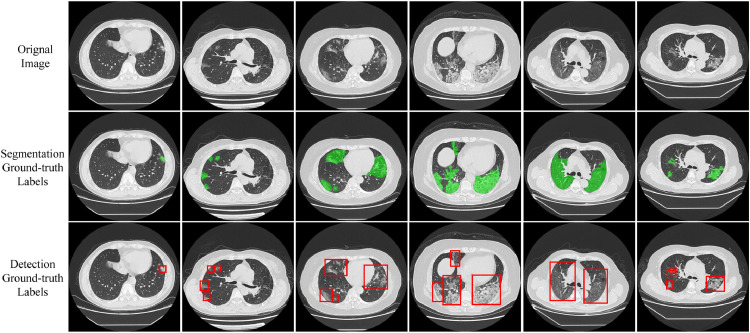

Dataset. Currently, few publicly available COVID-19 datasets have pixel-level annotations. We therefore constructed a new COVID-19 segmentation (COVID) dataset. In this section, we describe its data collection and professional annotation. Fig. 6 shows some examples from our COVID-19 dataset.

Fig. 6.

Examples from our COVID-19 dataset, showing CT scan images, segmentation labels, and detection labels from top to bottom. Note that the detection labels are derived directly from the corresponding segmentation labels.

A. Data collection. To protect the privacy of patients, we excluded their personal information from the dataset. We collected 313,167 CT slices from 438 patients, all of whom were positive for COVID-19 as confirmed by RT-PCR tests. All images were acquired at two Chinese hospitals between January and February 2020. The CT slices were reconstructed with a medium-sharp reconstruction algorithm, with slice thicknesses ranging from 0.5 mm to 1.25 mm. As in a previous study [48], we did not include patients with community-acquired pneumonia (CAP).

B. Data annotation. Although we collected sufficient COVID-19 chest CT data, accurately annotated labels are essential for deep learning. We therefore formed a team of three annotators with strong radiology backgrounds and proficient annotation skills to annotate the areas and boundaries of COVID-19 infections, and a senior radiologist with front-line clinical experience in COVID-19 checked the final annotations. For the segmentation task, we performed pixel-level labeling with the following strategy: 1. To save labeling time, the radiologists randomly selected CT scans of 50 patients and labeled the infected areas with pixel-level annotations. 2. To generate high-quality annotations, a senior radiologist refined the labels for cross-validation, and inaccurately labeled images were removed at this stage.

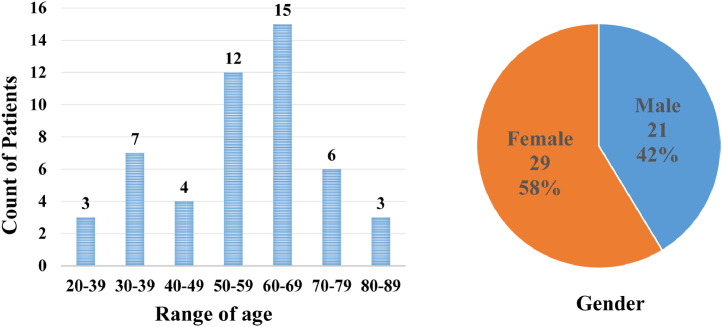

After the above annotation procedure, we obtained 12,604 pixel-level labeled CT slices from 50 COVID-19 patients with a resolution of . Note that our detection labels are derived directly from the corresponding segmentation labels, as shown in Fig. 6. Fig. 7 shows the age and gender distribution of the patients in our dataset. Among the labeled slices, 1,360 contain lesions. To reduce the effect of labeling errors, we only use these infected slices with lesion labels for training and testing. We randomly split the dataset into training and testing sets at the patient level, resulting in 1,024 CT slices for training and the remaining 336 for testing.
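A patient-level split keeps every slice of a patient on one side of the split, so no test patient is seen during training. The sketch below, with a hypothetical `test_fraction` parameter and a fixed seed, illustrates the idea; the paper's exact selection procedure is not described, so slice counts produced this way will not necessarily match 1,024 and 336.

```python
import random
from typing import List, Sequence, Tuple


def patient_level_split(slice_patient_ids: Sequence[str], test_fraction: float,
                        seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train, test) slice indices with no patient shared between the two sets."""
    patients = sorted(set(slice_patient_ids))  # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(patients)
    n_test = max(1, round(len(patients) * test_fraction))
    test_patients = set(patients[:n_test])
    train = [i for i, pid in enumerate(slice_patient_ids) if pid not in test_patients]
    test = [i for i, pid in enumerate(slice_patient_ids) if pid in test_patients]
    return train, test
```

Splitting by patient rather than by slice avoids leaking near-identical neighboring slices of the same patient into both sets.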

Fig. 7.

The age and gender distribution of patients in our dataset.

Training Detail. Our model is implemented with TensorFlow-GPU 1.8.0. All training and testing are performed on a single Tesla V100 GPU using CUDA 10.0 and cuDNN 7.5. We train the network for 120 epochs on the training set with a learning rate of 0.001, using stochastic gradient descent (SGD) with a momentum of 0.99 and a weight decay of 0.0005 in all experiments. The batch size is set to 12.
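For reference, one common form of SGD with momentum and weight decay is sketched below with the stated hyperparameters. It follows the non-Nesterov update of TensorFlow 1.x `tf.train.MomentumOptimizer` (accumulator = momentum × accumulator + gradient; variable -= learning rate × accumulator), under the assumption that weight decay enters the loss as an L2 penalty whose gradient is `weight_decay * w`; the paper does not state how the decay was applied, and the function name is hypothetical.

```python
from typing import List, Tuple


def sgd_momentum_step(params: List[float], grads: List[float], velocity: List[float],
                      lr: float = 0.001, momentum: float = 0.99,
                      weight_decay: float = 0.0005) -> Tuple[List[float], List[float]]:
    """One heavy-ball SGD step: v <- momentum * v + (g + wd * w); w <- w - lr * v."""
    new_velocity = [momentum * v + (g + weight_decay * w)
                    for w, g, v in zip(params, grads, velocity)]
    new_params = [w - lr * v for w, v in zip(params, new_velocity)]
    return new_params, new_velocity
```

With momentum 0.99, the accumulator averages gradients over roughly the last 100 steps, which is why a small learning rate such as 0.001 is paired with it.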

Limitation. Compared with 2D segmentation, 3D segmentation can better exploit spatial context in the third dimension. However, when doctors annotate COVID-19 data, some slices are inevitably mislabeled, and using these data for 3D segmentation may propagate incorrect annotations. Moreover, 3D convolution is much more computationally expensive than 2D convolution. Limited by GPU memory, a 3D network must be trained on local patches, so some context information is lost. Therefore, we focus on 2D segmentation and detection of COVID-19 lesions.

4.1. Comparison to state-of-the-art methods

We compare SRGNet with state-of-the-art methods on the COVID-19 dataset. Table 1 shows the comparison results with Faster-RCNN [45], Cascade-RCNN [49], Scratch-Faster-RCNN [50], U-Net [30], U-Net++ [51], Attention U-Net [52], Mask-RCNN [24], BlitzNet [25], Yolact [53], and Solo [54]. The code for these methods is publicly available, and we follow the authors' instructions to retrain the models on the COVID-19 dataset. Faster-RCNN [45], Cascade-RCNN [49], and Scratch-Faster-RCNN [50] only detect COVID-19 lesions and are compared in terms of average precision (AP) at IoU thresholds of 0, 0.2, and 0.5: a predicted bounding box is judged correct if its IoU with the corresponding ground-truth box exceeds the threshold. U-Net [30], U-Net++ [51], and Attention U-Net [52] are semantic segmentation methods, and all methods that output segmentation maps are compared in terms of pixel accuracy, IoU, and Dice [55]. Mask-RCNN [24], BlitzNet [25], Yolact [53], and Solo [54] jointly detect and segment COVID-19 lesions. In Table 1, “-” indicates that the method is not evaluated on that metric.
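The segmentation metrics and the per-box correctness test described above can be sketched as follows. Masks are nested 0/1 lists, boxes are (x1, y1, x2, y2) tuples, and scoring two empty masks as 1.0 is an assumed convention; computing full AP would additionally require ranking predictions by confidence, which this sketch omits.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]                   # H x W binary mask (1 = lesion)
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners


def mask_iou_dice(pred: Mask, gt: Mask) -> Tuple[float, float]:
    """IoU = intersection / union; Dice = 2 * intersection / (|pred| + |gt|)."""
    inter = sum(1 for rp, rg in zip(pred, gt) for p, g in zip(rp, rg) if p and g)
    n_pred = sum(1 for row in pred for p in row if p)
    n_gt = sum(1 for row in gt for g in row if g)
    union = n_pred + n_gt - inter
    iou = inter / union if union else 1.0  # assumed convention: two empty masks match
    dice = 2 * inter / (n_pred + n_gt) if (n_pred + n_gt) else 1.0
    return iou, dice


def box_iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def correct_at_thresholds(pred: Box, gt: Box,
                          thresholds: Sequence[float] = (0.0, 0.2, 0.5)) -> List[bool]:
    """A predicted box counts as correct at threshold t if its IoU with the ground truth exceeds t."""
    overlap = box_iou(pred, gt)
    return [overlap > t for t in thresholds]
```

At threshold 0, any overlap at all counts as a hit, which explains why the first AP column in Table 1 is the most lenient.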

Table 1.

Segmentation and detection comparison among our SRGNet and other state-of-the-art methods on the COVID-19 dataset.

| Method | Pixel Acc | IoU | Dice | AP@0 | AP@0.2 | AP@0.5 |
|---|---|---|---|---|---|---|
| Faster-RCNN [45] | - | - | - | 83.2 | 72.4 | 58.7 |
| Cascade-RCNN [49] | - | - | - | 87.1 | 75.6 | 65.2 |
| Scratch-Faster-RCNN [50] | - | - | - | 85.4 | 74.2 | 64.3 |
| U-Net [30] | 77.7 | 66.8 | 78.9 | - | - | - |
| U-Net++ [51] | 78.4 | 67.8 | 80.2 | - | - | - |
| Attention U-Net [52] | 81.4 | 69.7 | 82.1 | - | - | - |
| Mask-RCNN [24] | 78.5 | 67.2 | 80.7 | 89.7 | 77.8 | 66.4 |
| BlitzNet [25] | 81.7 | 69.5 | 81.1 | 89.2 | 78.3 | 66.2 |
| Yolact [53] | 69.6 | 58.4 | 74.2 | 84.3 | 73.1 | 61.9 |
| Solo [54] | 82.4 | 70.1 | 83.0 | 90.6 | 78.1 | 66.8 |
| SRGNet (Ours) | 83.2 | 71.1 | 83.0 | 90.6 | 78.7 | 67.2 |

The evaluation results of SRGNet and the compared methods are listed in Table 1. Cascade-RCNN, which uses the previous detection results as the input of the next detection stage, outperforms Faster-RCNN and Scratch-Faster-RCNN in average precision. This supports our conjecture that mining structural information from previous levels improves the predictions of the network. Among the segmentation-only methods, Attention U-Net achieves the best performance, because it enlarges the receptive field of the network, which benefits the segmentation task. Among the multi-task methods, BlitzNet outperforms Mask-RCNN in pixel accuracy, IoU, Dice, and AP at the 0.2 threshold. Yolact [53] directly generates the segmentation and detection results in one stage. Mask-RCNN first performs detection and then segments the image within each detection box, which discards contextual information needed for segmentation. BlitzNet simultaneously predicts the segmentation and detection results in the decoder network and thus makes fuller use of the supervision information than Mask-RCNN. Solo [54] outputs segmentation masks on the whole image. In comparison, SRGNet is more stable across the evaluation metrics. Table 1 also shows that the multi-task methods, e.g., Mask-RCNN, BlitzNet, and SRGNet, outperform the detection-only methods, e.g., Faster-RCNN, Cascade-RCNN, and Scratch-Faster-RCNN, which indicates that the extra supervision from segmentation effectively improves lesion detection. SRGNet achieves the best or tied-best results on all six metrics, including the widely used AP, Dice, and IoU, demonstrating its superiority in COVID-19 lesion segmentation and detection.

Fig. 8 shows visual comparisons among U-Net [30], Faster-RCNN [45], Yolact [53], and SRGNet. The results of SRGNet are very close to the ground truth.

Fig. 8.

Predicted results of our method and other state-of-the-art approaches on the COVID-19 dataset.

4.2. Ablation study

In this section, we first analyze the effect of each component of the proposed SRGNet. Table 2 reports the results of ablating the context enhancement (CE), edge loss (EL), random region proposal (RRP), and post-processing modules. The base network adopts a U-Net-based framework and is trained with the softmax cross-entropy loss only. It uses ResNet-50 as the encoder and four modules in the decoder. Each decoder module has two layers: a deconvolution with stride 2, which restores the resolution of the features, and a convolution with stride 1.
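The role of the stride-2 deconvolution can be checked with the standard output-size formulas for convolution and transposed convolution. This sketch assumes a kernel size of 4 with padding 1 for the deconvolution, a kernel size of 3 with padding 1 for the convolution, and an overall ResNet-50 encoder stride of 32; none of these values are given in the text. Under these assumptions each decoder module doubles the spatial size, so four modules bring a 1/32-resolution feature map back to 1/2 of the input resolution.

```python
from typing import List


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a standard convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def decoder_sizes(input_size: int, num_modules: int = 4,
                  encoder_stride: int = 32) -> List[int]:
    """Trace the feature-map size through hypothetical decoder modules.

    Each module is a deconvolution (k=4, s=2, p=1) followed by a
    convolution (k=3, s=1, p=1); kernel sizes and paddings are assumptions.
    """
    size = input_size // encoder_stride  # assumed ResNet-50 output stride
    sizes = [size]
    for _ in range(num_modules):
        size = deconv_out(size, kernel=4, stride=2, padding=1)  # doubles H, W
        size = conv_out(size, kernel=3, stride=1, padding=1)    # keeps H, W
        sizes.append(size)
    return sizes


print(decoder_sizes(512))  # [16, 32, 64, 128, 256]
```

With these settings a 512 × 512 slice yields a 256 × 256 output after the fourth module, so a final upsampling step (not described in this section) would be needed to reach full resolution.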

Table 2.

The ablation study of our SRGNet. “Base” is trained with only the softmax cross-entropy loss, “CE” denotes the context enhancement module, “EL” represents the edge loss, and “RRP” denotes random region proposals.

| Method | Pixel Acc | IoU | Dice | AP | AP | AP |
|---|---|---|---|---|---|---|
| Base | 78.3 | 68.7 | 81.2 | 88.2 | 75.3 | 61.8 |
| Base+CE (decoder) | 80.7 | 69.7 | 81.7 | 89.1 | 76.5 | 63.4 |
| Base+CE | 81.2 | 70.2 | 81.9 | 89.4 | 77.2 | 64.2 |
| Base+CE+EL | 83.0 | 70.8 | 82.8 | 90.1 | 78.5 | 66.4 |
| Base+CE+EL+Post-processing | 83.2 | 71.1 | 83.0 | 90.6 | 78.7 | 67.2 |
| SRGNet(without RRP) | 83.0 | 70.9 | 82.9 | 89.2 | 77.1 | 63.9 |

Table 2 shows that every module contributes to the performance of SRGNet, especially the context enhancement and the edge loss. “Base+CE (decoder)” applies context enhancement only in the decoder network, while “Base+CE” applies it in both the encoder and the decoder, which demonstrates the benefit of exploiting features from the whole network. Applying CE in the decoder modules improves Dice, IoU, and pixel accuracy by 0.5%, 1.0%, and 2.4%, respectively, and applying it in both the encoder and decoder modules yields further gains of 0.2%, 0.5%, and 0.5%. The context enhancement module also brings a corresponding improvement in detection. We then use the auxiliary edge loss to optimize the spatial semantic information of the whole network. As with the context enhancement module, the edge loss is applied to features of different resolutions, from low-level to high-level layers, to optimize spatial information at each resolution. This yields improvements of 0.9%, 0.6%, and 1.8% in Dice, IoU, and pixel accuracy, respectively. Finally, SRGNet jointly detects and segments COVID-19 lesions through post-processing, which improves Dice, IoU, and pixel accuracy by a further 0.2%, 0.3%, and 0.2%, with corresponding gains in lesion detection. “SRGNet(without RRP)” denotes SRGNet without random region proposals; in this variant, features are randomly cropped to serve as anchors for the detection task. Embedding RRP in SRGNet improves the three AP metrics by 1.4%, 1.6%, and 3.3%, respectively.
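The gains quoted in the ablation discussion are point differences between rows of Table 2. The short script below recomputes them directly from the table. Because the subscripts of the three AP columns are not recoverable, they are labeled AP1 to AP3 in table order; these labels are placeholders.

```python
from typing import Dict, List

# Table 2 values. AP1-AP3 are placeholder names for the three AP columns,
# in the order they appear in the table.
COLUMNS: List[str] = ["PixelAcc", "IoU", "Dice", "AP1", "AP2", "AP3"]
TABLE2: Dict[str, List[float]] = {
    "Base": [78.3, 68.7, 81.2, 88.2, 75.3, 61.8],
    "Base+CE (decoder)": [80.7, 69.7, 81.7, 89.1, 76.5, 63.4],
    "Base+CE": [81.2, 70.2, 81.9, 89.4, 77.2, 64.2],
    "Base+CE+EL": [83.0, 70.8, 82.8, 90.1, 78.5, 66.4],
    "Base+CE+EL+Post-processing": [83.2, 71.1, 83.0, 90.6, 78.7, 67.2],
    "SRGNet(without RRP)": [83.0, 70.9, 82.9, 89.2, 77.1, 63.9],
}


def gain(before: str, after: str) -> Dict[str, float]:
    """Point difference (after - before) for every metric, rounded to 0.1."""
    return {
        col: round(a - b, 1)
        for col, b, a in zip(COLUMNS, TABLE2[before], TABLE2[after])
    }


# Each pair mirrors one step of the ablation discussion; the full model is
# the "Base+CE+EL+Post-processing" row.
steps = [
    ("Base", "Base+CE (decoder)"),
    ("Base+CE (decoder)", "Base+CE"),
    ("Base+CE", "Base+CE+EL"),
    ("Base+CE+EL", "Base+CE+EL+Post-processing"),
    ("SRGNet(without RRP)", "Base+CE+EL+Post-processing"),
]
for before, after in steps:
    print(f"{before} -> {after}: {gain(before, after)}")
```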

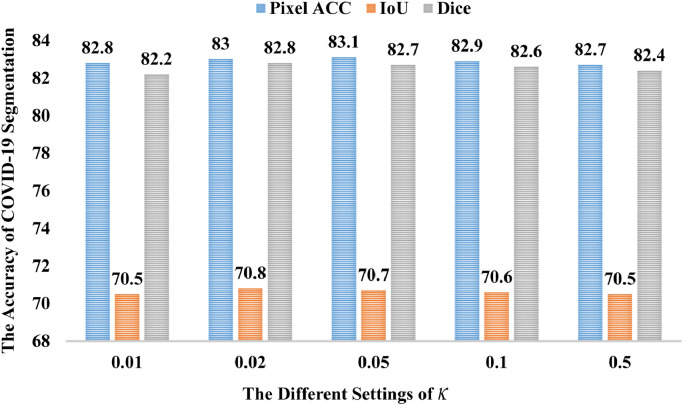

Next, we explore the influence of two hyperparameters on the results. As shown in Fig. 9, we compare three settings of the first hyperparameter: in the first, all values are fixed at 0.2; in the second, the values increase piecewise; and in the third, they increase linearly. The third setting yields the best result, suggesting that the later modules contribute more to the network's predictions. Fig. 10 shows the segmentation results under different settings of the second hyperparameter; the network achieves its best IoU and Dice when it is set to 0.02. Neither Fig. 9 nor Fig. 10 uses post-processing.

Fig. 9.

Results of the COVID-19 segmentation with different settings.

Fig. 10.

Results of the COVID-19 segmentation with different settings.
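The three weight settings compared in Fig. 9 can be sketched as per-module weights on auxiliary losses (for example, the edge losses) attached to the decoder outputs. Only the constant value 0.2 comes from the text. The piecewise and linear values, the four-module layout, and the assumption that these weights multiply per-module auxiliary losses are illustrative choices, not the paper's exact configuration.

```python
from typing import Callable, List


def constant_weights(n: int, value: float = 0.2) -> List[float]:
    """Setting 1: every decoder module gets the same weight (0.2, as in the text)."""
    return [value] * n


def stepwise_weights(n: int, low: float = 0.1, high: float = 0.3) -> List[float]:
    """Setting 2 (hypothetical values): low weight for the first half, high after."""
    return [low if i < n // 2 else high for i in range(n)]


def linear_weights(n: int, start: float = 0.1, end: float = 0.4) -> List[float]:
    """Setting 3 (hypothetical values): weights grow linearly from start to end."""
    if n == 1:
        return [end]
    step = (end - start) / (n - 1)
    return [start + i * step for i in range(n)]


def weighted_aux_loss(module_losses: List[float], weights: List[float]) -> float:
    """Weighted sum of per-module auxiliary losses."""
    if len(module_losses) != len(weights):
        raise ValueError("one weight is needed per decoder module")
    return sum(w * loss for w, loss in zip(weights, module_losses))


# Hypothetical per-module losses, ordered from the first decoder module
# (lowest resolution) to the last one (highest resolution).
losses = [0.9, 0.7, 0.5, 0.4]
schedules: List[Callable[[int], List[float]]] = [
    constant_weights, stepwise_weights, linear_weights]
for schedule in schedules:
    w = schedule(len(losses))
    print(schedule.__name__, [round(x, 2) for x in w],
          round(weighted_aux_loss(losses, w), 3))
```

Both increasing schedules shift emphasis toward the later, higher-resolution modules, which is the property the comparison in Fig. 9 isolates.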

5. Conclusion

We have proposed an effective Sequential Region Generation Network (SRGNet) that jointly detects and segments COVID-19 lesions in a mutually reinforcing manner. SRGNet generates bounding boxes directly from the segmentation maps at multiple layers, which reduces the computation cost and alleviates class imbalance when training the detection network. The predicted bounding boxes in turn refine the segmentation result through post-processing. In addition, SRGNet employs a context enhancement module and an edge loss to enhance the local and global information of features from low-level to high-level layers, which further improves the accuracy of COVID-19 segmentation and detection. The experimental results demonstrate the superior performance of SRGNet. However, some limitations are worth noting.

First, the detection and segmentation of COVID-19 lesion areas depend heavily on annotations by clinical experts, which are time-consuming to produce and influenced by the experts’ prior knowledge. Second, COVID-19 lesions share imaging features with other viral pneumonias; because the cause of each case could not be confirmed, we did not include other viral pneumonias for comparison. Finally, differences among CT images acquired by different devices may hinder the wider adoption of the algorithm.

In future work, we plan to increase the number of annotated COVID-19 examples and invite more experts to check the accuracy of the annotations. Furthermore, we plan to add data on pneumonia caused by other types of viruses and retrain the model to improve its robustness. Finally, we will consider collaborating with multiple institutions to collect chest CT images of various lesions with different severities, in order to further verify the effectiveness of the proposed method. We hope the proposed method can be adopted in large-scale clinical applications and help diagnose other diseases.
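The mechanism summarized in the conclusion, generating bounding boxes directly from segmentation maps and letting the boxes refine the segmentation, can be illustrated with a simplified single-scale sketch. It is not the SRGNet implementation: it thresholds one probability map, groups foreground pixels into 4-connected components with a breadth-first search, turns each sufficiently large component into a box, and applies a hypothetical post-processing rule that keeps foreground only inside the kept boxes. The 0.5 threshold, the `min_area` filter, and the function names are assumptions.

```python
from collections import deque
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (row_min, col_min, row_max, col_max), inclusive


def boxes_from_mask(prob: List[List[float]], threshold: float = 0.5,
                    min_area: int = 2) -> List[Box]:
    """Turn a lesion probability map into bounding-box proposals.

    Pixels above `threshold` are grouped into 4-connected components; each
    component with at least `min_area` pixels yields one box.
    """
    h, w = len(prob), len(prob[0])
    seen = [[False] * w for _ in range(h)]
    boxes: List[Box] = []
    for r in range(h):
        for c in range(w):
            if seen[r][c] or prob[r][c] <= threshold:
                continue
            # Breadth-first search over one connected component.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and prob[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_area:
                ys = [p[0] for p in pixels]
                xs = [p[1] for p in pixels]
                boxes.append((min(ys), min(xs), max(ys), max(xs)))
    return boxes


def refine_mask(prob: List[List[float]], boxes: List[Box],
                threshold: float = 0.5) -> List[List[int]]:
    """Hypothetical post-processing: keep foreground pixels only inside boxes."""
    return [
        [int(prob[r][c] > threshold and any(
            r0 <= r <= r1 and c0 <= c <= c1 for r0, c0, r1, c1 in boxes))
         for c in range(len(prob[0]))]
        for r in range(len(prob))
    ]


prob = [[0.9, 0.8, 0.1, 0.0],
        [0.7, 0.6, 0.2, 0.0],
        [0.1, 0.0, 0.0, 0.6]]  # isolated single-pixel response at (2, 3)
boxes = boxes_from_mask(prob)
print(boxes)                     # [(0, 0, 1, 1)]
print(refine_mask(prob, boxes))  # [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0]]
```

In this toy example the single-pixel response at (2, 3) is too small to become a proposal, so the refinement step removes it from the mask. SRGNet itself generates proposals from several decoder layers rather than from one map.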

Declaration of Competing Interest

We would like to declare that no conflict of interest exists in the submission of this manuscript, and this manuscript is approved by all authors for publication.

Acknowledgments

This work is supported by the National Natural Science Foundation of China (Nos. 62025603, U1705262, 61772443, 61572410, 61802324 and 61702136), Principal Fund (No. 20720200091) and Natural Science Foundation of Guangdong Province in China (No. 2019B1515120049).

Biographies

Jipeng Wu received the B.Sc. degree from the School of Mathematical Science, Ningbo University of Technology, Ningbo, China, and the M.Sc. degree from the School of Science, Jimei University, Xiamen, China. He is currently pursuing the Ph.D. degree in the Intelligence Department, Xiamen University, Xiamen, China. His research interests include computer vision, deep learning, and medical image processing.

Haibo Xu received the B.Sc. and M.Sc. degrees in medicine from Tongji Medical University, Wuhan, China, and the Ph.D. and M.D. degrees in medical imaging and nuclear medicine from Tongji Medical School of Huazhong University of Science and Technology. He was an Academic Visiting Scholar at the Research Center of Magnetic Resonance, Massachusetts General Hospital (MGH), School of Medicine, Harvard University, Boston, U.S.A., from 2004 to 2005. He was a Professor and the chief doctor at the Department of Radiology at the Union Hospital of Tongji Medical College of Huazhong University of Science and Technology from 2005 to 2015. In 2015, he joined Wuhan University as a Professor and the director of the Medical Imaging Department of Zhongnan Hospital of Wuhan University. His current research interests include central nervous system imaging, molecular imaging, infectious disease imaging, biomedical engineering, and artificial intelligence in medical imaging.

Shengchuan Zhang received the Ph.D. degree in Information and Telecommunications Engineering from the School of Electronic Engineering, Xidian University, Xi’an, China, in 2016, and the B.Eng. degree in electronic information engineering from Southwest University, Chongqing, China, in 2011. He is currently an Assistant Professor in the School of Informatics, Xiamen University. His current research interests include computer vision and pattern recognition. He has published papers in leading journals such as IEEE TIP, IEEE TCSVT, and SP.

Xi Li received the B.M. (Bachelor of Medicine) degree from Wuhan University, Wuhan, Hubei, China, in 2001 and the M.D. (Doctor of Medicine) degree from Wuhan University, Hubei, in 2006. He currently works at the Shenzhen Hospital of Peking University, Shenzhen, China. His current research interests include artificial intelligence for medical pathology and CT imaging.

Jie Chen received the MSc and PhD degrees from the Harbin Institute of Technology, China, in 2002 and 2007, respectively. He joined the faculty with the Graduate School in Shenzhen, Peking University, in 2019, where he is currently an associate professor with the School of Electronic and Computer Engineering. Since 2018, he has been working with the Peng Cheng Laboratory, China. From 2007 to 2018, he worked as a senior researcher with the Center for Machine Vision and Signal Analysis, University of Oulu, Finland. In 2012 and 2015, he visited the Computer Vision Laboratory, University of Maryland and School of Electrical and Computer Engineering, Duke University respectively. His research interests include pattern recognition, computer vision, machine learning, deep learning, and medical image analysis. He is an associate editor of the Visual Computer. He is a member of the IEEE.

Jiawen Zheng received the B.Sc. degree from the School of Information Science & Engineering, Lanzhou University, Lanzhou, China. He is currently studying for an M.Sc. degree at the School of Informatics, Xiamen University, Xiamen, China. His main research interests include deep learning, computer vision, and pose estimation.

Yue Gao received the B.S. degree from Harbin Institute of Technology, Harbin, China, in 2005, and the M.E. degree from Tsinghua University, Beijing, China, in 2008. He is currently working toward the Ph.D. degree with the Department of Automation, Tsinghua University, Beijing, China. His research interests include multimedia information retrieval, machine learning, and pattern recognition.

Yonghong Tian (Senior Member, IEEE) is currently a Full Professor with the School of Electronics Engineering and Computer Science, Peking University, Beijing, China, and the Deputy Director of AI Research Center, Pengcheng Lab, Shenzhen, China. His research interests include computer vision, multimedia big data, and brain-inspired computation. He is the author or coauthor of more than 170 technical articles in refereed journals and conferences. He was/is an Associate Editor for the IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS FOR VIDEO TECHNOLOGY (2018), IEEE TRANSACTIONS ON MULTIMEDIA (2014–2018), IEEE Multimedia Magazine (2018), and IEEE Access (2017). He co-initiated the IEEE International Conference on Multimedia Big Data (BigMM) and served as the TPC Co-Chair of BigMM 2015, and also served as the Technical Program Co-Chair of IEEE ICME 2015, IEEE ISM 2015 and IEEE MIPR 2018/2019, and the organizing committee member of ACM Multimedia 2009, IEEE MMSP 2011, IEEE ISCAS 2013, IEEE ISM 2015/2016. He is the steering member of IEEE ICME (2018) and IEEE BigMM (2015), and is a TPC Member of more than ten conferences such as CVPR, ICCV, ACM KDD, AAAI, ACM MM and ECCV. He was the recipient of the Chinese National Science Foundation for Distinguished Young Scholars in 2018, two National Science and Technology Awards and three ministerial-level awards in China, and received the 2015 EURASIP Best Paper Award for the EURASIP Journal on Image and Video Processing and the Best Paper Award of IEEE BigMM 2018. He is a senior member of CIE and CCF and a member of ACM.

Yongsheng Liang received the Ph.D. degree in communication and information systems from the Harbin Institute of Technology, Harbin, China, in 1999. From 2002 to 2005, he was a Research Assistant with the Harbin Institute of Technology, where he has been a Professor with the School of Electronic and Information Engineering since 2017. His research interests include video coding and transmission, and machine learning and its applications. He was the recipient of the Wu Wenjun Artificial Intelligence Science and Technology Award for Excellence and the Second Prize in Science and Technology of Guangdong Province.

Rongrong Ji (Senior Member, IEEE) is currently a Professor and the Director of the Intelligent Multimedia Technology Laboratory, and the Dean Assistant with the School of Information Science and Engineering, Xiamen University, Xiamen, China. His work mainly focuses on innovative technologies for multimedia signal processing, computer vision, and pattern recognition, with over 100 papers published in international journals and conferences. He is a member of the ACM. He also serves as a program committee member for several Tier-1 international conferences. He was a recipient of the ACM Multimedia Best Paper Award and the Best Thesis Award of Harbin Institute of Technology. He serves as an Associate/Guest Editor for international journals and magazines, such as Neurocomputing, Signal Processing, Multimedia Tools and Applications, the IEEE Multimedia Magazine, and Multimedia Systems.

References

- 1.Wang C., Horby P.W., Hayden F.G., Gao G.F. A novel coronavirus outbreak of global health concern. Lancet. 2020;395(10223):470–473. doi: 10.1016/S0140-6736(20)30185-9.

- 2.Huang C., Wang Y., Li X., Ren L., Zhao J., Hu Y., Zhang L., Fan G., Xu J., Gu X., et al. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet. 2020;395(10223):497–506. doi: 10.1016/S0140-6736(20)30183-5.

- 3.J. CSSE, Coronavirus COVID-19 global cases by the center for systems science and engineering (CSSE) at Johns Hopkins University (JHU), 2020.

- 4.Bernheim A., Mei X., Huang M., Yang Y., Fayad Z.A., Zhang N., Diao K., Lin B., Zhu X., Li K., et al. Chest CT findings in coronavirus disease-19 (COVID-19): relationship to duration of infection. Radiology. 2020:200463. doi: 10.1148/radiol.2020200463.

- 5.Xie X., Zhong Z., Zhao W., Zheng C., Wang F., Liu J. Chest CT for typical 2019-nCoV pneumonia: relationship to negative RT-PCR testing. Radiology. 2020:200343. doi: 10.1148/radiol.2020200343.

- 6.A. Narin, C. Kaya, Z. Pamuk, Automatic detection of coronavirus disease (COVID-19) using x-ray images and deep convolutional neural networks, arXiv preprint arXiv:2003.10849 (2020).

- 7.Apostolopoulos I.D., Mpesiana T.A. COVID-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020:1. doi: 10.1007/s13246-020-00865-4.

- 8.L. Wang, A. Wong, COVID-Net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest radiography images, arXiv preprint arXiv:2003.09871 (2020).

- 9.Oulefki A., Agaian S., Trongtirakul T., Laouar A.K. Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognit. 2020:107747. doi: 10.1016/j.patcog.2020.107747.

- 10.Chen X., Yao L., Zhou T., Dong J., Zhang Y. Momentum contrastive learning for few-shot COVID-19 diagnosis from chest CT images. Pattern Recognit. 2020:107826. doi: 10.1016/j.patcog.2021.107826.

- 11.Ai T., Yang Z., Hou H., Zhan C., Chen C., Lv W., Tao Q., Sun Z., Xia L. Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology. 2020:200642. doi: 10.1148/radiol.2020200642.

- 12.Ye Z., Zhang Y., Wang Y., Huang Z., Song B. Chest CT manifestations of new coronavirus disease 2019 (COVID-19): a pictorial review. Eur. Radiol. 2020:1–9. doi: 10.1007/s00330-020-06801-0.

- 13.Shi F., Wang J., Shi J., Wu Z., Wang Q., Tang Z., He K., Shi Y., Shen D. Review of artificial intelligence techniques in imaging data acquisition, segmentation and diagnosis for COVID-19. IEEE Rev. Biomed. Eng. 2020 doi: 10.1109/RBME.2020.2987975.

- 14.Wang S., Kang B., Ma J., Zeng X., Xiao M., Guo J., Cai M., Yang J., Li Y., Meng X., et al. A deep learning algorithm using CT images to screen for corona virus disease (COVID-19) MedRxiv. 2020 doi: 10.1007/s00330-021-07715-1.

- 15.A. Joaquin, Using deep learning to detect pneumonia caused by nCoV-19 from x-ray images, available at: https://towardsdatascience.com/using-deep-learning-to-detect-ncov-19-from-x-ray-images-1a89701d1acd (2020).

- 16.Butt C., Gill J., Chun D., Babu B.A. Deep learning system to screen coronavirus disease 2019 pneumonia. Appl. Intell. 2020:1. doi: 10.1007/s10489-020-01714-3.

- 17.Z. Tang, W. Zhao, X. Xie, Z. Zhong, F. Shi, J. Liu, D. Shen, Severity assessment of coronavirus disease 2019 (COVID-19) using quantitative features from chest CT images, arXiv preprint arXiv:2003.11988 (2020).

- 18.Shi F., Xia L., Shan F., Song B., Wu D., Wei Y., Yuan H., Jiang H., He Y., Gao Y., et al. Large-scale screening of COVID-19 from community acquired pneumonia using infection size-aware classification. Phys. Med. Biol. 2021;66:065031. doi: 10.1088/1361-6560/abe838.

- 19.F. Shan+, Y. Gao+, J. Wang, W. Shi, N. Shi, M. Han, Z. Xue, D. Shen, Y. Shi, Lung infection quantification of COVID-19 in ct images with deep learning, arXiv preprint arXiv:2003.04655 (2020).

- 20.Fu J., Liu J., Li Y., Bao Y., Yan W., Fang Z., Lu H. Contextual deconvolution network for semantic segmentation. Pattern Recognit. 2020;101:107152.

- 21.Peng C., Ma J. Semantic segmentation using stride spatial pyramid pooling and dual attention decoder. Pattern Recognit. 2020;107:107498.

- 22.Brazil G., Yin X., Liu X. Proceedings of the IEEE International Conference on Computer Vision. 2017. Illuminating pedestrians via simultaneous detection & segmentation; pp. 4950–4959.

- 23.Mao J., Xiao T., Jiang Y., Cao Z. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. What can help pedestrian detection? pp. 3127–3136.

- 24.He K., Gkioxari G., Dollár P., Girshick R. Proceedings of the IEEE International Conference on Computer Vision. 2017. Mask R-CNN; pp. 2961–2969.

- 25.Dvornik N., Shmelkov K., Mairal J., Schmid C. Proceedings of the IEEE International Conference on Computer Vision. 2017. BlitzNet: a real-time deep network for scene understanding; pp. 4154–4162.

- 26.Long J., Shelhamer E., Darrell T. Proceedings of the IEEE conference on computer vision and pattern recognition; 2015. Fully convolutional networks for semantic segmentation; pp. 3431–3440.

- 27.L.-C. Chen, G. Papandreou, F. Schroff, H. Adam, Rethinking atrous convolution for semantic image segmentation, arXiv preprint arXiv:1706.05587 (2017).

- 28.Zhao H., Shi J., Qi X., Wang X., Jia J. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2017. Pyramid scene parsing network; pp. 2881–2890.

- 29.Badrinarayanan V., Kendall A., Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39(12):2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 30.Ronneberger O., Fischer P., Brox T. International Conference on Medical Image Computing and Computer-assisted Intervention; 2015. U-Net: convolutional networks for biomedical image segmentation; pp. 234–241.

- 31.Dolz J., Ayed I.B., Desrosiers C. International MICCAI Brainlesion Workshop. Springer; 2018. Dense multi-path u-net for ischemic stroke lesion segmentation in multiple image modalities; pp. 271–282.

- 32.T. Song, Generative model-based ischemic stroke lesion segmentation, arXiv preprint arXiv:1906.02392 (2019).

- 33.D.-P. Fan, T. Zhou, G.-P. Ji, Y. Zhou, G. Chen, H. Fu, J. Shen, L. Shao, Inf-Net: automatic COVID-19 lung infection segmentation from CT scans, arXiv preprint arXiv:2004.14133 (2020).

- 34.Boldsen J.K., Engedal T.S., Pedraza S., Cho T.-H., Thomalla G., Nighoghossian N., Baron J.-C., Fiehler J., Østergaard L., Mouridsen K. Better diffusion segmentation in acute ischemic stroke through automatic tree learning anomaly segmentation. Front. Neuroinformatics. 2018;12:21. doi: 10.3389/fninf.2018.00021.

- 35.Abulnaga S.M., Rubin J. International MICCAI Brainlesion Workshop. Springer; 2018. Ischemic stroke lesion segmentation in CT perfusion scans using pyramid pooling and focal loss; pp. 352–363.

- 36.Zhang R., Zhao L., Lou W., Abrigo J.M., Mok V.C., Chu W.C., Wang D., Shi L. Automatic segmentation of acute ischemic stroke from DWI using 3-D fully convolutional DenseNets. IEEE Trans. Med. Imaging. 2018;37(9):2149–2160. doi: 10.1109/TMI.2018.2821244.

- 37.Liu L., Kurgan L., Wu F.-X., Wang J. Attention convolutional neural network for accurate segmentation and quantification of lesions in ischemic stroke disease. Med. Image Anal. 2020;65:101791. doi: 10.1016/j.media.2020.101791.

- 38.Wang G., Song T., Dong Q., Cui M., Huang N., Zhang S. Automatic ischemic stroke lesion segmentation from computed tomography perfusion images by image synthesis and attention-based deep neural networks. Med. Image Anal. 2020;65:101787. doi: 10.1016/j.media.2020.101787.

- 39.Redmon J., Divvala S., Girshick R., Farhadi A. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016. You only look once: unified, real-time object detection; pp. 779–788.

- 40.Liu W., Anguelov D., Erhan D., Szegedy C., Reed S., Fu C.-Y., Berg A.C. European Conference on Computer Vision. Springer; 2016. SSD: single shot multibox detector; pp. 21–37.

- 41.C. Fu, W. Liu, A. Ranga, A. Tyagi, A.C. Berg, DSSD: deconvolutional single shot detector, arXiv preprint arXiv:1701.06659 (2017).

- 42.Redmon J., Farhadi A. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. YOLO9000: better, faster, stronger; pp. 7263–7271.

- 43.Girshick R., Donahue J., Darrell T., Malik J. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2014. Rich feature hierarchies for accurate object detection and semantic segmentation; pp. 580–587.

- 44.Girshick R. Proceedings of the IEEE International Conference on Computer Vision. 2015. Fast R-CNN; pp. 1440–1448.

- 45.Ren S., He K., Girshick R., Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2016;39:1137–1149. doi: 10.1109/TPAMI.2016.2577031.

- 46.Khehrah N., Farid M.S., Bilal S., Khan M.H. Lung nodule detection in CT images using statistical and shape-based features. J. Imaging. 2020;6(2):6. doi: 10.3390/jimaging6020006.

- 47.Xie H., Yang D., Sun N., Chen Z., Zhang Y. Automated pulmonary nodule detection in CT images using deep convolutional neural networks. Pattern Recognit. 2019;85:109–119.

- 48.Q. Yan, B. Wang, D. Gong, C. Luo, W. Zhao, J. Shen, Q. Shi, S. Jin, L. Zhang, Z. You, COVID-19 chest CT image segmentation–a deep convolutional neural network solution, arXiv preprint arXiv:2004.10987 (2020).

- 49.Cai Z., Vasconcelos N. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018. Cascade R-CNN: delving into high quality object detection; pp. 6154–6162.

- 50.He K., Girshick R., Dollár P. Proceedings of the IEEE International Conference on Computer Vision. 2019. Rethinking imagenet pre-training; pp. 4918–4927.

- 51.Zhou Z., Siddiquee M.M.R., Tajbakhsh N., Liang J. Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Springer; 2018. UNet++: a nested U-Net architecture for medical image segmentation; pp. 3–11.

- 52.O. Oktay, J. Schlemper, L.L. Folgoc, M. Lee, M. Heinrich, K. Misawa, K. Mori, S. McDonagh, N.Y. Hammerla, B. Kainz, et al., Attention U-Net: learning where to look for the pancreas, arXiv preprint arXiv:1804.03999 (2018).

- 53.Bolya D., Zhou C., Xiao F., Lee Y.J. Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019. YOLACT: real-time instance segmentation; pp. 9157–9166.

- 54.Wang X., Kong T., Shen C., Jiang Y., Li L. European Conference on Computer Vision. Springer; 2020. SOLO: segmenting objects by locations; pp. 649–665.

- 55.Milletari F., Navab N., Ahmadi S.-A. 2016 Fourth International Conference on 3D Vision (3DV) IEEE; 2016. V-Net: Fully convolutional neural networks for volumetric medical image segmentation; pp. 565–571.