Abstract

As digital health technologies (DHT) have been embraced as a ‘panacea’ for health care systems, they have evolved from a buzzword into a high-priority objective for health policy across the globe. In the realm of quality and safety standards for medical devices, the US Food and Drug Administration (FDA) has been a frontrunner in adapting its regulatory framework to DHT. However, despite the utmost relevance of quality and safety standards and their role in sustaining the innovation pathway of DHT, their actual making has not yet been subjected to in-depth social-science scrutiny. Drawing on the conceptual repertoires of Science and Technology Studies (STS), this article investigates how digital health evolved from a buzzword into an ‘object of government’, that is, how it gained material meaning and was transformed into a regulatable object. To do so, it charts the standard-making process of the FDA’s medical digital health policy between 2008 and 2018. From this, we reflect on the mutually sustaining dynamics between technological and organizational innovation. The FDA’s attempts to standardize medical DHT not only shaped the lifestyle/medical boundary for DHT but also led to significant reconfigurations within the FDA itself, while fostering a broader shift toward the uptake of alternative forms of evidence in regulatory science.

Keywords: Digital health, FDA, Standard-making, Regulatory science, Lifestyle, Medical boundary

Introduction

April 26, 2018, Hilton, Washington D.C. True to annual tradition, public and private health actors gathered for the 2018 “Health Datapalooza,” a two-day conference organized by the nonprofit organization AcademyHealth to discuss new strategies for improving the US health care system. One of the keynote guests, Scott Gottlieb, took center stage to introduce the brand-new digital health policy initiatives of the Food and Drug Administration (FDA). In his speech, he argued for the transformative potential of digital health technologies (DHT) for “improving our ability to accurately diagnose and treat disease and to enhance the delivery of health care for the individual, making medical care truly patient centric” (Fieldnotes first author 2018).

The former FDA Commissioner was not alone in fueling expectations about digital health. In one of its most prominent policy renderings, digital health has been conceived as “a broad umbrella term encompassing eHealth as well as developing areas such as the use of advanced computing sciences (in the fields of ‘big data’, genomics and artificial intelligence, for example)” (WHO 2017, p. 1). In the past decade, consultants, policymakers, and researchers have argued that a growing aging population, an ever-increasing number of chronically ill people, and rising health care costs have all been placing significant strain on Western health care systems. They have also heralded the potential of digital health to address these diverse challenges by empowering patients, improving the quality of care, and contributing to more cost-efficient and ‘personalized’ health care systems (PwC 2013; PwC 2014; Deloitte 2015; Roland Berger 2016; Montgomery et al. 2017; OECD 2017; EC 2018; OECD 2019).

From 2010 onward, the promise of digital health became intertwined with a growing number of financial investments, leading to the emergence of an entirely new digital health market (Geiger and Gross 2017). Globally, this new market is expected to grow from 106 billion dollars in 2019 to nearly 640 billion dollars by 2026 (Mikulic 2020). At the same time, the promise of digital health fostered reconfigurations in health care practices. Through the ‘googlization’ and ‘platformization’ of health research (Sharon 2016; van Dijck et al. 2018), dominant tech companies (e.g. Google, Apple, Microsoft, Amazon) managed to secure a foothold in the health domain by providing new ways of collecting, analyzing, and storing health data. Furthermore, social scientists have pointed to the social import of DHT for individual privacy (e.g. Neff and Nafus 2016; Lupton 2017; Marelli, Lievevrouw and Van Hoyweghen 2020), for autonomy (e.g. Lupton 2013; Crawford et al. 2015), and for issues of inequality and discrimination (e.g. Rich, Miah and Lewis 2019).

Increasingly framed as a ‘panacea’ for health care systems (Lievevrouw and Van Hoyweghen 2019), digital health has not only attracted the attention of financial investors and entrepreneurs but has also acquired priority status on health policy agendas worldwide (Marelli, Lievevrouw and Van Hoyweghen 2020). Over the past years, policymakers have enacted a variety of policies to facilitate the uptake of digital health technologies within health care systems. Examples include the launch of dedicated digital health strategies by the European Commission and by the national governments of Australia, Japan, and Singapore, as well as the introduction of reimbursement initiatives in the UK and Germany (EC 2014a, b; Australian Digital Health Agency 2018; Kumar 2019; Ministry of Health Singapore 2019; Federal Ministry of Health 2019; NICE 2019).

However, DHT walk a fine line between lifestyle consumer products and the rigid, densely regulated world of medical professionals, pharmaceutical and medical device companies, insurance companies, and patient organizations. As a result, they have prompted significant governance adjustments over the years (Lucivero and Prainsack 2015; Faulkner 2018; Blasimme, Vayena and Van Hoyweghen 2019; Joyce 2019; Geiger and Kjellberg 2021). Sleep applications are a case in point. While most people use their sleep tracker as a fun gadget for a few months, others deploy it as a medical diagnostic tool to gather evidence revealing or confirming a more serious sleep disorder. As such, DHT are the product of a process of ‘medicalization’ and ‘lifestylization’ at the same time. They enable the entry of lifestyle data (e.g. sleep tracking information, step counting, and dietary habits) into the medical sphere, while medicalizing people’s lifestyle choices at home (Lucivero and Prainsack 2015; Joyce 2019; McFall, Meyers and Van Hoyweghen 2020).

In an effort to clarify where these technologies stand on the legally constructed boundary between consumer and medical products, a substantial part of DHT policy has revolved around defining the ‘medical deviceness’ (Faulkner 2008) of these technologies. Several national bodies, such as those in the US, Germany, and the UK, have revised their existing regulatory standards for safety and efficacy to accommodate the specificities of DHT. Supranational and international bodies, such as the European Commission and the International Medical Device Regulators Forum, have done the same (IGES Institute 2016; Medicines and Healthcare Products Regulatory Agency 2014; Official Journal of the European Union 2017a, 2017b; Ordish et al. 2019). These regulatory bodies attempt to standardize quality and safety requirements and thereby define the scope of medical DHT. In doing so, they play a crucial role in shaping the lifestyle/medical boundary for digital health technologies.

Quality and safety standards (QSS) matter greatly, both for the design of the technology and for sustaining the innovation pathway of DHT. Despite this relevance, these standards have not yet been examined in depth from a social-science angle. Drawing upon a Science and Technology Studies (STS) approach, this article charts the efforts of the US Food and Drug Administration to standardize the quality and safety of medical digital health technologies. The FDA has been a frontrunner in devising QSS for digital health products ever since the publication of its first draft guideline on “Mobile Medical Applications” in 2011 (FDA 2013). From this, we investigate how DHT, through this standard-making process, gained material meaning and became regulatable as digital health evolved from a ‘buzzword’ (Bensaude Vincent 2014) into an ‘object of government’ (Lezaun 2006). Through a document analysis of policy, media, and industry publications between 2008 and 2018, we map the controversies and stakes at play in the development of two key policy items. These are the FDA’s guideline on “Mobile Medical Applications: Guidance for Industry and Food and Drug Administration Staff” and its “Digital Health Innovation Action Plan”. Building on this, we reflect on the mutually sustaining dynamics between technological and organizational innovation (Jasanoff 2005; Cambrosio et al. 2014). The FDA standard-making process shaped the lifestyle/medical boundary for DHT, and thus addressed the question of what should count as a medical as opposed to a lifestyle device. It also led to significant reconfigurations within the FDA, as well as to a broader shift toward the uptake of ‘alternative’ forms of evidence in regulatory science.

Social studies of standard-making

The question of how standard-making plays an enabling role in shaping new innovation pathways has long captured the attention of social scientists (Abraham 1995; Barry 2001; Webster 2007; Faulkner 2008; Demortain 2011). Standards are “any set of agreed-upon rules for the production of (textual or material) objects” (Bowker and Star 1999, p. 13). They are often enforced by legal bodies (e.g. professional or manufacturers’ organizations, or the state), and in doing so they always impose a way of classifying the world (Bowker and Star 1999). Safety, quality, and efficacy standards are mobilized as part of the governance of risk and the protection of citizens against adverse health effects (Demortain 2011). Standardization thus represents a crucial component in the organization of health care systems (Bowker and Star 1999; Faulkner 2018). Various social scientists have pointed to the performativity of discursive expectations in shaping the early-stage innovation pathway of technologies (Brown 2003; Borup et al. 2006; Van Lente et al. 2013; Pickersgill 2019). Standard-making processes, by contrast, can be regarded as distinct elements in the later stages of the innovation pathway, involving a ‘granular’ set of (material, industrial, and regulatory) actors (Webster 2007; Faulkner 2008; Ulucanlar et al. 2013).

The role of regulatory expertise in standard-making

Regulatory frameworks play an important role in the later stages of innovation pathways (Lezaun 2006; Faulkner 2008, 2018; Hendrickx 2014; Hogle 2018). As regulations classify and fix boundaries to provide legal clarity (Faulkner 2012), they function as a way to enforce standards (Bowker and Star 1999). In his work on genetically modified organisms, Lezaun (2006) illustrates how regulatory processes (e.g. legal documents and administrative mechanisms) have enabled GMOs to acquire material meaning, thereby allowing these technologies to become subject to governance mechanisms. In doing so, he highlights the interplay between the construction of what he defines as an ‘object of government’ in a legal document and the administrative mechanisms that add material meaning to it.

The direction that legal standard-making processes take, and the reasons provided for maintaining, stretching, or breaking with an existing regulatory framework, largely depend on regulatory knowledge and expertise. This expertise consists of scientific knowledge as well as societal, economic, procedural, and legal knowledge (Faulkner and Poort 2017). Within this broad range of regulatory forms of knowledge, ‘regulatory science’ (Jasanoff 1990; Irwin et al. 1997) is the institutionalized form of knowledge that industries, regulators, and experts have agreed to adopt as the most relevant for evaluating technologies, and that can accordingly legitimize regulatory and policy measures. This legitimation, however, is constantly renegotiated, often in less visible ways, through the ‘law-making’1 process of a specific new technology (Faulkner and Poort 2017; Demortain 2017).

Accordingly, a substantial corpus of research has pointed to the practice of regulatory agencies as one of the most prominent spaces where contestations become visible (e.g. Abraham 1995; Abraham and Davis 2007; Davis and Abraham 2011; Faulkner 2008, 2018; Webster 2019). These contestations concern both the standard-making for new technologies and the renegotiation of the legitimacy of regulatory science. Regulatory agencies are knowledge-intensive organizations, so their reputation and power depend on the credibility of the knowledge they produce (Demortain 2017). In the process of making standards, these agencies function as crucial gatekeepers, and increasingly as powerful ‘innovation enablers’, for the adoption of new technologies (Abraham 1995; Davis and Abraham 2011; Faulkner and Poort 2017; Geiger and Kjellberg 2021).

Co-production: The looping back of standard-making

Recursively, the standard-making process of technologies often ‘loops back’ on their functional and organizational configuration (Cambrosio et al. 2014, 2017). It reconfigures the criteria at the core of institutionalized regulatory frameworks and calls into question what counts as legitimate ‘evidence’ for regulatory purposes. It also affects how agencies conceive of their organizational cultures and functions. In line with this, Pickersgill (2019) refers to practices of ‘performative nominalism’: the co-emergence of actors in innovative new fields “talking [these innovations] into existence” (pp. 25–26) while at the same time reconfiguring their own identity. Behind the process of standard-making resides a crucial, two-way interplay between regulators and the objects they regulate, resulting in a process of ‘co-production’ between technological and organizational innovations (Jasanoff 2005; Cambrosio et al. 2014, 2017). Building on these conceptual frameworks, the empirical sections of this article consist of two parts. First, we scrutinize the efforts carried out by the FDA to stretch, break, and maintain its traditional QSS framework so as to standardize the quality and safety of medical DHT. In so doing, we investigate how this standard-making process transformed digital health from a buzzword into a governable object. The second empirical part draws on the conceptual frameworks of ‘co-production’ (Jasanoff 2005) and ‘performative nominalism’ (Pickersgill 2019) to highlight regulatory science’s ‘looping back’ effect. By this we mean the way in which the FDA’s attempt to standardize medical digital health technologies also brought about a significant transformation in its own mode of functioning. Before delving into the empirical sections, we provide some background on the FDA’s functioning as a regulatory agency and describe our methods.

Studying the US Food and Drug Administration (FDA)

Why we wanted that approval? Well, because the American market is leading the way in medical apps and Digital Health in Europe, and because it is the largest market in the world which we would have to miss out on today without that FDA approval. (First author interview with European digital health developer 2020)

The US Food and Drug Administration (FDA) is one of the most renowned regulatory agencies. It is tasked with developing standards for, and overseeing the quality and safety of, every food item, drug, cosmetic product, medical device, and cigarette sold on US territory. The agency holds crucial gatekeeping power, as these products can only be marketed after having received approval from the FDA (Carpenter 2010). Although its gatekeeping function applies only on US soil, FDA policy initiatives carry extensive global weight. This is because the US is among the world’s major players in the production of medical devices, representing 45 percent of this market in 2011 (Carpenter 2010; White and Walters 2018; Davis and Abraham 2011). Moreover, other major national regulators (e.g. those of Australia, Japan, France, Egypt, and the UK) have benchmarked the organization of their regulatory agencies against the FDA’s institutional organization (Carpenter 2010).

The agency was created in 1906, when President Theodore Roosevelt signed the Pure Food and Drug Act into law (Hilts 2003). Over the years, the FDA managed to expand its regulatory oversight power, partly as a result of a series of tragic drug-related adverse health effects. Examples include the Elixir Sulfanilamide incident in the 1930s and, most notably, the thalidomide tragedy at the turn of the 1960s (Abraham 1995; Hilts 2003; Maschke and Gusmano 2018). Since its inception, however, and increasingly so since the late 1970s (Hilts 2003; Maschke and Gusmano 2018; Abraham 1995), the agency has had to address a tension between two goals. It must ensure the quality and safety of products in order to protect American citizens, and it must support companies in spurring technological innovation. As such, the agency’s policy actions are driven by its self-understanding as an entity acting at once as a ‘safety watchdog’ (Cortez 2015) and as an ‘innovation enabler’ (Abraham 1995; Davis and Abraham 2011; White and Walters 2018).

In the aftermath of the Second World War, technological advancements prompted calls to bring medical devices under the regulatory oversight of the FDA. However, it was only in 1976, following the Dalkon Shield tragedy, that the agency became responsible for the safety and effectiveness of medical devices through the Medical Device Amendments (Hilts 2003; Bauman 2012; White and Walters 2018). The FDA’s medical device framework differs from the one for pharmaceuticals. Rather than requiring every device to undergo the same phase I, II, and III clinical trial trajectory, the agency developed a less stringent, three-tiered ‘risk-based framework’. This is a clearance procedure that depends on the intended use and risk level of each specific device (Bauman 2012). Medical devices are thus classified into Class I, Class II, and Class III, ranging from low to high risk according to their potential to cause disease or injury.

Only Class III devices are required to undergo a thorough pre-market approval procedure demonstrating the safety and efficacy of the device through clinical trials. For Class I and II devices, manufacturers are only obliged to submit a so-called 510(k) notification 90 days prior to entering the US market (Bauman 2012; White and Walters 2018). This clearance process requires manufacturers to prove ‘substantial equivalence’ between the new device and at least one FDA-cleared medical device. They must demonstrate that the two have the same intended use and the same technological characteristics, or that any differences do not raise novel safety issues (White and Walters 2018).

For low-risk devices (Class I), the required evidence consists of general controls of good manufacturing and labelling practices. As Class II devices are moderate-risk devices, the FDA was given the authority to develop device-specific performance standards and to request additional evidence, including clinical data, from manufacturers (Bauman 2012). However, the agency never fully implemented this framework. This caused serious gaps in the approval procedure for Class II medical devices, the class applicable to most digital health technologies. Over the years, several congressional hearings have been held and reports drawn up to examine possible ways of remedying this procedure. The most recent one was initiated by the FDA in 2009, based on a report from the 510(k) Working Group. It concluded that “the 510(k) lacks the legal basis to be a reliable premarket screen of the safety and effectiveness of moderate risk devices, and furthermore, that it cannot be transformed to one” (IOM 2011b, p. 2, in White and Walters 2018, p. 365). Despite these conclusions, 510(k) clearance remains the standard route for bringing medical devices onto the US market.

To empirically study the role of standard-making in shaping the innovation pathway of digital health, we focused on the FDA’s publications on ‘Mobile Medical Applications’ (FDA 2013). For over three years, these FDA guidelines served as the leading reference on whether mobile health apps should be considered medical devices, and they were adopted as reference documents across the world (European Commission 2014a, b). From this starting point, we used an iterative search strategy to collect digital health-related policy, industry, and media documents published in the US between 2008 and 2018. First, we collected regulatory documents, including legislative and policy material. This included material on, for instance, the FDA and digital health, the HITECH Act, the Affordable Care Act (ACA), the NIH Precision Medicine Initiative, the FDASIA Act, and the 21st Century Cures Act, as well as White House press releases and speeches on US health care. Second, we selected relevant articles from two news outlets that are highly influential in US political discourse (The New York Times and Politico). We also selected material from three important technology-specific news websites (MobiHealthNews, FierceHealthcare, TechCrunch) and from different FDA stakeholders (e.g. AdvaMed and the mHealth Regulatory Coalition). The iterative search strategy resulted in 159 documents as the main corpus for our analysis. Finally, the first author carried out fieldwork during a stay in the US. This included participation in health policy conferences, such as the 2018 Wharton Health Care Business Conference, PACT 2018 Philadelphia Alliance for Capital and Technologies, and AcademyHealth’s 2018 Health Datapalooza. The first author also conducted six interviews with scientific experts and stakeholders to gain a more fine-grained understanding of the current debates on digital health, FDA policy, and the US Congress’ regulatory initiatives on health care.

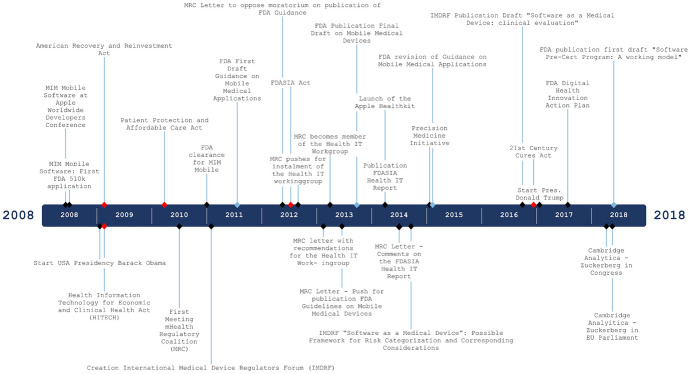

For data analysis, we built on Foucault’s approach of ‘eventualization,’ which stresses the importance of considering the particularity of events. It does so by tracing “the connections, encounters, supports, blockages, plays of forces, strategies, … that at a given moment establish what subsequently counts as being self-evident, universal, and necessary” (Foucault 2002, pp. 226–227). This approach enables us to visualize key ‘events’ in the FDA’s standard-making on digital health. Starting from a visual ‘event’ timeline (see Fig. 1) based on our empirical materials, we performed an inductive content analysis of the collected documents and notes. Further, we conducted a ‘material’ analysis of FDA policy documents. This analysis aimed to understand how these regulations frame digital health actors, narrow and unify the possibilities of what digital health technology could be, and eventually shape the boundaries of digital health’s innovation pathway (Faulkner 2012).

Fig. 1.

Timeline of FDA standard-making for medical digital health technologies (source: authors’ own).

The making of the FDA’s digital health standardization

The Mobile MIM Software ‘event’—opening a space of politics for digital health

Imagine a doctor sitting with her patient, sharing the images with him, iPhone to iPhone. Or an oncologist interactively reviewing radiation treatment. The iPhone has created a new direction for our company. We have taken a complex desktop application, removed it from the realm of black art, and placed it in the hands of physicians and patients. And we only have scratched the surface. (MIM Software Chief Data Officer at WWDC 2008, AppleVideoArchive 2011)

Apple’s World-Wide Developers Conference (WWDC) is one of the most important yearly events of the tech industry. At WWDC 2008, the MIM Software Chief Data Officer was invited on stage next to Steve Jobs to promote the first diagnostic radiology app enabling clinicians to access images at any time (AppleVideoArchive 2011). The Mobile MIM app was welcomed with great enthusiasm and even won the prestigious 2008 Apple Design Award for best iPhone Healthcare & Fitness Application. MIM Software filed an application for FDA 510(k) clearance immediately after its presentation at the conference (July 2008). Nevertheless, it decided in the meantime to offer the app for free in the App Store. This came to the attention of the FDA, which immediately ordered the Mobile MIM app to be taken down due to ‘regulatory concerns’ (Dolan 2011; Wodajo 2011). As the FDA did not have a straightforward framework for approving these technologies, Mobile MIM became entangled in an extensive approval process as a medical device. Eventually, in December 2010, Mobile MIM became the first radiology app with FDA approval (Dolan 2011).

Intended to be deployed both within a medical context and ‘at home,’ the Mobile MIM Software app challenged the (institutionalized) boundary between consumer and medical device products. Numerous social scientists have pointed to the complex hybrid (both medical and lifestyle) character of digital health technologies and the difficulties this might entail for QSS (Lucivero and Prainsack 2015; Faulkner 2018; Joyce 2019; Geiger and Kjellberg 2021). The MIM Software event translated this concern into practical difficulties. Should such apps be allowed in a medical context? If so, under what conditions? Which FDA QSS apply? Who is responsible for oversight in this context?

As such, this ‘event’ opened up a space of politics regarding the ‘medical deviceness’ (Faulkner 2008) of digital health technologies, resulting in controversies around these technologies within the realm of medical device regulatory agencies. Confronted with the MIM Software event in 2008, the FDA was pushed to take a stance on whether DHT should be considered medical devices. As a consequence, the FDA became a frontrunner in DHT QSS. In Europe, for example, the new medical device regulations, which included ‘software as a medical device’, were only enacted in 2017. The draft guidance documents of the International Medical Device Regulators Forum (IMDRF) on ‘Software as a Medical Device’ were not published until 2014. For a long time, the FDA guidance documents provided the sole direction on QSS for medical digital health. Consequently, the FDA’s national standard-making process following this event eventually played an important role in the worldwide delineation of lifestyle and medical digital health technologies.

However, as we outline in the following section, early discussions with the FDA pertaining to DHT got caught up in more general political debates on the role of governmental (FDA) oversight in new markets. Lynch and Cohen (2015a, b) describe the key issue for every FDA policy as a tension between speed and innovation on the one hand, and patient safety on the other. Similarly, DHT pushed the agency to rebalance this tension. Through its reaction to the Mobile MIM Software ‘event,’ the FDA once again allowed the question of whether it should act as safety watchdog or innovation enabler to seep into the negotiations around the agency’s legal authority to oversee digital health technologies.

The controversy around digital health technologies as medical devices

Following the Mobile MIM Software ‘event,’ the FDA was pressured into making sense of this growing group of digital health technologies. In a first effort to provide QSS for medical DHT and to define their scope, while also clarifying its own oversight, the agency eventually decided to issue specific guidance. This guidance would set out which quality and safety standards should be required for medical digital devices, as the agency customarily did for new technologies. According to Cortez (2015), the decision to publish non-binding policy on digital health is not surprising, as “[the] FDA’s framework for software relies on a loose scaffolding of de facto but not de jure rules” (Cortez 2015, p. 447). Since its creation, the FDA has published over three thousand guidelines for industry and staff (Lynch and Cohen 2015a, b). As such, issuing guidelines is a regular FDA practice when dealing with new technological developments. On July 21, 2011, the agency published its first “Draft Guidance for Industry and Food and Drug Administration Staff on Mobile Medical Applications.” The initial strategy was thus to clarify the position of digital technologies within the existing approval framework.

This first draft played a prominent role in the delineation of digital health’s innovation pathway. It did so not only by demarcating the boundary of these technologies, but also because of its ‘enactive performativity’ (Faulkner 2012) as a guideline document. The publication of the draft created a space for controversy, in which the contested boundaries could be opened up for discussion and demarcated anew by the stakeholders involved. Further, the guideline made the field regulatable by limiting the range of options that could be discussed. Moreover, the publication of this first draft also spurred the interest of the US Congress in regulating digital health (Cortez 2015, 2019). In the end, it took the agency almost two years (2011–2013) to publish the final version of this guideline. During that time, American politicians and industry lobbyists used the newly opened space for politics to influence the FDA’s future course in regulating digital health technologies.

The folks involved were not experienced, many of them were—you know—Silicon Valley app developers, maybe they had historically developed games or productivity software—or any number of things. But now they wanted to get into health-related apps, and when that guidance was published, they went a bit crazy as they interpreted it as an expansion of FDA’s regulatory authority over this new and growing area of mobile apps. (First author interview with industry stakeholder, 2020)

After the publication of the first guideline draft, the FDA received 130 comments from industry, mainly asking for more clarity on the scope of the definition of a medical device (Fornell 2013). On the one hand, the MedTech and tech industries and pro-business Republicans pushed for an innovation-friendly approach with minimal FDA intervention, advocating a narrow interpretation of the definition of digital health devices. This group was, however, quite fragmented, notably because of disagreements among industry actors. While all these actors aspired to avoid over-regulation of digital health technologies, tech companies shifted the focus of the debate. Rather than discussing the boundary between medical and lifestyle devices, they debated the authority of the FDA in regulating DHT. In their view, DHT were not covered by the existing medical device legislation, and therefore no FDA clearance procedure should be developed for these technologies.

The traditional MedTech companies, on the other hand, were primarily interested in clarity about the definition of digital health. Their push for clarity about the FDA’s authority over digital health stemmed from discontent “because what was inhibiting the development of the industry was that you had a lot of companies that were developing products that were completely ignoring FDA regulation and saving substantial money” (First author interview with industry stakeholder 2020). To push for clarity in this regard, the mHealth Regulatory Coalition (MRC) was formed in 2010. The MRC is an interest group based in Washington D.C. As described on its website, it serves as “the voice of the mHealth technology stakeholders in Washington” (mHealth Regulatory Coalition, n.d.). According to the same website, the coalition was created to “help make certain the regulatory environment for mHealth technologies allows for innovation while at the same time protecting patient safety” (mHealth Regulatory Coalition, n.d.). From its first meeting in July 2010 onward, the coalition played a significant role in the FDA’s further standard-making process around digital health (Dolan 2010).

In line with the de-regulatory, innovation-friendly stance described above, Republicans sought to pass a bill called the PROTECT Act (2014) to legally restrict the scope of the FDA in mHealth matters (Allen 2014). According to Republican Marsha Blackburn, “it is clear from the recent guidance documents released by the FDA that they intend to maintain regulatory discretion—that is, they can change the rules whenever they want. Guidance is not the way to go.” As such, “they want laws that assure industry it can innovate and sell its products without federal meddling” (quoted in Gold 2015).

Health researchers have taken a critical view of the FDA’s new direction. They have pointed to the lack of scientific evidence and the growing privacy concerns surrounding many mHealth apps, and have therefore argued for broadening and strengthening the FDA’s ‘safety watchdog’ role. This position also found support among patient advocates and the Democratic Party. Democrats submitted a bill (the Software Act) to ensure that the FDA would allocate enough resources to protecting patient safety. They proposed creating an additional Health IT Safety Center to oversee the mHealth applications that fall outside the FDA’s high-risk approvals (Allen 2014).

This highly contested debate stirred up interest in Congress. On July 9, 2012, the Food and Drug Administration Safety and Innovation Act (FDASIA) was enacted, aimed at strengthening the power of the FDA and enhancing innovation (Food and Drug Administration Safety and Innovation Act 2012). To broaden stakeholder engagement, the Act mandated a public–private working group to assist the FDA in publishing a Health IT Report (FDA 2014). This report was to propose a ‘risk-based regulatory framework’ for health information technologies before January 2014 (Carver 2013). Instead of speeding up the publication of a final version of the FDA guideline, however, the Act triggered a new debate on, first, when the report and the guideline had to be published and, second, who would be a member of the stakeholder group. This fierce debate thus overshadowed the discussion on the content of the guideline.

Eventually, the Health IT workgroup, consisting of industry groups such as the MRC and federal agencies such as the FDA, the ONC, and the FCC, issued its report in April 2014 (FDA 2014). The report suggested implementing a ‘risk-based regulatory framework’ for health IT that focused on the functionality of an application rather than on the platform on which it resides. Although the report’s main conclusions were in line with the FDA’s final guidance document (FDA 2013), through this report Congress brought new actors (such as the FCC and the ONC) to the table to discuss the proper understanding of digital health and the FDA’s role in its oversight.

Digital health technologies turned ‘medical’: The FDA’s guideline on ‘mobile medical applications’

Consistent with the FDA’s existing approach that considers functionality rather than platform, the FDA intends to apply its regulatory oversight to only those mobile apps that are medical devices and whose functionality could pose a risk to a patient’s safety if the mobile app were to not function as intended. This subset of mobile apps the FDA refers to as mobile medical apps. (FDA, 2013, p. 4)

On September 25, 2013, the FDA published the long-awaited "Mobile Medical Applications Guidance for Industry and Food and Drug Administration Staff" (FDA 2013).2 After a two-year controversy, this final version was a first effort to standardize the quality and safety of medical DHT. In doing so, it was also one of the world’s first attempts to demarcate the boundary between lifestyle and medical digital health technologies. By attempting to standardize these technologies, the document enabled the development of DHT as an object of government: it reduced the proliferating expectations and hopes around digital health to a ‘regulatable domain’ (Faulkner 2012) and clarified the definition of digital health, while at the same time defining the boundaries of the FDA’s own regulatory oversight.

The concerns raised by industry groups on the time-consuming validation processes and unclear definitions led the FDA to take an innovation-friendly approach in defining the boundary between lifestyle versus medical digital health technologies. As one industry stakeholder described it, the FDA proceeded very cautiously because “they were worried about politics, … they were worried about perception, worried about whether they were overregulating or underregulating” (First author interview with industry stakeholder 2020).

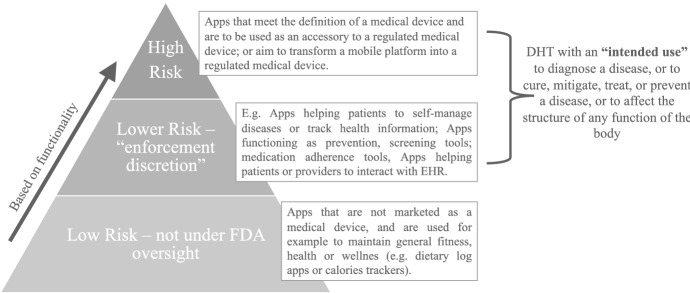

As visualized in Fig. 2, the publication of the guideline on ‘Mobile Medical Applications’ ultimately turned digital health from a buzzword into a regulatable ‘object of government’ in several ways. First, following the advice of the Health IT working group, the FDA decided to develop a specific ‘risk-based regulatory framework’ for health IT, with a focus on the functionality of the application instead of the platform on which it resides. This marked a fundamental break with its existing risk-based framework for regulating medical devices: the FDA now decided to develop a separate risk framework for health IT, based on the function of the device. In doing so, the FDA ended its forty-year tradition of regulating computerized medical devices through its (highly criticized) 510(k) clearance procedure.

Fig. 2.

Overview of the FDA guideline on “Mobile Medical Applications”.

Second, the FDA adopted a rather narrow conceptual interpretation of ‘medical’ digital health devices, while limiting the scope of its own role in regulating them (Tavernise 2013). For a digital health device to be defined as a ‘mobile medical app’, its ‘intended use’ has to be to diagnose a disease; to cure, mitigate, treat, or prevent a disease; or to affect the structure or any function of the body (FDA 2013, p. 8). Further, the agency not only limited the scope of its regulatory supervision; it also opted for a de-regulatory approach toward those apps that meet the definition of a medical device but pose a low risk to patients. For these apps, the FDA applied ‘enforcement discretion’ (FDA 2013, p. 4), meaning that it does not intend to enforce its regulatory requirements for them. Instead of defining the meaning of ‘low risk’, the FDA chose to add to the guidance document a list of examples of apps that would be eligible for enforcement discretion. In light of the controversy over what should be considered medical or lifestyle, the FDA did acknowledge that apps encouraging a healthy lifestyle could be medical devices (if they meet the intended-use criteria). However, even if such apps meet the definition of a medical device, the FDA considers apps encouraging healthy eating, weight loss, or tracking sleep patterns, among others, to be ‘low risk’ devices; they therefore meet the enforcement discretion criteria and do not have to go through the complex approval procedure (FDA 2013, p. 25).

In this context, the FDA issued an additional guideline on July 29, 2016: "General Wellness: Policy for Low-Risk Devices. Guidance for Industry and Food and Drug Administration Staff" (FDA 2016). This guideline was meant to clarify which products promoting a healthy lifestyle the FDA considers ‘low risk’. According to Simon Choi, Senior Science Health Advisor at the FDA, "The final guidance takes a hands-off approach to the regulation of low risk, general wellness products that only promote a healthy lifestyle or promote a well-known association between healthy lifestyle and a certain chronic disease or condition. FDA will continue to focus its oversight on products that are invasive, implanted or pose a greater risk to patients" (FDA 2016, p. 3).

The boundary-making between lifestyle and medical DHT that accompanied the FDA’s attempt to standardize the quality and safety of medical DHT has thus shaped the further innovation pathway of digital health technologies, as it restricted the ‘pool’ of health applications and devices presented as ‘medical’ digital health applications. This makes FDA approval a quality label for only a few health applications. By being labelled by industry as ‘digital therapeutics’ (Geiger and Kjellberg 2021), these medical digital health devices differentiate themselves from digital health applications that are not medical devices. At the same time, this narrow definition leaves a great many health applications outside FDA oversight, meaning that the quality or effectiveness of their technology is not subject to any compliance checks. In this respect, the FDA did classify a large proportion of digital health technologies as medical devices, but, based on the belief that they are low-risk, has pulled back as QSS gatekeeper for these devices.

The demarcation of digital health in the FDA’s regulatory practice: The 21st Century Cures Act, the Digital Health Innovation Action Plan, and the Pre-Certification Program

While FDA had already taken a more hands-off approach to lower-risk digital health technology, including software, we will update current policies and issue new guidance to be consistent with and provide greater clarity on the 21st Century Cures Act software provision. (FDA 2017a, p. 4)

In December 2016, in his final weeks in office, President Obama signed the 21st Century Cures Act, intended to usher in a new era of ‘precision medicine.’ At the beginning of the FDA’s standard-making for digital health, the agency’s interest in these technologies was rather incidental, as reflected by the Mobile MIM Software case. Its initial intention was to incorporate DHT into the FDA’s existing approval policy. With the enactment of the 21st Century Cures Act, however, the US Congress enlarged the FDA’s authority in governing digital health technologies. From then on, digital health became an explicit high-priority policy objective (Cortez 2019), for which the agency designed an actual digital health policy plan.

The 21st Century Cures Act provided 4.8 billion dollars in spending over 10 years for new medical research. Of this amount, 1.4 billion dollars was reserved for the Precision Medicine Initiative, as it was originally called (Thompson 2016). Furthermore, the Act enabled the FDA to rethink its high evidence standards in certain situations, aiming to facilitate the approval of new drugs and devices (Thompson 2016). According to the FDA’s website, the Act "is designed to help accelerate medical product development and bring new innovations and advances to patients who need them faster and more efficiently" (FDA 2020a), and it therefore reinforces the agency’s innovation-enabling approach to new technologies.

While the MIM Software event and the controversy around the draft guidance document "talked [medical digital health] into existence" (Pickersgill 2019, pp. 25–26), and the 2013 guidance document contributed to the "delimitation" of medical DHT as an object of government (Lezaun 2006), the opening of this new legislative space led the FDA to demarcate (Lezaun 2006), or develop, a specific policy for medical digital health technologies within its regulatory practices. At the 2018 Health Datapalooza, Scott Gottlieb outlined the FDA’s "Digital Health Innovation Action Plan" (2017), aimed at providing guidance to modernize its policies (in accordance with the 21st Century Cures Act), increasing the expertise of its digital health staff, and developing a digital health software precertification pilot program (Pre-Cert). While the guidance document on ‘mobile medical applications’ helped shape the conceptual boundaries of "medical" digital health devices (FDA 2013), the FDA’s "Digital Health Innovation Action Plan," through the development of its specific approval procedure, standardized new forms of evidence and expertise as regulatory science. As the former FDA Commissioner claimed:

The challenge FDA faced in the past is determining how to best regulate these non-traditional medical tools with the traditional approach to medical product review. We envision and seek to develop through the Pre-Cert for Software Pilot a new and pragmatic approach to digital health technology. Our method must recognize the unique characteristics of digital health products and the marketplace for these tools, so we can continue to promote innovation of high-quality, safe, and effective digital health devices. (FDA Commissioner Gottlieb 2017).

On July 27, 2017, the FDA announced its "Pre-Cert for Software Pilot Program," created to develop an even more tailored (faster and more efficient) approach to validating digital health technologies. Instead of embedding digital health into the existing approval procedures, the agency thus developed a ‘digital health specific’ validation procedure. Rather than validating the quality of each product separately, the FDA would now examine the ‘culture of quality’ of the applying companies (Gottlieb 2017). At the end of September 2017, the FDA announced the nine companies that would participate in the Pre-Cert pilot program: Apple, Samsung, Verily, Pear Therapeutics, Tidepool, Phosphorus, Roche, Johnson & Johnson, and Fitbit (Comstock 2017). According to Gottlieb, "these pilot participants will help the agency shape a better and more agile approach toward digital health technology that focuses on the software developer rather than an individual product" (Comstock 2017).

Looping back: Reconfiguring the FDA through digital health standard-making

FDA plays a critical role in supporting this continued innovation as part of our mission to protect and promote public health. … Doing so means that we must also recognize that FDA’s usual approach to medical product regulation is not always well suited to emerging technologies like digital health, or the rapid pace of change in this area. If we want American patients to benefit from innovation, FDA itself must be as nimble and innovative as the technologies we’re regulating. (FDA Commissioner Gottlieb, 2018)

April 26, 2018, Washington D.C. Back to the Health Datapalooza, attended by the first author and more than 1,200 US policymakers, health professionals, researchers, and entrepreneurs. At one of the numerous round tables in the ‘International Ballroom’ of the Hilton (a windowless, slightly outdated, enormous conference hall), former FDA Commissioner Gottlieb continued his keynote lecture on "Transforming FDA’s Approach to Digital Health." He envisioned the role the FDA ought to play in the expansion of digital health technologies (Fieldnotes first author 2018). This keynote lecture exemplified the other side of the coin of the FDA’s attempt to standardize medical digital health technologies. The following subsections illustrate how the events around digital health technologies between 2008 and 2018 described above caused the FDA to rethink its own organizational culture and mode of functioning.

FDA’s shift from safety watchdog to innovation enabler

In 2008, after the MIM Software app was withdrawn from the App Store, it initially appeared as if the FDA was planning to take a ‘safety watchdog’ (Cortez 2015) stance toward DHT. Following the withdrawal, the MIM Software company became entangled in a painful approval process for its medical device: after the company applied in July 2008, it took the FDA 221 days (instead of 90) to reply. When the company finally received a response in January 2009, it was not the one it had hoped for: the FDA declined the first 510(k) clearance based on the lack of ‘substantial equivalence to a predicate device’ (Wodajo 2011).

In an interview with iMedicalApps.com, the Chief Data Officer at MIM explained that after requesting information on the decision from one of the FDA’s directors, they "were told that the decision was unanimous, and that even a de novo3 would be very unlikely to succeed, so don’t bother appealing" (Wodajo 2011). The company nevertheless decided to apply for a ‘class III Premarket approval,’ which was eventually granted in December 2010. Mobile MIM thus became the first radiology app cleared by the FDA (Dolan 2011). In the process, however, the FDA adhered to its existing approval system for medical devices, resulting in Mobile MIM being classified as a ‘high risk’ device for which the developers had to complete a complex, time-intensive clinical trial procedure similar to the drug approval process.

Although the US Congress had been drawing attention to ‘computerized medicine’ for some time, it never clarified the FDA’s authority to regulate these technologies in legislation (Cortez 2015). Despite the FDA’s attempts in the 1980s to draft a policy for computer products, the agency relied on its 1976 risk-based approach to computerized medical devices for over forty years (Cortez 2019), an approach it also applied to Mobile MIM. While acting as a ‘safety watchdog’ for some apps (e.g. Mobile MIM), however, the FDA also allowed many DHT to enter the market without requiring any FDA approval. This resulted in much ‘unclarity and unease’ as to its role in regulating DHT (First author interview with industry stakeholder, 2020).

It was only after the controversies surrounding the publication of the draft guideline in 2011 that the FDA gradually started to ‘break’ (Faulkner and Poort 2017) with its prevailing framework and began to rethink its forty-year policy for computerized devices. The mHealth coalition, for example, urged the FDA in 2011 to relax some of the restrictions proposed in the first draft, pointing to the highly expensive and time-consuming process of proving the effectiveness of medical digital health devices. The coalition asked the FDA to publish a final document only after having developed a clear framework "that can accommodate the rapid pace of innovation in the sector" (Norman 2012). To accommodate these industry concerns, the FDA stated in the final guideline that it would not enforce its oversight for a large part of DHT (cf. enforcement discretion), in order to make the approval pathway for DHT less costly and less time-consuming, while acknowledging that a number of these technologies would actually meet the 1976 statutory definition of a medical device (FDA 2013). According to an industry stakeholder, the new framework "needed to be risk-based. So that higher risk products got regulated and lower-risk products were left unregulated" (First author interview with industry stakeholder 2020).

In this way, the FDA, in its effort to standardize DHT, effectively discarded its existing risk framework for medical devices. Following the industry’s plea for a risk-based framework and the advice of the Health IT working group, the agency decided to develop a specific ‘risk-based regulatory framework’ for health IT. In contrast to the traditional risk-based framework for medical devices, the new approval framework introduced in the FDA guideline on "Mobile Medical Applications" (FDA 2013) focuses on the functionality of the application instead of on the intended use and risk level of each specific device (Bauman 2012). By developing an approval framework based on the functionality of the device, the FDA embraced the complexity of new technologies and created room for flexibility, leaving considerable leeway within its QSS framework for dealing with "the rapid pace of innovation in the sector" (Norman 2012). In doing so, the FDA ended its forty-year tradition of regulating computerized medical devices through its (highly criticized) 510(k) clearance procedure. As one industry stakeholder argued:

It is a huge change. … They want to move to a system where they don’t look at all that evidence and instead basing their system on who is supplying rather than what’s the subject of the application. Congress has to say that’s the way to market in order for the FDA to have the power to do it. They don’t have the power to do it. (First author interview with industry stakeholder, 2020).

Arguably one of the most significant transformations in the FDA’s mode of operation, then, was its development of a pre-certification program for digital health companies. Instead of approving DHT based on their product specificities, the Pre-Cert experiment allowed digital health technologies to enter the US market based on a company-based ‘culture of quality’ label (Gottlieb 2017), which would speed up the approval process for digital health medical device companies. An industry advocate pointed to the subjective nature of this policy framework: how can they "with precision say which company has the right culture and which company has the wrong culture and make a decision then on who’s to get to market first?" (First author interview with industry stakeholder 2020). However, while this ‘regulatory experiment’ (Cortez 2019) opened up a radically new way of approving innovative software-driven technologies, for now pre-certification is running only as a pilot project. Since new QSS approval procedures must be authorized by Congress, the FDA will first have to receive the authority from Congress to approve technologies on the basis of a ‘culture of quality’ label (Gottlieb 2017) attributed to the company.

The implementation of enforcement discretion for low-risk DHT, the development of a risk-based approach based on the functionality of the technologies, and the pre-certification program, all of which resulted from the FDA’s standard-making process for medical DHT, resonate with the FDA’s new vision of being versatile toward technological innovation in order to address the rapid pace of change in the area of digital health technologies (Gottlieb 2018). As such, the digital health standard-making process has enabled a shift within the FDA from being a reluctant ‘safety watchdog’ for computerized products (Cortez 2015) toward being an innovation enabler in the regulatory oversight of digital health technologies. As a result, tech companies have gained a more robust position within the stakeholder discussions regarding QSS for DHT, while also evolving into producers of valuable epistemic knowledge alongside pharmaceutical companies, patient groups, and health professionals.

Embracing alternative forms of regulatory knowledge

Some of that data [real-world data] is going to be really valuable, and valuable in a way that clinical data never will be. This data will be [gathered] real-life where clinical trial data is [resulting from] a highly cleaned, unrealistic arrangement. So, there is real value in that. It can not only help with pre-market review but it can help us to ensure that the software continues to be safe and effective over time. (First author interview with industry stakeholder, 2020).

As mentioned in the section above, the enactment of the 21st Century Cures Act led the FDA to allow ‘alternative,’ or real-world, evidence into its approval frameworks for new drugs and devices (Hogle 2016b, 2018). In doing so, the Act opened up a new ‘window of opportunity’ for DHT, as it allowed the FDA to develop ‘alternative’ forms of evidence within its approval frameworks. First, as the Digital Health Innovation Action Plan aims to adapt the FDA’s existing digital health policies to the 21st Century Cures Act, this document introduces the use of ‘patient experience data,’ as "collected by patients, parents, caregivers, patient advocacy organizations, disease research foundations, researchers, and drug manufacturers" (21st Century Cures Act, Sect. 3001), and "real world evidence," or "data regarding the usage, or the potential benefits or risks, of a drug derived from sources other than randomized clinical trials," for manufacturers to prove the effectiveness and safety of their devices (21st Century Cures Act, Sect. 505F(b)). The latter can also involve information retrieved from social media or patients’ medical histories (Hogle and Das 2017). The 21st Century Cures Act has thus broadened the array of legitimate types of evidence that ‘medical’ digital health technologies can use to prove their safety and efficacy to the FDA.

Further, as contended by Faulkner and Poort (2017), experts (in the broad sense) are instrumental in the way a standard-making process evolves. As outlined above, through the negotiation of the digital health approval framework and the development of the Pre-Cert program, technology companies have gradually evolved into legitimate ‘experts’ or partners in FDA policymaking. This, in turn, has significant implications for regulatory science. By inscribing these new types of evidence into law, the 21st Century Cures Act legitimates them for the FDA’s regulatory reviews and, consequently, marks a broader knowledge paradigm shift within the US healthcare system from evidence-based approval approaches toward alternative, more data-driven and value-based forms of evidence (Hogle 2016a, 2016b; Hogle and Das 2017).

Similar to what Cambrosio et al. (2017) refer to as the interconnections between different regulatory interventions, these alternative types of evidence not only play a major role in the standard-making process of digital health, but are also deployed in FDA oversight of other medical technologies, such as regenerative medicine and direct-to-consumer genetic testing: innovative technologies deemed unfit for approval within the established and ‘rigid’ clinical trial format of regulatory agencies (Hogle and Das 2017; Webster 2019). In these contexts, DHT emerge as the ideal measuring tool for generating ‘alternative’ quality and safety evidence for these new technologies.

Building on the FDA’s innovation-enabling approach to DHT and the developments around alternative forms of evidence, its standard-making process involved not only an attempt to set the boundaries within which DHT can legitimately function as ‘medical devices.’ In line with what Pickersgill (2019) calls ‘performative nominalism,’ the FDA’s standard-making process also, and simultaneously, gave rise to a reconfiguration of the FDA’s identity. The Health Datapalooza keynote speech by FDA Commissioner Gottlieb illustrated the agency’s shifting stance from being a ‘safety watchdog’ to a flexible ‘innovation enabler’ for software-related technologies. The limited scope of FDA oversight of medical digital health technologies, its enforcement discretion, the ‘digital health’-adapted risk framework, and its pre-certification program further highlight the FDA’s embrace of both software and its developers within the regulatory space of medical devices. In a similar move, as the 21st Century Cures Act opened up a ‘window of opportunity’ allowing the agency to embed (in its digital health action plan) the use of ‘alternative’ forms of evidence, the FDA embraced new (tech) experts and legitimated their knowledge within its approval procedures. As such, the standard-making process looped back, co-producing the FDA in its role as a regulatory agency (Jasanoff 2005; Cambrosio et al. 2014, 2017), and giving way to a broader paradigm shift from evidence-based practices toward real-world evidence knowledge practices.

Discussion and conclusions

As tech companies have secured a foothold in the health domain by providing new ways of collecting, analyzing, and storing health data (Sharon 2016; van Dijck et al. 2018), the promise of digital health has become an indispensable aspect of public healthcare and public health policy worldwide. Promising to empower patients, improve the quality of care, and contribute to more cost-efficient and ‘personalized’ health care systems, DHT have been put forward as a panacea for Western healthcare systems (PwC 2013; PwC 2014; Deloitte 2015; Roland Berger 2016; Montgomery et al. 2017; OECD 2017; EC 2018; OECD 2019). In contrast, social scientists have questioned the social import of these technologies in relation to individual privacy (e.g. Neff and Nafus 2016; Lupton 2017; Marelli, Lievevrouw and Van Hoyweghen 2020), autonomy (e.g. Lupton 2013; Crawford et al. 2015), and inequality and discrimination (e.g. Rich, Miah and Lewis 2019). However, while a range of social-science research has drawn attention to the pressure exerted by digital health technologies on the socio-legally constructed boundary between lifestyle and medical products (Lucivero and Prainsack 2015; Faulkner 2018; Joyce 2019; Geiger and Kjellberg 2021), the role of regulators in attempting to position DHT across this boundary has hardly been studied from a social-science angle.

Through an analysis of FDA policy documents, press releases, newspaper articles, and industry reports, as well as through fieldwork, we zeroed in on this issue by exploring and documenting the highly ‘political’ character (Barry 2001) of the FDA’s quality and safety standard-making processes for governing medical digital health innovation, which turned these technologies into regulatable objects (Lezaun 2006). While the political character of this process is often black-boxed once new policies have been (temporarily) consolidated, we argue that it is paramount for social scientists to unravel such complex processes of ‘standards-in-the-making’ (Latour 1987), as they represent a crucial element for capturing how the innovation pathway of digital health technologies unfolds. Moreover, through its looping-back effect (Jasanoff 2005; Cambrosio et al. 2014, 2017), the standard-making process also enables a more fine-grained understanding of changing modes of functioning within existing regulatory institutions.

Reflecting on the broader societal consequences, the FDA’s efforts to standardize medical digital health technologies seem to open up new spaces for contestation and controversy in the area of regulatory science. In attempting to (temporarily) define the position of medical DHT on the lifestyle/medical boundary, the FDA standard-making process has given rise to important new social issues around the quality and safety of these ‘medical’ digital health devices, the development of new actor configurations within the healthcare domain, and the politics around the development of new knowledge/power regimes within US health care.

First, there remains considerable concern about the quality and safety of these medically framed digital health technologies. A recent controversy involving the "Natural Cycles" app in Sweden, where an EU-certified birth control app was seen as the cause of thirty-seven unwanted pregnancies, exemplifies the persisting difficulties in guaranteeing the quality and safety of QSS-approved digital health medical devices (Wong 2018). In this regard, the FDA’s shifting stance from ‘safety watchdog’ to flexible ‘innovation enabler’ for software-related technologies, together with the statutory space (through the 21st Century Cures Act) for the uptake of alternative forms of evidence, illustrates how regulatory agencies such as the FDA have to a certain extent embraced the uncertainties that come with new DHT, as well as with innovative pharmaceuticals, treatments, and devices.

This innovation-friendly approach to pre-market approvals would require more stringent monitoring once these products are authorized for the market. However, allegedly for budgetary reasons, the FDA currently fails to enforce its own guidelines (First author interview with industry stakeholder 2020). This once again calls into question the procedures for ensuring the quality and safety of these devices: an innovation-friendly, faster system on the pre-market approval side would need to be accompanied by an extensive post-market monitoring framework. Without such a framework, the FDA not only risks its reputation; it also creates potentially serious safety risks. Moreover, it remains to be seen how the FDA’s pre-certification system, which approves companies instead of products, can be integrated into this post-market monitoring system.

The delicate balance between safety and innovation, including the importance of enforcement, has become all the more apparent in light of the recent COVID-19 pandemic. In attempting to manage the pandemic, the FDA immediately established provisional ‘fast-track’ approval procedures for software-related medical devices designed to cure, diagnose, and detect the virus (FDA 2020b). Furthermore, in the quest for a vaccine, the agency has allowed the use of alternative, real-world evidence to expedite vaccine approvals for COVID-19 (FDA 2020c). While these FDA initiatives partly originate in the above-discussed new vision of being innovation-enabling, they risk exacerbating the ongoing issues of quality, safety, and post-market enforcement. As the original terms of evidence have not yet been clearly determined, it will be quite a challenge to approve fast-track devices safely. Moreover, it is yet to be determined how these newly approved devices will be revised after the exceptional COVID-19 situation, and how this situation will affect future QSS enforcement for DHT.

Secondly, while the FDA attempted to consolidate the boundary between medical and lifestyle digital health products, the ‘events’ around digital health’s standard-making process gave rise to new actor configurations within the US health care space. These new constellations are perhaps best reflected in the fact that tech companies, specialized in creating products beyond health care, have become legitimate FDA stakeholders throughout the standard-making process around DHT. These companies have brought an entirely new area of expertise to the discussions on making standards for digital health, resulting in a power shift in relation to traditional medical device companies, as exemplified by the companies the FDA selected for its Pre-Cert pilot program. Aside from pharmaceutical giants such as Johnson & Johnson and Roche, the program also includes big tech companies such as Apple, Samsung, Verily, and Fitbit (Comstock 2017). In the face of the rapid pace of health innovation (e.g. artificial intelligence, DTC genetic testing, regenerative medicine), these standard-making ‘events’ (Foucault 2002) have opened up the health space for digital health technologies, while setting the stage for an influx of new stakeholders and types of data into the existing health realm of medical professionals, pharmaceutical and medical device companies, insurance companies, and patient organizations. Therefore, we will have to consider ways to integrate hybrid actors such as tech firms into the health care system, while keeping a watchful eye on the issues these new constellations bring with them: the protection of privacy, the risk of algorithmic biases and new social inequalities, and the quality and safety of these products.

Finally, by allowing alternative forms of evidence to be used in FDA approval procedures, the 21st Century Cures Act enables lifestyle data (collected by DHT) to be considered a legitimate form of knowledge within medical approval systems, alongside the gold standard of randomized controlled trials (RCTs). While this marks a knowledge paradigm shift within regulatory agencies, from evidence-based approval approaches toward alternative, more data-driven and value-based forms of evidence (Hogle 2016a, 2016b; Hogle and Das 2017), it remains to be seen how these new power/knowledge relations will evoke new understandings of ‘the gold standard’ for regulatory science, and how they will affect regulatory agencies’ balancing act between innovation and safety in the approval procedures for innovative pharmaceuticals and devices. In this sense, the ‘events’ of the FDA’s standard-making process outlined in this article not only prompted organizational and technological innovation; they also opened up new spaces of controversy, giving rise to new social contestations.

To conclude, while the emergence of digital health technologies is often attributed to the ‘vanguard visions’ (Hilgartner 2015) of prominent Silicon Valley tech developers, this article demonstrates that the making of quality and safety standards by regulatory agencies has been a crucial constitutive element in shaping the innovation pathways of digital health. Moreover, this standard-making process has considerably ‘looped back’ on and reconfigured the mode of functioning of the FDA itself. These new constellations have raised new issues regarding the quality and safety of ‘medical’ digital health devices, while entailing significant transformations in health categorizations, expertise, and power/knowledge relations within regulatory agencies. Following these insights, it is paramount for social scientific research to account for the ‘hybrid’ network of material, social, and economic actors in the shaping of new technologies, while also paying close attention to the institutional reconfigurations of standard-making processes within the (ongoing and international) development of a digital health innovation pathway, and to the openings this creates for other innovations, such as the recent COVID-19 vaccines, developments in regenerative medicine, and the expansion of ‘personalized medicine.’

Acknowledgements

Special thanks to Tom Baker for the insightful discussions on the US health care system and for hosting Elisa Lievevrouw for six months at the University of Pennsylvania Law School during this research. The authors would also like to thank the anonymous reviewers for their insightful suggestions and feedback on previous versions of the article.

Biographies

Elisa Lievevrouw

is an FWO PhD Fellow with the Life Sciences & Society Lab at the Centre for Sociological Research (KU Leuven). Her doctoral research, at the intersection between Foucauldian, science and technology, and socio-legal studies, focuses on the social aspects of digital health policy-making in the US and the EU.

Luca Marelli (PhD, 2016)

is a Senior Research Fellow with the Life Sciences & Society Lab at the Centre for Sociological Research, KU Leuven. He also holds appointments as Adjunct Professor of Bioethics at the Department of Medical Biotechnologies and Translational Medicine, University of Milan, and Visiting Research Fellow at the Department of Experimental Oncology, European Institute of Oncology IRCCS. His main research activities, at the intersection of Science & Technology Studies (STS), data governance and biomedical research policy, focus on the ethical, legal, and social aspects of contemporary data-intensive biomedicine and digital health.

Ine Van Hoyweghen

is a Research Professor at the Centre for Sociological Research (KU Leuven) where she directs the Life Sciences & Society Lab. She is a leading and internationally renowned researcher in sociology of biomedicine, science and technology studies (STS), and governance of health care innovation.

Funding

This work was supported by the Research Foundation Flanders (FWO), under the PhD Fellowship Fundamental Research (grant number 11C8520N), and the Odysseus Project ‘Postgenomic Solidarity. European Life Insurance in the Era of Personalised Medicine’ (grant number 3H140131), and by the European Union’s Horizon 2020 research and innovation program, under the Marie Sklodowska-Curie grant agreement number 753531 (LM). We confirm that the manuscript consists of original material and that it is not under review elsewhere.

Declarations

Conflict of interest

The authors declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Footnotes

Law-making refers to “both the negotiating processes of drafting a framework for making legal decisions … and the interpretative process geared towards ‘implementing’ regulations” (Faulkner and Poort 2017, p. 210).

The guidance document has since been updated on February 9, 2015, and September 27, 2019. The latest update adapted the definition of “device” in accordance with the US 21st Century Cures Act, clarifying the function-specific definition of “device.” As such, “mobile application” was changed into “software application” (FDA 2019).

A “De Novo application” is “an alternate pathway to classify novel medical devices that had automatically been placed in Class III after receiving a ‘not substantially equivalent’ (NSE) determination in response to a premarket notification [510(k)] submission” (FDA 2021).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Elisa Lievevrouw, Email: elisa.lievevrouw@kuleuven.be.

Luca Marelli, Email: luca.marelli@kuleuven.be.

Ine Van Hoyweghen, Email: ine.vanhoyweghen@kuleuven.be.

References

- Abraham, J. 1995. Science, Politics, and the Pharmaceutical Industry: Controversy and Bias in Drug Regulation. London: UCL Press.

- Abraham, J., and C. Davis. 2007. Deficits, expectations and paradigms in British and American drug safety assessments: prising open the black box of regulatory science. Science, Technology, & Human Values 32 (4): 399–431. https://doi.org/10.1177/0162243907301002.

- Allen, A. 2014. Health Apps boom while regs lag. Politico, 3 June. https://www.politico.com/story/2014/06/health-apps-boom-while-regs-lag-107345_Page2.html

- AppleVideoArchive. 2011. Apple WWDC 2008. Youtube.com, 31 December. https://www.youtube.com/watch?reload=9&v=AHolDv1plk0

- Australian Digital Health Agency. 2018. Australia’s National Digital Health Strategy. Safe, seamless and secure: evolving health and care to meet the needs of modern Australia. https://conversation.digitalhealth.gov.au/sites/default/files/adha-strategy-doc-2ndaug_0_1.pdf

- Barry, A. 2001. Political Machines: Governing a Technological Society. London and New York: The Athlone Press.

- Bauman, J. 2012. The ‘Déjà Vu Effect’: evaluation of United States medical device legislation, regulation, and the Food and Drug Administration’s contentious 510(k) program. Food and Drug Law Journal 67 (3): 337–361.

- Bensaude Vincent, B. 2014. The politics of buzzwords at the interface of technoscience, market and society: the case of ‘public engagement in science’. Public Understanding of Science 23 (3): 238–253. https://doi.org/10.1177/0963662513515371.

- Blasimme, A., E. Vayena, and I. Van Hoyweghen. 2019. Big data, precision medicine and private insurance: a delicate balancing act. Big Data & Society: 1–6. https://doi.org/10.1177/2053951719830111.

- Borup, M., et al. 2006. The sociology of expectations in science and technology. Technology Analysis & Strategic Management 18 (3–4): 285–298. https://doi.org/10.1080/09537320600777002.

- Bowker, G.C., and S.L. Star. 1999. Sorting Things Out: Classification and Its Consequences, 2nd print. Cambridge, MA: MIT Press.

- Brown, N. 2003. Hope against hype: accountability in biopasts, presents and futures. Science Studies 16 (2): 3–21.

- Businesswire. 2020. Global Digital Health Market 2020–2024. Evolving Opportunities With Alphabet Inc. and Apple Inc. Technavio. 4 March. https://www.businesswire.com/news/home/20200303005780/en/Global-Digital-Health-Market-2020-2024-Evolving-Opportunities

- Callon, M. 1998. The Laws of the Markets. Oxford: Blackwell (Sociological Review Monographs).

- Cambrosio, A., P. Keating, and N. Nelson. 2014. Régimes thérapeutiques et dispositifs de preuve en oncologie : l’organisation des essais cliniques, des groupes coopérateurs aux consortiums de recherche. Sciences Sociales et Santé 32 (3): 13–42. https://doi.org/10.3917/sss.323.0013.

- Cambrosio, A., P. Bourret, and P. Keating. 2017. Opening the regulatory black box of clinical cancer research: transnational expertise networks and ‘disruptive’ technologies. Minerva 55 (2): 161–185. https://doi.org/10.1007/s11024-017-9324-2.

- Carpenter, D. 2010. Reputation and Power: Organizational Image and Pharmaceutical Regulation at the FDA. Princeton and Oxford: Princeton University Press.

- Carver, K. 2013. Coalition Seeks to Delay Mobile Medical Apps Guidance. Updates on Development for medical devices—Covington & Burling LLP. https://www.cov.com/en/practices-and-industries/practices/regulatory-and-public-policy/food-drug-and-device/medical-devices-and-diagnostics

- Cochoy, F., M. Giraudeau, and L. McFall. 2010. Performativity, economics and politics: an overview. Journal of Cultural Economy 3 (2): 139–146. https://doi.org/10.1080/17530350.2010.494116.

- Comstock, J. 2017. Apple, Fitbit, Samsung, and Verily among FDA’s picks for precertification pilot. MobiHealthNews, 26 September. https://www.mobihealthnews.com/content/apple-fitbit-samsung-and-verily-among-fdas-picks-precertification-pilot

- Cortez, N. 2015. Analog agency in a digital world. In FDA in the Twenty-First Century: The Challenges of Regulating Drugs and New Technologies, ed. H.F. Lynch and I.G. Cohen. New York: Columbia University Press.

- Cortez, N. 2019. Digital health and regulatory experimentation at the FDA. Yale Journal of Health Policy, Law, and Ethics 18 (3): 4–26.

- Crawford, K., et al. 2015. Our metrics, ourselves: a hundred years of self-tracking from the weight scale to the wrist wearable device. European Journal of Cultural Studies 18 (4–5): 479–496.

- 21st Century Cures Act. 2016. US 114th Congress. https://www.congress.gov/bill/114th-congress/house-bill/34

- Davis, C., and J. Abraham. 2011. Desperately seeking cancer drugs: explaining the emergence and outcomes of accelerated pharmaceutical regulation. Sociology of Health & Illness 33 (5): 731–747. https://doi.org/10.1111/j.1467-9566.2010.01310.x.

- Deloitte. 2015. No appointment necessary: How the IoT and patient-generated data can unlock health care value. https://www2.deloitte.com/tr/en/pages/life-sciences-and-healthcare/articles/internet-of-things-iot-in-health-care-industry.html

- Demortain, D. 2011. Scientists and the Regulation of Risk: Standardising Control. Cheltenham, UK: Edward Elgar Publishing.

- Demortain, D. 2017. Expertise, Regulatory Science and the Evaluation of Technology and Risk: Introduction to the Special Issue. Minerva 55(2): 139.

- Dolan, B. 2010. mHealth Regulatory Coalition’s first meeting. MobiHealthNews, 14 July. https://www.mobihealthnews.com/8358/update-mhealth-regulatory-coalitions-first-meeting

- Dolan, B. 2011. FDA clears first diagnostic radiology app, Mobile MIM. MobiHealthNews, 4 February. https://www.mobihealthnews.com/10173/fda-clears-first-diagnostic-radiology-app-mobile-MIM

- European Commission. 2014a. Green Paper on mobile Health (“mHealth”). https://ec.europa.eu/digital-single-market/en/news/green-paper-mobile-health-mhealth

- European Commission. 2014b. Commission Staff Working Document on the existing EU legal framework applicable to lifestyle and wellbeing apps Accompanying the document GREEN PAPER on mobile Health (“mHealth”). https://op.europa.eu/en/publication-detail/-/publication/8dcf22a2-c091-11e3-86f9-01aa75ed71a1/language-en