Abstract

Purpose of Review

With the rapid growth and development of robotic technology, its implementation in medical fields has also been significantly increasing, with the transition from the period of mainly using surgical robots to the era with combinations of multiple types of robots. Therefore, this paper introduces the newest robotic systems and technology applied in operating rooms as well as their architectures for integration.

Recent Findings

Besides surgical robots, other types of robotic devices and machines such as diagnostic and treatment devices with robotic operating tables, robotic microscopes, and assistant robots for surgeons are emerging one after another, improving the quality of surgery from different aspects. As the number and types of robots increase, platforms for their integration have also been proposed and are spreading.

Summary

This review paper presents state-of-the-art robot-related technology in the operating room. Robotic platforms and robot components which appeared in the last decade are described. In addition, system architectures for the integration of robots as well as other devices in operating rooms are also introduced and compared.

Keywords: Operating room, Robot technology, Robotic surgery

Introduction

Similar to AI (artificial intelligence), robotics has become one of the “game-changer” technologies of the present. Both have shown remarkable capability and impact on the world as well as huge potential to transform human life; AI works mainly in cyberspace (an intangible data space), whereas robotics works mainly in physical space. Medical care is no exception. The application of robotics in the operating room (OR for the rest of this paper) started around the 1990s with the release and rapid spread of surgical robots. FDA-approved representatives include the da Vinci Surgical System [1] (1990~), NeoGuide Colonoscope [2] (2007~), Sensei X Robotic Catheter System [3] (2007~), FreeHand 1.2 [3] (2010~), Monarch Platform [4] (~2014), Flex® Robotic System [5] (2018~), and so on.

However, with the improvement of robotic technology, the role of robots in the OR has changed greatly, from the individual use of one or two surgical robots to integrated systems including multiple robotic devices that support the surgery from different aspects and levels. That is to say, a new era of surgery has begun during the latest decade (2010~2020), with the appearance of the new concept “Robotic Operating Room.” Meanwhile, to meet the growing need for device management and for total integration of the whole OR, architectures for robotic ORs have also been proposed and are spreading toward standardization.

Therefore, this review paper first presents two typical up-to-date instances of a robotic OR. After that, the newest robotic components working or to be working in ORs are also introduced and discussed. Finally, three projects launched for the integration of robotic/hybrid OR are introduced.

Robotic Operating Rooms

In this section, two representative robotic ORs are mainly introduced: SCOT and AMIGO Suite. Although they share various similar features and devices, SCOT concentrates more on the integration of information and IoT technology connecting robotic devices, while the AMIGO suite relies more on the integrated system and combination of automatic devices on a hardware level.

SCOT

Aiming at a high survival rate and prevention of postoperative complications, the decision-making in excision surgery of malignant brain tumors is dependent on information obtained from various medical devices, including intraoperative MRI, operation navigation system, nerve monitoring device, intraoperative rapid diagnosis device (intraoperative flow cytometer), and so on. However, these devices and machines are mostly working independently in a stand-alone manner without data interaction, and this fact makes it difficult for surgeons to understand and manage all information in a unified way. To tackle this issue, the SCOT (Smart Cyber Operating Theater) project started in 2014, and its state-of-the-art flagship model OR Hyper SCOT was introduced to Tokyo Women’s Medical University in 2019 (Fig. 1), with the implementation of robotic technology and basis for AI analysis to be added in future [6••].

Fig. 1.

Overview of Hyper SCOT [6••]. (From [6••], with permission of Hitachi, Ltd.)

There are four main goals for SCOT: packaging, networking, robotization, and AI assistance. The original concept of SCOT was proposed in 2012, and SCOT has so far evolved into three versions: (1) Basic SCOT (packaging of basic surgical devices); (2) Standard SCOT (networking of the OR); and (3) Hyper SCOT (robotization of the surgical devices and AI assistance), which were put into use in 2014, 2018, and 2019, respectively.

Hyper SCOT introduces not only cutting-edge technology but also new approaches from the perspective of architectural design. To (1) enhance the visibility of intraoperative information, (2) adapt to different scenes in surgery, and (3) help awake patients relax, among other aims, the design of Hyper SCOT has the following features [6••]:

Optimal layout for advanced OR: Monitors are laid out so that the surgeon facing the operative field can obtain all required information at a glance. Wall-mounted devices are laid out so that medical staff can comfortably check intraoperative information. A HEPA (high-efficiency particulate air) filter unit on the ceiling keeps the operative field clean.

Interior designed for the focus on treatment: A simple space suitable for treatment is realized by flat, frameless wall panels and a design in which control devices, pipelines, and RF (radiofrequency) shielding are all hidden behind doors.

Lighting plans for different scenes: OLED lighting at the edges of the ceiling (Pioneer Corporation, Japan) and indirect LED lighting at the base of the wall allow lighting plans in various colors. Examples include warm-colored lighting to relieve the anxiety of patients entering the OR, blue lighting for arthroscopic surgery, red lighting to alert surgeons, and lighting with high illuminance and color temperature for efficient cleaning and preparation of surgery.

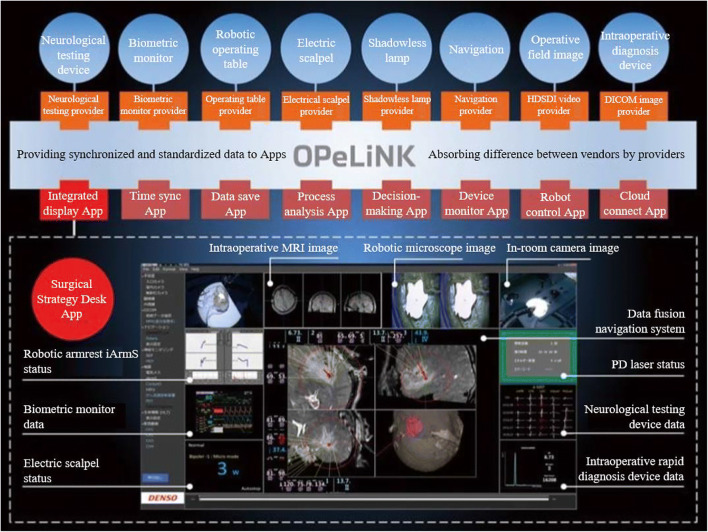

Hyper SCOT uses the communication interface OPeLiNK, whose core technology is the industrial middleware ORiN (Open Resource interface for the Network). It enables an architecture in which applications are not affected when devices are replaced by those from different makers. Its details and mechanism will be further explained later in this paper. Figure 2 shows the system view of OPeLiNK.

Fig. 2.

System view of OPeLiNK. (From [6••], with permission of Hitachi, Ltd.)

In the SCOT project, the application “Strategy Desk” was developed for summarizing the information collected by OPeLiNK and visualizing operation progress. It is capable of displaying data, status, and images from more than 20 types of devices, including the surgery navigation system (Brainlab AG, Germany or Hitachi, Japan), intraoperative rapid diagnosis device (Nihon Kohden, Japan), neurological testing device (Nihon Kohden, Japan), electrical scalpel (MIZUHO Corporation, Japan), PDT (photodynamic therapy) device (Meiji Seika Pharma, Japan), microscopic image, intraoperative MRI (Hitachi, Japan), in-room camera image, and others. Its “data fusion navigation” function overlays tumor grade data and MEP (motor evoked potential) amplitude data on MRI images once they are obtained. This visualizes functional information for different regions of the brain together with tumor grades, so that brain regions to be excised or preserved can be recognized at a glance. Moreover, the trajectory of a bipolar scalpel as well as the position and amount of its energy output are all recorded and saved as time-synchronized data in the OPeLiNK server. The data can therefore be played back on Strategy Desk whenever necessary, even during the operation. One significant point of this system is that it allows time-synchronized comparison between treatment causes (output of the electric scalpel, position data of instruments shown in the navigation system, etc.) and results (MEP testing device data, biometric monitor data, etc.) alongside the operative field, which helps in searching for the causes of operation-related complications or medical errors and improves the transparency of the treatment.
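The time-synchronized comparison described above can be illustrated with a minimal sketch. It is not the Strategy Desk or OPeLiNK implementation; the `Sample` type, the `align` function, and the recorded values are hypothetical, and the sketch assumes that all timestamps already share one synchronized clock.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Sample:
    t: float       # seconds on a shared clock (assumed already synchronized)
    value: float   # e.g. scalpel output or MEP amplitude (units are illustrative)


def align(causes: List[Sample],
          results: List[Sample]) -> List[Tuple[Sample, Optional[Sample]]]:
    """Pair each cause sample with the latest result sample at or before it.

    `results` must be sorted by timestamp.
    """
    times = [r.t for r in results]
    pairs = []
    for c in causes:
        i = bisect_right(times, c.t)  # number of results with t <= c.t
        pairs.append((c, results[i - 1] if i > 0 else None))
    return pairs


# Hypothetical recordings: scalpel output events and MEP amplitudes.
scalpel = [Sample(1.0, 20.0), Sample(3.5, 25.0)]
mep = [Sample(0.0, 100.0), Sample(2.0, 95.0), Sample(3.0, 60.0)]

for cause, result in align(scalpel, mep):
    print(cause, result)
```

Because both streams are stored against the same clock, a playback tool only needs a lookup of this kind to show which measured result was current at the moment of each treatment action.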

In Hyper SCOT, a robotic operating table developed by Medicaroid Corporation and MIZUHO Corporation with Tokyo Women’s Medical University is implemented. For conventional intraoperative MRI, patient transfer has been a heavy task requiring about 5~7 medical staff to guarantee safety. A robotic operating table not only reduces this labor but also increases the number of eyes available for safety checks and decreases the risk of human error.

Besides the operating table, the robotic armrest iArmS is another main robotic component used in Hyper SCOT along with robotic microscopes. Arm fatigue and the resulting hand tremor have been shown to be an obstacle to accurate movement and a safety risk in neurosurgery, which may last for hours. As a solution, iArmS detects the motion of the surgeon’s arm and supports it, and has been verified to reduce hand tremor by about 70% [7]. Further details of iArmS will be presented in the next section.

As for the spread of SCOT, Basic SCOT is commercially available at present and has been introduced by 4 hospitals; Standard SCOT has just begun its sale in 2020 and Hyper SCOT will be commercially available in 2021.

AMIGO Suite

Unveiled in 2011, the AMIGO (Advanced Multimodality Image Guided Operating) suite [8••] is the operating suite introduced to Brigham and Women’s Hospital. With an area of 5700 square feet (about 530 m²), it consists of 3 individual and integrated procedure rooms. AMIGO is one of the first operating suites in the world integrating various types of advanced imaging technologies, including (1) cross-sectional digital imaging systems of CT and MRI; (2) real-time anatomical imaging of x-ray and ultrasound; (3) molecular imaging of beta probes (which detect malignant tissue by measuring sensitivity to beta radiation); (4) PET/CT; and (5) targeted optical imaging (based on the nature of light). Besides multi-modality imaging, various navigational devices, robotic devices, and therapy delivery systems in AMIGO help doctors pinpoint and treat tumors and other target abnormalities.

One of the biggest characteristics of AMIGO is that its MRI room, OR, and CT/PET room are connected, and moving the MRI scanner from the MRI room to the OR for intraoperative imaging and the transfer of the patient from the OR to the CT/PET room are possible. Moreover, the operating table can also rotate freely to face different imaging systems (MRI scanner, PET scanner, or x-ray system). As for the layout in the AMIGO Suite, the central OR with ceiling-mounted single plane x-ray machine is flanked by a PET/CT room on the left and an MRI room on the right. There are two sliding doors connecting these three rooms and each room has an individual entrance door to the control corridor and support spaces [8••]. These three major components of AMIGO Suite are described in detail as follows.

MRI Room

The MRI room is centered around an MRI scanner with a high field of 3 T and a wide bore of 70 cm, which is integrated with full OR-grade medical gases, MRI-compatible anesthesia delivery and monitoring system, view screens, lighting, and therapy delivery equipment [8••]. Here, image-guidance principles are utilized by the clinical team for various oncology applications. The design of the room provides 2 options: (1) independent use for interventional procedures or (2) interaction with the OR, which is realized by the ceiling-mounted MRI scanner sliding on rails from the MRI room to the patient on the operating table in the OR. With this innovation, transfer of the patient between tables for MRI imaging is no longer necessary.

Operating Room

The OR is the core of the suite and it is integrated with the flanking rooms. The room is equipped with (1) MRI-compatible anesthesia delivery and monitoring systems; (2) surgical microscope with near-infrared capability; (3) surgical navigation systems that track handheld tools, probes, and the surgical microscope and display images corresponding to the location of tools; (4) a ceiling-mounted single plane x-ray system; (5) 2D and 3D ultrasound imaging devices; and (6) an armamentarium of surgical support equipment. Video integration technology is implemented so that all images and data related to the procedure can be collected, prioritized, and recorded or displayed on a large monitor, making it possible for the medical team to select and view all patient information available.

PET/CT Room

One of the most innovative features of AMIGO is the inclusion of Positron Emission Tomography (PET) in surgery. Although the PET/CT Room can be used independently like the MRI room for standalone interventional procedures, the difference between it and the MRI room is that the PET/CT scanner is fixed and unable to move. Patients can be transferred on a shuttle system between the PET/CT table for imaging and the operating table for surgery.

Robotic Components Used and to Be Used in Operating Rooms

Besides integrated robotic ORs introduced above, new types of assistant robots other than surgical robots in ORs are also studied and developed to enhance the quality of surgeries. Here, instances of (1) robotic microscope; (2) robotic armrest; (3) robotic scrub nurse; (4) intelligent/robotic lighting system; and (5) cleaning/sterilization robots are presented and discussed as follows.

Robotic Microscope

Safe and successful microsurgery greatly depends on intraoperative illumination and visualization, which calls upon the rapid development of microscope technology in ORs. In recent years, robotic microscopes also emerge with high-definition, 3D visual, and voice control. ORBEYE developed by Olympus [9], Modus V system developed by Synaptive Medical [10], and KINEVO 900 developed by Carl Zeiss [11] shown in Fig. 3 stand out among other microscopes in ORs. ORBEYE is particularly strong in its visualization. It is equipped with two 4K Exmor R® CMOS image sensors by SONY and provides high-definition 3D digital visualization on a monitor.

Fig. 3.

ORBEYE (upper) (From [9], with permission of Olympus Medical Systems; https://www.g-mark.org/award/describe/47719?locale=en); Modus V (lower left) (From [10], with permission of Synaptive Medical Inc.); and KINEVO 900 (lower right) (From [11], with permission of Carl Zeiss Meditec Co.)

For Modus V, it has a unique feature that voice-activated control is available for all of its system settings, including optics and robotics so that contact with the microscope is not necessary during the operation. Bookmarking and recall of positions are also available.

As for KINEVO 900, the robotic function PointLock (the target point of the microscope stays fixed while the microscope is moved) enables precise movement and position adjustment during the operation, and the Position Memory function makes it possible to recall pre-saved locations during the operation as well. In addition, digital hybrid visualization offers the surgeon the option of either heads-up surgery or traditional microsurgery with the microscope.

Robotic Armrest

Endoscopic surgery has spread widely since the late 1980s and has become a standard treatment in various fields. However, in long surgeries such as neurosurgery (which can last more than 12 h), physiological fatigue and hand tremor may be an obstacle to precise movement during the operation or even cause serious accidents. The robotic armrest iArmS was developed to provide a strong solution to this issue.

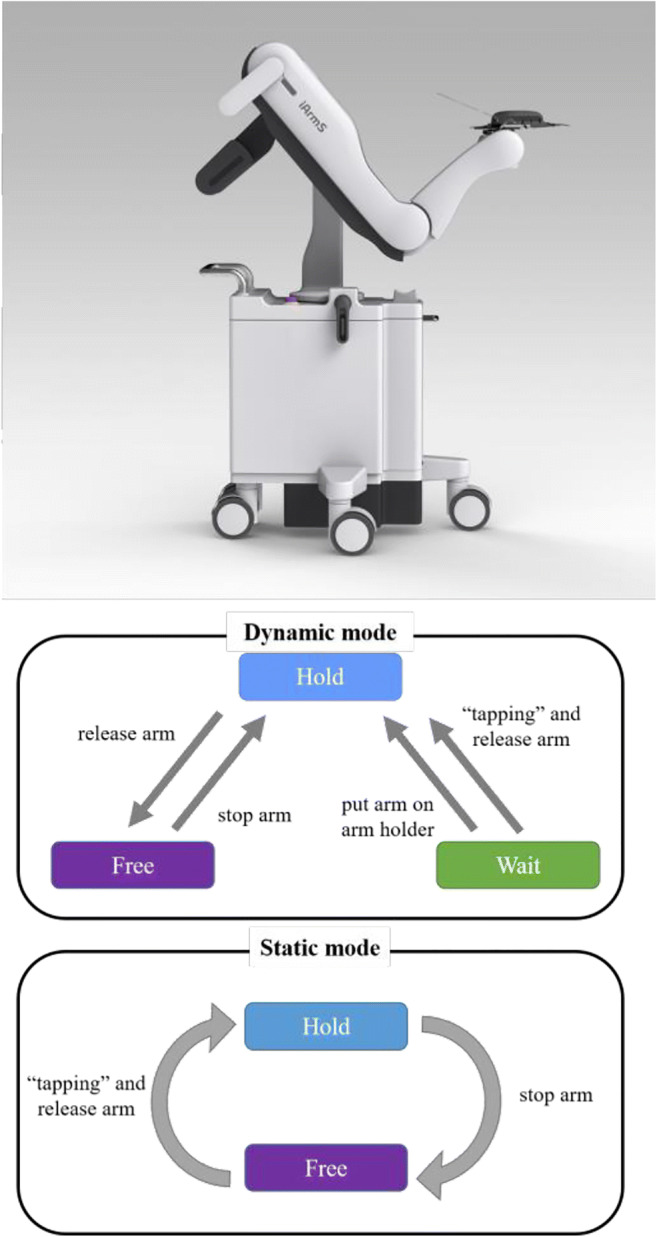

iArmS (Intelligent Arm Support System, DENSO CORPORATION, Japan), the commercial version of the prototype EXPERT [13], was developed and became commercially available in 2015 [12•]. It weighs 97 kg and measures 600 mm in both width and diameter and 1100–1390 mm in height [14]. It consists of a carbon arm holder, a 5-DoF (degree of freedom) robotic arm, and a base. One crucial feature of iArmS is that it is completely passive, which means that there is no actuator (motor) in the robot. Instead, it is fully controlled by the feedback of force sensors, encoders, and electric brakes. The overview and the diagram of mechanical state transitions of iArmS are depicted in Fig. 4. The system has two modes, dynamic mode and static mode (either can be chosen freely according to the preference of the surgeon), and three states: free, hold, and wait. The transition between states is realized by the analysis of feedback from the force sensors and encoders of each arm joint.

Fig. 4.

iArmS (upper) (From [16], with permission of Toho Technology Corporation) and the transition among 3 states under 2 modes (lower)

In the free state, electric brakes of iArmS are released and the robot arm moves up by the counterweight in the arm joint so that the arm holder can freely follow the movements of the surgeon’s arm.

In the hold state, the electric brakes keep locked so that the arm holder can maintain its position and the weight of the surgeon’s arm can be fully supported.

In the wait state, when the feedback of force sensors finds that the surgeon’s arm is not on the arm holder (no load), electric brakes are also kept locked just like in the hold state.
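The three states above can be sketched as a small state machine. This is only an illustration under stated assumptions: the actual transition conditions of iArmS are given in Fig. 4 and are not reproduced here, so the rules, the thresholds (`no_load_n`, `rest_load_n`, `still_speed`), and the function names below are hypothetical, and the dynamic/static modes are omitted. Only the brake behavior in each state follows the description in the text.

```python
from enum import Enum


class State(Enum):
    FREE = "free"   # brakes released, holder follows the surgeon's arm
    HOLD = "hold"   # brakes locked, holder supports the arm's weight
    WAIT = "wait"   # no load on the holder, brakes locked


def brakes_locked(state: State) -> bool:
    """Brakes are locked in the hold and wait states, released in the free state."""
    return state in (State.HOLD, State.WAIT)


def next_state(state: State, load_n: float, joint_speed: float,
               no_load_n: float = 2.0, rest_load_n: float = 15.0,
               still_speed: float = 0.01) -> State:
    """Hypothetical transition rule driven by force-sensor and encoder feedback.

    load_n      -- force measured on the arm holder (N)
    joint_speed -- joint speed derived from the encoders (rad/s)
    """
    if load_n < no_load_n:
        return State.WAIT          # arm is not on the holder
    if state is State.WAIT:
        return State.FREE          # arm placed back on the holder: follow it
    if state is State.FREE and joint_speed < still_speed and load_n >= rest_load_n:
        return State.HOLD          # arm rests still with its weight on the holder
    if state is State.HOLD and load_n < rest_load_n:
        return State.FREE          # arm is being lifted: release and follow
    return state


state = State.WAIT
for load, speed in [(20.0, 0.0), (20.0, 0.0), (5.0, 0.0), (0.5, 0.2)]:
    state = next_state(state, load, speed)
    print(state.value, "brakes locked" if brakes_locked(state) else "brakes released")
```

Since the robot has no actuator, the only output of such a controller is whether the brakes are locked, which is why the sketch reduces each state to a single boolean.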

In an evaluation, among 14 neurosurgeons who used iArmS, 86% (12) felt that their hand tremor decreased significantly and 86% (12) reported that accomplishing procedures became easier [12•].

Moreover, recent studies claimed that iArmS is also capable of prolonging endoscope lens life by reducing the number of times the lens must be wiped, which was very frequent in conventional endoscopic surgery [15].

Robotic Scrub Nurse

As is well known, scrub nurses play a crucial role in surgery alongside surgeons, and their performance affects the speed and smoothness of the whole surgery. Although their work of handing over, taking, and preparing instruments seems simple, their skill depends heavily on a thorough understanding of the whole process and the details of the surgery, and it may therefore take years to train a proficient scrub nurse. Considering the simplicity of the work and the high training cost for scrub nurses, the application of robotic scrub nurses has been discussed and efforts have been made in their development and improvement. Penelope is known as the first robotic scrub nurse successfully applied in surgery, with a semi-autonomous system that is capable of predicting, picking up, and delivering the target instruments on verbal commands [17]. Gestonurse is a magnetic-based robotic scrub nurse that can select and deliver surgical instruments to the surgeon in response to hand gestures [18].

For the up-to-date robotic scrub nurses known, T. Zhou et al. from Purdue University developed a robotic scrub nurse with the remarkable capability of instrument recognition [19•] in 2017. Imperial College London developed a 3D gaze-guided system [20•] in 2019. The system consists of a robot arm (Universal Robot UR5) for picking up and handing the desired instruments to the surgeon, wireless SMI (SensoMotoric Instruments GmbH) glasses for eye-tracking, Microsoft Kinect v2 for RGB-D sensing, and OptiTrack MCS for head pose tracking. This system enables the handing of the surgical instrument by the robot with the command of “gaze” (keeping watching) to the figure of target instrument displayed on a monitor. Although the speed of the handing motion was still apparently slower than humans and the types of target instruments were also limited, it presented a smart and integrated solution and was verified to be effective in an ex vivo task on a porcine colon.
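One common way to turn “keeping watching” a target into a command is dwell-time selection, sketched below. This is an assumption made for illustration, not the method reported in [20•]: the function name `select_by_dwell`, the 1.5 s threshold, and the sample data are all hypothetical, and the gaze samples are assumed to have already been mapped to the instrument figure being looked at on the monitor.

```python
from typing import Iterable, Optional, Tuple


def select_by_dwell(samples: Iterable[Tuple[float, Optional[str]]],
                    dwell_s: float = 1.5) -> Optional[str]:
    """Return the first instrument gazed at continuously for at least dwell_s.

    samples -- (timestamp in seconds, instrument name or None when the gaze
               is not on any instrument figure), in time order
    """
    current: Optional[str] = None
    start = 0.0
    for t, target in samples:
        if target != current:
            # Gaze moved to another figure (or away): restart the dwell timer.
            current, start = target, t
        elif current is not None and t - start >= dwell_s:
            return current
    return None


gaze = [(0.0, "scissors"), (0.5, "forceps"), (1.0, "forceps"), (1.5, None),
        (2.0, "forceps"), (2.5, "forceps"), (3.0, "forceps"), (3.6, "forceps")]
print(select_by_dwell(gaze))
```

A dwell threshold trades speed against accidental selections: a longer threshold makes unintended hand-overs less likely but slows down each request.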

In summary, the implementation and spread of robotic scrub nurses in real surgery still seems likely to take a couple of years or even more than a decade, but their improvement has been accelerating recently and is therefore both exciting and promising.

Intelligent/Robotic Lighting System

The significance of lighting in ORs is apparent, since a clear view of the operative field is indispensable during surgery. Despite decades of development and improvement, the major existing lighting systems in ORs still suffer from the limitation that their position and direction can only be adjusted manually. This limitation might not seem to be a huge problem since lighting is usually pre-positioned before the surgery, but during surgery, it may cause (1) the need for extra staff for manual adjustment when surgeons are not allowed to touch the lamps themselves due to sterilization and other reasons; (2) the collision and entanglement of arms, which is especially the case for lamps mounted at the end of arms; and (3) the optimal position being out of reach.

The solutions to the issues described above can be mainly divided into two types: (1) an intelligent lighting system that mainly controls the lighting on the level of sensing and software and (2) a robotic lighting system that controls the lighting with robot arm(s). While the former has recently been developed into complete systems and begun to be announced to the public, the latter still seems to be under study, with the development of hardware systems ongoing.

Regarding intelligent lighting systems, the control of light with non-contact signs such as hand gestures and verbal commands is implemented. Research and development of hand gestures for lighting control have been particularly active in the last decade [21–24]. Taking [23] as an example, a set of simple gestures is proposed to enable the control of system log-on, illumination level, color temperature, and camera control (zoom and angle rotation).

With sufficient development of non-contact input for lighting control, intelligent lighting systems in ORs have just been integrated and released recently, and the smart lighting system by Gentex Corporation and Mayo Clinic [25, 26] is a representative example. With a ceiling mount design, it is completely free from issues related to arms and its manual adjustment. Consisting of a series of flush lighting units containing dynamically adjustable LED arrays, its target lighting zone as well as other functions can be controlled by hand gestures, voice commands, or a hand-held tracking device.

As for robotic lighting systems, their feasibility has been discussed in [21] and specific hardware designs for an expected solution are also described in [27•], with details about the expected surgical scenes and corresponding 3D robotic movement, specifications, and consideration of kinematics structure as well as dynamic analysis, which are evaluated by a 3D-printed prototype.

In comparison with intelligent lighting systems, robotic lighting systems require more consideration in surgery because of the addition of a robot arm and its movement, yet they have the advantage in providing more flexible options in terms of lighting direction and position; thus, their prospective application in ORs is also expected to be more flexible.

Cleaning/Sterilization Robots

Sterilization and cleaning robots had already been developed and introduced to hospitals and ORs, but attention to and demand for them has increased drastically because of the coronavirus pandemic.

Among robotic solutions for cleaning and sterilization, the UVD robot [28, 29] stands out and has been introduced and spread worldwide due to its features of (1) high disinfection rate with UV (ultraviolet) light, (2) disinfection time of ten minutes for one room, and (3) full autonomy in mobility. With the Lidar (light detection and ranging) sensor and SLAM (simultaneous localization and mapping) technology for navigation, the UVD robot is capable of moving autonomously throughout its surrounding space and avoiding obstacles. Furthermore, it is also able to detect motion and shut down UV lights if there is anyone around it.

Projects for Integration in Operating Rooms

As is explained earlier in this paper, with the increase of medical devices in ORs, the requirement of their integration platform for the management of information including collected data, time synchronization, device status, and so on is increasingly apparent, and it is particularly the case for robotic ORs. Therefore, the rest of this section introduces and compares three projects dealing with this issue.

MD PnP

The MD PnP (Medical Device Plug-and-Play Interoperability Program) project [30] was proposed and launched mainly by Prof. Julian M. Goldman from Massachusetts General Hospital in 2004. With the ASTM F2761 Integrated Clinical Environment (ICE) standard established, the ICE-compliant platform OpenICE has been made available to the public free of charge. OpenICE mainly consists of middleware implementing DDS (Data Distribution Service), proposed by OMG (Object Management Group), and is capable of connecting devices used in anesthesiology (anesthesia machines, ventilators, and biometric monitors) made by Philips, Dräger, GE, etc. This platform offers (1) smart alarms judged from complex parameters from devices; (2) development of closed-loop anesthesia systems; (3) visualization of data; and (4) development of clinical data collection functions.
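The idea of a smart alarm that is judged from several device parameters delivered through publish/subscribe middleware can be sketched as follows. This is a toy in-process stand-in and does not use the DDS or OpenICE APIs; the `Bus` and `SmartAlarm` classes, the topic names, and the thresholds are hypothetical and carry no clinical meaning.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List


class Bus:
    """Minimal topic-based publish/subscribe bus (stand-in for real middleware)."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Callable[[str, float], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[str, float], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, value: float) -> None:
        for callback in self._subs[topic]:
            callback(topic, value)


class SmartAlarm:
    """Raises an alarm only when two parameters are abnormal at the same time."""

    def __init__(self, bus: Bus, spo2_min: float = 90.0, resp_min: float = 8.0) -> None:
        self.latest: Dict[str, float] = {}
        self.alarms: List[str] = []
        self.spo2_min, self.resp_min = spo2_min, resp_min
        for topic in ("spo2", "resp_rate"):
            bus.subscribe(topic, self.on_sample)

    def on_sample(self, topic: str, value: float) -> None:
        self.latest[topic] = value
        spo2 = self.latest.get("spo2")
        resp = self.latest.get("resp_rate")
        if spo2 is not None and resp is not None:
            if spo2 < self.spo2_min and resp < self.resp_min:
                self.alarms.append(f"low SpO2 ({spo2}) with low respiratory rate ({resp})")


bus = Bus()
alarm = SmartAlarm(bus)
bus.publish("spo2", 88.0)       # one parameter alone does not trigger the alarm
bus.publish("resp_rate", 6.0)   # both abnormal: alarm is raised
print(alarm.alarms)
```

The devices only publish to topics and the alarm only subscribes to them, so neither side needs to know which vendor's device produced a value.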

OR.net

The OR.net project [31, 32] has been carried out mainly by German universities, such as the University of Heidelberg, University of Leipzig, University of Lübeck, Technical University of Munich, and RWTH Aachen University. One of the biggest features of OR.net is that it aims at interaction among devices and applications without any central system like middleware, through standardization of the communication and data model. OR.net ended as a national project in 2016 and is now promoting the spread of IEEE 11073 SDC, a standard based on the concept of SOMDA (Service-Oriented Medical Device Architecture), which originated from the term SOA (Service-Oriented Architecture) in the Web world. In short, it aims at automatic recognition of device services and applications once they are connected to the network, and at interaction via semantic data with metadata.

SCOT [33••]

In the SCOT project, the interface OPeLiNK® [34] for OR was developed, with the core of industrial middleware ORiN (Open Resource interface for the Network) [35, 36]. Data acquired from medical devices, medical information, intraoperative diagnostic images, and devices in OR can be captured and stored with time synchronization in the server of OPeLiNK® by the programs called “providers” that are compatible with all related devices. The data can also be obtained from the server by the application with a server-client model.

A provider, which is equivalent to a “device driver” in this project, plays the role of device abstraction from the viewpoint of applications. This mechanism enables an architecture in which applications are not affected by a change of devices. OPeLiNK® thus plays the role of standardizing the data format in the OR. From the perspective of the whole system, it is similar to the architecture of MD PnP in that it uses middleware.
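The provider mechanism can be pictured with a short sketch of device abstraction. It does not use the ORiN or OPeLiNK APIs; the `Provider` base class, the two vendor classes, the raw field `pwr_mw`, and the `Server` class are all hypothetical names chosen for illustration.

```python
import time
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class Provider(ABC):
    """Device-specific adapter that returns data in one common format."""

    @abstractmethod
    def read(self) -> Dict[str, object]:
        ...


class VendorAScalpel(Provider):
    def read(self) -> Dict[str, object]:
        # Hypothetical device that already reports output in watts.
        return {"device": "scalpel", "output_w": 20.0}


class VendorBScalpel(Provider):
    def read(self) -> Dict[str, object]:
        # Hypothetical device that reports milliwatts under a different key.
        raw = {"pwr_mw": 25000}
        return {"device": "scalpel", "output_w": raw["pwr_mw"] / 1000}


class Server:
    """Stores records from any provider with a timestamp from one shared clock."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock
        self._records: List[Dict[str, object]] = []

    def capture(self, provider: Provider) -> Dict[str, object]:
        record = {"t": self._clock(), **provider.read()}
        self._records.append(record)
        return record

    def query(self, device: str) -> List[Dict[str, object]]:
        return [r for r in self._records if r["device"] == device]


server = Server()
for provider in (VendorAScalpel(), VendorBScalpel()):
    server.capture(provider)

# The application sees the same fields regardless of which vendor's device is connected.
print([r["output_w"] for r in server.query("scalpel")])
```

Replacing a device then only requires a new provider; the application code that queries the server stays unchanged.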

As a summary, the comparison among these three projects with their features is listed in Table 1.

Table 1.

Comparison of projects mentioned

| Name | Content | Features |
|---|---|---|
| MD PnP | Developing new protocols for the connection of medical devices | Open libraries for implementation of protocols; mainly for academia |
| OR.net | Developing a middleware for the connection of anesthesia devices | Open middleware; only for anesthesia devices; for academia |
| SCOT | Developing a middleware for the connection of medical devices | In practical use now |

Conclusions

The application of robotics in the medical field has become increasingly stable in recent decades, and ORs are no exception. In this review, two typical representatives of a robotic OR are introduced in detail: the SCOT series and the AMIGO Suite.

For the SCOT, the majority of devices and robots are connected to and managed by the interface of OPeLiNK so that the packaging and IoT networking of the whole OR can be realized, with “playback” of surgery being possible and thus contributing to the improvement of the surgery quality and prevention of medical errors.

For the AMIGO Suite, a variety of equipment, a robotized OR, and other devices (including an MRI scanner on rails) enable flexible and powerful image-guided therapy, with the world’s first realization of connectivity and compatibility of the OR with the MRI and PET/CT rooms.

Besides integrated robotic ORs, innovative and potential robotic components in ORs are also mentioned and described respectively. They are (1) robotic microscopes; (2) robotic armrest; (3) robotic scrub nurse; (4) intelligent/robotic lighting system; and (5) cleaning/sterilization robots. For 1, three products of robotic microscopes are introduced with their outstanding functions and features. For 2, iArmS is introduced in detail with its mechanism and achievements. For 3, the latest results for its development and improvement in instrument recognition and total system are provided, with the expectation of its future implementation and spread in ORs. For 4, two main types of solutions to existing issues of lighting in ORs are presented. For 5, a robot capable of sterilization in ORs and other places with autonomous mobility is described.

Finally, projects for the integration of ORs are presented and compared: MD PnP, OR.net, and SCOT.

Declarations

Conflict of Interest

The authors report grants and personal fees from AMED (Japan Agency for Medical Research and Development), during the conduct of the study.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Footnotes

This article is part of the Topical Collection on Medical and Surgical Robotics


Contributor Information

Xiao Sun, Email: xsun@yamanashi.ac.jp.

Jun Okamoto, Email: okamoto.jun@twmu.ac.jp.

Ken Masamune, Email: masamune.ken@twmu.ac.jp.

Yoshihiro Muragaki, Email: ymuragaki@twmu.ac.jp.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

- 1.About da Vinci Systems. https://www.davincisurgery.com/da-vinci-systems/about-da-vinci-systems. Accessed 28 Aug 2020.

- 2.Eickhoff A, Van Dam J, Jakobs R, Kudis V, Hartmann D, Damian U, Weickert U, Schilling D, Riemann JF. Computer-assisted colonoscopy (The NeoGuide Endoscopy System): results of the First Human Clinical Trial (“PACE Study”) Am J Gastroenterol. 2007;102:261–266. doi: 10.1111/j.1572-0241.2006.01002.x. [DOI] [PubMed] [Google Scholar]

- 3.Beasley RA. Medical robots: current systems and research directions. Journal of Robotics. 2012;2012:1–14. doi: 10.1155/2012/401613. [DOI] [Google Scholar]

- 4.MONARCHTM Endoscopy Transformed. https://www.aurishealth.com/monarch-platform. Accessed 28 Aug 2020.

- 5.Rassweiler JJ, Autorino R, Klein J, Mottrie A, Goezen AS, Stolzenburg JU, Rha KH, Schurr M, Kaouk J, Patel V, Dasgupta P, Liatsikos E. Future of robotic surgery in urology. BJU Int. 2017;120:822–841. doi: 10.1111/bju.13851. [DOI] [PubMed] [Google Scholar]

- 6.•• Okamoto J, Kusuda K, Horise Y, Kobayashi E, Masamune K, Muragaki Y. Cutting-edge Information Guided Surgery Created by Hyper SCOT. In HITACHI MEDIX Vol. 70. 2018. https://www.hitachi.co.jp/products/healthcare/products-support/contents/medix/pdf/vol70/p20-24.pdf. Accessed: 28 August 2020 (in Japanese). This paper summarizes the innovation and considerations for Hyper SCOT in detail.

- 7.Okuda H, Goto T, Okamoto J. Operation Assistant Robot iArmS®. In: The 6th Robot Award Archive, Japan. 2014. https://www.robotaward.jp/archive/2014/prize/robot06.pdf. Accessed: 28 August, 2020 (in Japanese).

- 8.•• Kacher DF, Whalen B, Handa A, Jolesz FA (2013) The Advanced Multimodality Image-Guided Operating (AMIGO) Suite. In: Intraoperative imaging and image-guided therapy. Springer New York, pp 339–368. doi:10.1007/978-1-4614-7657-3_24. This long chapter introduces the features of the AMIGO Suite in detail, as well as the ideas and considerations “behind the scenes”.

- 9.Good Design Award. https://www.g-mark.org/award/describe/47719?locale=en. Accessed 18 Apr 2021.

- 10.Modus V - Synaptive Medical. https://www.synaptivemedical.com/products/modus-v/. Accessed 28 Aug 2020.

- 11.ZEISS Surgical Microscopes. https://www.zeiss.com/meditec/int/product-portfolio/surgical-microscopes/kinevo-900.html. Accessed 28 Aug 2020.

- 12.Goto T, Hongo K, Ogiwara T, Nagm A, Okamoto J, Muragaki Y, Lawton M, McDermott M, Berger M. Intelligent surgeon’s arm supporting system iArmS in microscopic neurosurgery utilizing robotic technology. World Neurosurgery. 2018;119:e661–e665. doi: 10.1016/j.wneu.2018.07.237.

- 13.Goto T, Hongo K, Yako T, Hara Y, Okamoto J, Toyoda K, Fujie MG, Iseki H. The Concept and Feasibility of EXPERT. Neurosurgery. 2013;72:A39–A42. doi: 10.1227/NEU.0b013e318271ee66.

- 14.Ogiwara T, Goto T, Nagm A, Hongo K. Endoscopic endonasal transsphenoidal surgery using the iArmS operation support robot: initial experience in 43 patients. Neurosurgical Focus. 2017;42:E10. doi: 10.3171/2017.3.FOCUS16498.

- 15.Okuda H, Okamoto J, Takumi Y, Kakehata S, Muragaki Y (2020) The iArmS robotic armrest prolongs endoscope lens–wiping intervals in endoscopic sinus surgery. Surg Innov 155335062092986. doi:10.1177/1553350620929864

- 16.Operation Assisting Device iArmS. http://design.denso.com/works/works_020.html

- 17.Treat MR, Amory SE, Downey PE, Taliaferro DA. Initial clinical experience with a partly autonomous robotic surgical instrument server. Surg Endosc. 2006;20:1310–1314. doi: 10.1007/s00464-005-0511-0.

- 18.Jacob MG, Li Y-T, Wachs JP (2012) Gestonurse. Proceedings of the seventh annual ACM/IEEE international conference on Human-Robot Interaction - HRI ’12. doi:10.1145/2157689.2157731.

- 19.• Zhou T, Wachs JP (2017) Finding a needle in a haystack: recognizing surgical instruments through vision and manipulation. Electronic Imaging 2017:37–45. doi:10.2352/ISSN.2470-1173.2017.9.IRIACV-264. One of the most recent advances in robotic scrub nurses.

- 20.• Kogkas A, Ezzat A, Thakkar R, Darzi A, Mylonas G (2019) Free-view, 3D gaze-guided robotic scrub nurse. In: Lecture notes in computer science. Springer International Publishing, pp 164–172. doi:10.1007/978-3-030-32254-0_19. One of the most recent advances in robotic scrub nurses.

- 21.Hartmann F, Schlaefer A. Feasibility of touch-less control of operating room lights. Int J Comput Assist Radiol Surg. 2013;8(2):259–268. doi: 10.1007/s11548-012-0778-2.

- 22.Collumeau J, Nespoulous E, Laurent H, Magnain B (2013) Simulation interface for gesture-based remote control of a surgical lighting arm. Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics. doi: 10.1109/SMC.2013.795.

- 23.Dietz A, Schröder S, Pösch A, Frank K, Reithmeier E (2016) Contactless Surgery Light Control based on 3D Gesture Recognition. GCAI. doi: 10.29007/zmz9.

- 24.Joseph J, Divya DS. Hand gesture interface for smart operation theatre lighting. International Journal of Engineering & Technology. 2018;7(2.25):20–23. doi: 10.14419/ijet.v7i2.25.12358.

- 25.Gentex Medical Smart Lighting. https://www.youtube.com/watch?v=2fAKOEtTdio. Accessed: 18 Apr 2021.

- 26.Gentex Announces Smart Lighting Technology For Medical Applications. https://ir.gentex.com/news-releases/news-release-details/gentex-announces-smart-lighting-technology-medical-applications. Accessed: 18 Apr 2021.

- 27.• Sandoval J, Nouaille L, Poisson G, Parmantier Y (2018) Kinematic design of a lighting robotic arm for operating room. Computational Kinematics, Springer, Cham, pp 44–52. doi: 10.1007/978-3-319-60867-9_6. One of the most recent advances in robotic lighting systems.

- 28.UVD Robots®. https://www.uvd-robots.com/. Accessed: 18 Apr 2021.

- 29.Autonomous Robots Are Helping Kill Coronavirus in Hospitals. https://spectrum.ieee.org/automaton/robotics/medical-robots/autonomous-robots-are-helping-kill-coronavirus-in-hospitals. Accessed: 18 Apr 2021.

- 30.Projects-MD PnP Program. http://www.mdpnp.org/projects.html. Accessed: 28 Aug 2020.

- 31.Rockstroh M, Franke S, Hofer M, Will A, Kasparick M, Andersen B, Neumuth T. OR.NET: multi-perspective qualitative evaluation of an integrated operating room based on IEEE 11073 SDC. Int J CARS. 2017;12:1461–1469. doi: 10.1007/s11548-017-1589-2.

- 32.OR.NET. http://www.ornet.org. Accessed: 28 Aug 2020.

- 33.•• Masamune K, Nishikawa A, Kawai T, Horise Y, Iwamoto N (2018) The development of Smart Cyber Operating Theater (SCOT), an innovative medical robot architecture that can allow surgeons to freely select and connect master and slave telesurgical robots. impact 2018:35–37. doi:10.21820/23987073.2018.3.35. This paper presents the concepts, advantages, and challenges in the whole SCOT project and also focuses on the connection between SCOT and robotic devices.

- 34.Okamoto J, Masamune K, Iseki H, Muragaki Y. Development concepts of a Smart Cyber Operating Theater (SCOT) using ORiN technology. Biomedical Engineering / Biomedizinische Technik. 2018;63:31–37. doi: 10.1515/bmt-2017-0006.

- 35.Mizukawa M, Matsuka H, Koyama T, Matsumoto A. ORiN: open robot interface for the network, a proposed standard. Industrial Robot. 2000;27:344–350. doi: 10.1108/01439910010372992.

- 36.Homepage of ORiN. http://www.orin.jp/. Accessed: 28 Aug 2020.