Abstract

Network meta-analysis (NMA) is a popular tool to synthesize direct and indirect evidence for simultaneously comparing multiple treatments, but evidence inconsistency greatly threatens its validity. One may use the inconsistency degrees of freedom (ICDF) to assess the potential that an NMA might suffer from inconsistency. Multi-arm studies, which commonly appear in NMAs, provide intrinsically consistent evidence and complicate the ICDF’s calculation. The existing ICDF measure may not feasibly handle multi-arm studies. Motivated by the effective numbers of parameters of Bayesian hierarchical models, we propose new ICDF measures in generic NMAs that may contain multi-arm studies. Under the fixed- or random-effects setting, the new ICDF measure is the difference between the effective numbers of parameters of the consistency and inconsistency NMA models. We used artificial NMAs created based on an illustrative example and 39 empirical NMAs to evaluate the performance of the existing and new measures. In NMAs with two-arm studies only, the proposed ICDF measure under the fixed-effects setting was nearly the same as the existing measure. Among the empirical NMAs, 27 (69%) contained at least one multi-arm study. The existing measure was not applicable to them, while the proposed measures led to interpretable ICDFs in all NMAs.

Keywords: Bayesian analysis, direct evidence, effective number of parameters, evidence inconsistency, indirect evidence, network meta-analysis

1. Introduction

Meta-analysis is an important tool to integrate and contrast findings from various independent studies on common topics. It has been widely used to produce high-quality overall evidence for treatment comparisons that can help underpin guidelines and make decisions (Gurevitch et al. 2018; Niforatos, Weaver, and Johansen 2019). For a certain disease condition, multiple treatments are often available, and clinicians aim at choosing optimal treatment options for patients. In recent years, network meta-analysis (NMA), also known as multiple-treatment meta-analysis or mixed treatment comparison, has been rapidly developed to inform the benefits and harms of all available treatments (Lumley 2002; Lu and Ades 2004; Salanti 2012; Cipriani et al. 2013; Nikolakopoulou et al. 2018). The fundamental idea is to simultaneously synthesize both direct and indirect evidence for treatment comparisons, and thus to provide more precise treatment effect estimates than conventional pairwise meta-analysis that separately and directly compares each pair of treatments (Caldwell, Ades, and Higgins 2005; Riley et al. 2017).

An NMA often relies on a critical assumption that the direct and indirect evidence is consistent for all treatment comparisons (Donegan et al. 2013; Jansen and Naci 2013). Such consistency is sometimes termed coherence (Ioannidis 2009; Mills, Thorlund, and Ioannidis 2013; Brignardello-Petersen et al. 2019). Consider that the comparison between treatments A and B is of interest; the studies that include both treatment groups provide the direct evidence for this comparison. However, such evidence may be sparse (e.g., when no financial incentive supports conducting head-to-head trials of A vs. B). Alternatively, many studies may compare A or B with another common comparator such as placebo, denoted by C. These studies of A vs. C and B vs. C provide the indirect evidence for comparing A and B. The three treatments A, B, and C form an evidence loop in the treatment network; such a loop is critical for an NMA to outperform the conventional pairwise meta-analyses (Salanti, Kavvoura, and Ioannidis 2008; Mills, Thorlund, and Ioannidis 2013; Lin et al. 2019; Lin, Chu, and Hodges 2020; Papakonstantinou et al. 2020).

The evidence consistency assumption may not hold in some NMAs; violating this important assumption may lead to biased treatment effect estimates (Song et al. 2011). The inconsistency may be caused by many factors, such as imbalanced distributions of effect modifiers between different types of comparisons, outlying treatment effects, treatment intransitivity, and publication and related bias (Jansen and Naci 2013; Brignardello-Petersen et al. 2019). Higgins et al. (2012) introduced the concepts of loop inconsistency and design inconsistency. The loop inconsistency is relevant to the scenario of three treatments A, B, and C illustrated above; that is, the underlying overall effect sizes of A vs. C and C vs. B may not add up to that of A vs. B. This article is primarily concerned with such inconsistency. On the other hand, the design inconsistency refers to differences in underlying overall effect sizes in different study designs (i.e., sets of treatments compared within studies).

Each NMA should rigorously evaluate the evidence consistency assumption for rating evidence quality and validating its conclusions (Mills et al. 2012; Puhan et al. 2014; Hutton et al. 2015), whereas empirical studies have found that many published NMAs did not carefully check this assumption (Veroniki et al. 2013; Nikolakopoulou et al. 2014; Petropoulou et al. 2017). Most existing approaches focus on testing for the presence of evidence inconsistency and modeling treatment comparisons with such inconsistency; they include the node-splitting approach, the model with inconsistency factors, the design-by-treatment interaction model, etc. (Lu and Ades 2006; Dias et al. 2010; Higgins et al. 2012; van Valkenhoef et al. 2012; Jackson et al. 2014; Tu 2016; Donegan, Dias, and Welton 2019). These approaches typically specify additional parameters in NMA models to permit evidence inconsistency.

Before using statistical methods to test and adjust for evidence inconsistency, it is also critical to assess the potential that an NMA might suffer from such inconsistency. Lu and Ades (2006) referred to such potential as inconsistency degrees of freedom (ICDF), and they proposed a measure to quantify it. This ICDF measure is interpreted as the number of independent evidence loops in an NMA. For example, in a star-shaped NMA with all active treatments compared with a common placebo, no evidence loop exists and thus its ICDF is 0. As such, the ICDF is a characteristic about the treatment comparison structure; it does not draw conclusions about whether evidence inconsistency is actually present.

In addition, the ICDF relates closely to the benefit of performing an NMA compared with performing separate pairwise meta-analyses; to gain more power from an NMA, researchers need to accept a greater risk of evidence inconsistency. For example, although a star-shaped NMA does not suffer from evidence inconsistency with ICDF = 0, the absence of evidence loops implies no synthesis of direct and indirect evidence. Such evidence synthesis is the primary reason that an NMA could outperform separate pairwise meta-analyses (Lu and Ades 2004).

The explicit calculation of the ICDF proposed by Lu and Ades (2006) is restricted to special cases, i.e., when all studies in an NMA are two-armed. Multi-arm studies, however, are common in practice (Chan and Altman 2005). Because the evidence from multi-arm studies is intrinsically consistent, the presence of multi-arm studies complicates the calculation of the ICDF (Lu and Ades 2006; Dias et al. 2010; Higgins et al. 2012; Dias, Welton, et al. 2013). For example, if an evidence loop is formed by two-arm studies only, then the ICDF is clearly 1. On the other hand, if this loop is formed by a multi-arm study only, it is not subject to evidence inconsistency and thus does not contribute to the ICDF. When an evidence loop is formed by a mixture of two- and multi-arm studies, calculating the ICDF requires a tradeoff between the two types of studies. Because of this complication, the ICDF is rarely reported in the current literature, although it provides important information about NMAs.

Based on the effective number of parameters (ENP) of Bayesian hierarchical models (Spiegelhalter et al. 2002), this article proposes alternative methods to calculate the ICDF in generic NMAs that may or may not include multi-arm studies. The ICDF can be expressed as the difference between the ENPs in NMA models with and without the evidence consistency assumption. It can be feasibly calculated in all NMAs, because the ENP can be obtained in various software packages for Bayesian analyses.

The rest of this article is organized as follows. We first present an illustrative example of comparisons among multiple antidepressants, and review the commonly-used Bayesian hierarchical models to implement NMAs. Based on these setups, we demonstrate the problem of evidence inconsistency and the difficulty in deriving the ICDF in the presence of multi-arm studies. Then, we introduce the proposed methods to calculate the ICDF in generic NMAs under both the fixed-effects (FE) and random-effects (RE) settings. The performance of the proposed methods is assessed using both artificial and empirical datasets. We conclude this article with a brief discussion.

2. Methods

2.1. Illustrative example and model of network meta-analysis

We consider an illustrative example of NMA reported by Cipriani et al. (2009) that investigated the efficacy of 12 antidepressants. Figure 1 presents its treatment network, and the treatment names are in the figure legend. This NMA includes a total of 111 studies, among which 109 are two-armed and two are three-armed, both comparing treatments 5, 9, and 11.

Figure 1.

Treatment network plot of the NMA by Cipriani et al. (2009). The 12 treatments are sorted in alphabetical order: 1) bupropion; 2) citalopram; 3) duloxetine; 4) escitalopram; 5) fluoxetine; 6) fluvoxamine; 7) milnacipran; 8) mirtazapine; 9) paroxetine; 10) reboxetine; 11) sertraline; and 12) venlafaxine. Each node denotes a treatment; each edge represents a direct comparison between the corresponding two treatments. The node size is proportional to the total sample size in the corresponding treatment groups across studies; the edge width is proportional to the number of studies that directly compare the corresponding treatments. The shaded loop indicates that it contains evidence contributed by multi-arm studies.

We consider Bayesian hierarchical models for implementing NMAs. Suppose that an NMA collects $N$ studies and compares a total of $K$ (>2) treatments. Each study compares a subset of the $K$ treatments; denote the treatment set of study $i$ by $\mathcal{T}_i$ ($i = 1, \ldots, N$). Let $y_{ik}$ be the observed outcome measure in study $i$’s treatment group $k$ ($k \in \mathcal{T}_i$). It follows a distribution with the probability density/mass function $f(\cdot; \theta_{ik}, x_{ik})$, which depends on an unknown parameter $\theta_{ik}$, representing treatment $k$’s effect in study $i$, and some known data $x_{ik}$. For example, in the illustrative NMA, $K$ = 12 and $N$ = 111. For binary outcomes, $y_{ik}$ represents event counts and follows a binomial distribution, with $\theta_{ik}$ being the true event rate and $x_{ik}$ being the sample size.

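An NMA typically focuses on estimating relative effects between treatments. A baseline treatment, denoted by $b_i$, needs to be specified for each study. The treatment sets of different studies need not intersect, so different studies may have different baseline treatments. When it does not lead to confusion, $b_i$ is simply denoted by $b$. The Bayesian hierarchical RE model for the NMA is (Lu and Ades 2004, 2009):

$$
\begin{aligned}
y_{ik} &\sim f(\cdot; \theta_{ik}, x_{ik}), \quad k \in \mathcal{T}_i,\ i = 1, \ldots, N; \\
g(\theta_{ik}) &= \mu_i + \delta_{ibk} I(k \neq b); \\
\delta_{ibk} &\sim N(d_{bk}, \sigma_{bk}^2); \\
\operatorname{corr}(\delta_{ibh}, \delta_{ibk}) &= \gamma_{bhk}.
\end{aligned}
\tag{1}
$$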

The link function $g(\cdot)$ transforms $\theta_{ik}$ to be in a linear form; it is usually the logit link for binary outcomes. The indicator function $I(\cdot)$ returns 0 if treatment $k$ is study $i$’s baseline ($k = b$); otherwise, it returns 1 ($k \neq b$). The parameters $\mu_i$ represent the studies’ baseline effects; they are usually of less interest and modeled as nuisances, because the studies’ baselines may dramatically differ in terms of their patients’ characteristics, etc. Moreover, $\delta_{ibk}$ are study-specific relative effects between treatments; they represent log odds ratios when using the logit link.
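As a small numerical illustration of the logit link, the sketch below computes a study's baseline effect and a study-specific log odds ratio from two arms. The event counts and sample sizes are hypothetical, the function and variable names are ours, and the observed proportions simply stand in for the true event rates.

```python
import math


def logit(p):
    """Logit link: log(p / (1 - p)) for an event rate 0 < p < 1."""
    return math.log(p / (1.0 - p))


# Hypothetical two-arm study: events and sample size in the baseline arm b
# and in the comparison arm k (values chosen only for illustration).
events_b, n_b = 30, 100
events_k, n_k = 45, 100

baseline_effect = logit(events_b / n_b)                 # baseline effect on the logit scale
log_odds_ratio = logit(events_k / n_k) - baseline_effect  # relative effect of k vs. b
odds_ratio = math.exp(log_odds_ratio)
```

On the logit scale the relative effect is additive, which is why the model can place a linear structure on the transformed event rates.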

This model assumes the study-specific relative effects are random effects, following normal distributions, whose means are the overall relative effects $d_{bk}$ and variances are $\sigma_{bk}^2$ across studies due to heterogeneity. Within multi-arm studies, the relative effects are correlated with correlation coefficients $\gamma_{bhk}$. To reduce model complexity and computational difficulty, the between-study variances of all treatment comparisons are frequently assumed equal, i.e., $\sigma_{bk}^2 = \sigma^2$, and all within-study correlation coefficients are 0.5 (Higgins and Whitehead 1996; Lu and Ades 2004). We will make the above assumptions in the following data analyses.
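Under these two simplifying assumptions, the covariance matrix of the relative effects within a study has a common variance on the diagonal and half of it elsewhere. The sketch below builds that matrix; the function name and the value of the between-study standard deviation are illustrative assumptions.

```python
def random_effects_covariance(n_arms, sigma):
    """Covariance matrix of the (n_arms - 1) study-specific relative effects
    versus the study's baseline, assuming a common between-study standard
    deviation `sigma` and within-study correlation coefficients of 0.5."""
    size = n_arms - 1
    variance = sigma ** 2
    return [
        [variance if row == col else 0.5 * variance for col in range(size)]
        for row in range(size)
    ]


cov_two_arm = random_effects_covariance(2, sigma=0.4)    # a single variance term
cov_three_arm = random_effects_covariance(3, sigma=0.4)  # 2 x 2 matrix
```

A two-arm study contributes one relative effect, so the correlation assumption only matters for studies with three or more arms.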

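Besides this RE model, the FE model may also be used. The FE model assumes homogeneity across studies; that is, all between-study variances are 0, so the study-specific underlying relative effects shrink to the common overall relative effect for each treatment comparison. Specifically, the FE model for NMA is:

$$
\begin{aligned}
y_{ik} &\sim f(\cdot; \theta_{ik}, x_{ik}), \quad k \in \mathcal{T}_i,\ i = 1, \ldots, N; \\
g(\theta_{ik}) &= \mu_i + d_{bk} I(k \neq b).
\end{aligned}
\tag{2}
$$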

Because the FE model has far fewer parameters than the RE model, its implementation is easier. However, the studies in an NMA are likely heterogeneous in practice (Higgins 2008), so the FE model may produce credible intervals that are too narrow, with low coverage probabilities (Mills, Thorlund, and Ioannidis 2013).

2.2. Evidence inconsistency

The overall relative effects $d_{hk}$ of different treatment comparisons in Equation (1) or (2) are of primary interest. For the comparison of $h$ vs. $k$ ($h \neq k$), its overall direct evidence is quantified by $d_{hk}$; the evidence consistency assumes that the direct evidence is identical to the evidence provided by indirect comparisons via any other treatment $l$. Formally, the evidence consistency implies that $d_{hk} = d_{hl} + d_{lk}$ for any trio of treatments $h$, $k$, and $l$. Consequently, under this assumption, a certain treatment, say treatment 1, can be selected as a reference, and the overall relative effect of any treatment comparison is determined by comparisons vs. the reference: $d_{hk} = d_{1k} - d_{1h}$. The evidence consistency reduces the set of all possible treatment comparisons to the set of “basic” parameters (i.e., $d_{12}, \ldots, d_{1K}$).
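The reduction to basic parameters can be sketched in a few lines: once the effects of every treatment vs. the reference are fixed, every other comparison follows by subtraction, and any indirect route gives the same answer. The numerical values and the function name below are hypothetical.

```python
def relative_effect(basic, h, k):
    """Overall relative effect of treatment k vs. treatment h under consistency.

    `basic` maps each non-reference treatment to its effect vs. treatment 1;
    the effect of the reference vs. itself is zero.
    """
    effect_1h = 0.0 if h == 1 else basic[h]
    effect_1k = 0.0 if k == 1 else basic[k]
    return effect_1k - effect_1h


# Hypothetical basic parameters (log odds ratios vs. treatment 1), four treatments.
basic = {2: 0.30, 3: -0.10, 4: 0.55}

d_23 = relative_effect(basic, 2, 3)   # direct expression for 3 vs. 2
d_24 = relative_effect(basic, 2, 4)
d_43 = relative_effect(basic, 4, 3)
indirect_23_via_4 = d_24 + d_43       # indirect route through treatment 4
```

With four treatments there are six possible pairwise comparisons but only three free parameters, which is the sense in which consistency constrains the model.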

However, treatment effects based on direct evidence may not always agree with those based on indirect evidence, leading to evidence inconsistency. The inconsistency is a sort of discrepancy that lies between treatment comparisons, which differs from the heterogeneity between individual studies (Lu and Ades 2006; Salanti 2012). Several methods have been proposed to account for evidence inconsistency. For example, Dias, Welton, et al. (2013) proposed the unrelated-mean-effects (UME) model, which treats all direct treatment comparisons as separate, unrelated parameters to be estimated, so this model does not require the consistency assumption.

Lu and Ades (2006) proposed the ICDF to quantify the potential that an NMA might suffer from evidence inconsistency; we denote it by $\mathrm{ICDF}_{\mathrm{LA}}$. It is interpreted as the number of independent evidence loops, where the independence means that evidence consistency within a certain loop does not rely on evidence consistency within other loops. For example, in an NMA with four treatments A, B, C, and D, suppose that A, B, C form a loop and B, C, D form another loop, and denote these loops by A-B-C and B-C-D, respectively. Then, the loop A-B-C-D depends on A-B-C and B-C-D, and thus these three loops are not independent.

For an NMA with two-arm studies only, Lu and Ades (2006) calculated the ICDF as

$$
\mathrm{ICDF}_{\mathrm{LA}} = T - K + 1, \tag{3}
$$

where $T$ is the total number of treatment pairs that are directly compared. For example, in a simple NMA with only three mutually compared treatments A, B, and C, $T$ = 3 and $K$ = 3, so the ICDF = 1, coinciding with the fact that this NMA has one evidence loop. If the two three-arm studies are removed from the NMA by Cipriani et al. (2009), all remaining studies are two-armed. The network structure in Figure 1 remains unchanged, with $T$ = 42 and $K$ = 12, so the ICDF = 31.
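A minimal sketch of this count, assuming a connected network of two-arm studies described by its list of directly compared treatment pairs (the function name and the toy networks are ours):

```python
def icdf_two_arm(direct_comparisons):
    """Number of directly compared pairs minus number of treatments plus one.

    `direct_comparisons` is an iterable of treatment pairs compared head-to-head
    by at least one two-arm study; repeated pairs are counted once. The network
    is assumed to be connected.
    """
    pairs = {frozenset(pair) for pair in direct_comparisons}
    treatments = set().union(*pairs)
    return len(pairs) - len(treatments) + 1


triangle = [("A", "B"), ("A", "C"), ("B", "C")]
two_loops = [("A", "B"), ("A", "C"), ("B", "C"), ("B", "D"), ("C", "D")]
star = [("P", "A"), ("P", "B"), ("P", "C")]

icdf_triangle = icdf_two_arm(triangle)    # one evidence loop
icdf_two_loops = icdf_two_arm(two_loops)  # loops A-B-C and B-C-D
icdf_star = icdf_two_arm(star)            # no evidence loop
```

The four-treatment network returns 2 rather than 3, reflecting that the outer loop A-B-D-C is implied by the two inner loops. With 42 direct comparisons among 12 treatments, the same arithmetic gives 42 - 12 + 1 = 31.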

Equation (3), however, is not directly applicable to NMAs with multi-arm studies. In an effort to account for such studies, Lu and Ades (2006) also suggested calculating the ICDF as $T - K - S + 1$, where $S$ denotes the number of loops in which direct treatment comparisons are only present in multi-arm studies. However, when an NMA contains many multi-arm studies, this calculation becomes fairly difficult, and it has been concluded that the ICDF may have to be counted “by hand.” For example, recall that the original NMA by Cipriani et al. (2009) contains two three-arm studies, comparing treatments 5, 9, and 11. The evidence loop of 5-9-11 is intrinsically consistent within each three-arm study. Meanwhile, ten two-arm studies of treatment comparison 9 vs. 5, six of 11 vs. 5, and two of 11 vs. 9 also contribute evidence to this loop; their evidence is subject to inconsistency. Such mixtures of evidence from these two- and multi-arm studies complicate the concept of the ICDF (Lu and Ades 2006; Dias, Welton, et al. 2013). To facilitate calculating the ICDF in generic NMAs, we propose new methods based on the ENPs of Bayesian hierarchical models.

2.3. Effective number of parameters

The ENP is frequently used to assess the complexity of Bayesian hierarchical models; it is a key component of the deviance information criterion (DIC) for Bayesian model selection. The DIC is the sum of a deviance term, representing the goodness-of-fit, and a penalty term, representing the model complexity (Spiegelhalter et al. 2002); that is,

$$
\mathrm{DIC} = \bar{D} + p_D,
$$

where $\bar{D}$ is the deviance term, and $p_D$ penalizes the model complexity and is interpreted as the ENP. A complex model with many parameters generally fits the data well, but it may be bad for prediction, producing estimates with large variances. On the other hand, a simple model with few parameters may fit the data poorly. Therefore, the DIC is a useful tool for trading off the goodness-of-fit and the model complexity.
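To make the penalty term concrete, the toy sketch below estimates the ENP from posterior draws as the posterior mean deviance minus the deviance at the posterior mean, following the definition of Spiegelhalter et al. (2002). It uses a one-parameter normal model with simulated data rather than an NMA model, and the function names are ours; software such as JAGS may use a different estimator of the penalty.

```python
import random
import statistics


def deviance(theta, ys):
    """Deviance (-2 * log-likelihood) for y_i ~ Normal(theta, 1), up to a constant."""
    return sum((y - theta) ** 2 for y in ys)


def dic_components(theta_draws, ys):
    """Return (posterior mean deviance, effective number of parameters, DIC)."""
    mean_deviance = statistics.fmean(deviance(t, ys) for t in theta_draws)
    deviance_at_mean = deviance(statistics.fmean(theta_draws), ys)
    p_d = mean_deviance - deviance_at_mean
    return mean_deviance, p_d, mean_deviance + p_d


rng = random.Random(2021)
ys = [rng.gauss(0.5, 1.0) for _ in range(30)]   # simulated toy data
y_bar = statistics.fmean(ys)
# Under a flat prior, the posterior of theta is Normal(y_bar, 1 / n).
theta_draws = [rng.gauss(y_bar, (1.0 / len(ys)) ** 0.5) for _ in range(20000)]

mean_deviance, p_d, dic = dic_components(theta_draws, ys)
```

The model has a single free parameter, so the estimated ENP should be close to 1, up to Monte Carlo error.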

In NMAs, the calculation of the deviance depends on the outcome measures (Dias, Sutton, et al. 2013). Each treatment group in each study contributes an expected value 1 to $\bar{D}$, so the deviance is expected to be around $\sum_{i=1}^{N} |\mathcal{T}_i|$ (i.e., the total number of treatment groups in the NMA), where $|\cdot|$ denotes a set’s size.
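As a worked instance, the illustrative NMA contains 109 two-arm and two three-arm studies, so its total number of treatment groups, and hence its approximate expected deviance, is (writing $\mathcal{T}_i$ for the treatment set of study $i$ and $N$ for the number of studies, a notation assumed here):

```latex
\sum_{i=1}^{N} |\mathcal{T}_i| = 109 \times 2 + 2 \times 3 = 224
```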

The $p_D$ describes the number of parameters that effectively model the NMA data. For example, under the evidence consistency assumption, the ENP of the FE model in Equation (2) is expected to be

$$
p_D^{\mathrm{FE,C}} = N + K - 1, \tag{4}
$$

which is contributed by the baseline effects of all $N$ studies and the $K - 1$ “basic” parameters of relative effects ($d_{12}, \ldots, d_{1K}$). On the other hand, using the UME model by Dias, Welton, et al. (2013) to permit evidence inconsistency and assuming that all studies are two-armed, the ENP is expected to be

$$
p_D^{\mathrm{FE,IC}} = N + T, \tag{5}
$$

because this model treats the relative effects of the $T$ directly compared treatment pairs as separate, unrelated parameters. Of note, in the presence of multi-arm studies, Equation (4) for the consistency model is valid because such studies intrinsically satisfy the consistency assumption. However, Equation (5) for the inconsistency model may not hold, because it treats all comparisons as entirely separate parameters and does not account for the intrinsic consistency within multi-arm studies. For example, consider a three-arm study comparing treatments A, B, and C. The inconsistency UME model assigns 3 parameters, $d_{\mathrm{AB}}$, $d_{\mathrm{AC}}$, and $d_{\mathrm{BC}}$, to estimate the relative effects; however, within this three-arm study, $d_{\mathrm{BC}}$ is actually determined by $d_{\mathrm{AB}}$ and $d_{\mathrm{AC}}$, so the ENP is 2, rather than 3. The exact form of $p_D^{\mathrm{FE,IC}}$ may not be explicitly derived when two-arm studies are mixed with this three-arm study.
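The two expected parameter counts for the two-arm-only case can be written out as simple counting functions. This is a sketch of the verbal description above (one baseline effect per study, plus either the basic parameters or one parameter per directly compared pair); the function names are ours, and the counts used are those of the illustrative NMA after removing its two three-arm studies.

```python
def enp_fe_consistency(n_studies, n_treatments):
    """Expected ENP of the FE consistency model: one baseline effect per study
    plus (number of treatments - 1) basic parameters."""
    return n_studies + n_treatments - 1


def enp_fe_ume_two_arm(n_studies, n_direct_comparisons):
    """Expected ENP of the FE unrelated-mean-effects model when every study is
    two-armed: one baseline effect per study plus one parameter per directly
    compared treatment pair."""
    return n_studies + n_direct_comparisons


# 109 two-arm studies, 12 treatments, 42 directly compared pairs.
p_consistency = enp_fe_consistency(109, 12)
p_inconsistency = enp_fe_ume_two_arm(109, 42)
difference = p_inconsistency - p_consistency
```

The study baselines cancel in the difference, leaving 42 - 12 + 1 = 31, the number of additional relative-effect parameters freed by dropping the consistency assumption.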

It is also not straightforward to derive the exact form of the ENP of the RE model. Although the study-specific relative effects $\delta_{ibk}$ are different, they cannot be counted as separate effective parameters because they are assumed as random effects: $\delta_{ibk} \sim N(d_{bk}, \sigma^2)$. If the between-study variance $\sigma^2$ is zero, then all $\delta_{ibk}$ equal $d_{bk}$, effectively leading to a single parameter; if $\sigma^2$ approaches infinity, then all $\delta_{ibk}$ are essentially separate parameters. The ENP of the RE model lies between the two extreme cases above.
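The two extremes can be made concrete in a deliberately simplified setting that is not the NMA model itself: n study-level effects observed with a common known within-study standard deviation s, a known between-study standard deviation tau, and a flat prior on their common mean. For such a normal linear model the ENP equals the trace of the matrix that maps observations to fitted values, which works out to 1 + (n - 1) * tau^2 / (tau^2 + s^2). The function name and numerical values below are illustrative assumptions.

```python
def enp_balanced_normal(n_studies, tau, s):
    """ENP of a balanced normal-normal random-effects model with known
    variances and a flat prior on the common mean."""
    shrinkage = tau ** 2 / (tau ** 2 + s ** 2)   # 0 = full pooling, 1 = no pooling
    return 1.0 + (n_studies - 1) * shrinkage


enp_homogeneous = enp_balanced_normal(10, tau=0.0, s=0.5)     # a single common effect
enp_moderate = enp_balanced_normal(10, tau=0.5, s=0.5)        # between the extremes
enp_heterogeneous = enp_balanced_normal(10, tau=1e6, s=0.5)   # nearly separate effects
```

In this toy setting the ENP moves continuously from 1 to the number of studies as the between-study variance grows, which mirrors the qualitative behavior described for the RE model.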

Despite the difficulty in theoretically deriving the ENPs’ explicit forms in generic cases, they can be numerically calculated by many software packages via rigorous Bayesian methods (Plummer 2002; Spiegelhalter et al. 2002; Gelman et al. 2014).

2.4. Proposed methods

Based on the ENP, we propose new methods to calculate the ICDF in generic NMAs, regardless of the presence of multi-arm studies. Recall that Lu and Ades (2006) calculated the ICDF as $T - K + 1$ in an NMA with two-arm studies only; this turns out to be the difference between the ENPs $p_D^{\mathrm{FE,C}}$ and $p_D^{\mathrm{FE,IC}}$ under the FE setting with and without the evidence consistency assumption in Equations (4) and (5), since $(N + T) - (N + K - 1) = T - K + 1$. Motivated by this observation, the ICDF’s definition can be naturally generalized to handle NMAs with multi-arm studies: for the FE setting, we define

$$
\mathrm{ICDF}_{\mathrm{FE}} = p_D^{\mathrm{FE,IC}} - p_D^{\mathrm{FE,C}}. \tag{6}
$$

In NMAs with two-arm studies only, $\mathrm{ICDF}_{\mathrm{FE}}$ is expected to be identical to $\mathrm{ICDF}_{\mathrm{LA}}$. In practice, $\mathrm{ICDF}_{\mathrm{FE}}$ is numerically calculated by Bayesian methods via the Markov chain Monte Carlo (MCMC) algorithm, while $\mathrm{ICDF}_{\mathrm{LA}}$ is given by an explicit form in Equation (3); therefore, they may be slightly different owing to computing errors. This difference may also relate to Markov chains’ stabilization and convergence.

The ICDF’s alternative definition in Equation (6) is valid for generic NMAs, even in the presence of multi-arm studies, because the ENPs can be readily obtained in all Bayesian hierarchical models. In addition, this definition is intuitive because the difference in the ENPs naturally represents the change of the degrees of freedom of treatment comparisons caused by making the evidence consistency/inconsistency assumption.

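The $\mathrm{ICDF}_{\mathrm{FE}}$ is derived under the FE setting, which is inappropriate if substantial heterogeneity appears. We may define the ICDF similarly under the RE setting:

$$
\mathrm{ICDF}_{\mathrm{RE}} = p_D^{\mathrm{RE,IC}} - p_D^{\mathrm{RE,C}}, \tag{7}
$$

where $p_D^{\mathrm{RE,C}}$ and $p_D^{\mathrm{RE,IC}}$ are the ENPs of the consistency and inconsistency RE models, respectively.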

2.5. Analyses of artificial and empirical data

We constructed artificial data based on the illustrative NMA by Cipriani et al. (2009) to assess the proposed ICDFs’ performance; Figure 2 shows the process. Specifically, we first reduced the original data (Data O) to be star-shaped (Data S) with the center at treatment 5 (fluoxetine), because it had been conventionally used as the reference antidepressant. As the star-shaped NMA contained no loop, its ICDF is 0 in theory. On the one hand, by iteratively adding two-arm studies of different direct comparisons to Data S, we created Data T1 to T3. Here, we use “T” to indicate that these artificial data contained two-arm studies only, so that $\mathrm{ICDF}_{\mathrm{LA}}$ could be calculated and thus compared with our proposed ICDFs. On the other hand, by iteratively adding three-arm studies with different treatment sets to Data S, we created Data M1 to M3, where “M” indicates the existence of multi-arm studies. These three artificial datasets contained different numbers of multi-arm studies comparing different sets of treatments, so that we could explore their impact on the ICDF.

Figure 2.

Flow chart of constructing artificial NMA datasets.

We also assessed ICDFs in empirical NMA datasets with binary outcomes. They were extracted from a total of 58 NMAs investigated by Bafeta et al. (2013, 2014), Veroniki et al. (2013), and Trinquart et al. (2016). After excluding datasets that were originally devoted to extensions of the classic NMA model (e.g., models for competing risks) or those containing overlapping studies, we obtained 40 NMAs with various disease outcomes, including cancer, depressive disorder, major cardiovascular events, rheumatoid arthritis, stroke, etc. These 40 NMAs included our illustrative example. We calculated ICDFs of the remaining 39 NMAs; each NMA will be denoted by the first author’s surname with the publication year. The Supplemental Material (Appendix A) presents the full references of these 39 NMAs.

We used the MCMC algorithm to implement the Bayesian analyses in both artificial and empirical NMA data with R (version 4.0.2) via the package “rjags” (version 4-10) and JAGS (version 4.3.0). The results were based on three Markov chains; each chain contained 200,000 iterations after a burn-in period of 50,000 iterations, and the thinning rate was 2 for reducing posterior sample autocorrelations. A vague normal prior was used for the baseline effects and the overall log odds ratios. Under the RE setting, a uniform prior was used for the heterogeneity standard deviation. We examined the Markov chains’ convergence based on their traceplots. The Supplemental Material (Appendix B) includes the code for all analyses.

3. Results

3.1. Artificial data

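Table 1 shows the ICDFs in the various artificial NMA datasets. Each Markov chain for each NMA converged well. Because Data O and M1 to M3 contained multi-arm studies, the $\mathrm{ICDF}_{\mathrm{LA}}$ was not applicable to them. When $\mathrm{ICDF}_{\mathrm{LA}}$ could be calculated, it was close to $\mathrm{ICDF}_{\mathrm{FE}}$. Moreover, $\mathrm{ICDF}_{\mathrm{RE}}$ was generally smaller than $\mathrm{ICDF}_{\mathrm{FE}}$.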

Table 1.

Inconsistency degrees of freedom in artificial data based on the network meta-analysis by Cipriani et al. (2009).

| Data | No. of studies (no. of multi-arm studies) | $\mathrm{ICDF}_{\mathrm{LA}}$ | $\mathrm{ICDF}_{\mathrm{FE}}$ | $\mathrm{ICDF}_{\mathrm{RE}}$ |
|---|---|---|---|---|
| Data O | 111 (2) | NA | 31.05 | 26.99 |
| Data S | 50 (0) | 0 | 0.02 | 0.24 |
| Data T1 | 58 (0) | 2 | 2.04 | 1.21 |
| Data T2 | 68 (0) | 5 | 4.88 | 2.62 |
| Data T3 | 109 (0) | 31 | 31.02 | 26.52 |
| Data M1 | 52 (2) | NA | 0.06 | 0.13 |
| Data M2 | 60 (10) | NA | 0.01 | 0.26 |
| Data M3 | 68 (18) | NA | 1.92 | 1.32 |

NA, not applicable; the ICDF proposed by Lu and Ades (2006) is applicable to network meta-analyses with two-arm studies only.

Recall that Data S was star-shaped, so it contained no evidence loop; consequently, $\mathrm{ICDF}_{\mathrm{LA}}$ = 0 and $\mathrm{ICDF}_{\mathrm{FE}}$ was almost 0. Both $\mathrm{ICDF}_{\mathrm{FE}}$ and $\mathrm{ICDF}_{\mathrm{RE}}$ were slightly negative; they were possibly subject to Monte Carlo errors. Data T1 added more studies that directly compared two treatment pairs to Data S, creating two independent loops; thus, $\mathrm{ICDF}_{\mathrm{LA}}$ = 2, which approximately equaled $\mathrm{ICDF}_{\mathrm{FE}}$ = 2.04. These two ICDF measures also had similar values for Data T2.

In addition, Data T3 and O differed by only two three-arm studies of 5 vs. 9 vs. 11. Data T3 had $\mathrm{ICDF}_{\mathrm{LA}}$ = 31 and $\mathrm{ICDF}_{\mathrm{FE}}$ = 31.02, which were fairly close to $\mathrm{ICDF}_{\mathrm{FE}}$ = 31.05 in Data O. The two three-arm studies did not have much impact on the ICDF, likely because the NMA contained many more two-arm studies. Similarly, the ICDF of Data M1 was close to that of Data S; they also only differed by the two three-arm studies. Increasing the number of such three-arm studies as in Data M2 did not dramatically change the ICDF; both $\mathrm{ICDF}_{\mathrm{FE}}$ and $\mathrm{ICDF}_{\mathrm{RE}}$ were close to 0 because the loop of 5-9-11 was contributed mostly by the three-arm studies and thus tended to be consistent. However, after adding eight three-arm studies of 9 vs. 10 vs. 11 to Data M2, $\mathrm{ICDF}_{\mathrm{FE}}$ and $\mathrm{ICDF}_{\mathrm{RE}}$ increased to 1.92 and 1.32, respectively. Although the added studies were three-armed and the evidence was consistent within them, they contributed comparisons to form evidence loops with other two-arm studies. For example, the comparisons of 9 vs. 10 from the three-arm studies formed an evidence loop with the two-arm studies of 5 vs. 9 and 5 vs. 10. Therefore, the ICDF was no longer 0 in Data M3.

3.2. Empirical data

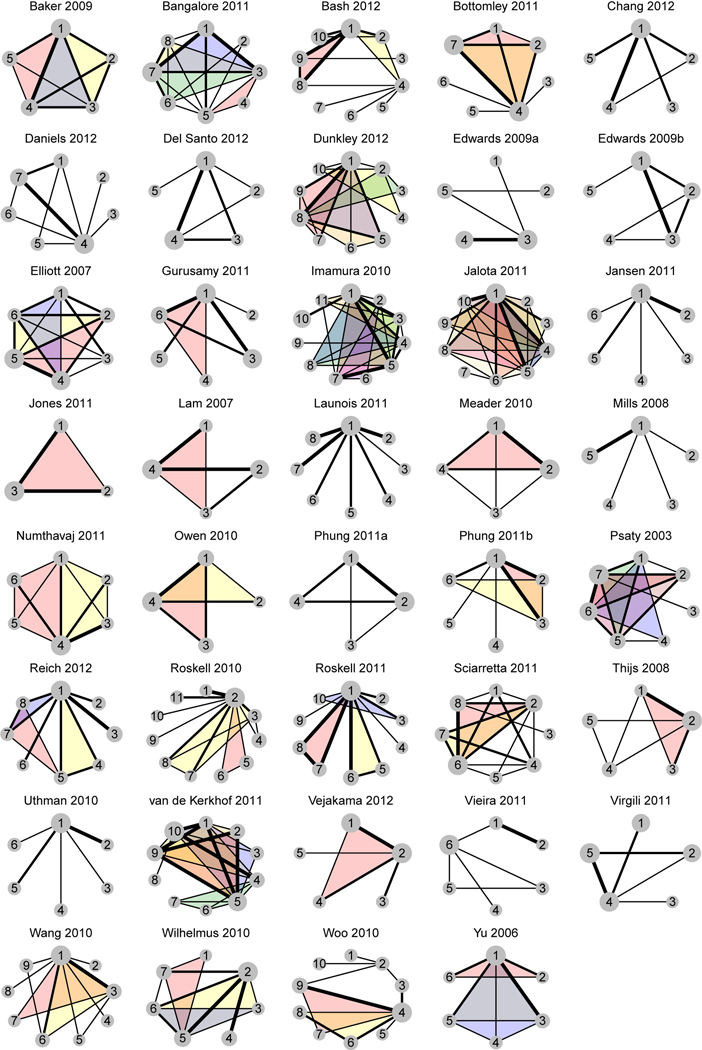

Figure 3 shows the treatment networks of 39 empirical NMA datasets, and Table 2 presents their ICDFs. Among the 39 NMAs, 27 (69%) contained at least one multi-arm study, so the $\mathrm{ICDF}_{\mathrm{LA}}$ was not applicable to them. For each of the remaining 12 NMAs, $\mathrm{ICDF}_{\mathrm{FE}}$ was fairly close to $\mathrm{ICDF}_{\mathrm{LA}}$; their difference was up to 0.04. Also, $\mathrm{ICDF}_{\mathrm{RE}}$ was generally smaller than $\mathrm{ICDF}_{\mathrm{FE}}$.
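The agreement between the existing measure and the proposed fixed-effects measure in the 12 NMAs with two-arm studies only can be checked directly from the values reported in Table 2. The sketch below simply tabulates the absolute differences; the variable names are ours.

```python
# (existing measure, proposed fixed-effects measure) as reported in Table 2
# for the 12 empirical NMAs that contain two-arm studies only.
two_arm_nmas = {
    "Chang 2012": (1, 1.03),
    "Daniels 2012": (3, 2.99),
    "Del Santo 2012": (2, 2.03),
    "Edwards 2009a": (0, 0.03),
    "Edwards 2009b": (2, 2.03),
    "Jansen 2011": (0, 0.01),
    "Launois 2011": (0, 0.01),
    "Mills 2008": (0, 0.02),
    "Phung 2011a": (2, 1.99),
    "Uthman 2010": (0, 0.02),
    "Vieira 2011": (1, 0.99),
    "Virgili 2011": (1, 1.04),
}

abs_differences = {
    name: abs(existing - proposed)
    for name, (existing, proposed) in two_arm_nmas.items()
}
largest_name = max(abs_differences, key=abs_differences.get)
largest_difference = round(abs_differences[largest_name], 2)
```

The largest absolute difference is 0.04 (Virgili 2011), in line with the statement above.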

Figure 3.

Treatment network plots of 39 empirical NMA datasets. Shaded loops in different colors indicate multi-arm studies with different treatment sets.

Table 2.

Characteristics and inconsistency degrees of freedom in the 39 empirical network meta-analyses.

| Network meta-analysis | No. of studies (no. of multi-arm studies) | No. of treatments | $\mathrm{ICDF}_{\mathrm{LA}}$ | $\mathrm{ICDF}_{\mathrm{FE}}$ | $\mathrm{ICDF}_{\mathrm{RE}}$ | Poor MCMC convergence |
|---|---|---|---|---|---|---|
| Baker 2009 | 38 (9) | 5 | NA | 3.00 | 2.18 | No |
| Bangalore 2011 | 49 (4) | 8 | NA | 9.83 | 8.89 | (IC, BE) |
| Bash 2012 | 20 (3) | 10 | NA | 3.03 | 1.37 | No |
| Bottomley 2011 | 10 (2) | 7 | NA | 3.01 | 1.05 | No |
| Chang 2012 | 12 (0) | 5 | 1 | 1.03 | 0.23 | No |
| Daniels 2012 | 18 (0) | 7 | 3 | 2.99 | 0.41 | No |
| Del Santo 2012 | 10 (0) | 5 | 2 | 2.03 | 1.14 | No |
| Dunkley 2012 | 13 (5) | 10 | NA | 2.03 | 0.79 | No |
| Edwards 2009a | 34 (0) | 5 | 0 | 0.03 | 0.01 | No |
| Edwards 2009b | 10 (0) | 5 | 2 | 2.03 | 1.47 | No |
| Elliott 2007 | 22 (4) | 6 | NA | 9.00 | 4.84 | No |
| Gurusamy 2011 | 11 (1) | 6 | NA | 1.04 | 0.49 | (C, RE); (IC, RE) |
| Imamura 2010 | 36 (7) | 11 | NA | 11.72 | 2.87 | (IC, BE) |
| Jalota 2011 | 99 (19) | 10 | NA | 0.03 | 0.09 | No |
| Jansen 2011 | 8 (0) | 6 | 0 | 0.01 | 0.01 | No |
| Jones 2011 | 19 (1) | 3 | NA | 1.01 | 0.86 | No |
| Lam 2007 | 11 (1) | 4 | NA | 1.01 | 1.01 | No |
| Launois 2011 | 19 (0) | 8 | 0 | 0.01 | 0.06 | No |
| Meader 2010 | 20 (1) | 4 | NA | 2.99 | 1.36 | No |
| Mills 2008 | 19 (0) | 5 | 0 | 0.02 | 0.05 | No |
| Numthavaj 2011 | 6 (2) | 6 | NA | 3.00 | 1.11 | No |
| Owen 2010 | 14 (2) | 4 | NA | 1.97 | 2.84 | No |
| Phung 2011a | 13 (0) | 4 | 2 | 1.99 | 0.24 | No |
| Phung 2011b | 20 (2) | 6 | NA | 0.65 | 0.66 | (IC, RE) |
| Psaty 2003 | 30 (7) | 7 | NA | 7.02 | 1.17 | No |
| Reich 2012 | 18 (5) | 8 | NA | 2.05 | 1.65 | No |
| Roskell 2010 | 17 (2) | 11 | NA | 1.00 | 0.20 | No |
| Roskell 2011 | 12 (6) | 10 | NA | 0.01 | 0.05 | No |
| Sciarretta 2011 | 26 (2) | 8 | NA | 9.08 | 1.92 | No |
| Thijs 2008 | 25 (3) | 5 | NA | 3.00 | 1.33 | No |
| Uthman 2010 | 14 (0) | 6 | 0 | 0.02 | 0.00 | No |
| van de Kerkhof 2011 | 7 (5) | 10 | NA | 3.94 | 3.36 | No |
| Vejakama 2012 | 9 (1) | 5 | NA | 1.07 | 0.55 | (C, BE); (IC, BE) |
| Vieira 2011 | 7 (0) | 6 | 1 | 0.99 | 0.55 | No |
| Virgili 2011 | 10 (0) | 5 | 1 | 1.04 | 1.17 | No |
| Wang 2010 | 43 (2) | 9 | NA | 3.02 | 0.78 | No |
| Wilhelmus 2010 | 43 (3) | 7 | NA | 5.98 | 4.65 | No |
| Woo 2010 | 13 (2) | 10 | NA | 0.66 | 1.01 | (C, BE); (IC, BE) |
| Yu 2006 | 14 (3) | 6 | NA | 0.04 | 0.11 | No |

ICDF represents inconsistency degrees of freedom.

MCMC represents Markov chain Monte Carlo algorithm.

NA, not applicable; the ICDF proposed by Lu and Ades (2006) is applicable to network meta-analyses with two-arm studies only.

C, IC, RE, and BE represent the consistency model, the inconsistency model, the random-effects setting, and both the fixed- and random-effects settings, respectively; $d$ represents a comparison between treatments specified in its subscript; an asterisk (*) in the subscript indicates any other treatment in the network.

The proposed measures were consistent with the intuition that better-connected NMAs generally have larger ICDFs; for example, Bangalore 2011 had relatively large $\mathrm{ICDF}_{\mathrm{FE}}$ and $\mathrm{ICDF}_{\mathrm{RE}}$. Some NMAs seemed well-connected, but most treatment loops were actually formed by multi-arm studies, which were not subject to inconsistency. The proposed methods accurately yielded ICDFs in such situations. For example, both $\mathrm{ICDF}_{\mathrm{FE}}$ and $\mathrm{ICDF}_{\mathrm{RE}}$ of Jalota 2011 were nearly 0; although this NMA seemed to contain many loops, they were mostly formed by multi-arm studies. Specifically, this NMA contained 99 studies; except for one study of 6 vs. 7, all remaining studies contained treatment 1. If multi-arm studies were removed, this NMA would be nearly star-shaped. Additionally, the ICDFs under the FE and RE settings dramatically differed in some NMAs; for example, Sciarretta 2011 had $\mathrm{ICDF}_{\mathrm{FE}}$ = 9.08 and $\mathrm{ICDF}_{\mathrm{RE}}$ = 1.92. On the other hand, $\mathrm{ICDF}_{\mathrm{RE}}$ was noticeably larger than $\mathrm{ICDF}_{\mathrm{FE}}$ in a few NMAs, such as Owen 2010.

Of note, computational issues occurred in three NMAs, i.e., Edwards 2009a, Jones 2011, and Wilhelmus 2010. Specifically, Edwards 2009a contained a two-arm study with event counts equal to the corresponding sample sizes in both arms. Jones 2011 similarly contained two such studies. In addition, Wilhelmus 2010 contained a four-arm study of 2 vs. 3 vs. 5 vs. 6; treatments 2 and 3 were directly compared by only this study in the whole network, while the event count in treatment group 3 equaled its sample size. These cases were counterparts of the problem of zero-count studies, leading to potential estimation issues; see, e.g., Chapter 10.4.4 in Higgins et al. (2019). To successfully produce the ICDFs in these three NMAs, we reduced event counts by one in each treatment group in the aforementioned studies. Their results in Table 2 were based on the adjusted event counts. Another computational problem was related to the MCMC convergence. Table 2 indicates that six NMAs contained comparisons with poor MCMC convergence. They had relatively rare outcomes with low or zero event counts. Some adjustment for the zero events might help improve the convergence.

4. Discussion

As its name suggests, the ICDF describes the degrees of freedom with which an NMA could suffer from evidence inconsistency. It is not designed to test the evidence consistency assumption; a large ICDF value indicates the potential for, but not the presence of, evidence inconsistency. As such, the ICDF relates closely to the possible ways of effectively mixing the direct and indirect evidence, and thus implies the benefit of an NMA over separate pairwise meta-analyses that cannot synthesize these two types of evidence.

This article has proposed new measures to assess the ICDF. The existing measure is feasibly applicable only to NMAs with two-arm studies, because multi-arm studies form intrinsically consistent evidence loops and complicate the calculation. Due to this limitation, the current literature seldom reports this measure. Based on the ENPs of Bayesian hierarchical models, the proposed measures can be naturally applied to generic NMAs that may contain multi-arm studies under both the FE and RE settings. The data analyses have shown that the new measure under the FE setting produced essentially the same values as the existing measure in NMAs with two-arm studies only. In addition, although we have focused on NMAs with binary outcomes, the proposed measures can be similarly obtained for those with other outcomes. In summary, the ICDF is an important characteristic of an NMA; the proposed methods enable its calculation in generic NMAs, and it may be routinely reported in practice.

The existing measure by Lu and Ades (2006) is arguably a geometry-based measure, because it is driven by the concept of “number of independent loops in a network.” This nature makes it difficult to handle multi-arm studies when they are mixed with the inconsistency loops. On the other hand, the proposed measures under the FE and RE settings may be considered model-based measures, because they are driven by Bayesian models. Their interpretations as differences in the ENPs of consistency and inconsistency models may be more intuitive from the statistical perspective than that of the existing measure, which is interpreted purely from the geometric perspective. For example, suppose a simple network contains three treatments A, B, and C, and 10 studies directly compare each pair of treatments. If only one three-arm study of A vs. B vs. C is added to this network, its impact on the ICDF is expected to be small. However, if 100 three-arm studies are added, their intrinsically consistent evidence would dominate the network and the ICDF would decrease. Therefore, it may be more sensible to use the model-based, rather than the geometry-based, measures of ICDF to make a tradeoff between the two- and multi-arm studies.
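The geometric view can be sketched for a network with two-arm studies only. The sketch assumes that the geometry-based count equals the number of independent loops of the treatment graph, i.e., the number of directly compared treatment pairs minus the number of treatments plus the number of connected components. The function name and toy networks are hypothetical, and this is not the exact algorithm of Lu and Ades (2006).

```python
def independent_loops(comparisons):
    """Count independent loops in a treatment network.

    `comparisons` is an iterable of (treatment, treatment) pairs with direct
    evidence from two-arm studies; repeated pairs count once, and the two
    treatments in a pair are assumed to be distinct.
    """
    edges = {frozenset(pair) for pair in comparisons}
    nodes = set().union(*edges) if edges else set()

    # Union-find to count connected components.
    parent = {v: v for v in nodes}

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    for edge in edges:
        a, b = tuple(edge)
        parent[find(a)] = find(b)

    components = len({find(v) for v in nodes})
    return len(edges) - len(nodes) + components


# Triangle A-B-C: one independent loop, however many studies per comparison.
triangle = [("A", "B")] * 10 + [("A", "C")] * 10 + [("B", "C")] * 10
loops_triangle = independent_loops(triangle)

# Star-shaped network: no loops.
loops_star = independent_loops([("A", "B"), ("A", "C"), ("A", "D")])
```

The count depends only on which treatment pairs are compared, not on how many studies inform each comparison or whether a loop arises from a multi-arm study, which is the limitation the paragraph above describes.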

The data analyses indicated that the ICDF under the FE setting was slightly less than 0 in some NMAs due to Monte Carlo errors. It is expected to be nonnegative in theory, because the inconsistency FE model should contain more effective parameters than the consistency FE model. On the other hand, the ICDF under the RE setting was noticeably negative in a few NMAs; this seems counterintuitive, as the inconsistency model appears to have more parameters than the consistency model. Nevertheless, this intuition is based on the conventional counting of parameters in a model, rather than the ENP used for calculating the proposed measure. Under the RE setting, the consistency and inconsistency models may produce fairly different estimates of heterogeneity variances, which have great impact on the ENPs. The ENP of the inconsistency model is not necessarily larger than that of the consistency model, possibly yielding a negative value of ICDF. Indeed, the measure under the RE setting is not proposed in an effort to mimic the existing measure by Lu and Ades (2006); it aims to provide new insights for understanding the evidence inconsistency under the RE setting. We will further explore the properties of these ICDF measures in future studies.
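The possibility of a negative value can be illustrated with a small sketch. It assumes the ENP is computed as the posterior mean deviance minus the deviance at the posterior means, in the style of Spiegelhalter et al. (2002); whether this matches the ENP definition used for the proposed measures is an assumption here. The function names are hypothetical and all numbers are made up for illustration only.

```python
from statistics import fmean


def enp_pd(deviance_draws, deviance_at_posterior_mean):
    """ENP as mean posterior deviance minus deviance at the posterior means
    (an assumed definition for this sketch)."""
    return fmean(deviance_draws) - deviance_at_posterior_mean


def icdf(enp_inconsistency, enp_consistency):
    """ICDF as the difference in ENPs between the inconsistency and
    consistency models."""
    return enp_inconsistency - enp_consistency


# Made-up MCMC deviance draws for the two RE models.
enp_con = enp_pd([52.0, 54.0, 53.0, 55.0], 40.0)   # consistency model
enp_incon = enp_pd([50.0, 51.0, 49.0, 52.0], 38.5)  # inconsistency model

icdf_re = icdf(enp_incon, enp_con)
```

In this toy case the inconsistency model has the smaller ENP, so the difference is negative even though that model has more nominal parameters.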

Acknowledgments:

We thank the Associate Editor and two anonymous reviewers for their many helpful suggestions.

Funding: This study was supported in part by the Committee on Faculty Research Support (COFRS) program from the Florida State University Council on Research and Creativity; the U.S. National Institutes of Health/National Library of Medicine grant R01 LM012982; and the National Institutes of Health/National Center for Advancing Translational Sciences grant UL1 TR001427. The content is solely the responsibility of the author and does not necessarily represent the official views of the National Institutes of Health. The financial support had no involvement in the conceptualization of the report and the decision to submit the report for publication.

Footnotes

Disclosure statement: No potential competing interest was reported.

Data availability statement: This article uses datasets reported in published network meta-analyses, which are available upon request.

References

- Bafeta A, Trinquart L, Seror R, and Ravaud P. 2013. Analysis of the systematic reviews process in reports of network meta-analyses: methodological systematic review. BMJ 347:f3675. doi: 10.1136/bmj.f3675.

- Bafeta A, Trinquart L, Seror R, and Ravaud P. 2014. Reporting of results from network meta-analyses: methodological systematic review. BMJ 348:g1741. doi: 10.1136/bmj.g1741.

- Brignardello-Petersen R, Mustafa RA, Siemieniuk RAC, Murad MH, Agoritsas T, Izcovich A, Schünemann HJ, and Guyatt GH. 2019. GRADE approach to rate the certainty from a network meta-analysis: addressing incoherence. Journal of Clinical Epidemiology 108:77–85. doi: 10.1016/j.jclinepi.2018.11.025.

- Caldwell DM, Ades AE, and Higgins JPT. 2005. Simultaneous comparison of multiple treatments: combining direct and indirect evidence. BMJ 331(7521):897–900. doi: 10.1136/bmj.331.7521.897.

- Chan A-W, and Altman DG. 2005. Epidemiology and reporting of randomised trials published in PubMed journals. The Lancet 365(9465):1159–1162. doi: 10.1016/S0140-6736(05)71879-1.

- Cipriani A, Furukawa TA, Salanti G, Geddes JR, Higgins JPT, Churchill R, Watanabe N, Nakagawa A, Omori IM, McGuire H, et al. 2009. Comparative efficacy and acceptability of 12 new-generation antidepressants: a multiple-treatments meta-analysis. The Lancet 373(9665):746–758. doi: 10.1016/S0140-6736(09)60046-5.

- Cipriani A, Higgins JPT, Geddes JR, and Salanti G. 2013. Conceptual and technical challenges in network meta-analysis. Annals of Internal Medicine 159(2):130–137. doi: 10.7326/0003-4819-159-2-201307160-00008.

- Dias S, Sutton AJ, Ades AE, and Welton NJ. 2013. Evidence synthesis for decision making 2: a generalized linear modeling framework for pairwise and network meta-analysis of randomized controlled trials. Medical Decision Making 33(5):607–617. doi: 10.1177/0272989X12458724.

- Dias S, Welton NJ, Caldwell DM, and Ades AE. 2010. Checking consistency in mixed treatment comparison meta-analysis. Statistics in Medicine 29(7–8):932–944. doi: 10.1002/sim.3767.

- Dias S, Welton NJ, Sutton AJ, Caldwell DM, Lu G, and Ades AE. 2013. Evidence synthesis for decision making 4: inconsistency in networks of evidence based on randomized controlled trials. Medical Decision Making 33(5):641–656. doi: 10.1177/0272989X12455847.

- Donegan S, Dias S, and Welton NJ. 2019. Assessing the consistency assumptions underlying network meta-regression using aggregate data. Research Synthesis Methods 10(2):207–224. doi: 10.1002/jrsm.1327.

- Donegan S, Williamson P, D’Alessandro U, and Tudur Smith C. 2013. Assessing key assumptions of network meta-analysis: a review of methods. Research Synthesis Methods 4(4):291–323. doi: 10.1002/jrsm.1085.

- Gelman A, Carlin JB, Stern HS, Dunson DB, Vehtari A, and Rubin DB. 2014. Bayesian Data Analysis. 3rd ed. Boca Raton, FL: CRC Press.

- Gurevitch J, Koricheva J, Nakagawa S, and Stewart G. 2018. Meta-analysis and the science of research synthesis. Nature 555:175–182. doi: 10.1038/nature25753.

- Higgins JPT. 2008. Commentary: heterogeneity in meta-analysis should be expected and appropriately quantified. International Journal of Epidemiology 37(5):1158–1160. doi: 10.1093/ije/dyn204.

- Higgins JPT, Jackson D, Barrett JK, Lu G, Ades AE, and White IR. 2012. Consistency and inconsistency in network meta-analysis: concepts and models for multi-arm studies. Research Synthesis Methods 3(2):98–110. doi: 10.1002/jrsm.1044.

- Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, and Welch VA. 2019. Cochrane Handbook for Systematic Reviews of Interventions. Chichester, UK: John Wiley & Sons.

- Higgins JPT, and Whitehead A. 1996. Borrowing strength from external trials in a meta-analysis. Statistics in Medicine 15(24):2733–2749.

- Hutton B, Salanti G, Caldwell DM, Chaimani A, Schmid CH, Cameron C, Ioannidis JPA, Straus S, Thorlund K, Jansen JP, et al. 2015. The PRISMA extension statement for reporting of systematic reviews incorporating network meta-analyses of health care interventions: checklist and explanations. Annals of Internal Medicine 162(11):777–784. doi: 10.7326/M14-2385.

- Ioannidis JPA. 2009. Integration of evidence from multiple meta-analyses: a primer on umbrella reviews, treatment networks and multiple treatments meta-analyses. Canadian Medical Association Journal 181(8):488–493. doi: 10.1503/cmaj.081086.

- Jackson D, Barrett JK, Rice S, White IR, and Higgins JPT. 2014. A design-by-treatment interaction model for network meta-analysis with random inconsistency effects. Statistics in Medicine 33(21):3639–3654. doi: 10.1002/sim.6188.

- Jansen JP, and Naci H. 2013. Is network meta-analysis as valid as standard pairwise meta-analysis? It all depends on the distribution of effect modifiers. BMC Medicine 11:159. doi: 10.1186/1741-7015-11-159.

- Lin L, Chu H, and Hodges JS. 2020. On evidence cycles in network meta-analysis. Statistics and Its Interface 13(4):425–436. doi: 10.4310/SII.2020.v13.n4.a1.

- Lin L, Xing A, Kofler MJ, and Murad MH. 2019. Borrowing of strength from indirect evidence in 40 network meta-analyses. Journal of Clinical Epidemiology 106:41–49. doi: 10.1016/j.jclinepi.2018.10.007.

- Lu G, and Ades AE. 2004. Combination of direct and indirect evidence in mixed treatment comparisons. Statistics in Medicine 23(20):3105–3124. doi: 10.1002/sim.1875.

- Lu G, and Ades AE. 2006. Assessing evidence inconsistency in mixed treatment comparisons. Journal of the American Statistical Association 101(474):447–459. doi: 10.1198/016214505000001302.

- Lu G, and Ades AE. 2009. Modeling between-trial variance structure in mixed treatment comparisons. Biostatistics 10(4):792–805. doi: 10.1093/biostatistics/kxp032.

- Lumley T. 2002. Network meta-analysis for indirect treatment comparisons. Statistics in Medicine 21(16):2313–2324. doi: 10.1002/sim.1201.

- Mills EJ, Ioannidis JPA, Thorlund K, Schünemann HJ, Puhan MA, and Guyatt GH. 2012. How to use an article reporting a multiple treatment comparison meta-analysis. JAMA 308(12):1246–1253. doi: 10.1001/2012.jama.11228.

- Mills EJ, Thorlund K, and Ioannidis JPA. 2013. Demystifying trial networks and network meta-analysis. BMJ 346:f2914. doi: 10.1136/bmj.f2914.

- Niforatos JD, Weaver M, and Johansen ME. 2019. Assessment of publication trends of systematic reviews and randomized clinical trials, 1995 to 2017. JAMA Internal Medicine 179(11):1593–1594. doi: 10.1001/jamainternmed.2019.3013.

- Nikolakopoulou A, Chaimani A, Veroniki AA, Vasiliadis HS, Schmid CH, and Salanti G. 2014. Characteristics of networks of interventions: a description of a database of 186 published networks. PLOS ONE 9(1):e86754. doi: 10.1371/journal.pone.0086754.

- Nikolakopoulou A, Mavridis D, Furukawa TA, Cipriani A, Tricco AC, Straus SE, Siontis GCM, Egger M, and Salanti G. 2018. Living network meta-analysis compared with pairwise meta-analysis in comparative effectiveness research: empirical study. BMJ 360:k585. doi: 10.1136/bmj.k585.

- Papakonstantinou T, Nikolakopoulou A, Egger M, and Salanti G. 2020. In network meta-analysis, most of the information comes from indirect evidence: empirical study. Journal of Clinical Epidemiology 124:42–49. doi: 10.1016/j.jclinepi.2020.04.009.

- Petropoulou M, Nikolakopoulou A, Veroniki A-A, Rios P, Vafaei A, Zarin W, Giannatsi M, Sullivan S, Tricco AC, Chaimani A, et al. 2017. Bibliographic study showed improving statistical methodology of network meta-analyses published between 1999 and 2015. Journal of Clinical Epidemiology 82:20–28. doi: 10.1016/j.jclinepi.2016.11.002.

- Plummer M. 2002. Discussion of “Bayesian measures of model complexity and fit” by Spiegelhalter et al. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 64(4):620–621. doi: 10.1111/1467-9868.00353.

- Puhan MA, Schünemann HJ, Murad MH, Li T, Brignardello-Petersen R, Singh JA, Kessels AG, and Guyatt GH. 2014. A GRADE Working Group approach for rating the quality of treatment effect estimates from network meta-analysis. BMJ 349:g5630. doi: 10.1136/bmj.g5630.

- Riley RD, Jackson D, Salanti G, Burke DL, Price M, Kirkham J, and White IR. 2017. Multivariate and network meta-analysis of multiple outcomes and multiple treatments: rationale, concepts, and examples. BMJ 358:j3932. doi: 10.1136/bmj.j3932.

- Salanti G. 2012. Indirect and mixed-treatment comparison, network, or multiple-treatments meta-analysis: many names, many benefits, many concerns for the next generation evidence synthesis tool. Research Synthesis Methods 3(2):80–97. doi: 10.1002/jrsm.1037.

- Salanti G, Kavvoura FK, and Ioannidis JPA. 2008. Exploring the geometry of treatment networks. Annals of Internal Medicine 148(7):544–553. doi: 10.7326/0003-4819-148-7-200804010-00011.

- Song F, Xiong T, Parekh-Bhurke S, Loke YK, Sutton AJ, Eastwood AJ, Holland R, Chen Y-F, Glenny A-M, and Deeks JJ. 2011. Inconsistency between direct and indirect comparisons of competing interventions: meta-epidemiological study. BMJ 343:d4909. doi: 10.1136/bmj.d4909.

- Spiegelhalter DJ, Best NG, Carlin BP, and van der Linde A. 2002. Bayesian measures of model complexity and fit. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 64(4):583–639. doi: 10.1111/1467-9868.00353.

- Trinquart L, Attiche N, Bafeta A, Porcher R, and Ravaud P. 2016. Uncertainty in treatment rankings: reanalysis of network meta-analyses of randomized trials. Annals of Internal Medicine 164(10):666–673. doi: 10.7326/M15-2521.

- Tu Y-K. 2016. Node-splitting generalized linear mixed models for evaluation of inconsistency in network meta-analysis. Value in Health 19(8):957–963. doi: 10.1016/j.jval.2016.07.005.

- van Valkenhoef G, Tervonen T, de Brock B, and Hillege H. 2012. Algorithmic parameterization of mixed treatment comparisons. Statistics and Computing 22(5):1099–1111. doi: 10.1007/s11222-011-9281-9.

- Veroniki AA, Vasiliadis HS, Higgins JPT, and Salanti G. 2013. Evaluation of inconsistency in networks of interventions. International Journal of Epidemiology 42(1):332–345. doi: 10.1093/ije/dys222.
