Abstract

Stochastic reaction network models are often used to explain and predict the dynamics of gene regulation in single cells. These models usually involve several parameters, such as the kinetic rates of chemical reactions, that are not directly measurable and must be inferred from experimental data. Bayesian inference provides a rigorous probabilistic framework for identifying these parameters by finding a posterior parameter distribution that captures their uncertainty. Traditional computational methods for solving inference problems, such as Markov Chain Monte Carlo methods based on the classical Metropolis-Hastings algorithm, involve numerous serial evaluations of the likelihood function, which in turn requires expensive forward solutions of the chemical master equation (CME). We propose an alternative approach based on a multifidelity extension of the Sequential Tempered Markov Chain Monte Carlo (ST-MCMC) sampler. This algorithm is built upon Sequential Monte Carlo and solves the Bayesian inference problem by decomposing it into a sequence of efficiently solved subproblems that gradually increase both model fidelity and the influence of the observed data. We reformulate the finite state projection (FSP) algorithm, a well-known method for solving the CME, to produce a hierarchy of surrogate master equations to be used in this multifidelity scheme. To determine the appropriate fidelity, we introduce a novel information-theoretic criterion that seeks to extract the most information about the ultimate Bayesian posterior from each model in the hierarchy without inducing significant bias. This novel sampling scheme is tested with high performance computing resources using biologically relevant problems.

Keywords: Bayesian inference, Stochastic modeling, Systems Biology, UQ, MCMC, SMC

1. INTRODUCTION

A distinguishing feature of biology is the diversity manifested by living things across different scales, from the readily observed multitude of species to the differences between individuals of the same species. At the microscopic level, a population of cells with the same genetic code, growing under the same lab conditions, can still display phenotypic variability in gene products [1–5]. Phenotypic variability has been observed in an increasing volume of data obtained from single-cell, single-molecule measurements enabled by recent progress in chemical labeling and imaging techniques [6–8].

Much of the variability in gene expression is attributed to the stochasticity of vital cellular processes (e.g., transcription, translation) that are subject to the randomness of molecular interactions. Stochastic reaction networks (SRN) represent a class of models that have been widely used to capture temporal and spatial fluctuations in single-cell gene expression [9]. SRN models treat the copy numbers of biochemical species, i.e., the number of molecules of a given type within a cell, as states in a discrete-space, continuous-time Markov process, where chemical reactions are represented by transitions between states. Given an SRN model, the probabilities of gene expression states within a cell can be computed by solving the chemical master equation (CME). The CME is a dynamical system in an infinite-dimensional space that describes the evolution of the probability distribution over all states. The finite state projection (FSP) is a well-known approximation method for obtaining high-fidelity solutions of the CME [10]. This method reduces the intractable state space of the original SRN to a finite subset chosen based on a proven error bound, turning the infinite-dimensional CME into a finite system of linear differential equations.

The present work is concerned with the selection, parameter estimation, and uncertainty propagation of these reaction network models within the Bayesian framework. Bayesian methods are a powerful tool for system identification of SRN models because they provide rigorous uncertainty quantification by identifying a probability distribution over plausible model parameters instead of selecting a single model that may fit the data well [11–15]. This distribution over the models given the data is called the posterior distribution. Quantifying model parameter uncertainty is critical because it is difficult to model the full complexity of a biological system that may exhibit experimental context dependence [16]. Moreover, once model parameter uncertainty has been quantified, new experiments can be designed to provide additional information about the system [17–21].

In this paper, we focus on data obtained by the experimental technique of single-molecule fluorescence in situ hybridization (smFISH). These datasets consist of independent single-cell measurements, each of which measures the copy number of biochemical species at a single time point. The standard approach to sampling from the posterior distribution implied by these data is to use Markov Chain Monte Carlo (MCMC) algorithms such as the random walk Metropolis-Hastings MCMC sampler [11,22]. With high-fidelity CME solutions enabled by the FSP, one can compute the likelihood of observing these single-cell data and then perform Bayesian inference for model parameters. However, this approach suffers from two drawbacks. The first drawback is that MCMC is inherently serial, which prevents it from exploiting the massively parallel processing capability of modern high performance computing clusters. Consequently, if standard MCMC techniques are used, tens to hundreds of thousands of sequential model evaluations may be needed to adequately sample the posterior distribution. The second drawback is that the FSP solutions required for the likelihood function are expensive, often requiring several minutes per model evaluation for a moderately sized problem. In general, the number of differential equations that the FSP algorithm needs to solve grows exponentially with the number of species in the network, so the state space and transition matrix quickly become intractable. This has motivated Approximate Bayesian Computation (ABC) approaches that replace the computationally expensive single-cell likelihood function with less expensive model-data discrepancy functions [23–25]. Samples produced by ABC are, in general, not distributed according to the true posterior distribution, and a careful choice of summary statistics is critical for the performance and reliability of ABC samplers.
Another prominent direction for circumventing the cost of solving the CME is to use moment-based likelihood functions [26,27]. When the SRN consists only of linear first-order reactions, the equations describing the low-order moments (such as the mean and variance) can be solved exactly at very low computational cost, which is a large advantage over FSP-based approaches. However, for general reaction networks, only approximations to the moments based on moment closure techniques are available, and the appropriate choice of closure method is essential for reliable inference [27]. There has also been recent progress on non-Bayesian approaches to fitting SRN models to smFISH data, such as replacing the expensive CME-based likelihood with the Wasserstein distance, which can be much easier to estimate for certain networks [28].

In this work, we propose a different approach that uses a parallel and multifidelity MCMC computational framework to produce samples from the true posterior distribution. Many approaches to parallel MCMC have been proposed, based either on parallel proposals or on parallel Markov chains [29–32]. One popular family of parallel MCMC methods is based upon sequential Monte Carlo (SMC) samplers [31,33–35]. For this work, we replace the standard MCMC methods with Sequential Tempered Markov Chain Monte Carlo (ST-MCMC) [35], a massively parallel sampling scheme based on SMC. This method transports a population of model parameter samples through a series of intermediate annealing levels that reflect the gradual increase in the influence of the data likelihood. At each level, MCMC is used to explore the intermediate distribution and rebalance the distribution of the samples. Since this method is population based, these MCMC steps can be done in parallel. Further, this method can effectively adapt to the target posterior distribution to speed up sampling.

Similarly, approaches to multifidelity MCMC have been explored in the literature, such as multifidelity delayed acceptance schemes, Multilevel Markov Chain Monte Carlo, and multifidelity approaches to SMC [36–41]. Multifidelity delayed acceptance schemes have been applied to Bayesian inference for the CME before [22,42,43]. Within these methods, a fast surrogate of the expensive likelihood function is used to pre-screen proposed samples within MCMC before they are accepted or rejected based on the expensive CME likelihood. These methods still require many sequential full model evaluations in order to sample the posterior. Multilevel MCMC [40] uses a hierarchy of models, such as different discretization grids of a PDE, to design an estimator for a specific quantity of interest. It runs parallel Markov chains at different model fidelities to estimate the correction to the quantity-of-interest estimate incurred by refining the model fidelity. Multifidelity SMC methods like the Multilevel Sequential² Monte Carlo sampler [41] use an embarrassingly parallel approach and a hierarchy of multifidelity models, so only a few sequential full model evaluations may be needed. We take this approach to develop a multifidelity form of ST-MCMC for solving the CME. Within our method, instead of only considering a series of annealing levels, we also consider a hierarchy of model fidelities. Thus, the full inference problem is solved by steering the samples from a distribution that reflects a low fidelity model with little influence from the likelihood to a distribution that reflects the high fidelity model with the full influence of the likelihood. By performing the early updates using fast models, the sampler can quickly converge to the most important regions of parameter space, where a higher fidelity model can then be used to better assess which parts of this high-likelihood region are most probable.
The key challenge for applying multifidelity methods in the SMC context is deciding which annealing factor and model fidelity are appropriate at a given level. Latz et al. suggest an approach based on the effective sample size of the population [41]. We take a different approach by leveraging a limited number of high fidelity model solves to estimate the information gained about the ultimate posterior given a current model fidelity and annealing factor. This information-theoretic criterion can effectively identify when the lower fidelity model is overly biasing the solution and should be discarded in favor of a higher fidelity model.

We demonstrate the efficiency and accuracy of our novel scheme when solving parameter estimation, model selection, and uncertainty propagation for stochastic chemical kinetic models in the Bayesian framework. This new approach to multifidelity ST-MCMC, which uses fast surrogates from reduced order models based on a novel reformulation of the FSP, significantly reduces the number of expensive likelihood function evaluations required to sample the posterior. The example problems are based on models from the systems and synthetic biology literature. These include a three-dimensional repressilator gene circuit, a spatial bursting gene expression network, and a stochastic transcription network for the inflammation response gene IL1β [44]. The primary contributions of this paper are:

Development of multifidelity ST-MCMC for Bayesian inference of SRNs

Introduction of an information-theoretic criterion for assessing the appropriate model fidelity within multifidelity ST-MCMC

Description of a novel surrogate model of the CME to be used within multifidelity inference problems

This paper is organized as follows: Section 2 describes general background for stochastic reaction network modeling, finite state projection, Bayesian inference, and MCMC methods. Section 3 describes the Multifidelity Sequential Tempered MCMC algorithm and the information-theoretic criterion for adapting model fidelity. Section 4 describes novel surrogate models for the CME. Section 5 describes three experiments to identify model parameters in SRNs using Multifidelity ST-MCMC. Finally, Section 6 concludes.

2. BACKGROUND

2.1. Stochastic reaction networks for modeling gene expression

A reaction network consists of N different chemical species S1, …, SN that interact via the following M chemical reactions

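$$ \sum_{i=1}^{N} \nu^{-}_{ij}\, S_i \;\longrightarrow\; \sum_{i=1}^{N} \nu^{+}_{ij}\, S_i, \qquad j = 1, \dots, M. \tag{1} $$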

We are interested in keeping track of the integer vectors x ≡ (x1, …, xN)T, where xi is the population of the ith species. Assuming constant temperature and volume, the time-evolution of this system can be modeled by a continuous-time, discrete-space Markov process [9]. The jth reaction channel (j = 1, …, M) is associated with a stoichiometric vector $\nu_j \in \mathbb{Z}^N$ such that, if the system is in state x and reaction j occurs, the system transitions to state $x + \nu_j$. Given x(t) = x, the propensity αj(x; θ)dt determines the probability that reaction j occurs in the next infinitesimal time interval [t, t + dt), where θ is the vector of model parameters. In other words,

$$ \mathrm{Prob}\{x(t + dt) = x + \nu_j \mid x(t) = x\} = \alpha_j(x;\theta)\, dt + o(dt). $$

An important class of reaction networks consists of those that follow mass-action kinetics, whose propensity functions take the form

$$ \alpha_j(x;\theta) = c_j(\theta) \prod_{i=1}^{N} \binom{x_i}{\nu^{-}_{ij}}. \tag{2} $$

In this formulation, cj(θ) is the probability per unit time that a combination of specific reactant molecules can react via reaction j, and the remaining factor is the number of ways the existing molecules can be combined to form the left side of the chemical equation (1).
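As a small illustration, the propensity (2) can be evaluated directly. The sketch below assumes the binomial-coefficient form of mass-action kinetics written in (2); the function name `mass_action_propensity` and the dimerization example are hypothetical and only serve to show how the combinatorial factor behaves.

```python
from math import comb, prod

def mass_action_propensity(x, reactant_stoich, c_j):
    """Mass-action propensity alpha_j(x) = c_j * prod_i C(x_i, nu^-_ij), as in (2).

    x               -- copy numbers (x_1, ..., x_N) of the current state
    reactant_stoich -- reactant coefficients (nu^-_1j, ..., nu^-_Nj) of reaction j
    c_j             -- rate constant c_j(theta) of reaction j
    """
    # comb(x_i, nu) counts the ways to choose the reactant molecules of species i;
    # it returns 0 when too few molecules are present (x_i < nu), as required.
    return c_j * prod(comb(xi, nu) for xi, nu in zip(x, reactant_stoich))

# Dimerization 2 S1 -> S2 in state x = (5, 0) with c_j = 0.1:
# C(5, 2) * C(0, 0) = 10 distinct pairs of S1 molecules, so alpha_j = 0.1 * 10.
print(mass_action_propensity((5, 0), (2, 0), 0.1))
```

Using the binomial form means a reaction with too few reactant molecules automatically receives zero propensity, so no special-casing is needed at the boundary of the state space.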

The time-evolution of the probability distribution of this Markov process is the solution of the linear system of differential equations known as the chemical master equation (CME)

$$ \frac{d}{dt} p(t) = A(\theta)\, p(t), \qquad p(0) = p_0, \tag{3} $$

where p(t) is the time-dependent probability distribution over all states, with entries p(t, x) = Prob{x(t) = x | x(0)}. The initial distribution p0 is assumed to be given, and A(θ) is the infinitesimal generator of the Markov process, defined entrywise as

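$$ A(\theta)_{x,y} = \begin{cases} -\sum_{j=1}^{M} \alpha_j(x;\theta), & x = y, \\ \sum_{j \,:\, y + \nu_j = x} \alpha_j(y;\theta), & x \neq y. \end{cases} \tag{4} $$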

Here, we have made explicit the dependence of A on the model parameter vector θ, which we need to infer from experimental data.

2.2. The finite state projection

Typically, reaction networks model open biochemical systems, where the set of all possible molecular states is unbounded. This makes the CME an infinite-dimensional linear system of ODEs. The finite state projection (FSP) is a well-known strategy to systematically reduce this linear system into a finite surrogate model with a strict error bound.

The FSP can be thought of as a special class of projection-based model reduction applied to the CME. Specifically, let Ω be a finite subset of the CME state space. The projection of the CME operator A onto the subspace spanned by the point-mass measures {δx|x ∈ Ω} is given by

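$$ A_{\Omega}(\theta)_{x,y} = \begin{cases} A(\theta)_{x,y}, & x, y \in \Omega, \\ 0, & \text{otherwise}. \end{cases} \tag{5} $$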

We can then define a reduced model of the dynamical system (3) based on this projection as

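$$ \frac{d}{dt} p_{\Omega}(t) = A_{\Omega}(\theta)\, p_{\Omega}(t), \qquad p_{\Omega}(0) = p_0|_{\Omega}, \tag{6} $$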

Clearly, to solve (6) we only need to keep track of the equations corresponding to states in the finite set Ω, which is amenable to numerical treatment.

In contrast to generic projection methods, the gap between the reduced-order model and the true CME can be computed for the FSP. Indeed, Munsky and Khammash [45, Theorem 2] proved that the truncation error can be quantified in ℓ1 norm as

$$ \| p(t) - p_{\Omega}(t) \|_1 \le 1 - \sum_{x \in \Omega} p_{\Omega}(t, x). \tag{7} $$

Clearly, the right hand side can be readily computed from the solution of the reduced system (6). This precise error quantification yields an effective iterative method for solving the CME. Choosing an error tolerance ε > 0 and starting from an initial set Ω := Ω0, we solve system (6) and check that the right hand side of (7) is less than ε. If this fails, we add more states to Ω0 to obtain a strictly larger set Ω1 and repeat the procedure until we find an approximation that satisfies the error tolerance.
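The iterative procedure above can be sketched for a one-species birth-death process (∅ → S at rate k, S → ∅ at rate γx), assuming Ω = {0, …, n_max} and growing n_max by a fixed increment. This is an illustrative sketch, not the state-space expansion strategy used in this work; the helper names and the fixed growth rule are hypothetical. The columns of the truncated generator sum to zero except at the boundary state, so the probability that leaks out of Ω is exactly the quantity on the right hand side of (7).

```python
import numpy as np
from scipy.linalg import expm

def birth_death_generator(k, gamma, n_max):
    """Truncated generator A_Omega for 0 -> S (rate k), S -> 0 (rate gamma*x)
    on Omega = {0, ..., n_max}. Column y holds the transition rates out of state y."""
    n = n_max + 1
    A = np.zeros((n, n))
    for y in range(n):
        A[y, y] = -(k + gamma * y)     # total outflow, including births that leave Omega
        if y + 1 < n:
            A[y + 1, y] = k            # birth y -> y + 1 stays inside Omega
        if y > 0:
            A[y - 1, y] = gamma * y    # degradation y -> y - 1
    return A

def fsp_expand(k, gamma, x0, t, eps, n_start=5, growth=5):
    """Enlarge Omega until the FSP error 1 - sum_x p_Omega(t, x) is at most eps.
    Returns p_Omega(t) and the (n_max, error) history; assumes x0 <= n_start."""
    n_max, history = n_start, []
    while True:
        p0 = np.zeros(n_max + 1)
        p0[x0] = 1.0                   # initial point mass at state x0
        p = expm(birth_death_generator(k, gamma, n_max) * t) @ p0   # solves (6)
        err = 1.0 - p.sum()            # probability that has left Omega, cf. (7)
        history.append((n_max, err))
        if err <= eps:
            return p, history
        n_max += growth                # strictly larger set Omega_{i+1}

p, history = fsp_expand(k=10.0, gamma=1.0, x0=0, t=5.0, eps=1e-6)
for n_max, err in history:
    print(n_max, err)                  # errors shrink as the nested sets grow
```

Because each Ω in the loop contains the previous one, the printed errors also illustrate the monotone decrease of the truncation error for nested sets discussed below. A dense matrix exponential is only practical for small Ω; larger problems would call for sparse or Krylov-based solvers.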

As the sequence of subsets Ωi grows until it eventually covers the whole state space, we might expect that the finite-time solution of (6) will likewise converge to the true solution. This is indeed the case for virtually all models encountered in practice; the only exceptions are a few theoretical counterexamples in which the Markov chain is explosive [10]. Sufficient conditions for the convergence of the FSP can be checked based on the form of the propensity functions [46,47]. In practice, reaction networks tend to involve only reactions between two or fewer molecules, with propensity functions in mass-action form (2), and such networks are guaranteed to be approximable with the FSP [46].

For the rest of the paper, we only concern ourselves with non-explosive SRNs for which the FSP converges. Given such models, any exhaustive sequence of subsets {Ωj} suffices to guarantee that the FSP solution eventually satisfies any prespecified error tolerance. However, some choices are more efficient than others, and it is also advantageous to partition the time interval into smaller timesteps and use a smaller Ωj on each step [48]. We will return to these observations in Section 4.3.

A final point about the FSP is that, if the sequence of reduced sets Ωj is increasing, that is, Ωj ⊂ Ωj+1, j = 1, 2, …, the resulting truncation error has been shown to decrease monotonically [45, Theorem 1] (see also [49, Theorem 2.5]). This gives us a natural way to form a hierarchy of reduced models for the CME, to which we return in Section 4.1. With the computed distribution of the SRN in hand, we can now match it directly to experimental data using a straightforward likelihood function.

2.3. Bayesian inference of SRN models from discrete single-cell measurements

In this work, we focus on inferring the parameters of the reaction network from discrete, single-cell datasets [3,6–8,50] that consist of snapshots of many independent cells taken at discrete times t1, …, tT. The snapshot at time ti records gene expression in ni independent cells, and the vector cj,i, j = 1, …, ni, collects the molecular populations measured in cell j at time ti. Since smFISH experiments [6,50] measure a new batch of cells at every time point, there is no correlation between observations at different time points.

Assume that a model class of stochastic reaction networks, consisting of a fixed set of reactions with unknown reaction parameters θ, has been chosen to model the data. Let p(t, x | M(θ)) denote the entry of the CME solution corresponding to state x at time t for the SRN model M(θ). The log-likelihood of the dataset 𝒟 given M(θ) is

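$$ \log L\big(\mathcal{D} \mid M(\theta)\big) = \sum_{i=1}^{T} \sum_{j=1}^{n_i} \log p\big(t_i, c_{j,i} \mid M(\theta)\big). \tag{8} $$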

A common approach to fitting this model is to find model parameters θ that maximize the log-likelihood function. However, there are potentially many other model parameters that could fit the data approximately as well as the maximum likelihood model.
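For a single measured species, the log-likelihood (8) reduces to summing the log-probabilities of the observed counts. The sketch below assumes FSP solutions over Ω = {0, …, n_max} have already been computed at each measurement time; the function name and the `floor` argument are hypothetical, and the floor is a numerical safeguard for counts outside Ω, not part of (8).

```python
import numpy as np

def smfish_log_likelihood(fsp_solutions, data, floor=1e-300):
    """Log-likelihood (8): sum over times t_i and cells j of log p(t_i, c_{j,i}).

    fsp_solutions -- list of 1-D arrays; fsp_solutions[i][x] approximates p(t_i, x)
    data          -- list of integer arrays; data[i] holds the n_i cell counts at t_i
    floor         -- probability assigned to counts outside Omega or with p = 0
    """
    total = 0.0
    for p, counts in zip(fsp_solutions, data):
        p = np.asarray(p, dtype=float)
        counts = np.asarray(counts, dtype=int)
        probs = np.full(counts.shape, floor)          # default for counts outside Omega
        inside = counts < len(p)
        probs[inside] = np.maximum(p[counts[inside]], floor)
        total += np.log(probs).sum()                  # independent cells: add log-probs
    return total

p_t1 = np.array([0.5, 0.25, 0.25])                    # toy FSP distribution at one time
print(smfish_log_likelihood([p_t1], [[0, 0, 1]]))     # log(0.5 * 0.5 * 0.25)
```

Because cells are independent, each time point contributes a simple sum; in practice, grouping identical counts with a histogram avoids recomputing the same logarithm many times.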

In contrast, the Bayesian approach quantifies the range of parameter uncertainty. Bayesian inference is rooted in the Bayesian philosophy in which our uncertainty is modeled using probability distributions [51]. Inference begins with a prior distribution, p(θ), that captures our initial beliefs. After the data 𝒟 have been observed, the likelihood of the data given a model class M and associated parameters θ is found as $p(\mathcal{D} \mid \theta, M)$. Then, by applying Bayes' Theorem, we construct the posterior distribution on model parameters that reflects our updated beliefs:

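$$ p(\theta \mid \mathcal{D}, M) = \frac{p(\mathcal{D} \mid \theta, M)\, p(\theta)}{p(\mathcal{D} \mid M)}. \tag{9} $$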

Here, $p(\mathcal{D} \mid M)$ is a normalization constant known as the model evidence. Sampling from the posterior distribution on parameters allows us to quantify our uncertainty regarding the parameter fit and predictions. Therefore, the Bayesian approach allows us to assess our confidence in the inference results, understand the influence of uncertainty on predictions, and design experiments to reduce the parameter uncertainty. However, it can be computationally challenging.

The Bayesian framework also provides a criterion to select or weigh different model classes as data become available. Suppose, instead of a single model class, we are given K possible network structures that could potentially explain the observations. Let $\mathcal{M}_k$, k = 1, …, K, denote the k-th class, with parameters $\theta_k \in \Theta_k$, where the parameter domains Θk need not have the same dimensionality. Each model class is associated with a prior weight $P(\mathcal{M}_k)$ that represents the prior level of belief in that class. If 𝒟 denotes the dataset as before, we can compute the model evidence of $\mathcal{M}_k$ as

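$$ p(\mathcal{D} \mid \mathcal{M}_k) = \int_{\Theta_k} p(\mathcal{D} \mid \theta_k, \mathcal{M}_k)\, p(\theta_k \mid \mathcal{M}_k)\, d\theta_k. \tag{10} $$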

The posterior probability of each model class can then be computed by applying Bayes’ Theorem:

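$$ P(\mathcal{M}_k \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid \mathcal{M}_k)\, P(\mathcal{M}_k)}{\sum_{k'=1}^{K} p(\mathcal{D} \mid \mathcal{M}_{k'})\, P(\mathcal{M}_{k'})}. \tag{11} $$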

These probabilities reflect the posterior weighting of the different model classes and can be used to make model-averaged predictions. The drawback of Bayesian model selection in the context of stochastic gene expression is the computational cost of computing the model evidences. We will return to this issue in Section 3, where we show how the Multifidelity ST-MCMC framework provides an efficient way to estimate model evidence.
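Because log-evidences of different model classes can differ by hundreds of units, the posterior model probabilities (11) are best evaluated in log space. A minimal sketch, assuming log-evidence estimates are already available (the function name is hypothetical):

```python
import numpy as np

def model_posterior(log_evidences, prior_weights):
    """Posterior model probabilities (11) from log p(D | M_k) and prior weights P(M_k)."""
    log_post = np.asarray(log_evidences, float) + np.log(np.asarray(prior_weights, float))
    log_post -= log_post.max()     # log-sum-exp shift: avoids exp(-1000) underflowing to 0
    post = np.exp(log_post)
    return post / post.sum()

# Two equally likely classes whose log-evidences differ by 1 nat:
print(model_posterior([-1000.0, -1001.0], [0.5, 0.5]))   # approx. [0.731, 0.269]
```

Without the shift, both exponentials would underflow to zero and the normalization would divide zero by zero.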

2.4. Markov Chain Monte Carlo samplers

Markov Chain Monte Carlo algorithms are widely used for sampling the posterior distribution of a Bayesian inference problem. These methods design a Markov chain whose stationary distribution is the target posterior distribution, $p(\theta \mid \mathcal{D})$. Therefore, by simulating the evolution of samples θ according to the Markov chain, we asymptotically obtain correlated samples from the posterior distribution. A common MCMC method is the Metropolis-Hastings algorithm. The algorithm begins by initializing the parameter state to some θ0. Then, at step i + 1 of the evolution, a candidate sample θ′ is drawn according to a proposal distribution Q(θ′ | θi). This candidate is then accepted with probability α(θ′ | θi), and otherwise rejected, where

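$$ \alpha(\theta' \mid \theta_i) = \min\left(1,\; \frac{p(\mathcal{D} \mid \theta')\, p(\theta')\, Q(\theta_i \mid \theta')}{p(\mathcal{D} \mid \theta_i)\, p(\theta_i)\, Q(\theta' \mid \theta_i)}\right). \tag{12} $$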

This acceptance probability is independent of the normalization constant and therefore computationally tractable. If the candidate is accepted θi+1 = θ′; otherwise, θi+1 = θi. This algorithm iterates until a sufficient number of posterior samples have been generated to accurately represent the posterior.
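A random-walk Metropolis-Hastings sampler is sketched below, assuming a symmetric Gaussian proposal so that the Q terms cancel in (12); the toy standard-normal target and the function name are purely illustrative. Working with log densities avoids overflow, and the normalization constant never appears.

```python
import numpy as np

def random_walk_mh(log_post, theta0, n_steps, step, rng):
    """Random-walk Metropolis-Hastings targeting exp(log_post) (unnormalized).

    With a symmetric Gaussian proposal, Q(theta' | theta) = Q(theta | theta'), so the
    acceptance probability (12) reduces to min(1, posterior ratio).
    """
    theta = np.atleast_1d(np.asarray(theta0, float))
    lp = log_post(theta)
    chain = np.empty((n_steps, theta.size))
    n_accepted = 0
    for i in range(n_steps):
        proposal = theta + step * rng.standard_normal(theta.size)
        lp_prop = log_post(proposal)
        # Accept with probability min(1, exp(lp_prop - lp)), compared in log space.
        if np.log(rng.uniform()) < lp_prop - lp:
            theta, lp = proposal, lp_prop
            n_accepted += 1
        chain[i] = theta        # a rejected step repeats the current state
    return chain, n_accepted / n_steps

rng = np.random.default_rng(0)
log_target = lambda th: -0.5 * np.sum(th**2)          # toy target: standard normal
chain, acc_rate = random_walk_mh(log_target, [3.0], 20000, 1.0, rng)
print(chain[2000:].mean(), chain[2000:].std(), acc_rate)   # discard burn-in
```

Each iteration depends on the previous one, which is exactly the serial bottleneck discussed in the introduction: for the CME, every call to `log_post` would require an FSP solve.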

Effective sample size (ESS) provides a metric to judge whether sufficient samples have been generated. The ESS of an N-sample population θi, i = 1, …, N, is the number of independent samples, NESS, that would estimate a quantity of interest, $\mathbb{E}[h(\theta)]$, with the same variance as the estimate from the N samples. Therefore, when designing a sampler we want to maximize NESS to attain the highest possible sampling efficiency. For MCMC, this corresponds to minimizing sample correlation. However, even very effective samplers often need tens of thousands of sequential model evaluations to generate sufficient effective samples, making this form of MCMC challenging for computationally expensive models.

2.5. Sequential Monte Carlo samplers

To overcome many of the challenges associated with standard Metropolis-Hastings based MCMC, parallel methods like Sequential Monte Carlo (SMC) have been introduced to better leverage high performance computing resources. SMC methods for Bayesian inference transport a sample population, initially distributed so that it can approximate expectations with respect to the prior, to one that can approximate posterior expectations [31,33–35]. Typically, this means transforming a population of samples distributed according to the prior into a population of samples approximately distributed according to the posterior. For Sequential Tempered MCMC (ST-MCMC) [35], we break down the inference problem into a series of annealing levels i defined by an annealing factor βi ∈ [0, 1]. Each level defines an intermediate distribution, $\pi_{\beta_i}(\theta)$, which we use to generate samples and to compute expectations. These intermediate distributions take the form

$$ \pi_{\beta_i}(\theta) \propto p(\mathcal{D} \mid \theta)^{\beta_i}\, p(\theta). \tag{13} $$

This annealing approach is common to many SMC methods used for Bayesian inference and can be thought of as gradually integrating the influence of the data into the solution. Tempering provides several benefits, namely: 1) robust handling of potentially multimodal or unidentifiable posteriors, 2) smoother evolution of the parallel sample population, which avoids different rates of convergence to the posterior, 3) online adaptation of the MCMC sampler, and 4) estimation of the model evidence for model selection through thermodynamic integration.

To simplify the problem of transporting samples from the prior, π0(θ), to the posterior, π1(θ), we transport samples sequentially through each level in the sequence, i.e., from $\pi_{\beta_i}(\theta)$ to $\pi_{\beta_{i+1}}(\theta)$ with βi+1 > βi. Because we can control the size of the jump Δβ = βi+1 − βi, we can ensure that this change is not so drastic as to cause a poor approximation of the true distribution, i.e., too large a decrease in the ESS. Transporting samples is done in three steps:

Reweight the previous sample population, distributed according to $\pi_{\beta_i}(\theta)$, with unnormalized weights $w_k = p(\mathcal{D} \mid \theta_k)^{\Delta\beta}$ to reflect expectations with respect to the new distribution $\pi_{\beta_{i+1}}(\theta)$.

Resample the population according to the weights so that the samples now reflect $\pi_{\beta_{i+1}}(\theta)$.

Seed a Markov chain at each sample and then use MCMC to explore $\pi_{\beta_{i+1}}(\theta)$.
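The three steps can be sketched as one population update. This is a minimal sketch assuming multinomial resampling and a fixed-step random-walk Metropolis-Hastings kernel; ST-MCMC [35] adapts its proposal and chain lengths, which is omitted here, and all names (including the toy Gaussian prior and likelihood) are hypothetical.

```python
import numpy as np

def tempered_level_update(samples, log_lik, log_prior, beta, d_beta, n_mcmc, step, rng):
    """Move a population from pi_beta to pi_{beta + d_beta} (reweight, resample, MCMC).

    samples   -- (N, d) array approximately distributed as pi_beta
    log_lik   -- callable theta -> log p(D | theta)
    log_prior -- callable theta -> log p(theta)
    """
    n = len(samples)
    ll = np.array([log_lik(s) for s in samples])
    # 1) Reweight: w_k = p(D | theta_k)^d_beta, normalized in log space for stability.
    logw = d_beta * ll
    w = np.exp(logw - logw.max())
    w /= w.sum()
    # 2) Resample (multinomial) so that equally weighted samples reflect the new level.
    idx = rng.choice(n, size=n, p=w)
    samples, ll = samples[idx], ll[idx]
    lp = np.array([log_prior(s) for s in samples])
    beta = beta + d_beta
    # 3) Explore: n_mcmc random-walk MH steps per chain targeting p(D|theta)^beta p(theta).
    #    The N chains are independent, so this loop is the part that parallelizes.
    for _ in range(n_mcmc):
        prop = samples + step * rng.standard_normal(samples.shape)
        ll_prop = np.array([log_lik(s) for s in prop])
        lp_prop = np.array([log_prior(s) for s in prop])
        accept = np.log(rng.uniform(size=n)) < beta * (ll_prop - ll) + lp_prop - lp
        samples[accept], ll[accept], lp[accept] = prop[accept], ll_prop[accept], lp_prop[accept]
    return samples, beta

rng = np.random.default_rng(1)
log_prior = lambda th: -0.5 * np.sum(th**2) / 9.0             # toy prior N(0, 3^2)
log_lik = lambda th: -0.5 * np.sum((th - 2.0)**2) / 0.25      # toy likelihood, sd 0.5
samples, beta = 3.0 * rng.standard_normal((1000, 1)), 0.0
for d_beta in (0.5, 0.5):
    samples, beta = tempered_level_update(samples, log_lik, log_prior, beta, d_beta, 20, 0.5, rng)
print(beta, samples.mean())    # analytic posterior mean is 8 / (4 + 1/9), about 1.946
```

In this toy example the two annealing increments are fixed by hand; in ST-MCMC they are chosen adaptively, as described next.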

The MCMC step is essential to ensure that the sample population does not degenerate, since the reweighting and resampling steps reduce the ESS of the population. MCMC increases the ESS because it decorrelates the seeds and explores the target distribution, causing the samples to better reflect it. Typically, Δβ is chosen adaptively so as not to decrease the ESS too much during the update. This is achieved by finding a Δβ such that the coefficient of variation (COV) of the sample weights equals a target κ. The COV approximates the ESS through $N_{\mathrm{ESS}} \approx N / (1 + \mathrm{COV}^2)$. Therefore, we find a Δβ > 0 that solves the equation

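$$ \mathrm{COV}(w) = \frac{\sigma_w}{\mu_w} = \kappa, \qquad w_k = p(\mathcal{D} \mid \theta_k)^{\Delta\beta}. \tag{14} $$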

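Here, $\mu_w = \frac{1}{N}\sum_{k=1}^{N} w_k$ and $\sigma_w = \big(\frac{1}{N}\sum_{k=1}^{N} (w_k - \mu_w)^2\big)^{1/2}$ are the sample mean and standard deviation of the weights. Typically, we choose κ = 1, which corresponds to a target ESS of N/2. With this method for finding Δβ, we then move sequentially through all the adaptively tuned annealing levels until we reach the final posterior reflected by π1(θ). For more details about this algorithm, see [35].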
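Solving (14) for Δβ is a one-dimensional root-finding problem. The sketch below uses bisection, which is valid here because the COV of the weights p(𝒟 | θk)^Δβ is non-decreasing in Δβ; the function names and tolerance are hypothetical, and [35] may use a different root-finder.

```python
import numpy as np

def weight_cov(log_lik, d_beta):
    """COV of w_k = p(D | theta_k)^d_beta. The COV is scale-invariant, so the weights
    are shifted in log space before exponentiating to avoid overflow."""
    logw = d_beta * log_lik
    w = np.exp(logw - logw.max())
    return w.std() / w.mean()

def next_beta(log_lik, beta, kappa=1.0, tol=1e-10):
    """Bisection for d_beta with COV(w) = kappa, as in (14); ESS ~ N / (1 + COV^2)."""
    log_lik = np.asarray(log_lik, float)
    if weight_cov(log_lik, 1.0 - beta) <= kappa:
        return 1.0                          # the posterior can be reached directly
    lo, hi = 0.0, 1.0 - beta                # COV(lo) <= kappa < COV(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if weight_cov(log_lik, mid) > kappa:
            hi = mid
        else:
            lo = mid
    return beta + lo

rng = np.random.default_rng(2)
log_lik = -50.0 + 10.0 * rng.standard_normal(1000)      # toy log-likelihood values
beta_next = next_beta(log_lik, beta=0.0)
cov = weight_cov(log_lik, beta_next)
print(beta_next, cov, len(log_lik) / (1 + cov**2))      # ESS about N/2 when kappa = 1
```

Returning the lower bracket end guarantees the chosen step never exceeds the COV target, so the ESS drop stays at or below the intended amount.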

3. MULTIFIDELITY ST-MCMC

For expensive models, ST-MCMC and similar SMC-based methods may still be computationally prohibitive. One approach to overcome this computational burden is to utilize a multifidelity model hierarchy that can speed up sampling. The key idea is that, in the early levels of the ST-MCMC algorithm, a low-fidelity but computationally cheap model may be sufficiently informative to guide the samples towards the ultimate posterior distribution. This is because at early levels the annealing factor damps the contribution of the likelihood, so perturbations in the likelihood caused by the decrease in model fidelity are less important. Intuitively, a lower fidelity model may be useful when the bias it introduces in the likelihood function is less than the variance of the likelihood at the annealing level. We consider different strategies for rigorously defining this intuition in the rest of this section.

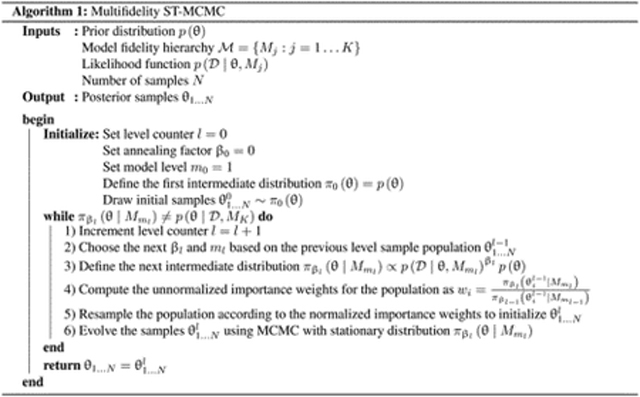

We extend ST-MCMC to multifidelity ST-MCMC by defining intermediate levels both in terms of their annealing factor β and the choice of model fidelity M. We consider a hierarchy of models M1, …, MK with increasing fidelity and computational cost. The resulting procedure is summarized in Algorithm 1. The key challenge is determining the best strategy for choosing βl and ml at Step 2, since the rest of the algorithm proceeds like standard ST-MCMC.

3.1. Tempering and bridging using an Effective Sample Size criterion

One approach to choosing the appropriate annealing factor and model fidelity is the combined likelihood tempering and model bridging scheme discussed in Latz et al. [41]. This scheme is based upon the ESS statistic discussed in Section 2.5. Within Multifidelity ST-MCMC, at every level l of Algorithm 1, we choose whether to temper by setting βl = βl−1 + Δβ or to bridge by increasing the model fidelity, ml = ml−1 + 1. This choice is made by measuring the ESS of the sample population with respect to the next model fidelity, computing the unnormalized weights as if we were to bridge:

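$$ w_k = \left(\frac{p(\mathcal{D} \mid \theta_k, M_{m_{l-1}+1})}{p(\mathcal{D} \mid \theta_k, M_{m_{l-1}})}\right)^{\beta_{l-1}}. \tag{15} $$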

We can then compute the coefficient of variation of the weights wk to determine whether it exceeds a target κ. If it does, we choose to bridge to the next model fidelity, because the sample population is beginning to degenerate and no longer has sufficient ESS to approximate the next intermediate posterior. If the COV is less than κ, we keep the current model and instead increase β. The next β is chosen using the same strategy as before, by solving Equation 14.
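The tempering-or-bridging decision can be sketched as follows, assuming the log-likelihoods of the current population are available under both the current model and the next model in the hierarchy; the function names and toy data are hypothetical. Note that evaluating the next model at every sample is part of the cost of this criterion.

```python
import numpy as np

def weights_cov(logw):
    """Coefficient of variation of weights given in log space (scale-invariant)."""
    w = np.exp(logw - np.max(logw))
    return w.std() / w.mean()

def temper_or_bridge(ll_current, ll_next, beta, kappa=1.0):
    """Return "bridge" if the bridging weights (15) have COV above kappa, else "temper".

    ll_current -- log p(D | theta_k, M_m) at the current samples
    ll_next    -- log p(D | theta_k, M_{m+1}) at the same samples
    beta       -- current annealing factor
    """
    logw = beta * (np.asarray(ll_next, float) - np.asarray(ll_current, float))
    return "bridge" if weights_cov(logw) > kappa else "temper"

rng = np.random.default_rng(3)
ll_lo = -100.0 + rng.standard_normal(500)
print(temper_or_bridge(ll_lo, ll_lo + 0.01 * rng.standard_normal(500), beta=0.5))  # models agree
print(temper_or_bridge(ll_lo, ll_lo + 5.0 * rng.standard_normal(500), beta=0.5))   # models disagree
```

If the models agree closely, the bridging weights are nearly uniform and the sampler keeps tempering with the cheaper model; a large discrepancy makes the weights degenerate and triggers a bridge.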

3.2. Information-theoretic criterion for model fidelity adaptation

We introduce a new criterion for model fidelity selection based on information theory. This criterion is motivated by the fact that the ESS-based strategy described above decides whether to change fidelity based only on the next model in the hierarchy. This means the sampler may continue to use a low fidelity model because it still meets the ESS criterion with respect to the next model, even when it is drifting away from the high fidelity posterior. Instead, we introduce a method that uses a limited number of full fidelity model evaluations to better decide when to bridge model fidelity. Depending on the computational cost of the full fidelity simulations, the improved bridging strategy and robustness of this method may outweigh the cost of these full fidelity solutions.

Within multifidelity ST-MCMC, suppose the algorithm is at annealing level l with annealing factor βl ∈ [0, 1] and has been sampling the intermediate posterior $\pi_{\beta_l, m_l}(\theta) \propto p(\mathcal{D} \mid \theta, M_{m_l})^{\beta_l}\, p(\theta)$ defined by a model $M_{m_l}$ in a model hierarchy $M_1, \dots, M_K$. We would like to know whether $M_{m_l}$ still provides meaningful information about the ultimate posterior once we move to level l + 1 with annealing factor βl+1. Here we assume the ultimate posterior is $\pi_{1,K}(\theta) \propto p(\mathcal{D} \mid \theta, M_K)\, p(\theta)$, where MK is the highest fidelity model. Therefore, unlike the previous ESS-based method, we begin by proposing a tempering step under the assumption that the current model fidelity is valid. We find the proposed βl+1 by solving Equation 14.

If $M_{m_l}$ no longer provides meaningful information at the next level, we use the next model in the hierarchy, $M_{m_l+1}$. This criterion can be formulated using a generalization of information theory [52], where the information gained about the full posterior, $\pi_{1,K}$, by moving from level l to l + 1 with model $M_{m_l}$ is:

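$$ \Delta I_{l \to l+1} = \int \pi_{1,K}(\theta) \log \frac{\pi_{\beta_{l+1}, m_l}(\theta)}{\pi_{\beta_l, m_l}(\theta)}\, d\theta = D_{\mathrm{KL}}\big(\pi_{1,K} \,\|\, \pi_{\beta_l, m_l}\big) - D_{\mathrm{KL}}\big(\pi_{1,K} \,\|\, \pi_{\beta_{l+1}, m_l}\big), \qquad \pi_{\beta, m}(\theta) \propto p(\mathcal{D} \mid \theta, M_m)^{\beta}\, p(\theta). \tag{16} $$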

If this quantity is positive, then the intermediate posterior defined by βl+1 and ml is closer to the ultimate posterior than the previous level, so we choose ml+1 = ml. However, if it is negative, this update is driving the distribution away from the ultimate posterior, so we use a higher fidelity model for the next update, i.e., ml+1 = ml + 1.

If we choose to update the model fidelity, we consider two strategies for choosing βl+1 for the next level. The first strategy, in keeping with the ESS-based tempering and bridging framework above, is to set βl+1 = βl. The second strategy is to tune βl+1 to attain an ESS target. The first strategy is often more computationally efficient, but may be less robust if changing model fidelity introduces significant variations in the likelihood. To tune βl+1, we first define the importance weight for transitioning from a level defined by βl and ml to a level defined by βl+1 and ml+1 = ml + 1 as

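$$ w_k = \frac{p(\mathcal{D} \mid \theta_k, M_{m_l+1})^{\beta_{l+1}}}{p(\mathcal{D} \mid \theta_k, M_{m_l})^{\beta_l}}. \tag{17} $$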

Using the same approach as before, we can then tune βl+1 to meet an ESS target based on the COV of the weights. However, unlike in the pure tempering case, this target might not be achievable. If Equation (14), with the weights of Equation (17), has a solution, we choose the largest βl+1 such that the COV target is met. If it does not have a solution, we choose the βl+1 that minimizes the COV and thus maximizes the ESS.
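Tuning βl+1 for a bridging step can be sketched with a simple grid search over [βl, 1] using the weights (17). The grid is an assumption of this sketch, chosen for clarity and because it handles the case where the COV target is unattainable without special logic; it is not necessarily the root-finder used in this work, and the names are hypothetical.

```python
import numpy as np

def bridge_beta(ll_current, ll_next, beta_l, kappa=1.0, n_grid=1001):
    """Pick beta_{l+1} in [beta_l, 1] for a bridge from M_m to M_{m+1}.

    Weights (17): log w_k = beta_{l+1} * ll_next_k - beta_l * ll_current_k.
    Returns the largest grid value with COV <= kappa; if none exists, returns
    the grid value with the smallest COV (largest approximate ESS).
    """
    ll_current = np.asarray(ll_current, float)
    ll_next = np.asarray(ll_next, float)
    grid = np.linspace(beta_l, 1.0, n_grid)
    covs = np.empty(n_grid)
    for i, b in enumerate(grid):
        logw = b * ll_next - beta_l * ll_current
        w = np.exp(logw - logw.max())            # shift for numerical stability
        covs[i] = w.std() / w.mean()
    feasible = np.flatnonzero(covs <= kappa)
    return grid[feasible[-1]] if feasible.size else grid[np.argmin(covs)]

rng = np.random.default_rng(4)
ll = 0.1 * rng.standard_normal(500)
print(bridge_beta(ll, ll, beta_l=0.2))           # target met all the way to 1.0
print(bridge_beta(np.zeros(500), 4.0 * rng.standard_normal(500), beta_l=0.5))  # unattainable
```

In the second call the two models disagree so strongly that even βl+1 = βl violates the target, so the sketch falls back to the COV-minimizing value.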

3.3. Computing the information-theoretic criterion

Since computing the information in Equation 16 requires integrating over the ultimate posterior, it can be challenging. However, this computation can be approximated using the samples from ST-MCMC. The first step is to recognize the connection between computing this criterion and estimating the model evidence:

| (18) |

Here, the model evidence, i.e., the normalization constant, for the likelihood defined by model M with annealing factor β is:

| (19) |

Using this definition, the ratio of the evidences can be expressed as an expectation:

| (20) |

where the subscript on the expectation denotes the distribution over which it is taken. Therefore,

| (21) |

Since we cannot yet sample from the full posterior, we use importance sampling to express this integral in terms of the level-l distribution, from which we already have samples:

| (22) |

This integral only needs to be known up to a positive constant of proportionality, since we only need to assess whether it is positive. We can then express it in terms of expectations as:

| (23) |

We can now estimate whether the information gain is positive or negative to determine whether information is gained or lost by the next update. To approximate these expectations, we use the N ST-MCMC samples at level l, which are approximately distributed according to the level-l intermediate posterior, together with evaluations of the full-fidelity model likelihood at these points:

| (24) |

| (25) |

| (26) |
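The estimator can be sketched as follows under one reading of Equations (16) to (26). We assume the information gain is the change in Kullback-Leibler divergence from the full posterior, ΔI = ∫ p(θ | D, M_K) log[p_{l+1}(θ) / p_l(θ)] dθ, so that ΔI > 0 means the level-(l+1) distribution is closer to the full posterior. With self-normalized importance weights v_i ∝ p(D | θ_i, M_K) / p(D | θ_i, M_{ml})^{βl}, this gives ΔI ≈ (βl+1 − βl) Σ_i v_i log p(D | θ_i, M_{ml}) − log[(1/N) Σ_i p(D | θ_i, M_{ml})^{βl+1 − βl}]. The notation D, the function names, and this exact form are assumptions; the equations in the paper may differ in detail.

```python
import numpy as np


def log_mean_exp(x):
    """Numerically stable log(mean(exp(x)))."""
    m = np.max(x)
    return m + np.log(np.mean(np.exp(x - m)))


def information_gain(loglik_model, loglik_full, beta_l, beta_next):
    """Estimate the information gained about the full posterior by tempering
    from beta_l to beta_next with the current (lower-fidelity) model.

    loglik_model[i] : log p(D | theta_i, M_{m_l}) at the level-l samples
    loglik_full[i]  : log p(D | theta_i, M_K)     at the same samples
    """
    dbeta = beta_next - beta_l
    # Self-normalized weights reweighting level-l samples toward the full posterior.
    log_v = loglik_full - beta_l * loglik_model
    v = np.exp(log_v - np.max(log_v))
    v /= v.sum()
    # Full-posterior expectation of the unnormalized log density ratio.
    term_full = dbeta * np.sum(v * loglik_model)
    # Log evidence ratio between the two levels, estimated from level-l samples.
    log_evidence_ratio = log_mean_exp(dbeta * loglik_model)
    return term_full - log_evidence_ratio


def next_model_index(m_l, delta_info):
    """Keep the current model while information is gained; otherwise bridge."""
    return m_l if delta_info > 0 else m_l + 1
```

Because the weights are self-normalized, the unknown normalization constants of the level-l distribution and of the full posterior cancel, in line with the remark that the integral is needed only up to a positive constant.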

3.4. Multifidelity ST-MCMC and Bayesian model selection

SMC and ST-MCMC methods not only enable robust solutions of Bayesian inference problems for parameter calibration, but also enable Bayesian model selection by providing asymptotically unbiased estimates of the model evidence. Model evidence estimates are generally highly computationally expensive since they require estimating the normalization constant,

| (27) |

which consists of marginalizing the likelihood over the prior distribution. If the high-probability region of the prior differs significantly from the most likely parameters according to the likelihood, this integral is difficult to estimate using Monte Carlo samples from the prior. Instead, SMC-type methods break this estimate down into a series of Monte Carlo approximations over the intermediate distribution levels discussed previously. As such, a hierarchy of multifidelity models can also be used to accelerate this estimate within the Multifidelity ST-MCMC framework. Using the methods described in [31,53], an SMC-based sampler such as Multifidelity ST-MCMC can estimate the model evidence of the highest-fidelity model, MK, by estimating the product:

| (28) |

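where each factor cl is the ratio of the normalization constants of consecutive intermediate distributions, and L is the final level of ST-MCMC. The ratio cl can be written as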

| (29) |

where the weights in the sum are the unnormalized resampling weights at level l for the level-l sample population. Therefore, using the weights already computed as part of Multifidelity ST-MCMC, we obtain an estimate of the model evidence. The error in this estimate is controlled by the ESS of the Monte Carlo samples used to estimate cl and by the choice of annealing factors βl. For a comprehensive analysis of the statistical properties of the estimator, see [31,53].
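A minimal sketch of the evidence estimator follows, assuming each cl is estimated by the sample mean of the unnormalized level-l weights (for Multifidelity ST-MCMC these weights would be p(D | θ, M_{ml+1})^{βl+1} / p(D | θ, M_{ml})^{βl}). To check it, the demo uses a conjugate Gaussian toy problem, not taken from the text, in which each tempered posterior can be sampled exactly in place of the MCMC step and the evidence is known in closed form. All names are hypothetical.

```python
import numpy as np


def log_mean_exp(x):
    """Numerically stable log(mean(exp(x)))."""
    m = np.max(x)
    return m + np.log(np.mean(np.exp(x - m)))


def log_evidence(log_weights_per_level):
    """log Z = sum over levels of log c_l, each c_l estimated by the mean of the
    unnormalized weights at that level (weights supplied as logs)."""
    return sum(log_mean_exp(np.asarray(lw)) for lw in log_weights_per_level)


def gaussian_demo(y=1.5, sigma=0.5, n_levels=10, n=20_000, seed=0):
    """Toy check: prior N(0, 1), likelihood N(y | theta, sigma^2)."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(0.0, 1.0, n_levels + 1)
    log_w = []
    for b, b_next in zip(betas[:-1], betas[1:]):
        prec = 1.0 + b / sigma**2                          # tempered posterior precision
        mean = (b * y / sigma**2) / prec
        theta = rng.normal(mean, 1.0 / np.sqrt(prec), n)   # exact draws replace MCMC
        loglik = -0.5 * np.log(2 * np.pi * sigma**2) - (y - theta) ** 2 / (2 * sigma**2)
        log_w.append((b_next - b) * loglik)                # log of L^(beta_{l+1} - beta_l)
    exact = -0.5 * np.log(2 * np.pi * (1 + sigma**2)) - y**2 / (2 * (1 + sigma**2))
    return log_evidence(log_w), exact
```

The product of the level ratios telescopes to the evidence at β = 1 because the prior (β = 0) is normalized; `gaussian_demo()` returns the estimate together with the closed-form log evidence for comparison.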

4. MULTIFIDELITY REDUCED MODELS OF THE CHEMICAL MASTER EQUATION

The FSP algorithm introduced in section 2 is commonly used to compute the likelihood of observed data measurements. When used within MCMC sampling, the FSP is usually implemented in one of the following two ways.

The first is to fix a single large subset of states for all parameter samples [11]. Since the CME solution changes significantly as MCMC explores the parameter space, it is very difficult to specify a finite state set that accurately captures a significant portion of the probability mass for all times and all parameters, and one can end up choosing a static FSP that is either inaccurate or inefficient. This scenario is similar to using a static discretization (e.g., a finite element mesh) in the simulation of parametric partial differential equation models, where the manually chosen grid size may turn out to be too coarse for some parameter regimes and excessive for others.

This drawback motivates the second approach, which uses adaptive CME solvers instead. There have been many adaptive formulations of the FSP [48,54–57], in which an approximation to the full-fidelity CME is sought within a user-specified error tolerance by expanding the state set iteratively. In some regions of the parameter space, the adaptive state set may have to be expanded to an enormous size to approximate the CME solution accurately. Yet most of these parameter combinations fit the data poorly, so the large computational effort spent on their forward solutions provides little useful information about the posterior. However, since reaction networks usually comprise nonlinear and unpredictable interactions, it is difficult to know a priori which parameter values would give rise to such difficult (but uninformative) forward solutions of the full-fidelity CME. Simple techniques that regularize the cost of the forward solutions by restricting either the computational time or the number of time steps risk mistakenly discarding genuinely informative parameter candidates whose evaluation just happens to require a high computational cost.

The framework of the Multifidelity ST-MCMC sampler allows a compromise. In particular, we introduce a series of surrogate CMEs whose solution complexity is uniformly bounded across all parameters, and we apply an adaptive FSP method with a strict error tolerance only to these surrogate master equations. In other words, each surrogate CME can be solved accurately by the FSP at bounded cost; the coarser but cheaper surrogate CMEs guide the sampling process at the early annealing levels, while the more expensive solutions of the high-fidelity CME are required only at the last annealing steps. This allows us to avoid overcommitting to parameters with low posterior probability during the early annealing levels, while still guaranteeing accurate computation of the likelihood at later sampling stages.

4.1. Implicitly defined finite state projection for constructing surrogate CME models

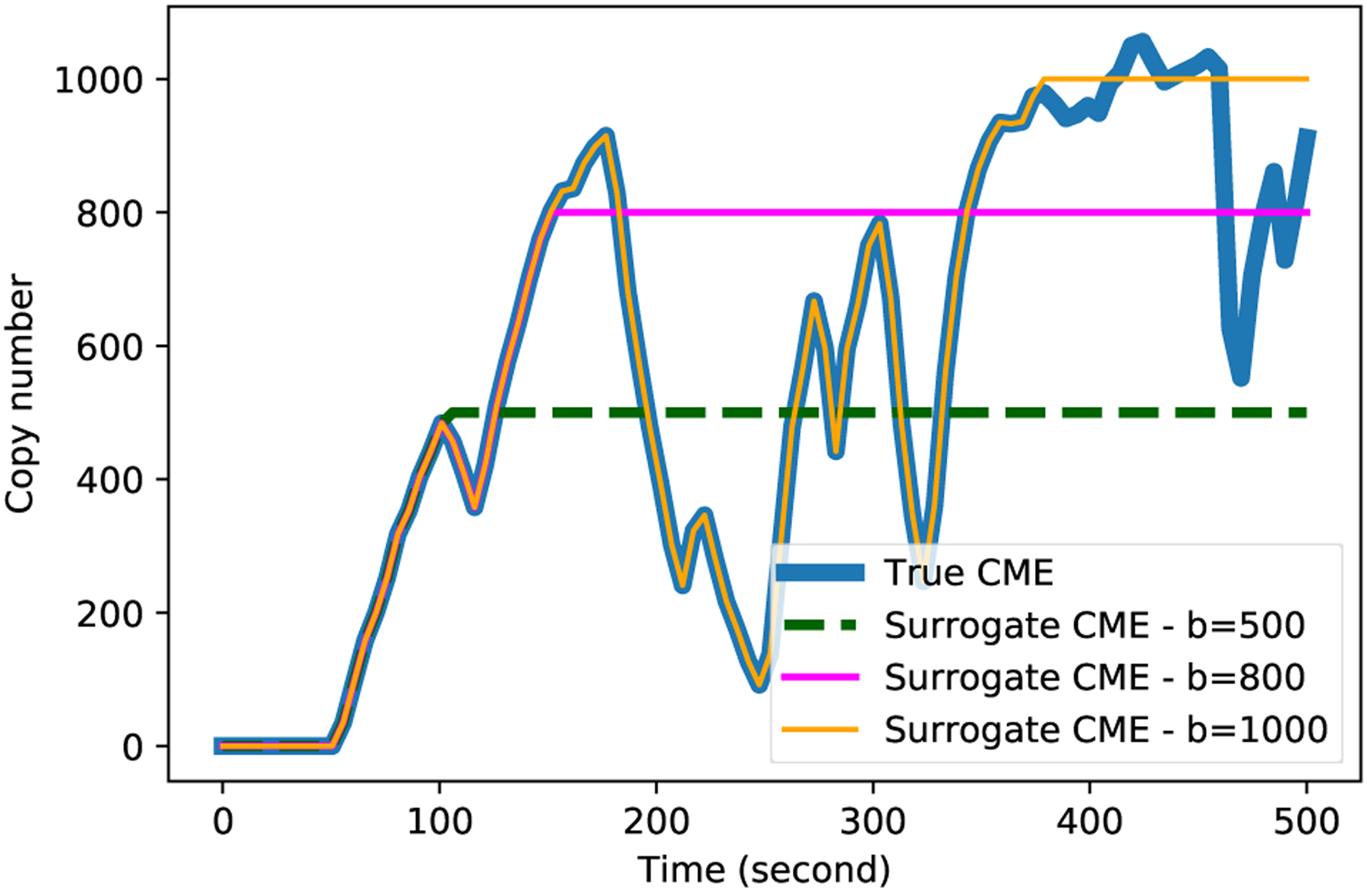

Surrogate models can be derived by adding restrictive assumptions to the physics of the original model. In particular, consider hypothetical surrogate cells that behave like the original cells modeled by the full CME, except that all cellular processes ‘freeze’ when molecular copy numbers reach a certain set of thresholds. As these thresholds increase, the surrogate cells behave more freely and more like the original cells, and the master equation describing their behavior becomes closer to the original CME, as illustrated in Fig. 1.

FIG. 1:

Illustrative realizations of the full and surrogate CMEs for a simple system with mass-action propensity. Given the same random seed, the simulated trajectory of the surrogate CME will be identical to that of the true CME until the state reaches a threshold, b, where the surrogate trajectory freezes. Increasing the threshold reduces the chance that the surrogate trajectories hit the bounds and consequently more realizations of the true CME are captured by the surrogate model.

Let b1, …, bN be bounds on the copy number of species 1 through N. We define an approximate SRN whose propensities are surrogates of the original SRN propensities and are given by

| (30) |

where [E] takes the value 1 if expression E is true and 0 otherwise. Since there are no further transitions once the process enters a state that exceeds the bounds, the state space of the surrogate chemical master equation is effectively reduced to the hyper-rectangle H(b). Thus, the infinite-dimensional system of differential equations (3) is replaced by the finite-dimensional surrogate dynamical system

| (31) |

where the truncated infinitesimal generator is defined similarly to eq. (4), but with the exact propensities replaced by the surrogate propensities given in eq. (30).

We note that eq. (31) is equivalent to eq. (6) with Ω = H(b). Thus, our surrogate propensities implicitly define a finite state projection of the original CME. We also note that the surrogate CMEs need not be constrained to a hyper-rectangle as considered here (see, e.g., [56,57] for examples of non-rectangular FSP). It may be beneficial to derive a sequence of transformations of the original propensities, chosen so that they alleviate the computational burden of solving the original model by, e.g., making the lower-fidelity dynamical system less stiff than the high-fidelity one. We leave this more general strategy to future work.

For the present choice of hyper-rectangular state spaces, we recall the important result mentioned in section 2.2 that, as the entries of b increase monotonically, the state space H(b) includes more states and the truncation error, measured as the ℓ1-distance between the surrogate solution and the true CME solution p(t), decreases monotonically. This provides a straightforward and natural way to form a hierarchy of surrogate models within the Multifidelity ST-MCMC framework.
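To make the monotone truncation error concrete, the sketch below builds the truncated generator for a one-species birth-death network (∅ → X at rate k, X → ∅ at rate γx), an illustrative model not taken from the text, with H(b) = {0, …, b}. It assumes, as in the classic FSP, that probability flowing from x = b to x = b + 1 simply leaves H(b), and it measures the ℓ1 error against the exact Poisson solution for a start at x = 0. The function names are hypothetical.

```python
import numpy as np
from scipy.linalg import expm
from scipy.stats import poisson


def fsp_birth_death(k, gamma, b, t):
    """Truncated (FSP) solution on H(b) = {0, ..., b} at time t, from x = 0."""
    n = b + 1
    A = np.zeros((n, n))          # dp/dt = A p; column x holds rates out of x
    for x in range(n):
        birth, death = k, gamma * x
        A[x, x] = -(birth + death)
        if x + 1 < n:
            A[x + 1, x] = birth   # at x = b the birth leaves H(b): diagonal only
        if x > 0:
            A[x - 1, x] = death
    p0 = np.zeros(n)
    p0[0] = 1.0
    return expm(A * t) @ p0


def l1_error(k, gamma, b, t):
    """l1 distance from the exact Poisson solution with mean (k/gamma)(1 - exp(-gamma t))."""
    lam = k / gamma * (1.0 - np.exp(-gamma * t))
    p = fsp_birth_death(k, gamma, b, t)
    inside = np.abs(poisson.pmf(np.arange(b + 1), lam) - p).sum()
    return inside + poisson.sf(b, lam)   # plus the true mass outside H(b)


errors = [l1_error(10.0, 1.0, b, 2.0) for b in (5, 10, 15, 20, 25)]
```

Because the FSP vector is an entrywise lower bound on the true solution, the ℓ1 error here equals the probability mass missing from H(b), so the entries of `errors` shrink as b grows.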

4.2. Using the hierarchy of surrogate CMEs within the ST-MCMC framework

With the surrogate CME models formulated, a strict hierarchy of surrogate models can be defined by a sequence of bounding vectors b(1) ≤ b(2) ≤ … ≤ b(K), where “≤” applies element-wise. The corresponding surrogate models Ml := M(b(l)) are then defined as in eq. (31). As mentioned earlier, the error in the surrogate CMEs decreases monotonically as the bounds increase. Therefore, {Ml} forms a hierarchy in which each level attains more fidelity than its predecessor.

At the l-th level, the log-likelihood function in eq. (8) is approximated by [58]

| (32) |

In the surrogate log-likelihood, the data are projected onto the finite state space H(b(l)), and the probabilities of the data at the different time points are computed from the surrogate Markov model Ml. As l increases, the surrogate log-likelihood becomes a more accurate approximation of the true log-likelihood. In the ideal situation where the hierarchy is allowed to have infinite depth, these surrogates are guaranteed to converge pointwise to the true log-likelihood, along a subsequence that approaches it from below. This is shown formally in the following proposition.

Proposition 4.1.

Let the sequence of bounds {b(l)}, l = 1, 2, …, be chosen such that b(l) ≤ b(l+1) elementwise. Assume that the continuous-time Markov chain underlying the SRN is non-explosive for all parameters and that the initial distribution of the CME (3) has finite support. For each fixed value of the parameter θ, the following hold:

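(i) The surrogate log-likelihood converges to the true log-likelihood as l → ∞.

(ii) There exists a subsequence li along which the surrogate log-likelihoods increase monotonically, so that they converge to the true log-likelihood from below.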

Here, the log-likelihood function is defined as in (8) and the surrogate is defined as in (32).

Proof.

Without loss of generality, we assume that the initial distribution is concentrated at a single state x0. Let R be the number of reactions that occur during the finite time interval [0, tT] given the starting state x0. If there exists ε > 0 for which P(R > Rε) ≥ ε for every choice of Rε, then P(R = ∞) ≥ ε, violating the assumption of non-explosion. Thus, for every ε > 0, there exists Rε such that P(R > Rε) < ε. Furthermore, we can find lε such that, for all l ≥ lε, H(b(l)) contains all states that are reachable from x0 via Rε reactions or fewer. Thus, for l ≥ lε, the probability that a sample path of the surrogate CME within [0, tT] ever exceeds H(b(l)) is less than ε, and the corresponding solution of the surrogate CME is guaranteed to be less than ε away (in one-norm) from the true CME solution. This proves (i).

We have min(cj,i, b(l)) = cj,i for sufficiently large l. Entry-wise, the FSP approximations increase monotonically [10, Theorem 2.2], so p(ti, cj,i|Ml(θ)) increases monotonically. We can then choose the subsequence {li} from {l} by simply dropping the leading elements until b(l) no longer appears in the min(·, ·) function in eq. (32). This proves (ii).□

In summary, the FSP scheme allows us to define a hierarchy of surrogate master equations that approach the true CME as the surrogate state space enlarges. From these, we can define a sequence of surrogate log-likelihood functions that converge to the true log-likelihood from below. These surrogates can be used within the Multifidelity ST-MCMC framework introduced in section 3. Before we do so, however, we must first ensure that an accurate solution to the system (31) can be computed efficiently.
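The data projection in the surrogate log-likelihood (32) and the behavior described in Proposition 4.1 can be illustrated on a toy model. This is a sketch under assumptions: it takes (32) to be the sum of log p(ti, min(cj,i, b) | M(b)) over measurement times ti and cells j, uses a one-species birth-death network (∅ → X at rate k, X → ∅ at rate γx) with H(b) = {0, …, b}, and uses made-up counts; the function names are hypothetical. Once b exceeds the largest count, the surrogate values in `vals` do not decrease with b and stay below the exact log-likelihood `ref`.

```python
import numpy as np
from scipy.linalg import expm
from scipy.stats import poisson


def fsp_vectors(k, gamma, b, times):
    """FSP distributions on H(b) = {0, ..., b} at each time, starting from x = 0."""
    n = b + 1
    x = np.arange(n)
    A = np.diag(-(k + gamma * x))              # total outflow from each state
    A = A + np.diag(np.full(n - 1, k), -1)     # birth x -> x + 1 within H(b)
    A = A + np.diag(gamma * x[1:], 1)          # death x -> x - 1
    p0 = np.zeros(n)
    p0[0] = 1.0
    return [expm(A * t) @ p0 for t in times]


def surrogate_loglik(k, gamma, b, times, counts):
    """Sum over times t_i and cells j of log p(t_i, min(c_ji, b) | M(b))."""
    total = 0.0
    for p, c in zip(fsp_vectors(k, gamma, b, times), counts):
        total += np.sum(np.log(p[np.minimum(c, b)]))  # project data onto H(b)
    return total


def exact_loglik(k, gamma, times, counts):
    """Exact log-likelihood: X(t) ~ Poisson((k/gamma)(1 - exp(-gamma t)))."""
    return sum(np.sum(poisson.logpmf(c, k / gamma * (1.0 - np.exp(-gamma * t))))
               for t, c in zip(times, counts))


times = [1.0, 2.0]
counts = [np.array([3, 6, 9, 5]), np.array([7, 12, 9, 15])]   # made-up counts
vals = [surrogate_loglik(10.0, 1.0, b, times, counts) for b in (15, 20, 25, 30, 40)]
ref = exact_loglik(10.0, 1.0, times, counts)
```

For bounds below the largest count, the clipped data can make the surrogate value exceed the true log-likelihood, which is why Proposition 4.1 (ii) is stated for a subsequence.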

4.3. Fast and accurate solution of the surrogate master equation

Although the surrogate master equation (31) is a significant reduction from the infinite-dimensional CME, the number of states in the truncated state space H(b) still grows as O(b1 · … · bN), and the surrogate CME quickly becomes expensive to solve as the entries of b increase. In practice, however, the probability mass of the surrogate solution vector tends to concentrate on a much smaller subset of states. It is therefore advantageous to approximate the surrogate solution with a more compactly supported distribution. More precisely, given an error tolerance ε > 0, we can use a distribution supported on Ω ⊂ H that is within ε of the surrogate solution in the ℓ1 norm. Here, the FSP error bound (7) plays a critical role in choosing an appropriate support set Ω. We note that this error bound was recently used by Fox et al. [58] to compute rigorous lower and upper bounds on the true log-likelihood function (8), which allow certain models to be compared even with a low-fidelity FSP solution. We do not pursue this direction in the present work.

To efficiently compute the solution of the surrogate CMEs using the principles just mentioned, we employ a new FSP implementation recently developed by Vo and Munsky [59]. This solver divides the time interval of interest [0, tf] into subintervals Ij := [tj, tj+1), j = 0, …, nstep − 1 with 0 := t0 < t1 < … < tnstep := tf. On each time subinterval Ij, the dense tensor that is the solution of the surrogate CME (31) is approximated by a sparse tensor supported on Ωj ⊂ H, obtained from solving

| (33) |

where

In solving (33), we only need to track the equations corresponding to states in Ωj, which reduces the computational cost significantly.

From the FSP error bound (7), we derive an error-control criterion of the form

| (34) |

If at some t ∈ [tj, tj+1) the inequality is not satisfied, more states are added to Ωj and the integration restarts from tj, until the criterion is satisfied over the whole subinterval. The time steps tj are determined by the ODE integrator used to solve eq. (33). The advantage of such an adaptive FSP approach over the classic FSP as formulated in [10] is that we need not start with the full state space at the beginning of the integration. Rather, a small projection can be used to advance the solution over the early time points, and more states are included at later time points only when needed, as dictated by the time-dependent FSP error bound (34).
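The error-controlled stepping can be sketched for a one-species birth-death toy model (an illustrative assumption), where Ωj = {x : x ≤ c} and expanding Ωj means enlarging c. The sketch assumes that criterion (34) requires the probability lost from Ωj over a subinterval of length h to be at most εh/tf, uses fixed subintervals instead of integrator-chosen steps, and calls SciPy's `expm_multiply`, which uses a truncated Taylor method rather than the Krylov approach described in section 5. The function names are hypothetical.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import expm_multiply
from scipy.stats import poisson


def generator(k, gamma, c):
    """Sparse FSP generator on {0, ..., c} for 0 -> X (rate k), X -> 0 (rate gamma*x).

    dp/dt = A p; the birth out of x = c appears only on the diagonal, so that
    probability leaves the state set.
    """
    x = np.arange(c + 1)
    return sp.diags([-(k + gamma * x), np.full(c, k), gamma * x[1:]],
                    [0, -1, 1], format="csc")


def adaptive_fsp(k, gamma, t_final, eps=1e-8, n_steps=20, c=2, grow=2):
    """Advance p from x = 0 to t_final on {0, ..., c}, enlarging c whenever the
    mass lost on a subinterval of length h exceeds eps * h / t_final."""
    p = np.zeros(c + 1)
    p[0] = 1.0
    h = t_final / n_steps
    for _ in range(n_steps):
        while True:
            p_new = expm_multiply(generator(k, gamma, c) * h, p)
            if p.sum() - p_new.sum() <= eps * h / t_final:
                break
            c *= grow                                          # relax the constraint x <= c
            p = np.concatenate([p, np.zeros(c + 1 - p.size)])  # redo the step from t_j
        p = p_new
    return p, c


def l1_to_exact(p, k, gamma, t):
    """l1 distance to the exact Poisson solution (start at x = 0)."""
    lam = k / gamma * (1.0 - np.exp(-gamma * t))
    c = p.size - 1
    return np.abs(poisson.pmf(np.arange(c + 1), lam) - p).sum() + poisson.sf(c, lam)
```

Summing the per-step allowance εh/tf over all subintervals bounds the total lost mass, and hence the value returned by `l1_to_exact(*adaptive_fsp(10.0, 1.0, 2.0), 10.0, 1.0, 2.0)` in spirit, by ε. A more faithful version would check the bound at intermediate times and let the ODE integrator choose h.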

The state sets Ωj are chosen as the sets of integer solutions of a system of inequality constraints. In particular, they have the form

| (35) |

where the fi are functions chosen a priori and the ci are positive scalars. To expand Ωj, we simply increase the ci and run a breadth-first search to explore all reachable states that satisfy the relaxed inequality constraints.
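The breadth-first expansion can be sketched as follows, assuming each constraint in (35) is given as a Python function fi with bound ci, and approximating "reachable" by requiring nonnegative copy numbers instead of checking that each propensity is positive. The search is seeded with the current state set, so an expansion only explores new states. The names are hypothetical, and the solver in [59] stores its state sets in a distributed data structure rather than a Python set.

```python
from collections import deque


def expand_state_set(states, stoich, constraints, bounds):
    """Breadth-first search for all states reachable from `states` that satisfy
    f_i(x) <= c_i for every constraint function f_i with bound c_i.

    states      : iterable of tuples (the current state set, used as seeds)
    stoich      : list of stoichiometry vectors, one per reaction
    constraints : list of functions f_i(x) -> number
    bounds      : list of scalars c_i
    """
    def admissible(x):
        # Nonnegativity stands in for "the reaction has positive propensity".
        return all(v >= 0 for v in x) and all(
            f(x) <= c for f, c in zip(constraints, bounds))

    seen = set(states)
    queue = deque(seen)
    while queue:
        x = queue.popleft()
        for nu in stoich:
            y = tuple(a + d for a, d in zip(x, nu))
            if y not in seen and admissible(y):
                seen.add(y)
                queue.append(y)
    return seen


# Two species with reactions 0 -> A, A -> 0, 0 -> B, B -> 0 (illustrative).
stoich = [(1, 0), (-1, 0), (0, 1), (0, -1)]
constraints = [lambda x: x[0], lambda x: x[1], lambda x: x[0] + x[1]]
omega1 = expand_state_set({(0, 0)}, stoich, constraints, (3, 2, 4))
omega2 = expand_state_set(omega1, stoich, constraints, (3, 2, 5))  # relaxed c_3
```

Here the constraints xA ≤ 3, xB ≤ 2, and xA + xB ≤ 4 admit 11 states; relaxing the last bound to 5 adds only the state (3, 2).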

Implementation-wise, the approximate solution is stored in a coordinate format similar to that used for sparse tensors [60]. The list of tensor indices is managed with the Distributed Data Directory data structure of the software package Zoltan [61,62]. We also make use of parallel objects from the PETSc library [63–65].

These MPI-based libraries allow our implementation to scale to multiple computing nodes; details of the parallel version of our CME solver are reported elsewhere [59]. However, it is worth pointing out that, as the number of cores increases, the speedup gained from parallelizing the forward solution of the CME diminishes, because of the communication cost inherent in numerical operations such as matrix-vector multiplications. Therefore, simply running a parallel forward solver on an increasing number of nodes inside a serial MCMC sampler such as Metropolis-Hastings yields diminishing benefits. ST-MCMC, in contrast, makes better use of the computing resources because it is embarrassingly parallel: doubling the number of processors lets us evolve twice as many parameter samples in about the same computational time.

We also note that an adaptive solver such as the one presented here introduces some numerical error into the surrogate likelihood function. However, we expect this error to be negligible with a conservative error tolerance; in our numerical tests, the error threshold ε is always fixed at 10⁻⁸.

In the next section, we confirm the accuracy and efficiency of the combined multifidelity sampler and adaptive model reduction scheme on two biologically inspired problems with simulated data and one problem with a real experimental dataset.

5. NUMERICAL EXAMPLES

In the following tests we compare the four variants of ST-MCMC described above: the Full-fidelity, ESS-Bridge, IT-Bridge, and Tuned IT-Bridge schemes. The full-fidelity scheme is the classic ST-MCMC, in which every likelihood evaluation uses the highest model fidelity. The remaining three schemes are Multifidelity ST-MCMC variants in which bridging between fidelity levels is determined either by the ESS or by the new information-theoretic criterion, with or without β-tuning (see eq. (17) and the preceding discussion in section 3.2). In each Multifidelity scheme, the surrogate likelihood is formulated as described in section 4.1. When all propensities are time-invariant, as in the first two examples, the reduced system of ODEs in eq. (33) is solved by computing the action of the matrix exponential using the Krylov approximation with the Incomplete Orthogonalization Procedure [66–68], with the Krylov basis size fixed at 30. For the time-varying propensities in the third example, we use the four-stage, third-order L-stable Rosenbrock-W scheme [69] implemented in the TS module of PETSc [70]. All these ODE solvers use a conservative absolute tolerance of 10⁻¹⁴ and a relative tolerance of 10⁻⁴. All the code for the numerical experiments is available in the public GitHub repository at https://github.com/voduchuy/StMcmcCme.

5.1. Parameter inference for repressilator gene circuit

We first consider a three-species model inspired by the well-known repressilator gene circuit [1]. Its species, TetR, λcI, and LacI, constitute a negative feedback network (Table 1). We simulate a dataset with five measurement times (2, 4, 6, 8, and 10 minutes) and 1000 cells measured at each time point; these numbers of single-cell measurements are typical of smFISH experiments [6,44]. We assume that all cells start at the state x0 = (TetR, λcI, LacI) = (0, 0, 0), so that there are no gene products at the initial time.

TABLE 1:

Reactions and propensities in the repressilator model. ([X] is the number of copies of the species X.)

| index | reaction | propensity |
|---|---|---|
| 1. | ∅ → TetR | |
| 2. | TetR → ∅ | γ0 [TetR] |
| 3. | ∅ → λcI | |
| 4. | λcI → ∅ | γ1 [λcI] |
| 5. | ∅ → LacI | |
| 6. | LacI → ∅ | γ2 [LacI] |

The hierarchy of surrogate CMEs (cf. (31)) is defined by the bounds

where (c1, c2, c3) = (20, 40, 40), (d1, d2, d3) = (50, 100, 100), and Lmax = 10. Therefore, the multifidelity ST-MCMC passes through ten fidelity levels, and the highest-fidelity model has a state space of 51 × 101 × 101 states.

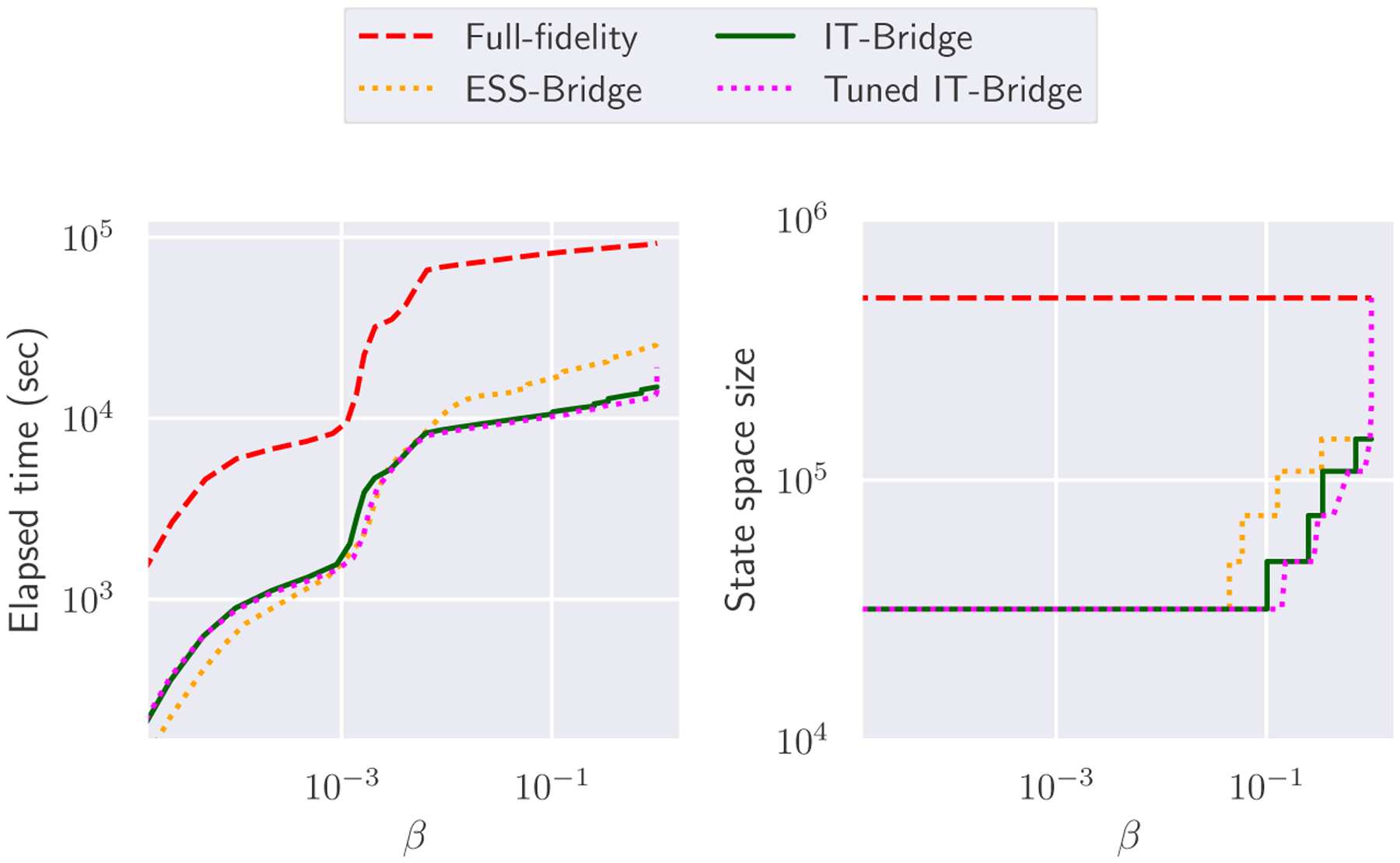

We conduct parameter inference in log10-transformed space. The prior for the parameters is a multivariate normal distribution (in log10 space) with a diagonal covariance matrix (see Table 2). We ran both the ST-MCMC with the highest-fidelity surrogate CME and the multifidelity ST-MCMC variants on 29 nodes with 36 cores per node, using 1044 parallel chains. At each level, samples were evolved using Metropolis-Hastings MCMC until a correlation target of 0.6 was reached, and the proposal distribution was tuned adaptively as part of the algorithm. Details on the ST-MCMC sampler and its tuning can be found in [35]. Fig. 2 shows the time taken by each sampling scheme to reach a given annealing level; the multifidelity schemes with our proposed IT-based criterion outperform both the state-of-the-art fixed-fidelity ST-MCMC and the ESS-Bridge scheme. Specifically, while the full-fidelity ST-MCMC took over 25 hours to finish, the Multifidelity ST-MCMC with ESS, Information-theoretic, and Tuned Information-theoretic Bridging took 7.2, 4.1, and 5.3 hours, respectively, giving speedup factors of about 3.5, 6.2, and 4.8. The novel Information-theoretic (IT) schemes are clearly faster than the ESS-based scheme in this example, with the untuned IT scheme almost twice as fast. At the early levels, when β is small, the lowest-fidelity model is sufficiently informative, and the ESS-based scheme is slightly faster than the others because it requires no full-model evaluations. Once β becomes larger, however, the IT-based methods outperform the ESS-based method: they use the full-model evaluations to judge that they need not bridge to the higher-fidelity models as quickly as the ESS-based scheme does.
Although the prior assigns a probability density of only about 8.766 × 10⁻²⁰ to the true parameter vector, all samplers brought the particles close to the true parameters (Fig. 4). There is no notable difference between the shapes of the posterior distributions constructed from the samples of the different schemes (Fig. 3 and Table 2).

TABLE 2:

Model parameters in the repressilator example. The second column lists the true parameter values, and the third column presents the parameters of the prior distribution, where we use a Gaussian prior in the log10-transformed parameter space with a diagonal covariance matrix. The last four columns present the posterior mean and standard deviation of the model parameters estimated using four methods: fixed-fidelity ST-MCMC (Full-fidelity), Multifidelity ST-MCMC with ESS-Bridging, Multifidelity ST-MCMC with IT-Bridging, and Multifidelity ST-MCMC with β-tuning and IT-Bridging.

| Parameter | True | Prior | Posterior: Full-fidelity | Posterior: ESS-Bridge | Posterior: IT-Bridge | Posterior: Tuned IT-Bridge |
|---|---|---|---|---|---|---|
| log10(k0) | 1.00 | 10.00 ± 0.3 | 1.00 ± 0.01 | 1.00 ± 0.02 | 0.99 ± 0.01 | 1.00 ± 0.01 |
| log10(γ0) | −2.00 | 0.10 ± 0.3 | −1.98 ± 0.07 | −1.98 ± 0.08 | −1.98 ± 0.07 | −1.98 ± 0.07 |
| log10(a0) | −1.00 | 0.10 ± 0.3 | −1.05 ± 0.06 | −1.05 ± 0.07 | −1.07 ± 0.06 | −1.06 ± 0.06 |
| log10(b0) | 0.30 | 0.10 ± 0.3 | 0.31 ± 0.01 | 0.31 ± 0.01 | 0.31 ± 0.01 | 0.31 ± 0.01 |
| log10(k1) | 0.88 | 10.00 ± 0.3 | 0.87 ± 0.00 | 0.87 ± 0.01 | 0.87 ± 0.00 | 0.87 ± 0.00 |
| log10(γ1) | −1.70 | 0.10 ± 0.3 | −1.71 ± 0.05 | −1.73 ± 0.06 | −1.73 ± 0.05 | −1.71 ± 0.05 |
| log10(a1) | −2.00 | 0.10 ± 0.3 | −1.98 ± 0.05 | −1.98 ± 0.06 | −1.99 ± 0.05 | −1.98 ± 0.05 |
| log10(b1) | 0.40 | 0.10 ± 0.3 | 0.40 ± 0.01 | 0.40 ± 0.01 | 0.40 ± 0.01 | 0.40 ± 0.01 |
| log10(k2) | 1.00 | 10.00 ± 0.3 | 0.98 ± 0.01 | 0.99 ± 0.01 | 0.98 ± 0.01 | 0.98 ± 0.01 |
| log10(γ2) | −1.30 | 0.10 ± 0.3 | −1.34 ± 0.03 | −1.34 ± 0.04 | −1.34 ± 0.03 | −1.34 ± 0.03 |
| log10(a2) | −1.30 | 0.10 ± 0.3 | −1.35 ± 0.06 | −1.34 ± 0.07 | −1.36 ± 0.06 | −1.35 ± 0.06 |
| log10(b2) | 0.48 | 0.10 ± 0.3 | 0.48 ± 0.01 | 0.48 ± 0.01 | 0.48 ± 0.01 | 0.48 ± 0.01 |

FIG. 2:

Performance of ST-MCMC samplers on the repressilator example. The horizontal axis represents the annealing factor, i.e., the inverse temperature. Significant speedup is observed for the multifidelity schemes.

FIG. 4:

Evolution of the population of samples for the repressilator model parameters using four different ST-MCMC variants: full-fidelity, and multifidelity strategies with bridging based on the ESS, the Information-theoretic Criterion, and the Tuned Information-theoretic Criterion. The solid lines represent the history of the sample means, and the shaded region covers the mean ± one standard deviation. Note that bias starts to accumulate in the γ1 parameter for the IT-based methods; it is corrected when the sampler starts bridging, because the bias has begun to exceed the natural parameter variability.

FIG. 3:

Prior and posterior densities in the repressilator example. See table 2 for the numerical values of the estimated means and standard deviations of these posterior distributions. It is evident that all methods converge to virtually the same distribution.

5.2. Bayesian comparison of compartmental models of gene expression

We next explore the application of multifidelity ST-MCMC to the problem of model selection. We consider a class of compartmental multi-state gene expression models based on the model considered in [71]. The model separates biomolecules into the nuclear and cytoplasmic compartments. The reaction network consists of a gene that can switch between an inactive state G0 and several active states Gi, i = 1, …, nG − 1. When active, the gene is transcribed into RNA molecules within the nucleus at an average rate of ri molecules/minute. These nuclear mRNA molecules are then transported into the cytoplasm at rate ktrans per molecule per minute, where they degrade at rate γ per molecule per minute. Overall, the model consists of nG + 2 species (the nG gene states, nuclear mRNA, and cytoplasmic mRNA), which participate in 3nG + 1 reaction channels (Table 3). Only the copy numbers of the nuclear and cytoplasmic mRNA species are observable in experiments. We use model selection to decide the number nG of gene states that best explains the observed data.

TABLE 3:

Reactions and propensities in the compartmental gene expression model.

| reaction index | reaction | propensity |
|---|---|---|
| 1, …, nG | Gi−1 → Gi, i = 1, …, nG − 1 | |
| nG + 1, …, 2nG | Gi → Gi−1, i = 1, …, nG − 1 | |
| 2nG + 1, …, 3nG − 1 | Gi → Gi + RNAnuc, i = 1, …, nG − 1 | ri [Gi] |
| 3nG | RNAnuc → RNAcyt | ktrans [RNAnuc] |
| 3nG + 1 | RNAcyt → ∅ | γ [RNAcyt] |

We simulate a ground-truth dataset based on the model with nG := 3, consisting of 1000 single-cell measurements at each time point t ∈ {2, 4, 6, 8, 10} (in hours). We then use the multifidelity ST-MCMC with the information-theoretic criterion and β-tuning to estimate the model evidence for three classes of reaction networks with two, three, and four gene states, and compare these results with the model evidence found using the full-fidelity model. We choose the information-theoretic criterion with β-tuning over the other multifidelity approaches because it is the most robust: β-tuning avoids sampling degeneracy when the model fidelity changes. This is very important for computing the model evidence, because the error of the evidence estimate is related to the KL divergence between successive intermediate distributions. The two- and three-state models were run on 7 nodes with 36 cores each, while the four-state model was run on 14 nodes, also with 36 cores each. Each ST-MCMC run used 1008 parallel samples. At each level, chains were run using MCMC until a correlation target of 0.6 was reached or 100 iterations were exceeded. These results are summarized in Table 4. The model evidence ranges are computed using the approach described in [53].

TABLE 4:

Comparison of the model evidence computation for the two-, three-, and four-state gene expression models using full-fidelity ST-MCMC and Multifidelity ST-MCMC with β-tuning (Tuned IT-Bridge). The evidence estimates from the two methods are consistent, but the multifidelity scheme is significantly faster.

| Model | Full-fidelity: log evidence | Full-fidelity: time (s) | Tuned IT-Bridge: log evidence | Tuned IT-Bridge: time (s) |
|---|---|---|---|---|
| 2 Gene | −20108.8 ± 5.4 | 1244 | −20112.6 ± 2.0 | 758 |
| 3 Gene | −20111.9 ± 5.6 | 67496 | −20115.7 ± 2.0 | 17511 |
| 4 Gene | −20113.0 ± 5.7 | 76546 | −20117.5 ± 1.8 | 23777 |

From our results, we observe that the Tuned-IT multifidelity ST-MCMC not only provides estimates of the model evidence consistent with those of the full-fidelity ST-MCMC, but also reports a smaller error, while taking less time, with speedup factors of about 1.6, 3.8, and 3.2 for the two-, three-, and four-state models, respectively. The smaller error of the multifidelity estimate is likely due to its use of more intermediate levels, which gives a finer discretization of the thermodynamic integration used to estimate the evidence.

The computed evidence indicates that the present data do not significantly favor one model over the others. More experiments are clearly required to provide conclusive evidence for model selection, and the multifidelity framework lets us recognize the insufficiency of the data faster than the full-fidelity scheme. This is an important practical advantage, as faster assessment of the current experimental data will likely reduce the lag time between consecutive batches of experiments. Further, it may be possible to integrate the same multifidelity ST-MCMC approach into Bayesian experimental design, to speed up the estimation of the expected information gain of candidate experimental setups and thereby design experiments that better discriminate between the models.

5.3. Stochastic transcription of the inflammation response gene IL1beta

Having explored the performance of the Multifidelity ST-MCMC schemes with FSP on theoretical examples with simulated datasets, we now apply our method to a real dataset. We consider the expression of the IL1beta gene in response to LPS stimulation, studied in Kalb et al. [44]. The dataset consists of mRNA counts for IL1beta measured right before LPS stimulation and at 0.5, 1, 2, and 4 hours after. We consider a three-state gene expression model with a time-varying deactivation rate and assume the initial state (2, 0, 0, 0). The observed mRNA counts are fitted to the solutions of the CME at times T0 + {0, 0.5, 1, 2, 4} hours, where the time offset T0 is to be estimated. The influence of LPS-induced signaling molecules is modeled by a function of the form

| (36) |

This signal affects the rate at which the gene turns off, k10(t) = max{0, a10 − b10 S(t)}.

This results in a chemical reaction network with time-varying propensities and eleven uncertain parameters (Table 5). Similar to the previous example, the gene state is hidden and the data only contains measurements of the mRNA copy numbers.

TABLE 5:

Reactions and propensities in the IL1beta model.

| index | reaction | propensity |
|---|---|---|
| 1. | G0 → G1 | k01 [G0] |
| 2. | G1 → G2 | k12 [G1] |
| 3. | G2 → G1 | k21 [G2] |
| 4. | G1 → G0 | k10(t) [G1], with k10(t) = max{0, a10 − b10 S(t)} (see eq. (36)) |
| 5. | ∅ → RNA | α1 [G1] + α2 [G2] |
| 6. | RNA → ∅ | γ [RNA] |

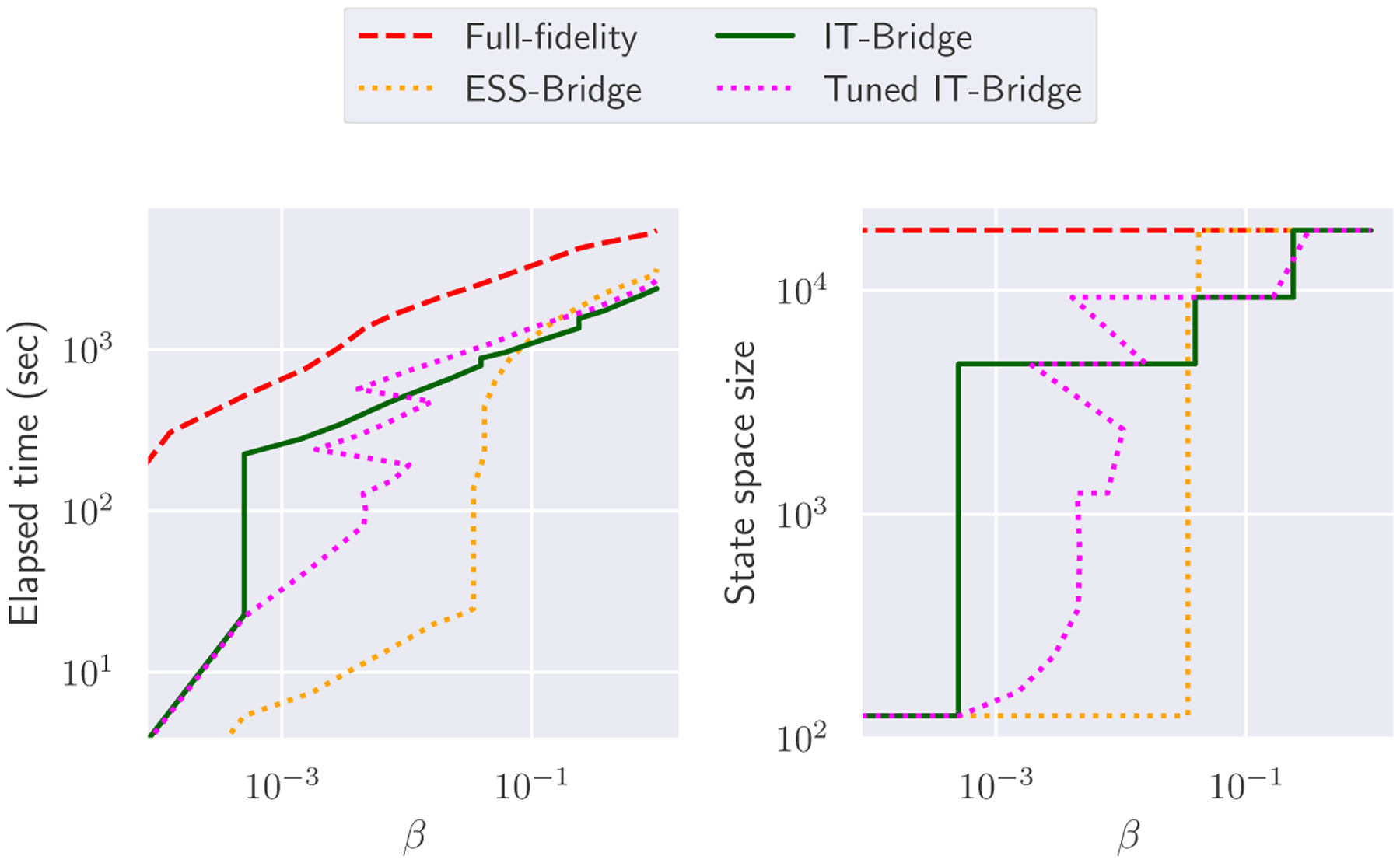

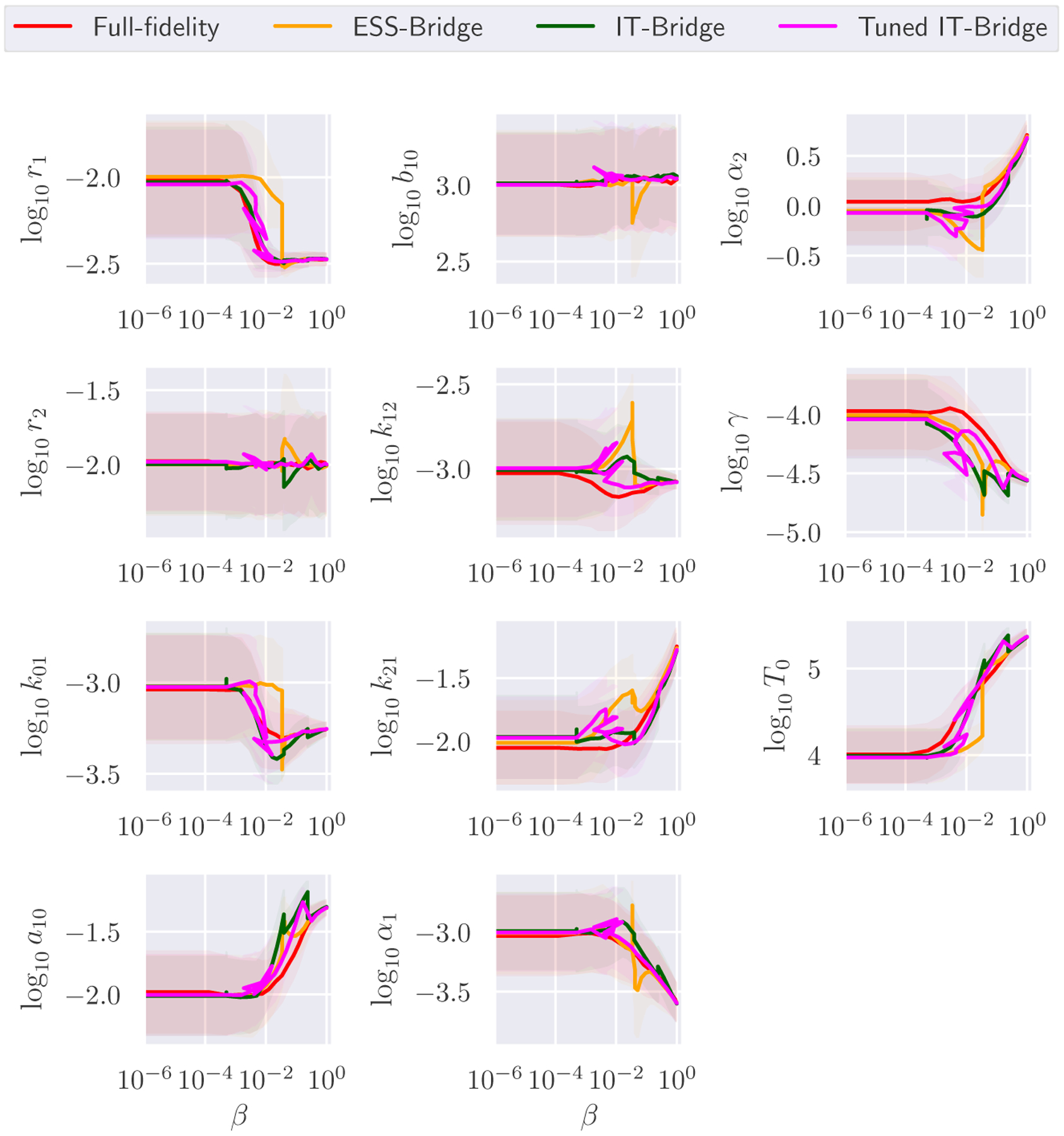

Fig. 5 summarizes the performance of the four sampling schemes. The full-fidelity model in this example has a state space of only 18,432 states and can be solved quickly without any reduction scheme. Yet we still observe significant speedup from the Multifidelity schemes. Specifically, the Multifidelity ST-MCMC with ESS, Information-theoretic, and Tuned Information-theoretic Bridging took 3153, 2384, and 2703 seconds, respectively, to finish, giving speedup factors of 1.7, 2.2, and 2.0 over the full-fidelity scheme, which takes over 5468 seconds. Figure 6 shows the evolution of the model parameters for the different methods, which helps explain the differences seen in Figure 5. The ESS-based method took significantly longer than the IT-based methods to bridge to higher-fidelity models; however, when it did bridge, it jumped straight to the highest model fidelity. For several parameters (e.g., r1, k01, and T0), the ESS-based scheme accumulated significant bias at lower β levels, which was corrected only when the ESS sampler started bridging much later on. Furthermore, once bridging occurred, the distributions at consecutive levels were very far apart, so the sample population degenerated, as seen in the bias in parameters r2, b10, and α1 immediately after bridging. Because the ESS-based method uses no information from the full posterior, it cannot detect the emergence of bias, which explains this delay and degeneracy. In contrast, the IT-based methods use full-model evaluations to recognize the emergence of bias and therefore correct it more quickly. As a result, the IT-based schemes evolve more smoothly and take less time. However, we do observe bias in r2 after one bridging step for IT-Bridge without β-tuning, indicating some sampling degeneracy.
This is not the case for IT-Bridge with β tuning since it is specifically designed to avoid degeneracy.
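To make the degeneracy argument concrete, the sketch below computes the effective sample size (ESS) of the incremental importance weights when a tempered sample population is reweighted from a lower-fidelity log-likelihood to a higher-fidelity one. It is a generic Sequential Monte Carlo illustration, assuming tempered targets of the form p(θ)L(θ)^β and the standard estimator (Σw)²/Σw²; the helper name `bridging_ess` and the synthetic log-likelihood values are hypothetical, and this is not claimed to be the exact bridging rule used in the paper. When the two log-likelihoods disagree strongly across samples, a handful of weights dominate and the ESS collapses.

```python
import numpy as np


def bridging_ess(loglik_low, loglik_high, beta):
    """Effective sample size after reweighting samples drawn from the
    tempered target p(theta) * L_low(theta)**beta toward the tempered
    target p(theta) * L_high(theta)**beta (hypothetical helper).

    loglik_low, loglik_high: per-sample log-likelihoods evaluated at the
    same population of samples; beta: current inverse temperature.
    """
    # Incremental log-weights: the prior cancels, only the likelihoods differ.
    log_w = beta * (np.asarray(loglik_high, float) - np.asarray(loglik_low, float))
    # Subtract the maximum before exponentiating to avoid overflow.
    w = np.exp(log_w - log_w.max())
    # Standard ESS estimator; lies between 1 and the number of samples.
    return w.sum() ** 2 / np.sum(w ** 2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    low = rng.normal(-100.0, 1.0, size=1000)
    # Synthetic high-fidelity log-likelihoods that disagree with the low-fidelity ones.
    high = low + rng.normal(0.0, 5.0, size=1000)
    for beta in (0.01, 0.1, 1.0):
        print(beta, round(bridging_ess(low, high, beta), 1))
```

Because β multiplies the log-likelihood discrepancy, the same fidelity gap produces a much smaller ESS late in the tempering sequence than early on, which is consistent with the observation that delaying the bridge makes the population more likely to degenerate when it finally occurs.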

FIG. 5:

Performance of ST-MCMC samplers on the IL1beta example. The horizontal axis represents the inverse temperature.

FIG. 6:

Evolution of the population of samples for the IL1beta model parameters using four ST-MCMC variants: full-fidelity, and multifidelity strategies with bridging based on ESS, the Information-theoretic Criterion, and the Tuned Information-theoretic Criterion. Solid lines show the history of the sample means; shaded regions span the mean ± one standard deviation.

All sampling schemes arrived at essentially the same posterior estimates for the model parameters (Table 6). Despite significant posterior variance for some parameters, the Bayesian prediction for the distributions of RNA copy number has negligible uncertainty, and it agrees reasonably well with the experimental data at the beginning and the end of the measurement period (Fig. 7). We note that this is not necessarily the only model structure that could explain the data; other models may fit and predict single-cell behavior more accurately. The speedup enabled by the Multifidelity framework allows researchers to propose, assess, and choose between alternative models more rapidly.

TABLE 6:

Model parameters in the IL1beta example. The second column presents the parameters of the prior distribution, where we use a Gaussian prior in the log10-transformed parameter space with a diagonal covariance matrix. The last four columns present the posterior mean and standard deviation of model parameters estimated using the ST-MCMC with full-fidelity model and the Multifidelity ST-MCMC with three different bridging strategies.

| Parameter | Prior | Posterior: Full-fidelity | Posterior: ESS-Bridge | Posterior: IT-Bridge | Posterior: Tuned IT-Bridge |
|---|---|---|---|---|---|
| log10(r1) | −2.00 ± 0.33 | −2.48 ± 0.03 | −2.47 ± 0.03 | −2.47 ± 0.03 | −2.47 ± 0.03 |
| log10(r2) | −2.00 ± 0.33 | −2.00 ± 0.34 | −2.00 ± 0.33 | −2.00 ± 0.33 | −2.00 ± 0.32 |
| log10(k01) | −3.00 ± 0.33 | −3.26 ± 0.03 | −3.25 ± 0.04 | −3.25 ± 0.03 | −3.25 ± 0.03 |
| log10(a10) | −2.00 ± 0.33 | −1.31 ± 0.06 | −1.31 ± 0.06 | −1.30 ± 0.06 | −1.31 ± 0.06 |
| log10(k10) | 3.00 ± 0.33 | 3.04 ± 0.34 | 3.05 ± 0.34 | 3.06 ± 0.30 | 3.04 ± 0.31 |
| log10(k12) | −3.00 ± 0.33 | −3.08 ± 0.03 | −3.08 ± 0.04 | −3.08 ± 0.04 | −3.08 ± 0.03 |
| log10(k21) | −2.00 ± 0.33 | −1.25 ± 0.15 | −1.26 ± 0.14 | −1.28 ± 0.14 | −1.28 ± 0.13 |
| log10(α1) | −3.00 ± 0.33 | −3.60 ± 0.15 | −3.60 ± 0.16 | −3.60 ± 0.16 | −3.60 ± 0.16 |
| log10(α2) | 0.00 ± 0.33 | 0.71 ± 0.15 | 0.70 ± 0.14 | 0.68 ± 0.13 | 0.68 ± 0.13 |
| log10(γ) | −4.00 ± 0.33 | −4.56 ± 0.05 | −4.56 ± 0.05 | −4.56 ± 0.05 | −4.56 ± 0.05 |
| log10(T0) | 4.00 ± 0.33 | 5.36 ± 0.09 | 5.36 ± 0.09 | 5.37 ± 0.08 | 5.37 ± 0.09 |
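The Table 6 caption specifies a Gaussian prior on the log10-transformed parameters with a diagonal covariance matrix. A minimal sketch of evaluating and sampling such a prior follows, assuming each "±" entry in the Prior column is a mean and standard deviation; the dictionary keys (e.g. `alpha1`, `gamma`) and the function names `log_prior` and `sample_prior` are illustrative and not taken from the authors' code. The density is expressed with respect to the log10 values, so no Jacobian term for the natural-scale rates is included.

```python
import numpy as np

# Prior mean and standard deviation of log10(parameter), from Table 6.
# Assumption: the "±" value in the Prior column is a standard deviation.
PRIOR_LOG10 = {
    "r1": (-2.0, 0.33), "r2": (-2.0, 0.33), "k01": (-3.0, 0.33),
    "a10": (-2.0, 0.33), "k10": (3.0, 0.33), "k12": (-3.0, 0.33),
    "k21": (-2.0, 0.33), "alpha1": (-3.0, 0.33), "alpha2": (0.0, 0.33),
    "gamma": (-4.0, 0.33), "T0": (4.0, 0.33),
}


def log_prior(params):
    """Log density of independent Gaussians on log10(params[name]).

    params: dict mapping each name in PRIOR_LOG10 to a positive value in
    natural units. The density is taken with respect to the log10 values.
    """
    total = 0.0
    for name, (mu, sd) in PRIOR_LOG10.items():
        z = (np.log10(params[name]) - mu) / sd
        total += -0.5 * z**2 - np.log(sd * np.sqrt(2.0 * np.pi))
    return total


def sample_prior(n, rng):
    """Draw n prior samples; returns a dict of arrays in natural units."""
    return {name: 10.0 ** rng.normal(mu, sd, size=n)
            for name, (mu, sd) in PRIOR_LOG10.items()}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    draws = sample_prior(5, rng)
    first = {name: values[0] for name, values in draws.items()}
    print(log_prior(first))
```

Working in log10 space keeps rates that span several orders of magnitude (for example k10 near 10^3 and γ near 10^−4) on a comparable scale, which is one reason a diagonal Gaussian in this space is a convenient default.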

FIG. 7:

Comparison of data and the posterior mRNA distribution predictions for the IL1beta transcription model at zero and four hours after LPS induction. The mean Bayesian prediction for the mRNA probability distribution is computed by averaging the CME solution over all posterior samples. The shaded area spans one standard deviation around the mean. The predictions obtained from samples of the different ST-MCMC formulations are visually indistinguishable.
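The averaging step described in the Fig. 7 caption can be sketched as follows. The CME solver is passed in as a function; purely as a stand-in for the IL1beta model, we use the constitutive birth-death network ∅ → RNA (rate k), RNA → ∅ (propensity γ[RNA]), whose stationary CME solution is a Poisson distribution with mean k/γ. The helper names and the synthetic "posterior" samples are hypothetical; in the actual study each evaluation would be a time-dependent FSP solution of the full model.

```python
import numpy as np


def poisson_pmf(lam, n_max):
    """Poisson probabilities P(n) for n = 0..n_max via the recursion
    P(n) = P(n-1) * lam / n."""
    p = np.empty(n_max + 1)
    p[0] = np.exp(-lam)
    for n in range(1, n_max + 1):
        p[n] = p[n - 1] * lam / n
    return p


def birth_death_stationary(theta, n_max):
    """Stand-in CME solver: stationary distribution of the birth-death
    network with production rate k and degradation rate gamma.
    theta = (log10 k, log10 gamma)."""
    k, gamma = 10.0 ** np.asarray(theta, dtype=float)
    return poisson_pmf(k / gamma, n_max)


def posterior_predictive(samples, solve_cme, n_max):
    """Pointwise mean and standard deviation of the predicted RNA
    copy-number distribution over all posterior samples."""
    dists = np.stack([solve_cme(theta, n_max) for theta in samples])
    return dists.mean(axis=0), dists.std(axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic "posterior" samples in log10 space (hypothetical values).
    samples = np.column_stack([rng.normal(1.0, 0.05, 200),
                               rng.normal(0.0, 0.05, 200)])
    mean, sd = posterior_predictive(samples, birth_death_stationary, 60)
    print(mean.sum(), mean.argmax(), sd.max())
```

The mean curve corresponds to the solid prediction in Fig. 7 and `mean ± sd` to the shaded band; because every per-sample distribution is a valid probability vector, their average is as well (up to the truncation at `n_max`).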

6. CONCLUSION

Rapid advances in experimental techniques are allowing biologists to collect quantitative data about cellular processes at ever smaller scales and in increasing detail [6–8]. Mathematical models have become an indispensable part of learning from, and making predictions with, these data. Stochastic reaction networks (SRNs) form a powerful class of models that have found widespread use within the quantitative biology community [7]. Identifying these models from data, however, is challenging because of the computational cost of solving the chemical master equation (CME), which has prevented fully Bayesian statistical frameworks from being widely adopted in biological studies. In this paper, we address the challenge of applying the Bayesian philosophy to stochastic gene expression data by proposing an efficient computational framework for Bayesian parameter calibration and model selection for SRNs. This framework combines novel multifidelity formulations of the massively parallel ST-MCMC sampler with surrogate models of the CME. Numerical tests demonstrate that this combined approach yields significant computational savings compared with a state-of-the-art method that uses only the high-fidelity models. We also propose a new criterion, based on information theory, for tuning model fidelity within multifidelity SMC-type methods; it compares favorably with techniques based on effective sample size.

The research reported here suggests several fruitful directions for future work. With respect to surrogate models, the approach proposed here for efficiently solving the surrogate master equations is only one of the various alternatives proposed since the introduction of the FSP algorithm [10]. Another attractive option for constructing multifidelity models is a low-rank tensor format such as the quantized tensor train, which has been proposed for the forward solution of the CME [72–77]. It is also possible to exploit bounds on the log-likelihood function, as done in Fox et al. [58]. While the present paper focuses on inference from smFISH data, our approach may also be adapted to other types of single-cell data, such as time-course fluorescence measurements, whose likelihood is also amenable to the FSP approach [78]. The improved efficiency may lead to more widespread adoption of the Bayesian approach in answering biological questions. We refer to Catanach et al. [16] for an example of a Bayesian approach to studying context dependence in synthetic gene circuits using Bayesian model selection, which required significant computational resources.

There are also many directions for improving Multifidelity ST-MCMC in general. We expect that the estimation of the information-gain criterion could be significantly improved, for example by using a more advanced sampling scheme that leverages model evaluations from across the multifidelity hierarchy. This could further reduce the number of full-model evaluations needed at each level. Another avenue of research is to design Multifidelity ST-MCMC specifically for estimating a quantity of interest to a given accuracy, as is done in Multilevel MCMC. Given such a design objective, ST-MCMC may not need to progress through the full model hierarchy or all annealing levels to estimate the quantity of interest to the desired accuracy. By further reducing the computational cost of Bayesian methods like ST-MCMC, engineers and scientists will be better able to integrate uncertainty quantification into their workflows. As high-performance computing resources become increasingly accessible, we expect the Multifidelity ST-MCMC framework to provide a useful tool for researchers interested in model calibration and uncertainty propagation for complex models.

ACKNOWLEDGEMENT

HV and BM were supported by the National Institutes of Health (R35 GM124747). We thank James Werner and Daniel Kalb for kindly sharing with us the data from their smFISH experiment. The cited work [44] was performed, in part, at the Center for Integrated Nanotechnologies, an Office of Science User Facility operated for the U.S. Department of Energy (DOE) Office of Science by Los Alamos National Laboratory (Contract 89233218CNA000001) and Sandia National Laboratories (Contract DE-NA-0003525). The work presented here was also funded in part by the Department of Energy Office of Advanced Scientific Computing Research through the John von Neumann Fellowship. Sandia National Laboratories is a multimission laboratory managed and operated by National Technology and Engineering Solutions of Sandia, LLC, a wholly owned subsidiary of Honeywell International, Inc., for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-NA-0003525. This paper describes objective technical results and analysis. Any subjective views or opinions that might be expressed in the paper do not necessarily represent the views of the U.S. Department of Energy or the United States Government.

We also thank Ania-Ariadna Baetica for providing constructive comments on the manuscript and Jed Duersch for discussions regarding information theory.

REFERENCES

- 1. Elowitz MB and Leibler S, A synthetic oscillatory network of transcriptional regulators, Nature, 403(6767):335–338, 2000.

- 2. Munsky B, Trinh B, and Khammash M, Listening to the noise: random fluctuations reveal gene network parameters, Mol. Syst. Biol, 5(318):318, 2009. 10.1038/msb.2009.75

- 3. Neuert G, Munsky B, Tan RZ, Teytelman L, Khammash M, and Van Oudenaarden A, Systematic identification of signal-activated stochastic gene regulation, Science, 339(6119):584–587, 2013. www.sciencemag.org/cgi/content/full/339/6119/584/DC1

- 4. Singh A and Dennehy JJ, Stochastic holin expression can account for lysis time variation in the bacteriophage λ, J. R. Soc. Interface, 11(95), 2014. 10.1098/rsif.2014.0140 http://rsif.royalsocietypublishing.org

- 5. Van Boxtel C, Van Heerden JH, Nordholt N, Schmidt P, and Bruggeman FJ, Taking chances and making mistakes: Non-genetic phenotypic heterogeneity and its consequences for surviving in dynamic environments, J. R. Soc. Interface, 14(132), 2017. https://royalsocietypublishing.org/doi/pdf/10.1098/rsif.2017.0141

- 6. Raj A, van den Bogaard P, Rifkin SA, van Oudenaarden A, and Tyagi S, Imaging individual mRNA molecules using multiple singly labeled probes, Nat. Methods, 5:877, September 2008. 10.1038/nmeth.1253 https://www.nature.com/articles/nmeth.1253#supplementary-information

- 7. Munsky B, Fox Z, and Neuert G, Integrating single-molecule experiments and discrete stochastic models to understand heterogeneous gene transcription dynamics, Methods, 85:12–21, September 2015. http://www.ncbi.nlm.nih.gov/pubmed/26079925 http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=PMC4537808

- 8. Li G and Neuert G, Multiplex RNA single molecule FISH of inducible mRNAs in single yeast cells, Sci. Data, 6(1):94, June 2019.

- 9. Gillespie DT, A rigorous derivation of the chemical master equation, Phys. A Stat. Mech. its Appl, 188(1–3):404–425, September 1992. http://linkinghub.elsevier.com/retrieve/pii/037843719290283V

- 10. Munsky B and Khammash M, The finite state projection algorithm for the solution of the chemical master equation, J. Chem. Phys, 124(4):44104, 2006. 10.1063/1.2145882 http://aip.scitation.org/toc/jcp/124/4 http://jcp.aip.org/resource/1/jcpsa6/v124/i4/p044104_s1

- 11. Gomez-Schiavon M, Chen LF, West AE, and Buchler NE, BayFish: Bayesian inference of transcription dynamics from population snapshots of single-molecule RNA FISH in single cells, Genome Biol, 18(1):164, 2017. https://genomebiology.biomedcentral.com/track/pdf/10.1186/s13059-017-1297-9

- 12. Schnoerr D, Sanguinetti G, and Grima R, Approximation and inference methods for stochastic biochemical kinetics—a tutorial review, J. Phys. A, 50(9):093001, January 2017. https://doi.org/10.1088/1751-8121/aa54d9

- 13. Tiberi S, Walsh M, Cavallaro M, Hebenstreit D, and Finkenstädt B, Bayesian inference on stochastic gene transcription from flow cytometry data, Bioinformatics, 34:i647–i655, 2018.

- 14. Weber L, Raymond W, and Munsky B, Identification of gene regulation models from single-cell data, Phys. Biol, 15(5):055001, May 2018. https://doi.org/10.1088/1478-3975/aabc31

- 15. Lin YT and Buchler NE, Exact and efficient hybrid Monte Carlo algorithm for accelerated Bayesian inference of gene expression models from snapshots of single-cell transcripts, J. Chem. Phys, 151(2):024106, 2019. 10.1063/1.5110503

- 16. Catanach TA, McCardell R, Baetica AA, and Murray RM, Context dependence of biological circuits, bioRxiv, 2018. https://www.biorxiv.org/content/early/2018/07/03/360040

- 17. Lindley DV, On a Measure of the Information Provided by an Experiment, Ann. Math. Stat, 27(4):986–1005, 1956. https://projecteuclid.org/download/pdf_1/euclid.aoms/1177728069

- 18. Huan X and Marzouk YM, Simulation-based optimal Bayesian experimental design for nonlinear systems, J. Comput. Phys, 232(1):288–317, 2013. 10.1016/j.jcp.2012.08.013

- 19. Ruess J, Milias-Argeitis A, and Lygeros J, Designing experiments to understand the variability in biochemical reaction networks, J. R. Soc. Interface, 10(88):20130588, 2013. http://www.ncbi.nlm.nih.gov/pubmed/23985733 http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=PMC3785824