Key Points

Question

What is the association between hospital responses to changing incentives in Medicare’s Comprehensive Care for Joint Replacement program and savings?

Findings

In this controlled population-based study, savings demonstrated in years 1 to 2 of the Comprehensive Care for Joint Replacement program largely dissipated by year 4. Entrance of fewer patients with high anticipated cost of care or use of lower-cost outpatient surgical settings, as well as program dropout by regionally high-spending hospitals, were associated with diminished savings.

Meaning

Savings in this episode-based payment program were greatly reduced owing to selective participation by hospitals and selection of patients with low-cost care or low-cost outpatient procedures.

This controlled population-based study investigates the response of hospitals to changing incentives in Medicare’s Comprehensive Care for Joint Replacement program, an episode-based payment model.

Abstract

Importance

Medicare’s Comprehensive Care for Joint Replacement (CJR) model, initiated in 2016, is a national episode-based payment model for lower-extremity joint replacement (LEJR). Metropolitan statistical areas (MSAs) were randomly assigned to participation. In the third year of the program, Medicare made hospital participation voluntary in half of the MSAs and enabled LEJRs for knees to be performed in the outpatient setting without being subject to episode-based payment. How these changes affected program savings is unclear.

Objective

To estimate savings from the CJR program over time and assess how responses by hospitals to changing incentives were associated with those savings.

Design, Participants, and Setting

This controlled population-based study used Medicare claims data from January 1, 2014, to December 31, 2019, to analyze spending for beneficiaries who received LEJR in 171 MSAs randomized to CJR vs typical payment. The calendar quarter before and the quarter after the April 1, 2016, start date were excluded as a 6-month washout period (January 1 to June 30, 2016) to allow hospitals time to respond to the program rules.

Main Outcomes and Measures

The main outcomes were episode spending and, starting in year 3 of the program, hospitals’ decisions to stop participating in CJR and to perform LEJRs in the outpatient setting.

Results

Data from 1 087 177 patients (mean [SD] age, 74.4 [8.4] years; 692 604 women [63.7%]; 980 635 non-Hispanic White patients [90.2%]) were analyzed. Over the first 4 years of CJR, 321 038 LEJR episodes were performed at 702 CJR hospitals, and 456 792 episodes were performed at 826 control hospitals. From the second to the fourth year of the program, savings in CJR vs control MSAs diminished from −$976 per LEJR episode (95% CI, −$1340 to −$612) to −$331 (95% CI, −$792 to $130). In MSAs where hospital participation was made voluntary in the third year, more hospitals in the highest quartile of baseline spending dropped out compared with the lowest quartile (56 of 60 [93.3%] vs 29 of 56 [51.8%]). In MSAs where participation remained mandatory, CJR hospitals shifted fewer knee replacements to the outpatient setting in years 3 to 4 than controls (12 571 of 59 182 [21.2%] vs 21 650 of 68 722 [31.5%] of knee LEJRs). In these mandatory MSAs, 75% of the reduction in savings per episode from years 1 to 2 to years 3 to 4 of the program ($455; 95% CI, $137-$722) was attributable to CJR hospitals’ decision on which patients would undergo surgery or whether the surgical procedure would occur in the outpatient setting.

Conclusions and Relevance

This controlled population-based study found that savings observed in the second year of CJR largely dissipated by the fourth year owing to a combination of responses among hospitals to changes in the program. These results suggest a need for caution regarding the design of new alternative payment models.

Introduction

As an alternative to fee-for-service payment, episode-based payments (also known as bundled payments) intend to promote more efficient care by setting a spending benchmark for a defined episode of care and providing financial incentives for hospitals, clinicians, and postacute care facilities and agencies to work together to lower episode spending.1,2,3 Debate continues about the potential for hospitals to “game” these payment systems with responses that undermine program savings.4,5,6 For example, if adjustment of benchmarks for patient factors is inadequate, hospitals could profit by selectively caring for healthier patients who require lower-cost care.7,8 If spending in the geographic region is used to set the benchmarks, hospitals whose spending at baseline was already well below this benchmark could preferentially participate, further reducing the potential for program savings.9 To date, there has been limited evaluation of whether hospitals’ responses affect the savings observed in episode-based payment models.

Recent changes in Medicare’s Comprehensive Care for Joint Replacement (CJR) model, an episode-based payment initiative for lower-extremity joint replacement (LEJR) begun in 2016, provide a unique opportunity to study responses by hospitals in episode-based payment models and how those responses affect savings.10,11 The CJR program was initially implemented as a mandatory payment model for hospitals in randomly selected metropolitan statistical areas (MSAs), with hospitals in 67 MSAs assigned to episode-based payment and 104 control MSAs assigned to no payment change.11 Hospitals participating in CJR paid penalties or received bonuses based on spending during hospitalization and the 90 days after discharge relative to a benchmark based on regional average episode spending.11 Risk adjustment for patient characteristics was minimal; benchmarks were only adjusted for the presence of a fracture or a major complication.12

Two key policy changes implemented in 2018, year 3 of the program, created the opportunity for strategic responses by hospitals. First, Medicare made participation voluntary in half of the MSAs originally selected to participate in CJR.13 Second, Medicare modified regulations to enable total knee replacement procedures to be performed in the outpatient setting; however, these outpatient procedures were excluded as episodes in the CJR program.14 Outpatient knee LEJRs are less costly for hospitals because they attract patients who are less likely to experience complications or use more expensive postacute care settings after discharge (ie, “lower-risk” patients) and require fewer inpatient resources. However, among hospitals in the CJR program, it would be more financially attractive to perform surgeries on low-risk patients in the inpatient setting.

To understand responses by hospitals in episode-based payment models, we estimated changes in episode savings attributed to CJR from years 1 to 2 of the program to years 3 to 4 of the program. We then performed additional analyses to evaluate how responses by hospitals might explain the changes in savings we observed. First, in MSAs that transitioned to voluntary participation, we examined whether hospital dropout from the program differed by hospitals’ baseline spending. Second, in MSAs that maintained mandatory enrollment, we quantified the extent to which selection of low-risk patients and use of inpatient or outpatient LEJR procedures affected savings estimates from CJR.

Methods

This controlled population-based study used Medicare claims data from January 1, 2014, to December 31, 2019, to analyze the spending for beneficiaries who received LEJR in 171 MSAs randomized to CJR vs typical payment. This study was approved by the institutional review board at Harvard Medical School, and the requirement for informed consent was waived because the study involved no more than minimal risk and analyzed already collected deidentified data. We followed the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guideline.

The CJR Model

In communities participating in the CJR model, fee-for-service payments were made as usual during the course of patient care. Hospitals were then assessed on their average cost for the index LEJR hospitalization and all LEJR-related spending in the 90 days after discharge compared with a benchmark price.15 The benchmark was prespecified to shift over the course of the program from hospital-specific to fully regional and was affected by quality performance. If spending fell sufficiently below the benchmark and if a hospital met or surpassed a threshold on a composite quality measure of postsurgical complications and patient satisfaction, it received a financial bonus. Starting in 2017, the second year of the program, hospitals with spending per episode that exceeded the benchmark paid a penalty to Medicare (more details on the design of CJR and its evolution are listed in eMethods 1 in the Supplement).
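The bonus and penalty rules described above can be sketched in a few lines. This is a minimal illustration rather than Medicare's reconciliation algorithm: the function names and arguments are hypothetical, the 10% cap follows the performance year 2 bonus formula stated in the footnote to Table 2, and the sketch ignores how benchmarks were set and how large penalties were.

```python
def year2_bonus(actual_price, target_price, volume, met_quality, cap_fraction=0.10):
    """Bonus for a hospital in performance year 2 (hypothetical helper).

    Assumes the savings differential is target minus actual spending per
    episode, capped at cap_fraction of the target price, times LEJR volume.
    """
    if not met_quality or actual_price >= target_price:
        return 0.0  # no bonus without meeting quality or beating the target
    per_episode = min(target_price - actual_price, cap_fraction * target_price)
    return per_episode * volume


def owes_penalty(actual_price, target_price, program_year):
    """Penalties applied from year 2 (2017) when spending exceeded the benchmark."""
    return program_year >= 2 and actual_price > target_price
```

Under these assumptions, a hospital with 100 episodes that spends $24 000 per episode against a $25 000 target and meets the quality threshold would earn $1000 per episode; the same hospital would earn nothing if it missed the quality threshold.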

Across all 380 MSAs nationally, 171 were identified by Medicare as eligible for the CJR program and divided into 2 strata based on their LEJR spending in the preintervention period: 85 communities in the higher-spending stratum and 86 in the lower-spending stratum. Of the 85 higher-spending MSAs, 38 were randomized to the intervention. Of the 86 lower-spending MSAs, 29 were randomized to the intervention (eFigure 1 in the Supplement). The MSAs not randomized to participate in CJR were available as control MSAs.

In the first 2 years of the program, all hospitals in MSAs randomized to the intervention were required to participate. In 2018, the third year of the program, Medicare made the program voluntary in the 29 lower-cost MSAs initially randomized to participate in CJR. Another 4 participating MSAs in the higher-spending stratum were also transitioned to voluntary status. We continued to categorize these 4 MSAs as mandatory MSAs to preserve the initial randomization to the program, which was done within the high- and low-cost strata. By doing so, we preserved the original set of MSAs randomized to CJR or control within each stratum (higher-cost mandatory and lower-cost voluntary MSAs).

Study Population

We analyzed Medicare claims and enrollment data from January 1, 2014, to December 31, 2019, for all Medicare fee-for-service beneficiaries receiving LEJR at hospitals in one of the 171 MSAs eligible for participation in CJR. The CJR program began in April 2016, but as in our prior work,16 we excluded the calendar quarter before and the quarter after the April 1, 2016, start date as a 6-month washout period (January 1-June 30, 2016) to allow hospitals time to respond to the program rules. We defined 5 time periods: (1) a baseline preintervention period from 2014 to 2015; (2) year 1, including LEJR episodes beginning in July through December 2016; (3) year 2, including episodes beginning in 2017; (4) year 3, including episodes beginning in 2018; and (5) year 4, including episodes beginning in January through September 2019. The calendar year designations were the same as those used by the Centers for Medicare & Medicaid Services for assessing hospital performance in CJR.
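The period definitions above amount to a simple mapping from an episode's start date to a study period. The sketch below illustrates that mapping; the function name `cjr_period` and the returned labels are our own and are not taken from the study's code.

```python
from datetime import date
from typing import Optional


def cjr_period(episode_start: date) -> Optional[str]:
    """Map an episode start date to a study period; None means excluded."""
    year, month = episode_start.year, episode_start.month
    if year in (2014, 2015):
        return "baseline"
    if year == 2016:
        # January 1 to June 30, 2016, is the 6-month washout and is dropped.
        return "year 1" if month >= 7 else None
    if year == 2017:
        return "year 2"
    if year == 2018:
        return "year 3"
    if year == 2019:
        # Year 4 covers episodes beginning January through September 2019.
        return "year 4" if month <= 9 else None
    return None
```

For example, an episode beginning on March 31, 2016, falls in the washout and is excluded, whereas one beginning on July 1, 2016, is assigned to year 1.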

We defined LEJR episodes as the time between the date of hospital admission and 90 days postdischarge for diagnosis related groups (DRGs) 469 or 470. We limited the study population to beneficiaries without end-stage kidney failure who were continuously enrolled in fee-for-service Medicare Parts A and B for 12 months before their LEJR episode through 90 days after discharge or until death (eFigure 2 in the Supplement). Consistent with the CJR program, we also excluded LEJR episodes that involved hospitals ever enrolled in the Bundled Payments for Care Improvement program, another episode-based payment program.

As mentioned previously, CJR excluded knee replacements performed in the outpatient setting, which were only possible beginning in 2018. In our analyses, we included both inpatient knee procedures (DRG 469 or 470 with International Classification of Diseases, Ninth Revision, code 81.54 or an International Statistical Classification of Diseases and Related Health Problems, Tenth Revision, code starting with 0SRC or 0SRD) and outpatient knee procedures (identified on outpatient claims using Current Procedural Terminology code 27447) to capture the full effect of the program.

Patient, Procedure, and Hospital Characteristics

We gathered information on patients’ age, sex, race/ethnicity, rural or urban categorization of their zip code of residence,17 original reason for Medicare enrollment (ie, disability vs age), Medicaid enrollment in the 12 months before admission, and the presence of 27 chronic conditions before the year of their LEJR.18 We assessed whether LEJR procedures were billed as inpatient or outpatient, the type of procedure (eg, hip or knee replacement), DRG, and presence of a fracture. Using the Centers for Medicare & Medicaid Services–published data,10 we identified which hospitals stayed vs dropped out of the program beginning in 2018. Using a regression model adjusting for episode DRG and fracture with region fixed effects and random effects for each hospital, we identified how far above or below the regional average for LEJR spending each hospital was during the period used by Medicare to determine their regional benchmarks for the first 2 years of the program (eMethods 2 in the Supplement).11

Outcomes

Our main outcome was institutional spending, which encompassed total payments to hospitals, postacute care facilities or agencies, and hospices, as well as durable medical equipment spending.16,19,20,21 We focused on institutional spending as our primary outcome because noninstitutional spending (eg, payments to physicians and other health care professionals) was only available for a 20% random sample of Medicare beneficiaries. Institutional spending accounts for approximately 85% of all spending in LEJR episodes and is the area where prior LEJR bundled payment demonstrations have shown savings.22 We also assessed the fraction of knee LEJR episodes billed as outpatient procedures.

One of our goals was to understand whether hospitals avoided performing LEJR on patients likely to require higher-cost care. To identify such LEJR episodes, we calculated an expected episode-level spending risk score that was based on patients’ sociodemographic and clinical characteristics weighted by their effect on total spending (eTable 1 in the Supplement contains the estimated weights, and eMethods 2 in the Supplement has more details on the model we used). For each hospital, we measured the proportion of LEJR procedures performed on patients in the highest quartile of expected spending.
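To make the construction of this measure concrete, the following sketch scores each episode as a weighted sum of patient characteristics and then computes a hospital's share of episodes above the 75th percentile. The weights, the intercept, and the three characteristics are hypothetical placeholders; the study's estimated weights are in eTable 1 in the Supplement and are not reproduced here.

```python
from itertools import product
from statistics import quantiles

# Hypothetical weights (dollars added to expected spending), for illustration only.
WEIGHTS = {"age_85_plus": 3000.0, "fracture": 9000.0, "diabetes": 800.0}
BASE_SPENDING = 22000.0  # hypothetical intercept


def expected_spending(patient: dict) -> float:
    """Expected episode spending as a weighted sum of patient characteristics."""
    return BASE_SPENDING + sum(w for k, w in WEIGHTS.items() if patient.get(k))


def top_quartile_share(episodes: list, hospital: str) -> float:
    """Share of a hospital's episodes above the 75th percentile of expected spending."""
    scores = [expected_spending(e) for e in episodes]
    cutoff = quantiles(scores, n=4)[2]  # 75th percentile across all episodes
    own = [s for e, s in zip(episodes, scores) if e["hospital"] == hospital]
    return sum(s > cutoff for s in own) / len(own)


# Toy data: hospital A treats every patient with a fracture, hospital B the rest.
episodes = [
    {"hospital": "A" if fx else "B", "age_85_plus": age, "fracture": fx, "diabetes": dm}
    for age, fx, dm in product([False, True], repeat=3)
]
share_a = top_quartile_share(episodes, "A")
share_b = top_quartile_share(episodes, "B")
```

In this toy example, hospital A has a larger share of high expected-spending episodes than hospital B, which is the kind of contrast the hospital-level measure is meant to capture.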

Statistical Analysis

Our main analytic model used a difference-in-differences approach (eMethods 2 in the Supplement) with the LEJR episode as the unit of analysis. We fit linear regression models that controlled for patient and procedure characteristics and MSA fixed effects and indicators for each quarter of our study period. The key variable in each model was an interaction between the postintervention periods (either 4 indicators for CJR years 1-4 or 2 indicators for CJR years 1-2 and years 3-4) and an indicator for the LEJR being performed in a treatment MSA. The coefficients estimated for these variables describe the average differential change in the outcome from the preintervention period to each postperiod (eg, from preintervention to year 1 or preintervention to year 2) for episodes in treatment MSAs relative to those in control MSAs. The use of a linear model with MSA fixed effects allowed us to control for baseline differences in outcomes between CJR and control MSAs without the potential bias associated with interpreting an interaction term in a nonlinear model.23
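One way to write a model of this kind is shown below. The notation is ours and is only a sketch of the description above; the exact specification used in the study is given in eMethods 2 in the Supplement.

```latex
Y_{imt} = \alpha_m + \gamma_t
        + \sum_{k=1}^{4} \beta_k \,\bigl(\mathrm{CJR}_m \times \mathrm{Year}_{k,t}\bigr)
        + X_{imt}^{\top}\delta + \varepsilon_{imt}
```

Here $Y_{imt}$ is the outcome for episode $i$ in MSA $m$ and quarter $t$, $\alpha_m$ are MSA fixed effects, $\gamma_t$ are quarter indicators, $\mathrm{CJR}_m$ indicates a treatment MSA, $\mathrm{Year}_{k,t}$ indicates that quarter $t$ falls in CJR year $k$, and $X_{imt}$ collects patient and procedure characteristics. Each $\beta_k$ is the differential change from the preintervention period to year $k$ in treatment relative to control MSAs. The variant with 2 postintervention indicators replaces the sum over 4 years with a sum over years 1-2 and years 3-4.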

Though CJR participation was randomly assigned, we used a difference-in-differences approach in our analysis because of observed baseline differences in spending and spending risk (ie, patient factors) between treatment and control groups after randomization (details and sensitivity analysis using a randomized trial framework are presented in eMethods 3 in the Supplement). In a test of the assumptions in a difference-in-differences model, we did not observe differential trends in our outcomes in the period before CJR implementation (eTable 2 in the Supplement); nor did we see differential shifts in LEJR volumes (eMethods 4 and eTable 3 in the Supplement).
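The reason baseline differences matter can be seen in the simplest 2-group, 2-period case. The numbers below are hypothetical and chosen only for round arithmetic; they are not study estimates, and the sketch omits the covariates and fixed effects used in the actual models.

```python
def did(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Difference-in-differences: change in treated minus change in control."""
    return (treat_post - treat_pre) - (ctrl_post - ctrl_pre)


# Hypothetical mean spending per episode (dollars), for illustration only.
treat_pre, treat_post = 27000, 25500
ctrl_pre, ctrl_post = 25000, 24500

naive_post_gap = treat_post - ctrl_post  # compares postperiod means only
did_estimate = did(treat_pre, treat_post, ctrl_pre, ctrl_post)
```

With these inputs, the postperiod comparison alone shows treated MSAs spending $1000 more per episode, whereas the difference-in-differences estimate is −$1000, because the treated group started from a higher baseline and fell by more.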

In the MSAs where participation was made voluntary in the third year, we compared the dropout rate among hospitals across quartiles of preintervention episode spending relative to average spending in their geographic region. Across these quartiles, we also compared CJR reconciliation payments in year 2.

To better examine the contribution of patient selection to savings under the CJR program, we fit 3 spending models sequentially. Model 1 made no adjustment for patient characteristics and substituted average inpatient prices for outpatient LEJR prices, which provided a naive estimate of program savings consistent with a savings estimate that excludes outpatient knee procedures. Next, in model 2, we dropped the substitution of average inpatient prices and used the actual observed outpatient prices for knee LEJRs. This model illustrates how much the naive estimates in model 1 change after incorporating outpatient knee prices. Finally, in model 3, we expanded on model 2 and adjusted for patient characteristics to quantify the association of selection on observable patient characteristics with savings after accounting for outpatient LEJR prices. We then estimated the overall association of patient selection with savings estimates by taking the difference between our model 1 and model 3 estimates.

Robust variance estimators were used to account for clustering of observations within MSAs.24 For all analyses, we provided 95% CIs as exploratory estimates which were not adjusted for multiple testing. We used Stata software, version 16 (StataCorp LLC) for all statistical analyses. Two-sided significance was determined by P < .05.

Results

Data from 1 087 177 patients (mean [SD] age, 74.4 [8.4] years; 692 604 women [63.7%]; 980 635 non-Hispanic White patients [90.2%]) were analyzed. In years 1 to 4 of the CJR program, there were 321 038 LEJR episodes performed at 702 intervention hospitals and 456 792 LEJR episodes performed at 826 control hospitals. The characteristics of episodes in the intervention and control hospitals overall and by mandatory or voluntary MSA group are shown in eTables 4, 5, and 6 in the Supplement.

Impact of CJR on Institutional Spending Over 4 Years

After adjustment for patient characteristics and incorporating all LEJR episodes regardless of site, overall savings in CJR diminished from year 2 through year 4 (all MSAs, year 2, −$976 [95% CI, −$1340 to −$612] vs year 4, −$331 [95% CI, −$792 to $130]) (Table 1). By year 4, across all treatment MSAs, there was no longer any statistically significant differential change in institutional spending. This was true in both mandatory MSAs (year 4, −$422 [95% CI, −$1088 to $243]) and voluntary MSAs (year 4, −$168 [95% CI, −$624 to $289]).

Table 1. Effect of CJR on Institutional Spending in MSAs Randomized to Participation, Stratified by Type of MSA.

| MSA type | Preintervention period (2014-2015) institutional spending, CJR, $^a | Preintervention period, control, $^a | Preintervention period, difference, $^a | Differential change, year 1 (2016) (95% CI), $^b | Differential change, year 2 (2017) (95% CI), $^b | Differential change, year 3 (2018) (95% CI), $^b | Differential change, year 4 (2019) (95% CI), $^b |
|---|---|---|---|---|---|---|---|
| All MSAs | 26 620 | 25 088 | 1532 | −875 (−1196 to −555) | −976 (−1340 to −612) | −575 (−923 to −227) | −331 (−792 to 130) |
| Mandatory MSAs (randomized to mandatory participation years 1-4) | 27 995 | 25 440 | 2555 | −1097 (−1528 to −667) | −1240 (−1729 to −751) | −717 (−1164 to −270) | −422 (−1088 to 243) |
| Voluntary MSAs (randomized to mandatory participation for years 1-2, and voluntary years 3-4) | 24 863 | 24 697 | 166 | −582 (−979 to −186) | −624 (−1010 to −237) | −369 (−823 to 86) | −168 (−624 to 289) |

Differential change is the change in institutional spending from the preintervention period to the given year in CJR vs control MSAs. Years 1 and 2 precede, and years 3 and 4 follow, the voluntary participation and outpatient knee LEJR pricing changes.

Abbreviations: CJR, Comprehensive Care for Joint Replacement; LEJR, lower-extremity joint replacement; MSA, metropolitan statistical area.

^a Components of institutional spending are detailed in eMethods 2 in the Supplement. Currency is in US dollars.

^b In this episode-level analysis, all estimates were adjusted for MSA fixed effects and episode and patient characteristics, as described in eMethods 2 in the Supplement. Standard errors were clustered at the MSA level.

Outcomes and Dropout Among Hospitals in Voluntary MSAs

The CJR and control hospitals in the voluntary MSAs were classified into 4 quartiles based on the hospitals’ baseline episode spending (from furthest below the regional average, quartile 1, to furthest above the regional average, quartile 4) (Table 2). Compared with control hospitals, in year 2, CJR hospitals in the highest quartile had savings of −$1244 (95% CI, −$2110 to −$379) in institutional spending per episode, whereas hospitals in the lowest quartile had no significant differential change in spending (−$414; 95% CI, −$939 to $111).

Table 2. Comparison of Outcomes in Hospitals in Voluntary MSAs Randomized to Participation, Stratified by Hospital Preperiod Episode Spending^a.

| Variable | Quartile 1 (spending lower than regional average)^b | Quartile 2^b | Quartile 3^b | Quartile 4 (spending higher than regional average)^b,c |
|---|---|---|---|---|
| No. of hospitals | | | | |
| All | 161 | 160 | 160 | 160 |
| Hospitals in CJR MSAs | 56 | 66 | 47 | 60 |
| Hospitals in control MSAs | 105 | 94 | 113 | 100 |
| Spending in preintervention period in CJR and control hospitals | | | | |
| Institutional spending, $ | 23 132 | 24 079 | 27 910 | 37 368 |
| Difference between hospital and CJR regional institutional spending, $^d | −4180 | −1211 | 2514 | 11 506 |
| Mean predicted LEJR institutional spending based on patient risk, $ | 25 912 | 26 023 | 27 145 | 28 429 |
| Impact of CJR in year 2, before transition to voluntary program (2016-2017) | | | | |
| Change in institutional spending in CJR hospitals vs control hospitals (95% CI), $^e | −414 (−939 to 111) | −440 (−874 to −6) | −685 (−1208 to −163) | −1244 (−2110 to −379) |
| CJR hospitals with no savings bonus, No. (%)^f | 13 (23.3) | 13 (19.7) | 12 (25.5) | 24 (40.0) |
| Fraction of hospitals dropping out in year 3 (2018) | | | | |
| Hospitals in CJR MSAs dropping out in year 3, No. (%) | 29 (51.8) | 48 (72.7) | 36 (76.6) | 56 (93.3) |

Quartiles are of hospital spending relative to the regional average for LEJR episodes.

Abbreviations: CJR, Comprehensive Care for Joint Replacement; LEJR, lower-extremity joint replacement; MSA, metropolitan statistical area.

^a These MSAs were randomized to participation vs control. Participation was mandatory in years 1-2 of the program and then voluntary in years 3-4 of the program.

^b CJR and control hospitals were ranked by how far (measured in US dollars, $) below or above they were from their regions’ average spending per LEJR episode over the period 2012-2014. Regions were defined by the Census division (9 regions) of the hospital’s MSA. After ranks were determined, hospitals were divided evenly into quartiles within the 86 voluntary MSAs. eMethods 2 in the Supplement has details on the model used to rank hospitals.

^c P values reported in the text are from χ2 tests of quartiles 1 and 4.

^d Regional institutional spending is the average spending per LEJR episode in the hospital’s region. The difference is the hospital’s average less its region’s average.

^e Estimates were obtained by running difference-in-differences models similar to the main analysis (described in eMethods 2 in the Supplement) in each quartile separately, comparing year 2 to the preperiod years 2014-2015.

^f In performance year 2, if hospitals met minimum quality thresholds, then they were eligible to earn a bonus equal to their savings differential (their actual less their target price, capped at 10% of the target price) multiplied by their total LEJR volume in the performance period.

Across CJR hospitals in voluntary MSAs, there was higher dropout among hospitals with historical spending greater than their regional average (93.3% in the highest quartile vs 51.8% in the lowest quartile). Hospitals in the highest quartile were also more likely to have no bonus or a penalty payment in year 2 vs the lowest quartile (24 of 60 [40.0%] vs 13 of 56 [23.3%]).
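The dropout comparison between quartiles 1 and 4 can be checked directly from the counts in Table 2. The sketch below computes the two dropout rates and a Pearson χ2 statistic for the 2 × 2 table; the use of the uncorrected Pearson statistic is our assumption, because the source does not state whether a continuity correction was applied.

```python
def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Counts from Table 2: hospitals dropping out and total CJR hospitals per quartile.
q1_dropped, q1_total = 29, 56
q4_dropped, q4_total = 56, 60

q1_rate = q1_dropped / q1_total  # dropout rate, lowest-spending quartile
q4_rate = q4_dropped / q4_total  # dropout rate, highest-spending quartile
chi2 = pearson_chi2_2x2(
    q1_dropped, q1_total - q1_dropped,
    q4_dropped, q4_total - q4_dropped,
)
```

The resulting statistic is well above 3.84, the critical value for 1 degree of freedom at the .05 level, which is consistent with the difference in dropout described in the text.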

Selection of Patients and Outpatient Pricing

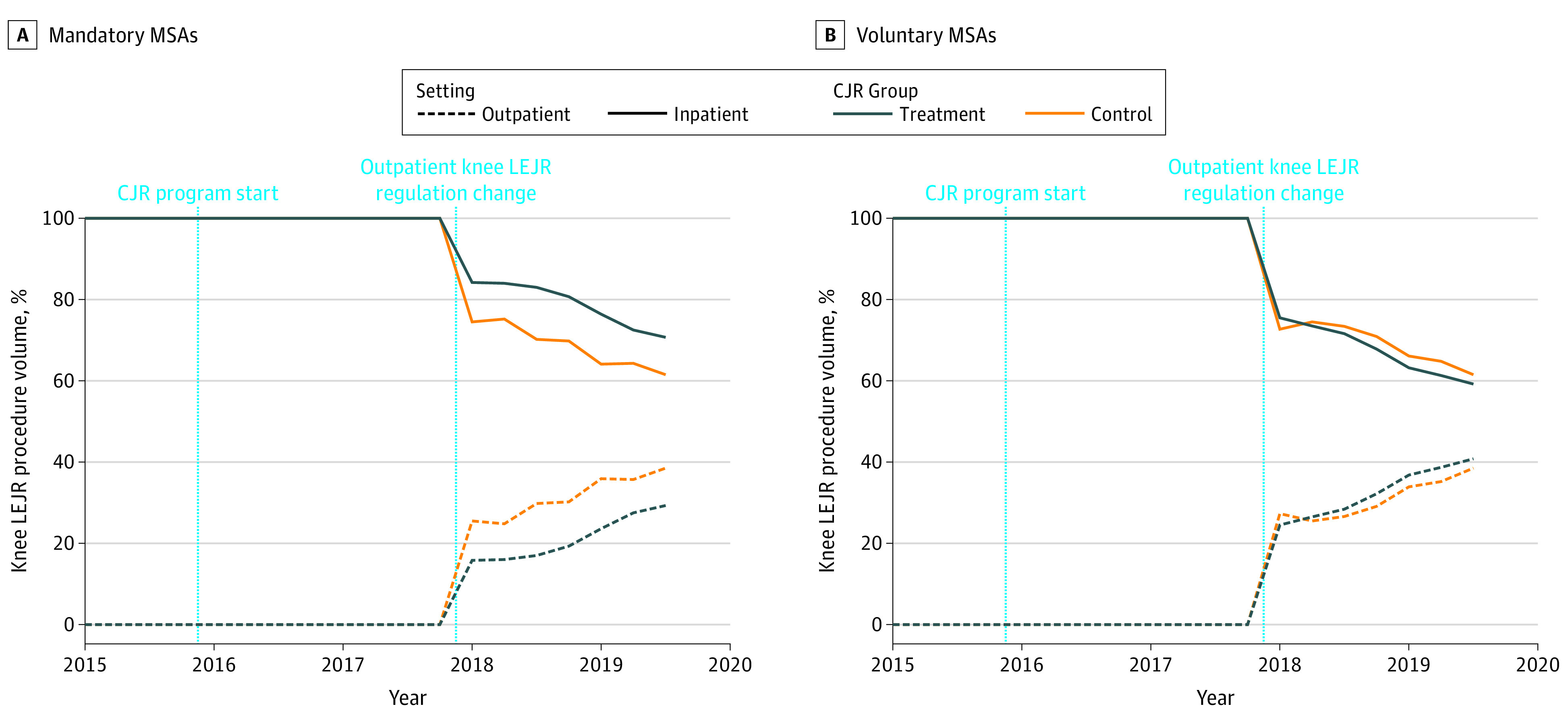

In mandatory MSAs, CJR hospitals shifted fewer knee replacements to the outpatient setting in years 3 and 4 than control hospitals (12 571 of 59 182 [21.2%] vs 21 650 of 68 722 [31.5%] of total knee LEJRs, respectively) (Figure). Compared with control hospitals, there was a small differential reduction among CJR hospitals in the fraction of patients with LEJR who were in the highest quartile of predicted spending (years 1-2, −0.3% [95% CI, −1.2% to 0.6%]; years 3-4, −1.1% [95% CI, −2.0% to −0.2%]) (Table 3). In voluntary MSAs, we did not observe any differential shift in outpatient knee volume or spending risk.

Figure. Shift of Total Knee Lower-Extremity Joint Replacement (LEJR) Volume to the Outpatient Setting in Years 3 to 4 of the Comprehensive Care for Joint Replacement (CJR) Program, Stratified by Type of Metropolitan Statistical Area (MSA).

Knee LEJR procedure volume is shown over time for mandatory MSAs (A) and voluntary MSAs (B). Total knee replacements were identified from inpatient claims for LEJR by searching their procedure codes (International Classification of Diseases, Ninth Revision, code 81.54 and International Statistical Classification of Diseases and Related Health Problems, Tenth Revision, codes starting with 0SRC or 0SRD) from 2015 through 2019, whereas outpatient total knee replacements were identified using the Current Procedural Terminology code 27447 (Access Healthcare Common Procedure Coding System) on outpatient claims starting in 2018.

Table 3. Shift in Predicted Spending Based on Sociodemographic and Clinical Characteristics of Patients Undergoing LEJR in Hospitals in Mandatory and Voluntary CJR-Participation MSAs.

| Variable | Preintervention period (2014-2015), CJR MSAs^a | Preintervention period, control MSAs^a | Preintervention period, difference^a | Differential change, years 1-2 (95% CI)^b | Differential change, years 3-4 (95% CI)^b |
|---|---|---|---|---|---|
| Mandatory MSAs | | | | | |
| Predicted total spending, $ | 31 242 | 30 294 | 947 | −58 (−264 to 147) | −175 (−399 to 49) |
| Top quartile of predicted spending, % | 29.4 | 24.9 | 4.5 | −0.3 (−1.2 to 0.6) | −1.1 (−2.0 to −0.2) |
| Voluntary MSAs | | | | | |
| Predicted total spending, $ | 29 502 | 29 310 | 192 | 63 (−77 to 204) | 12 (−129 to 154) |
| Top quartile of predicted spending, % | 21.8 | 21.2 | 0.6 | 0.3 (−0.4 to 0.9) | −0.03 (−0.7 to 0.6) |

Preintervention values are the preperiod percentage or mean. Differential change is the change from the preperiod for CJR vs control MSAs.

Abbreviations: CJR, Comprehensive Care for Joint Replacement; LEJR, lower-extremity joint replacement; MSA, metropolitan statistical area.

^a All averages and estimates incorporate probability weights (eMethods 1 in the Supplement).

^b Model estimates are adjusted only with MSA fixed effects. Errors were clustered at the MSA level.

Of the observed reduction in savings from years 1 to 2 to years 3 to 4 among mandatory MSAs in the CJR program ($606 [95% CI, $348-$864]) (model 3 in Table 4), we estimate 75% ($455 [95% CI, $137-$722]) was attributable to patient selection (final row in Table 4). We did not observe similar selection effects on savings among CJR hospitals in the voluntary MSAs.

Table 4. Estimated Savings From CJR Accounting for Different Forms of Patient Selection, Stratified by Type of MSA.

| Model and approach to account for patient selection | Adjusted for patient characteristics? | Which knee price was used? | Mandatory MSAs, years 1-2 | Mandatory MSAs, years 3-4 | Mandatory MSAs, change from years 1-2 to years 3-4 | Voluntary MSAs, years 1-2 | Voluntary MSAs, years 3-4 | Voluntary MSAs, change from years 1-2 to years 3-4 |
|---|---|---|---|---|---|---|---|---|
| (1) Not accounting for patient selection^a | No | Average inpatient | −1223 (−1809 to −638) | −1043 (−1748 to −338) | +180 (−134 to 495) | −516 (−981 to −51) | −276 (−780 to 229) | +240 (−92 to 573) |
| (2) Accounting for differential selection of patients for outpatient LEJR | No | Observed outpatient | −1226 (−1813 to −640) | −750 (−1414 to −86) | +476 (207 to 746) | −517 (−982 to −53) | −332 (−847 to 182) | +185 (−167 to 537) |
| (3) Additionally accounting for differential risk selection based on observed patient characteristics | Yes | Observed outpatient | −1194 (−1631 to −758) | −588 (−1115 to −62) | +606 (348 to 864) | −610 (−983 to −238) | −283 (−718 to 152) | +327 (40 to 615) |
| Net effect of accounting for patient selection in estimate of program effect (model 3 minus model 1 estimates)^b | NA | NA | +28 (−192 to 224) | +455 (137 to 722) | NA | −98 (−215 to 35) | −9 (−226 to 222) | NA |

Values are difference-in-differences estimates of program effect on institutional spending under each approach (95% CI), $.

Abbreviations: CJR, Comprehensive Care for Joint Replacement; CMS, Centers for Medicare & Medicaid Services; LEJR, lower-extremity joint replacement; MSA, metropolitan statistical area; NA, not applicable.

^a The counterfactual used for this analysis is analogous to the benchmarks used by CMS to calculate savings in CJR program year 4. The benchmarks in year 4 were based on historical episode spending in a hospital’s region from 2014-2016 and thus only reflected spending for inpatient LEJRs. These benchmarks were adjusted minimally for patient factors (only for whether the patient had a fracture and whether the procedure was associated with major complications).

^b CIs on the net effects before and after year 3 were obtained by bootstrapping from 1000 random draws of MSAs (mandatory or voluntary, respectively), equal to their number in treatment and control. All estimates were adjusted by MSA fixed effects, and errors were clustered at the MSA level. eMethods 2 in the Supplement on the model specification shows the full list of patient characteristics used in model 3.
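The 75% figure reported for mandatory MSAs follows from the point estimates in Table 4. The short sketch below reproduces that arithmetic only; the variable names are ours, and the bootstrapped CIs are not reproduced.

```python
# Point estimates from Table 4, mandatory MSAs (dollars per episode).
model1 = {"y12": -1223, "y34": -1043}  # no patient adjustment, inpatient price substituted
model3 = {"y12": -1194, "y34": -588}   # patient adjustment, observed outpatient price

# Net effect of accounting for patient selection in years 3-4 (model 3 minus model 1).
net_y34 = model3["y34"] - model1["y34"]

# Reduction in savings from years 1-2 to years 3-4 under model 3.
reduction = model3["y34"] - model3["y12"]

# Share of the reduction attributable to patient selection.
share = net_y34 / reduction
```

Here `net_y34` is 455, `reduction` is 606, and their ratio rounds to 0.75, matching the estimate in the text.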

Discussion

Hospitals responded to changing regulations in the CJR program in several ways that were associated with a decrease in the savings from this program. In voluntary MSAs, where CJR hospitals were given a choice of staying in the program or dropping out, higher-cost hospitals that cared for more expensive, complex patient populations preferentially dropped out. This differential dropout is particularly notable because these higher-cost CJR hospitals generated more savings per episode up to that point. In MSAs where participation remained mandatory, CJR hospitals selectively treated healthier patients and were more likely to perform knee LEJR in the inpatient setting. By the fourth year of the program, largely owing to these responses, CJR participation was no longer associated with significant savings.

Our study adds to the literature on the impact of CJR and episode-based payment models in general. Although other studies have highlighted that most CJR hospitals in voluntary MSAs dropped out of the program when given the choice in year 3,6,25 to our knowledge, our study is among the first to suggest that dropout was greater among the set of hospitals that had generated the most savings per episode and also the set of hospitals furthest above their regional average for LEJR spending. Our finding that patient selection was an issue in mandatory MSAs but not voluntary MSAs is consistent with a recent analysis of a different episode-based payment program that found little evidence of patient selection.7 Patient selection may be less of an issue under a voluntary program because clinicians can selectively refer lower-risk patients to participating hospitals and higher-risk patients to nonparticipating hospitals.24

The responses we observed were largely predictable given the design of the CJR program and changes made in its third year.11 Incorporating the average regional price into hospitals’ benchmarks appeared to strongly disadvantage hospitals with higher baseline spending before entering the program.9,26,27,28 It was simply more difficult for these hospitals to reduce their spending below the benchmark, and therefore it was not surprising that these hospitals preferentially dropped out of the program when given the choice in the third year. This is a trade-off between regional benchmarks and benchmarks based on a hospital’s own historical spending. Regional benchmarks may provide a greater incentive to decrease spending, but they also appear to promote selective participation if episode-based payments are voluntary.
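The benchmark trade-off described above can be made concrete with a small arithmetic sketch. It assumes a benchmark formed as a weighted blend of a hospital's own historical spending and the regional average. The blending weights, dollar amounts, and function name are hypothetical and are not taken from the CJR rules or from this study.

```python
def blended_benchmark(hospital_historical, regional_average, regional_weight):
    """Weighted blend of a hospital's historical spending and the regional average."""
    if not 0.0 <= regional_weight <= 1.0:
        raise ValueError("regional_weight must be between 0 and 1")
    return (regional_weight * regional_average
            + (1 - regional_weight) * hospital_historical)


# Hypothetical per-episode spending in dollars.
regional_average = 25_000
hospitals = {"high-cost hospital": 30_000, "low-cost hospital": 22_000}

for weight in (0.0, 0.5, 1.0):
    for name, historical in hospitals.items():
        benchmark = blended_benchmark(historical, regional_average, weight)
        # Positive gap: spending must fall by this much just to reach the benchmark.
        gap = historical - benchmark
        print(f"regional weight {weight:.1f}, {name}: "
              f"benchmark {benchmark:,.0f}, required reduction {gap:,.0f}")
```

Under these assumed numbers, raising the regional weight increases the reduction a high-cost hospital needs just to break even, while a low-cost hospital starts below its benchmark without changing anything, which is the asymmetry that makes dropout attractive for high-cost hospitals when participation is voluntary.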

The results of this analysis suggest that the paucity of risk adjustment for patient complexity and the exclusion of outpatient LEJR in the CJR program encourage patient risk selection. Compared with reducing spending through improved care or lower service utilization, it is probably easier for hospitals to reduce their spending through selective decisions on whom to treat and where to perform surgery. A related challenge in the design of episode-based payment models is that as care delivery evolves, these models may discourage adoption of new, more efficient models of delivery, such as outpatient knee LEJR, that do not trigger the episode payment or are not incorporated into benchmark prices. Episode-based payment models need to have the flexibility to be continually updated to mitigate this issue. In contrast, population-based payment models, such as accountable care organizations, largely avoid this problem.

These results suggest a need for caution regarding the design of new alternative payment models. Medicare recently proposed addressing some of the challenges we found by including outpatient LEJR in the model and by adjusting estimates of spending for age and chronic conditions.29 Medicare could go even further by incorporating additional variables available on beneficiary complexity into the risk adjustment model. To address issues related to selective participation, Medicare could either return to a mandatory program or change the benchmarks so that they are based to a greater degree on a hospital’s historical spending.

Limitations

Our study has limitations. Our focus was on the CJR program, which encompasses only episode-based payments for LEJR procedures, so our findings may not generalize to episode-based payments for other medical or surgical conditions. Additionally, the true influence of patient risk selection may be even greater than our estimates, as we did not observe many relevant patient characteristics that are only measurable outside of claims data (eg, functional status or social support).

Conclusions

In summary, the results of this controlled population-based study suggest that the savings observed in the first 2 years of Medicare’s episode-based payment program for LEJR dissipated by the fourth year, largely owing to institutional responses to changes in the program: hospitals selectively dropped out of the program and were selective when choosing which patients would receive LEJR and whether to perform LEJR procedures in the outpatient setting. Future episode-based payment models may be able to mitigate these behaviors by making programs mandatory, strengthening risk adjustment in benchmarking, and including flexibility to adapt to evolving clinical care.

eMethods 1. CJR Program and Impact on Study Design

eMethods 2. Details on Other Methods, Outcome Definitions and Model Specifications

eMethods 3. Comparison of Difference-in-Differences Analytic Strategy vs Analysis of the CJR Program as a Randomized Controlled Trial

eMethods 4. Other Supplemental Results

eFigure 1. CJR Voluntary and Mandatory Community Cohort Flow Diagram

eFigure 2. Exclusions Used to Create Analytic Sample

eTable 1. Risk Model Coefficients and Deconstructing the Change in Patient Risk Score in Mandatory and Voluntary MSAs by 2018-2019

eTable 2. Preperiod Annual Trend in Outcomes, 2011-2015

eTable 3. Per Capita LEJR Volume Differences in CJR vs Control MSAs after the Start of CJR

eTable 4. Sociodemographic and Clinical Characteristics of Patients Undergoing Lower-Extremity Joint Replacement Among Hospitals in Metropolitan Statistical Areas Randomized to CJR (Overall) Participation or Controls

eTable 5. Sociodemographic and Clinical Characteristics of Patients Undergoing Lower-Extremity Joint Replacement Among Hospitals in Metropolitan Statistical Areas Randomized to Mandatory Participation or Controls

eTable 6. Sociodemographic and Clinical Characteristics of Patients Undergoing Lower-Extremity Joint Replacement Among Hospitals in Metropolitan Statistical Areas Randomized to Voluntary Participation or Controls

References

- 1. Porter ME, Lee TH. The strategy that will fix health care. Accessed August 28, 2017. https://hbr.org/2013/10/the-strategy-that-will-fix-health-care

- 2. Agarwal R, Liao JM, Gupta A, Navathe AS. The impact of bundled payment on health care spending, utilization, and quality: a systematic review. Health Aff (Millwood). 2020;39(1):50-57. doi: 10.1377/hlthaff.2019.00784

- 3. Liao JM, Navathe AS, Werner RM. The impact of Medicare’s alternative payment models on the value of care. Annu Rev Public Health. 2020;41(1):551-565. doi: 10.1146/annurev-publhealth-040119-094327

- 4. Weeks WB, Fisher ES. Potential unintended effects of Medicare’s bundled payments for care improvement program. JAMA. 2019;321(1):106-107. doi: 10.1001/jama.2018.18150

- 5. Mechanic RE. Opportunities and challenges for episode-based payment. N Engl J Med. 2011;365(9):777-779. doi: 10.1056/NEJMp1105963

- 6. Kim H, Meath THA, Grunditz JI, Quiñones AR, Ibrahim SA, McConnell KJ. Characteristics of hospitals exiting the newly voluntary comprehensive care for joint replacement program. JAMA Intern Med. 2018;178(12):1715-1717. doi: 10.1001/jamainternmed.2018.4743

- 7. Navathe AS, Liao JM, Dykstra SE, et al. Association of hospital participation in a Medicare bundled payment program with volume and case mix of lower extremity joint replacement episodes. JAMA. 2018;320(9):901-910. doi: 10.1001/jama.2018.12345

- 8. Ellimoottil C, Ryan AM, Hou H, Dupree J, Hallstrom B, Miller DC. Medicare’s new bundled payment for joint replacement may penalize hospitals that treat medically complex patients. Health Aff (Millwood). 2016;35(9):1651-1657. doi: 10.1377/hlthaff.2016.0263

- 9. Rose S, Zaslavsky AM, McWilliams JM. Variation in accountable care organization spending and sensitivity to risk adjustment: implications for benchmarking. Health Aff (Millwood). 2016;35(3):440-448. doi: 10.1377/hlthaff.2015.1026

- 10. Centers for Medicare & Medicaid Services. Comprehensive care for joint replacement model. Accessed October 9, 2016. https://innovation.cms.gov/initiatives/CJR

- 11. Federal Register. Medicare program; comprehensive care for joint replacement payment model for acute care hospitals furnishing lower extremity joint replacement services. Accessed August 23, 2018. https://www.federalregister.gov/documents/2015/11/24/2015-29438/medicare-program-comprehensive-care-for-joint-replacement-payment-model-for-acute-care-hospitals

- 12. Mechanic RE. Mandatory Medicare bundled payment—is it ready for prime time? N Engl J Med. 2015;373(14):1291-1293. doi: 10.1056/NEJMp1509155

- 13. Centers for Medicare & Medicaid Services. CMS finalizes changes to the comprehensive care for joint replacement model, cancels episode payment models and cardiac rehabilitation incentive payment model. Accessed May 17, 2018. https://www.cms.gov/Newsroom/MediaReleaseDatabase/Press-releases/2017-Press-releases-items/2017-11-30.html

- 14. Hirsch R. News alert: CMS says OK to admit total knee replacements as inpatient. Accessed July 31, 2019. https://www.racmonitor.com/news-alert-cms-says-ok-to-admit-total-knee-replacements-as-inpatient

- 15. Federal Register. Medicare program; comprehensive care for joint replacement payment model for acute care hospitals furnishing lower extremity joint replacement services. Accessed April 18, 2017. https://www.federalregister.gov/documents/2015/07/14/2015-17190/medicare-program-comprehensive-care-for-joint-replacement-payment-model-for-acute-care-hospitals

- 16. Barnett ML, Wilcock A, McWilliams JM, et al. Two-year evaluation of mandatory bundled payments for joint replacement. N Engl J Med. 2019;380(3):252-262. doi: 10.1056/NEJMsa1809010

- 17. Morrill R, Cromartie J, Hart G. Rural-urban commuting code database. Accessed June 29, 2018. http://depts.washington.edu/uwruca/index.php

- 18. Centers for Medicare & Medicaid Services. Welcome to the chronic conditions data warehouse. Accessed March 25, 2015. https://www.ccwdata.org/

- 19. Finkelstein A, Ji Y, Mahoney N, Skinner J. Mandatory Medicare bundled payment program for lower extremity joint replacement and discharge to institutional postacute care: interim analysis of the first year of a 5-year randomized trial. JAMA. 2018;320(9):892-900. doi: 10.1001/jama.2018.12346

- 20. The Lewin Group. CMS comprehensive care for joint replacement model: performance year 1 evaluation report. Accessed September 5, 2018. https://innovation.cms.gov/Files/reports/cjr-firstannrpt.pdf

- 21. Haas DA, Zhang X, Kaplan RS, Song Z. Evaluation of economic and clinical outcomes under Centers for Medicare & Medicaid Services mandatory bundled payments for joint replacements. JAMA Intern Med. 2019;179(7):924-931. doi: 10.1001/jamainternmed.2019.0480

- 22. Barnett ML, Mehrotra A, Grabowski DC. Postacute care—the piggy bank for savings in alternative payment models? N Engl J Med. 2019;381(4):302-303. doi: 10.1056/NEJMp1901896

- 23. Lechner M. The estimation of causal effects by difference-in-difference methods. Found Trends Econom. 2010;4(3):165-224.

- 24. Bertrand M, Duflo E, Mullainathan S. How much should we trust differences-in-differences estimates? Q J Econ. 2004;119(1):249-275. doi: 10.1162/003355304772839588

- 25. Einav L, Finkelstein A, Ji Y, Mahoney N. Voluntary regulation: evidence from Medicare payment reform. Accessed March 1, 2021. https://www.nber.org/papers/w27223

- 26. Kim H, Meath THA, Dobbertin K, Quiñones AR, Ibrahim SA, McConnell KJ. Association of the mandatory Medicare bundled payment with joint replacement outcomes in hospitals with disadvantaged patients. JAMA Netw Open. 2019;2(11):e1914696. doi: 10.1001/jamanetworkopen.2019.14696

- 27. McWilliams JM, Landon BE, Rathi VK, Chernew ME. Getting more savings from ACOs—can the pace be pushed? N Engl J Med. 2019;380(23):2190-2192. doi: 10.1056/NEJMp1900537

- 28. Chernew ME, de Loera-Brust A, Rathi V, McWilliams JM. MSSP participation following recent rule changes: what does it tell us? Accessed October 18, 2020. https://www.healthaffairs.org/do/10.1377/hblog20191120.903566/full/

- 29. Federal Register. Medicare program: comprehensive care for joint replacement model three-year extension and changes to episode definition and pricing. Accessed June 8, 2020. https://www.federalregister.gov/documents/2020/02/24/2020-03434/medicare-program-comprehensive-care-for-joint-replacement-model-three-year-extension-and-changes-to
