Abstract

Objectives

As laboratory medicine continues to undergo digitalization and automation, clinical laboratorians will likely be confronted with the challenges associated with artificial intelligence (AI). What AI is good for, how to evaluate it, what its limitations are, and how it can be implemented are not well understood. With a survey, we aimed to evaluate the thoughts of stakeholders in laboratory medicine on the value of AI in the diagnostics space and identify anticipated challenges and solutions to introducing AI.

Methods

We conducted a web-based survey on the use of AI with participants from Roche’s Strategic Advisory Network that included key stakeholders in laboratory medicine.

Results

In total, 128 of 302 stakeholders responded to the survey. Most of the participants were medical practitioners (26%) or laboratory managers (22%). AI is currently used in the organizations of 15.6%, while 66.4% felt they might use it in the future. Most had an unsure attitude on what they would need to adopt AI in the diagnostics space. High investment costs, lack of proven clinical benefits, number of decision makers, and privacy concerns were identified as barriers to adoption. Education in the value of AI, streamlined implementation and integration into existing workflows, and research to prove clinical utility were identified as solutions needed to mainstream AI in laboratory medicine.

Conclusions

This survey demonstrates that specific knowledge of AI in the medical community is poor and that AI education is much needed. One strategy could be to implement new AI tools alongside existing tools.

Keywords: Artificial intelligence, Laboratory medicine, Diagnostics, Medical education

Key Points.

Artificial intelligence (AI) is currently used in the organizations of 15.6% of respondents, while 66.4% felt they might use AI in the future. Key uses of AI include diagnostics, reviewing patients’ risk profiles, releasing laboratory results, and financial analytics.

To implement AI, the laboratory community will need education on the technology and usage, as well as research into generating clinical evidence and addressing implementation challenges.

We believe AI in laboratory medicine can help reduce health care costs, improve access, generate better insights, and enhance the quality of care delivered to the patient.

Advances in our understanding of biology, disease, and molecular medicine have created a central role for laboratory medicine in the diagnostic workup of many, if not most, diseases. It is estimated that 70% of decisions regarding a patient’s diagnosis, treatment, and discharge are in part based on results of laboratory tests.1 Unfortunately, the main cause of medical errors in the United States is inaccurate diagnosis.2-5 The ever-increasing workload, high health care costs, and need for improved precision call for continuous optimization of the laboratory processes.6 With both health care and laboratory medicine7 transitioning into an era of big data and artificial intelligence (AI), the ability to provide accurate, readily available, and contextualized data is crucial. AI in health care is the use of complex algorithms and software to emulate human cognition in the analysis of complicated medical data generated from diagnostics, medical records, claims, clinical trials, and so on. AI algorithms can only properly function with reliable and accurate laboratory data.8 Automation and AI can fundamentally change the way medicine is practiced, as demonstrated by the recent applications in ophthalmology9 and dermatology.10 Some possible applications of AI specific to laboratory medicine are presented in Table 1. With the increasing role of laboratory medicine in many diseases, AI has the potential to improve diagnostics through more accurate detection of pathology, better laboratory workflows, improved decision support, and reduced costs, leading to higher efficiencies.8,11,12

Table 1.

Baseline Characteristics of Survey Respondents (n = 128)

| Characteristic | No. (%) |
|---|---|
| Sex | |
| Male | 80 (62.5) |
| Female | 48 (37.5) |
| Age, y | |
| 31-40 | 23 (18.0) |
| 41-50 | 41 (32.0) |
| 51-60 | 32 (25.0) |
| 61-70 | 29 (22.7) |
| 70+ | 3 (2.3) |
| AI use | |
| Currently use AI | 20 (15.6) |
| Not currently, may use AI in future | 85 (66.4) |
| Not currently and will never use AI | 8 (6.3) |
| Unsure about AI use | 15 (11.7) |
| Role | |
| Physicians | 28 (22.0) |
| Laboratory management | 24 (19.0) |
| Pathologists | 21 (16.0) |
| Executive-level management | 16 (13.0) |
| Purchasing/procurement management | 5 (4.0) |
| Information technology management | 3 (2.0) |
| Other | 10 (8.0) |
| Employment type | |
| Hospital | 38 (30.0) |
| Other | 26 (20.0) |
| Academic medical center/teaching hospitals | 14 (11.0) |
| Integrated health network | 9 (7.0) |
| Private clinics | 7 (5.0) |
| Physician laboratory offices, federal government acute care facility, reference laboratory | 13 (10.0) |

AI, artificial intelligence.

As laboratory medicine continues to undergo digitalization and automation, clinical laboratorians will likely be confronted with the challenges associated with evaluating, implementing, and validating AI algorithms, both inside and outside their laboratories. Understanding what AI is good for and where it can be applied, along with the state-of-the-art and limitations, will be useful to practicing laboratory professionals and clinicians. On the other hand, the introduction of new technologies requires willingness to change the current structure and mindset toward these technologies, which are not always well understood. Historically, there has been resistance to the adoption of new technologies in the medical community.13

With a web-based survey among stakeholders in laboratory medicine in the United States, we aimed to evaluate their current perspectives on the value of AI in the diagnostics space and identify anticipated challenges with the introduction of AI in this field, as well as resistance to introduction of this new technology in today’s practice.

Today, AI is occasionally used in laboratory medicine for enabling the effective use of resources, avoiding unnecessary tests, improving patient safety, and alerting for abnormal results.14-18 AI also has limited clinical use in molecular/genomic testing,19-21 where it accurately identifies variants and matches them to possible treatments.

Materials and Methods

Survey Development

A web-based survey on the use of AI in laboratory medicine was designed in several independent steps. First, 98 stakeholders participated in a 2-week online discussion board on AI in diagnostics in August 2019. These participants were part of Roche’s Strategic Advisory Network (SAN), a group consisting of laboratory medicine decision makers, practicing physicians and surgeons, point-of-care coordinators, anatomic pathologists, laboratory management, information technology (IT) management, and senior leadership. Roche does not know the identity of the community members to protect their privacy. The online discussion board was moderated by C-space and was used to develop questions and gain insights on potentially important topics for the survey. Open-ended as well as multiple-choice questions were formulated based on content of the discussion board.

Next, two 1-hour online group chats were organized on October 2 and 3, 2019, to discuss these questions, fine-tune their phrasing, and refine the answer options for the multiple-choice questions. In these group chats, a total of 11 practitioners in laboratory medicine were asked to answer the initial survey questions one at a time, after which they could comment on each other’s answers and discuss their opinions on and interpretations of the questions.

This thoroughly discussed survey was fielded to a group of 302 laboratory medicine practitioners who are part of SAN via email. These individuals were both available for completing surveys and in a position to decide on embracing or refraining from using technologies such as AI in their respective organizations. The survey was available from October 21 until November 1, 2019. The data were collected using a software platform called Confirmit, and all participants gave informed consent for their input to be used for research purposes.

Finally, as there are multiple different definitions of AI, for the sake of the survey we defined AI as follows: “Artificial intelligence (AI) in health care is the use of complex algorithms and software to emulate human cognition in the analysis of complicated medical data generated from diagnostics, medical records, claims, clinical trials, and so on. AI is truly the ability for computer algorithms to approximate conclusions without direct human input.”

Questions

In the survey, we posed 21 questions that ranged from collecting demographic information to asking whether the respondents used AI in their organizations, what kind of improvements they would like to see in the current use of AI, how valuable they think AI will be in their practice, and what challenges they feel exist. A full list of all the questions can be found in the Supplement (all supplemental material can be found at American Journal of Clinical Pathology online).

Data Analysis

Quantitative Data

Categorical data were analyzed using a Pearson χ² test, considering a P value less than .05 to be statistically significant. The perceived value of AI was compared for different age groups and different experience levels with AI. The analyses were performed in Microsoft Excel. Data from the multiple-choice questions were presented as percentages per category.

The number of participants who could be reached was highly dependent on the number of available advisers in the SAN network. With 302 available advisers and an acceptable response rate of above 40%, we got responses from 128 participants. The study was powered to detect a 20% difference across subgroups in how they valued AI.

Qualitative Data

An inductive approach22 of direct content analysis was used to analyze the open-ended questions. First, two researchers, B.S. (psychologist) and M.S. (internal medicine doctor), independently screened the answers and drafted a rough framework of themes. After consensus on the overarching themes, answers were independently coded with this framework by both researchers. Then conflicts were resolved by consensus to account for different interpretations of the answers. Coding was performed and bar charts were created in Excel.

Results

Demographics

The survey was fielded to 302 stakeholders in laboratory medicine, of whom 128 (42%) responded. The modal age group was 41 to 50 years (32.0%), while 23 (18.0%) of the respondents were younger than 41 years. The three most common roles among participants were physicians, laboratory managers, and pathologists. See Table 1 for further details on demographic information.

Qualitative Analysis

Based on the data, six main themes were derived (attitude, quality of care, organizational value, data analysis, prerequisites, and education). The “attitude” theme was further categorized into three subthemes (positive, unsure, and negative). To prevent losing valuable information, multiple themes could be assigned to an answer. The specific content of these themes is presented in Table 2, as well as in subsequent paragraphs, along with quotes from the survey participants. It should be noted that the attitude theme could be interpreted as being a separate sentiment analysis. However, this is not the case. Attitude was merely a theme in which many answers could be categorized according to both researchers.

Table 2.

List of Six Themes Derived From the Survey

| Theme | Examples of Content |
|---|---|
| Attitude—positive | Respondent showed a positive attitude toward AI rather than giving a really specific answer to the question. |
| Attitude—negative | Respondent showed a negative attitude toward AI rather than giving a really specific answer to the question. |
| Attitude—unsure | Respondent generally was not sure about the influence of AI in a certain area. |
| Quality of care | Accessibility of care, accuracy of diagnoses, and early recognition of certain disease states |
| Organizational value | Providing quick results, reducing redundancy, and resource management |
| Data analysis | Analyzing large data sets (big data) |
| Prerequisites | Workable user interface, IT support, and better software |
| Education | Education specific to tools and AI in general |

AI, artificial intelligence; IT, information technology.

In 173 (73%) of the 237 coded cases, there was an initial agreement on the codes to be assigned. In 64 (27%) cases, when there was a discrepancy between codes assigned by the two different coders, an extensive consensus procedure was followed. This resulted in a 100% agreement between coders after this consensus procedure.

Current Uses of AI in Laboratory Medicine

AI is currently used in the organizations of 20 (15.6%; 95% confidence interval [CI], 9.8%-23.1%) of the participants, while 85 (66.4%; 95% CI, 57.5%-74.5%) felt they might use it in the future and 8 (6.3%; 95% CI, 2.7%-11.9%) felt that they would never use AI. Respondents who have AI in their practice use it for diagnosing diseases from images (30%), reviewing patients’ risk profiles for certain conditions (40%), preempting rapid response solutions (30%), and automatically releasing laboratory results and financial analytics (10%). Examples of specific use cases include AI to perform digital cell analysis for peripheral blood, analyze medical record laboratory data to determine which patients are at risk of infection, improve patients’ outcomes and length of stays and readmissions, or preempt rapid response situations in hospitals and automated sepsis alerts to identify patients early.
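The intervals reported above are consistent with exact (Clopper-Pearson) binomial confidence intervals, although the article does not state which method was used. The following Python sketch, written under that assumption, recomputes the interval for the 20 of 128 respondents whose organizations currently use AI. The function names `binom_cdf`, `_bisect`, and `clopper_pearson` are illustrative only and are not part of the original analysis, which was performed in Microsoft Excel.

```python
from math import comb


def binom_cdf(k, n, p):
    """Return P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _bisect(func, target, increasing):
    """Find p in [0, 1] with func(p) close to target, for a monotone func."""
    lo, hi = 0.0, 1.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if (func(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x, n, alpha=0.05):
    """Exact two-sided (1 - alpha) confidence interval for the proportion x/n."""
    # Lower limit solves P(X >= x | p) = alpha/2; this tail grows with p.
    lower = 0.0 if x == 0 else _bisect(
        lambda p: 1 - binom_cdf(x - 1, n, p), alpha / 2, increasing=True)
    # Upper limit solves P(X <= x | p) = alpha/2; this tail shrinks with p.
    upper = 1.0 if x == n else _bisect(
        lambda p: binom_cdf(x, n, p), alpha / 2, increasing=False)
    return lower, upper


low, high = clopper_pearson(20, 128)  # respondents currently using AI
print(f"{20 / 128:.1%} (95% CI, {low:.1%}-{high:.1%})")
```

If the exact method is indeed what was used, the printed interval should land close to the reported 9.8%-23.1%; an approximate method such as the Wilson interval would give a slightly narrower range.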

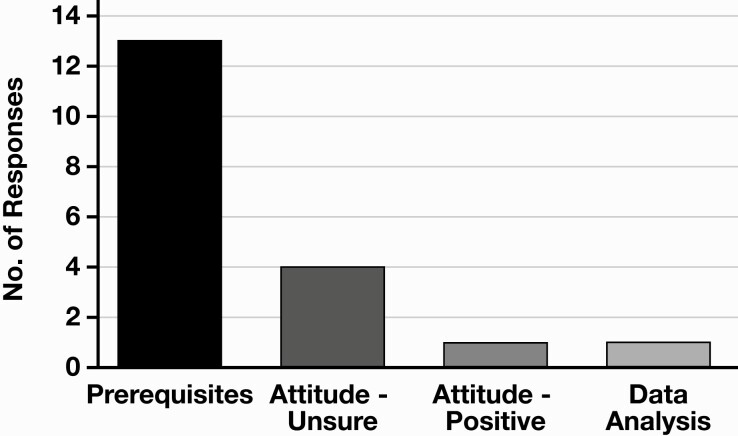

When asked how respondents felt that these current applications could be improved, most of them answered that certain prerequisites (eg, user interface, IT support, and better software) were needed. All 20 participants who currently use AI answered this question; see Figure 1 for counts against themes. For example, respondent 119 answered, “reduce the number of pop-ups in the EMR [electronic medical record],” and respondent 70 said, “We use AI for chatbots about common questions for diagnostics. The AI chatbots are not very intelligent. Need to make AI smarter.”

Figure 1.

Answers to the survey question “How can current AI applications in your organization be improved?”—categorized as counts per theme. AI, artificial intelligence.

Value of AI in Practice

Regarding the potential use of AI in the diagnostics space, 90 (81%; 95% CI, 72.6%-87.9%) of the 111 participants who answered this question felt AI will be valuable in their organization within the next 5 years, including 20 (18%; 95% CI, 11.4%-26.5%) who expected it to be extremely valuable. Twenty-one (19%; 95% CI, 12.1%-27.5%) of the respondents felt that AI will not be valuable in their organization within the next 5 years. Data on this question were missing for 17 participants. To examine whether the results differed between subgroups of respondents, we dichotomized the answers into finding AI valuable (including extremely valuable, very valuable, and somewhat valuable; n = 90) or not valuable at all (n = 21). A χ² test showed no significant difference in how participants in the different age groups valued AI in the diagnostics space (χ² = 5.0947 [4 degrees of freedom]; P = .28). Also, there was no significant difference in how AI was valued between respondents who currently use AI in their practice (n = 17) and respondents who have not used it yet (χ² = 0.6698 [1 degree of freedom]; P = .41). Table 3 shows the number of respondents who found AI valuable or not valuable in the different subgroups.

Table 3.

Categorized Subgroup Results for Finding AI Valuable or Not Valuable in the Diagnostics Space^a

| Characteristic | Valuable, No. (%) | Not Valuable, No. (%) |
|---|---|---|
| Age, y | | |
| 31-40 | 17 (85) | 3 (15) |
| 41-50 | 22 (69) | 10 (31) |
| 51-60 | 25 (83) | 5 (17) |
| 61-70 | 23 (88) | 3 (12) |
| 70+ | 3 (100) | 0 (0) |
| Experience | | |
| Use AI | 15 (88) | 2 (12) |
| Do not use AI | 75 (80) | 19 (20) |

AI, artificial intelligence.

^a Percentage calculated row-wise.
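The two χ² tests reported in the text can be retraced from the counts in Table 3. The Python sketch below is an illustration only: the original analysis was performed in Microsoft Excel, the function names `pearson_chi2` and `chi2_sf` are hypothetical, and the absence of a continuity correction is an assumption, since the article does not say whether one was applied.

```python
from math import erfc, exp, gamma, sqrt


def pearson_chi2(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    z = x / 2.0
    # Start from Q(1, z) for even df or Q(1/2, z) for odd df, then apply
    # the recurrence Q(a + 1, z) = Q(a, z) + z**a * exp(-z) / gamma(a + 1).
    a, q = (1.0, exp(-z)) if df % 2 == 0 else (0.5, erfc(sqrt(z)))
    while a < df / 2.0:
        q += z**a * exp(-z) / gamma(a + 1.0)
        a += 1.0
    return q


# Rows are [valuable, not valuable], copied from Table 3.
by_age = [[17, 3], [22, 10], [25, 5], [23, 3], [3, 0]]
by_experience = [[15, 2], [75, 19]]

for label, table in (("age", by_age), ("experience", by_experience)):
    stat, df = pearson_chi2(table)
    print(f"{label}: chi2 = {stat:.4f}, df = {df}, P = {chi2_sf(stat, df):.2f}")
```

Note that several expected counts in the age table are below 5 (for example, in the 70+ row), so the χ² approximation should be read with caution for that comparison.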

Valuable

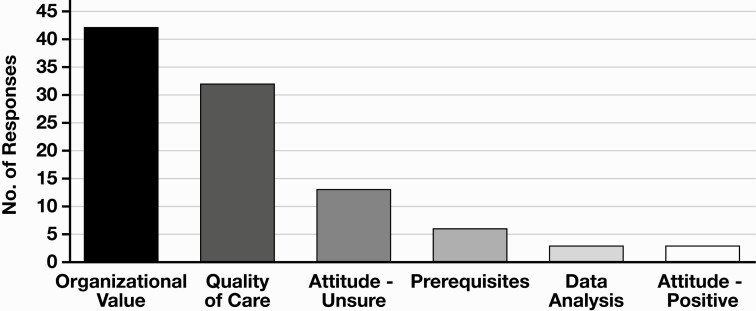

Respondents were also given the opportunity to elaborate on why they thought AI would or would not be valuable in their organizations. Many reasons were given, and all were coded by theme, as shown in Figure 2. Most answers indicated that AI could be valuable because of the “organizational value” (eg, quicker results, reduced redundancy, and resource management). As an example, respondent 87 answered, “it could make the lab more efficient by streamlining work flow.” Another frequently reported theme was “quality of care” (eg, accessibility of care, accuracy, and early recognition). This was illustrated by respondent 100, who said, “might help in keeping patients informed of test results/appointments/follow up more efficiently,” and by respondent 47, who believed that AI “could have some useful clinical algorithms to identify problems before they are known by humans, but the technology is still in early development.” A substantial share of respondents who thought AI would be valuable were not sure why (“attitude—unsure”), as suggested by respondent 68: “I’m not entirely sure, I just know something is there!”

Figure 2.

Answers to the survey question “Why will AI be valuable in your organization?”—categorized as counts per theme. AI, artificial intelligence.

Not Valuable

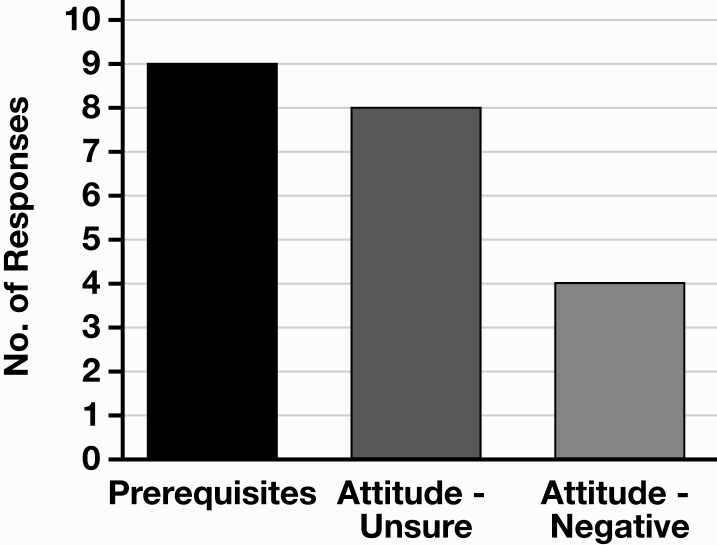

The 19% (95% CI, 12.1%-27.5%) of respondents who did not consider AI to be valuable in their organizations in the next 5 years had more uniform responses. The answers were largely split between the theme “prerequisites” (eg, budget and strategic plan) and an unsure attitude. See Figure 3 for more details. The missing prerequisites, for example, were described by respondent 47: “very expensive and we have very limited capital dollars that we need to use to refresh old technology,” and respondent 106 said, “it’s not in our strategic plan to implement AI at this time.” The unsure attitude toward AI was summed up by respondent 75: “I’m not sure about the use of AI.”

Figure 3.

Answers to the survey question “Why will AI not be valuable in your organization?”—categorized as counts per theme. AI, artificial intelligence.

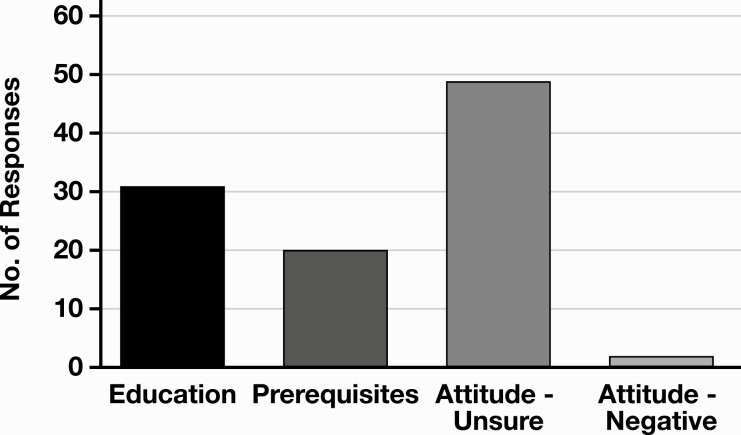

Requirements for Implementing AI

Participants were asked what they would need to feel comfortable with using AI in the diagnostics space. Most respondents had an “unsure attitude” toward what they needed most to adopt AI in their practice. For example, respondent 69 said, “this seems like too forward thinking of a question that we aren’t yet prepared to answer.” Others felt they needed education (eg, specific to tools and on AI in general). Respondent 40 answered “specific training to device” and respondent 46 said “AI short course training.” Most of the remaining group felt they needed various prerequisites (eg, support systems, certifications, and evidence of benefits) to feel comfortable adopting AI in their practice. Data were missing for 23 of the participants; see Figure 4 for the counts per theme.

Figure 4.

Answers to the survey question “What requirements are necessary in order for you to feel more comfortable with adopting AI?”—categorized as counts per theme. AI, artificial intelligence.

In the next question, participants were asked to specify how they would like to be trained to use AI. Twenty-three of 105 participants (22%; 95% CI, 14.4%-31.0%) felt that they were not able to say how they should be educated on these new technologies.

Finally, we asked participants to select persons within their organizations who they felt should be involved in the selection of AI equipment. Up to 10 individuals across an organization were identified who could be involved in evaluating a potential AI diagnostic solution. Respondent 56 said, “Medical staff committees and physicians and mid-level providers that use AI, utilization review staff that monitor provider performance, IT department and leadership that maintain AI software.”

Discussion

With this survey, we aimed to evaluate the thoughts of stakeholders in laboratory medicine on the value of AI in the diagnostics space and identify anticipated challenges with the introduction of AI in this field. About four in five respondents believed AI will be valuable in their organization within the next 5 years, mostly because of organizational and patient-oriented benefits. One in five respondents did not see any value in AI, often because prerequisites such as an implementation budget were lacking or because AI was not part of an overall strategic initiative. This may reflect a bias that management has toward AI.

The Value of AI

The quantitative results in this survey are similar to those found in surveys on this subject among other health care professionals. Similar to our findings, surveys on AI for pathologists,23 medical students,24 physicians,25 and radiologists26 all found that about 80% of participants believe AI will be influential or valuable in their practice in the upcoming years. Interestingly enough, a 2019 survey shows that 84% of the general population in the United States thinks that AI will be at the center of the next technological revolution.27

All surveys on this subject in the medical community seem to show similar results regarding the perceived value of AI, independent of age or experience with AI in clinical practice. The fact that these results also overlap with the value of AI as perceived by the general population raises concerns that specific knowledge on this subject has not yet penetrated the medical community at large and that the surveys on this subject just reflect the ongoing AI hype. Our survey adds to this concern by showing that many respondents are unsure about why AI would or would not be valuable, what is needed to comfortably adopt AI, or how to be educated on AI.

There certainly seems to be a disconnect between the more positive views of information experts on AI and views of the medical community.28 To get all the benefits AI presents, while keeping its drawbacks to a minimum, drastic changes are needed in the medical community. There is a need for general AI training for the various health care stakeholders, as identified in a recent publication on the need to introduce AI training in medical education.29 In the meantime, training on new AI tools should also be the responsibility of the companies that provide the algorithms, through extensive web-based training along with on-site hands-on training.

Health Care Costs

Another highlight from this survey was the potential of AI to target high health care expenditure, since it can reduce and replace repetitive manual labor. A recent study has shown that AI can help reduce waste in the US health care system, which was estimated at $760 billion to $935 billion in 2019.30 Respondents believe AI can make the diagnostics process more efficient and decrease costs.1 For example, the potential to safely reduce the number of laboratory tests ordered or the frequency of ordering repeat tests is illustrated by a quote from respondent 94: “Alert me to the fact that a lab test I ordered was already completed at another hospital system in the past week.”

Impact on Jobs

Finally, we learned from the discussion board conducted before the survey was developed that advisers know AI is rapidly increasing in importance and value and want it to evolve their roles rather than replace them.31 “AI will become an integral concept for health care. Whether diagnosis or process improvement in medicine, AI will impact the industry. For personalized medicine and improving diagnostic accuracy AI will drive decision making in the hands of providers,” said respondent 12, an executive at a large integrated health network. Laboratory managers similarly think that AI could create efficiencies that expedite their workflows but want to ensure that they are still in control. Respondent 31 believes, “AI needs to be used in the right spaces and not to eliminate med techs but to supplement them.”

Implementation Strategies

In this survey, 19% of respondents did not see the added value of implementing AI in laboratory medicine, partially because of the high initial investment costs. This will be a limiting factor as long as the return on investment and clinical benefits of these tools are not well understood. A recent narrative review on the clinical applications of AI for sepsis validates this idea by identifying that a large gap still remains between the development of AI algorithms and their clinical implementation.32 The question remains whether this gap between development and clinical implementation might be caused by the resistance to implementing new technologies. Unfortunately, this can hold back research on this subject and thereby delay the gathering of evidence on whether AI tools can be beneficial and cost-effective in clinical practice on a large scale.

Although it was not mentioned in the survey itself, an interesting strategy to implement AI in laboratory medicine was discussed in one of the group chats that was used to shape the final survey. One of the participants disclosed that in their hospital, a new AI tool was introduced alongside an existing tool that was used in routine clinical practice. The old tool was still used but as a backup for when the AI tool failed. Practitioners were encouraged to try the AI tool but could choose either of the available options. They gradually got familiar with the AI tool and could see the added value firsthand. They ended up switching to the AI tool completely. This illustrates a viable way to integrate AI tools in health care. Although more expensive, this provides an opportunity to compare these tools in practice and allow the practitioners to feel comfortable with the tool before having to rely on it completely. See Table 4 for our key recommendations.

Table 4.

Key Recommendations for Implementing AI in Laboratory Medicine

| Area | Recommendation |
|---|---|
| Education | Need for general AI training in medical education; an approach has been proposed29 |
| Implementation | Implement new AI tools alongside current tools to give practitioners time to get comfortable and see benefits firsthand, albeit suggested by only one respondent |
| Research | Research on AI in laboratory medicine should focus on generating clinical evidence of benefits and implementation |

AI, artificial intelligence.

Patient Viewpoint

The overarching goal of implementing AI in clinical practice is to benefit the patient. Therefore, the patient’s perspective should also be discussed. One of the respondents posed an interesting question in the online group chat: “Should the patients be informed that some of the decisions are being recommended by AI?” Another question is whether we should inform patients when an AI recommendation is not followed. Unfortunately, this burdens the patient, who would then have to choose between the physician and the computer. Many algorithms are already being used in medicine, like the YEARS criteria33 for pulmonary embolisms. Their role in the diagnostic process is rarely explained to or discussed with the patient, as only trained physicians can interpret the results of these algorithms. We therefore believe that a similar approach might be best when using more advanced algorithms, in which explainability and interpretation are an even larger problem. These tools and algorithms are an aid to complement the health care practitioners who are eventually responsible for the diagnostic process and decision making. Finally, providers will need to know the details of the algorithms they use to make decisions.

Strengths of the Survey

We addressed a target population of participants who are currently in a position to influence organizational policies to either embrace new technologies or refrain from using them in their laboratories. Any specific intervention to encourage the introduction of AI in the diagnostics space should be tailored to such a population of decision makers. Another strength is that the results were independently analyzed by two researchers with different backgrounds, thereby minimizing the chance of interpretation bias. Finally, the questions were extensively scrutinized in the initial discussion board and group chats prior to fielding the final survey.

Limitations of the Survey

The participants did not represent the entire population of practitioners who will be using AI in a diagnostic setting. We cannot generalize these findings to all laboratory medicine practitioners across multiple types of settings. Finally, the study population (n = 128) was relatively small for quantitative analyses, perhaps causing the nonsignificance of the χ² tests. Only a large difference in how AI was valued between groups would have shown significant results in the quantitative analysis.

Conclusions

This survey shows that many stakeholders in laboratory medicine think that AI will be valuable to them in the near future, mostly given the “organizational value” and expected improvements in “quality of care,” although vital prerequisites such as support systems, strategic plans, and budgets need to be provided. The overall response to this and other similar surveys raises the concern that specific knowledge on AI in the medical community at large is still poor. AI education in the medical community is much needed. As suggested by one respondent, one strategy could be to implement new AI tools alongside existing tools, so that practitioners can feel comfortable with the new tools and experience their added value in practice firsthand while awaiting further research studies on the clinical evidence, implementation, and benefits of AI.

Supplementary Material

Roche funded the C-space consultancy.

Ketan Paranjape wrote this article as part of his PhD studies. He is a vice president at Roche. There is no conflict of interest with his employment at Roche.

Contributor Information

Ketan Paranjape, Amsterdam UMC.

Michiel Schinkel, Section Acute Medicine, Department of Internal Medicine, Amsterdam UMC.

Richard D Hammer, Department of Pathology and Anatomical Sciences, University of Missouri School of Medicine, Columbia.

Bo Schouten, Amsterdam UMC; Department of Public and Occupational Health, Amsterdam Public Health Research Institute, Amsterdam, The Netherlands.

R S Nannan Panday, Section Acute Medicine, Department of Internal Medicine, Amsterdam UMC.

Paul W G Elbers, Department of Intensive Care Medicine, Amsterdam Medical Data Science, Amsterdam Cardiovascular Science, Amsterdam Infection and Immunity Institute, Amsterdam UMC.

Mark H H Kramer, Board of Directors, Amsterdam UMC, Vrije Universiteit, Amsterdam, The Netherlands.

Prabath Nanayakkara, Section Acute Medicine, Department of Internal Medicine, Amsterdam UMC.

References

- 1. Badrick T. Evidence-based laboratory medicine. Clin Biochem Rev. 2013;34:43-46.

- 2. Makary MA, Daniel M. Medical error—the third leading cause of death in the US. BMJ. 2016;353:i2139.

- 3. Institute of Medicine Committee on Quality of Health Care in America. Kohn LT, Corrigan JM, Donaldson MS, eds. To Err Is Human: Building a Safer Health System. Washington, DC: National Academies Press; 2000.

- 4. Brennan TA, Leape LL, Laird NM, et al. Incidence of adverse events and negligence in hospitalized patients. N Engl J Med. 1991;324:370-376.

- 5. Leape LL, Brennan TA, Laird N, et al. The nature of adverse events in hospitalized patients: results of the Harvard Medical Practice Study II. N Engl J Med. 1991;324:377-384.

- 6. Naugler C, Church DL. Automation and artificial intelligence in the clinical laboratory. Crit Rev Clin Lab Sci. 2019;56:98-110.

- 7. Tizhoosh HR, Pantanowitz L. Artificial intelligence and digital pathology: challenges and opportunities. J Pathol Inform. 2018;9:38.

- 8. Cabitza F, Banfi G. Machine learning in laboratory medicine: waiting for the flood? Clin Chem Lab Med. 2018;56:516-524.

- 9. Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402-2410.

- 10. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115-118.

- 11. Zhang Z. The role of big-data in clinical studies in laboratory medicine. J Lab Precis Med. 2017;2:34.

- 12. Durant T. Machine learning and laboratory medicine: now and the road ahead. 2019. https://www.aacc.org/publications/cln/articles/2019/march/machine-learning-and-laboratory-medicine-now-and-the-road-ahead. Accessed April 17, 2020.

- 13. Safi S, Thiessen T, Schmailzl KJ. Acceptance and resistance of new digital technologies in medicine: qualitative study. JMIR Res Protoc. 2018;7:e11072.

- 14. Cismondi F, Celi LA, Fialho AS, et al. Reducing unnecessary lab testing in the ICU with artificial intelligence. Int J Med Inform. 2013;82:345-358.

- 15. Gunčar G, Kukar M, Notar M, et al. An application of machine learning to haematological diagnosis. Sci Rep. 2018;8:411.

- 16. Gruson D, Helleputte T, Rousseau P, et al. Data science, artificial intelligence, and machine learning: opportunities for laboratory medicine and the value of positive regulation. Clin Biochem. 2019;69:1-7.

- 17. Li L, Georgiou A, Vecellio E, et al. The effect of laboratory testing on emergency department length of stay: a multihospital longitudinal study applying a cross-classified random-effect modeling approach. Acad Emerg Med. 2015;22:38-46.

- 18. Garcia E, Kundu I, Ali A, et al. The American Society for Clinical Pathology’s 2016-2017 vacancy survey of medical laboratories in the United States. Am J Clin Pathol. 2018;149:387-400.

- 19. Xu J, Yang P, Xue S, et al. Translating cancer genomics into precision medicine with artificial intelligence: applications, challenges and future perspectives. Hum Genet. 2019;138:109-124.

- 20. Serag A, Ion-Margineanu A, Qureshi H, et al. Translational AI and deep learning in diagnostic pathology. Front Med (Lausanne). 2019;6:185.

- 21. Dias R, Torkamani A. Artificial intelligence in clinical and genomic diagnostics. Genome Med. 2019;11:70.

- 22. Thomas DR. A general inductive approach for analyzing qualitative evaluation data. Am J Eval. 2006;27:237-246.

- 23. Sarwar S, Dent A, Faust K, et al. Physician perspectives on integration of artificial intelligence into diagnostic pathology. NPJ Digit Med. 2019;2:28.

- 24. Pinto Dos Santos D, Giese D, Brodehl S, et al. Medical students’ attitude towards artificial intelligence: a multicentre survey. Eur Radiol. 2019;29:1640-1646.

- 25. Oh S, Kim JH, Choi SW, et al. Physician confidence in artificial intelligence: an online mobile survey. J Med Internet Res. 2019;21:e12422.

- 26. Collado-Mesa F, Alvarez E, Arheart K. The role of artificial intelligence in diagnostic radiology: a survey at a single radiology residency training program. J Am Coll Radiol. 2018;15:1753-1757.

- 27. Edelman. 2019 Artificial Intelligence Survey. https://www.edelman.com/research/2019-artificial-intelligence-survey. Accessed December 22, 2019.

- 28. Blease C, Bernstein MH, Gaab J, et al. Computerization and the future of primary care: a survey of general practitioners in the UK. PLoS One. 2018;13:e0207418.

- 29. Paranjape K, Schinkel M, Nannan Panday R, et al. Introducing artificial intelligence training in medical education. JMIR Med Educ. 2019;5:e16048.

- 30. Shrank WH, Rogstad TL, Parekh N. Waste in the US health care system. JAMA. 2019;322:1501.

- 31. Obermeyer Z, Emanuel EJ. Predicting the future—big data, machine learning, and clinical medicine. N Engl J Med. 2016;375:1216-1219.

- 32. Schinkel M, Paranjape K, Panday RSN, et al. Clinical applications of artificial intelligence in sepsis: a narrative review. Comput Biol Med. 2019;115:103488.

- 33. van der Hulle T, Cheung WY, Kooij S, et al; YEARS Study Group. Simplified diagnostic management of suspected pulmonary embolism (the YEARS study): a prospective, multicentre, cohort study. Lancet. 2017;390:289-297.

- 34. Yuan C, Ming C, Chengjin H. UrineCART, a machine learning method for establishment of review rules based on UF-1000i flow cytometry and dipstick or reflectance photometer. Clin Chem Lab Med. 2012;50:2155-2161.

- 35. Putin E, Mamoshina P, Aliper A, et al. Deep biomarkers of human aging: application of deep neural networks to biomarker development. Aging (Albany NY). 2017;8:1021-1033.

- 36. Razavian N, Blecker S, Schmidt AM, et al. Population-level prediction of type 2 diabetes from claims data and analysis of risk factors. Big Data. 2015;3:277-287.

- 37. Nelson DW, Rudehill A, MacCallum RM, et al. Multivariate outcome prediction in traumatic brain injury with focus on laboratory values. J Neurotrauma. 2012;29:2613-2624.

- 38. Lin C, Karlson EW, Canhao H, et al. Automatic prediction of rheumatoid arthritis disease activity from the electronic medical records. PLoS One. 2013;8:e69932.

- 39. Liu KE, Lo CL, Hu YH. Improvement of adequate use of warfarin for the elderly using decision tree-based approaches. Methods Inf Med. 2014;53:47-53.
