Abstract

This scoping review mapped current knowledge about how usability has been applied in the evaluation of blended learning programs within health professions education. Across 80 studies, usability was explicitly mentioned once but always indirectly evaluated. A conceptual framework was developed, providing a foundation for future instruments to evaluate usability in this context.

Supplementary Information

The online version contains supplementary material available at 10.1007/s40670-021-01295-x.

Keywords: Blended learning, Evaluation, Health professions education, Scoping review, Usability

Introduction

Blended learning is a pedagogical approach that combines face-to-face teaching with information technology [1–3]. Over the last decade, blended learning programs (BLPs) have been widely implemented in institutions of higher education worldwide, and particularly in the field of health professions education (HPE) [4–7]. In light of the COVID-19 global pandemic, institutions are ramping up their use of BLPs or transitioning to blended and remote learning formats if they have not already done so [8–10]. Discussions are currently under way across institutions of higher education to highlight the potential of BLPs and the need to keep and refine them even after this pandemic dissipates [11, 12].

Several studies indicate that BLPs are highly effective in providing opportunities for meaningful learning as they enable learners to tailor their educational experiences according to their needs and objectives [1, 5, 13–19]. BLPs enable learners to control the content, sequence, pace, and time of their learning [5, 18]. Concurrently, BLPs empower educators to effectively guide and monitor learner progress through a learning management system (LMS). These systems allow educators to accurately identify where learners are in relation to the course content and identify potential issues learners may have while progressing through the course [2, 6, 7, 13, 14, 20, 21]. Furthermore, BLPs provide a cost-saving potential for educational institutions in the long run [22]. These advantages of BLPs make them well suited for adult learning [2, 18, 23], especially amidst the current global state in which learners are unable to attend traditional classrooms [11, 12].

However, difficulties arise in adopting BLPs into educational systems [24, 25]. Implementation of BLPs requires a large initial investment in faculty training, time, and money [18]. It appears necessary to rigorously evaluate both the technological platforms and face-to-face educational aspects of BLPs prior to their widespread implementation [1, 18]. Here, usability appears to be one of the most important dimensions of a BLP that needs to be considered and evaluated [26–28].

Usability is a multidimensional concept defined by the International Organization for Standardization (ISO) as the “extent to which a system, product or service can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use” [29]. Although usability is often understood simply as “the ease-of-use” of a technology or technological system, the ISO clearly stresses that this idea of usability does not reflect the comprehensive nature of this multidimensional concept.

The ISO and other scholars indicate that usability has three major components: effectiveness, efficiency, and satisfaction [27–34]. By measuring these components, creators of educational programs can understand whether their program is well designed and well received by users [15, 26], whether it facilitates learning [22, 26], and how it can be improved for the future [20]. Also, the ISO framework indicates that usability can be applied to both the technological (i.e., e-learning platform) and service (i.e., face-to-face component and overall content) aspects of a system, thus making it ideal for the evaluation of BLPs [29–31].

Although usability is a highly researched and heavily defined concept in the field of integrated technology [35, 36], its adoption in relation to BLPs appears unclear [26, 34, 37–39]. To date, no study addressing the conceptualization and evaluation of usability in BLPs within HPE has been conducted. As such, the purpose of this study was to map current knowledge about and develop a foundational understanding of how usability has been conceptualized and evaluated in the context of BLPs within HPE.

Method

Scoping Review

A scoping review guided by Arksey and O’Malley’s five-stage framework was conducted iteratively over a 2-year period to identify all relevant studies published between August 6, 1991, and August 4, 2020 [40]. Reporting was checked against the PRISMA-ScR checklist [41]. This methodology was used as it is well suited to answering broad and exploratory research questions that focus on examining the extent and nature of a specified body of research and on identifying existing gaps in the literature [40–44]. Note that this review methodology does not seek to evaluate the quality of evidence, which is a task more akin to the systematic review methodology [40].

Academic Librarian Involvement

The review question, search strategy, and eligibility criteria pertaining to this review were developed in collaboration with three academic librarians at McGill University: one is an expert in usability, a second is an expert in conducting literature searches with the concepts of medical and health professions education, and a third is an expert in conducting literature searches with the concepts of family medicine and primary care. A fourth librarian at McGill University assisted in guiding the scoping review process.

Step 1: Identify the Research Question

How has the concept of usability been defined and evaluated in BLPs within HPE?

Step 2: Identifying Relevant Studies

Scopus and ERIC were searched [45, 46]. Under the guidance of the librarians, it was decided that searching these two databases with a broad strategy would be sufficient for answering the research question. This is because Scopus is one of the largest global interdisciplinary databases that retain studies conducted in the field of health professions education and technology [46], and ERIC is one of the largest global education databases [45]. See Supplementary Appendices 1 and 2 for the full search strategies.

Step 3: Study Selection

All articles were imported into EndNote X8. The first author screened all titles, abstracts, and full-text articles using a questionnaire guide (Supplementary Appendix 3). The fourth and fifth authors functioned as second reviewers, each completing a portion of the title, abstract, and full-text screening using the questionnaire guide. The third author validated 36% of the included studies to ensure that they matched the eligibility criteria.

Inclusion Criteria

Studies had to empirically evaluate both the online and in-person aspects of a BLP within HPE. Learners must have been the primary evaluators for the BLP.

This study adopts the definition of blended learning established by Watson, where BLPs must utilize asynchronous online learning methods to deliver approximately 30 to 79% of the educational content [21]. The technological component must have been accessible outside of the typical teaching environment (e.g., at home). Studies must describe the synchronous and asynchronous components of their BLP or provide some indication about the number of hours of learning that each component of the BLP took. Studies must indicate that learner use of online material was tracked (e.g., an LMS was utilized).

For the definition of HPE, we refer to any undergraduate or graduate education provided to students in health professional programs, or continuing education and faculty development training provided to practicing health professionals [47]. This study adopts the term “learner” to amalgamate both trainees and trained professionals taking part in a BLP within the field of HPE.

Studies had to be written in English, be published as a peer-reviewed journal article, and be published and indexed between August 6, 1991 (as this is when the World Wide Web went live, making the creation of BLPs a possibility) and August 4, 2020 (as this is when the last search was performed).

Exclusion Criteria

Studies using CD-ROMs, DVDs, and other downloadable software as their primary mode of asynchronous delivery were excluded, as this is not online learning. Studies that utilized simulation centers or computer labs as their primary technological component were excluded, as these may not be accessible to students outside the typical teaching environment. Studies that pertained to learners who do not directly provide care to human patients, such as veterinarians, were excluded [48]. Studies that evaluated the BLPs of undergraduate courses that are not predominantly delivered to HPE learners, such as general biology or psychology courses provided to all students in a Faculty of Science, were excluded. Studies in which evaluations were conducted solely by BLP instructors were excluded.
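To make the screening logic easier to follow, the eligibility criteria above can be sketched as a simple check. This is a minimal illustration only and is not the questionnaire guide used by the reviewers (Supplementary Appendix 3): the `ScreeningRecord` class, its field names, and the `is_eligible` function are hypothetical, and the "approximately 30 to 79%" threshold is simplified here to hard bounds.

```python
from dataclasses import dataclass
from datetime import date

# Publication window stated in the inclusion criteria.
WINDOW_START = date(1991, 8, 6)
WINDOW_END = date(2020, 8, 4)


@dataclass
class ScreeningRecord:
    """Hypothetical per-study screening record; field names are illustrative."""
    published: date
    english_peer_reviewed: bool
    evaluates_online_and_in_person: bool
    learners_are_primary_evaluators: bool
    online_share_percent: float      # share of content delivered asynchronously online
    accessible_outside_classroom: bool
    learner_use_tracked: bool
    offline_media_primary: bool      # CD-ROM, DVD, or other downloadable software
    learners_treat_human_patients: bool
    predominantly_hpe_learners: bool


def is_eligible(r: ScreeningRecord) -> bool:
    """Return True when a record meets every inclusion and no exclusion criterion."""
    included = (
        WINDOW_START <= r.published <= WINDOW_END
        and r.english_peer_reviewed
        and r.evaluates_online_and_in_person
        and r.learners_are_primary_evaluators
        # Simplification: "approximately 30 to 79%" treated as hard bounds.
        and 30 <= r.online_share_percent <= 79
        and r.accessible_outside_classroom
        and r.learner_use_tracked
    )
    excluded = (
        r.offline_media_primary
        or not r.learners_treat_human_patients
        or not r.predominantly_hpe_learners
    )
    return included and not excluded
```

In practice, several of these judgments (for example, whether a program is "predominantly" delivered to HPE learners) require reviewer interpretation, which is why the review relied on human screening with second reviewers rather than an automated rule.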

Step 4: Charting the Data

The first author entered extracted data into a form developed in Microsoft Excel, Version 16.0. Appropriateness of the charting form was discussed through consultation with co-authors and academic librarians. The fourth author validated the charted data.

Step 5: Collating, Summarizing, and Reporting the Results

Descriptive Quantitative Analysis

Charted data were synthesized through tabulation. Descriptive statistics were estimated to depict the nature and distributions of the trends found through tabulation.
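As an illustration of the kind of tabulation described here, the sketch below counts how often each usability component appears in the "Usability Component Being Evaluated" column. It is not the authors' procedure (charting was done in Microsoft Excel); the function names are hypothetical, and the four rows are a small excerpt from Table 2 used only as sample input.

```python
from collections import Counter

# Four rows excerpted from Table 2 (citation -> components evaluated).
charted = {
    "[86]": "Effectiveness; satisfaction",
    "[104]": "Effectiveness; efficiency; satisfaction",
    "[89]": "Effectiveness",
    "[92]": "Satisfaction; efficiency",
}


def tabulate_components(rows):
    """Count the number of studies that evaluated each usability component."""
    counts = Counter()
    for cell in rows.values():
        # Normalize case and use a set so a component is counted once per study.
        components = {part.strip().lower() for part in cell.split(";") if part.strip()}
        counts.update(components)
    return counts


def as_percentages(counts, n_studies):
    """Express each count as a percentage of the studies tabulated."""
    return {name: round(100 * n / n_studies, 1) for name, n in counts.items()}


counts = tabulate_components(charted)
shares = as_percentages(counts, len(charted))
```

For this four-row excerpt, effectiveness and satisfaction each appear in three studies (75.0%) and efficiency in two (50.0%); these figures describe the excerpt only, not the full set of 80 retained studies.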

Qualitative Content Analysis

To understand which components of usability were evaluated in each study, directed content analysis was utilized as described by Hsieh and Shannon, 2005 [49]. ISO definitions of each component were used as a guiding framework [29]. Summarized ISO definitions of each usability component and our associated framework for identifying relevant codes can be seen in Table 1. Findings were validated by the fourth author.

Table 1.

ISO definitions of usability components and examples of coding

| Usability component | Summarized definition by ISO 9241-11:2018 | Example of usability component in a program evaluation context | Example of usability component as discussed in retained studies |
|---|---|---|---|
| Effectiveness | Accuracy and completeness with which users achieve specified goals; extent to which actual outcomes match intended outcomes | Measure of knowledge increase (i.e., grade change through pre-post test) | “This BLP assisted in my understanding of the content at hand” |
| Efficiency | Resources used in relation to the results achieved; resources are expandable and include time, human effort, money, and materials | According to ISO, “typical resources include time, human effort, costs and materials” when discussing efficiency | “I watched all the modules from beginning to end” |
| Satisfaction | Extent to which the user’s physical, cognitive and emotional responses that result from the use of a system, product or service meet the user’s needs and expectations | Statement of enjoyment/disappointment with aspects of the BLP | “I was satisfied with this program” |

Qualitative Thematic Analysis

Inductive semantic thematic analysis was conducted as discussed by Braun and Clarke, 2006 [50]. All retained materials for this review were imported into QSR’s NVivo 12 and coded independently by the first and fourth authors. Reviewers met three times during the coding process: at the 10%, 36%, and 100% marks. Potential themes were discussed in the final meeting. Themes were then further developed and consolidated through meetings with the entire research team. A deductive analysis using the ISO framework for usability was then conducted to assist in further analyzing and bringing structure to the data.

Results

Eligible Studies

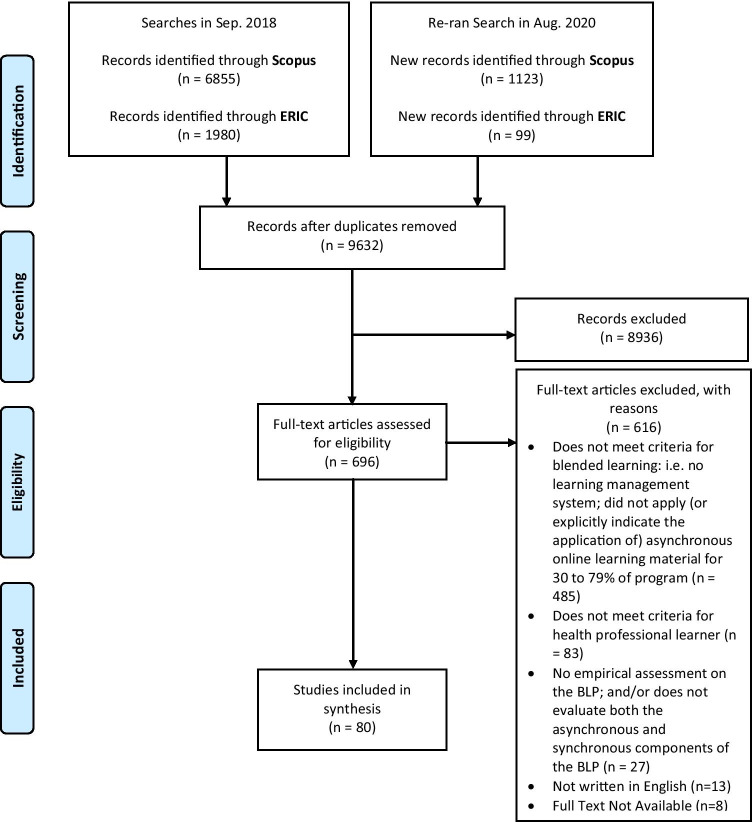

A total of 9632 titles were identified after the removal of duplicates. Title and abstract screening left 696 studies to be full-text reviewed. Ultimately, 80 studies were retained. Figure 1 provides a summary of the included and excluded studies. Table 2 includes a detailed summary of extracted data from all retained studies.

Fig. 1.

PRISMA flow diagram of included/excluded studies
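The screening counts reported above imply the number of records excluded at each stage. The short sketch below works through that arithmetic; the function name and dictionary keys are hypothetical, and the derived exclusion counts are simple differences between the reported totals rather than figures taken from the flow diagram itself.

```python
def screening_summary(identified, full_text_reviewed, retained):
    """Derive per-stage exclusions and retention rates from three reported totals."""
    return {
        "excluded_at_title_abstract": identified - full_text_reviewed,
        "excluded_at_full_text": full_text_reviewed - retained,
        "percent_reaching_full_text": round(100 * full_text_reviewed / identified, 1),
        "percent_of_full_text_retained": round(100 * retained / full_text_reviewed, 1),
    }


# Totals reported in the text: 9632 titles, 696 full-text reviews, 80 retained.
summary = screening_summary(identified=9632, full_text_reviewed=696, retained=80)
```

Under these assumptions, 8936 records were excluded at title and abstract screening and 616 at full-text review, so roughly 7.2% of identified titles reached full-text review and about 11.5% of those were retained.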

Table 2.

Synthesized table of extracted data for studies that met eligibility criteria

| In-text citation | Type of health professional learner | Description and/or purpose of the BLP | How is the program labeled in the study | Country and/or Region of Study | Usability Component Being Evaluated | Was the overall concept of “Usability” explicitly evaluated and/or discussed? | Method of evaluation | Did the study discuss instrument reliability, standardization, and/or validity if utilized? |
|---|---|---|---|---|---|---|---|---|
| [86] | Physiotherapy students (3rd year) | To teach ethics in physiotherapy | Blended learning | Spain | Effectiveness; satisfaction | No | Self-reported questionnaires (the Attitudes Questionnaire towards Professional Ethics in Physiotherapy – AQPEPT; Perceptions about Knowledge regarding Professional Ethics in Physiotherapy; and a student’s opinion questionnaire) | The self-reported questionnaires were previously validated with good-high reliability (Cronbach’s alpha = 0.898; 0.760 respectively); the reliability/standardization/validity of the student’s opinion questionnaire was not indicated |
| [103] | Medical students (6th year); practicing family doctors and interns | To improve professional competence in the certification of causes of death in the Spanish National Health System | Blended learning | Spain | Effectiveness; satisfaction | No | “Quasi-experimental” pre- and post-survey | Not indicated |
| [104] | Internal medicine postgraduate trainees (2nd year) | To teach outpatient diabetes management | Flipped classroom | USA | Effectiveness; efficiency; satisfaction | No | Opinion questionnaire; focus groups; pre-post and 6 month follow-up knowledge test (New England Journal of Medicine Knowledge + Question Bank); and an attitudinal survey using a 5-point Likert scale | Not indicated for the opinion questionnaire and the attitudinal survey; the Knowledge + Question Bank seems to be standardized |
| [105] | All dental students (year not specified) registered in a Clerkship at the Department of Oral and Maxillofacial Surgery | To teach material on oral and maxillofacial surgery in a 2-week clerkship | Blended learning using a flipped classroom approach | Germany | Effectiveness; efficiency; satisfaction | No | Pre-post test with 20 single-choice questions; program evaluation questionnaire delivered on a 10-point Likert scale | Not indicated |
| [106] | Dental students (2nd year) | To teach pediatric dentistry | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | Pre- and post-survey (8 items), which includes an additional 12 questions administered at the end of the program only. Surveys included Likert scales (1–5); thematic analysis conducted on open-ended questions | Not indicated |
| [107] | Medical residents (internal medicine) | To teach students in an internal medicine residency program | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | Kirkpatrick’s model of evaluation level 1 and 2. Pre-post survey with Likert scale (1–5); comparison between students enrolled in the flipped classroom curriculum vs. the traditional course | Study functioned to validate their survey |
| [87] | Medical students (4th and 5th year) | To teach students differential diagnosis | Inverted classroom | Germany | Effectiveness; satisfaction; efficiency | No | Pre- and post-survey (standardized questionnaire from the University of Marburg) with Likert scales (1–5); focus group | Standardized |
| [88] | Pharmacy students (3rd year) | To teach pharmacotherapy oncology | Flipped classroom | USA | Effectiveness; satisfaction | No | ANCOVA analysis on examination scores using previous academic performance variables (i.e., undergraduate GPA) as covariates. Summative teaching evaluation (two-item questionnaire) | Not indicated |
| [93] | Medical students (1st year) | To teach advanced cardiac life support | Flipped classroom | USA | Effectiveness; efficiency | No | Comparison of three written evaluations (multiple-choice questions) between students taking part in BLP vs. traditional program. Ungraded 10-question quizzes to gauge student compliance with podcast viewing | - |
| [108] | Chiropractic students (2nd year) | To teach clinical microbiology | Inverted classroom model | USA | Effectiveness; satisfaction | No | Test performance compared between students being taught using an inverted classroom model and students being taught using the traditional lecture-based face-to-face method; a six-question survey | Indicates that most survey items were adapted from items that appeared in validated study surveys; face validity of the survey was established by the university’s director of academic assessment and two other faculty members; Cronbach’s alpha was also calculated |
| [109] | Health professional students (physical therapy, occupational therapy, and other) and health professionals (physical therapist, occupational therapist, prosthetics and orthotics, MD—physiatry working in hospital, academic, outpatient and in-patient settings) | To increase knowledge in basic-level wheelchair service provision | Hybrid course | India and Mexico | Effectiveness; satisfaction | No | Quasi-experimental design with non-equivalent control groups; test and satisfaction survey using a 5-point Likert scale | Validity and standardization discussed for the test, and mentions that the satisfaction survey was created from adapting a previously established survey |
| [110] | Nursing students (4th-year undergraduates) | To teach pediatric nursing content | Flipped classroom | Bahrain | Effectiveness; efficiency; satisfaction | No | Quiz; class-engagement scores; and focus groups | Not indicated |
| [67] | Nursing students (1st year) | To teach a course called “Human Beings and Health” | Flipped learning | South Korea | Effectiveness; satisfaction; efficiency | No | Flipped Course Evaluation Questionnaire; open-ended questions; focus groups. Conventional content analysis | Not indicated |
| [89] | Practicing nurses | To teach a course called “Patient Navigation in Oncology Nursing” | Blended learning | Canada | Effectiveness | No | Questionnaire (adapted from standardized questionnaire), including a Likert scale (1–5) and additional open-ended questions | Standardized |
| [111] | Doctor of Physical Therapy students (in the first two semesters) | To teach gross anatomy | Flipped classroom | USA | Effectiveness | No | Comparison of examinations and grades between students in a flipped vs. traditional anatomy class | - |
| [112] | Medical students (4th year) | To teach a course on management of trauma patients | Inverted classroom | Colombia | Effectiveness; satisfaction; efficiency | No | Pre- and post-test; generic institutional questionnaire; and evaluation by Flipped Classroom Perception Instrument (FCPI) | Not indicated |
| [71] | Medical students (3rd and 4th year) | To teach students in a geriatric medicine rotation | Blended learning | Australia | Effectiveness; satisfaction; efficiency | No | Pre- and post-knowledge assessment instrument | Not indicated |
| [113] | Dental students (3rd and 4th year) | To teach topics from dental pharmacology related to oral lesions and orofacial pain | Blended learning | Malaysia | Effectiveness; satisfaction; efficiency | No | Qualitative thematic analysis of student reflections | - |
| [72] | Emergency department staff members (RNs, nursing assistants, and unit coordinators) | To provide staff with health information technology training | Blended learning | USA | Effectiveness; satisfaction; efficiency | No | Qualitative analysis of 13-question survey, including Likert scale (1–5). Responses summarized into “satisfaction score,” plus additional thematic analysis | Indicates that the survey used was pre-existent, but does not use the word validated |
| [51] | Nursing students (undergraduates—year not explicitly stated) | To teach a course about information technology for nurses | Blended learning | Saudi Arabia | Effectiveness; satisfaction; efficiency | No | 6-tool descriptive research design, including comparison of student grades (enrolled vs. not enrolled in BLP); Student Satisfaction Survey (Likert scale 1–5), and teacher/course evaluations | Indicates that the survey used is a modified version of the Students’ Evaluation of Educational Quality Survey |
| [114] | Midwifery students in a master’s program (note that all students in the program were midwifery educators who had previous training as nurses-midwives and 5 to 30 years of experience) | To teach midwifery educators about learning styles and pedagogical approaches | Blended learning | Bangladesh | Effectiveness; efficiency | No | Structured baseline questionnaire; endpoint questionnaire; and focus groups—the questionnaires used 5-point Likert scales and open-ended responses; qualitative data were analyzed using content analysis | Not indicated |
| [115] | Pharmacy students (final year) | To teach diabetes mellitus counseling skills | Blended learning | Germany | Effectiveness; satisfaction | No | Online tests; objective structured clinical examination scores; surveys using a 6-point Likert scale | Not indicated |
| [73] | Practicing midwives | To provide midwives with increased training and education in perinatal mental health education | Blended learning | UK (Scotland) | Effectiveness; satisfaction; efficiency | No | A modified online Objective Structured Clinical Examination (OSCE); evaluation of portfolios of reflective accounts | - |
| [74] | Nursing students (3rd year) | To assist learners in strengthening their communication skills in mental health nursing | Blended learning | Norway | Effectiveness; satisfaction; efficiency | No | Exploratory design; questionnaire with open-ended questions (Likert scale 1–5). Content analysis | Determined face validity of the questionnaire through discussion with a reference group |
| [75] | Nutrition/dietetics students (undergraduates—year not explicitly stated) | To teach the courses “Professional Skills in Dietetics” and “Community Nutrition” | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | Survey with Likert scale (1–5) | Reliability tested (assessed the Cronbach alpha of the survey prior to utilizing it) |
| [84] | Pharmacy students (2nd year) | To teach a course called Dosage Form II (sterile preparations) | Flipped classroom | Malaysia | Effectiveness; satisfaction | No | Quasi-experimental pre- and post-test intervention; and a web-based survey using a 5-point Likert scale | Not indicated |
| [76] | Medical students (3rd year—family medicine clerkship) | To teach behavior change counseling | Blended learning | USA | Effectiveness | No | Attitude and Knowledge assessment; 12-item pre- and post-class assessment; additional 5 questions only at conclusion | Not clearly indicated—mentions that items were derived from questions developed by Martino et al. (2007) |
| [77] | Pharmacy students (1st year) | To teach advanced physiology | Flipped teaching | USA | Effectiveness | No | Comparison of exam grades between different cohorts (flipped vs. non-flipped) | - |
| [78] | Medical students (4th year) | To teach social determinants of health | Flipped learning | UK | Effectiveness; satisfaction; efficiency | No | First level of Kirkpatrick’s evaluation model: questionnaire (Likert scale 1–4); semi-structured group interview; thematic analysis | Not indicated |
| [56] | Physiotherapy students (2nd year) | To teach gross anatomy | Blended learning | Australia | Effectiveness; satisfaction; efficiency | No | Retrospective cohort study of student grades and student feedback (“Likert-style questions”); thematic and content analysis | Not indicated |
| [57] | Medical students (5th year) | To teach radiology content | Blended learning | UK | Effectiveness; satisfaction; efficiency | No | Questionnaire | Not indicated |
| [116] | Doctor of Chiropractic Program students (year not specified) | To teach an introductory extremities radiology course | Integrative blended learning | USA | Effectiveness; efficiency; satisfaction | No | Cross-sectional comparison of student cohorts learning via an integrative blended learning approach vs. traditional approach; comparison of test scores from lecture and laboratory examinations; and a course evaluation | The course evaluation questions that were analyzed came from the institutional-based course evaluation system |
| [58] | Pharmacy students (2nd year) | To teach the principles of nutrition for diabetes mellitus | Flipped classroom | Thailand | Effectiveness; satisfaction; efficiency | No | Test scores compared between different cohorts (flipped vs. non-flipped); student feedback via 15-item survey (Likert scale 1–5); plus open-ended feedback from two peer instructors (not affiliated with course development or instruction) | Not indicated |
| [117] | Healthcare providers (family physicians and allied health professionals) | To train primary care physicians in rheumatology care | Blended learning | Pakistan | Effectiveness; efficiency; satisfaction | No | Participation in teaching activities; pre- and post-course self-assessment; written feedback; and a questionnaire | Not indicated |
| [118] | Nursing students (2nd to 4th year) | To teach a course on patient safety | Flipped classroom | South Korea | Effectiveness | No | Quasi-experimental study with a non-equivalent control group; pre-post test administered as a survey containing demographic questionnaire and the Patient Safety Competency Self-Evaluation (PSCSE) which uses a 5-point Likert scale | Indication of PSCSE validation and reliability is made |
| [52] | Pharmacy students (2nd year) | To teach a pharmacotherapy course | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | Pre- and post-test scores compared between different cohorts (flipped vs. un-flipped); pre- and post-course survey (designed to assess levels of Bloom’s Taxonomy of Learning with Likert scale 1–5); content analysis | Indicates that survey questions were adapted from a validated survey instrument of student attitudes toward televised courses |
| [55] | Doctor of Pharmacy students (2nd year) | To teach a course on gastrointestinal and liver pharmacotherapy | Flipped classroom | USA | Effectiveness; efficiency; satisfaction | No | Traditional vs. flipped classroom instruction impacts measured in cohorts via pre-post course survey using Likert scales, quizzes, and mean student performance | Not indicated |
| [59] | Medical students (1st year) | To teach biochemistry | Inverted classroom | Germany | Effectiveness; satisfaction; efficiency | No | Exam marks compared between different cohorts; course evaluation; questionnaire; indicates that qualitative data were collected but did not reference the type of qualitative analysis that was conducted in the study | Not indicated (but does mention that the questionnaire was derived from a previous study by Rindermann et al., 2001) |
| [90] | Osteopathic medicine students (3rd year—pediatric clerkship) | To teach students in a pediatric rotation | Blended learning | USA | Effectiveness; satisfaction; efficiency | No | Osteopathic Medical Achievement Test (120 items) scores and final course grades; preceptor evaluations (18 items, Likert scale 1–10) were compared between the standard learning and blended learning groups; post-course survey; identifies themes but does not discuss the type of qualitative analysis (i.e., thematic, content, etc.) or provide a reference to the approach that was used to derive these findings | Not indicated |
| [91] | Practicing pharmacists | To assist pharmacy practitioners in acquiring competency in and accreditation for conducting collaborative comprehensive medication reviews (CMRs) | Does not refer to BL or any of its synonyms | Finland | Effectiveness; satisfaction; efficiency | No | Evaluation of participants’ learning through learner diaries; written assignments and portfolio. Post-intervention survey (Likert scale 1–5) | Not indicated, but survey routinely used by University of Kuopio, Centre for Training and Development |
| [53] | Medical students (3rd year—surgery clerkship) | To teach a surgical clerkship | Flipped classroom | USA | Effectiveness; satisfaction | No | Traditional vs. flipped classroom instruction impacts measured in cohorts via end-of-rotation NBME Surgery Subject Examination; course evaluation survey using a 5-point Likert scale and open-ended questions; and an open-ended survey analyzed using conventional qualitative content analysis | Not indicated for the surveys; the exam was indicated to be valid and standardized |
| [54] | Diagnostic Radiology and Imaging BSc Honours Program students (2nd year) | To teach a course in relation to radiology and imaging | Blended learning | UK | Effectiveness; satisfaction; efficiency | No | Two questionnaires, one for students and one for staff; identifies themes but does not discuss the type of qualitative analysis (i.e., thematic, content, etc.) or provide a reference to the approach that was used to derive these findings | Not indicated |
| [79] | Medical students (4th year—family medicine course) | To teach a family medicine course | Blended learning | Saudi Arabia | Effectiveness; satisfaction; efficiency | No | Dundee “ready educational environment measure” (50 items, Likert scale 1–4); the ‘objective structured clinical examination’; written examination with multiple-choice questions; analysis of case scenarios—comparison between intervention and non-intervention groups | Validated |
| [60] | Midwifery students (1st year) | To teach a course on Research, Evidence and Clinical Practice | Blended learning | Australia | Effectiveness; satisfaction; efficiency | No | University-based course evaluations (Likert scale 1–5) | Not indicated |
| [61] | Nursing students (accelerated undergraduates—year not explicitly stated) | To teach a course on evidence-based nursing practice | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | 2 surveys (one after pre-class module, one at end of semester) with Likert scale (1–5); plus qualitative questions. Conventional content analysis | Not indicated |
| [120] | Pharmacy students (1st year) | To teach the course Basic Pharmaceutics II (PHCY 411) | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | Pre- and post-course surveys | Not indicated |
| [119] | Pharmacy students (2nd year) | To teach venous thromboembolism (VTE) to students enrolled in a pharmacotherapy course | Blended learning | USA | Effectiveness; satisfaction; efficiency | No | Comparison of engagement and performance based on online module access; pre- and post-test; response to in-class Automated Response System; exam performance; survey | Not indicated |
| [69] | Medical students (3rd year) | To teach introductory medical statistics | Blended learning | Serbia | Effectiveness; efficiency | No | Comparison of grades (20 multiple-choice test, plus final knowledge test) between students taking part in a blended program and a traditional program | - |
| [92] | General practice trainers | To teach a “Modular Trainers Course” which provided instruction on General Practice Specialty Registrars | Blended learning | UK | Satisfaction; efficiency | No | Participant feedback (Likert scale 1–4); identifies themes but does not discuss the type of qualitative analysis (i.e., thematic, content, etc.) or provide a reference to the approach that was used to derive these findings | Not indicated |
| [121] | Master of Public Health students | To teach an introductory graduate course on epidemiology | Flipped classroom | Canada | Effectiveness; satisfaction; efficiency | No | Surveys containing both Likert scale (1–5) and open-ended questions, which were administered at 3 time points; additional Learner Evaluation of Educational Quality (SEEQ) Survey | Standardized |
| [122] | Medical students (1st year) | To teach a required integrated basic-science course called Foundations of Medicine | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | Comparison of final exam marks between students that took part in the FC vs. those in the LC; learner evaluations. Evaluations derived from Bloom’s taxonomy | Not indicated |
| [62] | Medical students (1st and 2nd year) | To teach an anatomy course | Blended learning | Turkey | Effectiveness; satisfaction; efficiency | No | Focus groups with purposive sample of students with high, medium and low academic scores; content analysis | - |
| [70] | Medical students (3rd and 4th year—radiology clerkship or elective) | To teach neuroimaging content | Flipped learning | USA | Effectiveness; satisfaction; efficiency | No | 19-item electronic survey; shortened version of the class-related emotions section of the Achievement Emotions Questionnaire; pre- and post-test | Validated |
| [63] | Nursing students (undergraduates—year not explicitly stated) | To teach a flipped learning nursing informatics course | Flipped learning | South Korea | Effectiveness; satisfaction; efficiency | No | 3 levels of Kirkpatrick’s evaluation model: 10-item questionnaire; course outcomes achievement (multiple choice test, essay, checklist); follow-up survey | Not indicated |
| [123] | Nursing students (2nd year) | To teach a nursing informatics course | Flipped learning | South Korea | Effectiveness; satisfaction | No | Pre-post test, one-group, quasi-experimental design; preliminary test; 5-point Likert scale questionnaire; post-course feedback analyzed using the generation of themes | Preliminary test questions were reliability tested; not indicated for the questionnaire |
| [124] | Health professional students (dental medicine; dietetics; medicine; occupational therapy; pharmacy; physical therapy; social work; speech language pathology) | To teach an interdisciplinary evidence-based practice course | Flipped classroom | USA | Effectiveness; satisfaction | No | Module quizzes; Readiness for Interprofessional Learning Scale (RIPLS), plus survey with Likert scale (1–5) | Validated |

| [80] | Allied health students (nursing; health science; podiatry; occupational therapy; physiotherapy; paramedicine; speech pathology; exercise physiology; oral health) | To teach a first-year, first-semester physiology course | Blended learning | Australia | Effectiveness; satisfaction; efficiency | No | Student grades; cross-sectional survey (Likert scale 1–5). Thematic analysis | Not indicated |

| [125] | Nursing students (1st year) | To teach a first-year course on health assessment | Flipped classroom | Canada | Effectiveness; satisfaction; efficiency | No | Student grades; feedback (comparison between different cohort of students—intervention vs. no intervention) | Not indicated |

| [81] | Pharmacy students (1st, 2nd, and 3rd year) | To teach three courses: (1) small ambulatory care; (2) cardiovascular pharmacotherapeutics; and (3) evidence-based medicine | Blended learning | USA | Effectiveness; satisfaction; efficiency | No | Exam grades; student survey; and additional open-ended questions asked to faculty. Thematic analysis on open ended questions | Not indicated |

| [68] | Medical students; dental students; pharmacy students | To teach physiology | Blended learning | Montenegro | Effectiveness; satisfaction; efficiency | No | Comparison of grades on assessments between intervention and non-intervention group; survey (Likert scale 1–5); use of online material | Not indicated |

| [126] | Dental students (4th year) | To teach conservative dentistry and clinical dental skills in a course | Blended learning with a flipped classroom approach | Jordan | Effectiveness; satisfaction | No | Comparison between students being taught via blended learning and traditional methods; performance measures included two exams, two assignments, in-clinic quizzes, and a clinical assessment; number of posts made by students in study groups and online discussion forums; and a questionnaire | Not indicated |

| [127] | Emergency medicine residents (post graduate year 3) | To teach a course on pediatric emergency medicine | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | Pre- and post-test; survey. Zaption analytics to determine levels of interaction with online content | Not indicated |

| [82] | Emergency medicine residents | To teach a course on pediatric emergency medicine | Flipped classroom | USA | Effectiveness; satisfaction; efficiency | No | Pre- and post-test; survey. Zaption analytics to determine levels of interaction with online content | Not indicated |

| [128] | Nursing students (3rd year) | To teach a course entitled “Quality management methodology of nursing services” | Blended learning | Spain | Effectiveness | No | Quasi-experimental post-treatment design with equal control group; the ACRAr Scales of Learning Strategies; student learning results | The scale was indicated to be reliability tested and validated |

| [64] | Medical students (1st and 2nd year) | To teach radiology interpretation skills | Blended learning | Australia | Effectiveness; satisfaction; efficiency | No | Pre- and post-test; survey. Identifies themes but does not discuss the type of qualitative analysis (i.e., thematic, content, etc.) or provide a reference to the approach that was used to derive these findings | Not indicated |

| [129] | Medical Documentation and Secretarial Program students | To teach a course on medical terminology | Flipped classroom | Turkey | Effectiveness; efficiency; satisfaction | No | Study Process Questionnaire which uses a 5-point Likert-type scale; learning activity participation rates; final exam grades; and an online survey comprised of open-ended questions which were followed up by telephone interviews—qualitative analysis technique was not specified explicitly | The Study Process Questionnaire was previously validated and reliability tested; no indication for the survey |

| [130] | Faculty of Medicine students (2nd year) | To provide vascular access skill training via a Good Medical Practices Program in the faculty | Flipped classroom | Turkey | Effectiveness; efficiency; satisfaction | No | Prospective controlled post-test and delayed-test research design involving a comparison with a control group; performance test; 5-point Likert-type assessment scale; feedback analyzed through qualitative themes | Not indicated |

| [65] | Undergraduate medical students (year not explicitly stated—pediatric clerkship) | To improve newborn examination skills/neonatology | Blended learning | Australia | Effectiveness; satisfaction; efficiency | No | Performance of newborn examination on standardized assessment compared between blended learning and control group; questionnaire | Not indicated |

| [83] | Medical students (4th-year ophthalmology clerkship) | To teach an ophthalmology clerkship | Flipped classroom | China | Effectiveness; satisfaction; efficiency | No | A questionnaire modified from Paul Ramsden’s Course Experience Questionnaire and Biggs’ Study Process questionnaire with verified reliability and validity | Adapted from a questionnaire that was previously reliability tested and validated |

| [85] | Dental students (2nd year) | To teach a medical physiology course | Inverted classroom model | China | Effectiveness; efficiency | No | Comparison between traditional and blended learning classes; Kolb’s learning style inventory (LSI); and a satisfaction questionnaire using a 5-point Likert scale | Kolb’s LSI is indicated to be reliability tested and valid; the satisfaction questionnaire was developed based on the Course Experience Questionnaire which is indicated to be used in Australia |

| [131] | Medical students (3rd year) | To teach a medical statistics course | Flipped classroom | China | Effectiveness; satisfaction | No | Learning motivation measured by an 11-item students’ interest questionnaire; self-regulated learning was measured using a 10-item questionnaire; academic performance measured using a self-designed test; course satisfaction measured via student feedback using a 5-point Likert Scale | The learning motivation questionnaire was partially developed using items revised from an academic interest scale—internal consistency discussed; self-regulated learning questionnaire was developed according to previously developed scales—internal consistency discussed; not indicated for the satisfaction measurement |

| [132] | Practicing nurses | To teach a course on occupational health nursing | Blended learning | USA | Effectiveness | No | Survey | Not indicated |

| [97] | Postgraduates enrolled in a master program (includes nurses, medical doctors, pharmacists, paramedics, and policy officer) | To teach a course on quality and safety in patient care | Blended learning | Netherlands | Effectiveness; efficiency; satisfaction | Yes | Questionnaires using a 5-point Likert scale | Indicates that one of the questionnaires was developed previously but not published elsewhere, provides a Cronbach’s alpha score for the constructs of the questionnaire |

| [133] | Doctor of Pharmacy students (all years) | To teach advanced pharmacy practice courses | Blended learning | Qatar | Effectiveness; efficiency; satisfaction | No | Focus groups analyzed using thematic analysis | - |

| [66] | Pharmacy students (1st year) | To teach 3 classes on cardiac arrhythmias | Flipped teaching | USA | Effectiveness; satisfaction; efficiency | No | Exam scores; 15-item survey (Likert scale 1–4); student feedback (comparison of intervention and non-intervention groups) | Not indicated |

| [134] | Doctor of Dental Surgery students (1st year) | To teach physiology of the autonomic nervous system | Flipped classroom | USA | Effectiveness | No | Student performance assessed via quiz | - |

| [135] | Nursing students (senior level students—year not explicitly stated) | To teach a course on sleep education | Does not refer to BL or any of its synonyms | USA | Effectiveness; satisfaction; efficiency | No | Pre- and post-quiz; student feedback (Likert scale, 1–10) | Not indicated |

| [136] | Undergraduate clinical medicine students (2nd year) | To teach a course on physiology | Flipped classroom | China | Effectiveness; satisfaction | No | Pre-post test comparison between students being taught in traditional vs. flipped classroom methods; and a questionnaire | Not indicated |

| [94] | Pharmacists working in various community pharmacies in urban areas | To train pharmacists in cardiovascular disease risk assessment | Blended learning | Qatar | Effectiveness; satisfaction | No | The authors sought to evaluate the program using Kirkpatrick’s four-level evaluation framework (though the results do not explicitly indicate which levels of the model were assessed for); this includes pre-post questionnaires; interactive quizzes; objective structured clinical examination; and a satisfaction survey using a 5-point Likert Scale | Not indicated |

| [137] | Graduate health professional students [medicine; nursing (clinical nurse leader); pharmacy; public health; and Master of Social Work] | To teach a course on population health and clinical immersion | Blended learning | USA | Effectiveness; satisfaction | No | Pre- and post-assessment; reflection paper; Assessment of Interprofessional Team Collaboration Scale (AITCS) with Likert scale (1–5); course evaluations; benchmark reported through electronic medical record | Validated |

Quantitative Findings

BLPs were implemented globally and for various HPE populations. One study explicitly evaluated the overall concept of usability. Most studies did not follow or refer to any formal evaluation framework. Only four studies (5.0%) referenced the 4-level Kirkpatrick model of evaluation. Fifty-five out of 80 studies (68.8%) explicitly evaluated whether a change in learner attitudes, knowledge, skills, and/or overall learning was achieved. These evaluations were often done through a pre-post test design.

Sixty-nine out of 80 studies (86.3%) utilized some sort of questionnaire, survey, course evaluation, or feedback tool (henceforth instrument). Only 30 of the 69 studies (43.5%) discussed whether their instrument was reliability tested, standardized, or validated. None of the instruments used in these 30 studies was identified as having been developed specifically to evaluate aspects of BLPs. Rather, these instruments were developed to measure concepts such as “communication” or “learning” in general, and not specifically within the context of a BLP.

Seven out of 80 studies (8.8%) used focus groups to evaluate their BLP. One out of 80 studies (1.3%) used semi-structured group interviews. Twenty-two out of 80 studies (27.5%) used qualitative techniques to analyze data obtained from open-ended questionnaires, learner feedback, or learner reflections. Figures that further illustrate the nature and extent of BLP evaluation across HPE can be found under Supplementary Appendices 4, 5, 6, 7 and 8.
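
As a quick arithmetic check, the proportions reported in this section can be recomputed from the stated counts. The following is a minimal sketch only: the helper name `pct` and the short labels are illustrative assumptions, and half-up rounding to one decimal place is assumed because it reproduces the reported figures.

```python
from decimal import Decimal, ROUND_HALF_UP

TOTAL_STUDIES = 80  # number of retained studies stated in the review


def pct(count, total):
    """Return count/total as a percentage string, rounded half-up to 1 decimal."""
    value = Decimal(count) * 100 / Decimal(total)
    return str(value.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP))


# (count, denominator) pairs taken from the text; labels are shorthand, not the authors' wording.
reported = {
    "referenced the Kirkpatrick model": (4, TOTAL_STUDIES),
    "evaluated change in learning": (55, TOTAL_STUDIES),
    "used an instrument": (69, TOTAL_STUDIES),
    "discussed instrument validation": (30, 69),  # denominator is the 69 instrument studies
    "used focus groups": (7, TOTAL_STUDIES),
    "used semi-structured group interviews": (1, TOTAL_STUDIES),
    "used qualitative analysis techniques": (22, TOTAL_STUDIES),
}

for label, (count, total) in reported.items():
    print(f"{label}: {count}/{total} = {pct(count, total)}%")
```

Note that the validation proportion uses the 69 instrument-based studies as its denominator, whereas every other proportion is out of all 80 retained studies.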

Directed Content Analysis

All studies were found to evaluate one or more of the usability components (effectiveness, efficiency, or satisfaction) as defined by the ISO. However, scholars did not always explicitly label the component on which their evaluation focused. For example, scholars would often state that the purpose of their study was to evaluate the effectiveness of a blended learning program; however, their methods often relied on questionnaires with items focused on learner satisfaction.

Inductive Thematic Analysis

Theme 1: Avoiding the “Usability” Label and Using Undefined Related Terms Instead

Although only one study employed the term “usability” when reporting their BLP evaluation, scholars across the data set adopted several terms and concepts which were identified as related to usability and its major components, for instance, “appropriate” [51–54], “beneficial” [55], “clear” or “clarity” [52, 56–66], “easy” or “ease-of-use” [57, 62, 65–68], “efficacy” [59, 60, 69, 70], “favourable” [67], “flexibility” [51, 54, 56, 57, 62, 64, 67–69, 71–83], “help,” “helpful,” or “helpfulness” [56, 59, 66, 83–85], “informative” [74], “useful” or “usefulness” [60, 62, 67, 70, 71, 78, 86–92], “utility” [61], and “worthwhile” [61].

What is more, interpretations of these words were found to be quite ambiguous and dissimilar across studies. For example, refer to the following two excerpts:

“The students preferred learning the course online since the crowded setting decreased the laboratory’s effectiveness and access to the resources out of the class was useful for their learning. ‘It was like a course for us. It is like we are taking a class for 1 or 2 more hours at home. From this point, it was very useful’” [62] [italics are mine].

And:

“After completion of their ICA in geriatric medicine, 88% of the students agreed that WebCT was a useful tool for this rotation. When the students were asked about their perceptions of the use of a paper-based portfolio, 68% agreed that they felt comfortable using it whereas 16% somewhat disagreed with this statement” [71] [italics are mine].

In the first excerpt, the authors associate the number of students in a room with effectiveness and discuss access as related to usefulness. A student’s response in this excerpt relates usefulness to time and location. In the second excerpt, however, usefulness is broader. It seems to be related to perceived importance or effect, as well as to the comfort that students have with different aspects of the BLP. This example highlights that, without an explicit definition or a framework to guide the use of terminology, scholars interpret the same terms and concepts in ambiguous and differing ways.

Theme 2: Confusing Conceptualization of Usability’s Primary Components

The conceptualization of the terms effectiveness, efficiency, and satisfaction differed significantly across studies. This theme captures and highlights the ambiguity surrounding the way in which these terms were discussed across retained studies.

Effectiveness

The term effectiveness was explicitly used in most studies. Although widely used, it was seldom interpreted consistently. No study provided a framework to define this term; instead, studies often associated it with ideas or concepts specific to each study. For instance, refer to the following two excerpts:

“Our results are in agreement with those of other studies on the effectiveness of e-learning as part of blended learning, which showed that students’ engagement was increased, and their perception of the educational environment was improved…Thus, although the use of technology in teaching is effective and is perceived as such, it requires a cultural change in learning practice that might not be easy for everyone” [79] [italics are mine].

And:

“When the findings on the effective learning of [the BLP] were analyzed, students stated that the images made learning long-lasting; made learning easy for the students; and helped the students get prepared before the class…The students preferred learning the course online since the crowded setting decreased the laboratory’s effectiveness…” [62] [italics are mine].

In the first excerpt, effectiveness is related to the concepts of learners’ engagement and perception, whereas in the second excerpt, effectiveness is related to several different concepts including permanence of learning, ease-of-learning, and assistance with pre-class preparation. The second excerpt goes on to relate effectiveness to the number of learners taking part in an activity.

Efficiency

Although the qualitative content analysis in Table 2 indicates that studies often discussed the concept of efficiency, studies seldom used the label efficiency. However, even when scholars explicitly used the term efficiency, the connotation they applied to it differed. For example, refer to the following two excerpts:

“Although a well-crafted and captivating lecture presentation seems like an efficient way for an instructor to cover course content, converging evidence implies that listening to a classroom lecture is not an effective way to promote deep and lasting student learning” [77] [italics are mine].

And:

“Students frequently claim that they prefer podcasts to real-time instruction because they can both speed up the podcast…as well as review portions of podcasts that they need to see again. They view this as more efficient” [93] [italics are mine].

In the first excerpt, efficiency is related to the idea of being well crafted and captivating, whereas the second study discusses efficiency as related to the concept of time and the review function of online material.

Satisfaction

Satisfaction was discussed across most retained studies, though not always explicitly. Often, the words “positive” and “negative” were used instead. The majority of the ambiguity in relation to this concept arises through two specific issues. Firstly, each study applied different connotations to satisfaction. For example, some studies discuss satisfaction as a concept that is used to measure effectiveness [51, 60], whereas others discuss satisfaction as related to the concepts of learner attitudes and experiences [80]. Secondly, studies differ regarding what their focus of evaluation was (i.e., either on the satisfaction of specific components of the BLP or the entire program in general).

In sum, a great deal of ambiguity in the connotations of effectiveness, efficiency, and satisfaction can be seen across all retained studies.

Theme 3: Lack of Consensual Approach to Usability Evaluation

The conceptual and definitional ambiguity concerning usability and its major components highlighted in the first two themes was accompanied by an absence of a consensual approach to its evaluation. Each study adopted a unique set of methods and instruments to evaluate a unique set of concepts. Some studies attempted to complement subjective measures (i.e., learner perceptions) with objective measures (i.e., changes in grades), while others focused evaluations on only one type of measure. Some authors included open-ended responses in their instruments. In these cases, studies differed in their analysis, where some used thematic analysis, others used content analysis, and some did not specify their method of analysis. Each study also had a different focus of evaluation, where some studies separated the evaluations for asynchronous and synchronous components, while others evaluated the BLP as a whole.

These issues might be present because most studies do not utilize established frameworks to guide their evaluations. In fact, only 4 studies referenced the 4-level Kirkpatrick evaluation model [51, 63, 78, 94], which is considered to be a widely used model for educational program evaluation [95, 96]. However, of these studies, one completed only the first level of evaluation, another completed the first and second level of evaluation, a third completed the first to third levels of evaluation, and the fourth discussed “…an attempt to assess all 4 levels of Kirkpatrick’s evaluation framework…” though the depth to which this was done is questionable.

Deductive Thematic Analysis

The ISO framework for usability allowed for easy replacement of ambiguous terms with the labels usability, effectiveness, efficiency, and satisfaction. Alongside these labels, this analysis also revealed that scholars consistently utilized the terms accessibility and user experience, two concepts that the ISO describes as being critical and closely related to usability [29]. Refer to the following excerpt:

“The feature of the course that participants liked most was the eLearning modules. They found them very interactive, creative, easy to understand, and useful in addressing multiple learning styles. Participants appreciated the accessibility and self-paced nature of the eLearning modules. The participants also valued the peer-reviewed journal readings and reported that these readings complemented the material presented in the modules and reinforced current practice issues and evidence-based practice. Participants reported that the discussion forums, which were another interactive part of the course, allowed nurses an opportunity to share opinions, knowledge, and practice experiences” [89].

In this excerpt, effectiveness can be interpreted from “easy to understand”; satisfaction can be interpreted from statements such as “participants liked/appreciated/valued…”; efficiency can be interpreted from statements regarding timing and resources (i.e., “self-paced nature”); accessibility is explicitly discussed in this excerpt; and learner experiences are also explicitly discussed (i.e., “participants reported that the discussion forums…”).

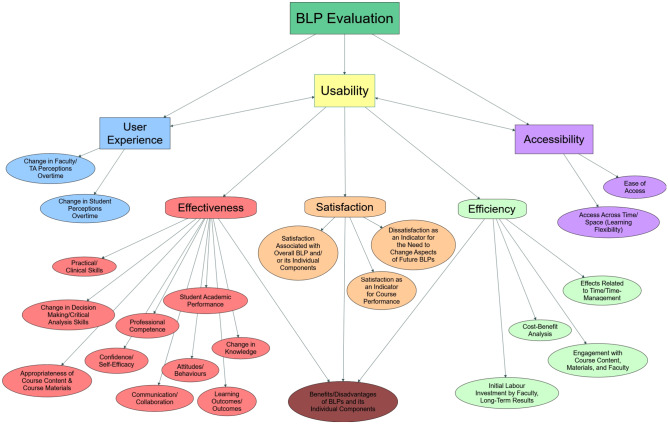

Moreover, this deductive analysis identified 22 concepts associated with usability that were applied consistently across all 80 studies. These concepts include change in knowledge, skills, and perceptions, to name a few. Many of these concepts were interpreted through the items present in the evaluation instruments of retained studies. These concepts were amalgamated into a conceptual map (Fig. 2).

Fig. 2.

Concept map developed from the deductive findings of the thematic analysis

Discussion

In this scoping review, we aimed to map current knowledge about how usability has been conceived and employed in the evaluation of BLPs within HPE. Only one study was found to explicitly apply the term usability [97]. In this study, usability seems to have been evaluated as one item in an instrument. As such, the complexity and depth of this concept seem to have been neglected. Though usability has been identified by scholars in HPE as being an important aspect of educational programs that involve technology [26, 27], its explicit and appropriate implementation has yet to be fully achieved in this field of inquiry. The lack of uptake may be due to confusion, ambiguity, or lack of knowledge associated with usability and its various definitions [26–34]. Usability is often understood simply as “the ease-of-use” of a technology or technological system. However, the ISO indicates that this idea of usability does not reflect the comprehensive nature of this multidimensional concept [29]. The ISO framework for usability serves well to facilitate the clarification and implementation of this concept into the field of HPE by elucidating its comprehensive nature and its ability to evaluate both the technological and educational components of BLPs.

Through applying the ISO framework to guide the content analysis conducted in this scoping review, it was noted that not all studies explicitly discuss evaluations of effectiveness, efficiency, and satisfaction, and that the connotations ascribed to these terms possibly varied across studies. In the inductive phase of the thematic analysis, the depth of the disparity in the labels and conceptualization of terms used across studies was captured. Across studies, scholars seemed to apply different terms to describe the same concepts. For instance, to evaluate their BLPs, some scholars employed the term helpful, whereas others employed the term useful, and both of these are potentially attempting to measure the concept of effectiveness. Additionally, when scholars adopted the same terms, they applied different connotations to them. For instance, in one study, the term useful was related to the idea of access, whereas in another study, the term useful was related to the idea of perceived importance and effect. The lack of a common language to facilitate evaluations is a potential threat to the comparability and generalizability of BLP evaluative studies in the field of HPE. Moreover, this lack of commonality in language, in addition to a lack of uptake of an evaluative framework, may serve as a reason for why so many different methods are used to evaluate BLPs.

In the deductive phase of the thematic analysis, the framework for usability established by the ISO, a global organization that develops standards through the collaboration and consensus of international experts [29, 31], served to overcome the disparity in the language employed by scholars. Adoption of this framework demonstrated that although scholars do not explicitly make use of the term usability, they are in fact evaluating and describing this concept, albeit implicitly.

We also identified that, in the current context, the concept of usability extends beyond the three major components of effectiveness, efficiency, and satisfaction. Specifically, accessibility and user experience were identified as concepts closely associated with usability. A concept map that consolidates and clarifies the relationships between the major and associated components of usability, as well as the 22 most common concepts that were evaluated within the retained studies, was generated through this review (Fig. 2). This figure depicts that usability is often implicitly evaluated through a focus on one or more of its major components. Bi-directional arrows can be seen between usability and both accessibility and user experience. This indicates that the connotations that scholars often gave to the labels “user experience” and “accessibility” were essentially interchangeable with the definition of usability. This conceptual map elucidates a practical application of usability for BLPs in HPE literature. Through application of this map, the potential to begin comparing and contrasting different BLPs emerges.

Moving forward, we suggest that scholars conducting evaluations of BLPs within HPE must adopt a common lexicon and set of concepts to be evaluated to begin establishing the comparability and generalizability of these studies. In this regard, usability can serve well as a multifaceted concept that not only clarifies what is meant by terms such as effectiveness, efficiency, satisfaction, user experience, and accessibility, but also begins to consolidate how evaluations for each of these terms may be conducted (i.e., what sub-concepts relate to these domains of usability).

The explicit adoption of usability in HPE may be facilitated with an instrument to measure this concept in its entirety. Notably, many usability evaluation instruments exist in relation to e-learning programs or platforms such as LMSs [39, 98–102], though no instrument developed specifically for usability evaluation of BLPs in HPE was identified. Thus, our research team will use the findings of this review to work on developing and initially validating a comprehensive instrument to measure usability in this context. This instrument will assist in establishing a systematic evaluation procedure, which may lead to increased similarity in the terms, connotations, and concepts that are being measured across BLPs in HPE. This in turn may assist in increasing the comparability and rigor of evaluative studies in this context, and thereby, the systematic improvement of BLPs in HPE. As the application of BLPs continues to rise, evaluating the usability of these initiatives will help ensure that they are well designed, well received by learners, facilitate learning, and can be systematically improved.

Limitations and Strengths

This review was the first to evaluate the application of usability within HPE. Only two literature databases were searched. The use of additional databases specific to various health professional disciplines may have assisted in identifying relevant studies. However, the broad search strategy was validated by several academic librarians, implemented iteratively over a 2-year period, and was used to cover an extensive range of HPE initiatives around the world. A major strength of this review is the use of rigorous methods to analyze the data, particularly a deductive analysis that brought clarity to the large discrepancy identified in the inductive analysis.

Conclusion

BLPs are being implemented in the field of HPE globally. The introduction of these programs has further increased due to the COVID-19 pandemic. To ensure that learners can benefit from BLPs, they must be evaluated and systematically improved over time. Our findings indicate that the comparability and generalizability of BLP evaluative research in HPE appear compromised. Critical concepts such as effectiveness, efficiency, and satisfaction are often poorly labeled or conceptualized across HPE literature examining BLPs. This is coupled with a lack of uptake of, or reference to, established evaluative frameworks to guide scholars. Ultimately, the concepts and methods used to evaluate BLPs in the field of HPE are disparate. However, adoption of the ISO framework for usability can address these issues by establishing clear definitions for scholars to consider with respect to various evaluative concepts. Also, scholars already seem to be discussing and evaluating usability, though implicitly. Explicit acceptance of this framework may facilitate the adoption of a common language among scholars conducting BLP evaluations in HPE. A conceptual map that clarifies the consideration of usability evaluation in the current context provides a foundation for the future development of instruments to evaluate usability in BLPs within HPE. This may facilitate the adoption of a common set of methods or frameworks, which ultimately may allow for increased rigor and systematization of BLP evaluations in HPE, factors that may determine the overall utility, impact, and value of these programs amidst and beyond a global pandemic.

Acknowledgements

We would like to thank all academic librarians who assisted with this study: Genevieve Gore, Ekatarina Grguric, Lucy Kiester, and Vera Granikov.

Funding

This study received funding through McGill University’s Department of Family Medicine and a Canada Graduate Scholarship – Master’s through the Social Sciences and Humanities Research Council.

Declarations

Ethics Approval

This scoping review is a part of a larger mixed-method study, the protocol for which was approved by the McGill University Faculty of Medicine’s Institutional Review Board in June 2018 (reference number: A06 E42 18A).

Conflict of Interest

The authors declare no competing interests.

Disclaimer

The present research was conducted as part of the first author’s (Anish Arora’s) Master of Science thesis. Dr. Charo Rodriguez was the primary supervisor, and Dr. Tibor Schuster was the co-supervisor. Dr. Tamara Carver was part of the thesis committee.

References

- 1. Garrison DR, Kanuka H. Blended learning: uncovering its transformative potential in higher education. Internet High Educ. 2004;7(2):95–105. doi: 10.1016/j.iheduc.2004.02.001.

- 2. Garrison DR, Vaughan ND. Blended learning in higher education: framework, principles, and guidelines. John Wiley & Sons; 2008.

- 3. Williams C. Learning on-line: a review of recent literature in a rapidly expanding field. J Furth High Educ. 2002;26(3):263–272. doi: 10.1080/03098770220149620.

- 4. Frenk J, Chen L, Bhutta ZA, Cohen J, Crisp N, Evans T, Kistnasamy B. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. The Lancet. 2010;376(9756):1923–1958. doi: 10.1016/S0140-6736(10)61854-5.

- 5. Graham CR. Blended learning systems. The handbook of blended learning: Global perspectives, local designs; 2006. pp. 3–21.

- 6. Graham CR, Woodfield W, Harrison JB. A framework for institutional adoption and implementation of blended learning in higher education. Internet High Educ. 2013;18:4–14. doi: 10.1016/j.iheduc.2012.09.003.

- 7. Vahed M, Hossain R, Min R, Yu R, Javed S, Nguyen T. The future of blended learning: a report for students and policy makers. Federation of Canadian Secondary Students. 2017.

- 8. Ehrlich H, McKenney M, Elkbuli A. We asked the experts: virtual learning in surgical education during the COVID-19 pandemic—shaping the future of surgical education and training. World J Surg. 1. 2020.

- 9. Moszkowicz D, Duboc H, Dubertret C, Roux D, Bretagnol F. Daily medical education for confined students during COVID-19 pandemic: a simple videoconference solution. Clin Anat. 2020.

- 10. Torda AJ, Velan G, Perkovic V. The impact of COVID-19 pandemic on medical education. Med J Aust. 1. 2020.

- 11. Jones K, Sharma R. On reimagining a future for online learning in the post-COVID era. Springer; 2020.

- 12. Nerantzi C. The use of peer instruction and flipped learning to support flexible blended learning during and after the COVID-19 Pandemic. International Journal of Management and Applied Research. 2020;7(2):184–195. doi: 10.18646/2056.72.20-013.

- 13. Bersin J. The blended learning book: best practices, proven methodologies, and lessons learned. John Wiley & Sons; 2004.

- 14. Choules AP. The use of elearning in medical education: a review of the current situation. Postgrad Med J. 2007;83(978):212–216. doi: 10.1136/pgmj.2006.054189.

- 15. Masters K, Ellaway R. e-Learning in medical education Guide 32 Part 2: technology, management and design. Med Teach. 2008;30(5):474–489. doi: 10.1080/01421590802108349.

- 16. Means B, Toyama Y, Murphy R, Baki M. The effectiveness of online and blended learning: a meta-analysis of the empirical literature. Teach Coll Rec. 2013;115(3):1–47.

- 17. Pereira JA, Pleguezuelos E, Merí A, Molina-Ros A, Molina-Tomás MC, Masdeu C. Effectiveness of using blended learning strategies for teaching and learning human anatomy. Med Educ. 2007;41(2):189–195. doi: 10.1111/j.1365-2929.2006.02672.x.

- 18. Ruiz JG, Mintzer MJ, Leipzig RM. The impact of e-learning in medical education. Acad Med. 2006;81(3):207–212. doi: 10.1097/00001888-200603000-00002.

- 19. Twigg CA. Improving quality and reducing costs: lessons learned from round III of the pew grant program in course redesign. National Center for Academic Transformation. Retrieved 2011. 2004.

- 20. Precel K, Eshet-Alkalai Y, Alberton Y. Pedagogical and design aspects of a blended learning course. International Review of Research in Open and Distributed Learning. 2009;10(2).

- 21. Watson J. Blended learning: the convergence of online and face-to-face education. North American Council for Online Learning: Promising Practices in Online Learning; 2008.

- 22. Singh H. Building effective blended learning programs. Educational Technology-Saddle Brook Then Englewood Cliffs NJ. 2003;43(6):51–54.

- 23. Allen IE, Seaman J, Garrett R. Blending in: the extent and promise of blended education in the United States. Sloan Consortium. 2007.

- 24. Gedik N, Kiraz E, Ozden MY. Design of a blended learning environment: considerations and implementation issues. Australas J Educ Technol. 2013;29(1).

- 25. O'Connor C, Mortimer D, Bond S. Blended learning: Issues, benefits and challenges. International Journal of Employment Studies. 2011;19(2):63.

- 26. Sandars J. The importance of usability testing to allow e-learning to reach its potential for medical education. Educ Prim Care. 2010;21(1):6–8. doi: 10.1080/14739879.2010.11493869.

- 27. Asarbakhsh M, Sandars J. E-learning: the essential usability perspective. Clin Teach. 2013;10(1):47–50. doi: 10.1111/j.1743-498X.2012.00627.x.

- 28. Parlangeli O, Marchigiani E, Bagnara S. Multimedia systems in distance education: effects of usability on learning. Interact Comput. 1999;12(1):37–49. doi: 10.1016/S0953-5438(98)00054-X.

- 29. International Organization for Standardization. Ergonomics of human-system interaction — part 11: usability: definitions and concepts. ISO 9241-11:2018(en). 2018. Retrieved from https://www.iso.org/obp/ui/#iso:std:iso:9241:-11:ed-2:v1:en.

- 30. Bevan N, Carter J, Harker S. ISO 9241-11 revised: what have we learnt about usability since 1998? In: International Conference on Human-Computer Interaction. Springer, Cham; 2015. pp. 143–151.

- 31. Bevan N, Carter J, Earthy J, Geis T, Harker S. New ISO standards for usability, usability reports and usability measures. In: International Conference on Human-Computer Interaction. Springer, Cham; 2016. pp. 268–278.

- 32. Frøkjær E, Hertzum M, Hornbæk K. Measuring usability: are effectiveness, efficiency, and satisfaction really correlated? In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2000. pp. 345–352.

- 33. Hansen GK, Olsson N, Blakstad SH. Usability evaluations–user experiences–usability evidence. In: CIB Proceedings: Publication. 2010;336:37–48.

- 34. Van Nuland SE, Eagleson R, Rogers KA. Educational software usability: artifact or design? Anat Sci Educ. 2017;10(2):190–199. doi: 10.1002/ase.1636.

- 35. Jeng J. Usability assessment of academic digital libraries: effectiveness, efficiency, satisfaction, and learnability. Libri. 2005;55(2–3):96–121.

- 36. Seffah A, Donyaee M, Kline RB, Padda HK. Usability measurement and metrics: a consolidated model. Software Qual J. 2006;14(2):159–178. doi: 10.1007/s11219-006-7600-8.

- 37. Ssemugabi S, De Villiers MR. Effectiveness of heuristic evaluation in usability evaluation of e-learning applications in higher education. South African Computer Journal. 2010;45:26–39.

- 38. Sandars J, Lafferty N. Twelve tips on usability testing to develop effective e-learning in medical education. Med Teach. 2010;32(12):956–960. doi: 10.3109/0142159X.2010.507709.

- 39. Zaharias P, Poylymenakou A. Developing a usability evaluation method for e-learning applications: beyond functional usability. Int J Hum Comput Interact. 2009;25(1):75–98. doi: 10.1080/10447310802546716.

- 40. Arksey H, O'Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32. doi: 10.1080/1364557032000119616.

- 41. Tricco AC, Lillie E, Zarin W, O'Brien KK, Colquhoun H, Levac D, Hempel S. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–473. doi: 10.7326/M18-0850.

- 42. Colquhoun HL, Levac D, O'Brien KK, Straus S, Tricco AC, Perrier L, Moher D. Scoping reviews: time for clarity in definition, methods, and reporting. J Clin Epidemiol. 2014;67(12):1291–1294. doi: 10.1016/j.jclinepi.2014.03.013.

- 43. Morris M, Boruff JT, Gore GC. Scoping reviews: establishing the role of the librarian. J Med Libr Assoc: JMLA. 2016;104(4):346. doi: 10.3163/1536-5050.104.4.020.

- 44. Peters MD, Godfrey CM, Khalil H, McInerney P, Parker D, Soares CB. Guidance for conducting systematic scoping reviews. Int J Evid Based Healthc. 2015;13(3):141–146. doi: 10.1097/XEB.0000000000000050.

- 45. EBSCO. ERIC. 2018. Retrieved from https://www.ebsco.com/products/research-databases/eric.

- 46. Elsevier. Scopus: Access and use Support Centre. 2018. Retrieved from https://service.elsevier.com/app/answers/detail/a_id/15534/supporthub/scopus/#tips.

- 47. Mann K, Gordon J, MacLeod A. Reflection and reflective practice in health professions education: a systematic review. Adv Health Sci Educ. 2009;14(4):595–621. doi: 10.1007/s10459-007-9090-2.

- 48. World Health Organization. Definition and list of health professionals: transformative education for health professionals. n.d. Retrieved from https://whoeducationguidelines.org/content/1-definition-and-list-health-professionals.

- 49. Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–1288. doi: 10.1177/1049732305276687.

- 50. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77–101. doi: 10.1191/1478088706qp063oa.

- 51. Elebiary HA, Al Mahmoud S. Enhancing blended courses to facilitate student achievement of learning outcomes. Life Science Journal. 2013;10(2).

- 52. Koo CL, Demps EL, Farris C, Bowman JD, Panahi L, Boyle P. Impact of flipped classroom design on student performance and perceptions in a pharmacotherapy course. Am J Pharm Educ. 2016;80(2).

- 53. Lewis CE, Chen DC, Relan A. Implementation of a flipped classroom approach to promote active learning in the third-year surgery clerkship. The American Journal of Surgery. 2018;215(2):298–303. doi: 10.1016/j.amjsurg.2017.08.050.

- 54. Lorimer J, Hilliard A. Incorporating learning technologies into undergraduate radiography education. Radiography. 2009;15(3):214–219. doi: 10.1016/j.radi.2009.02.003.

- 55. Kugler AJ, Gogineni HP, Garavalia LS. Learning outcomes and student preferences with flipped vs lecture/case teaching model in a block curriculum. Am J Pharm Educ. 2019;83(8):1759–1766. doi: 10.5688/ajpe7044.

- 56. Green RA, Whitburn LY. Impact of introduction of blended learning in gross anatomy on student outcomes. Anat Sci Educ. 2016;9(5):422–430. doi: 10.1002/ase.1602.

- 57. Howlett D, Vincent T, Watson G, Owens E, Webb R, Gainsborough N, Vincent R. Blending online techniques with traditional face to face teaching methods to deliver final year undergraduate radiology learning content. Eur J Radiol. 2011;78(3):334–341. doi: 10.1016/j.ejrad.2009.07.028.

- 58. Kangwantas K, Pongwecharak J, Rungsardthong K, Jantarathaneewat K, Sappruetthikun P, Maluangnon K. Implementing a flipped classroom approach to a course module in fundamental nutrition for pharmacy students. Pharm Educ. 2017;17.

- 59. Kühl SJ, Toberer M, Keis O, Tolks D, Fischer MR, Kühl M. Concept and benefits of the Inverted Classroom method for a competency-based biochemistry course in the pre-clinical stage of a human medicine course of studies. GMS J Med Educ. 2017;34(3).

- 60. Mary S, Julie J, Jennifer G. Teaching evidence based practice and research through blended learning to undergraduate midwifery students from a practice based perspective. Nurse Educ Pract. 2014;14(2):220–224. doi: 10.1016/j.nepr.2013.10.001.

- 61. Matsuda Y, Azaiza K, Salani D. Flipping the classroom without flipping out the students. Distance Learning. 2017;14(1):31.

- 62. Ocak MA, Topal AD. Blended learning in anatomy education: a study investigating medical students’ perceptions. Eurasia Journal of Mathematics, Science and Technology Education. 2015;11(3):647–683. doi: 10.12973/eurasia.2015.1326a.

- 63. Oh J, Kim SJ, Kim S, Vasuki R. Evaluation of the effects of flipped learning of a nursing informatics course. J Nurs Educ. 2017;56(8):477–483. doi: 10.3928/01484834-20170712-06.

- 64. Salajegheh A, Jahangiri A, Dolan-Evans E, Pakneshan S. A combination of traditional learning and e-learning can be more effective on radiological interpretation skills in medical students: a pre-and post-intervention study. BMC Med Educ. 2016;16(1):46. doi: 10.1186/s12909-016-0569-5.