Abstract

Diagnosis of Parkinson's disease (PD) is commonly based on medical observations and assessment of clinical signs, including the characterization of a variety of motor symptoms. However, traditional diagnostic approaches may suffer from subjectivity, as they rely on the evaluation of movements that are sometimes subtle to the human eye and therefore difficult to classify, leading to possible misclassification. Meanwhile, early non-motor symptoms of PD may be mild and can be caused by many other conditions. These symptoms are therefore often overlooked, making diagnosis of PD at an early stage challenging. To address these difficulties and to refine the diagnosis and assessment procedures of PD, machine learning methods have been implemented for the classification of PD versus healthy controls or patients with similar clinical presentations (e.g., movement disorders or other Parkinsonian syndromes). To provide a comprehensive overview of the data modalities and machine learning methods that have been used in the diagnosis and differential diagnosis of PD, we conducted a literature review of studies published until February 14, 2020, using the PubMed and IEEE Xplore databases. A total of 209 studies were included; relevant information was extracted from each, and we present their aims, sources of data, types of data, machine learning methods and associated outcomes. These studies demonstrate a high potential for the adoption of machine learning methods and novel biomarkers in clinical decision making, leading to increasingly systematic, informed diagnosis of PD.

Keywords: Parkinson's disease, machine learning, deep learning, diagnosis, differential diagnosis

Introduction

Parkinson's disease (PD) is one of the most common neurodegenerative diseases, with a prevalence of 1% in the population above 60 years of age and of 1–2 per 1,000 in the general population (Tysnes and Storstein, 2017). The estimated global number of people with PD more than doubled from 1990 to 2016 (from 2.5 million to 6.1 million), a result of both the growing number of elderly people and increasing age-standardized prevalence rates (Dorsey et al., 2018). PD is a progressive neurological disorder associated with motor and non-motor features (Jankovic, 2008), and its motor impairment affects multiple aspects of movement, including planning, initiation and execution (Contreras-Vidal and Stelmach, 1995).

As the disease progresses, movement-related symptoms such as tremor, rigidity and difficulties in movement initiation can be observed, prior to cognitive and behavioral alterations including dementia (Opara et al., 2012). PD severely affects patients' quality of life (QoL), social functions and family relationships, and places a heavy economic burden on individuals and society (Johnson et al., 2013; Kowal et al., 2013; Yang and Chen, 2017).

The diagnosis of PD is traditionally based on motor symptoms. Despite the establishment of cardinal signs of PD in clinical assessments, most of the rating scales used in the evaluation of disease severity have not been fully evaluated and validated (Jankovic, 2008). Although non-motor symptoms (e.g., cognitive changes such as problems with attention and planning, sleep disorders, sensory abnormalities such as olfactory dysfunction) are present in many patients prior to the onset of PD (Jankovic, 2008; Tremblay et al., 2017), they lack specificity, are complicated to assess and/or vary from patient to patient (Zesiewicz et al., 2006). Therefore, non-motor symptoms do not yet allow PD to be diagnosed on their own (Braak et al., 2003), although some have been used as supportive diagnostic criteria (Postuma et al., 2015).

Machine learning techniques are being increasingly applied in the healthcare sector. As its name implies, machine learning allows a computer program to learn and extract meaningful representations from data in a semi-automatic manner. For the diagnosis of PD, machine learning models have been applied to a multitude of data modalities, including handwritten patterns (Drotár et al., 2015; Pereira et al., 2018), movement (Yang et al., 2009; Wahid et al., 2015; Pham and Yan, 2018), neuroimaging (Cherubini et al., 2014a; Choi et al., 2017; Segovia et al., 2019), voice (Sakar et al., 2013; Ma et al., 2014), cerebrospinal fluid (CSF) (Lewitt et al., 2013; Maass et al., 2020), cardiac scintigraphy (Nuvoli et al., 2019), serum (Váradi et al., 2019), and optical coherence tomography (OCT) (Nunes et al., 2019). Machine learning also allows different modalities to be combined in the diagnosis of PD, such as magnetic resonance imaging (MRI) and single-photon emission computed tomography (SPECT) data (Cherubini et al., 2014b; Wang et al., 2017). By using machine learning approaches, we may therefore identify relevant features that are not traditionally used in the clinical diagnosis of PD and rely on these alternative measures to detect PD in preclinical stages or atypical forms.

In recent years, the number of publications on the application of machine learning to the diagnosis of PD has increased. Although previous studies have reviewed the use of machine learning in the diagnosis and assessment of PD, they were limited to the analysis of motor symptoms, kinematics, and wearable sensor data (Ahlrichs and Lawo, 2013; Ramdhani et al., 2018; Belić et al., 2019). Moreover, some of these reviews only included studies published between 2015 and 2016 (Pereira et al., 2019). In this study, we aim to (a) comprehensively summarize all published studies that applied machine learning models to the diagnosis of PD, providing an exhaustive overview of data sources, data types, machine learning models, and associated outcomes, (b) assess and compare the feasibility and efficiency of different machine learning methods in the diagnosis of PD, and (c) provide machine learning practitioners interested in the diagnosis of PD with an overview of previously used models, data modalities and associated outcomes, together with recommendations on how experimental protocols and results could be reported to facilitate reproduction. Overall, the application of machine learning to clinical and non-clinical data of different modalities has often led to high diagnostic accuracy in human participants, which may encourage the adoption of machine learning algorithms and novel biomarkers in clinical settings to assist more accurate and informed decision making.

Methods

Search Strategy

A literature search was conducted on the PubMed (https://pubmed.ncbi.nlm.nih.gov) and IEEE Xplore (https://ieeexplore.ieee.org/search/advanced/command) databases on February 14, 2020, and all returned results were considered. The Boolean search strings used are shown in Table 1. No additional filters were applied in the literature search. All retrieved studies were systematically identified, screened and extracted for relevant information following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Moher et al., 2009).

Table 1.

Boolean search strings used for the retrieval of relevant publications on PubMed and IEEE Xplore databases.

| Database | Boolean search string |

|---|---|

| PubMed | (“Parkinson Disease”[Mesh] OR Parkinson*) AND (“Machine Learning”[Mesh] OR machine learn* OR machine-learn* OR deep learn* OR deep-learn*) AND (human OR patient) AND (“Diagnosis”[Mesh] OR diagnos* OR detect* OR classif* OR identif*) NOT review[Publication Type] |

| IEEE Xplore | (Parkinson*) AND (machine learn* OR machine-learn* OR deep learn* OR deep-learn*) AND (human OR patient) AND (diagnosis OR diagnose OR diagnosing OR detection OR detect OR detecting OR classification OR classify OR classifying OR identification OR identify OR identifying) |
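For readers who want to rerun the PubMed query programmatically, the sketch below builds an NCBI E-utilities `esearch` request URL for the PubMed string in Table 1. It is our illustration, not the procedure used in this review (the search was run through the databases themselves). The `retmax` value and the Entrez-date window ending on 2020/02/14 are assumptions, and the count returned today may still differ from the 427 records reported, because PubMed records and MeSH indexing change over time.

```python
from urllib.parse import urlencode

# Public NCBI E-utilities endpoint for keyword searches.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

# PubMed string from Table 1, with straight quotes in place of typographic ones.
PUBMED_QUERY = (
    '("Parkinson Disease"[Mesh] OR Parkinson*) '
    'AND ("Machine Learning"[Mesh] OR machine learn* OR machine-learn* '
    'OR deep learn* OR deep-learn*) '
    'AND (human OR patient) '
    'AND ("Diagnosis"[Mesh] OR diagnos* OR detect* OR classif* OR identif*) '
    'NOT review[Publication Type]'
)


def build_esearch_url(term: str, max_date: str = "2020/02/14", retmax: int = 1000) -> str:
    """Build an esearch URL for `term`, limited to records added up to `max_date`.

    The Entrez-date window is an assumption meant to approximate the state of
    PubMed on the review's search date; it does not undo later re-indexing.
    """
    params = {
        "db": "pubmed",
        "term": term,
        "retmode": "json",
        "retmax": retmax,
        "datetype": "edat",       # Entrez date: when the record entered PubMed
        "mindate": "1900/01/01",  # esearch expects mindate and maxdate together
        "maxdate": max_date,
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_esearch_url(PUBMED_QUERY))
```

Fetching the URL (for example with `urllib.request.urlopen`) returns JSON whose `esearchresult` object carries a `count` field. NCBI asks automated clients to respect its request-rate limits and to identify themselves with the `tool` and `email` parameters.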

Inclusion and Exclusion Criteria

Studies that used machine learning methods and satisfied one or more of the following criteria were included:

Classification of PD from healthy controls (HC),

Classification of PD from Parkinsonism (e.g., progressive supranuclear palsy (PSP) and multiple system atrophy (MSA)), and

Classification of PD from other movement disorders (e.g., essential tremor (ET)).

Studies falling into one or more of the following categories were excluded:

Studies related to Parkinsonism and/or diseases other than PD that did not involve classification or detection of PD (e.g., differential diagnosis of PSP, MSA, and other atypical Parkinsonian disorders),

Studies not related to the diagnosis of PD (e.g., subtyping or severity assessment, analysis of behavior, disease progression, treatment outcome prediction, identification and localization of brain structures, or parameter optimization during surgery),

Studies related to the diagnosis of PD that performed analysis and assessed model performance at the sample level (e.g., classification using individual MRI scans without aggregating scan-level performance to the patient level),

Classification of PD from non-Parkinsonian conditions (e.g., Alzheimer's disease),

Studies that did not use metrics that measure classification performance,

Studies that used organisms other than humans (e.g., Caenorhabditis elegans, mice or rats),

Studies that did not provide sufficient or accurate descriptions of the machine learning methods, datasets or subjects used (e.g., did not provide the sample size, or incorrectly described the dataset(s) used),

Publications that were not original journal articles or conference proceedings papers (e.g., reviews and viewpoint papers), and

Publications in languages other than English.
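The sample-level exclusion criterion above rests on the difference between scan-level and patient-level evaluation. As a minimal sketch, assuming majority voting as the aggregation rule (one of several possible choices; averaging predicted probabilities is another), scan-level predictions can be collapsed to one prediction per patient before accuracy is computed. The function names, patient IDs and labels below are hypothetical.

```python
from collections import Counter, defaultdict


def patient_level_predictions(scan_preds):
    """Collapse (patient_id, predicted_label) pairs into one label per patient.

    Uses a majority vote (an assumed rule). On a tie, Counter.most_common
    returns the label that was encountered first for that patient.
    """
    by_patient = defaultdict(list)
    for patient_id, label in scan_preds:
        by_patient[patient_id].append(label)
    return {pid: Counter(labels).most_common(1)[0][0] for pid, labels in by_patient.items()}


def patient_accuracy(pred, truth):
    """Fraction of patients whose aggregated prediction matches the true diagnosis."""
    return sum(pred[pid] == truth[pid] for pid in truth) / len(truth)


# Hypothetical example: three patients with several scans each.
scans = [("p1", "PD"), ("p1", "PD"), ("p1", "HC"),
         ("p2", "HC"), ("p2", "HC"),
         ("p3", "PD"), ("p3", "HC"), ("p3", "HC")]
truth = {"p1": "PD", "p2": "HC", "p3": "PD"}

per_patient = patient_level_predictions(scans)
scan_acc = sum(label == truth[pid] for pid, label in scans) / len(scans)
print(per_patient, scan_acc, patient_accuracy(per_patient, truth))
```

In this toy case the scan-level accuracy (5/8) and the patient-level accuracy (2/3) differ, which illustrates why scan-level figures are not directly comparable with the patient-level results summarized in this review.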

Data Extraction

The following information is included in the data extraction table: (1) objectives, (2) type of diagnosis (diagnosis, differential diagnosis, sub-typing), (3) data source, (4) data type, (5) number of subjects, (6) machine learning method(s), splitting strategy and cross validation, (7) associated outcomes, (8) year, and (9) reference.

For studies published online first and archived in a different year, “year of publication” was defined as the year in which the study was published online. If this information was unavailable, the year in which the article was copyrighted was regarded as the year of publication. For studies that introduced novel models and used existing models merely for comparison, information related to the novel models was extracted. Classification of PD versus scans without evidence of dopaminergic deficit (SWEDD) was treated as subtyping (Erro et al., 2016).
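One way to keep the nine extraction fields and the year-of-publication rule consistent across reviewers is to encode them as a typed record. The sketch below is illustrative only: the class name, field names and example values are hypothetical and do not describe the authors' actual extraction sheet.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ExtractionRecord:
    """Hypothetical record mirroring fields (1)-(9) of the extraction table."""
    objectives: str
    diagnosis_types: List[str]   # subset of {"diagnosis", "differential diagnosis", "sub-typing"}
    data_source: str
    data_type: str
    n_subjects: int
    methods: str                 # model(s), splitting strategy and cross validation
    outcomes: str
    reference: str
    online_year: Optional[int] = None     # year first published online, if known
    copyright_year: Optional[int] = None  # fallback when the online year is unknown

    @property
    def year(self) -> Optional[int]:
        """Year of publication: the online year if known, else the copyright year."""
        if self.online_year is not None:
            return self.online_year
        return self.copyright_year


# Hypothetical entry: published online in 2019, archived in a 2020 issue.
example = ExtractionRecord(
    objectives="Classification of PD from HC",
    diagnosis_types=["diagnosis"],
    data_source="(hypothetical source)",
    data_type="(hypothetical data type)",
    n_subjects=31,
    methods="(hypothetical method)",
    outcomes="(hypothetical outcome)",
    reference="(hypothetical reference)",
    online_year=2019,
    copyright_year=2020,
)
print(example.year)  # → 2019
```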

Study Objectives

To outline the different goals and objectives of included studies, we have further categorized them based on the type of diagnosis and their general aim. From the perspective of diagnostics, these studies could be divided into (a) the diagnosis or detection of PD (which compares data collected from PD patients and healthy controls), (b) differential diagnosis (discrimination between patients with idiopathic PD and patients with atypical Parkinsonism), and (c) sub-typing (discrimination among sub-types of PD).

Included studies were also analyzed for their general aim. Studies focusing on the development of novel technical approaches for the diagnosis of Parkinson's disease that had not previously been presented and/or employed (e.g., new machine learning and deep learning models and architectures, data acquisition devices, and feature extraction algorithms) were defined as “methodology” studies. Studies that validated and investigated (i) the application of previously published and validated machine learning and deep learning models and/or (ii) the feasibility of introducing data modalities that are not commonly used in the machine learning-based diagnosis of PD (e.g., CSF data) were defined as “clinical application” studies.

Model Evaluation

In the present study, accuracy was used to compare the performance of machine learning models. For each data type, we summarized the types of machine learning models that achieved the per-study highest accuracy. However, some studies tested only one machine learning model. We therefore defined the “model associated with the per-study highest accuracy” as (a) the only model implemented and used in a study or (b) in studies that used multiple models, the model that achieved the highest accuracy or that was highlighted by the authors. Results are expressed as mean (SD).

For studies reporting both training and testing/validation accuracy, the testing or validation accuracy was considered. For studies that reported both validation and test accuracy, the test accuracy was considered. For studies with more than one dataset or classification problem (e.g., HC vs. PD and HC vs. idiopathic hyposmia vs. PD), accuracy was averaged across datasets or classification problems. For studies that reported classification accuracy for each group of subjects individually, accuracy was averaged across groups. For studies reporting a range of accuracies, or accuracies obtained with different cross validation methods or feature combinations, the highest accuracy was considered. In studies that compared HC with diseases other than PD, or PD with diseases other than Parkinsonism, results for diseases other than PD or Parkinsonism (e.g., amyotrophic lateral sclerosis) were not considered. Accuracy of severity assessment was not considered.
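The selection and averaging rules above can be expressed compactly. The following is a minimal sketch under our own assumptions: each study's results are given as a hypothetical list of dictionaries with keys `problem`, `split` (`"test"`, `"validation"` or `"train"`) and `accuracy`, where several entries for the same problem and split stand for different cross validation methods or feature combinations. Group-wise accuracies are assumed to have been averaged into one entry beforehand, and the use of the sample SD is our choice, as the text does not specify it.

```python
from statistics import mean, stdev

# Preference order when several splits are reported for the same problem.
SPLIT_PRIORITY = ("test", "validation", "train")


def study_accuracy(results):
    """Reduce one study's reported accuracies to a single value.

    `results` is a hypothetical list of dicts with keys "problem", "split"
    and "accuracy"; group-wise accuracies are assumed to be pre-averaged.
    """
    per_problem = []
    for problem in {r["problem"] for r in results}:
        rows = [r for r in results if r["problem"] == problem]
        for split in SPLIT_PRIORITY:
            candidates = [r["accuracy"] for r in rows if r["split"] == split]
            if candidates:
                # Several entries = CV schemes or feature sets: keep the highest.
                per_problem.append(max(candidates))
                break
    # Average across datasets or classification problems.
    return mean(per_problem)


def summarize(study_accuracies):
    """Mean and SD across studies (sample SD is an assumption)."""
    return mean(study_accuracies), stdev(study_accuracies)


# Hypothetical study with two classification problems.
study = [
    {"problem": "HC vs. PD", "split": "train", "accuracy": 0.98},
    {"problem": "HC vs. PD", "split": "test", "accuracy": 0.90},
    {"problem": "HC vs. PD", "split": "test", "accuracy": 0.93},
    {"problem": "HC vs. IH vs. PD", "split": "validation", "accuracy": 0.81},
]
print(round(study_accuracy(study), 4))  # → 0.87
```

Here the training accuracy of 0.98 is ignored because a test accuracy exists, the better of the two test results (0.93) is kept, and the two problems are then averaged.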

Results

Literature Review

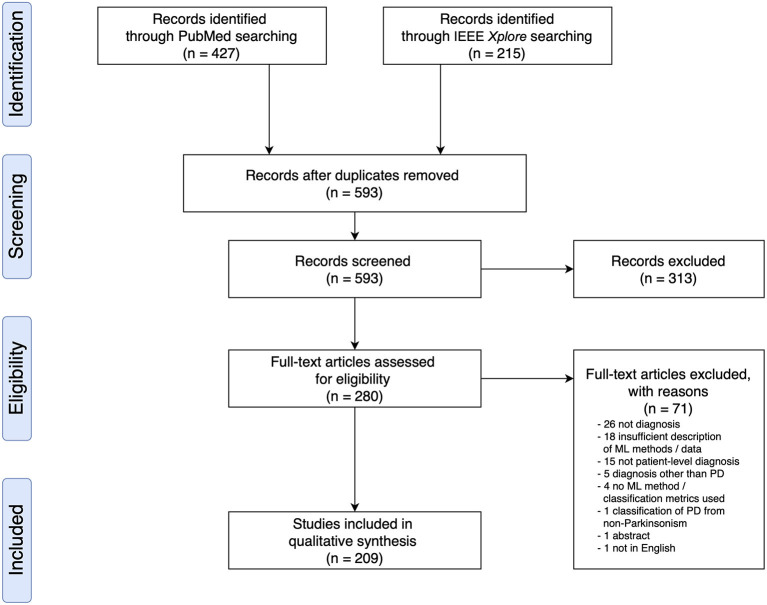

Based on the search criteria, we retrieved 427 (PubMed) and 215 (IEEE Xplore) search results, a total of 642 publications. After removing duplicates, we screened the titles and abstracts of 593 publications, excluded 313 based on the exclusion criteria, and examined 280 full-text articles. Overall, 209 research articles were included for data extraction (Figure 1; see Supplementary Materials for a full list of included studies). All articles were published in 2009 or later, and the number of papers published per year increased over time (Supplementary Figure 1).
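The record counts above are internally consistent. The short sketch below re-derives the two quantities that the text leaves implicit, namely the number of duplicates removed and the number of full-text articles excluded, assuming that no records entered the process from sources other than the two databases. Variable names are ours.

```python
# Counts reported in the text (Figure 1).
pubmed, ieee = 427, 215
screened = 593               # titles/abstracts screened after de-duplication
excluded_on_abstract = 313
included = 209

identified = pubmed + ieee                     # all retrieved records
duplicates = identified - screened             # removed before screening
full_text = screened - excluded_on_abstract    # full texts examined
excluded_on_full_text = full_text - included   # excluded after full-text review

print(identified, duplicates, full_text, excluded_on_full_text)  # → 642 49 280 71
```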

Figure 1.

PRISMA Flow Diagram of Literature Search and Selection Process showing the number of studies identified, screened, extracted, and included in the review.

Data Source and Sample Size

In 93 out of 209 studies (44.5%), original data were collected from human participants. In 108 studies (51.7%), data were obtained from public repositories and databases, including the University of California at Irvine (UCI) Machine Learning Repository (Dua and Graff, 2018) (n = 44), Parkinson's Progression Markers Initiative (Marek et al., 2011) (PPMI; n = 33), PhysioNet (Goldberger et al., 2000) (n = 15), HandPD dataset (Pereira et al., 2015) (n = 6), mPower database (Bot et al., 2016) (n = 4), and 6 other databases (Mucha et al., 2018; Vlachostergiou et al., 2018; Bhati et al., 2019; Hsu et al., 2019; Taleb et al., 2019; Wodzinski et al., 2019; Table 2).

Table 2.

Source of data of the included studies.

| Data source/Database | Number of studies | Percentage |

|---|---|---|

| Independent recruitment of human participants | 93 | 44.50% |

| UCI Machine Learning Repository | 44 | 21.05% |

| PPMI database | 33 | 15.79% |

| PhysioNet | 15 | 7.18% |

| HandPD dataset | 6 | 2.87% |

| mPower database | 4 | 1.91% |

| Other databases (1 PACS, 1 PaHaW, 1 PC-GITA database, 1 PDMultiMC database, 1 Neurovoz corpus, 1 The NTUA Parkinson Dataset) | 6 | 2.87% |

| Collected postmortem | 1 | 0.48% |

| Commercially sourced | 1 | 0.48% |

| Acquired at another institution | 1 | 0.48% |

| From another study | 1 | 0.48% |

| From the authors' institutional database | 1 | 0.48% |

| Others (1 PPMI + Sheffield Teaching Hospitals NHS Foundation Trust; 1 PPMI + Seoul National University Hospital cohort; 1 UCI + collected from participants) | 3 | 1.44% |

PACS, Picture Archiving and Communication System; PaHaW, Parkinson's Disease Handwriting Database.

In 3 studies, data from public repositories were combined with data from local databases or participants (Agarwal et al., 2016; Choi et al., 2017; Taylor and Fenner, 2017). In the remaining studies, data were obtained from another study (Wahid et al., 2015, using data from Fernandez et al., 2013), collected at another institution (Segovia et al., 2019), obtained from the authors' institutional database (Nunes et al., 2019), collected postmortem (Lewitt et al., 2013), or commercially sourced (Váradi et al., 2019).

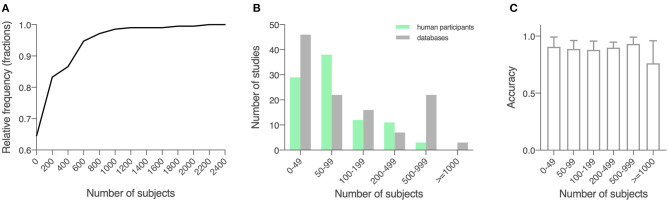

The 209 studies had an average sample size of 184.6 (289.3), with the smallest sample size being 10 (Kugler et al., 2013) and the largest 2,289 (Tracy et al., 2019; Figure 2A). For studies that recruited human participants (n = 93), data from an average of 118.0 (142.9) participants were collected (range: 10–920; Figure 2B). For the other studies (n = 116), the average sample size was 238.1 (358.5) (range: 30–2,289; Figure 2B). For the average accuracy reported in these studies in relation to sample size, see Figure 2C.

Figure 2.

Sample size of the included studies. (A) Cumulative relative frequency graph depicting the frequency of the sample sizes studied. (B) Histogram of sample sizes in the ranges 0–50, 50–100, 100–200, 200–500, 500–1,000, and over 1,000 for studies using locally recruited human participants and studies using previously published open databases. Green, studies using locally recruited human participants; gray, studies using data sourced from public databases. (C) Model performance as measured by accuracy in relation to sample size, shown as mean (SD).
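The Figure 2B ranges share their endpoints (50 appears in two ranges, for example), so assigning a study to a bin requires a convention. A minimal sketch, assuming half-open bins [low, high) with 1,000 and above in the last bin; the bin labels are ours, and the example sizes are hypothetical apart from the reported minimum (10) and maximum (2,289).

```python
from bisect import bisect_right
from collections import Counter
from statistics import mean, stdev

# Assumed half-open bins: [0, 50), [50, 100), [100, 200), [200, 500), [500, 1000), [1000, inf)
EDGES = [50, 100, 200, 500, 1000]
LABELS = ["0-50", "50-100", "100-200", "200-500", "500-1,000", "1,000+"]


def bin_label(n: int) -> str:
    """Return the histogram bin for a sample size n (n >= 0)."""
    # bisect_right counts the edges <= n, which is the index of n's bin.
    return LABELS[bisect_right(EDGES, n)]


# Hypothetical sample sizes; only 10 and 2,289 are values reported in the text.
sizes = [10, 45, 120, 380, 2289]
print(Counter(bin_label(n) for n in sizes))
print(f"{mean(sizes):.1f} ({stdev(sizes):.1f})")  # mean (SD), the format used in this review
```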

Study Objectives

Although “diagnosis of PD” was used as the search criterion, the included studies applied machine learning for diagnosis (PD vs. HC), differential diagnosis (idiopathic PD vs. atypical Parkinsonism) and sub-typing (differentiation of sub-types of PD). Most studies focused on diagnosis alone (n = 168, 80.4%) or differential diagnosis alone (n = 20, 9.6%). Fourteen studies (6.7%) performed both diagnosis and differential diagnosis, 5 studies (2.4%) diagnosed and subtyped PD, and 2 studies (1.0%) included diagnosis, differential diagnosis, and subtyping.

Among the included studies, a total of 132 studies (63.2%) implemented and tested a machine learning method, a model architecture, a diagnostic system, a feature extraction algorithm, or a device for non-invasive, low-cost data acquisition that had not previously been established for the detection and early diagnosis of PD (methodology studies). In 77 studies (36.8%), previously proposed and validated machine learning methods were tested in clinical settings for early detection of PD, identification of novel biomarkers, or examination of uncommonly used data modalities for the diagnosis of PD (e.g., CSF; clinical application studies).

Comparing Studies With Different Objectives

Source of Data

In the 132 studies that proposed or tested novel machine learning methods (i.e., methodology studies), a majority used data from publicly available databases (n = 89, 67.4%). Data collected from human participants were used in 41 studies (31.1%), and the remaining two studies (1.5%) used commercially sourced data or data from both existing public databases and local participants specifically recruited for the study. Of the 77 studies that used machine learning models in clinical settings (i.e., clinical application studies), 52 (67.5%) collected data from human participants and 22 (28.6%) used data from public databases. Two (2.6%) studies obtained data from a database and a local cohort, and 1 (1.3%) study collected data postmortem.

Data Modality

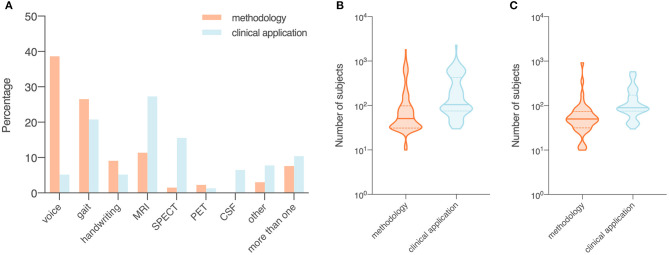

In methodology studies, the most commonly used data modalities were voice recordings (n = 51, 38.6%), movement (n = 35, 26.5%), and MRI data (n = 15, 11.4%). In clinical application studies, MRI data (n = 21, 27.3%), movement (n = 16, 20.8%), and SPECT imaging data (n = 12, 15.6%) were the most common. All studies using CSF features (n = 5) focused on the validation of existing machine learning models in a clinical setting (Figure 3A).

Figure 3.

Data modality (A) and number of subjects (B,C) of included studies, summarized by objectives (i.e., methodology or clinical application). Orange, studies with a focus on the development of a novel technical approach to be used in the diagnosis of Parkinson's disease (i.e., methodology); blue, studies that investigate the use of published machine learning models or novel data modalities (i.e., clinical application). (A) Proportion of data modalities in included studies displayed as percentages. (B) Sample size in all included studies. (C) Sample size in studies that collected data from recruited human participants. Data shown are means (SD).

Number of Subjects

The average sample size was 137.1 for the 132 methodology studies (Figure 3B). For 41 out of the 132 studies that used data from recruited human participants, the average sample size was 81.7 (Figure 3C). In the 77 studies on clinical applications, the average sample size was 266.2 (Figure 3B). For 52 out of the 77 clinical studies that collected data from recruited participants, the average sample size was 145.9 (Figure 3C).
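The subgroup means above can be checked against the overall average of 184.6 reported earlier for all 209 studies. Weighting each subgroup mean by its number of studies gives a value that agrees with it up to rounding of the subgroup means:

$$
\bar{n}_{\text{all}}
  = \frac{132 \times 137.1 + 77 \times 266.2}{132 + 77}
  = \frac{18\,097.2 + 20\,497.4}{209}
  = \frac{38\,594.6}{209}
  \approx 184.7
$$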

Machine Learning Methods Applied to the Diagnosis of PD

We divided the 448 machine learning models from the 209 studies into 8 categories: (1) support vector machine (SVM) and variants (n = 132 from 130 studies), (2) neural networks (n = 76 from 62 studies), (3) ensemble learning (n = 82 from 57 studies), (4) nearest neighbor and variants (n = 33 from 33 studies), (5) regression (n = 31 from 31 studies), (6) decision tree (n = 28 from 27 studies), (7) naïve Bayes (n = 26 from 26 studies), and (8) discriminant analysis (n = 12 from 12 studies). A small percentage of models did not fall into any of these categories (n = 28, used in 24 studies).

On average, 2.14 machine learning models were applied per study. A single study may have used more than one category of models. For a full description of the data types used to train each type of machine learning model and the associated outcomes, see Supplementary Materials and Supplementary Figure 2.
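The per-study average follows directly from the category counts listed above. The sketch below simply re-tallies them; the dictionary keys are shortened forms of the category names in the text.

```python
# Number of models per category, as reported above.
models_per_category = {
    "SVM and variants": 132,
    "neural networks": 76,
    "ensemble learning": 82,
    "nearest neighbor and variants": 33,
    "regression": 31,
    "decision tree": 28,
    "naive Bayes": 26,
    "discriminant analysis": 12,
    "other": 28,
}
N_STUDIES = 209

total_models = sum(models_per_category.values())
print(total_models, round(total_models / N_STUDIES, 2))  # → 448 2.14
```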

Performance Metrics

Various metrics have been used to assess the performance of machine learning models (Table 3). The most common metric was accuracy (n = 174, 83.3%), which was used alone (n = 55) or in combination with other metrics (n = 119). Among the 174 studies that used accuracy, some combined it with sensitivity (i.e., recall) and specificity (n = 42), with sensitivity, specificity and AUC (n = 16), or with recall, precision and F1 score (n = 7) for a more complete picture of model performance. A total of 35 studies (16.7%) used metrics other than accuracy. In these studies, the most commonly used performance metrics were AUC (n = 19), sensitivity (n = 17), and specificity (n = 14), and the three were often applied together (n = 9), with or without other metrics.

Table 3.

Performance metrics used in the evaluation of machine learning models.

| Performance metric | Definition | Number of studies |

|---|---|---|

| Accuracy | (TP + TN) / (TP + TN + FP + FN) | 174 |

| Sensitivity (recall) | TP / (TP + FN) | 110 |

| Specificity (TNR) | TN / (TN + FP) | 94 |

| AUC | The two-dimensional area under the Receiver Operating Characteristic (ROC) curve | 60 |

| MCC | (TP × TN − FP × FN) / √((TP + FP)(TP + FN)(TN + FP)(TN + FN)) | 9 |

| Precision (PPV) | TP / (TP + FP) | 31 |

| NPV | TN / (TN + FN) | 8 |

| F1 score | 2 × Precision × Recall / (Precision + Recall) | 25 |

| Others (7 kappa; 4 error rate; 3 EER; 1 MSE; 1 LOR; 1 confusion matrix; 1 cross validation score; 1 YI; 1 FPR; 1 FNR; 1 G-mean; 1 PE; 5 combination of metrics) | N/A | 28 |

TP, true positive; TN, true negative; FP, false positive; FN, false negative; TNR, true negative rate; AUC, area under the ROC curve; MCC, Matthews correlation coefficient; PPV, positive predictive value; NPV, negative predictive value; EER, equal error rate; MSE, mean squared error; LOR, log odds ratio; YI, Youden's Index; FPR, false positive rate; FNR, false negative rate; PE, probability excess.
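For reference, the threshold-based metrics in Table 3 can all be computed from the four cells of a binary confusion matrix. The function below is a minimal, dependency-free sketch; its name, its return format and the choice of PD as the positive class are our assumptions. AUC is omitted because it requires continuous scores rather than a single confusion matrix.

```python
from math import sqrt


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute common classification metrics from confusion-matrix counts.

    Positive class = PD, negative class = HC (an assumption for this sketch).
    Metrics whose denominator is zero are returned as float("nan").
    """
    def ratio(num, den):
        return num / den if den else float("nan")

    sensitivity = ratio(tp, tp + fn)   # recall, true positive rate
    specificity = ratio(tn, tn + fp)   # true negative rate
    precision = ratio(tp, tp + fp)     # positive predictive value
    npv = ratio(tn, tn + fn)           # negative predictive value
    accuracy = ratio(tp + tn, tp + tn + fp + fn)
    f1 = ratio(2 * precision * sensitivity, precision + sensitivity)
    mcc = ratio(tp * tn - fp * fn,
                sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "npv": npv,
        "f1": f1,
        "mcc": mcc,
    }


# Hypothetical confusion matrix for 23 PD and 8 HC subjects.
print(binary_metrics(tp=20, fp=2, tn=6, fn=3))
```

The same function shows why accuracy alone can be misleading on imbalanced data such as the 8 HC + 23 PD voice dataset: a classifier that labels every subject as PD (`binary_metrics(23, 8, 0, 0)`) reaches an accuracy of 23/31 (about 74.2%) while its specificity is 0.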

Data Types and Associated Outcomes

Out of 209 studies, 122 (58.4%) applied machine learning methods to movement-related data, i.e., voice recordings (n = 55, 26.3%), movement data (n = 51, 24.4%), or handwritten patterns (n = 16, 7.7%). Imaging modalities analyzed included MRI (n = 36, 17.2%), SPECT (n = 14, 6.7%), and positron emission tomography (PET; n = 4, 1.9%). Five studies (2.4%) analyzed CSF samples. In 18 studies (8.6%), a combination of different types of data was used.

Ten studies (4.8%) used data that do not belong to any of the categories mentioned above, such as single nucleotide polymorphisms (SNPs; Cibulka et al., 2019), electromyography (EMG; Kugler et al., 2013), OCT (Nunes et al., 2019), cardiac scintigraphy (Nuvoli et al., 2019), the Patient Questionnaire of the Movement Disorder Society Unified Parkinson's Disease Rating Scale (MDS-UPDRS; Prashanth and Dutta Roy, 2018), whole-blood gene expression profiles (Shamir et al., 2017), transcranial sonography (TCS; Shi et al., 2018), eye movements (Tseng et al., 2013), electroencephalography (EEG; Vanegas et al., 2018), and serum samples (Váradi et al., 2019).

Because studies used different data modalities and sources, and sometimes different samples of the same database, we provide a summary of model performance rather than a direct comparison across studies.

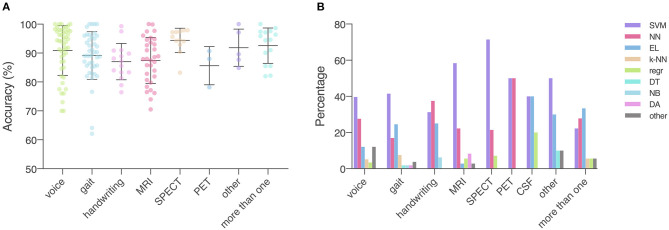

Voice Recordings (n = 55)

The 49 studies that used accuracy to evaluate machine learning models achieved an average accuracy of 90.9 (8.6) % (Figure 4A), ranging from 70.0% (Kraipeerapun and Amornsamankul, 2015; Ali et al., 2019a) to 100.0% (Hariharan et al., 2014; Abiyev and Abizade, 2016; Ali et al., 2019c; Dastjerd et al., 2019). In 3 studies, the highest accuracy was achieved equally by two types of machine learning models, namely regression and SVM (Ali et al., 2019a), neural network and SVM (Hariharan et al., 2014), and ensemble learning and SVM (Mandal and Sairam, 2013); these studies were counted once for each model, so the following percentages are calculated over 58 study-model assignments for the 55 studies. The per-study highest accuracy was achieved with SVM in 23 studies (39.7%), with neural network in 16 studies (27.6%), with ensemble learning in 7 studies (12.1%), with nearest neighbor in 3 studies (5.2%), and with regression in 2 studies (3.4%). Models that do not belong to any of the given categories led to the per-study highest accuracy in 7 studies (12.1%; Figure 4B).
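The snippet below is a small arithmetic check that reproduces the percentages for voice recordings from the counts reported above. It assumes, as those figures imply, that each of the three tied studies is counted once for each of its two models; the category labels follow the abbreviations of Figure 4.

```python
# Per-study highest-accuracy model counts for voice recordings (from the text).
# Each tied study contributes one count to each of its two categories.
counts = {"SVM": 23, "NN": 16, "EL": 7, "k-NN": 3, "regression": 2, "other": 7}

total = sum(counts.values())  # 55 studies plus 3 extra assignments from ties
shares = {model: round(100 * n / total, 1) for model, n in counts.items()}
print(total, shares)
```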

Figure 4.

Data type, machine learning models applied, and accuracy. (A) Accuracy achieved in individual studies and average accuracy for each data type. Error bar: standard deviation. (B) Distribution of machine learning models applied per data type. MRI, magnetic resonance imaging; SPECT, single-photon emission computed tomography; PET, positron emission tomography; CSF, cerebrospinal fluid; SVM, support vector machine; NN, neural network; EL, ensemble learning; k-NN, nearest neighbor; regr, regression; DT, decision tree; NB, naïve Bayes; DA, discriminant analysis; other: data/models that do not belong to any of the given categories.

Voice recordings from the UCI machine learning repository were used in 42 studies (Table 4). Among the 42 studies, 39 used accuracy to evaluate classification performance, and the average accuracy was 92.0 (9.0) %. The lowest accuracy was 70.0% and the highest accuracy was 100.0%. Eight of the 9 studies that collected voice recordings from human participants used accuracy as the performance metric, and the average, lowest and highest accuracies were 87.7 (6.8) %, 77.5%, and 98.6%, respectively. The 4 remaining studies used data from the Neurovoz corpus (n = 1), the mPower database (n = 1), the PC-GITA database (n = 1), or data from both the UCI machine learning repository and human participants (n = 1). Two of these 4 studies used accuracy to evaluate model performance and reported accuracies of 81.6% and 91.7%.

Table 4.

Studies that applied machine learning models to voice recordings to diagnose PD (n = 55).

| Objectives | Type of diagnosis | Source of data | Number of subjects (n) | Machine learning method(s), splitting strategy and cross validation | Outcomes | Year | References |

|---|---|---|---|---|---|---|---|

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Fuzzy neural system with 10-fold cross validation | Testing accuracy = 100% | 2016 | Abiyev and Abizade, 2016 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | RPART, C4.5, PART, Bagging CART, random forest, Boosted C5.0, SVM | SVM: Accuracy = 97.57%; Sensitivity = 0.9756; Specificity = 0.9987; NPV = 0.9995 | 2019 | Aich et al., 2019 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | DBN of 2 RBMs | Testing accuracy = 94% | 2016 | Al-Fatlawi et al., 2016 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | EFMM-OneR with 10-fold cross validation or 5-fold cross validation | Accuracy = 94.21% | 2019 | Sayaydeha and Mohammad, 2019 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 40; 20 HC + 20 PD | Linear regression, LDA, Gaussian naïve Bayes, decision tree, KNN, SVM-linear, SVM-RBF with leave-one-subject-out cross validation | Logistic regression or SVM-linear accuracy = 70% | 2019 | Ali et al., 2019a |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 40; 20 HC + 20 PD | LDA-NN-GA with leave-one-subject-out cross validation | Training: Accuracy = 95%; Sensitivity = 95%. Test: Accuracy = 100%; Sensitivity = 100% | 2019 | Ali et al., 2019c |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | NNge with AdaBoost with 10-fold cross validation | Accuracy = 96.30% | 2018 | Alqahtani et al., 2018 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Logistic regression, KNN, naïve Bayes, SVM, decision tree, random forest, DNN with 10-fold cross validation | KNN accuracy = 95.513% | 2018 | Anand et al., 2018 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | MLP with a train-validation-test ratio of 50:20:30 | Training accuracy = 97.86%; Test accuracy = 92.96%; MSE = 0.03552 | 2012 | Bakar et al., 2012 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31 (8 HC + 23 PD) for dataset 1 and 68 (20 HC + 48 PD) for dataset 2 | FKNN, SVM, KELM with 10-fold cross validation | FKNN accuracy = 97.89% | 2018 | Cai et al., 2018 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 40; 20 HC + 20 PD | SVM, logistic regression, ET, gradient boosting, random forest with train-test split ratio = 80:20 | Logistic regression accuracy = 76.03% | 2019 | Celik and Omurca, 2019 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 40; 20 HC + 20 PD | MLP, GRNN with a training-test ratio of 50:50 | GRNN: Error rate = 0.0995 (spread parameter = 195.1189); Error rate = 0.0958 (spread parameter = 1.2); Error rate = 0.0928 (spread parameter = 364.8) | 2016 | Çimen and Bolat, 2016 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | ECFA-SVM with 10-fold cross validation | Accuracy = 97.95%; Sensitivity = 97.90%; Precision = 97.90%; F-measure = 97.90%; Specificity = 96.50%; AUC = 97.20% | 2017 | Dash et al., 2017 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 40; 20 HC + 20 PD | Fuzzy classifier with 10-fold cross validation, leave-one-out cross validation or a train-test ratio of 70:30 | Accuracy = 100% | 2019 | Dastjerd et al., 2019 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Averaged perceptron, BPM, boosted decision tree, decision forests, decision jungle, locally deep SVM, logistic regression, NN, SVM with 10-fold cross-validation | Boosted decision trees: Accuracy = 0.912105; Precision = 0.935714; F-score = 0.942368; AUC = 0.966293 | 2017 | Dinesh and He, 2017 |

| Classification of PD from HC | Diagnosis | UCI machine learning repository | 50; 8 HC + 42 PD | KNN, SVM, ELM with a train-validation ratio of 70:30 | SVM: Accuracy = 96.43%, MCC = 0.77 | 2017 | Erdogdu Sakar et al., 2017 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 252; 64 HC + 188 PD | CNN with leave-one-person-out cross validation | Accuracy = 0.869, F-measure = 0.917, MCC = 0.632 | 2019 | Gunduz, 2019 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | SVM, logistic regression, KNN, DNN with a train-test ratio of 70:30 | DNN: Accuracy = 98%, Specificity = 95%, Sensitivity = 99% | 2018 | Haq et al., 2018 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | SVM-RBF, SVM-linear with 10-fold cross validation | Accuracy = 99%, Specificity = 99%, Sensitivity = 100% | 2019 | Haq et al., 2019 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | LS-SVM, PNN, GRNN with conventional (train-test ratio of 50:50) and 10-fold cross validation | LS-SVM or PNN or GRNN: Accuracy = 100%, Precision = 100%, Sensitivity = 100%, Specificity = 100%, AUC = 100 | 2014 | Hariharan et al., 2014 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Random tree, SVM-linear, FBANN with 10-fold cross validation | FBANN: Accuracy = 97.37%, Sensitivity = 98.60%, Specificity = 93.62%, FPR = 6.38%, Precision = 0.979, MSE = 0.027 | 2014 | Islam et al., 2014 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | SVM-linear with 5-fold cross validation | Error rate ~0.13 | 2012 | Ji and Li, 2012 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 40; 20 HC + 20 PD | Decision tree, random forest, SVM, GBM, XGBoost | SVM-linear: FNR = 10%, Accuracy = 0.725 | 2018 | Junior et al., 2018 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | CART, SVM, ANN | SVM accuracy = 93.84% | 2020 | Karapinar Senturk, 2020 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | Dataset 1: 31; 8 HC + 23 PD. Dataset 2: 40; 20 HC + 20 PD | EWNN with a train-test ratio of 90:10 and cross validation | Dataset 1: Accuracy = 92.9%, Ensemble classification accuracy = 100.0%, Sensitivity = 100.0%, MCC = 100.0%; Dataset 2: Accuracy = 66.3%, Ensemble classification accuracy = 90.0%, Sensitivity = 93.0%, Specificity = 97.0%, MCC = 87.0% | 2018 | Khan et al., 2018 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 40; 20 HC + 20 PD | Stacked generalization with CMTNN with 10-fold cross validation | Accuracy = ~70% | 2015 | Kraipeerapun and Amornsamankul, 2015 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 40; 20 HC + 20 PD | HMM, SVM | HMM: Accuracy = 95.16%, Sensitivity = 93.55%, Specificity = 91.67% | 2019 | Kuresan et al., 2019 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | IGWO-KELM with 10-fold cross validation | Iteration number = 100, Accuracy = 97.45%, Sensitivity = 99.38%, Specificity = 93.48%, Precision = 97.33%, G-mean = 96.38%, F-measure = 98.34% | 2017 | Li et al., 2017 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | SCFW-KELM with 10-fold cross validation | Accuracy = 99.49%, Sensitivity = 100%, Specificity = 99.39%, AUC = 99.69%, F-measure = 0.9966, Kappa = 0.9863 | 2014 | Ma et al., 2014 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | SVM-RBF with 10-fold cross validation | Accuracy = 96.29%, Sensitivity = 95.00%, Specificity = 97.50% | 2016 | Ma et al., 2016 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Logistic regression, NN, SVM, SMO, Pegasos, AdaBoost, ensemble selection, FURIA, rotation forest, Bayesian network with 10-fold cross-validation | Average accuracy across all models = 97.06%; SMO, Pegasos, or AdaBoost accuracy = 98.24% | 2013 | Mandal and Sairam, 2013 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Logistic regression, KNN, SVM, naïve Bayes, decision tree, random forest, ANN | ANN: Accuracy = 94.87%, Specificity = 96.55%, Sensitivity = 90% | 2018 | Marar et al., 2018 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | Dataset 1: 31; 8 HC + 23 PD. Dataset 2: 40; 20 HC + 20 PD | KNN | Dataset 1 accuracy = 90%; Dataset 2 accuracy = 65% | 2017 | Moharkan et al., 2017 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Rotation forest ensemble with 10-fold cross validation | Accuracy = 87.1%, Kappa error = 0.63, AUC = 0.860 | 2011 | Ozcift and Gulten, 2011 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Rotation forest ensemble | Accuracy = 96.93%, Kappa = 0.92, AUC = 0.97 | 2012 | Ozcift, 2012 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | SVM-RBF with 10-fold cross validation or a train-test ratio of 50:50 | 10-fold cross validation: Accuracy = 98.95%, Sensitivity = 96.12%, Specificity = 100%, F-measure = 0.9795, Kappa = 0.9735, AUC = 0.9808 | 2016 | Peker, 2016 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | ELM with 10-fold cross validation | Accuracy = 88.72%, Recall = 94.33%, Precision = 90.48%, F-score = 92.36% | 2016 | Shahsavari et al., 2016 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Ensemble learning with 10-fold cross validation | Accuracy = 90.6%, Sensitivity = 95.8%, Specificity = 75% | 2019 | Sheibani et al., 2019 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | GLRA, SVM, bagging ensemble with 5-fold cross validation | Bagging: Sensitivity = 0.9796, Specificity = 0.6875, MCC = 0.6977, AUC = 0.9558; SVM: Sensitivity = 0.9252, Specificity = 0.8542, MCC = 0.7592, AUC = 0.9349 | 2017 | Wu et al., 2017 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | Decision tree classifier, logistic regression, SVM with 10-fold cross validation | SVM: Accuracy = 0.76, Sensitivity = 0.9745, Specificity = 0.13 | 2011 | Yadav et al., 2011 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 80; 40 HC + 40 PD | KNN, SVM with 10-fold cross validation | SVM: Accuracy = 91.25%, Precision = 0.9125, Recall = 0.9125, F-measure = 0.9125 | 2019 | Yaman et al., 2020 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository | 31; 8 HC + 23 PD | MAP, SVM-RBF, FLDA with 5-fold cross validation | MAP: Accuracy = 91.8%, Sensitivity = 0.986, Specificity = 0.708, AUC = 0.94 | 2014 | Yang et al., 2014 |
| Classification of PD from other disorders | Differential diagnosis | Collected from participants | 50; 30 PD + 9 MSA + 5 FND + 1 somatization + 1 dystonia + 2 CD + 1 ET + 1 GPD | SVM, KNN, DA, naïve Bayes, classification tree with LOSO | SVM-linear: Accuracy = 90%, Sensitivity = 90%, Specificity = 90%, MCC = 0.794067, PE = 0.788177 | 2016 | Benba et al., 2016a |
| Classification of PD from other disorders | Differential diagnosis | Collected from participants | 40; 20 PD + 9 MSA + 5 FND + 1 somatization + 1 dystonia + 2 CD + 1 ET + 1 GPD | SVM (RBF, linear, polynomial, and MLP kernels) with LOSO | SVM-linear accuracy = 85% | 2016 | Benba et al., 2016b |
| Classification of PD from HC and assessment of PD severity | Diagnosis | Collected from participants | 52; 9 HC + 43 PD | SVM-RBF with cross validation | Accuracy = 81.8% | 2014 | Frid et al., 2014 |
| Classification of PD from HC | Diagnosis | Collected from participants | 54; 27 HC + 27 PD | SVM with stratified 10-fold cross validation or leave-one-out cross validation | Accuracy = 94.4%, Specificity = 100%, Sensitivity = 88.9% | 2018 | Montaña et al., 2018 |
| Classification of PD from HC | Diagnosis | Collected from participants | 40; 20 HC + 20 PD | KNN, SVM-linear, SVM-RBF with leave-one-subject-out or summarized leave-one-out | SVM-linear: Accuracy = 77.50%, MCC = 0.5507, Sensitivity = 80.00%, Specificity = 75.00% | 2013 | Sakar et al., 2013 |
| Classification of PD from HC | Diagnosis | Collected from participants | 78; 27 HC + 51 PD | KNN, SVM-linear, SVM-RBF, ANN, DNN with leave-one-out cross validation | SVM-RBF: Accuracy = 84.62%, Precision = 88.04%, Recall = 78.65% | 2017 | Sztahó et al., 2017 |
| Classification of PD from HC and assessment of PD severity | Diagnosis | Collected from participants | 88; 33 HC + 55 PD | KNN, SVM-linear, SVM-RBF, ANN, DNN with leave-one-subject-out cross validation | SVM-RBF: Accuracy = 89.3%, Sensitivity = 90.2%, Specificity = 87.9% | 2019 | Sztahó et al., 2019 |
| Classification of PD from HC | Diagnosis | Collected from participants | 43; 10 HC + 33 PD | Random forests, SVM with 10-fold cross validation and a train-test ratio of 90:10 | SVM accuracy = 98.6% | 2012 | Tsanas et al., 2012 |
| Classification of PD from HC | Diagnosis | Collected from participants | 99; 35 HC + 64 PD | Random forest with internal out-of-bag (OOB) validation | EER = 19.27% | 2017 | Vaiciukynas et al., 2017 |
| Classification of PD from HC | Diagnosis | UCI machine learning repository and participants | 40 and 28; 20 HC + 20 PD and 28 PD, respectively | ELM | Training data: Accuracy = 90.76%, MCC = 0.815; Test data: Accuracy = 81.55% | 2016 | Agarwal et al., 2016 |
| Classification of PD from HC | Diagnosis | The Neurovoz corpus | 108; 56 HC + 52 PD | Siamese LSTM-based NN with 10-fold cross-validation | EER = 1.9% | 2019 | Bhati et al., 2019 |
| Classification of PD from HC | Diagnosis | mPower database | 2,289; 2,023 HC + 246 PD | L2-regularized logistic regression, random forest, gradient boosted decision trees with 5-fold cross validation | Gradient boosted decision trees: Recall = 0.797, Precision = 0.901, F1-score = 0.836 | 2019 | Tracy et al., 2019 |
| Classification of PD from HC | Diagnosis | PC-GITA database | 100; 50 HC + 50 PD | ResNet with a train-validation ratio of 90:10 | Precision = 0.92, Recall = 0.92, F1-score = 0.92, Accuracy = 91.7% | 2019 | Wodzinski et al., 2019 |

ANN, artificial neural network; AUC, area under the receiver operating characteristic (ROC) curve; BPM, Bayes point machine; CART, classification and regression trees; CD, cervical dystonia; CMTNN, complementary neural network; CNN, convolutional neural network; DA, discriminant analysis; DBN, deep belief network; DNN, deep neural network; ECFA, enhanced chaos-based firefly algorithm; EER, equal error rate; EFMM-OneR, enhanced fuzzy min-max neural network with the OneR attribute evaluator; ELM, extreme learning machine; ET, extra trees or essential tremor; EWNN, evolutionary wavelet neural network; FBANN, feedforward back-propagation based artificial neural network; FKNN, fuzzy k-nearest neighbor; FLDA, Fisher's linear discriminant analysis; FND, functional neurological disorder; FNR, false negative rate; FPR, false positive rate; FURIA, fuzzy unordered rule induction algorithm; GA, genetic algorithm; GBM, gradient boosting machine; GLRA, generalized logistic regression analysis; GPD, generalized paroxysmal dystonia; GRNN, general(ized) regression neural network; HC, healthy control; HMM, hidden Markov model; IGWO-KELM, improved gray wolf optimization and kernel(-based) extreme learning machine; KELM, kernel-based extreme learning machine; KNN, k-nearest neighbors; LDA, linear discriminant analysis; LOSO, leave-one-subject-out; LS-SVM, least-squares support vector machine; LSTM, long short-term memory; MAP, maximum a posteriori decision rule; MCC, Matthews correlation coefficient; MLP, multilayer perceptron; MSA, multiple system atrophy; MSE, mean squared error; NN, neural network; NNge, non-nested generalized exemplars; NPV, negative predictive value; PD, Parkinson's disease; PNN, probabilistic neural network; RBM, restricted Boltzmann machine; ResNet, residual neural network; RPART, recursive partitioning and regression trees; SCFW-KELM, subtractive clustering features weighting and kernel-based extreme learning machine; SMO, sequential minimal optimization; SVM, support vector machine; SVM-linear, support vector machine with linear kernel; SVM-RBF, support vector machine with radial basis function kernel; XGBoost, extreme gradient boosting.
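The outcome columns mix accuracy, sensitivity, specificity, precision, F-measure and MCC, all of which are derived from the same confusion matrix. The Python sketch below is illustrative only: the helper names are hypothetical and not taken from any reviewed study, and it assumes a binary PD-vs-HC task with PD as the positive class. It is applied to a cohort of 23 PD and 8 HC subjects, the composition of the UCI dataset used by many studies in the table, with a trivial classifier that labels every subject as PD.

```python
import math


def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def binary_metrics(tp, fn, fp, tn):
    """Common outcome metrics from a 2x2 confusion matrix (PD = positive class)."""
    sensitivity = safe_div(tp, tp + fn)  # recall / true positive rate
    specificity = safe_div(tn, tn + fp)  # true negative rate
    precision = safe_div(tp, tp + fp)    # positive predictive value
    accuracy = safe_div(tp + tn, tp + fn + fp + tn)
    f1 = safe_div(2 * precision * sensitivity, precision + sensitivity)
    # Matthews correlation coefficient; set to 0 when any row/column total is zero
    mcc = safe_div(tp * tn - fp * fn,
                   math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "mcc": mcc,
    }


# Hypothetical degenerate classifier on a 23 PD + 8 HC cohort: every subject is called PD.
m = binary_metrics(tp=23, fn=0, fp=8, tn=0)
print({k: round(v, 3) for k, v in m.items()})
```

This classifier reaches about 74% accuracy and perfect sensitivity, yet its specificity and MCC are both 0. On imbalanced cohorts such as these, specificity and MCC are therefore useful complements to accuracy.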

Movement Data (n = 51)

Of the 51 studies, the 43 that used accuracy to assess model performance achieved an average accuracy of 89.1 (8.3) %, ranging from 62.1% (Prince and de Vos, 2018) to 100.0% (Surangsrirat et al., 2016; Joshi et al., 2017; Pham, 2018; Pham and Yan, 2018; Figure 4A). One study reported three machine learning methods (SVM, nearest neighbor and decision tree) that tied for the highest accuracy (Félix et al., 2019); the method counts below therefore sum to 53 rather than 51, and the percentages are relative to this total. The per-study highest accuracy was achieved with SVM in 22 studies (41.5%), with ensemble learning in 13 studies (24.5%), with neural networks in 9 studies (17.0%), with nearest neighbor in 4 studies (7.5%), and with discriminant analysis, naïve Bayes and decision tree in 1 study each (1.9% each). Models that do not belong to any of the given categories were associated with the highest per-study accuracy in two studies (3.8%; Figure 4B).
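The percentages in the paragraph above can be checked with a short Python sketch. The dictionary restates the counts given in the text. Dividing by 53 (the 51 studies plus two extra counts from the three-way tie) reproduces every reported percentage, whereas dividing by 51 would give 43.1% for SVM. The tie-handling rule is inferred from this arithmetic rather than stated explicitly in the source.

```python
from collections import Counter

# Per-study "winning" method family for the 51 movement-data studies, as counted in the text.
# Félix et al. (2019) reported a three-way tie (SVM, nearest neighbor, decision tree),
# which adds one count to each of those families.
winners = Counter({
    "SVM": 22,
    "ensemble learning": 13,
    "neural network": 9,
    "nearest neighbor": 4,
    "discriminant analysis": 1,
    "naive Bayes": 1,
    "decision tree": 1,
    "other": 2,
})

total = sum(winners.values())  # 53, not 51, because of the tie
shares = {family: round(100 * n / total, 1) for family, n in winners.items()}
print(total, shares)
```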

Among the 33 studies that collected movement data from recruited participants, 25 used accuracy in model evaluation and achieved an average accuracy of 87.0 (7.3) % (Table 5). The lowest and highest accuracies were 64.1% (Martínez et al., 2018) and 100.0% (Surangsrirat et al., 2016), respectively. The 15 studies that used data from the PhysioNet database (Table 5) had an average accuracy of 94.4 (4.6) %, ranging from 86.4% (Yang et al., 2009) to 100%. The remaining three studies used data from the mPower database (n = 2) or data sourced from another study (n = 1) and had an average accuracy of 80.6 (16.2) %.
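To check how these summary statistics are expressed, the Python sketch below recomputes the mean (SD) for the three studies that used the mPower database or another study's data. It takes the highest PD-vs-HC accuracies listed in Table 5 (87.03%, 62.1% and 92.6%). The reported 80.6 (16.2) % is reproduced when the value in parentheses is the sample standard deviation (n - 1 denominator); the population standard deviation would instead be 13.3%. This conclusion is inferred from the arithmetic, not stated as the authors' method.

```python
import statistics

# Highest PD-vs-HC accuracies (%) from Table 5 for the three non-participant,
# non-PhysioNet studies: Abujrida et al., 2017; Prince and de Vos, 2018; Wahid et al., 2015.
accuracies = [87.03, 62.1, 92.6]

mean = statistics.mean(accuracies)
sd = statistics.stdev(accuracies)       # sample SD (n - 1 denominator)
pop_sd = statistics.pstdev(accuracies)  # population SD (n denominator), for comparison

print(f"{mean:.1f} ({sd:.1f}) %")
```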

Table 5.

Studies that applied machine learning models to movement data to diagnose PD (n = 51).

| Objectives | Type of diagnosis | Source of data | Number of subjects (n) | Machine learning method(s), splitting strategy and cross validation | Outcomes | Year | References |
|---|---|---|---|---|---|---|---|
| Classification of PD from HC | Diagnosis | Collected from participants | 103; 71 HC + 32 PD | Ensemble method of 8 models (SVM, MLP, logistic regression, random forest, NSVC, decision tree, KNN, QDA) | Sensitivity = 96%, Specificity = 97%, AUC = 0.98 | 2017 | Adams, 2017 |
| Classification of PD, HC and other neurological stance disorders | Diagnosis and differential diagnosis | Collected from participants | 293; 57 HC + 27 PD + 49 AVS + 12 PNP + 48 CA + 16 DN + 25 OT + 59 PPV | Ensemble method of 7 models (logistic regression, KNN, shallow and deep ANNs, SVM, random forest, extra-randomized trees) with 90% training and 10% testing data in stratified k-fold cross-validation | 8-class classification accuracy = 82.7% | 2019 | Ahmadi et al., 2019 |
| Classification of PD from HC | Diagnosis | Collected from participants | 137; 38 HC + 99 PD | SVM with leave-one-out cross validation | PD vs. HC accuracy = 92.3%; Mild vs. severe accuracy = 89.8%; Mild vs. HC accuracy = 85.9% | 2016 | Bernad-Elazari et al., 2016 |
| Classification of PD from HC | Diagnosis | Collected from participants | 30; 14 HC + 16 PD | SVM (linear, quadratic, cubic, Gaussian kernels), ANN with 5-fold cross-validation | Classification with ANN: Accuracy = 89.4%, Sensitivity = 87.0%, Specificity = 91.8%; Severity assessment with ANN: Accuracy = 95.0%, Sensitivity = 90.0%, Specificity = 99.0% | 2019 | Buongiorno et al., 2019 |
| Classification of PD from HC | Diagnosis | Collected from participants | 28; 12 HC + 16 PD | NN with a train-validation-test ratio of 70:15:15, SVM with leave-one-out cross-validation, logistic regression with 10-fold cross validation | SVM: Accuracy = 85.71%, Sensitivity = 83.5%, Specificity = 87.5% | 2017 | Butt et al., 2017 |
| Classification of PD from HC | Diagnosis | Collected from participants | 28; 12 HC + 16 PD | Logistic regression, naïve Bayes, SVM with 10-fold cross validation | Naïve Bayes: Accuracy = 81.45%, Sensitivity = 76%, Specificity = 86.5%, AUC = 0.811 | 2018 | Butt et al., 2018 |
| Classification of PD from HC | Diagnosis | Collected from participants | 54; 27 HC + 27 PD | Naïve Bayes, LDA, KNN, decision tree, SVM-linear, SVM-RBF, majority of votes with 5-fold cross validation | Majority of votes (weighted) accuracy = 96% | 2018 | Caramia et al., 2018 |
| Classification of PD from HC, and of PD, HC and IH | Diagnosis | Collected from participants | 90; 30 PD + 30 HC + 30 IH | SVM, random forest, naïve Bayes with 10-fold cross validation | Random forest: HC vs. PD: Accuracy = 0.950, F-measure = 0.947; HC + IH vs. PD: Accuracy = 0.917, F-measure = 0.912; HC vs. IH vs. PD: Accuracy = 0.789, F-measure = 0.796 | 2019 | Cavallo et al., 2019 |
| Classification of PD from HC and classification of HC, MCI, PDNOMCI, and PDMCI | Diagnosis, differential diagnosis and subtyping | Collected from participants | PD vs. HC: 75; 50 HC + 25 PD. Subtyping: 52; 18 HC + 16 PDNOMCI + 9 PDMCI + 9 MCI | Decision tree, naïve Bayes, random forest, SVM, adaptive boosting (with decision tree or random forest) with 10-fold cross validation | Adaptive boosting with decision tree: PD vs. HC: Accuracy = 0.79, AUC = 0.82; Subtyping (HOA vs. MCI vs. PDNOMCI vs. PDMCI): Accuracy = 0.85, AUC = 0.96 | 2015 | Cook et al., 2015 |
| Classification of PD from HC | Diagnosis | Collected from participants | 580; 424 HC + 156 PD | Hidden Markov models with nearest neighbor classifier with cross validation and train-test ratio of 66.6:33.3 | Accuracy = 85.51% | 2017 | Cuzzolin et al., 2017 |
| Classification of PD from HC | Diagnosis | Collected from participants | 80; 40 HC + 40 PD | Random forest, SVM with 10-fold cross validation | SVM-RBF: Accuracy = 85%, Sensitivity = 85%, Specificity = 82%, PPV = 86%, NPV = 83% | 2017 | Djurić-Jovičić et al., 2017 |
| Classification of PD from HC | Diagnosis | Collected from participants | 13; 5 HC + 8 PD | SVM-RBF with leave-one-out cross validation | 100% HC and PD classified correctly (confusion matrix) | 2014 | Dror et al., 2014 |
| Classification of PD from HC | Diagnosis | Collected from participants | 75; 38 HC + 37 PD | SVM with leave-one-out cross validation | Accuracy = 85.61%, Sensitivity = 85.95%, Specificity = 85.26% | 2014 | Drotár et al., 2014 |
| Classification of PD from ET | Differential diagnosis | Collected from participants | 24; 13 PD + 11 ET | SVM-linear, SVM-RBF with leave-one-out cross validation | Accuracy = 83% | 2016 | Ghassemi et al., 2016 |
| Classification of PD from HC | Diagnosis | Collected from participants | 41; 22 HC + 19 PD | SVM, decision tree, random forest, linear regression with 10-fold and leave-one-individual out (L1O) cross validation | SVM accuracy = 0.89 | 2018 | Klein et al., 2017 |
| Classification of PD from HC | Diagnosis | Collected from participants | 74; 33 young HC + 14 elderly HC + 27 PD | SVM with 10-fold cross validation | Sensitivity = ~90% | 2017 | Javed et al., 2018 |
| Classification of PD from HC and assessment of PD severity | Diagnosis | Collected from participants | 55; 20 HC + 35 PD | SVM with leave-one-out cross validation | PD diagnosis: Accuracy = 89%, Precision = 0.91, Recall = 0.94; Severity assessment: HYS 1 accuracy = 72%, HYS 2 accuracy = 77%, HYS 3 accuracy = 75%, HYS 4 accuracy = 33% | 2016 | Koçer and Oktay, 2016 |
| Classification of PD from HC | Diagnosis | Collected from participants | 45; 20 HC + 25 PD | Naïve Bayes, logistic regression, SVM, AdaBoost, C4.5, BagDT with 10-fold stratified cross-validation apart from BagDT | BagDT: Sensitivity = 82%, Specificity = 90%, AUC = 0.94 | 2015 | Kostikis et al., 2015 |
| Classification of PD from HC | Diagnosis | Collected from participants | 40; 26 HC + 14 PD | Random forest with leave-one-subject-out cross-validation | Accuracy = 94.6%, Sensitivity = 91.5%, Specificity = 97.2% | 2017 | Kuhner et al., 2017 |
| Classification of PD from HC | Diagnosis | Collected from participants | 177; 70 HC + 107 PD | ESN with 10-fold cross validation | AUC = 0.852 | 2018 | Lacy et al., 2018 |
| Classification of PD from HC | Diagnosis | Collected from participants | 39; 16 young HC + 12 elderly HC + 11 PD | LDA with leave-one-out cross validation | Multiclass classification (young HC vs. age-matched HC vs. PD): Accuracy = 64.1%, Sensitivity = 47.1%, Specificity = 77.3% | 2018 | Martínez et al., 2018 |
| Classification of PD from HC | Diagnosis | Collected from participants | 38; 10 HC + 28 PD | SVM-Gaussian with leave-one-out cross validation | Training accuracy = 96.9%, Test accuracy = 76.6% | 2018 | Oliveira H. M. et al., 2018 |
| Classification of PD from HC | Diagnosis | Collected from participants | 30; 15 HC + 15 PD | SVM-RBF, PNN with 10-fold cross validation | SVM-RBF: Accuracy = 88.80%, Sensitivity = 88.70%, Specificity = 88.15%, AUC = 88.48 | 2015 | Oung et al., 2015 |
| Classification of PD from HC | Diagnosis | Collected from participants | 45; 14 HC + 31 PD | Deep-MIL-CNN with LOSO or RkF | With LOSO: Precision = 0.987, Sensitivity = 0.9, Specificity = 0.993, F1-score = 0.943; With RkF: Precision = 0.955, Sensitivity = 0.828, Specificity = 0.979, F1-score = 0.897 | 2019 | Papadopoulos et al., 2019 |
| Classification of PD, HC and post-stroke | Diagnosis and differential diagnosis | Collected from participants | 11; 3 HC + 5 PD + 3 post-stroke | MTFL with 10-fold cross validation | PD vs. HC AUC = 0.983 | 2017 | Papavasileiou et al., 2017 |
| Classification of PD from HC | Diagnosis | Collected from participants | 182; 94 HC + 88 PD | LSTM, CNN-1D, CNN-LSTM with 5-fold cross-validation and a training-test ratio of 90:10 | CNN-LSTM: Accuracy = 83.1%, Precision = 83.5%, Recall = 83.4%, F1-score = 81%, Kappa = 64% | 2019 | Reyes et al., 2019 |
| Classification of PD from HC | Diagnosis | Collected from participants | 60; 30 HC + 30 PD | Naïve Bayes, KNN, SVM with leave-one-out cross validation | SVM: Accuracy = 95%, Precision = 0.951, AUC = 0.950 | 2019 | Ricci et al., 2020 |
| Classification of PD, HC and IH | Diagnosis and differential diagnosis | Collected from participants | 90; 30 HC + 30 PD + 30 IH | SVM-polynomial, random forest, naïve Bayes with 10-fold cross validation | HC vs. PD, naïve Bayes or random forest: Precision = 0.967, Recall = 0.967, Specificity = 0.967, Accuracy = 0.967, F-measure = 0.967; HC + IH vs. PD, random forest: Precision = 1.000, Recall = 0.933, Specificity = 1.000, Accuracy = 0.978, F-measure = 0.966; Multiclass classification, random forest: Precision = 0.784, Recall = 0.778, Specificity = 0.889, Accuracy = 0.778, F-measure = 0.781 | 2018 | Rovini et al., 2018 |
| Classification of PD, HC and IH | Diagnosis and differential diagnosis | Collected from participants | 45; 15 HC + 15 PD + 15 IH | SVM-polynomial, random forest with 5-fold cross validation | HC vs. PD, random forest: Precision = 1.000, Recall = 1.000, Specificity = 1.000, Accuracy = 1.000, F-measure = 1.000; Multiclass classification (HC vs. IH vs. PD), random forest: Precision = 0.930, Recall = 0.911, Specificity = 0.956, Accuracy = 0.911, F-measure = 0.920 | 2019 | Rovini et al., 2019 |
| Classification of PD from ET | Differential diagnosis | Collected from participants | 52; 32 PD + 20 ET | SVM-linear with 10-fold cross validation | Accuracy = 1, Sensitivity = 1, Specificity = 1 | 2016 | Surangsrirat et al., 2016 |
| Classification of PD from HC | Diagnosis | Collected from participants | 12; 10 HC + 2 PD | Naïve Bayes, LogitBoost, random forest, SVM with 10-fold cross-validation | Random forest: Accuracy = 92.29%, Precision = 0.99, Recall = 0.99 | 2017 | Tahavori et al., 2017 |
| Classification of PD from HC | Diagnosis | Collected from participants | 39; 16 HC + 23 PD | SVM-RBF with 10-fold stratified cross validation | Sensitivity = 88.9%, Specificity = 100%, Precision = 100%, FPR = 0.0% | 2010 | Tien et al., 2010 |
| Classification of PD from HC | Diagnosis | Collected from participants | 60; 30 HC + 30 PD | Logistic regression, naïve Bayes, random forest, decision tree with 10-fold cross validation | Random forest: Accuracy = 82%, False negative rate = 23%, False positive rate = 12% | 2018 | Urcuqui et al., 2018 |
| Classification of PD from HC | Diagnosis | PhysioNet | 47; 18 HC + 29 PD | SVM, KNN, random forest, decision tree | SVM with cubic kernel: Accuracy = 93.6%, Sensitivity = 93.1%, Specificity = 94.1% | 2017 | Alam et al., 2017 |
| Classification of PD from HC | Diagnosis | PhysioNet | 34; 17 HC + 17 PD | MLP, SVM, decision tree | MLP: Accuracy = 91.18%, Sensitivity = 1, Specificity = 0.83, Error = 0.09, AUC = 0.92 | 2018 | Alaskar and Hussain, 2018 |
| Classification of PD from HC and assessment of PD severity | Diagnosis | PhysioNet | 166; 73 HC + 93 PD | 1D-CNN, 2D-CNN, LSTM, decision tree, logistic regression, SVM, MLP | 2D-CNN and LSTM accuracy = 96.0% | 2019 | Alharthi and Ozanyan, 2019 |
| Classification of PD from HC | Diagnosis | PhysioNet | 146; 60 HC + 86 PD | SVM-Gaussian with 3- or 5-fold cross validation | Accuracy = 100%, 88.88%, and 100% in three test groups | 2019 | Andrei et al., 2019 |
| Classification of PD from HC | Diagnosis | PhysioNet | 166; 73 HC + 93 PD | ANN, SVM, naïve Bayes with cross validation | ANN accuracy = 86.75% | 2017 | Baby et al., 2017 |
| Classification of PD from HC | Diagnosis | PhysioNet | 31; 16 HC + 15 PD | SVM-linear, KNN, naïve Bayes, LDA, decision tree with leave-one-out cross validation | SVM, KNN and decision tree accuracy = 96.8% | 2019 | Félix et al., 2019 |
| Classification of PD from HC | Diagnosis | PhysioNet | 31; 16 HC + 15 PD | SVM-linear with leave-one-out cross validation | Accuracy = 100% | 2017 | Joshi et al., 2017 |
| Classification of PD from HC | Diagnosis | PhysioNet | 165; 72 HC + 93 PD | KNN, CART, decision tree, random forest, naïve Bayes, SVM-polynomial, SVM-linear, K-means, GMM with leave-one-out cross validation | SVM: Accuracy = 90.32%, Precision = 90.55%, Recall = 90.21%, F-measure = 90.38% | 2019 | Khoury et al., 2019 |
| Classification of ALS, HD, PD from HC | Diagnosis | PhysioNet | 64; 16 HC + 15 PD + 13 ALS + 20 HD | String grammar unsupervised possibilistic fuzzy C-medians with FKNN, with 4-fold cross validation | PD vs. HC accuracy = 96.43% | 2018 | Klomsae et al., 2018 |
| Classification of PD from HC | Diagnosis | PhysioNet | 166; 73 HC + 93 PD | Logistic regression, decision trees, random forest, SVM-Linear, SVM-RBF, SVM-Poly, KNN with cross validation | KNN: Accuracy = 93.08%, Precision = 89.58%, Recall = 84.31%, F1-score = 86.86% | 2018 | Mittra and Rustagi, 2018 |
| Classification of PD from HC | Diagnosis | PhysioNet | 85; 43 HC + 42 PD | LS-SVM with leave-one-out, 2- or 10-fold cross validation | Leave-one-out cross validation: AUC = 1, Sensitivity = 100%, Specificity = 100%, Accuracy = 100%; 10-fold cross validation: AUC = 0.89, Sensitivity = 85.00%, Specificity = 73.21%, Accuracy = 79.31% | 2018 | Pham, 2018 |
| Classification of PD from HC | Diagnosis | PhysioNet | 165; 72 HC + 93 PD | LS-SVM with leave-one-out, 2- or 5- or 10-fold cross validation | Accuracy = 100%, Sensitivity = 100%, Specificity = 100%, AUC = 1 | 2018 | Pham and Yan, 2018 |
| Classification of PD from HC | Diagnosis | PhysioNet | 166; 73 HC + 93 PD | DCALSTM with stratified 5-fold cross validation | Sensitivity = 99.10%, Specificity = 99.01%, Accuracy = 99.07% | 2019 | Xia et al., 2020 |
| Classification of HC, PD, ALS and HD | Diagnosis and differential diagnosis | PhysioNet | 64; 16 HC + 15 PD + 13 ALS + 20 HD | SVM-RBF with 10-fold cross validation | PD vs. HC: Accuracy = 86.43%, AUC = 0.92 | 2009 | Yang et al., 2009 |
| Classification of PD, HD, ALS and ND from HC | Diagnosis | PhysioNet | 64; 16 HC + 15 PD + 13 ALS + 20 HD | Adaptive neuro-fuzzy inference system with leave-one-out cross validation | PD vs. HC: Accuracy = 90.32%, Sensitivity = 86.67%, Specificity = 93.75% | 2018 | Ye et al., 2018 |
| Classification of PD from HC and assessment of PD severity | Diagnosis | mPower database | 50; 22 HC + 28 PD | Random forest, bagged trees, SVM, KNN with 10-fold cross validation | Random forest: PD vs. HC accuracy = 87.03%, PD severity assessment accuracy = 85.8% | 2017 | Abujrida et al., 2017 |
| Classification of PD from HC | Diagnosis | mPower database | 1,815; 866 HC + 949 PD | CNN with 10-fold cross validation | Accuracy = 62.1%, F1 score = 63.4%, AUC = 63.5% | 2018 | Prince and de Vos, 2018 |
| Classification of PD from HC | Diagnosis | Dataset from Fernandez et al., 2013 | 49; 26 HC + 23 PD | KFD-RBF, naïve Bayes, KNN, SVM-RBF, random forest with 10-fold cross validation | Random forest accuracy = 92.6% | 2015 | Wahid et al., 2015 |

ALS, amyotrophic lateral sclerosis; ANN, artificial neural network; AUC, area under the receiver operating characteristic (ROC) curve; AVS, acute unilateral vestibulopathy; BagDT, bootstrap aggregation for a random forest of decision trees; CA, anterior lobe cerebellar atrophy; CART, classification and regression trees; CNN, convolutional neural network; DCALSTM, dual-modal network in which each branch consists of a convolutional network followed by an attention-enhanced bi-directional LSTM; DN, downbeat nystagmus syndrome; ESN, echo state network; FKNN, fuzzy k-nearest neighbor; GMM, Gaussian mixture model; HC, healthy control; HD, Huntington's disease; IH, idiopathic hyposmia; KFD, kernel Fisher discriminant; KNN, k-nearest neighbors; LDA, linear discriminant analysis; LOSO, leave-one-subject-out; LS-SVM, least-squares support vector machine; LSTM, long short-term memory; MCI, mild cognitive impairment; MIL, multiple-instance learning; MLP, multilayer perceptron; MTFL, multi-task feature learning; NN, neural network; NSVC, nu-support vector classification; OT, primary orthostatic tremor; PD, Parkinson's disease; PDMCI, PD participants who met criteria for mild cognitive impairment; PDNOMCI, PD participants with no indication of mild cognitive impairment; PNN, probabilistic neural network; PNP, sensory polyneuropathy; PPV, phobic postural vertigo; QDA, quadratic discriminant analysis; RkF, repeated k-fold; SVM, support vector machine; SVM-Poly, support vector machine with polynomial kernel; SVM-RBF, support vector machine with radial basis function kernel.
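Most outcomes in these tables (sensitivity, specificity, accuracy, positive and negative predictive value, F1 score) are different ratios of the same four confusion-matrix counts. The following is a minimal Python sketch of those definitions, treating PD as the positive class; the class and function names are hypothetical. The counts in the usage example are an illustrative reconstruction, not values reported by the authors: 13 of 15 PD and 15 of 16 HC correctly classified happen to reproduce the PD vs. HC percentages listed for Ye et al., 2018. AUC is omitted because it needs continuous classifier scores rather than counts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion-matrix counts, treating PD as the positive class."""
    tp: int  # PD participants classified as PD
    fn: int  # PD participants classified as HC
    tn: int  # healthy controls classified as HC
    fp: int  # healthy controls classified as PD


def _ratio(num: int, den: int) -> float:
    # Return NaN instead of raising ZeroDivisionError for an empty denominator.
    return num / den if den else float("nan")


def binary_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Threshold-based metrics as fractions in [0, 1]."""
    return {
        "sensitivity": _ratio(c.tp, c.tp + c.fn),  # recall, true positive rate
        "specificity": _ratio(c.tn, c.tn + c.fp),  # true negative rate
        "ppv": _ratio(c.tp, c.tp + c.fp),          # positive predictive value (precision)
        "npv": _ratio(c.tn, c.tn + c.fn),          # negative predictive value
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.fn + c.tn + c.fp),
        "f1": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
    }


if __name__ == "__main__":
    # Hypothetical counts for 15 PD and 16 HC (illustration only, see lead-in).
    counts = ConfusionCounts(tp=13, fn=2, tn=15, fp=1)
    for name, value in binary_metrics(counts).items():
        print(f"{name}: {value:.2%}")
```

Running the script prints sensitivity, specificity and accuracy as 86.67%, 93.75% and 90.32%. With leave-one-out cross validation on 31 subjects, accuracy can only move in steps of 1/31 (about 3.2 percentage points), which is one reason small-sample results in these tables should be read with caution.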

MRI (n = 36)

Of the 36 studies, 32 used accuracy to evaluate the performance of their machine learning models. Taking the highest accuracy reported in each study, the mean (SD) accuracy of these 32 studies was 87.5 (8.0)%, ranging from 70.5% (Liu L. et al., 2016) to 100.0% (Cigdem et al., 2019; Figure 4A). Across all 36 studies, the per-study highest accuracy was obtained with SVM in 21 studies (58.3%), with neural networks in 8 studies (22.2%), with discriminant analysis in 3 studies (8.3%), with regression in 2 studies (5.6%), and with ensemble learning in 1 study (2.8%); the remaining study (2.8%) obtained its highest accuracy with models that do not belong to any of these categories (Figure 4B). In 8 of the 36 studies, neural networks were applied directly to MRI data, while the remaining studies trained machine learning models on extracted features, e.g., cortical thickness and the volume of brain regions, to diagnose PD.
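Each share above is simply a study count divided by the 36 MRI studies. A short Python check, assuming (as the text implies) that the six categories are mutually exclusive and together cover every study, reproduces the reported percentages; the function name is hypothetical.

```python
from __future__ import annotations


def category_shares(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of studies in each category, rounded to one decimal."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


# Studies grouped by the model family that gave their highest accuracy (from the text).
best_model_counts = {
    "SVM": 21,
    "Neural network": 8,
    "Discriminant analysis": 3,
    "Regression": 2,
    "Ensemble learning": 1,
    "Other": 1,
}

assert sum(best_model_counts.values()) == 36  # categories cover all MRI studies
for name, pct in category_shares(best_model_counts).items():
    print(f"{name}: {best_model_counts[name]} studies ({pct}%)")
```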

Of the 17 studies that used MRI data from the PPMI database, 16 used accuracy to evaluate model performance, with a mean (SD) accuracy of 87.9 (8.0)%; the lowest and highest accuracies were 70.5% and 99.9%, respectively (Table 6). Of the 19 studies that collected MRI data directly from participants, 16 used accuracy to evaluate classification performance and achieved a mean (SD) accuracy of 87.0 (8.1)%; the lowest and highest reported accuracies were 76.2% and 100%, respectively (Table 6).
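The two subgroup summaries (16 PPMI studies and 16 studies with participant-collected data) should combine into the overall figure for the 32 MRI studies that reported accuracy. A minimal Python sketch of that consistency check, assuming the values in parentheses are sample standard deviations (denominator n - 1); the function name is hypothetical.

```python
from __future__ import annotations

import math


def pool_summaries(groups: list[tuple[int, float, float]]) -> tuple[int, float, float]:
    """Combine (n, mean, SD) summaries of disjoint groups into one (n, mean, SD).

    Assumes every SD is a sample SD (denominator n - 1).
    """
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # Within-group sum of squares recovered from each group's SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    # Between-group sum of squares from each group mean's offset to the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    return n_total, grand_mean, math.sqrt((ss_within + ss_between) / (n_total - 1))


# (studies reporting accuracy, mean accuracy in %, SD in %) as stated in the text.
ppmi = (16, 87.9, 8.0)
collected = (16, 87.0, 8.1)

n, mean, sd = pool_summaries([ppmi, collected])
print(f"n = {n}, mean = {mean:.2f}%, SD = {sd:.2f}%")
# prints: n = 32, mean = 87.45%, SD = 7.93%
```

Because the subgroup inputs are themselves rounded to one decimal, agreement can only be approximate: the pooled mean of 87.45% rounds to the overall 87.5%, and the pooled SD of about 7.93% is close to the overall 8.0%.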

Table 6.

Studies that applied machine learning models to MRI data to diagnose PD (n = 36).

| Objectives | Type of diagnosis | Source of data | Number of subjects (n) | Machine learning method(s), splitting strategy and cross validation | Outcomes | Year | References |
|---|---|---|---|---|---|---|---|
| Classification of PD from MSA | Differential diagnosis | Collected from participants | 150; 54 HC + 65 PD + 31 MSA | SVM with leave-one-out cross validation | MSA vs. PD: accuracy = 0.79, sensitivity = 0.71, specificity = 0.86; MSA vs. HC: accuracy = 0.79, sensitivity = 0.84, specificity = 0.74; MSA vs. subsample of PD: accuracy = 0.84, sensitivity = 0.77, specificity = 0.90 | 2019 | Abos et al., 2019 |
| Classification of PD from MSA | Differential diagnosis | Collected from participants | 151; 59 HC + 62 PD + 30 MSA | SVM with leave-one-out cross validation | Accuracy = 77.17%, sensitivity = 83.33%, specificity = 74.19% | 2019 | Baggio et al., 2019 |
| Classification of PD from HC | Diagnosis | Collected from participants | 94; 50 HC + 44 PD | CNN with 85 subjects for training and 9 for testing | Training accuracy = 95.24%, testing accuracy = 88.88% | 2019 | Banerjee et al., 2019 |
| Classification of PD from HC | Diagnosis | Collected from participants | 47; 26 HC + 21 PD | SVM-linear with leave-one-out cross validation | Accuracy = 93.62%, sensitivity = 90.47%, specificity = 96.15% | 2015 | Chen et al., 2015 |
| Classification of PD from PSP | Differential diagnosis | Collected from participants | 78; 57 PD + 21 PSP | SVM with leave-one-out cross validation | Accuracy = 100%, sensitivity = 1, specificity = 1 | 2013 | Cherubini et al., 2014a |
| Classification of PD, MSA, PSP and HC | Diagnosis and differential diagnosis | Collected from participants | 106; 36 HC + 35 PD + 16 MSA + 19 PSP | Elastic Net regularized logistic regression with nested 10-fold cross validation | HC vs. PD/MSA-P/PSP: AUC = 0.88, sensitivity = 0.80, specificity = 0.83, PPV = 0.82, NPV = 0.81; HC vs. PD: AUC = 0.91, sensitivity = 0.86, specificity = 0.80, PPV = 0.82, NPV = 0.89; PD vs. MSA/PSP: AUC = 0.94, sensitivity = 0.86, specificity = 0.87, PPV = 0.88, NPV = 0.84; PD vs. MSA: AUC = 0.99, sensitivity = 0.97, specificity = 1.00, PPV = 1.00, NPV = 0.93; PD vs. PSP: AUC = 0.99, sensitivity = 0.97, specificity = 1.00, PPV = 1.00, NPV = 0.94; MSA vs. PSP: AUC = 0.98, sensitivity = 0.94, specificity = 1.00, PPV = 1.00, NPV = 0.93 | 2017 | Du et al., 2017 |
| Classification of HC, PD, MSA and PSP | Diagnosis and differential diagnosis | Collected from participants | 64; 22 HC + 21 PD + 11 MSA + 10 PSP | SVM-linear with leave-one-out cross validation | PD vs. HC: accuracy = 41.86%, sensitivity = 38.10%, specificity = 45.45%; PD vs. MSA: accuracy = 71.87%, sensitivity = 36.36%, specificity = 90.48%; PD vs. PSP: accuracy = 96.77%, sensitivity = 90%, specificity = 100%; MSA vs. PSP: accuracy = 76.19%; MSA vs. HC: accuracy = 78.78%, sensitivity = 54.55%, specificity = 90.91%; PSP vs. HC: accuracy = 93.75%, sensitivity = 90.00%, specificity = 95.45% | 2011 | Focke et al., 2011 |
| Classification of PD and atypical PD | Differential diagnosis | Collected from participants | 40; 17 PD + 23 atypical PD | SVM-RBF with 10-fold cross validation | Accuracy = 97.50%, TPR = 0.94, FPR = 0.00, TNR = 1.00, FNR = 0.06 | 2012 | Haller et al., 2012 |
| Classification of PD and other forms of Parkinsonism | Differential diagnosis | Collected from participants | 36; 16 PD + 20 other Parkinsonism | SVM-RBF with 10-fold cross validation | Accuracy = 86.92%, TP = 0.87, FP = 0.14, TN = 0.87, FN = 0.13 | 2012 | Haller et al., 2013 |
| Classification of HC, PD, PSP, MSA-C and MSA-P | Diagnosis and differential diagnosis | Collected from participants | 464; 73 HC + 204 PD + 106 PSP + 21 MSA-C + 60 MSA-P | SVM-RBF with 10-fold cross validation | PD vs. HC: sensitivity = 65.2%, specificity = 67.1%, accuracy = 65.7%; PD vs. PSP: sensitivity = 82.5%, specificity = 86.8%, accuracy = 85.3%; PD vs. MSA-C: sensitivity = 76.2%, specificity = 96.1%, accuracy = 94.2%; PD vs. MSA-P: sensitivity = 86.7%, specificity = 92.2%, accuracy = 90.5% | 2016 | Huppertz et al., 2016 |
| Classification of PD from HC | Diagnosis | Collected from participants | 42; 21 HC + 21 PD | SVM-linear with stratified 10-fold cross validation | Accuracy = 78.33%, precision = 85.00%, recall = 81.67%, AUC = 85.28% | 2017 | Kamagata et al., 2017 |
| Classification of PD, PSP, MSA-P and HC | Diagnosis and differential diagnosis | Collected from participants | 419; 142 HC + 125 PD + 98 PSP + 54 MSA-P | CNN with train-validation ratio of 85:15 | PD: sensitivity = 94.4%, specificity = 97.8%, accuracy = 96.8%, AUC = 0.995; PSP: sensitivity = 84.6%, specificity = 96.0%, accuracy = 93.7%, AUC = 0.982; MSA-P: sensitivity = 77.8%, specificity = 98.1%, accuracy = 95.2%, AUC = 0.990; HC: sensitivity = 100.0%, specificity = 97.5%, accuracy = 98.4%, AUC = 1.000 | 2019 | Kiryu et al., 2019 |
| Classification of PD from HC | Diagnosis | Collected from participants | 65; 31 HC + 34 PD | FCP with 36 out of the 65 subjects as the training set | AUC = 0.997 | 2016 | Liu H. et al., 2016 |
| Classification of PD, PSP, MSA-C and MSA-P | Differential diagnosis | Collected from participants | 85; 47 PD + 22 PSP + 9 MSA-C + 7 MSA-P | SVM-linear with leave-one-out cross validation | 4-class classification (MSA-C vs. MSA-P vs. PSP vs. PD) accuracy = 88% | 2017 | Morisi et al., 2018 |
| Classification of PD from HC | Diagnosis | Collected from participants | 89; 47 HC + 42 PD | Boosted logistic regression with nested cross validation | Accuracy = 76.2%, sensitivity = 81%, specificity = 72.7% | 2019 | Rubbert et al., 2019 |
| Classification of PD, PSP and HC | Diagnosis and differential diagnosis | Collected from participants | 84; 28 HC + 28 PSP + 28 PD | SVM-linear with leave-one-out cross validation | PD vs. HC: accuracy = 85.8%, specificity = 86.0%, sensitivity = 86.0%; PSP vs. HC: accuracy = 89.1%, specificity = 89.1%, sensitivity = 89.5%; PSP vs. PD: accuracy = 88.9%, specificity = 88.5%, sensitivity = 89.5% | 2014 | Salvatore et al., 2014 |
| Classification of PD, APS (MSA, PSP) and HC | Diagnosis and differential diagnosis | Collected from participants | 100; 35 HC + 45 PD + 20 APS | CNN-DL, CR-ML, RA-ML with 5-fold cross validation | PD vs. HC with CNN-DL: test accuracy = 80.0%, test sensitivity = 0.86, test specificity = 0.70, test AUC = 0.913; PD vs. APS with CNN-DL: test accuracy = 85.7%, test sensitivity = 1.00, test specificity = 0.50, test AUC = 0.911 | 2019 | Shinde et al., 2019 |
| Classification of PD from HC | Diagnosis | Collected from participants | 101; 50 HC + 51 PD | SVM-RBF with leave-one-out cross validation | Sensitivity = 92%, specificity = 87% | 2017 | Tang et al., 2017 |
| Classification of PD from HC | Diagnosis | Collected from participants | 85; 40 HC + 45 PD | SVM-linear with leave-one-out, 5-fold, 0.632-fold (1-1/e), 2-fold cross validation | Accuracy = 97.7% | 2016 | Zeng et al., 2017 |
| Classification of PD from HC | Diagnosis | PPMI database | 543; 169 HC + 374 PD | RLDA with JFSS with 10-fold cross validation | Accuracy = 81.9% | 2016 | Adeli et al., 2016 |
| Classification of PD from HC | Diagnosis | PPMI database | 543; 169 HC + 374 PD | RFS-LDA with 10-fold cross validation | Accuracy = 79.8% | 2019 | Adeli et al., 2019 |