Abstract

Engagement activities are defined along a continuum that analyzes and represents nonacademic stakeholder activities and interactions with academic researchers. Proposed continua begin with no or limited stakeholder inclusion and input into research and continue with descriptions of increasing presence, input, and participation in decision-making. Despite some agreement in the literature, the development of consistent terminology and definitions has been recommended to promote a common understanding of strategies in engaged research. This paper sought to develop and understand classifications and definitions of community-engaged research that can serve as the foundation of a measure of engaged research, permitting comparisons among engagement strategies and the outcomes they produce in health- and healthcare-related research studies. Data on academic and stakeholder perceptions and understandings of the classifications and definitions were obtained through a Delphi process (N = 19) using online and face-to-face surveys and through cognitive response interviews (N = 16). Participants suggested the need for a more nuanced understanding of engagement along portions of the continuum, with active involvement and decision-making increasing as engagement progressed. Cognitive interview responses suggested that participants viewed outreach and education as a more advanced level of engagement than previously described in the literature and viewed consultation negatively because it required work without guaranteeing community benefit. It is possible to define a continuum of patient- and community-engaged research that is understood and accepted by both academic researchers and community members. However, future research should revisit the understanding and depiction of the strategies to be used in measure development.

Keywords: Community-engaged research, Community-based participatory research, patient-engaged research, community engagement, patient engagement

Implications.

Practice: It is possible for researchers and stakeholders to clarify terminology so that roles, participation, and benefits are clear at each level of community engagement along the continuum.

Policy: Policymakers who want to encourage community-engaged research must consider similarities and differences that may emerge in community and academic perceptions of community-engaged research.

Research: Future research is needed to clarify the understanding and depiction of community engagement activities and strategies that guide measure development.

INTRODUCTION

As the scientific community increasingly focuses on translation and implementation of scientific discoveries [1–3], stakeholder engagement is receiving increased attention as a key component in tailoring best practices to specific populations [4–6]. However, as ongoing research and analysis indicate [7], agreement is still needed on the terminology and definitions used in the field of engaged research. To examine critical issues in the field, a consortium was formed of individuals from the USA, Canada, UK, and Australia who recognized the importance and potential impact of funders advancing patient- and stakeholder-engaged research. One issue the consortium members undertook was an examination of definitional issues in engaged research. Recognizing variation in the terminology used [7], the consortium authors recommended that consistent terminology and definitions be developed to promote a common understanding of strategies in engaged research, while remaining flexible to encourage continued growth and innovation in the field. The recommendations included use of the term engagement rather than participation or involvement, which were deemed either too narrow or too broad. The consortium proposed defining research engagement as “an active partnership between stakeholders and researchers in production of new healthcare knowledge and evidence” [7] (p. 7). Acceptance of this foundational definition by the field would also call for clear definitions of the classifications and continua of engagement.

In terms of engagement, the literature has consistently classified academic research as nonengaged, advisory, symbolic, collaborative, or fully engaged [8–11]. Engagement activities are defined along a continuum that analyzes and represents nonacademic stakeholder activities and interactions with academic researchers. At one point on the continuum, engagement may represent an effort to share education and information with the community; at another, it may involve shared decision-making throughout the research process. There seems to be agreement in the literature on the trajectory of the continua and on which activities and strategies represent the endpoint of authentic engagement [5,8–11]. The proposed continua begin with no or limited stakeholder inclusion and input into research and continue with descriptions of progressively increasing presence, input, and participation in decision-making. There seems to be less agreement on how stakeholder engagement strategies are defined and positioned along these continua as researchers and stakeholders form collaborations and partnerships. For example, the number of strategies included and described varies, as does the inclusion of education, outreach, and informational strategies. Descriptions of the stakeholder engagement continua also vary in whether outreach and education are combined and whether informative and consultative strategies are combined.

Fundamental to classifications of strategies for engaged research are the questions of whether, how, and when stakeholder voices are heard and carry weight, and whether the voices of academic researchers overpower those of nonacademic stakeholders [7,9–11]. If stakeholders are allowed to advise but researchers hold ultimate decision-making power, stakeholder presence may give the appearance of engagement even though stakeholders may not be meaningfully engaged. Several scholars contend that meaningful engagement requires some shared decision-making [9–11]. Previous research suggested a classification system that moved from nonengaged to engaged participation [9]. Nonparticipation is represented by outreach and education, with researchers developing, implementing, and evaluating strategies to reach the community of interest and attempting to educate nonacademic stakeholders about a particular topic [9]. The continuum continues with symbolic participation, which includes (a) coordination, in which researchers gather community stakeholders together to assess important elements of a project or activity and community members provide feedback to academic researchers, and (b) cooperation, in which researchers ask community members for help with a project instead of just asking for advice. The final category on the continuum is collaboration, which has two subtypes in which researchers and community members are actively involved in the design and implementation of the project and in the interpretation of the findings. The first is patient-centered collaboration, in which patients, caregivers, and advocacy groups set research priorities and control the design and implementation of project activities as well as the interpretation and publication of findings. The second is community-based participatory research (CBPR), a population health approach to the patient-centered engagement model [9,12].

In this paper, we report on efforts to subject our engagement classifications and definitions to researcher and stakeholder scrutiny and feedback. This paper seeks to understand the changes in classification and definition that were required to achieve agreement among researchers and stakeholders. Classifications and definitions that are agreed upon can serve as the foundation of a measure of engaged research that might allow comparisons among engagement strategies and the outcomes that they produce in a variety of health- and healthcare-related research studies.

METHODS

The study and consent procedures described received institutional review board approval.

Development of definitions for stakeholder-engaged research classifications

The Disparities Elimination Advisory Committee (DEAC) of the Program for Elimination of Cancer Disparities (PECaD) of a comprehensive cancer center [12] worked collaboratively with study investigators to develop a standardized measure of stakeholder engagement [13]. This measurement development group also sought a way to classify strategies of engaged research and, specifically, to assess how well programs and projects adhered to community engagement principles. The team initiated discussion using the definition of stakeholder-engaged research provided by the CDC [13] and the principles outlined in the work of Israel [10,11]. The process began in April 2014 with DEAC members (n = 15) participating in a focus group on perceptions of the implementation of CBPR principles in each PECaD project and by each PECaD cancer partnership. The DEAC members consisted of 3 (20%) men, 12 (80%) women, 8 (53%) Black, 5 (33%) White, and 2 (13%) Native American participants.

As work proceeded, the DEAC members and the academic researchers used the definition of patient-engaged research—as offered by the Patient-Centered Outcomes Research Institute (PCORI) [14]—to develop definitions for the strategies included on the continuum of stakeholder-engaged research: coordination, cooperation, patient-centered collaboration, and CBPR collaboration [9]. PCORI defines patient-engaged research as, “The meaningful involvement of patients, caregivers, clinicians, and other healthcare stakeholders throughout the entire research process—from planning the study, to conducting the study, and disseminating study results” [14]. In the spring of 2017, the research team conducted an extensive literature review on measures of community engagement, which led to revised definitions.

The final iteration of the effort involved additional input from the DEAC members and the Patient Research Advisory Board (PRAB) at a mid-western school of medicine [15]. The PRAB comprises alumni of a 15-week training program on research methods who are certified by the university human research protections office to conduct research with human subjects. The PRAB is designed to help investigators with community-engaged or community-based research proposals and projects by having community members review proposals and give feedback. The additional interaction with DEAC and PRAB resulted in the addition of a fifth category of engagement: outreach and education [9]. In the spring of 2017, study investigators reviewed PCORI-funded projects for definitions and examples of engagement used in patient-centered outcomes research and activities. The definitions were presented to the DEAC members for comment in April 2017. To obtain more in-depth input and feedback, the team solicited volunteers; six DEAC or PRAB members agreed to provide feedback on the definitions in June 2017.

Delphi panel process

As CBPR practitioners, the research team believed it was important to develop a measure of stakeholder-engaged research in a way that was consistent with CBPR principles, particularly the inclusion of stakeholder input into the classifications of engagement and their definitions. To meet this goal, we designed a modified Delphi process and convened a Delphi panel. The Delphi method uses structured communication among individuals with expertise on a topic, with the goal of reaching agreement on a designated outcome [16]. The process involves multiple rounds of individual surveys (online and in-person); after each round, responses are aggregated and participants receive feedback on the group response, and rounds continue until majority agreement is reached.

Sample

Nineteen participants, comprising academic researchers and nonacademic members (see Table 1) with expertise in community engagement in research, were recruited through the authors’ networks and asked to participate in a panel for a study on developing and validating a quantitative measure of community engagement in research [17]. The panel purposefully included both stakeholders/community partners (57.9%) and academic researchers (42.1%). Most panelists (57.9%) did not focus on a specific disease or health condition in their work. For the other panelists, health conditions of focus included HIV/AIDS, hepatitis C, mental illnesses, respiratory illnesses, health behaviors and prevention, breast cancer, sickle cell disease, cancer in general, and hypertension. One panelist dropped out after completing the first of the five rounds of the Delphi process, leaving 18 panelists who remained engaged throughout the process.

Table 1.

Demographics of Delphi panel (N = 19)

| Characteristic | Category | N (%) |
|---|---|---|
| Race | African American or Black | 12 (63.2%) |
| | White | 6 (31.6%) |
| | Multiracial | 1 (5.3%) |
| Ethnicity | Hispanic or Latino | 1 (5.3%) |
| | Non-Hispanic or non-Latino | 18 (94.7%) |
| Sex | Male | 2 (10.5%) |
| | Female | 17 (89.5%) |
| Education | Some college or associate degree | 4 (21.1%) |
| | College degree | 1 (5.3%) |
| | Graduate degree | 14 (73.7%) |
| Nationality | American/U.S. | 18 (94.7%) |
| | Dominican | 1 (5.3%) |
| Community or academic | Academic | 8 (42.1%) |
| | Community | 11 (57.9%) |
| Focus on a specific disease | Yes | 8 (42.1%) |
| | No | 11 (57.9%) |
| Direct service provider | Current | 4 (21.1%) |
| | Past | 5 (26.3%) |
| | Never | 10 (52.6%) |
| Age, years | Median (range) | 55 (26–76) |
| Years of research experience | Median (range) | 10 (0–35) |
| Years of CBPR research | Median (range) | 10 (0–30) |
| Years of direct provider experience (n = 9)ᵃ | Median (range) | 18 (2–30) |

CBPR community-based participatory research.

ᵃNot all Delphi panel members were providers; nine were current or past service providers.

Procedures

An introductory webinar was held to inform panel members about the Delphi process. The webinar was followed by three online rounds, one face-to-face round, and a final online round. In each round, panelists were asked whether they agreed or disagreed with the definitions of the classifications of stakeholder-engaged research and were invited to suggest modifications. The goal of the process was to reach agreement of at least 80%.

Round 1 focused on items that might measure strategies of stakeholder-engaged research. The stakeholder engagement classifications and definitions, which had been determined by the DEAC-PRAB working group, were presented to panelists in October 2017, during Round 2 of the Delphi process. Input and refinement continued through Round 5 (August 2018). Rounds 2, 3, and 5 were online surveys (administered via the Qualtrics platform) that allowed for feedback. Round 4 was an in-person meeting conducted over 2 days [17], during which three polling activities took place. Eight panelists could not attend the in-person meeting but were able to participate by using an online meeting platform (GoToMeeting) or by submitting a premeeting survey before the first day. Three of the six panelists who completed the premeeting survey attended the in-person meeting virtually at varied times throughout the 2-day meeting. All but two panelists were able to participate in some format in Round 4. During the in-person meeting, the research team and a professional editor took notes to document key changes and areas of disagreement. The professional editor also checked for grammar and consistency issues.

The definitions for the proposed DEAC-PRAB stakeholder engagement classifications were based on a literature review and synthesis first presented by the research team [9]. The original strategies of engagement and their definitions, as informed by CDC/ATSDR [13], Israel [10,11], and the DEAC team [12], were the following:

Outreach: Researchers develop, implement, and evaluate strategies to reach the target population. Key members of the target population (gatekeepers) can be engaged as advisors and can make key connections.

Education: Researchers are trying to educate stakeholders about a particular topic. This is usually combined with outreach efforts to gain audiences for education sessions and/or materials.

Coordination: Researchers gather community health stakeholders together to assess important elements of a project or activity. Community members give feedback, and this feedback informs researchers’ decisions. However, it is the researchers’ responsibility to design and implement the study with no help expected from the community members. Research and related programs are strengthened through community outreach, and results are disseminated through community groups and gatekeepers.

Cooperation: Researchers ask community members for help with a project, instead of just asking for advice. There is some activity on the part of community members in defined aspects of the project, including recruitment, implementation of interventions, measurement, and interpretation of outcomes. Community health stakeholders are ongoing partners in the decision-making for the project. Community health stakeholders’ understanding of research and its potential importance is enhanced through participation in activities.

Collaboration: Both researchers and community members are actively involved in the design and implementation of the project and in the interpretation of the findings. In addition, all stakeholders benefit in some way from working together, including increased capacity of community groups to engage in research implementation. Community health stakeholders collaborate in decision-making and resource allocation with an equitable balance of power that values input from the community health stakeholders.

Patient-centered: Patients, caregivers, and advocacy groups dictate the priority setting for research choices and control the design and implementation of the project activities in addition to the interpretation and publication of findings. Researchers use their expertise to move these components along, but community health stakeholders make all major decisions about research approaches. Systems are in place for patient participation in research at all points of the engagement continuum. Community health stakeholders have the capacity to engage in partnerships with an equitable balance of power for governance and a strong level of accountability to the public or community.

Community-based participatory research: CBPR is the population health approach to the patient-centered engagement model. The principles of CBPR highlight trust among partners, respect for each partner’s expertise and contributions, mutual benefit among all partners, and a community-driven partnership with equitable and shared decision-making [9] (p. 487).

Cognitive response interviews

Cognitive response interviews were conducted to identify problems with survey items and definition wording and to help us modify the definitions to improve their use in community-engaged research [18]. Cognitive response interviewing is an evidence-based method of examining how participants understand and interpret survey items. After each item is presented individually, participants are asked to answer questions about their interpretation of the item, paraphrase it, and identify words, phrases, or item components that are problematic [18].

Sample

A purposive sample of 16 individuals (see Table 2) was recruited to complete one-on-one cognitive response interviews in November of 2018. Eligibility criteria for the cognitive response interviews were that participants had to be adults (18 years or older) and that they had to have experience partnering with researchers on patient- or community-engaged research. Participants were recruited through a database of alumni who had completed the Community Research Fellows Training (CRFT) program in a mid-western city [19] and through referral by CRFT alumni. CRFT was established in 2013 and maintains an opt-in database of graduates of four cohorts (n = 125), 94 (75%) of whom are active alumni and have updated contact information.

Table 2.

Demographics of cognitive interview participants (N = 16)

| Characteristic | Category | N (%) |
|---|---|---|
| Race/ethnicity | Non-Hispanic/Latino(a) Black | 10 (62.5%) |
| | Non-Hispanic/Latino(a) White | 4 (25.0%) |
| | Hispanic/Latino(a) | 1 (6.3%) |
| | Other/Multiracial/Unknown | 1 (6.3%) |
| Sex | Male | 2 (12.5%) |
| | Female | 13 (81.3%) |
| | Unknown | 1 (6.3%) |
| Education | HS degree or GED | 2 (12.5%) |
| | Some college or associate degree | 4 (25.0%) |
| | College degree | 2 (12.5%) |
| | Graduate degree | 7 (43.8%) |
| | Unknown | 1 (6.3%) |
| Age, years | Median (range) | 46.5 (24–73) |

GED General Educational Development; HS high school.

Interviewer training and interviews

The lead author trained the project manager and two research assistants (n = 4 interviewers, including the lead author) in conducting in-depth cognitive interviews to ensure consistent interview and data collection procedures. The training also provided an orientation to the interview guide and protocol. To ensure consistency and ease of administration, the project manager instructed interviewers on using tablets to administer the cognitive response interview. Although tablets were used during the interview to capture responses to survey items and quantitative questions, computer-assisted personal interview software was not used; participants’ qualitative responses were captured using a digital recorder.

The one-on-one, semistructured interviews were completed in person. To ensure that respondents understood their role, interviewers explained that the purpose of the interview was to identify problems with survey items and definition wording and to help us modify the definitions to improve their use in community-engaged research. The interviews lasted 90–120 min, and each session’s digital recording was professionally transcribed. Each participant received a $50 gift card. Each participant completed 16 (50%) of the 32 items on the quality scale and 16 (50%) of the 32 items on the quantity scale. Interviewers used verbal probing after participant responses.

Survey questions were administered primarily by tablet; however, participants could use a paper version if they preferred. Participants were then presented with definitions of stakeholder-engaged research classifications in pairs (six definitions in all) and asked to explain the difference between the two definitions in each pair, for a total of three separate comparisons. To minimize order effects, we used four versions of the questionnaire that listed the definition comparisons in different orders and randomly assigned the versions to participants.
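The counterbalancing step can be illustrated with a short sketch. The text does not report how the four orders were constructed or how the random assignment was carried out, so both parts here are assumptions: the four versions are built from the three cyclic rotations of the comparison pairs plus one reversed order, and participants are block-randomized so each version is used equally often. The pair labels follow the comparisons reported in the results; the function names and the seed are hypothetical.

```python
import random
from typing import Optional

# The three definition comparisons reported in the cognitive interview results.
PAIRS = [
    ("Outreach and education", "Consultation"),
    ("Cooperation", "Collaboration"),
    ("Collaboration", "Partnership"),
]


def make_versions(pairs: list[tuple[str, str]]) -> list[list[tuple[str, str]]]:
    """Build four questionnaire orders (hypothetical scheme).

    The three cyclic rotations put each comparison first exactly once;
    the reversed order supplies the fourth version.
    """
    rotations = [pairs[i:] + pairs[:i] for i in range(len(pairs))]
    return rotations + [list(reversed(pairs))]


def assign_versions(participant_ids: list[str], n_versions: int,
                    seed: Optional[int] = None) -> dict[str, int]:
    """Block-randomize: each block of n_versions participants gets every version once."""
    rng = random.Random(seed)
    assignment: dict[str, int] = {}
    block: list[int] = []
    for pid in participant_ids:
        if not block:  # start a new shuffled block when the current one is used up
            block = list(range(n_versions))
            rng.shuffle(block)
        assignment[pid] = block.pop()
    return assignment


versions = make_versions(PAIRS)
participants = [f"P{i:02d}" for i in range(1, 17)]  # 16 interviewees, as in the study
assignment = assign_versions(participants, n_versions=len(versions), seed=2018)

for pid in participants[:4]:
    order = versions[assignment[pid]]
    print(pid, "version", assignment[pid] + 1, [f"{a} vs. {b}" for a, b in order])
```

Block randomization is only one way to match the description; drawing a version independently for each participant would also be random assignment but could leave some versions over- or underused in a sample of 16.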

Coding and analysis

After reviewing the project goals, the content of the interviews, and the existing literature, the first author developed a coding guide that prescribed rules and categories for identifying and recording content. Coding was completed in three phases. In the first phase, the study investigator and one research assistant coded the transcripts according to whether the participant understood or misunderstood each definition. Additional codes were developed to note whether participants’ explanations included certain keywords or themes (e.g., control/power, decision-making). The coders read and coded the interview transcripts independently, identifying text units that addressed participants’ understanding of the engagement definitions and their rationale for differentiating between the classifications of stakeholder-engaged research.

In the second phase, the two coders (the senior investigator and the research assistant) met to reach agreement on the definitions and examples used to code the interview transcripts. In the third phase, once coding was complete and agreement had been reached, the coders reconvened to formulate core ideas and general themes that emerged from each interview.

RESULTS

Results of polling and discussion on Delphi panel definitions

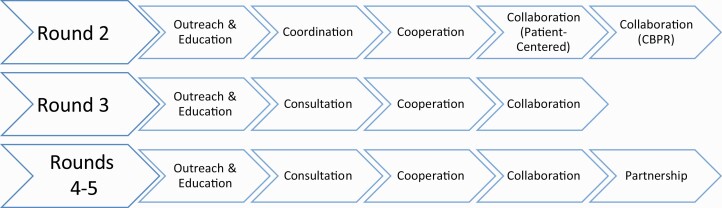

The Delphi panel provided input on, and voted on, three iterations of engagement strategies and definitions during Rounds 2 through 5 (see Figure 1), ultimately reaching agreement of 94.4%–100.0% (Table 3). At the end of Round 2, nonacademic stakeholders reached strong agreement on the definitions of all engaged research classifications: outreach and education (80%), coordination (100%), cooperation (90%), patient-centered collaboration (100%), and CBPR collaboration (100%). However, academic researchers did not reach strong agreement on any of the definitions; their agreement was 75% for each of outreach and education, coordination, cooperation, patient-centered collaboration, and CBPR collaboration. In Round 3, academic researchers reached strong agreement only on the definition of cooperation (88%; agreement on all others ranged from 63% to 75%), whereas nonacademic stakeholders reached strong agreement on all definitions (80% for collaboration, 90% for outreach and education, and 100% for both cooperation and consultation). Also in Round 3, the two types of collaboration were combined into a single category, and coordination was renamed consultation. A fifth classification, partnership, was added in Round 4 and retained in Round 5. The in-person meeting and discussion (Round 4) substantially helped reconcile divergent perspectives on the classification definitions, with all definitions reaching the agreement threshold among panelists who voted (see Table 3). However, lower and variable participation (some panelists did not participate, some completed the premeeting survey and joined virtually on and off, and some completed only the premeeting survey) may have influenced the flow and direction of the discussion and affected the validity of the Round 4 process. Because some panelists did not vote, and to ensure that everyone’s input was incorporated, a final online round (Round 5) was completed. Only one dissenting opinion was recorded in Round 5, for the collaboration definition (see Table 3).

Fig 1.

Change of categories in continuum of community-engaged research, Rounds 2–5 of Delphi panel.

Table 3.

Agreement of Delphi panel on definitions in the community-engaged research continuum, Rounds 2–5

| Original continuum | Round 2 (n = 18) | First revised continuum | Round 3 (n = 18) | Second revised continuum | Round 4 (n = 12)ᵃ | Round 5 (n = 18) |
|---|---|---|---|---|---|---|
| Outreach and education | 14 (77.8%) | Outreach and education | 14 (77.8%) | Outreach and education | 12 (100%) | 18 (100%) |
| Coordination | 16 (88.9%) | Consultation | 16 (88.9%) | Consultation | 12 (100%) | 18 (100%) |
| Cooperation | 15 (83.3%) | Cooperation | 17 (94.4%) | Cooperation | 12 (100%) | 18 (100%) |
| Collaboration–PC | 16 (88.9%) | Collaboration | 13 (72.2%) | Collaboration | 12 (100%) | 17 (94.4%) |
| Collaboration–CBPRᵇ | 16 (88.9%) | – | – | Partnership | 13 (100%) | 18 (100%) |

CBPR community-based participatory research; PC patient centered.

ᵃDelphi panel members voting on the Round 4 surveys (present or online); N = 13 for Day 2 voting on partnership (due to the number of virtual participants differing throughout the day).

ᵇCollaboration–CBPR and Collaboration–PC were combined as a classification during the first revision and changed to Collaboration in Round 3.
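The percentages in Table 3 are the share of voting panelists who agreed with each definition. The sketch below recomputes the Round 3 column from the counts in the table and flags which definitions meet an 80% agreement threshold. It assumes the threshold is applied as "at least 80% of those voting agree"; the `Vote` type and the function names are hypothetical, and the source does not describe any software used for tallying votes.

```python
from dataclasses import dataclass

# Agreement threshold, assumed to mean "at least 80% of voting panelists agree".
THRESHOLD = 0.80


@dataclass
class Vote:
    definition: str
    agree: int   # panelists voting "agree"
    voting: int  # panelists voting in the round


def agreement(vote: Vote) -> float:
    """Proportion of voting panelists who agreed with a definition."""
    return vote.agree / vote.voting


def summarize(votes: list[Vote], threshold: float = THRESHOLD) -> dict[str, tuple[float, bool]]:
    """Map each definition to (percent agreement rounded to 0.1, threshold met?)."""
    return {
        v.definition: (round(100 * agreement(v), 1), agreement(v) >= threshold)
        for v in votes
    }


# Round 3 counts from Table 3 (n = 18 voting panelists).
round3 = [
    Vote("Outreach and education", 14, 18),
    Vote("Consultation", 16, 18),
    Vote("Cooperation", 17, 18),
    Vote("Collaboration", 13, 18),
]

summary = summarize(round3)
for name, (pct, met) in summary.items():
    print(f"{name}: {pct}% ({'met' if met else 'not met'})")
```

Under this rule, only consultation (88.9%) and cooperation (94.4%) meet the threshold in Round 3, while outreach and education (77.8%) and collaboration (72.2%) fall short; a strict "more than 80%" rule gives the same result for these counts.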

On the basis of the Round 2 survey feedback, academic researchers on the Delphi panel wanted definitions to be more concise and expressed concerns about the terminology used to categorize the strategies of engagement, particularly differences in how the categories are represented in the community-based participatory research and patient-centered research literatures. Community members seemed most concerned that the definitions state exactly how community members would be provided services (e.g., provision of culturally appropriate education) and how they would be involved in research (e.g., in outreach efforts). In response to the Round 3 definitions, academic researchers again called for concise terms to improve applicability, whereas community members had fewer suggestions, mostly small edits (e.g., not using the word “target” when referring to community members).

Community stakeholders and academic researchers had extended discussions about how to classify who was on the research team (e.g., what happens when “researchers are the community and not ‘academic researchers’”) and about choosing language that is as inclusive as possible (e.g., using “community members/patients” vs. “patients, caregivers, partner organizations, etc.”; see Table 4). Both groups also wanted stronger wording. An academic researcher suggested describing and highlighting what constitutes equity in engaged research, and one community stakeholder suggested adding a category representing long-term relationships between community stakeholders and researchers as the gold standard (i.e., partnership). In the third and fourth rounds, strong agreement existed, and few definitional modifications were developed (see Table 4).

Table 4.

Delphi panel’s changes in key terms in community engagement definitions, Rounds 2–5

Classifying people

| Original terms | Changed terms | Round changed |
|---|---|---|
| Stakeholders | Community residents and/or patients | Round 2 |
| Both researchers and community members | All partners | Round 2 |
| The community | Patients, caregivers, clinicians, and community members | Round 2 |
| Target population | Population of interest | Round 3 |
| Staff members who are similar to target population | Organizational partners | Round 3 |
| People with relevant lived experience | Community residents and/or patients | Round 4 |

Classifying descriptions or actions

| Original terms | Changed terms | Round changed |
|---|---|---|
| Attempt to act | Act | Round 2 |
| Have a major voice or role in | Partner in every aspect (of the research) | Round 3 |
| Study design, recruitment, study questions and measures, activities of the research project, and the interpretation of findings | Setting priorities, study design, implementation, analysis/interpretation, and dissemination | Round 3 |
| Discuss ways to have respectful and trusting relationships | Built on trust and mutual respect | Round 3 |
| Being valued | Are valued | Round 4 |
| Partnership structures in place | Partnership processes exist | Round 4 |

Results from cognitive response interviews

The Delphi panel’s discussion and recommendations produced a final set of engagement strategies and definitions, which were examined through cognitive response interviews with a purposive sample of 16 interviewees. The terms and definitions tested were the following:

1. Outreach and Education

Definition: Research team members develop, implement, and evaluate strategies to reach the population of interest. Organizational partners can be engaged as advisors and can make key connections. In some instances, researchers are trying to educate community residents and/or patients about a particular topic. In these cases, outreach efforts are used to gain audiences for education sessions and/or materials.

2. Consultation

Definition: Researchers ask community residents and/or patients for advice on important elements of a project or activity. The provided feedback informs the research, but the researchers are responsible for designing and implementing projects with no help expected from the people who were consulted.

3. Cooperation

Definition: Researchers ask community residents and/or patients for advice and help with a project. Such help may include activity in defined aspects of the project, including recruitment, activities related to doing the intervention, the creation of study questions and measures, and the interpretation of outcomes. Researchers and community residents and/or patients work together to make decisions throughout the project.

4. Collaboration

Definition: Patients, caregivers, clinicians, researchers, and/or community members partner in every aspect of the research, including setting priorities, study design, implementation, analysis/interpretation, and dissemination. Collaborations are built on mutual respect and trust. All partners are valued, benefit from the research, and share decision-making, power, and resources.

5. Partnership

Definition: A strong, bidirectional relationship exists among patients, caregivers, clinicians, researchers, and community members (or a combination of these categories) regarding every aspect of the research, including setting priorities, study design, implementation, analysis/interpretation, and dissemination. The relationship is built on trust and mutual respect. All partners are valued, benefit from the research, and share decision-making, power, and resources. Strong partnership processes exist for how resources are shared, how decisions are made, and how ownership of the work is determined and maintained. Partnerships are the result of long-term relationships and have moved beyond working on a single project. Partners have a history of collaboration, having worked together previously.

Participants in the cognitive response interviews compared strategies of community engagement two at a time, considering the underlying rationale for each, in order to distinguish between them. Overall, 85% of cognitive response interviewees agreed on these distinctions. The most frequently misunderstood distinction was between the outreach and education level and the consultation level (37.5% misunderstanding). Participants appeared to confuse these strategies because they viewed outreach and education as a higher form of community-engaged research than consultation. Cognitive interview responses also suggest that 37.5% of participants saw strong similarities between cooperation and collaboration and that 50% saw strong similarities between collaboration and partnership. Despite these perceived similarities, most participants (87.5%) agreed on the distinctions. A key distinction that participants drew between cooperation and collaboration concerned decision-making, control, or power: involvement in decision-making, control, or power was an important feature of collaboration. A key distinction between collaboration and partnership was the number of projects on which researchers and community members had worked together. All eight participants who completed the collaboration/partnership comparison used the words long-term, ongoing, or history to describe the important features of partnership.

DISCUSSION

Over the rounds of Delphi panel review, the terms used to classify community members and researchers shifted away from research language (e.g., “stakeholders”) toward more common vernacular (e.g., “community residents and/or patients”). Whereas the outreach and education category was initially defined using specific terms such as “staff members who are similar to target population” or “people with relevant lived experience,” its final definition used broad terms such as “organizational partners” or “community residents and/or patients.” Conversely, definitions of strategies further along the continuum, such as collaboration, began with broad terms (e.g., “the community”) but ended with specific groups (e.g., “patients, caregivers, clinicians, and community members”). For these more advanced categories, such as collaboration and partnership, Delphi and interview participants also wanted the terms to reflect community members’ active involvement and influence on decision-making in the research process. At these stages of engagement, researchers are not expected merely to attempt a respectful and equal relationship with community members; they are expected to actually have one. Trust and a strong relationship should be in place to achieve a high level of engagement.

Participants appeared to view outreach and education as a more advanced level of community engagement than the historical literature suggests, which has described it as ranging from nonparticipation to the lowest level of tokenism [8,9]. Some participants described consultation negatively because it required the most work from them without guaranteeing anything in return. This lack of reciprocity was a major concern, and the possibility that their efforts might not even influence the research project compounded it. In contrast, with outreach and education, community members receive something, and participants assumed that this stage naturally incorporated feedback: respondents described community members as being needed for outreach and as actively engaging in education sessions.

Because cognitive response participants judged outreach and education to be an advanced stage of engagement, we revisited the Delphi panel’s comments on outreach and education. In the first round of definition changes, one academic researcher commented that the definition of outreach and education seemed more involved than definitions the researchers had seen before. At the same time, two community members wanted the definition to involve community members more in outreach activities and in producing appropriate educational materials. This difference in perceptions of outreach and education persisted in the cognitive interviews and participant surveys, predominantly among community member participants. Explanations from the cognitive response interviews, together with comments comparing the category definitions and their positions on the continuum, suggested the need to rethink how outreach and education is presented relative to consultation.

The PCORI Compensation Framework states:

Research and other research-related activities funded by the Patient-Centered Outcomes Research Institute (PCORI) should reflect the time and contributions of all partners. Fair financial compensation demonstrates that patients, caregivers, and patient/caregiver organizations’ contributions to the research, including related commitments of time and effort, are valuable and valued. Compensation demonstrates recognition of the value, worth, fairness of treatment with others involved in the research project, and contributes to all members of the research team being valued as contributors to the research project [20].

Compensation was not discussed directly, but the principles selected, and the discussion of them during cognitive interviews, allude to the issues noted in the PCORI framework. The Delphi panel discussion and cognitive response interviews suggest an expectation that community resources and contributions be valued and compensated. Such compensation might cover the use of organizational space and staff or community contributions to recruitment, data collection, or other research-related projects and activities, and might also include appropriate compensation of research participants. Hence, compensation may be financial or may take other forms of intellectual and community investment.

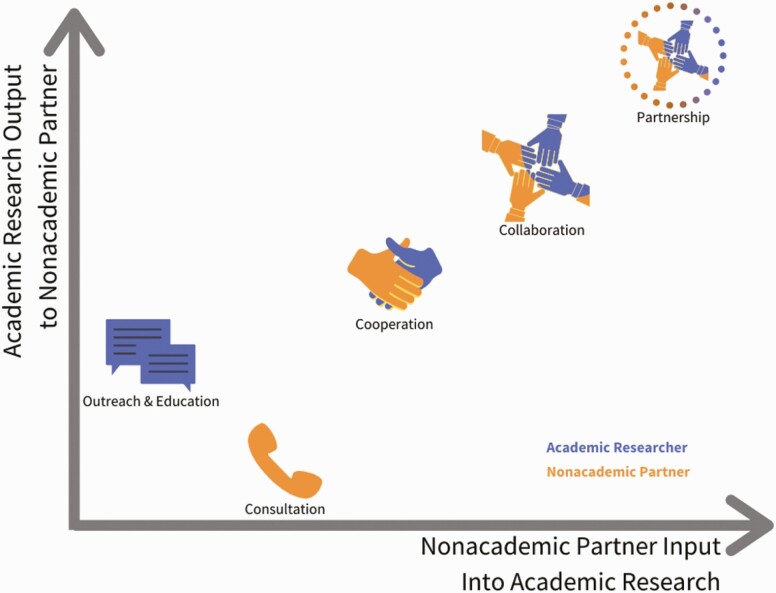

It is possible that the standard single-axis approach to describing community-engaged research continua does not adequately depict community contributions and benefits for each engagement strategy. We developed the framework shown in Figure 2 as an alternative for consideration. This depiction appears to adequately capture community members’ understanding of the contributions from researchers and the benefits to communities in outreach and education compared with consultation.

Fig 2. Recommended portrayal of continuum of community-engaged research interactions.

Limitations

These findings should be interpreted cautiously given the small sample sizes (19 Delphi panelists and 16 cognitive interview participants). However, cognitive response interviewing provides in-depth insight into how participants think about and interpret surveys, the factors that affect their interpretations and responses, and how comfortable they feel with the language, options, and coverage of topics important to an issue. In addition, the Delphi process allows a diverse group of stakeholders to consider important issues until agreement is reached. The findings and recommendations presented here require further quantitative assessment and discussion in the literature.

CONCLUSIONS

The results of the Delphi panel and cognitive response interviews indicate that it is possible to define a continuum of patient- and community-engaged research that is understood and accepted by both academic researchers and community members. However, responses from the cognitive response interviews suggest the need to revisit the understanding and depiction of the strategies that are to be used in measure development. Cognitive response data also indicate that terminology affects how well roles, participation, and benefits are conveyed at each level of community engagement along the continuum.

Funding

This research was funded by the Patient-Centered Outcomes Research Institute (PCORI), ME-1511-33027. The funder had no role in the study design, data collection, analysis, interpretation, or drafting of this article.

Compliance with Ethical Standards

Conflict of Interest: The authors declare that they have no conflicts of interest.

Authors’ Contributions: V.S.T. conceived of the study, participated in its design, conducted interviews, and assisted with interview coding and analysis. In addition, V.S.T. wrote the initial draft and the revisions of the manuscript. N.A. participated in the study design, conducted interviews, completed the quantitative data analysis, and assisted with manuscript revision. D.B. participated in the study design and revision of the manuscript. M.G. conceived of the study, directed the design and coordination of the study, and helped to draft the manuscript. All authors read and approved the final version of the manuscript.

Ethical Approval: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards. The institutional review boards at Washington University in St. Louis and at New York University approved this study.

Informed Consent: Informed consent was obtained from all individual participants included in the study. The institutional review boards at Washington University in St. Louis and at New York University approved the consent procedures used in this study.

References

1. Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. 2006;1(1). doi: 10.1186/1748-5908-1-1

2. Feldstein AC, Glasgow RE. A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. Jt Comm J Qual Patient Saf. 2008;34(4):228–243.

3. Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implement Sci. 2012;7(1):50.

4. Kost RG, Reider C, Stephens J, Schuff KG. Research subject advocacy: program implementation and evaluation at clinical and translational science award centers. Acad Med. 2012;87(9):1228–1236.

5. Wilkins CH, Spofford M, Williams N, et al.; CTSA Consortium’s Community Engagement Key Function Committee Community Partners Integration Workgroup. Community representatives’ involvement in clinical and translational science awardee activities. Clin Transl Sci. 2013;6(4):292–296.

6. Yarborough M, Edwards K, Espinoza P, et al. Relationships hold the key to trustworthy and productive translational science: recommendations for expanding community engagement in biomedical research. Clin Transl Sci. 2013;6(4):310–313.

7. Frank L, Morton SC, Guise JM, Jull J, Concannon TW, Tugwell P; Multi Stakeholder Engagement (MuSE) Consortium. Engaging patients and other non-researchers in health research: defining research engagement. J Gen Intern Med. 2020;35(1):307–314.

8. Arnstein SR. A ladder of citizen participation. J Am Inst Plann. 1969;35(4):216–224.

9. Goodman MS, Sanders Thompson VL. The science of stakeholder engagement in research: classification, implementation, and evaluation. Transl Behav Med. 2017;7(3):486–491.

10. Israel BA, Schulz AJ, Parker EA, Becker AB. Review of community-based research: assessing partnership approaches to improve public health. Annu Rev Public Health. 1998;19(1):173–202.

11. Israel BA, Schulz AJ, Parker EA, Becker AB, Allen AJ, Guzman JR. Critical issues in developing and following community-based participatory research principles. In: Minkler M, Wallerstein N, eds. Community-Based Participatory Research for Health. San Francisco, CA: Jossey-Bass; 2008:47–62.

12. Thompson VL, Drake B, James AS, et al. A community coalition to address cancer disparities: transitions, successes and challenges. J Cancer Educ. 2014;30(4):616–622.

13. Centers for Disease Control and Prevention/Agency for Toxic Substances and Disease Registry. Principles of Community Engagement. 2nd ed. 2011. (page last reviewed June 25, 2015). Available at https://www.atsdr.cdc.gov/communityengagement/. Accessibility verified June 28, 2017.

14. Patient-Centered Outcomes Research Institute. 2017. What we mean by engagement. Available at https://www.pcori.org/engagement/what-we-mean-engagement. Accessibility verified June 28, 2017.

15. Goodman MS, Sanders Thompson VL. Public Health Research Methods for Partnerships and Practice. New York, NY: CRC Press, Taylor & Francis Group; 2017.

16. Brady SR. Utilizing and adapting the Delphi method for use in qualitative research. Int J Qual Methods. 2015;14(5):1–6.

17. Goodman MS, Ackermann N, Bowen DJ, Thompson V. Content validation of a quantitative stakeholder engagement measure. J Community Psychol. 2019;47(8):1937–1951.

18. Drennan J. Cognitive interviewing: verbal data in the design and pretesting of questionnaires. J Adv Nurs. 2003;42(1):57–63.

19. Coats JV, Stafford JD, Sanders Thompson V, Johnson Javois B, Goodman MS. Increasing research literacy: the community research fellows training program. J Empir Res Hum Res Ethics. 2015;10(1):3–12.

20. Patient-Centered Outcomes Research Institute. 2015. Financial compensation of patients, caregivers, and patient/caregiver organizations engaged in PCORI-funded research as engaged research partners. Available at https://www.pcori.org/document/compensation-framework. Accessibility verified March 30, 2020.