Abstract

We live in a world that changes on many timescales. To learn and make decisions appropriately, the human brain has evolved to integrate various types of information, such as sensory evidence and reward feedback, on multiple timescales. This is reflected in cortical hierarchies of timescales consisting of heterogeneous neuronal activities and expression of genes related to neurotransmitters critical for learning. We review the recent findings on how timescales of sensory and reward integration are affected by the temporal properties of sensory and reward signals in the environment. Despite existing evidence linking behavioral and neuronal timescales, future studies must examine how neural computations at multiple timescales are adjusted and combined to influence behavior flexibly.

Keywords: decision making, reinforcement learning, working memory, recurrent neural network, learning rate, cortical hierarchy, volatility

Introduction

Animals adapt their physiological responses according to the changes in their environment. Environmental changes occur on many timescales, ranging from milliseconds to years, and mechanisms to accomplish this adaptability vary greatly across species. These adaptive mechanisms are shaped by many factors, including the animal’s lifespan, the range and precision of sensors that detect the changes in the environment, behavioral repertoire, and the computational machinery available to identify and select the most desirable response [1]. For many animals, the last element is implemented in the brain, and thus, the spatial and temporal organizations of the brain reflect the evolutionary history of adjustments to changes in the environment.

Learning appropriate behavioral responses is not trivial, because the animal’s environment is always stochastic and a particular behavioral response in a certain environment seldom leads to the same outcome [2]. In rare cases, the probabilities of different outcomes for each response might be fixed, which is referred to as expected uncertainty, and this can simplify and even hard-wire certain learning algorithms in the brain through evolution. This still leaves the main challenge for the animal to properly weigh reward probabilities relative to other reward attributes based on the animal’s current physiological state. By contrast, in a non-stationary environment in which outcome probabilities are unknown, which is referred to as unexpected uncertainty, the animals need to adjust their learning and behavioral strategies [2]. Furthermore, sensory signals provide the information about the animal’s environment only probabilistically. Thus, the timescales for integrating sensory signals can also vary substantially. More importantly, different factors that are crucial for some behaviors, such as the traffic laws and road conditions for driving, must be learned over different timescales.

This review provides an overview of behavioral and neural adaptations on multiple timescales. We consider how animals adjust their behaviors according to timescales of changes in the environment, and how these adjustments rely on integration of relevant information over multiple timescales in the brain. We also examine how hierarchy and heterogeneity of intrinsic timescales in neural response and gene expression throughout the brain could support such adaptive behavior. We conclude with remaining questions about the timescales of brain and behavior and how they can be studied.

Timescales of brain and behavior

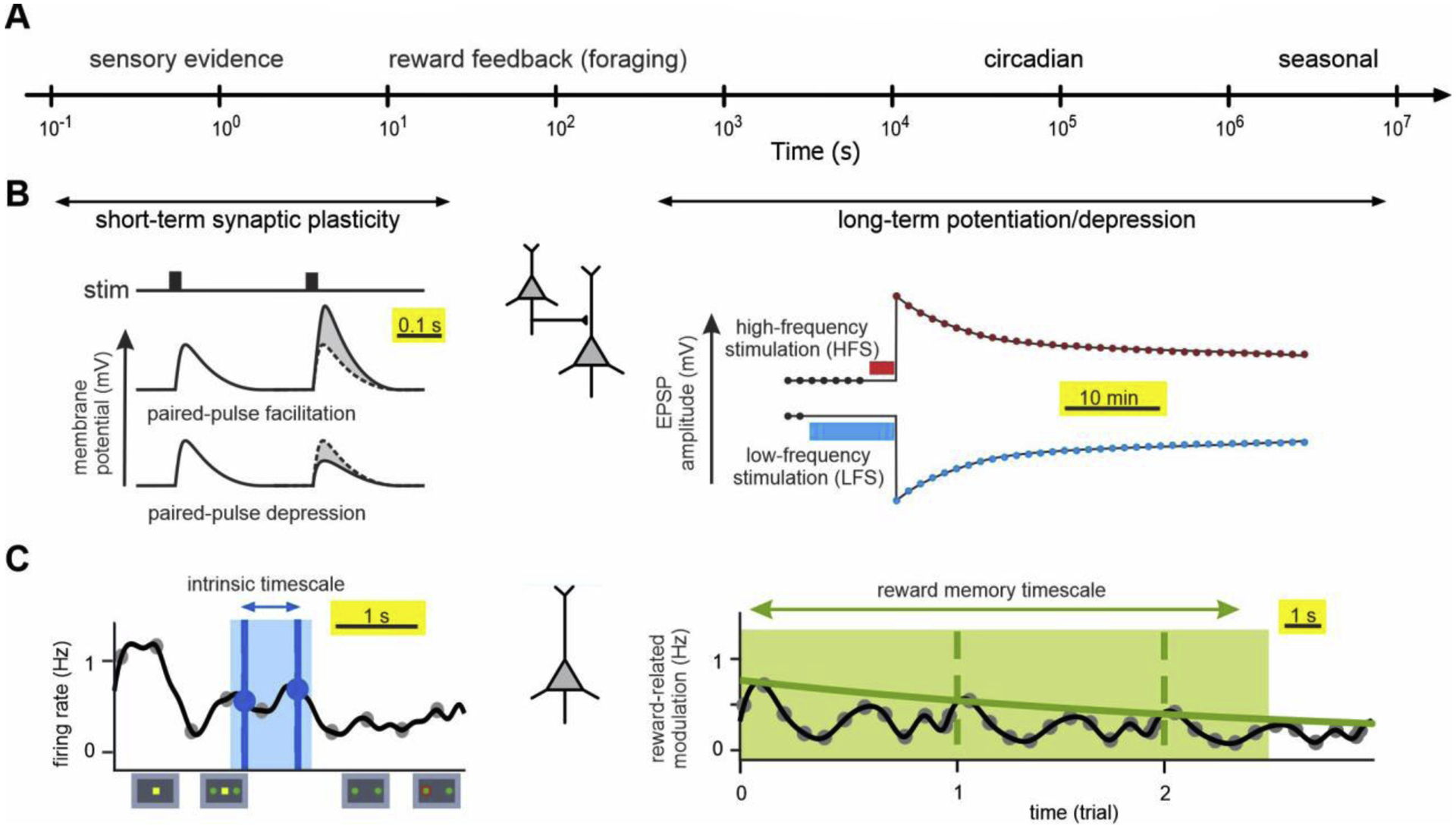

Behavioral timescales might be matched to the timescales of important changes in the animal’s environment using several different mechanisms (Figure 1). For example, information about regularities in the environment might be stored in synaptic connections between neurons [3]. Therefore, different types of changes in the synaptic weights across multiple timescales, such as short-term and long-term plasticity, might allow flexible behavioral adjustments to environmental changes [3]. In addition, neuronal activity often displays multiple concurrent timescales that might support behavioral changes at different timescales, although the range of experimentally measured timescales of neural activity is relatively small compared to the full range of possible behavioral timescales. For example, neurons in the primate prefrontal cortex display activity related to multiple timescales of reward integration in that neuronal activity modulated by a reward outcome decays at different rates across neurons [4]. Moreover, these neuronal timescales are correlated with the behavioral timescales for integration of reward feedback during decision making [5, 6]. Concurrent integration of reward feedback on multiple timescales is also manifest in the activity of neurons in the lateral habenula and dopamine neurons in the substantia nigra pars compacta [7], serotonergic neurons [8], and hemodynamic signals in the human anterior cingulate cortex [9].

Figure 1.

Timescales of environmental changes, synaptic plasticity, and neural response. (A) Changes in the environment across multiple timescales. (B) Different forms of synaptic plasticity at different timescales. (C) Different timescales of spiking activity in an example cortical neuron.

Timescale of behavioral changes associated with reward integration is often estimated using the learning rates of reinforcement learning models fit to choices. A single timescale of reward integration is parsimonious and computationally convenient, but often relies on the assumption that the learning rate can be optimized in a given environment. By contrast, multiple timescales of reward integration can be inferred from a better fit of choice behavior by models that incorporate multiple time averages of reward outcomes [10, 11, 12], reward-dependent modulations of value representation [13], or average reward prediction error [14]. Several well-known behavioral observations, such as spontaneous recovery and motor memory, also suggest that multiple memory traces with different timescales might be widespread in the brain [15].
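The link between a learning rate and a timescale of reward integration can be made concrete with a minimal sketch. It assumes a standard delta-rule update, V ← V + α(r − V), under which the weight of a past reward decays by a factor of (1 − α) per trial, so that the effective timescale is τ = −1/ln(1 − α) trials. The function names, the learning rates, and the reward sequence are illustrative assumptions rather than quantities taken from the cited studies.

```python
import math

def timescale_from_learning_rate(alpha):
    """Effective memory timescale (in trials) of a delta-rule update.

    Under V <- V + alpha * (r - V), the weight of a reward received k trials
    ago is proportional to (1 - alpha)**k = exp(-k / tau).
    """
    return -1.0 / math.log(1.0 - alpha)

def update_values(values, reward, alphas):
    """One trial of parallel delta-rule updates, one estimate per learning rate."""
    return [v + a * (reward - v) for v, a in zip(values, alphas)]

# Hypothetical learning rates spanning fast to slow integration.
alphas = [0.5, 0.1, 0.02]
values = [0.0, 0.0, 0.0]
rewards = [1, 1, 0, 1, 1, 1, 0, 1]  # made-up reward sequence
for r in rewards:
    values = update_values(values, r, alphas)

timescales = [timescale_from_learning_rate(a) for a in alphas]
for a, tau, v in zip(alphas, timescales, values):
    print(f"alpha={a:.2f}  timescale={tau:6.2f} trials  value estimate={v:.3f}")
```

Running several such estimators in parallel is one simple way to picture the multiple time averages of reward outcomes discussed above; how a choice model should weight them is left open here.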

Previous studies also demonstrated a substantial level of heterogeneity in the observed timescales within and across brain areas, suggesting that learning might proceed in parallel at multiple timescales [6]. This implies that the values of different options or actions would vary according to the timescales of different learning algorithms, and that some form of arbitration is therefore required to match the timescales of neural circuits to those of the environment for guiding behavior, similar to the mechanisms proposed for the arbitration between model-based and model-free reinforcement learning algorithms [16]. It remains an open question whether the brain adapts to changing environmental timescales through adaptation of neuronal timescales or through selection of an appropriate timescale.

Volatility and reward integration

Reward may not arrive due to the probabilistic nature of reward outcome or actual changes in the environment, but these two scenarios require very different responses from the animal, namely, no update or faster update, respectively. Actual changes in the environment could happen with different frequency or rate, often quantified as volatility [2,17,18]. In hierarchical Bayesian models, volatility can be equated with a parameter to measure the width of distribution for transition probability between different values of reward probability [17, 19]. Experimentally, volatility can be controlled in various ways, but has been mainly manipulated by changing the block length in probabilistic reversal learning tasks [2,17,20]. Although some studies have reported higher learning rates in a volatile environment [17, 21], other studies in monkeys [20] and humans [22] have not observed similar changes in learning rates. The reasons for this discrepancy should be investigated further.
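To illustrate why a higher learning rate might be expected in a more volatile environment, the following sketch computes the expected squared error of a single delta-rule estimate in a probabilistic reversal task and searches for the learning rate that minimizes it. It is a normative toy calculation under stated assumptions (reward probabilities of 0.8 and 0.2, block lengths of 20 and 80 trials, independent Bernoulli rewards), not a model fit, and it does not speak to whether animals actually adjust their learning rates in this way.

```python
def reversal_schedule(block_length, n_blocks, p_high=0.8, p_low=0.2):
    """Reward probability of one option on each trial; it flips every block."""
    schedule = []
    for b in range(n_blocks):
        p = p_high if b % 2 == 0 else p_low
        schedule.extend([p] * block_length)
    return schedule

def mean_squared_error(alpha, schedule):
    """Expected squared error of a delta-rule estimate, tracked analytically.

    mean and var are the mean and variance of the estimate V under
    V <- V + alpha * (r - V), with r ~ Bernoulli(p) drawn independently.
    """
    mean, var, total = 0.5, 0.0, 0.0
    for p in schedule:
        total += (mean - p) ** 2 + var  # error before this trial's outcome
        mean += alpha * (p - mean)
        var = (1 - alpha) ** 2 * var + alpha ** 2 * p * (1 - p)
    return total / len(schedule)

def best_learning_rate(block_length, n_trials=1600):
    """Grid search for the learning rate with the smallest expected error."""
    schedule = reversal_schedule(block_length, n_trials // block_length)
    grid = [i / 100 for i in range(1, 100)]
    return min(grid, key=lambda a: mean_squared_error(a, schedule))

best_volatile = best_learning_rate(block_length=20)  # frequent reversals
best_stable = best_learning_rate(block_length=80)    # infrequent reversals
print(f"best alpha, short blocks: {best_volatile:.2f}")
print(f"best alpha, long blocks:  {best_stable:.2f}")
```

In this toy setting the error-minimizing learning rate is larger when blocks are shorter, which is the pattern that a single adjustable learning rate would predict.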

Volatility and uncertainty can modulate learning and choice through means other than a change in the learning rate [12, 22, 23]. For example, a modeling study showed that reward-dependent metaplasticity can allow continuous adjustments in learning without an overall change in the learning rates [23]. In this model, reward integration is performed by transitions between states on multiple timescales, allowing the model to incorporate the history of reward feedback and thus volatility. In addition, more detailed examinations of learning and choice behavior in monkeys and humans have revealed that uncertainty results in fundamental changes in valuation and choice strategies, instead of a change in the overall timescale of reward integration [22]. These results suggest that integration of reward feedback might not happen on a single timescale adjusted by volatility. Instead, reward integration might happen on multiple timescales across many brain areas.

Adaptability-precision tradeoff

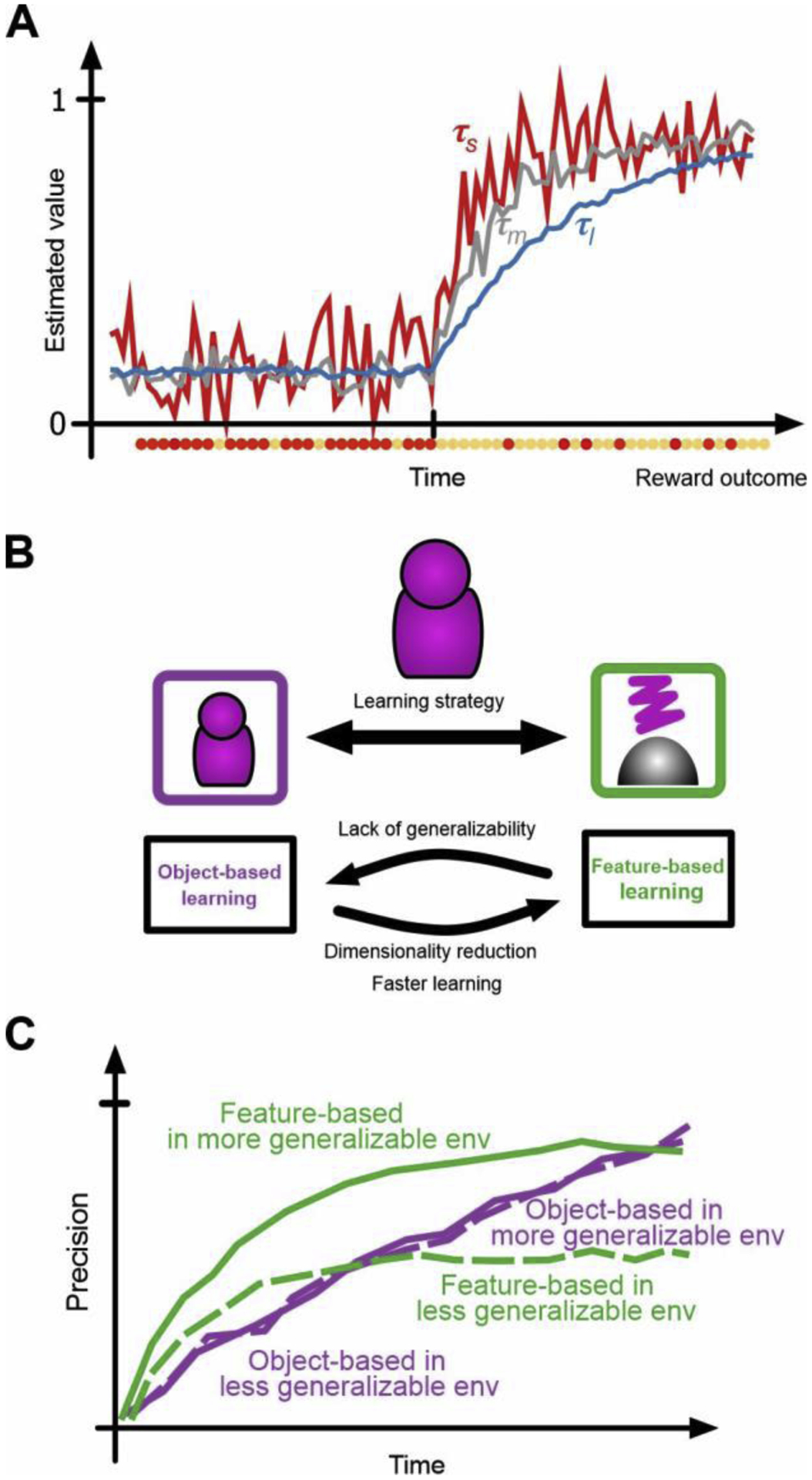

Regardless of the complexity of the learning mechanism, there is always a tradeoff between how quickly and how accurately new information can be acquired. This adaptability-precision tradeoff has important implications for timescales of reward integration. On the one hand, increasing the timescale of reward integration or, equivalently, reducing the learning rate can improve the precision in estimating the value of a given action or option, but also results in less adaptability (Figure 2A). On the other hand, shorter timescales of reward integration can improve adaptability but at the cost of precision.
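The tradeoff can be quantified for the simplest case, a delta-rule estimate of a Bernoulli reward probability. As a sketch under that assumption, the gap between the expected estimate and a new reward probability shrinks by a factor of (1 − α) per trial after a reversal, whereas the steady-state standard deviation of the estimate is sqrt(α/(2 − α) · p(1 − p)). The function names and the parameter values below are illustrative.

```python
import math

def trials_to_adapt(alpha):
    """Trials after a reversal until the expected estimate is halfway there.

    The remaining gap after t trials is (1 - alpha)**t of the initial gap,
    so this returns the smallest integer t with (1 - alpha)**t <= 0.5.
    """
    return math.ceil(math.log(0.5) / math.log(1.0 - alpha))

def steady_state_sd(alpha, p):
    """Standard deviation of the estimate once it has converged.

    For independent Bernoulli(p) rewards, the variance of the exponentially
    weighted average is alpha / (2 - alpha) * p * (1 - p).
    """
    return math.sqrt(alpha / (2.0 - alpha) * p * (1.0 - p))

for alpha in (0.3, 0.1, 0.03):
    print(f"alpha={alpha:.2f}  trials to adapt={trials_to_adapt(alpha):3d}  "
          f"steady-state SD={steady_state_sd(alpha, 0.8):.3f}")
```

A larger learning rate shortens the adaptation time but inflates the steady-state variability, and no single value of α improves both.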

Figure 2.

Adaptability-precision tradeoff in the integration of reward feedback. (A) Different timescales of reward integration allow different speeds for updating estimated values (adaptability) and different levels of accuracy for those estimates (precision). (B) Learning about multi-dimensional stimuli/options could be achieved by strategies with different levels of adaptability and precision. (C) Accuracy in estimated values over time using feature-based or object-based learning in two environments with different levels of generalizability. With more generalizability, it takes longer for the object-based strategy to surpass the level of precision achieved by the feature-based strategy.

It has been suggested that specific structures of metaplasticity [24] or the addition of a surprise-detection system [12] can partially mitigate the adaptability-precision tradeoff. However, neither mechanism can overcome the adaptability-precision tradeoff completely. In general, adaptability or precision must be prioritized at different points relative to a change in the environment. Therefore, the agent should choose adaptability or precision depending on internal or external factors such as hunger or threat, rather than trying to optimize a single learning rate. Integration of reward feedback on multiple timescales could allow additional flexibility in managing the adaptability-precision tradeoff because the brain could adjust its priority on different timescales at different time points.

In addition to integration over multiple timescales, uncertainty requires that reward feedback should be integrated based on different models of the environment. This becomes more important in the real world where stimuli and objects have numerous features or attributes, making it difficult to determine what reliably predicts reward outcomes. For example, one can learn reward values of individual features and combine this information to estimate values associated with each option [25, 26]. Such feature-based learning reduces precision, since individual features often do not predict reward consistently across many stimuli. Nevertheless, this strategy allows much faster learning because values of all features of the chosen option/stimulus can be updated after each feedback (Figure 2B, 2C). Indeed, recent studies showed that learning strategy depends on volatility, generalizability, and dimensionality of the environment [26, 27]. These findings suggest that timescales and strategies for integrating reward feedback are adjusted according to properties of the environment, and that this adjustment depends on how attention is deployed among many features or attributes of a choice option.
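The speed advantage of feature-based learning can be seen in a small sketch. It assumes stimuli defined by two features with three values each (nine objects), a delta-rule update, and an object value computed as the average of its two feature values; the averaging rule, the feature names, and the parameter values are assumptions made for illustration and are not taken from the cited studies.

```python
from itertools import product

COLORS = ["red", "green", "blue"]
SHAPES = ["circle", "square", "triangle"]
OBJECTS = list(product(COLORS, SHAPES))  # nine (color, shape) objects

def object_based_update(obj_values, chosen, reward, alpha):
    """Update only the value of the chosen object."""
    new = dict(obj_values)
    new[chosen] += alpha * (reward - new[chosen])
    return new

def feature_based_update(feat_values, chosen, reward, alpha):
    """Update the value of every feature of the chosen object."""
    new = dict(feat_values)
    for feature in chosen:
        new[feature] += alpha * (reward - new[feature])
    return new

def feature_based_estimates(feat_values):
    """Object values as the average of their feature values (an assumption)."""
    return {(c, s): 0.5 * (feat_values[c] + feat_values[s]) for c, s in OBJECTS}

obj_values = {obj: 0.5 for obj in OBJECTS}
feat_values = {f: 0.5 for f in COLORS + SHAPES}
chosen, reward, alpha = ("red", "circle"), 1.0, 0.2

obj_after = object_based_update(obj_values, chosen, reward, alpha)
est_before = feature_based_estimates(feat_values)
est_after = feature_based_estimates(
    feature_based_update(feat_values, chosen, reward, alpha))

# Count how many object-value estimates moved after a single feedback.
n_changed_object = sum(obj_after[o] != obj_values[o] for o in OBJECTS)
n_changed_feature = sum(est_after[o] != est_before[o] for o in OBJECTS)
print(n_changed_object, n_changed_feature)
```

A single outcome changes one estimate under the object-based rule but changes the estimates of every object that shares a feature with the chosen one under the feature-based rule. The cost is that those generalized estimates are accurate only to the extent that feature values predict object values.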

Perceptual decision making

Similar to reward learning, perceptual decision making is commonly postulated to rely on the integration of sensory signals over time. Although earlier models assumed perfect integration and hence an infinitely long timescale, perceptual decision making in dynamic environments requires the timescale of evidence integration to be flexibly adjusted, not only by reflecting the timescale of the change in sensory signals itself [28], but also by exploiting multiple sources of information other than the sensory signal.

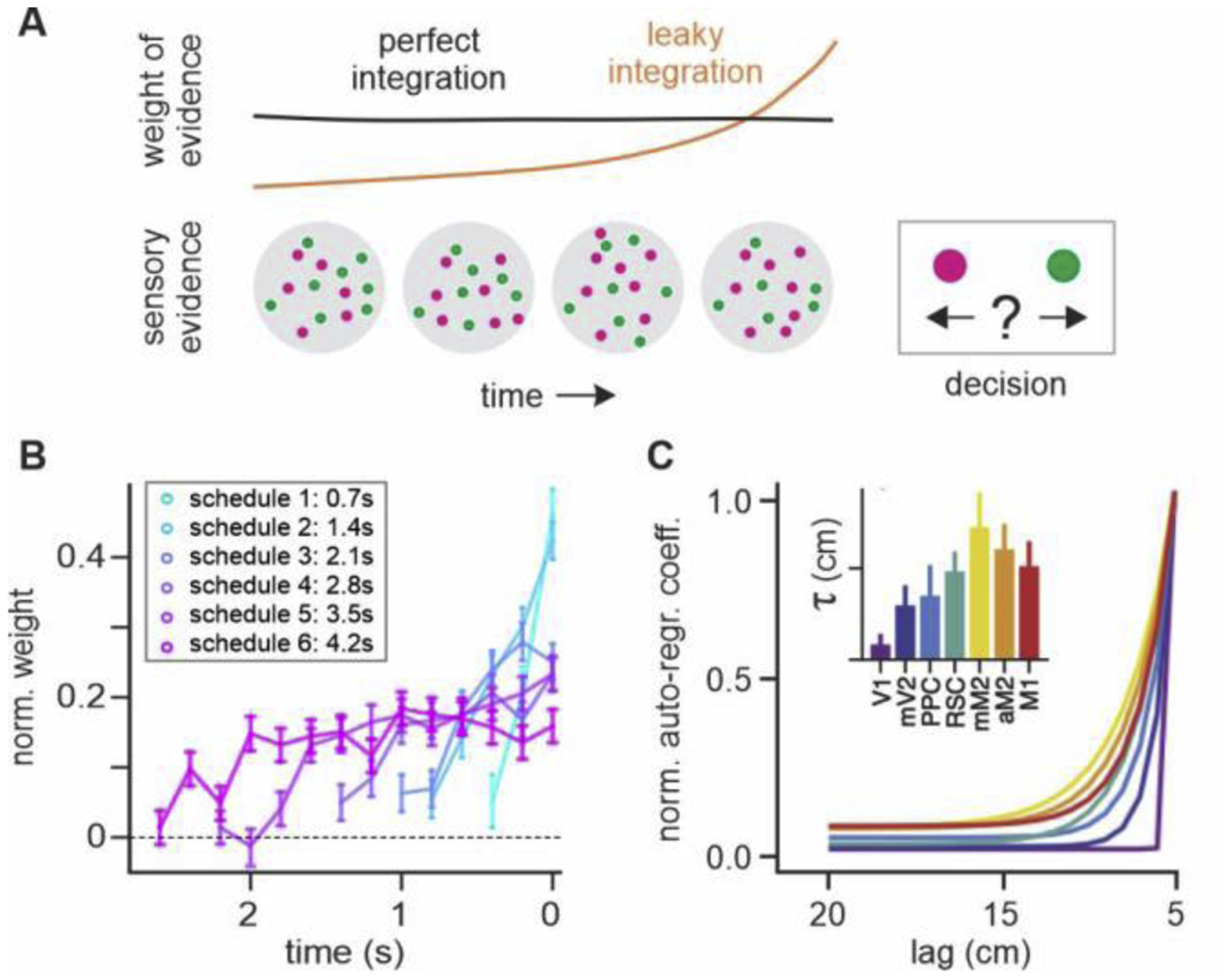

The timescale of evidence integration can be estimated by examining how the weight of evidence on decision varies with the time lag between evidence and decision (Figure 3A). For time-varying stimuli, the evidence presented close to the decision (late evidence) tends to have a stronger influence than earlier evidence, consistent with leaky, rather than perfect, integration (Figure 3B) [29,30,31,32]. Although leaky integration can limit the accuracy of decisions by using partial evidence, it enables flexible and strategic modulation of the integration process. For example, the onset of integration can be delayed to align it with the timing of relevant evidence by considering internal processing delays and the temporal structure of the dynamic stimulus [31, 33]. Computationally, this can be achieved by time-varying urgency [31]. In addition, the integration gain can be dynamically modulated by the temporal statistics of the evidence, signal duration, and task demands [34,35,36]. Although available descriptive models can account for sensory integration and its interaction with other choice processes such as urgency [31], it remains unknown whether timescales associated with these processes can be distinguished reliably.
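The recency effect expected from leaky integration can be sketched with a discrete-time integrator, x ← (1 − λ)x + e, where λ is a leak parameter and e is the momentary evidence. The weight of each evidence sample on the final decision variable is obtained here by feeding a unit impulse at each time step. The integrator form, the function names, and the leak values are illustrative assumptions and do not correspond to a specific model from the cited studies.

```python
def integrate(evidence, leak):
    """Final decision variable of a discrete leaky integrator."""
    x = 0.0
    for e in evidence:
        x = (1.0 - leak) * x + e
    return x

def temporal_weights(n_samples, leak):
    """Weight of the evidence at each time step on the final decision variable.

    Each weight is the response to a unit impulse at that step, which equals
    (1 - leak) ** (number of steps between the sample and the decision).
    """
    weights = []
    for t in range(n_samples):
        impulse = [0.0] * n_samples
        impulse[t] = 1.0
        weights.append(integrate(impulse, leak))
    return weights

perfect = temporal_weights(10, leak=0.0)  # flat kernel: every sample counts equally
leaky = temporal_weights(10, leak=0.2)    # rising kernel: late evidence dominates
print([round(w, 3) for w in perfect])
print([round(w, 3) for w in leaky])
```

With no leak the kernel is flat, corresponding to an infinitely long timescale, whereas a nonzero leak produces weights that grow toward the time of decision.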

Figure 3.

Behavioral and neural timescales during perceptual decision making. (A) Temporal changes in the weight of sensory evidence that reflect different timescales of evidence integration. (B) Recency effect in decision weight may reflect imperfect integration in humans [30]. (C) Heterogeneous intrinsic timescales in rodent dorsal cortex during evidence integration [39].

Despite behavioral evidence for flexible evidence integration, neural mechanisms for adjusting the timescale of neural integration remain poorly understood. Nevertheless, similar to the timescales of reward memory signals observed in the primate cortex, integration of sensory evidence might be performed across multiple timescales in parallel. For example, heterogeneous timescales for evidence integration were reported within and across cortical regions [37,38]. A recent study has also found a hierarchy of intrinsic timescales in the rodent dorsal cortex during a perceptual decision-making task (Figure 3C) [39].

Hierarchy of neuronal timescales

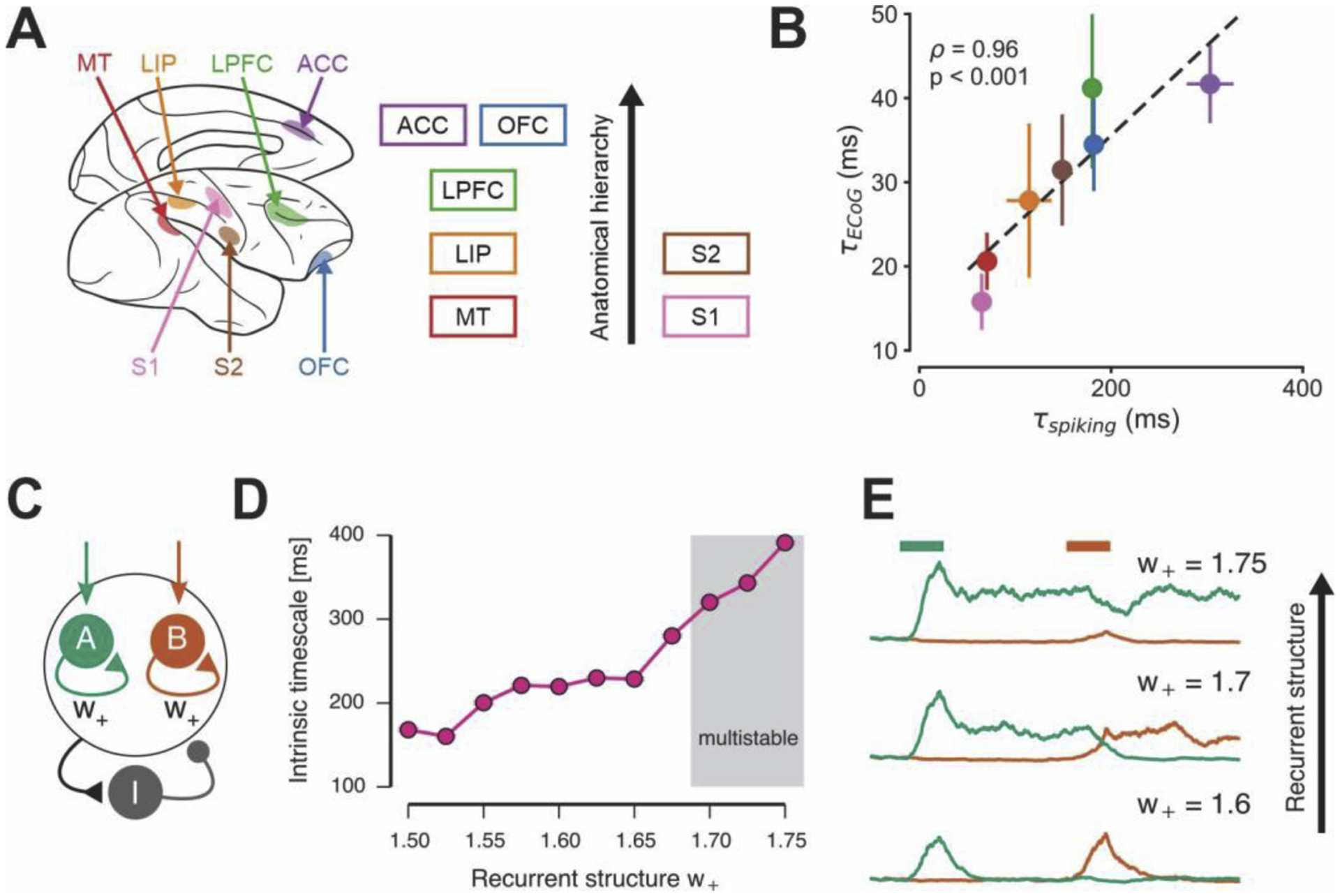

The possibility that the timescales of cognitive computations might be adjusted strategically according to statistics of the environment and task demands raises the question of how a diversity of timescales might be generated in the brain. One possibility is that different brain regions exhibit distinct characteristic timescales in their intrinsic activity, which in turn can shape the functional specialization of regions in terms of reward and sensory integration. In support of this possibility, a growing literature has found that timescales of neuronal spiking activity, related to intrinsic dynamics and cognition, vary across cortical areas. For instance, the intrinsic timescale of spiking fluctuations increases across the cortical hierarchy in the macaque brain, from faster in sensory areas to slower in association areas [4,6]. A similar hierarchical pattern of increasing timescales from sensory to association areas was also found in electrocorticographic recordings in monkeys (Figure 4A, 4B) [40]. These findings are also in line with timescale hierarchies in human cortex measured by various methods including electrocorticography [40,41], magnetoencephalography [42], and functional MRI [43].
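Intrinsic timescales of this kind are commonly summarized by how quickly the autocorrelation of ongoing activity decays with time lag. The sketch below illustrates the idea on surrogate data: it generates a first-order autoregressive series with a known timescale and recovers that timescale from a log-linear fit to the autocorrelation. The surrogate process, the fitting shortcut, and all names and parameter values are assumptions chosen for illustration; studies of spiking activity typically fit an exponential decay to the autocorrelation of binned spike counts.

```python
import math
import random

def simulate_ar1(tau, n_samples, rng):
    """Surrogate activity whose autocorrelation decays as exp(-lag / tau)."""
    phi = math.exp(-1.0 / tau)
    noise_sd = math.sqrt(1.0 - phi ** 2)
    x, series = 0.0, []
    for _ in range(n_samples):
        x = phi * x + noise_sd * rng.gauss(0.0, 1.0)
        series.append(x)
    return series

def autocorrelation(series, lag):
    """Sample autocorrelation of the series at the given lag."""
    n = len(series)
    mean = sum(series) / n
    var = sum((v - mean) ** 2 for v in series) / n
    cov = sum((series[i] - mean) * (series[i + lag] - mean)
              for i in range(n - lag)) / n
    return cov / var

def estimate_timescale(series, max_lag=5):
    """Least-squares fit of ln(acf(k)) = -k / tau through the origin."""
    num, den = 0.0, 0.0
    for k in range(1, max_lag + 1):
        r = autocorrelation(series, k)
        if r > 0:  # the logarithm is undefined for non-positive values
            num += k * math.log(r)
            den += k * k
    return -den / num

rng = random.Random(0)
fast = estimate_timescale(simulate_ar1(tau=2.0, n_samples=20000, rng=rng))
slow = estimate_timescale(simulate_ar1(tau=10.0, n_samples=20000, rng=rng))
print(f"estimated timescales (in samples): fast={fast:.2f}, slow={slow:.2f}")
```

Applied to each recorded area, an estimate of this type yields one timescale per area (or per neuron), which can then be compared against the area's position in the cortical hierarchy.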

Figure 4.

Hierarchical variation in intrinsic timescales across primate cortex. (A) Intrinsic neuronal timescales in spike-train recordings from multiple regions of the macaque cortex, from sensory to association areas [4]. (B) Intrinsic timescales of electrocorticographic recordings in the monkey cortex follow the same hierarchy as those of spiking activity [40]. (C) A spiking-neuron model of an association cortical circuit that contains sub-populations of pyramidal neurons with strong recurrent excitatory connections, parameterized by w+ [45]. (D) and (E) Intrinsic timescales of spiking fluctuations in model pyramidal neurons (D) and strength of persistent activity related to working memory (E) increase with the strength of recurrent structure.

Regional specialization in intrinsic timescales can arise from regional differences in the strength of recurrent connectivity within cortical circuits, which in turn can support functional specialization for cognitive processes such as working memory and decision making [44]. This circuit mechanism can be demonstrated in a canonical association cortical model that performs working memory and decision-making functions (Figure 4C) [45]. As recurrent structure is strengthened, intrinsic timescales of spiking fluctuations increase, as seen in empirical data (Figure 4D). Strength of recurrent structure also controls the working-memory computations in the circuit, as it transitions the circuit among different regimes of persistent activity (Figure 4E). This spiking circuit model provides a mechanistic hypothesis for variation in intrinsic timescales. Different intrinsic timescales reflect differences in local cortical microcircuitry, leading to functional differences across areas, such as their capacity for persistent activity.
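The basic intuition that stronger recurrence lengthens the timescale can be conveyed with a much simpler system than the spiking circuit model. As a sketch, consider a single linear rate unit with time constant τ and recurrent self-excitation of strength w, τ dx/dt = −x + wx, whose activity decays with an effective time constant τ/(1 − w) for w < 1. This is a linear caricature: the weight w is a hypothetical dimensionless parameter and should not be equated with w+ in the spiking model, and the value τ = 20 ms is assumed for illustration.

```python
import math

def effective_timescale(tau, w):
    """Decay time constant of tau * dx/dt = -x + w * x, valid for w < 1."""
    if w >= 1.0:
        raise ValueError("activity does not decay when w >= 1")
    return tau / (1.0 - w)

def simulated_decay_time(tau, w, dt=0.01):
    """Time for activity to fall from 1 to 1/e, by forward-Euler integration."""
    x, t = 1.0, 0.0
    target = math.exp(-1.0)
    while x > target:
        x += dt * (-(1.0 - w) * x) / tau
        t += dt
    return t

tau = 20.0  # hypothetical single-unit time constant, in ms
for w in (0.0, 0.5, 0.9):
    print(f"w={w:.1f}  analytic={effective_timescale(tau, w):6.1f} ms  "
          f"simulated={simulated_decay_time(tau, w):6.1f} ms")
```

In this reduced description the timescale grows without bound as w approaches 1, which loosely parallels the transition toward persistent activity in circuits with strong recurrent structure.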

In line with this theoretical proposal, studies have characterized intrinsic timescales at the single-neuron level, and found that longer timescales are associated with neurons that support persistent delay activity in working memory tasks [46, 47]. Interestingly, during a decision-making task, single-neuron intrinsic timescales were not correlated with timescales of memory related to rewards or actions [6]. Thus, neuronal and circuit mechanisms for dissociation of intrinsic and functional timescales remain open questions.

Another important neural circuit property that varies across the cortical hierarchy is the relative amount of intracortical myelination, in that sensory areas are more highly myelinated than association areas. In structural MRI, the T1w/T2w map follows the cortical topography of intracortical myelination [48]. This measure also correlates with cortical hierarchical levels in monkeys and with the dominant topographical pattern of transcriptomic variation in humans that reflects regional specialization of cellular and synaptic processes [49]. One intriguing possibility is that this hierarchical gradient in myelination may contribute to a hierarchical gradient in synaptic plasticity for learning. High myelination in sensory cortical areas means less axonal area available for structural plasticity to form new synaptic connections, whereas the relatively unmyelinated axons in association areas can more readily form new synaptic connections in support of learning and memory.

Conclusion

The diversity of timescales related to cognitive functions across cortical areas raises the question of what neural mechanisms may contribute to these patterns and how signals generated by these diverse timescales are combined to determine choice behavior. Areal specialization for timescales of learning is potentially shaped by gradients in the densities of receptors for neuromodulators thought to play a role in learning and decision making, including dopamine and serotonin [49]. Linking neuronal and regional differences in synaptic and neuromodulatory physiology to functional differences in timescales related to cognitive processes is crucial for understanding neural mechanisms underlying the generation of diverse timescales [50].

Recent findings on the independence of timescales and selectivity to task-relevant signals within individual neurons in a given area suggest that multiple mechanisms must underlie the generation of these timescales [6]. If representations of learning and choice behavior are distributed, independence among timescales in different signals can generate less correlated signals that allow higher dimensional representations and easier decoding of signals from relevant ensembles of neurons. Nonetheless, how such distributed representations can be adjusted according to the reliability of different signals remains unknown.

Future experiments that examine learning behavior under conditions with different timescales of changes in reward environment can be used to address whether behavioral adjustments emerge from adaptation of single or multiple timescales to the environment, or from different arbitrations between systems with different timescales. This requires the development of new methods for estimation of multiple timescales in behavior to accompany multiple existing methods for estimation of neural timescales.

Acknowledgements

This work was supported by the National Institutes of Health (Grants R01 DA047870 to A.S.; R01 MH112746 to J.D.M.; R01 NS118463 to H.S.; R01 MH108629 and R01 MH118925 to D.L.) and the National Science Foundation (CAREER Award BCS1943767 to A.S.).

Footnotes

Conflict of interest

There is no conflict of interest.

References

- [1]. Lee D: Birth of Intelligence. Oxford University Press; 2020.

- [2]. Soltani A, Izquierdo A: Adaptive learning under expected and unexpected uncertainty. Nat Rev Neurosci 2019, 20:635–44.

- [3]. Magee JC, Grienberger C: Synaptic plasticity forms and functions. Annu Rev Neurosci 2020, 43:95–117.

- [4]. Murray JD, Bernacchia A, Freedman DJ, Romo R, Wallis JD, Cai X, Padoa-Schioppa C, Pasternak T, Seo H, Lee D, et al.: A hierarchy of intrinsic timescales across primate cortex. Nat Neurosci 2014, 17:1661–1663.

- [5]. Bernacchia A, Seo H, Lee D, Wang XJ: A reservoir of time constants for memory traces in cortical neurons. Nat Neurosci 2011, 14:366–372.

- [6]. Spitmaan MM, Seo H, Lee D, Soltani A: Multiple timescales of neural dynamics and integration of task-relevant signals across cortex. Proc Natl Acad Sci USA 2020, 117:22522–22531. •• This paper demonstrates four parallel hierarchies of timescales in the activity of individual neurons as well as correlation between timescales of reward memory in neural activity and behavior.

- [7]. Bromberg-Martin ES, Matsumoto M, Hikosaka O: Distinct tonic and phasic anticipatory activity in lateral habenula and dopamine neurons. Neuron 2010, 67:144–55.

- [8]. Cohen JY, Amoroso MW, Uchida N: Serotonergic neurons signal reward and punishment on multiple timescales. eLife 2015, 4:e06346.

- [9]. Meder D, Kolling N, Verhagen L, Wittmann MK, Scholl J, Madsen KH, Hulme OJ, Behrens TE, Rushworth MF: Simultaneous representation of a spectrum of dynamically changing value estimates during decision making. Nat Commun 2017, 8:1942. •• This study shows that BOLD response in dACC reflects integration of reward on multiple timescales and that this spectrum of timescales is influenced by the volatility of the environment.

- [10]. Soltani A, Lee D, Wang XJ: Neural mechanism for stochastic behaviour during a competitive game. Neural Netw 2006, 19:1075–1090.

- [11]. Wittmann MK, Kolling N, Akaishi R, Chau BK, Brown JW, Nelissen N, Rushworth MF: Predictive decision making driven by multiple time-linked reward representations in the anterior cingulate cortex. Nat Commun 2016, 7:12327.

- [12]. Iigaya K: Adaptive learning and decision-making under uncertainty by metaplastic synapses guided by a surprise detection system. eLife 2016, 5:e18073.

- [13]. Zimmermann J, Glimcher PW, Louie K: Multiple timescales of normalized value coding underlie adaptive choice behavior. Nat Commun 2018, 9:3206. •• This study shows that increased sensitivity of choice due to lower variance in the reward environment can be explained by adaptation of value representation over multiple timescales.

- [14]. Grossman CD, Bari BA, Cohen JY: Serotonin neurons modulate learning rate through uncertainty. bioRxiv 2020.10.24.353508.

- [15]. Pekny SE, Criscimagna-Hemminger SE, Shadmehr R: Protection and expression of human motor memories. J Neurosci 2011, 31:13829–13839.

- [16]. Lee SW, Shimojo S, O’Doherty JP: Neural computations underlying arbitration between model-based and model-free learning. Neuron 2014, 81:687–99.

- [17]. Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS: Learning the value of information in an uncertain world. Nat Neurosci 2007, 10:1214–1221.

- [18]. Massi B, Donahue CH, Lee D: Volatility facilitates value updating in the prefrontal cortex. Neuron 2018, 99:598–608.

- [19]. Mathys C, Daunizeau J, Friston KJ, Stephan KE: A Bayesian foundation for individual learning under uncertainty. Front Hum Neurosci 2011, 5:39.

- [20]. Donahue CH, Lee D: Dynamic routing of task-relevant signals for decision making in dorsolateral prefrontal cortex. Nat Neurosci 2015, 18:295–301.

- [21]. Blain B, Rutledge RB: Momentary subjective well-being depends on learning and not reward. eLife 2020, 9:e57977.

- [22]. Farashahi S, Donahue CH, Hayden BY, Lee D, Soltani A: Flexible combination of reward information across primates. Nat Hum Behav 2019, 3:1215–24.

- [23]. Farashahi S, Donahue CH, Khorsand P, Seo H, Lee D, Soltani A: Metaplasticity as a neural substrate for adaptive learning and choice under uncertainty. Neuron 2017, 94:401–414.

- [24]. Khorsand P, Soltani A: Optimal structure of metaplasticity for adaptive learning. PLoS Comput Biol 2017, 13:e1005630.

- [25]. Niv Y, Daniel R, Geana A, Gershman SJ, Leong YC, Radulescu A, Wilson RC: Reinforcement learning in multidimensional environments relies on attention mechanisms. J Neurosci 2015, 35:8145–57.

- [26]. Farashahi S, Rowe K, Aslami Z, Lee D, Soltani A: Feature-based learning improves adaptability without compromising precision. Nat Commun 2017, 8:1–6.

- [27]. Farashahi S, Xu J, Wu SW, Soltani A: Learning arbitrary stimulus-reward associations for naturalistic stimuli involves transition from learning about features to learning about objects. Cognition 2020, 205:104425.

- [28]. Buracas GT, Zador AM, DeWeese MR, Albright TD: Efficient discrimination of temporal patterns by motion-sensitive neurons in primate visual cortex. Neuron 1998, 20:959–69.

- [29]. Tsetsos K, Pfeffer T, Jentgens P, Donner TH: Action planning and the timescale of evidence accumulation. PLoS One 2015, 10:e0129473.

- [30]. Farashahi S, Ting CC, Kao CH, Wu SW, Soltani A: Dynamic combination of sensory and reward information under time pressure. PLoS Comput Biol 2018, 14:e1006070. •• This study demonstrates how dynamics of evidence integration can be modulated by non-sensory factors such as time pressure and payoffs.

- [31]. Shinn M, Ehrlich DB, Lee D, Murray JD, Seo H: Confluence of timing and reward biases in perceptual decision-making dynamics. J Neurosci 2020, 40:7326–7342. •• This study provides a general computational framework that can be used to analyze and model temporal dynamics and timescales of evidence integration in realistic perceptual decision-making tasks.

- [32]. Rakhshan M, Lee V, Chu E, Harris L, Laiks L, Khorsand P, Soltani A: Influence of expected reward on temporal order judgement. J Cogn Neurosci 2020, 32:674–690.

- [33]. Teichert T, Ferrera VP, Grinband J: Humans optimize decision-making by delaying decision onset. PLoS One 2014, 9:e89638.

- [34]. Levi AJ, Yates JL, Huk AC, Katz LN: Strategic and dynamic temporal weighting for perceptual decisions in humans and macaques. eNeuro 2018, 5:e0169–18.2018.

- [35]. Bronfman ZZ, Brezis N, Usher M: Non-monotonic temporal-weighting indicates a dynamically modulated evidence-integration mechanism. PLoS Comput Biol 2016, 12:e1004667.

- [36]. Ganupuru P, Goldring AB, Harun R, Hanks TD: Flexibility of timescales of evidence evaluation for decision making. Curr Biol 2019, 29:2091–2097.

- [37]. Hanks TD, Kopec CD, Brunton BW, Duan CA, Erlich JC, Brody CD: Distinct relationships of parietal and prefrontal cortices to evidence accumulation. Nature 2015, 520:220–223.

- [38]. Scott BB, Constantinople CM, Akrami A, Hanks TD, Brody CD, Tank DW: Fronto-parietal cortical circuits encode accumulated evidence with a diversity of timescales. Neuron 2017, 95:385–398.

- [39]. Pinto L, Tank DW, Brody CD: Multiple timescales of sensory-evidence accumulation across the dorsal cortex. bioRxiv 2020.12.28.424600.

- [40]. Gao R, van den Brink RL, Pfeffer T, Voytek B: Neuronal timescales are functionally dynamic and shaped by cortical microarchitecture. eLife 2020, 9:e61277. •• This study shows that intrinsic timescales in the human cortex, measured by intracranial recordings, follow a hierarchical gradient which is consistent with the results from single-neuron recordings in monkey cortex.

- [41]. Honey CJ, Thesen T, Donner TH, Silbert LJ, Carlson CE, Devinsky O, Doyle WK, Rubin N, Heeger DJ, Hasson U: Slow cortical dynamics and the accumulation of information over long timescales. Neuron 2012, 76:423–434.

- [42]. Demirtaş M, Burt JB, Helmer M, Ji JL, Adkinson BD, Glasser MF, Van Essen DC, Sotiropoulos SN, Anticevic A, Murray JD: Hierarchical Heterogeneity across Human Cortex Shapes Large-Scale Neural Dynamics. Neuron 2019, 101:1181–1194.

- [43]. Raut RV, Snyder AZ, Raichle ME: Hierarchical dynamics as a macroscopic organizing principle of the human brain. Proc Natl Acad Sci USA 2020, 117:20890–20897.

- [44]. Murray JD, Jaramillo J, Wang XJ: Working Memory and Decision-Making in a Frontoparietal Circuit Model. J Neurosci 2017, 37:12167–12186.

- [45]. Wang XJ: Probabilistic decision making by slow reverberation in cortical circuits. Neuron 2002, 36:955–968.

- [46]. Cavanagh SE, Towers JP, Wallis JD, Hunt LT, Kennerley SW: Reconciling persistent and dynamic hypotheses of working memory coding in prefrontal cortex. Nat Commun 2018, 9:3498.

- [47]. Wasmuht DF, Spaak E, Buschman TJ, Miller EK, Stokes MG: Intrinsic neuronal dynamics predict distinct functional roles during working memory. Nat Commun 2018, 9:3499.

- [48]. Glasser MF, Goyal MS, Preuss TM, Raichle ME, Van Essen DC: Trends and properties of human cerebral cortex: correlations with cortical myelin content. NeuroImage 2014, 93 Pt 2:165–75.

- [49]. Burt JB, Demirtaş M, Eckner WJ, Navejar NM, Ji JL, Martin WJ, Bernacchia A, Anticevic A, Murray JD: Hierarchy of transcriptomic specialization across human cortex captured by structural neuroimaging topography. Nat Neurosci 2018, 21:1251–1259.

- [50]. Sun L, Tang J, Liljenbäck H, Honkaniemi A, Virta J, Isojärvi J, Karjalainen T, Kantonen T, Nuutila P, Hietala J, et al.: Seasonal variation in the brain μ-opioid receptor availability. J Neurosci 2021, 41:1265–1273.