Abstract

To date, retinal implants are the only available treatment for blind individuals with retinal degenerations such as retinitis pigmentosa. Argus II is the only visual implant with FDA approval, with more than 300 users worldwide. Argus II stimulation is based on a grayscale image coming from a head-mounted visible-light camera. Normally, the 11° × 19° field of view of the Argus II user is full of objects that may elicit similar phosphenes. The prosthesis cannot meaningfully convey so much visual information, and the percept is reduced to an ambiguous impression of light. This study is aimed at investigating the efficacy of simplifying the video input in real-time using a heat-sensitive camera. Data were acquired from four Argus II users in 5 stationary tasks with either hot objects or human targets as stimuli. All tasks were of m-alternative forced choice design where precisely one of the m ≥ 2 response alternatives was defined to be “correct” by the experimenter. To compare performance with heat-sensitive and normal cameras across all tasks, regardless of m, we used an extension of signal detection theory to latent variables, estimating person ability and item difficulty in d′ units. Results demonstrate that subject performance was significantly better across all tasks with the thermal camera compared to the regular Argus II camera. The future addition of thermal imaging to devices with very poor spatial resolution may have significant real-life benefits for orientation, personal safety, and social interactions, thereby improving quality of life.

Keywords: Retinal implant, thermal camera, m-alternative forced choice, activities of daily living, performance measures, psychophysics

Introduction

Retinal prosthetic implants are targeted at patients with end-stage outer retinal degenerative diseases such as retinitis pigmentosa (RP) and age-related macular degeneration. These diseases primarily affect photoreceptors and the retinal pigment epithelium, and only indirectly the bipolar cells and ganglion cells. More specifically, due to the loss of input from photoreceptors in RP, the other layers of the retina change in such a way that at the end stage of the disease the affected retina is no longer recognizable as an orderly layered structure of parallel processing units (Jones et al., 2003; Marc et al., 2007). Changes in the retina include new connections between bipolar cells, which cause signals to spread horizontally through the retina. This particularly limits the resolution achievable with electrical stimulation (Dagnelie, 2012; Marc et al., 2007). To date, there is no cure that prevents or halts the degenerative process, which progresses slowly over decades. Yet even at the end stage of these diseases, surviving ganglion cells can send signals to the brain through the optic nerve in response to electrical stimulation (Humayun et al., 1996; Santos et al., 1997).

To deliver the most effective stimulation to the remaining ganglion cells, one challenge is to get close to these cells. Surface electrodes have been shown to be safe and to provide visual perception, but if the implant is not perfectly apposed to the retina, the electrodes may remain far from the ganglion cell layer and thus require a large surface area to stimulate neurons sufficiently without exceeding safe charge density limits (De Balthasar et al., 2008). To date, the Alpha AMS by Retina Implant AG in Germany (Stingl et al., 2017; no longer in production) and the Argus II by Second Sight Medical Products in CA, USA (Cruz et al., 2016; no longer in production) are the only retinal implants that have been approved for clinical use, but they offer only limited functional vision to blind subjects, best described as “moving shadows.”

The Argus II consists of a 6 × 10 array of round, platinum-coated electrodes 200 μm in diameter that stimulate the surviving ganglion cells in the retina; the array covers 11° × 19°, or about 22° diagonally, of the visual field. Stimulation is based on imagery from a head-mounted camera that is fed into a video processing unit (VPU). The VPU converts the image into grayscale, reduces the resolution to that of the electrode array, and generates the signals to stimulate the 60 electrodes through a wireless telemetry link (Stronks & Dagnelie, 2014).
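The resolution-reduction step can be pictured as block averaging: each electrode receives the mean gray level of the image region it covers. The sketch below is a minimal illustration under that assumption only; the actual VPU algorithm is not described in this paper, and the function name `downsample_to_array` and the frame size in the usage example are hypothetical.

```python
# Hypothetical sketch of reducing a grayscale frame to the 6 x 10 electrode grid.
# Assumption: each electrode's value is the plain mean of its image block;
# the real Argus II VPU processing is not specified in this paper.

def downsample_to_array(frame, rows=6, cols=10):
    """Average a 2-D list of gray levels into a rows x cols grid.

    The frame must have at least `rows` pixel rows and `cols` pixel columns
    so that every block contains at least one pixel.
    """
    height, width = len(frame), len(frame[0])
    grid = []
    for r in range(rows):
        # Pixel rows covered by electrode row r
        y0, y1 = r * height // rows, (r + 1) * height // rows
        grid_row = []
        for c in range(cols):
            # Pixel columns covered by electrode column c
            x0, x1 = c * width // cols, (c + 1) * width // cols
            block = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            grid_row.append(sum(block) / len(block))
        grid.append(grid_row)
    return grid


# Usage: a hypothetical 60 x 100 frame, bright (255) on the left half, dark (0) on the right
frame = [[255 if x < 50 else 0 for x in range(100)] for _ in range(60)]
grid = downsample_to_array(frame)
# Electrode columns 0-4 average to 255.0 and columns 5-9 to 0.0
```

Any scene detail smaller than one block is averaged away, which is one way to see why cluttered scenes collapse into an ambiguous impression of light at this resolution.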

The Argus II has been implanted in more than 300 blind individuals worldwide. It has been shown to improve performance for locating and identifying the direction of motion of an object, and is helpful for orientation and mobility in high contrast settings in laboratory experiments (Dagnelie, 2006; Dagnelie et al., 2017; Humayun, de Juan, & Dagnelie, 2016; Humayun et al., 2009, 2003; Stronks & Dagnelie, 2014). However, under normal circumstances, the visual field of an Argus II wearer may be full of people and objects at multiple distances. The complex scene, low resolution, and spread of elicited activity through the retina combine to yield a poorly structured blurry image.

In real life, Argus II users will want to find and communicate with people, detect and avoid obstacles, and manipulate objects. With the regular Argus II camera, stimulation is based on light intensity throughout the visible image. Because of the charge spread mentioned above, in low-contrast or low-brightness situations the target may blur into the background of the visible-light camera image, requiring Argus II users to spend more time and energy to perform even a simple task. In many situations, it may not be possible to compensate by using other senses, such as hearing, or touch by hand or cane. For example, handling a hot pot on a stove while relying on touch alone requires great caution and may result in a dangerous accident. When looking for someone to ask for assistance in a public place, or for an empty seat in a crowded auditorium, one needs to distinguish people from inanimate objects. Thus, the use of other senses alone may not eliminate safety hazards or improve performance. A better solution may be to simplify the input image before presenting it to the user. The approach investigated in this study was to use a thermal imager as an alternative to the normal Argus II visible-light camera. A thermal camera effectively filters out cooler objects and presents only warmer objects in a visual scene, regardless of scene illumination and target contrast.

Thermography or thermal imaging has been successfully used in a wide range of fields that require safety monitoring; these include applications in firefighting to detect smoke and heat (Cope, Arias, Williams, Bahm, & Ngwazini, 2019), road safety in vehicles with an autonomous operation mode to detect pedestrians (Miethig, Liu, Habibi, & Mohrenschildt, 2019), military and defense for target recognition (Stout, Madineni, Tremblay, & Tane, 2019), and even disease control by screening people at airports or hospitals for elevated body temperatures related to epidemics such as Covid-19, SARS and bird flu (Lee et al., 2020; Ng, Kaw, & Chang, 2004). Moreover, thermal imaging has found new uses in medical imaging such as corneal thermography to assess corneal integrity in the study of dry eye, corneal ulcers and diabetic retinopathy (Konieczka, Schoetzau, Koch, Hauenstein, & Flammer, 2018), and a recent case report discusses the utility of thermal imaging in Horner Syndrome showing anhidrosis (Henderson, Ramulu, & Lawler, 2019).

The ability to detect warm objects could be helpful to blind individuals for locating hazards such as a stove or toaster, or desired objects such as a hot cup of coffee or a meal. In addition, people and pets are visible in the thermal image, which makes it easier for users to locate and differentiate them from inanimate objects. We first reported the use of thermal imaging for Argus II users during ARVO 2016 (Dagnelie et al., 2016). In two recently published studies, Argus II users performed two stationary tasks, a mobility task, and an orientation/mobility task with a thermal camera; the results suggested some benefit of using a thermal camera for Argus II users and potentially other low vision individuals (He, Sun, Roy, Caspi, & Montezuma, 2020; Montezuma et al., 2020). The present study bears similarities to those of Montezuma et al. and He et al., but differs in three important respects: 1) We chose our tasks to be more diverse, and more similar to what an Argus II user would encounter in the real world; 2) all tasks were structured as m-alternative forced choice tasks (m-AFC) – on each trial there were m ≥ 2 possible response alternatives with precisely one of the response alternatives defined as “correct”; 3) we used a novel analysis framework that allows quantification of subject ability and task difficulty regardless of the number of response alternatives m. Subject ability and task difficulty are latent variables, and to estimate these we used an extension of signal detection theory (SDT) to latent variables (Bradley & Massof, 2019) that transforms percent correct scores into d′ units and thus, enables comparison of subject ability across tasks with different chance performance levels. The new method provides calibrated task difficulties which can be used to estimate person abilities on the same scale in different studies; it also provides estimated person abilities which can be compared to future performance, for example during a treatment trial.

There are standardized tests in the literature to measure visual function in individuals with ultra-low and prosthetic vision (Bach, Wilke, Wilhelm, Zrenner, & Wilke, 2010; Bailey, Jackson, Minto, Greer, & Chu, 2012), and a set of lab-based functional vision measures has been developed for Argus II users (Ahuja et al., 2011; Caspi & Zivotofsky, 2015; Dorn et al., 2013). However, such measures are not informative about device functionality in daily life, more representative tests have not yet been developed for the level of vision afforded by the Argus II, and previous reports did not take thermal imaging into account. Thus, the present study aimed to quantitatively compare the benefits offered by thermal and regular Argus II cameras in a set of standardized situations approximating real life.

Methods

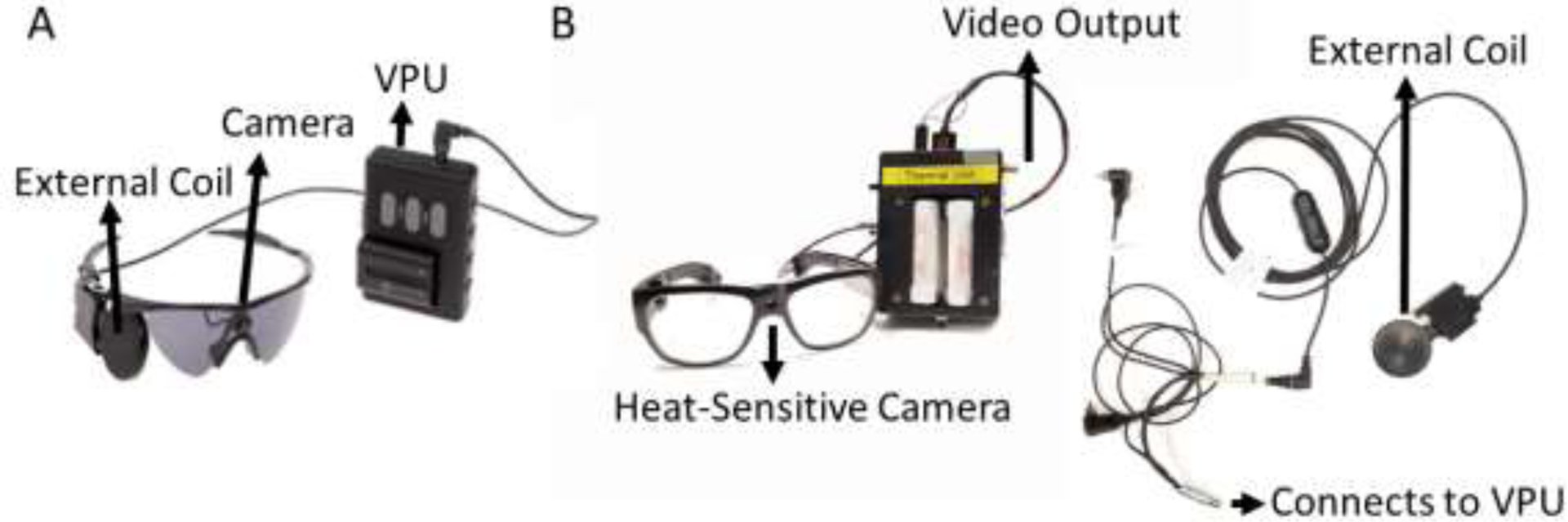

Thermal Camera System

In our experiment, we used a miniature heat-sensitive infrared camera (Lepton; FLIR Corp.) in a custom-designed frame. The heat-sensitive camera feeds radiometric information to an electronics box that generates and transfers the thermal image of the scene to the Argus II VPU. The VPU communicates stimulation parameters based on the local thermal image intensity to each electrode through the transmitter coil (Fig. 1). This real-time stimulation results in visual percepts corresponding to the location of warm objects in the scene.

Fig. 1.

Side-by-side views of the external Argus II system components and the heat-sensitive adaptation. A) A miniature head-mounted video camera sends a grayscale image to the VPU, and the VPU transfers energy and the real-time stimulation parameters to the implant array through the transmitter coil. B) Heat-sensitive camera connected to the electronics box that processes and transfers the thermal camera images to the VPU for transmission to the implant through the external transmitter coil.
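One simple way to see how a thermal image "removes" cooler objects is a temperature window: everything at or below a lower threshold maps to black, and warmer pixels map to increasing gray levels. The sketch below assumes such a linear window with hypothetical limits of 30 °C and 40 °C; the actual mapping performed by the electronics box in Fig. 1B is not described here, and `thermal_to_gray` is a hypothetical name. The resulting gray frame would then be reduced to the electrode grid like a visible-light frame.

```python
# Hypothetical sketch: turn radiometric temperatures into gray levels so that
# only warm objects remain bright. The 30-40 deg C window is an assumption for
# illustration, not the setting used by the study's electronics box.

def thermal_to_gray(temps_c, t_low=30.0, t_high=40.0):
    """Map a 2-D list of temperatures (deg C) to integer gray levels 0-255.

    Temperatures at or below t_low map to 0 (cool background disappears),
    temperatures at or above t_high map to 255, and values in between are
    scaled linearly.
    """
    span = t_high - t_low
    gray = []
    for row in temps_c:
        gray.append([round(255 * min(max((t - t_low) / span, 0.0), 1.0)) for t in row])
    return gray


# Usage: room-temperature table (22 C), skin (about 35 C), hot cup (45 C)
print(thermal_to_gray([[22.0, 35.0, 45.0]]))  # → [[0, 128, 255]]
```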

Study Design

Four experienced Argus II users participated in this study (Table 1) and were asked to perform 5 tasks while wearing either the normal Argus II glasses with a visible-light camera or a glasses frame with the heat-sensitive camera. All tasks in our experiment were designed to approximate real-life scenarios such as identifying stationary and moving targets. Target sizes as small as a cup and as large as a human were chosen to assess how useful information from the thermal camera was for Argus II users compared to information from the visible-light camera. The study was approved by the Johns Hopkins Medical Institutions IRB and followed the tenets of the Declaration of Helsinki. All subjects signed an informed consent form to participate after being informed of the goals and methods of the study.

Table 1.

Demographics table for the four subjects who participated in this study.

| Subject | Age | Gender | Years of using Argus II | Number of Active Electrodes |
|---|---|---|---|---|
| S1 | 87 | Male | 12 | 55 |
| S2 | 80 | Male | 10 | 56 |
| S3 | 67 | Female | 5 | 57 |
| S4 | 58 | Male | 4 | 57 |

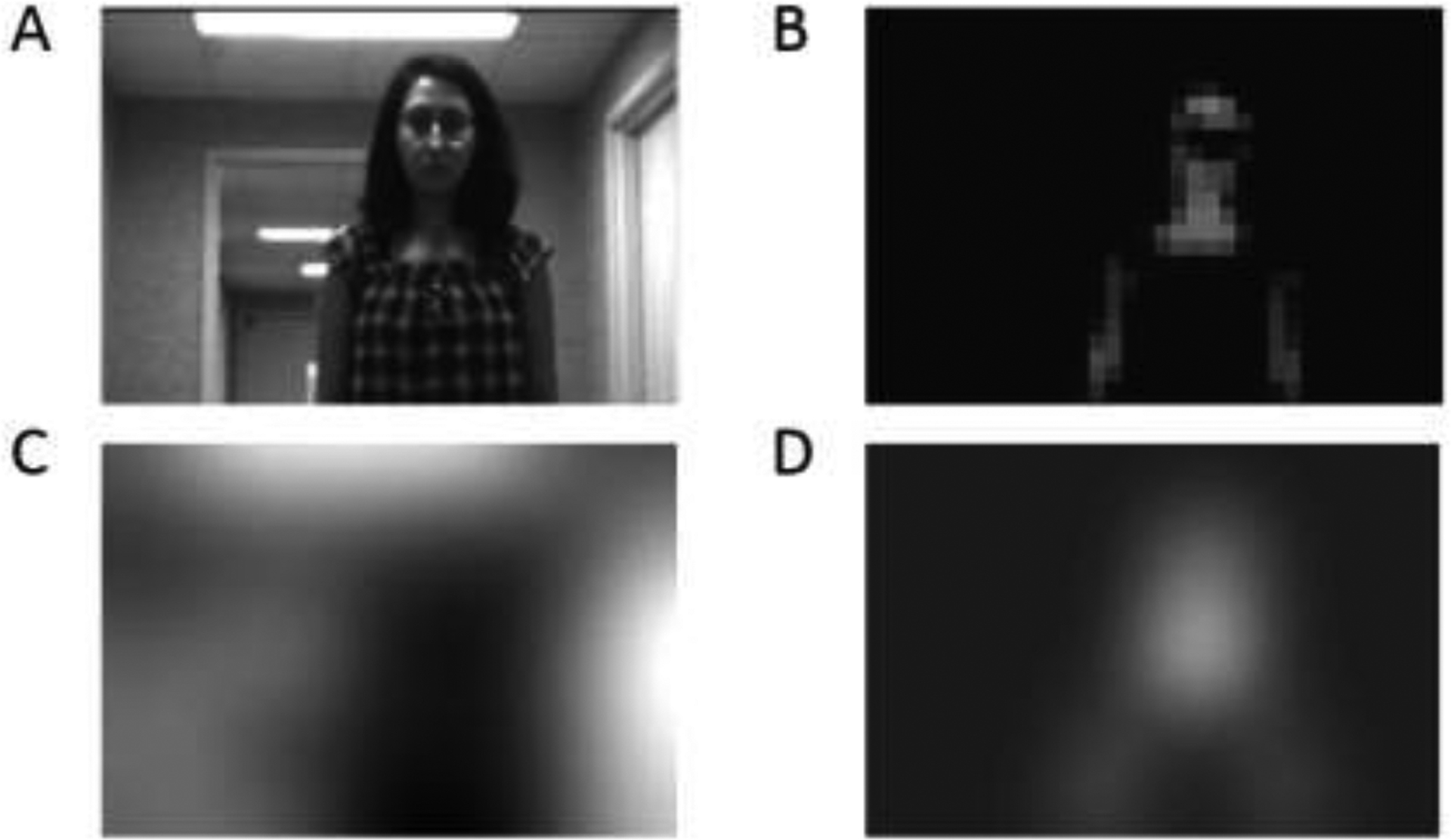

Fig. 2 shows example image frames from the visible-light and heat-sensitive cameras used in this study. The thermal frame contains only the person's face and arms; all of the cooler objects visible in the normal view are removed. This potentially helps subjects perceive the location of warm targets more accurately.

Fig. 2.

Example image frames from A) a visible-light camera and B) a thermal camera, and approximations of the Argus II user's percept for C) the visible-light frame and D) the thermal frame.

As mentioned above, all 5 tasks were structured as m-AFC. The tasks were designed to cover a range of difficulty levels encountered in daily life (Table 2). In all 5 tasks, subjects were seated with no constraints on head movement, and no time limits were imposed. Prior to this study, all four subjects had participated in thermal camera system testing, on similar tasks, so they were familiar with the characteristics of thermal imaging. At the beginning of each task, subjects were given 15 minutes to practice locating the target with both cameras.

Table 2.

Tasks performed using the thermal and the visible-light camera. These tasks were designed to approximate real-life scenarios to investigate the possible benefit of using the heat-sensitive camera.

| Task Number | Task Name | Detail | Response Format | Trials per Subject, per Camera |
|---|---|---|---|---|
| 1 | Cups | Report the side of the closer cup | 2-AFC | 40 |
| 2 | Bowls | Report the location of the missing bowl, if any | 5-AFC | 80 |
| 3 | Distance discrimination | Report the distance of the standing person | 3-AFC | 60 |
| 4 | Direction of person walking | Report the direction of the person’s movement | 2-AFC | Up to 100 (one trial per detected passenger) |
| 5 | Direction of closer escalator | After every 10 passengers, report the direction of the closer escalator | 2-AFC | 10 |

Tasks details:

Cups: Two white cups containing warm water were placed on a black table in front of the subject, at distances of 53.3 and 78.7 cm. The transverse (left-right) separation between the cups was 25.4 cm. The subject was asked to report which cup was closer, and the response (left or right) was recorded.

Bowls: Three or four white bowls containing warm water were placed at the 3, 6, 9, and 12 o’clock positions on a black square table in front of the seated subject, similar to a meal set for four people. In a randomly chosen 80% of the trials, one bowl was removed; the missing bowl was chosen randomly, balanced across conditions. The subject was asked to detect whether any bowl was missing and, if so, to report the location of the missing bowl. The subject responded with one of five possible choices: “full set”, or the 3, 6, 9, or 12 o’clock bowl is missing.

Distance discrimination: A person wearing a white shirt stood in front of the seated subject at 1, 3, or 6 m, and the subject was asked to report the person's distance. Responses of 1, 3, or 6 m were recorded.

Direction of motion tests near a pair of escalators (tasks 4 and 5): The subject was seated 536 cm to the side of a pair of escalators moving in opposite directions and observed people moving onto and off the escalators (Fig. 3). The subject could not see the escalators themselves but could use size cues provided by people walking onto and off the escalators to determine the directions in which the closer and farther escalators were moving. Because of the real-life nature of the scenario, we had no control over the number of people passing in each direction; however, an off-line review of the video recordings confirmed that approximately 50% of passengers traveled in each direction over the course of the experiment.

Direction of person walking: Whenever the subject detected motion indicating a person walking onto or off the escalators, the subject was asked to report the direction of motion (left or right). The subject was not informed when a person was passing by. Each passenger who passed by and was detected constituted a trial; undetected passengers were excluded from the analysis of this task. A total of 100 persons passed by (both directions combined), so the maximum possible number of trials was 100; the actual (i.e., detected) number of trials was lower and varied by subject and by camera system.

Direction of closer escalator: Each time 10 people had passed by (on either escalator, whether or not the subject detected them), the subject was asked to report the direction in which the closer escalator was moving (left or right), using any available cue, e.g., the apparent size of the passengers. Since each passage of 10 persons (detected or not) constituted a trial, there were 10 trials in this task. To prevent subjects from learning the true escalator direction, the video image was left/right reversed on 5 randomly chosen trials out of these 10; subjects also wore ear plugs to prevent the use of auditory cues.
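The randomization described for the bowls task and the closer-escalator task can be sketched as a balanced, shuffled trial schedule. This is a minimal illustration, assuming that in the bowls task the 20% full-set trials and the four missing-bowl positions were balanced exactly within each camera condition (16 trials each out of 80); the function name `balanced_schedule` and the seeds are hypothetical.

```python
import random

# Hypothetical sketch of a balanced, randomized trial schedule.
# Assumption: in the bowls task the 20% "full set" trials and the four
# missing-bowl positions are balanced exactly within each camera condition.

def balanced_schedule(counts, seed=None):
    """Return a shuffled list containing each condition exactly counts[condition] times."""
    rng = random.Random(seed)  # private generator so schedules are reproducible
    trials = [condition for condition, n in counts.items() for _ in range(n)]
    rng.shuffle(trials)
    return trials


# Bowls task: 80 trials per camera; 16 full-set trials (20%) and
# 64 missing-bowl trials split evenly over the four positions
bowls = balanced_schedule(
    {"full set": 16, "3 o'clock": 16, "6 o'clock": 16, "9 o'clock": 16, "12 o'clock": 16},
    seed=1,
)

# Closer-escalator task: 10 trials, video left/right reversed on 5 of them
reversed_video = balanced_schedule({True: 5, False: 5}, seed=2)
```

Fixing the counts before shuffling, rather than drawing each trial independently, guarantees the stated proportions in every session.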

Fig. 3.

Subjects’ view for tasks 4 and 5 is indicated by the white rectangle, which approximates the visual field of an Argus II user.

Data Analysis

In functional vision assessment, patient-reported outcomes and performance measures are typically used to estimate a person’s ability to perform standardized activities. When ability is defined along a latent (unobservable) variable axis, as in our experiment, where “functional ability” cannot be measured directly, responses from different persons to the same set of tasks are used to estimate both task difficulty and person ability on a common scale, often with a mathematical tool called Rasch analysis. Rasch analysis yields item measures (estimates of task difficulty) and person measures (estimates of person ability) along this scale when a person “rates” each item (e.g., in a questionnaire), but it does not apply to tasks with a defined correct response, such as our m-AFC tasks. Bradley & Massof (2019) extended SDT, the standard method in psychophysics for analyzing m-AFC task responses, to latent variables in an analog of Rasch analysis for m-AFC tasks; we apply this method to our data.

In the method of Bradley & Massof, person measures and item measures are estimated on an “ability” axis in d′ units, as is customary in SDT. Item measures are estimated first, by mapping probability correct across all subjects into d′ units using standard SDT (Green & Swets, 1966); each person measure is then estimated, independently of all other person measures, using maximum likelihood estimation (MLE). Confidence intervals for the item measures are obtained by calculating 95% binomial confidence intervals in probability-correct units using the Wilson method (Wilson, 1927), and then mapping the 95% CI into d′ units. Because MLE is used, standard errors for the person measures are obtained from the Hessian of the log-likelihood at its maximum (the square root of the inverse of the negative Hessian).
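The mapping from probability correct to d′ can be sketched with the standard equal-variance Gaussian model for m-AFC (Green & Swets, 1966), in which Pc(d′, m) = ∫ φ(u) Φ(u + d′)^(m−1) du, where φ and Φ are the standard normal density and distribution function. The sketch evaluates this integral numerically and inverts it by bisection; the names `pc_mafc` and `dprime_from_pc`, the integration settings, and the example proportions are assumptions for illustration, not the R programs of Bradley & Massof. Under the sign convention described in the next paragraph (person minus item measure, average person at d′ = 0), we read the item measure as the negative of the pooled d′; that reading is our assumption.

```python
import math

# Sketch of the equal-variance Gaussian SDT model for m-AFC:
# Pc(d', m) = integral of phi(u) * Phi(u + d')**(m - 1) du.
# Function names and numerical settings are illustrative assumptions.

def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def pc_mafc(d, m, n=400):
    """Proportion correct for sensitivity d in an m-AFC task (composite Simpson's rule on [-8, 8])."""
    lo, hi = -8.0, 8.0
    h = (hi - lo) / n  # n must be even for Simpson's rule
    total = 0.0
    for i in range(n + 1):
        u = lo + i * h
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * norm_pdf(u) * norm_cdf(u + d) ** (m - 1)
    return total * h / 3.0

def dprime_from_pc(pc, m, lo=-6.0, hi=6.0, tol=1e-7):
    """Invert pc_mafc by bisection; Pc increases monotonically with d'."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if pc_mafc(mid, m) < pc:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Usage with hypothetical pooled proportions correct (not the study's data)
print(dprime_from_pc(1 / 3, 3))   # chance in 3-AFC maps to d' close to 0
print(dprime_from_pc(0.80, 2))    # 2-AFC: d' = sqrt(2) * z(0.80), about 1.19
# A Wilson CI endpoint in proportion-correct units is mapped the same way;
# the item measure would then be taken as -dprime_from_pc(pooled_pc, m).
```

Because chance (Pc = 1/m) maps to d′ = 0 for every m, tasks with different numbers of alternatives land on one common scale, which is the property the analysis relies on.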

On this “ability” scale, more positive person measures represent more capable persons while more negative item measures represent easier items. Unlike in typical applications of SDT, both positive and negative person measures are possible because multiple persons and items are placed on the same scale. The ability of any person relative to any item is represented by the person minus item measure, with chance performance for any person-item combination predicted to occur whenever the person measure equals the item measure. The axis origin (d′ = 0) represents chance performance for the average person, for all tasks regardless of the number of response alternatives m. Thus, positive person measures represent above average ability while negative person measures represent below average ability. The fact that d′ = 0 represents chance performance for the average person regardless of the number of response alternatives m allows person and item measures to be compared across different m-AFC tasks. All d′ analyses were performed using the R programs provided in Bradley & Massof (2019).
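Person-measure estimation can be sketched as one-dimensional maximum likelihood: with item measures b held fixed, the probability that a person with measure θ answers an m-alternative item correctly is taken to be Pc(θ − b, m), and θ is chosen to maximize the binomial log-likelihood over all items. The standard error is 1/√(−ℓ″(θ̂)), the inverse square root of the observed information. This is a minimal sketch under those assumptions; the function names, golden-section search, and finite-difference step are our choices, not the R code of Bradley & Massof (2019).

```python
import math

# Sketch of person-measure estimation by maximum likelihood, assuming
# P(correct) = Pc(theta - b, m) for an item with measure b and m alternatives
# (equal-variance Gaussian m-AFC model). Names are hypothetical.

def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def pc_mafc(d, m, n=400):
    """m-AFC proportion correct via composite Simpson's rule on [-8, 8]."""
    h = 16.0 / n
    total = 0.0
    for i in range(n + 1):
        u = -8.0 + i * h
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * norm_pdf(u) * norm_cdf(u + d) ** (m - 1)
    return total * h / 3.0

def log_likelihood(theta, items):
    """items: list of (item_measure b, alternatives m, n_correct k, n_trials n)."""
    ll = 0.0
    for b, m, k, n in items:
        p = min(max(pc_mafc(theta - b, m), 1e-12), 1 - 1e-12)  # guard against log(0)
        ll += k * math.log(p) + (n - k) * math.log(1 - p)
    return ll

def person_measure(items, lo=-6.0, hi=6.0, tol=1e-6, step=1e-2):
    """Return (theta_hat, standard error) via golden-section search and a numerical Hessian."""
    f = lambda t: log_likelihood(t, items)
    g = (math.sqrt(5.0) - 1.0) / 2.0
    left, right = lo, hi
    x1 = right - g * (right - left)
    x2 = left + g * (right - left)
    while right - left > tol:
        if f(x1) > f(x2):      # maximum lies in [left, x2]
            right, x2 = x2, x1
            x1 = right - g * (right - left)
        else:                  # maximum lies in [x1, right]
            left, x1 = x1, x2
            x2 = left + g * (right - left)
    theta = 0.5 * (left + right)
    # Observed information = negative second derivative of the log-likelihood
    hess = (f(theta + step) - 2.0 * f(theta) + f(theta - step)) / step ** 2
    return theta, 1.0 / math.sqrt(-hess)


# Hypothetical data: one 2-AFC item at b = 0 with 80 of 100 correct
theta, se = person_measure([(0.0, 2, 80, 100)])
# theta is close to sqrt(2) * z(0.80), about 1.19; se is about 0.20
```

Estimating each person separately against fixed item measures is what allows two measures per subject (one per camera) to be compared on the same axis.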

Since our goal was to compare subject performance in the two camera conditions, we estimated two person measures for each subject, one for each camera condition (4 subjects × 2 conditions = 8 estimated person measures in total). Estimated person measures in the two camera conditions were compared using Welch’s test (t-test for unequal variances).
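Welch’s test compares two means without assuming equal variances, using the Welch–Satterthwaite approximation for the degrees of freedom. The sketch computes the t statistic and degrees of freedom from summary statistics or raw samples; how the study formed the two samples for each comparison is not specified here, and the inputs shown are hypothetical. A p-value additionally requires the Student t distribution function, for example via `scipy.stats.ttest_ind(x, y, equal_var=False)`, which performs the same test.

```python
import math
import statistics

# Sketch of Welch's unequal-variance t-test with Welch-Satterthwaite
# degrees of freedom. Inputs below are hypothetical.

def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Return (t, df) for H0: equal means, without assuming equal variances."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2      # squared standard errors of the means
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

def welch_t_samples(x, y):
    """Same test computed from raw samples (sample SD with n - 1 denominator)."""
    return welch_t(statistics.mean(x), statistics.stdev(x), len(x),
                   statistics.mean(y), statistics.stdev(y), len(y))


t, df = welch_t(1.0, 1.0, 10, 0.0, 1.0, 10)
# t = 1 / sqrt(0.2), about 2.236; df = 18.0 when variances and sizes are equal
```

The non-integer degrees of freedom reported in the Results, e.g. t(370.7), are characteristic of this approximation.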

In task 4, 95% binomial confidence intervals (Wilson method) were computed for motion detection with the visible-light camera, and detection with the thermal camera was compared to this reference. In task 5, we used the Wilcoxon–Mann–Whitney rank-sum test to compare per-block walking-person detection counts between the thermal and visible-light cameras.
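The Wilcoxon–Mann–Whitney statistic U counts, over all pairs of one observation from each condition, how often the first exceeds the second, with ties counting one half; this is equivalent to the rank-sum form with midranks. The sketch below adds a large-sample normal approximation without tie or continuity correction. The detection counts are hypothetical illustrations, not the study’s raw data, and for samples this small an exact test (for example `scipy.stats.mannwhitneyu`) would normally be preferred.

```python
import math

# Sketch of the Wilcoxon-Mann-Whitney U statistic by pair counting, plus a
# normal approximation without tie correction. Inputs are hypothetical.

def mann_whitney_u(x, y):
    """Return (U_x, U_y): pairs where x beats y (ties count 1/2), and vice versa."""
    u_x = sum(1.0 if xi > yj else 0.5 if xi == yj else 0.0 for xi in x for yj in y)
    return u_x, len(x) * len(y) - u_x

def normal_approx_p(u, n1, n2):
    """Two-sided p-value from the normal approximation (no tie or continuity correction)."""
    mean = n1 * n2 / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (u - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2.0))


# Hypothetical per-block detection counts (out of 10), NOT the study's raw data
thermal = [5, 6, 7, 5, 8, 9, 6, 7, 5, 8]
visible = [1, 2, 3, 2, 4, 5, 1, 3, 2, 4]
u_thermal, u_visible = mann_whitney_u(thermal, visible)
p = normal_approx_p(u_thermal, len(thermal), len(visible))
```

Note that U_x + U_y always equals n1 × n2, so with 10 blocks per condition U cannot exceed 100.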

Results

All subjects successfully completed the cups, bowls, and distance discrimination tasks in a laboratory setting with both the heat-sensitive and visible-light cameras. The direction of person walking and direction of closer escalator tasks were performed at a public escalator, and only subjects 2 and 4 completed these tasks with both cameras. Due to limited access, subject 3 completed the escalator tasks only with the visible-light camera, and subject 1 did not perform them.

In tasks 4 and 5 (the tasks near a pair of escalators), using a visible-light camera, out of 100 passengers that passed by, subject 2 detected 9 passengers, subject 3 detected 35 passengers, and subject 4 detected 29 passengers. When the heat-sensitive camera was used, out of 100 passengers that passed by, subject 2 detected 33 passengers and subject 4 detected 69 passengers (no data for subject 3; Table 3). Performance with the thermal camera fell well outside the estimated confidence intervals for performance with the normal Argus II camera (subject 2: 95% CI [4, 16]; subject 4: 95% CI [20, 39]).
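The comparison above can be checked approximately with the Wilson score interval, using the visible-light detection counts reported in this paragraph (9 and 29 out of 100) and asking whether the thermal counts (33 and 69) exceed the upper bound. This is a minimal sketch of the uncorrected Wilson interval; its bounds may differ slightly from the reported ones depending on rounding and on whether a continuity correction was applied, and `wilson_interval` is a hypothetical name.

```python
import math

# Sketch of the Wilson score interval (no continuity correction) applied to
# the task 4 detection counts reported in the Results.

def wilson_interval(k, n, z=1.959963984540054):
    """95% Wilson score interval for a binomial proportion k/n."""
    p = k / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


# (subject, visible-light detections, thermal detections), each out of 100 passengers
for subject, visible, thermal in [("S2", 9, 33), ("S4", 29, 69)]:
    lo, hi = wilson_interval(visible, 100)
    print(subject, f"[{100 * lo:.1f}, {100 * hi:.1f}]", "thermal above CI:", thermal > 100 * hi)
```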

Table 3.

The range of people detected per block of 10 (task 5), and out of 100 total passengers (task 4), for the tasks near the pair of escalators.

| Subject | Visible camera: people detected per block of 10 | Visible camera: total detected out of 100 | Thermal camera: people detected per block of 10 | Thermal camera: total detected out of 100 |
|---|---|---|---|---|
| Subject 2 | 0–3 | 9 | 2–5 | 33 |
| Subject 3 | 2–7 | 35 | N/A | N/A |
| Subject 4 | 1–5 | 29 | 5–9 | 69 |

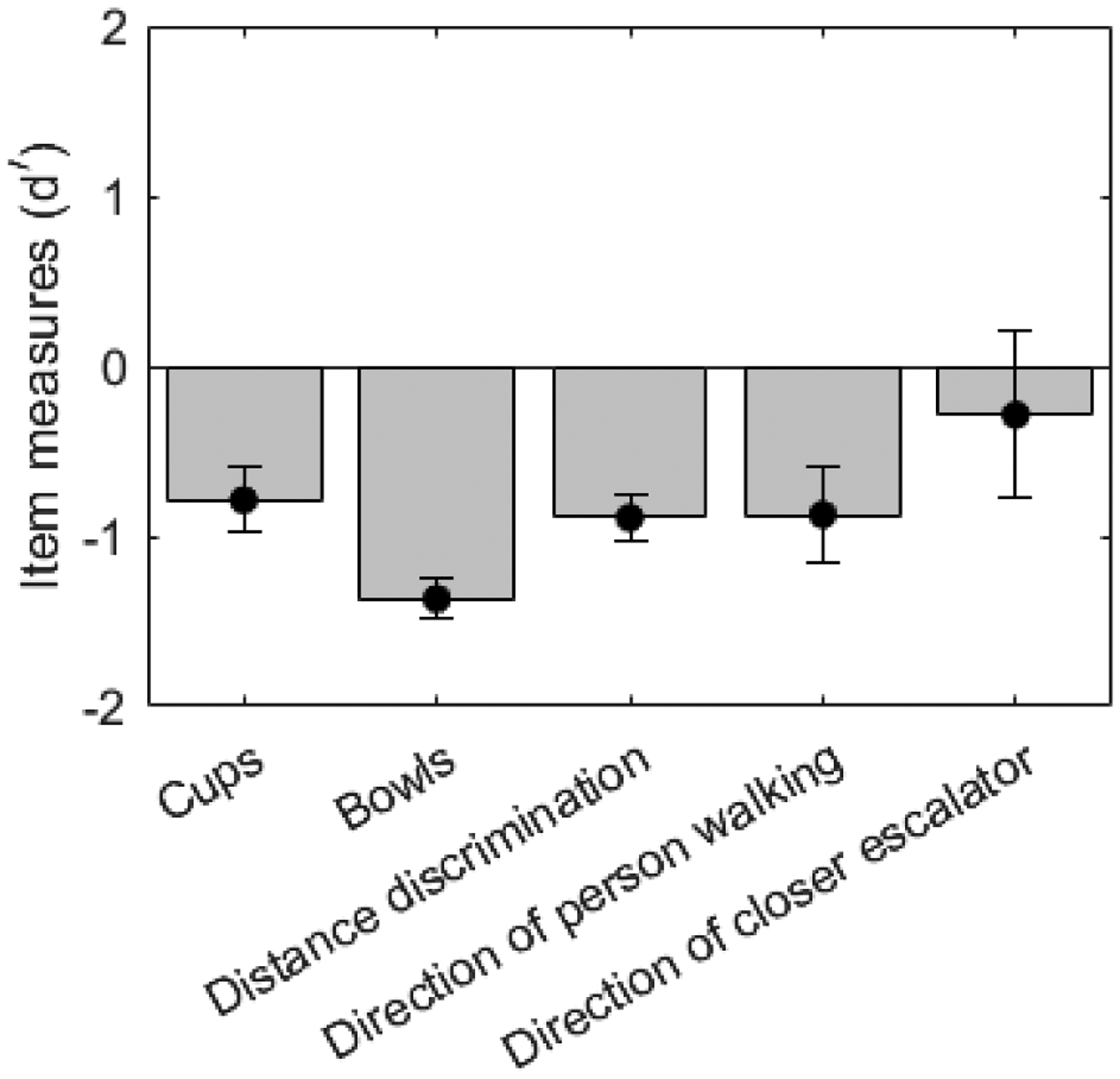

Fig. 4 shows estimated item measures and 95% confidence intervals for each task across all subjects and conditions. All estimated item measures were negative and showed the range of difficulty of the tasks in our experiment. More negative d′ item measures represent easier tasks, and d′ = 0 represents chance performance for the average person.

Fig. 4.

Estimated item measures in d′ units show the range of difficulty levels for the tasks in our experiment. Error bars specify 95% confidence intervals.

The direction of closer escalator task (task 5) was particularly difficult compared to other tasks because after every 10 passengers passing by the subjects had to infer the direction of the closer escalator based on the movements of the detected passengers (the subject was required to guess if none of the 10 passengers were detected on a given trial). With the visible-light camera, subject 2 detected between 0 and 3 out of 10 passengers per trial, subject 3 detected between 2 and 7 out of 10 passengers per trial, and subject 4 detected between 1 and 5 out of 10 passengers per trial. With the thermal camera, subject 2 detected between 2 and 5 out of 10 passengers per trial, and subject 4 detected between 5 and 9 out of 10 passengers per trial (Table 3). A Wilcoxon–Mann–Whitney two-sample rank-sum test showed that these differences were statistically significant for the two subjects who completed the tasks with both cameras (for both subjects p < 0.001; subject 2 Mann-Whitney U = 150; and subject 4 Mann-Whitney U = 152).

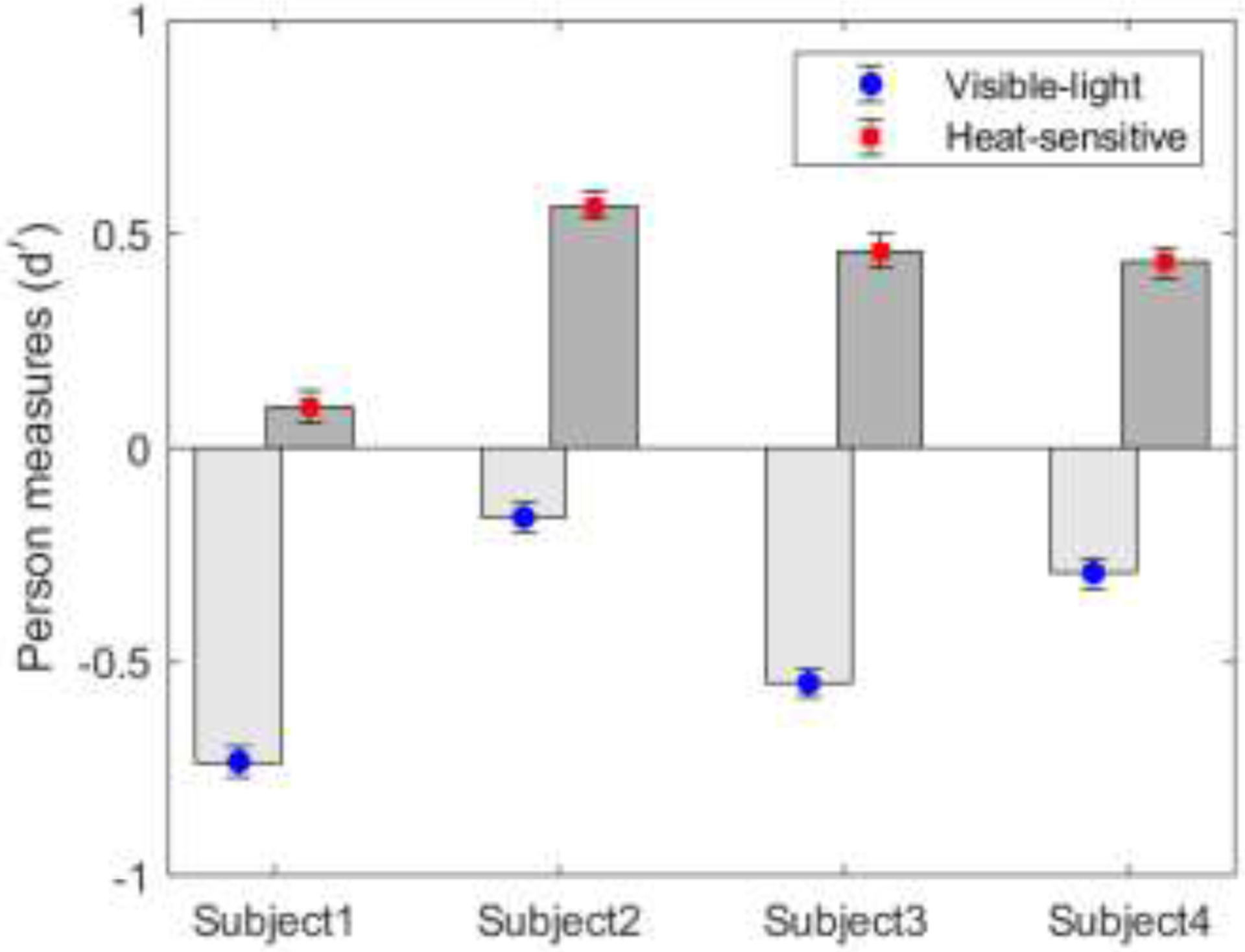

Fig. 5 shows estimated person measures for each subject in the two camera conditions across all tasks, with d′ = 0 representing the average ability of all subjects across all tasks and cameras. The thermal camera improved performance by 0.83, 0.73, 1.00 and 0.73 d′ units for subjects 1 to 4, respectively. Welch’s test showed that this performance difference was significant across all m-AFC tasks for all subjects (all four p < 0.001; for subject 1, t(370.7) = −215.5; subject 2, t(482.2) = −262.8; subject 3, t(346.9) = −263.1; subject 4, t(465.6) = −223.7).

Fig. 5.

Estimated person measures for each subject across all tasks, relative to the average person measure (at d′ = 0) across all tasks, are plotted for the heat-sensitive camera (red squares) and visible-light camera (blue circles). Higher person measures indicate more capable persons. The error bars show the estimated standard errors.

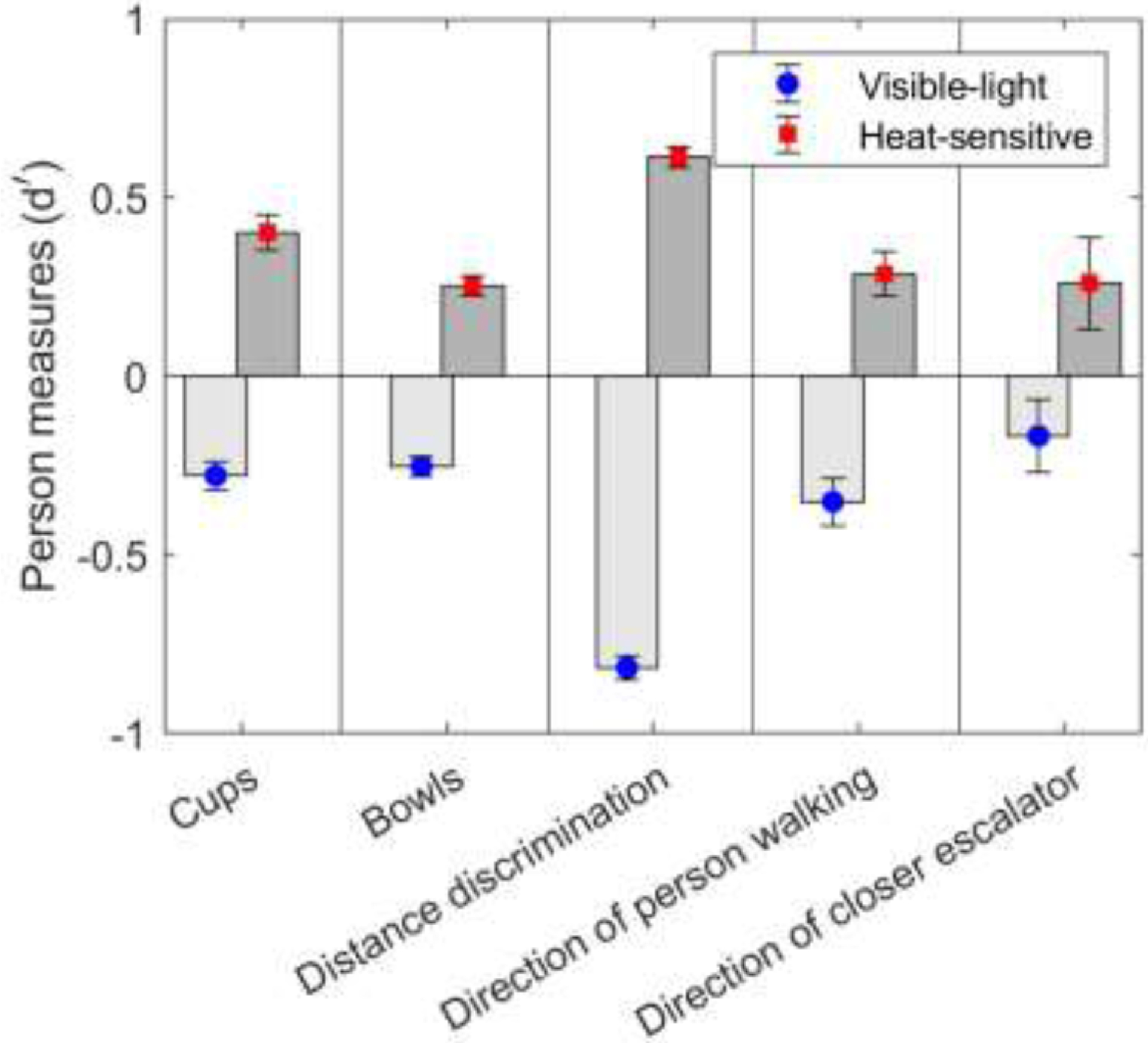

Fig. 6 shows estimated person measures for each task, plotted relative to the average person ability (d′ = 0) in that task, across all subjects in the two conditions. Note that average person ability in each task varied due to different levels of task difficulty (see Fig. 4), which means that for each task d′ = 0 represents a different level of person ability. Despite the differences between subjects and tasks, the estimated person measures show that all tasks were easier for subjects with the heat-sensitive camera than with the visible-light camera (using Welch’s test all five p < 0.001; for task 1, t(301.4) = −139.5; task 2, t(656.9) = −239.4; task 3, t(470.7) = −533.2; task 4, t(34.4) = −12.5; task 5, t(148.7) = −64.1). In order of benefit level, the thermal camera improved performance in the distance discrimination task by 1.43 d′ units, in the cups task by 0.68 d′ units, in the direction of person walking task by 0.64 d′ units, in the bowls task by 0.51 d′ units, and in the direction of closer escalator task by 0.42 d′ units.

Fig. 6.

Estimated person measures across subjects, by task, relative to the average person ability (d′ = 0), for the thermal (red squares) and visible-light (blue circles) cameras. Vertical lines separate the tasks to emphasize that each task has its own performance average. Error bars show the estimated standard errors.

Discussion and conclusion

In this study, to improve the current Argus II system, we investigated the benefit of using a heat-sensitive camera compared to the regular Argus II camera in tests approximating real-life scenarios. As expected, the thermal camera simplified the information provided to Argus II users and improved performance in tasks where temperature differences play a role.

At the end of the test, subjects were asked how useful the thermal camera was and for which tasks they might use it. Subjects indicated that interpreting the phosphene vision conveyed by the current Argus II system takes too long, and that adding a thermal camera to the Argus II system could improve performance of certain activities. For example, subjects 2 and 3 suggested that safety might improve when using a cane together with the thermal camera. Subject 4 mentioned that locating people during a conversation was easier with the thermal camera. Subject 1 reported that he was willing to try the new camera even though he can achieve his daily goals without using the Argus II system.

The d′ analysis for the m-AFC tasks showed that the heat-sensitive camera significantly improved performance across the diverse set of tasks in our study. Even for the easier tasks, such as the bowls task, where average performance of all subjects with both cameras was much better than chance, subject accuracy improved with the thermal camera. The average improvement across all tasks combined was similar for all subjects, regardless of their baseline performance with the normal Argus II camera (Fig. 5). In real life, this could lower the chance of knocking over a hot cup or touching a very hot object, because the user can locate the object more readily with the thermal camera. The heat-sensitive camera also helps distinguish persons from other objects in a room, and helps judge how far away they are from size cues (for a known object). In our experiments, subjects were significantly better at distinguishing the distance of a standing person at 1, 3, or 6 m with the thermal camera than with the regular Argus II camera. Our experiments also showed that the thermal camera improved subjects’ ability not only to detect a walking person but also to identify the person’s walking direction. This could help with orientation and with safely walking toward a target location in a crowded place such as a metro station.

One limitation to the use of a thermal camera is that it only detects warm targets. In the absence of a warm target, a thermal imager filters out all cold objects in the scene and will not provide any useful information. However, in the presence of a warm target, a thermal camera detects the target regardless of the brightness and luminance contrast. Since the normal Argus II camera depends on visible light, it requires high contrast and enough brightness to show a target object against a background, and even then, the object may be hard to distinguish from a cluttered background. Therefore, a thermal camera would be a useful addition to the current Argus II system if added in such a way that the user can switch between using the thermal camera and the normal Argus II camera. For example, consider a situation where a subject enters a room with people sitting on chairs and looks for an empty chair to sit down. The user may perceive both chairs and people similarly with the normal camera, but only people will be visible when using a thermal camera, so by switching back and forth between two cameras the user will be able to distinguish the empty chair from the occupied chairs. If the image frames from thermal and visible-light cameras can be fused, then warm targets can be highlighted within normal settings without need for a switch. A production prototype with these capabilities will be tested in upcoming studies.

One possible alternative to using a thermal camera for detecting and identifying objects is using machine learning algorithms. However, the robustness of these algorithms depends on many factors such as the luminance contrast, brightness, and the quality of the training set data (Dhillon & Verma, 2020; Padilla, Netto, & Da Silva, 2020). For example, it is still a major challenge for face recognition algorithms to identify a person’s face from every angle in a wide range of light conditions (Kumar Dubey & Jain, 2019). Another challenge is how to convey the information to a blind user without adding more confusion. A thermal camera is simple, has a low processing cost, and subjects who participated in our study learned how to use the system within a few minutes.

We have shown that all four subjects benefited from the thermal camera in stationary tasks. However, we have not tested the system in mobility tasks where the user is required to move through the environment. Temperatures may change when moving from one location to another, and warm targets may be detected differently. A recently published study (He et al., 2020) reported a benefit for Argus II users of using a thermal camera in a mobility and an orientation task in an indoor setting. However, it is also important to test the efficacy of the system in outdoor settings, where buildings and hot pavement in the summer might look brighter than people in the thermal image. Future studies should assess how useful the heat-sensitive camera is for each person’s needs, and whether a blended image from thermal and visible-light cameras may have greater utility for certain tasks. If such tests use m-AFC designs, they should employ the analysis used in the present study.

Overall, this study demonstrated the efficacy of the heat-sensitive camera for Argus II users in a set of standardized situations approximating real-life scenarios. The results show that a thermal camera can help users detect hot objects and distinguish people at different distances. The item measures estimated for the tasks in this study could also serve as a guideline for further training and rehabilitation.

Acknowledgement

This study was supported by grant R44 EY024498 to Advanced Medical Electronics Corp. The authors wish to thank Liancheng Yang for technical support and the Argus II wearers participating in this study for their valuable contributions.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Disclosure: R. Sadeghi, None; A. Kartha, None; M. P. Barry, Second Sight Medical Products (Patent, Employment); C. Bradley, None; P. Gibson, Advanced Medical Electronics (Employment); A. Caspi, Second Sight Medical Products (Patent, Consultant); A. Roy, Second Sight Medical Products (Patent, Employment); G. Dagnelie, Second Sight Medical Products (Financial Support, Consultant, Patent)

Contributor Information

Roksana Sadeghi, Department of Biomedical Engineering, Johns Hopkins School of Medicine, Baltimore, MD, USA.

Arathy Kartha, Department of Ophthalmology, Johns Hopkins School of Medicine, Baltimore, MD, USA.

Michael P. Barry, Second Sight Medical Products, Sylmar, CA, USA.

Chris Bradley, Department of Ophthalmology, Johns Hopkins School of Medicine, Baltimore, MD, USA.

Paul Gibson, Advanced Medical Electronics Corporation, Maple Grove, MN, USA.

Avi Caspi, Second Sight Medical Products, Sylmar, CA, USA; Jerusalem College of Technology, Jerusalem, Israel.

Arup Roy, Second Sight Medical Products, Sylmar, CA, USA.

Gislin Dagnelie, Department of Ophthalmology, Johns Hopkins School of Medicine, Baltimore, MD, USA.

References

- Ahuja AK, Dorn JD, Caspi A, McMahon MJ, Dagnelie G, DaCruz L, … Greenberg RJ (2011). Blind subjects implanted with the Argus II retinal prosthesis are able to improve performance in a spatial-motor task. British Journal of Ophthalmology, 95(4), 539–543. 10.1136/bjo.2010.179622

- Bach M, Wilke M, Wilhelm B, Zrenner E, & Wilke R (2010). Basic quantitative assessment of visual performance in patients with very low vision. Investigative Ophthalmology and Visual Science, 51(2), 1255–1260. 10.1167/iovs.09-3512

- Bailey IL, Jackson AJ, Minto H, Greer RB, & Chu MA (2012). The Berkeley Rudimentary Vision Test. Optometry and Vision Science, 89(9), 1257–1264. 10.1097/OPX.0b013e318264e85a

- Bradley C, & Massof RW (2019). Estimating measures of latent variables from m-alternative forced choice responses. PLoS ONE, 14(11). 10.1371/journal.pone.0225581

- Caspi A, & Zivotofsky AZ (2015). Assessing the utility of visual acuity measures in visual prostheses. Vision Research, 108, 77–84. 10.1016/j.visres.2015.01.006

- Cope J, Arias M, Williams DA, Bahm C, & Ngwazini V (2019). Firefighters’ Strategies for Processing Spatial Information During Emergency Rescue Searches. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Vol. 11420 LNCS, pp. 699–705). Springer Verlag. 10.1007/978-3-030-15742-5_66

- da Cruz L, Dorn JD, Humayun MS, Dagnelie G, Handa J, Barale PO, … Greenberg RJ (2016). Five-Year Safety and Performance Results from the Argus II Retinal Prosthesis System Clinical Trial. Ophthalmology, 123(10), 2248–2254. 10.1016/j.ophtha.2016.06.049

- Dagnelie G (2006). Visual prosthetics 2006: Assessment and expectations. Expert Review of Medical Devices, 3(3), 315–325. 10.1586/17434440.3.3.315

- Dagnelie G (2012). Retinal implants: Emergence of a multidisciplinary field. Current Opinion in Neurology, 25(1), 67–75. 10.1097/WCO.0b013e32834f02c3

- Dagnelie G, Caspi A, Barry MP, Gibson P, Seifert G, & Roy A (2016). Thermal imaging prototype enhances person identification and warm object localization by Argus II wearers. Investigative Ophthalmology and Visual Science, 57(12), 5167. Retrieved from https://iovs.arvojournals.org/article.aspx?articleid=2563307

- Dagnelie G, Christopher P, Arditi A, da Cruz L, Duncan JL, Ho AC, … Greenberg RJ (2017). Performance of real-world functional vision tasks by blind subjects improves after implantation with the Argus® II retinal prosthesis system. Clinical and Experimental Ophthalmology, 45(2), 152–159. 10.1111/ceo.12812

- De Balthasar C, Patel S, Roy A, Freda R, Greenwald S, Horsager A, … Fine I (2008). Factors affecting perceptual thresholds in epiretinal prostheses. Investigative Ophthalmology and Visual Science, 49(6), 2303–2314. 10.1167/iovs.07-0696

- Dhillon A, & Verma GK (2020). Convolutional neural network: a review of models, methodologies and applications to object detection. Progress in Artificial Intelligence, 9, 85–112. 10.1007/s13748-019-00203-0

- Dorn JD, Ahuja AK, Caspi A, da Cruz L, Dagnelie G, Sahel JA, … McMahon MJ (2013). The detection of motion by blind subjects with the epiretinal 60-electrode (Argus II) retinal prosthesis. JAMA Ophthalmology, 131(2), 183–189. 10.1001/2013.jamaophthalmol.221

- Green DM, & Swets JA (1966). Signal Detection Theory and Psychophysics. New York: Wiley. Retrieved from http://andrei.gorea.free.fr/Teaching_fichiers/SDT and Psytchophysics.pdf

- He Y, Sun SY, Roy A, Caspi A, & Montezuma SR (2020). Improved mobility performance with an artificial vision therapy system using a thermal sensor. Journal of Neural Engineering, 17. 10.1088/1741-2552/aba4fb

- Henderson AD, Ramulu PY, & Lawler JF (2019, September 24). Teaching NeuroImages: Thermal imaging in Horner syndrome. Neurology. Lippincott Williams and Wilkins. 10.1212/WNL.0000000000008182

- Humayun MS, de Juan E, & Dagnelie G (2016). The Bionic Eye: A Quarter Century of Retinal Prosthesis Research and Development. Ophthalmology, 123(10), S89–S97. 10.1016/j.ophtha.2016.06.044

- Humayun MS, De Juan E, Dagnelie G, Greenberg RJ, Propst RH, & Phillips DH (1996). Visual perception elicited by electrical stimulation of retina in blind humans. Archives of Ophthalmology, 114(1), 40–46. 10.1001/archopht.1996.01100130038006

- Humayun MS, Dorn JD, Ahuja AK, Caspi A, Filley E, Dagnelie G, … Greenberg RJ (2009). Preliminary 6 month results from the Argus™ II epiretinal prosthesis feasibility study. Proceedings of the 31st Annual International Conference of the IEEE Engineering in Medicine and Biology Society: Engineering the Future of Biomedicine, EMBC 2009, 4566–4568. 10.1109/IEMBS.2009.5332695

- Humayun MS, Weiland JD, Fujii GY, Greenberg R, Williamson R, Little J, … De Juan E (2003). Visual perception in a blind subject with a chronic microelectronic retinal prosthesis. Vision Research, 43(24), 2573–2581. 10.1016/S0042-6989(03)00457-7

- Jones BW, Watt CB, Frederick JM, Baehr W, Chen CK, Levine EM, … Marc RE (2003). Retinal remodeling triggered by photoreceptor degenerations. Journal of Comparative Neurology, 464(1), 1–16. 10.1002/cne.10703

- Konieczka K, Schoetzau A, Koch S, Hauenstein D, & Flammer J (2018). Cornea thermography: Optimal evaluation of the outcome and the resulting reproducibility. Translational Vision Science and Technology, 7(3). 10.1167/tvst.7.3.14

- Kumar Dubey A, & Jain V (2019). A review of face recognition methods using deep learning network. Journal of Information and Optimization Sciences, 40(2), 547–558. 10.1080/02522667.2019.1582875

- Lee IK, Wang CC, Lin MC, Kung CT, Lan KC, & Lee CT (2020, May 1). Effective strategies to prevent coronavirus disease-2019 (COVID-19) outbreak in hospital. Journal of Hospital Infection. W.B. Saunders Ltd. 10.1016/j.jhin.2020.02.022

- Marc RE, Jones BW, Anderson JR, Kinard K, Marshak DW, Wilson JH, … Lucas RJ (2007). Neural reprogramming in retinal degeneration. Investigative Ophthalmology and Visual Science, 48(7), 3364–3371. 10.1167/iovs.07-0032

- Miethig B, Liu A, Habibi S, & Mohrenschildt M. v. (2019). Leveraging Thermal Imaging for Autonomous Driving. In 2019 IEEE Transportation Electrification Conference and Expo (ITEC) (pp. 1–5). IEEE. 10.1109/ITEC.2019.8790493

- Montezuma SR, Sun SY, Roy A, Caspi A, Dorn JD, & He Y (2020). Improved localisation and discrimination of heat emitting household objects with the artificial vision therapy system by integration with thermal sensor. Br J Ophthalmol, 0, 1–5. 10.1136/bjophthalmol-2019-315513

- Ng EYK, Kaw GJL, & Chang WM (2004). Analysis of IR thermal imager for mass blind fever screening. Microvascular Research, 68(2), 104–109. 10.1016/j.mvr.2004.05.003

- Padilla R, Netto SL, & Da Silva EAB (2020). A Survey on Performance Metrics for Object-Detection Algorithms. In International Conference on Systems, Signals, and Image Processing (Vol. 2020-July, pp. 237–242). IEEE Computer Society. 10.1109/IWSSIP48289.2020.9145130

- Palanker D, Vankov A, Huie P, & Baccus S (2005). Design of a high-resolution optoelectronic retinal prosthesis. Journal of Neural Engineering, 2(1). 10.1088/1741-2560/2/1/012

- Santos A, Humayun MS, De Juan E, Greenberg RJ, Marsh MJ, Klock IB, & Milam AH (1997). Preservation of the inner retina in retinitis pigmentosa: A morphometric analysis. Archives of Ophthalmology, 115(4), 511–515. 10.1001/archopht.1997.01100150513011

- Stingl K, Schippert R, Bartz-Schmidt KU, Besch D, Cottriall CL, Edwards TL, … Zrenner E (2017). Interim results of a multicenter trial with the new electronic subretinal implant alpha AMS in 15 patients blind from inherited retinal degenerations. Frontiers in Neuroscience, 11, 445. 10.3389/fnins.2017.00445

- Stout A, Madineni K, Tremblay L, & Tane Z (2019). The development of synthetic thermal image generation tools and training data at FLIR (p. 38). SPIE-Intl Soc Optical Eng. 10.1117/12.2518655

- Stronks CH, & Dagnelie G (2014). The functional performance of the Argus II retinal prosthesis. Expert Review of Medical Devices, 11(1), 23–30. 10.1586/17434440.2014.862494

- Wilson EB (1927). Probable Inference, the Law of Succession, and Statistical Inference. Journal of the American Statistical Association, 22(158), 209–212. 10.2307/2276774