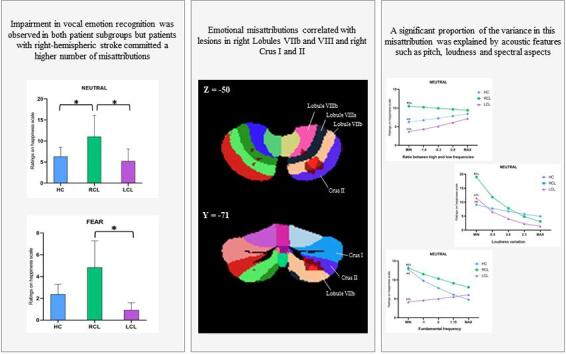

Graphical abstract

Abbreviations: ANOVA, analysis of variance; FDR, false discovery rate; fMRI, functional magnetic resonance imaging; GLMM, generalized linear mixed model; HC, healthy controls; RCL, right cerebellar lesions; LCL, left cerebellar lesions; VLSM, voxel-based lesion–symptom mapping; PEGA, Montreal–Toulouse auditory agnosia battery; MOCA, Montreal Cognitive Assessment; FAB, Frontal Assessment Battery; AES, Apathy Evaluation Scale; SARA, Scale for the Assessment and Rating of Ataxia; BDI-II, Beck Depression Inventory; TAS-20, Toronto Alexithymia Scale; eGeMAPS, extended Geneva Minimalistic Acoustic Parameter Set; MNI, Montreal Neurological Institute; BF, Bayes factor; AIC, Akaike information criterion; BG, basal ganglia; F0, fundamental frequency

Keywords: Cerebellum, Emotional prosody, Acoustics, Stroke

Highlights

- Patients with cerebellar stroke have deficits in emotional prosody recognition.
- Patients with right-hemispheric lesions make more misattributions.
- Emotion deficits correlate with lesions in right Lobules VIIb–VIII and Crus I–II.
- Acoustic features explain a significant proportion of the variance.
- The posterior cerebellar lobe is involved in both sensory and emotional processes.

Abstract

In recent years, there has been increasing evidence of cerebellar involvement in emotion processing. Difficulties in the recognition of emotion from voices (i.e., emotional prosody) have been observed following cerebellar stroke. However, the interplay between sensory and higher-order cognitive dysfunction in these deficits, as well as possible hemispheric specialization for emotional prosody processing, has yet to be elucidated. We investigated the emotional prosody recognition performances of patients with right versus left cerebellar lesions, as well as of matched controls, entering the acoustic features of the stimuli in our statistical model. We also explored the cerebellar lesion-behavior relationship, using voxel-based lesion-symptom mapping. Results revealed impairment of vocal emotion recognition in both patient subgroups, particularly for neutral or negative prosody, with a higher number of misattributions in patients with right-hemispheric stroke. Voxel-based lesion-symptom mapping showed that some emotional misattributions correlated with lesions in the right Lobules VIIb and VIII and right Crus I and II. Furthermore, a significant proportion of the variance in this misattribution was explained by acoustic features such as pitch, loudness, and spectral aspects. These results point to bilateral posterior cerebellar involvement in both the sensory and cognitive processing of emotions.

1. Introduction

Evidence from neuroimaging and clinical studies points to the functional involvement of the cerebellum in vocal emotion recognition (for a review, see (Adamaszek et al., 2017)). The vermian region, Lobule VI and Crus I in the lateral cerebellum have been identified as key regions that interact with the prefrontal cortex and temporal lobes, including the amygdala, via reciprocal connections (Stoodley and Schmahmann, 2009). Some studies among patients with cerebellar lesions have reported impaired recognition for all emotional prosody (Adamaszek et al., 2014), whereas others have failed to find any deficit (Heilman et al., 2014). The lack of agreement in the literature could be due to several factors, including type of task and methodology (for more details, see (Thomasson et al., 2019)). In one previous study, the authors reported more fine-grained results, with patients giving erroneous ratings on the Surprise scale when they listened to fear stimuli. Using voxel-based lesion–symptom mapping (VLSM), they found that these emotional misattributions correlated with lesions in the right cerebellar hemisphere (Lobules VIIb, VIII and IX) (Thomasson et al., 2019), confirming the involvement of the cerebellum in vocal emotion processing, and also pointing to potential cerebellar hemispheric specialization in these processes.

Traditionally, the dentate-rubro-thalamo-cortical tract, identified as a feed-forward cerebellar pathway, is described as being composed of crossed fibers. Connections are mainly or even exclusively contralateral, suggesting a functional hemispheric asymmetry that mirrors that of cerebral function (Keser et al., 2015). Concerning emotion perception, fMRI studies suggest that the left cerebellum plays a critical role in the recognition of others’ facial expressions (Uono, 2017). Based on these results and on the classic models of emotional prosody processing, more activation would be expected in the left cerebellar hemisphere during emotional prosody recognition. However, although fMRI studies investigating emotional prosody processing in healthy individuals have reported cerebellar activation, none of them has postulated that one of the cerebellar hemispheres is more specialized for this processing. Some studies have reported bilateral cerebellar activation (Imaizumi, 1997, Wildgruber et al., 2005), whereas others have found only left (Kotz et al., 2013) or right (Alba-Ferrara et al., 2011) activation. The basal ganglia (BG), which have very strong connections with the cerebellum (Bostan and Strick, 2018), have been far more extensively studied in this domain. It has been suggested that the right BG (Péron et al., 2017, Stirnimann et al., 2018) are specifically involved in emotional prosody recognition. The question of the possible hemispherical specialization of the cerebellum in the recognition of emotional prosody therefore remains unanswered. Another critical question in this field of research concerns the involvement of low-level sensory processes in the processing of emotional prosody by the cerebellum.

Based on internal models theory (for reviews, see (Caligiore et al., 2017, Koziol et al., 2014)) and the idea that both the BG and cerebellum participate in response selection and inhibition (Krakauer and Shadmehr, 2007), Adamaszek et al. (Adamaszek et al., 2019) assumed that the cerebellum identifies and shapes appropriate reactions to a specific sensory state, while the BG compute the cost–benefit analysis and optimum resource allocation. It is key to identify the sensory contributions of both the cerebellum and BG to vocal emotion deficits. According to classic models of emotional prosody processing (Schirmer and Kotz, 2006, Wildgruber et al., 2009) the first step of prosody processing consists of the extraction of suprasegmental acoustic information, predominantly subserved by right-sided primary and higher-order acoustic brain regions (including the middle part of the bilateral superior temporal sulcus and anterior insula). The BG have already been described as playing an important role in the extraction and integration of acoustic cues during emotion perception (Péron et al., 2015). Concerning the cerebellum, studies of individuals with cerebellar disturbances have highlighted its contribution to timing and sensory acquisition, as well as to the prediction of the sensory consequences of a given action (Adamaszek et al., 2019). Increased cerebellar activity has been reported during pitch discrimination tasks (Petacchi et al., 2011). Patients with cerebellar disorder have difficulty comparing the durations of two successive time intervals (Ivry and Keele, 1989). Moreover, according to Pichon and Kell (Pichon and Kell, 2013), the cerebellar vermis modulates fundamental frequency (F0) in emotional speech production. 
A review of the literature on the perception and production of singing and speech (Callan et al., 2007) highlighted left cerebellar hemispheric specialization for the processing of singing and, more particularly, its involvement in the analysis of low-frequency information (prosodic and melodic properties). By contrast, right hemispheric specialization for speech processing points to involvement in the analysis of high-pass filtered information (segmental properties). Interestingly, regarding the idea of cerebellar hemispheric sensitivity to different timescales, a recent study (Stockert et al., 2021) suggested that temporo–cerebellar interactions are involved in the construction of internal auditory models. Reciprocal temporo–cerebellar connections thus contribute to the production of unitary, temporally structured stimulus representations, and these internal representations fit cortical representations of the auditory input, to allow for the efficient integration of distinctive sound features. Thus, the cerebellum, in close collaboration with the BG, participates in the organization of sound processing and temporal predictions (Grandjean, 2021).

However, cerebellar hemispheric specialization for the processing of emotional information conveyed in a speaker's voice has not yet been established, and its contribution to acoustic feature processing during an emotional episode has never been explored. In this context, the purpose of the present study was to investigate i) cerebellar hemispheric specialization for the recognition of emotional prosody, and ii) the influence of acoustic features on this processing. To this end, we assessed the vocal emotion recognition performances of 13 patients with right cerebellar lesions (RCL) and 11 patients with left cerebellar lesions (LCL) due to ischemic stroke, comparing them with a group of 24 age-matched healthy control participants (HC). We used a previously validated methodology that has been shown to be sensitive enough to detect even slight emotional impairments (Péron et al., 2011, Péron et al., 2010). This relies on visual (continuous) analog scales, which do not induce biases, unlike categorization and forced-choice tasks (e.g., naming of emotional faces and emotional prosody). Thus, the results of this study would shed light on the participation of the cerebellum in the different sensory and cognitive processing steps described in current models of emotional prosody (Schirmer and Kotz, 2006, Wildgruber et al., 2009). They might also suggest hemispheric sensitivity to different temporal processing patterns (as already shown at the cortical level). This would strengthen evidence of temporo–cerebellar collaboration in prosody processing through internal models (Stockert et al., 2021).

Regarding behavioral results, and on the basis of prior findings (Thomasson et al., 2019), we expected to observe a greater deficit in the recognition of vocal expressions (for all emotions, but not for neutral stimuli) in the patient group (Adamaszek et al., 2014, Adamaszek et al., 2019, Thomasson et al., 2019) than in HC. We also postulated that patients with RCL would be more severely impaired, as a prior study (Thomasson et al., 2019) had shown that the severity of emotion recognition disturbances is best explained by lesions in the right posterior cerebellar lobe (Lobules VIIb, VIII and IX). Moreover, based on a previous hypothesis formulated by Callan et al. (Callan et al., 2007) concerning the differential roles of the cerebellar hemispheres in speech and song processing, we predicted that the acoustic features of the emotional stimuli would have a differential impact on these emotional prosody disturbances, depending on the lateralization of the cerebellar lesion. We expected patients with LCL to have greater difficulty processing low-frequency information (prosodic, melodic properties), and patients with RCL to have greater difficulty processing high-pass filtered information (segmental properties) (Callan et al., 2007). However, even if acoustic feature processing significantly contributes to changes in emotional prosody recognition following cerebellar stroke, we predicted that any variance we observed would not be sufficient to explain all the emotionally biased results. As the cerebellum is involved in cognitive functions (Schmahmann, 2019), it must also be involved in higher levels (cognitive evaluative judgments) of emotional prosody processing.

2. Materials and methods

2.1. Participants (Table 1)

Table 1.

Clinical, demographic, and neuropsychological data of the two subgroups of patients with cerebellar stroke.

| Patient | Age | Sex | Handedness | Education (years) | Side of lesion | SARA | MOCA | FAB | Cat. fluency | Act. fluency | BDI-II | TAS-20 | AES | Lesion volume (voxels) | Lesion location |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| P1 | 52 | M | Right | 20 | RH | 0 | 21# | 12# | 6# | 3# | 23# | 45 | 1 | 5546 | Lobules IV, V, VI, VIII and Crus I |
| P2 | 55 | M | Right | 22 | RH | – | 23# | – | 16# | 11 | 5 | – | – | 3566 | Lobules III, IV, V, VI, VIII, Crus I and Vermis III |
| P3 | 56 | F | Right | 15 | LH | – | 18# | – | 31 | 14 | 17 | – | – | 652 | Lobules VIII, VIIb and Vermis VIII |
| P4 | 62 | F | Left | 12 | LH | 7.5 | 30 | 13# | 25 | 19 | 15 | 36 | 7 | 22,038 | Lobules III, IV, V, VI, VIIb, VIII, IX, Crus I, II and Vermis I, II, VI, VIII, IX, X |
| P5 | 73 | F | Right | 18 | RH | – | 19# | – | 15# | 5# | 24# | – | – | 25 | Crus I |
| P6 | 61 | F | Left | 9 | RH | 3 | 23# | 13# | 16# | 9 | 23# | 63# | 10 | 6221 | Lobules VIIb, VIII, IX, Crus II and Vermis VIII |
| P7 | 58 | M | Right | 20 | LH | 0 | 30 | 17 | 19# | 17 | 5 | 72# | 0 | 15,103 | Lobules VIIb, VIII, IX, Crus I, II and Vermis IX |
| P8 | 68 | F | Right | 14 | LH | 3.5 | 24# | 14# | 5# | 16 | 19# | 61# | 0 | 3309 | Lobules VI, VIIb, VIII, IX and Crus I, II |
| P9 | 50 | M | Right | 19 | RH | 0 | 26 | 15# | 18# | 19 | 5 | 36 | 0 | 53 | Lobule VIII and Vermis VIII |
| P10 | 50 | F | Right | 19 | RH | 0 | 27 | 18 | 20# | 29 | – | – | 0 | 1254 | Lobules IV, V, VI and Crus I, II |
| P11 | 77 | M | Right | 9 | LH | 6.5 | 22# | 12# | 10# | 11 | 7 | 35 | 6 | 1605 | Lobules VI, VIIb, VIII, IX, Crus I and Vermis IX |
| P12 | 72 | M | Right | 20 | RH | 6 | 28 | 15# | 18 | 20 | 11 | 46 | 2 | 19 | Lobule VIII |
| P13 | 43 | M | Right | 10 | LH | 2 | 22# | 9# | 16# | 14 | 19 | 69 | 13 | 6396 | Lobule VIIb, VIII and Crus I, II |
| P14 | 73 | M | Right | 10 | RH | 2 | 23# | 17 | 10# | 4# | 17 | 84 | 1 | 35 | Lobule VI and Crus I |
| P15 | 74 | F | Right | 13 | RH | 1 | 26 | 18 | 19 | 13 | 8 | 61# | 0 | 38,489 | Lobules VI, VIIb, VIII, IX, X, Crus I, II and Vermis IV, V, VI, VII, VIII, IX, X |
| P16 | 66 | F | Right | 19 | LH | 1 | 27 | 16 | 27 | 22 | 2 | 40 | 0 | 351 | Lobules IV, V, VI, VIII |
| P17 | 53 | M | Right | 19 | RH | 0 | 26 | 17 | 31 | 21 | 12 | 45 | 1 | 23,290 | Lobules VI, VIIb, VIII, XI, X and Crus I, II |
| P18 | 85 | M | Right | 12 | RH | 5 | 19# | 11# | 14 | 7 | 10 | 66# | 2 | 239 | Lobules VIIb, VIII and Crus I, II |
| P19 | 76 | F | Right | 9 | LH | 1 | 24# | 14# | 18 | 14 | 10 | 52 | 0 | 13 | Lobules IV, V, VI |
| P20 | 69 | M | Right | 7 | LH | 0 | 27 | 14# | 15 | 7 | 5 | 34 | 9 | 31 | Lobule VIII |
| P21 | 52 | M | Right | 9 | RH | 0 | 25# | 13# | 9# | 7 | 10 | 43 | 0 | 692 | Lobules VIIb, VIII and Crus II |
| P22 | 58 | M | Right | 12 | LH | 0.5 | 27 | 16 | 14# | 9 | 21# | 84# | 19# | 4206 | Lobules III, IV, V, VI and Crus I |
| P23 | 48 | F | Right | 15 | RH | 0 | 25# | 18 | 33 | 22 | 14 | 34 | 0 | 3064 | Lobules VI, VIIb, VIII and Crus I, II |
| P24 | 53 | F | Right | 12 | LH | 0 | 22# | 17 | 20 | 16 | 10 | 59 | 3 | 6520 | Lobules VIIb, VIII, IX and Crus I, II |

Note. Dashes indicate missing values. Act. fluency: action verb fluency task; AES: Apathy Evaluation Scale; BDI-II: Beck Depression Inventory; Cat. fluency: categorical fluency task; FAB: Frontal Assessment Battery; MOCA: Montreal Cognitive Assessment; PEGA: Montreal–Toulouse auditory agnosia battery; SARA: Scale for the Assessment and Rating of Ataxia; TAS-20: Toronto Alexithymia Scale. # scores below the clinical threshold.

One group of 24 patients with first-ever cerebellar ischemic stroke (>3 months prior to enrolment, corresponding to chronic post-stroke phase) and one group of 24 HC took part in the study. The data of 12 of the 24 patients had already been acquired in a previous study (Thomasson et al., 2019). The patient group was divided into two subgroups: 13 patients with RCL, and 11 patients with LCL. The mean age of the RCL group was 61.4 years (SD = 12.33, range = 48–85), and the mean age of the LCL group was 62.4 years (SD = 10.15, range = 43–77). According to the criteria of the Edinburgh Handedness Inventory (Oldfield, 1971), 22 patients were right-handed and two were left-handed. The mean education level was 16 years (SD = 4.67, range = 9–22) for the RCL group, and 12.6 years (SD = 4.10, range = 7–20) for the LCL group. The two groups of patients were matched for sex (z = 0.64, p = 0.52), age (z = 0.61, p = 0.54), education level (z = -1.53, p = 0.12), and handedness (z = 0.03, p = 0.98). All patients were French speakers. Mean time since stroke was 29.2 months (SD = 34.16, range = 3–155). Exclusion criteria were 1) brainstem or occipital lesion (factor influencing clinical signs), 2) one or more other brain lesions, 3) diffuse and extensive white-matter disease, 4) other degenerative or inflammatory brain disease, 5) confusion or dementia, 6) major psychiatric disease, 7) the wearing of hearing aids or a history of tinnitus or a hearing impairment, as attested by the Montreal–Toulouse auditory agnosia battery (PEGA (Agniel et al., 1992)) (mean total score = 28.4, SD = 2.0, range = 24–30), 8) age below 18 years, and 9) major language comprehension deficits precluding reliable testing. All the tasks described below were designed to be highly feasible even for patients in clinical settings.

The participants in the HC group had no history of neurological disorders, head trauma, anoxia, stroke or major cognitive deterioration, as attested by their score on either the Mattis Dementia Rating Scale (Mattis, 1988) (mean score = 142, SD = 1.5, range = 139–144) or the French version of the modified telephone interview for cognitive status (Lacoste and Trivalle, 2009) (mean score = 36.6, SD = 5.0, range = 34–43). They were all French speakers with a mean age of 60.4 years (SD = 8.9, range = 41–80). According to the Edinburgh Handedness Inventory criteria (Oldfield, 1971), all HC were right-handed, except for one who was left-handed. Their mean education level was 13.6 years (SD = 2.57, range = 9–18). As with the patient group, none of the HC wore hearing aids or had a history of tinnitus or a hearing impairment, as attested either by their PEGA score (mean = 27.75, SD = 1.3, range = 27–29) or by the results of a standard audiometric screening procedure (AT-II-B audiometric test) to measure tonal and vocal sensitivity.

All participants gave their written informed consent, and the study was approved by the local ethics committee. The three groups were comparable for age (χ² = 0.70, p = 0.70) and education level (χ² = 2.87, p = 0.24) (Table 2).

Table 2.

Statistical results of group (LCL, RCL and HC) comparisons for clinical, demographic, and neuropsychological data.

| | LCL subgroup (n = 11): Mean | LCL subgroup (n = 11): ± SD | RCL subgroup (n = 13): Mean | RCL subgroup (n = 13): ± SD | HC (n = 24): Mean | HC (n = 24): ± SD | Stat. val. | p value |
|---|---|---|---|---|---|---|---|---|
| Age | 62.36 | 10.15 | 61.38 | 12.33 | 60.37 | 8.91 | 0.39 | 0.82 |
| Education | 12.64 | 4.10 | 16.00 | 4.67 | 13.58 | 2.57 | 4.70 | 0.09 |
| MOCA | 24.82 | 3.74 | 23.92 | 2.90 | | | −0.55 | 0.58 |
| Verbal fluency: Categorical | 18.18 | 7.52 | 17.31 | 7.72 | | | −0.35 | 0.73 |
| Verbal fluency: Action | 14.45 | 4.32 | 13.08 | 8.28 | | | −0.69 | 0.49 |
| FAB | 14.20 | 2.48 | 15.50 | 2.50 | | | 1.17 | 0.24 |
| AES | 5.70 | 6.50 | 1.60 | 3.06 | | | −1.17 | 0.24 |
| PEGA | 28.20 | 2.20 | 28.60 | 1.90 | | | 0.23 | 0.82 |
| SARA | 2.20 | 2.76 | 1.70 | 2.26 | | | −0.57 | 0.57 |
| BDI-II | 11.82 | 6.66 | 13.50 | 6.81 | | | 0.61 | 0.54 |
| TAS-20 | 54.20 | 17.66 | 53.11 | 16.42 | | | 0.04 | 0.97 |

Note. Stat. val.: statistical value; MOCA: Montreal Cognitive Assessment; FAB: Frontal Assessment Battery; AES: Apathy Evaluation Scale; PEGA: Montreal–Toulouse auditory agnosia battery; SARA: Scale for the Assessment and Rating of Ataxia; BDI-II: Beck Depression Inventory; TAS-20: Toronto Alexithymia Scale.

2.2. Experimental protocol

Before patients performed the emotional prosody task, a motor assessment was carried out by a board-certified neurologist (FA) to quantify their cerebellar ataxia (Scale for the Assessment and Rating of Ataxia (Schmitz-Hubsch et al., 2006)).

Neuropsychological and psychiatric tests were then administered to patients by either board-certified neuropsychologists (JP or AS) or a trainee neuropsychologist (MT or PV) supervised by the board-certified neuropsychologists. We administered the Montreal Cognitive Assessment (MOCA (Nasreddine and Patel, 2016)) and a series of tests assessing executive functions: the Frontal Assessment Battery (FAB (Dubois et al., 2000)), categorical and literal fluency tasks (Cardebat et al., 1990), and an action verb fluency task (Woods et al., 2005).

Depression was assessed with the Beck Depression Inventory (BDI-II (Steer et al., 2001)) and alexithymia with the Toronto Alexithymia Scale (TAS-20 (Bagby et al., 1994)). As apathy symptoms are commonly found in patients presenting with cerebellar stroke (Villanueva, 2012), we administered the Apathy Evaluation Scale (AES (Marin et al., 1991)).

Finally, participants performed the emotional prosody recognition task. The testing took around 90 min. To prevent the session from lasting too long and avoid the effects of fatigue, the self-report questionnaires (BDI-II and TAS-20) were given to participants at the end of the session for them to fill in at home.

2.3. Vocal emotion recognition task

We used the same task as in a previous study (Thomasson et al., 2019). This task evaluating the recognition of emotional prosody has already been used with other clinical populations, such as patients with depression (Péron et al., 2011) or Parkinson's disease (Péron et al., 2010). Participants listen to meaningless speech (60 pseudowords) expressed in five different emotional prosodies (anger, fear, happiness, neutral, and sadness), played bilaterally through stereo headphones. For each pseudoword, they have to indicate the extent to which it expresses different emotions, by moving a cursor along a visual analog scale ranging from No emotion expressed to Emotion expressed with exceptional intensity. There is a scale for each emotion (happiness, anger, fear, and sadness), a neutral scale, and a scale to rate the surprise emotion (for more details concerning this task, see (Thomasson et al., 2019)).

2.3.1. Extraction of acoustic features from the original stimuli

We extracted several relevant acoustic features from the original stimuli, using the openSMILE toolkit (Eyben et al., 2013), in order to study the proportions of variance they explained. The extended Geneva Minimalistic Acoustic Parameter Set (eGeMAPS) described by Eyben et al. (Eyben et al., 2016) allowed us to obtain 88 features, divided into four groups: frequency-related features, energy-related features, spectral features, and temporal features (see SI 1 for the full list of acoustic features and their corresponding categories).

2.4. Neuroradiological assessment

The brain images were acquired in a 1.5 T MRI scanner when each patient was admitted to hospital. All the lesions were mapped on diffusion-weighted (22 patients) or CT (2 patients) brain scans, using the Clusterize-toolbox (http://www.medezin.uni-tuebingen.de/kinder/en/research/neuroimaging/software/). The resulting lesion map was then normalized to the Montreal Neurological Institute (MNI) single-subject template, with a resolution of 1 × 1 × 1 mm voxel size, using SPM12 software (http://www.fil.ion.ucl.ac.uk/spm/). In particular, we applied a deformation field estimated from a registered T2 (22 patients) or CT brain scan (2 patients) to each map. The mean time between stroke and image acquisition was 1.82 days (SE = 1.84, range = 0.5).

2.5. Statistical analysis

2.5.1. Behavioral data analysis

Concerning vocal emotion recognition data, the exploratory analysis showed that the data were not normally distributed (abnormally high proportion of zeros). Consequently, we performed two levels of analysis, adopting a generalized linear mixed model (GLMM) approach. First, we compared the performances of the two patient subgroups (RCL and LCL) with that of the HC group. To do so, we ran a GLMM with a compound Poisson–Tweedie distribution, with emotion (5 levels) and scale (6 levels) as within-participants variables, group (HC, RCL and LCL) as the between-participants variable, and participant as the random factor. The model following the Tweedie distribution fitted the data better than the model following a Gaussian distribution (Akaike information criterion; Tweedie: 93940; Gaussian: 160760). Second, we ran contrasts between the groups for each prosodic category and each scale, based on the GLMM model using the phia package in R. This type of statistical model allows for the control of random effects such as inter-individual variability, in addition to fixed effects. Each p value yielded by the contrasts was false discovery rate (FDR) corrected. However, as Tweedie analysis can be oversensitive to spurious effects, we performed a control analysis using the BayesFactor package. Bayesian t tests were performed between the groups, and significant Tweedie effects were selected if the Bayes factor (BF) exceeded 3. Although GLMM analysis provides an accurate estimation of effects of interest over confounding variables, the frequentist approach it employs does not allow the null hypothesis (H0) to be tested. Nor does it provide any information about the strength of the observed effects. Thus, when this approach yields negative results, there is no way of determining whether these results stem from a lack of power and are indecisive, or whether they indicate the absence of an effect of the variable (i.e., indication of H0 validity). 
Furthermore, this approach is very sensitive to multiple comparisons error, and the methods used to resolve this issue are often so conservative that they can erase weak but real effects. It was to overcome these obstacles that we decided to adopt a Bayesian approach. Implemented using the BayesFactor package (Morey and Rouder, 2011) or JASP software, this approach computes an index of the relative Bayesian likelihood of an alternative (H1) model compared with the H0 model. Consequently, the BF computed by this method allows H0 to be assessed. A BF > 3 is substantial proof of H1, while a BF < 1/3 is substantial proof of H0. Furthermore, this approach allows the strength of the proof to be directly assessed for either H1 or H0 (strong proof corresponds to a BF within the [10–30] or [1/10–1/30] boundaries, while extreme proof corresponds to a BF > 100 or < 1/100). Finally, the comparative nature of the Bayesian GLMM renders it unsusceptible to multiple comparisons. We performed contrast analysis using the Bayesian t test in JASP software. A positive result was therefore considered to be significant when p < 0.05 in the classical GLMM analysis and the BF exceeded 3 in the Bayesian analysis. A negative result was considered to be significant when p > 0.05 in the classical GLMM analysis and the BF was below 1/3 in the Bayesian analysis. Any other scenario was deemed inconclusive.
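The combined frequentist and Bayesian criterion described above can be illustrated with a minimal Python sketch. It is not the authors' code: the function and class names are hypothetical, the Bayes factor is taken to be BF10 (evidence for H1 over H0), and the negative case is read here as a nonsignificant p value together with BF < 1/3, which is an assumption.

```python
from enum import Enum


class Verdict(Enum):
    POSITIVE = "positive"          # evidence for an effect (H1)
    NEGATIVE = "negative"          # evidence for the absence of an effect (H0)
    INCONCLUSIVE = "inconclusive"  # the two analyses do not agree


def classify_contrast(p_fdr: float, bf10: float, alpha: float = 0.05) -> Verdict:
    """Combine an FDR-corrected p value with a Bayes factor (H1 over H0)."""
    if p_fdr < alpha and bf10 > 3:
        return Verdict.POSITIVE
    # Assumption: a negative result pairs a nonsignificant p with BF10 < 1/3.
    if p_fdr >= alpha and bf10 < 1 / 3:
        return Verdict.NEGATIVE
    return Verdict.INCONCLUSIVE


if __name__ == "__main__":
    # Hypothetical contrasts, for illustration only.
    for p, bf in [(0.01, 5.0), (0.60, 0.2), (0.01, 1.5)]:
        print(p, bf, classify_contrast(p, bf).value)
```

Requiring agreement between the two analyses means that a contrast with a small p value but a weak Bayes factor is not reported as an effect.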

Moreover, we looked for correlations between the clinical and emotional data for the patient group using Spearman’s rank test as the distribution of the data was not normal. To avoid Type-I errors, we only included emotional variables that differed significantly between patients and HC, or between the two patient subgroups in the analyses.

Concerning the acoustic data we extracted, we first performed a principal component analysis and retained factors with an eigenvalue > 5. In the scree plot, we observed a strong decrease in variance contribution beyond the fifth factor, so we chose to retain five factors explaining 55.9% of the variance. After varimax rotation, we examined the factor loadings, and retained items that satisfied the following criterion: loading equal to or above 0.8 on the factor.
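The retention rules in this paragraph (eigenvalue cutoff, proportion of variance explained, loading threshold) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the eigenvalues and loadings below are invented, the helper names are hypothetical, and the PCA and varimax rotation themselves are assumed to have been run elsewhere.

```python
def retained_components(eigenvalues, min_eigenvalue):
    """Return indices of components whose eigenvalue exceeds the cutoff."""
    return [i for i, ev in enumerate(eigenvalues) if ev > min_eigenvalue]


def explained_variance(eigenvalues, indices):
    """Proportion of the total variance carried by the selected components."""
    return sum(eigenvalues[i] for i in indices) / sum(eigenvalues)


def salient_items(loadings, threshold=0.8):
    """Map each factor index to the features loading at or above the threshold.

    `loadings` maps a feature name to its rotated loadings, one per factor.
    """
    n_factors = len(next(iter(loadings.values())))
    return {
        f: [name for name, row in loadings.items() if row[f] >= threshold]
        for f in range(n_factors)
    }


if __name__ == "__main__":
    # Invented eigenvalues (not the study's values).
    eigenvalues = [20.0, 10.0, 8.0, 6.0, 5.5, 3.0, 1.5]
    kept = retained_components(eigenvalues, min_eigenvalue=5)
    print(kept, round(explained_variance(eigenvalues, kept), 3))

    # Invented rotated loadings for three features on two factors.
    loadings = {
        "F0_mean": [0.90, 0.10],
        "loudness_mean": [0.20, 0.85],
        "jitter": [0.50, 0.40],
    }
    print(salient_items(loadings))
```

When the features are standardized before the analysis, the eigenvalues sum to the number of features, so the explained proportion is simply the retained eigenvalues divided by that count.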

Second, we added the factorial score (of the five selected factors) as a covariate in our previous GLMM model, and performed contrast analyses (with FDR correction for each p value). Therefore, if the factor entered as a covariate explained a larger proportion of the variance for a given emotion and scale than the group difference, the previously significant results would become nonsignificant. Finally, to test each factor’s contribution to the effects of interest, we calculated a model with the four-way interaction (three within-participants variables: emotion (5 levels), scale (6 levels) and factor; and one between-participants variable: group (HC, RCL and LCL)), as well as contrasts based on this GLMM model (with FDR correction for each p value).
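As an illustration of the FDR correction applied to the contrast p values, the following Python sketch implements the Benjamini-Hochberg step-up adjustment. The text does not state which FDR procedure was used, so Benjamini-Hochberg is an assumption here, and the p values in the example are invented.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


if __name__ == "__main__":
    raw = [0.01, 0.04, 0.03, 0.005]  # invented contrast p values
    print([round(p, 4) for p in benjamini_hochberg(raw)])
```

A contrast would then be retained when its adjusted p value falls below the chosen alpha level.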

2.5.2. Cerebellar lesion–behavior relationship analysis

To evaluate the statistical relationship between lesion location and behavioral scores, we ran a VLSM analysis on the group level, using the t test statistic, with the absolute value of behavioral scores as a continuous, dependent variable. The behavioral scores were calculated by subtracting the mean of the control group. This algorithm distinguished individuals who had a lesion in a particular voxel from those who did not. We ran a t test on the dependent variable (i.e., ratings that differed significantly between the RCL and LCL groups) between the participants with a lesion versus those without. This procedure was repeated for every voxel (i.e., for each voxel, participants were arranged in a different constellation for the t test, depending on whether or not there was a lesion in that particular voxel). All the patients (RCL and LCL) were included in the same analysis. We decided not to flip the left lesions to the right in this analysis, in order to retain the ability to discern potential lateralization effects. Areas (minimum cluster size > 5 voxels) showing significant correlations with behavioral scores were identified using the FDR-corrected threshold of p = 0.001. The resulting statistics were mapped onto MNI standardized brain templates and color coded.
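The voxel-wise logic of this analysis can be sketched in plain Python. This is a toy illustration rather than the VLSM software used in the study: lesion maps are flattened to lists of 0/1 flags, the function names are hypothetical, a pooled-variance Student's t statistic is assumed (the text only specifies a t test), and the minimum group size per voxel is an arbitrary choice.

```python
from math import sqrt
from statistics import mean, variance


def deviation_scores(patient_scores, control_scores):
    """Absolute deviation of each patient score from the control-group mean."""
    hc_mean = mean(control_scores)
    return [abs(s - hc_mean) for s in patient_scores]


def pooled_t(group_a, group_b):
    """Student's two-sample t statistic; None if the pooled variance is zero."""
    na, nb = len(group_a), len(group_b)
    pooled = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    if pooled == 0:
        return None
    return (mean(group_a) - mean(group_b)) / sqrt(pooled * (1 / na + 1 / nb))


def vlsm_t_map(lesion_masks, scores, min_group=2):
    """Compute one t value per voxel (lesioned versus spared patients).

    lesion_masks: one list of 0/1 flags per patient, same voxel order for all.
    scores: one behavioral score per patient.
    Voxels where either group has fewer than `min_group` patients yield None.
    """
    t_map = []
    for v in range(len(lesion_masks[0])):
        lesioned = [s for mask, s in zip(lesion_masks, scores) if mask[v]]
        spared = [s for mask, s in zip(lesion_masks, scores) if not mask[v]]
        if len(lesioned) < min_group or len(spared) < min_group:
            t_map.append(None)
        else:
            t_map.append(pooled_t(lesioned, spared))
    return t_map


if __name__ == "__main__":
    # Four invented patients, two voxels.
    masks = [[1, 0], [1, 0], [0, 0], [0, 1]]
    scores = [4.0, 6.0, 1.0, 3.0]
    print(vlsm_t_map(masks, scores))
```

In a full analysis, the resulting per-voxel statistics would still need to be thresholded (FDR correction and minimum cluster size) before being mapped onto a template.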

3. Results

3.1. Clinical examination

Comparisons of demographic and clinical screening scores between the HC and each of the cerebellar stroke subgroups failed to reveal any significant differences (p > 0.05) (Table 2).

3.2. Vocal emotion recognition (Table 3)

Table 3.

Mean ratings and 95% confidence intervals (CI95%) on emotion scales in the emotional prosody recognition task for the LCL, RCL and HC groups.

| Emotion | Happiness scale: Mean | Happiness scale: CI95% | Fear scale: Mean | Fear scale: CI95% | Sadness scale: Mean | Sadness scale: CI95% | Anger scale: Mean | Anger scale: CI95% | Neutral scale: Mean | Neutral scale: CI95% | Surprise scale: Mean | Surprise scale: CI95% |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LCL group (n = 11) | | | | | | | | | | | | |
| Anger | 2.13 | 1.3; 3.5 | 6.20a | 4.0; 9.6 | 3.27 | 2.0; 5.2 | 44.81 | 31.3; 64.1 | 5.97 | 3.9; 9.3 | 10.04 | 6.6; 15.2 |
| Fear | 0.92b | 0.5; 1.6 | 38.08 | 26.5; 54.7 | 9.99 | 6.6; 15.1 | 10.41 | 6.9; 15.7 | 3.60 | 2.3; 5.7 | 13.60 | 9.1; 20.3 |
| Happiness | 30.44b | 21.0; 44.1 | 8.97 | 5.9; 13.6 | 8.52 | 5.6; 13.0 | 9.74 | 6.4; 14.8 | 2.95 | 1.8; 4.8 | 12.41 | 8.3; 18.6 |
| Neutral | 5.20b | 3.3; 8.1 | 2.10 | 1.3; 3.5 | 3.17 | 2.3; 4.4 | 1.30 | 0.8; 2.2 | 29.16 | 20.1; 42.3 | 15.04 | 10.1; 22.4 |
| Sadness | 1.80 | 1.1; 3.0 | 12.36 | 8.2; 18.5 | 39.37 | 27.4; 56.7 | 2.02 | 1.2; 3.3 | 15.08 | 10.1; 22.4 | 4.44 | 2.8; 7.0 |
| RCL group (n = 13) | | | | | | | | | | | | |
| Anger | 3.05 | 2.0; 4.7 | 5.13 | 3.4; 7.7 | 2.23 | 1.4; 3.5 | 49.70 | 35.8; 68.9 | 4.65 | 3.1; 7.0 | 14.94 | 10.4; 21.5 |
| Fear | 4.83c | 3.2; 7.3 | 39.38 | 28.2; 55.0 | 12.53 | 8.6; 18.2 | 10.48 | 7.2; 15.3 | 3.48 | 2.3; 5.4 | 22.06 | 15.5; 31.4 |
| Happiness | 40.60 | 29.1; 56.7 | 9.85 | 6.7; 14.4 | 8.40 | 5.7; 12.4 | 9.62 | 6.6; 14.1 | 2.84 | 1.8; 4.4 | 18.59 | 13.0; 26.6 |
| Neutral | 11.01a,c | 7.5; 16.1 | 2.10a | 1.03; 3.3 | 3.13 | 2.0; 4.8 | 1.31 | 0.8; 2.1 | 37.78 | 27.0; 52.8 | 15.25 | 10.6; 22.0 |
| Sadness | 1.62 | 1.0; 2.6 | 12.63 | 8.7; 18.3 | 35.60 | 25.4; 49.9 | 1.61 | 1.0; 2.6 | 18.82 | 13.2; 26.9 | 7.78a | 5.3; 11.5 |
| HC (n = 24) | | | | | | | | | | | | |
| Anger | 1.35 | 0.9; 1.9 | 3.28c | 2.4; 4.5 | 2.00 | 1.4; 2.8 | 52.03 | 41.1; 65.9 | 5.43 | 4.0; 7.3 | 12.03 | 9.2; 15.8 |
| Fear | 2.36 | 1.7; 3.3 | 38.59 | 30.3; 49.2 | 15.40 | 11.8; 20.1 | 9.15 | 6.9; 12.1 | 3.71 | 2.7; 5.1 | 15.23 | 11.7; 19.9 |
| Happiness | 30.59 | 23.9; 39.2 | 7.03 | 5.3; 9.4 | 10.89 | 8.3; 14.4 | 8.98 | 6.8; 11.9 | 2.74 | 2.0; 3.8 | 18.61 | 14.3; 24.2 |
| Neutral | 6.32b | 4.7; 8.5 | 0.94b | 0.7; 1.4 | 3.17 | 2.3; 4.4 | 0.62 | 0.4; 0.9 | 42.42 | 33.3; 54.0 | 14.00 | 10.7; 18.3 |
| Sadness | 1.21 | 0.8; 1.7 | 8.57 | 6.5; 11.4 | 38.31 | 30.0; 48.9 | 1.10 | 0.8; 1.6 | 24.69 | 19.2; 31.8 | 3.19b | 2.3; 4.4 |

a Significantly different from the HC group (p < 0.05, FDR-corrected); b significantly different from the RCL group (p < 0.05, FDR-corrected); c significantly different from the LCL group (p < 0.05, FDR-corrected).

We found a significant Group × Emotion × Scale three-way interaction, showing that group influenced the recognition of emotional prosody, χ2 (40) = 73.83, p < 0.0001.

Other main and interaction effects were as follows: emotion: χ2 (4) = 45.32, p < 0.0001; scale: χ2 (5) = 93.29, p < 0.0001; group: χ2 (2) = 1.38, p = 0.50; Group × Emotion: χ2 (8) = 2.73, p = 0.95; Group × Scale: χ2 (10) = 34.71, p < 0.001; and Emotion × Scale: χ2 (20) = 3875.9, p < 0.0001.

We performed contrasts for each vocal emotion and each rating scale, with FDR-corrected p values, and checked them with Bayesian t tests. We obtained the following results:

Anger. Anger stimuli on the Fear scale: contrasts revealed a significant difference between the LCL and HC groups, z(17186) = -2.32, p = 0.03 (BF = 5.38).

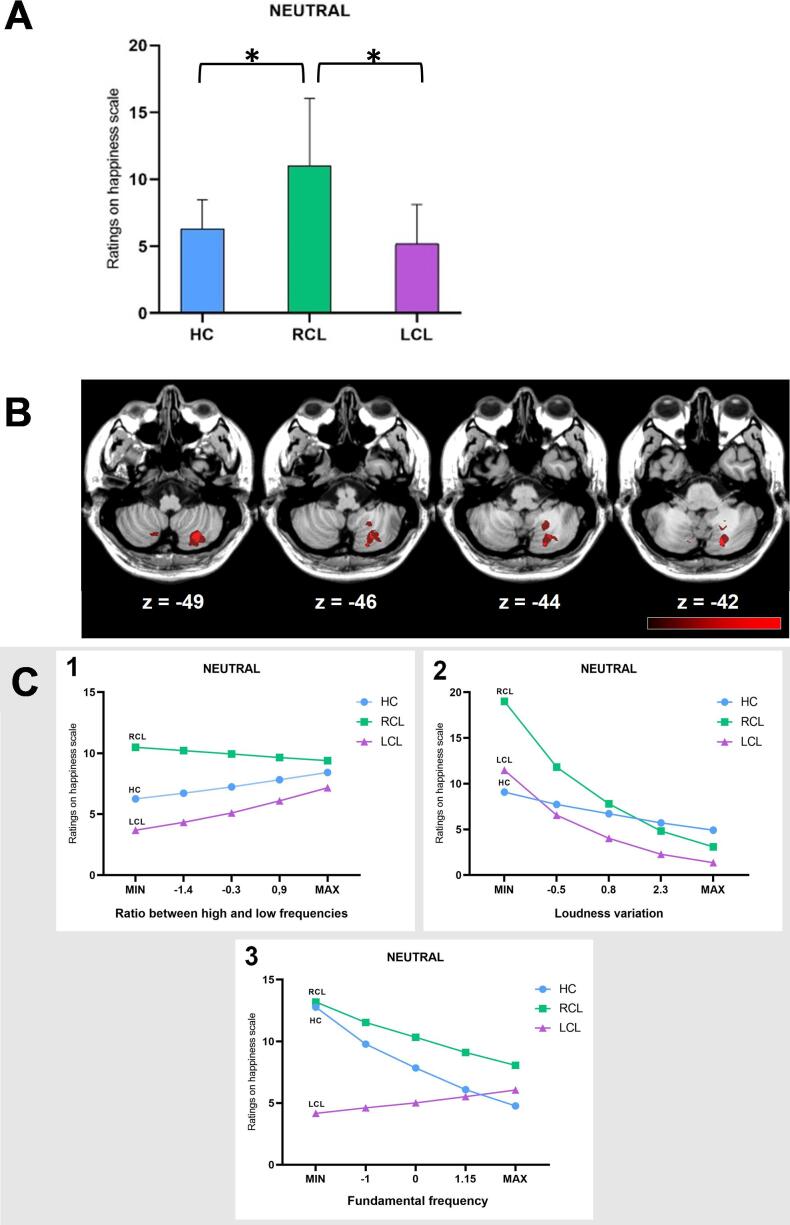

Neutral. Neutral stimuli on the Happiness scale: contrasts revealed a significant difference between the RCL and HC groups, z(17186) = -2.27, p = 0.04 (BF = 20.361), and between the RCL and LCL subgroups, z(17186) = -2.50, p = 0.02 (BF = 4.45) (Fig. 1A).

Fig. 1.

(A) Mean ratings for neutral prosody on the Happiness scale for HC (blue), RCL (green), and LCL (purple) groups. (B) VLSM results. Misattributions of happiness to neutral stimuli correlated with lesions in right Lobules VIIb and VIII, and right Crus I and II (peak coordinates: x = 20, y = -75, z = -42). The colors indicate t test values, ranging from black (nonsignificant) to red (maximum significance). The lesion–symptom maps were projected onto the ch2bet template of MRIcron®. (C) Differential impact of acoustic features on vocal emotion recognition between the HC, RCL and LCL groups. 1) Differential impact of the ratio between high and low frequencies (spectral features) on the Happiness scale when the stimulus was neutral between the HC (blue), RCL (green), and LCL (purple) groups. 2) Differential impact of loudness variation on the Happiness scale when the stimulus was neutral between the HC (blue), RCL (green), and LCL (purple) groups. 3) Differential impact of F0 on the Happiness scale when the stimulus was neutral between the HC (blue), RCL (green), and LCL (purple) groups. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Neutral stimuli on the Fear scale: contrasts revealed a significant difference between the RCL and HC groups, z(17186) = -2.66, p = 0.01 (BF = 3.29).

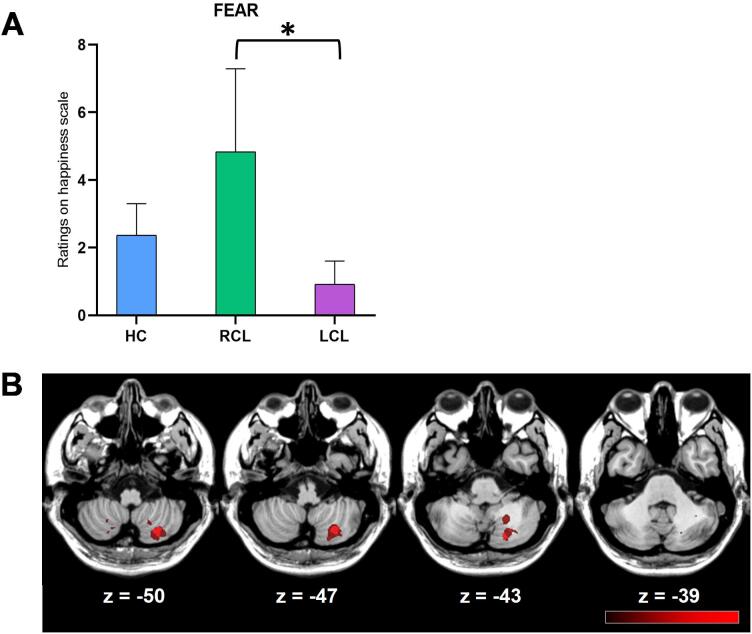

Fear. Fear stimuli on the Happiness scale: contrasts revealed a significant difference between the RCL and LCL subgroups, z(17186) = 4.66, p < 0.001 (BF = 5.91) (Fig. 2A).

Fig. 2.

(A) Mean ratings for fear prosody on the Happiness scale for HC (blue), RCL (green), and LCL (purple) groups. (B) VLSM results. Misattributions of happiness to fearful stimuli correlated with lesions in the right Lobules VIIb and VIII, and right Crus I and II (peak coordinates: x = 28, y = -67, z = -48). The colors indicate t test values, ranging from black (nonsignificant) to red (maximum significance). The lesion–symptom maps were projected onto the ch2bet template of MRIcron®. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Sadness. Sadness stimuli on the Surprise scale: contrasts revealed a significant difference between the RCL and HC groups, z(17186) = -3.46, p < 0.001 (BF = 20.43).

3.3. Impact of clinical characteristics on vocal emotion recognition

We found a significant correlation between ratings on the Happiness scale for neutral prosody and scores on the AES (Marin et al., 1991) (r = -0.54, p = 0.01). We also found a significant correlation between ratings on the Surprise scale for sadness prosody and the categorical fluency scores (r = -0.49, p = 0.01). All other correlations between clinical and emotional variables were nonsignificant (p > 0.05). In addition, no significant correlation was found between time since stroke and emotional variables. We entered the volume of the lesion in our statistical model to see if this variable affected the emotion ratings, taking relevant factors and interactions of interest into account. Results showed that lesion volume did not significantly affect ratings (χ2 = 0.004, p = 0.95). To follow up on the two significant correlations, we calculated the Akaike information criterion (AIC) and Bayesian information criterion (BIC) to see whether the models containing the categorical fluency score or AES score variables fitted the data better than the model that did not contain them. The lower the AIC or BIC value, the better the fit. Without the categorical fluency scores, the AIC was 46841.8 and the BIC was 47286.9. With the categorical fluency scores, the AIC was 46843.7 and the BIC was 47295.8. Accordingly, the model fitted the data better when the categorical fluency scores were not included in it. The lack of verbal self-activation for the categorical fluency task did not, therefore, explain participants’ judgments in the emotional prosody recognition task. However, with the AES scores, the AIC was 39247.6 and the BIC was 39688.2, suggesting that the model fitted the data better when AES scores were included in it.
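The model comparison above can be restated as a short calculation. The sketch below gives the standard definitions of the two criteria and applies a hypothetical helper, `criterion_change`, to the values reported in this section. It assumes that the model without the categorical fluency scores also serves as the reference for the AES comparison, which the text does not state explicitly.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike information criterion: 2k - 2 ln(L)."""
    return 2 * n_params - 2 * log_likelihood


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion: k ln(n) - 2 ln(L)."""
    return n_params * math.log(n_obs) - 2 * log_likelihood


def criterion_change(reference, candidate):
    """Candidate minus reference for each criterion (negative = better fit)."""
    return {name: round(candidate[name] - reference[name], 1) for name in reference}


# Values reported in the text; the shared reference model is an assumption.
without_fluency = {"AIC": 46841.8, "BIC": 47286.9}
with_fluency = {"AIC": 46843.7, "BIC": 47295.8}
with_aes = {"AIC": 39247.6, "BIC": 39688.2}

fluency_change = criterion_change(without_fluency, with_fluency)
aes_change = criterion_change(without_fluency, with_aes)
```

Under these assumptions, adding the categorical fluency scores raises both criteria (by 1.9 and 8.9 points), whereas the model with AES scores has lower values on both.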

3.4. Impact of acoustic features on vocal emotion recognition

Following a principal component analysis conducted on the 88 acoustic features extracted, analysis of the loading (saturation) matrix after varimax rotation (loading cut-off applied = 0.80) allowed us to interpret the five extracted factors and identify a specific group of acoustic cues for each of them. Factors 1, 2 and 5 were related to spectral features, while Factor 3 was related to loudness features, and Factor 4 to F0. Factor 1 could be expressed as the ratio between high and low frequencies, Factor 3 as the variation in loudness, and Factor 4 as mean F0.
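The factor extraction step can be illustrated with a minimal sketch. It assumes that the loadings are taken from the correlation matrix of the features and rotated with a standard iterative varimax algorithm; the function names (`pca_loadings`, `varimax`, `salient_features`) and the random stand-in data are hypothetical and do not reproduce the 88 eGeMAPS features or the five factors analyzed in the study.

```python
import numpy as np


def pca_loadings(data, n_components):
    """Unrotated loadings: eigenvectors of the correlation matrix, scaled."""
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)
    top = np.argsort(eigvals)[::-1][:n_components]  # largest eigenvalues first
    return eigvecs[:, top] * np.sqrt(eigvals[top])


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation of a (features x factors) loading matrix."""
    n_features, n_factors = loadings.shape
    rotation = np.eye(n_factors)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated**3 - rotated @ np.diag((rotated**2).sum(axis=0)) / n_features
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt  # closest orthogonal matrix to the gradient step
        if s.sum() < criterion * (1 + tol):
            break
        criterion = s.sum()
    return loadings @ rotation


def salient_features(rotated, names, cutoff=0.80):
    """Features whose absolute loading on a factor reaches the cut-off."""
    return {
        f"Factor {j + 1}": [n for n, w in zip(names, rotated[:, j]) if abs(w) >= cutoff]
        for j in range(rotated.shape[1])
    }


# Stand-in data (random, for illustration only): 200 stimuli x 8 features.
rng = np.random.default_rng(0)
toy_data = rng.normal(size=(200, 8))
toy_loadings = pca_loadings(toy_data, n_components=3)
toy_rotated = varimax(toy_loadings)
```

Because the rotation is orthogonal, each feature keeps the same communality (row sum of squared loadings) before and after rotation; only the distribution of loadings across factors changes, which is what makes the cut-off of 0.80 interpretable factor by factor.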

The GLMM we calculated, adding each of the five factors as a continuous predictor, revealed the following significant effects: Factor 1, z(1 1 3) = 44.875, p < 0.001; Factor 3, z(1 1 3) = 8.33, p = 0.004; and Factor 4, z(1 1 3) = 74.56, p < 0.001.

The contrasts, each performed with one of the three significant factors identified above entered as a covariate, revealed the following results (Table 4). The differences between the RCL and HC groups for neutral stimuli on the Fear scale and between the RCL and LCL subgroups for fear stimuli on the Happiness scale remained significant after the addition of all the factors, so they were not explained by acoustic features. By contrast, the difference between the LCL and RCL subgroups for neutral stimuli on the Happiness scale ceased to be significant when Factors 1, 3 and 4 were added. Thus, a significant proportion of the variance in this difference was explained by Factor 1 (spectral features), Factor 3 (related to loudness), and Factor 4 (related to F0) (Fig. 1C and Table 4). Concerning the difference between the LCL and HC groups for anger stimuli on the Fear scale, a significant proportion of the variance was explained by Factors 1 and 4. Moreover, a significant proportion of the variance for the difference between the RCL and HC groups on the Surprise scale for sadness prosody was explained by Factor 4.

Table 4.

Differential impact of the three significant factors identified on vocal emotion recognition between the LCL, RCL and HC groups.

| Factors | Variables | T ratio | P value (FDR-corrected) |
|---|---|---|---|
| Factor 1 (Spectral features) | Anger on Fear scale (LCL vs. HC) | −2.145 | 0.06* |
| | Neutral on Happiness scale (RCL vs. HC) | −1.27 | 0.27* |
| | Neutral on Happiness scale (RCL vs. LCL) | 2.131 | 0.06* |
| | Neutral on Fear scale (RCL vs. HC) | −2.587 | 0.02 |
| | Fear on Happiness scale (RCL vs. LCL) | 4.31 | 0.0002 |
| | Sadness on Surprise scale (RCL vs. HC) | −3.291 | 0.002 |
| Factor 3 (Amplitude) | Anger on Fear scale (LCL vs. HC) | −2.169 | 0.05 |
| | Neutral on Happiness scale (RCL vs. HC) | −1.266 | 0.27* |
| | Neutral on Happiness scale (RCL vs. LCL) | 2.101 | 0.06* |
| | Neutral on Fear scale (RCL vs. HC) | −2.554 | 0.02 |
| | Fear on Happiness scale (RCL vs. LCL) | 4.292 | 0.0002 |
| | Sadness on Surprise scale (RCL vs. HC) | −3.303 | 0.002 |
| Factor 4 (Fundamental frequency) | Anger on Fear scale (LCL vs. HC) | −2.141 | 0.06* |
| | Neutral on Happiness scale (RCL vs. HC) | −1.295 | 0.26* |
| | Neutral on Happiness scale (RCL vs. LCL) | 2.047 | 0.06* |
| | Neutral on Fear scale (RCL vs. HC) | −2.702 | 0.01 |
| | Fear on Happiness scale (RCL vs. LCL) | 3.502 | 0.001 |
| | Sadness on Surprise scale (RCL vs. HC) | 1.535 | 0.18* |

*Results where the factor (entered as covariate) explained a significant proportion of variance.

3.5. Cerebellar lesion–behavior relationships

VLSM analysis, which was only performed on behavioral scores of interest (i.e., ratings that differed significantly between the RCL and LCL subgroups), revealed the following results:

The significant difference between the LCL and RCL subgroups on the Happiness scale for neutral stimuli correlated with lesions in the right Lobules VIIb and VIII and right Crus I and II (peak coordinates: x = 20, y = -75, z = -42) (Fig. 1B). The significant difference between the LCL and RCL subgroups on the Happiness scale for fear stimuli correlated with lesions in the same regions (peak coordinates: x = 28, y = -67, z = -48) (Fig. 2B).

4. Discussion

The purpose of the present study was to investigate whether acoustic features have a differential impact on emotional prosody disturbances, depending on the location of the lesion. To this end, we quantified the misattributions made by patients with RCL or LCL, using a sensitive and validated behavioral methodology (Péron et al., 2011, Péron et al., 2010), as well as state-of-the-art statistical and neuroimaging analyses, in order to study the potential sensory contribution to emotion recognition deficits in patients with cerebellar stroke. As predicted, we confirmed impaired recognition of emotional prosody in patients and showed that these emotional misattributions correlated with lesions in the right Lobules VIIb and VIII and right Crus I and II. Moreover, we found for the first time, to our knowledge, that the pattern and number of errors differed according to the side of the cerebellar lesion, and that patients’ misattributions were indeed partially explained by auditory sensory processing, with an interaction effect according to the hemispheric location of the lesion. The biased ratings displayed by patients with LCL were explained by spectral and F0 features, whereas loudness, spectral and F0 features explained a significant proportion of the variance in the misattributions made by patients with RCL.

First, as previously reported (Thomasson et al., 2019), we confirmed that patients’ emotional misattributions were all made when listening to prosody with a neutral or negative valence. Cerebellar involvement in the processing of negative emotions has been mentioned several times in the literature (Adamaszek et al., 2017, Ferrucci et al., 2012, Paradiso et al., 1999). Authors have suggested that there is a complex cortico–cerebellar network specific to aversive stimuli, with functional connectivity between the cerebellum and cortical structures supposedly involved in the processing of negatively valenced emotions (Moulton et al., 2011). In this context, the postulate that the cerebellum is involved in affective communication seems to be confirmed. However, the question of its specific role in comparison with those of the cortex and other subcortical structures remains to be elucidated. It has recently been proposed that the so-called limbic cerebellum modulates the amplitude of cortical oscillations based on prediction error feedback of the selected response relative to the given context (Schmahmann, 2019, Booth et al., 2007). Input to the cerebellum regarding the salience and motivational value of emotional stimuli guides internal models to determine how an emotional response benefits individuals in their current state, and therefore shapes how output from the cerebellum modifies the limbic response pattern. By continuously monitoring the performance of the individual in terms of prediction errors, the cerebellum ensures that large deviations from the expected response/outcome are quickly corrected (Peterburs and Desmond, 2016). In cases of cerebellar lesions, however, a lack of cerebellar amplification of emotional networks may lead to the blunted affect and decreased subjective experience of emotions that are often reported in patients (Adamaszek et al., 2017).

As far as the comparison between RCL and LCL is concerned, congruent with our hypotheses, we observed that patients with RCL committed more misattributions in emotional prosody recognition than either patients with LCL or HC. Patients with RCL gave significantly higher happiness ratings when they listened to neutral prosody than either patients with LCL or HC. Compared with HC, they also gave higher ratings on the Fear scale when they listened to neutral stimuli, and higher ratings on the Surprise scale when they listened to sadness prosody. Moreover, they gave significantly higher ratings on the Happiness scale than patients with LCL did when they listened to fear stimuli. This tendency to attribute significantly more positive emotions when listening to negative or neutral prosody, which had already been observed in a previous study (Thomasson et al., 2019), could be interpreted as reflecting a deficit in valence processing. In HC, increased BOLD signal intensity has been found in the right dorsal cerebellum as the degree of unpleasantness of the induced emotion increases (Colibazzi et al., 2010). A possible functional segregation and specialization within the cerebellum in the processing of emotional dimensions has been suggested (Styliadis et al., 2015). Authors have also postulated that the lateral zone of the right cerebellar hemisphere participates in the regulation of cognitive components of emotional task performance (Baumann and Mattingley, 2012). Interestingly, we observed that the patients who gave higher happiness ratings when they listened to neutral prosody had the lowest AES scores. Commonly seen as an affective disorder that can lead to inadequate appraisal (malfunctioning of conduciveness check) (Scherer, 2009), apathy could thus be related to a reduction in misattributions on the Happiness scale for neutral prosody among patients with RCL.

Interestingly, and using VLSM analysis, we found that the difference between patients with RCL versus LCL correlated with lesions in the right Lobules VIIb and VIII and right Crus I and II. This finding partially confirms the fMRI results of Schraa-Tam et al. (2012) in HC, showing activation of the posterior cerebellum (i.e., Crus II, hemispheric Lobules VI and VIIa, and vermal Lobules VIII and IX) during the processing of emotional facial expressions. Relations have been highlighted between Lobules VIIb and VIII and the task-positive and salience networks, suggesting that these two subregions are involved in the performance of attention-demanding cognitive tasks and the identification of salient stimuli to guide behavior. Moreover, we observed higher ratings on the Fear scale for angry stimuli among patients with LCL than among HC (whereas patients with RCL did not differ significantly from HC). It has been suggested that there is a key hub in the left cerebellar hemisphere (more precisely, Crus I and II and Lobule VII), where emotional and cognitive information converges and is forwarded to cerebral (and more particularly prefrontal) areas (Han et al., 2016). Moreover, we can assume that the amygdala participates in this process, given its important role in fear learning and its close links to the vermis and prefrontal areas (Snider and Maiti, 1976).

The second aim of our study was to investigate whether acoustic features have a differential impact on emotional prosody disturbances. We found that the biased ratings on the Fear scale when patients with LCL listened to anger prosody were explained by spectral (ratio between high and low frequency) and mean F0 features. This result is partially congruent with the speculative hypothesis concerning the role of the left cerebellar hemisphere in the processing of relatively low-frequency information (e.g., prosody, musical melodies). Interestingly, a study reported that fear ratings were predicted by spectral properties, whereas anger ratings were predicted by both spectral and pitch information (Sauter et al., 2010). Altered processing of these acoustic cues could explain patients’ misattributions. Contrary to our hypotheses, we also observed that loudness, spectral and F0 features explained a significant proportion of the variance in the misattributions made by patients with RCL on the Happiness scale for neutral stimuli. Moreover, we found that biased ratings on the Surprise scale when patients with RCL listened to sadness stimuli were correlated with mean F0. Pitch is reportedly crucial for correctly judging surprise and sadness prosody (Sauter et al., 2010). However, this result was unexpected, given the differential temporal processing specialization of the cerebellar hemispheres, based on the cross-lateral interconnectivity hypothesis. The presence of contrasting cerebellar and cerebral hemispheric functional asymmetries has not always been supported. One study using a clinically applicable diffusion tensor imaging sequence highlighted both ipsilateral and contralateral connections (Karavasilis et al., 2019). Thus, as with the cerebral cortex, research investigating cerebellar hemisphere specialization for processing the emotional information conveyed by a speaker’s voice has failed to yield consistent results.

Our study also revealed that the biased ratings of patients with RCL on the Fear scale when they listened to neutral stimuli, or on the Happiness scale when they listened to fear stimuli, were not correlated with any acoustic features. Thus, these results revealed that patients’ misattributions could not be explained solely by a deficit in sensory processing, suggesting the involvement of higher-order cognitive processes. The present results strengthen the idea that clinicians should assess patients with cerebellar dysfunction at the sensorimotor level, but also at the cognitive and emotional levels. The clinical community often tends to evaluate these patients less thoroughly than patients with lesions in the cerebral cortex, especially since they do not display gross clinical manifestations. Our patients successfully identified the target emotions, but nevertheless confused them with other emotions (which can be interpreted as an increase in noise in the decoding process). Small and underestimated neuropsychological deficits can potentially cascade into more severe deficits over time, as is the case for example with dysexecutive syndrome in traumatic brain injury. As emotional symptoms are sometimes the main complaint of patients with cerebellar injury (Annoni et al., 2003), rehabilitation of emotion processing, focusing on decoding by low-level sensory processes, could be very beneficial.

Finally, in the context of existing physiological models of emotional prosody decoding, we suggest that in each processing subcomponent, the cerebellum detects and then adjusts the temporal pattern encoded for a given response, so that it is as close as possible to the expected one (Pierce and Péron, 2020). This ability to fine-tune and correct responses in sensorimotor analysis, acoustic integration, or cognitive evaluative judgment is above all made possible by the cerebellum’s ability to analyze and process irregular temporal patterns (Breska and Ivry, 2018). This work is done in tandem with the BG, which select and strengthen appropriate cortical responses using reward feedback (Bostan and Strick, 2018). This is made possible by the BG’s ability to analyze and process regular temporal patterns (Breska and Ivry, 2018). Regarding the hemispheric specialization of the cerebellum in this process, the present findings revealed cooperation between the two cerebellar hemispheres for sensory but also cognitive processes during the decoding of vocal emotional stimuli. Interestingly, a recent study (Stockert et al., 2021) highlighted bilateral and bidirectional connections between the cerebellum (Crus I, II and dentate nuclei) and the temporo-frontal region. The involvement of both cerebellar hemispheres in auditory spectro-temporal encoding, with hemispheric sensitivity for different time-scales, would allow the construction of internal auditory models. More studies are needed to explore the precise nature and timing of this cooperation during emotional processing.

5. Conclusion

For the first time to our knowledge, the present study tested how damage to either the left or right cerebellum impairs the recognition of emotional prosody and the sensory contribution to vocal affect processing. Deficits in both patient subgroups were observed, particularly for neutral or negative prosody, with a higher number of misattributions in patients with right-hemispheric stroke. VLSM revealed that some emotional misattributions correlated with lesions in the right Lobules VIIb and VIII and right Crus I and II. A significant proportion of the variance in this misattribution was explained by acoustic features such as pitch, loudness and spectral aspects, suggesting a sensory cerebellar contribution.

The present results seem to suggest two-step processing operating in both cerebellar hemispheres, rather than hemispheric specialization per se: both right and left cerebellar hemispheres appear to process spectral and F0 features, but the right hemisphere is additionally involved in loudness processing during an emotional episode. Further studies are needed to better understand the processing of specific properties by left versus right cerebellar hemispheres, and integrate these results with recent suggestions that the cerebellum can refine the cortical/BG response and recalibrate the internal model by checking whether the state of the individual diverges from the expected state at any time during the emotion recognition process.

CRediT authorship contribution statement

Marine Thomasson: Conceptualization, Formal analysis, Investigation, Writing - original draft, Writing - review & editing. Damien Benis: Conceptualization, Formal analysis, Writing - review & editing. Arnaud Saj: Conceptualization, Formal analysis, Investigation, Writing - original draft, Writing - review & editing, Supervision. Philippe Voruz: Investigation, Writing - review & editing. Roberta Ronchi: Formal analysis, Writing - review & editing. Didier Grandjean: Conceptualization, Formal analysis, Writing - review & editing, Supervision. Frédéric Assal: Conceptualization, Investigation, Writing - review & editing, Supervision. Julie Péron: Conceptualization, Formal analysis, Investigation, Writing - original draft, Writing - review & editing, Supervision, Funding acquisition.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The present study was carried out at the Neurology Department of the University Hospitals of Geneva (Prof. Kleinschmidt). The project was funded by Swiss National Foundation grant no. 105314_182221 (PI: Dr Julie Péron). The funders had no role in data collection, discussion of content, preparation of the manuscript, or decision to publish. We would like to thank the patients and healthy controls for contributing their time to this study.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.nicl.2021.102690.

References

- Adamaszek M., D’Agata F., Ferrucci R., Habas C., Keulen S., Kirkby K.C., Leggio M., Mariën P., Molinari M., Moulton E., Orsi L., Van Overwalle F., Papadelis C., Priori A., Sacchetti B., Schutter D.J., Styliadis C., Verhoeven J. Consensus Paper: Cerebellum and Emotion. Cerebellum. 2017;16(2):552–576. doi: 10.1007/s12311-016-0815-8. [DOI] [PubMed] [Google Scholar]

- Stoodley C., Schmahmann J. Functional topography in the human cerebellum: a meta-analysis of neuroimaging studies. Neuroimage. 2009;44(2):489–501. doi: 10.1016/j.neuroimage.2008.08.039. [DOI] [PubMed] [Google Scholar]

- Heilman K.M., Leon S.A., Burtis D.B., Ashizawa T., Subramony S.H. Affective communication deficits associated with cerebellar degeneration. Neurocase. 2014;20(1):18–26. doi: 10.1080/13554794.2012.713496. [DOI] [PubMed] [Google Scholar]

- Thomasson M., Saj A., Benis D., Grandjean D., Assal F., Péron J. Cerebellar contribution to vocal emotion decoding: Insights from stroke and neuroimaging. Neuropsychologia. 2019;132:107141. doi: 10.1016/j.neuropsychologia.2019.107141. [DOI] [PubMed] [Google Scholar]

- Keser Z., Hasan K.M., Mwangi B.I., Kamali A., Ucisik-Keser F.E., Riascos R.F., Yozbatiran N., Francisco G.E., Narayana P.A. Diffusion tensor imaging of the human cerebellar pathways and their interplay with cerebral macrostructure. Front. Neuroanat. 2015;9 doi: 10.3389/fnana.2015.00041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Uono S. Neural substrates of the ability to recognize facial expressions: a voxel-based morphometry study. Soc. Cogn. Affect Neurosci. 2017;12:487–495. doi: 10.1093/scan/nsw142. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Imaizumi S. Vocal identification of speaker and emotion activates different brain regions. NeuroReport. 1997;8:2809–2812. doi: 10.1097/00001756-199708180-00031. [DOI] [PubMed] [Google Scholar]

- Wildgruber D., Riecker A., Hertrich I., Erb M., Grodd W., Ethofer T., Ackermann H. Identification of emotional intonation evaluated by fMRI. Neuroimage. 2005;24(4):1233–1241. doi: 10.1016/j.neuroimage.2004.10.034. [DOI] [PubMed] [Google Scholar]

- Kotz S.A., Kalberlah C., Bahlmann J., Friederici A.D., Haynes J.-D. Predicting vocal emotion expressions from the human brain. Hum. Brain Mapp. 2013;34(8):1971–1981. doi: 10.1002/hbm.22041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alba-Ferrara L., Hausmann M., Mitchell R.L., Weis S., Valdes-Sosa P.A. The neural correlates of emotional prosody comprehension: disentangling simple from complex emotion. PLoS ONE. 2011;6(12):e28701. doi: 10.1371/journal.pone.0028701. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bostan A.C., Strick P.L. The basal ganglia and the cerebellum: nodes in an integrated network. Nat. Rev. Neurosci. 2018;19(6):338–350. doi: 10.1038/s41583-018-0002-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Péron J., Renaud O., Haegelen C., Tamarit L., Milesi V., Houvenaghel J.-F., Dondaine T., Vérin M., Sauleau P., Grandjean D. Vocal emotion decoding in the subthalamic nucleus: An intracranial ERP study in Parkinson's disease. Brain Lang. 2017;168:1–11. doi: 10.1016/j.bandl.2016.12.003. [DOI] [PubMed] [Google Scholar]

- Stirnimann N., N'Diaye K., Jeune F.L., Houvenaghel J.-F., Robert G., Drapier S., Drapier D., Grandjean D., Vérin M., Péron J. Hemispheric specialization of the basal ganglia during vocal emotion decoding: Evidence from asymmetric Parkinson's disease and (18)FDG PET. Neuropsychologia. 2018;119:1–11. doi: 10.1016/j.neuropsychologia.2018.07.023. [DOI] [PubMed] [Google Scholar]

- Caligiore D., Pezzulo G., Baldassarre G., Bostan A.C., Strick P.L., Doya K., Helmich R.C., Dirkx M., Houk J., Jörntell H., Lago-Rodriguez A., Galea J.M., Miall R.C., Popa T., Kishore A., Verschure P.F.M.J., Zucca R., Herreros I. Consensus Paper: Towards a Systems-Level View of Cerebellar Function: the Interplay Between Cerebellum, Basal Ganglia, and Cortex. Cerebellum. 2017;16(1):203–229. doi: 10.1007/s12311-016-0763-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koziol L.F., Budding D., Andreasen N., D’Arrigo S., Bulgheroni S., Imamizu H., Ito M., Manto M., Marvel C., Parker K., Pezzulo G., Ramnani N., Riva D., Schmahmann J., Vandervert L., Yamazaki T. Consensus paper: the cerebellum's role in movement and cognition. Cerebellum. 2014;13(1):151–177. doi: 10.1007/s12311-013-0511-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krakauer J.W., Shadmehr R. Towards a computational neuropsychology of action. Prog. Brain Res. 2007;165:383–394. doi: 10.1016/S0079-6123(06)65024-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Adamaszek M., D’Agata F., Kirkby K.C., Trenner M.U., Sehm B., Steele C.J., Berneiser J., Strecker K. Impairment of emotional facial expression and prosody discrimination due to ischemic cerebellar lesions. Cerebellum. 2014;13:338–345. doi: 10.1007/s12311-013-0537-0. [DOI] [PubMed] [Google Scholar]

- Adamaszek M., D’Agata F., Steele C.J., Sehm B., Schoppe C., Strecker K., Woldag H., Hummelsheim H., Kirkby K.C. Comparison of visual and auditory emotion recognition in patients with cerebellar and Parkinson s disease. Soc. Neurosci. 2019;14(2):195–207. doi: 10.1080/17470919.2018.1434089. [DOI] [PubMed] [Google Scholar]

- Schirmer A., Kotz S.A. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends in Cognitive Sci. 2006;10(1):24–30. doi: 10.1016/j.tics.2005.11.009. [DOI] [PubMed] [Google Scholar]

- Wildgruber D., Ethofer T., Grandjean D., Kreifelts B. A cerebral network model of speech prosody comprehension. Int. J. Speech-Language Pathol. 2009;11(4):277–281. [Google Scholar]

- Péron J., Cekic S., Haegelen C., Sauleau P., Patel S., Drapier D., Vérin M., Grandjean D. Sensory contribution to vocal emotion deficit in Parkinson's disease after subthalamic stimulation. Cortex. 2015;63:172–183. doi: 10.1016/j.cortex.2014.08.023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Petacchi A., Kaernbach C., Ratnam R., Bower J.M. Increased activation of the human cerebellum during pitch discrimination: a positron emission tomography (PET) study. Hear. Res. 2011;282(1-2):35–48. doi: 10.1016/j.heares.2011.09.008. [DOI] [PubMed] [Google Scholar]

- Ivry R.B., Keele S.W. Timing functions of the cerebellum. J. Cogn. Neurosci. 1989;1(2):136–152. doi: 10.1162/jocn.1989.1.2.136.

- Pichon S., Kell C.A. Affective and sensorimotor components of emotional prosody generation. J. Neurosci. 2013;33(4):1640–1650. doi: 10.1523/JNEUROSCI.3530-12.2013.

- Callan D., Kawato M., Parsons L., Turner R. Speech and song: the role of the cerebellum. Cerebellum. 2007;6(4):321–327. doi: 10.1080/14734220601187733.

- Stockert A., Schwartze M., Poeppel D., Anwander A., Kotz S.A. Temporo-cerebellar connectivity underlies timing constraints in audition. bioRxiv. 2021 doi: 10.7554/eLife.67303.

- Grandjean D. Brain networks of emotional prosody processing. Emotion Review. 2021;13(1):34–43.

- Péron J., El Tamer S., Grandjean D., Leray E., Travers D., Drapier D., Vérin M., Millet B. Major depressive disorder skews the recognition of emotional prosody. Prog. Neuro-Psychopharmacol. Biol. Psychiatry. 2011;35(4):987–996. doi: 10.1016/j.pnpbp.2011.01.019.

- Péron J., Grandjean D., Le Jeune F., Sauleau P., Haegelen C., Drapier D., Rouaud T., Drapier S., Vérin M. Recognition of emotional prosody is altered after subthalamic nucleus deep brain stimulation in Parkinson's disease. Neuropsychologia. 2010;48(4):1053–1062. doi: 10.1016/j.neuropsychologia.2009.12.003.

- Schmahmann J.D. The cerebellum and cognition. Neurosci. Lett. 2019;688:62–75. doi: 10.1016/j.neulet.2018.07.005.

- Oldfield R.C. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Agniel A., Joanette Y., Doyon B., Duchein C. Protocole Montréal-Toulouse d'Evaluation des Gnosies Auditives. L’Ortho édition; Isbergues, France: 1992.

- Mattis S. Dementia rating scale: DRS: Professional manual. PAR; 1988.

- Lacoste L., Trivalle C. Adaptation française d’un outil d’évaluation par téléphone des troubles mnésiques: le French Telephone Interview for Cognitive Status Modified (F-TICS-m). NPG Neurologie-Psychiatrie-Gériatrie. 2009;9:17–22.

- Schmitz-Hubsch T., du Montcel S.T., Baliko L., Berciano J., Boesch S., Depondt C., Giunti P., Globas C., Infante J., Kang J.-S., Kremer B., Mariotti C., Melegh B., Pandolfo M., Rakowicz M., Ribai P., Rola R., Schols L., Szymanski S., van de Warrenburg B.P., Durr A., Klockgether T. Scale for the assessment and rating of ataxia: development of a new clinical scale. Neurology. 2006;66(11):1717–1720. doi: 10.1212/01.wnl.0000219042.60538.92.

- Moulton E.A., Elman I., Pendse G., Schmahmann J., Becerra L., Borsook D. Aversion-related circuitry in the cerebellum: responses to noxious heat and unpleasant images. J. Neurosci. 2011;31(10):3795–3804. doi: 10.1523/JNEUROSCI.6709-10.2011.

- Nasreddine Z.S., Patel B.B. Validation of Montreal Cognitive Assessment, MoCA, Alternate French Versions. Can. J. Neurol. Sci. 2016;43(5):665–671. doi: 10.1017/cjn.2016.273.

- Dubois B., Slachevsky A., Litvan I., Pillon B. The FAB: a frontal assessment battery at bedside. Neurology. 2000;55(11):1621–1626. doi: 10.1212/wnl.55.11.1621.

- Cardebat D., Doyon B., Puel M., Goulet P., Joanette Y. Formal and semantic lexical evocation in normal subjects. Performance and dynamics of production as a function of sex, age and educational level. Acta Neurol. Belg. 1990;90:207–217.

- Woods S.P., Scott J.C., Sires D.A., Grant I., Heaton R.K., Tröster A.I., HIV Neurobehavioral Research Center (HNRC) Group. Action (verb) fluency: Test-retest reliability, normative standards, and construct validity. J. Int. Neuropsychol. Soc. 2005;11(4):408.

- Steer R.A., Brown G.K., Beck A.T., Sanderson W.C. Mean Beck Depression Inventory–II scores by severity of major depressive episode. Psychol. Rep. 2001;88:1075–1076. doi: 10.2466/pr0.2001.88.3c.1075.

- Bagby R.M., Parker J.D.A., Taylor G.J. The twenty-item Toronto Alexithymia Scale—I. Item selection and cross-validation of the factor structure. J. Psychosom. Res. 1994;38(1):23–32. doi: 10.1016/0022-3999(94)90005-1.

- Villanueva R. The cerebellum and neuropsychiatric disorders. Psychiatry Res. 2012;198(3):527–532. doi: 10.1016/j.psychres.2012.02.023.

- Marin R.S., Biedrzycki R.C., Firinciogullari S. Reliability and validity of the Apathy Evaluation Scale. Psychiatry Res. 1991;38(2):143–162. doi: 10.1016/0165-1781(91)90040-v.

- Eyben F., Weninger F., Gross F., Schuller B. Recent developments in openSMILE, the Munich open-source multimedia feature extractor. In: Proceedings of the 21st ACM International Conference on Multimedia. 2013. pp. 835–838.

- Eyben F., Scherer K.R., Schuller B.W., Sundberg J., Andre E., Busso C., Devillers L.Y., Epps J., Laukka P., Narayanan S.S., Truong K.P. The Geneva minimalistic acoustic parameter set (GeMAPS) for voice research and affective computing. IEEE Trans. Affective Comput. 2016;7(2):190–202.

- Morey R.D., Rouder J.N. Bayes factor approaches for testing interval null hypotheses. Psychol. Methods. 2011;16(4):406–419. doi: 10.1037/a0024377.

- Ferrucci R., Giannicola G., Rosa M., Fumagalli M., Boggio P.S., Hallett M., Zago S., Priori A. Cerebellum and processing of negative facial emotions: cerebellar transcranial DC stimulation specifically enhances the emotional recognition of facial anger and sadness. Cogn. Emot. 2012;26(5):786–799. doi: 10.1080/02699931.2011.619520.

- Paradiso S., Johnson D.L., Andreasen N.C., O’Leary D.S., Watkins G.L., Boles Ponto L.L., Hichwa R.D. Cerebral blood flow changes associated with attribution of emotional valence to pleasant, unpleasant, and neutral visual stimuli in a PET study of normal subjects. Am. J. Psychiatry. 1999;156(10):1618–1629. doi: 10.1176/ajp.156.10.1618.

- Booth J.R., Wood L., Lu D., Houk J.C., Bitan T. The role of the basal ganglia and cerebellum in language processing. Brain Res. 2007;1133:136–144. doi: 10.1016/j.brainres.2006.11.074.

- Peterburs J., Desmond J.E. The role of the human cerebellum in performance monitoring. Curr. Opin. Neurobiol. 2016;40:38–44. doi: 10.1016/j.conb.2016.06.011.

- Colibazzi T., Posner J., Wang Z., Gorman D., Gerber A., Yu S., Zhu H., Kangarlu A., Duan Y., Russell J.A., Peterson B.S. Neural systems subserving valence and arousal during the experience of induced emotions. Emotion. 2010;10(3):377–389. doi: 10.1037/a0018484.

- Styliadis C., Ioannides A.A., Bamidis P.D., Papadelis C. Distinct cerebellar lobules process arousal, valence and their interaction in parallel following a temporal hierarchy. NeuroImage. 2015;110:149–161. doi: 10.1016/j.neuroimage.2015.02.006.

- Baumann O., Mattingley J.B. Functional topography of primary emotion processing in the human cerebellum. NeuroImage. 2012;61(4):805–811. doi: 10.1016/j.neuroimage.2012.03.044.

- Scherer K.R. Emotions are emergent processes: they require a dynamic computational architecture. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2009;364(1535):3459–3474. doi: 10.1098/rstb.2009.0141.

- Schraa-Tam C.K.L., Rietdijk W.J.R., Verbeke W.J.M.I., Dietvorst R.C., van den Berg W.E., Bagozzi R.P., De Zeeuw C.I. fMRI activities in the emotional cerebellum: a preference for negative stimuli and goal-directed behavior. Cerebellum. 2012;11(1):233–245. doi: 10.1007/s12311-011-0301-2.

- Han H., Chen J., Jeong C., Glover G.H. Influence of the cortical midline structures on moral emotion and motivation in moral decision-making. Behav. Brain Res. 2016;302:237–251. doi: 10.1016/j.bbr.2016.01.001.

- Snider R.S., Maiti A. Cerebellar contributions to the Papez circuit. J. Neurosci. Res. 1976;2(2):133–146. doi: 10.1002/jnr.490020204.

- Sauter D.A., Eisner F., Calder A.J., Scott S.K. Perceptual cues in nonverbal vocal expressions of emotion. Q. J. Exp. Psychol. 2010;63(11):2251–2272. doi: 10.1080/17470211003721642.

- Karavasilis E., Christidi F., Velonakis G., Giavri Z., Kelekis N.L., Efstathopoulos E.P., Evdokimidis I., Dellatolas G. Ipsilateral and contralateral cerebro-cerebellar white matter connections: A diffusion tensor imaging study in healthy adults. J. Neuroradiol. 2019;46(1):52–60. doi: 10.1016/j.neurad.2018.07.004.

- Annoni J.-M., Ptak R., Caldara-Schnetzer A.-S., Khateb A., Pollermann B.Z. Decoupling of autonomic and cognitive emotional reactions after cerebellar stroke. Ann. Neurol. 2003;53(5):654–658. doi: 10.1002/ana.10549.

- Pierce J.E., Péron J. The basal ganglia and the cerebellum in human emotion. Social Cognitive and Affective Neuroscience. 2020.

- Breska A., Ivry R.B. Double dissociation of single-interval and rhythmic temporal prediction in cerebellar degeneration and Parkinson’s disease. Proc. Natl. Acad. Sci. 2018;115(48):12283–12288. doi: 10.1073/pnas.1810596115.
