Abstract

Segmentation of brain tissue types from diffusion MRI (dMRI) is an important task, required for quantification of brain microstructure and for improving tractography. Current dMRI segmentation is mostly based on anatomical MRI (e.g., T1- and T2-weighted) segmentation that is registered to the dMRI space. However, such inter-modality registration is challenging due to more image distortions and lower image resolution in dMRI as compared with anatomical MRI. In this study, we present a deep learning method for diffusion MRI segmentation, which we refer to as DDSeg. Our proposed method learns tissue segmentation from high-quality imaging data from the Human Connectome Project (HCP), where registration of anatomical MRI to dMRI is more precise. The method is then able to predict a tissue segmentation directly from new dMRI data, including data collected with different acquisition protocols, without requiring anatomical data and inter-modality registration. We train a convolutional neural network (CNN) to learn a tissue segmentation model using a novel augmented target loss function designed to improve accuracy in regions of tissue boundary. To further improve accuracy, our method adds diffusion kurtosis imaging (DKI) parameters that characterize non-Gaussian water molecule diffusion to the conventional diffusion tensor imaging parameters. The DKI parameters are calculated from the recently proposed mean-kurtosis-curve method that corrects implausible DKI parameter values and provides additional features that discriminate between tissue types. We demonstrate high tissue segmentation accuracy on HCP data, and also when applying the HCP-trained model on dMRI data from other acquisitions with lower resolution and fewer gradient directions.

1. Introduction

Segmentation of brain tissue types, e.g., the labeling of gray matter (GM), white matter (WM), and cerebrospinal fluid (CSF), is a critical step in many diffusion MRI (dMRI) visualization and quantification tasks such as tractography and brain surface reconstruction. Most current tissue segmentation approaches are based on T1-weighted (T1w) or T2-weighted (T2w) anatomical MRI data (Ashburner and Friston, 2005; Fischl, 2012; Smith et al., 2004), which has high image resolution and image contrast that differentiates between tissue types. However, application of anatomical-MRI-based segmentation to dMRI requires inter-modality registration, which is challenging since dMRI often has echo-planar imaging (EPI) distortions (Wu et al., 2008; Albi et al., 2018; Jones and Cercignani, 2010) and low image resolution (Malinsky et al., 2013). Improved dMRI acquisitions can mitigate these challenges. For instance, the Human Connectome Project (HCP) acquired high-resolution dMRI data with alternating phase encoding to correct EPI distortions, making it easier to register between the anatomical MRI data and the dMRI data (Glasser et al., 2013). However, advanced HCP-like acquisitions are not always available and are not yet feasible for many clinical settings where scan time is limited. Therefore, there is a need for segmentation approaches that can be applied on the dMRI data directly. Such approaches would be useful for MRI protocols that are missing high quality anatomical data, or when it is difficult to achieve high quality registration of the anatomical-MRI-based segmentation to the dMRI space.

Most dMRI-based brain tissue segmentation methods use rotation-invariant features derived from the diffusion tensor imaging (DTI) model, such as mean diffusivity (MD) that quantifies the overall magnitude of diffusion within a voxel, and fractional anisotropy (FA) that quantifies the variability of diffusion across different orientations. DTI features are found useful to differentiate between brain tissue types, where, for example, FA differentiates between voxels with WM fibers that have a clear orientation, versus voxels with GM or CSF where diffusion is more isotropic (Liu et al., 2007; Schnell et al., 2009; Wen et al., 2013; Kumazawa et al., 2013; Yap et al., 2015; Ciritsis et al., 2018; Zhang et al., 2015; Nie et al., 2018). However, these DTI-based segmentation studies have achieved limited accuracy, partly because DTI features are known to be nonspecific in characterization of water diffusion in voxels with complex intracellular and extracellular in vivo environments (O’Donnell and Pasternak, 2015). Diffusion kurtosis imaging (DKI) (Jensen and Helpern, 2010), a clinically feasible extension of DTI that characterizes non-Gaussian water molecule diffusion, enhances DTI by providing information about molecular restrictions and tissue heterogeneity in the brain. DKI parameterizes the dMRI data with a diffusion tensor and a kurtosis tensor (Tabesh et al., 2011). From the kurtosis tensor, additional parameters are derived, including mean kurtosis (MK), axial kurtosis (AK) and radial kurtosis (RK), that can quantify the complexity of the microstructural environment of different brain tissues (Steven et al., 2014).

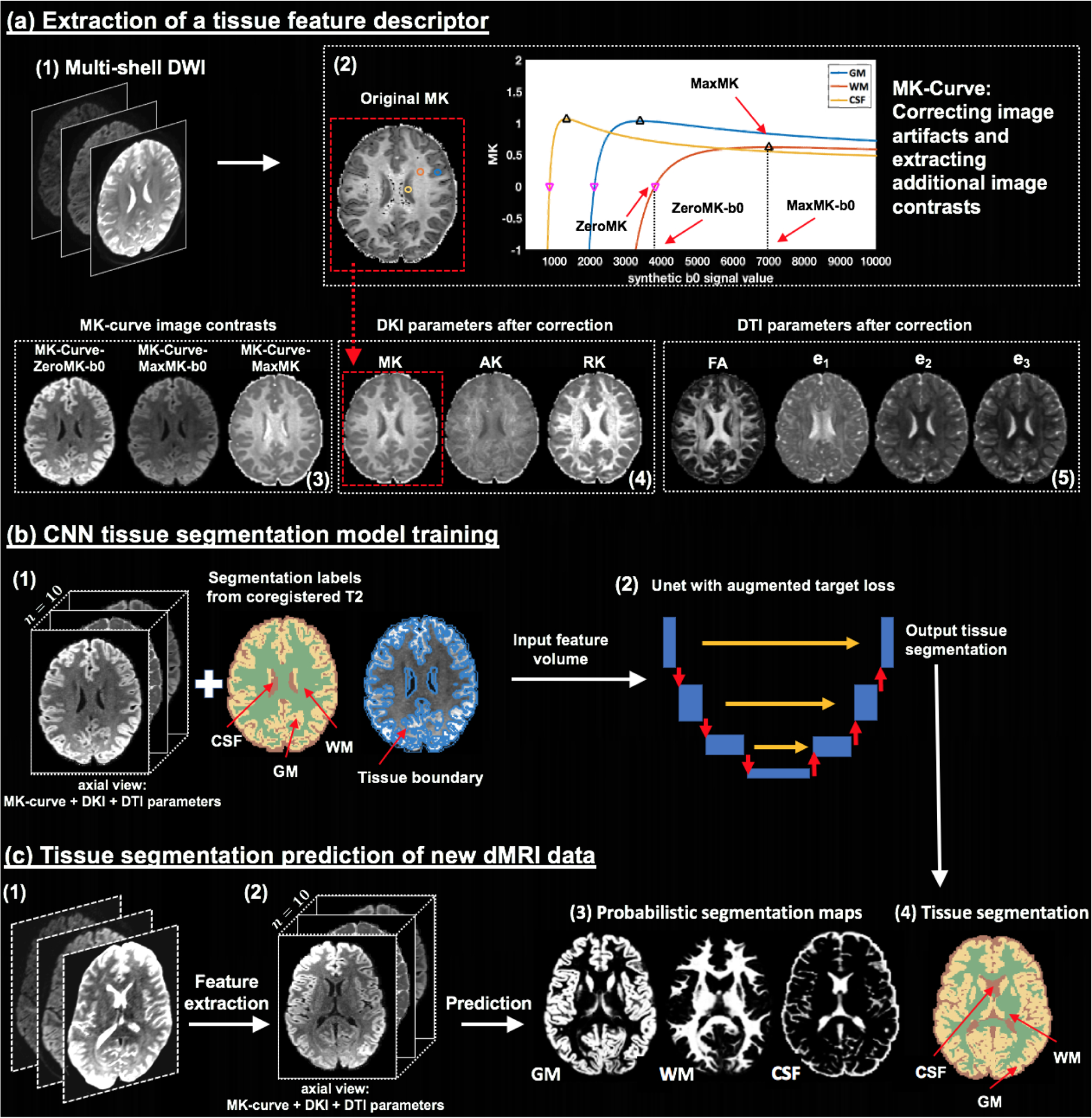

Segmentation methods that include DKI parameters show improved brain tissue segmentation compared to DTI (Beejesh et al., 2019; Hui et al., 2015; Steven et al., 2014). However, one important challenge is that DKI parameters can be affected by signal alterations caused by imaging artifacts such as noise, motion and Gibbs ringing (Veraart et al., 2016; Shaw and Jensen, 2017; Zhang et al., 2019). Consequently, DKI often yields output parameter values that are implausible (e.g., see MK in Figure 1(a2)), which affects DKI-based analyses including tissue segmentation (Veraart et al., 2016; Shaw and Jensen, 2017). Correction methods such as the mean-kurtosis-curve (MK-Curve) method are now available to robustly identify and correct implausible kurtosis tensor parameter values (Zhang et al., 2019).

Figure 1: Method overview. Given input dMRI data (a1), an MK-Curve is computed for each voxel (a2, showing three example voxels). The MK-Curve is used to correct implausible DKI and DTI parameters, and to derive three additional image contrasts that are useful to identify tissue types: ZeroMK-b0, MaxMK-b0 and MaxMK (a3). A total of 10 features are computed: the 3 MK-Curve contrasts (a3), 3 DKI maps (a4) and 4 DTI maps (a5). The corrected images no longer have implausible values (e.g., original MK map (a2) versus corrected MK map (a4); in a red frame). The 3D volumes of the 10 features along with the segmentation labels and the tissue boundary segmentation computed from co-registered T2w data (b1) are used to train a CNN with a Unet architecture and with a novel augmented target loss function (b2). For new dMRI data (c1), the trained CNN is applied on the computed 3D volume of the 10 features in the dMRI space directly (c2), not requiring inter-modality registration. The final prediction output includes a probabilistic segmentation map for each tissue type (c3) and a segmentation label per voxel (c4).

Recent advances in deep learning have significantly improved image segmentation results (e.g., see reviews in (Akkus et al., 2017; Bernal et al., 2019; Garcia-Garcia et al., 2017)). Initially, traditional machine learning methods such as support vector machine (SVM) (Ciritsis et al., 2018; Schnell et al., 2009) and non-negative matrix factorization (NMF) (Jeurissen et al., 2015; Sun et al., 2019) were considered. More recently, studies have started to utilize deep learning to further improve segmentation performance. Of note are studies that utilized a multilayer perceptron (MLP) (Hastie et al., 2009) to perform MRI-based tissue segmentation (Bagher-Ebadian et al., 2011; Golkov et al., 2016). Specifically, Golkov et al. (2016) demonstrated successful application of an MLP for segmentation of GM, WM, and CSF using dMRI data.

Currently, the most widely used deep learning model is the convolutional neural network (CNN), which is designed to automatically and adaptively learn spatial hierarchies of image features from low- to high-level patterns (LeCun et al., 1998; Krizhevsky et al., 2012). So far, CNNs for brain segmentation have been considered as part of multi-modal segmentation that includes dMRI, showing highly promising tissue segmentation performance (Zhang et al., 2015; Nie et al., 2018). However, this use still depends on the accuracy of the cross-modality registration that is required to form the multi-modal input used for the segmentation.

In this paper, we propose a deep learning approach, DDSeg, that utilizes a CNN to learn the segmentation of WM, GM, and CSF directly from dMRI features while obviating the need for inter-modality registration. The CNN is trained using high quality and coregistered anatomical and dMRI data (from the HCP), and then predicts tissue segmentation of new subjects directly from dMRI data, without the need for anatomical MRI data or inter-modality registration. To further improve accuracy, we trained the CNN using a novel augmented target loss function (Breger et al., 2020) that penalizes segmentation errors in voxels that are on the boundary between two different tissue types (Figure 1b). The dMRI input includes seven DKI and DTI parameter maps that have been corrected for implausible values using MK-Curve and three additional MK-Curve-derived maps (Zhang et al., 2019). We trained, validated and tested the proposed method using 100 high-quality and co-registered anatomical and dMRI datasets from HCP, and then demonstrated generalizability to dMRI data from a clinical acquisition with lower resolution and fewer gradient directions. Overall, quantitative and qualitative comparisons with several state-of-the-art segmentation methods show that our method provides highly accurate and reliable dMRI tissue segmentation performance.

2. Methods

The proposed method includes 3 main steps (overviewed in Figure 1): (a) extracting a tissue feature descriptor from DKI and MK-Curve-based measures (Section 2.1), (b) training a CNN model for tissue segmentation (Section 2.2), and (c) predicting subject-specific tissue segmentation from new dMRI data (Section 2.3).

2.1. Extraction of a tissue feature descriptor

Feature extraction from the DWI data was performed using a DKI model fit (Tabesh et al., 2011), in combination with generating the MK-Curve for each voxel (Zhang et al., 2019), to correct implausible DKI and DTI parameter values, and to provide three additional image contrasts that are useful for tissue segmentation.

MK-Curve is a continuous plot that shows the dependence of MK on variation in the b0 signal (Zhang et al., 2019). In brief, an MK-Curve is generated for each voxel by replacing the original b0 value with a range of synthetic values, and calculating MK (Tabesh et al., 2011) for each signal realization. The resulting curve is characterized by three features for each voxel (Figure 1(a2)): ZeroMK-b0 – the largest b0 value where MK crosses 0; MaxMK-b0 – a b0 value larger than ZeroMK-b0 where MK reaches maximum; and MaxMK – the MK value at MaxMK-b0. These three features define ranges of b0 values that generate implausible MK values. Implausible values can then be corrected by projecting out-of-range b0 values to the plausible range (Zhang et al., 2019), and refitting the DKI model (Tabesh et al., 2011) using the projected b0 value. This process results in corrected DKI and DTI maps that no longer have physically implausible values and that have fewer visually implausible voxels (e.g., comparing dark voxels in the original MK in Figure 1(a2) with the corrected MK in Figure 1(a4); in a red frame). The MK-Curve method has been shown to improve sensitivity to identify white matter alterations in clinical high risk for psychosis (Zhang et al., 2020a).
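As an illustration of the three curve features, the sketch below reads ZeroMK-b0, MaxMK-b0 and MaxMK off an MK-Curve that has already been sampled at ascending synthetic b0 values. It is not the published MK-Curve implementation: the DKI fit that produces each MK value is omitted, and the linear interpolation of the zero crossing and the function name `mk_curve_features` are assumptions made for this example.

```python
def mk_curve_features(b0_values, mk_values):
    """Return (ZeroMK-b0, MaxMK-b0, MaxMK) for one voxel's sampled MK-Curve.

    b0_values must be ascending; mk_values[i] is the MK obtained after
    replacing the voxel's b0 signal with b0_values[i].
    """
    zero_mk_b0 = None
    for i in range(len(b0_values) - 1):
        lo, hi = mk_values[i], mk_values[i + 1]
        if lo == 0.0:
            zero_mk_b0 = b0_values[i]
        elif lo * hi < 0.0:
            # Sign change between two samples: interpolate linearly (assumption).
            frac = -lo / (hi - lo)
            zero_mk_b0 = b0_values[i] + frac * (b0_values[i + 1] - b0_values[i])
    if zero_mk_b0 is None:
        raise ValueError("the sampled curve never crosses MK = 0")

    # Only samples with b0 larger than ZeroMK-b0 are candidates for the maximum.
    candidates = [(mk, b0) for b0, mk in zip(b0_values, mk_values) if b0 > zero_mk_b0]
    if not candidates:
        raise ValueError("no samples above ZeroMK-b0")
    max_mk, max_mk_b0 = max(candidates)
    return zero_mk_b0, max_mk_b0, max_mk


# A hand-made curve with two zero crossings; the later (largest-b0) one is kept.
curve_b0 = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
curve_mk = [2.0, -3.0, -1.0, 1.0, 1.5, 1.2]
features = mk_curve_features(curve_b0, curve_mk)
```

Because the loop overwrites the crossing each time it finds a new one, the value that survives is the crossing at the largest b0, matching the definition of ZeroMK-b0 given above.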

As a result of this process, for each subject, we calculate a tissue feature descriptor that is a 4D image with 10 features per voxel (Figs. 1(a3 to a5)): 3 MK-Curve features (ZeroMK-b0, MaxMK-b0, and MaxMK), 3 MK-Curve-corrected DKI parameters (MK, AK and RK) and 4 MK-Curve-corrected DTI parameters (FA, and the three eigenvalues, e1, e2, e3). Each parameter was rescaled using a z-transform across all voxels within a brain mask.
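The per-feature rescaling can be sketched as follows. This is a minimal illustration on a flattened feature map, assuming that the z-transform uses the population standard deviation of the voxels inside the brain mask and that voxels outside the mask are set to zero; neither detail is specified in the text above.

```python
import statistics


def zscore_within_mask(values, mask):
    """Z-transform one feature map using only the voxels inside the brain mask.

    values: flattened feature map (list of floats)
    mask:   flattened brain mask (list of bools, same length)
    """
    inside = [v for v, m in zip(values, mask) if m]
    mean = statistics.fmean(inside)
    std = statistics.pstdev(inside)
    if std == 0.0:
        raise ValueError("feature is constant inside the mask")
    # Voxels outside the mask are set to 0 (an assumption of this sketch).
    return [(v - mean) / std if m else 0.0 for v, m in zip(values, mask)]


# Toy example: the last voxel is background and does not influence the statistics.
rescaled = zscore_within_mask([1.0, 2.0, 3.0, 100.0], [True, True, True, False])
```

Computing the statistics inside the mask only keeps background voxels from dominating the mean and standard deviation.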

For comparison (see Section 3.2.1), we also considered the following descriptors: 1) a DKI-based descriptor that included only the DKI and DTI features obtained by fitting the DKI model to the original, uncorrected data; and 2) a DTI-based descriptor, which was computed by a nonlinear fit of the b = 0 and b = 1000 s/mm² data to a diffusion tensor.

2.2. CNN tissue segmentation model training

We applied CNNs to train models that segment WM, GM and CSF from different dMRI descriptors as input. In brief, the CNN had the three typical types of layers (LeCun et al., 1998; Krizhevsky et al., 2012): convolution layers and pooling layers to perform feature extraction, and a fully connected layer to map the extracted features into the final tissue segmentation maps. There were two main CNN design choices: 1) the choice of CNN architecture, and 2) the design of the loss function. These two choices are described below.

2.2.1. Multi-view 2D Unet CNNs

For the CNN architecture, we selected a Unet architecture (Ronneberger et al., 2015), which has previously been successfully applied to neuroimage analysis (Dong et al., 2017; Wasserthal et al., 2018; Hwang et al., 2019). While in principle the Unet architecture allows extensions to 3D image segmentation, we used 2D image slices as network input to boost memory efficiency and to enable processing of the high-resolution HCP data used for model training (see Section 3.1 for data details). However, to leverage the 3D neighborhood information in the 3D feature volume, we trained three 2D CNNs using 2D slices from three different orientations: axial, coronal and sagittal. This is similar to the model training process proposed in Wasserthal et al. (2018). The final tissue segmentation prediction was computed based on the average of the prediction probabilities across the three views (see Supplementary Figure 2 for a comparison between using the three views and using each individual view).
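The slicing of a feature volume into the three views can be sketched as below. This is an illustration on nested Python lists, not the code used in the study; the indexing convention `volume[x][y][z]` (x left-right, y posterior-anterior, z inferior-superior) and the function name are assumptions.

```python
def extract_views(volume):
    """Split a 3D volume, indexed as volume[x][y][z], into 2D slices per view.

    Assumed axis convention: fixing x gives sagittal slices, fixing y gives
    coronal slices, and fixing z gives axial slices.
    """
    nx, ny, nz = len(volume), len(volume[0]), len(volume[0][0])
    sagittal = [[[volume[x][y][z] for z in range(nz)] for y in range(ny)]
                for x in range(nx)]
    coronal = [[[volume[x][y][z] for z in range(nz)] for x in range(nx)]
               for y in range(ny)]
    axial = [[[volume[x][y][z] for y in range(ny)] for x in range(nx)]
             for z in range(nz)]
    return {"sagittal": sagittal, "coronal": coronal, "axial": axial}


# Toy 2 x 3 x 4 volume whose voxel value encodes its own coordinates.
toy_volume = [[[100 * x + 10 * y + z for z in range(4)] for y in range(3)]
              for x in range(2)]
views = extract_views(toy_volume)
```

Each view yields a different number of slices (here 2 sagittal, 3 coronal and 4 axial), and each stack would be passed to the network trained for that orientation.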

2.2.2. Augmented target loss function

A loss function, which evaluates how well the CNN models the training data, is needed to optimize the weights of the CNN. To further improve the CNN training, we designed a new loss function according to the recently proposed framework of augmented target loss functions (Breger et al., 2020). Augmented target loss functions modify a given loss function by including prior knowledge of a particular task. The prior knowledge is introduced by using transformations to project the prediction (output y) and the ground truth (target t) into alternative spaces (Breger et al., 2020). In its general form, the augmented target loss function, LAT, can be written as

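$$L_{AT}(y, t) = \sum_{j=1}^{d} \alpha_j \, L_j\big(T_j(y), T_j(t)\big) \tag{1}$$

where $T_j$ is a transformation, $L_j$ is a loss function, and $\alpha_j > 0$ is a weight for each of the $d$ loss functions, $j \in \{1, \ldots, d\}$.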
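Read as a weighted sum of d loss terms, each applied to a transformed prediction and target, the general form can be sketched in a few lines. The helper names (`augmented_target_loss`, `mean_squared_error`, `identity`, `total_mass`) and the toy inputs are hypothetical and serve only to show the structure.

```python
def augmented_target_loss(y, t, terms):
    """Weighted sum of loss terms on transformed prediction and target.

    terms: list of (alpha_j, T_j, L_j) with alpha_j > 0, where T_j maps a
    prediction or target into an alternative space and L_j compares the two.
    """
    total = 0.0
    for alpha, transform, loss in terms:
        if alpha <= 0:
            raise ValueError("each weight alpha_j must be positive")
        total += alpha * loss(transform(y), transform(t))
    return total


def mean_squared_error(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)


def identity(v):
    return v


def total_mass(v):
    # Example transformation: collapse a vector to the sum of its entries.
    return [sum(v)]


toy_loss = augmented_target_loss(
    [0.2, 0.8], [0.0, 1.0],
    [(1.0, identity, mean_squared_error), (0.5, total_mass, mean_squared_error)],
)
```

With the identity transformation and a single term, this reduces to the underlying loss; additional terms add task-specific penalties.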

To improve the segmentation of GM, WM and CSF, we added two kinds of prior information: 1) that misclassified voxels are more common on the boundaries of the different regions, and 2) that CSF has essentially different diffusion properties (free diffusion) from GM and WM (hindered and restricted diffusion). Therefore, we added two penalty elements to the CNN’s loss function:

$$L_{AT}(y, t) = L_{CE}(y, t) + \alpha_1 \, L_{CE}(y_{\Omega}, t_{\Omega}) + \alpha_2 \, L_{MSE}\big(T(y), T(t)\big) \tag{2}$$

Here, the first term, $L_{CE}$, is the conventional categorical cross-entropy loss function (Goodfellow et al., 2016). The second term penalizes misclassification in voxels included in the boundary region, $\Omega$, which was computed by applying a Laplacian of Gaussian (LoG) edge filter (Parker, 2010) to the target segmentation, $t$; $y_{\Omega}$ and $t_{\Omega}$ denote $y$ and $t$ restricted to $\Omega$. The third term penalizes misclassification between CSF and either GM or WM by applying the transformation $T(\cdot) = \langle \cdot, (1, 1, 0)^{T} \rangle$ on $y$ and $t$, where $\langle \cdot, \cdot \rangle$ denotes the inner product and the three channels correspond to WM, GM and CSF, respectively. $L_{MSE}$ is the mean-squared error loss function, i.e., the mean of $(T(y) - T(t))^2$ over all samples. The added information/penalization is weighted by the parameters $\alpha_1$ and $\alpha_2$.
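A minimal sketch of a loss with these three terms is given below, written for a flattened list of voxels with channels ordered WM, GM, CSF. It assumes that the boundary term is a cross-entropy restricted to the boundary voxels and that the boundary mask has already been computed. The function names are hypothetical, and the default weights are the values reported in Section 3.2.1.

```python
import math

EPS = 1e-12  # guards against log(0)


def cross_entropy(y, t):
    """Mean categorical cross-entropy; y and t are lists of per-voxel class vectors."""
    total = 0.0
    for probs, target in zip(y, t):
        total -= sum(tc * math.log(max(pc, EPS)) for pc, tc in zip(probs, target))
    return total / len(y)


def non_csf_probability(vec):
    """T(v) = <v, (1, 1, 0)^T>: the combined WM + GM channel."""
    return vec[0] + vec[1]


def boundary_aware_loss(y, t, boundary, alpha1=0.05, alpha2=0.5):
    """Cross-entropy + boundary cross-entropy + MSE on the WM+GM versus CSF split."""
    loss = cross_entropy(y, t)
    y_b = [p for p, b in zip(y, boundary) if b]
    t_b = [q for q, b in zip(t, boundary) if b]
    if y_b:  # skip the boundary term when the mask is empty
        loss += alpha1 * cross_entropy(y_b, t_b)
    mse = sum((non_csf_probability(p) - non_csf_probability(q)) ** 2
              for p, q in zip(y, t)) / len(y)
    return loss + alpha2 * mse


# Three toy voxels (WM, GM, CSF) with one-hot targets; only the second is on a boundary.
pred = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.1, 0.1, 0.8]]
target = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
loss_value = boundary_aware_loss(pred, target, [False, True, False])
```

The third term is insensitive to confusion between WM and GM, since both map to the same value under the transformation, so it only penalizes errors that involve CSF.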

We trained three Unet CNN models, corresponding to the axial, coronal and sagittal views of the input volume, using the Deep Learning Toolbox™ in Matlab (version 2018). Adaptive moment estimation (ADAM) was used for optimization, with a learning rate of 0.0005, a batch size of 8, and a total of 50 epochs, which was sufficient to reach convergence in training and validation (Supplementary Figure 1).

2.3. Tissue segmentation prediction of new dMRI data

To perform tissue segmentation on new subjects that were not included in the training, we applied the trained CNN model to the dMRI data of these subjects (Figure 1(c)). First, a tissue feature descriptor was extracted from the new dMRI data (as described in Section 2.1), resulting in a 4D volume of the 10 features (Figure 1(c2)). Zero padding was performed to maintain the same matrix size as the training images. Then, the 4D volume was separated into axial, sagittal and coronal slices, and each slice was fed to the trained CNN model of the corresponding view to output segmentation probability maps with a probability score for each tissue type. For each voxel, these scores were averaged across the outputs of the three views. These prediction outputs were then reconstructed into 4D maps (Figure 1(c3)). From the 4D probabilistic segmentation maps, we computed a 3D tissue segmentation map (Figure 1(c4)), where each voxel was assigned the tissue type that had the maximal probability.
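The fusion of the three views and the final label assignment can be sketched as follows. The example assumes that the per-view outputs have already been reassembled into the same voxel order, with channels ordered WM, GM, CSF; the function name `fuse_views` is hypothetical.

```python
TISSUES = ("WM", "GM", "CSF")


def fuse_views(axial, coronal, sagittal):
    """Average per-voxel probabilities over three views and pick the top class.

    Each argument is a list with one [p_WM, p_GM, p_CSF] vector per voxel.
    Returns (averaged probabilities, predicted labels).
    """
    fused, labels = [], []
    for a, c, s in zip(axial, coronal, sagittal):
        mean = [(pa + pc + ps) / 3.0 for pa, pc, ps in zip(a, c, s)]
        fused.append(mean)
        labels.append(TISSUES[mean.index(max(mean))])
    return fused, labels


# Two toy voxels; the views disagree on the second one.
axial_out = [[0.6, 0.3, 0.1], [0.2, 0.3, 0.5]]
coronal_out = [[0.5, 0.4, 0.1], [0.1, 0.6, 0.3]]
sagittal_out = [[0.7, 0.2, 0.1], [0.3, 0.2, 0.5]]
fused_probs, fused_labels = fuse_views(axial_out, coronal_out, sagittal_out)
```

In the second voxel the coronal view alone would predict GM, but the averaged probabilities favor CSF, which illustrates how the fusion can override a single view.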

3. Experiments

3.1. MRI data

We included dMRI and anatomical T1w or T2w MRI data from five different sources. The first source included high-quality MRI data from the HCP (Glasser et al., 2013). The HCP data were used to train the CNNs, as well as for model validation and testing. The other four sources included data from studies geared towards clinical applications, where MRI data were obtained with a clinical acquisition protocol (CAP). The CAP MRI data were used to test how the trained CNNs generalized to different acquisition protocols. The dMRI data were used to extract the MK-Curve-based feature descriptor. The anatomical MRI (T1w or T2w) data were used to generate a tissue segmentation that was considered as “ground truth”. As mentioned above, the high quality of the HCP data allows accurate registration of the anatomical-MRI-based segmentation to the dMRI space, resulting in a high quality segmentation in the dMRI space that can be considered close to ground truth. For the CAP data, the co-registered anatomical-MRI-based segmentation may not be optimal, but we use it here as a comparison to maintain a consistent reference point. Below, we introduce the MRI acquisition, data preprocessing, and anatomical-MRI-based tissue segmentation for each dataset.

3.1.1. Human Connectome Project (HCP) data

We included MRI data¹ from a total of 100 HCP subjects (age: 29.1 ± 3.7 years; gender: 54 females and 46 males), where 70 MRI datasets were assigned for model training, 20 MRI datasets were assigned for validation, and 10 MRI datasets were assigned for testing.

The HCP MRI data were acquired with a high quality image acquisition protocol using a customized Connectome Siemens Skyra scanner. The acquisition parameters used for the dMRI data were TE = 89.5 ms, TR = 5520 ms, phase partial Fourier = 6/8, and voxel size = 1.25 × 1.25 × 1.25 mm³. A total of 288 volumes were acquired for each subject (acquired in both LR and RL phase encoding to correct for EPI distortions), including 18 baseline volumes with a low diffusion weighting b = 5 s/mm² and 270 volumes evenly distributed at three shells of b = 1000/2000/3000 s/mm². The acquisition parameters for the T2w data were TE = 565 ms, TR = 3200 ms, and voxel size = 0.7 × 0.7 × 0.7 mm³. The dMRI data were provided to us already processed with the well-designed HCP minimal preprocessing pipeline (Glasser et al., 2013), which includes brain masking, motion correction, eddy current correction, EPI distortion correction, and coregistration with the anatomical T2w data.

To obtain input tissue type labels for training and testing, we applied Statistical Parametric Mapping (SPM, version SPM12) (Ashburner and Friston, 2005) on the T2w images, resulting in a CSF, WM or GM label for each voxel. The labels were projected into the co-registered dMRI data using nearest neighbor interpolation. We note that SPM is often the method of choice in generating high quality reference brain segmentation to compare with dMRI-based tissue segmentation (Schnell et al., 2009; Ciritsis et al., 2018; Cheng et al., 2020), although other segmentation tools, e.g., FMRIB’s Automated Segmentation Tool (FSL FAST) (Jenkinson et al., 2012) could also be used instead.

3.1.2. Clinical Acquisition Protocol (CAP) data

The CNN tissue segmentation models were tested on another 90 MRI datasets that were from four different studies geared towards clinical applications (referred to as VERIO, MultiCenter, ABCD, and SUDMEX), and were acquired with different diffusion imaging protocols. The MRI data from the VERIO study were used to compare between tissue feature descriptors and various machine learning classifiers. The MRI data from all CAP studies were used to compare between our proposed segmentation approach and other state-of-the-art dMRI segmentation methods.

The VERIO data contained our onsite MRI datasets from 10 healthy subjects (age: 22.7 ± 5.4 years; gender: 5 females and 5 males). These datasets were acquired with approval of the local ethics board. The MRI data were acquired using a Siemens Magnetom Verio 3T scanner (Siemens Healthcare, Erlangen, Germany). The acquisition parameters used for the dMRI data were TE = 109 ms, TR = 15800 ms, phase partial Fourier = 6/8, and voxel size = 2 × 2 × 2 mm³. A total of 65 volumes were acquired for each subject, including 5 baseline volumes with b = 0 s/mm² and 30 volumes with gradient directions that were evenly distributed, and repeated at two shells of b = 1000/3000 s/mm². The acquisition parameters for the T2w data were TE = 422 ms, TR = 3200 ms, and voxel size = 1 × 1 × 1 mm³ using a 3D T2-SPACE sequence. The dMRI data were processed using our in-house data processing pipeline, including brain masking using the SlicerDMRI extension (dmri.slicer.org) (Norton et al., 2017; Zhang et al., 2020b) in 3D Slicer (www.slicer.org), eddy current-induced distortion correction and motion correction using FSL (Jenkinson et al., 2012), and EPI distortion correction and coregistration with the anatomical T2w data using the Advanced Normalization Tools (ANTS) (Avants et al., 2009). A manual quality check was performed to confirm good registration performance.

The MultiCenter data included 30 MRI datasets² (Tong et al., 2020) from 3 healthy traveling subjects (one male, age 23 years, and two females, age 23 and 26 years). For each subject, 10 MR datasets including both dMRI and T1w scans were acquired across 10 imaging centers, where the scanners were all 3T MR Magnetom Prisma (Siemens, Erlangen, Germany). The acquisition protocols were identical across the different centers. The acquisition parameters used for the dMRI data were TE = 71 ms, TR = 5400 ms, and voxel size = 1.5 × 1.5 × 1.5 mm³. A total of 96 volumes were acquired for each subject, repeated with inverted phase encoding (AP and PA), including 6 baseline volumes with b = 0 s/mm² and 30 diffusion weighted images evenly distributed and repeated in three shells of b = 1000/2000/3000 s/mm². The acquisition parameters for the T1w data were TE = 2.9 ms, TR = 5000 ms, and voxel size = 1.2 × 1 × 1 mm³. The downloaded dMRI data had already been preprocessed as described in (Tong et al., 2020), which includes brain masking, field map estimation, head motion correction, and EPI distortion correction.

The ABCD data included 30 MRI datasets³ (16 females and 14 males; age: 10.2 ± 0.6 years) that were randomly selected, 10 per site, from three sites of the Adolescent Brain Cognitive Development (ABCD) database (Casey et al., 2018). 20 subjects were scanned using a Siemens Prisma 3T Scanner (Siemens, Erlangen, Germany) and 10 were scanned using a GE MR750 3T scanner (GE Healthcare, Milwaukee, USA). For each subject, we used both dMRI and T1w scans. The acquisition parameters used for the dMRI data were TE = 88 ms (Siemens) / 81.9 ms (GE), TR = 4100 ms (both), and voxel size = 1.7 × 1.7 × 1.7 mm³ (both). A total of 103 volumes were acquired for each subject, including 7 baseline volumes with b = 0 s/mm², 6 volumes with b = 500 s/mm², 15 volumes with b = 1000 s/mm², 15 volumes with b = 2000 s/mm², and 60 volumes with b = 3000 s/mm². The downloaded dMRI data had already been preprocessed as described in (Hagler Jr et al., 2019), using a pipeline that included eddy current distortion correction, motion correction and B0 distortion correction. Additionally, we performed brain masking using the SlicerDMRI extension in 3D Slicer.

The SUDMEX data included 20 MRI datasets⁴ (3 females and 17 males; age: 33.9 ± 7.3 years) randomly selected from a study of substance use disorders (SUD) in Mexico (Garza-Villarreal et al., 2020). The MRI data were acquired using a Philips Ingenia 3T scanner (Philips Healthcare, Best, The Netherlands), and included dMRI and T1w scans. The acquisition parameters used for the dMRI data were TE = 127 ms, TR = 8552 ms, and voxel size = 2 × 2 × 2 mm³. A total of 136 volumes were acquired for each subject, including 8 baseline volumes with b = 0 s/mm², 32 volumes at b = 1000 s/mm², and 96 volumes at b = 3000 s/mm². The acquisition parameters for the T1w data were TE = 3.5 ms, TR = 7 ms, and voxel size = 1 × 1 × 1 mm³. Raw dMRI data were downloaded, on which we applied our in-house data processing pipeline, including brain masking using the SlicerDMRI extension in 3D Slicer, and eddy current induced distortion correction and motion correction using FSL.

For evaluation purposes, each dMRI scan from the four studies with CAP data was coregistered with the anatomical MRI data using the Advanced Normalization Tools (ANTS) (Avants et al., 2009). A manual quality check was performed to confirm good registration performance. We then applied SPM12 on the anatomical MRI to obtain a tissue segmentation, which was then projected onto the co-registered diffusion space using nearest neighbor interpolation.

3.2. Experimental comparison

We evaluated the performance of our proposed CNN-based segmentation in two experiments: 1) a comparison across machine learning classifiers and dMRI feature descriptors, and 2) a comparison with three state-of-the-art dMRI-based tissue segmentation methods.

3.2.1. Comparison of tissue feature descriptors and machine learning classifiers

We compared 9 approaches that used different tissue feature descriptors and machine learning classifiers. The compared feature descriptors were: 1) FDTI - containing the 4 DTI parameters computed from DTI modeling (as in (Kumazawa et al., 2010)); 2) FOrigDKI - containing the 3 DKI and 4 DTI parameters from DKI modeling (without any correction for implausible parameter values) (as in (Beejesh et al., 2019)); and 3) FProposed - containing all 10 MK-Curve-based parameters. For each of these feature descriptors, we performed tissue segmentation using three different classifiers: 1) SVM - a support vector machine classifier with a radial basis function (RBF) kernel function, as used in Ciritsis et al. (2018); Schnell et al. (2009), 2) CNN - a Unet architecture CNN with the conventional categorical cross-entropy loss function, 3) CNNAT - a Unet architecture CNN with our augmented target loss function. For each compared classifier, hyperparameters were tuned following a cross-validation process on the validation dataset. For the proposed CNNAT, the weighting parameters α1 and α2 were set to 0.05 and 0.5, respectively. To determine the settings of α1 and α2, we performed an evaluation of different combinations of the two parameters (each ranged from 0 to 1) and selected the combination that generated the lowest training loss. In total, there were 9 comparison methods in this experiment.

For each of the compared methods, we computed a tissue segmentation model using the 70 HCP datasets assigned for training, where the hyperparameters of the model were tuned using the 20 HCP datasets assigned for validation. To evaluate the tissue segmentation prediction performance, we applied each trained model on the 10 HCP datasets designated for testing, and on the 10 VERIO datasets, and we calculated the segmentation prediction accuracy, ACC, against the T2w-based labels for each dataset, as:

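$$\mathrm{ACC} = \frac{\text{number of voxels with a correctly predicted tissue label}}{\text{total number of voxels}} \times 100\% \tag{3}$$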

We computed ACC across voxels from the entire brain including all voxels within the brain mask, and also separately across voxels from the tissue boundary and from non-boundary voxels (detected using a LoG filter; as illustrated in Figure 1b). For each compared method, we computed the mean and the standard deviation of the segmentation prediction accuracy across the 10 HCP and the 10 VERIO datasets, respectively, across each of the regions (entire brain, boundary and non-boundary).
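The region-wise accuracy computation can be sketched as below, assuming that ACC is the percentage of voxels whose predicted label matches the reference label and that the boundary mask is already available. The function name and the toy labels are illustrative only.

```python
def accuracy(predicted, reference, region=None):
    """Percentage of voxels with a matching label, optionally within a region mask."""
    if region is None:
        region = [True] * len(reference)
    pairs = [(p, r) for p, r, m in zip(predicted, reference, region) if m]
    if not pairs:
        raise ValueError("the region contains no voxels")
    correct = sum(1 for p, r in pairs if p == r)
    return 100.0 * correct / len(pairs)


# Five toy voxels inside the brain mask; two of them lie on a tissue boundary.
pred_labels = ["WM", "WM", "GM", "CSF", "GM"]
ref_labels = ["WM", "GM", "GM", "CSF", "CSF"]
on_boundary = [False, True, False, False, True]

acc_whole = accuracy(pred_labels, ref_labels)
acc_boundary = accuracy(pred_labels, ref_labels, on_boundary)
acc_interior = accuracy(pred_labels, ref_labels, [not b for b in on_boundary])
```

Reporting the boundary and non-boundary regions separately shows where the errors concentrate, which a single whole-brain figure would hide.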

In addition, we also compared the segmentation performance across the entire WM, GM and CSF regions using the probabilistic similarity index (PSI) (Anbeek et al., 2005). PSI is a quantitative measure that evaluates probabilistic tissue segmentation prediction performance for each tissue type, T, as:

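$$\mathrm{PSI}(T) = \frac{2 \, \lvert \mathrm{ProbSeg}_{PD}(T) \cap \mathrm{ProbSeg}_{GT}(T) \rvert}{\lvert \mathrm{ProbSeg}_{PD}(T) \rvert + \lvert \mathrm{ProbSeg}_{GT}(T) \rvert} \tag{4}$$

where $\lvert \mathrm{ProbSeg}_{PD}(T) \cap \mathrm{ProbSeg}_{GT}(T) \rvert$ indicates the sum of the probability values over the intersection of the predicted and the ground truth segmentations, and $\lvert \mathrm{ProbSeg}_{PD}(T) \rvert$ and $\lvert \mathrm{ProbSeg}_{GT}(T) \rvert$ indicate the sums of the probability values of the predicted and of the ground truth segmentations, respectively. The PSI is in the range between 0 and 1, where a higher value represents a better agreement with the ground truth. The mean and the standard deviation of the Dice score and PSI across the 10 testing HCP subjects and the 10 testing CAP datasets, respectively, were computed for a quantitative comparison.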
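One possible reading of the PSI for a single tissue type is sketched below. The use of the voxel-wise minimum as the probabilistic "intersection" is an assumption of this example; for a binary reference it reduces to summing the predicted probabilities inside the reference segmentation. The function name is hypothetical.

```python
def probabilistic_similarity_index(prob_pred, prob_ref):
    """PSI for one tissue type from two flattened probability maps."""
    if len(prob_pred) != len(prob_ref):
        raise ValueError("maps must have the same number of voxels")
    # Voxel-wise minimum as the probabilistic intersection (assumption).
    overlap = sum(min(p, g) for p, g in zip(prob_pred, prob_ref))
    total = sum(prob_pred) + sum(prob_ref)
    if total == 0.0:
        return 1.0  # both maps empty: treated as perfect agreement by convention
    return 2.0 * overlap / total


# Toy WM probability map compared against a binary reference.
psi_wm = probabilistic_similarity_index([0.9, 0.6, 0.2, 0.0], [1.0, 1.0, 0.0, 0.0])
```

Unlike a score computed on hard labels, this measure rewards confident correct predictions and penalizes probability mass assigned outside the reference region.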

3.2.2. Comparison with other state-of-the-art dMRI tissue segmentation methods

We evaluated the proposed method in comparison to three other state-of-the-art dMRI-based tissue segmentation methods: 1) segmentation based on the estimation of a dMRI-based tissue response function (Dhollander et al., 2016, 2018) in the constrained spherical deconvolution (CSD) framework (Tournier et al., 2007), 2) application of the SPM tissue segmentation algorithm (Ashburner and Friston, 2005) directly to the diffusion baseline (b0) image, and 3) a method that uses the direction-averaged diffusion weighted imaging (daDWI) signal as input to SPM tissue segmentation (Cheng et al., 2020). In the rest of the paper, we refer to these methods as the CSD method, the SPM+b0 method, and the daDWI method, respectively. Briefly, the CSD method is an unsupervised algorithm that leverages the relative diffusion properties to estimate response functions⁵ of GM, WM and CSF (Dhollander et al., 2016). Subsequently, the response functions are used in the multi-shell multi-tissue CSD (MSMT-CSD) algorithm (Tournier et al., 2007) to calculate volume fraction maps for the three tissue types (Jeurissen et al., 2014), which are analogous to the probabilistic maps that our proposed method outputs. The SPM+b0 method leverages the fact that the b0 image has a T2w contrast and thus applies the SPM tissue segmentation method (Ashburner and Friston, 2005) directly on the b0 image without inter-MR-modality registration. The SPM+b0 method was previously used, for example, to assist brain tissue segmentation (Mah et al., 2014). The daDWI method fits a power-law based model to the direction-averaged signal of the DWI images to obtain two parametric maps named alpha and beta (McKinnon et al., 2017). The alpha and beta images are used for creating a pseudo T1w image (Cheng et al., 2020), which is subsequently input to SPM to obtain the GM, WM and CSF segmentation.

For each of the compared methods, the output of the prediction includes the overall tissue segmentation map, where each voxel has a predicted label (GM, WM, CSF or background), and three probabilistic segmentation maps (for GM, WM and CSF, respectively), where each voxel has a probability of belonging to a certain tissue type.

We performed the experimental comparison using the 10 HCP datasets designated for testing and the 90 datasets from the four different CAP studies. We evaluated our proposed method against the CSD, the SPM+b0, and the daDWI methods by comparing the overall ACC and the PSI (as introduced in Section 3.2.1). We also assessed spatial overlap with the co-registered T2w-based “ground truth” segmentation for each individual tissue type, T ∈ {WM, GM, CSF}, by computing the Dice score (Dice, 1945) of the predicted segmentation, SegPD, and the ground truth segmentation, SegGT, as:

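$$\mathrm{Dice}(T) = \frac{2\,\lvert \mathrm{Seg}_{PD} \cap \mathrm{Seg}_{GT} \rvert}{\lvert \mathrm{Seg}_{PD} \rvert + \lvert \mathrm{Seg}_{GT} \rvert} \qquad (5)$$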

where ⎹SegPD ∩ SegGT⎹ indicates the number of voxels in the intersection of the predicted and the ground truth segmentations, and ⎹SegPD⎹ and ⎹SegGT⎹ indicate the numbers of voxels in the predicted and the ground truth segmentations, respectively. The Dice score ranges between 0 and 1, where a higher value represents better agreement with the ground truth segmentation.
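
The per-tissue Dice score can be computed from two label maps as in the following minimal Python sketch. The integer label coding in `LABELS`, the function name `dice_per_tissue`, and the toy label arrays are hypothetical and serve only to illustrate the standard Dice overlap described above.

```python
import numpy as np

# Hypothetical integer coding of the label map (an assumption for this example).
LABELS = {"background": 0, "GM": 1, "WM": 2, "CSF": 3}


def dice_per_tissue(seg_pd, seg_gt, labels=LABELS):
    """Dice overlap between predicted and ground truth label maps, per tissue."""
    seg_pd = np.asarray(seg_pd)
    seg_gt = np.asarray(seg_gt)
    scores = {}
    for name, value in labels.items():
        if name == "background":
            continue  # only GM, WM and CSF are scored
        pd_mask = seg_pd == value
        gt_mask = seg_gt == value
        intersection = np.logical_and(pd_mask, gt_mask).sum()
        denominator = pd_mask.sum() + gt_mask.sum()
        # Undefined (NaN) when the tissue is absent from both segmentations.
        scores[name] = 2.0 * intersection / denominator if denominator > 0 else float("nan")
    return scores


# Toy example with six voxels (labels are made up for illustration).
seg_gt = np.array([1, 1, 2, 2, 3, 0])
seg_pd = np.array([1, 2, 2, 2, 3, 0])
scores = dice_per_tissue(seg_pd, seg_gt)
print(scores)
```

In this toy example one GM voxel is predicted as WM, which lowers the Dice score of both GM and WM while leaving CSF at 1.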

4. Results

4.1. Comparison of tissue feature descriptors and machine learning classifiers

The comparison of ACC across the 9 combinations of 3 feature descriptors and 3 machine learning classifiers is presented in Tables 1 and 2 for the HCP and VERIO data, respectively. The proposed classifier with the augmented target loss function, CNNAT, obtained higher accuracy than both the SVM and the conventional CNN classifiers for each feature descriptor. The improvement in accuracy compared with the conventional CNN was mostly in the tissue boundary region. Applying the proposed feature descriptor, FProposed, which includes the 10 MK-Curve-based features, generated higher ACC than the other feature descriptors for each of the classifiers studied. Overall, applying our proposed classifier, CNNAT, to the proposed descriptor, FProposed, achieved the highest tissue segmentation accuracy across the entire brain, with an ACC of 90.48% in the HCP data and 80.30% in the VERIO data. Notably, the proposed CNNAT also achieved reasonable accuracy using only the DTI features, FDTI (Table 1 and Supplementary Figure 3). Tables 1 and 2 also show that the proposed classifier, CNNAT, applied to the proposed descriptor, FProposed, had the highest PSI score for each of the GM, WM and CSF regions.

Table 1:

Quantitative comparison of the segmentation prediction across different tissue feature descriptors and machine learning classifiers on test HCP data. Reported are the prediction accuracy (ACC) across the entire brain, and separately across tissue boundary and non-boundary regions, as well as the probabilistic similarity index (PSI) for WM, GM and CSF.

| Measure | Feature descriptor | Region | SVM | CNN | CNNAT |
|---|---|---|---|---|---|
| ACC | FDTI | entire brain | 76.80±1.39% | 89.35±0.69% | 89.85±0.61% |
| ACC | FDTI | boundary | 63.60±1.25% | 79.74±1.75% | 81.78±1.56% |
| ACC | FDTI | non-boundary | 88.54±1.49% | 99.11±0.24% | 99.09±0.22% |
| ACC | FOrigDKI | entire brain | 81.69±1.62% | 89.23±0.60% | 89.82±0.54% |
| ACC | FOrigDKI | boundary | 68.49±1.64% | 80.64±1.61% | 82.81±1.46% |
| ACC | FOrigDKI | non-boundary | 93.43±1.83% | 99.08±0.28% | 99.12±0.27% |
| ACC | FProposed | entire brain | 82.92±1.53% | 89.95±0.53% | 90.48±0.51% |
| ACC | FProposed | boundary | 69.72±1.50% | 81.03±1.54% | 83.09±1.48% |
| ACC | FProposed | non-boundary | 94.66±1.73% | 99.17±0.25% | 99.17±0.26% |
| PSI | FDTI | GM | 0.853±0.004 | 0.968±0.003 | 0.969±0.003 |
| PSI | FDTI | WM | 0.861±0.003 | 0.974±0.002 | 0.975±0.003 |
| PSI | FDTI | CSF | 0.849±0.010 | 0.953±0.009 | 0.957±0.010 |
| PSI | FOrigDKI | GM | 0.857±0.004 | 0.972±0.004 | 0.973±0.004 |
| PSI | FOrigDKI | WM | 0.860±0.003 | 0.973±0.003 | 0.975±0.003 |
| PSI | FOrigDKI | CSF | 0.855±0.008 | 0.960±0.008 | 0.961±0.008 |
| PSI | FProposed | GM | 0.858±0.004 | 0.973±0.004 | 0.974±0.004 |
| PSI | FProposed | WM | 0.862±0.002 | 0.975±0.002 | 0.976±0.002 |
| PSI | FProposed | CSF | 0.853±0.009 | 0.957±0.008 | 0.961±0.009 |

Table 2:

Quantitative comparison of the segmentation prediction across different tissue feature descriptors and machine learning classifiers on test VERIO data. Reported are the prediction accuracy (ACC) across the entire brain, and separately across tissue boundary and non-boundary regions, as well as the probabilistic similarity index (PSI) for WM, GM and CSF.

| Measure | Feature descriptor | Region | SVM | CNN | CNNAT |
|---|---|---|---|---|---|
| ACC | FDTI | entire brain | 56.65±6.61% | 76.25±3.66% | 76.68±4.35% |
| ACC | FDTI | boundary | 55.82±6.62% | 66.22±4.21% | 69.49±4.64% |
| ACC | FDTI | non-boundary | 57.58±6.62% | 88.78±3.56% | 90.32±2.79% |
| ACC | FOrigDKI | entire brain | 59.55±3.53% | 77.78±3.10% | 78.35±3.02% |
| ACC | FOrigDKI | boundary | 58.73±3.54% | 66.19±3.67% | 70.36±3.67% |
| ACC | FOrigDKI | non-boundary | 60.48±3.52% | 91.53±2.14% | 91.57±2.30% |
| ACC | FProposed | entire brain | 62.28±3.49% | 79.39±2.25% | 80.30±2.02% |
| ACC | FProposed | boundary | 61.46±3.48% | 68.70±3.05% | 73.00±2.78% |
| ACC | FProposed | non-boundary | 63.21±3.48% | 92.05±2.45% | 92.62±2.55% |
| PSI | FDTI | GM | 0.831±0.019 | 0.947±0.019 | 0.948±0.017 |
| PSI | FDTI | WM | 0.814±0.009 | 0.927±0.009 | 0.934±0.009 |
| PSI | FDTI | CSF | 0.831±0.031 | 0.936±0.030 | 0.940±0.029 |
| PSI | FOrigDKI | GM | 0.831±0.023 | 0.947±0.021 | 0.949±0.021 |
| PSI | FOrigDKI | WM | 0.817±0.017 | 0.932±0.017 | 0.934±0.017 |
| PSI | FOrigDKI | CSF | 0.858±0.015 | 0.961±0.013 | 0.956±0.015 |
| PSI | FProposed | GM | 0.832±0.023 | 0.946±0.021 | 0.951±0.021 |
| PSI | FProposed | WM | 0.836±0.011 | 0.949±0.011 | 0.953±0.010 |
| PSI | FProposed | CSF | 0.847±0.032 | 0.951±0.028 | 0.965±0.032 |
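
The observation that the gain of CNNAT over the conventional CNN is concentrated in the tissue boundary region can be checked directly from the mean ACC values reported in Tables 1 and 2. The short Python sketch below does this arithmetic for the FProposed descriptor; the numbers are copied from the tables, while the variable names are chosen for this example only.

```python
# Mean ACC (%) for the FProposed descriptor, copied from Table 1 (HCP)
# and Table 2 (VERIO). Standard deviations are omitted in this sketch.
acc = {
    "HCP": {
        "boundary": {"CNN": 81.03, "CNNAT": 83.09},
        "non-boundary": {"CNN": 99.17, "CNNAT": 99.17},
    },
    "VERIO": {
        "boundary": {"CNN": 68.70, "CNNAT": 73.00},
        "non-boundary": {"CNN": 92.05, "CNNAT": 92.62},
    },
}

# Gain of CNNAT over CNN in percentage points, per dataset and region.
gains = {
    dataset: {
        region: round(values["CNNAT"] - values["CNN"], 2)
        for region, values in regions.items()
    }
    for dataset, regions in acc.items()
}

for dataset, regions in gains.items():
    for region, gain in regions.items():
        print(f"{dataset:5s} {region:12s} gain = {gain:+.2f} percentage points")
```

For FProposed, the boundary gain is 2.06 percentage points on the HCP data and 4.30 on the VERIO data, compared with 0.00 and 0.57 in the non-boundary region.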

4.2. Comparison with state-of-the-art tissue segmentation methods

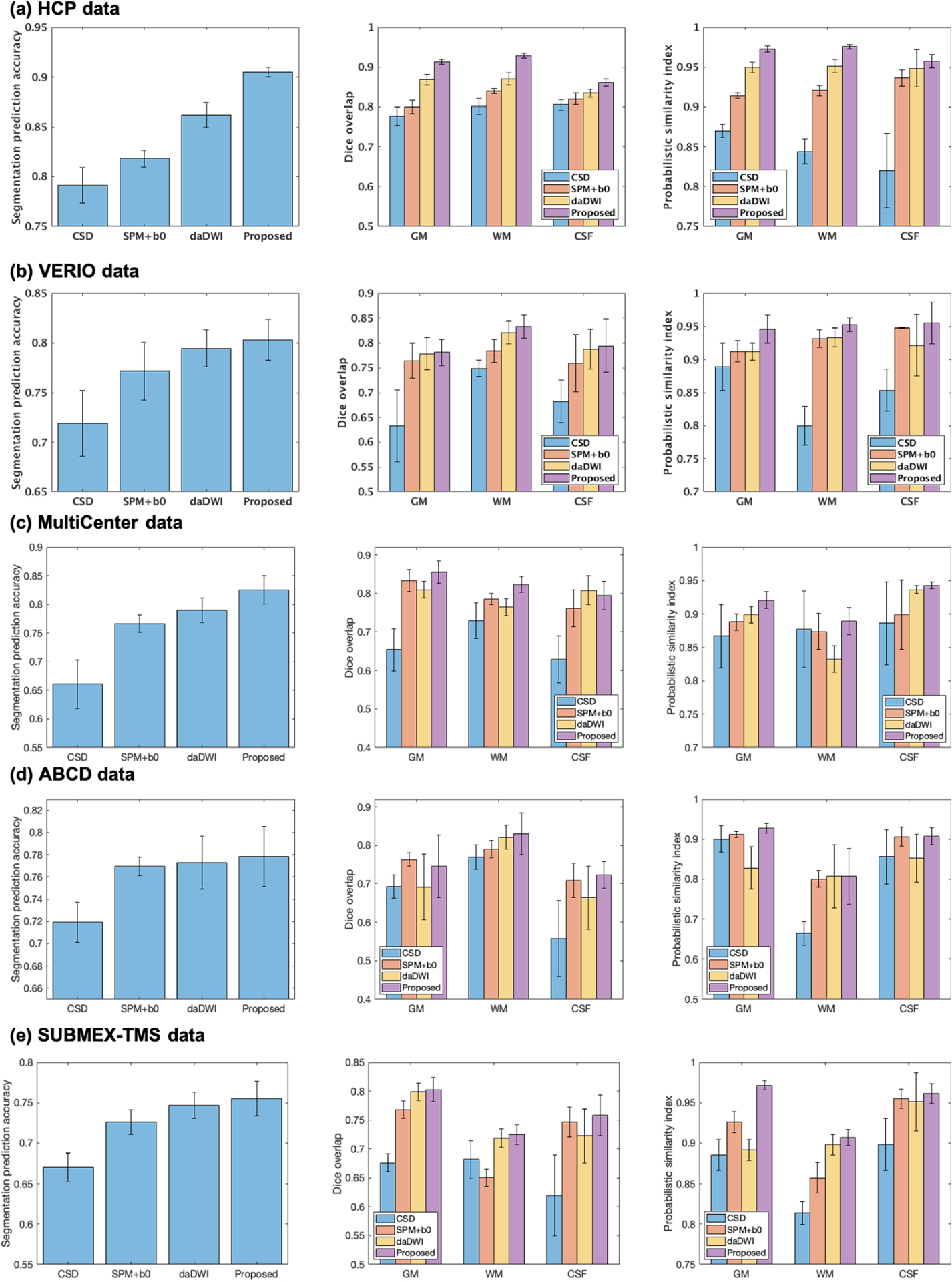

Quantitative comparisons between our method and other state-of-the-art tissue segmentation methods (Figure 2) show that our method achieved the highest mean classification accuracy (ACC) across the testing datasets in each of the five studies considered as data sources. Our proposed method also outperformed the three state-of-the-art methods (CSD, SPM+b0 and daDWI) on the Dice overlap and the PSI in 28 out of 30 comparisons (2 measures × 3 tissue types × 5 studies). The computational time of each compared method is reported in Supplementary Table 1.

Figure 2:

Quantitative comparison of the overall segmentation prediction accuracy (left column), the Dice overlap (center column), and the probabilistic similarity index (PSI; right column) across the CSD, the SPM+b0, the daDWI, and the proposed tissue segmentation methods.

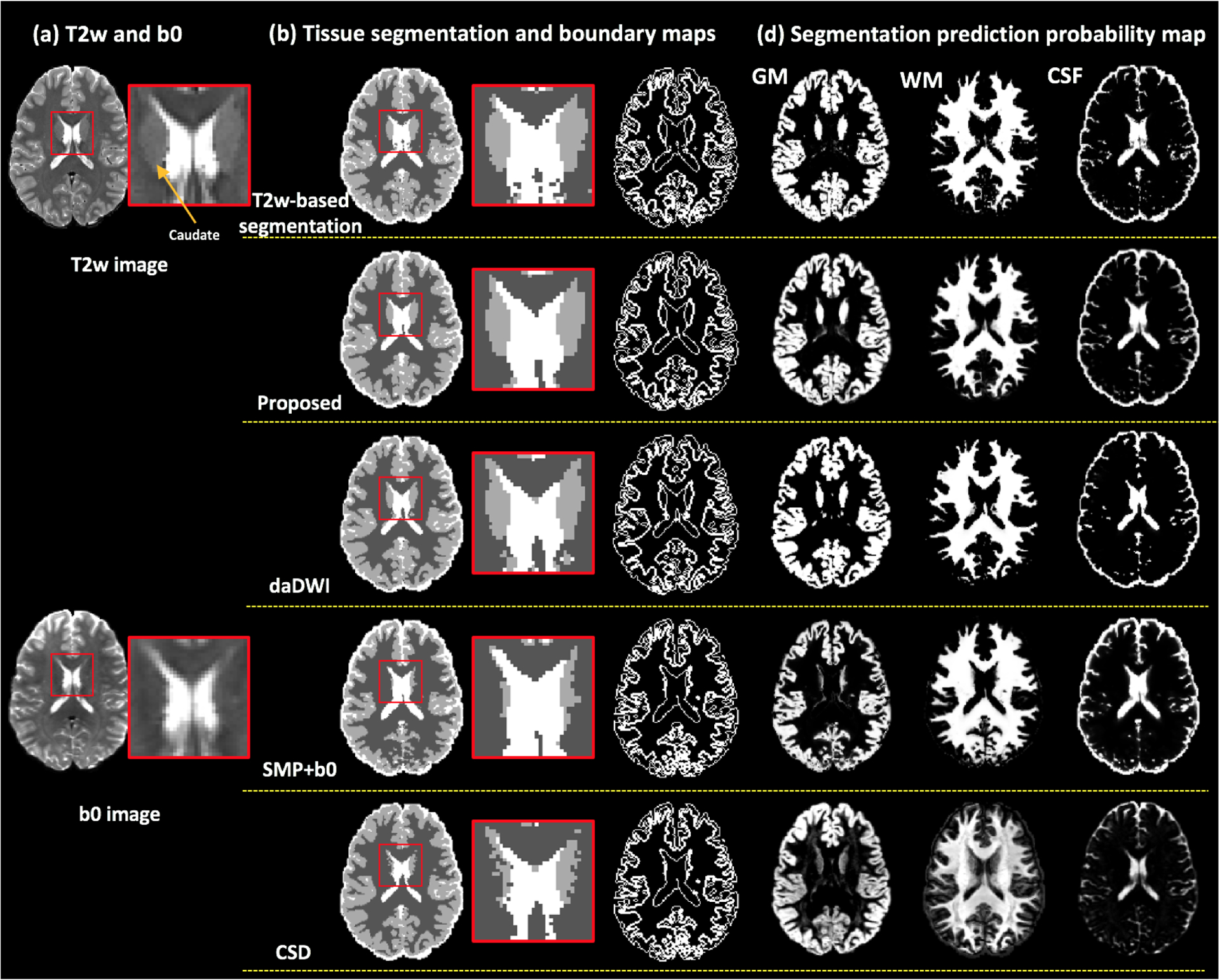

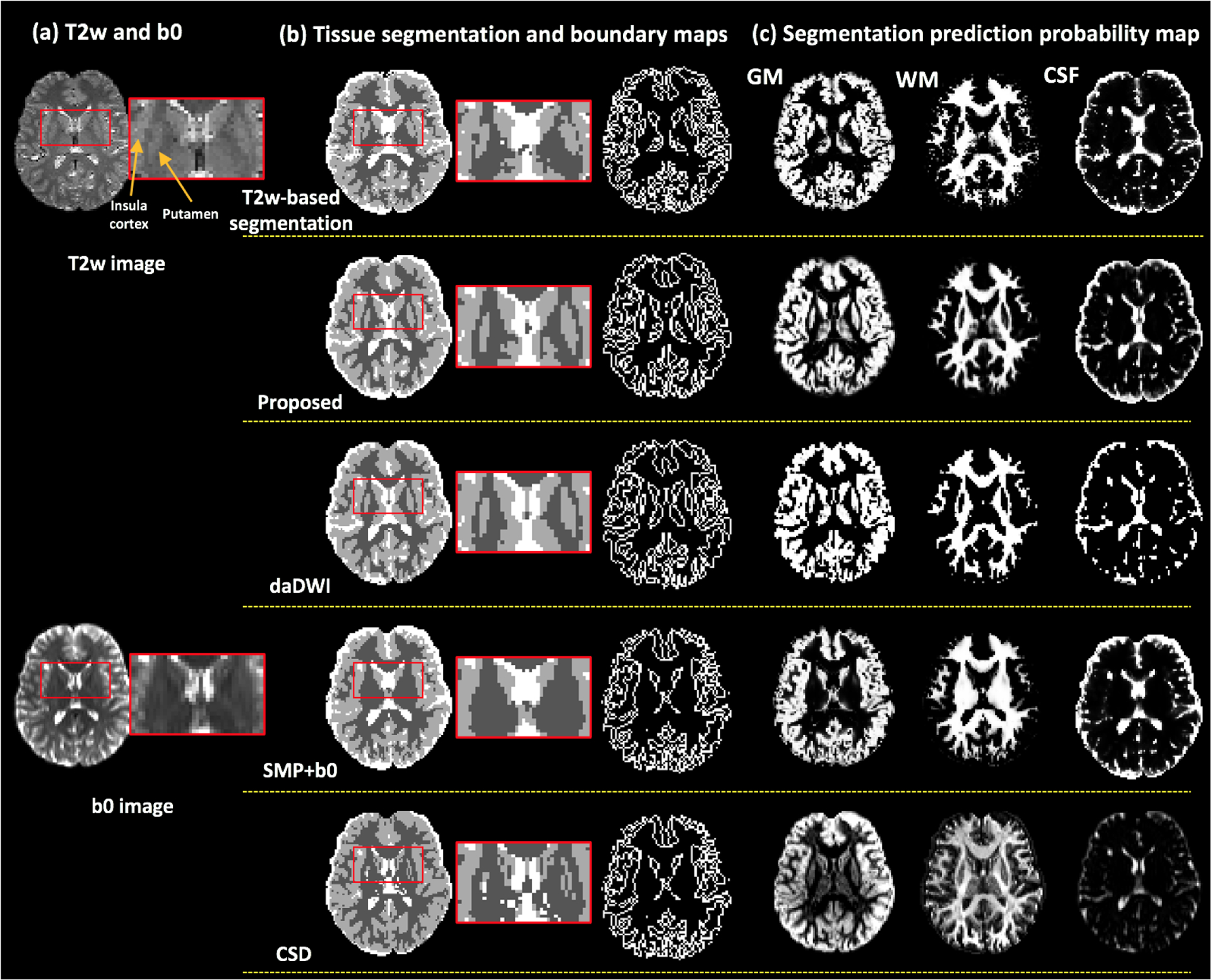

Example maps of the predicted tissue segmentation, tissue boundary and probabilistic maps are provided (see Figure 3 for HCP data, Figure 4 for VERIO data, and Supplementary Figures 4–6 for MultiCenter, ABCD and SUDMEX data). Visual assessment of these maps shows that our method produced tissue segmentation maps that are highly similar to the T2w-based tissue segmentation. The daDWI method also produced segmentation maps that are similar to the T2w-based segmentation, while those of the SPM+b0 and CSD methods are less similar. For example, in Figure 3, the SPM+b0 and the CSD methods mislabeled parts of the caudate as WM, and in Figure 4, the SPM+b0 method mislabeled the putamen as WM.

Figure 3:

HCP data. Visual comparison between the proposed method and the daDWI method, the SPM+b0 method and the CSD method on one example HCP subject, with respect to the T2w-based segmentation. Column (a) shows the T2w and the b0 images of the example HCP subject. Column (b) shows the overall GM/WM/CSF segmentation and tissue boundary map calculated from each compared method. The inset images enlarge the region near the caudate. Column (c) shows the segmentation prediction probability maps for each tissue type that were calculated from each compared method.

Figure 4:

CAP data. Visual comparison between the proposed method and the daDWI method, the SPM+b0 method and the CSD method on one example CAP subject, with respect to the T2w-based segmentation. Column (a) shows the T2w and the b0 images of the example CAP subject. Column (b) shows the overall GM/WM/CSF segmentation and tissue boundary map calculated from each compared method. The inset images enlarge the region near the putamen. Column (c) shows the segmentation prediction probability maps for each tissue type that were calculated from each compared method.

Visual inspection also shows apparent tissue segmentation errors in the T2w-based method, in particular in the CAP data, whereas our method achieves a more anatomically correct tissue segmentation. For example, in the T2w-based tissue segmentation in Figure 4(b), several WM voxels belonging to the external capsule between the putamen and the insular cortex are mislabeled as GM. Our method generates a tissue segmentation map that is smoother and more closely resembles the expected shape of the putamen and the insular cortex, corresponding better with the anatomy as it appears on the T2w image.

5. Discussion

In this work, we proposed a novel deep learning brain tissue segmentation method that can be applied directly to dMRI data. We trained a CNN tissue segmentation model on high-quality HCP data, using MK-Curve-based DKI features and a new augmented target loss function. On the testing HCP data, our method achieved results highly comparable to the anatomical-MRI-based “ground truth” tissue segmentation, while avoiding inter-modality registration. We also demonstrated that our method performed well on dMRI data from acquisition protocols that had lower spatial and angular resolutions than those of the training HCP data. Quantitative and visual comparisons showed that our method consistently outperformed several state-of-the-art dMRI-based tissue segmentation methods.

The two CNN-based classifiers (i.e., with the conventional categorical cross-entropy loss function and with the augmented target loss function) obtained much higher prediction accuracies than the SVM classifier. The improvement might be because a CNN applies spatial operators that, at each voxel, take into account information from neighboring voxels, while the SVM classifier performs prediction based only on features derived from each individual voxel. Therefore, the CNN classifier can identify textures, shapes and relative locations of the voxels in the input feature volume that could contribute to the segmentation task. Comparing the two CNN-based classifiers, incorporating the augmented target loss function generated a higher prediction accuracy, in particular in the tissue boundary region, which suggests that the additional penalties on misclassification of voxels at the tissue boundaries and of the CSF tissue type were important for better segmentation.
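
To illustrate the general idea of penalizing boundary and CSF errors more strongly, the following Python sketch implements a weighted categorical cross-entropy. It is a simplified stand-in and not the augmented target loss function used in this work; the function name, the weighting scheme, the weight values, and the toy data are all assumptions made for this example.

```python
import numpy as np


def weighted_cross_entropy(probs, onehot, boundary_mask, class_weights, boundary_weight):
    """Weighted mean categorical cross-entropy (illustrative stand-in only).

    probs, onehot: arrays of shape (n_voxels, n_classes).
    boundary_mask: boolean array of shape (n_voxels,), True at tissue boundaries.
    class_weights: array of shape (n_classes,), e.g. a larger weight for CSF.
    boundary_weight: extra multiplicative weight for boundary voxels.
    """
    eps = 1e-12  # avoids log(0)
    # Per-voxel categorical cross-entropy.
    ce = -(onehot * np.log(probs + eps)).sum(axis=1)
    # Per-voxel weight: weight of the true class, scaled up at boundaries.
    weights = (onehot * class_weights).sum(axis=1)
    weights = weights * np.where(boundary_mask, boundary_weight, 1.0)
    return float((weights * ce).sum() / weights.sum())


# Toy example: three voxels, classes ordered as (GM, WM, CSF).
probs = np.array([[0.8, 0.1, 0.1],
                  [0.3, 0.6, 0.1],   # true GM predicted as WM, at a boundary
                  [0.2, 0.2, 0.6]])
onehot = np.array([[1, 0, 0],
                   [1, 0, 0],
                   [0, 0, 1]], dtype=float)
boundary_mask = np.array([False, True, False])

plain = weighted_cross_entropy(probs, onehot, boundary_mask, np.ones(3), 1.0)
weighted = weighted_cross_entropy(probs, onehot, boundary_mask, np.ones(3), 3.0)
print(f"unweighted loss = {plain:.3f}, boundary-weighted loss = {weighted:.3f}")
```

With all weights set to 1 the function reduces to the conventional mean cross-entropy. Raising the boundary weight increases the loss in this toy example because the misclassified voxel lies at a boundary, so errors there contribute more to the training signal.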

The proposed CNNAT achieved the highest prediction accuracy when using the feature descriptor with the 10 MK-Curve-based features. However, CNNAT with only the DTI parameters also showed a large improvement over the SVM classifier and reached a promising prediction accuracy (89.85% on the HCP data and 76.68% on the CAP data; see Supplementary Figure 3 for a visualization of DTI-feature-based tissue segmentation). These results demonstrate that the proposed CNN-based segmentation is also useful for standard single-shell dMRI data, where the additional DKI parameters are not available. However, when multi-shell data are available, adding DKI parameters (before or after MK-Curve correction) improves the prediction accuracy compared with using only the DTI parameters. The improvements were potentially due to the inclusion of information about restricted water diffusion that can be inferred from DKI but not from DTI, and that better separates white matter from CSF or GM. Correcting implausible parameters and adding the MK-Curve features consistently yielded a higher prediction accuracy than the original DKI parameters, demonstrating the usefulness of the MK-Curve method for tissue segmentation.

We showed good performance of the proposed method when applied to the CAP data, despite the fact that the CAP data had a lower image resolution and a lower number of gradient directions than the training HCP data. The ability of a tissue segmentation method to generalize to data from different acquisitions is important. dMRI acquisitions can have widely varying numbers of gradient directions, b-values, and magnitude of b-values, posing a challenge for machine learning. In our quantitative evaluation we showed that nearly 80% of the voxels were in agreement with the SPM segmentation of the coregistered anatomical MRI data, which was higher than the other methods that were evaluated.

Our proposed method performed tissue segmentation prediction directly from the dMRI data and thus could avoid obvious segmentation errors that arise when transferring the anatomical T2w-based “ground truth” segmentation to the dMRI space. In the literature, anatomical-MRI-based segmentation, e.g., the one obtained by SPM, is usually used as the “ground truth” data (Ciritsis et al., 2018; Schnell et al., 2009; Cheng et al., 2020), since the segmentation appears to be in good agreement with the known anatomy. However, transferring T1w- or T2w-based segmentation into the dMRI space is challenging due to the image distortions in dMRI data, which significantly affect inter-modality registration (Albi et al., 2018; Wu et al., 2008; Jones and Cercignani, 2010). In addition, the spatial resolution of anatomical MRI data is often higher than that of dMRI data, resulting in segmentation errors from smoothing and image interpolation when transferring the tissue segmentation computed from the high-resolution anatomical data to the low-resolution dMRI data. Segmentation errors in the “ground truth” T2w-based segmentation were also reflected in our data, especially in the CAP data, where visual inspection shows that the T2w co-registered segmentation appears to mislabel some brain areas, in particular at the tissue boundaries. We showed that our proposed method was able to segment correctly in areas where the T2w co-registered segmentation was apparently wrong, thus generating a visually more correct segmentation corresponding to the anatomy as it appears on the T2w image. Similar to previous studies (Beejesh et al., 2019; Hui et al., 2015; Steven et al., 2014; Cheng et al., 2020; Yap et al., 2015), we chose to calculate the accuracy against the “ground truth” segmentation from co-registered anatomical MRI data. Therefore, regions where our method correctly labeled the tissue type but the “ground truth” did not would lower the accuracy score. This observation might explain the overall relatively lower accuracy scores of the CAP data, especially for acquisition protocols where reversed phase encoding was not available and image resolution was relatively low compared to the HCP data.

Potential future directions and limitations of the current work are as follows. First, the current work focused on tissue segmentation of the GM, WM and CSF. An interesting future investigation could include segmentation of more specific anatomical structures such as subcortical GM regions, white matter bundles, and specific cortices. Second, in the current work, we evaluated our method on healthy adult brains. Future work could include investigation of the proposed tissue segmentation method in brains with lesions (such as tumors or edema) and/or from different age ranges (e.g., from children and elderly people). Such work would require curation of further training data that reflect the anatomy of these populations. Third, in the present study, we explored a Unet architecture trained on 70 HCP datasets, which provided highly promising performance. Future work could include an investigation of more advanced network architectures and expanding the training data to more subjects from different acquisition schemes, in combination with our proposed augmented target loss function, to further improve classification accuracy. For instance, in our current work, we use multi-view 2D Unet CNNs to train tissue segmentation from different views; it would be interesting to investigate the advantages of a 3D Unet that can better leverage the 3D volume information. Fourth, an interesting further direction is to investigate applications of the developed methods. For example, we have shown good performance of the segmentation in extracting the boundaries of different tissue types, which can be helpful for brain surface reconstruction applications. Another example is applying the tissue segmentation as an anatomical prior to guide tractography, e.g., to avoid false positive tracking into the CSF. Fifth, we demonstrated the capacity of the proposed model on dMRI data from several different acquisition protocols. However, it will be interesting to investigate further the generalizability of our method, as well as the possible benefit of combining our method with other related generalization techniques such as data harmonization (Karayumak et al., 2019; Fortin et al., 2017) and domain adaptation (Chen et al., 2020; Clark et al., 2017).

Supplementary Material

Acknowledgment

The authors would like to thank the NYU Diffusion MRI group (DKI model fit), the SPM community, and the MRtrix community (CSD tissue segmentation) for making the code used in this study available online, and Dr. Hu Cheng for sharing the daDWI segmentation code. We gratefully acknowledge funding provided by the following National Institutes of Health (NIH) grants: P41 EB015902, P41 EB015898, R01 MH108574, R01 MH074794, R01 MH119222, R21 MH115280, and U01 CA199459.

Footnotes

1. Data downloaded at: https://www.humanconnectome.org

2. Data downloaded at: https://doi.org/10.6084/m9.figshare.8851955.v6

3. Data downloaded at: https://abcdstudy.org

4. Data downloaded at: https://openneuro.org/datasets/ds003037/versions/1.0.0

5. In the CSD framework, a response function is used as the kernel during the deconvolution step to estimate the fiber orientation distribution (FOD) function.

References

- Akkus Z, Galimzianova A, Hoogi A, Rubin DL, Erickson BJ, 2017. Deep learning for brain MRI segmentation: state of the art and future directions. Journal of digital imaging 30, 449–459.

- Albi A, Meola A, Zhang F, Kahali P, Rigolo L, Tax CM, Ciris PA, Essayed WI, Unadkat P, Norton I, Rathi Y, Olubiyi O, Golby AJ, O’Donnell LJ, 2018. Image Registration to Compensate for EPI Distortion in Patients with Brain Tumors: An Evaluation of Tract-Specific Effects. Journal of Neuroimaging 28, 173–182.

- Anbeek P, Vincken KL, Van Bochove GS, Van Osch MJ, van der Grond J, 2005. Probabilistic segmentation of brain tissue in MR imaging. Neuroimage 27, 795–804.

- Ashburner J, Friston KJ, 2005. Unified segmentation. Neuroimage 26, 839–851.

- Avants BB, Tustison N, Song G, 2009. Advanced normalization tools (ANTS). Insight j 2, 1–35.

- Bagher-Ebadian H, Jafari-Khouzani K, Mitsias PD, Lu M, Soltanian-Zadeh H, Chopp M, Ewing JR, 2011. Predicting final extent of ischemic infarction using artificial neural network analysis of multi-parametric MRI in patients with stroke. PloS one 6, e22626.

- Beejesh A, Gopi VP, Hemanth J, 2019. Brain MR kurtosis imaging study: Contrasting gray and white matter. Cognitive Systems Research 55, 135–145.

- Bernal J, Kushibar K, Asfaw DS, Valverde S, Oliver A, Martí R, Lladó X, 2019. Deep convolutional neural networks for brain image analysis on magnetic resonance imaging: a review. Artificial intelligence in medicine 95, 64–81.

- Breger A, Orlando J, Harar P, Dörfler M, Klimscha S, Grechenig C, Gerendas B, Schmidt-Erfurth U, Ehler M, 2020. On Orthogonal Projections for Dimension Reduction and Applications in Augmented Target Loss Functions for Learning Problems. Journal of Mathematical Imaging and Vision 62, 376–394.

- Casey B, Cannonier T, Conley MI, Cohen AO, Barch DM, Heitzeg MM, Soules ME, Teslovich T, Dellarco DV, Garavan H, et al., 2018. The adolescent brain cognitive development (abcd) study: imaging acquisition across 21 sites. Developmental cognitive neuroscience 32, 43–54.

- Chen CL, Hsu YC, Yang LY, Tung YH, Luo WB, Liu CM, Hwang TJ, Hwu HG, Tseng WYI, 2020. Generalization of diffusion magnetic resonance imaging–based brain age prediction model through transfer learning. NeuroImage, 116831.

- Cheng H, Newman S, Afzali M, Fadnavis SS, Garyfallidis E, 2020. Segmentation of the brain using direction-averaged signal of DWI images. Magnetic Resonance Imaging 69, 1–7.

- Ciritsis A, Boss A, Rossi C, 2018. Automated pixel-wise brain tissue segmentation of diffusion-weighted images via machine learning. NMR in Biomedicine 31, e3931.

- Clark T, Zhang J, Baig S, Wong A, Haider MA, Khalvati F, 2017. Fully automated segmentation of prostate whole gland and transition zone in diffusion-weighted mri using convolutional neural networks. Journal of Medical Imaging 4, 041307.

- Dhollander T, Raffelt D, Connelly A, 2016. Unsupervised 3-tissue response function estimation from single-shell or multi-shell diffusion mr data without a co-registered t1 image, in: ISMRM, p. 5.

- Dhollander T, Raffelt D, Connelly A, 2018. Accuracy of response function estimation algorithms for 3-tissue spherical deconvolution of diverse quality diffusion MRI data, in: ISMRM, p. 1569.

- Dice LR, 1945. Measures of the amount of ecologic association between species. Ecology 26, 297–302.

- Dong H, Yang G, Liu F, Mo Y, Guo Y, 2017. Automatic brain tumor detection and segmentation using u-net based fully convolutional networks, in: Annual Conference on Medical Image Understanding and Analysis, Springer. pp. 506–517.

- Fischl B, 2012. FreeSurfer. NeuroImage 62, 774–781.

- Fortin JP, Parker D, Tunç B, Watanabe T, Elliott MA, Ruparel K, Roalf DR, Satterthwaite TD, Gur RC, Gur RE, et al., 2017. Harmonization of multi-site diffusion tensor imaging data. Neuroimage 161, 149–170.

- Garcia-Garcia A, Orts-Escolano S, Oprea S, Villena-Martinez V, Garcia-Rodriguez J, 2017. A review on deep learning techniques applied to semantic segmentation. arXiv, 1704.06857.

- Garza-Villarreal EA, Alcala-Lozano R, Fernandez-Lozano S, Morelos-Santana E, Davalos A, Villicana V, Alcauter S, Castellanos FX, Gonzalez-Olvera JJ, 2020. Clinical and functional connectivity outcomes of 5-hz repeated transcranial magnetic stimulation as an add-on treatment in cocaine use disorder: a double-blind randomized controlled trial. medRxiv.

- Glasser MF, Sotiropoulos SN, Wilson JA, Coalson TS, Fischl B, Andersson JL, Xu J, Jbabdi S, Webster M, Polimeni JR, et al., 2013. The minimal preprocessing pipelines for the Human Connectome Project. Neuroimage 80, 105–124.

- Golkov V, Dosovitskiy A, Sperl JI, Menzel MI, Czisch M, Sämann P, Brox T, Cremers D, 2016. Q-space deep learning: twelve-fold shorter and model-free diffusion MRI scans. IEEE transactions on medical imaging 35, 1344–1351.

- Goodfellow I, Bengio Y, Courville A, 2016. Deep learning. MIT press.

- Hagler DJ Jr, Hatton S, Cornejo MD, Makowski C, Fair DA, Dick AS, Sutherland MT, Casey B, Barch DM, Harms MP, et al., 2019. Image processing and analysis methods for the adolescent brain cognitive development study. Neuroimage 202, 116091.

- Hastie T, Tibshirani R, Friedman J, 2009. The elements of statistical learning: data mining, inference, and prediction. Springer Science & Business Media.

- Hui ES, Glenn GR, Helpern JA, Jensen JH, 2015. Kurtosis analysis of neural diffusion organization. Neuroimage 106, 391–403.

- Hwang H, Rehman HZU, Lee S, 2019. 3D U-Net for skull stripping in brain MRI. Applied Sciences 9, 569.

- Jenkinson M, Beckmann CF, Behrens TE, Woolrich MW, Smith SM, 2012. FSL. Neuroimage 62, 782–790.

- Jensen JH, Helpern JA, 2010. MRI quantification of non-Gaussian water diffusion by kurtosis analysis. NMR in Biomedicine 23, 698–710.

- Jeurissen B, Tournier JD, Dhollander T, Connelly A, Sijbers J, 2014. Multi-tissue constrained spherical deconvolution for improved analysis of multi-shell diffusion MRI data. NeuroImage 103, 411–426.

- Jeurissen B, Tournier JD, Sijbers J, 2015. Tissue-type segmentation using non-negative matrix factorization of multi-shell diffusion-weighted MRI images. 23th ISMRM, 0346.

- Jones DK, Cercignani M, 2010. Twenty-five pitfalls in the analysis of diffusion MRI data. NMR in Biomedicine 23, 803–820.

- Karayumak SC, Bouix S, Ning L, James A, Crow T, Shenton M, Kubicki M, Rathi Y, 2019. Retrospective harmonization of multi-site diffusion mri data acquired with different acquisition parameters. Neuroimage 184, 180–200.

- Krizhevsky A, Sutskever I, Hinton GE, 2012. Imagenet classification with deep convolutional neural networks, in: Advances in neural information processing systems, pp. 1097–1105.

- Kumazawa S, Yoshiura T, Honda H, Toyofuku F, 2013. Improvement of partial volume segmentation for brain tissue on diffusion tensor images using multiple-tensor estimation. Journal of digital imaging 26, 1131–1140.

- Kumazawa S, Yoshiura T, Honda H, Toyofuku F, Higashida Y, 2010. Partial volume estimation and segmentation of brain tissue based on diffusion tensor MRI. Medical physics 37, 1482–1490.

- LeCun Y, Bottou L, Bengio Y, Haffner P, 1998. Gradient-based learning applied to document recognition. Proceedings of the IEEE 86, 2278–2324.

- Liu T, Li H, Wong K, Tarokh A, Guo L, Wong ST, 2007. Brain tissue segmentation based on DTI data. NeuroImage 38, 114–123.

- Mah YH, Jager R, Kennard C, Husain M, Nachev P, 2014. A new method for automated high-dimensional lesion segmentation evaluated in vascular injury and applied to the human occipital lobe. Cortex 56, 51–63.

- Malinsky M, Peter R, Hodneland E, Lundervold A, Lundervold A, Jan J, 2013. Registration of FA and T1-weighted MRI data of healthy human brain based on template matching and normalized cross-correlation. J Digit Imaging 26, 774–785.

- McKinnon ET, Jensen JH, Glenn GR, Helpern JA, 2017. Dependence on b-value of the direction-averaged diffusion-weighted imaging signal in brain. Magnetic resonance imaging 36, 121–127.

- Nie D, Wang L, Adeli E, Lao C, Lin W, Shen D, 2018. 3-D fully convolutional networks for multimodal isointense infant brain image segmentation. IEEE transactions on cybernetics 49, 1123–1136.

- Norton I, Essayed WI, Zhang F, Pujol S, Yarmarkovich A, Golby AJ, Kindlmann G, Wassermann D, Estepar RSJ, Rathi Y, et al., 2017. SlicerDMRI: open source diffusion MRI software for brain cancer research. Cancer research 77, e101–e103.

- O’Donnell LJ, Pasternak O, 2015. Does diffusion MRI tell us anything about the white matter? An overview of methods and pitfalls. Schizophrenia research 161, 133–141.

- Parker JR, 2010. Algorithms for image processing and computer vision. John Wiley & Sons.

- Ronneberger O, Fischer P, Brox T, 2015. U-net: Convolutional networks for biomedical image segmentation, in: International Conference on Medical image computing and computer-assisted intervention, Springer. pp. 234–241.

- Schnell S, Saur D, Kreher B, Hennig J, Burkhardt H, Kiselev V, 2009. Fully automated classification of HARDI in vivo data using a support vector machine. NeuroImage 46, 642–651.

- Shaw CB, Jensen JH, 2017. Recent Computational Advances in Denoising for Magnetic Resonance Diffusional Kurtosis Imaging (DKI). Journal of the Indian Institute of Science 97, 377–390.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen-Berg H, Bannister PR, Luca MD, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, Stefano ND, Brady JM, Matthews PM, 2004. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage 23, S208–S219.

- Steven AJ, Zhuo J, Melhem ER, 2014. Diffusion kurtosis imaging: an emerging technique for evaluating the microstructural environment of the brain. American journal of roentgenology 202, W26–W33.

- Sun P, Wu Y, Chen G, Wu J, Shen D, Yap PT, 2019. Tissue Segmentation Using Sparse Non-negative Matrix Factorization of Spherical Mean Diffusion MRI Data, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer. pp. 69–76.

- Tabesh A, Jensen JH, Ardekani BA, Helpern JA, 2011. Estimation of tensors and tensor-derived measures in diffusional kurtosis imaging. Magnetic resonance in medicine 65, 823–836.

- Tong Q, He H, Gong T, Li C, Liang P, Qian T, Sun Y, Ding Q, Li K, Zhong J, 2020. Multicenter dataset of multi-shell diffusion mri in healthy traveling adults with identical settings. Scientific Data 7, 1–7.

- Tournier JD, Calamante F, Connelly A, 2007. Robust determination of the fibre orientation distribution in diffusion MRI: non-negativity constrained super-resolved spherical deconvolution. Neuroimage 35, 1459–1472.

- Veraart J, Fieremans E, Jelescu IO, Knoll F, Novikov DS, 2016. Gibbs ringing in diffusion MRI. Magnetic resonance in medicine 76, 301–314.

- Wasserthal J, Neher P, Maier-Hein KH, 2018. Tractseg-fast and accurate white matter tract segmentation. NeuroImage 183, 239–253.

- Wen Y, He L, von Deneen KM, Lu Y, 2013. Brain tissue classification based on DTI using an improved Fuzzy C-means algorithm with spatial constraints. Magnetic Resonance Imaging 31, 1623–1630.

- Wu M, Chang LC, Walker L, Lemaitre H, Barnett AS, Marenco S, Pierpaoli C, 2008. Comparison of EPI distortion correction methods in diffusion tensor mri using a novel framework, in: Medical Image Computing and Computer-Assisted Intervention, pp. 321–329.

- Yap PT, Zhang Y, Shen D, 2015. Brain Tissue Segmentation Based on Diffusion MRI Using L0 Sparse-Group Representation Classification, in: Medical Image Computing and Computer-Assisted Intervention, pp. 132–139.

- Zhang F, Cho KIK, Tang Y, Zhang T, Kelly S, Di Biase M, Xu L, Li H, Matcheri K, Whitfield-Gabrieli S, et al., 2020a. Mk-curve improves sensitivity to identify white matter alterations in clinical high risk for psychosis. NeuroImage 226, 117564.

- Zhang F, Ning L, O’Donnell LJ, Pasternak O, 2019. MK-curve - Characterizing the relation between mean kurtosis and alterations in the diffusion MRI signal. NeuroImage 196, 68–80.

- Zhang F, Noh T, Juvekar P, Frisken SF, Rigolo L, Norton I, Kapur T, Pujol S, Wells W, Yarmarkovich A, Kindlmann G, Wassermann D, San Jose Estepar R, Rathi Y, Kikinis R, Johnson HJ, Westin CF, Pieper S, Golby AJ, O’Donnell LJ, 2020b. SlicerDMRI: Diffusion MRI and Tractography Research Software for Brain Cancer Surgery Planning and Visualization. JCO Clinical Cancer Informatics 4, 299–309.

- Zhang W, Li R, Deng H, Wang L, Lin W, Ji S, Shen D, 2015. Deep convolutional neural networks for multi-modality isointense infant brain image segmentation. NeuroImage 108, 214–224.
