Abstract

Randomized trials have demonstrated that ablation of dysplastic Barrett’s esophagus can reduce the risk of progression to cancer. Endoscopic resection for early stage esophageal adenocarcinoma and squamous cell carcinoma can significantly reduce postoperative morbidity compared to esophagectomy. Unfortunately, current endoscopic surveillance technologies (e.g., high-definition white light, electronic, and dye-based chromoendoscopy) lack sensitivity for identifying subtle areas of dysplasia and cancer. Random biopsies sample only approximately 5% of the esophageal mucosa at risk, and there is poor agreement among pathologists in identifying low-grade dysplasia. Machine-based deep learning medical image and video assessment technologies have progressed significantly in recent years, enabled in large part by advances in computer processing capabilities. In deep learning, sequential layers allow models to transform input data (e.g., pixels for imaging data) into a composite representation that allows for classification and feature identification. Several publications have attempted to use this technology to help identify dysplasia and early esophageal cancer. The aims of this review are as follows: (a) discussing limitations in our current strategies to identify esophageal dysplasia and cancer, (b) explaining the concepts behind deep learning and convolutional neural networks using language appropriate for clinicians without an engineering background, (c) systematically reviewing the literature for studies that have used deep learning to identify esophageal neoplasia, and (d) based on the systematic review, outlining strategies on further work necessary before these technologies are ready for “prime-time,” i.e., use in routine clinical care.

Keywords: Computer assisted diagnosis, Esophageal cancer, Barrett’s esophagus, Convolutional neural network, Artificial intelligence, Deep learning

Rationale for Endoscopic Surveillance

Esophageal cancer is the 8th most common cancer and the 6th leading cause of cancer death worldwide [1]. Primary malignancies of the esophagus include esophageal adenocarcinoma (EAC) and squamous cell carcinoma (SCC). The overall prognosis for both EAC and SCC is poor because most of these cancers are identified at an advanced stage. Randomized trials have demonstrated that identification and endoscopic eradication of dysplasia via ablation or resection reduce the risk of progression to cancer [2, 3]. Endoscopic resection of esophageal cancer at an early stage (i.e., limited to the mucosa or muscularis mucosa) can achieve > 90% cure rates without the need for esophagectomy, which carries substantial morbidity [4].

Barrett’s esophagus (BE) occurs in 1–2% of the population in Western countries and is characterized by replacement of the squamous lining of the esophagus by columnar mucosa (i.e., intestinal metaplasia) [5]. Because BE patients have an increased risk of EAC compared to the general population, endoscopic surveillance is generally recommended in Western nations to identify dysplasia and early stage cancer. Similarly, endoscopic surveillance is performed in many areas with a high prevalence of SCC (e.g., China, Eastern Asia, and Africa) to identify dysplasia and early stage SCC [6].

Endoscopic Surveillance Techniques and Limitations

Visual identification of dysplasia and early esophageal cancer using high-definition white light endoscopy (HD-WLE) is a highly subjective task that requires significant effort and experience. Even in expert hands, the sensitivity of HD-WLE for identifying dysplasia and cancer in BE is a mere 48% [7], likely due to a lack of specific training for gastroenterologists to recognize the subtle mucosal changes visualized by this modality. Guidelines therefore recommend random 4-quadrant biopsies at 1–2 cm intervals, a practice dubbed the Seattle protocol [5]. While the Seattle protocol does improve yield for dysplasia, adherence to the protocol is low in clinical practice, likely due to its time-consuming nature [8]. Furthermore, only 5% of the esophageal mucosa at risk is sampled [11], and there is poor agreement among pathologists in identifying low-grade dysplasia (LGD) [12, 13], both of which can lead to missed diagnoses and progression of disease.

The limitations in our current approach to identifying esophageal neoplasia have spurred development of several novel technologies that may enhance diagnostic capabilities. Commercially available imaging-based technologies such as probe-based confocal endomicroscopy (pCLE) and optical coherence tomography (OCT) are expensive and operator-dependent, requiring specialized training to perform and interpret. Chromoendoscopy technologies, which highlight the mucosal surface pattern, are less costly and include dye-based (e.g., acetic acid, Lugol’s iodine) and electronic techniques (e.g., narrow-band imaging, Fuji intelligent chromoendoscopy, and i-scan) [9]. However, chromoendoscopy also requires specialized training. Wide area trans-epithelial sampling (WATS) utilizes an abrasive brush that can sample tissue from a wide area of the esophagus [10]. A disadvantage of this technology is that cytology rather than histologic specimens with preserved tissue architecture is used for analysis.

In 2016, the American Society for Gastrointestinal Endoscopy (ASGE) published thresholds for a technology to replace random biopsies in clinical practice. The ASGE recommends that an imaging technology used along with targeted biopsy demonstrate a per-patient sensitivity of ≥ 90%, negative predictive value (NPV) ≥ 98%, and specificity > 80% for detecting dysplasia or early EAC when compared to random biopsies [11]. Unfortunately, none of the aforementioned technological advances has met these criteria for replacing random biopsies [12, 13]. There exists a clear need for technological improvements to the current random biopsy protocol, and recent developments in computing power have brought artificial intelligence to the forefront of this discussion.
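As an illustration of how these thresholds apply, the following minimal Python sketch computes sensitivity, specificity, and NPV from per-patient confusion counts and checks them against the values quoted above. The class and function names and the patient counts are hypothetical and chosen only for illustration; they do not come from any study in this review.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Per-patient counts against the reference standard (hypothetical data)."""
    tp: int  # neoplasia present, flagged by the technology
    fp: int  # neoplasia absent, flagged by the technology
    tn: int  # neoplasia absent, not flagged
    fn: int  # neoplasia present, not flagged


def diagnostic_metrics(c: ConfusionCounts) -> dict:
    """Return sensitivity, specificity, and NPV as fractions between 0 and 1."""
    return {
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
        "npv": c.tn / (c.tn + c.fn),
    }


def meets_asge_thresholds(m: dict) -> bool:
    """Thresholds as quoted in the text: sensitivity >= 90%, NPV >= 98%, specificity > 80%."""
    return m["sensitivity"] >= 0.90 and m["npv"] >= 0.98 and m["specificity"] > 0.80


# Hypothetical cohort: 200 patients, 20 of whom have dysplasia or early EAC.
cohort = ConfusionCounts(tp=19, fp=30, tn=150, fn=1)
metrics = diagnostic_metrics(cohort)
print(metrics, meets_asge_thresholds(metrics))
```

In this made-up cohort, sensitivity is 19/20, specificity is 150/180, and NPV is 150/151, so all three thresholds are met. Missing four of the 20 patients instead of one would drop sensitivity to 80% and fail the check.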

Artificial Intelligence (AI): A Primer

Computer-aided detection is not an entirely new concept in esophageal neoplasia identification; in fact, WATS samples are analyzed using a computerized imaging processing system. Yet only recently have progressive increases in computer storage and processing speed, along with the availability of large datasets (“big data”), converged to markedly expand the capabilities of AI. The vocabulary of AI studies may seem daunting to those without a background in computer science and may dissuade clinicians from critically appraising AI-based gastroenterology studies. Lay persons’ definitions of terms commonly utilized in AI-based studies are summarized in Table 1. Beginning with the basics, let us first define “machine learning (ML),” which refers to the development of algorithms that can use structured data in order to perform tasks with minimal human input [14]. For example, if one feeds an ML algorithm thousands of images of sedans and trucks, the algorithm will eventually be able to classify a brand-new image as being either a sedan or a truck. Deep learning (DL), by contrast, is a subset of ML in which the algorithm uses layers of data transformations to identify features of the given dataset in order to learn how to classify the data [14]. To use our prior example, one could once again provide the DL algorithm with images of sedans and trucks, and this time it would learn to identify the features (this is the key distinction in DL) of each class of vehicle in order to classify new images. This ability to identify features and learn with minimal human supervision is part of what makes DL so exciting in the field of medicine, which (between CT scans, endoscopy video data, surgical biopsies, and beyond) has ample visual data in need of analysis and interpretation.

Table 1.

Artificial intelligence terminology

| Term | Abbreviation | Definition |
|---|---|---|
| Activation map | – | The output of a convolutional layer that highlights features detected by the algorithm |
| Convolutional neural network | CNN | An algorithm that can classify images by identifying features in the image using mathematical filters |
| Deep learning | DL | A subset of machine learning in which the data provided to the algorithm do not need to be structured in order for the algorithm to learn |
| Feature | – | An aspect of an image that can be identified by a CNN. Can be simple (e.g., lines, edges) or complex (e.g., specific faces). |
| Filter | – | The mathematical “lens” that the CNN hovers over (i.e., convolves over) the image in order to highlight features |
| Layers | – | Aspects of the CNN algorithm that refine the feature identification and image classification. Convolutional layers refer to the filters described above. Other layers can perform complex mathematical tasks in the algorithm. |
| Machine learning | ML | The development of algorithms that can use structured data in order to perform tasks without human input |

The second key term to define in the world of AI is “convolutional neural network” or CNN, which represents one of the most common ways in which modern DL algorithms accomplish their feature recognition. The term is actually based on the way in which the neurons in the visual cortex in the brain respond specifically in the presence of certain visual stimuli (e.g., horizontal vs. vertical lines) [15]. In similar fashion, the goal of a CNN is to develop filters that hover over an image (i.e., convolve over them) in order to identify features. Each of these filters produces an “activation map,” which essentially highlights the feature identified by a given filter. These filters start out very simply, with the initial filters or convolutional “layers” identifying simple features such as curves or edges. Each subsequent layer of the CNN works on the activation maps from the previous layer; the result is the identification of increasingly complex features as the network moves deeper and deeper. Instead of simple lines and edges, deeper layers may light up in response to complex shapes, faces, words, etc. [16]. In the end, the CNN then uses these activation maps to classify the image as belonging to one of the multiple classes by correlating specific features with each class. To effectively make such correlations and ascribe appropriate weights to given features, a CNN requires data to train itself. After many iterations of training on labeled images, a CNN should have fine-tuned its layers and is ready to be tested on a fresh dataset. The larger and higher quality the dataset for the training phase, the more accurate the CNN is likely to be for the testing phase. As mentioned, we as clinicians have access to massive quantities of data; CNNs and DL represent means by which we can help make sense of this treasure trove.
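To make the idea of a filter and its activation map concrete, here is a minimal pure-Python sketch. The tiny image, the two hand-written filters, and the function name `convolve2d` are illustrative assumptions; a real CNN learns its filter values during training rather than having them specified by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the activation map.

    As in most deep learning libraries, the kernel is not flipped, so this is
    strictly a cross-correlation. Negative responses are clipped to zero (ReLU).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    activation = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(max(total, 0))
        activation.append(row)
    return activation


# Toy 4 x 6 grayscale image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-written filters that respond to vertical and horizontal edges, respectively.
vertical_edge = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
horizontal_edge = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]

print(convolve2d(image, vertical_edge))    # [[0, 3, 3, 0], [0, 3, 3, 0]]
print(convolve2d(image, horizontal_edge))  # [[0, 0, 0, 0], [0, 0, 0, 0]]
```

The vertical-edge filter "lights up" only where the dark-to-bright transition sits, while the horizontal-edge filter stays silent on the same image. Deeper layers would apply further filters to activation maps like these rather than to the raw pixels.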

Exciting though this new technology undoubtedly is, there are significant pitfalls of which to be aware. For example, it can be difficult for humans to distinguish between esophageal dysplasia and non-dysplastic BE with inflammation [17], meaning that a classification algorithm will need to identify large numbers of features in order to separate the two classes. In general, complex models with many features are needed to tackle difficult datasets; to achieve this level of complexity, extremely large training datasets are necessary to generalize the model to new data [18]. Unfortunately, datasets in medicine tend to be fragmented (e.g., rather than having access to one million biopsies across the nation, researchers may have access to only a few hundred at one or two centers), and these piecemeal datasets make applications of DL more difficult. An additional and related pitfall involves the quality of the data, specifically the accuracy of the “ground truth” to which the model is being compared. To once again use our example of esophageal dysplasia, human pathologists have relatively poor inter-observer agreement in identifying LGD in BE [19–21]. A DL model that attempts to distinguish LGD from non-dysplastic BE would be difficult to train and test because the certainty of the underlying data is suspect. Unlike our relatively simple example of sedans versus trucks, medical image analysis is much murkier territory. One potential solution to these pitfalls would be to collect and annotate large quantities of medical data from across multiple centers and to have these data reviewed by multiple experts to ensure quality, as has been done with breast cancer data in the CAMELYON dataset, among others [22].
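One common way to quantify inter-observer agreement is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is illustrative only: the two "pathologists" and their ten readings are invented, and the choice of kappa is our assumption rather than a description of the statistics used in the cited studies.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(rater_a) | set(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical readings of ten BE biopsies (NDBE = non-dysplastic BE).
pathologist_1 = ["LGD"] * 5 + ["NDBE"] * 5
pathologist_2 = ["LGD", "LGD", "LGD", "NDBE", "NDBE",
                 "LGD", "LGD", "NDBE", "NDBE", "NDBE"]

print(round(cohens_kappa(pathologist_1, pathologist_2), 2))  # 0.2
```

Here the two readers agree on 6 of 10 slides, but 5 of 10 agreements would be expected by chance alone, so kappa is only 0.2. Labels with this level of disagreement make for a noisy ground truth on which to train or evaluate a model.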

Identifying Esophageal Neoplasia with AI

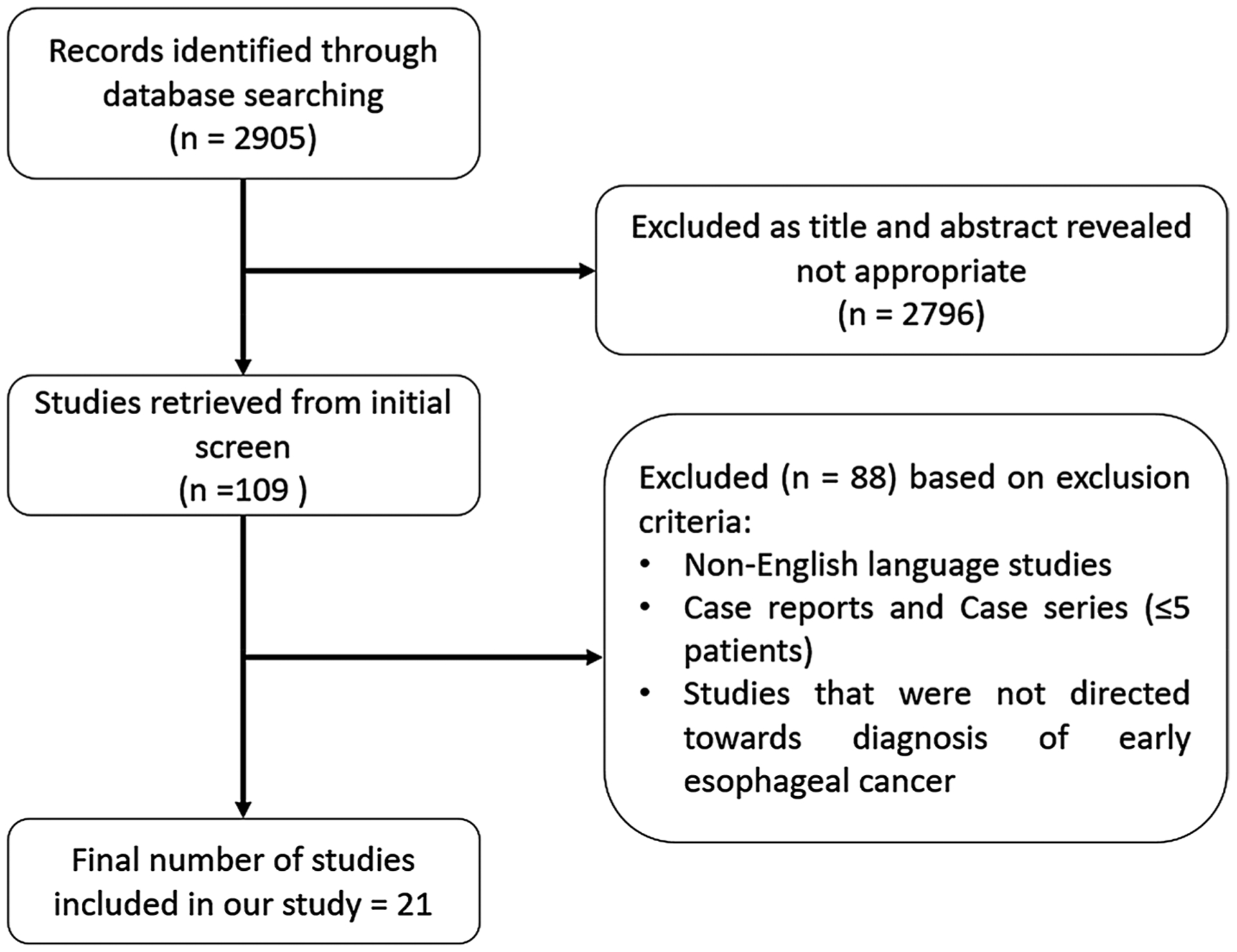

In order to summarize the literature on using DL to identify esophageal neoplasia, we searched PubMed/Medline, Embase, Cochrane Library, and Web of Science for studies on the diagnostic performance of AI in the detection of esophageal neoplasia (BE, squamous dysplasia, EAC, and SCC) as per the guidelines outlined by the Preferred Reporting Items for Systematic Reviews and Meta-analyses statement (PRISMA) [23]. The literature search was performed in close collaboration with a health sciences librarian at Virginia Commonwealth University. When key information regarding accuracy of the DL models at identifying neoplasia was missing, we attempted to contact authors to obtain this information. We identified 21 relevant studies, 6 of which were conference abstracts (Fig. 1). All studies were published in 2017 or later. Characteristics of all studies including methodology and sample sizes are outlined in Table 2. Study outcomes are listed in Table 3. The primary outcome was the identification of squamous dysplasia or SCC in 11 studies, and BE with dysplasia or EAC in 15 studies. All studies used endoscopic images for training. One study used live endoscopy videos for training and validation [24]. In 17 out of 21 studies, data were available for both the training and the test/validation datasets. Among these studies, the number of unique patients in the training set ranged from 17 to 1530 (the number of specimens ranged from 60 to 196,200). The number of unique patients in the test/validation set ranged from 9 to 880 (the number of specimens ranged from 62 to 21,800). In three studies, the test/validation was performed prospectively [24–26]. Sensitivity across the studies ranged from 70 to 100%, specificity ranged from 42 to 100%, and overall accuracy ranged from 66 to 98% (Table 3). The models achieved ASGE recommended sensitivity and specificity thresholds in 15 and 14 studies, respectively. In most of these studies, ground truth was established based on expert pathologist review of histopathology.

Fig. 1.

Flow diagram of studies identified in the systematic review

Table 2.

Characteristics of clinical studies involving computer-aided diagnosis for early esophageal cancer

| References | Study design | Target esophageal disease* | Type of specimens for training | Number of specimens for training set | Number of unique patients for training set | Number of specimens for test/validation | Number of unique patients for test/validation |
|---|---|---|---|---|---|---|---|
| Zhang et al. [28] | Retrospective | SCC, EAC | Endoscopic images* | 196,200 | – | 21,800 | – |
| Mendel et al. [29] | Retrospective | EAC | Endoscopic images | 100 | 39 | 100 | 39 |
| Ebigbo et al. [30] (Augsburg data) | Retrospective | EAC | Endoscopic images | 148 | 62 | – | – |
| Ebigbo et al. [30] (MICCAI data) | Retrospective | EAC | Endoscopic images | 100 | 78 | – | – |
| Everson et al. [31] | Retrospective | SCC | Endoscopic images/video | 2045 | 17 | 812 | – |
| Guo et al. [32] | Retrospective | SCC | Endoscopic images | 2085 | 293 | 1478 | 59 |
| Horie et al. [33] | Retrospective | SCC, EAC | Endoscopic images | 8428 | 387 | 1128 | 97 |
| Cai et al. [34] | Retrospective | SCC | Endoscopic images | 2428 | 746 | 187 | 52 |
| de Groof et al. [26] | Prospective | EAC, BE | Endoscopic images | 60 | 60 | – | – |
| Ghatwary et al. [35] | Retrospective | EAC | Endoscopic images | 100 | 21 | – | 9 |
| Horie et al. [36] | Retrospective | SCC, EAC | Endoscopic images | 8428 | 384 | 1118 | 97 |
| Liu et al. [37] | Retrospective | SCC | Endoscopic images | 10,950 | 503 | 500 | – |
| Shiroma et al. [38] | Retrospective | SCC, SD, EAC | Endoscopic images | 8428 | – | 40¥ | 20 |
| Struyvenberg et al. [39] | Retrospective | EAC | Endoscopic images€ | 1247 | – | 183 | 100 |
| Tang et al. [40] | Retrospective | SCC, SD | Endoscopic images | 5174 | 1530 | 946 | 280 |
| de Groof et al. [25] | Prospective | EAC | Endoscopic images€ | 297 | 95 | 80 | 80 |
| Ebigbo et al. [41] | Retrospective | EAC | Endoscopic images | 129 | – | 62 | 14 |
| Guo et al. [42] | Retrospective | SCC | Endoscopic images | 6473 | 549 | 6671 | 880 |
| Ohmori et al. [43] | Retrospective | SCC, SD | Endoscopic images | 22,562 | – | 727 | 135 |
| de Groof et al. [24] | Prospective | EAC | Endoscopic images (application in live endoscopy) | 1544 | – | – | 160 |
| Hashimoto et al. [44] | Retrospective | EAC | Endoscopic images | 1832 | 100 | 458 | 39 |
| Iwagami et al. [45] | Retrospective | EAC-EJ | Endoscopic images | 3443 | 159 | 275 | 36 |

*1000 patches were made from each endoscopic image, and the total number of endoscopic images was 218.

¥Test/validation was done on 40 test movies of 20 patients.

€The CAD system was pre-trained on a unique dataset of 494,364 labeled endoscopic images named GastroNet.

SCC squamous cell cancer, SD squamous dysplasia, EAC esophageal adenocarcinoma, BE Barrett’s esophagus, EAC-EJ esophageal adenocarcinoma at esophagogastric junction

Table 3.

Performance data on artificial intelligence algorithms used for computer-aided diagnosis for early esophageal cancer

| References | Deep learning model? | Model | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | Accuracy (%) |
|---|---|---|---|---|---|---|---|
| Zhang et al. [28]* | No | Supervised learning via CNN. 218,000 patches generated from 218 endoscopic images | 73.41 | 83.54 | 72.09 | 84.44 | 79.38 |
| Mendel et al. [29] | Yes | Deep CNN with a residual net (ResNet) architecture | 94 | 88 | – | – | – |
| Ebigbo et al. [30] | Yes | Deep CNN with a residual net (ResNet) architecture | 97 (WLE), 94 (NBI) | 88 (WLE), 80 (NBI) | – | – | – |
| Ebigbo et al. [30] | Yes | Deep CNN with a residual net (ResNet) architecture | 92 | 100 | – | – | – |
| Everson et al. [31] | Yes | Deep CNN | 89.3 | 98 | – | – | 93.7 |
| Guo et al. [32]* | NA | NA | 97.8 | 76.2 | – | – | – |
| Horie et al. [33]* | – | Deep CNN | – | – | 54 | – | 98 |
| Cai et al. [34] | Yes | CNN | 97.8 | 85.4 | 86.4 | 97.6 | 91.4 |
| de Groof et al. [26] | No | Supervised learning technique | 95 | 85 | 87.2 | – | 91.7 |
| Ghatwary et al. [35] | Yes | Regional-based convolutional neural network (R-CNN), Fast R-CNN, Faster R-CNN and Single Shot MultiBox Detector (SSD) | 96 | 92 | – | – | – |
| Horie et al. [36] | Yes | Deep CNN (Single Shot MultiBox Detector) | 98 | 79 | 39 | 95 | 98 |
| Liu et al. [37] | Yes | CNN | 77.58 | – | 78.06 | – | – |
| Shiroma et al. [38]* | Yes | CNN | 70 | – | 42.1 | – | 80 |
| Struyvenberg et al. [39]* | Yes | CNN | 88 | 78 | – | – | 84 |
| Tang et al. [40]* | Yes | CNN | 97 | 94 | – | – | 97 |
| de Groof et al. [25] | Yes | ResNet U-net | 93 | 83 | – | – | 88 |
| Ebigbo et al. [41] | Yes | Encoder–decoder artificial neural network | 83.7 | 100 | – | – | 89.9 |
| Guo et al. [42] | Yes | Detection neural network | 98.04 | 95.03 | – | – | – |
| Ohmori et al. [43] | Yes | CNN | 100 (NME-NBI), 90 (WLE) | 63 (NME-NBI), 76 (WLE) | 63 (NME-NBI), 70 (WLE) | 100 (NME-NBI), 93 (WLE) | 77 (NME-NBI), 81 (WLE) |
| de Groof et al. [24] | Yes | Custom-made, fully residual, hybrid ResNet/U-Net model | 91 | 89 | – | – | 90 |
| Hashimoto et al. [44] | Yes | CNN: inception-ResNet-v2 algorithm | 96.4 | 94.2 | 89.2 | – | 95.4 |
| Iwagami et al. [45] | Yes | CNN: single shot multiBox detector | 94 | 42 | 58 | 90 | 66 |

*Conference abstracts. WLE white light endoscopy, NBI narrow-band imaging, NME non-magnified endoscopy
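The counts of models reaching the ASGE sensitivity and specificity thresholds can be re-derived from Table 3. The sketch below is a reader's check, not the authors' method. It rests on three assumptions of ours: each table row is counted separately (so Ebigbo et al. [30] appears twice), the WLE value is used where a row reports two imaging modalities, and rows with a missing value are counted as not meeting that threshold.

```python
# (reference, sensitivity %, specificity %) transcribed from Table 3.
# None marks a value not reported; WLE values are used for multi-modality rows.
TABLE_3 = [
    ("Zhang [28]", 73.41, 83.54), ("Mendel [29]", 94, 88),
    ("Ebigbo [30], row 1", 97, 88), ("Ebigbo [30], row 2", 92, 100),
    ("Everson [31]", 89.3, 98), ("Guo [32]", 97.8, 76.2),
    ("Horie [33]", None, None), ("Cai [34]", 97.8, 85.4),
    ("de Groof [26]", 95, 85), ("Ghatwary [35]", 96, 92),
    ("Horie [36]", 98, 79), ("Liu [37]", 77.58, None),
    ("Shiroma [38]", 70, None), ("Struyvenberg [39]", 88, 78),
    ("Tang [40]", 97, 94), ("de Groof [25]", 93, 83),
    ("Ebigbo [41]", 83.7, 100), ("Guo [42]", 98.04, 95.03),
    ("Ohmori [43]", 90, 76), ("de Groof [24]", 91, 89),
    ("Hashimoto [44]", 96.4, 94.2), ("Iwagami [45]", 94, 42),
]


def count_meeting_thresholds(rows, min_sensitivity=90.0, min_specificity=80.0):
    """Count rows with sensitivity >= 90% and rows with specificity > 80%."""
    n_sens = sum(1 for _, sens, _ in rows if sens is not None and sens >= min_sensitivity)
    n_spec = sum(1 for _, _, spec in rows if spec is not None and spec > min_specificity)
    return n_sens, n_spec


print(count_meeting_thresholds(TABLE_3))  # (15, 14)
```

Under these assumptions the tally matches the 15 and 14 reported in the text. NPV, the third ASGE criterion, is reported in too few rows of Table 3 to be tallied in the same way.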

Summary

As described earlier, random esophageal biopsies to identify dysplasia and cancer during endoscopic surveillance are fraught with limitations. Technologies that have attempted to replace random biopsies to date have not been able to achieve sufficient accuracy and require substantial expertise to interpret, which limits widespread utilization. Additionally, the high cost of imaging technologies such as pCLE and OCT represents a significant barrier to adoption in routine clinical practice. As with other technologies, in order to replace the Seattle protocol, DL-based AI must not only meet recommended accuracy thresholds in prospective randomized trials but should also be inexpensive and easy to use and interpret. In an ideal scenario, manufacturers would incorporate AI as a toggle on the endoscope, similar to electronic chromoendoscopy technologies like NBI. Once AI is turned “on,” the algorithm would rapidly and accurately discriminate non-dysplastic areas from segments with a very high probability of dysplasia for targeted biopsy or mucosal resection. Alternatively, if the technology is sufficiently accurate, the endoscopist could proceed with ablation during the same session, thereby eliminating the time, cost, and risk of an additional endoscopic procedure. DL also has the potential to reduce uncertainty in histologic diagnosis, particularly for LGD. For instance, our group recently demonstrated that DL algorithms are capable of identifying dysplasia in histologic BE specimens with high accuracy, suggesting that future algorithms may be able to distinguish low-grade from high-grade dysplasia [27]. The most recent published studies using this technology have used real-time endoscopic videos to target areas of concern [24]. Preliminary results suggest that some algorithms achieve high levels of accuracy. Rather than working in silos, researchers must conduct head-to-head comparisons in order to converge on the optimal algorithm and, importantly, compare the results of DL models to human accuracy. Finally, well-designed randomized trials comparing DL to Seattle protocol biopsies are necessary before endoscopists can utilize AI in routine clinical practice.

Acknowledgments

John Cyrus (Research and Education Librarian): Assisted in literature search of following databases: Pubmed/Medline, Embase, Cochrane library and Web of Science for studies. Tilak Shah: ASGE, McGuire Research Institute. Research contract with Allergan. Sana Syed: K23DK117061-01A1 (SS) National Institute of Diabetes and Digestive and Kidney Diseases (NIDDK) of the National Institutes of Health.

References

- 1. Arnold M, Soerjomataram I, Ferlay J, Forman D. Global incidence of oesophageal cancer by histological subtype in 2012. Gut. 2015;64:381–387.

- 2. Cotton CC, Wolf WA, Overholt BF, et al. Late recurrence of Barrett’s esophagus after complete eradication of intestinal metaplasia is rare: final report from ablation in intestinal metaplasia containing dysplasia trial. Gastroenterology. 2017;153:681–688.

- 3. Phoa KN, Rosmolen WD, Weusten B, et al. The cost-effectiveness of radiofrequency ablation for Barrett’s esophagus with low-grade dysplasia: results from a randomized controlled trial (SURF trial). Gastrointest Endosc. 2017;86:120–129.

- 4. Naveed M, Kubiliun N. Endoscopic treatment of early-stage esophageal cancer. Curr Oncol Rep. 2018;20:71.

- 5. Shaheen NJ, Falk GW, Iyer PG, Gerson LB, American College of Gastroenterology. ACG clinical guideline: diagnosis and management of Barrett’s esophagus. Am J Gastroenterol. 2016;111:30–50. (quiz 1).

- 6. Domper Arnal MJ, Ferrandez Arenas A, Lanas Arbeloa A. Esophageal cancer: Risk factors, screening and endoscopic treatment in Western and Eastern countries. World J Gastroenterol. 2015;21:7933–7943.

- 7. Sharma N, Ho KY. Recent updates in the endoscopic diagnosis of Barrett’s oesophagus. Gastrointest Tumors. 2016;3:109–113.

- 8. Wani S, Williams JL, Komanduri S, Muthusamy VR, Shaheen NJ. Endoscopists systematically undersample patients with long-segment Barrett’s esophagus: an analysis of biopsy sampling practices from a quality improvement registry. Gastrointest Endosc. 2019;90:732–741.

- 9. Davila RE. Chromoendoscopy. Gastrointest Endosc Clin N Am. 2009;19:193–208.

- 10. Goldblum JR, Shaheen NJ, Vennalaganti PR, Sharma P, Lightdale CJ. WATS for Barrett’s surveillance. Gastrointest Endosc. 2018;88:201–202.

- 11. Committee AT, Thosani N, Abu Dayyeh BK, et al. ASGE Technology Committee systematic review and meta-analysis assessing the ASGE preservation and incorporation of valuable endoscopic innovations thresholds for adopting real-time imaging-assisted endoscopic targeted biopsy during endoscopic surveillance of Barrett’s esophagus. Gastrointest Endosc. 2016;83:684–698.

- 12. Ferguson DD, DeVault KR, Krishna M, Loeb DS, Wolfsen HC, Wallace MB. Enhanced magnification-directed biopsies do not increase the detection of intestinal metaplasia in patients with GERD. Am J Gastroenterol. 2006;101:1611–1616.

- 13. Sanghi V, Thota PN. Barrett’s esophagus: novel strategies for screening and surveillance. Ther Adv Chronic Dis. 2019;10:2040622319837851.

- 14. Patel V, Khan MN, Shrivastava A, et al. Artificial intelligence applied to gastrointestinal diagnostics: a review. J Pediatr Gastroenterol Nutr. 2020;70:4–11.

- 15. Hubel DH, Wiesel TN. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J Physiol. 1962;160:106–154.

- 16. Zeiler MD, Fergus R. Visualizing and understanding convolutional networks. Eur Conf Comput Vis. 2014;8689:818–833.

- 17. Goldblum JR. Controversies in the diagnosis of Barrett esophagus and Barrett-related dysplasia: one pathologist’s perspective. Arch Pathol Lab Med. 2010;134:1479–1484.

- 18. Deo RC. Machine learning in medicine. Circulation. 2015;132:1920–1930.

- 19. Wani S, Rubenstein JH, Vieth M, Bergman J. Diagnosis and management of low-grade dysplasia in Barrett’s esophagus: expert review from the clinical practice updates committee of the American Gastroenterological Association. Gastroenterology. 2016;151:822–835.

- 20. Shah T, Lippman R, Kohli D, Mutha P, Solomon S, Zfass A. Accuracy of probe-based confocal laser endomicroscopy (pCLE) compared to random biopsies during endoscopic surveillance of Barrett’s esophagus. Endosc Int Open. 2018;6:E414–E420.

- 21. Vennalaganti P, Kanakadandi V, Goldblum JR, et al. Discordance among pathologists in the United States and Europe in diagnosis of low-grade dysplasia for patients with Barrett’s Esophagus. Gastroenterology. 2017;152:564–570.

- 22. Litjens G, Bandi P, Ehteshami Bejnordi B, et al. 1399 H&E-stained sentinel lymph node sections of breast cancer patients: the CAMELYON dataset. Gigascience. 2018;7:giy065.

- 23. Moher D, Liberati A, Tetzlaff J, Altman DG, Group P. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151:264–269.

- 24. de Groof AJ, Struyvenberg MR, Fockens KN, et al. Deep learning algorithm detection of Barrett’s neoplasia with high accuracy during live endoscopic procedures: a pilot study (with video). Gastrointest Endosc. 2020;91:1242–1250.

- 25. de Groof AJ, Struyvenberg MR, van der Putten J, et al. Deep-learning system detects neoplasia in patients with Barrett’s esophagus with higher accuracy than endoscopists in a multistep training and validation study with benchmarking. Gastroenterology. 2020;158:915–929.

- 26. de Groof J, van der Sommen F, van der Putten J, et al. The Argos project: the development of a computer-aided detection system to improve detection of Barrett’s neoplasia on white light endoscopy. United Eur Gastroenterol J. 2019;7:538–547.

- 27. Sali R, Moradinasab N, Guleria S, Ehsan L, Fernandes P, Shah T, Syed S, Brown DE. Deep learning for whole slide tissue histopathology classification: a comparative study in identification of dysplastic and non-dysplastic Barrett’s esophagus. J Pers Med. Accepted manuscript.

- 28. Zhang CML, Uedo N, Matsuura N, Tam P, Teoh AY. The use of convolutional neural artificial intelligence network to aid the diagnosis and classification of early esophageal neoplasia. A feasibility study. Gastrointest Endosc. 2017;85:AB581–AB582.

- 29. Mendel R, Ebigbo A, Probst A, Messmann H, Palm C. Barrett’s Esophagus analysis using convolutional neural networks. Bildverarbeitung für die Medizin. 2017:80–5.

- 30. Ebigbo A, Mendel R, Probst A, et al. Computer-aided diagnosis using deep learning in the evaluation of early oesophageal adenocarcinoma. Gut. 2019;68:1143–1145.

- 31. Everson M, Herrera L, Li W, et al. Artificial intelligence for the real-time classification of intrapapillary capillary loop patterns in the endoscopic diagnosis of early oesophageal squamous cell carcinoma: a proof-of-concept study. United Eur Gastroenterol J. 2019;7:297–306.

- 32. Guo L, Wu C, Xiao X, et al. Automated detection of precancerous lesion and early esophageal squamous cancer using a deep learning model. Am J Gastroenterol. 2018;113:S173–S174.

- 33. Horie Y, Yoshio T, Aoyama K, Fujisaki J, Tada T. Application of artificial intelligence using convolutional neural networks in the detection of esophageal cancer. Gastrointest Endosc. 2018;87:AB538.

- 34. Cai SL, Li B, Tan WM, et al. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest Endosc. 2019;90:745–753.

- 35. Ghatwary N, Zolgharni M, Ye X. Early esophageal adenocarcinoma detection using deep learning methods. Int J Comput Assist Radiol Surg. 2019;14:611–621.

- 36. Horie Y, Yoshio T, Aoyama K, et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest Endosc. 2019;89:25–32.

- 37. Liu DY, Jiang H, Rao N, Luo C, Du W, Li Z, et al. Computer aided annotation of early esophageal cancer in gastroscopic images based on Deeplabv3+ network. ICBIP ‘19: Proceedings of the 2019 4th International Conference on Biomedical Signal and Image Processing (ICBIP 2019). 2019: pp 56–61.

- 38. Shiroma S, Yoshio T, Aoyama K, Tsuchida T, Fujisaki J, Tada T. The application of artificial intelligence to detect esophageal squamous cell carcinoma in movies using convolutional neural networks. Gastrointest Endosc. 2019;89:AB89.

- 39. Struyvenberg MRDG, Van Der Putten J, Van Der Sommen F, et al. Deep learning algorithm for characterization of Barrett’s neoplasia demonstrates high accuracy on NBI-zoom images. Gastroenterology. 2019;156:S-58.

- 40. Tang DW, Wang L, He G, et al. Artificial intelligence network to aid the diagnosis of early esophageal squamous cell carcinoma and esophageal inflammations in white light endoscopic images. Gastrointest Endosc. 2019;89:AB654.

- 41. Ebigbo A, Mendel R, Probst A, et al. Real-time use of artificial intelligence in the evaluation of cancer in Barrett’s oesophagus. Gut. 2020;69:615–616.

- 42. Guo L, Xiao X, Wu C, et al. Real-time automated diagnosis of precancerous lesions and early esophageal squamous cell carcinoma using a deep learning model (with videos). Gastrointest Endosc. 2020;91:41–51.

- 43. Ohmori M, Ishihara R, Aoyama K, et al. Endoscopic detection and differentiation of esophageal lesions using a deep neural network. Gastrointest Endosc. 2020;91:301–309.

- 44. Hashimoto R, Requa J, Dao T, et al. Artificial intelligence using convolutional neural networks for real-time detection of early esophageal neoplasia in Barrett’s esophagus (with video). Gastrointest Endosc. 2020;91:1264–1271.

- 45. Iwagami H, Ishihara R, Aoyama K, Fukuda H, Shimamoto Y, Kono M, et al. Artificial intelligence for the detection of esophageal and esophagogastric junctional adenocarcinoma. J Gastroenterol Hepatol. 2020.